Accelerating the integration of ChatGPT and other large-scale AI models into biomedical research and healthcare

Abstract

Large-scale artificial intelligence (AI) models such as ChatGPT have the potential to improve performance on many benchmarks and real-world tasks. However, it is difficult to develop and maintain these models because of their complexity and resource requirements. As a result, they are still inaccessible to healthcare industries and clinicians. This situation might soon be changed because of advancements in graphics processing unit (GPU) programming and parallel computing. More importantly, leveraging existing large-scale AIs such as GPT-4 and Med-PaLM and integrating them into multiagent models (e.g., Visual-ChatGPT) will facilitate real-world implementations. This review aims to raise awareness of the potential applications of these models in healthcare. We provide a general overview of several advanced large-scale AI models, including language models, vision-language models, graph learning models, language-conditioned multiagent models, and multimodal embodied models. We discuss their potential medical applications in addition to the challenges and future directions. Importantly, we stress the need to align these models with human values and goals, such as using reinforcement learning from human feedback, to ensure that they provide accurate and personalized insights that support human decision-making and improve healthcare outcomes.

1 INTRODUCTION

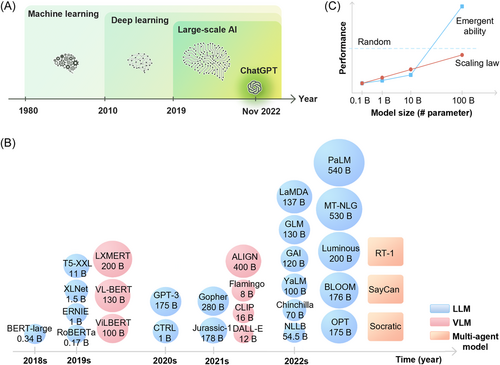

Integrating artificial intelligence (AI) into clinical practice can enhance the quality of health services. AI has shown promise in improving diagnostic accuracy and speed, as well as in efficiently reviewing large datasets. It can also reduce healthcare workload and create personalized treatment options. AI can monitor patients and provide dynamic feedback, leading to better and more personalized care. Moreover, AI is contributing to the development of new proteins and drugs, potentially accelerating medical discovery. As AI technology continues to evolve, we are witnessing a shift from small-scale machine/deep learning models to large-scale foundation AI models in the healthcare industry. In 2022 in particular, large-scale language models (LLMs) like ChatGPT1 and FLAN models2 demonstrated exceptional performance (Figure 1A). In general, small-scale AI systems are designed to perform specific and narrow tasks, such as analyzing medical images or monitoring health data. However, physicians are not satisfied with fixed, simple, and repetitive classification labels or instructions provided by AI models; they want cognitive labor that offers novel insights safely and at a low cost. While small-scale AI systems can be useful in many settings, they often lack human-like interfaces for interacting with users and receiving online human feedback, which makes it difficult for them to understand and learn the intellectual tasks that a human physician can perform.

Currently, there is no precise definition for a “large-scale” AI model. These models usually have a high number of parameters, often in the billions (Figure 1B). The size of an AI model is influenced by the size of the training data set, the processing power needed for training, and the number of model parameters. These factors collectively influence the AI model's performance. Recent research has suggested that some large-scale AI models may exhibit an “emergent ability” when they reach a certain threshold, resulting in a sudden surge in zero-shot performance (Figure 1C).3 It is expected that further scaling of models and data will unlock even more emergent abilities. As a result, the definition of a large-scale AI model is likely to change, with larger models possessing numerous emergent abilities not found in smaller models. To date, only a handful of large language models, including generative pre-trained transformer-3/-3.5 (GPT-3/-3.5), Chinchilla,4 and pathways language model (PaLM),5 have demonstrated emergent abilities, and the reasons for this phenomenon remain unclear. Typically, zero-shot performances increase exponentially when model parameters exceed 100 billion (Figure 1C). This could be attributed to the model's enhanced ability to learn intricate connections between inputs and outputs. Researchers are currently investigating the impact of model size and other factors, such as architecture and training data, on emergent abilities.

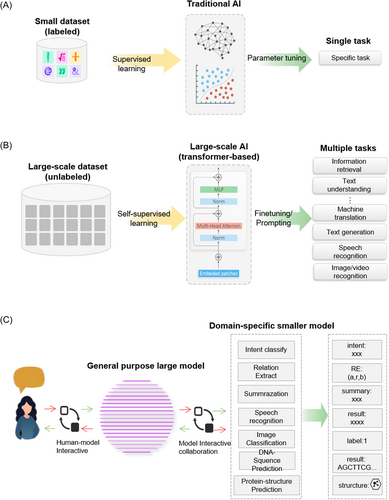

In the medical field, it is crucial to distinguish between models that possess emergent abilities and those that do not. Models with emergent abilities,3 known as EA-LLMs, can be valuable for complex tasks that require prompt engineering and generation, such as medical record abstraction, translation, and case report writing. These models are also suitable for human-like tasks, such as AI–physician interaction and AI–patient dialog, as well as tasks that have little to no annotated data and those that generalize outside of distribution. On the other hand, smaller models without emergent abilities may be more appropriate for tasks that have sufficient annotated datasets for fine-tuning, particularly structured tasks like knowledge retrieval and disease classification (Figure 2A,B). We expect a new intelligent system to emerge where EA-LLMs (instructed by prompt engineering techniques) act as communication channels between doctors and patients. These EA-LLMs can work with smaller LLMs to produce results and provide high-quality data to fine-tune these smaller models (Figure 2C). This system would offer new research directions on deploying EA-LLMs, improving model architectures, and designing prompt instructions for reliable performance. Implementing such a system can expedite the integration of AI into healthcare and medical tasks, such as diagnosis, prognosis, and therapeutic decisions, leading to better patient outcomes and reduced workload for healthcare providers. Moreover, large-scale AI models can facilitate analytics for vast electronic health records (EHRs), clinical, genomic, and image data, enabling personalized and accurate insights for individual tasks. The use of large-scale AI models in healthcare can reduce healthcare costs while enhancing healthcare delivery quality. In the future, multiple intelligent agents in robotics and autonomous systems may collaborate to provide healthcare services. 
The strong language capabilities of large-scale AI models will enable effective communication and coordination among agents and humans, further improving the accuracy and efficiency of healthcare tasks and ultimately leading to better patient outcomes.

Previous publications have not specifically reviewed the potential applications of large-scale AI models, also known as foundation models, in healthcare. At present, these types of models are not used in medical applications, and there is limited research and discussion on their limitations and challenges.6 Previous reviews have focused on the applications of language models (not necessarily large-scale AIs) in healthcare, particularly in biomedical text pretraining and natural language processing (NLP) tasks. For example, Wang et al.7 reviewed the recent advances and applications of pretrained language models in the biomedical domain, covering various pretrained models trained on biomedical datasets such as biomedical text, EHRs, protein, and DNA sequences. Kalyan et al.8 provided a comprehensive overview of various transformer-based pretrained language models in the biomedical domain. Despite the rise in large-scale AI models, the field presently lacks a comprehensive review. The recent development of ChatGPT1 and GPT-49 has raised hopes for the implementation of large-scale AI in medicine. We present a systematic survey that examines the current state of the field to help individuals with distinct backgrounds understand, use, and create large-scale AI models for various medical tasks. This review does not focus on diffusion models,10 another class of large-scale AI models. Recently, latent diffusion models have gained popularity due to their ability to produce high-quality medical images that can be controlled by conditioning the denoising process, for example with text prompts. Kazerouni et al.11 have reviewed the taxonomy and uses of diffusion models in medical imaging (including denoising medical images, detecting lesions, modality translation, and increasing the size of medical image databases).

In this paper, we present an overview of five classes of advanced large-scale AI models: language models, vision-language models, graph learning models, language-conditioned multiagent models, and multimodal models. The structure of the paper is as follows: In Section 2, we provide history and background information on large-scale AI models and their fundamental concepts. In Sections 3 to 7, we introduce language models, vision-language models (VLMs), graph learning models, language-conditioned multiagent models, and multimodal models, respectively, highlighting their opportunities and applications in the medical domain. In Sections 8 and 9, we delve into the challenges and potential future developments of these advanced AI techniques in the medical domain. Finally, in Section 10, we conclude the paper.

The review introduces basic concepts such as LLMs, self-supervised learning, pretraining tasks, and fine-tuning methods, providing readers with a solid foundation. We also explore biomedical embedding types and their medical applications, discuss progress in vision-language and language-conditioned models, and identify limitations and future trends in healthcare. Overall, this review serves as a valuable resource for individuals from diverse backgrounds looking to understand, utilize, and develop large-scale AI models for healthcare tasks.

2 LARGE-SCALE AI MODELS

2.1 Paradigm shifts in AI

There have been many different kinds of large-scale AI models created recently, and they have caused a major change in the field of AI. Diffusion models, variational autoencoders,12 generative adversarial networks,13 transformer models like bidirectional encoder representations from transformers (BERT) and GPT,14 and other architectures like reinforcement learning models, hybrid models, and graph neural networks (GNNs)15 are a few examples. We summarize the two major paradigm shifts in NLP to represent the most recent developments in AI because the field of NLP is developing quickly and its developments can be used for tasks other than language data. We should be mindful that the field is always changing and that new paradigm shifts could occur soon.

The transition from conventional machine learning to deep learning techniques was the first paradigm change in AI that took place in the 2010s (Figure 1A). The ability to train deep neural networks to accomplish state-of-the-art success on a variety of tasks was enabled by the development of more potent hardware and the accessibility of moderate amounts of data.

These deep learning models relied on techniques such as using improved long short-term memory (LSTM)16 and convolutional neural network (CNN) models17 as feature extractors, and using the sequence-to-sequence model with attention as the overall technical framework for specific tasks. The main focus was on improving the capacity and depth of the model by continually adding deeper layers of LSTM and CNN to the encoder and decoder. However, these efforts did not result in significant improvements in solving specific AI tasks compared to nondeep learning methods. The main factors that held back the success of deep learning are: (1) insufficient training data for specific tasks, which resulted in a lack of support for models as their capacity increased; (2) inadequate ability of traditional LSTM and CNN feature extractors to store and effectively utilize the knowledge within the data.

These limitations led to the second paradigm change in recent years, which is the transition from deep learning to pretrained models. Large-scale pretrained models like BERT18 and GPT-3,19 which can acquire general-purpose language representations that can be tailored for a variety of tasks, have contributed to this shift.

Large-scale pretrained models can effectively acquire knowledge from a large amount of labeled and unlabeled data, due to their massive model parameters and complex pretraining objectives. This knowledge is implicitly stored in the parameters and can be applied to specific downstream tasks through fine-tuning. The current consensus in the AI community is to use large-scale pretrained models as the backbone for downstream tasks instead of learning models from scratch (Figure 2A,B).

The second paradigm shift has had a twofold technical impact. First, transformer-based models are becoming increasingly popular as feature extractors in different subfields of AI. The transformer is parallelizable, which means that it can be trained on broad datasets at scale and can be implemented on powerful graphics processing unit (GPU)/tensor processing unit (TPU) hardware. Transformer-based models are also versatile, which means that they can be used for various language, image, and video processing tasks. In addition, these models learn general language representations and generalize to new tasks.

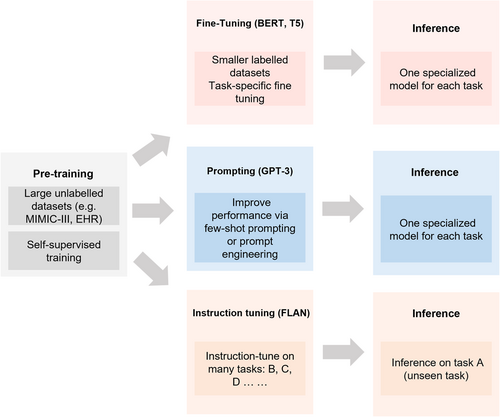

Second, many methods have been developed for adapting large-scale AI models to specific tasks, such as fine-tuning, prompting,20 and instruction tuning.2 The differences between them are illustrated in Figure 3. Fine-tuning involves retraining a pretrained large-scale AI model on a smaller, task-specific data set to improve its performance on specific domains. This process requires many task-specific examples and results in a specialized model for each task. Prompting, by contrast, can improve the few-shot performance of large models. Prompting adds context-rich text to unannotated data during pretraining, which helps the model focus on language generation tasks with masked inputs. Instruction tuning involves fine-tuning the model using a diverse set of natural language instructions, emphasizing language understanding. Unlike prompting, instruction tuning enables the model to process unseen tasks effectively and substantially improves the zero-shot performance of models.
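As a rough illustration of the difference between a zero-shot and a few-shot prompt at inference time, the sketch below assembles both from plain strings. The helper `build_prompt`, the Q/A layout, and the example sentences are hypothetical and are not taken from any of the cited works; no model is called.

```python
def build_prompt(instruction, query, examples=()):
    """Assemble a text prompt: instruction, optional solved examples, then the query."""
    parts = [instruction]
    for question, answer in examples:
        # Each solved example demonstrates the expected input/output format.
        parts.append(f"Q: {question}\nA: {answer}")
    # The final question is left unanswered for the model to complete.
    parts.append(f"Q: {query}\nA:")
    return "\n\n".join(parts)

# Hypothetical task and sentences, for illustration only.
instruction = "Answer yes or no: does the sentence mention an eye disease?"
query = "The infant was referred for suspected congenital glaucoma."

zero_shot = build_prompt(instruction, query)
few_shot = build_prompt(
    instruction,
    query,
    examples=[
        ("The patient reports blurred vision due to cataract.", "yes"),
        ("The patient was admitted with a fractured wrist.", "no"),
    ],
)
print(few_shot)
```

The zero-shot prompt relies entirely on the instruction, whereas the few-shot prompt also shows the model two solved cases before posing the new question.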

Today, AI is already part of medical technology. Some argue that it can never reach the intelligence or reasoning level of the human brain and is merely a product of computing power and statistical techniques. However, recent advances in large-scale AIs like GPT-4 suggest that human-like artificial intelligence is possible, and Sam Altman, CEO of OpenAI, suggests that the cost will soon be near zero. Importantly, these advances have created a new human–computer interface that allows the general public and healthcare professionals to interact with AI on their laptops or mobile phones without the need for a layer of technical packaging. On March 15, 2023, OpenAI released GPT-4, which differs from GPT-3.5 in that it can recognize and analyze images. GPT-4 can accept both image and text inputs and output text. On March 17, 2023, Microsoft launched Microsoft 365 Copilot, integrating GPT-4 into the Office software system.

2.2 Technical architecture of large-scale AI model

As this is a healthcare review article, we do not delve into the technical details of neural networks and their mathematics. Instead, we introduce some fundamental transformer concepts. We use ChatGPT, an LLM, as an example to explain the key technical logic involved in its development. By understanding ChatGPT's development process, one can gain insights into the basic ideas and procedures of the computational framework for LLMs. ChatGPT is a combination of “Chat,” which refers to conversational chat, and “GPT,” which stands for generative pretrained transformer. The name thus reflects three essential components: generative, pretraining, and transformer. Therefore, our technical discussion will cover the following components: (1) transformer, (2) pretraining, (3) generative mode, and (4) boosting and alignment methods.

2.2.1 Transformer

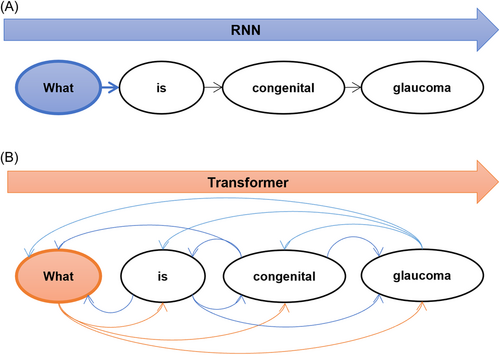

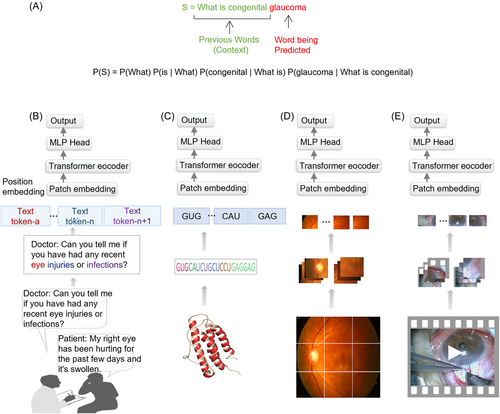

As mentioned above in the paradigm shift paragraph, there are shortcomings in recurrent neural network (RNN) models in the era of deep learning. To clarify this point, we provide an example (with some differences from the actual calculated values) to illustrate how the sentence “What is congenital glaucoma” is computed in the RNN (Figure 4A). First, we need to compute “What” and “What is congenital glaucoma” to get the result set “$What.” Then, based on “$What,” we compute “is” and “What is congenital glaucoma” to get “$is”. We repeat these steps to compute every token in the sentence, including “$congenital” and “$glaucoma.” The calculation process is thus a single-direction pipeline, where each step depends on the previous one, which makes it slow.
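To make this sequential dependency concrete, the following minimal sketch runs a vanilla recurrent update over four token vectors. It assumes a simple tanh recurrence with random weights and random stand-in embeddings; the function name `rnn_forward` and all sizes are illustrative and do not reproduce the values in Figure 4A.

```python
import numpy as np

def rnn_forward(token_vectors, w_in, w_rec):
    """Run a vanilla RNN over a sequence and return one hidden state per token."""
    hidden = np.zeros(w_rec.shape[0])
    states = []
    for x in token_vectors:
        # Step t needs the hidden state of step t-1, so the loop cannot be parallelized.
        hidden = np.tanh(w_in @ x + w_rec @ hidden)
        states.append(hidden)
    return np.stack(states)

rng = np.random.default_rng(0)
tokens = ["What", "is", "congenital", "glaucoma"]
embed_dim, hidden_dim = 8, 16  # illustrative sizes (assumption)
embeddings = rng.normal(size=(len(tokens), embed_dim))  # stand-in word embeddings
w_in = rng.normal(scale=0.1, size=(hidden_dim, embed_dim))
w_rec = rng.normal(scale=0.1, size=(hidden_dim, hidden_dim))

states = rnn_forward(embeddings, w_in, w_rec)
print(states.shape)  # one hidden state per token
```

Because each hidden state is computed from the previous one, the four steps must run one after another, which is the bottleneck described above.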

The transformer has revolutionized NLP. It uses an attention mechanism that reduces the distance between any two positions in a sequence to a constant. It is not based on a sequential structure like RNN, making it more parallelizable and compatible with existing GPU frameworks. Before the introduction of transformer, AI had been lagging behind in language-based tasks such as medical language processing (MLP). However, transformer quickly became a leading model in the field of MLP and sparked a wave of new tools such as Med-PaLM, which can be trained on large amounts of text data and generate coherent new medical text.

Take the same sequence “What is congenital glaucoma” as an example (Figure 4B). When this sentence is fed into a model, it is split into four words, or tokens. Every word is regarded as a token, and every token is represented by a word embedding. For the word “glaucoma,” the highest attention score is given to “glaucoma” itself (0.8). “What” and “is” are less relevant and receive lower attention scores (0.4 and 0.3, respectively). The link between “congenital” and “glaucoma” is comparatively strong and yields a higher attention score (0.7). Taken together, the attention scores for the word “glaucoma” read (0.4, 0.3, 0.7, 0.8).

The underpinning of this procedure is the Word2Vec21 embedding technique, which turns each word into an N-dimensional vector. By learning the context in which different words appear in the text corpus, Word2Vec maps words that are semantically similar to nearby points in vector space, creating a digital representation of the text. GPT uses these digitized vectors to quantify the relationships between words and explore the connections between them.
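The idea that semantically similar words lie close together can be illustrated with cosine similarity. The sketch below uses three hand-written, three-dimensional vectors that are purely hypothetical; real embeddings are learned from a corpus and typically have hundreds of dimensions.

```python
import numpy as np

# Hypothetical toy embeddings, chosen by hand for illustration only.
toy_embeddings = {
    "glaucoma": np.array([0.9, 0.8, 0.1]),
    "cataract": np.array([0.8, 0.9, 0.2]),
    "what": np.array([0.1, 0.0, 0.9]),
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

related = cosine_similarity(toy_embeddings["glaucoma"], toy_embeddings["cataract"])
unrelated = cosine_similarity(toy_embeddings["glaucoma"], toy_embeddings["what"])
print(f"glaucoma vs cataract: {related:.2f}")
print(f"glaucoma vs what:     {unrelated:.2f}")
```

With these toy vectors, the two disease terms score much higher than the disease term paired with a function word, mirroring the behavior expected from learned embeddings.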

Inspired by this processing, which divides data into patches and projects them linearly into tokens, the transformer architecture is also capable of processing a variety of data types, including text, biological and chemical sequences, images, and audio (Figure 5). Its emergence has revealed the potential for integration of different subfields of AI, which were previously disconnected. In 2021, the vision transformer (ViT)22 was introduced, which has a similar architecture to the original transformer, but can analyze medical images as well as medical texts. Traditional methods of processing language sequences cannot be applied directly to pixels because this would be computationally expensive. Instead, medical images are divided into square units, which can be adjusted in size based on the resolution of the original image. By processing units in groups and applying self-attention, ViT can quickly process large medical datasets, resulting in highly accurate classifications and diagnoses.
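The patch-and-project step can be sketched in a few lines. The example below is a simplified illustration, not the ViT implementation: it assumes a 224 × 224 single-channel stand-in image, 16 × 16 patches, and a random projection matrix in place of learned weights, and it omits position embeddings and the class token.

```python
import numpy as np

def image_to_patches(image, patch_size):
    """Split an (H, W, C) image into flattened, non-overlapping square patches."""
    height, width, channels = image.shape
    if height % patch_size or width % patch_size:
        raise ValueError("image size must be divisible by patch_size")
    rows, cols = height // patch_size, width // patch_size
    patches = image.reshape(rows, patch_size, cols, patch_size, channels)
    patches = patches.transpose(0, 2, 1, 3, 4)  # group the pixels of each patch together
    return patches.reshape(rows * cols, patch_size * patch_size * channels)

# Stand-in for a grayscale scan (assumption); real pixel data would be loaded from file.
image = np.arange(224 * 224, dtype=np.float32).reshape(224, 224, 1)
patches = image_to_patches(image, patch_size=16)

rng = np.random.default_rng(0)
projection = rng.normal(scale=0.02, size=(patches.shape[1], 64))  # random, not learned
tokens = patches @ projection  # one 64-dimensional token per patch
print(patches.shape, tokens.shape)
```

Each row of `tokens` then plays the same role as a word embedding in a text transformer.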

ViTs have shown remarkable results on benchmarks such as ImageNet, COCO, and ADE20k, outperforming CNNs. ViTs' superior modeling capabilities in the medical domain include their ability to: (1) effectively learn long-term dependencies through the attention mechanism, (2) effectively integrate multiple medical modalities, and (3) provide more interpretable models through the multihead attention structure. These advantages make ViTs more efficient and similar to human perception in the medical domain when compared to CNNs. Despite the progress made by ViTs in the field of medical imaging, many new models still incorporate elements from CNNs. This suggests that future models will likely use a combination of transformer and CNN models, rather than completely abandoning the use of CNNs in medical imaging.

We avoid delving into the technical intricacies of the transformer structure and matrix computation methods because this is a review in the medical field. In transformer's architecture, each word in self-attention contains three separate vectors: a key vector (K), a value vector (V), and a query vector (Q). Their particular meanings will not be discussed in this article. Readers interested in these technical details can refer to Jay Alammar's blog (http://jalammar.github.io/illustrated-transformer/).
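For readers who nonetheless want a concrete picture, the following sketch shows single-head scaled dot-product self-attention as described in “Attention is all you need.” The weights and token embeddings are random placeholders and all sizes are illustrative assumptions, so the resulting numbers will not match the scores shown in Figure 4B.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a sequence of token vectors."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v      # query, key, and value vectors per token
    scores = q @ k.T / np.sqrt(k.shape[-1])  # similarity of every token pair
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
tokens = ["What", "is", "congenital", "glaucoma"]
d_model, d_head = 8, 4  # illustrative sizes (assumption)
x = rng.normal(size=(len(tokens), d_model))  # stand-in token embeddings
w_q, w_k, w_v = (rng.normal(size=(d_model, d_head)) for _ in range(3))

output, weights = self_attention(x, w_q, w_k, w_v)
print(np.round(weights[-1], 2))  # attention paid by "glaucoma" to each token
```

All token pairs are scored in a single matrix product, which is why this computation parallelizes well on GPUs, unlike the step-by-step RNN update.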

2.2.2 Pretrained model

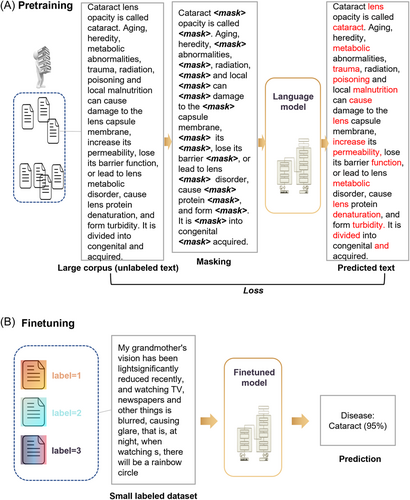

To improve language processing, the GPT23 model was developed using the transformer architecture, which addresses the constraints of sequential dependency and linguistic dependency. The approach has two stages: the model is first pretrained in an unsupervised manner, without human intervention or labeled datasets (Figure 6A), and is then refined through supervised fine-tuning to improve its understanding of a specific task (Figure 6B).

2.2.3 Generative mode

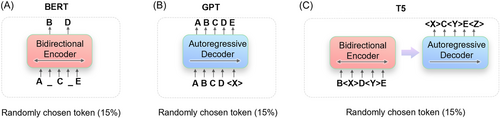

The original transformer model in the Google paper “Attention is all you need” consists of two parts: the encoder and the decoder. The former encodes the input sequence, and the latter generates the output sequence. Google focused on the encoder and built the BERT model. The “bidirectional” in BERT means that it predicts words using both preceding and following contexts, making BERT more adept at natural language understanding (NLU) tasks.

GPT is based on the transformer but simplifies the model by removing the encoder and retaining only the decoder. In addition, unlike BERT's bidirectional context prediction, GPT uses only the preceding context to predict words (i.e., it is unidirectional), making the model simpler and faster to compute and more suitable for natural language generation tasks. This resembles how a person infers the next sentence from the previous one.
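The difference between bidirectional and unidirectional context can be expressed as an attention mask. The sketch below is a simplified illustration with a hypothetical helper `attention_mask`; entry (i, j) is True when token i is allowed to attend to token j.

```python
import numpy as np

def attention_mask(num_tokens, causal):
    """Return a boolean mask; row i marks the tokens that token i may attend to."""
    full = np.ones((num_tokens, num_tokens), dtype=bool)
    # A causal (GPT-style) mask keeps only the current and preceding positions.
    return np.tril(full) if causal else full

bert_style = attention_mask(4, causal=False)  # every token sees the whole sentence
gpt_style = attention_mask(4, causal=True)    # every token sees only its left context

print(gpt_style.astype(int))
# [[1 0 0 0]
#  [1 1 0 0]
#  [1 1 1 0]
#  [1 1 1 1]]
```

In the causal mask, the last token can attend to all four positions while the first token sees only itself, which is what allows text to be generated one token at a time.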

2.2.4 Boosting and alignment methods

- a. Reasoning prompting

Large language models have shown their ability to generalize in context by adapting to downstream tasks with a few in-context examples or a natural language task description.19 Chain-of-thought (CoT) prompts24 are instructions that elicit step-by-step reasoning before the final answer is produced. The zero-shot CoT prompt simply appends the phrase “Let's think step by step.” CoT demonstrations can be created manually (Manual-CoT) or generated automatically (Auto-CoT).25 Few-shot-CoT typically outperforms zero-shot-CoT.26

- Self-consistency: Majority voting on the randomly sampled CoT generations.27

- Ask-me-anything prompting: Prompt-aggregation strategy to improve performance.28

- Verify-and-edit: Postediting reasoning chains according to external knowledge.29

- Multimodal-CoT: Incorporating language and vision modalities into a framework.25
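Of the techniques listed above, self-consistency is the simplest to sketch: several reasoning chains are sampled for the same question and the most frequent final answer is returned. The example below assumes the final answers have already been extracted from the sampled chains; the helper name and the five answers are hypothetical, and no model is called.

```python
from collections import Counter

def self_consistency_vote(final_answers):
    """Return the most frequent answer and the fraction of samples that agree with it."""
    if not final_answers:
        raise ValueError("at least one sampled answer is required")
    counts = Counter(final_answers)
    answer, votes = counts.most_common(1)[0]
    return answer, votes / len(final_answers)

# Hypothetical final answers extracted from five sampled reasoning chains.
sampled_answers = ["B", "B", "C", "B", "A"]
answer, agreement = self_consistency_vote(sampled_answers)
print(answer, agreement)  # B 0.6
```

The agreement ratio can also serve as a rough confidence signal: a low value suggests that the sampled chains disagree and the answer deserves closer review.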

- b. Alignment and scalable oversight

The purpose of alignment is to guarantee that LLMs match human values and expectations.30 This is similar to a student who surpasses their teacher in intelligence, but the teacher can still offer feedback to help the student improve and become more disciplined. To ensure success, humans must establish clear objectives, assess whether the models have met them and adhered to social norms, and provide constructive feedback for enhancement.

This feedback can be fed to large models through reinforcement learning. Reinforcement learning allows the model to learn from its actions and improve based on the feedback it receives. OpenAI's InstructGPT,30 DeepMind's Sparrow,31 and Constitutional AI32 use reinforcement learning from human feedback (RLHF)33 to fine-tune the model. In RLHF, the model's responses are ranked based on human feedback, and these annotated responses are used to train a preference model, which returns a scalar reward to the RL optimizer. Finally, the conversational agent is trained with reinforcement learning to maximize the reward returned by the preference model. In ChatGPT, OpenAI used proximal policy optimization34 to fine-tune the model to meet human needs. Many other reinforcement learning algorithms can also be used to optimize the policy of the agent in a given environment. The reinforcement learning approach can also be applied to other types of data such as medical images and videos, with the potential to achieve results similar to those of ChatGPT. As more research is conducted in this area, we can expect to see larger and more capable models. Such models could be used to identify abnormalities in medical images, such as X-rays or computed tomography (CT) scans. Furthermore, they can be employed in image-based drug discovery, by analyzing high-resolution images of cells or molecules.
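The preference model at the center of RLHF is commonly trained with a pairwise ranking loss: for each pair of responses, the loss is small when the human-preferred response receives the higher scalar reward. The sketch below shows one widely used formulation, the negative log-sigmoid of the reward difference. The reward values are hypothetical numbers rather than outputs of a real model, and the subsequent policy optimization step (e.g., proximal policy optimization) is not shown.

```python
import numpy as np

def pairwise_preference_loss(reward_preferred, reward_rejected):
    """Mean of -log(sigmoid(r_preferred - r_rejected)) over all response pairs."""
    diff = np.asarray(reward_preferred, dtype=float) - np.asarray(reward_rejected, dtype=float)
    # -log(sigmoid(d)) equals log(1 + exp(-d)); logaddexp evaluates it stably.
    return float(np.mean(np.logaddexp(0.0, -diff)))

# Hypothetical scalar rewards for three response pairs ranked by human annotators.
preferred = [2.0, 1.5, 0.3]
rejected = [0.5, -1.0, 0.1]

loss_agree = pairwise_preference_loss(preferred, rejected)     # rewards match the ranking
loss_disagree = pairwise_preference_loss(rejected, preferred)  # rewards contradict it
print(f"{loss_agree:.3f} < {loss_disagree:.3f}")
```

Minimizing this loss pushes the preference model to score preferred responses above rejected ones, and the resulting scalar reward is what the reinforcement learning step then tries to maximize.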

2.3 Advanced models that better serve human needs

Future models should be situated in real-world environments so that they can interact with their surroundings and learn causal relationships through physical interaction, as humans do. Some researchers focus on embodied AI,35 that is, AI agents that can move and interact with their environments in simulations of three-dimensional (3D) virtual worlds. The interactivity of embodied agents allows them to learn in a new way by continuously receiving new observations from the environment that can help correct their behavior. However, current technology is not yet mature or robust enough for these agents to perform daily tasks such as manipulating objects, moving in complex environments, or operating on patients. Additionally, they are not yet safe enough to interact with humans and natural environments.

In the future, with the aid of large-scale AIs, robots are expected to act independently and intelligently in the real world, achieving their goals safely and reliably. This could lead to the development of intelligent robot doctors, capable of diagnosing patients, making clinical decisions, and performing detailed body examinations and surgeries using flexible limbs equipped with multisensors. However, there are still challenges to overcome, such as ensuring patient safety and the reliability of the robot's decision-making processes. Ethical considerations, such as potential job loss for human healthcare providers, must also be taken into account.

2.4 Comparison of democratization and open-source level of large-scale AIs

The level of openness and democratization of LLMs is a topic of concern. Compared with OpenAI's GPT-3.5, Meta's LLaMA model36 is positioned as an “open-source research tool” trained on various publicly available datasets, including Common Crawl (CC), Wikipedia, and C4 (Table 1). LLaMA's pretraining data are all publicly available, whereas only part of GPT-3.5's pretraining data (currently the CC data) is available, making LLaMA more user-friendly in terms of data accessibility. GPT-3.5 is several times larger than LLaMA. GPT-3.5 is a commercial model that can only be accessed through an API, although developers can customize it; LLaMA is an open-source, noncommercial model that offers only limited customization. Importantly, LLaMA provides underlying code for users to adjust the model and address risks such as bias, harmful comments, and fabricated facts.

| Features | LLaMA | GPT-3.5 |
|---|---|---|
| Model size | 7B/13B/33B/65B | 175B |
| Availability | Open source (noncommercial) | Not open source (commercial) |
| Customization | Limited customization | Customization for developers |
| Pretrain data source | CC, C4, GitHub, Wikipedia, Books, ArXiv, Stack Exchange | CC, WebText2, Reddit Links, Books, Journals, Wikipedia |
| Language quality | May not be as powerful | Very sophisticated language |
| Data publicity | All public | Part public |

- Note: GPT-3.5 is a commercial language model that is larger in size, provides the option for customization, and has better Chinese language support and high intelligence and inference abilities. In contrast, LLaMA is an open-source, noncommercial alternative that is smaller in size, provides public access to its pre-training data, but has a weaker ability to process Chinese and may not perform as well in reasoning and generating abilities.

2.5 Large-scale AIs in different modalities for different tasks

- Large-scale language models (LLMs): These models have the potential to be applied in several medical applications, such as NLP of electronic medical records and biological and chemical sequences. They can also assist in medical diagnosis by analyzing patient data and providing treatment recommendations.

- Large-scale vision-language models (VLMs): These models can be utilized in the identification of abnormalities in medical images, such as X-rays or CT scans. Furthermore, they can be employed in image-based drug discovery, by analyzing high-resolution images of cells or molecules.

- Large-scale graph learning models (LGMs): These models can simulate interactions between drugs and proteins, aiding in drug discovery and development.

- Large-scale language-conditioned multiagent models (LLMMs) and large-scale multimodal models (LMMs): These models can simulate virtual interactions between patients and doctors, enabling training and assessment of medical decision-making and communication skills.

3 LARGE-SCALE LANGUAGE MODELS

- BERT: Google's BERT is a language model trained on vast amounts of unlabeled text data, including Wikipedia and BooksCorpus, using an encoder-only approach. BERT can be fine-tuned for various medical NLU tasks.18

- GPT-3: OpenAI's GPT-3 has 175 billion parameters and uses a unidirectional decoder-only autoregressive architecture for text-based generative tasks.19

- T5: It is a language model that uses an encoder–decoder architecture and can perform multiple tasks by fine-tuning on specific tasks using a smaller data set.40

The main distinctions between these three types of LLMs are illustrated in Figure 8. ChatGPT is derived from GPT-3 but its success does not render BERT and T5 obsolete.

3.1 The use of LLMs in biomedical text

-

BioBERT: A language model that specializes in understanding biomedical text.41 It outperforms other models, including BERT, on various biomedical text mining tasks due to its pretraining on large-scale biomedical corpora. This pretraining enables BioBERT to better comprehend complex biological literature.

-

ClinicalBERT: Trained on clinical notes/EHR in the publicly available MIMIC-III database.42 The model pretrains a BERT-based model using this clinical information and fine-tunes the network to predict the likelihood of hospital readmission. By analyzing healthcare professionals' notes about a patient, ClinicalBERT can update the patient's risk score for readmission, providing a more accurate prediction.

-

PubMedGPT: A biomedical domain-specific model. The Stanford Center for Research on Foundation Models trained a 2.7B parameter GPT on biomedical data from PubMed using the MosaicML Cloud platform, yielding state-of-the-art results on a medical question and answer text from the US Medical Licensing Exam (USMLE).43 Their results showed that it is the initial stage in developing foundation models to assist biomedical research.

-

ChatGPT: It has demonstrated the human-level ability to reason about medical questions. Liévin et al.44 applied the human-aligned GPT-3 (InstructGPT)30 to answer multiple-choice medical exam questions (USMLE and MedMCQA) and medical research questions (PubMedQA). The authors investigated CoT prompts, grounding, and few-shot prompts. They found that InstructGPT performed well but had a tendency to provide biased predictions when unable to answer. The study suggests that further improvement can be made by scaling the model, enhancing prompt alignment, and allowing for better contextualization. Kung et al.45 evaluated the performance of ChatGPT on the USMLE, which is divided into three exams: Step 1, Step 2CK, and Step 3. Without any specialized training or reinforcement, ChatGPT performed at or near the passing threshold for all three exams. Furthermore, ChatGPT showed a high level of concordance (94.6%) and provided insightful explanations. These findings suggest that LLMs may have the potential to aid in medical education and possibly in the decision-making process in clinical settings.

-

Med-PaLM: A large language model designed to answer healthcare-related questions based on the 540-billion parameter PaLM model.46 It was evaluated on the consumer medical question answering datasets of MultiMedQA. A panel of clinicians judged 92.6% of Med-PaLM's answers to be aligned with scientific consensus, a rate comparable to that of clinician-written answers.

Overall, LLMs can potentially reach human-level performance on many medical tasks.

3.2 The use of LLMs in the medical dialog system

Medical dialog systems are designed to simulate human-like conversation to assist with medical tasks such as diagnosis, treatment recommendations, and providing information about medical conditions. Recently, LLMs have been fine-tuned for medical dialog tasks.4, 41, 44 The most common approach is to pretrain a language model on a large general corpus and then fine-tune the model using a medical discourse data set, such as MedDialog47 and MedDG.48 Although these models are a major step forward in the field of NLP, none of them seems ready to generate human-like dialog.

ChatGPT, a large language model developed by OpenAI, has been a game changer. It was first released in November 2022 (https://openai.com/blog/chatgpt/) and quickly gained widespread popularity among researchers and developers due to its ability to produce highly human-like text and perform a wide range of NLP tasks. However, it is not entirely clear what factors have contributed to its exceptional performance. One possibility is that OpenAI trained ChatGPT using a technique called RLHF. In reinforcement learning, an agent is trained to complete tasks in an environment where it receives rewards. The agent interacts with the environment iteratively by taking actions, receiving feedback, and modifying its actions to better understand the world and receive greater rewards. To train ChatGPT, the model was prompted with questions, various responses were generated, and then the responses were manually ranked. These rankings were then used to train a reward model. Finally, the language model was fine-tuned to answer queries using reinforcement learning, with the goal of maximizing the output of the reward model.
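The reward-modeling step described above can be sketched in a few lines. This is a minimal illustration, assuming a Bradley-Terry style pairwise loss, -log sigmoid(r_preferred - r_rejected), which is a common way to learn from rankings; the source does not state which loss OpenAI used. The linear reward model, the two-dimensional features, and the toy pairs are all hypothetical.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def reward(weights, features):
    # Hypothetical linear reward model: one scalar score per response.
    return sum(w * f for w, f in zip(weights, features))

def pairwise_loss(weights, preferred, rejected):
    # Low when the human-preferred response scores higher than the rejected one.
    margin = reward(weights, preferred) - reward(weights, rejected)
    return -math.log(sigmoid(margin))

def train_reward_model(pairs, dim, lr=0.5, epochs=200):
    weights = [0.0] * dim
    for _ in range(epochs):
        for preferred, rejected in pairs:
            margin = reward(weights, preferred) - reward(weights, rejected)
            # Gradient descent on -log(sigmoid(margin)) with respect to the weights.
            scale = 1.0 - sigmoid(margin)
            for i in range(dim):
                weights[i] += lr * scale * (preferred[i] - rejected[i])
    return weights

# Toy ranked pairs (preferred, rejected); features are made up for illustration,
# e.g. [factual accuracy, verbosity].
ranked_pairs = [
    ([0.9, 0.2], [0.3, 0.8]),
    ([0.8, 0.5], [0.2, 0.5]),
    ([0.7, 0.1], [0.4, 0.9]),
]
weights = train_reward_model(ranked_pairs, dim=2)
```

In a full RLHF pipeline, the learned reward would then score new model outputs during the reinforcement learning stage; that stage is not shown here.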

Google is taking up the GPT challenge to build a PaLM system5 that can generate human-like text, but it remains to be seen if it is able to achieve the same level of human-like text generation as ChatGPT. PaLM utilizes the pathways system, a novel machine-learning technology that allows for the efficient training of very large neural networks using thousands of accelerator processors. This training was done using two Cloud TPU v4 Pods, with data and model parallelism applied at the Pod level, making it the largest TPU-based system configuration used for training to date. Additionally, PaLM utilizes the decoder-only Transformer model architecture and has a parameter size of 540B. The model achieved state-of-the-art results on 28 out of 29 commonly assessed English NLP tasks, such as natural language inference, common-sense reasoning, question-answering, and in-context reading comprehension tasks, due to its large scale of parameters and exceptional few-shot performance.

3.3 The use of LLMs in biological and chemical sequences

In recent years, transformer-based LLMs have been successful in analyzing lengthy DNA sequences. DNABERT49 is a pretrained bidirectional encoder representation that can comprehend global and transferable genomic DNA sequences based on upstream and downstream nucleotide contexts. Enformer,50 developed by DeepMind, is another transformer example that uses self-attention mechanisms to integrate more DNA context, resulting in increased accuracy in predicting gene expression from DNA sequences. Further research is needed to address open questions in this field, such as identifying the functions of multiple trans-acting factors and cis-acting DNA elements, as well as predicting the binding sites of enzyme molecules.

Apart from genomics, BERT-style models have also been applied to predict the structure or functions of proteins with partially masked sequences. ESM51 and TAPE52 are transformer-based protein language models that have an architecture and training objective similar to BERT's. Other models for protein structure prediction include ProteinBERT53 and AlphaFold.54 On the other hand, GPT-based generative models such as ProtGPT255 and ProGen56 are being used for protein tasks. ProGen is trained on 280 million protein sequences and can generate a viable sequence according to the desired properties of a protein.

LLMs have also been used to predict the molecular properties of drug molecules, which can be useful for the discovery of small-molecule drugs. Researchers have used neural encoders to predict randomly masked tokens, similar to BERT, in works such as ChemBERTa,57 SMILES-BERT,58 and Molformer.59
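The masked-token objective shared by the BERT-style protein and molecule models above can be illustrated with a small data-preparation sketch. It assumes character-level tokens, a 15% masking rate (the rate used by the original BERT), and a `[MASK]` placeholder; the amino-acid string is arbitrary, and BERT's additional random-replacement rules are omitted for brevity. SMILES strings would need a chemistry-aware tokenizer rather than single characters.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_rate=0.15, seed=0):
    """Hide a random subset of tokens; return the masked input and the targets."""
    rng = random.Random(seed)
    n_mask = max(1, round(len(tokens) * mask_rate))
    positions = sorted(rng.sample(range(len(tokens)), n_mask))
    masked = list(tokens)
    labels = {}
    for pos in positions:
        labels[pos] = tokens[pos]   # what the model must predict at this position
        masked[pos] = MASK          # what the model actually sees
    return masked, labels

protein = list("MKTAYIAKQRQISFVKSHFSRQ")  # arbitrary example sequence
masked, labels = mask_tokens(protein)
```

During pretraining, the encoder receives `masked` and is trained to recover the residues stored in `labels` from the surrounding context.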

3.4 Summary

Currently, there is limited research on the advantages of pretraining medical-specific models from scratch versus fine-tuning general language models. Nonetheless, it is logical to suggest that constructing medical-specific models from the ground up requires significant time and resources, and may not be environmentally sustainable. A more viable solution is to utilize a pre-existing general language model and subsequently refine it with labeled biomedical data to promote eco-friendliness.

4 LARGE-SCALE VLMs

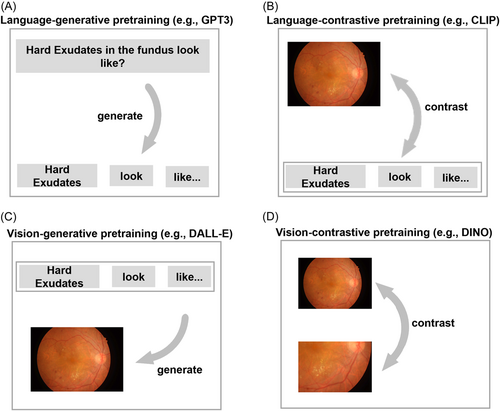

The combination of large language and vision models (VMs) has become a popular trend in AI research in recent years, resulting in the development of impressive applications such as VLMs.

VLMs are AI models that can process and generate natural language text in conjunction with visual data, such as images or videos. These models are typically trained on large datasets that consist of images or videos paired with descriptive text and can learn to generate natural language descriptions of the visual content. These models have the potential to capture relationships between different types of data and gain a more complete understanding of natural phenomena.

VLMs have the potential to be utilized in various medical applications. These include the automatic generation of medical reports, the annotation, and interpretation of medical images and videos, providing clinical decision support through the analysis of visual input, and aiding in medical research by processing large amounts of medical data that may lead to new discoveries and insights.

4.1 Representative VLMs: DALL-E, CLIP, ALIGN, Flamingo

-

DALL-E: A model developed by OpenAI that can generate images from text descriptions.60 It utilizes a Transformer architecture and is a multimodal implementation of GPT-3 with 12 billion parameters. It was trained on text–image pairs from the internet and is capable of generating a wide variety of images.

-

CLIP: A pretrained neural network that can predict the most relevant text snippet for a given image, using a contrastive learning approach (contrastive language-image pretraining).61 It is trained on a variety of (image, text) pairs and can be fine-tuned for various downstream tasks, such as image captioning and text classification. Like GPT-2 and GPT-3, CLIP has the ability to perform well on tasks it has not been specifically trained on, a capability known as zero-shot learning.

-

ALIGN: A pretrained model that learns to align the representations of images and text using a dual-encoder architecture and a contrastive loss (A Large-scale ImaGe and Noisy-text embedding).62 The model has been shown to perform well on a variety of vision-language tasks such as image-text retrieval and image captioning, and can be fine-tuned for specific tasks with minimal task-specific architectures.

-

Flamingo: An innovative approach that has the potential to improve the performance of a wide range of vision-and-language tasks with few-shot learning capabilities.63 Additionally, the ability to adapt quickly to new tasks makes Flamingo models well-suited for applications in real-world scenarios where data is constantly changing, such as in healthcare or retail. The models also have the ability to handle multimodal data, such as videos and images, making them versatile for a wide range of applications. Overall, Flamingo is a promising development in the field of VLMs and holds great potential for future advancements in the integration of vision and language in AI models.
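The contrastive objective behind dual-encoder models such as CLIP and ALIGN can be sketched as a symmetric cross-entropy over image-text similarities. This is an illustrative sketch rather than either model's actual implementation: the embeddings are hand-written toy vectors standing in for encoder outputs, and the temperature of 0.07 is an assumption.

```python
import math

def normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cross_entropy_row(logits, target):
    # Numerically stable -log softmax(logits)[target].
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_sum - logits[target]

def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric loss: image i should match text i, and text i should match image i."""
    images = [normalize(v) for v in image_embs]
    texts = [normalize(v) for v in text_embs]
    n = len(images)
    # Cosine similarity of every image with every text, scaled by the temperature.
    logits = [[sum(a * b for a, b in zip(img, txt)) / temperature for txt in texts]
              for img in images]
    loss_i2t = sum(cross_entropy_row(logits[i], i) for i in range(n)) / n
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    loss_t2i = sum(cross_entropy_row(columns[j], j) for j in range(n)) / n
    return (loss_i2t + loss_t2i) / 2

# Toy embeddings (hypothetical); row i of each list forms a matching pair.
image_embs = [[1.0, 0.0, 0.1], [0.0, 1.0, 0.1], [0.1, 0.0, 1.0]]
text_embs = [[0.9, 0.1, 0.0], [0.1, 0.9, 0.0], [0.0, 0.1, 0.9]]

aligned = contrastive_loss(image_embs, text_embs)
shuffled = contrastive_loss(image_embs, text_embs[1:] + text_embs[:1])
```

The loss is lower when matching pairs sit close together in the shared embedding space (`aligned`) than when the pairing is scrambled (`shuffled`), which is the signal that drives training.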

4.2 VLMs for biomedical research

-

MedViLL: A model that uses BERT architecture for cross-modal embedding to improve performance on diverse vision-language multimodal tasks in the medical domain, particularly using radiology images and unstructured reports (Medical Vision Language Learner).64

-

PubMedCLIP: A version of CLIP fine-tuned on a data set derived from PubMed articles to make it more applicable to the medical domain.65

-

Contrastive visual representation learning from text (ConVIRT): It can learn diagnostic labels for pairs of chest X-ray images and radiology reports.66

These models are still under development, and more research is needed to fully realize their potential in the medical field. Also, it is important to use these models under the guidance of a medical professional and use them as a support rather than a replacement for human decision-making.

4.3 Potential clinical applications of VLMs

-

Personalized decision-making: VLMs can be used to create multimodal learning models that predict postoperative deterioration events in surgical intensive care unit patients for precise early intervention by utilizing multimodal features from physiological signals and EHR data.67, 68

-

Monitoring patients: VLMs can be used to monitor patients in a remote-monitoring care setting. For example, integrating data from noninvasive devices such as smartwatches or bands with data from EHRs and other sensors can improve the reliability of fall detection systems69 and gait analysis performance.70 Additionally, multimodal learning models can be used to analyze EHRs and various vital signs for cardiovascular and respiratory monitoring71, 72 and to monitor patients with chronic or degenerative disorders by analyzing data such as weight, diet, sleep, and exercise. Ambient sensors can analyze patients' movements in the room and alert the care team when a fall is predicted, which could improve remote care systems at home and in healthcare institutions.73

-

Disease diagnosis and prognostication: VLMs have the potential to assist in disease diagnosis and prognostication. Several studies have used multiple modalities to improve predictive performance. For example, Huang et al.74 proposed a personalized diagnostic tool for automated thyroid cancer classification using multimodal information, and Mayya et al.75 developed an AI-based clinical decision support system for learning COVID-19 disease representations from multimodal patient data. Another bimodal study combined imaging features extracted from chest X-rays with clinical covariates, improving the diagnosis of tuberculosis in individuals with human immunodeficiency virus.76 Additionally, optical coherence tomography and infrared reflectance optic disc imaging have been combined to better predict visual field maps compared to using either modality alone.77

The integration of data from multiple modalities in VLMs can improve predictive performance and provide more accurate and personalized diagnosis and treatment options for patients.

5 LARGE-SCALE GLMs

Graph language models have been used to analyze biological sequencing data, such as protein and drug molecule sequences. The Deep Graph Library (DGL)78 framework for GNNs has been upgraded to version 1.0, with the addition of a library called DGL Sparse. This library provides sparse matrix classes and operations specifically for graph machine learning, making it easier to write GNNs from a matrix perspective.

-

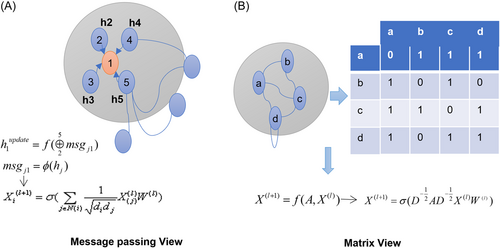

Message-passing view: A node's representation vector is calculated by aggregating and transferring information from its neighboring nodes through a loop. This process is similar to how humans learn knowledge by combining information from their peers with their existing knowledge. The message-passing neural network79 consists of two stages: message passing and readout phase (Figure 9A).

-

Matrix view: Expressing GNN models from a coarse-grained, global perspective, emphasizing operations involving sparse adjacency matrices and feature vectors. Both views are essential tools for studying GNNs and complement each other15, 78, 80 (Figure 9B).
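The two views can be compared on a toy graph. The sketch below is an assumption-laden illustration: it uses plain Python lists instead of DGL or a real sparse-matrix class, sum aggregation without self-loops, and no learned weights or readout phase. It shows only that one round of neighbor aggregation gives the same result as multiplying the adjacency matrix by the feature matrix.

```python
# Undirected toy graph with edges 0-1, 1-2, 2-3 and two features per node.
edges = [(0, 1), (1, 2), (2, 3)]
features = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0], [3.0, 1.0]]

def message_passing_step(edges, features):
    """Message-passing view: every node sums the feature vectors of its neighbors."""
    dim = len(features[0])
    out = [[0.0] * dim for _ in features]
    for u, v in edges:
        for d in range(dim):
            out[v][d] += features[u][d]  # message from u to v
            out[u][d] += features[v][d]  # message from v to u
    return out

def build_adjacency(edges, num_nodes):
    adj = [[0.0] * num_nodes for _ in range(num_nodes)]
    for u, v in edges:
        adj[u][v] = 1.0
        adj[v][u] = 1.0
    return adj

def matrix_step(adj, features):
    """Matrix view: the same aggregation written as the product A @ X."""
    dim = len(features[0])
    return [[sum(adj[i][k] * features[k][d] for k in range(len(features)))
             for d in range(dim)]
            for i in range(len(adj))]

by_messages = message_passing_step(edges, features)
by_matrix = matrix_step(build_adjacency(edges, len(features)), features)
```

Because the adjacency matrix of a real graph is mostly zeros, libraries store it in a sparse format so that the matrix product touches only existing edges.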

However, GNNs have limitations, such as limited expressive power,81 over-smoothing,82 and over-squashing.83 Over-smoothing occurs when all node representations converge to a constant after enough layers, while over-squashing occurs when too much information is compressed into a fixed-length vector, so that information from distant nodes cannot propagate effectively through certain “bottlenecks” in the graph. Therefore, designing new architectures beyond neighborhood aggregation, such as transformers, is crucial for addressing these issues.
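Over-smoothing can be reproduced on a toy example. The sketch below assumes the simplest possible layer, mean aggregation over each node and its neighbors with no learned weights or nonlinearity, applied to a hypothetical four-node path graph with scalar features. Real GNN layers are more complex, so this shows the tendency only.

```python
def mean_aggregate(neighbors, values):
    """One layer: each node averages its own value with its neighbors' values."""
    return [sum(values[j] for j in nbrs + [i]) / (len(nbrs) + 1)
            for i, nbrs in enumerate(neighbors)]

def spread(values):
    # Gap between the largest and smallest node representation.
    return max(values) - min(values)

neighbors = [[1], [0, 2], [1, 3], [2]]  # path graph 0-1-2-3
values = [0.0, 1.0, 2.0, 3.0]           # one scalar feature per node

spreads = []
for layer in range(50):
    values = mean_aggregate(neighbors, values)
    spreads.append(spread(values))
```

After a few dozen layers the entries of `values` are almost identical, so the nodes can no longer be told apart from their representations.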

-

Building transformer blocks on top of GNN

-

Alternately stacking GNN blocks and transformer blocks

-

Parallelizing GNN blocks and transformer blocks

-

Positional/structural encoding

-

Local message-passing mechanism

-

Global attention mechanism

-

Structure-aware transformer (SAT): Incorporating structural information into the original self-attention by extracting a subgraph representation rooted at each node before computing the attention. The authors report that SAT offers better model interpretability compared to the classic transformer with only absolute positional encoding.87

-

GraphiT: Including graph structure information by leveraging relative positional encoding strategies in self-attention scores based on positive definite kernels on graphs, and by enumerating and encoding local substructures such as paths of short length.88

5.1 Protein

There are similarities between natural language and protein sequences. Natural language, such as English, is composed of letters that form words through fixed combinations to convey meaning. It also has information completeness, meaning that understanding all the letters in a sentence provides complete understanding of the message. Similarly, proteins are composed of amino acid sequences and have reused modules made up of specific amino acid sequences. Once the amino acid sequence is determined, the protein's structure and function are also determined, providing information completeness.

However, there are differences between natural language and protein sequences. Natural language has a clear vocabulary and standardized punctuation, with relatively consistent sentence lengths. In contrast, proteins lack a clear vocabulary and have varying sequence lengths. Specific words in natural language often have a significant impact, while in proteins, this impact is cumulative. Additionally, natural language rarely has distant interactions, while proteins commonly have them due to their 3D network structure, allowing for interactions between distant amino acid residues. Therefore, incorporating graph network learning into protein sequence tasks is essential.

-

AlphaDesign: A new method called ADesign to improve accuracy by introducing protein angles as new features, using a simplified graph transformer encoder, and proposing a confidence-aware protein decoder.89

-

GOProFormer: A GO protein function prediction method that accounts for both protein sequence and the GO hierarchy in its learned representations.90

-

RTMScore: Introducing a tailored residue-based graph representation strategy and several graph transformer layers for the learning of protein and ligand representations, followed by a mixture density network to obtain residue–atom distance likelihood potential.91

-

GraphSite: AlphaFold2-aware protein–DNA binding site prediction.92

-

DProQ: A gated graph transformer for protein complex structure assessment.93

5.2 Drug molecules

-

DrugEx v3: A drug design approach that utilizes scaffold constraints and reinforcement learning based on graph transformers.94

-

MechRetro: A graph learning framework that employs chemical mechanisms to predict and plan pathways for retrosynthesis in an interpretable manner.95

-

MHTAN-DTI: A hierarchical transformer and attention network that uses metapaths to predict interactions between drugs and targets.96

5.3 Summary

Large graph models have several areas that require improvement, such as slow training, sensitivity to text or sequence length, overfitting, and interpretability challenges. Instead of pursuing more complex models, combining deep graph models with domain knowledge may enhance model performance. In the context of protein research, there are two key methods for improving large graph models: fine-tuning pretrained models and utilizing richer, higher-quality databases. Competitions such as CAFA and CASP promote protein prediction research and provide rigorous testing to evaluate algorithm quality. However, benchmark research for protein computation lags behind NLP and other machine learning fields. Therefore, establishing standardized and objective benchmarks is critical for evaluating different graph representation models and should be a future research direction.

6 LARGE-SCALE LANGUAGE-CONDITIONED MULTIAGENT MODELS

The integration of vision and language in language-VMs has the potential to significantly enhance AI's ability to understand and interact with the real world. Vision can provide a tangible grounding for AI, while language serves as a means of communication between humans and AI, as well as between different AI models. As advancements in this field continue to be made, the development of highly versatile AI assistants that can effectively interpret visual information and communicate with humans through language is likely to become a reality.

LLMMs utilize language as an intermediary interface among multiple large models, allowing them to leverage the strengths of each individual model to accomplish tasks that would be difficult for a single model to perform alone. This might include the use of LLMs, VLMs, and visual navigation models to perform more complex and multimodal tasks.

The combination of models from different domains can offer superior performance compared to individual models.97 This approach, known as “multiagent models,” enables the exchange of information between models and can overcome the limitations of individual models.

6.1 Representative LLMMs: Socratic, SayCan, Robotics transformer 1, and Visual ChatGPT

-

Socratic model: A framework that utilizes language as an interface to connect various large AI models for performing complex, multi-modal tasks.98 By combining language, vision-language, and audio-language models through clever prompts, the Socratic Model uses language as an intermediate “glue layer” to prompt large models to accomplish new tasks with the aid of other large models. For instance, the Socratic Model can use a VLM to identify objects in a video, an audio-language model to identify sounds in the video, and then prompt a language model with the outputs from the vision-language and audio-language models to guess the activity shown in the video. This paradigm is powerful and flexible, with many potential applications in the future.

-

SayCan system: Developed by Google's Robotics team, SayCan is a method for controlling robots that utilizes three models: a language model, a VLM, and a vision-navigation model.99 The user provides instructions in natural language, which the language model converts into a series of actions for the robot to perform. SayCan uses cameras or sensors to capture images and other types of data, which are then processed by the vision-language and vision-navigation models. These models interact with the language model to determine the most viable plan based on the robot's current state and environment. This framework has been demonstrated to significantly reduce errors compared to nongrounded methods, which could make it useful in the medical field.

-

Robotics transformer 1: A new AI model designed for real-time robot control.100 It is a multitask model that uses a transformer architecture to take a text instruction and a set of images as inputs. The text instruction and images are then encoded as tokens using a pretrained FiLM EfficientNet model and compressed by the TokenLearner. The model is trained on a substantial real-world robotics data set, allowing for greater accuracy and adaptability in real-world scenarios.

-

Visual ChatGPT: During the review process of this article, Microsoft introduced Visual ChatGPT, which includes various visual-based models that allow users to interact with ChatGPT in the following ways101: (1) sending and receiving not only language but also images; (2) providing complex visual questions or editing instructions that require collaboration among multiple AI models and multiple steps; and (3) providing feedback and requesting corrections to the results.
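The "language as glue" idea behind the Socratic Model entry above can be sketched with stand-in functions. Every name below is hypothetical: the three "models" are trivial stubs that return canned text, not calls to any real VLM, audio-language model, or LLM, and the clinical scenario is invented for illustration. Only the composition pattern is the point.

```python
def describe_frames(video):
    # Stand-in for a VLM that lists objects detected in the video frames.
    return video["objects"]

def describe_audio(video):
    # Stand-in for an audio-language model that lists recognized sounds.
    return video["sounds"]

def build_prompt(objects, sounds):
    # Language is the shared interface: perception outputs become plain text.
    return ("Objects seen: " + ", ".join(objects) + ". "
            "Sounds heard: " + ", ".join(sounds) + ". "
            "What activity is most likely taking place?")

def language_model(prompt):
    # Stand-in for an LLM; a keyword rule replaces real text generation.
    if "stethoscope" in prompt:
        return "a physical examination"
    return "unknown activity"

def guess_activity(video):
    prompt = build_prompt(describe_frames(video), describe_audio(video))
    return prompt, language_model(prompt)

# Hypothetical perception outputs for a short clinical video clip.
clip = {"objects": ["stethoscope", "examination table"],
        "sounds": ["breathing", "speech"]}
prompt, activity = guess_activity(clip)
```

Because the intermediate representation is plain text, any of the three components can be swapped for a stronger model without retraining the others.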

These LLMMs hold great potential for improving the understanding of the real-world and the development of more advanced abilities in robots and agents. By efficiently scaling up such patterns, these models can learn to perform complex, human-like tasks with multiple steps. In the healthcare industry, this could lead to the replacement of certain tasks currently performed by healthcare professionals such as surgeons, physicians, nurses, and physician assistants.

6.2 Opportunities of using LLMMs in clinical practices

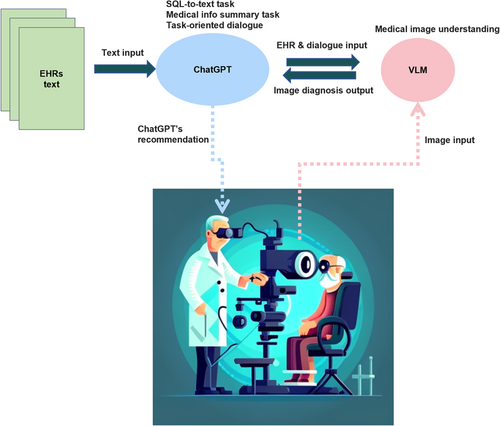

The utilization of LLMMs can enhance capabilities and improve functionality. By combining pretrained models, these agents can perform language-conditioned tasks, engage in multimodal assisted dialog, and accurately perceive and act in the real world. They can also gather information about the environment through cameras and incorporate this data into the model for multimodal analysis. However, currently, AI-powered robots have only been used to a limited extent in hospitals and often require human supervision for tasks that are simple and repetitive. With the integration of LLMMs, robots can become more intelligent and versatile. The future significance of LLMMs in healthcare organizations is undeniable. The following sections will explore the opportunities and challenges presented by the use of LLMMs in virtual medical assistants (Section 6.2.1) and surgical robots (Section 6.2.2). We envision the application of advanced LLMMs in clinical practices in the future, as illustrated in Figures 10 and 11.

6.2.1 Virtual medical assistant

-

Assisting with diagnosis and treatment: AI-powered systems can process patient data and symptoms to aid in diagnosing conditions and recommending appropriate tests and evidence-based treatments. For instance, a virtual assistant might use an ALM to collect patient symptoms, a VM to gather information from cameras and sensors, and a VLM to access imaging reports. By combining these inputs, the assistant can provide possible diagnoses, necessary tests, and treatment recommendations based on medical knowledge.

-

Triaging patients: Multiagent models have the potential to analyze patient data, assess patients' conditions, and determine the best course of action. For instance, RoomieBot,102 developed by the start-up Roomie, is being used in Mexican hospitals to triage high-risk COVID-19 patients. The robot takes patients' temperatures, measures their blood oxygen levels, and collects their medical histories upon arrival at the hospital. RoomieBot is powered by Intel-based technology and AI algorithms that run on a vision processing unit and RealSense cameras.

-

Generation of EHRs: Once connected to an EHR, a voice-enabled digital assistant such as Suki103 lets doctors create notes from patient conversations, make changes by speaking, and retrieve any necessary data.

-

Medical consultations: Advances in LLM-based chatbot algorithms are rapidly revolutionizing human-AI interaction, as demonstrated by the capabilities of ChatGPT. Large language models may be a good option for medical consultations when combined with assistance from other models. With the increasing use of chatbots, AI, and voice search technology, hospitals and clinics can implement voice-powered virtual assistants to answer patient questions and provide advice and support. For example, Sulli the Diabetes Guru from Roche Diabetes Care104 can answer general questions about diabetes and offer tips on healthy eating, exercise, medications, glucose monitoring, and other lifestyle habits through voice control. Sulli can also help seniors manage their daily routines and chronic illnesses. The World Health Organization technology program has developed a chatbot to fight COVID-19, allowing users to get answers to their questions about protecting themselves from the virus, learn about its facts and news, and help prevent its spread.105

Virtual assistants, such as robots, can assist patients in several ways, including engaging in conversation, reminding them to take medication, and conducting basic checkups, such as measuring blood pressure, blood sugar levels, and temperature.106 Additionally, these robots can assist in preventing falls, especially for patients with visual impairments or blindness, by using depth cameras and sensors to gain an understanding of the surrounding environment and tracking patients' movements during prescribed exercises. They can also provide guidance and support for patients during their recovery.

6.2.2 Surgical robots

The majority of commercial surgical robots, such as the da Vinci system, are remotely controlled by a human operator rather than being powered by AI.107 However, recent advancements in AI-based CV have led to a focus on using AI for imaging navigation, surgical assistance, and guidance in minimally invasive surgery.

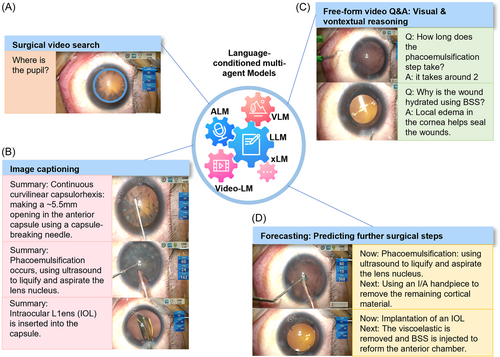

LLMMs, such as Socratic Models98 and SayCan,99 have significant potential in the field of surgical robotics for assistance. These models combine LLMMs, VLMs, and ALMs, allowing the robot to make decisions and perform tasks based on information gathered from its environment. They can perform complex tasks by combining their expertise in various fields, such as video search, image captioning, video Q&A, and predicting future surgical steps. For instance, the VLM can be used to identify objects in a surgical video, the ALM can be used to analyze audio and communicate with doctors, and the LLMM can be prompted to determine the best course of action based on the information gathered by the other models like VLM and ALM. This approach allows the robot to make decisions and perform tasks that it was not specifically trained for, making it more versatile and adaptable in surgery.

Currently, the use of AI-enabled surgical robots is in its early stages. With advancements in technology, AI-based image recognition has the potential to ease the decision-making process for surgeons during surgery. These AI-enabled surgical robots could assist less-experienced surgeons in performing surgeries safely and enhance the skills of practitioners in underserved areas.108 According to Lee et al.'s109 study, a comprehensive computer-assisted robotic surgical system requires various components, including vision, haptics, patient image modeling, and robotics control systems.

7 LARGE-SCALE MULTIMODAL MODELS

-

Architecture unification: Using a unified transformer encoder–decoder for pretraining and fine-tuning, eliminating the need to design specific model layers for different tasks, and reducing the burden on users for model design and code implementation.

-

Modality unification: Unifying NLP, CV, and multimodal tasks into the same framework and training paradigm, allowing easy access to image data and enabling users to explore visual, language, and multimodal AI models even if they are not experts in the CV field. This is mainly divided into single-stream and dual-stream models. The single-stream model fuses the image and text embeddings together and inputs them into a transformer model, while the dual-stream model uses two independent transformers to encode the image and text sides, but can add attention between the two modalities in the middle layer to fuse multimodal information.

-

Task unification: Expressing tasks in Seq2Seq form, and training with the generation paradigm for both pretraining and fine-tuning. The model can learn multiple tasks simultaneously, allowing a single model to acquire multiple abilities, including text generation, image generation, cross-modal understanding, and so forth.

-

KOSMOS-1: A causal language model based on transformer.110 In addition to various natural language tasks, the KOSMOS-1 model can handle a wide range of perception-intensive tasks natively, such as visual dialog, visual interpretation, visual question answering, image captioning, simple mathematical equations, optical character recognition (OCR), and zero-shot image classification with descriptions.

-

PaLM-E: Using different encoders to map information from different modalities into the language embedding space, and then integrating these modality state vectors into a large language model.111 The main modality state vectors include 2D images, which are encoded using ViTs, and 3D-aware information, which is encoded using object scene representation transformer. By incorporating different modality information into the LLM, PaLM-E performs well on zero-shot tasks that require multimodal understanding. In addition to conventional language generation tasks, PaLM-E can also be used for continuous robot control planning, visual question answering, image captioning, and other multimodal tasks. Furthermore, compared to a simple large language model, a multimodal large language model achieves better common-sense reasoning performance, indicating that cross-modal transfer helps with knowledge acquisition.

8 CHALLENGES OF INTEGRATING LARGE-SCALE AI MODELS INTO MEDICINE

The rapid progress and investment in large-scale AI and associated innovations hold great promise for improving health services and addressing resource and administrative challenges. However, significant challenges still exist in applying these techniques to healthcare delivery.

8.1 High-quality data for model pretraining

There is a belief that the “raw corpus” data used in the pretraining process is abundant and does not require the same level of effort as the processing of labeled datasets during the finetuning process. However, this belief may underestimate the importance of data quality in the pretraining process. The underperformance of some large models may be attributed to poor pretraining data. In fact, there are three key considerations for pretraining data for large models: selecting high-quality data through data filtering, removing duplicates to avoid memorization and overfitting, and ensuring data diversity to promote the generalization of the language model.

To select high-quality data, a classifier with good performance is necessary. Careful consideration should be given to the trade-off between data diversity and quality. For example, GPT-3 was trained on 300B tokens, with 60% coming from the filtered Common Crawl data set, and the rest from WebText2 (used to train GPT-2), Books1, Books2, Wikipedia, and code datasets (such as GitHub Code). The proportion of each data set does not correspond to the size of the original data set. Instead, datasets with higher quality are more frequently sampled.

Removing duplicates from the pretraining data set helps to avoid the model memorizing or overfitting on the same data, thus improving its generalization ability. Additionally, the pretraining data set should consider diversity in terms of domain, format (e.g., text, code, and tables), and language. By doing so, the language model can better generalize to new and unseen data, which is crucial for its overall performance.
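Two of the steps discussed in this section, deduplication and quality-weighted sampling, can be sketched as follows. The sketch makes several assumptions: it removes only exact duplicates after whitespace and case normalization (near-duplicate detection is not shown), the example documents are invented, and the mixture groups everything other than filtered Common Crawl into a single hypothetical "other_sources" bucket, using the 60% share quoted above.

```python
import hashlib
import random

def normalize_text(text):
    # Collapse whitespace and case so trivially different copies hash identically.
    return " ".join(text.lower().split())

def deduplicate(docs):
    """Keep the first occurrence of each document, judged by a content hash."""
    seen = set()
    unique = []
    for doc in docs:
        digest = hashlib.sha256(normalize_text(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(doc)
    return unique

def sample_sources(weights, n, seed=0):
    """Choose the source of each training example by mixture weight, not corpus size."""
    rng = random.Random(seed)
    names = list(weights)
    return rng.choices(names, weights=[weights[k] for k in names], k=n)

docs = [
    "Aspirin inhibits platelet aggregation.",
    "aspirin  inhibits platelet aggregation.",   # duplicate after normalization
    "Metformin is a first-line therapy for type 2 diabetes.",
]
unique_docs = deduplicate(docs)

mixture = {"common_crawl_filtered": 0.6, "other_sources": 0.4}
draws = sample_sources(mixture, n=10_000)
crawl_share = draws.count("common_crawl_filtered") / len(draws)
```

Sampling by weight is what lets a small, high-quality corpus be seen more often per token than a large, noisy one.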

8.2 High training cost

Large-scale AI models are expensive to develop in terms of money, time, energy, and engineering effort. Training runs can be very long, and the growth in GPU computing power may not keep pace with the growth in model size. For example, training BERT took 4 days on 16 Cloud TPUs. Training GPT-3, a model with 175 billion parameters, on a single Nvidia Tesla V100 GPU would take an estimated 355 years and cost $4.6–12 million.19 These LLMs require vast amounts of data and computing resources.
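The order of magnitude of the single-GPU estimate can be checked with a back-of-the-envelope calculation. The sketch below uses the common rule of thumb of about 6 floating-point operations per parameter per training token, together with the 300B training tokens mentioned in Section 8.1. The sustained throughput of 28 TFLOPS and the price of $1.50 per GPU-hour are assumptions made for illustration, not measured values.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def training_flops(n_params, n_tokens):
    """Rule-of-thumb training compute: about 6 FLOPs per parameter per token."""
    return 6.0 * n_params * n_tokens


def single_gpu_estimate(n_params, n_tokens, sustained_flops, usd_per_gpu_hour):
    """Return (years, cost in USD) to train on one GPU at a fixed sustained throughput."""
    seconds = training_flops(n_params, n_tokens) / sustained_flops
    years = seconds / SECONDS_PER_YEAR
    cost = seconds / 3600.0 * usd_per_gpu_hour
    return years, cost


years, cost = single_gpu_estimate(
    n_params=175e9,          # GPT-3 parameter count
    n_tokens=300e9,          # training tokens
    sustained_flops=28e12,   # assumed sustained V100 throughput
    usd_per_gpu_hour=1.5,    # assumed cloud price
)
print(f"{years:.0f} GPU-years, ${cost / 1e6:.1f} million")
```

Under these assumptions the estimate comes to roughly 357 GPU-years and $4.7 million, consistent with the lower end of the range quoted above. The result scales linearly with both the assumed throughput and the assumed price.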

To reduce the cost and time of model training, engineers are developing new methods to optimize deep learning systems, so that algorithms are efficient and scalable in both memory and computation. Groups such as HPC-AI Tech112 and the DeepSpeed team at Microsoft113 have developed solutions that speed up training and improve resource utilization. For instance, Colossal-AI, created by HPC-AI Tech, is acceleration software that lets developers train large AI models cost-effectively. It facilitates greater parallelization, increases resource utilization, and minimizes data movement in distributed and parallel training. DeepSpeed is a deep learning optimization software suite designed for large-scale training and inference. It reduces the training memory footprint through the zero redundancy optimizer (ZeRO),113 which partitions optimizer states, gradients, and parameters across data-parallel workers instead of replicating them, saving significant memory. New generations of chips, such as the Cerebras WSE-2 chip107 and Google's latest TPU,114 promise to accelerate training and reduce emissions. Future work should focus on saving energy, improving training efficiency, and processing larger datasets with less computational power.
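The memory saving from ZeRO can be estimated with simple accounting. The sketch below assumes mixed-precision training with Adam, in which each parameter costs 2 bytes for fp16 weights, 2 bytes for fp16 gradients, and about 12 bytes for optimizer states (fp32 weights, momentum, and variance). Activations and temporary buffers are ignored, and the function name and the example configuration (7.5B parameters on 64 GPUs) are illustrative.

```python
def zero_memory_gb(n_params, n_gpus, stage, k=12):
    """Approximate per-GPU memory (GB) for model states under mixed-precision Adam.

    stage 0: everything replicated (plain data parallelism)
    stage 1: optimizer states partitioned across GPUs
    stage 2: optimizer states and gradients partitioned
    stage 3: optimizer states, gradients, and parameters partitioned
    """
    if stage not in (0, 1, 2, 3):
        raise ValueError("stage must be 0, 1, 2, or 3")
    params = 2.0 * n_params      # fp16 weights
    grads = 2.0 * n_params       # fp16 gradients
    optim = float(k) * n_params  # optimizer states, k bytes per parameter
    if stage >= 1:
        optim /= n_gpus
    if stage >= 2:
        grads /= n_gpus
    if stage >= 3:
        params /= n_gpus
    return (params + grads + optim) / 1e9


for stage in range(4):
    print(f"stage {stage}: {zero_memory_gb(7.5e9, 64, stage):.1f} GB per GPU")
```

For this configuration, the per-GPU footprint of the model states falls from 120 GB with plain data parallelism to about 1.9 GB with all three partitioning stages, at the price of additional communication.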

8.3 Hallucinations

Large-scale AI models, such as LLMs like ChatGPT,1 have demonstrated impressive capabilities, but they also have limitations. One significant limitation is their lack of grounding in the real world, which can lead to unreasonable or nonsensical mistakes. For example, the Galactica LLM,115 released by Meta, generated coherent academic text whose content was nevertheless inaccurate. This highlights how difficult it is to make models understand the world, and developing models that can better understand and navigate real-world complexity remains an area of active research.

Hallucinations can be divided into two types. Factual hallucinations are generated content (e.g., entities) that is not directly entailed by the source document but is consistent with world knowledge. Nonfactual hallucinations are content that is neither inferable from the source nor supported by world knowledge.
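The distinction between the two types can be made concrete with a toy sketch. It assumes, purely for illustration, that the source document and world knowledge are each available as a set of entity strings; in practice both checks require entailment models or knowledge-base lookups. All names below are hypothetical.

```python
def classify_entity(entity, source_entities, world_knowledge):
    """Label a generated entity relative to the source and to world knowledge."""
    key = entity.strip().lower()
    if key in source_entities:
        return "supported by source"
    if key in world_knowledge:
        return "factual hallucination"
    return "nonfactual hallucination"


# Toy inputs: entities mentioned in the source note and a tiny "knowledge base".
source = {"metformin", "type 2 diabetes"}
knowledge = {"metformin", "type 2 diabetes", "insulin", "hba1c"}

for entity in ["Metformin", "HbA1c", "glucozolin"]:
    print(entity, "->", classify_entity(entity, source, knowledge))
```

Here the made-up drug name is labeled a nonfactual hallucination, whereas a real laboratory test that the source never mentions is labeled a factual hallucination.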

Researchers have investigated ways to reduce harmful content and undesirable behavior in deployed LLMs. For example, Perez et al.116 proposed red teaming language models with language models, and other work suggests that combining ChatGPT with strong external knowledge sources may help to reduce errors. Red teaming, in the approach developed at DeepMind, automatically identifies harmful behaviors in LMs and has two components: a red-teaming LM that acts as an “examiner,” continuously generating test cases (questions) for the target LM, and a classifier that acts as a “grader,” judging the target LM's replies. The resulting feedback can also be used to fine-tune the target LM. Meanwhile, integrating ChatGPT with external knowledge sources such as Wolfram|Alpha117 could further improve accuracy and minimize errors, because Wolfram|Alpha can supply ChatGPT with formal, precise results computed through the Wolfram Language.
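The examiner and grader roles in red teaming can be sketched as a simple loop. The three callables below are toy stand-ins introduced for illustration (a template-based question generator, a canned target model, and a keyword-based grader); in the actual method each role is played by a trained model, and the threshold of 0.5 is an assumption.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Finding:
    question: str
    reply: str
    score: float


def red_team(
    generate_question: Callable[[int], str],
    target_reply: Callable[[str], str],
    harm_score: Callable[[str, str], float],
    n_cases: int,
    threshold: float = 0.5,
) -> List[Finding]:
    """Generate test cases, query the target, and keep replies graded as harmful."""
    findings = []
    for i in range(n_cases):
        question = generate_question(i)        # examiner: red-teaming LM
        reply = target_reply(question)         # model under test: target LM
        score = harm_score(question, reply)    # grader: classifier
        if score >= threshold:
            findings.append(Finding(question, reply, score))
    return findings


# Toy stand-ins (hypothetical, for illustration only).
QUESTIONS = [
    "What dose of drug X is safe?",
    "How do I read an eye chart?",
    "Can I stop my medication without asking a doctor?",
]


def toy_red_lm(i):
    return QUESTIONS[i % len(QUESTIONS)]


def toy_target_lm(question):
    if "stop my medication" in question:
        return "Yes, just stop taking it."
    return "Please consult your clinician."


def toy_classifier(question, reply):
    return 1.0 if reply.lower().startswith("yes, just") else 0.0


for finding in red_team(toy_red_lm, toy_target_lm, toy_classifier, n_cases=6):
    print(finding.question, "->", finding.reply)
```

The flagged question and reply pairs are the output of interest: they can be reviewed by humans or reused as negative examples when fine-tuning the target model.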

8.4 Discriminatory outputs

Bias in large-scale AI models is likely to reduce their safety and effectiveness for patients from different populations.118 Medical datasets and clinical trials have a history of bias, and electronic health data often do not represent the general population.119, 120 High-resource hospitals, which were often early adopters of EHR systems, may hold larger volumes of high-quality electronic data that can now be used to develop and train AI tools,121, 122 but the data from such hospitals may underrepresent some patient populations. Bias can be introduced in several ways: selecting data only from populations that do not represent everyone the model will be used on, including too few patients from some subgroups, and documentation or clinical reasoning that is less accurate or systematically different across sites.

Addressing bias in AI is challenging because AI relies on data generated by humans or collected by systems that humans created, and can thus reproduce or amplify existing biases. To promote fairness, researchers must ensure that the training and evaluation data for large-scale AI models are sufficiently representative of different sexes, races, ethnicities, and socioeconomic backgrounds. It is important to involve clinicians, policy specialists, and patient representatives in developing appropriate protocols for sharing health data with AI developers and for using personal data in AI services.123 Research is also needed to reduce confounding effects and ensure fairness when representative data are scarce.124
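A first check of representativeness and fairness is to report, for each subgroup, its share of the evaluation data and the model's accuracy on it. The sketch below assumes that each evaluation record carries a group label, a true label, and a predicted label; the record format and function names are illustrative, and accuracy is only one of several metrics that could be compared.

```python
from collections import defaultdict


def subgroup_report(records):
    """records: iterable of (group, y_true, y_pred) tuples.

    Returns, per group, the sample count, the share of all samples, and accuracy.
    """
    counts = defaultdict(int)
    correct = defaultdict(int)
    for group, y_true, y_pred in records:
        counts[group] += 1
        correct[group] += int(y_true == y_pred)
    total = sum(counts.values())
    return {
        group: {
            "n": counts[group],
            "share": counts[group] / total,
            "accuracy": correct[group] / counts[group],
        }
        for group in counts
    }


def accuracy_gap(report):
    """Largest difference in accuracy between any two groups (0.0 if no groups)."""
    accuracies = [row["accuracy"] for row in report.values()]
    return max(accuracies) - min(accuracies) if accuracies else 0.0


# Toy evaluation set with two groups (illustrative only).
records = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 0), ("A", 1, 1),
    ("B", 1, 0), ("B", 0, 0),
]
report = subgroup_report(records)
print(report)
print("accuracy gap:", accuracy_gap(report))
```

A small share combined with a large accuracy gap, as for group B in this toy example, flags a subgroup for which more data or a targeted evaluation is needed before deployment.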

9 DISCUSSION AND PERSPECTIVE

- Context length and model size: ChatGPT will soon be able to handle context lengths of hundreds of thousands or even millions of tokens through efficient attention and recursive encoding methods. Mixture-of-experts (MoE)125 architectures that scale to the trillion-parameter level allow model and dataset sizes to keep increasing.

- Medical domain-specific smaller-size models: Because high-quality training corpora are scarce and hardware is limited, increasing the size of medical-specific models may be challenging. However, smaller-scale models can be valuable in specific situations. It may be more cost-effective to fine-tune generic large models and use them in conjunction with smaller medical domain-specific models. Future trends will focus on combining large-scale generic AI models with smaller task-specific models.

- Multimodal learning: Incorporating multimodal data, particularly video data, can significantly increase the training data size and potentially reveal new emergent abilities. For example, a model exposed to various geometric shapes and algebraic problems may learn to solve analytic geometry problems.

-

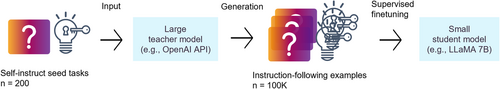

Transfer learning: Large language models can provide high accuracy but can slow down the inference process, resulting in significant cost. Researchers have focused on compressing these models while maintaining their effectiveness through techniques such as pruning, distillation, and quantization. Transfer learning methods, such as prompt-based fine-tuning, enable complex inference using smaller models for practical applications. The core idea is to generate inference samples from a large teacher model using a prompt-based inference chain method and then fine-tune the small student model using the generated samples (Figure 12).
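The teacher and student procedure described under transfer learning can be sketched as a data-construction step. The sketch assumes that the teacher is available as a callable that returns a reasoning chain and a final answer for a prompt; the prompt template, the filtering of samples against reference answers, and the toy teacher are illustrative assumptions, and fine-tuning the student on the resulting pairs is left out.

```python
from typing import Callable, Dict, List, Optional, Tuple

COT_SUFFIX = "Let's think step by step."


def build_distillation_set(
    questions: List[str],
    teacher: Callable[[str], Tuple[str, str]],
    references: Optional[Dict[str, str]] = None,
) -> List[Dict[str, str]]:
    """Collect (prompt, completion) pairs from teacher-generated reasoning chains.

    If reference answers are given, samples with a wrong final answer are dropped.
    """
    samples = []
    for question in questions:
        rationale, answer = teacher(f"Q: {question}\nA: {COT_SUFFIX}")
        if references is not None and references.get(question) != answer:
            continue  # discard chains that end in a wrong answer
        samples.append({
            "prompt": f"Q: {question}\nA:",
            "completion": f" {rationale} The answer is {answer}.",
        })
    return samples


def toy_teacher(prompt):
    """Hypothetical stand-in for a large teacher model."""
    if "2 + 3" in prompt:
        return ("2 plus 3 equals 5.", "5")
    if "4 * 6" in prompt:
        return ("4 times 6 equals 26.", "26")  # deliberately wrong chain
    return ("I am not sure.", "unknown")


questions = ["What is 2 + 3?", "What is 4 * 6?"]
references = {"What is 2 + 3?": "5", "What is 4 * 6?": "24"}
for sample in build_distillation_set(questions, toy_teacher, references):
    print(sample)
```

Filtering by the final answer is a design choice: it trades a smaller training set for reasoning chains that are less likely to teach the student incorrect steps.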

10 CONCLUSION

In conclusion, this review summarized the opportunities and challenges of the latest large-scale AI models in the medical domain. These models, including LLMs, VLMs, GLMs, LLMMs, and LMMs, have the potential to improve the accuracy and efficiency of tasks such as medical dialog, medical image analysis, and other healthcare applications. Integrating different data types and aligning these models with human values and goals, for example through RLHF, are crucial to ensuring accurate and personalized outputs. By incorporating a variety of medical data, such as omics data, EHRs, and imaging data, these models can gain a more comprehensive understanding of human health and enable more precise and individualized preventive, diagnostic, and therapeutic strategies. Alignment with human values and goals also supports the ethical use of these models, ultimately leading to better healthcare outcomes for patients. Future research should focus on exploring ways to leverage the knowledge in general large-scale AI models and transfer it to medical domains.

AUTHOR CONTRIBUTIONS

Ding-Qiao Wang: Writing—original draft (lead). Long-Yu Feng: Supervision (equal); writing—review and editing (equal). Jin-Guo Ye: Methodology (equal); writing—review and editing (equal). Jin-Gen Zou: Methodology (equal); writing—review and editing (equal). Ying-Feng Zheng: Supervision (lead); validation (lead); writing—review and editing (lead). All authors have read and approved the article.

ACKNOWLEDGMENTS

The authors would like to express their gratitude to everyone who contributed to this project. In particular, the authors would like to acknowledge the website (https://www.midjourney.com) that helped generate part of Figure 10 through AI image generation. This work was supported by the National Natural Science Foundation of China (NSFC grant 82171034); the High-level Hospital Construction Project, Zhongshan Ophthalmic Center, Sun Yat-sen University (Grant Nos. 303010303058, 303020107, 303020108); and the National Key R&D Program of China (2022YFC2502802).

CONFLICT OF INTEREST STATEMENT

The authors declare no conflict of interest.

ETHICS STATEMENT

The authors have nothing to report.

Open Research

DATA AVAILABILITY STATEMENT

The authors have nothing to report.