Deep learning with attention modules and residual transformations improves hepatocellular carcinoma (HCC) differentiation using multiphase CT

Abstract

Background

We hypothesize that generative adversarial networks (GANs) combined with self-attention (SA) and aggregated residual transformations (ResNeXt) perform better than conventional deep learning models in differentiating hepatocellular carcinoma (HCC). Attention modules help the network concentrate on salient features and suppress redundancies, while residual transformations can reuse relevant features. We therefore propose a GAN+SA+ResNeXt deep learning model to improve HCC prediction accuracy.

Methods

A total of 228 multiphase CT scans from 57 patients were retrospectively analyzed with local IRB approval; 30 patients had pathologically confirmed HCC and the remaining 27 were non-HCC. Pre-processing comprised automatic liver segmentation and Hounsfield unit (HU) normalization, followed by deep learning training with five-fold cross-validation of a conventional 3D GAN, a 3D GAN+A, and a 3D GAN+A+ResNeXt (training:testing ≈ 4:1). The area under the receiver operating characteristic curve (AUROC), accuracy, sensitivity and specificity of HCC prediction were evaluated.

Results

The proposed method had a larger AUROC (95%), better accuracy (91%) and sensitivity (93%), with acceptable specificity (88%) and prediction time (0.04 s). A deep GAN with attention and residual transformations for HCC diagnosis using multiphase CT is feasible and favorable, with improved accuracy and efficiency, and has clinical potential in differentiating HCC from other benign or malignant liver lesions.

1 INTRODUCTION

The burden of liver cancer is increasing worldwide. Hepatocellular carcinoma (HCC) is projected to become the third leading cause of cancer-related death by 2030.1 Early diagnosis and accurate detection of HCC are critical for treatment options and patients’ survival. However, current clinical evaluation of HCC features lacks inter-observer reliability and can be highly subjective.2 Deep learning, a subset of artificial intelligence (AI), is capable of pattern recognition and object detection,3 and may have great potential for HCC diagnosis that is independent of observers’ expertise level.4

Dynamic contrast-enhanced CT (DCE-CT) remains the cornerstone in the classification of focal liver lesions because it is noninvasive, fast, and high-resolution. According to the Liver Imaging Reporting and Data System (LI-RADS) version 2018, CT or MRI permits definitive diagnosis of HCC, and multiphase contrast-enhanced imaging is essential for diagnosing HCC and assessing treatment response.5 LI-RADS does not endorse any particular imaging modality, since the choice between CT and MRI depends on patients and hospitals. Some studies suggest that MRI might be more sensitive than CT with similar specificity.2 However, the difference is small and not statistically significant.6 Similarly, there is no clear evidence that either MRI or CT is superior for assessing HCC response to therapies.7 In light of CT's high speed, high resolution, robustness, and clinical availability, DCE-CT is widely used as the first-line imaging modality for HCC diagnosis.

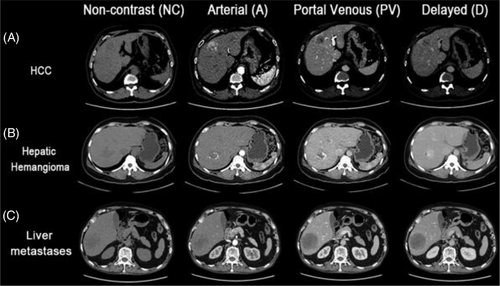

Multiphase CT or DCE-CT images have non-contrast (NC), arterial (A), portal venous (PV) and delayed (D) phases, which provide valuable information for differentiating HCC from other liver lesions, such as the benign lesions of hemangioma, focal nodular hyperplasia (FNH) and cyst, and malignant liver metastasis.8, 9 For example, HCC has the characteristic features of capsule appearance and arterial phase hyperenhancement followed by washout in the PV or D phases. Current clinical practice exhibits an overall accuracy of 0.6∼0.7 in HCC diagnosis on multiphase CT.8, 9 Computer-aided diagnosis (CAD) via machine learning improves the accuracy of HCC evaluation,10 but most CAD studies rely on a few subjective features extracted from single-phase CT, rather than integrating patterns from multiphase CT.11 Machine learning (radiomics), as a subset of AI, can generate abstracted models from observations, while deep learning, as a subset of machine learning, has multiple processing layers to learn generalizable representations with higher levels of abstraction.12 In fact, some studies have found vulnerabilities in radiomic signatures,13 which may be overcome by deep learning since its feature extraction is more independent and objective.14

The inputs of deep learning prediction models include multiphase CT,15 whole-slide digitized histological slides,16, 17 or multi-omics of imaging, laboratory and clinical data.18 The outputs have focused on predicting post-treatment prognosis,19 survival,20 treatment response,21 macrovascular invasion (MVI),22 early recurrence,23 gene mutation,24 image reconstruction25 and lesion segmentation.26 However, studies on accurately differentiating HCC from other lesions are rare.27

During the last few years, CNN-based networks using multiphase data have been proposed for HCC detection. Yasaka et al showed that deep learning with a CNN could differentiate liver masses using three phases of dynamic CT (non-contrast, arterial, and delayed).4 Kim et al proposed Mask R-CNN for automatic detection of primary liver malignancies using multiphase CT with relatively high sensitivity and low false positive rates.15 Oestmann et al provided proof-of-concept for 3D CNN classification of HCC on multiphase MRI using pathologically confirmed lesions as ground truth.28

Recently, generative adversarial networks (GANs)29 have been applied to medical image processing, such as image segmentation,30 synthetic CT generation,31 and dose prediction.32 The generator and discriminator structure has been shown to generate realistic samples for data augmentation and thereby improve the performance of the deep learning model. However, GAN-based deep learning on multiphase CT has not been widely used for HCC detection. It is desirable to develop a GAN-based model that improves the diagnostic performance of HCC detection accurately and efficiently.

However, owing to the difficulty of optimization and computational complexity, deep learning algorithms for HCC differentiation seem to be limited to vanilla CNNs, and no sophisticated architectures such as ResNet33 and Inception34 have been proposed for HCC detection. Recently, a novel architecture, ResNeXt,35 which inherits the strategies of ResNet and Inception, was presented to avoid the vanishing/exploding gradient and degradation problems in training complex models. The general ResNeXt block stacks a set of transformations with the same topology and applies a shortcut connection for feature reuse. It also embeds the split-transform-merge strategy to reduce feature redundancy, with the branch outputs aggregated by summation. Compared with the basic ResNet block, the ResNeXt block applies grouped convolutions36 to decrease the number of parameters of the network, which can effectively boost classification performance without a very deep network or high computational complexity. Hence, the ResNeXt model is considered appropriate for HCC detection.

Beyond building more powerful deep learning networks, few studies of HCC detection have used deep learning with attention mechanisms, although attention can prioritize relevant anatomy, suppress redundancies, facilitate convergence, and improve generalizability.37 For example, integrating attention into deep neural networks can improve image segmentation,38 dose prediction,32 image synthesis,39 and interstitial needle localization.40 Meanwhile, deep learning with attention can be more complex, which makes training more challenging and requires more GPU memory. So far, only two studies have implemented attention-gated deep learning for HCC-related topics. Zeng et al. applied an attention-based deep learning model to predict MVI in HCC patients using diffusion-weighted magnetic resonance imaging (DWI).41 Gao et al. employed a hybrid network with different degrees and attention mechanisms to predict MVI in HCC patients using MRI.42 Both studies showed that deep learning with attention achieved better results than without. To the best of our knowledge, attention-based deep learning differentiation of HCC using multiphase DCE-CT has not been investigated.

We aim to develop an attention-integrated generative adversarial network (GAN) to directly differentiate HCC from other liver lesions, where we hypothesize that the prediction performance may be improved by spatial and channel attention modules. To further improve the diagnostic performance, we propose an attention-integrated ResNeXt classifier for feature extraction and selection. We also compare the accuracy, sensitivity, specificity, area under the receiver operating characteristic curve (AUROC), time spent per patient prediction, and other parameters of the attention-gated GANs (3D GAN with attention-gated CNN classifier and 3D GAN with attention-gated ResNeXt classifier) with those of the conventional GAN with a convolutional neural network (CNN) classifier or the original ResNeXt classifier.

2 METHODS AND MATERIALS

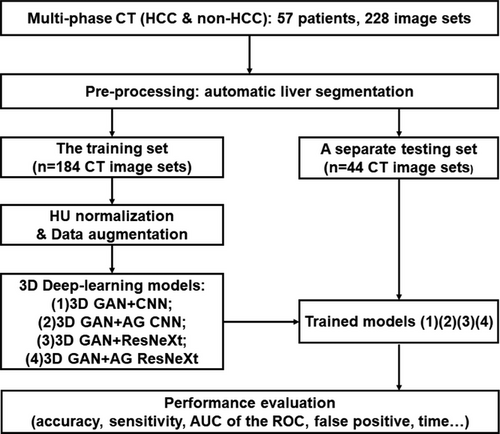

The workflow of our study is depicted in Figure 1. There were four major steps: (1) patient selection and image acquisition; (2) image pre-processing, including Unet-based automatic liver segmentation, image augmentation and HU normalization; (3) deep learning-based prediction model training for four models, namely a conventional 3D GAN with a typical CNN classifier (3D GAN+CNN), a 3D GAN with an attention-gated classifier (3D GAN+A), a 3D GAN with a residual transformed classifier (3D GAN+ResNeXt), and a 3D GAN with an attention-gated and residual transformed classifier (3D GAN+A+ResNeXt), with five-fold cross-validation on the training set of multiphase CT; (4) performance evaluation on the testing set in terms of sensitivity, specificity, accuracy, AUROC and time spent per patient prediction. Each step is described in detail in Sections 2.1 to 2.4, respectively.
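As an illustration of the five-fold cross-validation in step (3), the following is a minimal sketch of a class-balanced split performed at the patient level, so that the four phases of one patient never end up in different folds. Splitting by patient, the function name `stratified_patient_folds`, and the patient IDs are assumptions made for this example; the text does not state how the folds were actually drawn.

```python
import random
from collections import defaultdict


def stratified_patient_folds(labels, n_folds=5, seed=0):
    """Split patient IDs into n_folds folds with balanced class counts.

    labels: dict mapping patient ID -> class label (1 = HCC, 0 = non-HCC).
    Returns a list of n_folds lists of patient IDs.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for patient_id, label in labels.items():
        by_class[label].append(patient_id)

    folds = [[] for _ in range(n_folds)]
    offset = 0
    for label in sorted(by_class):
        ids = sorted(by_class[label])
        rng.shuffle(ids)
        # Deal patients round-robin, continuing where the previous class stopped
        for i, patient_id in enumerate(ids):
            folds[(offset + i) % n_folds].append(patient_id)
        offset += len(ids)
    return folds


# Hypothetical IDs for the 46 training patients of Table 1 (25 HCC, 21 non-HCC)
labels = {f"P{i:02d}": 1 for i in range(25)}
labels.update({f"P{i:02d}": 0 for i in range(25, 46)})
folds = stratified_patient_folds(labels)

for k, val_ids in enumerate(folds):
    train_ids = [p for j, fold in enumerate(folds) if j != k for p in fold]
    print(f"fold {k}: {len(train_ids)} training / {len(val_ids)} validation patients")
```

Each fold serves once as the validation set while the remaining four are used for training; the held-out testing set of 11 patients is not touched by this split.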

2.1 Patient selection and image acquisition

Fifty-seven patients with abdominal multiphase DCE-CT were retrospectively selected between January 2021 and June 2022 with local Institutional Review Board (IRB) approval. Each patient had four-phase CT, i.e., non-contrast (NC), arterial (A), portal venous (PV), and delayed (D), giving 228 CT scans in total. Among all patients (50.0 ± 14.6 years old), 37 were male and 20 were female. Thirty patients had pathologically confirmed HCC and twenty-seven were non-HCC, as summarized in Table 1.

| | Total | Training set | Testing set |
|---|---|---|---|
| No. of patients | 57 | 46 | 11 |
| No. of CTs | 228 | 184 | 44 |
| Age (years) | 50.0 ± 14.6 | 50.2 ± 14.7 | 49.1 ± 14.6 |
| Gender | | | |
| Male | 37 | 30 | 7 |
| Female | 20 | 16 | 4 |
| Diagnosis | | | |
| HCC | 30 | 25 | 5 |
| Non-HCC | 27 | 21 | 6 |
| (1) Hemangioma | 8 | 6 | 2 |
| (2) FNH | 7 | 5 | 2 |
| (3) Liver metastasis | 5 | 4 | 1 |
| (4) Cyst and others | 7 | 6 | 1 |

- Abbreviations: FNH, Focal Nodular Hyperplasia; HCC, hepatocellular carcinoma; non-HCC includes hemangioma, FNH, liver metastasis, cyst and others.

DCE-CT was acquired on a multi-detector CT scanner (Siemens Somatom Definition Flash CT, Siemens Medical System, Germany) using the following imaging protocol: tube voltage = 120 kVp, tube current = 150 mAs, gantry rotation = 0.5 s/r, detector collimation = 64×0.6 mm, pitch = 1.2, reconstructed slice width = 0.75 mm, resolution = 512×512, in-plane pixel size = 0.5∼0.7 mm, original slice thickness = 5 mm. An intravenous contrast agent (ioversol 350, 350 mg/ml, 1.2 ml/kg body weight) was administered using a power injector at a rate of 3∼4 ml/s, followed immediately by a saline flush at the same rate for 10 seconds. All four phases were acquired: non-contrast (NC), arterial phase (A) with a scan delay of 7 s from the 120 HU threshold in the abdominal aorta, portal venous phase (PV) at 24 s after the arterial phase, and delayed phase (D) at 120 s after the portal venous phase. Several representative multiphase CT examples of HCC vs. non-HCC are shown in Figure 2.

2.2 Pre-processing

Prior to training of deep learning models, image pre-processing was conducted, which included automatic liver segmentation, data augmentation and HU normalization.

2.2.1 Automatic liver segmentation

The purpose of automatic liver segmentation was to reduce redundant information outside the liver.43 A 3D Unet neural network was trained on 104 cases from the Liver Tumor Segmentation (LiTS) Challenge 2017 dataset and validated on a separate set of 27 cases.44 After a clinically acceptable Dice similarity coefficient (0.94 ± 0.03) was achieved, the 3D Unet was adopted for automatic liver segmentation of the multiphase CT in our study.

2.2.2 Data augmentation and HU normalization

Data augmentation on multiphase CT was performed, including image rotation, scaling, horizontal flip, and vertical flip, in order to increase the data amount and robustness. After image augmentation was completed, HU values in all four phases of CT were normalized to intensity levels from -1 to 1.
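The HU normalization step can be sketched as a clip followed by a linear rescaling to the interval from -1 to 1. The window bounds `hu_min` and `hu_max` below are illustrative assumptions only; the HU window actually used is not stated in the text.

```python
def normalize_hu(hu_values, hu_min=-200.0, hu_max=300.0):
    """Clip HU values to [hu_min, hu_max] and rescale linearly to [-1, 1].

    The default window bounds are assumptions for illustration,
    not values reported in the study.
    """
    if hu_max <= hu_min:
        raise ValueError("hu_max must be greater than hu_min")
    scale = 2.0 / (hu_max - hu_min)
    normalized = []
    for hu in hu_values:
        clipped = min(max(hu, hu_min), hu_max)
        normalized.append((clipped - hu_min) * scale - 1.0)
    return normalized


# Air, lower bound, mid-window, upper bound, and dense bone
print(normalize_hu([-1000.0, -200.0, 50.0, 300.0, 1500.0]))
```

Values below the window map to -1 and values above it map to 1, so all four phases share the same intensity range before being stacked as input channels.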

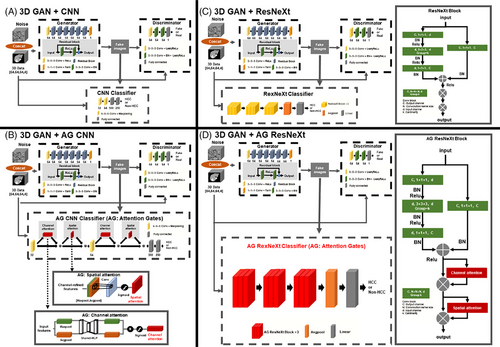

2.3 Training of four deep learning models

The core of this study was to design and train deep learning neural networks to differentiate HCC from non-HCC hepatic lesions. Four deep learning models were constructed: (a) 3D GAN+CNN, (b) 3D GAN+AG CNN, (c) 3D GAN+ResNeXt and (d) 3D GAN+AG ResNeXt (Figure 3). The input of the four neural networks was the segmented multiphase liver CT, with the four phases stacked together to a dimension of 64×64×64×4, while the output was a prediction of either HCC or non-HCC. All the deep learning-based models were trained on an Intel Core i9-10980XE CPU @ 3.00 GHz and an NVIDIA Quadro RTX 5000 with 16 GB of memory, and were implemented in the PyTorch framework. The maximum number of epochs was set to 50 with an early stop callback. The training batch size of the 3D GAN+CNN, 3D GAN+AG CNN, 3D GAN+ResNeXt and 3D GAN+AG ResNeXt models was set to 32, 2, 2 and 2, respectively. The noise vector of the GAN was sampled from a uniform distribution (-1, 1) and its number of dimensions was set to 10. The “cardinality”, which is an essential hyperparameter in the ResNeXt-based classifier, was set to 2 in each ResNeXt block. We used the cross-entropy loss function with softmax logits in the classifier of the GAN-based models. The Adam optimizer was used in all four models, with an initial learning rate of 0.0002 for the generator (G) and discriminator (D), and 0.001 for the classifier (C).
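For convenience, the hyperparameters reported above can be gathered in one place. The sketch below is only a bookkeeping aid: the class name `TrainingConfig`, its field names, and the helper `sample_noise` are hypothetical, while the numerical values are those stated in this section.

```python
import random
from dataclasses import dataclass, field


@dataclass(frozen=True)
class TrainingConfig:
    """Hyperparameters reported in Section 2.3 (field names are illustrative)."""
    input_shape: tuple = (64, 64, 64, 4)  # segmented liver volume, four CT phases
    max_epochs: int = 50                  # upper bound; early stopping may end sooner
    noise_dim: int = 10                   # length of the GAN noise vector
    noise_range: tuple = (-1.0, 1.0)      # uniform sampling interval
    cardinality: int = 2                  # groups in each ResNeXt block
    lr_generator: float = 2e-4            # Adam, generator G
    lr_discriminator: float = 2e-4        # Adam, discriminator D
    lr_classifier: float = 1e-3           # Adam, classifier C
    batch_sizes: dict = field(default_factory=lambda: {
        "3D GAN+CNN": 32,
        "3D GAN+AG CNN": 2,
        "3D GAN+ResNeXt": 2,
        "3D GAN+AG ResNeXt": 2,
    })


def sample_noise(config, rng=None):
    """Draw one noise vector from the uniform distribution given in the config."""
    rng = rng or random.Random()
    low, high = config.noise_range
    return [rng.uniform(low, high) for _ in range(config.noise_dim)]


config = TrainingConfig()
noise = sample_noise(config, random.Random(0))
print(len(noise), config.batch_sizes["3D GAN+AG ResNeXt"])
```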

2.3.1 Generative Adversarial Networks with Convolutional Neural Networks classifier

The generative adversarial network (GAN) has been regarded as one of the most interesting ideas in AI and has achieved success in medical imaging and diagnosis.29 A typical GAN for classification consists of a generator (G), a discriminator (D), and a classifier (C). The generator aims to produce realistic images from real liver CT and random noise through convolution operations in order to fool the discriminator; the discriminator, trained through backpropagation, aims to distinguish whether its input is a real liver CT or a generated (fake) image; and the classifier makes the final prediction of HCC or non-HCC with dual inputs from the real and fake images (Figure 3(A)). In our 3D GAN+CNN model, the generator G had residual blocks to improve feature extraction, mitigate vanishing gradients, and facilitate convergence. The 3D CNN classifier was based on LeNet45 as depicted in Figure 3(A), with two convolutional layers for automatic feature extraction, each followed by a max-pooling layer. Two fully connected layers ended with a softmax output classifying the input as either HCC or non-HCC. The first fully connected layer had a dropout of 0.5 to mitigate overfitting.
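For orientation, the adversarial game between G and D is commonly written in the standard minimax form below, and a softmax cross-entropy is the usual classifier loss. Both expressions are the textbook formulation and are given here as an assumption about the general shape of the objectives; the exact loss terms and weights of the models in this study are not specified in the text. Here $x$ denotes a real liver CT volume, $z$ the noise vector, $y_k$ the one-hot label, and $C_k(x)$ the classifier logit for class $k$. Because the generator in this design also receives real liver CT, $G$ would take an additional conditioning argument in practice.

$$
\min_G \max_D \; \mathbb{E}_{x}\left[\log D(x)\right] + \mathbb{E}_{z}\left[\log\left(1 - D(G(z))\right)\right]
$$

$$
\mathcal{L}_C = -\sum_{k \in \{\text{HCC},\,\text{non-HCC}\}} y_k \log \frac{\exp\left(C_k(x)\right)}{\sum_{j} \exp\left(C_j(x)\right)}
$$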

2.3.2 GAN with attention mechanisms CNN classifier

The proposed attention-added GAN, which combines a GAN with spatial and channel attention modules, aims to avoid redundancy and focus on salient features, as illustrated in Figure 3(B). The Convolutional Block Attention Module (CBAM) was described by Woo et al.46 and has two sequential sub-modules that improve accuracy through adaptive feature refinement: channel attention and spatial attention, as shown in the two solid-line boxes at the bottom of Figure 3(B). The two sub-modules of the attention gate (AG) help to emphasize important features for HCC and progressively suppress irrelevant features. Here we integrated attention into the 3D GAN model and applied it at every convolutional block in the classifier (C). Specifically, the channel attention sub-module captures the inter-channel relationship in multiphase CT, while the spatial attention sub-module highlights important regions spatially in the feature maps. The combined channel and spatial attention sub-modules help deep learning neural networks to focus on “what” and “where” is meaningful for the given image. Both channel and spatial attention help reduce redundancies within the network and prioritize relevant anatomies for HCC detection.
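In the notation of the original CBAM formulation, the two sub-modules act sequentially on an intermediate feature map $F$ as follows, where $\sigma$ is the sigmoid function, $\mathrm{MLP}$ is a multilayer perceptron shared by both pooled descriptors, $f$ is a convolution, $[\cdot\,;\cdot]$ denotes concatenation, and $\otimes$ is element-wise multiplication with broadcasting. In channel attention the pooling is taken over the spatial dimensions, and in spatial attention it is taken along the channel axis (marked with the subscript $c$). CBAM was originally described for 2D feature maps with a 7×7 kernel for $f$; the kernel size of the 3D extension used here is not stated, so it is left unspecified.

$$
M_c(F) = \sigma\big(\mathrm{MLP}(\mathrm{AvgPool}(F)) + \mathrm{MLP}(\mathrm{MaxPool}(F))\big), \qquad F' = M_c(F) \otimes F
$$

$$
M_s(F') = \sigma\big(f([\mathrm{AvgPool}_c(F');\, \mathrm{MaxPool}_c(F')])\big), \qquad F'' = M_s(F') \otimes F'
$$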

2.3.3 GAN with ResNeXt classifier

Although deeper and wider CNNs generally improve final accuracy, they are prone to vanishing/exploding gradients in backpropagation when trained on small datasets. In this study, we employed aggregated residual transformations (ResNeXt) as the classifier to further improve feature extraction for HCC detection (Figure 3(C)). As shown in Figure 3(C), the ResNeXt block contained a first 1×1×1 convolutional layer to produce a low-dimensional embedding, followed by a 3×3×3 grouped convolution to form the multi-branch architecture and efficiently extract representative features, and a final 1×1×1 convolutional layer to change the dimensionality of the feature channels. Moreover, batch normalization (BN)47 was performed right after each convolution layer to improve the convergence speed. The ReLU activation function was applied right after each BN layer, except at the output of the block, where ReLU came after the summation with the shortcut. The ResNeXt classifier network ended with an average pooling layer, a fully connected layer, and a softmax layer. To alleviate overfitting, dropout with a rate of 0.5 was applied after the fully connected layer.
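To make the saving from the grouped convolution concrete, the sketch below counts the weights of a 3×3×3 convolution with and without grouping. The bottleneck width of 64 channels is a hypothetical value chosen for illustration; only the cardinality of 2 comes from the text.

```python
def conv3d_weight_count(in_channels, out_channels, kernel_size, groups=1):
    """Number of weights (bias excluded) in a 3D convolution with a cubic kernel.

    With grouping, each output channel only sees in_channels / groups inputs.
    """
    if in_channels % groups or out_channels % groups:
        raise ValueError("channel counts must be divisible by groups")
    return (in_channels // groups) * out_channels * kernel_size ** 3


width = 64  # hypothetical bottleneck width, not a value from the study
dense = conv3d_weight_count(width, width, kernel_size=3, groups=1)
grouped = conv3d_weight_count(width, width, kernel_size=3, groups=2)

print(f"dense 3x3x3 conv:   {dense} weights")
print(f"grouped (2 groups): {grouped} weights")
```

With a cardinality of 2 the 3×3×3 layer needs half the weights of its dense counterpart at the same width, which is the mechanism by which ResNeXt can widen its branches without a proportional increase in parameters.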

2.3.4 GAN with attention mechanisms ResNeXt classifier

Despite the advantages of feature reuse in ResNeXt, the parallel convolutions might cause redundancy in the channel and spatial dimensions.48 Hence, building on the attention mechanism described above, we introduced the attention scheme after the concatenation layer in each ResNeXt block to reduce redundancy in the channel and spatial dimensions and selectively emphasize the relevant features, which we called the “AG ResNeXt block” in Figure 3(D). Channel attention and spatial attention modules were added after the shortcut in each AG ResNeXt block. Therefore, the AG ResNeXt block integrated the advantages of the attention scheme for feature selection and of ResNeXt for feature reuse, which can effectively improve HCC diagnostic performance by simply adjusting the cardinality hyperparameter k.

2.4 Performance evaluation and statistics analysis

The performance of the four deep learning models for HCC prediction was evaluated in terms of accuracy, sensitivity, specificity, false positives (FP) and area under the ROC curve (AUROC) by five-fold cross-validation. Sensitivity is the proportion of patients with HCC who are correctly identified, while specificity is the proportion of patients without HCC (non-HCC) who are correctly excluded. In other words, sensitivity is the ability of a prediction model to correctly identify HCC, while specificity is its ability to correctly identify non-HCC. Formally, sensitivity = TP/(TP + FN), specificity = TN/(TN + FP), and accuracy = (TP + TN)/(TP + FP + FN + TN), where TP means true positive, TN means true negative, FP means false positive, and FN means false negative. AUROC summarizes the diagnostic performance in distinguishing between HCC and non-HCC; a higher AUROC indicates better performance of the deep learning model.
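The definitions above translate directly into code. The sketch below computes sensitivity, specificity and accuracy from confusion counts, and AUROC as the probability that a randomly chosen HCC case receives a higher score than a randomly chosen non-HCC case (ties counted as one half). The scores and the decision threshold of 0.5 are hypothetical and only mimic the size of one testing set (5 HCC, 6 non-HCC); they are not results from this study.

```python
def confusion_metrics(tp, fp, fn, tn):
    """Return (sensitivity, specificity, accuracy) from confusion counts."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return sensitivity, specificity, accuracy


def auroc(labels, scores):
    """AUROC via pairwise comparison of positive and negative scores."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("both classes must be present")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical predicted HCC probabilities: 5 HCC cases, then 6 non-HCC cases
labels = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.3, 0.4, 0.35, 0.2, 0.1, 0.05, 0.55]

threshold = 0.5  # assumed decision threshold
preds = [1 if s >= threshold else 0 for s in scores]
tp = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 1)
fn = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 0)
fp = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 1)
tn = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 0)

sensitivity, specificity, accuracy = confusion_metrics(tp, fp, fn, tn)
auc = auroc(labels, scores)
print(f"Sn={sensitivity:.2f} Sp={specificity:.2f} Acc={accuracy:.2f} AUROC={auc:.2f}")
```

Note that sensitivity, specificity and accuracy depend on the chosen threshold, whereas AUROC is computed from the ranking of the scores alone.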

Meanwhile, the time consumed on the testing set (11 patients) was recorded to evaluate the efficiency of the four deep learning prediction models. Data were analyzed in Python 3.8 and R 4.2.1 (http://www.r-project.org).

3 RESULTS

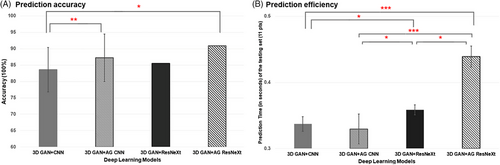

The performance evaluation of the four deep learning models is summarized in Table 2, where the 3D GAN+AG ResNeXt model showed the best accuracy, AUROC, and sensitivity, with reasonable specificity and FP, in multiphase CT model training.

| Methods | Accuracy (%) | AUROC (%) | Sensitivity (%) | Specificity (%) | FP | 95% CI of ROC |
|---|---|---|---|---|---|---|
| 3D GAN+CNN | 83.6 ± 6.8 | 86.8 ± 1.7 | 73.3 ± 13.3 | **96.0 ± 8.0** | **0.4 ± 0.9** | 82.8–88.2 |
| 3D GAN+AG CNN | 87.3 ± 7.3 | 93.3 ± 1.0 | 90.0 ± 13.3 | 85.0 ± 15.0 | 1.8 ± 1.6 | 87.8–92.2 |
| 3D GAN+ResNeXt | 85.5 ± 4.5 | 88.0 ± 4.5 | 90.0 ± 13.3 | 80.0 ± 12.6 | 2.2 ± 1.4 | 87.4–91.2 |
| 3D GAN+AG ResNeXt | **90.9 ± 5.7** | **95.3 ± 6.2** | **93.3 ± 8.2** | 88.0 ± 16.0 | 1.3 ± 1.8 | 91.9–94.7 |

- Abbreviations: AUROC, area under the curve of receiver operating characteristics; CI, confidence interval of ROC; FP, false positive.

- Best results within each metric column are shown in bold.

Compared with the 3D GAN+CNN model, both the 3D GAN+AG CNN and 3D GAN+ResNeXt models achieved better diagnostic performance, with accuracy increases of 3.7% and 1.9% and AUROC increases of 6.5% and 1.2%, respectively. The 3D GAN+AG ResNeXt trained with all four CT phases together outperformed the other three models, with accuracy improvements of 7.3%, 3.6%, and 5.4% and AUROC improvements of 8.5%, 2.0%, and 7.3% compared with the traditional 3D GAN+CNN, 3D GAN+AG CNN, and 3D GAN+ResNeXt, respectively. Additionally, the 3D GAN+CNN model achieved the highest specificity of 96.0% and the lowest sensitivity of 73.3%, from which we inferred that this model tended to classify the input data as non-HCC and had a poor ability to detect HCC compared with the other three deep learning models. Overall, the results indicated that the attention and ResNeXt-integrated GAN trained with multiphase CT can learn better representations and improve the prediction performance for HCC detection.
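The improvements quoted above are absolute differences, in percentage points, between the mean values of Table 2. A short sketch of this arithmetic, with the dictionary `table2` and the helper `gain` introduced only for illustration:

```python
# Mean accuracy and AUROC (%) copied from Table 2
table2 = {
    "3D GAN+CNN": {"accuracy": 83.6, "auroc": 86.8},
    "3D GAN+AG CNN": {"accuracy": 87.3, "auroc": 93.3},
    "3D GAN+ResNeXt": {"accuracy": 85.5, "auroc": 88.0},
    "3D GAN+AG ResNeXt": {"accuracy": 90.9, "auroc": 95.3},
}


def gain(metric, model, baseline):
    """Absolute difference in percentage points, rounded to one decimal."""
    return round(table2[model][metric] - table2[baseline][metric], 1)


proposed = "3D GAN+AG ResNeXt"
for baseline in ("3D GAN+CNN", "3D GAN+AG CNN", "3D GAN+ResNeXt"):
    print(
        f"{proposed} vs {baseline}: "
        f"accuracy +{gain('accuracy', proposed, baseline)}, "
        f"AUROC +{gain('auroc', proposed, baseline)}"
    )
```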

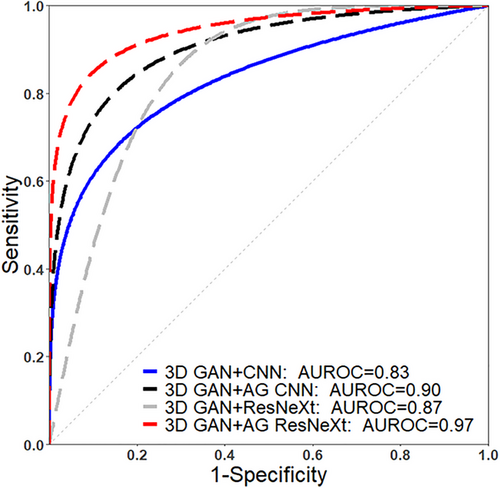

The ROC curves of the four deep learning models trained with multiphase CT are plotted in Figure 4. The AUROC was 0.83, 0.90, 0.87, and 0.97 for the 3D GAN+CNN, 3D GAN+AG CNN, 3D GAN+ResNeXt, and 3D GAN+AG ResNeXt models, respectively, with the 3D GAN+AG ResNeXt achieving the maximum value.

Among the four deep learning models, the highest prediction accuracy and the lowest cross-entropy loss were obtained with the 3D GAN+AG ResNeXt model, indicating that the attention and ResNeXt-integrated deep learning model had great potential for fast and accurate prediction of HCC. Although data augmentation and dropout were used in the 3D GAN+CNN and 3D GAN+ResNeXt, over-fitting still occurred in these two models after the 30th epoch. In contrast, the validation loss curves of the 3D GAN+AG CNN and 3D GAN+AG ResNeXt models demonstrated reduced over-fitting risk, faster convergence, and higher prediction accuracy compared with the 3D GAN+CNN and 3D GAN+ResNeXt.

Collectively, the proposed attention and ResNeXt-integrated GAN model was superior to the conventional deep learning models of GAN+CNN and GAN+ResNeXt, with improved overall accuracy (90.9 ± 5.7%) and AUROC (95.3 ± 6.2%), the best sensitivity (93.3 ± 8.2%), and improved robustness and generalization using multiphase CT. Furthermore, the time consumed on the testing set (i.e., 11 patients) using the 3D GAN+CNN, 3D GAN+AG CNN, 3D GAN+ResNeXt, and the proposed 3D GAN+AG ResNeXt models was 0.3 s, 0.3 s, 0.3 s and 0.4 s (Figure 5(B)), respectively, indicating that the proposed attention and ResNeXt-integrated model retained fast inference (about 0.04 s per patient) despite its added complexity. The total model training time was 33.2 min, 45.0 min, 153.1 min, and 193.2 min for the 3D GAN+CNN, 3D GAN+AG CNN, 3D GAN+ResNeXt, and the proposed 3D GAN+AG ResNeXt models, respectively.

4 DISCUSSION

Firstly, this research has a unique design that adds spatial and channel attention to the GAN deep learning model. These two attention sub-modules help to emphasize important features of HCC and to progressively suppress irrelevant information. Specifically, the channel attention sub-module helps to capture the inter-channel relationship in multiphase CT, while the spatial attention sub-module helps to highlight important regions spatially within the feature maps. The combined channel and spatial attention sub-modules enable deep learning neural networks to focus on “what” and “where” is meaningful for the given multiphase CT images. Hence, channel and spatial attention help to reduce redundancies within the network and prioritize relevant features for HCC detection. We hypothesized that attention may highlight relevant features, making AI-based HCC prediction more accurate and more efficient. We then implemented the idea of attention gates (AG) and showed that the 3D GAN+AG CNN model was superior to the conventional 3D GAN+CNN model in terms of accuracy, sensitivity, and efficiency.

Secondly, we integrated the advantages of the attention mechanism and of ResNeXt, which is based on grouped convolutions and the split-transform-merge strategy, to further improve the diagnostic performance for HCC detection. ResNeXt uses a homogeneous multi-branch architecture that offers an efficient way to enhance representational power and reuse feature maps without a large increase in computing cost, while the attention mechanism can overcome feature redundancy in the channel and spatial dimensions. Therefore, although the combination of the attention mechanism and ResNeXt increases the computational cost and GPU memory consumption, it contributes to better feature representation and classification accuracy for HCC differentiation. Specifically, for the 3D GAN+AG ResNeXt model, HCC prediction with multiphase CT provided the best accuracy, AUROC, and sensitivity, with reasonable specificity and FP (Table 2).

To the best of our knowledge, this study may be the first to integrate attention and ResNeXt into a GAN for differential diagnosis of HCC based on multiphase CT. Another emerging attention-based AI technique is the Transformer, which involves a global-level self-attention mechanism. However, although the Transformer has a great capability for learning global features, its localization ability is limited, so it cannot properly capture local information owing to insufficient low-level details.49 Therefore, we did not apply global attention using a Transformer, but rather implemented channel and spatial attention gates for the prediction task.

The performance of our proposed 3D GAN+AG ResNeXt model compared with that of other deep learning-based HCC prediction studies using multiphase MRI or CT is summarized in Table 3. Although MRI is considered to be more sensitive than CT, with similar specificity, in diagnosing HCC,2 our proposed attention-integrated GAN model demonstrated nearly equivalent accuracy and AUROC, and similar sensitivity and specificity, in comparison to the multiphase MRI studies conducted by Hamm et al50 and Oestmann et al.28 Furthermore, among all deep learning models trained on multiphase CT, our proposed 3D GAN+AG ResNeXt model provides the best accuracy and AUROC,4, 15, 51-53 almost the best sensitivity,50 and the best false positives,15, 48 which is also much better than radiologists’ diagnostic performance level (∼0.71).52 We implemented GAN models for HCC prediction in this study; the GAN is regarded as a novel and promising deep learning architecture29 and has demonstrated enhanced accuracy for classification tasks.54 The comparison between our model using multiphase CT and other deep learning models using multiphase MRI or CT suggests that our 3D GAN+AG ResNeXt model has advantages in HCC prediction, probably owing to the attention modules integrated into the GAN.

Nevertheless, our study had the limitation of a relatively small sample size, which might raise concerns about the generalizability of the results. Our future plan is to collect more multiphase liver CT or MRI images for larger datasets, and to leverage unlabeled data by using semi-supervised or unsupervised deep learning models to address the limitations of a small dataset.

| Deep learning models | Training / validation / testing set | Multiphase images | A | AUC | Sn | Sp | FP |
|---|---|---|---|---|---|---|---|
| Yasaka 2018 (ref. 4) @ Japan | 460/FT/100 | CT (NC, A, D) | 0.84 | 0.92 | 0.71 | – | – |
| Kim 2021 (ref. 15) @ Korea | 568/193/589 | CT (A, PV, D) | – | – | 0.85 | – | 4.8 |
| Zhou 2021 (ref. 51) @ HZ, China | 462/FT/154 | CT (NC, A, PV) | 0.73 | 0.92 | 0.77 | 0.88 | – |
| Gao 2021 (ref. 52) @ SH, China | 499/113 (5FCV)/111 | CT (NC, A, PV) | 0.73 | 0.88 | 0.63 | 0.93 | – |
| Cao 2020 (ref. 53) @ GZ, China | 410/–/107 | CT (NC, A, PV, D) | 0.81 | 0.92 | 0.92 | 0.96 | – |
| Wang 2021 (ref. 54) @ SZ, China | 7512/385/556 | CT (A, PV, D) | 0.81 | 0.88 | 0.89 | 0.74 | – |
| Hamm 2019 (ref. 50) @ Yale | 434/MCCV/60 | MRI (NC, A, PV, D) | 0.92 | 0.99 | 0.90 | 0.98 | 1.0 |
| Oestmann 2021 (ref. 28) @ Yale | 140/MCCV/10 | MRI (A, PV, D) | 0.87 | 0.91 | 0.93 | 0.82 | – |
| Our 3D GAN+A+ResNeXt model | 184/5FCV/44 | CT (NC, A, PV, D) | 0.91 | 0.95 | 0.93 | 0.88 | 1.3 |

- Abbreviations: A, accuracy; AUC, area under the curve of ROC; FT, five times model training to validate; MCCV, Monte Carlo cross-validation; nFCV, n-fold cross-validation; Sn, Sp and FP, sensitivity, specificity and false positives. Yale, Yale University; HZ, SH, GZ, and SZ represent the cities of Hangzhou, Shanghai, Guangzhou and Shenzhen.

In the future, training data from more imaging modalities need to be collected, more suitable classifier structures for the 3D GAN+AG-based model need to be investigated, and more tasks such as detecting other liver lesions can be pursued in order to enhance the accuracy, efficiency, and generalization of our deep learning prediction studies. Specifically, the proposed deep learning model for HCC differentiation using multiphase CT may have great clinical potential if it is integrated efficiently into the current clinical workflow. For example, after multiphase CT acquisition, the images could be exported and processed as the input to the proposed deep learning model to obtain the predicted diagnosis.

5 CONCLUSION

GAN-based HCC differentiation integrating attention and aggregated residual transformations using multiphase CT is feasible and favorable, with improved accuracy and efficiency. Its performance is better than that of a conventional GAN because attention modules can concentrate on salient features and suppress redundancies, while ResNeXt can effectively enhance the feature representational power. We demonstrate that attention-integrated GAN-based deep learning provides superior outcomes and harbors great clinical potential in differentiating HCC from other liver lesions, which may facilitate treatment strategies and improve overall survival.

CONFLICT OF INTEREST STATEMENT

All authors declare no conflict of interest.

ETHICS STATEMENT

The CT images were obtained from Peking University Shenzhen Hospital. The study was reviewed and approved by the Health Research Ethics Committee at Peking University Shenzhen Hospital (reference number: PKU Shenzhen # 2022–087). As the data used in this retrospective study were deidentified and anonymized, patient consent was not required, as per the approval of the Health Research Ethics Committee.

Open Research

DATA AVAILABILITY STATEMENT

The datasets related to patient information are not available. Other data used and/or analyzed during the current study are available from the corresponding author upon reasonable request.