Human-in-the-loop machine learning for healthcare: Current progress and future opportunities in electronic health records

Graphical Abstract

Abbreviations

- EHRs: Electronic Health Records
- HITL: Human-in-the-loop
- ML: Machine Learning

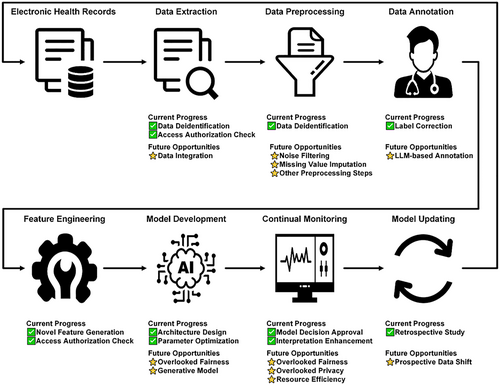

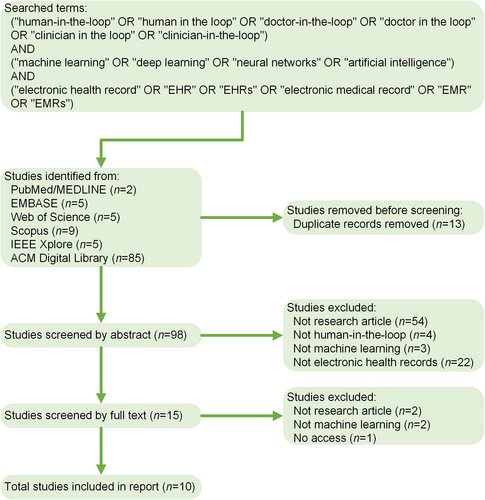

Machine learning (ML), particularly deep learning, has emerged as a fundamental analytical tool for various medical tasks in electronic health records (EHRs) [1]. However, purely data-driven methods are not a panacea for every problem encountered in practice, such as the burden of data annotation. To address these issues, human-in-the-loop (HITL) ML, which leverages human expertise to improve ML-based analyses [2], has increasingly gained prominence. In this commentary, we perform a literature search to identify the current progress (Figure 1), determine research gaps, and highlight future opportunities for HITL across the ML lifecycle, including data preparation, feature engineering, model development, and model deployment.

Figure 1. Pipeline of the literature search of human-in-the-loop machine learning in electronic health records.

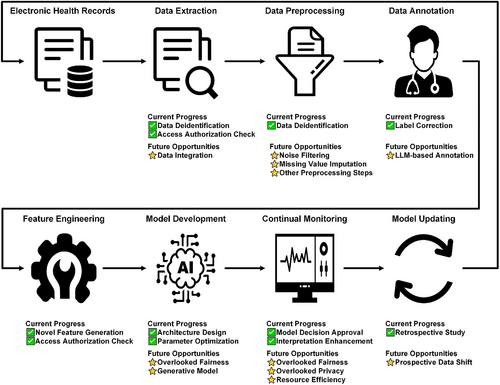

The first phase in which HITL enhances ML is data preparation. This phase includes data extraction, data preprocessing, and data annotation, which together convert large-scale raw EHRs into formats operable for downstream modeling [3]. Among these three steps, data annotation is the focal point of the latest HITL research because the traditional paradigm annotates all available samples indiscriminately by default, which places an unnecessary burden on human experts in time-critical medical settings. Bull et al. [4] designed an interactive HITL platform that enables clinicians to verify or correct labels predicted by ML. Evaluated on two EHR databases, the platform quickly generated accurate ML models with reduced annotation needs. Similar strategies have been implemented for detecting unauthorized access in data extraction [5] and for deidentifying free text in data preprocessing [6]. Given the powerful zero-shot inference ability of foundation models [7], future studies may use models such as GPT-4 to replace custom-built ML models in computer-aided annotation [8]. Moreover, because current studies predominantly focus on data annotation, HITL remains largely unexplored in data extraction tasks, such as data integration, and in data preprocessing tasks, such as noise filtering and missing value imputation [9].
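The verify-or-correct idea described above can be illustrated with a minimal sketch. It is not the platform from [4]; it assumes a binary task, a model that outputs a positive-class probability, and a hypothetical `review` callback standing in for the clinician. The `margin` parameter and the function name `hitl_annotate` are likewise illustrative assumptions. Only predictions close to 0.5 are sent for review, so the expert's time is spent where the model is least certain.

```python
from typing import Callable, List, Tuple


def hitl_annotate(
    probs: List[float],
    review: Callable[[int, int], int],
    margin: float = 0.2,
) -> Tuple[List[int], int, int]:
    """Assign labels, asking the human only when the model is unsure.

    probs[i] is the model's predicted probability of the positive class.
    A prediction counts as unsure when it lies within `margin` of 0.5.
    `review(i, predicted)` is a hypothetical clinician callback that
    returns the verified (unchanged) or corrected label for sample i.
    """
    labels: List[int] = []
    n_reviewed = 0
    n_corrected = 0
    for i, p in enumerate(probs):
        predicted = 1 if p >= 0.5 else 0
        if abs(p - 0.5) < margin:
            # Uncertain prediction: the expert verifies or corrects it.
            final = review(i, predicted)
            n_reviewed += 1
            n_corrected += int(final != predicted)
        else:
            # Confident prediction: accepted without human effort.
            final = predicted
        labels.append(final)
    return labels, n_reviewed, n_corrected


# Toy data (made up for illustration): five samples, simulated expert labels.
model_probs = [0.95, 0.55, 0.10, 0.40, 0.80]
expert_labels = [1, 0, 0, 0, 1]
labels, n_reviewed, n_corrected = hitl_annotate(
    model_probs, lambda i, predicted: expert_labels[i]
)
print(labels, n_reviewed, n_corrected)
```

In this toy run the expert reviews two of the five samples rather than all of them. In practice the threshold would need to be tuned against the acceptable error rate of the unreviewed labels.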

Building on well-prepared datasets, the subsequent applications of HITL-ML to EHRs span feature engineering and model development. Feature engineering without HITL relies on either fully automated or fully manual methods, which demand large amounts of computational resources or expert involvement, respectively. The incorporation of HITL has enabled the generation of novel features of comparable quality at speeds up to 20 times faster than the original methods [10]. In classic ML, feature engineering has long been deemed essential and precedes model development in numerous contexts because of its demonstrated efficacy in enhancing model performance. However, in recent years, a notable shift has occurred toward the end-to-end paradigm for model development, gradually rendering traditional feature engineering less pivotal [1]. This trend is exemplified by artificial neural networks, in which shallow layers perform feature engineering for deep layers, thereby enabling automatic and seamless optimization during model development. Within this paradigm, HITL improves both model architecture design and parameter optimization. Sheng et al. [11] invited doctors to modify the structure and causal relationships of a knowledge graph distilled from EHRs, thereby demonstrating that HITL improved not only the accuracy but also the interpretability of ML. Rather than adjusting models post-training, Tari et al. [12] applied HITL during the training phase by adding human preferences to the classic optimization target of gold-standard labels. HITL has thus improved the accuracy [11, 12] and interpretability [11] of ML for EHRs; however, fairness has not been sufficiently emphasized. Future researchers may introduce post-hoc recalibrations following [11] or, alternatively, embed fairness as an optimization objective during model development, as in [12], to mitigate potential inequities [13]. Furthermore, current model development tasks focus on EHR classification and regression. Future research could venture into EHR generation to support privacy-preserving analyses on synthetic samples [14].
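One way to picture "fairness as an optimization objective" is a loss that adds a fairness penalty to the usual label-fitting term. The sketch below is an assumption made for illustration, not the formulation used in [12] or [13]: it takes the penalty to be the absolute gap in mean predicted risk between two patient groups (a demographic-parity-style measure), weighted by a hypothetical coefficient `lam`.

```python
import math
from typing import List


def cross_entropy(y_true: List[int], y_prob: List[float]) -> float:
    """Mean binary cross-entropy against gold-standard labels."""
    eps = 1e-12  # guards against log(0)
    total = 0.0
    for y, p in zip(y_true, y_prob):
        total += y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return -total / len(y_true)


def parity_gap(y_prob: List[float], group: List[int]) -> float:
    """Absolute difference in mean predicted risk between groups 0 and 1."""
    g0 = [p for p, g in zip(y_prob, group) if g == 0]
    g1 = [p for p, g in zip(y_prob, group) if g == 1]
    return abs(sum(g0) / len(g0) - sum(g1) / len(g1))


def fairness_aware_loss(
    y_true: List[int],
    y_prob: List[float],
    group: List[int],
    lam: float = 1.0,
) -> float:
    """Label-fitting term plus a weighted fairness penalty (illustrative)."""
    return cross_entropy(y_true, y_prob) + lam * parity_gap(y_prob, group)


# Toy data (made up): four patients, two per group.
y_true = [1, 1, 0, 0]
y_prob = [0.8, 0.6, 0.4, 0.2]
group = [0, 0, 1, 1]
print(parity_gap(y_prob, group))
print(fairness_aware_loss(y_true, y_prob, group, lam=0.5))
```

Setting `lam` to zero recovers the standard objective, while larger values trade predictive fit for smaller between-group differences. The choice of fairness measure is itself a judgment on which clinicians could be consulted.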

Once model development is complete, the final phase of the ML lifecycle is model deployment, which encompasses the continuous monitoring and updating of trained models. HITL has been incorporated into this phase to ensure ML accuracy, interpretability, and adaptability to temporal and spatial shifts. Doctors have been engaged to double-check intervention suggestions from developed ML models, such as positive infection cases [15] and medication doses [16]. Instead of seeking clinician approval for every decision, Zheng et al. [17] proposed that ML should distinguish difficult cases from simple ones so that medical experts handle the former and models handle the latter. In addition to ensuring ML accuracy through HITL [15-17], Elshawi et al. [18] and Yuan et al. [19] used clinician-labeled concepts to interpret ML behaviors and clarified their advantages over explanations generated solely by ML. Research on model deployment should also broaden its focus to consider fairness and privacy. Furthermore, the resource efficiency of ML should not be neglected in model deployment because models may be executed on mobile devices with limited computational capability [20]. Even when powerful cloud infrastructure is available, efficient models for time- and privacy-sensitive medical applications should run on edge devices because of their low latency and privacy-preserving benefits. Finally, most previous model deployments were simulated using retrospective EHRs, which calls for future HITL research to address performance deterioration in prospective clinical settings [21].
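The division of labor between models and experts can be sketched as a simple confidence-based router. This is an illustration under stated assumptions rather than the method of [17]: difficulty is approximated here by the model's own confidence, and both the function name `route_cases` and the `threshold` value are hypothetical.

```python
from typing import List, Tuple


def route_cases(
    probs: List[float], threshold: float = 0.85
) -> Tuple[List[int], List[int]]:
    """Split case indices into model-handled and clinician-handled lists.

    probs[i] is the predicted probability of the positive class for case i.
    Confidence is the probability of whichever class the model favors.
    """
    to_model: List[int] = []
    to_clinician: List[int] = []
    for i, p in enumerate(probs):
        confidence = max(p, 1.0 - p)
        if confidence >= threshold:
            to_model.append(i)      # simple case: the model decides
        else:
            to_clinician.append(i)  # difficult case: defer to the expert
    return to_model, to_clinician


# Toy predictions (made up for illustration).
to_model, to_clinician = route_cases([0.97, 0.60, 0.05, 0.45])
print(to_model, to_clinician)
```

Raw model confidence can be poorly calibrated, particularly under the temporal and spatial shifts mentioned above, so a deployed router would likely need calibration checks and periodic review of the threshold.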

In this commentary, we have shown that HITL not only refines data preparation and feature engineering but also advances model development and deployment, thereby yielding ML tools that are accurate and interpretable. Although these advantages of HITL in ML for EHRs are evident, its full potential has yet to be realized. Figure 2 shows an overview of existing gaps and highlights future opportunities. The synergistic interaction among healthcare professionals, ML engineers, and high-performance computers is poised to fulfill the potential of HITL in enhancing ML. This human-computer interaction promises not only to improve accuracy, efficiency, and robustness but also to foster interpretability, impartiality, and privacy preservation in healthcare ML systems.

Figure 2. Schematic plot of the current progress and future opportunities of human-in-the-loop across the machine learning lifecycle.

AUTHOR CONTRIBUTIONS

Han Yuan: Conceptualization (lead); data curation (lead); formal analysis (lead); investigation (lead); project administration (lead); visualization (lead); writing – original draft (lead); writing – review & editing (lead). Lican Kang: Data curation (supporting); formal analysis (supporting); writing – review & editing (supporting). Yong Li: Investigation (supporting); methodology (supporting); writing – review & editing (supporting). Zhenqian Fan: Investigation (supporting); methodology (supporting); writing – review & editing (supporting).

ACKNOWLEDGMENTS

None.

CONFLICT OF INTEREST STATEMENT

All authors declare that they have no conflicts of interest.

ETHICS STATEMENT

Not applicable.

INFORMED CONSENT

Not applicable.

Open Research

DATA AVAILABILITY STATEMENT

Data sharing is not applicable to this article as no new data was created or analyzed in this study.