The Use of Large Language Models and Their Association With Enhanced Impact in Biomedical Research and Beyond

ABSTRACT

The release of ChatGPT in 2022 has catalyzed the adoption of large language models (LLMs) across diverse writing domains, including academic writing. However, this technological shift has raised critical questions regarding the prevalence of LLM usage in academic writing and its potential influence on the quality and impact of research articles. Here, we address these questions by analyzing preprint articles from arXiv, bioRxiv, and medRxiv between 2022 and 2024, employing a novel LLM usage detection tool. Our study reveals that LLMs have been widely adopted in biomedical and other types of academic writing since late 2022. Notably, by examining the correlation between LLM usage and citation numbers, we found that LLM usage is associated with enhanced impact of research articles. Furthermore, we observe that LLMs influence specific content types in academic writing, including hypothesis formulation, conclusion summarization, description of phenomena, and suggestions for future work. Collectively, our findings underscore the potential benefits of LLMs in scientific communication, suggesting that they may not only streamline the writing process but also enhance the dissemination and impact of research findings across disciplines.

1 Introduction

The advent of AI in various sectors has marked a new era in the production and consumption of digital content [1]. Among the most notable developments is the rapid rise of generative AI powered by large language models (LLMs). ChatGPT, introduced in 2022, is a representative LLM-based tool [2] with an uncanny ability to generate text that closely mimics human writing. The ability of ChatGPT and similar models to produce coherent, contextually relevant texts has revolutionized content creation, leading to their adoption across multiple writing forms. Scientific writing, as one major type of content generation, seems to be inevitably influenced by this trend. At first glance, these AI tools seem to liberate researchers from a heavy writing burden. This proliferation has not been without controversy, however, as it raises significant concerns regarding the authenticity, originality, and quality of LLM-generated content [1, 3-5]. Moreover, the potential of these technologies to contribute to information overload by producing large volumes of content rapidly has been a subject of debate among academics and industry professionals alike [6, 7].

Specifically, in the scientific community, the penetration of LLM-generated texts poses unique challenges and opportunities. Scientific writing is typically characterized by its rigorous standards for accuracy, clarity, and conciseness, and some of these tasks could be assisted with LLMs. However, scientific writing also requires the “art” of human inquiry, perception, and a nuanced understanding and explanation of the key observations and findings; these parts of scientific writing are not currently considered possible with LLMs. Thus, scientific writing may be at a crossroads with the integration of LLM-generated content. Two questions emerge from this context: (1) Has the science community been widely using LLMs in writing papers? And (2) if yes, will such extensive usage harm the communication of scientific knowledge?

At the time of writing, few studies have focused on the first question, that is, a panoramic analysis of how LLMs have influenced scientific writing. Some of them concentrate on a single subject, such as computer science [8], while we believe the impact is much more general. For the second question, to our knowledge there are no such studies at all. In this article, we aimed to address these two questions in a comprehensive way. First, we examined the extent to which LLM-generated texts have made their way into general scientific literature. Second, we examined the relationship between LLM usage and paper impact through citation-related metrics.

We used submissions to three large preprint platforms to form the data set for analysis, given their timeliness. We built an LLM usage detector on a recently introduced metric of LLM generation likelihood, the Binoculars score [9]. After identifying the extent of LLM usage, we analyzed the correlation between it and citation numbers. Our results reveal that, contrary to some expectations, LLM usage may enhance research paper impact. Further analysis of text content types shows that rather than being used extensively for altering factual parts like data presentation, LLMs are more frequently employed for “creative” tasks such as hypothesis formulation. This suggests that LLMs might improve communication efficiency by lowering language barriers, which we view as a positive factor in scientific development.

2 Results

2.1 Data Set

The publishing life cycle of a paper varies in length, sometimes exceeding a year. On the other hand, LLM-based text generation AI tools like ChatGPT have gained broad popularity only since the end of 2022. Such a short time span makes it difficult to analyze the LLM footprint in officially published literature in all domains. Therefore, we have instead focused on manuscripts submitted to preprint platforms. Platforms like arXiv are good choices for our purpose for several reasons. First, more authors tend to upload a preprint version before they submit the manuscript to a journal to plant a flag about the timing of their discovery; thus, preprints may be the most up-to-date content we can get from the science community. Second, the number of manuscripts submitted to these platforms is high even in a short interval, making more fine-grained analysis possible. Last, to our knowledge, all preprint platforms are open to bulk access, making large-scale analysis possible.

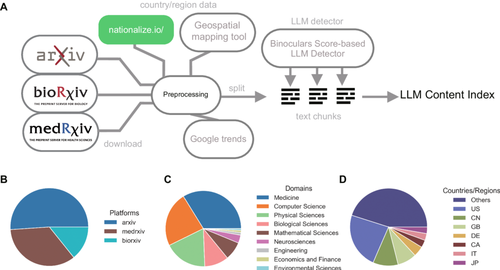

In this study, we collected manuscripts in the form of PDF files from three mainstream preprint platforms: arXiv, bioRxiv and medRxiv (Figure 1A,C), covering domains spanning from math and engineering to biology and medicine (Figure 1D). For all platforms, we downloaded manuscripts from 2022.01.01 to 2024.03.01. We chose this time period because it includes roughly 1 year before and 1 year after the release of ChatGPT on 2022.11.30. For each month, at most 1000 random manuscripts (in some months medRxiv has fewer than 1000 papers submitted) were downloaded from each platform using the provided API. After cleaning and preprocessing (see Methods), some invalid documents were removed and 45,129 manuscripts were used for analysis. The domains of these papers are categorized into the following classes: Biological Sciences, Computer Science, Economics and Finance, Engineering, Environmental Sciences, Mathematical Sciences, Medicine, Neurosciences, and Physical Sciences. Since we have no internal access to the traffic data of OpenAI's website, we used Google Trends data as a proxy for the influence and usage of ChatGPT [10]. Daily and weekly worldwide Google Trends data for the keyword ChatGPT are used for analyses at different temporal resolutions. We also note that, as a proxy index, Google Trends data does not fully reveal the extent to which people use ChatGPT for academic writing, as search interest could be driven by short-term hype. But to our knowledge, it is one of the most representative publicly available indices of the degree of exposure of ChatGPT, and surges in the search index caused by hype can be averaged out over a long period spanning years.

2.2 More LLM Usage After the Release of ChatGPT

In early language models like LSTM or GRU [11, 12], machine-generated texts could be easily spotted and were generally considered useless in production. However, detecting AI-generated texts nowadays has become challenging due to the transformative power of LLMs. The release of ChatGPT at the end of 2022 further complicated detection, as detectors may not have access to the model parameters. On the other hand, LLMs like ChatGPT can generate seemingly realistic texts, making pure eye-based detection implausible. Detectors that use hidden statistical patterns have become advantageous in this context, as they require no knowledge of the specific LLMs used and little to no training at all.

Some common choices are based on word frequency analysis [13, 14]; others focus on the perplexity of the given text [15-17]. The general idea behind the latter approach is that texts generated by LLMs tend to have lower perplexities. However, this may work best only for texts that are completely generated by LLMs. In the case of scientific writing, authors may rely on LLMs more for revising content than for generating an entire manuscript from scratch. Detecting such revised texts could be extremely challenging. The Binoculars score [9] is a tool specifically designed to address this issue. When the Binoculars score is high, the input text is more likely to have been written by humans. When it is lower than a certain threshold, the text is more likely to contain LLM-generated content (Figure 1B). By utilizing two LLMs instead of one, Binoculars allows us to detect texts that may have prompts mixed into the content. This feature enables it to outperform several other known LLM detectors, such as Ghostbuster [18], GPTZero, and DetectGPT [19], in many benchmark tests, including datasets involving arXiv samples. Given its strong performance and efficiency, we built our LLM usage detector on it in this study (for details, see Methods and Supporting Information S1: Figures S1 and S2).

The prediction is then calibrated using the prior assumption that there was no LLM-generated content in scientific writing before the release of ChatGPT. The false positive rate of this model on a manually constructed balanced data set is 0.281. The process is described in detail in the Supporting Information S1.

For all manuscripts in the data set, we calculated their LLM-like content index. Next, a forward rolling average of this index with a window of 30 days was computed from 2022 to 2024, assuming the current usage of ChatGPT may be reflected in the manuscripts submitted in the near future rather than at the same moment, given that a manuscript usually takes a relatively long time to finish (a 30-day average lag is assumed).
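The forward rolling average described above can be sketched as follows. This is a minimal illustration rather than the pipeline actually used: the function name `forward_rolling_mean`, the input layout (a mapping from submission date to the index values of manuscripts submitted that day), and the choice to pool all manuscripts in the window instead of averaging daily means are assumptions.

```python
from datetime import date, timedelta


def forward_rolling_mean(index_by_day, window_days=30):
    """Average the index over [day, day + window_days) for each day with data.

    index_by_day: dict mapping datetime.date -> list of per-manuscript values
    (hypothetical layout). Returns a dict mapping each start date to the mean
    of all values submitted within the forward-looking window.
    """
    result = {}
    for start in sorted(index_by_day):
        values = []
        for offset in range(window_days):
            day = start + timedelta(days=offset)
            values.extend(index_by_day.get(day, []))
        if values:  # skip days whose window holds no manuscripts
            result[start] = sum(values) / len(values)
    return result
```

Looking forward rather than backward matches the stated assumption that current ChatGPT usage shows up in manuscripts submitted in the following weeks.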

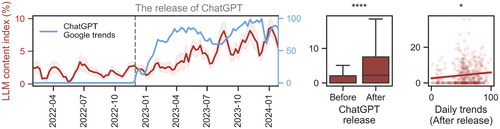

We then compared this index with the weekly Google Trends of the keyword “ChatGPT”, which is used to indirectly measure the usage and popularity of LLM-based AI tools in writing. As shown by the blue line in the left column of Figure 1, the search trend for “ChatGPT” rises after its release on 2022.11.30. We noticed that the LLM-like content index clearly correlates with this trend. Further analysis of how specific features (mean, var, min) used by the detector correlate with the trend data is presented in the supporting material.

We further examined whether this relationship holds in an even more refined temporal domain. Similarly, daily-level ChatGPT Google Trends were compared with the LLM-like content index at the same resolution, but only for the time after the release of ChatGPT. The results on the right side of Figure 2 indicate that the correlation persists and is consistent with the weekly level analysis. Moreover, to exclude the possibility that this change is primarily due to “unfiltered” preprints, we conducted the same analysis for the published subset in the data set, and the results are consistent (see Supporting Information S1: Figure S3). For the rest of this study, the entire collection is used for its sample size in extracting effects.

2.3 LLM Usage Is Positively Correlated With Paper Impact

One of the common reasons people worry about the use of LLMs is that they may “contaminate” content quality, but is this really the case? Since directly assessing such a subjective measure is hard, we turned to citation numbers as a proxy for a paper's impact. Using the API provided by Semantic Scholar, we collected citation numbers for nearly all manuscripts in the data set. Since the LLM-like content index is still too coarse for text-level analysis, we turned to one of its factors, the mean value of the Binoculars scores, and compared the correlation between it and the number of citations in two sets: manuscripts submitted before and after the release of ChatGPT.

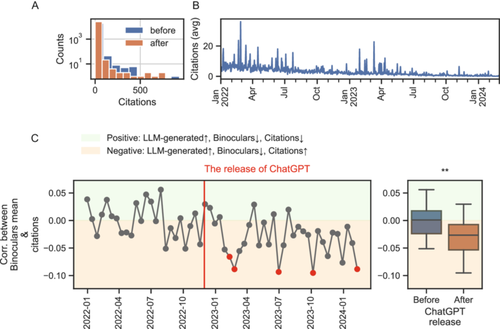

The correlation before the ChatGPT release is not significant (0.004214, p-value = 0.56). However, after the release, the correlation changes to −0.018911, with a p-value of 0.002566. A difference in correlation analysis shows that the change in correlation is significant (p-value = 0.007994). This surprisingly implies that since people can use LLM-based tools like ChatGPT, the more one uses them (lower Binoculars score mean values and thus higher LLM-like content index), the more likely one will get citations (negative correlation between mean Binoculars scores and citations).
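One common way to test whether two independent correlation coefficients differ is the Fisher z-transformation. The sketch below illustrates that test; it is an assumption that this resembles the difference-in-correlation analysis used here, since the text does not name the method, and the function name `compare_correlations` is hypothetical.

```python
import math
from statistics import NormalDist


def compare_correlations(r1, n1, r2, n2):
    """Two-sided Fisher z-test for the difference of two independent correlations.

    r1, r2: correlation coefficients; n1, n2: sample sizes (each must exceed 3).
    Returns (z statistic, two-sided p-value).
    """
    z1, z2 = math.atanh(r1), math.atanh(r2)  # Fisher transformation
    se = math.sqrt(1.0 / (n1 - 3) + 1.0 / (n2 - 3))
    z = (z1 - z2) / se
    p = 2.0 * (1.0 - NormalDist().cdf(abs(z)))
    return z, p
```

For example, `compare_correlations(0.004214, n_before, -0.018911, n_after)` would apply the test to the two coefficients reported above, where `n_before` and `n_after` stand for the numbers of manuscripts in each period, which are not given in this section.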

To check whether this difference is caused by the effect of elapsed time on citations, we conducted a more fine-grained analysis. We first noticed that the distribution of citation numbers is highly imbalanced, with most papers receiving only a few citations (Figure 3A). Besides, citation numbers naturally accumulate, and thus more recent papers usually have fewer citations than older ones, though their actual impact may be comparable. This is reflected in the “decaying” daily average of citation numbers in the data set (Figure 3B). To mitigate the long-term timing effect, we compared correlations between the mean Binoculars scores and citations every 2 weeks, ranging from 2022 to 2024 (Figure 3C), as the accumulation effect could be ignored in such a short interval. The results show that, after the release of ChatGPT, there is indeed a declining trend of this correlation down to negative regions (2023-02-10: p = 0.047; 2023-02-24: p = 0.008; 2023-06-30: p = 0.015; 2023-10-06: p = 0.008; 2024-02-09: p = 0.013, highlighted red in Figure 3C left). The difference in these biweekly-level correlations (Figure 3C right) is also significant, consistent with our preliminary analysis above. As with Figure 1, we also analyzed the published subset and obtained consistent results (see Supporting Information S1: Figure S4).
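The biweekly comparison can be sketched as below: manuscripts are grouped into consecutive 14-day bins by submission date, and a correlation is computed within each bin. This is a minimal sketch under stated assumptions: the record layout, the bin origin, the minimum bin size, and the use of the Pearson coefficient are all hypothetical, since the text does not specify them.

```python
import math
from collections import defaultdict
from datetime import date


def pearson(xs, ys):
    """Pearson correlation; returns NaN when either variable is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        return float("nan")
    return sxy / math.sqrt(sxx * syy)


def biweekly_correlations(records, origin=date(2022, 1, 1), min_size=3):
    """records: iterable of (submission_date, mean_binoculars, citations).

    Returns a dict mapping the 14-day bin number (0 = first two weeks after
    origin) to the correlation between mean Binoculars score and citations.
    """
    bins = defaultdict(list)
    for day, score, cites in records:
        bins[(day - origin).days // 14].append((score, cites))
    out = {}
    for k in sorted(bins):
        if len(bins[k]) >= min_size:  # skip bins too small to correlate
            scores, cites = zip(*bins[k])
            out[k] = pearson(scores, cites)
    return out
```

Because all manuscripts in a bin have had nearly the same time to accumulate citations, the within-bin correlation is less affected by the age of the paper.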

2.4 Heterogeneous Use of LLM in Different Content Types

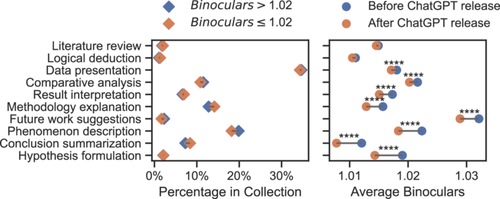

After the correlation analysis between LLM usage and citation numbers, one might naturally ask whether it is the LLM that exaggerates or even cheats on research data during text generation/modification, contributing to the popularity of those papers. If that were the case, we would expect LLMs to be used more in sections like data presentation and analysis. However, another aspect to consider is the influence of LLM-generated text on content types. Intuitively, content that introduces previous findings or contains more existing information, such as the introduction, might be more influenced by LLMs, as the training data set could already contain the knowledge. In contrast, highly specific content and new findings like results and discussions might be less suitable for generation using LLMs without up-to-date knowledge. To determine which parts of scientific writing are more influenced by LLMs, we used the NLI-based Zero Shot Text Classification model to categorize all chunks in each manuscript into the following types (for details, see Supporting Information S1: S.6) and checked their Binoculars score changes (the LLM-like content index is also too coarse for this analysis, so we again used its factor, as in the analyses in Figures 3 and 4): phenomenon description, hypothesis formulation, methodology explanation, data presentation, logical deduction, result interpretation, literature review, comparative analysis, conclusion summarization, future work suggestions, bibliography, and publishing metadata. Excluding the last two types, which are irrelevant to our analysis and are introduced by the parsing process of PDF files, we analyzed the distribution of all these types in the data set.

Specifically, we first checked if the distribution of content types is stable across text chunks with high and low Binoculars score distributions (Figure 4, left). All text chunks were split into two sets: those with mean Binoculars scores higher than the average score of the whole data set (1.02) and those that were lower. We found that the average Binoculars scores of different content types matched our intuition: literature review content has very low Binoculars scores, while contents containing novel information, such as data presentation and phenomenon description, have the highest average Binoculars scores. Additionally, the content type distributions in high and low Binoculars score collections are relatively stable, as the portion shifts are small.

Next, we examined the Binoculars score differences for each content type before and after the release of ChatGPT (Figure 4, right). Although most content types showed signs of a decrease in Binoculars scores, literature reviews did not experience a significant drop after the release of ChatGPT. Other parts that are usually considered rigid like data presentation and comparative analysis also have a small Binoculars score drop. In contrast, contents previously considered “novel” or “creative”, such as hypothesis formulation, conclusion summarization, phenomenon description, and future work suggestions show the largest score drops, indicating ChatGPT is more adopted in writing activities that involve creating previously unseen information, as content types like hypothesis, conclusion and future works may carry the most innovative part in a research paper.

3 Discussion

After analyzing around 45,000 manuscripts from vastly diverse disciplines and language backgrounds, we have empirically identified a growing role of LLM-generated text in scientific writing. This trend follows the release of ChatGPT in late 2022. We conducted our evaluation by computing, for each manuscript, the LLM-like content index, a classifier built on statistical features of the Binoculars scores. In this classifier, the lower the Binoculars score, the higher the LLM-like content index, and thus the more likely the content has been edited by LLMs. We then observed that the average LLM-like content index has significantly increased after 2022.11.30, and that this correlates with the Google Trends data of the keyword “ChatGPT”, indicating a widespread presence of LLM-generated text in scientific manuscripts. Next, we tracked the evolution of biweekly correlations between the mean Binoculars scores and citation numbers. An unexpected trend reversal was noted: before the release of ChatGPT, the correlation was around 0, suggesting that writing style had a minimal impact on a paper's influence. However, post-release, the correlation turned negative, indicating that papers with more LLM-generated content (lower Binoculars scores) are more likely to be cited (higher citation counts).

To further investigate whether this effect is caused by LLMs exaggerating or even cheating on key fact disclosures like data presentation and comparative analysis, we classified manuscript texts into different content types and checked their Binoculars score changes before and after the release of ChatGPT. First, we noted that the influence of LLMs on content types is imbalanced. Second, in contrast to our impression that LLMs may be used more in rigid parts like reviewing previous studies and presenting data, they're more frequently and heavily used in parts like hypothesis formulation and conclusion summarization. This suggests that LLMs may not harm the factual parts of a paper, which are usually data presentation and statistical comparative analysis; instead, they're more widely used in the “idea” parts.

Compared with other qualitative studies and philosophical reflections on the impact of LLMs on scientific writing [5, 7, 20, 21], our analysis provides quantitative evidence about LLM usage in scientific writing. Some other recent studies either focus on a limited domain or use fixed keywords as a proxy [8, 14], while our results cover vastly different domains and variables that may contribute to the influence independently. We also examined LLM usage and its potential to change the way people cite others' work, a key step in scientific knowledge dissemination.

Regardless of personal preferences and opinions, LLM-based tools like ChatGPT have become embedded in daily human communication. Unlike scenarios where students use ChatGPT for assignments, scientific writing has traditionally been viewed as a means of sharing ideas and new knowledge with humanity. Thus, the use of LLMs raises practical and ethical concerns beyond just “plagiarism” as narrowly defined [20]. Also, it is well known that LLMs may produce seemingly trustworthy text that contains hallucinations [22]. Extra attention must be paid before using them widely in scientific writing. However, despite the many skeptical perspectives, we think that overall, LLMs may be beneficial to the science community. We reached this conclusion for several reasons. To begin with, our analyses confirmed clear and wide usage trends of LLMs in scientific writing. This process is a one-way street, and we cannot put the genie back in the bottle given the accessibility of these tools. Disclosure has been proposed as a solution on some platforms, but we found it unlikely to be useful: in a sampled subset of 1000 low Binoculars score manuscripts, we found 0 declarations of LLM usage (see Supporting Information S1). The actual situation is more complicated. First, though the mean Binoculars scores have significantly decreased following the introduction of ChatGPT (Figure 1), they remain above 1.01, considerably higher than the threshold of around 0.9 set by [9]. This means that while the use of LLMs in scientific writing is widespread, it is not predominantly for generating extensive texts. Authors may primarily use LLMs for editing and revising. Second, the citation-Binoculars score correlation analysis indicates that the use of LLMs in scientific writing may help authors share ideas with peers. From the perspective of communication, this implies a positive role of LLMs in scientific writing. Lastly, we examined the usage of LLMs across different content types and found that key factual parts like data presentation are less likely to be influenced by LLMs. It may be more the wording change in the “creative” parts that leads to the wider popularity of papers that used LLMs. We don't see this as a bad sign in writing. With such applications, we cannot find any reason to oppose the use of LLMs in writing, as they could be used to bridge the communication gap caused by region/language barriers. In this case, the simple word replacement phenomena caused by LLMs [14] could be a byproduct and do not necessarily imply degraded content quality, though another study on the open review process in computer science does show bad signs [8].

Nevertheless, the analysis pipeline we constructed still has a few limitations. First, as pointed out by [9], it is impossible to completely determine whether a text is generated by an LLM using threshold values alone. There's a chance that improper use and attacks may reduce its reliability [20]. Other tools we used for analysis, like the zero-shot text classification model, may also suffer from similar issues. Second, though more authors upload their manuscripts to preprint platforms [23], these platforms still do not cover all scientific papers, not to mention that different domains also have varying tendencies in using preprints as a distribution channel. Besides, changes across versions and the timing of these submissions do not perfectly reflect the actual time of writing. Therefore, the data set we used cannot completely represent the entire picture; it is more an early attempt to spot the usage tendency in some major domains. Third, the correlation observed between the Binoculars score (LLM usage) and the number of citations does not indicate a causal effect, but rather an observational relationship. However, despite the limitations outlined above, this study represents, to our knowledge, the first comprehensive quantitative endeavor to reveal the footprint of LLMs in scientific writing activities and to link it to research impact with stable and significant results. For the future, we suggest (1) expanding the scope of analysis to include data from platforms like Dimensions and comprehensive metrics such as Altmetrics, which could offer a more refined basis for analysis, and (2) conducting larger, long-term studies to explore the causal relationship between the use of LLMs and their impact on papers, to determine if the current effects persist over time.

In conclusion, we have empirically shown that LLM-generated text has been used in many settings worldwide, playing a growing role in scientific writing. More importantly, its use is associated with higher citations, suggesting an improvement in research impact. Based on these findings, and setting aside transparency and integrity issues, we remain cautiously optimistic about the use of LLMs in scientific writing.

4 Methods

4.1 Data Source and Preprocessing

To extract submitted manuscript information from bioRxiv and medRxiv, we used the official “details” API in these platforms https://api.biorxiv.org and https://api.medrxiv.org/. For arXiv, manuscript information is downloaded directly from Kaggle (https://www.kaggle.com/datasets/Cornell-University/arxiv) as it provides free bulk access to all submissions on arXiv. For all platforms, we collect at most 1000 submissions in each month, starting from 2022.01 to 2024.03.
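The per-month cap described above can be sketched as a simple random subsample of the fetched metadata records. This is only an illustration: the function name `sample_per_month`, the `date` field formatted as `YYYY-MM-DD`, and the fixed random seed are assumptions and are not taken from the original pipeline.

```python
import random
from collections import defaultdict


def sample_per_month(records, cap=1000, seed=0):
    """Keep at most `cap` randomly chosen records for each calendar month.

    records: iterable of dicts with a 'date' string such as '2022-01-15'
    (hypothetical field name). Returns a dict mapping 'YYYY-MM' to the
    list of retained records.
    """
    by_month = defaultdict(list)
    for rec in records:
        by_month[rec["date"][:7]].append(rec)
    rng = random.Random(seed)  # seeded so the subsample is reproducible
    sampled = {}
    for month, items in sorted(by_month.items()):
        # Months with fewer than `cap` submissions are kept in full.
        sampled[month] = items if len(items) <= cap else rng.sample(items, cap)
    return sampled
```

Months with fewer submissions than the cap, as happens for medRxiv, are simply returned unchanged.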

All PDF files were directly downloaded from the corresponding platforms using the URLs in the metadata fetched above. Once downloaded, we used pymupdf (https://pymupdf.readthedocs.io/en/latest/) to parse the PDF files and turn them into plain text files. Non-ASCII characters are filtered out in this step, for the convenience of later analyses. Next, we segment each manuscript into chunks of length 512 for further Binoculars calculation.
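The filtering and chunking steps can be sketched as follows, starting from text that has already been extracted from the PDF. The unit of the chunk length is not stated above, so counting whitespace-separated tokens is an assumption made here for illustration, as are the function names.

```python
def to_ascii(text):
    """Drop every non-ASCII character from the text."""
    return text.encode("ascii", errors="ignore").decode("ascii")


def chunk_text(text, chunk_len=512):
    """Split text into consecutive chunks of at most chunk_len tokens.

    Tokens are whitespace-separated words here (an assumption); a
    model-specific tokenizer could be substituted without changing the logic.
    """
    tokens = to_ascii(text).split()
    return [
        " ".join(tokens[i:i + chunk_len])
        for i in range(0, len(tokens), chunk_len)
    ]
```

The last chunk of a manuscript may be shorter than the others; whether such short chunks were kept or discarded is not specified.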

4.2 Identification of Country/Region Information

At least one country/region and language is assigned to each manuscript in the whole data set (Figure 1). None of the platforms provides author country/region information directly. bioRxiv and medRxiv provide the corresponding author name and institution information. arXiv provides only a list of author names for each paper.

To simplify and implement our analysis, we made several decisions in this process. First, we assume that the corresponding author largely determines the content and writing style of the manuscript. Second, we assume that the last author provided by arXiv can, most of the time, be treated as the corresponding author of that paper. bioRxiv and medRxiv do not rely on this assumption as they provide the information directly. For bioRxiv and medRxiv, when the corresponding author is affiliated with more than one institution, we selected the first one as the only institution for our analysis. Third, when the corresponding author's institution information is available, we use a geospatial mapping tool (GS(2016)2948) API to get the country/region information of the institution and treat this as the country/region of the paper. Lastly, when the corresponding author's institution information is not available (arXiv), we use nationalize.io's service to infer the country/region of the corresponding author, as one's name statistically can be used to infer their ethnicity/nationality (needs citation). Then the official language is determined using the GB/T 2659.1-2022 Country Codes (also adopted in ISO-3166) and GB/T 4880.1-2005 Language Codes (equivalent to ISO-639). Since nationalize.io occasionally returns unknown for some names, we excluded such manuscripts from the country/region/language analysis.

4.3 ChatGPT Google Trends

We downloaded worldwide Google Trends data for the keyword “ChatGPT”, both weekly and daily, from https://trends.google.com/trends/. Weekly trends data is downloaded directly from the server. But for the daily trends data, since Google only provides a limited time interval for each query and the results are normalized within the interval from 0 to 100, we downloaded daily data in 2-month intervals and forced different queries to overlap. This approach allows the reconstruction of long-interval daily trends data using the earliest month as the base.
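The reconstruction of a long daily series from overlapping, independently normalized windows can be sketched as below. Each later window is rescaled so that it agrees with the already stitched series on the shared days. The function name `stitch_trends`, the dictionary layout, and the ratio-of-sums scaling rule are assumptions made for illustration; the exact rescaling used here is not described.

```python
def stitch_trends(windows):
    """Chain overlapping Google Trends windows onto the scale of the first one.

    windows: list of dicts {date_string: value}, ordered in time, where each
    window overlaps the previous one (hypothetical layout). Values in later
    windows are multiplied by a factor that matches them to the stitched
    series on the overlapping days.
    """
    stitched = dict(windows[0])  # the earliest window is the base scale
    for window in windows[1:]:
        overlap = [d for d in window if d in stitched and window[d] > 0]
        if not overlap:
            raise ValueError("consecutive windows must share a non-zero day")
        scale = sum(stitched[d] for d in overlap) / sum(window[d] for d in overlap)
        for d, value in window.items():
            if d not in stitched:
                stitched[d] = value * scale
    return stitched
```

After stitching, values are expressed relative to the earliest window and are no longer bounded by 100.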

4.4 Binoculars Score

In our analysis, we calculate Binoculars scores for each text chunk in a manuscript. At the manuscript level, we compute the variance, mean, and min values of all Binoculars scores in the manuscript.
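The manuscript-level aggregation can be sketched as follows, given the per-chunk Binoculars scores of one manuscript. The function name and the use of the population variance (rather than the sample variance) are assumptions; the scores themselves would come from the Binoculars detector [9], which is not reproduced here.

```python
from statistics import fmean, pvariance


def manuscript_features(chunk_scores):
    """Summarize per-chunk Binoculars scores into manuscript-level features."""
    if not chunk_scores:
        raise ValueError("a manuscript needs at least one scored chunk")
    return {
        "mean": fmean(chunk_scores),
        "var": pvariance(chunk_scores),  # population variance (assumption)
        "min": min(chunk_scores),
    }
```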

4.5 Content Type Classification

To examine the correlation between content types and Binoculars scores, we classified all text pieces into the following 12 content types: phenomenon description, hypothesis formulation, methodology explanation, data presentation, logical deduction, result interpretation, literature review, comparative analysis, conclusion summarization, future work suggestions, bibliography, publishing metadata.

This list covers the majority of content found in a typical scientific paper. Subsequently, we employed Meta's NLI-based Zero Shot Text Classification model (https://huggingface.co/facebook/bart-large-mnli) [25, 26] to perform zero-shot text classification using the above list of content types. Except for bibliography and publishing metadata, which are not essential for our analysis, the distributions are analyzed.
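The classification step can be sketched as a thin wrapper around a zero-shot classifier. The wrapper below assumes a callable that follows the output convention of the Hugging Face `zero-shot-classification` pipeline (a dict with parallel `labels` and `scores` lists); the function names and the choice to keep only the top-scoring label are assumptions.

```python
CONTENT_TYPES = [
    "phenomenon description", "hypothesis formulation",
    "methodology explanation", "data presentation", "logical deduction",
    "result interpretation", "literature review", "comparative analysis",
    "conclusion summarization", "future work suggestions",
    "bibliography", "publishing metadata",
]
# Types introduced by PDF parsing and left out of the distribution analysis.
EXCLUDED_TYPES = {"bibliography", "publishing metadata"}


def classify_chunk(chunk, classifier, labels=CONTENT_TYPES):
    """Return (label, score) for the highest-scoring content type.

    classifier: callable taking (text, candidate_labels=...) and returning
    a dict with parallel 'labels' and 'scores' lists.
    """
    result = classifier(chunk, candidate_labels=labels)
    score, label = max(zip(result["scores"], result["labels"]))
    return label, score


def is_analyzed(label):
    """True for content types that enter the distribution analysis."""
    return label not in EXCLUDED_TYPES
```

With the `transformers` library installed, a suitable `classifier` can be created with `pipeline("zero-shot-classification", model="facebook/bart-large-mnli")`; which pipeline settings were used in this study is not stated.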

Author Contributions

Huzi Cheng: conceptualization, data curation, visualization, validation, methodology, writing – original draft. Wen Shi: validation, methodology, writing – review and editing. Bin Sheng: validation, methodology. Aaron Y. Lee: validation, methodology. Josip Car: validation, methodology. Varun Chaudhary: methodology. Atanas G. Atanasov: project administration. Nan Liu: validation, project administration. Yue Qiu: project administration. Qingyu Chen: project administration. Tien Yin Wong: funding acquisition. Yih-Chung Tham: funding acquisition. Ying-Feng Zheng: funding acquisition, project administration, resources, supervision, writing – review and editing. All authors have read and approved the final manuscript.

Acknowledgments

Tien Yin Wong is supported by the National Key Research and Development Program of China (2022YFC2502800). Ying-Feng Zheng is supported by the National Key Research and Development Program of China (2022YFC2502800) and the National Natural Science Foundation of China (82171034).

Ethics Statement

The authors have nothing to report.

Conflicts of Interest

The authors declare no conflicts of interest.

Endnotes

Open Research

Data Availability Statement

All the source data are collected from publicly available platforms, as detailed in the Methods section. Analysis code will be available on GitHub.