Recent advances of Transformers in medical image analysis: A comprehensive review

Abstract

Recent works have shown that the Transformer's excellent performance on natural language processing tasks can be maintained on natural image analysis tasks. However, the complicated clinical settings of medical image analysis and varied disease properties bring new challenges for the use of the Transformer. The computer vision and medical engineering communities have devoted significant effort to Transformer-based medical image analysis research, with a special focus on scenario-specific architectural variations. In this paper, we comprehensively review this rapidly developing area by covering the latest advances of Transformer-based methods in medical image analysis across different settings. We first introduce the basic mechanisms of the Transformer, including implementations of self-attention and typical architectures. The important research problems in various medical image data modalities, clinical visual tasks, organs, and diseases are then reviewed systematically. We carefully collect 276 very recent works and 76 public medical image analysis datasets in an organized structure. Finally, discussions on open problems and future research directions are provided. We expect this review to serve as an up-to-date roadmap and a reference source for advancing the field of medical image analysis.

1 INTRODUCTION

For medical analysis, medical images are one of the most abundant data modalities. With the continuing development of computer vision (CV), medical image analysis can increasingly contribute to doctors' clinical practice. Some specific CV tasks on medical images can, to some extent, guide and aid doctors. For instance, segmentation can help doctors pick out the abnormal region, which reflects the symptoms of a disease and provides preliminary information for the corresponding medical intervention. On the other hand, medical image analysis differs from general CV tasks owing to the properties of medical images. Medical image datasets tend to be relatively small, so some frameworks that perform well on general CV tasks fall short of expectations in medical image analysis.

Despite the particularities of medical image analysis, there is still a strong relation between CV and medical image analysis. Hence, shifts in mainstream CV methods have also been reflected in the automatic analysis of medical images. Since deep learning reshaped the development of CV, the convolutional neural network (CNN) has been one of the most influential frameworks in image processing. Correspondingly, earlier attempts applied CNNs to detection, segmentation, and other visual tasks on various medical image modalities, such as computed tomography (CT), ultrasound (US), and magnetic resonance imaging (MRI). The convolution operation, on which CNNs are generally based, proved to be excellent at local feature extraction.

However, the shortcomings of the convolution operation became apparent with the emergence of Transformer structures tailored for images. The Transformer network was initially proposed for natural language processing (NLP), and the global dependency modeling of its attention mechanism allowed it to dominate NLP within a short period. Afterward, the Vision Transformer, which introduced the Transformer to image processing, was shown to outperform CNNs by a large margin in image recognition. Beyond image recognition, many visual tasks, such as image segmentation and image reconstruction, have also adopted the Transformer structure. Nevertheless, while the Transformer structure brought a sharp improvement in performance, it also demands larger datasets and more computational resources. Notably, medical image analysis, where data are scarce, may suffer from this shortcoming of the Transformer. How to balance the effectiveness of the Transformer against its heavy computational cost remains an open question.

This paper reviews many attempts to resolve this dilemma. Considering the complexity of clinical circumstances and disease symptoms, researchers have proposed many innovative methods for utilizing the Transformer in medical image analysis. Some fuse the Vision Transformer with other networks, while others tailor the Vision Transformer to specific clinical requirements. To present these Transformer-based methods comprehensively and systematically, we arrange them according to their corresponding CV tasks and target diseases. The paper is organized as follows. Section 2 introduces the mechanisms of the Transformer and the Vision Transformer. Sections 3–9 categorize the different applications of the Vision Transformer in medical imaging. Section 10 introduces some public medical imaging datasets. Section 11 summarizes the latest Transformer-based works in medical imaging and discusses future development.

2 MECHANISMS OF TRANSFORMER

Initially proposed by Vaswani et al.,1 the Transformer has been shown to perform excellently in NLP. Its key strengths, namely a global receptive field and the ability to model long-range dependencies, also apply to vision tasks once images are split into patches. In this section, the self-attention module, positional encoding, and the Transformer architecture are introduced as preparation for the Transformer-based works in Sections 3–9.

2.1 Self-attention

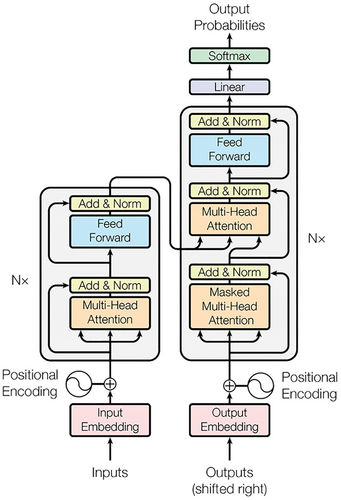

Self-attention is the core mechanism adopted in the Transformer (as shown in Figure 1) for sequence tasks such as sequence labelling. In a sequence-to-sequence task, where the input is a set of vectors rather than a single vector, a model may find it difficult to extract contextual information. Self-attention addresses this with the scaled dot-product: the contextual weight of each vector is obtained by computing scaled dot-products between pairs of vectors and then applying a Softmax operation.

2.1.1 Scaled dot-product

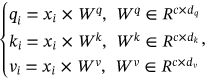

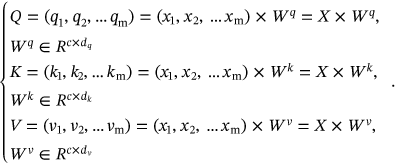

()

() ()

() ,

,  ,

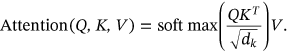

,  are shaped in the form of matrixes. With the matrix Q, K, the attention weight can be calculated by multiplying Q with K. After a

are shaped in the form of matrixes. With the matrix Q, K, the attention weight can be calculated by multiplying Q with K. After a  (dimension) division and a Softmax operation, the compressed attention weight is assigned to the V matrix. In conclusion, the Scaled dot-product attention can be described mathematically as follows:

(dimension) division and a Softmax operation, the compressed attention weight is assigned to the V matrix. In conclusion, the Scaled dot-product attention can be described mathematically as follows:

()
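The scaled dot-product attention described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not a reference implementation: matrices are lists of rows, Q, K, and V are passed in directly (the learned projections are omitted), and the helper names and toy values are hypothetical.

```python
import math

def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    """Multiply matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def scaled_dot_product_attention(q, k, v):
    """softmax(Q K^T / sqrt(d_k)) V for Q: n x d_k, K: m x d_k, V: m x d_v."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))                 # n x m pairwise dot products
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights               # n x d_v outputs, n x m weights

# Toy example: 3 tokens with d_k = d_v = 2 (hypothetical values).
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
out, w = scaled_dot_product_attention(Q, K, V)
print([round(sum(r), 6) for r in w])  # each row of attention weights sums to 1
```

Each output row is a weighted average of the value rows, with weights determined by how strongly the corresponding query matches every key.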

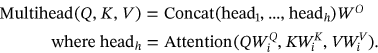

()2.1.2 Multi-Head Attention

()

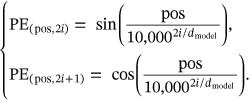
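A minimal sketch of multi-head self-attention follows, assuming plain Python lists, h heads that each have their own projection matrices, and an output projection $W^{O}$ applied to the concatenated heads. The helper names and the toy projections (each head simply copies a slice of the input dimensions) are hypothetical choices for illustration; in a real model these matrices are learned.

```python
import math

def matmul(a, b):
    """Multiply matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def attention(q, k, v):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    out = []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k) for kj in k]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        out.append([sum(e * vj[c] for e, vj in zip(exps, v)) / total
                    for c in range(len(v[0]))])
    return out

def multi_head_self_attention(x, w_q, w_k, w_v, w_o):
    """x: n x d_model. w_q, w_k, w_v: lists of h per-head matrices (d_model x d_head).
    w_o: (h * d_head) x d_model projection applied to the concatenated heads."""
    heads = [attention(matmul(x, wq), matmul(x, wk), matmul(x, wv))
             for wq, wk, wv in zip(w_q, w_k, w_v)]
    # Concatenate the per-head outputs token by token.
    concat = [[val for head in heads for val in head[i]] for i in range(len(x))]
    return matmul(concat, w_o)

def slice_projection(start, d_model=4, d_head=2):
    """Hypothetical toy projection that copies input dimensions [start, start + d_head)."""
    return [[1.0 if r == start + c else 0.0 for c in range(d_head)] for r in range(d_model)]

X = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0], [1.0, 1.0, 0.0, 0.0]]
W_heads = [slice_projection(0), slice_projection(2)]                   # h = 2, d_head = 2
W_O = [[1.0 if r == c else 0.0 for c in range(4)] for r in range(4)]  # identity
Y = multi_head_self_attention(X, W_heads, W_heads, W_heads, W_O)
```

With an identity $W^{O}$, the first two output columns equal single-head attention over the first two input dimensions, which illustrates that each head attends within its own projected subspace.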

2.2 Positional encoding

Positional encoding is an effective method to retain positional information and has also been adopted previously in CNNs and RNNs. Because the Transformer processes a sequence as a whole, with no regard to the order of or distance between its elements, the position information of the data would otherwise be missing. Therefore, positional encoding in the Transformer is not merely a complement to recurrence or convolution, as it tends to be in RNNs or CNNs, but the essential indicator that carries all of the positional information, whether relative or absolute.

Here, $pos$ stands for the position index of a particular word, $i$ indexes the dimension, and $d_{model}$ is the embedding dimension. For the even and odd dimensions of the positional encoding, different sinusoid functions are adopted as follows:

$$PE_{(pos,\,2i)} = \sin\left(\frac{pos}{10000^{2i/d_{model}}}\right)$$

$$PE_{(pos,\,2i+1)} = \cos\left(\frac{pos}{10000^{2i/d_{model}}}\right)$$

Owing to the angle-addition identities of sinusoid functions, for any fixed offset $k$, $PE_{pos+k}$ can be represented as a linear function of $PE_{pos}$.
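The sinusoidal encoding, and the property that the encoding of position pos + k is a linear function of the encoding of position pos, can be checked numerically with the sketch below. It assumes an even $d_{model}$ and interleaves the values (sin at even indices 2i, cos at odd indices 2i + 1); the function names are hypothetical. The shift function applies, for each frequency, a 2x2 rotation derived from the angle-addition identities, a map that depends only on k and not on pos.

```python
import math

def positional_encoding(pos, d_model):
    """Sinusoidal encoding: sin on even dimensions 2i, cos on odd dimensions 2i + 1."""
    pe = []
    for i in range(d_model // 2):
        angle = pos / (10000 ** (2 * i / d_model))
        pe.extend([math.sin(angle), math.cos(angle)])
    return pe

def shift_encoding(pe, k, d_model):
    """Map PE(pos) to PE(pos + k) with a linear map that depends only on k:
    one 2x2 rotation per (sin, cos) pair, from the angle-addition identities."""
    shifted = []
    for i in range(d_model // 2):
        delta = k / (10000 ** (2 * i / d_model))
        s, c = pe[2 * i], pe[2 * i + 1]
        # sin(a + b) = sin a cos b + cos a sin b;  cos(a + b) = cos a cos b - sin a sin b
        shifted.extend([s * math.cos(delta) + c * math.sin(delta),
                        c * math.cos(delta) - s * math.sin(delta)])
    return shifted

d_model = 8
pe_5 = positional_encoding(5, d_model)
# Largest deviation between the shifted PE(5) and the directly computed PE(8).
print(max(abs(a - b) for a, b in zip(shift_encoding(pe_5, 3, d_model),
                                      positional_encoding(8, d_model))))
```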

2.3 Transformer architecture

Designed for sequence-to-sequence tasks, the Transformer adopts an encoder-decoder architecture, like other successful neural sequence transduction models. A typical Transformer consists of Multi-Head Attention, masked Multi-Head Attention, Feed Forward, and layer normalization blocks.

2.3.1 Encoder

, after the Multi-Head Attention block, the sequence gets normalized and added by the input before the Multi-Head Attention. Then, the data sequence gets processed through a Feed Forward layer and a residual connection, an output sequence Z =

, after the Multi-Head Attention block, the sequence gets normalized and added by the input before the Multi-Head Attention. Then, the data sequence gets processed through a Feed Forward layer and a residual connection, an output sequence Z =  is fed to decoder. The computation process of Feed Forward layer can be depicted as follows:

is fed to decoder. The computation process of Feed Forward layer can be depicted as follows:

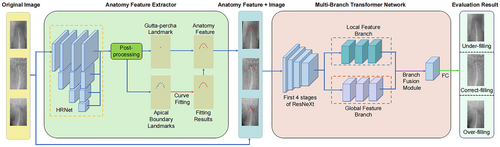

()
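The sketch below illustrates the Feed Forward layer and the Add & Norm steps of one encoder layer, applied to individual token vectors. It is a minimal illustration under assumptions: plain Python lists, layer normalization without the learned gain and bias, the residual-then-normalize ordering of the original Transformer, and hypothetical toy weights. The attention sublayer is omitted for brevity.

```python
import math

def layer_norm(x, eps=1e-5):
    """Normalize one token vector to zero mean and unit variance
    (the learned gain and bias of LayerNorm are omitted in this sketch)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]

def ffn(x, w1, b1, w2, b2):
    """Position-wise Feed Forward layer: FFN(x) = max(0, x W1 + b1) W2 + b2."""
    hidden = [max(0.0, sum(xi * w1[i][j] for i, xi in enumerate(x)) + b1[j])
              for j in range(len(b1))]
    return [sum(h * w2[j][k] for j, h in enumerate(hidden)) + b2[k]
            for k in range(len(b2))]

def add_and_norm(x, sublayer_out):
    """Residual connection followed by layer normalization: LayerNorm(x + Sublayer(x))."""
    return layer_norm([a + b for a, b in zip(x, sublayer_out)])

def encoder_ffn_sublayer(tokens, w1, b1, w2, b2):
    """Apply the FFN with Add & Norm to every token independently (position-wise)."""
    return [add_and_norm(t, ffn(t, w1, b1, w2, b2)) for t in tokens]

# Hypothetical toy weights: d_model = 2, inner dimension d_ff = 3.
w1 = [[1.0, -1.0, 0.5], [0.0, 1.0, -0.5]]
b1 = [0.0, 0.0, 0.0]
w2 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
b2 = [0.0, 0.0]
encoded = encoder_ffn_sublayer([[1.0, 0.0], [0.0, 2.0]], w1, b1, w2, b2)
```

For the first token, the second hidden unit has a negative pre-activation and is zeroed by the ReLU (the max(0, ·) term), which is how the FFN introduces non-linearity between its two linear maps.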

2.3.2 Decoder

The Transformer decoder contains three sublayers and generates the output sequence of the whole model based on the output of the encoder. Notably, the bottom Multi-Head Attention layer of the decoder is masked: because a sequence-to-sequence task takes sequences as both input and output, the mask cuts off the influence of subsequent positions so that the prediction for each position depends only on earlier positions. The following Multi-Head Attention layer, whose queries come from the decoder and whose keys and values come from the encoder output, and the Feed Forward layer are similar to those in the encoder.
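The masking step in the decoder's bottom attention layer can be sketched as follows. Assuming the raw attention scores have already been computed (the toy scores below are hypothetical), entries for subsequent positions are set to negative infinity before the Softmax, so every position receives zero weight on later positions.

```python
import math

def masked_attention_weights(scores):
    """Apply a causal mask (position i may attend only to positions j <= i),
    then a row-wise softmax."""
    n = len(scores)
    weights = []
    for i in range(n):
        # Future positions get -inf, so exp(-inf) = 0 after the softmax.
        row = [scores[i][j] if j <= i else float("-inf") for j in range(n)]
        m = max(row)
        exps = [math.exp(v - m) for v in row]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights

scores = [[0.5, 1.0, 2.0],
          [0.3, 0.3, 4.0],
          [1.0, 1.0, 1.0]]
W = masked_attention_weights(scores)
```

Even though the second row has a large score for the third position, that position is masked out, so the weight is split evenly between the first two positions.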

In this review, we consider various types of Transformer models used in the field of medical image analysis in different settings.

3 MEDICAL IMAGE SEGMENTATION

Medical images can reveal the symptoms of a disease, which is valuable for both diagnosis and treatment. However, from a pixel-wise perspective, only part of a medical image contributes to subsequent diagnosis and treatment, for example, the tumor region in a CT image. Hence, segmenting the targeted region of a medical image, whether an infected area or an abnormal organ, remains an important and challenging task. Fortunately, many medical image researchers have applied the Transformer, a prevalent framework in computer vision, to automatic medical image segmentation. Some of their efforts are summarized in Table 1.

| Name | Modality | Organ/Method | Disease/Dimension |

|---|---|---|---|

| RANT2 | Laryng | Throat | None |

| MBT-Net3 | Fundus | Eye | Corneal |

| PCAT-UNet4 | Fundus | Retinal | Vessel |

| TransBridge5 | Echocar | Cardiac | Left ventricle |

| GT-Unet6 | X-ray | Tooth | Rootcanal |

| AGMB-Transformer7 | X-ray | Tooth | Rootcanal |

| Chest l-Transformer8 | X-ray | Chest | None |

| GuifangZ9 | X-ray | Catheter | Guide-wire |

| MSAM10 | PETCT | Lung | Lung cancer |

| TransDeepLab11 | Multimodality | Pure Transformer | 2D |

| TransNorm12 | Multimodality | UNet-based | 2D |

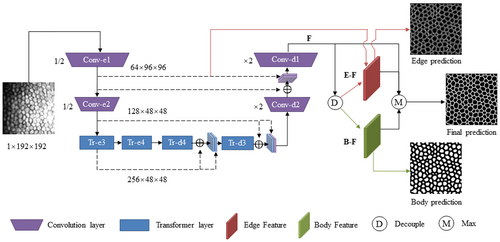

| EG-TransUNet13 | Multimodality | UNet-based | 2D |

| HRSTNet14 | Multimodality | Others | None |

| ScaleFormer15 | Multimodality | CNN-based | None |

| TFCNs16 | Multimodality | Others | None |

| Karimi17 | Multimodality | Pure Transformer | 3D |

| TransUNet18 | Multimodality | UNet-based | 2D |

| UTNet19 | Multimodality | UNet-based | 2D |

| MedT20 | Multimodality | CNN-based | None |

| SwinUnet21 | Multimodality | UNet-based | 2D |

| AFTer-UNet22 | Multimodality | UNet-based | 3D |

| MissFormer23 | Multimodality | Pure Transformer | 2D |

| DS-TransUNet24 | Multimodality | UNet-based | 2D |

| PMTrans25 | Multimodality | CNN-based | None |

| UTran26 | Multimodality | UNet-based | 2D |

| LeViT-UNet27 | Multimodality | Others | None |

| CASTformer28 | Multimodality | GAN | None |

| HiFormer29 | Multimodality | CNN-based | None |

| LViT30 | Multimodality | Others | None |

| DFormer31 | Multimodality | UNet-based | 3D |

| MCTrans32 | Multimodality | CNN-based | None |

| HyLT33 | Multimodality | CNN-based | None |

| TranFuse34 | Multimodality | CNN-based | None |

| UTrans26 | Multimodality | UNet-based | 2D |

| Segtran35 | Multimodality | CNN-based | None |

| Li36 | Multimodality | UNet-based | 2D |

| TransClaw UNet37 | Multimodality | UNet-based | 2D |

| TransAttUNet38 | Multimodality | UNet-based | 2D |

| nnFormer39 | Multimodality | Pure Transformer | 3D |

| VT-UNet40 | Multimodality | Pure Transformer | 3D |

| MSHT41 | Multimodality | CNN-based | None |

| USegTransformer42 | Multimodality | CNN-based | None |

| TUnet43 | Multimodality | UNet-based | 2D |

| ViTBIS44 | Multimodality | Pure Transformer | 2D |

| Atlas-ISTN45 | Multimodality | Pure Transformer | 3D |

| Shen Jiang46 | Micro | Celluar | Tissue |

| CellDETR47 | Micro | Cell | Cell |

| DFANet48 | MRI | Bone | Osteosarcoma |

| Liqun Huang49 | MRI | Brain | Glioma |

| TransBTS50 | MRI | Brain | Brain tumor |

| Swin-UNETR51 | MRI | Brain | Brain tumor |

| BiTr-Unet52 | MRI | Brain | Brain tumor |

| 3D Transformer53 | MRI | Brain | Brain region |

| MRA-TUNet54 | MRI | Cardiac | Atrial |

| HybridCTrm55 | MRI | Brain | Brain tumor |

| Zheyao G56 | MRI | Cardiac | Right ventricle |

| TransConver57 | MRI | Brain | Brain tumor |

| METran58 | MRI | Brain | Stroke |

| SwinBTS59 | MRI | Brain | Braintumor |

| BTSwin-Unet60 | MRI | Brain | Braintumor |

| UTransNet61 | MRI | Brain | Stroke |

| TF-Unet62 | MRI | Cardiac | Atrial |

| CST63 | MRI | Colorectal | Colorectalcancer |

| SpecTr64 | HSI | None | None |

| BAT65 | Dermo | Skin | Melanoma |

| FAT-Net66 | Dermo | Skin | Melanoma |

| Swin-PANet67 | Dermo | Skin | Melanoma |

| Polyp-PVT68 | Colonos | Colorectal | Poly |

| SwinE-Net69 | Colonos | Colorectal | Poly |

| Cotr70 | CT | Multiorgan | 3D organ |

| PHTrans71 | CT | Multiorgan | Abonominal |

| COTRNet72 | CT | Kidney | Kidney cancer |

| HT-Net73 | CT | Multiorgan | Cross region |

| UCATR74 | CT | Brain | Stroke |

| CCAT-net75 | CT | Chest | COVID-19 |

| Danfeng76 | CT | Lung | Lung cancer |

| CAC-EMVT77 | CT | Cardica | CAC |

| DTNet78 | CT | Bone | Cranio |

| Liu79 | ABVS | Breast | Breast tumor |

| MS-TransUNet80 | Multi | UNet | 2D |

3.1 X-ray or radiographic images

3.1.1 Tooth root segmentation

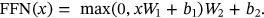

In root canal therapy for periodontitis, both underfilling and overfilling may negatively affect patients. However, automatic assessment of root canal therapy must be based on accurate tooth root segmentation. Because tooth root boundaries are fuzzy, Li et al.6 proposed AGMB-Transformer to achieve efficient and accurate tooth root segmentation. To handle ambiguous boundaries and low-resolution imaging, AGMB-Transformer uses an anatomy feature extractor and a multibranch Transformer network. Experimental results showed AGMB-Transformer's superior performance compared with ResNet, GCNet, and BoTNet. The pipeline of AGMB-Transformer is shown in Figure 2.

3.1.2 Lung disease segmentation

Despite the popularity of weakly supervised deep learning models, these models may not apply effectively to chest radiographs. On the one hand, lung images are largely, but not rigorously, symmetrical, which may confuse the learning models; on the other hand, some regions of the chest may be immune to certain diseases, and such hidden connections tend to be ignored by weakly supervised deep learning models. Thus, Gu et al.8 proposed a novel Chest L-Transformer to segment thoracic disease regions and diagnose the disease. Specifically, Chest L-Transformer employs a CNN for local feature extraction and a Transformer with positional embedding to distribute different levels of attention over the regions of the chest radiograph. Experimental results on the SIIM-ACR Pneumothorax Segmentation dataset demonstrated the performance of Chest L-Transformer.

3.2 MRI

3.2.1 Bone tumor segmentation

Osteosarcoma, a malignant bone tumor, is highly resistant to chemotherapy and has a high recurrence rate. For the diagnosis of osteosarcoma, MRI depicts soft tissue well, which makes it sensitive to osteosarcoma. However, MRI scans involve large amounts of data and considerable noise. Thus, Wang et al.48 used an Edge Enhancement based Transformer (Eformer) to denoise the input MRI images and a deep feature aggregation network for real-time semantic segmentation (DFANet) to segment osteosarcoma from the original bone MRI images.

3.2.2 Brain tumor segmentation

Accounting for 80% of malignant brain tumors, glioma is difficult to diagnose automatically owing to its variable appearance and ambiguous boundaries. Transformer-based methods for glioma include Refs.49-52, 55, 57, 59. Jiang et al.59 proposed SwinBTS, which introduces the Swin Transformer into a U-shaped structure for 3D brain tumor segmentation. Fusing the convolution operation with the attention mechanism, SwinBTS adopts the Swin Transformer as both encoder and decoder. In addition, Jiang et al. designed an Enhanced Transformer Block based on self-attention to further extract features that the encoder may have failed to capture. SwinBTS achieved state-of-the-art results on BraTS 2019, BraTS 2020, and BraTS 2021.

3.2.3 Stroke segmentation

A shortage of blood supply can damage brain tissue and cause ischemic stroke. Assessing the affected brain tissue requires a precise segmentation method that delineates the boundary of the lesion area. Wang et al.58 proposed METrans, which extracts multiscale features to improve the segmentation quality of stroke lesions. Specifically, Wang et al. introduced an attention-based block, the convolutional block attention module (CBAM), into the encoder-decoder structure. Meanwhile, to preserve low-level features, they supplemented the attention-based modules with three encoders for local details. Experimental results on ISLES2018 and ATLAS showed that METrans outperformed state-of-the-art methods in terms of Dice.

3.2.4 Ventricle segmentation

For the diagnosis of many cardiovascular diseases, the accuracy with which cardiac structures can be segmented affects the assessment result. However, precise segmentation of the right ventricle (RV) demands both short-axis (SA) and long-axis (LA) images, posing a challenge for current segmentation methods. Fusing U-Net with the Transformer, Chen et al.54 proposed MRA-TUNet to segment the atria and ventricles. The performance of MRA-TUNet was confirmed by experimental results on ACDC and the 2018 Atrial Segmentation Challenge. For ventricle segmentation, MRA-TUNet achieved Dice scores of 0.961 for the left ventricle and 0.911 for the right ventricle; for the atrium, the Dice score reached 0.923.
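Because Dice scores are the main metric quoted throughout this section, a minimal sketch of the Dice similarity coefficient for binary masks may help. It assumes both masks are flattened to equal-length sequences of 0/1 values; the function name is hypothetical, and the small eps term is an assumed smoothing convention that keeps the score defined when both masks are empty.

```python
def dice_coefficient(pred, target, eps=1e-8):
    """Dice = 2|A ∩ B| / (|A| + |B|) for two flattened binary masks A (pred) and B (target)."""
    if len(pred) != len(target):
        raise ValueError("masks must contain the same number of pixels")
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    size_sum = sum(1 for p in pred if p) + sum(1 for t in target if t)
    # eps (an assumed smoothing term) makes two empty masks score 1.0 instead of dividing by zero
    return (2 * intersection + eps) / (size_sum + eps)

pred   = [1, 1, 0, 0, 1, 0]
target = [1, 0, 0, 0, 1, 1]
print(round(dice_coefficient(pred, target), 4))  # prints 0.6667
```

Here the masks overlap on 2 pixels and each contains 3 foreground pixels, so Dice = 2 × 2 / (3 + 3) ≈ 0.667.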

3.3 CT scans

3.3.1 Kidney cancer segmentation

Kidney cancer, one of the most prevalent cancers worldwide, can be cured effectively if detected at an early stage. For automatic CT diagnosis of kidney cancer, variation in the location, shape, and other properties of kidney tumors poses a challenge for segmentation. Shen et al.72 proposed an end-to-end COTRNet fusing a CNN with a Transformer, with skip connections in its encoder-decoder structure. In the 2021 Kidney and Kidney Tumor Segmentation Challenge (KiTS21), COTRNet ranked 22nd, with an average Dice of 61.6%, a surface Dice of 49.1%, and a tumor Dice of 50.52%.

3.3.2 Brain stroke segmentation

Among the three main kinds of stroke, acute ischemic stroke (AIS) requires urgent diagnosis because its symptoms can deteriorate. However, at an early stage the boundary between healthy tissue and AIS lesions is indistinguishable to the naked eye, which makes early intervention for AIS quite demanding for doctors. Luo et al.74 proposed a novel UCATR network to segment the target area, namely the AIS region. Fusing the attention mechanism and the convolution operation, UCATR adopts an encoder-decoder structure. The encoder combines a CNN and a Transformer to extract both global and local features, and the decoder uses a Transformer-based network to delineate the lesion area with high accuracy. Experimental results demonstrated that UCATR reached a Dice similarity coefficient of 73.58%, outperforming three other methods.

3.3.3 Craniomaxillofacial deformity segmentation

For patients with craniomaxillofacial deformities, surgery may benefit from accurate bone segmentation and precise localization of anatomical landmarks. Therefore, Lian et al.78 proposed an end-to-end DTNet to perform both segmentation and landmark localization. With two communicating branches, DTNet retains sufficient local details while also having a global receptive field. In addition, a regionalized dynamic learner (RDL) was designed to associate neighboring landmarks. Compared with other multitask networks, DTNet achieved state-of-the-art performance.

3.3.4 Guide-wire segmentation

Successful cardiovascular interventional therapy requires precise insertion of a guide-wire to place a stent or deliver drugs. However, previous guide-wire segmentation networks are CNN-based and lack global dependency modeling. Thus, Zhang et al.9 proposed a novel network that introduces the Transformer into guide-wire segmentation. Instead of taking only a single frame as input, Zhang et al. added several previous frames to the input sequence. A CNN extracts the features of the input frames, while the Transformer models long-range dependencies. Given the scarcity of catheter datasets, the network was tested on datasets from three hospitals, and experimental results showed that it outperformed other segmentation models.

3.3.5 2D organ segmentation

Datasets in medical imaging tasks are generally smaller than those in other computer vision tasks, which makes it hard for a pure Transformer, lacking the inductive biases of convolution, to learn well. Thus, Liu et al.71 proposed PHTrans, which combines the Transformer and the CNN. PHTrans takes advantage of the U-shaped encoder-decoder design and arranges a series of Trans&Conv blocks into a Parallel Hybrid Module. Inside each Trans&Conv block, a Transformer-based branch and a CNN-based branch run in parallel so that global and local features are processed simultaneously. Experimental results showed PHTrans's superior performance over other state-of-the-art models.

3.3.6 3D organ segmentation

Although a pure Transformer excels at modeling global dependencies, it is poorly suited to 3D medical image segmentation owing to its high computational and spatial complexity. Thus, Xie et al.70 proposed CoTr, which combines a CNN and a deformable Transformer to balance computational cost and accuracy. Evaluated on the BCV dataset, which covers 11 major human organs, CoTr outperformed other CNN-based and Transformer-based methods.
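To make the complexity argument concrete, the sketch below counts tokens and attention-matrix entries when a 3D volume is split into non-overlapping patches that each become one token. The 128 × 128 × 128 volume and the patch sizes are hypothetical values chosen for illustration, not settings from CoTr, and the count covers a single head of full global self-attention; CoTr's deformable Transformer instead attends to a small set of sampled positions.

```python
def attention_cost(volume_shape, patch_shape):
    """Return (number of tokens, entries in one n x n attention matrix) when a 3D
    volume is split into non-overlapping patches that each become one token."""
    n = 1
    for size, patch in zip(volume_shape, patch_shape):
        if size % patch:
            raise ValueError("volume size must be divisible by patch size")
        n *= size // patch
    return n, n * n

for p in (16, 8, 4):
    tokens, entries = attention_cost((128, 128, 128), (p, p, p))
    print(f"patch {p}^3: {tokens} tokens, {entries:,} attention entries per head")
```

With 16^3 patches the volume yields 512 tokens and 262,144 attention entries; with 4^3 patches it yields 32,768 tokens and 1,073,741,824 entries. Halving the patch edge multiplies the token count by 8 and the attention matrix by 64.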

3.4 Fundus or optical coherence tomography (OCT)

3.4.1 Corneal endothelial cell segmentation

Zhang et al.3 proposed MBT-Net to address the blurred cell edges in corneal endothelial images, which are caused by uneven reflection as well as tremor and movement. Combining CNN and Transformer architectures, MBT-Net first uses a CNN to extract local features of the corneal endothelial cell image and then performs global analysis through a Transformer and residual connections. Experimental results on TM-EM3000 and Alisarine showed that MBT-Net outperformed UNet and TransUNet in terms of Dice, F1, sensitivity (SE), and specificity (SP). Figure 3 shows the structure of MBT-Net for segmentation.

3.4.2 Retinal vessel segmentation

Accurate retinal vessel segmentation can benefit the diagnosis of both ophthalmic and systemic diseases. However, this task demands both local details and global information interaction, making a pure CNN or a pure Transformer unsuitable. Thus, Chen et al.4 proposed PCAT-UNet, which adopts a U-shaped structure with convolution operations to process local features and a Transformer to construct global dependencies. In PCAT-UNet, Chen et al. designed two units, PCAT and FGAM, for feature extraction and fusion. Experimental results on the DRIVE and STARE datasets demonstrated PCAT-UNet's state-of-the-art performance.

3.5 Other modalities

3.5.1 Dermoscopy

Melanoma segmentation on dermoscopy images is hampered by the varied appearance and vague boundaries of melanoma, which require sufficient local details. On the other hand, a larger receptive field is required for accurate skin lesion segmentation. Neither a pure CNN nor a pure Transformer can fully tackle this problem. Wu et al.66 proposed FAT-Net, which introduces an extra Transformer branch to capture global context while retaining sufficient local information. FAT-Net makes three notable adjustments to the classical architecture: (1) a dual encoder is adopted instead of a single encoder; (2) three feature adaptation modules (FAM) are employed; and (3) a memory-efficient decoder is used to combine the global context and the local information. The effectiveness of these adjustments was validated by experimental results on ISIC 2016, ISIC 2017, ISIC 2018, and PH2.

3.5.2 Microscopy

Jiang et al.46 designed a gated position-sensitive axial attention mechanism, which aims to make Transformer-based networks applicable to small datasets. Unlike the patch division that vision Transformers generally use, the proposed method samples the input image iteratively. In addition, a strip convolution module (SCM) and a pyramid pooling module (PPM) were adopted to improve the network's capability to interpret global context. Experimental results on three datasets showed that this model outperformed other segmentation models in terms of F1 score and IoU. Instance segmentation of single-cell microscopy images, by contrast, requires much preliminary manual analysis. Building on DETR, Tim et al.47 proposed CellDETR to achieve end-to-end instance segmentation of yeast cells. The main architecture of CellDETR is similar to that of DETR; however, CellDETR has one-tenth as many parameters as DETR and employs learned position encodings, so the network performs cell-specific instance segmentation with higher efficiency. Compared with Mask R-CNN and U-Net, CellDETR showed improvements in both segmentation accuracy and inference runtime.
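The axial attention idea behind the method of Jiang et al.46 can be illustrated with a simplified sketch: instead of attending over all H·W positions at once, each token attends along its row and then along its column, so across the two passes it attends to W + H positions. This sketch assumes plain Python lists and omits the gating, the position-sensitive terms, and the learned projections of the actual method; the function names are hypothetical.

```python
import math

def self_attention(seq):
    """Single-head self-attention over a list of d-dim vectors, using Q = K = V = seq
    (learned projections omitted for brevity)."""
    d = len(seq[0])
    out = []
    for q in seq:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in seq]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        out.append([sum(e * v[c] for e, v in zip(exps, seq)) / total for c in range(d)])
    return out

def axial_attention(grid):
    """grid: H x W list of d-dim vectors. Attend along each row (width axis),
    then along each column (height axis) of the row-attended result."""
    rows = [self_attention(row) for row in grid]
    H, W = len(rows), len(rows[0])
    cols = [self_attention([rows[h][w] for h in range(H)]) for w in range(W)]
    return [[cols[w][h] for w in range(W)] for h in range(H)]  # restore H x W layout

feature_map = [[[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]],
               [[0.2, 0.8], [0.9, 0.1], [0.4, 0.6]]]  # hypothetical 2 x 3 grid, d = 2
result = axial_attention(feature_map)
```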

3.5.3 Endoscope

Laryngeal diseases, whether lesions or tumors, can only be detected through an electronic laryngoscope owing to the structural complexity of the larynx. For CV-assisted laryngeal lesion detection, few studies focus on multiobject segmentation of electronic laryngoscope images. Hence, Pan et al.2 proposed a novel RANT, which uses both a vision Transformer and a CNN to capture not only global context but also sufficient multiscale details. Specifically, four pyramid vision Transformers (PVT) are employed to obtain multiscale features, with skip connections made at each PVT layer. Experimental results on two public laryngeal datasets showed that RANT achieved mIoU values of 76.63% and 88.77% and mDSC values of 83.45% and 93.49%, respectively.

3.5.4 Echocardiography

For left ventricle segmentation, manual labelling is time-consuming and prone to observer bias. Therefore, Deng et al.5 proposed TransBridge, a lightweight Transformer-based model that segments the left ventricle automatically with high efficiency. Combining CNN and Transformer, TransBridge extracts features with a CNN encoder-decoder architecture and builds long-range dependencies with a Transformer. Compared with CoTr,5 TransBridge reduced the total number of parameters by 78.7% and improved the Dice coefficient to 91.4%.

3.5.5 Hyperspectral imaging

Unlike other medical imaging methods, hyperspectral imaging emits a wide spectrum of light and analyzes the corresponding reflected and transmitted light, including bands that may be invisible to the naked eye. Yun et al.64 proposed SpecTr, which introduces Transformer and CNN components for hyperspectral image segmentation. The authors treated the analysis of spectral band representations as a sequence-to-sequence prediction task. Adopting a U-shaped structure, they used a Transformer as the encoder with a sparsity constraint tailored to the properties of spectral bands. Convolution operations were used in both the encoder and decoder for feature extraction and recovery.

3.6 Multimodality

3.6.1 Pure Transformer-based 2D segmentation

Huang et al.23 proposed MISSFormer, a medical image segmentation network based on a pure Transformer. Segmentation networks based on pure Transformers are thought to lack local details. To overcome this drawback, Huang et al. made two modifications to the Transformer-based structure: (1) MISSFormer replaces the typical feed-forward network (FFN) with an improved block, the Enhanced Transformer Block, which both strengthens global dependencies and retains sufficient local details; (2) an Enhanced Transformer Context Bridge, designed in this work, is employed to feed multiscale features into the network.

3.6.2 Pure Transformer-based 3D segmentation

Karimi et al.17 proposed a novel convolution-free 3D medical image segmentation method based on a pure Transformer. Specifically, Karimi et al. first divided the input 3D image into 3D patches and fed them, after positional encoding, into an attention-based encoder. The predicted patches then represent the spatial distribution of the target region. In experiments on brain cortical plate, pancreas, and hippocampus datasets, this model outperformed CNN-based methods for 3D medical image segmentation. In addition, for small training sets, pretraining can further improve the model's performance.
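The first step of this convolution-free pipeline, dividing a 3D image into 3D patches, can be sketched as below. It assumes a D x H x W volume stored as nested lists with every dimension divisible by the patch size p; each patch is flattened into one token, and its patch-grid index is returned so that a positional encoding could be added. The function name and toy volume are hypothetical, and the patch embedding and attention encoder of Karimi et al.17 are not reproduced.

```python
def split_into_3d_patches(volume, p):
    """Split a D x H x W volume (nested lists) into non-overlapping p x p x p patches.
    Returns flattened patch tokens in raster order and their (d, h, w) grid indices."""
    D, H, W = len(volume), len(volume[0]), len(volume[0][0])
    if D % p or H % p or W % p:
        raise ValueError("each volume dimension must be divisible by the patch size")
    tokens, positions = [], []
    for d0 in range(0, D, p):
        for h0 in range(0, H, p):
            for w0 in range(0, W, p):
                patch = [volume[d][h][w]
                         for d in range(d0, d0 + p)
                         for h in range(h0, h0 + p)
                         for w in range(w0, w0 + p)]
                tokens.append(patch)
                positions.append((d0 // p, h0 // p, w0 // p))
    return tokens, positions

# Toy 4 x 4 x 4 volume whose voxel value encodes its (d, h, w) coordinates.
vol = [[[100 * d + 10 * h + w for w in range(4)] for h in range(4)] for d in range(4)]
tokens, positions = split_into_3d_patches(vol, 2)
print(len(tokens), len(tokens[0]))  # 8 patches, each with 2 * 2 * 2 = 8 voxels
```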

3.6.3 CNN-based segmentation

CNNs have intrinsic inductive biases, whereas Transformers require large datasets. Therefore, combining these two structures can offset their respective shortcomings. CNN-based Transformer attempts include Refs.15, 20, 25, 26, 29, 32. From a scale-wise perspective, Huang et al. identified two major problems, intrascale and interscale, for methods that replace convolution layers with a pure Transformer. Targeting these scale-wise problems, Huang et al.15 proposed ScaleFormer. For the intrascale problem, ScaleFormer designs a Dual-Axis MSA module to correlate the local features extracted by the CNN; for the interscale problem, ScaleFormer introduces a novel Transformer-based network that communicates between regions at different scales. Experimental results on three datasets demonstrated that ScaleFormer surpassed state-of-the-art results.

3.6.4 U-Net based 2D segmentation

The U-Net design naturally lacks long-range dependency modeling, whereas the Transformer structure is deficient in low-level features. Thus, combining U-Net and Transformer can balance global interaction and sufficient local details. Refs.12, 13, 18, 19, 21, 24, 26, 43 attempted to integrate U-shaped networks with Transformers. Cao et al.21 proposed integrating the Swin Transformer into a U-shaped structure. This network, named SwinUNet, is based on a pure Transformer despite its U-Net-like appearance. Specifically, it builds on the Swin Transformer, which adds shifted windows to the vanilla Transformer. By replacing the convolution modules of a typical U-Net encoder with Swin Transformer blocks for feature extraction, SwinUNet substantially improves its ability to capture global context. Meanwhile, the decoder of SwinUNet also employs Swin Transformer blocks to segment the target region through a series of symmetric upsampling steps.
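The shifted-window idea that the Swin Transformer adds to the vanilla Transformer can be sketched as follows: attention is restricted to non-overlapping M x M windows, and in alternate layers the token grid is cyclically shifted before partitioning, so that tokens separated by a window border in one layer share a window in the next. This is a simplified illustration with hypothetical names and a toy 4 x 4 grid of token ids, assuming the grid size is divisible by M; it only shows which tokens are grouped together and omits the attention computation and the masking that Swin applies to windows containing wrapped-around tokens.

```python
def window_partition(grid, m):
    """Split an H x W grid of tokens into non-overlapping m x m windows (raster order).
    Window-based attention is computed only among tokens inside the same window."""
    H, W = len(grid), len(grid[0])
    return [[grid[h][w] for h in range(h0, h0 + m) for w in range(w0, w0 + m)]
            for h0 in range(0, H, m) for w0 in range(0, W, m)]

def cyclic_shift(grid, s):
    """Roll the grid up and left by s positions, so the next window partition
    groups tokens that straddled window borders in the previous layer."""
    H, W = len(grid), len(grid[0])
    return [[grid[(h + s) % H][(w + s) % W] for w in range(W)] for h in range(H)]

tokens = [[4 * h + w for w in range(4)] for h in range(4)]  # 4 x 4 grid of token ids
regular = window_partition(tokens, 2)
shifted = window_partition(cyclic_shift(tokens, 1), 2)
print(regular[0], shifted[0])
```

With M = 2, the regular partition groups tokens 0, 1, 4, 5 in its first window, whereas after a shift of one position the first window holds tokens 5, 6, 9, 10, which belonged to four different windows before the shift.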

3.6.5 U-Net based 3D segmentation

Yan et al.31 proposed D-Former to achieve high-precision 3D medical image segmentation. D-Former is capable of making full use of the depth information of 3D medical images. Notably, the design of D-Former31 enlarges the receptive field and enhances information interaction while keeping the computational cost of self-attention relatively low. In addition, D-Former computes positional encodings dynamically instead of using a fixed function as in the vanilla Transformer.

3.6.6 GAN-based segmentation

You et al.28 proposed CASTFormer to address prevalent drawbacks of Transformer-based models: a simple tokenization scheme, a lack of scale variety, and inadequate texture information. Based on a GAN, CASTFormer consisted of a generator and a discriminator. For the generator, You et al.28 employed a pyramid structure to obtain sufficient multiscale features and proposed class-aware Transformer modules to delineate the target region in the input medical image. For the discriminator, You et al.28 combined a ResNet-based encoder with a Transformer-based encoder to produce the discrimination result. Experimental results on three benchmarks showed that CASTFormer achieved absolute improvements of 2.54%–5.88% in Dice coefficient over the previous state-of-the-art results.
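For reference, the Dice coefficient used to report these gains measures the overlap between a predicted mask P and a ground-truth mask T as 2|P ∩ T| / (|P| + |T|). The sketch below computes it on flattened binary masks; the small epsilon is our assumption to keep the score defined when both masks are empty. An absolute improvement of 2.54% means that the Dice score, expressed as a percentage, rises by 2.54 points, not by 2.54% of its previous value.

```python
from typing import Sequence


def dice_coefficient(pred: Sequence[int], target: Sequence[int], eps: float = 1e-7) -> float:
    """Dice = 2|P ∩ T| / (|P| + |T|) for flattened binary masks (1 = foreground)."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in target if t)
    # eps keeps the score defined (and equal to 1) when both masks are empty.
    return (2.0 * intersection + eps) / (total + eps)


if __name__ == "__main__":
    pred = [1, 1, 0, 0]
    target = [1, 0, 1, 0]
    # One overlapping pixel out of 2 + 2 foreground pixels.
    print(round(dice_coefficient(pred, target), 4))
```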

3.6.7 Other methods

Refs.14, 16, 27, 30 integrated Transformer with other existing efficient frameworks.

Specifically, Wei et al.14 proposed HRSTNet, which combined HRNet and the Swin Transformer. Li et al.16 proposed TFCNs,16 which joined the FC-DenseNet structure with a ResLinear-Transformer (RL-Transformer) and a convolutional linear attention block (CLAB). Xu et al.27 proposed LeViT-UNet, which combined LeViT and U-Net. Li et al.30 proposed LViT, standing for “Language meets Vision Transformer”, which introduced medical text annotations to compensate for limited image data.

4 MEDICAL IMAGE CLASSIFICATION

Medical images carry a variety of symptom-specific information and serve as important evidence for doctors making a diagnosis. Because diagnosis depends heavily on the individual interpretation of medical images, its result may inevitably be influenced by subjective factors such as individual experience and personal bias. Accurate and stable medical image classification is therefore required to supplement doctors' diagnoses. Some state-of-the-art classification methods address both the grading of a specific disease and more detailed medical judgements. Transformer-based medical image classification works are partially listed in Table 2.

| Name | Modality | Organ/Method | Disease/Dimension |
|---|---|---|---|
| ScoreNet81 | Histopath | Tissue | Breast cancer |
| T2T-ViT82 | Histopath | Cervical | Cervical cancer |
| IL-MCAM83 | Histopath | Colorectal | Colorectal cancer |
| IViT84 | Histopath | Kidney | pRCC |
| Guo85 | Histologic | Lung | Lung cancer |
| MIL-VIT86 | Fundus | Eye | Retinal disease |
| LAT87 | Fundus | Eye | Diabetic retinopathy |
| CheXT88 | X-ray | Chest | Abnormality |
| ViT89 | X-ray | Bone | Fracture |
| Park90 | X-ray | Chest | COVID-19 |
| FESTA91 | X-ray | Chest | COVID-19 |
| Liu92 | X-ray | Chest | COVID-19 |
| Covid-Trans93 | X-ray | Chest | COVID-19 |
| Tuan94 | X-ray | Chest | COVID-19 |
| MXT95 | X-ray | Chest | COVID-19 |
| Park96 | X-ray | Lung | COVID-19 |
| Van97 | X-ray | Multiorgan | None |
| Verenich98 | X-ray | Lung | Abnormality |
| KAT99 | WSI | Pathology | Endometrial |
| GTN100 | WSI | Lung | Lung cancer |
| TransPath101 | WSI | Pathology | Multiorgan |
| ScATNet102 | WSI | Pathology | Skin |
| TranMIL103 | WSI | Pathology | MIL |
| Gheflati104 | US | Breast | Breast cancer |
| POCFormer96 | US | Chest | COVID-19 |
| Qayyum105 | Photo | Toe | DFU |
| LLCT106 | OCT | Eye | Retina lesion |
| RadioTransformer107 | Multimodality | Pure Transformer | 2D |
| Matsokus108 | Multimodality | CNN-based | 2D |
| TransMed109 | Multimodality | CNN-based | None |
| SEViT110 | Multimodality | CNN-based | None |
| M3T111 | Multimodality | CNN-based | 3D |
| Islam112 | Micro | Blood | Malaria parasite |
| ViT-CNN113 | Micro | Lymph | Leukemia |
| BrainFormer114 | MRI | Brain | Brain disease |
| GlobalLocal115 | MRI | Brain | Brain age |
| STAGIN116 | MRI | Brain | Brain connectome |
| mfTrans117 | MRI | Hepatic | Hepatocellular carcinoma |
| MVT118 | Dermo | Skin | Melanoma |
| OOD115 | Dermo | Skin | None |
| DPE-BoTNeT119 | Dermo | Skin | Melanoma |
| CVM-Cervix120 | Cytopathology | Cervical | Cervical cancer |
| ViT & DenseNet121 | Colposcopy | Cervical | Cervical cancer |
| xViTCOS122 | CT + X-ray | Chest | COVID-19 |
| Hsu123 | CT | Chest | COVID-19 |
| Zhang124 | CT | Chest | COVID-19 |
| COViT-GAN125 | CT | Chest | COVID-19 |
| Wu126 | CT | Lung | Emphysema |
| Costa127 | CT | Lung | COVID-19 |
| MIA-COV19D128 | CT | Chest | COVID-19 |
| CTNet129 | CT | Chest | COVID-19 |
| Scopeformer130 | CT | Brain | Intracranial hemorrhage |
| Xia131 | CT | Pancreas | Pancreatic cancer |
| NoduleSAT132 | CT | Lung | Lung nodule |
| TransCNN133 | CT | Lung | COVID-19 |
| ParkS134 | CT | Lung | COVID-19 |
| covid-ViT135 | CT | Chest | COVID-19 |
| Uni4Eye136 | Ophthalmic | Eye | 2D + 3D |

4.1 X-ray or radiographic images

4.1.1 COVID-19 analysis

Given the serious damage COVID-19 has caused to global health and the economy, fast and effective diagnosis of COVID-19 is urgently needed. Refs.75, 90-96, 122-125, 127-129, 133, 135, 137 therefore explored rapid and accurate COVID-19 classification using Transformer-based architectures. Shome et al.93 proposed Covid-Transformer for the automatic detection of COVID-19 from X-ray images. To overcome data scarcity, three open-source datasets were amalgamated into a 30K high-quality data set. For binary COVID-19 classification, Covid-Transformer93 reached 98% accuracy and an AUC of 99%; for multiclass classification (COVID-19, normal and pneumonia), it achieved 92% accuracy and an AUC of 98%. Tuan et al.94 proposed a network that integrated convolution operations with the self-attention mechanism to classify images into three types: normal, pneumonia, and COVID-19. To assess COVID-19 severity, Tuan et al.94 constructed a data set on which five deep learning models were tested. The results demonstrated the effectiveness of automatic chest X-ray diagnosis of COVID-19.
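Because Covid-Transformer is reported with both accuracy and AUC, it may help to recall how the two differ: accuracy depends on a decision threshold, whereas AUC is threshold-free and equals the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative one. The sketch below computes both for the binary case on toy scores; for multiclass settings AUC is typically averaged over one-vs-rest problems, and the exact protocol used in Ref.93 is not described here. Function names and data are illustrative.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the probability that a random positive outscores a random
    negative (ties count as 0.5). O(P*N), fine for small illustrative inputs."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def accuracy(labels: Sequence[int], scores: Sequence[float], threshold: float = 0.5) -> float:
    """Fraction of cases whose thresholded score matches the label."""
    correct = sum(1 for y, s in zip(labels, scores) if (s >= threshold) == (y == 1))
    return correct / len(labels)


if __name__ == "__main__":
    labels = [1, 1, 0, 0]            # 1 = COVID-19, 0 = other (toy data)
    scores = [0.9, 0.4, 0.6, 0.1]
    print(accuracy(labels, scores), roc_auc(labels, scores))  # accuracy 0.5, AUC 0.75
```

The same scores give 50% accuracy at a 0.5 threshold but an AUC of 0.75, which is why the two figures reported for a model can differ noticeably.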

4.1.2 Fracture classification

Musculoskeletal diseases are a leading cause of disability. To intervene in musculoskeletal diseases as early as possible and plan the corresponding fracture treatment, Tanzi et al.89 proposed a network that automatically classifies fracture subtypes from X-ray images of bones. Specifically, Tanzi et al.89 collected 4207 manually annotated images and built the largest labeled data set of proximal femur fractures.89 Outperforming CNN-based methods, this Transformer-based network reached a precision of 0.77, a recall of 0.76, and an F1-score of 0.77.
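For multiclass problems such as fracture subtyping, precision, recall and F1 are commonly macro-averaged over classes, and macro-F1 is the mean of per-class F1 values rather than the harmonic mean of macro precision and macro recall. The sketch below shows the difference on a toy example with two hypothetical subtypes "A" and "B"; which averaging Tanzi et al.89 used is not stated here.

```python
from typing import Dict, Sequence, Tuple

PRF = Tuple[float, float, float]


def per_class_prf(y_true: Sequence[str], y_pred: Sequence[str]) -> Dict[str, PRF]:
    """Precision, recall and F1 for each class present in y_true or y_pred."""
    scores: Dict[str, PRF] = {}
    for c in sorted(set(y_true) | set(y_pred)):
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        scores[c] = (precision, recall, f1)
    return scores


def macro_average(scores: Dict[str, PRF]) -> PRF:
    """Unweighted mean of per-class precision, recall and F1."""
    n = len(scores)
    return (
        sum(s[0] for s in scores.values()) / n,
        sum(s[1] for s in scores.values()) / n,
        sum(s[2] for s in scores.values()) / n,
    )


if __name__ == "__main__":
    y_true = ["A", "A", "A", "B"]
    y_pred = ["A", "A", "B", "B"]
    p, r, f1 = macro_average(per_class_prf(y_true, y_pred))
    print(f"macro P={p:.3f} R={r:.3f} F1={f1:.3f} harmonic(P,R)={2 * p * r / (p + r):.3f}")
```

Here macro-F1 is 0.733 while the harmonic mean of macro precision (0.750) and macro recall (0.833) is 0.789, so the three reported numbers need not satisfy the binary F1 formula exactly.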

4.1.3 Multiorgan classification

Multiview medical image analysis requires combining images acquired from different views. Although these images depict the same object, differences in perspective can cause large differences in appearance, which makes registration challenging. When the views cannot be registered, images from multiple angles can only be integrated through a global fusion of feature vectors. Van et al.97 therefore proposed a network that operates on spatial feature maps and associates the features extracted from unregistered views. Evaluated on multiview mammography and chest X-ray datasets, this model outperformed previous methods.
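One generic way to associate features from unregistered views is cross-attention: every spatial feature of one view acts as a query over all spatial features of the other view, so no pixel-to-pixel correspondence is required. The sketch below uses a single head and identity projections for brevity; these simplifications and the function names are our assumptions rather than the exact design of Van et al.97

```python
import math
from typing import List

Vector = List[float]


def softmax(xs: List[float]) -> List[float]:
    top = max(xs)
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_view_attention(queries: List[Vector], keys: List[Vector],
                         values: List[Vector]) -> List[Vector]:
    """Each spatial feature of view A (queries) attends over all spatial
    features of view B (keys/values); no registration between views is
    assumed. Single head, identity projections (a simplification)."""
    d = len(queries[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs


if __name__ == "__main__":
    view_a = [[10.0, 0.0]]                  # one location in view A
    view_b = [[10.0, 0.0], [0.0, 10.0]]     # two locations in view B
    print(cross_view_attention(view_a, view_b, [[1.0, 0.0], [0.0, 1.0]]))
```

The output has one vector per location in view A, so it can be added back to view A's feature map as information gathered from the matching regions of view B, wherever they happen to lie.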

4.2 MRI

4.2.1 Brain disease classification

Brain diseases that leave no obvious structural lesion can be reflected in functional magnetic resonance imaging (fMRI). While functional connectivity has been widely used as the basic feature in fMRI-based disease classification, its calculation relies heavily on predefined regions of interest and ignores voxel-wise details. Dai et al.114 therefore proposed BrainFormer, which used a Transformer to extract global relations and 3D convolution to supplement local details. BrainFormer114 then combined the local and global information in a single-stream model.
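To make this limitation concrete, the classical ROI-based functional connectivity feature can be sketched as a Pearson correlation matrix between regional time series. The toy series and function names are hypothetical; the point is that each region is first reduced to one averaged signal, which is where voxel-wise detail is discarded.

```python
import math
from typing import List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation; returns 0.0 if either series is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy) if sx > 0 and sy > 0 else 0.0


def functional_connectivity(roi_series: List[List[float]]) -> List[List[float]]:
    """FC matrix: entry (i, j) is the correlation between the mean BOLD time
    series of predefined regions i and j. Any voxel-level pattern inside a
    region is already gone once the region is averaged into one series."""
    r = len(roi_series)
    return [[pearson(roi_series[i], roi_series[j]) for j in range(r)] for i in range(r)]


if __name__ == "__main__":
    series = [[1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0], [4.0, 3.0, 2.0, 1.0]]
    for row in functional_connectivity(series):
        print([round(v, 2) for v in row])
```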

4.2.2 Brain age classification

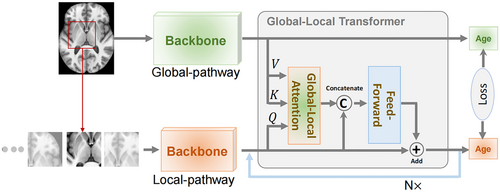

With the help of deep learning, brain age can be estimated rapidly from brain MRI. However, previous automatic methods concentrated on local information and failed to capture global information. He et al.115 therefore proposed a global-local Transformer that fuses global and local information for brain age estimation. Specifically, He et al.115 used two pathways for global and local information, respectively, which were integrated through an attention mechanism. Evaluation on eight public datasets demonstrated the performance of the global-local Transformer. Figure 4 illustrates the global-local Transformer and the multipatch age prediction of He et al.115

4.2.3 Brain connectome analysis

Temporal correlations in functional neuroimaging modalities reflect cross-region functional connectivity (FC) within the brain. Given the network-like nature of this connectivity, graph neural networks (GNNs) have been introduced to generate graph representations of the brain connectome. However, such attempts fail to capture the time-varying nature of the functional connectivity network. Kim et al.116 proposed STAGIN to model a dynamic graph representation of the brain connectome. In addition to the GNN structure, STAGIN116 used a Transformer encoder to extract global features. The performance of STAGIN was validated on the HCP-Rest and HCP-Task datasets.
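A common way to expose the time-varying nature of connectivity is sliding-window correlation, which yields one connectivity graph per window. The sketch below is a generic illustration under that assumption; the window length, stride and function names are hypothetical, and STAGIN's actual graph construction may differ.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation; returns 0.0 if either series is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy) if sx > 0 and sy > 0 else 0.0


def dynamic_connectivity(roi_series: List[List[float]], window: int, stride: int) -> List[Matrix]:
    """One FC matrix per sliding window; the resulting sequence is a simple
    dynamic graph whose edge weights change over time."""
    if window < 2 or stride < 1:
        raise ValueError("window must be >= 2 and stride >= 1")
    length = len(roi_series[0])
    graphs = []
    for start in range(0, length - window + 1, stride):
        segment = [s[start:start + window] for s in roi_series]
        r = len(segment)
        graphs.append([[pearson(segment[i], segment[j]) for j in range(r)] for i in range(r)])
    return graphs


if __name__ == "__main__":
    # Two toy regions that co-activate at first and then anti-correlate.
    roi_a = [1.0, 2.0, 3.0, 4.0, 1.0, 2.0, 3.0, 4.0]
    roi_b = [1.0, 2.0, 3.0, 4.0, 4.0, 3.0, 2.0, 1.0]
    for g in dynamic_connectivity([roi_a, roi_b], window=4, stride=4):
        print(round(g[0][1], 2))
```

A single static FC matrix over the whole scan would average these two regimes away, which is the information a dynamic graph model is meant to keep.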

4.2.4 Hepatocellular carcinoma (HCC) classification

A preliminary step in the treatment of HCC is its quantitative assessment using multiphase contrast-enhanced magnetic resonance imaging (CEMRI). Earlier CNN-based approaches to HCC measurement lacked long-range dependency modeling and the ability to select information across multiphase CEMRI. Zhao et al.117 therefore proposed a multifunction Transformer regression network (mfTrans-Net), which introduced an attention mechanism for quantitative HCC measurement. Specifically, three parallel CNN-based encoders first extracted features from the CEMRI images, and a nonlocal Transformer then captured long-range dependencies. A multilevel training strategy was adopted to further improve mfTrans-Net's quantitative measurement of HCC.

4.3 CT

4.3.1 Emphysema classification

Emphysema enlarges the alveoli and can damage the lung. Based on CT examination, emphysema is classified into three types: centrilobular emphysema (CLE), panlobular emphysema (PLE), and paraseptal emphysema (PSE). Because the three types call for different treatments, Wu et al.126 proposed a CT-based emphysema classification model inspired by the Vision Transformer. Wu et al.126 divided large patches obtained from the original CT images into sequences of patch embeddings, which were fed into a Transformer encoder after positional encoding. A softmax layer then produced the final emphysema subtype classification.
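The pipeline described for Wu et al.126 (patches, patch embeddings, positional encoding, Transformer encoder, softmax) can be sketched in plain Python. The patch size, the projection weights, the use of the vanilla Transformer's sinusoidal encoding (ViT-style models often learn positional embeddings instead) and the mean pooling that stands in for the encoder and classification head are all illustrative assumptions, not details reported for this model.

```python
import math
from typing import List

Matrix = List[List[float]]


def extract_patches(image: Matrix, p: int) -> Matrix:
    """Cut an H x W image into non-overlapping p x p patches, each flattened row-major."""
    h, w = len(image), len(image[0])
    if h % p or w % p:
        raise ValueError("image height and width must be divisible by the patch size")
    return [
        [image[r][c] for r in range(top, top + p) for c in range(left, left + p)]
        for top in range(0, h, p)
        for left in range(0, w, p)
    ]


def linear_embed(patches: Matrix, weight: Matrix) -> Matrix:
    """Project each flattened patch x to d dimensions as W x (weight is d x p*p)."""
    return [[sum(w_ij * x_j for w_ij, x_j in zip(row, patch)) for row in weight]
            for patch in patches]


def sinusoidal_positions(n: int, d: int) -> Matrix:
    """Fixed sine/cosine positional encoding of the vanilla Transformer."""
    return [
        [
            math.sin(pos / 10000 ** (2 * (i // 2) / d)) if i % 2 == 0
            else math.cos(pos / 10000 ** (2 * (i // 2) / d))
            for i in range(d)
        ]
        for pos in range(n)
    ]


def softmax(logits: List[float]) -> List[float]:
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    image = [[float(r * 4 + c) for c in range(4)] for r in range(4)]  # toy 4 x 4 region
    patches = extract_patches(image, 2)                               # 4 patches of 4 pixels
    weight = [[0.1] * 4, [0.0] * 4, [-0.1] * 4]                        # hypothetical 3 x 4 projection
    pe = sinusoidal_positions(len(patches), 3)
    tokens = [[e + q for e, q in zip(emb, pos)]
              for emb, pos in zip(linear_embed(patches, weight), pe)]
    # A Transformer encoder and a learned classification head would go here;
    # mean pooling stands in for both so the example stays self-contained.
    logits = [sum(t[k] for t in tokens) / len(tokens) for k in range(3)]
    print(dict(zip(["CLE", "PLE", "PSE"], softmax(logits))))
```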

4.3.2 Lung nodule classification

Automatic diagnosis of multiple pulmonary nodules is crucial to the clinical treatment of pulmonary nodules. However, previous studies tended to focus on single nodules and could miss the correlations between nodules. Yang et al.132 therefore proposed NoduleSAT based on a multiple instance learning (MIL) approach. NoduleSAT132 examined a patient's multiple nodules as a whole and analyzed the relations among them. Specifically, NoduleSAT132 introduced a 3D CNN into a Transformer-based structure and removed the pooling layer. Experiments on LUNA16 and LIDC-IDRI showed that NoduleSAT132 achieved outstanding performance in lung nodule and malignancy classification.
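Treating a patient's nodules as an unordered set can be illustrated with plain self-attention followed by mean pooling: without positional encodings, every nodule attends to every other nodule, and the pooled patient-level vector does not depend on the order in which the nodules are listed. This sketch uses identity query/key/value projections and toy nodule features, which are simplifying assumptions; it is not NoduleSAT's architecture.

```python
import math
from typing import List

Vector = List[float]


def self_attention(tokens: List[Vector]) -> List[Vector]:
    """Single-head scaled dot-product self-attention with identity Q/K/V
    projections (a simplification: real models learn these projections)."""
    d = len(tokens[0])
    outputs = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        top = max(scores)
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        outputs.append([sum(wt * v[j] for wt, v in zip(weights, tokens)) / total
                        for j in range(d)])
    return outputs


def patient_embedding(nodules: List[Vector]) -> Vector:
    """Mean-pool attended nodule features into one patient-level vector.
    No positional encoding is added, so nodule order does not matter."""
    attended = self_attention(nodules)
    n = len(attended)
    return [sum(t[j] for t in attended) / n for j in range(len(attended[0]))]


if __name__ == "__main__":
    nodules = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]   # toy per-nodule features
    print(patient_embedding(nodules))
    print(patient_embedding(list(reversed(nodules))))  # same vector, up to rounding
```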

4.3.3 Intracranial hemorrhage classification

For the RSNA intracranial hemorrhage classification task, Yassine et al.130 proposed Scopeformer to identify different hemorrhage types from CT slices. Fusing a CNN with a Vision Transformer, Scopeformer130 employed an Xception CNN to extract feature maps and a Vision Transformer to establish long-range dependencies among relevant features from different levels. Pretraining the CNN module further improved the performance of Scopeformer.130

4.3.4 Pancreatic cancer classification

Pancreatic cancer is rare but fatal. Its fatality makes early intervention urgent, while its rarity means that screening the whole population would impose a heavy health burden for little benefit. Considering the low economic cost and simplicity of single-phase noncontrast CT scans, Xia et al.131 therefore proposed a model that distinguishes pancreatic ductal adenocarcinoma (PDAC) and other abnormalities (non-PDAC) from normal cases using CT images. Xia et al.131 tested the model on a data set of 1321 patients and reached a sensitivity of 95.2% and a specificity of 95.8%.
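Sensitivity and specificity come from the confusion matrix of a binary decision (abnormal vs normal). The worked example below also applies Bayes' rule to show why rarity matters for screening: even at the reported 95.2% sensitivity and 95.8% specificity, a hypothetical prevalence of 0.1% gives a positive predictive value of only about 2%. The prevalence figure and function names are assumptions for illustration, not values from Xia et al.131

```python
from typing import Sequence, Tuple


def sensitivity_specificity(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[float, float]:
    """Binary case: 1 = abnormal (e.g., PDAC or non-PDAC lesion), 0 = normal."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)


def positive_predictive_value(sens: float, spec: float, prevalence: float) -> float:
    """Bayes' rule: probability of disease given a positive result."""
    true_pos = sens * prevalence
    false_pos = (1.0 - spec) * (1.0 - prevalence)
    return true_pos / (true_pos + false_pos)


if __name__ == "__main__":
    # Reported operating point from Ref.131; the 0.1% prevalence is hypothetical.
    ppv = positive_predictive_value(0.952, 0.958, prevalence=0.001)
    print(f"PPV at 0.1% prevalence: {ppv:.1%}")
```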

4.4 Fundus or OCT

4.4.1 Retinal disease classification

Unlike other CV tasks, medical imaging tasks may not provide a large data set for training automatic classifiers. Transformer-based networks, which demand large-scale training data, may therefore be difficult to apply to medical imaging tasks. To retain the Transformer's strong performance while adapting it to retinal disease classification, Yu et al.86 proposed MIL-VIT, which first pretrained the Transformer model on a fundus image data set and then fine-tuned the network for retinal disease classification. Additionally, a MIL module was employed to improve the model's performance; on two public datasets, the model outperformed other CNN-based methods.

4.4.2 Diabetic retinopathy (DR) classification

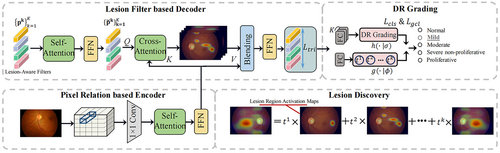

As a leading cause of permanent blindness, DR can be recognized at an early stage with automatic classification methods, whose tasks include DR grading and lesion discovery. Unlike previous methods, Sun et al.87 proposed to perform DR grading and lesion discovery simultaneously and introduced a lesion-aware Transformer (LAT). LAT87 adopted an encoder-decoder structure consisting of a pixel-relation-based encoder and a lesion-filter-based decoder. The performance of LAT87 was tested on Messidor-1, Messidor-2, and EyePACS. Figure 5 shows the structure of LAT in Sun et al.87

4.4.3 Retina lesion analysis

Compared with other images, retinal OCT images exhibit obvious speckle noise, irregularity, and vague features. To tackle these problems, Wen et al.106 proposed a lesion-localization convolution Transformer (LLCT), which not only classifies ophthalmic diseases but also localizes the target retinal lesion region. Specifically, LLCT106 employed a CNN to obtain feature maps, which were then reshaped into the input of the Transformer-based network. The gradient weights obtained during backpropagation were summed to locate the lesion region.
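The gradient-based localization step can be illustrated with a Grad-CAM-style computation: each feature channel is weighted by the spatial average of its gradient, the weighted channels are summed, and negative values are clipped. LLCT is described as summing gradient weights; the averaging (which only rescales the map relative to summing) and the ReLU follow Grad-CAM and are our assumption, as are the toy feature maps and the function name.

```python
from typing import List

FeatureMap = List[List[float]]


def gradient_weighted_map(features: List[FeatureMap], gradients: List[FeatureMap]) -> FeatureMap:
    """Grad-CAM-style localization map.

    features[k] and gradients[k] are H x W maps for channel k. Each channel is
    weighted by the spatial mean of its gradient (summing instead would only
    rescale the map by H*W), the weighted maps are added, and negatives are
    clipped to zero so that only evidence for the target class remains.
    """
    h, w = len(features[0]), len(features[0][0])
    weights = [sum(sum(row) for row in g) / (h * w) for g in gradients]
    cam = [[0.0] * w for _ in range(h)]
    for alpha, fmap in zip(weights, features):
        for i in range(h):
            for j in range(w):
                cam[i][j] += alpha * fmap[i][j]
    return [[max(0.0, v) for v in row] for row in cam]


if __name__ == "__main__":
    # Channel 0 fires top-left and helps the class; channel 1 fires bottom-right and hurts it.
    features = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 1.0]]]
    gradients = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
    print(gradient_weighted_map(features, gradients))
```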

4.5 Histopathology images

4.5.1 Breast cancer classification

High image resolution and the high cost of annotation have slowed progress in digital pathology. Pathology image classification generally adopts patch-based MIL, which gives uniform attention to every part of an image even though only a small fraction of it is useful. Thomas et al.81 therefore proposed ScoreNet, which allocates computational resources according to the distribution of discriminative image regions. By combining local and global features, ScoreNet81 efficiently classifies the target regions. Additionally, ScoreNet81 used ScoreMix,81 a novel data augmentation method. Validated on three breast cancer histology datasets, ScoreNet achieved state-of-the-art results.

4.5.2 Cervical cancer classification

Only a few public cervical cancer datasets exist, and their image quality and sample distribution are unsatisfactory. Zhao et al.82 therefore adopted the taming Transformer design to build a cervical cell image generation model, T2T-ViT, to improve cervical cancer classification results. This Tokens-to-Token Vision Transformer (T2T-ViT) model can provide balanced, sufficient, and high-quality cervical cancer data. With an encoder-decoder structure, T2T-ViT introduced an SE-block and a MultiRes-block in the encoder, and used SMOTE-Tomek Links82 to adjust the sample numbers and image weights of the data set.

4.5.3 Colorectal cancer classification

Chen et al.83 proposed IL-MCAM for the diagnosis of colorectal cancer. Unlike existing approaches that focus on end-to-end classification, the IL-MCAM framework emphasizes human-computer interaction. Fusing attention mechanisms with interactive learning, IL-MCAM83 is divided into two stages. In the first stage, automatic learning was performed by three Transformer-based networks and a CNN; in the second stage, misclassified images were interactively added back to the training set to improve performance. Experimental results on the HE-NCT-CRC-100K data set demonstrated the superiority of IL-MCAM over other methods.

4.5.4 Renal cell carcinoma (RCC) classification

For papillary RCC (pRCC), the two subtypes, type 1 and type 2, look similar but carry different information about the disease, such as cellular and cell-layer level patterns. Because CNNs struggle to distinguish these two subtypes, Gao et al.84 proposed an instance-based Vision Transformer (IViT), which used a Transformer-based network to classify the two subtypes from representations of the input images. Specifically, after the attention mechanism, the top-K instances were selected and aggregated to obtain cellular and cell-layer level information.
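Top-K instance selection of this kind can be sketched as ranking instance features by an attention score, keeping the K best, and averaging them with softmax weights. The scores, the softmax weighting and the function name are illustrative assumptions rather than details reported by Gao et al.84

```python
import math
from typing import List, Sequence


def top_k_aggregate(instances: List[List[float]], scores: Sequence[float], k: int) -> List[float]:
    """Select the k highest-scoring instance features and combine them with
    softmax weights over their scores; lower-scoring instances are ignored."""
    if not 1 <= k <= len(instances):
        raise ValueError("k must be between 1 and the number of instances")
    chosen = sorted(range(len(instances)), key=lambda i: scores[i], reverse=True)[:k]
    top = max(scores[i] for i in chosen)
    weights = [math.exp(scores[i] - top) for i in chosen]
    total = sum(weights)
    dim = len(instances[0])
    return [sum(w * instances[i][j] for w, i in zip(weights, chosen)) / total
            for j in range(dim)]


if __name__ == "__main__":
    instances = [[1.0, 0.0], [0.0, 1.0], [5.0, 5.0]]  # toy instance features
    scores = [2.0, 2.0, -10.0]                         # hypothetical attention scores
    print(top_k_aggregate(instances, scores, k=2))     # the low-scoring instance is dropped
```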

4.5.5 Lung cancer classification

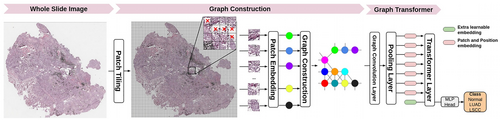

Lung cancer accounts for many deaths worldwide. Non-small-cell lung cancer (NSCLC) has two main subtypes: lung adenocarcinoma (LUAD) and lung squamous cell carcinoma (LUSC). Pathologists generally rely on histology to classify lung cancer subtypes. To automate this classification, Guo et al.85 proposed a framework that employed a pretrained Vision Transformer for multilabel lung cancer classification from histology images. Zheng et al.100 proposed a Graph-Transformer-based framework for processing pathology data (GTP), which used morphological and spatial information to predict disease grade. The design of the Transformer-based GTP100 is shown in Figure 6.

4.5.6 Endometrial classification

Although Transformers are widely used in whole slide image (WSI) classification, the limited effectiveness and efficiency caused by the token-wise self-attention design and the positional embedding operation of a typical Transformer have hindered further progress in WSI classification. Zheng et al.99 proposed a kernel attention Transformer (KAT), which transmits token information via a cross-attention mechanism and uses a set of kernels to represent positional anchors on the WSI. KAT99 can thus balance detailed contextual information in WSIs against computational complexity.

4.5.7 Melanoma classification

Recognizing melanocytic lesions from pathology images is extremely challenging. Generally, only an experienced dermatopathologist can overcome intra- and interobserver variability and judge whether a lesion is invasive melanoma. With the digitization of whole slide images, automatic classification methods have emerged that attempt to replicate pathologists' diagnoses. Wu et al.102 proposed ScATNet to obtain multiscale representations of melanocytic skin lesions in WSIs. Experimental results showed the superiority of ScATNet over other WSI classification methods.

4.6 Multimodality

4.6.1 Pure Transformer-based

Bhattacharya et al.107 proposed a student–teacher Transformer-based network, RadioTransformer, that models radiologists' diagnostic behavior on chest radiographs. For radiologists, visual search is crucial to classifying medical images, and eye-gaze tracking technology can capture this expert behavior. RadioTransformer107 exploited the captured gaze information as rich additional detail for diagnosis. Specifically, RadioTransformer107 adopted a global-local Transformer encoder-decoder structure to extract both global and local information and to produce a visual depiction of attention regions.

4.6.2 CNN-based 2D classification

For over a decade, CNNs have been the dominant method for medical imaging tasks. However, as the Transformer was adapted from NLP to vision tasks, the mainstream CNN has been challenged by a newcomer: the Vision Transformer. The need for a comprehensive comparison between CNNs and Vision Transformers led Matsokus et al.108 to ask: Is it Time to Replace CNNs with Transformers for Medical Images?108 To answer this question, Matsokus et al.108 carefully compared the performance of CNNs and ViTs and drew conclusions from concrete quantitative experimental results.

4.6.3 CNN-based 3D classification

Jang et al.111 introduced a multiplane and multislice Transformer (M3T) network that builds a three-dimensional model for medical image classification. Targeting Alzheimer's disease, Jang et al.111 integrated 2D and 3D CNNs with a Transformer-based network to classify Alzheimer's disease. The three parts had distinct roles: the 2D and 3D CNNs extracted local features from 2D and 3D inputs, respectively, while the Transformer-based network modeled long-range relationships among the CNN outputs.
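The multiplane idea can be illustrated by slicing a 3D volume along its three orthogonal axes, which produces the 2D inputs a 2D CNN would see. The mapping of the index order (z, y, x) to axial, coronal and sagittal planes is an assumption that depends on image orientation, and how M3T selects and batches slices is not reproduced here; the function name is hypothetical.

```python
from typing import Dict, List

Volume = List[List[List[float]]]  # indexed as volume[z][y][x]
Slices = List[List[List[float]]]


def multiplane_slices(volume: Volume) -> Dict[str, Slices]:
    """Return every 2D slice of the volume along each of its three axes."""
    d, h, w = len(volume), len(volume[0]), len(volume[0][0])
    # d slices of h x w
    axial = [[list(row) for row in volume[z]] for z in range(d)]
    # h slices of d x w
    coronal = [[[volume[z][y][x] for x in range(w)] for z in range(d)] for y in range(h)]
    # w slices of d x h
    sagittal = [[[volume[z][y][x] for y in range(h)] for z in range(d)] for x in range(w)]
    return {"axial": axial, "coronal": coronal, "sagittal": sagittal}


if __name__ == "__main__":
    vol = [[[float(z * 100 + y * 10 + x) for x in range(4)] for y in range(3)] for z in range(2)]
    planes = multiplane_slices(vol)
    print({name: len(slices) for name, slices in planes.items()})
```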

4.7 Other modalities

4.7.1 Dermoscopy

Skin cancer, one of the deadliest diseases worldwide, takes thousands of lives each year. To enable early intervention, deep learning methods are used to classify and diagnose skin cancer. However, automatic skin cancer classification faces several challenges: low accuracy, a shortage of labeled data, and poor generalization. To address these challenges, Aladhadh et al.118 proposed the medical vision transformer (MVT), a two-stage framework that introduces the attention mechanism into skin cancer classification. Nakai et al.119 proposed a bottleneck Transformer network (DPE-BoTNeT) that combines a convolutional network with a Transformer design, giving the original network the ability to capture global dependencies and interpret positional information.

4.7.2 US

For breast cancer imaging, US is particularly helpful owing to its low cost and safety. Among automatic classification methods based on US images, CNNs are the most prevalent structure. However, the limited receptive field of CNNs leads to a loss of global context information. Gheflati et al.104 therefore introduced the Vision Transformer into US-based breast cancer classification. In terms of classification accuracy and area under the curve (AUC) on a US data set, this method outperformed state-of-the-art CNNs.

4.7.3 Photo

One in three diabetic patients may develop diabetic foot ulcers (DFU). With the recent increase in DFU, it is urgent to diagnose DFU at an early stage, before ischemia and infection appear as the ulcer deteriorates. Qayyum et al.105 introduced a network combining CNN and Transformer for the diagnosis of DFU. After fine-tuning CNN and Transformer structures on the DFUC-21 data set, Qayyum et al.105 chose two of the five Transformers for feature extraction and performed DFU detection.

4.7.4 Microscopy

Malaria, one of the most dangerous mosquito-borne diseases, can have serious consequences, including death. To detect malaria in time, microscopy is used to check blood samples for malaria parasites. However, this examination is time-consuming and labor-intensive, which makes it impractical for large-scale malaria screening. Islam et al.112 therefore proposed a method based on a multiheaded attention mechanism to diagnose malaria parasites. On the testing data set, this model reached 96.41% accuracy, 96.99% precision, 95.88% recall, a 96.44% F1-score, and a 99.11% AUC.

Among lymphoid diseases, acute lymphocytic leukemia (ALL) is a cancer with high fatality in both adults and children. To diagnose ALL in a timely and accurate manner, Jiang et al.113 proposed a ViT-CNN ensemble model to distinguish cancer cell images from normal cell images. Combining a CNN and a Vision Transformer, ViT-CNN113 used the CNN to extract rich features from the input images and the Transformer to produce the classification result. Experimental results on the test set showed that ViT-CNN reached 99.03% accuracy in diagnosing ALL.

4.7.5 Cytopathology

As the fourth most common cancer in women worldwide, cervical cancer can be diagnosed via cervical cytopathology. Given the time cost and potential errors of manual screening, automatic cervical cancer classification based on cytopathology images has developed accordingly. Liu et al.120 proposed CVM-Cervix for fast and accurate cervical cell classification. CVM-Cervix used a CNN module and a Vision Transformer module to extract local and global features, respectively. The final classification result was produced by fusing the local and global features through a Multilayer Perceptron module.120

4.7.6 Colposcopy

Human papillomavirus (HPV) may cause both cervical lesions and cervical cancer. As the disease progresses, precancerous lesions are classified into three stages: CIN1, CIN2, and CIN3. Classifying these stages can guide the treatment of cervical cancer. Li et al.121 therefore proposed a method that fused the Vision Transformer and DenseNet to classify cervical lesion subtypes. Li et al.121 employed fivefold cross-validation to train and fuse the Vision Transformer and DenseNet161 models. Experimental results showed that this model reached an accuracy of 68%.

5 MEDICAL IMAGE DETECTION

Unlike segmentation and classification, medical image detection focuses on detecting the occurrence of a specific disease. Given the conflict between limited medical resources and growing medical demand, automatic medical image detection can potentially serve as a powerful tool for disease screening. By taking advantage of artificial intelligence, automated detection spares doctors some routine work so that they can devote their expertise to more serious cases. Transformer-based medical image detection papers are listed in Table 3.

| Name | Modality | Organ/Method | Disease/Dimension |
|---|---|---|---|
| RDFNet138 | Image | Tooth | Caries |
| MA139 | Fundus | Eye | Microaneurysm |
| AG-CNN140 | Fundus | Eye | Glaucoma |
| TIA-Net141 | Fundus | Eye | Glaucoma |
| Koushik142 | X-ray | Chest | COVID-19 |
| Duong143 | X-ray | Chest | Tuberculosis |
| CLAM144 | WSI | Multiorgan | None |
| MCAT145 | WSI | Multiorgan | Cancer |
| Gheflati100 | US | Breast | Breast cancer |
| UTRAD146 | Multimodality | UNet-based | 2D |
| Tomita147 | Micro | Esophagus | Esophagus tissue |
| DETR148 | MRI | Lymph | Lymph node |
| COTR149 | Colonos | Colorectal | Polyp |
| Liu150 | Colonos | Colorectal | Polyp |
| Rahhal151 | CT + X-ray | Chest | COVID-19 |
| TRACE152 | CT | Kidney | CKD |
| SATr153 | CT | Lesion | None |
| STCovidNet137 | CT | Lung | COVID-19 |
| Chuang154 | CT | Lung | Lung nodule |
| Chest X142 | CT | Lung | COVID-19 |
| POCFormer155 | CT | Lung | COVID-19 |
| SwinFpn156 | CT | Multiorgan | None |
| Effinet143 | CT | Lung | Tuberculosis |
| Islam157 | CT | Kidney | Cyst, stone, tumor |
| Covid-Trans93 | CT | Lung | COVID-19 |
| AANet158 | CT | Lung | COVID-19 |
| Pesce159 | CT | Lung | Lung nodule |
| TR-Net160 | CCTA | Artery | Coronary artery stenosis |
| VIEW-DISENTANGLED161 | MRI | Brain | Brain lesion |

| Name | Modality | Organ/Method | Disease/Dimension |
|---|---|---|---|
| E-DSSR162 | Endoscopy | Surgical | Dynamic surgical scene |
| MIST-net163 | X-ray | Cardiac | Image quality improvement |
| CNN-Transformer164 | X-ray | Bone | Long bone |
| SSTrans-3D165 | SPECT | Cardiac | 3D reconstruction |
| ADVIT166 | PET | Brain | Alzheimer's Disease |
| TransEM167 | PET | Brain | Image quality improvement |
| SMIR168 | MRI | Brain | Super-resolution |
| SLATER169 | MRI | Multiorgan | Unsupervised reconstruction |
| ReconFormer170 | MRI | Multiorgan | Accelerated reconstruction |
| DSFormer171 | MRI | Multiorgan | Accelerated reconstruction |
| McMRSR172 | MRI | Multiorgan | Super-resolution |
| PKT173 | MRI | Multiorgan | Undersampled reconstruction |
| HUMUS-Net174 | MRI | Multiorgan | Accelerated reconstruction |
| Kspace-Trans175 | MRI | Multiorgan | Accelerated reconstruction |
| SVoRT176 | MRI | Brain | Fetal brain |
| TITLE177 | MRI | Multiorgan | Accelerated reconstruction |
| T2Net178 | MRI | Multiorgan | Joint |
| MTrans179 | MRI | Multiorgan | Accelerated reconstruction |
| SRT180 | MRI | Brain | 2D to 3D |
| FedGIMP181 | MRI | Multiorgan | Accelerated reconstruction |
| ASMT182 | MRI | Brain | Super-resolution |
| SAT-net183 | MRI | Cartilage | Acceleration + image quality |
| McSTRA184 | MRI | Multiorgan | Accelerated reconstruction |
| KangLin185 | MRI | Multiorgan | Accelerated reconstruction |
| ASFT182 | MRI | Brain | Super-resolution |
| TranSMS186 | MPI | Multiorgan | Super-resolution |
| CTformer187 | LDCT | Multiorgan | Denoising |
| Liu188 | LDCT | Multiorgan | Degradation |
| TransCT189 | LDCT | Multiorgan | Enhancement |
| TED-Net190 | LDCT | Liver | Denoising |
| transGAN-SDAM191 | l-PET | Brain | Image quality improvement |
| Wu192 | CT | Multiorgan | Image quality improvement |
| DuDoTrans193 | CT | Multiorgan | Image quality improvement |
| TVSRN194 | CT | Multiorgan | Super-resolution |
| Sizikova195 | CT | Lung | 3D Shape Induction |
| Eformer196 | CT | Multiorgan | Denoising |

5.1 X-ray or radiographic images

5.1.1 COVID-19 detection

For COVID-19, CT-based or X-ray-based detection relies on medical professionals, which may limit its speed and efficiency. Krishnan et al.142 attempted to ease this problem with an automatic method for detecting COVID-19 from CT or X-ray images. Krishnan et al.142 fine-tuned a Vision Transformer for COVID-19 detection on CT or X-ray images and reached state-of-the-art performance in terms of accuracy, precision, recall, and F1 score. Rahhal et al.151 proposed to detect coronavirus infection from CT and X-ray images. Specifically, Rahhal et al.151 used a Vision Transformer as the backbone and a Siamese encoder for feature extraction; input images were divided into patches before being processed by the encoder. Evaluated on CT and X-ray datasets, this framework outperformed other methods on five metrics.

5.2 MRI

5.2.1 Lymph node (LN) detection

To assess lymphoproliferative disease, researchers need to identify lymph nodes (LNs) in T2 MRI images. The diverse appearance of LNs makes it difficult for radiologists to pick them out in T2 MRI images. Mathai et al.148 therefore employed the DEtection TRansformer (DETR) framework to localize lymph nodes. A bounding box fusion technique was adopted with DETR148 to reduce the false-positive rate. Experimental results showed that DETR148 reached a precision of 65.41% and a sensitivity of 91.66%.
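Bounding box fusion generally merges overlapping predictions into a single box. The sketch below clusters boxes greedily by intersection over union (IoU), highest score first, and averages each cluster's coordinates weighted by score. It is a generic, weighted-box-fusion-style illustration under our own assumptions (threshold, scoring, function names); the exact fusion rule used by Mathai et al.148 is not described here.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def fuse_boxes(boxes: Sequence[Box], scores: Sequence[float],
               iou_thr: float = 0.5) -> List[Tuple[Box, float, int]]:
    """Greedy IoU clustering (highest score first), then score-weighted
    averaging of each cluster. Returns (fused box, mean score, cluster size)."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    clusters: List[List[int]] = []
    for i in order:
        for cluster in clusters:
            if iou(boxes[cluster[0]], boxes[i]) >= iou_thr:
                cluster.append(i)
                break
        else:  # no existing cluster overlaps enough: start a new one
            clusters.append([i])
    fused = []
    for cluster in clusters:
        total = sum(scores[i] for i in cluster)
        coords = tuple(sum(scores[i] * boxes[i][k] for i in cluster) / total for k in range(4))
        fused.append((coords, total / len(cluster), len(cluster)))
    return fused


if __name__ == "__main__":
    preds = [(0.0, 0.0, 10.0, 10.0), (1.0, 1.0, 11.0, 11.0), (50.0, 50.0, 60.0, 60.0)]
    for box, score, support in fuse_boxes(preds, [0.9, 0.6, 0.3]):
        print([round(c, 2) for c in box], round(score, 2), support)
```

A caller could, for example, discard clusters supported by a single prediction as a simple false-positive filter; that rule is likewise a hypothetical choice.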

5.2.2 Brain lesion detection

Locating small brain lesions benefits from 3D context, but aggregating 3D context is computationally expensive. Li et al.161 therefore proposed a view-disentangled Transformer that extracts MRI features for accurate tumor detection. Built on a Transformer backbone with a view-disentangled Transformer module, the framework models long-range dependencies between different positions: features of multiple 2D slices are extracted and then enhanced within the view-disentangled Transformer module.
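The long-range modelling across slices rests on self-attention, in which every slice feature attends to every other one. A minimal single-head sketch follows, assuming each 2D slice has already been pooled into one D-dimensional vector; the view-disentangling design of Li et al.161 is not reproduced, and the projection matrices are random stand-ins for learned weights.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def slice_self_attention(feats, wq, wk, wv):
    """Single-head self-attention over S slice features of shape (S, D).

    Every slice attends to every other slice, so dependencies between
    distant slices are modelled in a single step.
    """
    q, k, v = feats @ wq, feats @ wk, feats @ wv
    scores = q @ k.T / np.sqrt(k.shape[-1])  # (S, S) pairwise affinities
    attn = softmax(scores, axis=-1)
    return feats + attn @ v, attn             # residual keeps the original slice features

rng = np.random.default_rng(0)
num_slices, dim = 8, 32
feats = rng.normal(size=(num_slices, dim))    # hypothetical pooled 2D-slice features
wq, wk, wv = (rng.normal(size=(dim, dim)) / np.sqrt(dim) for _ in range(3))
enhanced, attn = slice_self_attention(feats, wq, wk, wv)
```

The cost of the (S, S) attention matrix grows quadratically with the number of slices, which is one reason such designs operate on compact 2D slice features rather than on full 3D voxels.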

5.3 CT

5.3.1 Lung nodule detection

The earlier lung nodules are detected, the more likely lung cancer patients are to survive. However, the varied appearance and location of lung nodules make automatic computer-aided detection difficult. To reduce the high false-positive rate of nodule detection, Niu et al.154 proposed a 3D Transformer framework for lung nodule detection. Specifically, the input CT volume was split into a sequence of nonoverlapping units, each of which was processed with the self-attention mechanism. In addition, Niu et al.154 trained the model with a region-based contrastive method to improve the training result.
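Contrastive training pulls together embeddings of matching regions and pushes apart embeddings of non-matching ones. The details of the region-based objective of Niu et al.154 are not given here, so the sketch below assumes a standard InfoNCE loss on pairs of region embeddings (for example, two perturbed views of the same candidate region), with a temperature of 0.1 chosen for illustration.

```python
import numpy as np

def info_nce(anchors: np.ndarray, positives: np.ndarray, temperature: float = 0.1) -> float:
    """InfoNCE loss: row i of `positives` is the positive for row i of `anchors`,
    and every other row of `positives` acts as a negative."""
    a = anchors / np.linalg.norm(anchors, axis=1, keepdims=True)
    p = positives / np.linalg.norm(positives, axis=1, keepdims=True)
    logits = a @ p.T / temperature                       # (N, N) scaled cosine similarities
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_prob)))            # cross-entropy with the diagonal as target

# Hypothetical region embeddings: 4 candidate regions, 16-dim features.
rng = np.random.default_rng(0)
regions = rng.normal(size=(4, 16))
views = regions + 0.01 * rng.normal(size=(4, 16))        # slightly perturbed second view
loss_matched = info_nce(regions, views)
loss_shuffled = info_nce(regions, views[[1, 2, 3, 0]])   # positives deliberately misaligned
```

Correctly paired views give a loss close to zero, while misaligned pairs are penalized heavily; minimizing the loss therefore shapes the embedding space so that similar regions cluster together.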

5.3.2 Tuberculosis detection

For the early detection and analysis of tuberculosis, artificial intelligence can automatically recognize tuberculosis in chest X-ray images, as experimental results on some small chest X-ray datasets have shown. Duong et al.143 proposed a framework intended to maintain this encouraging performance on large datasets. Specifically, they fused three networks for tuberculosis detection: a modified EfficientNet, a modified vision Transformer and a modified hybrid model.
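How the three networks of Duong et al.143 are fused is not detailed here. One common option is soft voting, in which the class probabilities of the individual models are averaged; the sketch below assumes this strategy, with optional per-model weights, and uses made-up probabilities for two images.

```python
import numpy as np

def soft_vote(prob_list, weights=None):
    """Fuse per-model class probabilities, each of shape (N, K), by weighted averaging."""
    probs = np.stack(prob_list)                    # (M, N, K)
    if weights is None:
        weights = np.ones(len(prob_list))
    weights = np.asarray(weights, dtype=float)
    weights = weights / weights.sum()              # normalize so fused rows still sum to 1
    fused = np.tensordot(weights, probs, axes=1)   # (N, K)
    return fused, fused.argmax(axis=1)

# Hypothetical outputs for 2 images, classes [normal, tuberculosis].
effnet = np.array([[0.8, 0.2], [0.4, 0.6]])
vit = np.array([[0.6, 0.4], [0.3, 0.7]])
hybrid = np.array([[0.7, 0.3], [0.6, 0.4]])
fused, labels = soft_vote([effnet, vit, hybrid])
```

Here the hybrid model alone would call the second image normal, but the averaged probabilities favour tuberculosis; per-model weights could be tuned on a validation set if one network is known to be more reliable.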

5.3.3 Chronic kidney disease (CKD) detection

A shortage of positive patients, among other factors, has hindered the development of automatic CKD detection. Wang et al.152 proposed TRACE (Transformer-RNN Autoencoder-enhanced CKD Detector), which performs end-to-end CKD prediction. TRACE adopts an autoencoder that combines an attention mechanism with RNN units, which allows it to analyze patients' medical history comprehensively. Experimental results on a dataset built from real-world medical records showed that TRACE152 achieved state-of-the-art performance.
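To make the idea of an attention-augmented RNN autoencoder over a patient's history concrete, the sketch below encodes a sequence of visit feature vectors with a simple Elman RNN, pools the hidden states with additive attention, reconstructs each visit from its hidden state and predicts a CKD probability from the pooled vector. All weights are random and untrained, and the additive attention, layer sizes and visit features are assumptions for illustration; TRACE152 combines a Transformer with RNN units, and its exact architecture is not reproduced here.

```python
import numpy as np

def rnn_encode(visits, w_x, w_h, b):
    """Elman RNN over a (T, F) sequence of visit vectors; returns (T, H) hidden states."""
    h = np.zeros(w_h.shape[0])
    states = []
    for x in visits:
        h = np.tanh(x @ w_x + h @ w_h + b)
        states.append(h)
    return np.array(states)

def attention_pool(states, w_a, v_a):
    """Additive attention over time: score each hidden state, softmax, weighted sum."""
    scores = np.tanh(states @ w_a) @ v_a       # (T,)
    alpha = np.exp(scores - scores.max())
    alpha = alpha / alpha.sum()
    return alpha @ states, alpha               # (H,), (T,)

rng = np.random.default_rng(0)
T, F, H = 6, 10, 16                            # visits, features per visit, hidden size (hypothetical)
visits = rng.normal(size=(T, F))               # hypothetical longitudinal record of one patient
w_x = 0.3 * rng.normal(size=(F, H))
w_h = 0.3 * rng.normal(size=(H, H))
b = np.zeros(H)
w_a = 0.3 * rng.normal(size=(H, H))
v_a = rng.normal(size=H)
w_dec = 0.3 * rng.normal(size=(H, F))          # decoder: hidden state -> reconstructed visit
w_cls = 0.3 * rng.normal(size=H)               # CKD classification head

states = rnn_encode(visits, w_x, w_h, b)
context, alpha = attention_pool(states, w_a, v_a)
recon_loss = float(np.mean((states @ w_dec - visits) ** 2))  # autoencoder term
p_ckd = float(1.0 / (1.0 + np.exp(-(context @ w_cls))))       # predicted CKD probability
```

In training, the reconstruction term and a classification loss on `p_ckd` would typically be optimized jointly, so that the encoder learns a representation of the history that is both faithful and predictive; the attention weights `alpha` indicate which visits the pooled summary relies on.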

5.3.4 Cyst, stone, tumor detection

Although renal failure, with its severe consequences, has attracted much public attention, few studies have applied artificial intelligence to diagnose kidney diseases. Targeting kidney stones, cysts and tumors, three major renal diseases, Islam et al.157 evaluated six models, including EANet, CCT and Swin Transformer. In a comprehensive comparison, the Swin Transformer-based model outperformed the other models in accuracy, F1 score, precision and recall.
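Comparisons of this kind rest on the four metrics named above. For a multiclass problem they are usually computed per class from a confusion matrix and then averaged; the sketch below assumes macro averaging, which may differ from the averaging used by Islam et al.157, and uses made-up labels for four assumed classes (normal, cyst, stone, tumor).

```python
import numpy as np

def classification_metrics(y_true, y_pred, num_classes):
    """Accuracy plus macro-averaged precision, recall and F1 from integer labels."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    cm = np.zeros((num_classes, num_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)           # rows: true class, columns: predicted class
    tp = np.diag(cm).astype(float)
    pred_totals, true_totals = cm.sum(axis=0), cm.sum(axis=1)
    precision = np.divide(tp, pred_totals, out=np.zeros_like(tp), where=pred_totals > 0)
    recall = np.divide(tp, true_totals, out=np.zeros_like(tp), where=true_totals > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return {
        "accuracy": tp.sum() / cm.sum(),
        "precision": precision.mean(),
        "recall": recall.mean(),
        "f1": f1.mean(),
    }

# Hypothetical labels: 0 = normal, 1 = cyst, 2 = stone, 3 = tumor.
y_true = [0, 0, 1, 1, 2, 2, 3, 3]
y_pred = [0, 0, 1, 2, 2, 2, 3, 1]
metrics = classification_metrics(y_true, y_pred, num_classes=4)
```

Macro averaging gives each class equal weight, so a model cannot score well by performing only on the most frequent class; a weighted average would instead reflect class frequencies.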

5.3.5 Universal lesion detection (ULD)

For ULD, most methods extract 3D contextual information by combining a series of adjacent CT slices. However, these operations weaken the global nature of the feature representation. Li et al. proposed the Slice Attention Transformer (SATr), which is inserted into a convolution-based structure. This design captures both long-range dependencies and local features, as validated by experimental results on the test dataset.

5.4 Fundus or OCT

5.4.1 Microaneurysm detection

The size and complexity of retinal fundus images make it difficult to detect microaneurysms, an early sign of diabetic retinopathy (DR). Zhang et al.139 proposed a novel detection model. They first applied equalization operations to improve fundus image quality and then used an attention mechanism to extract preliminary features of the retinal fundus images. They also analyzed the spatial relationship between microaneurysms and blood vessels. Experimental results on the IDRiD_VOC dataset showed that this method outperformed other approaches in terms of average accuracy and sensitivity.
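Equalization spreads a narrow range of intensities over the full dynamic range, which can make faint structures such as microaneurysms more visible. The specific equalization operations of Zhang et al.139 are not described here; as an assumption, the sketch below implements plain global histogram equalization for an 8-bit grayscale image. For color fundus images it might be applied to a single channel, and contrast-limited variants are also common.

```python
import numpy as np

def equalize_hist(img: np.ndarray) -> np.ndarray:
    """Global histogram equalization of an 8-bit (uint8) grayscale image."""
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]                  # CDF value of the darkest occurring intensity
    total = img.size
    if total == cdf_min:                       # constant image: nothing to stretch
        return img.copy()
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)  # lookup table: old intensity -> new intensity
    return lut[img]

# Hypothetical low-contrast patch with intensities only in [100, 119].
rng = np.random.default_rng(0)
fundus_patch = rng.integers(100, 120, size=(64, 64), dtype=np.uint8)
enhanced = equalize_hist(fundus_patch)
```

After equalization the darkest intensity present maps to 0 and the brightest to 255, so the twenty original grey levels are spread across the full range.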

5.4.2 Glaucoma detection

Because glaucoma can lead to vision loss, it has been the target of much automatic detection research. However, the high redundancy in fundus images limits further improvements in glaucoma detection accuracy. Li et al.140 therefore proposed AG-CNN, a framework that combines convolution operations with an attention mechanism. They also built a large glaucoma database of 11,760 fundus images. AG-CNN consists of three subnets, responsible for attention prediction, pathological area localization and glaucoma classification, respectively.

5.5 Histopathology image

5.5.1 Multiorgan detection

Most deep learning methods for computational pathology require manual labelling of many whole slide images. To relieve this manual burden, Lu et al.144 proposed Clustering-constrained Attention Multiple instance learning (CLAM), which performs automatic multiorgan detection efficiently and interpretably. CLAM144 uses the attention mechanism to identify discriminative sub-regions, which are then clustered to refine the target region.
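Attention-based multiple instance learning treats a whole slide image as a bag of patch embeddings and learns one weight per patch, so that the slide-level representation is a weighted sum and the weights indicate which sub-regions drove the decision. The sketch below implements gated attention pooling, a form of attention pooling used by attention-based MIL methods such as CLAM; the instance-level clustering constraint is omitted, and the bag size, dimensions and random weights are assumptions.

```python
import numpy as np

def gated_attention_pool(h, V, U, w):
    """Gated attention pooling over a bag of N patch embeddings h of shape (N, D).

    score_k = w^T (tanh(V^T h_k) * sigmoid(U^T h_k)),  a = softmax(score)
    The slide embedding is sum_k a_k h_k; a doubles as a patch-level heatmap.
    """
    gate = 1.0 / (1.0 + np.exp(-(h @ U)))      # (N, L) sigmoid gate
    scores = (np.tanh(h @ V) * gate) @ w        # (N,)
    a = np.exp(scores - scores.max())
    a = a / a.sum()
    return a @ h, a

rng = np.random.default_rng(0)
N, D, L = 500, 64, 32                           # patches per slide, embedding size, attention size
h = rng.normal(size=(N, D))                     # hypothetical patch features from a frozen encoder
V = 0.1 * rng.normal(size=(D, L))
U = 0.1 * rng.normal(size=(D, L))
w = rng.normal(size=L)
slide_emb, attn = gated_attention_pool(h, V, U, w)
top_patches = np.argsort(attn)[::-1][:8]        # most attended patches, e.g. candidates for clustering
```

Only a slide-level label is needed to train such pooling, which is why attention MIL reduces the need for region-level manual annotation.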

5.5.2 Cancer detection

Predicting survival outcomes from patients' whole slide images (WSIs) is highly challenging. Both the computational complexity and the heterogeneity gap between data modalities pose challenges for approaches that treat WSIs as bags for multiple instance learning (MIL). To address these problems, Chen et al.145 proposed the multimodal co-attention transformer (MCAT). Inspired by visual question answering, where word embeddings learn to attend to salient objects in an image, MCAT145 maps WSI features into an embedding space and learns how they relate to genomic features through co-attention. This design also reduces the space complexity of extracting WSI-based features. Experimental results on five cancer datasets demonstrated MCAT's superior performance.
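Co-attention of this kind can be sketched as cross-attention in which a small set of genomic embeddings serve as queries over the much larger bag of WSI patch embeddings. Because the attention matrix has one row per query rather than one per patch, its memory grows linearly with the number of patches, which conveys the intuition behind the reduced space complexity. The numbers of genomic embeddings and patches, the embedding size and the random weights below are hypothetical, and MCAT's full architecture (including its subsequent Transformer layers and survival head) is not reproduced.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def co_attention(genomic_q, wsi_bag, wq, wk, wv):
    """Genomic embeddings (G, D) act as queries over WSI patch embeddings (N, D).

    Returns a (G, D) genomic-guided WSI representation and the (G, N) attention map.
    """
    q, k, v = genomic_q @ wq, wsi_bag @ wk, wsi_bag @ wv
    attn = softmax(q @ k.T / np.sqrt(q.shape[-1]), axis=-1)
    return attn @ v, attn

rng = np.random.default_rng(0)
G, N, D = 6, 10_000, 32                  # genomic embeddings, WSI patches, embedding size
genomic = rng.normal(size=(G, D))
bag = rng.normal(size=(N, D))
wq, wk, wv = (rng.normal(size=(D, D)) / np.sqrt(D) for _ in range(3))
out, attn = co_attention(genomic, bag, wq, wk, wv)
```

Here the attention map holds G x N = 60,000 entries, whereas full self-attention over the same bag would need N x N = 100,000,000.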

5.6 Other modalities

5.6.1 Coronary CT angiography (CCTA)

Given the serious consequences of coronary artery disease (CAD), its automatic diagnosis is of great importance. To overcome the structural complexity that has hampered modelling of the coronary artery, Ma et al.160 proposed a Transformer network (TR-Net) to detect coronary artery stenosis in CCTA images. TR-Net integrates a Transformer-based encoder with convolutional modules to combine the advantages of both, which allows it to analyze information across images and detect stenosis.

5.6.2 Image-based

Although dental caries is widespread, few studies have focused on caries detection. Jiang et al.138 therefore proposed RDFNet, a network suited to caries detection on portable devices. RDFNet uses an attention mechanism to extract features from the input images and adopts the FReLU activation function to accelerate detection so that it can run on portable devices. Experimental results showed that RDFNet outperformed other methods in both accuracy and speed.
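FReLU (funnel activation) replaces the fixed zero in ReLU's max(x, 0) with a spatial condition T(x), giving y = max(x, T(x)), where T is a per-channel (depthwise) convolution over a small neighbourhood, originally followed by batch normalization. The NumPy sketch below uses a 3 x 3 depthwise convolution with zero padding and omits batch normalization for brevity; the kernels are random stand-ins for learned weights, and this is not RDFNet's implementation.

```python
import numpy as np

def depthwise_conv3x3(x, kernels):
    """Depthwise 3x3 convolution (cross-correlation) with zero padding.

    x: (C, H, W) feature map; kernels: (C, 3, 3), one kernel per channel.
    """
    c, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros(x.shape)
    for dy in range(3):
        for dx in range(3):
            out += kernels[:, dy, dx][:, None, None] * padded[:, dy:dy + h, dx:dx + w]
    return out

def frelu(x, kernels):
    """Funnel activation: y = max(x, T(x)), with T a depthwise spatial convolution."""
    return np.maximum(x, depthwise_conv3x3(x, kernels))

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8, 8))              # hypothetical 4-channel feature map
kernels = 0.2 * rng.normal(size=(4, 3, 3))
y = frelu(x, kernels)
```

Setting all kernels to zero makes T(x) = 0 and reduces FReLU to ReLU, which is a quick sanity check; the extra cost over ReLU is a single depthwise convolution per layer.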

5.6.3 Dermoscopy

In many vision tasks, such as object detection, image classification and semantic segmentation, Transformer-based models have been shown to outperform CNN-based models. However, Transformer-based networks may not maintain this advantage when identifying out-of-distribution (OOD) samples. Li et al.197 therefore evaluated four Transformer models on two open-source medical image datasets, and the results showed that Transformer-based OOD detection remained insufficient.
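A simple and widely used baseline for OOD detection is the maximum softmax probability: inputs on which the classifier is not confident are flagged as possibly out-of-distribution. Which scoring methods Li et al.197 evaluated is not stated here, so the following is only an illustrative sketch; the logits and the 0.7 threshold are made up, and in practice the threshold would be calibrated on in-distribution validation data.

```python
import numpy as np

def max_softmax_score(logits):
    """Maximum softmax probability per sample; low values suggest OOD inputs."""
    z = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    p = np.exp(z) / np.exp(z).sum(axis=1, keepdims=True)
    return p.max(axis=1)

def flag_ood(logits, threshold):
    """Flag samples whose confidence falls below the threshold."""
    return max_softmax_score(logits) < threshold

logits = np.array([[8.0, 0.5, 0.1],    # confident prediction
                   [1.0, 0.9, 1.1]])   # near-uniform prediction
flags = flag_ood(logits, threshold=0.7)
```

The first sample has a confidence close to 1 and is kept, while the second has a confidence of about 0.37 and is flagged. A known weakness of this score is that models can be confidently wrong on unfamiliar inputs, which is one reason OOD detection remains difficult.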

5.6.4 Microscopy

Tomita et al.147 proposed a method for detecting Barrett esophagus (BE) and esophageal adenocarcinoma. The method relies on tissue-level annotations of histological patterns in microscopy images and combines convolution operations with an attention mechanism. The test set for this model contained 123 images divided into four classes: normal, BE-no-dysplasia, BE-with-dysplasia and adenocarcinoma.

5.6.5 Colonoscopy

Colonoscopy is widely used to diagnose polyps, which may develop into colorectal cancer, the second most deadly cancer. To spare endoscopists the heavy manual effort of screening for polyps, Shen et al.149 proposed an end-to-end polyp detection model named convolution in Transformer (COTR). Inspired by the DEtection TRansformer (DETR), COTR149 uses a CNN for feature extraction, Transformer encoders to encode and recalibrate the features and Transformer decoders to query objects. On two public polyp datasets, COTR149 outperformed other state-of-the-art methods.

Combining the attention mechanism with convolution layers, Liu et al.150 also proposed a framework for accurate polyp detection. A conventional CNN backbone first produces a 2D feature representation, which is flattened, supplemented with positional encoding and passed to a Transformer encoder. After the Transformer decoder, a feedforward network (FFN) maps the decoder's output embeddings to the detection predictions.
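In DETR-style detectors such as those above, the CNN feature map must be converted into a token sequence before entering the Transformer encoder. The sketch below flattens a (C, H, W) feature map into H*W tokens and adds a fixed sinusoidal positional encoding; as a simplification, it uses a 1D encoding over the flattened positions, whereas DETR itself uses a 2D sine encoding, and the feature-map size is hypothetical.

```python
import numpy as np

def sinusoidal_encoding(length, dim):
    """1D sine/cosine positional encoding of shape (length, dim); dim must be even."""
    if dim % 2:
        raise ValueError("dim must be even")
    pos = np.arange(length)[:, None]
    i = np.arange(dim // 2)[None, :]
    angle = pos / (10000 ** (2 * i / dim))
    enc = np.zeros((length, dim))
    enc[:, 0::2] = np.sin(angle)   # even channels
    enc[:, 1::2] = np.cos(angle)   # odd channels
    return enc

def to_encoder_tokens(feature_map):
    """Flatten a CNN feature map (C, H, W) into H*W tokens of size C and add positions."""
    c, h, w = feature_map.shape
    tokens = feature_map.reshape(c, h * w).T   # (H*W, C), row-major over (y, x)
    return tokens + sinusoidal_encoding(h * w, c)

rng = np.random.default_rng(0)
fmap = rng.normal(size=(256, 16, 20))          # hypothetical backbone output for one frame
tokens = to_encoder_tokens(fmap)
```

Without the positional term, self-attention would treat the flattened tokens as an unordered set and lose where each feature came from in the frame, which matters for predicting box coordinates.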

5.6.6 Multimodality

Chen et al.146 proposed the U-TRansformer based Anomaly Detection framework (UTRAD) to address unstable training and inconsistent criteria for evaluating feature distributions. UTRAD146 uses attention-based autoencoders to model pretrained features. By reconstructing the feature distribution rather than the raw images, UTRAD146 stabilizes training and improves detection accuracy. It also adopts a multiscale pyramidal hierarchy to detect anomalies. Tested on retinal, brain and head datasets, UTRAD146 outperformed other methods.
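When an autoencoder reconstructs pretrained features rather than pixels, the anomaly score at a location can be taken as the feature reconstruction error there. The sketch below computes this error at each pyramid level, upsamples the maps to a common size and averages them; the averaging scheme, the nearest-neighbour upsampling, the square feature maps and the toy shapes are assumptions, not UTRAD's exact procedure.

```python
import numpy as np

def upsample_nearest(m, size):
    """Nearest-neighbour resize of a square 2D map to (size, size); size must be a multiple of m's side."""
    factor = size // m.shape[0]
    return np.kron(m, np.ones((factor, factor)))

def anomaly_map(feats, recons, out_size):
    """Average the per-location squared feature reconstruction errors across scales.

    feats / recons: lists of (C, H, W) arrays, one pair per pyramid level.
    """
    maps = []
    for f, r in zip(feats, recons):
        err = ((f - r) ** 2).mean(axis=0)        # (H, W) error map at this scale
        maps.append(upsample_nearest(err, out_size))
    return np.mean(maps, axis=0)

# Toy example: two pyramid levels, perfect reconstruction except one location.
rng = np.random.default_rng(0)
feats = [rng.normal(size=(8, 16, 16)), rng.normal(size=(16, 8, 8))]
recons = [f.copy() for f in feats]
recons[0][:, 4, 4] += 3.0                        # simulated failure to reconstruct an anomaly
amap = anomaly_map(feats, recons, out_size=32)
```

Regions the autoencoder cannot reconstruct, because they differ from the normal training data, receive high scores, and combining several scales lets both small and large anomalies register.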

6 MEDICAL IMAGE RECONSTRUCTION

In clinical practice, acquired medical images may suffer from quality deficits. Unlike computer simulations, medical images are collected with medical equipment, so the results may be affected by practical constraints and accidental factors. Moreover, some techniques sacrifice image quality to reduce side effects; low-dose computed tomography (LDCT), for example, lowers the radiation dose at the cost of noisier images. To improve such low-quality medical images, researchers have proposed Transformer-based image reconstruction models. These works are collected in Table 4.

| Name | Modality | Organ/Method | Disease/Dimension |
|---|---|---|---|
| E-DSSR162 | Endoscopy | Surgical | Dynamic surgical scene |
| MIST-net163 | X-ray | Cardiac | Image quality improvement |
| CNN-Transformer164 | X-ray | Bone | Long bone |
| SSTrans-3D165 | SPECT | Cardiac | 3D reconstruction |
| ADVIT166 | PET | Brain | Alzheimer's Disease |
| TransEM167 | PET | Brain | Image quality improvement |
| SMIR168 | MRI | Brain | Super-resolution |
| SLATER169 | MRI | Multiorgan | Unsupervised reconstruction |
| ReconFormer170 | MRI | Multiorgan | Accelerated reconstruction |
| DSFormer171 | MRI | Multiorgan | Accelerated reconstruction |
| McMRSR172 | MRI | Multiorgan | Super-resolution |
| PKT173 | MRI | Multiorgan | Undersample reconstruction |
| HUMUS-Net174 | MRI | Multiorgan | Accelerated reconstruction |
| Kspace-Trans175 | MRI | Multiorgan | Accelerated reconstruction |
| SVoRT176 | MRI | Brain | Fetal brain |
| TITLE177 | MRI | Multiorgan | Accelerated reconstruction |
| T2Net178 | MRI | Multiorgan | Joint reconstruction and super-resolution |
| MTrans179 | MRI | Multiorgan | Accelerated reconstruction |
| SRT180 | MRI | Brain | 2D to 3D |
| FedGIMP181 | MRI | Multiorgan | Accelerated reconstruction |
| ASMT182 | MRI | Brain | Super-resolution |
| SAT-net183 | MRI | Cartilage | Acceleration + image quality |
| McSTRA184 | MRI | Multiorgan | Accelerated reconstruction |
| KangLin185 | MRI | Multiorgan | Accelerated reconstruction |
| ASFT182 | MRI | Brain | Super-resolution |
| TranSMS186 | MPI | Multiorgan | Super-resolution |
| CTformer187 | LDCT | Multiorgan | Denoising |
| Liu188 | LDCT | Multiorgan | Degradation |
| TransCT189 | LDCT | Multiorgan | Enhancement |
| TED-Net190 | LDCT | Liver | Denoising |
| transGAN-SDAM191 | l-PET | Brain | Image quality improvement |
| Wu192 | CT | Multiorgan | Image quality improvement |
| DuDoTrans193 | CT | Multiorgan | Image quality improvement |
| TVSRN194 | CT | Multiorgan | Super-resolution |
| Sizikova195 | CT | Lung | 3D shape induction |
| Eformer196 | CT | Multiorgan | Denoising |

6.1 X-ray or radiography

6.1.1 Cardiac reconstruction