Artificial intelligence in orthopaedic education: A comparative analysis of ChatGPT and Bing AI's Orthopaedic In-Training Examination performance

Abstract

Background

This study evaluated the performance of generative artificial intelligence (AI) models on the Orthopaedic In-Training Examination (OITE), an annual exam administered to U.S. orthopaedic residency programs.

Methods

ChatGPT 3.5 and Bing AI GPT 4.0 were evaluated on standardised sets of multiple-choice questions drawn from the American Academy of Orthopaedic Surgeons OITE online question bank spanning 5 years (2018–2022). A total of 1165 questions were posed to each AI system. Testing of both systems was standardised by using the latest versions of ChatGPT 3.5 and Bing AI GPT 4.0. Historical resident scores from the annual OITE technical reports were used for comparison.

Results

Across the five datasets, ChatGPT 3.5 scored an average of 55.0% on the OITE questions. Bing AI GPT 4.0 scored higher, with an average of 80.0%. In comparison, the average performance of orthopaedic residents in nationally accredited programs was 62.1%. Bing AI GPT 4.0 outperformed both ChatGPT 3.5 and examinees from Accreditation Council for Graduate Medical Education (ACGME)-accredited programs, and analysis of variance showed significant differences among groups (p < 0.001). The best performance was by Bing AI GPT 4.0 on OITE 2020.

Conclusion

Generative AI can provide logical context for its answers through in-depth information searches and citation of resources. This combination presents a convincing argument for the possible uses of AI in medical education as an interactive learning aid.

Abbreviations

- AAOS: American Academy of Orthopaedic Surgeons
- ACGME: Accreditation Council for Graduate Medical Education
- AI: artificial intelligence
- ANOVA: analysis of variance
- LLMs: large language models
- OITE: Orthopaedic In-Training Examination

1 INTRODUCTION

Large language models (LLMs) represent a groundbreaking advancement in artificial intelligence (AI). Developed with sophisticated deep learning algorithms, these models can generate human-like responses [1, 2]. Within orthopaedic and broader medical education, LLMs are poised to supplement and potentially amplify traditional educational paradigms by offering residents an interactive and richly informative learning experience [3]. In recent months, generative AI has been applied extensively to both multiple-choice standardised tests and written examinations [4].

LLMs such as ChatGPT 3.5 are built through data collection, pretraining, and subsequent fine-tuning. First, a large amount of text data is amassed from sources including, but not limited to, books, articles, and websites. The data then undergo a rigorous preprocessing phase to standardise them, after which they are segmented into smaller, more manageable chunks for further processing. The LLM then undergoes iterative training and refinement, in which it is fine-tuned on a more specific dataset to tailor it to a particular task. Feedback from reviewers and researchers is used to pinpoint areas needing improvement, enhancing the model's capabilities through a continuous feedback loop [1, 2].
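The preprocessing and segmentation steps described above can be illustrated with a toy sketch. It assumes simple lowercase normalisation, a word-and-punctuation tokenizer, and a hypothetical fixed chunk size; production LLMs such as ChatGPT instead use learned subword tokenizers (e.g., byte-pair encoding), so this shows only the general idea of standardising text and splitting it into manageable pieces.

```python
import re

def preprocess(text: str) -> str:
    """Standardise raw text: lowercase it and collapse runs of whitespace."""
    return re.sub(r"\s+", " ", text.lower()).strip()

def tokenize(text: str) -> list[str]:
    """Toy tokenizer: split into words and punctuation marks.

    Real LLMs use learned subword tokenizers instead.
    """
    return re.findall(r"\w+|[^\w\s]", text)

def chunk(tokens: list[str], size: int) -> list[list[str]]:
    """Segment a token sequence into consecutive chunks of at most `size` tokens."""
    if size <= 0:
        raise ValueError("size must be positive")
    return [tokens[i:i + size] for i in range(0, len(tokens), size)]

raw = "Large  language models\nare trained on text."
tokens = tokenize(preprocess(raw))
chunks = chunk(tokens, size=3)
print(chunks)
# [['large', 'language', 'models'], ['are', 'trained', 'on'], ['text', '.']]
```

Real pipelines operate on billions of tokens and choose chunk lengths to match the model's context window; the chunk size of 3 here is only for readability.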

This study explores the potential applications of LLMs in orthopaedic education. To accomplish this, we evaluated the performance of two prominent AI models, ChatGPT 3.5 and Bing AI GPT 4.0, on the Orthopaedic In-Training Examination (OITE). The OITE is an annual exam administered by the American Academy of Orthopaedic Surgeons (AAOS) and serves as a standard metric for gauging the knowledge and competence of orthopaedic residents in the United States. The OITE, which includes roughly 207–275 questions per year, is a comprehensive assessment covering various aspects of orthopaedics, including anatomy, physiology, diagnostics, treatment modalities, and surgical techniques. The breadth and depth of its topics make it an ideal testbed for assessing the capabilities of AI models. The OITE covers the following specialities: basic science, foot & ankle, hand & wrist, hip & knee, oncology, pediatrics, shoulder & elbow, spine, sports medicine, and trauma. By subjecting ChatGPT 3.5 and Bing AI GPT 4.0 to this rigorous examination, we can gauge their performance, accuracy, and overall competence in responding to orthopaedic questions.

Our objective was to study the effectiveness of Bing AI GPT 4.0 and ChatGPT 3.5 in comprehending and responding to OITE questions, thereby exploring the potential of AI in supporting medical education and its capacity to contribute to the learning and training of orthopaedic residents. Understanding how AI models perform on the OITE has substantial implications for medical education. If LLMs can demonstrate a high level of proficiency and accuracy in answering OITE questions, it suggests that these AI tools have the potential to augment traditional learning methods, providing orthopaedic residents with valuable resources, explanations, and insights, which, in turn, can enhance their knowledge base, improve examination performance, and bolster overall knowledge retention.

2 MATERIALS AND METHODS

Two generative AI models were chosen for this analysis: ChatGPT 3.5 and Bing AI GPT 4.0. ChatGPT 3.5 was chosen as the ready-to-use base model, and Bing AI was chosen for its web browsing ability and the improved processing capability of its GPT 4.0 technology (Microsoft, May 2023). All model testing was performed between 5 May and 28 May 2023. Questions and their prompts were entered manually into each model through the OpenAI ChatGPT website and the Microsoft Bing chat website. ChatGPT 3.5 was limited to its pretraining data, which extend only to September 2021. Bing AI GPT 4.0, built on OpenAI's GPT 4.0 model, offers improved comprehension and is not similarly limited by its pretraining data, because it can browse the web through Microsoft Edge.

Standardised sets of multiple-choice questions spanning 5 years (2018–2022) from the official AAOS database were used to measure the performance of the two AI models. A total of 1165 questions were presented to each system, and no questions were excluded from querying or analysis. All answers generated by the AI models were collected and analysed on the basis of final scores. Historical orthopaedic resident scores were sourced from the annual OITE technical reports from 2018 to 2022. From those reports, scores from Accreditation Council for Graduate Medical Education (ACGME)-accredited programs served as the comparison. These scores spanned all training levels, from PGY-1 orthopaedic interns to PGY-5 chief residents.
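Final scoring amounts to comparing each recorded response with the answer key and computing the percentage correct for each exam year. The sketch below assumes a hypothetical record layout (`Response` with year, model answer, and key); it is not the authors' actual data format, and the records shown are invented for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Response:
    year: int            # OITE exam year
    model_answer: int    # choice selected by the AI model (1-5)
    correct_answer: int  # answer key (1-5)

def percent_correct_by_year(responses: list[Response]) -> dict[int, float]:
    """Percentage of questions answered correctly, per exam year."""
    totals: dict[int, int] = defaultdict(int)
    correct: dict[int, int] = defaultdict(int)
    for r in responses:
        totals[r.year] += 1
        if r.model_answer == r.correct_answer:
            correct[r.year] += 1
    return {year: 100.0 * correct[year] / totals[year] for year in sorted(totals)}

def mean_of_years(scores: dict[int, float]) -> float:
    """Unweighted mean of the yearly percentages (each exam year counts equally)."""
    return sum(scores.values()) / len(scores)

# Invented records, for illustration only.
responses = [
    Response(2018, 3, 3), Response(2018, 2, 4),
    Response(2019, 1, 1), Response(2019, 5, 5),
]
scores = percent_correct_by_year(responses)
print(scores, mean_of_years(scores))
```

The unweighted mean treats each exam year equally, which appears to match how the Mean row of Table 1 follows from the yearly percentages; a mean weighted by the number of questions per year could differ slightly.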

Because prompt engineering has been shown to affect generative AI outputs, we standardised the input for each question. First, the OITE question stem was entered, followed by a separator and a prompt to choose the best answer from the subsequent answer choices. The available answer choices, numbered 1–5, were then inserted, and the AI model was asked to select its answer. Responses were recorded for scoring and subsequent analysis. The same inputs were used across both AI platforms, and a new chat was started for each question to prevent the model from carrying information over from previously completed questions. For questions with an image, a minimal description of the image (e.g., wrist radiograph) and any text appearing on the image were transcribed into the question prompt following the image's presentation, together with a description of the text's orientation (e.g., from right to left). Image-based tables were converted into text tables and entered directly into the AI models.
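The standardised input format can be expressed as a simple template. This is a hypothetical reconstruction: the exact instruction wording and separator the authors used are not reported, so `SEPARATOR` and `INSTRUCTION` are placeholders, the placement of the image note is an assumption, and the example question is invented.

```python
from typing import Optional

SEPARATOR = "---"  # hypothetical separator between stem and instruction
INSTRUCTION = "Choose the best answer from the following choices."  # placeholder wording

def build_prompt(stem: str, choices: list[str], image_note: Optional[str] = None) -> str:
    """Assemble one prompt: stem, optional image note, separator, instruction, numbered choices."""
    if not 2 <= len(choices) <= 5:
        raise ValueError("expected between 2 and 5 answer choices")
    parts = [stem]
    if image_note:
        # Minimal transcribed description of the image and any text on it.
        parts.append(f"Image: {image_note}")
    parts.append(SEPARATOR)
    parts.append(INSTRUCTION)
    # Number the answer choices 1-5, as in the study's input format.
    parts.extend(f"{i}. {choice}" for i, choice in enumerate(choices, start=1))
    return "\n".join(parts)

prompt = build_prompt(
    "Hypothetical question stem?",
    ["Option A", "Option B", "Option C", "Option D", "Option E"],
    image_note="wrist radiograph",
)
print(prompt)
```

Generating every prompt from one function keeps the wording identical across platforms; each resulting string would then be pasted into a fresh chat session.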

Data analysis was conducted using IBM SPSS Statistics for Windows, Version 26.0. Student's t-tests and one-way analysis of variance (ANOVA) were used to test for significant differences in performance among the two AI models and the resident examinees. All statistical tests were two-tailed, and results were considered statistically significant at p < 0.05. Descriptive variables included whether the question had an image, the question type, and the presence of a logical response. All authors labelled the data collaboratively, and all uncertain labels were reconciled.

3 RESULTS

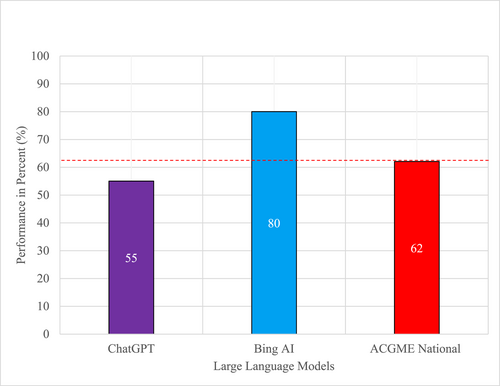

Across the five datasets, ChatGPT 3.5 answered an average of 55.0% of OITE questions correctly, Bing AI GPT 4.0 an average of 80.0%, and examinees from ACGME-accredited programs an average of 62.1%. Bing AI GPT 4.0 outperformed ChatGPT 3.5 by an average of 25.0 percentage points and ACGME examinees by 17.9 percentage points (Figure 1). ANOVA demonstrated significant differences among groups (p < 0.001). Pairwise comparisons were statistically significant for ChatGPT 3.5 versus ACGME examinees (p = 0.004), Bing AI GPT 4.0 versus ACGME examinees (p < 0.001), and ChatGPT 3.5 versus Bing AI GPT 4.0 (p < 0.001).

Figure 1. Average performance of large language models on OITE exams. Average performance of ChatGPT 3.5, Bing AI GPT 4.0, and ACGME orthopaedic residents, measured as the percentage of correct answers on OITE questions averaged over the 5-year period from 2018 to 2022. The red line extends the 62% orthopaedic resident average across the chart for comparison. Bing AI GPT 4.0 outperforms both ChatGPT 3.5 and the resident average (ANOVA, p < 0.001). ACGME, Accreditation Council for Graduate Medical Education; AI, artificial intelligence; ANOVA, analysis of variance; OITE, Orthopaedic In-Training Examination.

The best performance was by Bing AI GPT 4.0 on OITE 2020, at 83.7% correct (Table 1). When adjusted for test difficulty using ACGME national scores, ChatGPT 3.5 performance declined over the later years of the OITE. ChatGPT 3.5 scores ranged from 50.7% to 58.9%, Bing AI GPT 4.0 scores from 77.9% to 83.7%, and average orthopaedic resident scores from 60.6% to 66.0%.

| Year | ChatGPT 3.5 (%) | Bing AI GPT 4.0 (%) | ACGME national (%) |
|---|---|---|---|
| 2018 | 57.4 | 78.9 | 60.6 |
| 2019 | 54.2 | 78.9 | 60.8 |
| 2020 | 54.0 | 83.7 | 61.0 |
| 2021 | 50.7 | 77.9 | 62.0 |
| 2022 | 58.9 | 80.7 | 66.0 |
| Mean | 55.0 | 80.0 | 62.1 |

- Note: Score comparison between the performance of ChatGPT 3.5, Bing AI GPT 4.0, and average score of ACGME national examinees on the OITE over a 5-year period from 2018 to 2022. The average performance of ChatGPT 3.5 was 55.0%, Bing AI GPT 4.0 was 80.0%, and average score of ACGME national examinees according to the AAOS was 62.1%.

- Abbreviations: AAOS, American Academy of Orthopaedic Surgeons; ACGME, Accreditation Council for Graduate Medical Education; AI, artificial intelligence; OITE, Orthopaedic In-Training Examination.
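The paper does not specify how ChatGPT 3.5 scores were adjusted for test difficulty. One simple possibility, assumed here purely for illustration, is to divide each yearly model score by that year's ACGME national mean from Table 1, so that a ratio below 1 means the model scored below the average resident on that exam.

```python
chatgpt = {2018: 57.4, 2019: 54.2, 2020: 54.0, 2021: 50.7, 2022: 58.9}
acgme = {2018: 60.6, 2019: 60.8, 2020: 61.0, 2021: 62.0, 2022: 66.0}

def relative_to_residents(model: dict[int, float], residents: dict[int, float]) -> dict[int, float]:
    """Ratio of model score to the ACGME national mean for each exam year (assumed adjustment)."""
    return {year: model[year] / residents[year] for year in sorted(model)}

ratios = relative_to_residents(chatgpt, acgme)
for year, r in ratios.items():
    print(f"{year}: {r:.3f}")
```

Under this assumed measure, the ratio falls from about 0.95 in 2018 to about 0.82 in 2021 before rising to about 0.89 in 2022; other adjustments (for example, differences in percentage points) could rank the years differently.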

All answers from both AI models included a logical justification. Each response drew on information from the question itself as well as outside referenced information, which was verified by the authors of this study (Supporting Information S1). Three authors who specialise in orthopaedics independently verified that no hallucinations were present in the responses. However, whether the logical reasoning was relevant to the question, or whether it led to the correct answer, was outside the scope of this quantitative study. ChatGPT 3.5 referenced information from pretraining data limited to September 2021, whereas Bing AI referenced relevant, up-to-date Internet search results (e.g., PubMed, AAOS). Only Bing AI provided in-line hyperlink citations justifying its responses, which users could click to open the cited articles. Both LLMs were limited in their responses by tokens, the basic units in which they process and generate text. At the time of this study, ChatGPT 3.5 had a limit of 16,000 tokens, while Bing AI GPT 4.0 had about 8000 tokens available.
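Whether a long question (for example, one with a transcribed table) fits within a model's token limit can be estimated before submission. The sketch below relies on the common rule of thumb that one token corresponds to roughly four characters of English text; that ratio is an approximation rather than either model's actual tokenizer, the 500-token reserve for the answer is a hypothetical choice, and the limits passed in are simply the figures reported above.

```python
import math

CHARS_PER_TOKEN = 4  # rough heuristic for English text; real tokenizers differ

def estimate_tokens(text: str) -> int:
    """Approximate token count from character length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)

def fits_budget(prompt: str, token_limit: int, reserved_for_answer: int = 500) -> bool:
    """True if estimated prompt tokens plus a reserve for the reply stay within the limit."""
    return estimate_tokens(prompt) + reserved_for_answer <= token_limit

long_prompt = "x" * 32000  # roughly 8000 estimated tokens
print(fits_budget(long_prompt, token_limit=8000))   # limit reported for Bing AI GPT 4.0
print(fits_budget(long_prompt, token_limit=16000))  # limit reported for ChatGPT 3.5
```

For exact counts, the model vendor's own tokenizer should be used instead of the character heuristic.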

4 CONCLUSIONS

The advent of AI in the medical field heralds a transformative shift from conventional learning and assessment methodologies [5]. The findings of our study underscore the potential of AI in navigating the OITE. The noteworthy performance of Bing AI GPT 4.0 illustrates the potential of AI to augment the educational resources and tools available to residents [6-8]. In particular, AI systems that can reference sources for further research, or that allow users to verify the accuracy of generated responses, may serve as useful teaching tools in orthopaedic education as well as aids to improving generative AI. However, gaps in knowledge remain regarding the ability of LLMs to process imaging data, because before March 2023, LLMs were limited in their ability to view images and access web data independently. Future studies may show improved performance as text, imaging, and video are integrated into comprehensive LLMs.

Comparing our findings with the existing literature, the application and performance of AI, particularly ChatGPT 3.5, have been explored in various medical specialities in the months since GPT 4.0 technology emerged in March 2023 [5, 9]. For instance, ChatGPT's performance on the Plastic Surgery In-Service Examination was equivalent to that of first-year plastic surgery residents but poor compared with that of more advanced residents [5]. This aligns with our finding that AI systems, while remarkably able to navigate medical examination questions, still show limitations and inconsistencies as questions probe deeper, more esoteric subspeciality knowledge.

In another study, ChatGPT was evaluated on the Taiwanese Pharmacist Licensing Examination, where it failed to achieve a passing score [10]. This resonates with our finding that ChatGPT, despite its logical and coherent responses, was unable to consistently achieve passing scores on specialised medical exams such as the OITE. Its limitations, particularly in accessing speciality-specific training data and in interpreting and analysing visual data, underscore the areas needing further research and development in the application of AI to specialised medical fields.

On more general medical knowledge, ChatGPT 3 reached a passing level on National Board of Medical Examiners (NBME) Step 1 and Step 2 questions, scoring over 60% on the NBME-Free exams [11, 12]. These early studies highlight the ability of AI to apply general logic and informational context across complex medical texts to arrive at reasonable answers.

The implications of AI systems, particularly those capable of referencing and validating their responses through real-time data access, are profound in the context of medical education. The ability of AI to serve as a supplementary educational tool, providing logical and informational context for its answers, is a potential paradigm shift in how medical education can be approached, curated, and delivered [9]. The biggest hurdle remains prompt engineering, which is still a black box yet to be fully unravelled: prompts can be engineered to elicit the best responses from the trillion-parameter reasoning of generative AI models, and they account for much of the diversity in those responses [8].

However, the integration of AI into medical education must be navigated with caution and scrutiny, ensuring that the technology enhances, rather than undermines, the educational experience. The logical justification AI provides in its responses, while beneficial, requires critical evaluation to safeguard against misinformation and uphold the integrity of the learning process. It is crucial to acknowledge that LLMs can generate hallucinations, which are confident-sounding but incorrect responses. To mitigate this, educators should emphasise the importance of cross-referencing AI-generated information with trusted medical sources, while implementing a feedback mechanism for reporting potential hallucinations to further improve the accuracy of responses.

The implications extend beyond the immediate realm of examination preparation and into the broader spectrum of continuous medical education and practice. The ability of AI to consolidate and present information from a wide array of sources can potentially streamline the continuous learning and information update process for practicing professionals, thereby enhancing their ability to stay abreast of advancements and updates in the field by providing readily available links to new articles and educational resources.

The disparity in performance between Bing AI GPT 4.0 and ChatGPT 3.5, two AI models with distinct capabilities and functionalities, highlights the significance of web search integration and real-time data access in enhancing the accuracy and reliability of responses. Bing's ability to pull from a myriad of online resources, such as PubMed and AAOS, and integrate this information to formulate answers, especially in image-based questions, underscores a pivotal advancement in AI application in medical examinations. This is particularly crucial in the realm of orthopaedics, where visual data often play a vital role in diagnosis and treatment planning.

Bing AI GPT 4.0 demonstrated the potential to reach a passing score on the OITE. It proved to be the strongest model, drawing on web search integration to outperform on both image-based questions and overall. Because image descriptions were kept identical across models, this gap further suggests a difference in comprehension relative to ChatGPT 3.5. Incorporating cited sources allows generative AI to serve as an educational tool that consolidates sources into a cohesive output, which marks a significant milestone in information processing.

However, as this study indicates, AI systems are not yet able to consistently achieve passing scores on subspeciality medical exams. This is likely because AI models have access to less speciality-specific training data, such as the material tested on the OITE, than general data for the broader medical field. Of the AI systems tested, only Bing AI achieved a passing score on the OITE. Both AI systems also generated multiple inaccurate answers accompanied by logical explanations, which could mislead those using such systems primarily for learning. It is therefore necessary to remain sceptical of AI models and to approach AI-generated outputs cautiously by independently verifying and interpreting source information.

The difference in accuracy between ChatGPT 3.5 and Bing AI GPT 4.0 shows that AI systems are evolving rapidly as developers strengthen the synthesis, creativity, and problem-solving ability of each system. Improvements may compound as millions of data points are added for iterative growth. On the other hand, safety concerns have led to many limitations being placed on AI systems that were absent when these systems, some of which are only a few months old, were first conceived. As restrictions accumulate, AI systems are prevented from accessing sensitive information, user operability is reduced, and prompt engineering becomes more crucial to achieving satisfactory performance.

There are several limitations to this study. ChatGPT 3.5 was limited by its lack of image recognition and by pretraining data extending only to September 2021. Conversely, although the same standardised prompts were used for Bing AI, it is difficult to determine whether its superior results stemmed from better general LLM capabilities or from its ability to conduct web and metadata searches. Another limitation is that the OITE technical reports provide aggregated data from orthopaedic residents in all years of training, from PGY-1 to PGY-5; a breakdown of scores by postgraduate year would allow a more dynamic comparison. Additionally, future studies could include image-based analyses of AI performance without optimised prompt engineering across orthopaedic subspecialities, as well as linguistic analysis of the quality of each answer's explanation.

The use of generative AI models in orthopaedics is an interesting and challenging research topic with implications for both medical education and natural language processing. Bing AI scored an average of 80.0%, exceeding the 62.1% average of residents in ACGME-accredited orthopaedic programs. By shedding light on the capabilities and characteristics of AI models, we aim to provide valuable insights into their application in orthopaedic education and their potential to influence how orthopaedic surgeons learn.

Generative AI systems are clearly here to stay, and it is now up to us to decide how much to incorporate them into daily orthopaedic routines. This study offers insight into the strengths and limitations of current AI language models, allowing us to consider their potential applications for optimising orthopaedic education in the future.

AUTHOR CONTRIBUTIONS

Clark J. Chen: Conceptualization (equal); data curation (equal); formal analysis (equal); funding acquisition (equal); investigation (equal); methodology (equal); project administration (equal); resources (equal); software (equal); supervision (equal); validation (equal); visualization (equal); writing—original draft (equal); writing—review & editing (equal). Vivek K. Bilolikar: Conceptualization (equal); data curation (equal); formal analysis (equal); investigation (equal); methodology (equal); project administration (equal). Duncan VanNest: Conceptualization (equal); data curation (equal); formal analysis (equal); investigation (equal); methodology (equal). James Raphael: Project administration (equal); supervision (equal); writing—review & editing (equal). Gene Shaffer: Project administration (equal); resources (equal); supervision (equal); writing—review & editing (equal).

ACKNOWLEDGMENTS

None.

CONFLICT OF INTEREST STATEMENT

The authors declare no conflict of interest.

ETHICS STATEMENT

This article is a practice-oriented retrospective cohort description that made extensive use of secondary information sources and drew upon the professional knowledge of the co-authors and Internet resources. As such, the creation of this case study article did not involve any formal research study, nor did it involve human participation in a research study. Accordingly, this article was exempt from IRB review.

INFORMED CONSENT

Not applicable.

Open Research

DATA AVAILABILITY STATEMENT

The data that support the findings of this study are available on request from the corresponding author. The data are not publicly available due to privacy or ethical restrictions.