Insights into respiratory disease through bioinformatics

ABSTRACT

Respiratory diseases such as asthma, chronic obstructive pulmonary disease and lung cancer represent a critical area for medical research as millions of people are affected globally. The development of new strategies for treatment and/or prevention, and the identification of biomarkers for patient stratification and early detection of disease inception are essential to reducing the impact of lung diseases. The successful translation of research into clinical practice requires a detailed understanding of the underlying biology. In this regard, the advent of next-generation sequencing and mass spectrometry has led to the generation of an unprecedented amount of data spanning multiple layers of biological regulation (genome, epigenome, transcriptome, proteome, metabolome and microbiome). Dealing with this wealth of data requires sophisticated bioinformatics and statistical tools. Here, we review the basic concepts in bioinformatics and genomic data analysis and illustrate the application of these tools to further our understanding of lung diseases. We also highlight the potential for data integration of multi-omic profiles and computational drug repurposing to define disease subphenotypes and match them to targeted therapies, paving the way for personalized medicine.

INTRODUCTION

Asthma, chronic obstructive pulmonary disease (COPD) and lung cancers represent the most common non-communicable respiratory diseases. Asthma and COPD each affect over 200 million people globally, with asthma being the most common chronic disease among children,1 and COPD the fourth leading cause of death worldwide.2 While lung cancer affects comparatively fewer people (<2 million), it is the most commonly diagnosed cancer and the leading cause of cancer-related deaths.3 Together, these respiratory diseases impose an immense global health burden, costing more than €46 billion annually in Europe alone.4 The development of new strategies for the treatment or prevention of these diseases requires a comprehensive understanding of the underlying biology. In this regard, the advent of omics technologies has enabled the generation of rich data sets that span a broad range of the cellular and molecular states that underpin the pathogenesis of lung diseases. However, processing large data sets to pinpoint relevant causal pathways and therapeutic targets among the vast array of possible candidates is a challenging task; below, we highlight contributions from bioinformatics towards this goal.

TRANSCRIPTOMICS: FROM SHORT SEQUENCING READS TO BIOLOGICAL MECHANISMS

The transcriptome (the entire set of mRNA transcripts and non-coding RNA expressed at a given time) changes dynamically in response to environmental exposures and disease states, and its assessment can provide important insights into the molecular mechanisms of disease. Notably, the first distinctions between Th2-high and Th2-low asthma were based on expression levels of a panel of IL-13-induced genes.5 RNA-sequencing (RNA-Seq) is currently the method of choice for assessing the transcriptome and has several advantages over the previous gold standard, DNA microarrays (reviewed elsewhere).6 In essence, RNA (typically mRNA) is fragmented and converted to cDNA, and the short fragments are sequenced to generate tens of millions of short reads per sample. The biggest challenge faced by researchers is extracting biological meaning from these reads. Below, we provide an overview of the tools and techniques employed for this purpose.

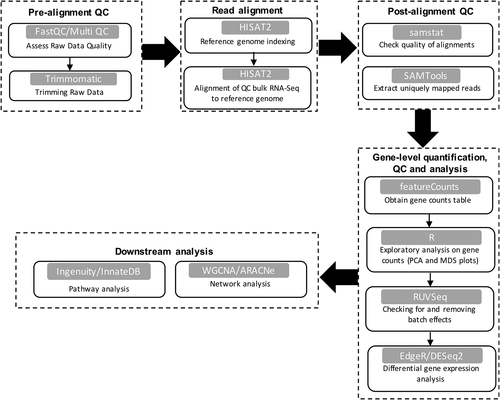

A workflow for the analysis of RNA-Seq data is illustrated in Figure 1. First, raw sequencing reads are checked for overall sequencing quality. FastQC7 has become the standard tool for this task: each sequencing file is evaluated against several quality control metrics and assigned a ‘pass’, ‘warning’ or ‘fail’ for each, and MultiQC8 is then used to aggregate the FastQC results across all samples. Where necessary, trimming tools such as Trimmomatic9 can be used to clean up the raw sequencing data prior to alignment to the reference genome, by removing adapter sequences or bases with low quality scores. These steps can improve the efficiency of the alignment step and ensure that only the best quality data are used for downstream analyses. Splice-aware read alignment tools (e.g. TopHat,10 HISAT11 and STAR12) map each sequencing read to a reference genome to determine the gene from which it originates. The percentage of mapped reads is an important quality metric and should be around 70–90% in good quality human data sets.13 Post-alignment statistics can be evaluated with SAMStat.14 Next, the number of reads that uniquely align to each gene is counted, producing a large matrix of gene counts in which each column corresponds to a sample and each row to a gene. Tools available for this step include summarizeOverlaps,15 featureCounts16 and htseq-count.17 From here, downstream analyses are typically performed in R, often through the RStudio environment.18 First, exploratory data analysis techniques such as unsupervised clustering and dimensionality reduction are applied to the gene count data to visualize how the samples group together and to identify outliers or batch effects. The most common techniques are principal component analysis (PCA) and multidimensional scaling (MDS).
Both are data reduction techniques and are applied to normalized data rather than raw gene counts, to account for technical differences (such as sequencing depth) between samples. PCA operates directly on the normalized expression values, whereas MDS is based on a measure of distance between samples derived from them. In both analyses, closely related samples are expected to cluster together. Filtering out genes with low counts prior to clustering is recommended, as such genes can be highly variable between samples and may skew the results. Furthermore, batch effects or other sources of unwanted variation can significantly impact downstream analyses; RUVseq19 can systematically model unwanted variation in the data and remove it from the analysis. In addition to revealing problems with data quality, clustering techniques can be used to identify subphenotypes. For example, Kuo et al. applied cluster analysis to transcriptomic data from >90 bronchial biopsies or epithelial brushings from patients with moderate-to-severe asthma.20 By focusing on inflammatory genes, they identified a distinct subgroup of patients characterized by high counts of submucosal eosinophils, high exhaled nitric oxide and high use of oral corticosteroids.
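
The filtering, normalization and PCA steps described above can be sketched in Python with NumPy. This is a minimal illustration rather than the edgeR or limma implementation: the 1 counts-per-million (CPM) cut-off, the requirement of two samples, the pseudocount of 0.5 and the toy count matrix are all illustrative assumptions.

```python
import numpy as np

def filter_low_counts(counts, min_cpm=1.0, min_samples=2):
    """Keep genes (rows) with >= min_cpm counts-per-million in >= min_samples samples."""
    cpm = counts / counts.sum(axis=0) * 1e6          # divide each column by its library size
    keep = (cpm >= min_cpm).sum(axis=1) >= min_samples
    return counts[keep], keep

def log_cpm(counts, pseudocount=0.5):
    """Log2 counts-per-million; the pseudocount avoids taking log(0)."""
    return np.log2((counts + pseudocount) / counts.sum(axis=0) * 1e6)

def pca_scores(expr, n_components=2):
    """Sample scores on the leading principal components of a genes x samples matrix."""
    centred = expr - expr.mean(axis=1, keepdims=True)        # centre each gene
    u, s, _ = np.linalg.svd(centred.T, full_matrices=False)  # rows are now samples
    return u[:, :n_components] * s[:n_components]

# Toy data: 20 genes x 6 samples; genes 0-4 are higher in samples 3-5
counts = np.full((20, 6), 1e5)
counts[:5, 3:] = 1e6
counts[19] = [0, 1, 0, 0, 0, 1]                  # a gene with very low counts
filtered, keep = filter_low_counts(counts)
scores = pca_scores(log_cpm(filtered))
print(keep.sum(), np.round(scores[:, 0], 2))
```

The low-count gene is removed before PCA, and samples 0–2 and 3–5 fall on opposite sides of the first principal component.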

Following initial exploratory data analysis steps, the goal of most RNA-Seq experiments is to identify differentially expressed genes. This can be performed with methods such as edgeR,21 DESeq222 or limma-voom.23 A comparison of these tools is beyond the scope of this article, but is available elsewhere.24 The typical output from a differential gene expression analysis contains a list of genes with an official gene identifier (e.g. Ensembl ID and gene symbol) alongside a test statistic, a log fold-change and a P-value (both unadjusted and adjusted for multiple comparisons). It is at this point that biological interpretation of the data begins.
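
The multiple-testing adjustment in such output is typically the Benjamini–Hochberg false discovery rate procedure. A minimal NumPy sketch follows; the four P-values are hypothetical, and the adjusted values should agree with R's `p.adjust(p, method = "BH")`.

```python
import numpy as np

def benjamini_hochberg(pvalues):
    """Benjamini-Hochberg adjusted P-values, returned in the input order."""
    p = np.asarray(pvalues, dtype=float)
    n = p.size
    order = np.argsort(p)                              # ascending P-values
    scaled = p[order] * n / np.arange(1, n + 1)        # p_(i) * n / i
    # Running minimum from the largest P-value downwards keeps adjusted values monotone
    scaled = np.minimum.accumulate(scaled[::-1])[::-1]
    adjusted = np.empty(n)
    adjusted[order] = np.minimum(scaled, 1.0)
    return adjusted

raw_p = [0.01, 0.04, 0.03, 0.20]      # hypothetical unadjusted P-values for four genes
padj = benjamini_hochberg(raw_p)
print(np.round(padj, 4))
```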

Pathways analysis provides important insights into the collective biological functions of a set of genes. Popular pathways analysis tools include DAVID,25 InnateDB,26 Enrichr27 and the Molecular Signatures Database.28, 29 Each tool has inherent biases (e.g. some tools specialize in immune pathways), and in our experience performing pathways analysis with multiple tools can provide complementary information. These analyses, which require a list of differentially expressed genes (upregulated and downregulated) as input, are straightforward to perform. Statistical significance can be assessed using a Fisher's exact test, hypergeometric test or gene-set enrichment test (see below), and P-values are adjusted for multiple testing.
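
For a single pathway, the over-representation test reduces to a one-sided hypergeometric test (equivalently, a one-sided Fisher's exact test on a 2 × 2 table). A sketch using SciPy, with hypothetical numbers: 20 000 genes in the background universe, 100 in the pathway, 500 differentially expressed and 15 overlapping.

```python
from scipy.stats import hypergeom

def ora_pvalue(n_universe, n_pathway, n_de, n_overlap):
    """P(overlap >= n_overlap) when n_de genes are drawn at random from n_universe
    genes, n_pathway of which belong to the pathway (one-sided hypergeometric test)."""
    # hypergeom.sf(k, M, n, N) = P(X > k), so pass n_overlap - 1 to get P(X >= n_overlap)
    return hypergeom.sf(n_overlap - 1, n_universe, n_pathway, n_de)

expected = 500 * 100 / 20000          # overlap expected by chance (2.5 genes)
p = ora_pvalue(20000, 100, 500, 15)
print(expected, p)
```

An overlap of 15 genes against 2.5 expected by chance yields a very small P-value, which would then be adjusted across all pathways tested.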

Whilst informative, pathways analyses cannot unveil the regulatory mechanisms that are driving a biological response or disease process. In this regard, Upstream Regulator Analysis can be performed to infer the putative molecular drivers of a set of differentially expressed genes, based on experimentally determined cause-and-effect relationships extracted from the literature.30 Two statistical measures are calculated: the overlap P-value and an activation Z-score. The overlap P-value is derived from a Fisher's exact test to determine whether there is a significant overlap between the user-identified gene list and the known downstream targets of a candidate regulator. The activation Z-score is used to infer the activation state of a predicted regulator, leveraging prior knowledge of the direction of target gene regulation (up or down) when the predicted transcriptional regulator is ‘activated’ or ‘inhibited’. The predicted regulators identified from Upstream Regulator Analysis can be experimentally validated using gene silencing techniques.31
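
The activation Z-score can be illustrated in its simplest, unweighted form: each target shared between the data and the knowledge base contributes +1 if its observed direction matches the direction expected when the regulator is activated and -1 otherwise, and the sum is divided by the square root of the number of shared targets. This is a simplified sketch (the commercial implementation may weight relationships differently), and the gene names are hypothetical.

```python
import math

def activation_z(observed, expected_if_activated):
    """Unweighted activation z-score for one candidate upstream regulator.
    Both arguments map gene -> 'up' or 'down'."""
    shared = [g for g in observed if g in expected_if_activated]
    x = [1 if observed[g] == expected_if_activated[g] else -1 for g in shared]
    return sum(x) / math.sqrt(len(x)) if x else 0.0

# Hypothetical differentially expressed genes and known targets of one regulator
observed = {"G1": "up", "G2": "up", "G3": "down", "G4": "down", "G5": "up", "G6": "up"}
known = {"G1": "up", "G2": "up", "G3": "down", "G4": "down", "G5": "down", "G7": "up"}
z = activation_z(observed, known)     # 4 consistent and 1 inconsistent shared target
print(round(z, 3))
```

A positive score suggests the regulator is activated, a negative score that it is inhibited; the overlap P-value is computed separately with the Fisher's exact test described above.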

A limitation of Upstream Regulator and pathways analysis is that they do not provide a holistic, systems-level view of the data. Notably, biological systems are governed by a universal set of organizing principles, or common design elements, that are also found in other complex interconnected systems such as the stock market and the world-wide-web.32 Network analysis is a powerful tool to study the organization and function of biological systems. The underlying concept is that a biological system can be represented as a network graph of interacting genes or gene products (mRNA transcripts and proteins), which reveals fundamental topological properties of the system. Gene networks have a modular architecture and a power-law degree distribution. Modularity describes the organization of the network into smaller subnetworks that are functionally and/or topologically relatively isolated; the power-law distribution means that most genes have few interactions, whereas a few genes have many and behave as hubs that dominate the network structure and are more essential to network integrity. A popular algorithm for network analysis of gene expression data is weighted gene co-expression network analysis (WGCNA).33 This algorithm infers network structure from experimental data based on the correlation patterns between all gene pairs across the samples. Prior to network analysis, gene counts are typically transformed using the variance stabilizing transformation from the DESeq2 package. Modules of highly co-expressed genes are then identified by hierarchical clustering, and the resulting modules can be interrogated using the same pathways analysis tools noted above. Genes can be ranked according to properties such as intramodular connectivity (the sum of a gene's connection strengths with all other genes in the same module) to identify ‘hub’ genes and prioritize them for follow-up mechanistic studies.
WGCNA of sputum transcriptomes was recently used to investigate why only a subset of house dust mite-sensitized individuals suffer from asthma despite ubiquitous environmental exposure.34 The data showed that Th2-associated inflammation was upregulated in house dust mite allergic subjects regardless of the presence or absence of asthma. However, in the subjects with asthma, Th2-related pathways formed interlinked networks with airway epithelial pathways (EGFR, ERBB2 and CDH1), suggesting that pathological Th2 inflammation involves rewiring of Th2 gene networks with epithelial repair/remodelling networks.
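
The core WGCNA quantities described above can be sketched in NumPy: an unsigned soft-thresholded adjacency a_ij = |cor(x_i, x_j)|^β and the intramodular connectivity of each gene. This is an illustration only: module assignments are supplied by hand rather than derived by hierarchical clustering, and β = 6, the module labels and the toy profiles are assumptions.

```python
import numpy as np

def soft_threshold_adjacency(expr, beta=6):
    """Unsigned adjacency a_ij = |cor(x_i, x_j)|**beta for a genes x samples matrix."""
    adj = np.abs(np.corrcoef(expr)) ** beta
    np.fill_diagonal(adj, 0.0)                 # ignore self-connections
    return adj

def intramodular_connectivity(adj, modules):
    """For each gene, the sum of its adjacencies to the other genes in its module."""
    modules = np.asarray(modules)
    k_in = np.zeros(len(modules))
    for m in np.unique(modules):
        idx = np.flatnonzero(modules == m)
        k_in[idx] = adj[np.ix_(idx, idx)].sum(axis=1)
    return k_in

# Toy profiles (genes x samples): gene 0 lies "between" genes 1 and 2
expr = np.array([
    [1, 2, 3, 4, 5, 6],
    [2, 1, 2, 5, 5, 6],
    [0, 3, 4, 3, 5, 6],
    [6, 5, 4, 3, 2, 1],
    [1, 3, 2, 4, 6, 5],
], dtype=float)
modules = ["blue", "blue", "blue", "turquoise", "turquoise"]
k_in = intramodular_connectivity(soft_threshold_adjacency(expr), modules)
print(np.round(k_in, 3))
```

Gene 0 correlates more strongly with genes 1 and 2 than they do with each other, so it has the highest intramodular connectivity in its module and would be flagged as the hub.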

An alternative approach to WGCNA is to build regulatory networks between transcription factors and target genes. The Algorithm for the Reconstruction of Accurate Cellular Networks (ARACNe) uses mutual information to infer statistical dependence between transcription factors and target genes across a gene expression data set.35 The Passing Attributes between Networks for Data Assimilation (PANDA) algorithm starts with a pre-specified network model that is based on transcription factor motif data, and employs message passing theory to integrate information derived from multiple layers (sequence motif data, gene expression profiles and protein–protein interactions) resulting in more accurate network models.36 Both algorithms have extended functionality to extract sample-specific information on gene network patterns, and identify network driver genes that determine biological state transitions.35, 37-39
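
The two ideas behind ARACNe, mutual information between expression profiles and pruning of indirect edges with the data processing inequality (DPI), can be sketched as follows. This is a simplified illustration: it uses a plug-in estimator on equal-width bins and omits ARACNe's mutual information threshold, DPI tolerance and bootstrapping; the bin count, gene names and toy data are assumptions.

```python
import itertools
import numpy as np

def mutual_information(x, y, bins=3):
    """Plug-in mutual information (in nats) from equal-width binned profiles."""
    joint, _, _ = np.histogram2d(x, y, bins=bins)
    pxy = joint / joint.sum()
    px = pxy.sum(axis=1, keepdims=True)        # marginal of x (column vector)
    py = pxy.sum(axis=0, keepdims=True)        # marginal of y (row vector)
    nz = pxy > 0
    return float((pxy[nz] * np.log(pxy[nz] / (px @ py)[nz])).sum())

def dpi_network(expr, genes, bins=3):
    """Pairwise MI edges, then DPI: in every gene triplet drop the weakest edge,
    interpreting it as an indirect interaction."""
    mi = {(i, j): mutual_information(expr[i], expr[j], bins)
          for i, j in itertools.combinations(range(len(genes)), 2)}
    removed = set()
    for i, j, k in itertools.combinations(range(len(genes)), 3):
        removed.add(min([(i, j), (i, k), (j, k)], key=mi.get))
    return {(genes[i], genes[j]): v for (i, j), v in mi.items() if (i, j) not in removed}

x = np.array([0, 0, 0, 1, 1, 1, 2, 2, 2], dtype=float)
y = np.array([0, 1, 2, 0, 1, 2, 0, 1, 2], dtype=float)    # independent of x
net = dpi_network(np.vstack([x, x, y]), ["TF", "TARGET", "OTHER"])
print(net)
```

The near-zero TARGET-OTHER edge survives only because this sketch omits ARACNe's mutual information threshold, which would normally remove it.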

GENOME-WIDE ASSOCIATION STUDIES

Genome-wide association studies (GWAS) identify associations between genetic markers and phenotypic traits by assessing thousands of individuals in either case–control40-43 or quantitative40, 44 study designs. GWAS leverage the discovery of millions of single nucleotide polymorphisms (SNP) across the human genome, and the fact that a subset of these SNP captures common genetic variation through linkage disequilibrium (LD; the non-random association of alleles at nearby loci, whereby correlated SNP are inherited together more often than expected by chance).40, 41, 44-46 This has resulted in a deluge of data, requiring the development and application of bioinformatics tools for pre-processing and analysis. Here, we describe a general framework for the analysis of data from a typical GWAS, and discuss how the application of bioinformatics and multi-omics integrative approaches has led to advances in our understanding of lung diseases.
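
As a simple illustration of LD, the squared correlation (r²) between the allele dosages (0, 1 or 2 copies) of two SNP across individuals is often used as an approximation when haplotypes are not phased; an r² close to 1 means that one SNP is a good proxy for the other. A sketch with hypothetical genotypes:

```python
import numpy as np

def dosage_r2(snp_a, snp_b):
    """Squared Pearson correlation between the allele dosages of two SNP."""
    r = np.corrcoef(snp_a, snp_b)[0, 1]
    return float(r ** 2)

snp1 = [0, 1, 2, 1, 0, 2]
snp2 = [2, 1, 0, 1, 2, 0]     # always carries the opposite allele: perfect LD
snp3 = [0, 1, 2, 0, 1, 2]
snp4 = [0, 0, 1, 1, 2, 2]     # only partially correlated with snp3
print(dosage_r2(snp1, snp2), dosage_r2(snp3, snp4))
```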

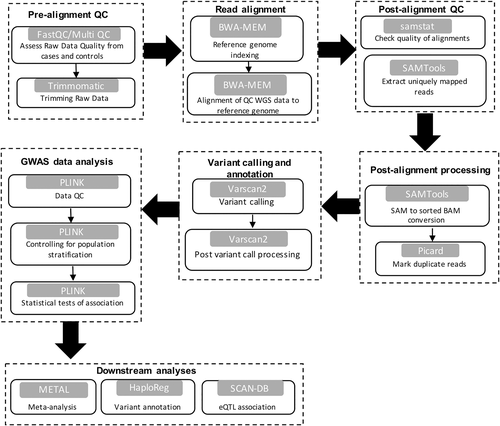

There are three major steps involved in the analysis of data from a typical GWAS (Fig. 2). In the first step, whole-genome sequencing (WGS) data undergo read quality assessment, trimming of low-quality bases, read alignment and post-alignment quality checks, as described in the transcriptomics section above. However, read alignment is performed with tools such as SOAP47 and BWA,48 which are designed for DNA rather than RNA sequencing data. Several additional post-alignment processing steps are also carried out. For instance, PCR duplicates, which arise during DNA amplification,49 are removed using Picard tools (http://broadinstitute.github.io/picard). SAMtools50 may also be used to remove reads whose mapping quality falls below a given threshold, or reads that are not mapped in proper pairs.
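
The read-level filtering described above relies on the FLAG and MAPQ fields of the SAM format. A minimal pure-Python sketch of the logic follows, assuming a MAPQ cut-off of 20 (an illustrative choice) and using hypothetical read records; in practice, roughly the same filter is applied with `samtools view -q 20 -f 2 -F 0x504` after duplicates have been marked with Picard.

```python
PROPER_PAIR = 0x2     # SAM FLAG bit: read mapped in a proper pair
UNMAPPED = 0x4        # read unmapped
SECONDARY = 0x100     # secondary alignment
DUPLICATE = 0x400     # PCR or optical duplicate (e.g. marked by Picard)

def keep_read(sam_line, min_mapq=20):
    """True for a primary, non-duplicate, properly paired read with MAPQ >= min_mapq."""
    fields = sam_line.rstrip("\n").split("\t")
    flag, mapq = int(fields[1]), int(fields[4])    # SAM columns 2 (FLAG) and 5 (MAPQ)
    if flag & (UNMAPPED | SECONDARY | DUPLICATE):
        return False
    return bool(flag & PROPER_PAIR) and mapq >= min_mapq

reads = [
    "r1\t99\tchr1\t100\t60\t50M\t=\t300\t250\t*\t*",     # proper pair, MAPQ 60
    "r2\t1123\tchr1\t100\t60\t50M\t=\t300\t250\t*\t*",   # same as r1 plus duplicate bit
    "r3\t99\tchr1\t100\t5\t50M\t=\t300\t250\t*\t*",      # low mapping quality
    "r4\t97\tchr1\t100\t60\t50M\t=\t300\t250\t*\t*",     # paired but not a proper pair
]
kept = [r.split("\t")[0] for r in reads if keep_read(r)]
print(kept)
```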

The second step consists of variant calling on the output from the first step, to identify all sites where an alternate allele is present in either the case or control samples.51 Popular tools for this include VarScan2,52 GATK,51 FreeBayes53 and SAMtools/BCFtools.50 The initial variant calling step is likely to report more SNP than are truly present, because variant callers are deliberately run with high sensitivity at this stage to minimize false negatives. Variant filtering is therefore carried out afterwards to remove false positives, either by the variant caller itself or, where required, with dedicated tools such as vcflib (https://github.com/vcflib/vcflib).
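
Post-calling hard filtering can be illustrated on the tab-separated VCF format, in which column 6 holds QUAL and column 8 the INFO field (including DP, the read depth). The thresholds below (QUAL ≥ 30, DP ≥ 10) and the records are illustrative assumptions, not recommendations for any particular variant caller.

```python
def passes_filter(vcf_line, min_qual=30.0, min_depth=10):
    """Hard filter for one VCF data line on QUAL and INFO/DP."""
    fields = vcf_line.rstrip("\n").split("\t")
    qual = fields[5]                             # column 6: QUAL ('.' means missing)
    if qual == ".":
        return False
    info = {}
    for item in fields[7].split(";"):            # column 8: INFO, e.g. DP=25;AF=0.5
        key, _, value = item.partition("=")
        info[key] = value
    depth = int(info.get("DP") or 0)
    return float(qual) >= min_qual and depth >= min_depth

records = [
    "chr1\t12345\t.\tA\tG\t50.2\t.\tDP=25;AF=0.5",
    "chr1\t22345\t.\tC\tT\t12.0\t.\tDP=25;AF=0.5",   # low QUAL
    "chr1\t32345\t.\tG\tA\t50.0\t.\tDP=4;AF=1.0",    # low depth
]
print([passes_filter(r) for r in records])
```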

In the third step, several quality control checks40, 44-46 are performed on the filtered variants prior to GWAS statistical analyses, as described in Table 1. Most of these steps (1–4, 7 and 8) can be performed with the popular software PLINK.56 Data imputation (i.e. statistical inference of unobserved genotypes), on the other hand, is performed with tools such as IMPUTE2,57 MaCH58 and BEAGLE.59 After quality checks, association analysis is performed to find relationships between genotypes and phenotypes. This can be achieved via single-locus analysis,40, 44 whereby individual SNP are tested sequentially under the null hypothesis of no association. Methods available for this purpose include logistic regression, contingency tables (chi-square test and Fisher's exact test) and mixed linear models.40, 44, 60, 61 Given the large number of SNP being tested, the results have to be corrected for multiple hypothesis testing (Table 2).40, 44 However, these stringent corrective measures often lead to the exclusion of important loci. Multi-locus analyses may therefore be preferred, as they consider the joint effects of multiple markers and thereby reduce the multiple-testing burden.40, 44, 62
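
For a single SNP in a case–control design, the contingency-table approach can be sketched as an allelic chi-square test: genotype counts are converted to allele counts and compared between cases and controls. The counts below are hypothetical; in practice, logistic regression is often preferred because it allows covariates (for example, ancestry principal components) to be included.

```python
from scipy.stats import chi2_contingency

def allele_counts(genotype_counts):
    """(AA, Aa, aa) genotype counts -> [A allele count, a allele count]."""
    n_aa, n_ab, n_bb = genotype_counts
    return [2 * n_aa + n_ab, 2 * n_bb + n_ab]

def allelic_test(cases, controls):
    """Allelic chi-square test (1 df, no continuity correction) for one SNP."""
    table = [allele_counts(cases), allele_counts(controls)]
    chi2, p, _, _ = chi2_contingency(table, correction=False)
    return chi2, p

chi2_stat, p_value = allelic_test(cases=(100, 200, 100), controls=(200, 150, 50))
print(round(chi2_stat, 2), p_value)
```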

| Step | Explanation |
|---|---|
| (1) SNP missing rate | The proportion of subjects in a population with missing genotype information for a specific SNP. SNP with a high missing rate are more likely to introduce bias in downstream analyses and should be removed |
| (2) Subject missing rate | The proportion of SNP with missing genotypes for a particular subject. A high missing rate at the individual level could indicate poor DNA quality or technical issues during library preparation or sequencing |
| (3) Sex discrepancy | The sex recorded for each individual in the study is checked against the sex inferred from the genotype data. This can only be done if SNP on the X and Y chromosomes have been surveyed. Any inconsistency points to a probable sample mix-up |
| (4) MAF | Only SNP above an MAF (frequency of the less common allele) threshold are included. The threshold depends on the population under study and its size; for example, larger studies can use lower MAF thresholds. Association tests for SNP with low MAF have insufficient power, and such SNP may also indicate genotyping errors, so they should be discarded |
| (5) HWE | The HWE law states that, in the absence of selection, mutation or migration, the allele and genotype frequencies in a very large, randomly mating population remain constant from generation to generation.46 SNP that deviate from HWE should be removed, as their observed genotype frequencies do not match those expected; such deviations can arise from genotyping errors. However, deviation from HWE in cases (compared with controls) can signify true association with disease risk |
| (6) Data imputation | This process infers missing or untyped genotypes in a SNP data set. It is particularly useful for meta-analyses of GWAS that used different genotyping platforms, each with a different set of markers, so that some markers are present in some GWAS but absent in others. Genotype imputation uses LD information from reference panels such as HapMap54 and the 1000 Genomes project55 to estimate the missing genotypes |
| (7) Relatedness | This step determines how closely two individuals are related at the genetic level, as the inclusion of related subjects could lead to incorrect estimation of the standard errors of SNP effect sizes. A standard GWAS assumes that none of the individuals are related unless family data are being analysed |
| (8) Population stratification | GWAS involving subjects of different ancestries are subject to population stratification. Differences in allele frequencies between subpopulations can produce false-positive associations or mask true associations. To correct for population stratification, the ancestry of each subject is verified using dedicated software or approaches that compare the allele frequencies across their genome with those of the HapMap ethnic groups, and subjects whose genetic profiles do not match the target populations are excluded. Principal component analysis and multidimensional scaling plots of the genetic data can also be generated to identify outliers |

- HWE, Hardy–Weinberg equilibrium; GWAS, genome-wide association study; LD, linkage disequilibrium; MAF, minor allele frequency; QC, quality control; SNP, single nucleotide polymorphism.
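
Several of the per-SNP checks in Table 1 (missing rate, MAF and HWE) can be computed directly from genotype calls. The sketch below uses a 1-df chi-square HWE test for simplicity, whereas tools such as PLINK use an exact test; the genotype data are hypothetical.

```python
from scipy.stats import chi2

def snp_qc(genotypes):
    """Missing rate, MAF and HWE chi-square P-value for one SNP.
    genotypes: per-subject calls coded 0/1/2 (copies of the alternate allele), None if missing."""
    called = [g for g in genotypes if g is not None]
    missing_rate = 1 - len(called) / len(genotypes)
    n = len(called)
    observed = [called.count(k) for k in (0, 1, 2)]
    p = (observed[1] + 2 * observed[2]) / (2 * n)        # alternate-allele frequency
    maf = min(p, 1 - p)
    expected = [n * (1 - p) ** 2, 2 * n * p * (1 - p), n * p ** 2]
    stat = sum((o - e) ** 2 / e for o, e in zip(observed, expected) if e > 0)
    return missing_rate, maf, float(chi2.sf(stat, df=1))

in_hwe = [0] * 36 + [1] * 48 + [2] * 16 + [None] * 2     # genotype counts match HWE
no_hets = [0] * 50 + [2] * 50                            # no heterozygotes: strong deviation
print(snp_qc(in_hwe), snp_qc(no_hets))
```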

| Test | Comment |
|---|---|
| (1) Bonferroni correction | The significance threshold is divided by the number of tests. This method assumes that each SNP association test in a GWAS is independent of the others, which is not necessarily true, as SNP in LD are correlated. It can therefore be overly stringent and lead to false negatives |
| (2) FDR | This approach controls the expected proportion of false positives among the results declared significant, rather than the probability of any false positive. The FDR method is less stringent than the Bonferroni correction |
| (3) Permutation testing | The genotype data are kept fixed while the phenotype (case/control) labels are randomly shuffled n times, and the association tests are repeated on each permuted data set. The resulting empirical null distribution is used to establish a significance threshold. This is the most computationally intensive of the three methods, but because it preserves the correlation between SNP it gives the most accurate threshold |

- FDR, false discovery rate; GWAS, genome-wide association study; LD, linkage disequilibrium; SNP, single nucleotide polymorphism.
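
The Bonferroni and permutation thresholds in Table 2 can be compared on simulated data. In this sketch the association statistic is the absolute correlation between allele dosage and case/control status (chosen for simplicity), genotypes are kept fixed while labels are shuffled, and the simulated data, seeds and number of permutations are assumptions; it also assumes every SNP is polymorphic, as after MAF filtering. Dividing 0.05 by one million tests gives the widely used genome-wide threshold of 5 × 10⁻⁸.

```python
import numpy as np

def bonferroni_threshold(alpha, n_tests):
    """Per-test P-value threshold controlling the family-wise error rate at alpha."""
    return alpha / n_tests

def permutation_threshold(genotypes, phenotype, n_perm=1000, alpha=0.05, seed=0):
    """Max-statistic permutation threshold on |correlation| between each SNP and the
    phenotype. genotypes: subjects x SNPs dosages (kept fixed); phenotype: 0/1 labels."""
    rng = np.random.default_rng(seed)
    z = (genotypes - genotypes.mean(axis=0)) / genotypes.std(axis=0)

    def abs_corr(y):
        yz = (y - y.mean()) / y.std()
        return np.abs(z.T @ yz) / len(y)            # |Pearson r| for every SNP

    observed = abs_corr(phenotype)
    null_max = [abs_corr(rng.permutation(phenotype)).max() for _ in range(n_perm)]
    return observed, float(np.quantile(null_max, 1 - alpha))

rng = np.random.default_rng(1)
geno = rng.integers(0, 3, size=(200, 50)).astype(float)
pheno = (geno[:, 0] >= 1).astype(float)             # toy phenotype driven by SNP 0
observed, threshold = permutation_threshold(geno, pheno, n_perm=200)
print(bonferroni_threshold(0.05, 1_000_000), threshold, np.flatnonzero(observed > threshold))
```

Because the maximum statistic is taken across all SNP in each permutation, the resulting threshold automatically reflects the correlation structure among the markers.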

The main limitation of GWAS is a lack of statistical power due to the modest effect sizes of the variants identified.40, 44, 45 To overcome this limitation, multiple studies can be combined in a process called meta-analysis. By increasing sample size and surveying more variants throughout the genome, meta-analyses increase power to detect association signals and reduce false positives.40, 44, 45 METAL,63 which is commonly used for this purpose, pools evidence for association from individual GWAS through statistical approaches based on P-values, Z-scores, effect size estimates and standard errors. Examples of how the increased statistical power of meta-analyses has revealed novel information in the context of asthma are well documented.60, 61, 64-66 For instance, Demenais et al.60 used GWAS data from ~140 000 individuals of various ancestries and identified more than 800 asthma-related SNP at 18 loci, 5 of which had not previously been associated with asthma. The authors also observed significant overlap between these SNP and those associated with autoimmune, inflammatory and allergic diseases as well as lung cancer, supporting a pleiotropic effect and shared genetic susceptibility across these diseases.
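
The inverse-variance weighted fixed-effect approach (one of the schemes METAL offers, alongside a sample-size weighted Z-score scheme) combines per-study effect sizes using weights of 1/SE². A sketch with hypothetical estimates from two studies:

```python
import math
from scipy.stats import norm

def ivw_meta(betas, ses):
    """Fixed-effect inverse-variance weighted meta-analysis for one SNP."""
    weights = [1 / se ** 2 for se in ses]
    beta = sum(w * b for w, b in zip(weights, betas)) / sum(weights)
    se = math.sqrt(1 / sum(weights))
    z = beta / se
    return beta, se, z, 2 * norm.sf(abs(z))        # two-sided P-value

# Hypothetical log odds ratios and standard errors for one SNP in two GWAS
beta, se, z, p = ivw_meta(betas=[0.10, 0.14], ses=[0.05, 0.05])
print(round(beta, 3), round(se, 4), round(z, 2), p)
```

The pooled Z-score (about 3.39) exceeds that of either study alone (2.0 and 2.8), illustrating the gain in power from combining studies.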

A key challenge of interpreting GWAS is that many candidate variants are not replicated across studies, reflecting the complexity of these diseases and the marked heterogeneity observed among asthma and COPD endotypes. In addition, determining the functional relevance of GWAS hits remains a challenge, as most risk variants are located within noncoding regions of the genome. Thus, it is becoming clear that integration of GWAS data with additional biological information is necessary to elucidate the intervening biology that links genetic variation with phenotypic traits, as illustrated in the study by Demenais et al. The authors leveraged reference epigenome data sets to show that the asthma-associated loci were enriched in enhancer marks found in cells of the immune system. Similarly, Ferreira et al.61 searched for shared susceptibility genes across asthma, allergic rhinitis and eczema. They identified 136 disease risk variants at 99 loci across 13 individual GWAS. By comparing these loci with cell-type-specific regulatory annotations contained within the ENCODE database, the authors showed that within blood, the strongest SNP enrichment occurred within T-helper cell subsets, supporting the well-established role of these cells in allergic disease.

COMPUTATIONAL DRUG REPURPOSING

Bringing a new drug to market is time-consuming (12–15 years) and expensive (>$1 billion), and many drugs with good safety profiles fail due to problems with efficacy.67 These drugs may nonetheless be useful for the treatment of alternative indications. Through online data repositories such as the Gene Expression Omnibus (GEO; https://www.ncbi.nlm.nih.gov/geo/), bioinformaticians can access data from millions of samples, including many derived from patients with lung diseases. In parallel, large-scale efforts such as the Library of Integrated Network-Based Cellular Signatures (LINCS) are underway to systematically profile the responses of multiple cell lines and primary cells to thousands of genetic (gene knockout and overexpression) and small molecule perturbations.68 The LINCS profiles can be interrogated to identify drug compounds or combinations that are predicted to reverse disease-associated expression signatures or mimic therapeutic responses. The LINCS data can be accessed via a web-based search engine (http://amp.pharm.mssm.edu/L1000CDS2/#/index),69 which researchers can query by submitting lists of upregulated and downregulated genes.
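
The idea of searching for compounds that reverse a disease signature can be illustrated by scoring each drug's expression signature against the disease signature with cosine similarity, where strongly negative values indicate reversal. This is a simplified sketch in the spirit of signature-search engines, not the L1000CDS2 algorithm itself; the drug names and fold-changes are hypothetical.

```python
import numpy as np

def signature_similarity(disease_lfc, drug_lfc):
    """Cosine similarity between two log fold-change vectors over the same genes."""
    d = np.asarray(disease_lfc, dtype=float)
    r = np.asarray(drug_lfc, dtype=float)
    return float(d @ r / (np.linalg.norm(d) * np.linalg.norm(r)))

disease = [2.0, 1.5, -1.0, -2.0]              # hypothetical disease signature (4 genes)
drugs = {
    "drug_A": [-1.8, -1.2, 0.9, 1.7],         # opposes the disease pattern
    "drug_B": [2.0, 1.0, -1.0, -1.5],         # mimics it
    "drug_C": [0.1, -0.2, 0.0, 0.1],          # weak, unrelated response
}
# Most negative similarity first: best candidates for reversing the disease signature
ranked = sorted(drugs, key=lambda name: signature_similarity(disease, drugs[name]))
print(ranked)
```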

Network analysis can be used in combination with computational drug repurposing to identify compounds that perturb gene network patterns. This approach has been employed to find repurposed drugs that enhance cancer immunotherapy.70 Notably, the development of drugs that block immune checkpoint pathways (CTLA4, PD-1 and PD-L1) was a major advance in the treatment of lung cancer; however, only around one-third of patients respond.71 Using a mouse model of mesothelioma characterized by a dichotomous response to anti-CTLA4 therapy, gene expression profiles were obtained from whole tumours of responding and non-responding mice. WGCNA was performed to identify modules associated with treatment response, and the module response patterns were compared with drug-induced perturbation signatures from the connectivity map (cMap) database72 to identify compounds predicted to reinforce the therapeutic response. This analysis was based on gene set enrichment analysis (GSEA) methods, which use Kolmogorov–Smirnov-like statistics to determine whether a set of genes is overrepresented at the extreme tails of a ranked list of differentially expressed genes.28, 72 It unveiled all-trans retinoic acid as a candidate predicted to enhance the response rate to immune checkpoint blockade therapy, and this prediction was confirmed in experimental validation studies.
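
The Kolmogorov–Smirnov-like statistic at the heart of GSEA can be sketched in its classic unweighted form: walk down the ranked gene list, stepping up at members of the gene set and down otherwise, and report the maximum deviation of the running sum from zero. Current GSEA implementations weight the steps by each gene's statistic and assess significance by permutation, both of which this sketch omits; the gene names are placeholders.

```python
def enrichment_score(ranked_genes, gene_set):
    """Unweighted running-sum enrichment score of gene_set in a ranked gene list."""
    hits = [g in gene_set for g in ranked_genes]
    n_hit = sum(hits)
    n_miss = len(ranked_genes) - n_hit
    running, es = 0.0, 0.0
    for is_hit in hits:
        running += 1 / n_hit if is_hit else -1 / n_miss
        if abs(running) > abs(es):
            es = running                     # keep the largest deviation, with its sign
    return es

ranked = ["g1", "g2", "g3", "g4", "g5", "g6"]    # e.g. sorted by fold-change, highest first
print(enrichment_score(ranked, {"g1", "g2"}), enrichment_score(ranked, {"g5", "g6"}))
```

A gene set concentrated at the top of the list scores +1 and one concentrated at the bottom scores -1, corresponding to the two extreme tails mentioned above.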

Transcription factors play a crucial role in driving the molecular states that underpin disease; however, these proteins are often considered to be undruggable.73 An exception relevant to respiratory disease was the development of a DNAzyme that targets the transcription factor GATA3.74, 75 Using bioinformatics, Shen et al. have developed a systematic approach to prioritize candidate inhibitors of transcriptional regulators.76 The approach relies on an algorithm called VIPER (virtual inference of protein activity by enriched regulon analysis), which estimates the activity of transcriptional regulators in an unbiased way based on the expression profiles of their inferred target genes. Compounds that modulate the activity of transcriptional regulators are identified by running VIPER on the drug perturbation profiles in the cMap or LINCS databases. VIPER requires a network model that connects transcriptional regulators to their target genes; these networks are constructed from multiple sources, including ARACNe analysis of RNA-Seq profiles, systematic studies of transcription factor binding to regulatory regions of target genes, and gene knockdown-induced expression profiles from the GEO database. As proof of concept, several candidate inhibitors of MYC and STAT3 were identified and experimentally validated. This approach represents a significant advance towards targeting network driver genes.
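
The principle of inferring a regulator's activity from the expression of its targets, rather than from its own mRNA level, can be illustrated with a deliberately simplified score: the signed sum of target z-scores divided by the square root of the number of targets (a Stouffer-style combination). This is not VIPER's analytic rank-based enrichment algorithm, and the regulon, its modes of regulation and the drug signature are all hypothetical.

```python
import math

def regulator_activity(signature_z, regulon):
    """Simplified activity score: sum of mode * z over targets / sqrt(n_targets).
    regulon maps target -> +1 (activated by the regulator) or -1 (repressed)."""
    targets = [t for t in regulon if t in signature_z]
    if not targets:
        return 0.0
    return sum(regulon[t] * signature_z[t] for t in targets) / math.sqrt(len(targets))

regulon = {"T1": 1, "T2": 1, "T3": -1, "T4": -1}                          # hypothetical regulon
drug_signature = {"T1": -2.0, "T2": -1.5, "T3": 1.8, "T4": 2.2, "X": 0.3}  # target z-scores
print(regulator_activity(drug_signature, regulon))   # negative: candidate inhibitor
```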

MULTI-OMIC DATA INTEGRATION

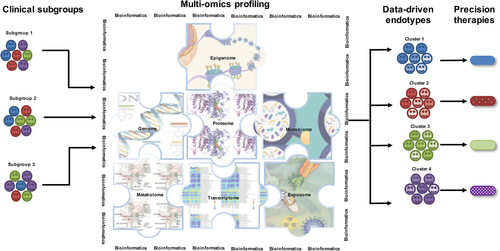

Biological systems are governed by multiple layers of molecular regulation (Fig. 3).77 A complete understanding of disease will therefore require an integrated model that describes the organization and behaviour of the whole system. The integration of multi-omic data is a computationally challenging task and an ongoing area of method development.78 One major challenge is the large number of variables that are measured in comparison to the small number of samples. Another challenge is variation in the scale, complexity and the correlation structure between data sets. For example, patterns of methylation and mRNA expression in the same set of genes can be inversely or positively correlated, or independent,79 and mRNA and protein expression levels are often not strongly correlated.80 Similarity Network Fusion proposes a unique solution to these problems.81 The approach entails constructing networks of patients instead of molecular features to integrate multi-omic profiles. A subject-to-subject similarity matrix is constructed for each type of omic data, and then message passing theory is employed to fuse the networks into a single similarity matrix. Because the number of predictors depends on the number of subjects, rather than molecular features, the method is well suited to integrate highly heterogeneous omic data sets. In addition, the unsupervised nature of the analysis is well suited to the discovery of novel molecular subphenotypes.
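
A compact sketch of the Similarity Network Fusion idea in NumPy follows: one patient-by-patient affinity matrix per omic layer, a full normalized kernel P and a sparse k-nearest-neighbour kernel S per layer, and iterative cross-diffusion in which each layer's P is updated as S_v × (mean of the other layers' P) × S_vᵀ. It assumes at least two layers and simplifies the original method by using a Gaussian kernel with a fixed bandwidth (σ) instead of SNF's locally scaled kernel; k, σ, the iteration count and the toy data are assumptions.

```python
import numpy as np

def affinity(x, sigma):
    """Gaussian affinity between patients (rows of x) from squared Euclidean distances."""
    d2 = ((x[:, None, :] - x[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-d2 / (2 * sigma ** 2))

def full_kernel(w):
    """Scale off-diagonal entries so each row sums to 1/2, and set the diagonal to 1/2."""
    p = w.copy()
    np.fill_diagonal(p, 0.0)
    p = p / (2 * p.sum(axis=1, keepdims=True))
    np.fill_diagonal(p, 0.5)
    return p

def knn_kernel(w, k):
    """Keep each patient's k most similar neighbours, row-normalized to sum to 1."""
    s = np.zeros_like(w)
    for i in range(len(w)):
        row = w[i].copy()
        row[i] = -np.inf                                 # exclude the patient itself
        nn = np.argsort(row)[-k:]
        s[i, nn] = w[i, nn] / w[i, nn].sum()
    return s

def snf(views, k=2, sigma=1.0, n_iter=20):
    ws = [affinity(np.asarray(v, dtype=float), sigma) for v in views]
    ps = [full_kernel(w) for w in ws]
    ss = [knn_kernel(w, k) for w in ws]
    for _ in range(n_iter):
        new_ps = []
        for v in range(len(views)):
            others = np.mean([ps[u] for u in range(len(views)) if u != v], axis=0)
            p = full_kernel(ss[v] @ others @ ss[v].T)    # diffuse through local neighbours
            new_ps.append((p + p.T) / 2)                 # keep the matrix symmetric
        ps = new_ps
    return np.mean(ps, axis=0)                          # fused patient similarity matrix

# Six hypothetical patients in two groups (0-2 and 3-5), measured on two omic layers
mrna = [[0, 0], [0.1, 0], [0, 0.1], [3, 3], [3.1, 3], [3, 3.1]]
protein = [[1, 0, 0], [1, 0.1, 0], [0.9, 0, 0], [0, 2, 2], [0, 2.1, 2], [0.1, 2, 2]]
fused = snf([mrna, protein])
print(np.round(fused, 3))
```

The fused matrix is still patient-by-patient, so its size depends only on the number of subjects, and it can be passed to spectral or hierarchical clustering to define subgroups.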

Li et al. employed Similarity Network Fusion to integrate multi-omic profiles from COPD patients, healthy non-smokers and smokers with normal lung function.82 They combined nine blocks of omic data across multiple molecular levels (mRNA, miRNA, proteins and metabolites) and anatomical locations (airway epithelium, lung resident immune cells, airway exudates, exosomes and serum). They found that the mean accuracy of subgroup prediction was 0.28 when each omic data block was analysed in isolation. However, combining data from multiple omic platforms increased the mean accuracy of prediction to 0.9. Moreover, the relationship between classification accuracy and sample size was also evaluated, and they found that combining data from at least five omic blocks could classify COPD patients with 100% accuracy, even with group sizes as small as n = 6. These data highlight the utility of Similarity Network Fusion-based data integration for accurate classification of patient subgroups with small numbers of patients, even in the presence of the confounding effects of cigarette smoking.

Integration of multi-omic profiles can provide insights into the vast connections between genes and environmental factors that impact on disease risk. In a landmark study, Price et al. followed 108 ‘healthy’ individuals for 9 months and collected biological samples every 3 months.83 Multi-omic profiles were generated, including WGS, gut microbiome, clinical diagnostic tests, proteins and metabolites. The genome sequencing data were summarized into polygenic risk scores for 127 traits. These risk scores provide a single variable that estimates genome-wide risk for a given trait by summing the number of risk alleles for each individual, weighted by effect size estimates from large GWAS.84 An inter-omic correlation network was then constructed to identify pairwise relationships between the omic data layers. The resulting network consisted of 766 nodes and 3470 edges. For example, α-diversity (species richness) of the microbiome was positively correlated with height and β-nerve growth factor levels, and negatively correlated with CSF-1, IL-8 and FLT3 ligand. The clinical diagnostic tests were used to identify deviations from wellness (test results outside normal reference ranges). The data were used to suggest evidence-based changes to diet (including supplements) and lifestyle (exercise and stress management) that were personalized for each participant and designed to improve their health.
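
The polygenic risk score described above is a weighted sum, PRS_j = Σ_i β_i × g_ij, where g_ij is the number of risk alleles carried by individual j at SNP i and β_i is the GWAS effect size. A NumPy sketch with hypothetical dosages and effect sizes follows; practical PRS pipelines also select SNP (for example by LD clumping and P-value thresholds), which is not shown.

```python
import numpy as np

def polygenic_risk_score(dosages, effect_sizes):
    """Weighted sum of risk-allele counts: (individuals x SNP dosages) @ per-SNP weights."""
    return np.asarray(dosages, dtype=float) @ np.asarray(effect_sizes, dtype=float)

dosages = [[0, 1, 2],          # individual 1: risk-allele counts at three SNP
           [2, 2, 0]]          # individual 2
betas = [0.10, 0.20, 0.05]     # hypothetical GWAS effect sizes (e.g. log odds ratios)
print(polygenic_risk_score(dosages, betas))
```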

Inferring network structure from multi-omic data is challenging because there are large numbers of highly correlated variables. If the aim of the study is to find a multi-omic signature composed of a small set of biomarkers that discriminate a biological outcome of interest, statistical methods such as multiblock partial least squares (MBPLS; partial least squares is also known as projection to latent structures) can be employed.78 These methods seek to maximize the covariance between summary vectors derived from each omic data block and a biological phenotype or response. The identified molecular features derived from multi-omic layers can be thought of as jointly contributing to a biological trait. Software for performing these analyses is available in Matlab and R.85, 86
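
The covariance-maximizing idea behind these methods can be illustrated with the first latent component of partial least squares on block-scaled, concatenated omic blocks: each variable is autoscaled, each block is divided by the square root of its number of variables so that large blocks do not dominate, and the unit-norm weight vector w ∝ Xᵀy maximizes the covariance between the score Xw and the centred response y. This is a simplified sketch rather than the MBPLS algorithms in the cited software, and the two toy blocks are hypothetical.

```python
import numpy as np

def first_pls_component(blocks, y):
    """Weights (one array per block) and sample scores of the first PLS component."""
    y = np.asarray(y, dtype=float)
    y = y - y.mean()
    scaled = []
    for block in blocks:
        b = np.asarray(block, dtype=float)
        b = (b - b.mean(axis=0)) / b.std(axis=0)     # autoscale each variable
        scaled.append(b / np.sqrt(b.shape[1]))       # block scaling
    x = np.hstack(scaled)
    w = x.T @ y
    w = w / np.linalg.norm(w)                        # unit-norm w maximizing cov(x @ w, y)
    scores = x @ w
    split_at = np.cumsum([b.shape[1] for b in scaled])[:-1]
    return np.split(w, split_at), scores

y = np.array([0, 0, 0, 0, 1, 1, 1, 1], dtype=float)       # e.g. control vs disease
mrna = np.column_stack([
    2 * y + np.array([0.1, -0.1, 0.2, -0.2, 0.1, -0.1, 0.2, -0.2]),  # informative
    [1, 2, 3, 4, 1, 2, 3, 4],                                          # uninformative
    [4, 1, 3, 2, 2, 3, 1, 4],                                          # uninformative
])
metabolites = np.column_stack([
    [1, 0, 1, 0, 1, 0, 1, 0],                                          # uninformative
    [0.5, 0.4, 0.6, 0.5, 0.9, 1.0, 1.1, 1.0],                          # informative
])
weights, scores = first_pls_component([mrna, metabolites], y)
print([np.round(w, 3) for w in weights], np.round(scores, 2))
```

Uninformative variables receive near-zero weights, and the latent score separates the two groups using features drawn jointly from both blocks.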

CONCLUSIONS

Non-communicable lung diseases represent a significant global health problem. The pathogenesis of chronic lung diseases is determined by interactions between multiple genes and environmental factors, which are subject to multiple layers of molecular regulation. Bioinformatics will be essential to define molecular subphenotypes of disease in an unbiased way, and identify new therapies that are specifically targeted to the molecular drivers of each phenotype. As the field moves from single to multi-omic studies, we will start to develop a more complete understanding of the complex biology that determines disease expression, which in turn will lead to new opportunities for the diagnosis, treatment and prevention of chronic lung diseases.

Acknowledgements

A.B. is supported by a Fellowship from the Simon Lee Foundation and funding from the National Health and Medical Research Council.

The Authors

The main research interests of B.H. from the Telethon Kids Institute are in the implementation and application of bioinformatics approaches in the fields of transcriptomics, systems immunology and cancer genomics for the interpretation and visualization of biological data. The main research interests of E.d.J. from the Telethon Kids Institute are in using bioinformatics approaches to understand the underlying biology of childhood asthma and allergy, in particular assessing transcriptomic profiles of airway epithelium to investigate inherent changes associated with atopic asthma. The main research interests of A.B. from the Telethon Kids Institute are in using network graph theory and computational biology to work backwards (reverse engineering) from genomic profiles of immune responses to reconstruct the wiring diagram of the underlying gene networks. The long-term goal of this work is to unlock the basic mechanisms and principles that govern the functionality of the immune system in healthy and diseased states.

Abbreviations

- ARACNe: Algorithm for the Reconstruction of Accurate Cellular Networks
- BWA: Burrows-Wheeler Aligner
- CDH1: cadherin 1
- CSF-1: colony stimulating factor 1
- cMap: connectivity map
- CTLA4: cytotoxic T-lymphocyte associated protein 4
- ERBB2: erb-b2 receptor tyrosine kinase 2
- EGFR: epidermal growth factor receptor
- FDR: false discovery rate
- FLT3: fms related tyrosine kinase 3
- GEO: Gene Expression Omnibus
- GWAS: genome-wide association study
- HWE: Hardy–Weinberg equilibrium
- LD: linkage disequilibrium
- LINCS: Library of Integrated Network-Based Cellular Signatures
- MAF: minor allele frequency
- MDS: multidimensional scaling
- PCA: principal component analysis
- PD-1: programmed cell death protein 1
- PD-L1: programmed death-ligand 1
- RNA-Seq: RNA-sequencing
- SNP: single nucleotide polymorphism
- SOAP: Short Oligonucleotide Analysis Package
- Th2: T helper cell type 2
- VIPER: virtual inference of protein activity by enriched regulon analysis
- WGCNA: weighted gene co-expression network analysis
- WGS: whole-genome sequencing