Search for medical information for chronic rhinosinusitis through an artificial intelligence ChatBot

Abstract

Objectives

Artificial intelligence is evolving and significantly impacting health care, promising to transform access to medical information. With the rise of medical misinformation and frequent internet searches for health-related advice, there is a growing demand for reliable patient information. This study assesses the effectiveness of ChatGPT in providing information and treatment options for chronic rhinosinusitis (CRS).

Methods

Six inputs were entered into ChatGPT regarding the definition, prevalence, causes, symptoms, treatment options, and postoperative complications of CRS. The International Consensus Statement on Allergy and Rhinology (ICAR) guidelines for rhinosinusitis served as the gold standard for evaluating the answers. The inputs were grouped into three categories, and Flesch–Kincaid readability, ANOVA, and trend analysis tests were used to assess them.

Results

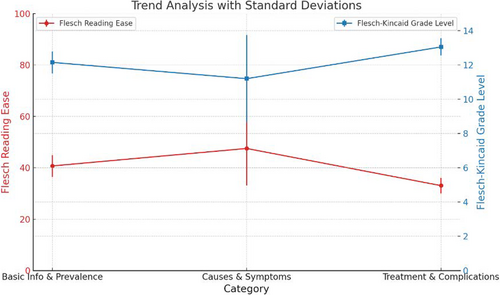

Although some discrepancies were found regarding CRS, ChatGPT's answers were largely in line with existing literature. Mean Flesch Reading Ease, Flesch–Kincaid Grade Level, and passive voice percentage were (40.7, 12.15, 22.5%) for the basic information and prevalence category, (47.5, 11.2, 11.1%) for the causes and symptoms category, (33.05, 13.05, 22.25%) for the treatment and complications category, and (40.42, 12.13, 18.62%) across all categories. ANOVA indicated no statistically significant differences in readability across the categories (p-values: Flesch Reading Ease = 0.385, Flesch–Kincaid Grade Level = 0.555, Passive Sentences = 0.601). Trend analysis revealed that readability varied slightly, with a general increase in complexity.

Conclusion

ChatGPT is a developing tool potentially useful for patients and medical professionals to access medical information. However, caution is advised as its answers may not be fully accurate compared to clinical guidelines or suitable for patients with varying educational backgrounds.

Level of evidence: 4.

1 INTRODUCTION

Artificial intelligence (AI) is a rapidly growing field that has the potential to revolutionize many industries, including health care.1 One of the most flourishing subfields of AI is machine learning (ML), which has gained great recognition since the 1990s.2 ML allows computers to learn by automatically recognizing significant patterns and relations within large amounts of data without the need for explicit programming.3 In recent years, ML algorithms have improved greatly, allowing their applications to become beneficial across many fields.4 Tasks that would normally require human intelligence, such as understanding natural language, recognizing images, and making decisions, are now being performed by AI.5

With the rise of technology, the use of the internet to search for health-related information (HRI) has become readily accessible for many people around the globe. However, medical information may be inappropriate or even harmful because of existing unverified content and a lack of strict online regulations.6 Moreover, even if the information is accurate, some resources may use language above the lay level understanding of the public, rendering it effectively inaccessible.6

With the exponential evolution of online search demands comes the growing need for AI to revolutionize how we access dependable medical information. AI has already been successfully applied in the health care field in recent years. In the field of Neurology, an AI system was developed to restore the control of movement in patients with quadriplegia.7 In the field of Dermatology, AI-based tools are being used to evaluate the severity of psoriasis8 and to distinguish between onychomycosis and healthy nails.9 In Otolaryngology, Powell et al. provided a proof of concept that human phonation can be decoded by AI to help in the diagnosis of voice disorders.10 In the field of ophthalmology, researchers at Google developed and trained a deep convolutional neural network on thousands of retinal fundus images to classify diabetic retinopathy and macular edema in adults with diabetes.11 In primary care fields, physicians can utilize AI to transcribe their notes, analyze patient discussions, and automatically input the necessary information into EHR systems.12 Nevertheless, AI's ability to provide accurate and comprehensible medical information for various medical topics and disorders has yet to be proven via extensive demonstration.13 Therefore, in this study, we sought to examine whether an AI ChatBot can provide accurate, comprehensive, and understandable information on chronic rhinosinusitis (CRS). CRS was chosen as the prototype for this research due to several compelling reasons. Firstly, CRS is one of the most prevalent conditions in Otolaryngology, affecting approximately 11% of the population and accounting for 15% of otolaryngologic outpatient consultations. 
This condition leads to significant morbidity, impacting the quality of life of millions of individuals.14, 15 In the US alone, there are over 30 million physician visits related to CRS annually, a figure that exceeds the number of medical visits for hypertension.16 CRS's high health care burden and clinical complexity with its wide range of symptoms, causes, and treatments provides a robust test for AI-generated medical content. By evaluating ChatGPT's performance in providing information on a condition as widespread and multifaceted as CRS, we aim to assess its potential as a reliable tool for medical education and patient information.

2 MATERIALS AND METHODS

2.1 Generating medical information

The data in this study were generated on April 1, 2024, using ChatGPT, which is accessible through the OpenAI website.

To examine ChatGPT's ability to respond with appropriate medical information, we provided the AI ChatBot with inputs in the form of questions about CRS and recorded the responses. These inputs covered the definition, prevalence, causes, symptoms, treatment options, and postoperative complications of CRS. A total of six unique ChatGPT outputs were examined, corresponding to the six questions posed. The questions were then grouped into three categories to fully evaluate the ChatBot's knowledge of CRS. ChatGPT's answers were then compared to the International Consensus Statement on Allergy and Rhinology (ICAR) guidelines for rhinosinusitis to evaluate their accuracy.

ChatGPT-3.5 uses an algorithm that is probabilistic in nature. In other words, it uses random sampling to generate a wide variety of responses, possibly including different answers to the same query. This investigation included only ChatGPT's initial answer to each query, without regenerating the answers. Additional clarifications or explanations were not permitted. All queries were entered on a single day into a ChatGPT account owned by the author, with care taken to ensure correct grammar and syntax. We entered each query in a new dialogue window because ChatGPT-3.5 can adapt to the details of earlier exchanges within a conversation; starting each query afresh removed this confounding factor.

The study did not require approval from the Rutgers New Jersey Medical School institutional review board (IRB) because it did not involve human participants and used no patient-identifying information.

2.2 Data analysis

We conducted a thorough linguistic examination to assess the readability and complexity of the AI-generated responses. To accomplish this, we utilized the Flesch Reading Ease and Flesch–Kincaid Grade Level metrics, which are established measures that provide insights into the readability and the educational level required to comprehend the material. These metrics have been widely applied in numerous studies to evaluate the accessibility of online content for individuals facing various conditions, such as ACL injury, glaucoma, and dog bites.17-19 In our study, we first determined the average (mean) and variability (standard deviation) for both the Flesch Reading Ease and Flesch–Kincaid Grade Level indices, as well as for the percentage of passive sentences, to evaluate ChatGPT's overall readability performance. The reason we also analyzed passive sentence percentage is that it can affect the readability and comprehension of the text. Passive constructions are generally harder to read and understand, particularly for individuals with lower literacy levels.20 Next, we organized the six questions pertaining to CRS into three distinct categories: basic information and prevalence, causes and symptoms, and treatment and complications. For each category, we computed the mean and standard deviation for the Flesch Reading Ease, Flesch–Kincaid Grade Level, and the passive sentence percentage for ChatGPT's responses. These initial steps paved the way for more thorough statistical analyses, including ANOVA and trend analysis, enabling us to investigate whether ChatGPT's readability performance varied across different question categories. ANOVA is used to compare the means of three or more groups to determine if there are any statistically significant differences among them. In this study, ANOVA was used to compare the means of the readability metrics across the three different categories of questions. The assumptions for ANOVA were also verified prior to analysis. 
Trend analysis was conducted to observe patterns and shifts in the readability metrics across the different categories. The mean and standard deviation for each readability metric were plotted to visualize trends in the data. This analysis helped to identify any systematic changes in readability and complexity of the responses based on the type of information provided. This comprehensive analytical approach allowed us to deeply understand the textual qualities of the AI-generated content and thoroughly evaluate its suitability for patient education.
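For reference, both readability indices are simple functions of average sentence length and average syllables per word. The sketch below uses the standard published Flesch Reading Ease and Flesch–Kincaid Grade Level formulas. The function names and the example counts are illustrative assumptions, and the tool that actually produced the scores in this study is not stated here.

```python
def flesch_reading_ease(words: int, sentences: int, syllables: int) -> float:
    """Standard Flesch Reading Ease; higher scores indicate easier text."""
    return 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)


def flesch_kincaid_grade(words: int, sentences: int, syllables: int) -> float:
    """Standard Flesch-Kincaid Grade Level; approximates a US school grade."""
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


# Hypothetical counts for a short passage (not taken from the study).
words, sentences, syllables = 100, 5, 150
print(f"Reading Ease: {flesch_reading_ease(words, sentences, syllables):.2f}")
print(f"Grade Level:  {flesch_kincaid_grade(words, sentences, syllables):.2f}")
```

Longer sentences and more syllables per word lower the Reading Ease score and raise the Grade Level, which is why the two indices tend to move in opposite directions.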

3 RESULTS

3.1 Qualitative analysis

Figure 1 illustrates the response from ChatGPT when asked about the definition of CRS. Figure 2 displays the ChatGPT-provided prevalence data for CRS. Figures 3–8 detail the causes (Figure 3), symptoms (Figure 4), treatments (Figures 5 and 6), and postoperative complications (Figures 7 and 8) of CRS as described by ChatGPT.

We first want to report the accuracy of ChatGPT's answers to each of the questions.

For the definition question (Figure 1), ChatGPT defines CRS as it is commonly defined in the medical literature, with a symptom duration of at least 12 weeks required to establish the diagnosis.21 However, when compared to ICAR guidelines, ChatGPT failed to mention that establishing a diagnosis of CRS requires two of four main symptoms (facial pressure/pain, anosmia/hyposmia, nasal obstruction/blockage, and mucopurulent nasal drainage) to be present for at least 12 weeks in addition to objective evidence on physical exam (purulence from paranasal sinuses or osteomeatal complex, polyps, edema or evidence of inflammation on nasal endoscopy or CT), since symptoms alone have low specificity for CRS diagnosis.21-23 ChatGPT also failed to mention that the presence or absence of polyps further classifies CRS into CRSsNP (CRS without nasal polyps) or CRSwNP (CRS with nasal polyps), an important omission as treatment differs based on disease subtype according to ICAR.23

As for the prevalence question (Figure 2), ChatGPT reported a prevalence of 2%–5% for CRS in the US and Europe and stated that the prevalence has been increasing over the past few years. However, ChatGPT's source for this percentage is unclear, since many sources report a rate of >10% in the US and Europe for the general population.24, 25 ICAR, on the other hand, reports a prevalence in the range of 2.1% to 13.8% in the US and 6.9% to 27.1% in Europe.23 ICAR attributes the wide gap between the lower and upper limits to the fact that the diagnosis of CRS requires objective evidence, which makes the true prevalence difficult to determine.23

When asked about the causes of CRS (Figure 3), ChatGPT's answer aligned with the ICAR guidelines in that the exact etiology of CRS involves multiple factors.23, 26-30 The ChatBot then proceeded to mention some of the cited causes, including lifestyle and environmental factors (e.g., occupational hazards).31 ChatGPT also explained how each factor can contribute to or increase the risk of developing CRS (e.g., structural abnormalities and nasal polyps can contribute to developing CRS by blocking the sinuses).32-34 In the literature, some lifestyle and environmental factors have been reported to affect the development of CRS, such as exposure to hair-care products, dust, fumes, cleaning agents, and allergens, and even living in cold, dry, and low-elevation areas.35-37 Another study even found a 2.5-fold increased risk of CRS development with residential proximity (within a 2 km radius) to commercial pesticide application.38 However, according to ICAR guidelines, the link between environmental or lifestyle factors and CRS is very weakly supported.23 The lifestyle factor that is most strongly associated with CRS is tobacco smoke exposure, according to ICAR.23 Even though ChatGPT mentioned that smoking is associated with CRS, it did not highlight the importance of this risk factor and only mentioned it as a potential factor. In other words, in this case, ChatGPT's answer is not entirely correct according to ICAR but correct according to other sources.

When asked about the symptoms of CRS (Figure 4), ChatGPT clearly provided the most common symptoms and even the less common ones according to ICAR.22, 23, 39 At the end, it also correctly provided some caveats: (1) Symptoms may not always be persistent and can also be intermittent in nature. (2) CRS symptoms may fluctuate in severity. (3) Some patients might have mild symptoms, but others may suffer severe symptoms that affect their quality of life. All of the caveats are consistent with ICAR.23, 30, 40

Concerning the treatment options for CRS (Figures 5 and 6), ChatGPT correctly answered that treatment could be medical or surgical.23, 41 The answer also contained a list of medically oriented treatments with accurate corresponding rationales and another list of procedures and surgical techniques.22, 23, 42-45 ChatGPT also delved into some accurate details as to how each procedure is performed.23, 44, 45 Again, ChatGPT aptly mentioned that the treatment plan may vary depending on each individual's case. However, ChatGPT mentioned mucolytics as part of the medical management for CRS, but ICAR did not provide any recommendations regarding their use due to insufficient evidence.23 ChatGPT also did not reveal that most of the people who have CRS report severe symptoms and a lack of satisfaction with the treatment options they currently undergo.46

ChatGPT also identified the most common postoperative complications and provided useful information on their management (Figures 7 and 8).47 One of the most significant postoperative complications that ChatGPT identified was infection, which is a typical concern following any surgical operation48 and aligns with ICAR.23 This risk can be reduced, as ChatGPT correctly noted, by adhering to the proper postoperative care guidelines and taking prescribed antibiotics.23, 47-51 Prophylactic antibiotics have been shown in multiple studies, according to ICAR, to significantly reduce the incidence of postoperative infections in individuals undergoing sinus surgery.23, 49 In addition, ChatGPT, consistent with ICAR, mentioned persistent or recurrent symptoms such as anosmia, epistaxis, swelling, bruising, and scarring, and other common postoperative complications that patients may experience after surgery.23, 50, 51 In general, ChatGPT's response emphasizes the value of consulting a surgeon to learn about the risks and possible complications associated with the procedure as well as the anticipated length of recovery. To reduce the risk of complications, patients should also be provided with suitable postoperative care advice.47, 51

3.2 Quantitative analysis

3.2.1 Statistical analysis

We meticulously assessed the Flesch Reading Ease, Flesch–Kincaid Grade Level, and the percentage of passive sentences across three distinct categories: basic information and prevalence, causes and symptoms, and treatment and complications.

3.3 Overall readability metrics

Across all categories, our findings (Table 1) revealed an average Flesch Reading Ease score of 40.42 (SD = 9.43), signifying that the material is challenging for most readers. The Flesch–Kincaid Grade Level averaged 12.13 (SD = 1.45), indicating that the content is suitable for an audience with at least a high school reading level. Passive sentence construction was employed in 18.62% (SD = 10.85%) of the sentences, suggesting a moderate use of passive voice.

| Category | Flesch reading ease score | Flesch–Kincaid grade level | Passive sentences |
|---|---|---|---|
| Q1: Definition of chronic rhinosinusitis | 37.7 | 12.6 | 25% |
| Q2: Prevalence of chronic rhinosinusitis | 43.7 | 11.7 | 20% |
| Q3: Causes of chronic rhinosinusitis | 37.3 | 13 | 22.2% |
| Q4: Symptoms of chronic rhinosinusitis | 57.7 | 9.4 | 0% |
| Q5: Treatments for chronic rhinosinusitis | 30.9 | 13.4 | 31.2% |
| Q6: Postoperative complications | 35.2 | 12.7 | 13.3% |
| Mean ± Standard deviation | 40.42 ± 9.43 | 12.13 ± 1.45 | 18.62% ± 10.85% |
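The summary row of Table 1 can be reproduced from the six per-question scores. The following is a minimal sketch using only the Python standard library. It assumes the reported standard deviations are sample standard deviations (n − 1 denominator), which is what matches the published figures; the variable and function names are illustrative.

```python
from statistics import mean, stdev

# Per-question scores transcribed from Table 1 (Q1 to Q6).
reading_ease = [37.7, 43.7, 37.3, 57.7, 30.9, 35.2]
grade_level = [12.6, 11.7, 13.0, 9.4, 13.4, 12.7]
passive_pct = [25.0, 20.0, 22.2, 0.0, 31.2, 13.3]


def summarize(values):
    """Return (mean, sample standard deviation), each rounded to 2 decimals."""
    return round(mean(values), 2), round(stdev(values), 2)


for label, values in [
    ("Flesch Reading Ease", reading_ease),
    ("Flesch-Kincaid Grade Level", grade_level),
    ("Passive sentences (%)", passive_pct),
]:
    m, sd = summarize(values)
    print(f"{label}: {m} ± {sd}")
```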

3.4 Category-specific readability metrics

Table 2 displays the readability metrics across the three question categories: basic information and prevalence, causes and symptoms, and treatment and complications. Mean Flesch Reading Ease, Flesch–Kincaid Grade Level, and passive voice percentage were (40.7 ± 4.24, 12.15 ± 0.64, 22.5% ± 3.54%) for the basic information and prevalence category, (47.5 ± 14.42, 11.2 ± 2.55, 11.1% ± 15.7%) for the causes and symptoms category, and (33.05 ± 3.04, 13.05 ± 0.49, 22.25% ± 12.66%) for the treatment and complications category. The Flesch Reading Ease and Flesch–Kincaid Grade Level scores vary between categories, reflecting differences in their complexity. The treatment and complications category, for example, has the lowest Flesch Reading Ease score and the highest Flesch–Kincaid Grade Level. The passive sentence percentage also varies, pointing to differences in writing style.

| Category | Flesch reading ease score (mean ± standard deviation) | Flesch–Kincaid grade level (mean ± standard deviation) | Passive sentences (%) (mean ± standard deviation) |
|---|---|---|---|
| Basic information and prevalence | 40.7 ± 4.24 | 12.15 ± 0.64 | 22.5 ± 3.54 |
| Causes and symptoms | 47.5 ± 14.42 | 11.2 ± 2.55 | 11.1 ± 15.7 |
| Treatment and complications | 33.05 ± 3.04 | 13.05 ± 0.49 | 22.25 ± 12.66 |
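Each row of Table 2 summarizes the two questions that make up a category. The sketch below shows one way to derive those rows from the per-question scores in Table 1, again assuming sample standard deviations; the dictionary layout and function name are illustrative.

```python
from statistics import mean, stdev

# Per-question scores from Table 1, paired into the study's three categories.
# Each tuple is (Flesch Reading Ease, Flesch-Kincaid Grade Level, passive %).
CATEGORIES = {
    "Basic information and prevalence": [(37.7, 12.6, 25.0), (43.7, 11.7, 20.0)],
    "Causes and symptoms": [(37.3, 13.0, 22.2), (57.7, 9.4, 0.0)],
    "Treatment and complications": [(30.9, 13.4, 31.2), (35.2, 12.7, 13.3)],
}


def category_summary(rows):
    """Return [(mean, sample SD), ...] for the three metrics, in column order."""
    columns = zip(*rows)  # regroup per-question tuples into per-metric columns
    return [(mean(col), stdev(col)) for col in columns]


for name, rows in CATEGORIES.items():
    cells = [f"{m:.2f} ± {sd:.2f}" for m, sd in category_summary(rows)]
    print(f"{name}: " + " | ".join(cells))
```

With only two responses per category, each standard deviation rests on a single pairwise difference, which helps explain the wide spread in the causes and symptoms row.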

3.5 Statistical significance

Applying ANOVA tests to these metrics, we ascertained the p-values: Flesch Reading Ease (p = .385), Flesch–Kincaid Grade Level (p = .555), and Passive Sentences (p = .601), all suggesting no statistically significant differences in the readability across the different categories.
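The reported p-values can be checked with a one-way ANOVA on the per-question scores from Table 1. The sketch below is one possible implementation in pure Python, not necessarily the software used in the study. Because there are exactly three categories, the numerator has 2 degrees of freedom, and the F-distribution's upper-tail probability then has the closed form (1 + 2F/d2)^(−d2/2), where d2 is the within-group degrees of freedom. The function name is an assumption made for illustration.

```python
from statistics import mean


def one_way_anova_three_groups(groups):
    """One-way ANOVA for exactly three groups; returns (F statistic, p-value)."""
    if len(groups) != 3:
        raise ValueError("the closed-form p-value assumes exactly three groups")
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1                # 2 for three groups
    df_within = len(all_values) - len(groups)   # 3 for six observations
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    # Upper-tail probability of F(2, df_within) in closed form.
    p_value = (1 + 2 * f_stat / df_within) ** (-df_within / 2)
    return f_stat, p_value


# Scores from Table 1, grouped as (Q1, Q2), (Q3, Q4), (Q5, Q6).
metrics = {
    "Flesch Reading Ease": [[37.7, 43.7], [37.3, 57.7], [30.9, 35.2]],
    "Flesch-Kincaid Grade Level": [[12.6, 11.7], [13.0, 9.4], [13.4, 12.7]],
    "Passive sentences (%)": [[25.0, 20.0], [22.2, 0.0], [31.2, 13.3]],
}
for name, groups in metrics.items():
    f_stat, p_value = one_way_anova_three_groups(groups)
    print(f"{name}: F = {f_stat:.3f}, p = {p_value:.3f}")
```

With two responses per category, the test has only 3 within-group degrees of freedom, so it has limited power to detect differences between categories.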

3.6 Trend analysis

A trend analysis with standard deviations was conducted to visualize the readability shifts between categories (Figure 9). The Flesch Reading Ease scores increased from the basic information and prevalence category (40.7) to the causes and symptoms category (47.5) but dropped in the treatment and complications category (33.05). The Flesch–Kincaid Grade Level dipped in the causes and symptoms category (11.2) before reaching its highest value in the treatment and complications category (13.05), signifying greater textual complexity in the final category. The standard deviations highlighted the variability within each category, which was particularly pronounced in the causes and symptoms category for both Flesch Reading Ease and Passive Sentences.

4 DISCUSSION

ChatGPT was able to respond to all questions, from defining CRS to describing the causes of disease, symptoms, postoperative complications, and even detailing the roles that rehabilitation and patient education may play. Each response's sentences closely adhered to appropriate grammatical rules and sentence structure.

As for the statistical analysis, the average Flesch Reading Ease score for all categories combined was moderately low (Table 1). This score aligns with the style typically found in academic or professional documents, suggesting that, while ChatGPT's responses are informative, they may not be entirely accessible to individuals without a higher educational background.

Interestingly, the readability scores did not vary significantly across different content categories, as evidenced by the ANOVA test results. This consistency in complexity and readability is beneficial in one aspect, as it suggests that ChatGPT maintains a uniform level of language complexity regardless of the topic complexity. However, it also implies that the AI does not automatically adjust its language complexity in response to the varying difficulty levels of the subject matter. For instance, one might expect the language around basic information and prevalence to be more accessible than that regarding treatment and complications, which inherently deals with more complex medical procedures and concepts.

Even though ANOVA found no significant differences in the readability scores, it is worth noting that the higher Flesch–Kincaid Grade Level in the treatment and complications category may reflect the intrinsic complexity of the medical information as it progresses from basic definitions to detailed medical procedures and potential complications. Notably, however, the causes and symptoms category demonstrated a higher Flesch Reading Ease and a lower Flesch–Kincaid Grade Level than the other categories (Table 2). The lower percentage of passive sentences in this category may contribute to its relative readability.

It is important to mention that relying solely on readability metrics to determine if medical material is appropriate for patients has significant drawbacks. Readability metrics like the Flesch–Kincaid assess linguistic simplicity but overlook critical aspects such as health literacy and content accuracy.

Health literacy involves understanding medical terms and concepts, which readability metrics do not measure. Even easy-to-read text can be confusing if it contains medical jargon or complex ideas that are not clearly explained. Furthermore, readability metrics do not ensure the accuracy of the information, which is crucial for patient safety and effective health management.

To create truly patient-appropriate medical material, a comprehensive approach is needed. This approach should combine readability assessments with considerations of health literacy and content accuracy. This means using plain language, explaining medical terms, incorporating visual aids, and having medical professionals review the content for accuracy and relevance.

In the context of patient education, it is also crucial to consider the health literacy of the audience. The National Assessment of Adult Literacy reports that only 12% of U.S. adults have proficient health literacy.52 Given that many adults may struggle with complex health information, the findings of this study suggest that there is a need for further optimization to enhance readability and ensure that the information is comprehensible to all patients, irrespective of their educational background. Future iterations of AI-driven platforms could focus on dynamically adjusting language complexity based on the user's comprehension level, potentially assessed through preliminary questions or interactive dialogue. Furthermore, incorporating visual aids and interactive elements could enhance understanding and engagement, particularly for topics that are inherently complex.53, 54

This study has a few limitations, however. The collection of data was performed at a specific moment in time, which poses a challenge in the rapidly changing domain of AI. Furthermore, the qualitative approach of this study inherently carries the potential for some level of investigator bias. The study also acknowledges the impact of variations in ChatGPT's responses due to differences in how questions are phrased, alongside the restricted range of question sources, as potential areas for further exploration. Future studies could benefit from comparing ChatGPT to other AI models to gain a broader perspective on its effectiveness and limitations in medical and patient education contexts. Future studies can also explore the variance in ChatGPT's responses over multiple instances and with follow-up questions. This is a valuable area for future research and could investigate the consistency and reliability of the AI over repeated interactions, providing a more comprehensive understanding of its performance. Nonetheless, we believe that despite these limitations, our study offers valuable insights into an information source that is increasingly prevalent in today's digital age.

ChatGPT offers several notable advantages in providing medical information. Its primary strength lies in accessibility; it allows users to obtain medical information quickly and easily, regardless of their location. This can be particularly beneficial for individuals in remote areas or those who face barriers to accessing health care professionals. The speed at which ChatGPT generates responses is another significant advantage, providing instant answers to medical queries.

However, there are significant disadvantages to using ChatGPT for medical information. One major issue is accuracy; the inaccuracies in some of ChatGPT's answers highlight the limitation that ChatGPT's responses are only as reliable as the data it has been trained on. Another disadvantage is the omission of critical details; for example, ChatGPT failed to mention the need for objective evidence in diagnosing CRS, which is crucial for accurate diagnosis and treatment planning. The readability and comprehensibility of ChatGPT's responses also pose a challenge, as the analysis revealed that its outputs are often at a high reading level, making them unsuitable for individuals with limited health literacy. Additionally, ChatGPT's responses lack the nuance and context-specific advice that human health care providers can offer, limiting its ability to tailor information based on individual patient histories or specific circumstances.

To enhance the quality of AI-generated medical information, several methods can be implemented. Integrating AI systems with verified medical databases like PubMed and Medline ensures reliance on current and reliable sources. Regularly updating training data with the latest medical research and guidelines can reduce outdated information. A human-in-the-loop approach, where medical professionals review AI-generated content, can identify and correct discrepancies. Improving the AI's contextual understanding and prioritization of critical clinical information can enhance response relevance and completeness. Increasing transparency about how responses are generated and sourced can also build trust and reliability.

Even though ChatGPT should never be considered a replacement for medical professionals' advice, it can complement professional medical information by serving as a supplementary resource that provides preliminary information and answers common questions, preparing patients for consultations. It can also explain complex terms in simple language to improve understanding, but medical professionals should review its responses to ensure accuracy. By adjusting responses based on health literacy levels and incorporating visual aids, ChatGPT can make complex information more accessible.

In summary, while ChatGPT presents a promising tool for enhancing access to medical information and can serve as a useful starting point for patient education and general inquiries, it should not replace professional medical advice. Ensuring the accuracy, completeness, and readability of its responses, and providing contextually relevant and individualized information, are critical areas for future development. Also, the current level of language complexity highlights an area for improvement. To fully harness the educational potential of AI in health care, there must be a concerted effort to tailor the readability of content to the diverse needs of patients, ensuring that information is not only accurate but also accessible to those it intends to serve.

5 CONCLUSION

ChatGPT is a rapidly developing tool that may soon become an invaluable asset to the health care system. As of today, this tool may be useful for patients who have difficulty accessing medical information due to geographic or financial constraints. The AI ChatBot has a user-friendly interface and a unique ability to understand a patient's natural language. Nevertheless, the content generated by the ChatBot may be inaccurate, biased, or hard for many patients to understand. While promising, AI cannot yet be considered a reliable source of medical information, especially for patients with limited health literacy. Moreover, because each patient's case is unique, AI is not yet able to provide precise recommendations to individual patients in the way that human physicians can.

FUNDING INFORMATION

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

CONFLICT OF INTEREST STATEMENT

The authors declare that they have no conflict of interest.