Artificial intelligence in the diagnosis of cerebrovascular diseases using magnetic resonance imaging: A scoping review

Yituo Wang and Zeru Zhang contributed equally to this work and share co-first authorship.

Abstract

The field of radiology is currently undergoing revolutionary changes owing to the increasing application of artificial intelligence (AI). This scoping review identifies and summarizes the technical methods and clinical applications of AI applied to magnetic resonance imaging of cerebrovascular diseases (CVDs). The Preferred Reporting Items for Systematic Reviews and Meta-Analyses extension for Scoping Reviews (PRISMA-ScR) was followed, and articles indexed in the PubMed and Cochrane databases from January 1, 2018 to December 31, 2023 were assessed. In total, 67 articles met the eligibility criteria. We obtained an overview of the field, including lesion types, sample sizes, data sources, and databases, and found that nearly half of the studies used multisequence magnetic resonance imaging as input. Both classical machine learning and deep learning were widely used. The evaluation metrics varied according to the five main algorithm tasks: classification, detection, segmentation, estimation, and generation. Cross-validation was the predominant validation approach, and only about one third of the included studies used external validation. We also identify the key questions addressed by CVD research studies and grade the clinical utility of their AI solutions. Although most attention is devoted to improving the performance of AI models, this scoping review provides information on the availability of algorithms, reliability of external validation, and consistency of evaluation metrics that may facilitate improved clinical applicability and acceptance.

Abbreviations

- AI: artificial intelligence
- CMB: cerebral microbleeds
- CNN: convolutional neural network
- CVD: cerebrovascular disease
- DL: deep learning
- ICA: intracranial aneurysm
- ML: machine learning
- MMD: moyamoya disease

1 INTRODUCTION

In recent years, artificial intelligence (AI)-assisted diagnosis of cerebral diseases has developed rapidly and achieved strong performance [1, 2]. AI tools can increase the accuracy, reliability, and efficiency of image analysis [3], whereas subjective diagnosis is relatively error-prone and time-consuming [4]. The literature on recent developments in AI-assisted cerebral disease diagnosis has focused primarily on brain tumors [5], although the latest advances in AI-assisted imaging of cerebrovascular diseases (CVDs) have received increasing attention.

AI-assisted MR imaging of CVDs is gradually gaining recognition in clinical diagnosis. In studies on stroke, machine learning or deep learning approaches have been developed to identify strokes within 4.5 h of onset, predict stroke outcomes, and segment stroke lesions [6-10]. In studies on cerebral microbleeds (CMB), three-dimensional convolutional neural networks (3D CNNs) have been used to detect or segment lesions and to support differential diagnosis [11-14]; current work aims to reduce the false-positive (FP) rate of these models. In studies on intracranial aneurysms (ICA), researchers have focused on improving deep learning (DL) models to increase detection sensitivity; commonly used models include U-Net, ResNet, and DeepMedic [15-19]. In studies on moyamoya disease (MMD), DL models are mainly used to identify positive patients or distinguish clinical types [20-22].

AI-assisted diagnostic methods have achieved strong performance in various medical tasks within the field of CVD. However, several key questions remain: which MRI sequences are most commonly used for automated diagnosis of CVDs; whether any specific algorithm achieves high accuracy; which image preprocessing steps are necessary; which methods are used to validate the results; which evaluation metrics are most commonly used; and what the key research questions are and how far the proposed AI systems are from clinical practice. To the best of our knowledge, a comprehensive review of AI applied to MR imaging of CVDs is still lacking. Given the rapidly growing literature, we therefore present a scoping review to identify and summarize the technical methods and related clinical applications in this field.

2 MATERIALS AND METHODS

Studies were included if they met the following criteria:

- (1) Studies covered the period January 1, 2018 to December 31, 2023;
- (2) The human subjects investigated had been diagnosed with one type of known CVD;
- (3) The method was applied to one or more commonly acquired MR sequences;
- (4) The proposed AI methods involved machine learning and/or deep learning algorithms;
- (5) The efficacy of the method was reported using quantitative indicators.

Studies were excluded if they met any of the following criteria:

- (1) Non-English language studies;
- (2) Nonoriginal studies or studies for which the full text was not available (i.e., letters to the editor, literature reviews, protocols, or conference abstracts);
- (3) Studies that did not apply AI methods or did not provide outcome measures evaluating the method;
- (4) Studies that did not provide information regarding the gold standard or a reference measurement.

2.1 Developing the review questions

We formulated the following review questions:

- (1) Which MR sequences are most commonly used for AI applications in the field of CVD?
- (2) What are the specific tasks and theories behind existing algorithms?
- (3) How many commonly used methods of model validation were applied?
- (4) Do the most commonly used evaluation metrics vary according to different algorithm tasks?
- (5) What are the key questions of the research, and how far is the proposed AI from clinical practice?

2.2 Conducting the search strategy

The initial search was conducted in the PubMed and Cochrane Library databases. The Medical Subject Headings (MeSH) used were “cerebrovascular disease,” “magnetic resonance imaging,” and “artificial intelligence,” together with their associated terms. The search covered the period from January 1, 2018 to December 31, 2023.

2.3 Study selection

Study selection was performed at three levels:

- (1) Title level: clearly ineligible articles were excluded.
- (2) Abstract level: studies were distributed equally among the authors and reviewed independently. Eligibility for inclusion was decided according to the criteria above. Any doubts or conflicts among the authors were resolved through discussion and consultation for each article.
- (3) Full-text level: the inclusion and exclusion criteria were refined based on the literature content, and three core characteristics were identified: CVD lesion type, the MR data used to construct the models, and the AI methods involved (machine learning and/or deep learning algorithms).

2.4 Data extraction

The following data were extracted from each included study:

- (1) Bibliography (article type, publication year, first author's name, and article title);
- (2) Study cohort (lesion type, sample size of patients, data source and database, and multicenter data);
- (3) MR sequences;
- (4) Algorithms and computational theories (classic machine learning [ML], DL, ML + DL, algorithm tasks, preprocessing, and hardware);
- (5) Validation of algorithms or models (external validation and cross-validation); and
- (6) Evaluation metrics for different algorithm tasks (classification, detection, segmentation, generation, and estimation).

3 RESULTS

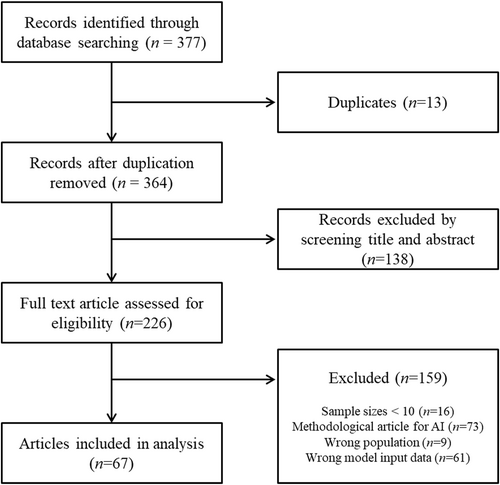

A total of 377 articles were identified in the initial literature review. In the hierarchical screening process, 67 studies met the eligibility criteria and were included in the scoping review. A flowchart outlining the details of the literature search is shown in Figure 1.

Figure 1. Flow chart showing the article selection process.

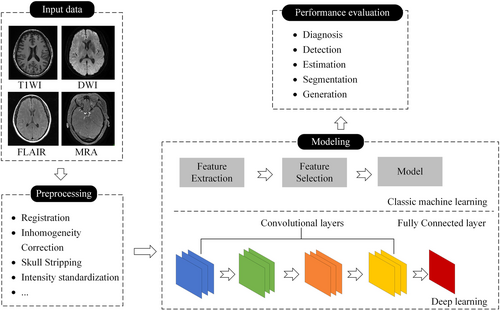

AI-aided medical imaging comprises four main parts: input data, preprocessing, modeling, and performance evaluation. AI methods can be broadly categorized as classic ML or DL, as shown in Figure 2.

Figure 2. The workflow of AI-assisted medical imaging systems. AI, artificial intelligence; DWI, diffusion-weighted imaging; FLAIR, fluid-attenuated inversion recovery; MRA, magnetic resonance angiography; T1WI, T1-weighted imaging.

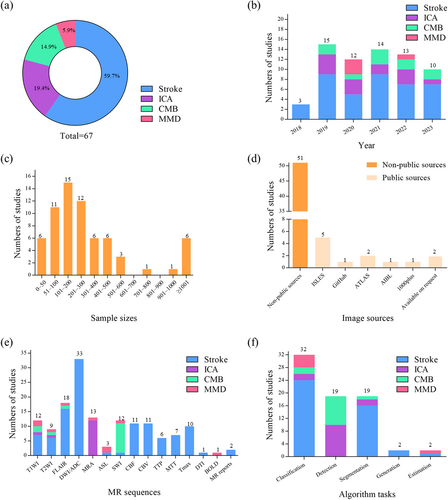

Among the eligible studies, 66 were published in journals and only one in conference proceedings. The lesion types and their distribution are shown in Figure 3a. Stroke (59.7%) [6-10, 25-59] accounted for more than half of the included articles, while the remaining lesion types were ICA (19.4%) [15-19, 60-67], CMB (14.9%) [11-14, 68-73], and MMD (5.9%) [20-22, 74]. The growth of the field, reflected in the number of studies published annually, is shown in Figure 3b.

Figure 3. Number of lesion types, articles published per year, sample sizes, image sources, MR sequences, and algorithm tasks used in the eligible articles. (a) Number of lesion types; (b) Articles published per year; (c) Sample sizes; (d) Image sources; (e) MR sequences; (f) Algorithm tasks. ASL, arterial spin labeling; ATLAS, anatomical tracings of lesions after stroke; BOLD, blood oxygen level-dependent; CBF, cerebral blood flow; CBV, cerebral blood volume; CMB, cerebral microbleeds; DTI, diffusion tensor imaging; DWI/ADC, diffusion-weighted imaging/apparent diffusion coefficient; FLAIR, fluid-attenuated inversion recovery; ICA, intracranial aneurysm; ISLES, ischemic stroke lesion segmentation challenge; MMD, moyamoya disease; MRA, magnetic resonance angiography; MTT, mean transit time; SWI, susceptibility-weighted imaging; T1WI, T1-weighted imaging; T2WI, T2-weighted imaging; TTP, time to peak.

3.1 Input data

The input data from which AI models learn vary according to the clinical task; the models use these data to learn associations between specific features and outcomes of interest. Such data may include, but are not limited to, demographics, physical examination notes, and clinical images.

Sample sizes varied widely between studies. Of the included studies, 50/67 used more than 100 dataset entries, 6/67 used more than 1000, and 6/67 assessed 50 or fewer participants. Among the eligible studies, 31/67 described a method validated on images obtained from multicenter datasets. Moreover, most image data were collected from nonpublic sources (51/67). Among the studies using public data, the Ischemic Stroke Lesion Segmentation (ISLES) Challenge dataset was the most commonly used (n = 5).

MRI is widely used for brain analysis, and nearly half of the proposed methods used multisequence scans as input (29/67). Various MRI modalities have been used in AI analyses of CVDs to extract phenotypic features; the MR sequences used as input data in the reviewed studies are shown in Figure 3e.

3.2 Preprocessing

Intensity inhomogeneity, various artifacts, and noise pose challenges for automated MRI analysis. Appropriate preprocessing is therefore required to remove noise, eliminate irrelevant information, recover useful information, and enhance the detectability of relevant information, thereby reducing the complexity and computation time of image analysis and modeling. The preprocessing procedures included registration (n = 25), N3/N4 bias field correction (n = 9), skull stripping (n = 24), spatial smoothing (n = 5), intensity standardization (n = 38), and filtering (n = 7) (for explanations of the preprocessing methods, see Table S2). Various image processing tools have been applied to MRI data for these purposes, including FSL, BET, ROBEX, FreeSurfer, and SPM.
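Intensity standardization was the most frequently reported preprocessing step (n = 38). The reviewed studies do not necessarily share one formula; as a minimal sketch, assuming z-score normalization computed only inside a brain mask (for example, one produced by a skull-stripping tool), the step could look like the following. The function name `zscore_normalize` and the toy volume are hypothetical.

```python
import numpy as np


def zscore_normalize(volume, brain_mask=None):
    """Z-score intensity standardization of an MR volume (illustrative sketch).

    Mean and standard deviation are estimated only inside the brain mask so
    that background air does not dominate the statistics. Voxels outside the
    mask are set to 0.
    """
    volume = np.asarray(volume, dtype=np.float64)
    if brain_mask is None:
        brain_mask = np.ones(volume.shape, dtype=bool)
    brain_mask = np.asarray(brain_mask, dtype=bool)

    voxels = volume[brain_mask]
    mean, std = voxels.mean(), voxels.std()
    if std == 0:
        raise ValueError("Constant intensities inside the mask; cannot standardize.")

    normalized = np.zeros_like(volume)
    normalized[brain_mask] = (voxels - mean) / std
    return normalized


# Toy 2x2x2 "volume": the first slice is brain tissue, the second is background.
vol = np.array([[[10.0, 20.0], [30.0, 40.0]],
                [[0.0, 0.0], [0.0, 0.0]]])
mask = vol > 0
out = zscore_normalize(vol, mask)
print(out[mask].mean(), out[mask].std())  # approximately 0 and 1
```

Restricting the statistics to the mask is the key design choice: including background voxels would shift the mean toward zero intensity and compress the tissue contrast.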

3.3 Modeling

Modeling, the core of AI methods, involves extracting biomarkers for disease identification from the source data and performing image processing (image segmentation and image generation). Computer-aided diagnosis (lesion detection, disease classification, and physiological index estimation) is then performed based on the extracted features (Figure 3f). Details of the individual algorithm tasks are explained in Table S3.

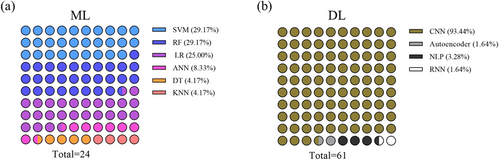

Classic ML methods require appropriate features to be chosen through feature extraction and selection, which rely on expert experience, whereas DL learns useful representations and features automatically. Various classic ML models (n = 14) were used for analyzing CVDs, including support vector machines (SVM, 50.0%), random forests (RF, 50.0%), logistic regression (LR, 42.9%), artificial neural networks (ANN, 14.3%), decision trees (DT, 7.1%), and k-nearest neighbors (KNN, 7.1%). The deep neural network architectures used in DL studies (n = 61) varied with the clinical task; most studies (93.4%) used CNNs, such as ResNet, VGG, U-Net, and DeepMedic, which are composed of convolutional, pooling, and activation layers. Other DL methods, including autoencoders (n = 1), natural language processing (NLP, n = 2), and recurrent neural networks (RNN, n = 1), were also used. In addition, 7/67 studies combined ML and DL algorithms. The distribution of the AI methods used for diagnosing CVDs is shown in Figure 4.
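To illustrate the convolutional, pooling, and activation layers that make up a CNN, the following NumPy sketch runs a single convolution, ReLU, and max-pooling stage on a toy 2D slice. It is an illustration under stated assumptions, not a reproduction of ResNet, VGG, U-Net, or DeepMedic: the kernel weights are hand-set (a trained CNN learns them from data, which is the sense in which DL learns features automatically), and the function names are hypothetical.

```python
import numpy as np


def conv2d(image, kernel):
    """'Valid' 2D cross-correlation, which is what CNN 'convolution' layers compute."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x):
    """Rectified linear unit activation: negative responses are set to zero."""
    return np.maximum(x, 0.0)


def max_pool2d(x, size=2):
    """Non-overlapping max pooling; trailing rows/columns that do not fit are dropped."""
    h, w = x.shape[0] // size, x.shape[1] // size
    trimmed = x[:h * size, :w * size]
    return trimmed.reshape(h, size, w, size).max(axis=(1, 3))


# One conv -> ReLU -> pool stage on a toy 5x5 "slice" containing a vertical edge.
slice_2d = np.array([[0, 0, 1, 1, 1]] * 5, dtype=float)
edge_kernel = np.array([[-1.0, 1.0]])  # hypothetical hand-set weights (learned in a real CNN)
features = max_pool2d(relu(conv2d(slice_2d, edge_kernel)))
print(features)
```

Stacking many such stages, with weights fitted by backpropagation, produces the feature hierarchies on which CNN-based studies rely.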

Figure 4. Numbers of classic machine learning and deep learning methods used in the eligible articles. (a) Machine learning; (b) Deep learning. ANN, artificial neural network; CNN, convolutional neural network; DL, deep learning; DT, decision tree; KNN, k-nearest neighbor; LR, logistic regression; ML, machine learning; NLP, natural language processing; RF, random forest; RNN, recurrent neural network; SVM, support vector machine.

3.4 Performance evaluation

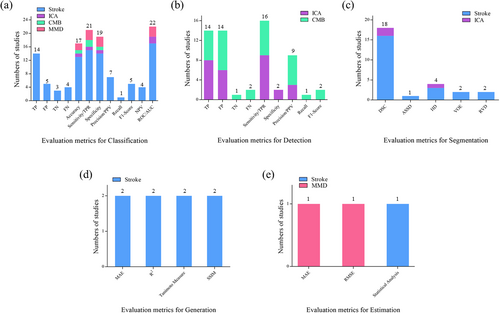

Performance measurement is essential when assessing the feasibility of methods for clinical application. The metrics used to evaluate models in the reviewed studies are shown in Figure 5.

Figure 5. Numbers of evaluation metrics used for different algorithm tasks in the eligible articles. (a) Evaluation metrics for classification; (b) Evaluation metrics for detection; (c) Evaluation metrics for segmentation; (d) Evaluation metrics for generation; (e) Evaluation metrics for estimation. ASSD, average symmetric surface distance; DSC, Dice similarity coefficient; FN, false negative; FP, false positive; HD, Hausdorff distance; MAE, mean absolute error; NPV, negative predictive value; PPV, positive predictive value; RMSE, root mean square error; ROC/AUC, receiver operating characteristic curve/area under the curve; RVD, relative volume difference; SSIM, structural similarity index measure; TN, true negative; TP, true positive; TPR, true positive rate; VOE, volumetric overlap error.

To assess the generalization ability of the models, cross-validation methods were used to reduce variance and avoid bias within a single dataset. These included k-fold cross-validation (n = 34), nested k-fold cross-validation (n = 1), and leave-one-out validation (n = 1); these methods are suitable for small datasets, particularly in medical image analysis, where the number of positive samples may be relatively low. External validation determines the extent to which the findings of a study can be generalized to other situations, people, settings, and measures. However, only 24/67 studies used external validation to evaluate their models.
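Because positive cases are often scarce, a stratified variant of k-fold cross-validation, which preserves the class ratio in every fold, is a common choice. The sketch below assumes NumPy, integer class labels, and accuracy as the per-fold score; the helper names and the majority-class "model" are hypothetical placeholders for a real classifier. For reference, leave-one-out validation is the limiting case of plain (unstratified) k-fold with k equal to the number of samples, and nested cross-validation would add a second, inner loop of this kind for hyperparameter tuning within each training fold. Cross-validation only reuses the internal dataset and does not replace external validation.

```python
import numpy as np


def stratified_kfold_indices(y, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs for stratified, shuffled k-fold CV.

    Each class is shuffled and dealt into k folds separately, so every fold
    keeps roughly the overall positive/negative ratio. Every sample lands in
    exactly one test fold.
    """
    y = np.asarray(y)
    if not 2 <= k <= len(y):
        raise ValueError("k must satisfy 2 <= k <= len(y)")
    rng = np.random.default_rng(seed)
    folds = [[] for _ in range(k)]
    for label in np.unique(y):
        idx = rng.permutation(np.flatnonzero(y == label))
        for i, chunk in enumerate(np.array_split(idx, k)):
            folds[i].extend(chunk.tolist())
    all_idx = np.arange(len(y))
    for fold in folds:
        test_idx = np.array(sorted(fold), dtype=int)
        train_idx = np.setdiff1d(all_idx, test_idx)
        yield train_idx, test_idx


def cross_validate(X, y, fit_predict, k=5, seed=0):
    """Mean and SD of per-fold accuracy for fit_predict(X_train, y_train, X_test)."""
    scores = []
    for train_idx, test_idx in stratified_kfold_indices(y, k, seed):
        y_pred = fit_predict(X[train_idx], y[train_idx], X[test_idx])
        scores.append(np.mean(y_pred == y[test_idx]))
    return float(np.mean(scores)), float(np.std(scores))


def majority_baseline(X_train, y_train, X_test):
    """Hypothetical stand-in model: always predicts the training majority class."""
    majority = np.bincount(y_train).argmax()
    return np.full(len(X_test), majority)


X = np.arange(20, dtype=float).reshape(10, 2)
y = np.array([0, 0, 0, 0, 0, 0, 0, 1, 1, 1])  # only 3 positives
folds = list(stratified_kfold_indices(y, k=3))
print([int(y[test].sum()) for _, test in folds])  # [1, 1, 1]: one positive per test fold
print(cross_validate(X, y, majority_baseline, k=3))
```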

3.5 Key research questions and clinical utility grading

We analyzed the literature on the application of AI to MRI of CVDs and identified the most critical research questions, categorizing them by the likelihood of their prompt integration into clinical practice. The questions were classified into three categories: A, imminent clinical applicability; B, promising potential for clinical translation; and C, unresolved challenges that impede practical implementation in clinical settings. The results are listed in Table 1.

Table 1. Key research questions and clinical utility grading by lesion type.

| Lesion types | Key questions to resolve | Clinical utility |
|---|---|---|
| Stroke | Prediction of when the acute stroke occurs [6, 9, 28, 31, 39, 41, 54-56, 58] | A |
| | Detection and segmentation of stroke lesions [8, 10, 27, 36, 43, 45, 46, 48, 51] | A&B |
| | Prediction of the size of the final infarct or the ischemic penumbra [32-34, 38, 40, 42, 49, 52, 53, 57] | B |
| | Evaluation of collateral circulation in acute stroke [25, 26, 29] | C |
| | Predicting the Modified Rankin Scale (mRS) scores at 3 months postinfarct [7, 47, 59] | C |
| ICA | Lesion detection and segmentation [15-19, 60-67] | A |
| | Differential diagnosis [64] | C |
| CMB | Lesion detection [11-14, 68-73] | A |
| MMD | Diagnosis and differential diagnosis [20-22, 74] | C |

- Note: Grading of the clinical application of AI: A, imminent clinical applicability; B, promising potential for clinical translation; and C, unresolved challenges that impede practical implementation in clinical settings.

- Abbreviations: AI, artificial intelligence; CMB, cerebral microbleeds; ICA, intracranial aneurysms; MMD, moyamoya disease.

4 DISCUSSION

Herein, we have presented a scoping review of AI applications to MR images of CVDs, following the PRISMA extension for scoping reviews (PRISMA-ScR).

4.1 Question 1. Which MR sequences are most commonly used as AI input in studies on the different types of CVD?

In recent years, the application of AI to MR images of CVDs has increased substantially. Of the 67 articles reviewed herein, more than half addressed stroke, while the remainder covered a range of other CVDs, including ICA, CMB, and MMD. The MR sequences used also varied widely. Across the articles, diffusion-weighted imaging/apparent diffusion coefficient (DWI/ADC) was used in 49.3%, fluid-attenuated inversion recovery (FLAIR) in 26.9%, and T1-weighted imaging in 17.9%, rates that may reflect how readily these clinical imaging data can be accessed. These sequences are commonly acquired in clinical practice and are convenient for retrospective analysis. Notably, for particular types of CVD, the sequences most advantageous for conventional diagnosis were also the most commonly used: DWI/ADC for stroke (33/40), magnetic resonance angiography for ICA (12/13), and susceptibility-weighted imaging for CMB (10/10). More recently, functional MR techniques such as blood oxygen level-dependent (BOLD) functional MRI, diffusion tensor imaging, and arterial spin labeling have expanded the clinical potential of AI for CVDs.

Of the 67 articles analyzed in this study, 51 (76.1%) used data collected from nonpublic sources and 17 (25.3%) used fewer than 100 dataset entries. These findings suggest that the generalizability of the AI models may be limited. In data-driven analyses, image quantity and quality are decisive. Such small sample sizes are insufficient for clinical validation, even when the images originate from multiple scanners. Because dataset quantity and quality strongly affect model performance, they also affect the translation of research results into clinical practice.

4.2 Question 2. What are the specific theories and tasks of existing algorithms?

The algorithms most commonly used in AI methods are classic ML and DL algorithms; 7/67 articles used ML and DL in conjunction. There is currently no standardized reporting scheme for AI methods, especially DL models. AI researchers routinely adjust parameters to optimize their models. Although most studies demonstrated the performance advantage of the optimized model by comparing it with conventional results, the clinical value of these models remained limited. From a clinician's perspective, the ultimate purpose of AI is to assist in clinical diagnosis, not to produce increasingly complex models that confuse doctors [75]. By contrast, AI scientists aim to maximize model performance through continuous optimization. Increased collaboration between clinicians and AI scientists will therefore promote the effective integration of AI into clinical practice.

In the included studies, AI algorithms were applied to increasingly complex tasks, including image classification, detection, segmentation, generation, and estimation. The choice of task depended mainly on the study purpose and the type of CVD. Classification and segmentation together accounted for 76.1% of the studies, with stroke representing the largest share (40/51). Detection accounted for 28.3% of the studies, all of which (19/19) addressed ICA or CMB. These patterns are likely related to clinicians' requirements for AI-assisted diagnosis of different diseases. For stroke, clinicians are generally more concerned with AI-assisted extraction of key features to facilitate lesion classification and prognosis assessment, whereas for ICA and CMB, the greatest clinical value of AI lies in identifying lesions and avoiding missed diagnoses. Moreover, prediction of ICA rupture and differentiation of CMB etiology are important future research directions for AI.

4.3 Question 3. How many commonly used model validation methods were applied?

Given the low numbers of positive medical image samples and the differences between datasets generated at different centers and with different scanners, external validation of models is recommended. Although some external validations have shown models to generalize well, studies have also identified differences in performance between internal and external validation.

Many studies did not perform external validation because it is time-consuming and labor-intensive, relying instead on internal validation results, which may lead to overestimation of model performance. This overfitting problem affecting ML methods is well described. Many studies have used cross-validation to reduce the overly optimistic estimates produced by a single internal validation split. Among these methods, k-fold cross-validation has been widely used, with k mostly chosen between 5 and 10.

In summary, external validation beyond a single dataset should be performed to evaluate model generalizability, and efforts should be made to reach statistical consensus across multiple datasets. Moreover, cross-validation should always be attempted as internal validation of a proposed model, particularly in the absence of external validation. Note, however, that even an externally validated model may not generalize to other unseen datasets.

4.4 Question 4. Do the most commonly used evaluation metrics vary according to different algorithm tasks?

Common model evaluation metrics include true positive (TP), FP, false negative (FN), true negative (TN), accuracy, sensitivity, specificity, and receiver operating characteristic curve/area under the curve (ROC/AUC). The metrics selected may differ according to the task. In the reviewed studies, classification tasks commonly used ROC/AUC, sensitivity/true positive rate (TPR), specificity, accuracy, and TP; detection tasks commonly used TP, FP, and sensitivity/TPR; segmentation tasks commonly used the Dice similarity coefficient and Hausdorff distance; and generation or estimation tasks commonly used mean square error, root mean square error, and statistical analysis. Our results indicate that a complex array of evaluation metrics has been used, which may confuse clinicians and lead to misinterpretation of the conclusions. Therefore, future AI research should use standardized, easy-to-understand evaluation metrics to facilitate comparisons between studies.
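The classification and estimation metrics listed above follow directly from their definitions. The NumPy sketch below is illustrative only: the 0.5 decision threshold and the toy labels and scores are assumptions, ratios are undefined when a denominator is zero (no guard is included), and the AUC uses the rank-based (Mann-Whitney) formulation, that is, the probability that a randomly chosen positive case scores higher than a randomly chosen negative case, rather than tracing the ROC curve point by point.

```python
import numpy as np


def confusion_counts(y_true, y_pred):
    """Return TP, FP, FN, TN for binary labels (1 = disease/lesion present)."""
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(y_pred, dtype=bool)
    tp = int(np.sum(y_true & y_pred))
    fp = int(np.sum(~y_true & y_pred))
    fn = int(np.sum(y_true & ~y_pred))
    tn = int(np.sum(~y_true & ~y_pred))
    return tp, fp, fn, tn


def classification_metrics(y_true, y_pred):
    """Threshold-dependent metrics; each ratio is undefined if its denominator is 0."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    return {
        "sensitivity_tpr": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
    }


def roc_auc(y_true, scores):
    """AUC as P(random positive outranks random negative); ties count one half."""
    y_true = np.asarray(y_true, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[y_true], scores[~y_true]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return float((greater + 0.5 * ties) / (len(pos) * len(neg)))


def mae_rmse(y_true, y_est):
    """Mean absolute error and root mean square error for estimation tasks."""
    err = np.asarray(y_est, dtype=float) - np.asarray(y_true, dtype=float)
    return float(np.mean(np.abs(err))), float(np.sqrt(np.mean(err ** 2)))


y_true = [1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.7, 0.4, 0.6, 0.3, 0.2, 0.1, 0.05]
y_pred = [int(s >= 0.5) for s in scores]  # hypothetical 0.5 threshold
print(classification_metrics(y_true, y_pred))
print(roc_auc(y_true, scores))  # 14/15, about 0.933
```

Note that sensitivity and specificity change with the chosen threshold, whereas the AUC summarizes ranking quality across all thresholds, which is one reason the same model can look different depending on which metric a study reports.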

4.5 Question 5. What are the key questions of the research and how far are the proposed AI models from clinical practice?

4.5.1 Stroke

The first question, addressed by 10 of the 40 stroke articles, involved determining the onset of acute stroke [6, 9, 28, 31, 39, 41, 54-56, 58]. The methodology hinged on detecting a mismatch between DWI and FLAIR to determine whether the stroke occurred within the critical 4.5 h window. AI applications have shown promising capabilities, with accuracy between 0.75 and 0.82, sensitivity between 0.70 and 0.82, and specificity between 0.61 and 0.84. In comparison, manual interpretation by radiologists under similar conditions yields slightly lower metrics, suggesting that AI could be integrated into clinical workflows in the near future.

The second area of interest was the detection and segmentation of stroke lesions, with nine articles using DWI images for this purpose [8, 10, 27, 36, 43, 45, 46, 48, 51]. AI proved effective, achieving Dice coefficients above 0.5. However, challenges remain in stroke etiological classification [43], culprit vessel localization [51], and localization of specific brain regions [27] because these tasks require more specialized imaging sequences and complex image preprocessing steps; clinical application of these objectives therefore remains distant. For patients with subacute and chronic stroke, high-resolution 3D T1-weighted imaging has been explored, although its clinical value is currently limited.
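For a predicted mask A and a reference mask B, the Dice similarity coefficient is 2|A ∩ B| / (|A| + |B|). Dice is related to the Jaccard index J by J = D / (2 - D), so the Dice threshold of 0.5 mentioned above corresponds to a Jaccard index of 1/3. The sketch below computes Dice together with two other overlap measures listed in Figure 5, volumetric overlap error (VOE = 1 - Jaccard) and relative volume difference (RVD). RVD sign conventions vary between papers (some report the absolute value), so the signed form here is an assumption; Hausdorff-type surface distances require boundary extraction and are omitted. The function name and toy masks are hypothetical.

```python
import numpy as np


def overlap_metrics(pred_mask, ref_mask):
    """Dice, VOE, and signed RVD between binary segmentation masks (any dimension)."""
    pred = np.asarray(pred_mask, dtype=bool)
    ref = np.asarray(ref_mask, dtype=bool)
    intersection = np.logical_and(pred, ref).sum()
    union = np.logical_or(pred, ref).sum()
    dice = 2.0 * intersection / (pred.sum() + ref.sum())
    voe = 1.0 - intersection / union                  # 1 - Jaccard index
    rvd = (pred.sum() - ref.sum()) / ref.sum()        # > 0 means over-segmentation
    return {"dice": float(dice), "voe": float(voe), "rvd": float(rvd)}


# Toy 2D example: the reference lesion has 4 pixels; the prediction adds 2 extra.
ref = np.zeros((4, 4), dtype=bool)
ref[1:3, 1:3] = True
pred = np.zeros((4, 4), dtype=bool)
pred[1:3, 1:4] = True
print(overlap_metrics(pred, ref))  # dice 0.8, voe about 0.333, rvd 0.5
```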

A third question, addressed by 10 studies, focuses on predicting the final infarct size and identifying the ischemic penumbra [32-34, 38, 40, 42, 49, 52, 53, 57]. These studies use DWI images to delineate the infarct core and Tmax images to outline areas of severe ischemia, a crucial consideration for managing acute stroke and evaluating the potential benefit of thrombolytic therapy. Despite technical challenges, including inaccurate image registration and the confounding effects of reperfusion therapy on predictive accuracy, the field holds substantial promise for clinical translation. This optimism is underpinned by the clinical importance of accurately predicting stroke outcomes and the need for timely intervention in acute stroke management.
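The core and penumbra logic described above can be sketched as a conventional fixed-threshold rule, the kind of baseline that learned prediction models aim to improve on; it is not the method of the reviewed studies. Assumptions: the core is taken as voxels with ADC below 620 × 10^-6 mm²/s (ADC being derived from DWI), critical hypoperfusion as Tmax above 6 s (both thresholds are widely used in perfusion-diffusion mismatch assessment but are not taken from the source), and the ADC and Tmax maps are already co-registered, registration error being one of the challenges noted above. The function name and toy arrays are hypothetical.

```python
import numpy as np


def mismatch_volumes(adc, tmax, voxel_volume_ml, adc_thresh=620.0,
                     tmax_thresh=6.0, brain_mask=None):
    """Threshold-based core, hypoperfusion, and penumbra volumes in mL.

    adc is in units of 1e-6 mm^2/s and tmax in seconds; both maps must be
    co-registered voxel by voxel. Thresholds are assumed defaults.
    """
    adc = np.asarray(adc, dtype=float)
    tmax = np.asarray(tmax, dtype=float)
    if brain_mask is None:
        brain_mask = np.ones(adc.shape, dtype=bool)
    brain_mask = np.asarray(brain_mask, dtype=bool)

    core = (adc < adc_thresh) & brain_mask              # presumed irreversibly injured
    hypoperfused = (tmax > tmax_thresh) & brain_mask    # critically hypoperfused
    penumbra = hypoperfused & ~core                     # at risk but potentially salvageable

    core_ml = float(core.sum() * voxel_volume_ml)
    hypo_ml = float(hypoperfused.sum() * voxel_volume_ml)
    penumbra_ml = float(penumbra.sum() * voxel_volume_ml)
    ratio = hypo_ml / core_ml if core_ml > 0 else float("inf")
    return {"core_ml": core_ml, "hypoperfusion_ml": hypo_ml,
            "penumbra_ml": penumbra_ml, "mismatch_ratio": ratio}


# Toy 1D "maps" with a voxel volume of 1 mL for easy reading.
adc = np.array([500, 550, 800, 800, 800, 800])
tmax = np.array([10, 9, 8, 7, 2, 1])
print(mismatch_volumes(adc, tmax, voxel_volume_ml=1.0))
```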

A fourth area of study involves evaluating collateral circulation in acute stroke, explored in three studies [25, 26, 29]. However, because of complex preprocessing requirements and the need for manual intervention, the models developed in this area remain far from practical clinical application.

Finally, a fifth question addresses the prediction of Modified Rankin Scale scores at 3 months postinfarction, with three studies addressing it using different imaging sequences [7, 47, 59]. One reason for the small number of studies in this area may be clinical and research priorities: much of the clinical and research focus in stroke recovery has been on immediate survival and short-term outcomes. Although the 90-day mark is a critical point for assessing disability and recovery, it may receive less research attention than earlier time points [76]. Another reason may be the complexity of predictive modeling: predicting outcomes at 90 days requires accounting for many factors, including stroke severity, the patient's response to treatment, and the presence of complications. This complexity may deter some researchers from focusing on this time point [77]. Research in this domain is still in its infancy, underscoring the need for further investigation.

4.5.2 Intracranial aneurysms

AI research on ICA has focused on lesion detection [60] and has yielded significant advances; AI detection sensitivity was higher for aneurysms larger than 3 mm [66]. Furthermore, AI-based segmentation methods for lesion delineation [61] and precise measurement of the maximum diameter on 3D data [65] have shown considerable promise, indicating their potential for near-term clinical integration.
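Maximum-diameter measurement on 3D data, mentioned above, can be approximated from a segmentation mask as the largest distance between any two voxel centers after scaling by the voxel spacing. The sketch below is a brute-force NumPy illustration under that assumption, not the method of the cited study: it underestimates the true extent by up to about one voxel, and its memory use grows quadratically with the number of voxels, which is acceptable only for small structures such as aneurysm sacs. The 3 mm comparison simply mirrors the size threshold mentioned above; the function name and toy mask are hypothetical.

```python
import numpy as np


def max_diameter_mm(mask, spacing_mm):
    """Largest center-to-center distance (mm) between any two voxels of a 3D mask.

    spacing_mm gives the voxel size along each array axis, in the same axis
    order as the array, so anisotropic acquisitions are measured in mm.
    """
    coords = np.argwhere(np.asarray(mask, dtype=bool)).astype(float)
    if len(coords) < 2:
        return 0.0
    coords *= np.asarray(spacing_mm, dtype=float)   # voxel indices -> millimetres
    diffs = coords[:, None, :] - coords[None, :, :]  # all pairwise offsets
    return float(np.sqrt((diffs ** 2).sum(axis=-1)).max())


# Toy "sac": 4 voxels along the first axis of a 0.5 x 0.4 x 0.4 mm grid.
mask = np.zeros((6, 5, 5), dtype=bool)
mask[1:5, 2, 2] = True
d = max_diameter_mm(mask, (0.5, 0.4, 0.4))
print(f"max diameter = {d:.2f} mm; larger than 3 mm: {d > 3.0}")
```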

In addition, AI has been applied to differentiating dissecting aneurysms from complex cystic aneurysms on high-resolution sequences [64], although this application has been studied less and remains far from clinical use.

4.5.3 Cerebral microbleeds

CMB detection has generally been found to be accurate, and ongoing discussions focus on identifying techniques that can speed up the process while reducing the FP rate. In terms of technological maturity, some advances have already enabled the integration of CMB detection into clinical practice. Clinically, the significance of detecting CMB lies primarily in evaluating small vessel disease or traumatic brain injury [69].
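Detection studies such as those on CMB typically report sensitivity together with an FP rate, often expressed per scan. The following sketch shows one simple way to obtain those counts, assuming each lesion is represented by a center coordinate in millimetres and that a prediction within a hypothetical 5 mm tolerance of a not-yet-matched reference lesion counts as a hit. Greedy nearest-first matching is a simplification; an optimal one-to-one assignment (for example, the Hungarian algorithm) could be substituted. All names and coordinates are illustrative.

```python
import numpy as np


def match_detections(pred_xyz, ref_xyz, max_dist_mm):
    """Greedy one-to-one matching of predicted lesion centers to reference lesions.

    Pairs are visited from closest to farthest; a pair within max_dist_mm whose
    prediction and reference are both still unmatched counts as a TP. Leftover
    predictions are FPs and leftover reference lesions are FNs.
    """
    pred = np.asarray(pred_xyz, dtype=float).reshape(-1, 3)
    ref = np.asarray(ref_xyz, dtype=float).reshape(-1, 3)
    dist = np.sqrt(((pred[:, None, :] - ref[None, :, :]) ** 2).sum(axis=-1))
    pairs = sorted((dist[i, j], i, j) for i in range(len(pred)) for j in range(len(ref)))
    used_pred, used_ref = set(), set()
    for d, i, j in pairs:
        if d > max_dist_mm:
            break  # all remaining pairs are even farther apart
        if i in used_pred or j in used_ref:
            continue
        used_pred.add(i)
        used_ref.add(j)
    tp = len(used_pred)
    return tp, len(pred) - tp, len(ref) - tp


def detection_summary(per_scan_counts):
    """Pool (TP, FP, FN) tuples across scans into sensitivity and FPs per scan."""
    tp = sum(c[0] for c in per_scan_counts)
    fp = sum(c[1] for c in per_scan_counts)
    fn = sum(c[2] for c in per_scan_counts)
    return {"sensitivity": tp / (tp + fn), "fp_per_scan": fp / len(per_scan_counts)}


counts = [
    match_detections([[10, 10, 10], [50, 50, 50]], [[11, 10, 10]], max_dist_mm=5.0),
    match_detections([], [[20, 20, 20]], max_dist_mm=5.0),
]
print(counts, detection_summary(counts))
```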

4.5.4 Moyamoya disease

AI has shown promise in aiding the diagnosis and differential diagnosis [21] of MMD and in screening patients at high risk of intracerebral hemorrhage [20]. However, research in this area is still limited, and the application of AI to MMD diagnosis in clinical settings remains a distant prospect.

AI has demonstrated substantial potential in imaging diagnostics for CVDs, particularly in lesion detection and estimation of the time since ischemic stroke onset. Although certain applications have achieved notable advances, many remain in the early stages of development. Retool, an internet-based company, has proposed a descriptive classification of AI maturity that encompasses four stages (https://retool.com/blog/state-of-ai-h1-2024): crawl, walk, run, and fly. This classification provides a useful framework for characterizing the developmental phase of AI technologies across different domains, and we used this easy-to-apply categorization to evaluate the technological maturity of MR-based AI for the diagnosis of CVDs. Overall, the applications remain in the crawl or walk stages, reflecting their early developmental status: they are still in the learning and development phase and are far from widespread adoption and high levels of automation.

5 LIMITATIONS

This scoping review has several limitations. First, the greatest challenge was ensuring accurate extraction of information from the included articles. Although the data extraction spreadsheet was well designed and rigorously defined, the information we sought was often not clearly reported or was scarce. Some risk of study selection or information extraction bias therefore seems unavoidable. To mitigate this, all reviewers met at least once a week to discuss unclear expressions in the eligible articles and reach consensus. Second, this review was limited by its search methods. The reviewed articles were collected using designated MeSH terms. Compared with traditional literature searches, MeSH searches are more precise and efficient, although newly published articles may be missed because the databases need time to assign subject headings to them. Nevertheless, the results of this scoping review are valuable and comprehensively summarize current AI practices in the field of CVD diagnosis.

6 CONCLUSIONS

This scoping review identifies and summarizes the technical methods and related clinical applications of AI applied to MR imaging of CVDs. Although the majority of attention is currently devoted to improving the performance of AI models, this scoping review suggests that the availability of algorithms, reliability of validation, and consistency of evaluation metrics may facilitate better clinical applicability and acceptance.

AUTHOR CONTRIBUTIONS

Yituo Wang: Conceptualization (equal); formal analysis (lead); writing—original draft (lead); writing—review & editing (equal). Zeru Zhang: Conceptualization (equal); visualization (equal); writing—original draft (lead). Ying Peng: Data curation (equal); visualization (equal). Silu Chen: Data curation (supporting). Shuai Zhou: Conceptualization (equal). Jiqiang Liu: Conceptualization (equal). Song Gao: Conceptualization (equal); data curation (supporting). Guangming Zhu: Conceptualization (equal). Cong Han: Project administration (lead); writing—original draft (supporting); writing—review & editing (supporting). Bing Wu: Project administration (lead); writing—original draft (equal); writing—review & editing (equal).

ACKNOWLEDGMENTS

None.

CONFLICT OF INTEREST STATEMENT

The authors declare no conflicts of interest.

ETHICS STATEMENT

Not applicable.

INFORMED CONSENT

Not applicable.

Open Research

DATA AVAILABILITY STATEMENT

The data that support the findings of this study are available in the supplementary material of this article.