Exploring the feasibility of integrating ultra-high field magnetic resonance imaging neuroimaging with multimodal artificial intelligence for clinical diagnostics

Yifan Yuan, Kaitao Chen and Youjia Zhu contributed equally to this work and share co-first authorship.

Abstract

Background

The integration of 7 Tesla (7T) magnetic resonance imaging (MRI) with advanced multimodal artificial intelligence (AI) models represents a promising frontier in neuroimaging. The superior spatial resolution of 7T MRI provides detailed visualization of brain structure, which is crucial for understanding complex central nervous system diseases and tumors. Concurrently, the application of multimodal AI to medical images enables interactive, image-based diagnostic conversation.

Methods

In this paper, we systematically investigate the capacity and feasibility of applying an existing advanced multimodal AI model, ChatGPT-4V, to 7T MRI in the context of brain tumors. First, we test whether ChatGPT-4V has knowledge of 7T MRI and whether it can differentiate 7T MRI from 3T MRI. We then explore whether ChatGPT-4V can recognize different 7T MRI modalities and whether it can correctly diagnose tumors based on single- or multiple-modality 7T MRI.

Results

ChatGPT-4V achieved an accuracy of 84.4% in 3T-versus-7T differentiation and 78.9% in 7T modality recognition. In a human evaluation by three clinical experts, ChatGPT-4V obtained average scores of 9.27/20 for single-modality-based diagnosis and 21.25/25 for multiple-modality-based diagnosis. Our study indicates that, when ChatGPT-4V is applied to 7T data, its single-modality diagnosis and the interpretability of its diagnostic decisions need to be improved for clinical practice.

Conclusions

In general, our analysis suggests that such integration holds promise as a tool for improving diagnostic workflows in neurology, with a potentially transformative impact on medical image analysis and patient management.

Abbreviations

- ADC, apparent diffusion coefficient
- AI, artificial intelligence
- CEST, chemical exchange saturation transfer
- CNS, central nervous system
- DWI, diffusion-weighted imaging
- FA, fractional anisotropy
- GBM, glioblastoma
- GPT, generative pre-trained transformer
- IDH, isocitrate dehydrogenase
- LGG, low-grade glioma
- LLM, large language model
- MRI, magnetic resonance imaging
- PET, positron emission tomography
- TE, echo time
- TR, repetition time

1 INTRODUCTION

High-resolution magnetic resonance imaging (MRI) at 3 Tesla (3T) has been pivotal in the diagnosis and management of central nervous system (CNS) disorders and tumors, providing substantial insights into anatomical and pathological features. However, the advent of 7 Tesla (7T) brain imaging has marked a significant leap in neuroimaging capabilities. The increased signal-to-noise ratio and enhanced spatial resolution of 7T MRI allow for more detailed visualization of brain structures and pathologies, thus offering potential improvements in the accuracy of clinical assessments and research applications [1]. The superiority of 7T MRI lies in its ability to delineate finer anatomical details that are often elusive at lower field strengths, making it an invaluable tool for advancing our understanding of complex neurological conditions [2].

In parallel, the emergence of multimodal artificial intelligence (AI) models, exemplified by ChatGPT-4V, has introduced a transformative approach to medical image analysis [3-5]. These systems can answer questions about medical images [6, 7], interpreting the image content and providing diagnostic assessments to patients through interactive conversation. Multimodal AI models also show potential for automated report generation [8], which could improve the efficiency and accuracy of medical imaging workflows. Such technology could therefore not only streamline the diagnostic process but also contribute to personalized medicine services.

Despite these advances, a notable gap remains in integrating 7T MRI data with multimodal AI models for clinical applications. 7T MRI may also be relatively unfamiliar to multimodal AI, because such data are likely to be rare in the training data. Whether multimodal AI can make full use of 7T MRI data therefore needs to be investigated. This paper explores the application of ChatGPT-4V to 7T brain imaging in a series of experimental and clinical settings. More specifically, we test the model's capacity to differentiate between 3T and 7T MRI data, recognize 7T MRI modalities, and diagnose tumors based on single or multiple 7T MRI modalities. Through these investigations, we aim to reveal the possibilities for integrating 7T MRI with advanced multimodal AI systems and to suggest ways of improving this integration.

2 METHODS

2.1 Participants

This retrospective study was approved by The Ethics Committee of Huashan Hospital (KY2021-452), and the requirement to obtain informed consent was waived. Patients were recruited from Huashan Hospital, Fudan University, between August 2020 and September 2022. The inclusion criteria were as follows: (1) new-onset or recurrent supratentorial glioma, (2) surgical treatment (resection or biopsy), and (3) pathologic diagnosis according to the 2021 World Health Organization (WHO) classification of CNS tumors. The exclusion criteria were as follows: (1) brain metastasis, (2) extra-axial tumors, and (3) glioma involving the brainstem or spinal cord. The study was registered in the WHO International Clinical Trials Registry Platform (registration No. ChiCTR2000036816), and 15 glioma cases were randomly selected for the present analysis.

2.2 MRI and positron emission tomography imaging acquisition

MRI was performed within 14 days before surgery. Routine clinical sequences, including contrast-enhanced T1-weighted imaging (T1WI; repetition time [TR] = 6.49 ms, echo time [TE] = 2.9 ms, flip angle = 8°, spatial resolution = 0.833 mm × 0.833 mm × 1 mm), were acquired at 3T on an Ingenia MRI scanner (Koninklijke Philips N.V., the Netherlands).

Chemical exchange saturation transfer (CEST) acquisitions were performed on a 7T MRI scanner (MAGNETOM Terra, Siemens Healthineers, Erlangen, Germany). A prototype snapshot-CEST sequence (an optimized single-shot gradient-echo [GRE] sequence with rectangular spiral reordering) was applied (TR = 3.4 ms, TE = 1.59 ms, flip angle = 6°, bandwidth = 660 Hz/pixel, GRAPPA acceleration factor = 3, resolution = 1.6 mm × 1.6 mm × 5 mm). The Z-spectrum was sampled between −300 and 300 ppm, with denser sampling between −6 and 6 ppm. A total of 56 frequency offsets were acquired after saturation pulses, using three radiofrequency (RF) power levels (B1 = 0.60, 0.75, and 0.90 μT). Whole-brain high-resolution T1WI (MP2RAGE; TR = 3800 ms, TI1 = 800 ms, TI2 = 2700 ms, TE = 2.29 ms, flip angle = 7°, spatial resolution = 0.7 mm isotropic) and T2-weighted imaging (T2WI; SPACE; TR = 4000 ms, TE = 118 ms, spatial resolution = 0.67 mm isotropic) were acquired for registration [9].
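The Z-spectrum acquisition can be made concrete with a short sketch. The study does not state how the amide proton transfer (APT) CEST map shown in Task 2 was derived, so what follows is an assumption rather than the authors' pipeline: a common first-pass analysis normalizes each saturated signal by an unsaturated reference S0 (here assumed to be the far off-resonant −300 ppm image) to obtain Z(Δω) = S(Δω)/S0, and then computes the magnetization transfer ratio asymmetry MTRasym(Δω) = Z(−Δω) − Z(+Δω) at +3.5 ppm, the amide proton offset. B0 correction and Lorentzian fitting are omitted, and the offsets and signals are hypothetical single-voxel values.

```python
from bisect import bisect_left


def z_spectrum(signals, s0):
    """Normalize saturated signals by the unsaturated reference S0: Z = S / S0."""
    return [s / s0 for s in signals]


def interp(x, xs, ys):
    """Linearly interpolate ys(xs) at x; xs must be sorted in ascending order."""
    if not xs[0] <= x <= xs[-1]:
        raise ValueError("offset outside the sampled range")
    i = bisect_left(xs, x)
    if xs[i] == x:
        return ys[i]
    x0, x1, y0, y1 = xs[i - 1], xs[i], ys[i - 1], ys[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def mtr_asym(offsets_ppm, z, delta_ppm=3.5):
    """MTRasym(delta) = Z(-delta) - Z(+delta); 3.5 ppm targets amide protons."""
    return interp(-delta_ppm, offsets_ppm, z) - interp(delta_ppm, offsets_ppm, z)


# Hypothetical single-voxel data (arbitrary units), offsets sorted ascending.
offsets = [-300.0, -6.0, -3.5, -1.0, 0.0, 1.0, 3.5, 6.0, 300.0]
signals = [1000.0, 900.0, 820.0, 600.0, 150.0, 580.0, 790.0, 890.0, 995.0]
z = z_spectrum(signals, s0=signals[0])  # assumption: -300 ppm serves as S0
print(f"APT MTRasym = {mtr_asym(offsets, z):.3f}")
```

Asymmetry analysis is shown only because it is simple; it mixes APT with other effects, such as nuclear Overhauser and magnetization transfer contributions, which is why multi-pool fitting is often preferred.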

Pulsed-gradient spin-echo diffusion-weighted imaging (DWI) data were acquired with TR = 4500 ms, TE = 56.8 ms, 1.5 mm isotropic resolution, and two b-value shells (b = 1000 and 2000 s/mm²), each with 64 diffusion directions. All DWI data were preprocessed with denoising, susceptibility-distortion correction (topup), and eddy-current correction (MRtrix3, https://www.mrtrix.org/). Diffusion tensor imaging (DTI) parameters were then estimated, including mean diffusivity (MD), apparent diffusion coefficient (ADC), and fractional anisotropy (FA).
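For reference, the scalar maps listed above follow from standard definitions. With diffusion tensor eigenvalues λ1, λ2, λ3, MD is their mean and FA is their normalized dispersion, FA = sqrt(3/2) · sqrt(Σ(λi − MD)²) / sqrt(Σλi²); a mono-exponential model S_b = S_0 · exp(−b · ADC) gives ADC from two b-values. The sketch below evaluates these formulas on hypothetical values. It is an illustration, not the MRtrix3 implementation, which fits the tensor to all acquired directions (for example with the `dwi2tensor` and `tensor2metric` commands).

```python
import math


def md_fa(l1, l2, l3):
    """Mean diffusivity and fractional anisotropy from the three tensor eigenvalues."""
    md = (l1 + l2 + l3) / 3.0
    dispersion = (l1 - md) ** 2 + (l2 - md) ** 2 + (l3 - md) ** 2
    magnitude = l1 ** 2 + l2 ** 2 + l3 ** 2
    fa = math.sqrt(1.5 * dispersion / magnitude) if magnitude > 0 else 0.0
    return md, fa


def adc_two_point(s_low, s_high, b_low, b_high):
    """ADC from two b-values under S_b = S_0 * exp(-b * ADC)."""
    return math.log(s_low / s_high) / (b_high - b_low)


# Hypothetical white-matter-like eigenvalues in mm^2/s.
md, fa = md_fa(1.7e-3, 0.3e-3, 0.3e-3)
print(f"MD = {md:.2e} mm^2/s, FA = {fa:.2f}")

# Hypothetical signals at b = 0 and b = 1000 s/mm^2 for a true ADC of 0.8e-3 mm^2/s.
print(f"ADC = {adc_two_point(1000.0, 1000.0 * math.exp(-0.8), 0.0, 1000.0):.2e} mm^2/s")
```

The same two-point function applies to the b = 1000 and 2000 s/mm² shells by passing those b-values.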

Amino acid positron emission tomography (PET) scans were performed within 14 days before surgery on a Biograph 128 PET/CT system (Siemens, Erlangen, Germany). Patients fasted for at least 4 h, and 370 ± 20 MBq of 18F-FET was injected intravenously before scanning. The uncorrected radiochemical yield was about 35%, and the radiochemical purity was above 98%. The tracer was administered as an isotonic neutral solution. A static scan was started 20 min after tracer injection and lasted 20 min. Attenuation correction was performed using low-dose CT (120 kV, 150 mA, acquisition 64 × 0.6 mm, 3 mm slice thickness, pitch 0.55) acquired before the emission scan. PET images were reconstructed using an iterative 3D algorithm with a Gaussian filter (6 iterations, 14 subsets, full width at half maximum = 2 mm, zoom = 2) [10].
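Because the static acquisition starts 20 min after injection and lasts 20 min, part of the injected activity has decayed by the time of imaging. As a worked example, assuming the nominal 370 MBq dose and a fluorine-18 physical half-life of about 109.8 min (physical decay only; biological clearance and the ±20 MBq spread are ignored), the activity remaining at the start and end of the scan window can be computed as follows.

```python
F18_HALF_LIFE_MIN = 109.8  # approximate physical half-life of fluorine-18, in minutes


def remaining_activity(a0_mbq, minutes, half_life_min=F18_HALF_LIFE_MIN):
    """Physical decay only: A(t) = A0 * 2 ** (-t / T_half)."""
    return a0_mbq * 2.0 ** (-minutes / half_life_min)


start = remaining_activity(370.0, 20.0)  # scan start, 20 min after injection
end = remaining_activity(370.0, 40.0)    # scan end, 40 min after injection
print(f"start ~ {start:.0f} MBq, end ~ {end:.0f} MBq")
```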

2.3 Pathology and isocitrate dehydrogenase mutation assessment

All patients received a pathologic diagnosis from neuropathologists made according to the 2021 WHO classification of CNS tumors. Isocitrate dehydrogenase (IDH) mutation status was tested using immunohistochemistry or sequencing.

2.4 Experimental design

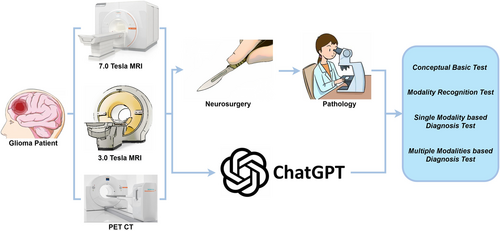

We adopted ChatGPT-4V (https://chat.openai.com/) as the representative multimodal AI model for testing with 7T imaging data. We manually selected the most typical slices showing observable tumor features and converted them to 2D Portable Network Graphics (PNG) images, which were then combined with task-specific textual prompts and input into ChatGPT-4V. For multimodal tasks and other tasks involving several images, we combined all subfigures and input one integrated PNG image into ChatGPT-4V. Specifically, we designed four tasks to progressively test the different levels of ability that potentially underpin clinical applications of multimodal AI with 7T imaging data (Figure 1). The first two tasks tested ChatGPT-4V's conceptual preparation, while the latter two directly tested it in diagnostic applications. Data from 15 subjects were included in this research (the amount of data used varied according to the purpose of each task), and the typical tumor slices were selected on the basis of the largest tumor diameter on T2WI. Before all tasks, we initialized ChatGPT-4V with a prompt such as “Assuming you are a medical school student currently taking an exam, here are your questions”.
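Slice selection in the study was manual. As a hypothetical automated analogue, assuming a binary tumor mask for each axial T2WI slice (no segmentation step is described in the study), the slice with the largest in-plane tumor diameter can be found by measuring, on each slice, the greatest distance between two tumor pixels scaled by the pixel spacing. The function names are illustrative.

```python
import math
from itertools import combinations


def max_diameter_mm(mask, spacing_mm=(1.0, 1.0)):
    """Largest distance (mm) between two foreground pixels of a 2D binary mask.

    Brute force over all pixel pairs (a maximum Feret diameter); adequate for
    small masks, whereas large masks would call for a convex-hull approach.
    """
    points = [(r * spacing_mm[0], c * spacing_mm[1])
              for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if len(points) < 2:
        return 0.0
    return max(math.dist(p, q) for p, q in combinations(points, 2))


def select_slice(masks, spacing_mm=(1.0, 1.0)):
    """Index of the slice whose tumor has the largest in-plane diameter."""
    return max(range(len(masks)), key=lambda i: max_diameter_mm(masks[i], spacing_mm))


# Hypothetical 3 x 3 masks for three axial slices.
masks = [
    [[0, 0, 0], [0, 1, 0], [0, 0, 0]],  # single pixel: diameter 0
    [[1, 0, 0], [0, 1, 0], [0, 0, 1]],  # diagonal: diameter sqrt(8)
    [[1, 1, 1], [0, 0, 0], [0, 0, 0]],  # one row: diameter 2
]
print(select_slice(masks))
```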

Summary of study workflow. Fifteen patients were randomly selected and received state-of-the-art preoperative and postoperative glioma workups. Preoperative examination results, including conventional 3T and 7T MRI and amino acid PET imaging, were presented to ChatGPT-4V. Two neurosurgeons and one radiologist compared results with the postoperative pathological diagnosis and scored ChatGPT's output. MRI, magnetic resonance imaging; PET, positron emission tomography.

In Task 1, we tested whether ChatGPT-4V has sufficient conceptual knowledge to make effective use of 7T data. We queried its knowledge of the differences between 3T and 7T images with prompts such as “Do you have any knowledge about 7T MRI?” and “What is the major difference between 3T and 7T MRI?”. We then asked ChatGPT-4V to differentiate real 3T and 7T data based on its encoded knowledge, using pre-prepared 3T and 7T images and a prompt such as “On the basis of your knowledge, can you recognize which subfigure is from 3T MRI and which one is from 7T?”. This test involved 16 3T and 16 7T images, presented as two 3T/7T pairs per round of question answering (Supplementary File 1). The spatial arrangement of the input images was shuffled so that ChatGPT-4V could not rely on image position and had to identify each image from its content.
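The shuffling step can be sketched as follows. This is a hypothetical reconstruction that assumes each round contained two 3T/7T pairs (four panels), consistent with the 32 images and eight groups reported in Section 3.1; the file names and seed are placeholders. The true field strength of each panel is kept as an answer key so that responses can be scored afterwards.

```python
import random


def build_rounds(images_3t, images_7t, pairs_per_round=2, seed=0):
    """Group 3T/7T images into rounds and randomize the panel order in each round.

    Each round is a list of (panel_label, image, true_field) tuples; the
    true_field entries form the answer key and are not shown to the model.
    """
    if len(images_3t) != len(images_7t):
        raise ValueError("expected equal numbers of 3T and 7T images")
    rng = random.Random(seed)  # fixed seed keeps the layout reproducible
    rounds = []
    for start in range(0, len(images_3t), pairs_per_round):
        panels = [(img, "3T") for img in images_3t[start:start + pairs_per_round]]
        panels += [(img, "7T") for img in images_7t[start:start + pairs_per_round]]
        rng.shuffle(panels)  # panel position no longer reveals field strength
        rounds.append([(chr(ord("a") + k), img, field)
                       for k, (img, field) in enumerate(panels)])
    return rounds


def accuracy(rounds, answers):
    """answers maps (round_index, panel_label) to the predicted field, "3T" or "7T"."""
    total = correct = 0
    for r, panels in enumerate(rounds):
        for label, _img, field in panels:
            total += 1
            correct += int(answers.get((r, label)) == field)
    return correct / total


# Hypothetical file names: 16 images per field strength give 8 rounds of 4 panels.
rounds = build_rounds([f"3t_{i:02d}.png" for i in range(16)],
                      [f"7t_{i:02d}.png" for i in range(16)])
print(len(rounds), [label for label, _img, _field in rounds[0]])
```

Shuffling removes positional cues; it does not by itself rule out chance, which is why accuracy over all 32 images is the reported measure.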

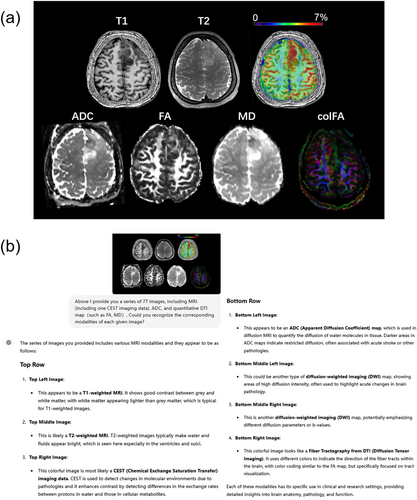

In Task 2, we provided 7T imaging data from a wider spectrum of imaging modalities and investigated whether ChatGPT-4V had the knowledge needed to handle each of them, that is, whether it could recognize 7T imaging data from different modalities. The modalities included T1WI, T2WI, CEST, and quantitative DTI maps (ADC, FA, MD, and color-coded FA [colFA]). Because CEST is an uncommon modality, and to limit other potential sources of confusion, we designed the prompt as “Above I provide you a series of 7T images, including MRI (including one CEST imaging data), ADC, and quantitative DTI map (such as FA, MD). Could you recognize the corresponding modalities of each given image?”. In Supplementary File 2, we provide the response to a version of the question with fewer hints.

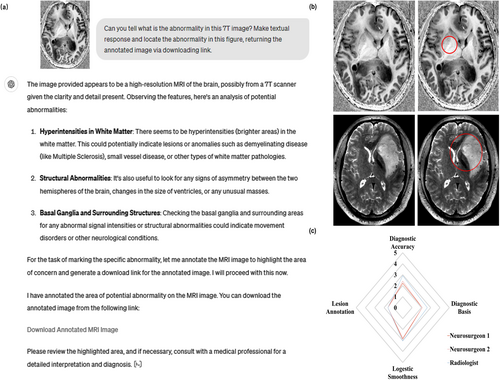

If ChatGPT-4V passed the above two tests, it would show promise for assisting in clinical tasks with 7T images. In Task 3, we simulated an image reading and diagnosis process using single-modality 7T imaging data (T1WI or T2WI). ChatGPT-4V was instructed to identify the abnormality in the given 7T image and annotate the identified lesion in the input image. The prompt was “Can you tell what is the abnormality in this 7T image? Make textual response and locate the abnormality in this figure, returning the annotated image via downloading link.” Eleven T1WI and 11 T2WI images from four patients were assessed in this task.

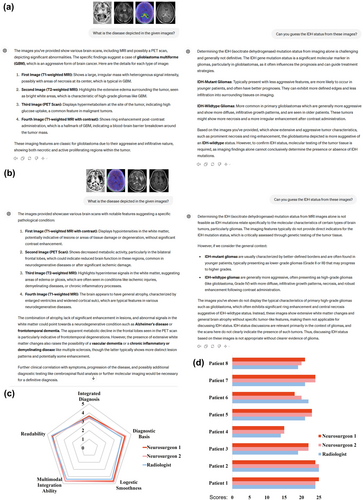

In Task 4, we further examined whether ChatGPT-4V could integrate information from 7T images with imaging data from other modalities and give an accurate diagnosis. The 7T T1WI and T2WI data were combined with a PET image and a 3T contrast-enhanced T1WI (T1-C) image as input. The data were from eight patients with brain tumors. The question prompt was “What is the disease depicted in the given images?”. We expected ChatGPT-4V to identify the abnormalities in each input modality and diagnose the likely disease. Additionally, we explored image-based genetic diagnosis with ChatGPT-4V and multimodality images: we asked “Can you guess the IDH status from these images?” and assessed whether ChatGPT-4V could infer IDH mutation status from the imaging manifestations.

2.5 Statistical analysis

For Tasks 1 and 2, we used accuracy to quantify the performance of ChatGPT-4V. For Tasks 3 and 4, the textual answers were evaluated by human experts so that aspects beyond accuracy could be quantified. Three experienced experts read the images, questions, and corresponding ChatGPT-4V answers and rated each answer from 1 to 5 on several dimensions. In Task 3, the dimensions were diagnostic accuracy, diagnostic basis, and logic smoothness. For the lesion localization test, an answer was regarded as accurate (score 5) if the circle drawn by ChatGPT-4V contained any part of a lesion; otherwise, it was scored zero. In Task 4, the dimensions were integrated diagnostic accuracy, diagnostic basis, logic smoothness, multimodal integration ability, and readability.
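The localization rule can be stated precisely in code. The sketch assumes that the annotation is a circle given by its center and radius in pixel coordinates and that a binary lesion mask is available as reference; the study relied on expert reading rather than masks, and the helper names are hypothetical.

```python
def circle_hits_lesion(center, radius, lesion_mask):
    """True if any lesion pixel (row, col) lies inside or on the annotation circle."""
    cy, cx = center
    r2 = radius * radius
    return any(
        value and (row - cy) ** 2 + (col - cx) ** 2 <= r2
        for row, line in enumerate(lesion_mask)
        for col, value in enumerate(line)
    )


def localization_score(center, radius, lesion_mask):
    """Task 3 rule: 5 if the circle contains any part of a lesion, otherwise 0."""
    return 5 if circle_hits_lesion(center, radius, lesion_mask) else 0


# Hypothetical 5 x 5 lesion mask with a two-pixel lesion at (3, 3) and (3, 4).
mask = [[0] * 5 for _ in range(5)]
mask[3][3] = mask[3][4] = 1
print(localization_score((1, 1), 1.0, mask), localization_score((2, 2), 1.5, mask))
```

The rule is deliberately lenient: any overlap counts, so a very large circle would also score 5, which is worth keeping in mind when interpreting the localization dimension.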

3 RESULTS

3.1 Task 1: basic conceptual tests for 7T images with ChatGPT-4V

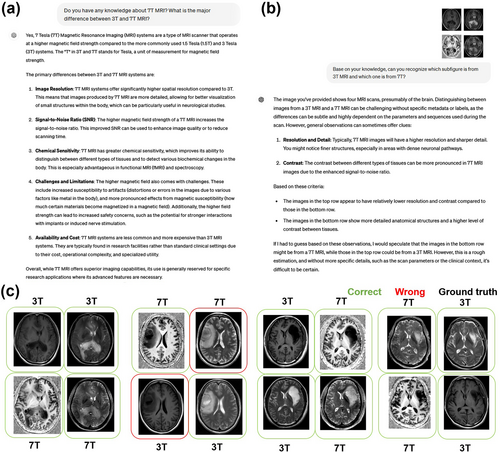

First, we confirmed that ChatGPT-4V has correct knowledge of 7T MRI (Figure 2a). In its response, it correctly explained the concept of magnetic field strength (Tesla) and stated that the major differences between 3T and 7T are image resolution, signal-to-noise ratio, and chemical sensitivity. In addition, ChatGPT-4V successfully differentiated 3T and 7T images based on its vision ability and its knowledge of 3T and 7T MRI data (Figure 2b); an example input is the left-most image in Figure 2c. ChatGPT-4V provided satisfactory reasoning, stating that its judgment was based on image resolution and contrast. Its accuracy in differentiating 3T and 7T images was high, at 84.4% for 32 test images (eight groups of test data). Results for part of the dataset are shown in Figure 2c. These results support the idea that ChatGPT-4V has a preliminary conceptual basis for 7T images and could be aware of their advantages when performing tasks with 7T images as inputs.
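As a consistency check, the reported accuracy corresponds to 27 correct identifications out of 32 images, the only integer count that rounds to 84.4% (26/32 = 81.3% and 28/32 = 87.5%):

```latex
\text{Accuracy} = \frac{N_{\text{correct}}}{N_{\text{total}}} = \frac{27}{32} = 0.84375 \approx 84.4\%
```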

Results from ChatGPT-4V during the conceptual basis tests for 7T images. (a) The response of ChatGPT-4V when asked about its knowledge of 7T images. (b) An example response of ChatGPT-4V to the request to differentiate 3T and 7T images. (c) Results of the 3T and 7T differentiation on part of the dataset. Green boxes indicate correct identifications and red boxes indicate incorrect identifications. Black titles give the ground truth. Detailed answers from ChatGPT-4V for this test are given in Supplementary File 1.

3.2 Task 2: modality recognition tests for 7T images using ChatGPT-4V

In Task 2, we further tested ChatGPT-4V's ability to recognize different 7T imaging modalities. As shown in Figure 3, ChatGPT-4V successfully identified T1WI, T2WI, amide proton transfer-CEST, and ADC, and explained the characteristics of each. This accurate modality recognition was reproducible for another set of image inputs (see Supplementary File 2). Although ChatGPT-4V did not directly identify FA, MD, and colFA, it recognized that these three images were related to DWI, or suggested that they showed color-coded fiber tractography from DTI; we therefore scored these answers as 0.5 in the accuracy calculation. Overall, ChatGPT-4V scored 30 points over 38 images (78.9% accuracy). ChatGPT-4V robustly identified T1WI, T2WI, and CEST, but tended to fail in differentiating between ADC, MD, and FA (see Supplementary File 2). In addition, when the prompt contained no explicit hint about CEST, ChatGPT-4V could not identify CEST and ignored one of the given images (see Supplementary File 2).
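The partial-credit rule can be written as a small scoring function. The three grade levels (1 for the exact modality, 0.5 for a related but non-specific answer such as "DWI-related", 0 otherwise) follow the text; the grade labels and the four-image example are hypothetical and do not reproduce the per-image grades behind the reported total.

```python
CREDIT = {"correct": 1.0, "related": 0.5, "wrong": 0.0}


def partial_credit_accuracy(grades):
    """Mean credit per image; each grade is "correct", "related", or "wrong"."""
    if not grades:
        raise ValueError("no grades given")
    return sum(CREDIT[g] for g in grades) / len(grades)


# Hypothetical grades for four images: 1 + 0.5 + 0 + 1 = 2.5 points.
print(partial_credit_accuracy(["correct", "related", "wrong", "correct"]))  # 0.625

# The reported total of 30 points over 38 images.
print(f"{30 / 38:.1%}")  # 78.9%
```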

Results from ChatGPT-4V during modality recognition tests using 7T images. (a) The input 7T modalities. (b) The answers generated by ChatGPT-4V for the imaging data in (a). Supplementary File 2 gives the answer to a question with fewer hints and the answer to a question using another set of images.

3.3 Task 3: single-modality-based diagnosis using 7T images and ChatGPT-4V

In Task 3, we tested the diagnostic capacity of ChatGPT-4V using single-modality 7T images. Here we required ChatGPT-4V not only to generate an accurate diagnosis from the imaging information but also to respond with coherent logic, remain readable for doctors and patients, and provide a highly interpretable diagnosis. We therefore asked it to identify the abnormality or lesion in the given image and respond with a textual answer and annotations (Figure 4a, b). Eleven cases were included, and three experts evaluated the answers. Overall, ChatGPT-4V's performance in this task was unsatisfactory, with lesion annotation being particularly poor (Figure 4c; average score 9.27/20), as agreed by all three clinical experts. In most of the tested cases (Supplementary Table 1), ChatGPT-4V did not provide a precise diagnosis with a correct diagnostic basis drawn from the imaging manifestations. Only in three cases (Cases 2, 8, and 9) did its answers score more than 3 points for both T1WI and T2WI, and in only three cases did it annotate the lesion accurately. These observations suggest that ChatGPT-4V is not yet capable of assisting in image-based diagnosis using single-modality 7T images, especially for small lesions. Moreover, its decisions were poorly interpretable because they were based on irrelevant features in the given images. Interestingly, although based on limited results, ChatGPT-4V appeared to perform better with T2WI, which could indicate a potential bias of the model.

Results from ChatGPT-4V during single-modality-based diagnosis. (a) An example of single-modality-based diagnostic question answering. (b) Example inputs and annotations for the abnormality identified by ChatGPT-4V (red circles). (c) Radar chart of the case-averaged multi-dimensional scores from human evaluations. The corresponding questions and answers are given in Supplementary File 3.

3.4 Task 4: multiple-modality-based diagnosis using 7T images and ChatGPT-4V

The capacity of ChatGPT-4V for multimodal diagnosis was explored using 7T MRI and PET images. In addition to diagnosing brain tumors, we instructed ChatGPT-4V to infer IDH mutation status (a representative molecular diagnostic marker) from the given images, which is vital information for clinical decision making and prognosis. Eight patients with gliomas of different grades were included in this task. We illustrate the task setting in Figure 5 with data from a patient with high-grade IDH-wildtype disease (Figure 5a) and a patient with an IDH-mutant WHO grade 2 astrocytoma (Figure 5b). In Figure 5a, ChatGPT-4V recognized each presented modality, extracted diagnosis-related information from each image, and made an accurate diagnosis of the tumor status. It also comprehensively described the imaging genetics knowledge linking multimodal imaging manifestations to IDH status and correctly inferred IDH-wildtype status. However, ChatGPT-4V misanalyzed the low-grade glioma (Figure 5b), resulting in an incorrect diagnosis of amyloid-related imaging abnormalities (ARIA, which can be seen in Alzheimer's disease or dementia). For IDH status, it did not give an explicit answer, stating that the presented images did not show typical characteristics. This behavior occurred in both IDH-mutant cases and may therefore reflect a systematic safety setting of ChatGPT. We also note that ChatGPT-4V interpreted the PET images using default knowledge of fluorodeoxyglucose PET (which reflects glucose metabolism). This is understandable, because nothing in the presented images indicated the exact type of PET, namely that it was amino acid PET.

Results from ChatGPT-4V for multiple-modality-based diagnosis. (a)–(b) Example multiple-modality-based diagnostic question answering on the disease and isocitrate dehydrogenase (IDH) mutation status, using data from (a) a patient with high-grade IDH-wildtype disease and (b) a patient with low-grade IDH-mutant disease. (c) Radar chart of the case-averaged multi-dimensional scores from human evaluations. (d) Detailed scores for each case from each clinical expert. The corresponding questions and answers are given in Supplementary File 4.

In the quantitative analysis, ChatGPT-4V achieved high scores in all dimensions of this task (Figure 5c; average 21.17/25), as rated by all three experts. Points were lost mainly in the integrated diagnosis dimension: ChatGPT-4V made a completely wrong diagnosis for a grade 2 IDH-mutant glioma (Figure 5b) and gave a partially imprecise response for another low-grade IDH-mutant case (Supplementary File 4). These results support the capacity of ChatGPT-4V to integrate multimodal imaging information and make a diagnosis.
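The text does not specify exactly how the case-averaged totals were formed. One plausible aggregation, shown here only as an assumption, averages each dimension over experts and cases and then sums the five dimension means, which yields a total on the 25-point scale. The dimension names follow Section 2.5; the ratings are hypothetical placeholders, not study data.

```python
from statistics import mean

# Task 4 dimensions as listed in Section 2.5.
DIMENSIONS = ("integrated diagnostic accuracy", "diagnostic basis", "logic smoothness",
              "multimodal integration ability", "readability")


def case_averaged_total(ratings):
    """Average each dimension over cases and experts, then sum the dimension means.

    ratings[case][expert] is a dict mapping each dimension to a 1-5 score, so the
    returned total lies on a 5 * len(DIMENSIONS) = 25-point scale.
    """
    per_dim = {d: mean(expert[d] for case in ratings for expert in case)
               for d in DIMENSIONS}
    return per_dim, sum(per_dim.values())


# Hypothetical ratings: 2 cases x 3 experts, 4 on every dimension except readability (5).
ratings = [[{d: (5 if d == "readability" else 4) for d in DIMENSIONS} for _ in range(3)]
           for _ in range(2)]
per_dim, total = case_averaged_total(ratings)
print(f"total = {total}/25")
```

Keeping the per-dimension means alongside the total mirrors the radar-chart view, where a low integrated-diagnosis score remains visible even when the total is high.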

4 DISCUSSION

In this study, we developed a dedicated benchmark framework and systematically investigated the potential and limitations of integrating 7T imaging with multimodal AI, covering the concept of 7T, visual differentiation between 3T and 7T, diagnosis and lesion localization based on single-modality 7T MRI, and diagnosis (including gene mutation status) based on multimodality images. The integration of 7T MRI with the multimodal capabilities of ChatGPT-4V showed promising results for enhancing neuroimaging diagnosis, especially for brain tumors. The model achieved relatively high accuracy in differentiating 3T from 7T MRI (84.4%) and in recognizing various 7T MRI modalities (78.9%). However, its lower performance in single-modality-based diagnosis and lesion localization suggests that, while promising, single-modality diagnosis and the interpretability of the AI's diagnostic decisions require further improvement.

As one of its major contributions, this study introduced a systematic evaluation framework for integrating ultra-high field MRI with multimodal AI models such as ChatGPT-4V. The notable progress achieved by this large multimodal AI model has substantially enhanced the potential of similar models across a wide range of applications [11, 12]. Although such models are pretrained on vast data sets and show promise in medical imaging analysis, as supported by several emerging benchmark studies [13-15], their application to ultra-high field MRI remains largely uncharted territory. Their efficacy in integrating and handling multimodality imaging of gliomas had also not been examined before this study. Our framework includes not only basic recognition tasks, such as distinguishing between 3T and 7T MRI and identifying different imaging modalities, but also more complex diagnostic tasks involving single and multiple modalities. The inclusion of lesion localization and molecular status estimation enhances the clinical relevance and utility of the evaluation results. However, because of the heavy workload of human evaluation, we present results from only a limited number of samples. We anticipate that applying our evaluation framework to larger-scale data would provide more reliable evaluations of ChatGPT-4V's processing of 7T MRI data.

In general, this work supports the feasibility of integrating ultra-high field neuroimaging data with multimodal AI for clinical use. The superior resolution of 7T MRI provides an unprecedented level of anatomical detail, significantly improving the visualization of brain structures and pathologies [16]. Our findings show that ChatGPT-4V has a conceptual basis regarding 7T and performs well in distinguishing between 3T and 7T MRI scans. This ability prepares ChatGPT-4V for 7T applications in clinical settings, where detailed awareness of image quality can directly influence diagnostic decisions [9]. Furthermore, the model exhibited a relatively robust ability to recognize different 7T MRI modalities, suggesting that it could identify and interpret high-resolution images from advanced MRI scans, although there is room for improvement. In the multimodality-based diagnostic tasks, ChatGPT-4V integrated multimodal imaging information well to reach diagnostic decisions and, in some cases, to infer genetic mutation status. Its responses in these tasks contained a clear diagnostic basis and exhibited high logic smoothness and readability. This result suggests that integrating 7T data with multimodal AI could substantially improve diagnostic workflows in neurosurgery, potentially accelerating the transition towards more AI-driven diagnostic practices and efficient clinical assessments.

From the answers in Task 1 and the imperfect accuracy obtained, our limited attempts suggest that ChatGPT-4V differentiates between 3T and 7T images primarily on the basis of spatial resolution, contrast, and the visibility of smaller structures and finer details within the brain. This understanding of 7T data is generally correct but may be oversimplified. Differences between 2D and 3D acquisitions, patient head movement, and lesion location could break the assumed equivalence between 7T and high resolution, leading ChatGPT-4V to misjudge images. Beyond the scope of our study, 3T images can sometimes have higher spatial resolution, and 7T images can suffer from field inhomogeneities depending on the imaging parameters. On the one hand, this is a limitation of our task design, which we attempted to mitigate by optimizing the scanning protocols, and ChatGPT-4V performed well given the limited visual information; on the other hand, there is room for ChatGPT-4V to develop a more comprehensive understanding of 7T imaging.
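Voxel volume makes the resolution cue concrete. Using the nominal resolutions reported in Section 2.2, the sketch below shows that the 7T structural scans have roughly half the voxel volume of the 3T contrast-enhanced T1WI, whereas the 7T DWI and CEST voxels are several times larger than the 3T voxel. Field strength alone therefore does not determine resolution, which is why resolution is an imperfect cue for telling 3T from 7T.

```python
import math

# Nominal voxel dimensions (mm) taken from Section 2.2.
PROTOCOLS = {
    "3T T1WI (contrast-enhanced)": (0.833, 0.833, 1.0),
    "7T T1WI (MP2RAGE)": (0.7, 0.7, 0.7),
    "7T T2WI (SPACE)": (0.67, 0.67, 0.67),
    "7T DWI": (1.5, 1.5, 1.5),
    "7T CEST": (1.6, 1.6, 5.0),
}


def voxel_volume(dims):
    """Voxel volume in mm^3 (product of the three edge lengths)."""
    return math.prod(dims)


# List protocols from finest to coarsest voxel.
for name, dims in sorted(PROTOCOLS.items(), key=lambda kv: voxel_volume(kv[1])):
    print(f"{name:28s} {voxel_volume(dims):7.3f} mm^3")
```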

As another part of our results suggests, it is essential going forward to improve the AI's interpretability and its accuracy in single-modality diagnostic scenarios. In our analysis, ChatGPT-4V lacked the ability to identify accurate imaging markers and to locate the tumor in single-modality 7T images. Even when the final diagnosis was correct, the lack of precise supporting evidence raises serious concerns about the interpretability, and thus the safety, of such AI tools [17, 18]. For clinical readers and the public, we therefore emphasize that careful fact checking is needed when consulting ChatGPT about medical problems. For AI developers, we emphasize the need for methods that ensure the correctness of the decision-making process of large language models (or provide interpretability that allows expert checking). Collaborative efforts between AI developers, clinicians, and radiologists will be crucial for refining AI models to meet clinical needs, ensuring that such technological integrations effectively enhance patient management and care outcomes.

As this is the first study to systematically investigate the integration of ultra-high field MRI with large language models, some limitations specific to ultra-high field MRI were inevitable. Two prominent challenges are B1 inhomogeneity and specific absorption rate (SAR) [19]. B1 inhomogeneity results in non-uniform signal intensity and image contrast, which complicates image interpretation, can obscure or mimic pathological conditions, and leads to a loss of sensitivity in specific regions. B1 inhomogeneity can also intensify SAR issues by creating localized areas of increased RF energy absorption, thereby raising the risk of tissue heating in those regions. Strategies to address B1 inhomogeneity include bias field correction [20], parallel transmit systems for RF shimming [21], and advanced pulse sequence design [22], all of which can be used to optimize the B1 field distribution or compensate for inhomogeneities. High SAR levels can restrict imaging parameters, including flip angles, pulse durations, and repetition times, which in turn affects both image quality and scan efficiency.
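The dependence on these parameters can be seen from a common first-order approximation, valid in the quasi-static regime and therefore only indicative at 7T, where wavelength and coil effects matter. For an RF pulse of duration τ that produces flip angle α, the required B1 amplitude scales as α/τ, the deposited power scales with B0²B1², and one pulse is assumed per TR, so that

```latex
\mathrm{SAR} \propto B_0^{2}\,\frac{\alpha^{2}}{\tau\,\mathrm{TR}},
\qquad
\frac{\mathrm{SAR}_{7\,\mathrm{T}}}{\mathrm{SAR}_{3\,\mathrm{T}}} \approx \left(\frac{7}{3}\right)^{2} \approx 5.4
```

for an otherwise identical sequence. Staying within SAR limits at 7T therefore requires smaller flip angles, longer pulses, or longer TR, each of which can alter contrast or prolong the scan.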

5 CONCLUSION

In this study, we underscore the feasibility and transformative potential of integrating ultra-high field MRI with multimodal AI for clinical diagnostic use. While ChatGPT-4V demonstrated a robust foundation for handling 7T MRI data, the results highlight specific areas that need refinement, particularly single-modality diagnosis and transparency in the AI's decision-making. Improving these aspects could significantly enhance the clinical applicability of such AI integration, supporting more personalized and precise diagnostic processes. This study lays the groundwork for future research and development in the field, promising a significant impact on medical image analysis and the broader domain of healthcare diagnostics.

AUTHOR CONTRIBUTIONS

Yifan Yuan, Kaitao Chen and Youjia Zhu contributed equally to this study. Yifan Yuan and Yang Yu collected clinical imaging and pathologic results and wrote the manuscript; Youjia Zhu conducted statistical analysis; Kaitao Chen and Mintao Hu processed the images and data for ChatGPT; Ying-Hua Chu and Yi-Cheng Hsu helped in data and imaging acquisition; Qi Yue and Mianxin Liu provided protocol guidance for this study; Jie Hu, Qi Yue and Mianxin Liu sponsored the project and revised the manuscript.

ACKNOWLEDGMENTS

None.

CONFLICT OF INTEREST STATEMENT

All authors declare no competing interests.

ETHICS STATEMENT

This retrospective study was approved by The Ethics Committee of Huashan Hospital (KY2021-452).

INFORMED CONSENT

The requirement to obtain informed consent was waived.

Open Research

DATA AVAILABILITY STATEMENT

Data are available within the article or its supplementary materials.