Fairness in artificial intelligence-driven multi-organ image segmentation

Abstract

Fairness is an emerging consideration when assessing the segmentation performance of machine learning models across various demographic groups. In clinical decision-making, an unfair segmentation model can lead to inappropriate diagnoses and unsuitable treatment plans for underrepresented demographic groups, with severe consequences for patients and society. In medical artificial intelligence (AI), fair multi-organ segmentation is imperative for integrating models into clinical practice. As the use of multi-organ segmentation in medical image analysis expands, it is crucial to systematically examine fairness to ensure equitable segmentation performance across diverse patient populations and to support health equity. However, comprehensive studies assessing the problem of fairness in multi-organ segmentation remain lacking. This review provides an overview of the fairness problem in multi-organ segmentation. We first define fairness in terms of individual fairness, group fairness, counterfactual fairness, and max–min fairness, and then discuss the factors that lead to fairness problems in multi-organ segmentation, focusing mainly on datasets and models. We then present strategies to potentially improve fairness in multi-organ segmentation. Additionally, we highlight the challenges and limitations of existing approaches and discuss future directions for improving the fairness of AI models for clinically oriented multi-organ segmentation.

Abbreviations

- ADNI: Alzheimer's disease neuroimaging initiative
- AI: artificial intelligence
- BMI: body mass index
- CT: computed tomography
- DSC: Dice similarity coefficient
- HD: Hausdorff distance
- MIMIC-CXR: MIMIC Chest X-ray
- MRI: magnetic resonance imaging
- SER: social equality ratio
- UKB: UK Biobank
- VAEs: variational autoencoders

1 INTRODUCTION

Multi-organ image segmentation using efficient and rapid artificial intelligence (AI) techniques to extract the structure of organs and tissues from medical images is widely used in clinical applications. It provides high-precision morphological data for downstream tasks such as disease diagnosis [1-3], disease prediction [4], and other pathological analyses. Additionally, multi-organ segmentation has been extensively studied across almost all imaging modalities, including magnetic resonance imaging (MRI), computed tomography (CT), and X-ray radiography, achieving high efficiency and precision [5-7]. According to their specific needs, researchers typically train deep learning models such as UNet [8] and nnUNet [9] to achieve the segmentation of selected organs on specific modalities. With the increasing prominence of big data, multimodality, and the complexity of computational models used for multi-organ segmentation tasks, foundation models have been proposed [10-12]. These models support the segmentation of multiple modalities and organs and exhibit strong generalization capabilities. In summary, the development of multi-organ segmentation is thriving, and it holds significant application value and potential for improving the efficiency of healthcare.

Objective assessment of multi-organ segmentation performance is of the utmost importance because it directly determines whether the segmentation technology is suitable for specific tasks. To ensure data quality for downstream tasks, the effectiveness of multi-organ segmentation is assessed, providing references for model postprocessing [13]. Furthermore, by designing loss functions based on assessment metrics, performance assessment can guide the learning direction of AI models [14]. Currently, there are various assessment metrics for multi-organ segmentation, with the most common being the Dice similarity coefficient (DSC) [15], which assesses the average performance of the segmentation task on current samples by calculating the overlap between the ground truth and the segmentation results. Unlike DSC, the Hausdorff distance [16] measures the maximum distance between the ground truth and the segmentation result, reflecting the maximum error in the segmentation task. Some studies use multiple metrics for model assessments and multi-task learning to comprehensively assess and improve the performance of multi-organ segmentation models.
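The contrast between the two metrics can be illustrated with a minimal sketch. It assumes each mask is given as a set of foreground pixel coordinates and, as a simplification, computes the Hausdorff distance by brute force over all foreground pixels rather than over extracted organ surfaces. The function names and the toy masks are illustrative only.

```python
import math


def dice(pred, truth):
    """Dice similarity coefficient between two sets of foreground pixel coordinates."""
    if not pred and not truth:
        return 1.0  # both masks empty: treated as perfect agreement (an assumed convention)
    return 2 * len(pred & truth) / (len(pred) + len(truth))


def hausdorff(pred, truth):
    """Symmetric Hausdorff distance between two non-empty point sets (brute force)."""
    def directed(a, b):
        # Largest distance from any point in a to its nearest point in b.
        return max(min(math.dist(p, q) for q in b) for p in a)
    return max(directed(pred, truth), directed(truth, pred))


# Toy ground truth: a 4x4 square. Prediction: the same square shifted right by one pixel.
truth = {(r, c) for r in range(2, 6) for c in range(2, 6)}
pred = {(r, c) for r in range(2, 6) for c in range(3, 7)}

# The same prediction with one stray false-positive pixel far from the organ.
pred_with_outlier = pred | {(2, 12)}

for name, mask in (("shifted", pred), ("shifted + outlier", pred_with_outlier)):
    print(name, round(dice(mask, truth), 3), hausdorff(mask, truth))
```

In this toy case a single stray pixel barely changes the overlap-based DSC but dominates the Hausdorff distance, which is why the two metrics are often reported together.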

However, as big data and multi-center data become more prevalent in multi-organ segmentation tasks, fairness issues are becoming increasingly severe. For example, the UK Biobank (UKB) dataset collects samples from different ethnic groups, with significant differences in the proportions of samples among these groups [17]. Organ segmentation models trained on the UKB dataset may therefore perform unevenly across these groups, creating fairness issues [7, 18]. This can further result in fairness problems in downstream tasks, ultimately leading to health inequity. Human organ characteristics, such as organ volume and the distribution of muscle and fat, also differ significantly with race, gender, and age [19, 20], which affects the effectiveness of segmentation models. This makes fairness in the field of multi-organ segmentation a pervasive and complex issue.

To date, no comprehensive review has addressed fairness issues in multi-organ segmentation. As a result, many researchers overlook the importance of health equity in medical AI and focus predominantly on improving segmentation accuracy for single tasks. To help address this gap, this review compiles and describes the factors influencing fairness in multi-organ segmentation and discusses feasible strategies and relevant research for solving fairness issues in multi-organ segmentation tasks.

This review presents a comprehensive overview of fairness in multi-organ segmentation as follows: (1) In Section 2, we describe and define fairness and the sources of unfairness, including data factors (race, gender, age, and population organ characteristics) and model factors (model construction, loss functions, and hyperparameters) (Figure 1). (2) In Section 3, we focus on strategies for improving the fairness of medical image analysis that could potentially be applied to multi-organ segmentation tasks. (3) In Section 4, we discuss the shortcomings of existing fairness assessments and of efforts to improve fairness in multi-organ segmentation, and provide insights into future trends for addressing fairness issues.

Figure 1. Summary of the sources of unfairness in multi-organ segmentation and strategies for improvement.

2 OVERVIEW OF FAIRNESS IN MULTI-ORGAN SEGMENTATION

In this section, we introduce four kinds of fairness in Section 2.1: individual fairness, group fairness, counterfactual fairness, and max–min fairness. Factors such as dataset characteristics and model design and their impact on multi-organ segmentation fairness are then presented in Section 2.2.

2.1 Definition of fairness

Fairness in AI, particularly in medical imaging, is a complex and multifaceted concept. It encompasses the principle that AI models should perform consistently well across different demographic groups and not exhibit biased outcomes based on sensitive attributes such as race, gender, or age. This review divides fairness into several nuanced categories, providing a multi-dimensional approach to understanding and addressing fairness concerns in medical image analysis, where biases can have significant ramifications on patient care.

2.1.1 Individual fairness

Individual fairness in an AI model refers to the similarity in outcomes for similar individuals within a dataset [21]. It ensures fair treatment at the level of each individual, free from discrimination arising from individual characteristics such as personal lifestyle habits. In terms of feature extraction, if two individuals in a dataset are similar, the distance between the feature vectors extracted by the model should be small. For example, in multi-organ segmentation, if the feature matrices extracted by the model from two inputs are close, or if the individuals share similar characteristics (e.g., they are of the same sex), the model's effectiveness (measured by the Dice coefficient) on these individuals should also be similar. This ensures that the model treats similar individuals with consistent accuracy and fairness.

However, individual fairness has shortcomings as a basis for fairness measurement. First, the feature distance between individuals is a crucial metric for individual fairness, but the way this distance is measured can vary and is often subjective. For instance, both the Euclidean distance and the Manhattan distance can be used to measure the distance between individual features. These metrics emphasize different aspects, so samples that appear close in Euclidean distance may be far apart in Manhattan distance. This discrepancy can introduce bias into fairness assessments. Second, in fairness improvement, the individual distance is used as a learning target for model training. Because AI models tend to lack interpretability, misusing measurement standards can compromise fairness and even exacerbate model biases. Third, calculating the distance between individuals is highly time-consuming because segmentation models require large training datasets and imaging data have complex features. Finally, the measurement of individual fairness is susceptible to outliers and may push the model to accommodate them excessively, thereby weakening the model.
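The dependence on the distance metric can be seen in a small sketch. The two-dimensional feature vectors and patient names below are hypothetical and were chosen only so that the two metrics disagree about which individual is the "most similar" to the query.

```python
import math


def euclidean(a, b):
    """Straight-line distance between two feature vectors."""
    return math.dist(a, b)


def manhattan(a, b):
    """Sum of absolute coordinate differences between two feature vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))


def nearest(query, candidates, metric):
    """Name of the candidate whose feature vector is closest to the query under `metric`."""
    return min(candidates, key=lambda name: metric(query, candidates[name]))


# Hypothetical feature vectors extracted by a segmentation model.
query = (0.0, 0.0)
candidates = {"patient_a": (3.0, 3.0), "patient_b": (0.0, 5.0)}

print("Euclidean nearest:", nearest(query, candidates, euclidean))
print("Manhattan nearest:", nearest(query, candidates, manhattan))
```

Because the identity of the "similar" individual changes with the metric, any individual fairness score built on these neighborhoods changes with it as well.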

2.1.2 Group fairness

The concept of group fairness is widely applied in medical image analysis. Barocas et al. [22] proposed that the goal of group fairness is to minimize performance imbalances among subgroups differentiated by sensitive attributes. Group fairness emphasizes the model's consistent performance across all individuals with various sensitive attributes, in contrast to individual fairness, which focuses on consistent output for similar individual inputs. Strategies such as fairness indicator computation and disparity measurement have been proposed to assess and improve group fairness. For example, Puyol-Antón et al. [7] used standard deviation and the social equality ratio to quantify the fairness of nnUNet in cardiac MRI segmentation and assess the fairness of the model when applied to different races.
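A group-level disparity summary of this kind might be computed as in the sketch below. The standard deviation is taken across subgroup mean DSC values. The ratio metric is not defined in this section, so the sketch substitutes an assumed stand-in: the largest subgroup error (1 − DSC) divided by the smallest. The scores and group labels are made up for illustration.

```python
import statistics


def group_means(scores, groups):
    """Mean DSC per subgroup; `scores` and `groups` are parallel lists."""
    by_group = {}
    for score, group in zip(scores, groups):
        by_group.setdefault(group, []).append(score)
    return {group: statistics.fmean(values) for group, values in by_group.items()}


def fairness_summary(scores, groups):
    """Disparity of mean DSC across subgroups (lower SD and ratio mean more even performance)."""
    means = group_means(scores, groups)
    errors = [1.0 - m for m in means.values()]
    return {
        "group_means": means,
        # Population standard deviation of the subgroup means.
        "sd": statistics.pstdev(list(means.values())),
        # Assumed definition: worst subgroup error over best subgroup error.
        # Undefined if some subgroup reaches a perfect DSC of 1.0.
        "error_ratio": max(errors) / min(errors),
    }


# Illustrative per-subject DSC values for two hypothetical subgroups.
scores = [0.95, 0.93, 0.94, 0.86, 0.84]
groups = ["A", "A", "A", "B", "B"]
summary = fairness_summary(scores, groups)
print(summary)
```

A perfectly even model would give a standard deviation of 0 and a ratio of 1 under these definitions.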

However, group fairness has limitations. In the downstream tasks of multi-organ segmentation, various sensitive attributes are often used, which may even contradict each other in certain cases. In such a situation, satisfying group fairness for a subgroup may disrupt fairness in other subgroups. Furthermore, a few fairness assessment indicators are overly idealized, which may make it challenging to fully meet the conditions of data security and privacy.

2.1.3 Counterfactual fairness

Counterfactual fairness is a concept based on causal inference put forth by researchers [7]. It focuses on studying the causes of bias in models. Counterfactual fairness is typically associated with a sensitive attribute and explores the consistency of outcomes under counterfactual values of that attribute. This consistency reveals the degree of correlation between the model bias and the sensitive attribute, thereby assessing the fairness of the model. Generative models such as generative adversarial networks or variational autoencoders (VAEs) are used to generate counterfactual samples for further assessments. For example, to assess the fairness of multi-organ segmentation across different races, it is necessary to control for other sensitive attributes and use a generation strategy to create data representing various races. This approach allows for the study of variations in segmentation effectiveness. If the multi-organ segmentation task satisfies counterfactual fairness, the variation in segmentation effectiveness should remain insignificant as the proportion of samples from different races in the dataset changes. However, this process can generate unrealistic features, which makes the resulting fairness assessments less convincing.
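One simple way to quantify this consistency is sketched below. It assumes that each original image has already been paired with a generated counterfactual in which only the sensitive attribute differs, and that both have been segmented and scored. The generation step itself is not shown, and the function name and scores are hypothetical.

```python
import statistics


def counterfactual_gap(factual_scores, counterfactual_scores):
    """Mean absolute change in DSC when only the sensitive attribute is altered."""
    if len(factual_scores) != len(counterfactual_scores):
        raise ValueError("scores must be paired one-to-one")
    gaps = [abs(f - c) for f, c in zip(factual_scores, counterfactual_scores)]
    return statistics.fmean(gaps)


# Hypothetical DSC values for three subjects and their generated counterfactuals.
factual = [0.92, 0.90, 0.88]
counterfactual = [0.91, 0.84, 0.87]

gap = counterfactual_gap(factual, counterfactual)
print(round(gap, 4))
```

A gap close to zero is consistent with counterfactual fairness for that attribute, although the conclusion is only as reliable as the realism of the generated samples.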

2.1.4 Max–min fairness

Max–min fairness, much like its counterpart in network resource allocation, aims to minimize the gaps in benefits from machine learning between all groups or individuals [23]. More specifically, for a particular task, if a model performs poorly on certain subgroups, improving max–min fairness means guiding the AI model to improve its performance on these subgroups, thereby reducing the overall bias. In fairness assessments, max–min fairness tends to be used to compare multiple multi-organ segmentation models under a given sensitive attribute. For example, the model whose worst subgroup segmentation performance is the best among the worst subgroup performances of all candidate models is considered the fairest. Therefore, max–min fairness relies heavily on the selection of models for specialized tasks. Furthermore, the model may compromise its excellent performance on other subgroups while improving its minimum benefit for a group or individual.
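The comparison rule can be written as a short sketch. The model names and subgroup DSC values are hypothetical and were chosen so that the model with the best average is not the one preferred under max–min fairness.

```python
import statistics


def worst_group_score(group_scores):
    """Lowest subgroup DSC of one model."""
    return min(group_scores.values())


def max_min_choice(models):
    """Name of the model whose worst subgroup DSC is highest."""
    return max(models, key=lambda name: worst_group_score(models[name]))


def best_mean_choice(models):
    """Name of the model with the highest average subgroup DSC, for comparison."""
    return max(models, key=lambda name: statistics.fmean(models[name].values()))


# Hypothetical subgroup DSC values for two candidate models.
models = {
    "model_a": {"group_1": 0.97, "group_2": 0.80},
    "model_b": {"group_1": 0.90, "group_2": 0.86},
}

print("max-min choice:", max_min_choice(models))
print("best-mean choice:", best_mean_choice(models))
```

Here the max–min criterion prefers the second model even though the first has the higher average, which mirrors the trade-off noted above between raising the minimum and preserving performance on other subgroups.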

2.2 Sources of unfairness in multi-organ segmentation

Fairness is a critical aspect of model assessment that is often overlooked in the development of multi-organ segmentation models. Although multi-organ segmentation models have made remarkable progress, most studies have not considered fairness in their assessments. This section discusses the various sources of unfairness in multi-organ segmentation, including imbalanced training data and model design (Table 1).

| Sensitive attribute | Dataset | Data modality | Data size | Task | Author | Year |
|---|---|---|---|---|---|---|
| Race | UK Biobank | MRI | 5660 | Cardiac segmentation | Puyol-Antón et al. [7] | 2021 |
| | Private | CT | 8543 | Pancreas segmentation | Janssens et al. [19] | 2021 |
| | UK Biobank | MRI | 5903 | Cardiac segmentation | Puyol-Antón et al. [18] | 2022 |
| | ADNI | MRI | 325 | Brain segmentation | Ioannou et al. [24] | 2022 |
| Age | Private | CT | 8543 | Pancreas segmentation | Janssens et al. [19] | 2021 |
| | KiTS 2019 | CT | 210 | Multi-organ segmentation | Afzal et al. [25] | 2023 |
| Organ | Private | CT | 800 | Multi-organ segmentation | Javaid et al. [26] | 2018 |
| | CT-ORG | CT | 140 | Multi-organ segmentation | Rister et al. [27] | 2020 |
| | CHAOS | CT, MRI | 160 | Multi-organ segmentation | Conze et al. [28] | 2021 |
| Model | Private | CT | 800 | Multi-organ segmentation | Javaid et al. [26] | 2018 |
| | Pancreas-CT, BTCV, and private | CT | 90 | Multi-organ segmentation | Gibson et al. [5] | 2018 |
| | Private | DRRs, CT | 968 | Multi-organ segmentation | Zhang et al. [29] | 2018 |
| | ISBI LiTS 2017 and private | CT | 180 | Multi-organ segmentation | Huang et al. [30] | 2021 |

- Abbreviations: ADNI, Alzheimer's disease neuroimaging initiative; BTCV, Multi-Atlas Labeling Beyond The Cranial Vault; CHAOS, Combined Healthy Abdominal Organ Segmentation; CT-ORG, CT volume with multiple organ segmentations; ISBI, International Symposium on Biomedical Imaging; KiTS, Kidney Tumor Segmentation; LiTS, The Liver Tumor Segmentation Benchmark.

2.2.1 Imbalanced training data

2.2.1.1 Race

At a physiological level, various racial phenotypes exhibit substantial differences due to long-term geographic factors, which can manifest macroscopically in the form of skin color and body shape, as well as microscopically in physiological reactions. These differences influence the morphology of the human body, including organ structure and function [20]. These organ characteristics can affect the accuracy of segmentation models. Additionally, because of differences in regional environments and lifestyle habits, different racial groups may have varying prevalence rates of the same disease within the same organ [31, 32]. For example, in the United States, the incidence of liver cancer is higher among Black individuals than it is among non-Hispanic Whites, and this disparity contributes to potential biases in organ segmentation models. Moreover, differences in disease incidence rates among different racial groups can lead to imbalances in the number and proportion of affected individuals across races. Notably, disease symptoms in organs may directly impact organ morphological characteristics, thereby influencing the effectiveness of segmentation models. Therefore, race may also contribute to fairness issues in the segmentation process through differences in disease prevalence.

Racial bias is a major problem in the field of AI because it not only reflects a low generalization ability of the model but also generates various social conflicts and secondary discrimination [31, 33, 34], challenging the paradigm of considering fairness as a formal property of supervised learning with respect to protected personal attributes. AI models used in organ segmentation and other domains, such as facial recognition [35, 36], typically perform better for the predominant race in the dataset than for underrepresented races. Several studies have identified race as a key factor for assessing the fairness of AI models [24, 37, 38] and have found a greater intergroup performance bias between races than between groups defined by other sensitive attributes.

2.2.1.2 Gender

In recent years, AI has profoundly impacted the thoughts and behaviors of people. However, bias in terms of gender has become increasingly pronounced with a gradual increase in the proportion of men participating in technology design [39]. When designing datasets, subjective annotations are required to provide the necessary information for AI models to make decisions. However, these annotations are solely dependent on the personal perceptions of the annotators. Cognitive differences in the field of multi-organ segmentation can influence the annotation of datasets, such as organ identification, edge confirmation, and differential processing for gender-related data. Considering that AI algorithms primarily learn by observing patterns in data, human biases can directly lead to the emergence of bias in AI. Additionally, physiological differences between men and women, such as height and weight, can significantly contribute to differences in organ morphology [19]. For instance, male liver and heart volumes are typically larger than those of females, theoretically leading to better segmentation performance; however, men are more prone to fat accumulation around internal organs. These differences in fat distribution may affect the segmentation of abdominal organs (e.g., liver, kidneys) because adipose tissue can obscure organ boundaries. Gender can also influence organ segmentation results through differences in lifestyle habits. Generally, the rates of alcohol consumption and smoking are significantly higher in men than in women, and these external factors can alter organ morphology. For example, lung changes caused by long-term smoking in men, such as emphysema or lung cancer, may complicate lung imaging, thereby increasing the difficulty of lung segmentation. Similarly, excessive alcohol consumption can lead to cirrhosis, thereby affecting liver segmentation.

Current studies have observed a bias between female and male subjects [17-19, 40]. For example, Larrazabal et al. [40] used three deep neural network architectures and two publicly available X-ray image datasets and dynamically adjusted gender ratios to diagnose various chest diseases under gender imbalance conditions. The substantial differences in the results between the two subgroups highlight the importance of gender balance in the medical imaging datasets used to train AI systems for computer-assisted diagnosis.

2.2.1.3 Age

Human phenotypes undergo substantial changes with age, including facial features, body shape, voice, mobility, intelligence, and organ characteristics [41]. For example, with aging, the volumes of the liver, kidneys, and brain gradually decrease, and the structure of the lungs also degenerates. In younger individuals, organs typically have clearer boundaries and a more uniform tissue density, whereas in older adults, organs may exhibit atrophy, calcification, or other degenerative changes, all of which can affect the segmentation accuracy of algorithms. Aging also impacts tissue density and contrast; for instance, bones in the elderly are prone to osteoporosis, leading to decreased bone density and reduced contrast on X-ray or CT images, thereby increasing the difficulty of segmentation. Moreover, different age groups often face distinct health issues. Younger individuals may more commonly experience traumatic lesions, whereas older adults are more likely to suffer from degenerative conditions or chronic diseases such as arteriosclerosis, diabetes, or cancer. These conditions can alter organ morphology, tissue density, and boundary clarity, thus affecting the outcomes of organ segmentation [5]. As technology advances, newer imaging devices offer higher resolution and lower noise levels, which are crucial for accurate multi-organ segmentation. However, individuals of different age groups may have undergone scans at different times, using different equipment and imaging techniques. This variability in image quality can, in turn, impact the effectiveness of segmentation.

Concerted efforts have been directed towards quantitative assessments of the impact of age on bias, with the aim of improving model fairness. Seyyed-Kalantari et al. [31] conducted disease prediction diagnostics for a chest X-ray model across three datasets and quantified disparities among subgroups divided according to age, gender, and socioeconomic status. Their study reflected the correlation between subgroups and bias at multiple levels. Janssens et al. [19] trained a convolutional neural network on organ segmentation to assist in pancreatic segmentation and identified the lower 5th and 10th percentiles of mean pancreatic Hounsfield units to investigate the association between organ segmentation performance and age. Afzal et al. [25] developed a deep learning segmentation model for kidney and tumor segmentation, stratified the dataset by age, and employed standard deviation and skewed error rate to assess the performance variances of models across various age brackets.

However, current research on age-related bias in multi-organ segmentation is not sufficiently comprehensive, despite the undeniable influence of age on multi-organ segmentation outcomes. Furthermore, the fairness of multi-organ segmentation according to age is influenced by the size of the age intervals used. For example, with narrow age intervals, each group contains few individuals, so the assessment approaches individual fairness (Section 2.1.1) and no longer reflects group fairness, whereas excessively wide intervals can obscure the relationship between bias and age. In addition, outliers can substantially disrupt the conclusions drawn. Unfortunately, no study has yet standardized the size of age intervals for fairness measurement.
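The effect of the interval size can be illustrated with a small sketch that recomputes the same disparity measure under different bin widths. The ages, DSC values, and bin widths are invented for illustration, and the standard deviation of bin means is used here only as one possible disparity measure.

```python
import statistics


def sd_across_age_bins(ages, scores, width):
    """Population SD of mean DSC across age bins of `width` years, plus the number of bins."""
    bins = {}
    for age, score in zip(ages, scores):
        bins.setdefault(age // width, []).append(score)
    means = [statistics.fmean(values) for values in bins.values()]
    return statistics.pstdev(means), len(means)


# Hypothetical cohort in which DSC declines with age.
ages = [32, 38, 45, 47, 58, 63, 71, 76]
scores = [0.93, 0.92, 0.91, 0.92, 0.89, 0.88, 0.84, 0.83]

for width in (5, 20, 100):
    sd, n_bins = sd_across_age_bins(ages, scores, width)
    print(f"width={width:>3} years  bins={n_bins}  SD of bin means={sd:.4f}")
```

With 5-year bins almost every bin holds a single subject, so the result behaves like an individual-level comparison and is sensitive to outliers. With a 100-year bin all subjects fall into one group and the age-related disparity disappears entirely.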

2.2.1.4 Organs of interest

Organs vary significantly in morphology, leading to different levels of annotation difficulty. For organs such as the heart, liver, spleen, and kidneys, most technicians can carry out annotation work relatively accurately because of their substantial prior knowledge. However, determining the shapes and edges of various types of vessels, such as the aorta, aortic arch, and cerebral vessels, is quite challenging and can lead to subjective errors. Additionally, different organs exhibit distinct characteristics on imaging. Model performance can vary significantly depending on the organ being segmented, which has direct implications for fairness in the AI models used in medical imaging. For instance, the liver and lungs generally have well-defined shapes in images, leading to relatively good segmentation performance by models. Javaid et al. [26] trained a standard U-Net model based on CT data and used the Dice score to quantify the performance differences in four experimental groups: left lung, right lung, esophagus, and spinal cord, for which the resulting Dice scores were 0.99 ± 0.01, 0.99 ± 0.01, 0.84 ± 0.07, and 0.91 ± 0.05, respectively. Often, the crucial anatomical areas of a single organ (e.g., heart atrium, heart ventricle, and brain regions) also require model segmentation for further disease analysis. However, model performance may exhibit bias because of detailed differences between various areas. For instance, Ioannou et al. [24] segmented MRI data of the brain and assessed the fairness of deep learning models by comparing the Dice scores of various regions of the organ. They observed considerable performance differences across various regions, indicating that the study of fairness in multi-organ segmentation should involve more detailed segmentation and analysis of regions of the entire organ, rather than simply segmenting a single organ. However, studies segmenting organ regions and assessing fairness are scarce.
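A per-organ comparison of this kind can be sketched as follows, assuming flattened integer label maps in which each organ has its own label value. The label ids and the toy arrays are hypothetical and are not taken from any of the cited studies.

```python
def per_organ_dice(pred, truth, labels):
    """DSC per organ for two flattened label maps of equal length."""
    result = {}
    for name, label in labels.items():
        n_pred = sum(1 for v in pred if v == label)
        n_truth = sum(1 for v in truth if v == label)
        overlap = sum(1 for p, t in zip(pred, truth) if p == label and t == label)
        total = n_pred + n_truth
        # An organ absent from both maps has no defined DSC.
        result[name] = 2 * overlap / total if total else float("nan")
    return result


# Hypothetical label ids: 0 is background.
labels = {"lung": 1, "esophagus": 2}
truth = [0, 1, 1, 1, 1, 2, 2, 0]
pred = [0, 1, 1, 1, 1, 2, 0, 2]

organ_scores = per_organ_dice(pred, truth, labels)
print(organ_scores)
```

In this toy example one misplaced voxel halves the score of the small structure while the large one is unaffected, which is one reason small or thin organs tend to show lower and more variable Dice scores.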

2.2.1.5 Others

Variations in the modalities used to obtain imaging data contribute to bias in multi-organ segmentation. Mainstream medical imaging modalities include X-ray radiography, CT, and MRI. Even for the same organ, and even when the images come from the same group of volunteers, differences between modalities can lead to biases in segmentation outcomes [42]. For instance, most liver images are obtained using CT and MRI, and these data types can exhibit substantial differences in organ clarity, brightness, contour sharpness, and annotation difficulty. Furthermore, even within a single modality, variations in equipment parameter settings can affect the features of organ imaging data, thereby leading to variations in model training results.

Differences in socioeconomic status and education level within the target population can potentially influence the effectiveness of multi-organ segmentation. Socioeconomic status and education level can impact the allocation of medical resources, individual health, and health awareness. Compared with those in poorer regions, populations with higher social status and education levels tend to place greater emphasis on healthy living habits and have access to more medical resources, which can reduce the incidence of organ-related diseases. Consequently, this group is more likely to have normal organ morphology, with clearer contours in medical images, making segmentation easier. Moreover, because of their better economic status and health awareness, this population often undergoes scans using more advanced equipment, resulting in higher-quality imaging data, which can further aid the multi-organ segmentation process.

Other sensitive attributes, such as lifestyle, body mass index (BMI), and health status, can also contribute to segmentation bias [43]. For instance, the physical characteristics of the liver and lungs are significantly correlated with individual smoking and drinking habits. A high BMI can obscure organ contours, making accurate segmentation more challenging, especially in the case of fatty liver. However, such attributes are difficult to record and reflect in multi-organ segmentation datasets. As a result, while Table 1 lists the current studies and the sensitive attributes considered in fairness assessments, no systematic analysis of multi-organ segmentation has yet addressed attributes such as BMI, health status, and lifestyle. These factors underscore the complexity of ensuring fairness in multi-organ segmentation models because variations in imaging modalities, socioeconomic status, and other sensitive attributes all contribute to potential biases.

2.2.2 Model design

A model's design, encompassing its structure, loss function, and optimizer hyperparameters, dictates its feature extraction ability and influences performance bias across different segmentation tasks [44]. It is noteworthy that in multi-organ segmentation tasks, models often prioritize understanding the masks provided by technicians and the manually set assessment metrics within medical images, rather than comprehensively capturing the global characteristics of the image data or the complex relationships between organ morphology and sensitive attributes [45]. For example, if male samples dominate the training set, the model may develop a bias towards male organ characteristics during segmentation, leading to poor performance on female subjects, particularly those with smaller organ volumes. This is especially problematic if the model fails to learn the differences in organ characteristics between men and women, or lacks the ability to distinguish whether the input data is from a male or female subject, relying solely on the image masks. It is possible for a segmentation model to be highly sensitive to data and gender, achieving minimal performance differences between male and female groups. However, the model might overly emphasize gender fairness, neglecting organ characteristics associated with race, which could result in more significant bias between different racial groups. In summary, a model's ability to extract image data features—including organ location, contour, size, and the relationships between organ characteristics and sensitive attributes—directly influences its segmentation performance across different subgroups. This performance is also directly affected by the model's architecture [44].

Afzal et al. [25] suggested that model construction impacts the fairness of organ segmentation. They used standard deviation and skewed error rate to assess fairness discrepancies in models, such as U-Net, V-Net, Attention U-Net, and nnU-Net. Their results indicated that Attention U-Net is a favorable choice for fairness concerning gender attributes, whereas the classic U-Net is better suited for age-related fairness. This finding highlights that different models have varying abilities to extract sensitive attribute information from data, leading to differing levels of fairness across groups. Interestingly, the most effective model, nnU-Net, did not demonstrate outstanding fairness.

Beyond structural design, the loss function also plays a critical role in determining fairness. The loss function establishes the optimization objectives of the model, and its composition is closely related to the assessment metrics used to assess model effectiveness. It also directly impacts model fairness. Therefore, incorporating fairness assessment metrics (such as the standard deviation of intergroup effects for each batch) into the loss function and providing the model with the attribute values of samples during the fairness calculation process can help improve fairness. Additionally, the loss function can guide the model in better understanding the feature differences among samples from different groups, thereby improving the fairness of intergroup segmentation performance. For instance, in multi-organ segmentation, introducing an additional classification task that focuses on sensitive attributes allows the model to identify which subgroup, such as men or women, the current sample belongs to before generating the segmentation output. This process enables the model to learn data features more comprehensively, thereby improving its encoding capabilities.
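As a rough sketch of the first idea, a batch loss could combine the usual Dice loss with the standard deviation of the per-group mean loss. The version below works on plain numbers to keep the arithmetic visible. An actual training loop would express the same computation with a differentiable framework, and the function name, the `weight` value, and the example batches are all hypothetical.

```python
import statistics


def fairness_regularized_loss(dice_scores, groups, weight=0.5):
    """Mean Dice loss plus `weight` times the SD of per-group mean Dice loss in one batch."""
    losses = [1.0 - d for d in dice_scores]
    by_group = {}
    for loss, group in zip(losses, groups):
        by_group.setdefault(group, []).append(loss)
    group_means = [statistics.fmean(values) for values in by_group.values()]
    # Fairness penalty: zero when every subgroup in the batch has the same mean loss.
    penalty = statistics.pstdev(group_means)
    return statistics.fmean(losses) + weight * penalty


batch_dice = [0.9, 0.9, 0.7, 0.7]

# Same scores, two different assignments of the sensitive attribute.
even = fairness_regularized_loss(batch_dice, ["F", "M", "F", "M"])
uneven = fairness_regularized_loss(batch_dice, ["F", "F", "M", "M"])
print(round(even, 4), round(uneven, 4))
```

Both batches have the same average Dice loss, but the second is penalized because all of the poorly segmented samples belong to one subgroup. Note that the attribute value of each sample must be available at training time for this term to be computed.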

Other aspects of model design may also affect segmentation fairness, including the optimizer and its hyperparameters (such as the learning rate and the number of iterations) and the network's granularity (the number of layers, the number of neurons involved in computation, and the activation functions). Current studies have found that changes in the coefficients of the loss function can impact model fairness [25]. However, the effects of the other factors mentioned above on multi-organ segmentation fairness have not yet been assessed.

Although models can be improved to better extract data features and increase fairness, this can potentially reduce the model's effectiveness. For example, while nnU-Net has demonstrated remarkable accuracy in kidney tumor segmentation, its fairness performance is not optimal [25]. This indicates that the effectiveness and fairness of existing models are not necessarily aligned, underscoring the need for researchers to consider fairness when striving to improve segmentation accuracy.

3 FAIRNESS IMPROVEMENT FOR MULTI-ORGAN SEGMENTATION

In this section, we introduce fairness improvement strategies (Figure 1) for the preprocessing, in-processing, and postprocessing stages of AI systems in segmentation tasks, focusing particularly on mitigating bias and ensuring equitable outcomes, and we summarize current studies on improving the fairness of medical image analysis (Table 2). These strategies aim to address issues of fairness and equity across different stages of the development and deployment of AI algorithms.

| Stage | Strategy | Dataset | Data type | Organ | Task | Author | Year |
|---|---|---|---|---|---|---|---|
| Pre-processing | Data resampling | UK Biobank | MRI | Heart | Cardiac segmentation | Puyol-Antón et al. [7] | 2021 |
| Pre-processing | Data resampling | NIH chest x-ray, CheXpert, and private | X-ray | Lung | Disease prediction | Brown et al. [46] | 2023 |
| Pre-processing | Data aggregation | MIMIC-CXR, Chest X-Ray8, CheXpert | CT | Lung | Disease diagnosis | Seyyed-Kalantari et al. [47] | 2021 |
| Pre-processing | Data aggregation | Private | CT | Lung | Disease diagnosis | Zhou et al. [42] | 2021 |
| Pre-processing | Data embellishment | Private | Fundus image | Retina | Vessel segmentation | Sidhu et al. [48] | 2023 |
| In-processing | Representation learning | Private | MRI | Brain | Disease diagnosis | Zhao et al. [49] | 2020 |
| In-processing | Representation learning | Private | MRI | Brain | Disease diagnosis | Adeli et al. [50] | 2021 |
| In-processing | Representation learning | Private, QINHEADNECK, and ProstateX | PET, MRI and ultrasound | Whole body | Multi-organ segmentation | Taghanaki et al. [51] | 2019 |
| In-processing | Representation learning | Private | Fundus image | Retina | Vessel segmentation | Tian et al. [52] | 2024 |
| In-processing | Disentanglement learning | CheXpert | X-ray | Lung | Disease diagnosis | Deng et al. [53] | 2023 |
| In-processing | Disentanglement learning | KiTS 2019 | CT | Kidney and tumor | Multi-organ segmentation | Afzal et al. [25] | 2023 |
| In-processing | Contrastive learning | Private | Dermoscopy | Skin | Disease diagnosis | Du et al. [54] | 2023 |
| In-processing | Federated learning | BraTS 2018 | MRI | Brain tumor | Tumor segmentation | Li et al. [55] | 2019 |
| In-processing | Federated learning | Private | MRI | Prostate | Prostate segmentation | Sarma et al. [56] | 2021 |
| In-processing | Federated learning | Private | MRI and Fundus image | Prostate and retina | Multi-organ segmentation | Jiang et al. [57] | 2023 |
| Post-processing | Model pruning and fine-tuning | TCIA and BTCV | CT | Abdomen | Multi-organ segmentation | Zhou et al. [58] | 2021 |
| Post-processing | Model pruning and fine-tuning | ISIC and private | Dermoscopy | Skin | Disease diagnosis | Wu et al. [59] | 2022 |
| Post-processing | Model pruning and fine-tuning | MIMIC-CXR | X-ray | Lung | Disease diagnosis | Marcinkevics et al. [60] | 2022 |

- Abbreviations: BraTS, brain tumor segmentation; BTCV, Multi-Atlas Labeling Beyond The Cranial Vault; CheXpert, Chest eXpert; ISIC, International Skin Imaging Collaboration; MIMIC-CXR, MIMIC Chest X-ray; NIH, National Institutes of Health; PET, Positron Emission Computed Tomography; TCIA, The Cancer Imaging Archive.

3.1 Fairness improvement through preprocessing

The most important factor that affects model performance is data. Unsatisfactory model performance and low fairness are frequently caused by low dataset quality. Therefore, to address the fairness problem in multi-organ segmentation, the data need to be preprocessed, for example through data resampling, data aggregation, and data embellishment, to reduce the sources of bias in the data itself.

3.1.1 Data resampling

Imbalances in datasets are one of the main causes of bias because they lead to disparities in a model's ability to learn and extract features from different subgroups. For example, an imbalance in the number of training subjects across subgroups leads to differences in the model's inference performance for those groups, thus causing fairness issues. Data resampling, which can be categorized into undersampling and oversampling, is often used to address dataset imbalance [61]. Undersampling randomly removes data to balance the overall dataset; for fairness purposes, the samples of each subgroup are mapped to points in a multi-dimensional space, and the more numerous groups are adaptively thinned so that the data distribution in that space is preserved while the dataset is balanced. Oversampling adds data through unsupervised learning to reduce the imbalance between subgroups. It uses a similar mapping process, but relies on generation strategies, such as a generative adversarial network, to add data [62, 63].
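As a minimal sketch of the two resampling directions, the following function balances subgroups by random selection. It is deliberately simpler than the approaches described above: it does not map samples into a feature space, and its oversampling branch duplicates existing samples rather than generating new ones with a generative model. The function name and the `group_of` callback are hypothetical.

```python
import random
from collections import defaultdict

def resample_by_group(samples, group_of, mode="under", seed=0):
    """Balance subgroup sizes by random undersampling or oversampling.

    `group_of` maps a sample to its subgroup label. In "over" mode, minority
    samples are duplicated at random (an assumption made for brevity).
    """
    rng = random.Random(seed)
    buckets = defaultdict(list)
    for sample in samples:
        buckets[group_of(sample)].append(sample)
    sizes = [len(members) for members in buckets.values()]
    target = min(sizes) if mode == "under" else max(sizes)
    balanced = []
    for members in buckets.values():
        if mode == "under":
            # Keep a random subset of each group, sized to the smallest group.
            balanced.extend(rng.sample(members, target))
        else:
            # Pad each group with random duplicates up to the largest group.
            extra = [rng.choice(members) for _ in range(target - len(members))]
            balanced.extend(members + extra)
    return balanced
```

Fixing the seed makes the selection repeatable, which partly addresses the reproducibility concern that random resampling otherwise raises.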

A few studies have argued that oversampling is more effective for balancing data [64-66], whereas others have reported that oversampling did not work well [67]. Medical imaging data are valuable, and the extraction and labeling of imaging data from volunteers or patients require substantial human, financial, and time resources. The imbalance between subgroups (such as race and age) can be remarkable in popular research fields such as AI disease diagnosis and AI cancer site segmentation and prediction. Choosing to delete data in pursuit of balance can substantially increase the relative cost of a project because the data collection process is time-consuming, labor-intensive, and costly. Reducing the amount of data also wastes resources and can degrade the generalization ability of the model. As an alternative, oversampling balances the dataset by adding data, but the usability and feasibility of the added data may be somewhat compromised, and experimental results are hard to reproduce because of randomness. Moreover, the generated data may have quality problems, and the introduction of outliers can degrade overall model training. Nevertheless, a few studies have combined the two data-resampling strategies to further improve a model's performance [68].

Brown et al. [46] used undersampling to generate a biased dataset and a balanced dataset from original chest radiography data and found that the balanced dataset achieved a balanced distribution of negative and positive labels across all age groups. Puyol-Antón et al. [7] used batch sampling to ensure racial group balance within each batch to improve the fairness of cardiac MRI segmentation.
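Balancing subgroups within each batch can be sketched as a sampler that draws the same number of indices from every group. This is an illustrative sketch only and is not claimed to match the implementation in ref. [7]; the function name and its parameters are hypothetical, and sampling with replacement is an assumption that lets small groups fill their quota.

```python
import random
from collections import defaultdict

def balanced_batches(groups, per_group, n_batches, seed=0):
    """Yield lists of sample indices with `per_group` indices from every subgroup."""
    rng = random.Random(seed)
    index_by_group = defaultdict(list)
    for i, group in enumerate(groups):
        index_by_group[group].append(i)
    for _ in range(n_batches):
        batch = []
        for indices in index_by_group.values():
            # Sample with replacement so that small groups can fill their quota.
            batch.extend(rng.choices(indices, k=per_group))
        rng.shuffle(batch)
        yield batch
```

Unlike dataset-level undersampling, this keeps every sample available for training and only changes how often each one is visited.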

3.1.2 Data aggregation

Imbalances in the datasets used for model training can arise because of various real-world factors such as geography, policy, and even culture. For instance, multi-organ segmentation models designed in African countries often use data primarily from Black populations. In contrast, similar models in Europe and America mainly use data from White or Mixed-race individuals. However, such datasets can be balanced by aggregating them so that they complement one another, thereby reducing model bias. In short, data aggregation combines multiple datasets to solve the data imbalance problem. Because most datasets, especially those used for multi-organ segmentation, have considerable uniformity, achieving balance among various subgroups through data aggregation is highly feasible.

Seyyed-Kalantari et al. [47] aggregated the MIMIC Chest X-ray, Chest X-Ray8, and CheXpert datasets to form an integrated dataset, which was then used for model training. Their experimental results indicated that the model trained on this integrated dataset exhibited a lower disparity in true positive rate, implying higher model fairness.
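A true positive rate disparity of this kind can be computed per subgroup as sketched below. Summarizing the disparity as the difference between the highest and lowest group rates is an assumption made for illustration; the cited study may define disparity differently. The function names are hypothetical.

```python
from collections import defaultdict

def tpr_by_group(y_true, y_pred, groups):
    """True positive rate for each subgroup, given binary labels and predictions."""
    true_pos = defaultdict(int)
    positives = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            positives[group] += 1
            true_pos[group] += int(pred == 1)
    # Groups without any positive sample are omitted from the result.
    return {g: true_pos[g] / positives[g] for g in positives}

def tpr_gap(y_true, y_pred, groups):
    """Largest difference in true positive rate between any two subgroups."""
    rates = tpr_by_group(y_true, y_pred, groups)
    return max(rates.values()) - min(rates.values())
```

A gap close to zero indicates that positive cases are detected at similar rates in all subgroups.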

Data aggregation is also commonly applied in the multimodal training of AI networks to help them fully learn data features and achieve more accurate predictions. Different data modalities focus on capturing distinct characteristics of the target organ. For example, CT images provide comprehensive information on the density of an organ, whereas MRI images better represent the contrast in soft tissues, and these image features may be influenced by different sensitive attributes. Moreover, single-modality data may suffer from noise issues; for instance, patients from certain racial or age groups may exhibit poor image quality on a specific modality, which can hinder accurate multi-organ segmentation. By integrating multiple modalities, the model can leverage data from other modalities to compensate for this deficiency, thereby balancing multi-organ segmentation performance across different groups. Zhou et al. [42] aggregated CT scans with electronic health records for model training and compared the results with those obtained from training on individual datasets. They observed that the models trained on the aggregated datasets generally performed better across multiple subgroups than the control group, with relatively lower bias among groups, that is, a lower true positive rate gap between the groups. The results of this experiment demonstrate the feasibility of using multimodal dataset integration to address fairness issues.

However, data aggregation is often accompanied by other preprocessing strategies. First, the modalities and instrument parameters of different image datasets may be inconsistent, which requires preprocessing strategies such as data augmentation or image resampling to reduce their impact on the fairness of the model. Second, different datasets may record the same sensitive attribute in different units or forms, which requires unification. For example, one dataset may record BMI for fairness assessment, whereas another records only the classes overweight, healthy, and underweight for each sample. To aggregate the two datasets, the metric for measuring obesity needs to be standardized.
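The BMI example can be made concrete with a small harmonization step that converts numeric BMI values into the three classes used by the other dataset. The cutoffs of 18.5 and 25 kg/m² follow the commonly used adult thresholds and are an assumption here, as the text does not specify them; the record field names `bmi` and `weight_class` are hypothetical.

```python
def bmi_category(bmi):
    """Map a numeric BMI (kg/m^2) to a coarse class; cutoffs are assumed."""
    if bmi < 18.5:
        return "underweight"
    if bmi < 25.0:
        return "healthy"
    return "overweight"

def harmonize(records_numeric, records_categorical):
    """Merge two record lists so that every record carries a `weight_class`."""
    merged = [{**r, "weight_class": bmi_category(r["bmi"])} for r in records_numeric]
    merged += [dict(r) for r in records_categorical]
    return merged
```

Converting in this direction discards information, because categorical records cannot be mapped back to numeric BMI, so the aggregated dataset supports fairness analysis only at the coarser level.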

3.1.3 Data embellishment

Data embellishment is used for image preprocessing and refers to the filtering out of original data noise through artificially specified rules. For instance, data embellishment can be applied in the preprocessing phase of facial recognition. Noise data, such as skin and hair color, can be filtered out by transforming original facial data into sketch data, reducing the generation of bias [42]. Data embellishment also has considerable application value for multi-organ segmentation. Multi-organ segmentation is often used as an intermediary process in research to facilitate subsequent feature extraction to improve model performance in tasks such as disease diagnosis and lesion analysis. During the data aggregation process, data from various regions may show inconsistencies in image size, brightness, and color due to equipment or parameter differences, affecting the performance of the model and creating bias in various sub-datasets. Image resampling is often employed as a solution for image size discrepancies; however, brightness and color inconsistencies, which may be a form of noise in imaging data, can be filtered out through data embellishment, thereby reducing the bias due to data specification inconsistencies in models trained on diverse sub-datasets. Sidhu et al. [48] used principal component analysis (PCA) to process retinal blood vessel images into grayscale images during the preprocessing step, and then applied contrast-limited adaptive histogram equalization to highlight retinal blood vessels in the grayscale image, effectively eliminating the impact of color on model training.
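One way to read PCA-based grayscale conversion is as a projection of each RGB pixel onto the first principal component of the colour distribution. The sketch below illustrates that reading in plain Python using power iteration; it is not claimed to reproduce the procedure of ref. [48], the function name is hypothetical, and the subsequent contrast-limited adaptive histogram equalization step is omitted (in practice a library routine would typically be used for it).

```python
def pca_grayscale(pixels, iters=100):
    """Project RGB pixels onto their first principal component, rescaled to [0, 1].

    `pixels` is a list of (r, g, b) tuples. The sign of a principal component
    is arbitrary, so the result may be intensity-inverted for some images.
    """
    n = len(pixels)
    mean = [sum(p[c] for p in pixels) / n for c in range(3)]
    centered = [[p[c] - mean[c] for c in range(3)] for p in pixels]
    # 3x3 covariance matrix of the colour channels.
    cov = [[sum(x[i] * x[j] for x in centered) / n for j in range(3)]
           for i in range(3)]
    # Power iteration for the leading eigenvector; the asymmetric start vector
    # makes it unlikely to be exactly orthogonal to that eigenvector.
    v = [1.0, 0.7, 0.4]
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(3)) for i in range(3)]
        norm = sum(x * x for x in w) ** 0.5
        if norm == 0.0:
            # Constant image (or degenerate start): nothing to project.
            return [0.0] * n
        v = [x / norm for x in w]
    scores = [sum(x[c] * v[c] for c in range(3)) for x in centered]
    lo, hi = min(scores), max(scores)
    return [(s - lo) / (hi - lo) for s in scores]
```

Because a single component is kept, colour variation that does not align with it is discarded, which is the intended filtering effect but also the source of the feature loss discussed in this section.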

Data embellishment, which includes data augmentation strategies such as PCA, can be used to eliminate data features that contribute to unfairness issues, resulting in image data with similar features across subgroups. For example, in populations with organ lesions, the use of PCA can reduce the representation of lesion characteristics in the images while preserving the original features of the organs to be segmented. This makes the model less likely to be influenced by the lesion characteristics, allowing it to achieve better segmentation performance, even in areas affected by lesions. However, this approach may lead to the loss of original features that are beneficial for segmentation. For instance, when PCA is used, image features are compressed, and while this reduces the influence of factors such as health conditions, it can also blur organ contours, making segmentation more difficult. Additionally, there are challenges in training and fine-tuning the segmentation model on the processed data; the data improvement process is time-consuming, especially for multi-dimensional CT and MRI data.

3.2 Fairness improvement through in-processing

A model's ability to encode data affects both its inference performance and its fairness. For example, some studies add model branches that extract the discriminatory factors in the data, or introduce a new loss function that makes fairness part of the training objective. This section presents in-processing strategies for fairness improvement, including representation learning, disentanglement learning, contrastive learning, and federated learning.

3.2.1 Representation learning

Representation learning is the process of converting data that computers cannot interpret directly, such as raw images, natural language, and sound, into numerical vectors. This process helps models learn the fundamental features of the data and ignore nuisance information, such as gender, skin color, and age, thus reducing the performance gap between subgroups. For fairness, representation learning extracts features from the original data and focuses on reducing the distance between the features of different subgroups in the embedding space, to reduce or even eliminate the model's bias across subgroups. Adeli et al. [50] proposed a feature extraction model based on adversarial learning and introduced an adversarial loss function to reduce the correlation between the bias variable and the learned features. Furthermore, data features can contain confounding factors, and these covariates affect the fairness of the model. For example, when a model is trained for multi-organ segmentation, the training data for a particular organ may be scarcer in an older subgroup. In this case, age acts as a confounder in the multi-organ segmentation process. To reduce the effect of confounding factors, Zhao et al. [49] proposed an end-to-end training strategy to extract features that are invariant to confounding factors such as age and gender.
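The notion of reducing the correlation between a bias variable and the learned features can be illustrated with the squared Pearson correlation, a statistic that a decorrelation loss could penalize. The sketch below computes only this statistic for one feature dimension; it is not the adversarial training procedure of the cited work, and the function name is hypothetical.

```python
def squared_correlation(feature, attribute):
    """Squared Pearson correlation between one feature and a sensitive attribute."""
    n = len(feature)
    mean_f = sum(feature) / n
    mean_a = sum(attribute) / n
    cov = sum((f - mean_f) * (a - mean_a) for f, a in zip(feature, attribute))
    var_f = sum((f - mean_f) ** 2 for f in feature)
    var_a = sum((a - mean_a) ** 2 for a in attribute)
    if var_f == 0 or var_a == 0:
        # A constant feature or attribute carries no linear dependence.
        return 0.0
    return cov * cov / (var_f * var_a)
```

A value near 1 means that the feature almost linearly encodes the attribute (for example, age), whereas a value near 0 means no linear dependence; nonlinear dependence is not captured by this statistic.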

3.2.2 Disentanglement learning

Disentanglement learning decomposes the factors of variation in the feature representations of a dataset into independent subfactors, each representing a certain real-world meaning. In machine learning, the bias a model exhibits across various subgroups arises from its inadequate learning of the dataset's features. This means that a few features related to sensitive attributes are also integrated into representations generated by the model, leading to a correlation between the model's output and the sensitive attributes. The subfactors unrelated to sensitive attributes in the dataset can be separated out through disentanglement learning, and the unbiased features so-obtained are particularly feasible for fairness improvement. Sarhan et al. [69] disentangled meaningful and sensitive representations from the original autism brain imaging data exchange dataset, using a forced orthogonal constraint as a proxy for independence. They enforced a reduction in the correlation between meaningful representation and sensitive representation through entropy maximization to obtain unbiased representations. Afzal et al. [25] incorporated a DenseNet architecture as a novel branch for gender classification into their segmentation model, thereby introducing a gender detection module into an existing multi-organ segmentation framework. This improvement assisted the model in distinguishing between sensitive attributes and common features, thereby mitigating spurious correlations between sensitive attributes and the representations learned for the segmentation task. Deng et al. [53] adjusted the relationship between the target representation and sensitive representation in the column space and row space, respectively, when disentangling the features of the CheXpert dataset, thereby achieving orthogonality in the representation space for the independence between target and multi-sensitive representations.
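An orthogonality constraint between target and sensitive representations can be sketched as a penalty on their cosine similarity. This is a minimal illustration under the assumption that each sample yields one target vector and one sensitive vector of equal length; it does not reproduce the column-space and row-space formulation of ref. [53] or the entropy-based objective of ref. [69], and the function name is hypothetical.

```python
def orthogonality_penalty(target_vecs, sensitive_vecs):
    """Mean squared cosine similarity between paired target and sensitive embeddings.

    The penalty is 0 when every pair is orthogonal and 1 when every pair is
    parallel; pairs containing a zero vector contribute 0.
    """
    total = 0.0
    for t, s in zip(target_vecs, sensitive_vecs):
        dot = sum(a * b for a, b in zip(t, s))
        norm_t = sum(a * a for a in t) ** 0.5
        norm_s = sum(b * b for b in s) ** 0.5
        if norm_t and norm_s:
            total += (dot / (norm_t * norm_s)) ** 2
    return total / len(target_vecs)
```

Adding such a term to the training objective pushes the two representations apart, with orthogonality serving only as a proxy for statistical independence, as noted above.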

3.2.3 Contrastive learning

Contrastive learning is based on the unsupervised learning paradigm. Its objective is to train an encoder that encodes similar data within a dataset in a similar manner while encoding dissimilar data as distinctly as possible [70]. Du et al. [54] introduced an additional neural network branch into disentanglement learning to act as a contrastive learning module, which aided the disentanglement of the target representation and the sensitive representation. Owing to the contrastive learning mechanism, the disentangled target representation showed a lower correlation with the sensitive attribute, thereby further reducing the model bias across various subgroups.
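The contrastive objective can be illustrated with an InfoNCE-style loss, a common formulation that is used here as an assumption; the text does not state which loss the cited work employed. The function name and the `temperature` default are hypothetical, and all embeddings are assumed to be non-zero vectors.

```python
import math

def info_nce(anchor, positive, negatives, temperature=0.1):
    """InfoNCE-style loss for one anchor embedding.

    The loss is low when the anchor is closer (in cosine similarity) to the
    positive embedding than to the negative embeddings.
    """
    def cos(u, v):
        dot = sum(a * b for a, b in zip(u, v))
        norm_u = math.sqrt(sum(a * a for a in u))
        norm_v = math.sqrt(sum(b * b for b in v))
        return dot / (norm_u * norm_v)

    logits = [cos(anchor, positive) / temperature]
    logits += [cos(anchor, neg) / temperature for neg in negatives]
    # Numerically stable log-sum-exp over all candidates.
    peak = max(logits)
    log_sum = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return log_sum - logits[0]
```

In a fairness setting, the choice of which pairs count as positive or negative determines what the encoder learns to ignore, for example treating samples that differ only in a sensitive attribute as positives.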

3.2.4 Federated learning

In machine learning, federated learning is a distributed computing strategy that allows models to be trained on data distributed across various devices and servers while preserving data privacy [71]. Unlike traditional machine learning strategies, federated learning keeps the data on each local server and performs the training step there. The initial model from the central server is shared with all local servers that hold data for model training, and the central server ultimately aggregates the local results and updates the model. This not only protects data privacy but also addresses the problem of data imbalance by aggregating the training results from multiple local servers, which can substantially improve model fairness. Conversely, model fairness may decrease due to a decline in data diversity when the model relies on a single dataset. In radiology, federated learning has been applied in areas such as multi-organ segmentation, lesion detection, organ image classification, and disease tracking based on specific modalities through multi-institutional collaboration [72, 73].
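The aggregation step on the central server can be sketched as a weighted average of the parameters returned by the local servers. Weighting each client by its number of training samples is an assumption in the style of federated averaging; the text does not prescribe a weighting scheme, and fairness-oriented variants may weight clients differently. The function name is hypothetical.

```python
def federated_average(client_weights, client_sizes):
    """Aggregate client parameter vectors, weighting each by its sample count.

    `client_weights` is a list of equal-length parameter lists, one per local
    server; `client_sizes` gives the number of training samples on each.
    """
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * size for w, size in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]
```

Only parameters leave each site in this scheme, so the raw images and the sensitive attributes attached to them remain local.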

Jiang et al. [57] proposed federated training through contribution estimation and used standard deviation, Pearson correlation, and Euclidean distance to assess fairness in two organ segmentation tasks, demonstrating the effects federated learning had on improving organ segmentation.

3.3 Fairness improvement through postprocessing

For postprocessing-based fairness improvement in machine learning tasks, a strategy of model pruning and fine-tuning can be adopted [58, 74, 75]. “Model pruning” optimizes a neural network model by eliminating unnecessary parameters and connections. It improves the efficiency and generalizability of a model by removing redundant connections and reducing model complexity. Model pruning can be based on the importance of parameters or on other pruning criteria [76]. “Fine-tuning,” in contrast, serves as a model correction mechanism when substantial bias across experimental groups emerges in the results. Primary strategies include category threshold adjustments and cost-sensitive learning.
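Importance-based pruning can be sketched with weight magnitude as the importance score. Using magnitude is an assumption chosen for simplicity; a fairness-oriented variant would instead score parameters by their contribution to the performance gap between groups. The function name and the `sparsity` parameter are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    if k == 0:
        return list(weights)
    # Indices of the k weights with the smallest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]
```

After pruning, the remaining weights are usually fine-tuned so that the model recovers accuracy while the removed parameters stay at zero.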

In multi-organ segmentation, every parameter in the segmentation model holds diverse significance for effectiveness across various subgroups. By pruning corresponding parameters, the accuracy discrepancy between the privileged and unprivileged groups can be reduced, thereby improving fairness without a substantial decrease in accuracy. Wu et al. [59] and Marcinkevics et al. [60] employed pruning and fine-tuning strategies for postprocessing pretrained models and adjusting model parameters for deployment. They proposed simple, yet highly effective, strategies that could be effortlessly applied when protected attributes remained unknown during model development and testing phases.

Moreover, model interpretability can serve as a tool to help uncover features leading to bias among subgroups during the postprocessing phase. Techniques such as SHapley Additive exPlanations and Integrated Gradients can improve model interpretability, notably by quantifying the importance of features [77]. For example, ref. [78] demonstrated a substantial correlation between unfairness and sensitive features with higher SHapley Additive exPlanations values, providing guidance for the causal analysis of unfairness and its mitigation. Another study revealed that ethnicity ranked as one of the most critical features leading to bias across ethnicity, gender, and age subgroups [37, 38]. This finding aligned with that obtained in ref. [24], where comparative experimentation demonstrated the race bias effect to be more pronounced than that of gender.

4 DISCUSSION

Fairness is not just a fundamental measure of AI usability but a cornerstone for its responsible application in medical imaging, particularly in multi-organ segmentation. Accounting for fairness also offers a novel avenue for model optimization. However, addressing model fairness remains a significant challenge, particularly given the morphological variations in human organs that are influenced by sensitive attributes such as BMI, health status, and lifestyle. Unfortunately, systematic fairness research on these sensitive attributes is lacking, even though they often correlate with race, gender, and age. Research that considers multiple attributes jointly, rather than assessing fairness independently for each attribute, will be beneficial in understanding the causes of fairness issues and in addressing them effectively.

Most existing studies focus on multi-organ segmentation models trained on unimodal data, which can be influenced by a single sensitive attribute, leading to noise, artifacts, or resolution limitations that can affect model performance. For instance, with MRI scans, certain racial groups may exhibit lower image quality due to equipment calibration biases, leading to poorer model performance for these groups. As a result, fairness assessment results for the same segmentation model can be inconsistent across different modalities. To provide more objective fairness assessment results or improve model fairness, it is necessary to incorporate multimodal datasets into model training.

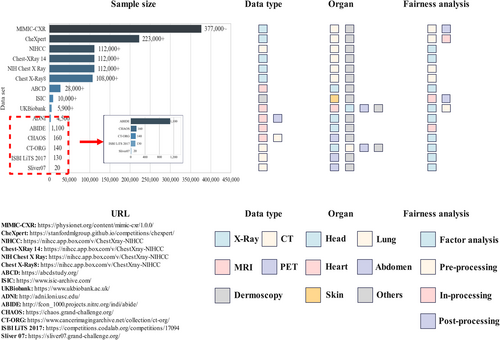

Figure 2 provides information on public datasets used in current fairness studies, and reveals that most datasets are unimodal and lack organ diversity. Consequently, single public datasets are insufficient to support fairness research in multi-organ segmentation. Additionally, the inconsistency in the types of sensitive attributes recorded across different datasets poses challenges for conducting joint fairness analyses. In this context, private datasets offer advantages in fairness research. Private datasets do not suffer from inconsistencies in sensitive attributes across datasets. Furthermore, various sensitive attributes, including BMI and health status, can be independently collected. More importantly, private datasets can be tailored to include scans of different organs and modalities, as needed.

Public datasets that have been used for assessing fairness of multi-organ segmentation. ABCD, the adolescent brain cognitive development; ABIDE, the autism brain imaging data exchange; ADNI, the Alzheimer’s disease neuroimaging initiative; CHAOS, combined healthy abdominal organ segmentation; CheXpert, Chest eXpert; CT-ORG, CT volumes with multiple organ segmentations; ISBI LiTS 2017, ISBI The Liver Tumor Segmentation Benchmark 2017; ISIC, International Skin Imaging Collaboration; MIMIC-CXR, MIMIC Chest X-ray; NIHCC, National Institutes of Health Clinical Center; NIH Chest X Ray, National Institutes of Health Chest X Ray; Sliver07, Segmentation of the Liver Competition 2007.

The issue of dataset imbalance for model training is difficult to overcome. Data collection is costly and time-consuming, and without additional intervention, controlling the distribution of collected data is challenging. While data resampling is used to balance data, both undersampling and oversampling have limitations. However, data imbalance can be addressed through data aggregation and federated learning, and sensitive attribute information can be used effectively. Nonetheless, the combined dataset requires further preprocessing to address differences in modalities and data collection parameters across datasets.

As big data, multimodality, and high computational complexity become increasingly prevalent in multi-organ segmentation tasks, foundation models have been proposed. These models support segmentation across multiple modalities and organs and exhibit strong generalization capabilities. Because they integrate datasets with varying characteristics, including modality, image size, and equipment parameters, foundation models are more compatible with private datasets than traditional multi-organ segmentation models. Consequently, the emergence of these foundation models facilitates researchers' use of private datasets for multi-organ segmentation tasks and fairness assessments. However, while foundation models present a promising approach to addressing fairness in multi-organ segmentation, they may also introduce new challenges, particularly when applied to diverse datasets with varying attributes. Currently, no systematic or comprehensive studies have assessed fairness in multi-organ segmentation tasks using foundation models. In one early example, Li et al. [79] used three models (Segment Anything in medical scenarios, driven by Text prompts; Medical Segment Anything; and Segment Anything) to segment the liver, kidney, spleen, lung, and aorta, and found that the first of these models was significantly fairer with respect to gender, age, and BMI. However, this study was limited by the small number of organs examined and relied solely on the DSC metric for evaluating fairness.

Research focused on improving fairness in multi-organ segmentation is currently limited. As shown in Table 2, most strategies designed to improve fairness have been applied to other medical image analysis tasks, such as disease diagnosis. Notably, these strategies also have the potential to be adapted for fairness improvement in multi-organ segmentation, suggesting that researchers should consider transferring these approaches to this field. Additionally, with the increasing prevalence of foundation models in multi-organ segmentation and the growing significance of their fairness issues, adapting the fairness improvement strategies developed for conventional segmentation models to foundation models warrants further investigation.

In summary, considering the expanding application of AI in medical imaging, the demand for precise and fair multi-organ segmentation has increasingly become a central focus, presenting numerous challenges for researchers. Fairness is poised to emerge as a key aspect of model optimization. Future studies should delve deeper into the role of fairness in multi-organ segmentation because many untapped patterns and solutions remain to be discovered. Moreover, as foundation models gain prominence, the need for systematic fairness assessment in multi-organ segmentation tasks will become increasingly critical, paving the way for the next wave of research in medical AI.

5 CONCLUSIONS

This review has examined fairness in multi-organ segmentation, identifying factors contributing to bias and strategies to improve fairness during model development. It also highlights current gaps in fairness research within the field, offering insights for future model optimization and application in medical practice. As AI advances in medical imaging, addressing fairness is essential to improve accuracy and reduce disparities across diverse subgroups. Researchers should apply fairness strategies from other medical imaging tasks, such as disease diagnosis; with the rise of foundation models, the fairness of these models in multi-organ segmentation needs particular attention.

AUTHOR CONTRIBUTIONS

Qing Li: Methodology (lead); writing—original draft (lead); writing—review & editing (lead). Yizhe Zhang: Supervision (lead); writing—review & editing (equal). Longyu Sun: Writing—review & editing (equal). Mengting Sun: Writing—review & editing (equal). Meng Liu: Writing—review & editing (equal). Zian Wang: Writing—review & editing (equal). Qi Wang: Writing—review & editing (equal). Shuo Wang: Supervision (lead); writing—review & editing (lead). Chengyan Wang: Supervision (lead); writing—review & editing (lead).

ACKNOWLEDGMENT

The computations in this research were performed using the Computing for the Future at Fudan platform of Fudan University.

CONFLICT OF INTEREST STATEMENT

The authors declare no conflicts of interest.

ETHICS STATEMENT

Not applicable.

INFORMED CONSENT

Not applicable.

Open Research

DATA AVAILABILITY STATEMENT

Data sharing is not applicable to this article as no new data were created or analyzed.