Large language models debunk fake and sensational wildlife news

通过大型语言模型揭露虚假和耸人听闻的野生动物新闻

Editor-in-Chief & Handling Editor: Ahimsa Campos-Arceiz

Abstract

In the current era of rapid online information growth, distinguishing facts from sensationalized or fake content is a major challenge. Here, we explore the potential of large language models as a tool to fact-check fake news and sensationalized content about animals. We queried the most popular large language models (ChatGPT 3.5 and 4, and Microsoft Bing), asking them to quantify the likelihood of 14 wildlife groups, often portrayed as dangerous or sensationalized, killing humans or livestock. We then compared these scores with the “real” risk obtained from relevant literature and/or expert opinion. We found a positive relationship between the likelihood risk score obtained from large language models and the “real” risk. This indicates the promising potential of large language models in fact-checking information about commonly misrepresented and widely feared animals, including jellyfish, wasps, spiders, vultures, and various large carnivores. Our analysis underscores the crucial role of large language models in dispelling wildlife myths, helping to mitigate human–wildlife conflicts, shaping a more just and harmonious coexistence, and ultimately aiding biological conservation.

摘要

在当前网络信息快速增长的时代,区分事实与耸人听闻或虚假的内容是一项重大挑战。本文探讨了大型语言模型作为一种工具在检查关于动物的假新闻和耸人听闻的内容上的潜力。我们对最流行的大型语言模型(ChatGPT 3.5 和 4,以及 Microsoft Bing)进行了查询,要求它们量化 14 种野生动物群体杀害人类或牲畜的可能性,这些动物群体通常被描述为危险的或耸人听闻的。然后,我们将这些分数与从相关文献和/或专家意见中获得的“真实”风险进行比较。我们发现,大语言模型得出的可能性风险得分与“真实”风险之间存在正相关关系。这表明,大型语言模型在核对有关水母、胡蜂、蜘蛛、秃鹫和各种大型食肉动物等普遍被误传和令人广为恐惧的动物的信息方面具有广阔的潜力。本文的分析结果展现了大型语言模型在消除野生动物流言、帮助缓解人类与野生动物冲突、塑造更加公正和谐的共存关系以及最终助力生物保护方面的关键作用。【审阅:孟义川】

Plain language summary

In today's digital age, distinguishing accurate information from misinformation and sensationalized or fake content is very challenging. We investigated the effectiveness of large language models, such as ChatGPT and Microsoft Bing, in fact-checking fake news about animals. We asked these large language models to evaluate the likelihood of wildlife, often portrayed as dangerous, killing humans or livestock. We selected 14 wildlife groups, including jellyfish, wasps, spiders, vultures, and various large carnivores. The scores from the large language models were then compared to data from scientific literature and expert opinions. We found a clear positive correlation between the risk assessments made by the large language models and real-world data, suggesting that these models may be useful for debunking wildlife myths. For example, the large language models accurately identified that animals like vultures pose no measurable risk to humans or livestock, while some large carnivores are more dangerous to livestock. By accurately identifying the true risks posed by various wildlife species, large language models can help reduce fear and misinformation, thereby promoting a more balanced understanding of human–wildlife interactions. This can aid in mitigating conflicts and ultimately promote harmonious coexistence.

简要语言摘要

在当今的数字时代,区分准确的信息与错误的、耸人听闻的或虚假的内容非常具有挑战性。我们研究了大型语言模型(如ChatGPT和Microsoft Bing)在检查关于动物的假新闻方面的有效性。我们选取了通常被描述为危险的,会杀死人类或牲畜的14个野生动物类群,包括水母、胡蜂、蜘蛛、秃鹫和各种大型食肉动物。然后使用这些大型语言模型来评估野生动物对人与牲畜产生伤害的可能性,并将大型语言模型的得分与科学文献和专家意见的数据进行比较。

我们发现大型语言模型的风险评估与现实世界数据之间存在明显的正相关关系,这表明这些模型可能有助于揭开野生动物的流言。例如,大型语言模型准确地识别出像秃鹫这样的动物对人类或牲畜没有可测量的风险,而一些大型食肉动物对牲畜更危险。通过准确识别各种野生动物带来的真实风险,大型语言模型可以帮助人们减少恐惧和错误信息,从而促进对人类与野生动物交互的更平衡的理解。这有助于缓解人与野生动物的冲突,最终促进和谐共处。

Practitioner points

- Large language models, such as ChatGPT and Microsoft Bing, can provide accurate and balanced assessments of the true risks posed by wildlife to humans and livestock.
- Large language models correctly distinguished animals that pose no threat to humans and livestock from others that are more dangerous, aligning well with real-world data.
- By providing accurate risk assessments, these models can help promote coexistence between humans and wildlife.

实践者要点

- 大型语言模型(ChatGPT和Microsoft Bing)可以准确和均衡地评估野生动物对人类和牲畜造成的真实风险。
- 大型语言模型能够正确分类那些对人类和牲畜不构成威胁的动物与其他更危险的动物,其分类结果与真实世界数据高度吻合。
- 通过提供准确的风险评估,这些模型可以帮助促进人类和野生动物之间的共存。

1 INTRODUCTION

The 21st century has seen an explosion of online information and media growth. The rapid pace at which information is disseminated online, while fostering increased access to diverse perspectives, has concurrently given rise to a significant challenge: discerning facts from fake news and sensationalized content (Lazer et al., 2018). The boundaries between facts and deceptive narratives are often blurred, making it arduous for individuals to navigate an increasingly polluted information ecosystem (Lazer et al., 2018). Therefore, it is necessary to explore new tools that can be widely used to debunk false narratives.

In 2023, large language models (hereafter LLMs), such as ChatGPT, surged to prominence, revolutionizing diverse fields (e.g., medicine, public policy, technology, and industry) with their advanced natural language processing capabilities. LLMs are advanced artificial intelligence systems trained on vast text data sets, enabling them to understand, generate, and manipulate human language text with high accuracy. From enhancing communication tools to driving breakthroughs in artificial intelligence (AI) research, the rise of LLMs marks a transformative leap in the way we interact with language and technology. Currently, generative AI is generally considered a potential ally in biological conservation, for example by speeding up evidence reviews towards conservation policy (Tyler et al., 2023). Hence, there is an urgent need to quantify the potential of these models to shape information landscapes by either propagating or debunking misinformation and fake or sensationalized news (e.g., Jones, 2024; Xinyi et al., 2024).

Animals perceived as “dangerous” can arouse strong emotional responses in humans, making animal-related sensationalized content powerful clickbait, often through the mechanism of cognitive attraction (Acerbi, 2019). Cognitive attraction describes a psychological tendency of individuals to align with ideas, stimuli, or patterns based on cognitive processes rather than emotional or sensory responses. Over time, a negative and sensationalized framing of wildlife, as often seen in online media news (e.g., for large carnivores [Nanni et al., 2020], spiders [Mammola et al., 2022], or bats [Okpala et al., 2024]), may propel the “illusory truth effect.” This effect posits that repeated exposure to a statement increases the likelihood of individuals accepting it as true, irrespective of its veracity (Brashier & Marsh, 2020). The illusory truth effect can shape people's beliefs and emotions toward the natural world (Soga et al., 2023), potentially to the extent of influencing conservation policies (Knight, 2008). With the increasing reliance on LLMs as a primary information source in contemporary society, it becomes critically important to evaluate the accuracy and reality alignment of the information they disseminate. This examination is particularly crucial in the context of emerging issues characterized by divergent perspectives on human–nature interactions. One such issue is human–wildlife conflict, which arises from direct encounters between humans (or their livestock) and wildlife (Zimmermann et al., 2020). Given that these conflicts often stem from misconceptions about wildlife, frequently depicted as dangerous or sensationalized, LLMs hold a significant, yet unexplored, potential to mitigate these negative encounters. They can achieve this by offering the public balanced and factual information about commonly misrepresented animal species.

Therefore, this study explores the potential of LLMs as a tool to fact-check fake news and sensationalized content about animals. This is relevant and timely because the vast amount of emerging information available to date is mainly fact-checked manually, for example, by consulting credible sources or fact-checking organizations. Specifically, we first quantify the likelihood, as estimated by generative AI models, that each of 14 wildlife groups often portrayed as dangerous or sensationalized kills humans or livestock. We then compare these LLM-derived likelihoods with the actual risk that these wildlife groups pose to humans and livestock, as sourced from authoritative sources. Given the wide range of training information to which LLMs are exposed, including scientific literature, we predict that the likelihood scores of the 14 wildlife groups causing harm, as assessed by the LLMs, will closely align with the real conditions based on scientific evidence. Ultimately, we expect that LLMs will be effective in debunking fake and sensational news about wildlife groups often portrayed as dangerous or sensationalized.

2 MATERIALS AND METHODS

To quantify the potential of generative AI as an ally for fact-checking information about animal group interactions with people and livestock, we queried three of the most popular LLMs: ChatGPT 3.5 and 4, and Microsoft Bing. For the latter, we used the “precise” conversation style option, as we were interested in fact-checking. For each query, we recorded the likelihood of selected animal groups killing humans or livestock. We selected 14 target animal groups based on their (i) perceived danger to humans and/or livestock (Correia & Mammola, 2023); and (ii) their frequent targeting by fake or sensational information. For consistency and as a control, we included all 14 animal groups in both queries related to killing humans and livestock. Therefore, some unlikely interactions, such as between jellyfish and livestock, which inhabit different realms, were also included. The animal groups considered are jellyfish (Vandendriessche et al., 2016), spiders (Mammola et al., 2022), wasps (Sumner et al., 2018), sharks (Nanni et al., 2020), snakes (Bolon et al., 2019), crocodiles (Nanni et al., 2020), vultures (Lambertucci et al., 2021), bears (Nanni et al., 2020), dogs (Overall & Love, 2001), wolves (Nanni et al., 2020), lions (Nanni et al., 2020), leopards (Nanni et al., 2020), elephants (Maurer et al., 2024), and hippos (van Houdt & Traill, 2022). In the case of dogs, we refer to domestic, feral, or free-ranging dogs. The formulation of each query was “On a scale from extremely low, very low, low, moderate, high, very high, extremely high, can you give me a score of how likely (animal group 1–14) are to kill humans/livestock?”
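The query template above can be expanded programmatically. The following is a minimal sketch of that step, not the authors' own procedure: the names `ANIMAL_GROUPS`, `TARGETS`, `SCALE`, and `build_query` are hypothetical, and the wording of each animal group is taken from the list in the text.

```python
# Illustrative sketch only: all identifiers here are assumptions, not the authors' code.
ANIMAL_GROUPS = [
    "jellyfish", "spiders", "wasps", "sharks", "snakes", "crocodiles",
    "vultures", "bears", "dogs", "wolves", "lions", "leopards",
    "elephants", "hippos",
]
TARGETS = ["humans", "livestock"]
SCALE = [
    "extremely low", "very low", "low", "moderate",
    "high", "very high", "extremely high",
]


def build_query(animal_group: str, target: str) -> str:
    """Fill the query template from the text with one animal group and one target."""
    return (
        f"On a scale from {', '.join(SCALE)}, can you give me a score of "
        f"how likely {animal_group} are to kill {target}?"
    )


# One distinct question per (animal group, target) pair: 14 x 2 = 28.
queries = {(g, t): build_query(g, t) for g in ANIMAL_GROUPS for t in TARGETS}

print(len(queries))
print(queries[("vultures", "livestock")])
```

Including every group under both targets reproduces the control design described above, so that implausible pairs such as jellyfish and livestock are asked as well.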

Given the stochastic nature of LLMs, which can provide different answers to the same query at each interaction, we made 10 queries per question and LLM separately to obtain a consensus based on multiple answers to the same question. This resulted in 840 queries [14 animal groups × 2 interaction targets (human or livestock) × 3 LLMs × 10 repetitions]. All queries were conducted by the same user, who had no prior LLM training on this topic. We further validated the robustness of the scores obtained by repeating the queries in two of the most popular spoken languages other than English: Mandarin Chinese and Spanish. The results were qualitatively similar to those obtained in English, indicating the consistency of the LLM responses across different languages.
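The size of this design can be checked by enumerating it. The sketch below is illustrative and uses hypothetical names (`design`, `per_target`); it only reproduces the counts stated in the text.

```python
from itertools import product

# Design factors as described in the text; identifiers are illustrative assumptions.
N_GROUPS = 14
TARGETS = ("humans", "livestock")
LLMS = ("ChatGPT 3.5", "ChatGPT 4", "Microsoft Bing")
N_REPETITIONS = 10

# Every combination of animal group, target, LLM, and repetition is one query.
design = list(
    product(range(1, N_GROUPS + 1), TARGETS, LLMS, range(1, N_REPETITIONS + 1))
)

# Number of queries contributing to each of the two target-specific analyses.
per_target = {t: sum(1 for row in design if row[1] == t) for t in TARGETS}

print(len(design))
print(per_target)
```

The total comes to 840 queries, split into 420 per interaction target, which matches the sample size later reported for each statistical model.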

Next, we quantified the “real” likelihood (details below) that each selected animal group targeted by fake or sensational news kills humans or livestock. For the likelihood of killing humans, we obtained the yearly global number of human deaths (yrd) caused by each of the 14 animal groups. We used three categories: high (yrd ≥ 1000), moderate (1000 > yrd > 100), and low (yrd ≤ 100). For some animal groups, the yearly global number of human deaths was not available. In such cases, we estimated the global number of human deaths according to literature review and expert opinions (see details in Supporting Information S1: Table S1). For the likelihood of killing livestock, the yearly global number of livestock deaths was not available for the majority of the 14 animal groups. Therefore, we scored the likelihood of killing livestock into the same three categories, with yrd here denoting yearly livestock deaths: high (yrd ≥ 1000), moderate (1000 > yrd > 100), and low (yrd ≤ 100). These scores were based on information available in the literature for specific geographical areas and expert opinions (Supporting Information S1: Table S2).
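The three-category thresholds can be written as a small function. This is a sketch under the thresholds stated above; the function name `risk_category` is hypothetical, and the input counts in the loop are made-up values chosen only to exercise the category boundaries, not data from the study.

```python
def risk_category(yearly_deaths: float) -> str:
    """Map a yearly global death count (yrd) to the 'real' risk category.

    Thresholds follow the text: high (yrd >= 1000),
    moderate (1000 > yrd > 100), low (yrd <= 100).
    """
    if yearly_deaths < 0:
        raise ValueError("yearly_deaths must be non-negative")
    if yearly_deaths >= 1000:
        return "high"
    if yearly_deaths > 100:
        return "moderate"
    return "low"


# Made-up counts, used only to show where the category boundaries fall.
for yrd in (0, 100, 101, 999, 1000):
    print(yrd, risk_category(yrd))
```

Note that a count of exactly 100 falls in the low category and a count of exactly 1000 falls in the high category.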

Finally, we quantified whether the likelihood risk score for both humans and livestock obtained from the LLMs aligns with the “real” likelihood detailed in the previous paragraph. To achieve this, we built two linear mixed effects models using the “lmer” function from the lme4 package (Bates et al., 2015), assuming a Gaussian distribution. One model was for the likelihood of killing humans and the other for livestock. We used the LLM-derived score from each query as the response variable (sample size = 420 for each of the two models), converting the six scoring categories (extremely low, very low, low, moderate, high, very high; with no scores obtained for the extremely high category) to a numeric continuous variable representing the likelihood risk from lowest (score 1) to highest (score 6). As the main predictor, we used the “real” likelihood, which was also converted to a continuous numerical variable from 1 to 3 (representing the risk categories low, moderate, and high). We also included the type of LLM (three levels) as a covariate and the species (14 levels) as a random effect to account for possible pseudoreplication stemming from multiple scores for the same species by the same LLMs. We validated the model assumptions using the “check_model” function from the performance package (Lüdecke et al., 2021). Both models fit the assumptions well, confirming the statistical appropriateness of treating the “real” likelihood covariate (three values) as continuous. All the data analyses and visualization were performed in the R software v. 4.3.1 (R Core Development Team, 2021).
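The conversion of categorical labels to numeric scores described above can be sketched as follows. The mapping values come from the text; the identifiers (`LLM_SCORE_TO_NUMERIC`, `REAL_RISK_TO_NUMERIC`, `encode_record`) are hypothetical, and the original analysis was carried out in R rather than Python.

```python
# Six LLM score categories observed in the study, coded from lowest (1) to highest (6).
LLM_SCORE_TO_NUMERIC = {
    "extremely low": 1,
    "very low": 2,
    "low": 3,
    "moderate": 4,
    "high": 5,
    "very high": 6,
}

# "Real" risk categories, coded 1 to 3.
REAL_RISK_TO_NUMERIC = {"low": 1, "moderate": 2, "high": 3}


def encode_record(llm_label: str, real_category: str) -> tuple[int, int]:
    """Return (LLM score 1-6, real risk 1-3) for one query result."""
    try:
        return (
            LLM_SCORE_TO_NUMERIC[llm_label.strip().lower()],
            REAL_RISK_TO_NUMERIC[real_category.strip().lower()],
        )
    except KeyError as err:
        # Labels outside the observed categories are rejected rather than guessed.
        raise ValueError(f"Unrecognised category: {err}") from None


print(encode_record("Very low", "low"))
```

With data encoded this way, the model structure described in the text might be expressed in lme4 syntax as `score ~ real_risk + llm + (1 | species)`, where the variable names are assumptions made for illustration and do not come from the original scripts.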

3 RESULTS

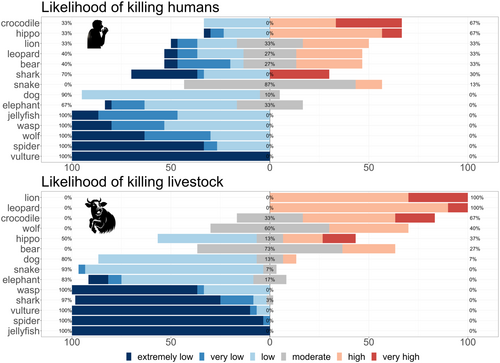

The LLM scores for the likelihood of the 14 focal animal groups to kill humans or livestock span the full risk gradient, from very dangerous to very innocuous animals (Figure 1). Among the animal groups deemed most dangerous to humans by the LLMs are crocodiles and hippos. Mammalian carnivores, such as lions, leopards, and bears, are generally scored as moderate risk by the LLMs, with a similar number of scores in the low and high-risk categories. Within the mammalian carnivores, wolves represent an exception, being scored as low to extremely low risk to humans, along with other animal groups such as vultures, spiders, wasps, and jellyfish. Shark scores indicate a strongly polarized view by the LLMs, with 70% of scores indicating low to extremely low risk to humans, and 30% indicating very high risk. The scores for the same animal groups change substantially when considering the likelihood of killing livestock (Figure 1). Lions, leopards, and crocodiles are identified as the most dangerous. Wolves and bears are scored as moderate to high risk, hippos are associated with a range from low to high risk, and all other groups are largely associated with low to extremely low risk of killing livestock.

Scores of the likelihood of killing humans or livestock obtained using queries made in Mandarin Chinese and Spanish (Supporting Information S1: Figures S1 and S2) are strongly consistent with the scores based on English queries. Mandarin Chinese-based scores, however, show high polarization, with animal groups classified as either dangerous or nondangerous. Still, the relative risk of each animal group broadly aligns with that of the English-based results (Figure 1).

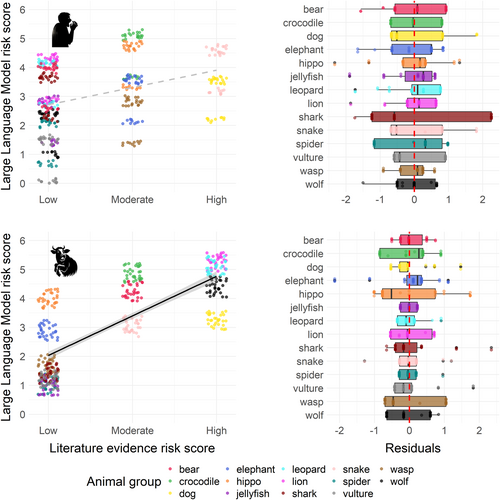

We found a positive relationship between the likelihood risk score obtained from LLMs and the “real” risk obtained from literature and/or expert opinions (Figure 2). Based on the model results, this relationship was weak and not statistically significant for the likelihood of killing humans (β = 0.60 ± 0.38 standard error [SE], t = 1.59, p = 0.14), likely due to the large variation in the scores, but it was highly significant for the likelihood of killing livestock (β = 1.37 ± 0.33 SE, t = 4.09, p = 0.002). The model residuals by animal group further highlight that the LLM-generated risk scores are largely aligned with those expected from the “real” risk, with no significant under- or overestimation (Figure 2). An exception appears to be the risk of dogs killing livestock, which seems underestimated by the LLMs (low risk on average) compared with the high “real” risk.
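As a rough reading of these slopes, one can compute the change in LLM score implied across the full range of “real” risk. The sketch below assumes, following the Methods, that the slopes are expressed in LLM score units (1 to 6) per one-step increase in “real” risk (1 to 3); the identifiers are hypothetical and the calculation ignores the uncertainty around each estimate.

```python
# Reported fixed-effect slopes (beta) for the "real" risk predictor.
REPORTED_SLOPES = {"humans": 0.60, "livestock": 1.37}

# "Real" risk is coded 1 (low) to 3 (high), so the full range spans two steps.
REAL_RISK_STEPS = 3 - 1

# Implied difference in mean LLM score between low-risk and high-risk groups.
implied_change = {
    target: beta * REAL_RISK_STEPS for target, beta in REPORTED_SLOPES.items()
}

for target, change in implied_change.items():
    print(f"{target}: {change:.2f} score units from low to high real risk")
```

Under these assumptions, groups with high “real” risk to livestock would be scored on average about 2.7 units higher on the six-point scale than groups with low “real” risk, compared with about 1.2 units for risk to humans.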

4 DISCUSSION

We provide evidence that LLMs can be highly effective in delivering accurate and balanced responses concerning animal groups often targeted by fake or sensationalized news coverage in the media. For example, there has been a recent surge of misinformation about vultures supposedly killing livestock (Lambertucci et al., 2021). If people relied on LLMs to verify such information, they would learn that the likelihood of vultures killing livestock is extremely low (Lambertucci et al., 2021). Similarly, spiders are routinely portrayed in the media as highly dangerous to humans (Mammola et al., 2022). In contrast, the LLMs correctly indicated their likelihood of killing people as low to extremely low, aligning with real-world data on spider-attributed fatalities (e.g., Stuber & Nentwig, 2016). Interestingly, LLMs also correctly score the risk that carnivore species, such as lions, leopards, and wolves, pose to livestock as high, reflecting their significant role in human–wildlife conflict through livestock depredation.

In the comparative analysis of risk assessments generated by LLMs and empirically observed risk data, there is a high degree of concordance across the majority of the 14 animal groups examined regarding the likelihood of killing humans or livestock. However, this alignment is only statistically significant in the context of livestock killing. The large variation in the scores for the likelihood of killing humans likely stems from regional patterns, where an animal group may be perceived as very dangerous in one specific region but not in another. An exception to this broad alignment between LLM scores and reality is noted in the risk scores assigned to dogs for killing livestock. Here, LLMs tend to underestimate the risk, with 80% of the scores classifying this interaction as low risk. Empirical data instead suggest a high risk, with annual incidents of livestock deaths from dog attacks greatly exceeding 1000 globally (Home et al., 2017; Wierzbowska et al., 2016). This discrepancy may be attributed to the longstanding and close relationship between humans and dogs, which could influence the prevalence of positively skewed information available online. Consequently, negative aspects, such as dogs harming livestock, might be underrepresented in the data sets used to train LLMs. This data bias is likely the cause of the underestimation of the risk associated with dogs in LLM assessments.

It is well known that human behaviors are very difficult to modify, so trying to avoid misperceptions or the adoption of negative perceptions that may influence our habits is fundamental for conservation science (Ecker et al., 2022). In a rapidly changing world where new AI models may emerge overnight and quickly become popular, it is important to verify their potential to aid or hinder biodiversity conservation. A possible limitation of this study is that it only focused on a limited set of LLMs (all trained or using technology from OpenAI), while many others are emerging rapidly (e.g., Google Gemini, Claude, and LLaMA 3, among others). The power of LLMs to debunk fake and sensational news about wildlife largely lies in the fact-checking and filtering of the training material, which forms the base knowledge for these algorithms to generate output. Indeed, we contend that this is a critical step that should have a prominent place in the development of these LLMs if they are to create a just, equitable, and sustainable world. We demonstrate that, in the case of wildlife, LLMs hold promise for debunking fake and sensational information. This underscores their potential to mitigate the emergence of human–wildlife conflicts in the future and shape a more just and harmonious coexistence between humans and wildlife.

AUTHOR CONTRIBUTIONS

Andrea Santangeli: Conceptualization; data curation; formal analysis; investigation; methodology; supervision; validation; visualization; writing—original draft; writing—review and editing. Stefano Mammola: Conceptualization; writing—review and editing. Veronica Nanni: Data curation; methodology; writing—review and editing. Sergio Lambertucci: Conceptualization; writing—review and editing.

ACKNOWLEDGMENTS

A. S. acknowledges support from the European Commission through the Horizon 2020 Marie Skłodowska-Curie Actions individual fellowships (grant no. 101027534). S. M. acknowledges the support of the National Biodiversity Future Center, funded by the Italian Ministry of University and Research, P.N.R.R., Missione 4 Componente 2, “Dalla ricerca all'impresa,” Investimento 1.4, Project CN00000033. V. N. was supported by the Italian National Interuniversity PhD course in Sustainable Development and Climate Change (link: www.phd-sdc.it). The present research was carried out within the framework of the activities of the Spanish Government through the "Maria de Maeztu Centre of Excellence" accreditation to IMEDEA (CSIC-UIB) (CEX2021-001198).

CONFLICT OF INTEREST STATEMENT

The authors declare no conflict of interest.

Open Research

DATA AVAILABILITY STATEMENT

All scores obtained from the large language model queries are available as supplementary data along with this study.