What went wrong with variant effect predictor performance for the PCM1 challenge

Maximilian Miller and Yanran Wang contributed equally to this study.

Abstract

Recent years have seen a drastic increase in the amount of available genomic sequence data. Alongside this explosion, hundreds of computational tools have been developed to assess the impact of observed genetic variation. The Critical Assessment of Genome Interpretation (CAGI) provides a platform to evaluate the performance of these tools in experimentally relevant contexts. In the CAGI-5 challenge assessing 38 missense variants in the human Pericentriolar material 1 protein (PCM1), our SNAP-based submission was the top performer, although it did worse than expected from other evaluations. Here, we compare the CAGI-5 submissions and 24 additional commonly used variant effect predictors to analyze the reasons for this observation. We identified per-residue conservation, structural, and functional characteristics of PCM1 that may be responsible. As expected, predictors had a hard time identifying effect variants at nonconserved positions. They were also better at calling effect variants in a structurally rich region than in a less-structured one; in the latter, they more often correctly identified benign than effect variants. Curiously, most of the protein was predicted to be functionally robust to mutation, a feature that likely makes it a harder problem for generalized variant effect predictors.

1 INTRODUCTION

The Pericentriolar material 1 (PCM1) gene encodes the PCM1 protein, which is a fundamental component of centriolar satellites (CS) (Tollenaere, Mailand, & Bekker-Jensen, 2015). The CS structures are, in association with PCM1, essential for microtubule- and dynactin-dependent attraction of proteins to the centrosome (Dammermann & Merdes, 2002). PCM1 is involved in the maintenance of microtubule arrays and their anchoring at the centrosome during interphase (Hori & Toda, 2017). A dysfunctional PCM1 protein has been associated with defects in neural development (Keryer et al., 2011; Zoubovsky et al., 2015). Furthermore, variations in the PCM1 gene have been linked to psychiatric and severe developmental disorders (Datta et al., 2010; Gurling et al., 2006; Hashimoto et al., 2011; Kamiya et al., 2008; Moens et al., 2010; Page et al., 2018). In addition, PCM1-based fusion genes have been linked to myeloid/lymphoid neoplasms (Baer, Muehlbacher, Kern, Haferlach, & Haferlach, 2018), acute myeloid leukemia (Lee et al., 2018), and chronic myeloproliferative neoplasm (Ghazzawi et al., 2017). Even though various studies have attempted to better characterize PCM1 (Dammermann & Merdes, 2002; Hashimoto et al., 2011; Hoang-Minh et al., 2016; Kamiya et al., 2008; Wang, Lee, Malonis, Sanchez, & Dynlacht, 2016), we still lack a comprehensive understanding of its functionality. PCM1 knockouts, as well as heterozygous PCM1 deficiency, result in clear phenotypes in mice, for example, changes in overall brain volume, impaired social interaction, and altered exploratory activity (Zoubovsky et al., 2015).

One challenge of the recent Critical Assessment of Genome Interpretation (CAGI) provided a benchmark data set of 38 amino acid substitutions in PCM1. Participants were tasked with estimating the impact of the provided variants on overall PCM1 functionality. Notably, the experimental evaluation reported the change in brain ventricular volume due to PCM1 mutation. Multiple computational approaches have been designed to predict variant effects (functional change, pathogenicity, stability, etc.; Thusberg, Olatubosun, & Vihinen, 2011), including screening for nonacceptable polymorphisms (SNAP; Bromberg & Rost, 2007), sorting intolerant from tolerant (SIFT; Ng & Henikoff, 2003), PolyPhen-2 (I. A. Adzhubei et al., 2010), and nearly 200 others (Miller, Vitale, Rost, & Bromberg, 2019); these tools vary according to their preferred methods for model building, training data set selection, and descriptive features used for prediction.

For the PCM1 challenge of CAGI-5 described above, five groups made six submissions. Our winning submission used SNAP predictions, thresholded to produce the three-class categorization of variant effects requested by the challenge rules. Here we also show that this submission outperformed 24 widely used variant effect prediction tools, including SNAP with its default cutoff, on the same benchmark data set. However, even its performance was lower than expected from SNAP's published evaluation (Bromberg & Rost, 2007). Investigating the reasons for this performance, we suggest that methods fail (a) because of specific PCM1 features, for example, its conservation and structure patterns, and/or (b) because of the discrepancy between the experimental measure (brain ventricle volume change) and the predictor goals (pathogenicity, function or stability change, etc.). The former suggests that mutation effects in proteins similar to PCM1 are also likely to be mispredicted by existing methods. The latter reinforces the notion that no single predictor can answer all variant impact questions.

Here, we also discuss the results of our second submission, for which we applied fuNTRp (functional Neutral/Toggle/Rheostat predictor; Miller et al., 2019), a recently developed method. fuNTRp categorizes protein positions into three functional types based on the expected range of effects upon variation (no/weak effects = Neutral, severe effects = Toggle, intermediate effects = Rheostat). These types can then be used as a baseline for variant effect identification. Details of fuNTRp model training and evaluation are described by Miller et al. (2019). The distribution of fuNTRp position types predicted for PCM1 is characteristic of proteins involved in cell cycle/mitosis and/or in maintaining cellular structure/integrity. These results further suggest that PCM1 functionality is very robust to variation. Such robustness may be particularly important for PCM1 given its involvement in critical and essential cellular processes.

2 MATERIALS AND METHODS

2.1 Experimentally derived PCM1 benchmark data set

The experimental data were provided by Dr. Maria Kousi (MIT) and Dr. Nicholas Katsanis (Duke University; CAGI-5 PCM1 challenge, manuscript in preparation). The benchmark set consisted of 38 PCM1 variants evaluated in a zebrafish brain development model. According to the challenge, the variants referenced in this study affect RefSeq (O'Leary et al., 2016) transcript NM_001315507.1 and protein NP_006188.3. The relative effect of each PCM1 mutation was defined as the volume difference in the brain ventricle area between zebrafish injected with wildtype and with mutant mRNA. For every variant, the experimentally measured volume change and the significance of its difference from wildtype (t test, p value) were provided. Based on p value thresholds established by the data providers (Table S1), variants were categorized as benign (same function as wildtype), hypomorphic (partial loss of function), or pathogenic (loss of function). Participants were asked to predict the p value and effect type for all 38 variants. Note that this setup assumes that the difference in ventricle size directly reflects functional change, that is, same volume = benign and different volume = (partial) loss of function. An in-depth description of the PCM1 challenge is available on the CAGI-5 website.

2.2 PCM1 challenge submission

We submitted the results of two different approaches based on SNAP (Bromberg & Rost, 2007; Bromberg, Yachdav, & Rost, 2008) and fuNTRp (Miller et al., 2019) predictions. SNAP is a neural-network-based computational method for predicting the functional effects of variants (Bromberg & Rost, 2007; Bromberg et al., 2008). fuNTRp, by contrast, is not a variant effect predictor. Instead, it classifies protein sequence positions as Neutral, Rheostat, or Toggle on the basis of the variant impact expected at each position. Variants at Neutral positions are expected to have mostly minor impact, while variants at Toggle positions are prone to severe functional consequences. Rheostat positions show a range of effects and thus allow for tuning of protein functionality (Miller, Bromberg, & Swint-Kruse, 2017).

For Submission 1, we compiled SNAP prediction reliability scores and assigned variants with scores (a) in the [2, 9] range as pathogenic, (b) in the [−9, −2] range as benign, and (c) in the [−1, 1] range as hypomorphic.

In Submission 2, we applied fuNTRp and assigned variants (a) at Rheostat positions as hypomorphic, (b) at Neutral positions as benign, and (c) at Toggle positions as pathogenic.
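The two class-assignment rules above can be written as small lookup functions. This is a sketch, assuming the SNAP reliability score is a signed integer in which positive values accompany effect predictions and negative values accompany neutral ones, as the ranges above imply; the function names are hypothetical and not part of SNAP or fuNTRp. The final helper collapses the three classes to the binary effect/no-effect view (hypomorph or loss of function = effect) used later in this study.

```python
# Three-class labels requested by the challenge.
PATHOGENIC, HYPOMORPHIC, BENIGN = "pathogenic", "hypomorphic", "benign"


def snap_to_class(reliability: int) -> str:
    """Submission 1 rule: map a signed SNAP reliability score (assumed -9..9) to a class."""
    if not -9 <= reliability <= 9:
        raise ValueError("signed SNAP reliability expected in [-9, 9]")
    if reliability >= 2:
        return PATHOGENIC
    if reliability <= -2:
        return BENIGN
    return HYPOMORPHIC  # weak calls in [-1, 1]


# Submission 2 rule: the predicted position type alone determines the class.
FUNTRP_TO_CLASS = {"Neutral": BENIGN, "Rheostat": HYPOMORPHIC, "Toggle": PATHOGENIC}


def funtrp_to_class(position_type: str) -> str:
    return FUNTRP_TO_CLASS[position_type]


def is_effect(label: str) -> bool:
    """Binary view: hypomorphic and pathogenic both count as 'effect'."""
    return label in (PATHOGENIC, HYPOMORPHIC)


print(snap_to_class(5), snap_to_class(-1), snap_to_class(-7))
print(funtrp_to_class("Neutral"))
```

Treating the weak SNAP calls in [−1, 1] as hypomorphic, rather than benign, is what moves borderline variants into the effect class once the labels are collapsed.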

2.3 Performance evaluation

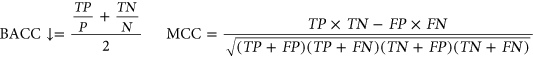

(1)
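Balanced accuracy (BACC) and the Matthews correlation coefficient (MCC), which the Results report alongside Equation 1, can be computed from a binary confusion matrix as in the sketch below. It uses the standard definitions with effect variants (hypomorph or loss of function) as the positive class; that Equation 1 takes exactly these forms is an assumption. The example counts are derived rather than reported: if Submission 2 called only the variants at its two Rheostat positions as effect, and one of these (p.Lys1275Glu) is experimentally benign, the matrix is TP = 1, FP = 1, TN = 15, FN = 21, which reproduces the reported BACC = 0.49 and MCC = −0.04.

```python
import math


def balanced_accuracy(tp: int, fn: int, tn: int, fp: int) -> float:
    """Mean of sensitivity (effect recall) and specificity (no-effect recall)."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return (sensitivity + specificity) / 2


def matthews_cc(tp: int, fn: int, tn: int, fp: int) -> float:
    """Matthews correlation coefficient; returns 0.0 when a marginal total is zero."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    if denom == 0:
        return 0.0
    return (tp * tn - fp * fn) / denom


# Confusion matrix consistent with Submission 2 (22 effect, 16 no-effect variants).
counts = dict(tp=1, fn=21, tn=15, fp=1)
print(round(balanced_accuracy(**counts), 2))  # 0.49
print(round(matthews_cc(**counts), 2))        # -0.04
```

BACC averages the per-class recalls, so a method that calls nearly everything no-effect stays near 0.5 even though it is correct for most benign variants.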

2.4 Additional postchallenge prediction analysis

We extracted precomputed predictions for all 38 variants in the PCM1 benchmark data set from the VarCards database (Li et al., 2018). VarCards contains effect annotations from 23 commonly used computational variant effect predictors (Supporting Information Text S2). For most of these methods, a threshold-based binary classification was available, categorizing variants as either tolerant/benign/neutral or damaging/effect/nonneutral; here we use effect/no-effect wording throughout. For methods with multiclass classifications, we assigned any prediction not labeled tolerant, benign, or neutral to the effect class. The two variants with missing predictions (NA) from MutationAssessor (Reva, Antipin, & Sander, 2011) and M-CAP (Jagadeesh et al., 2016) were labeled incorrect. We also added SNAP predictions as the 24th method in this analysis.
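The label harmonization described above amounts to a small mapping. In this sketch, the input strings (e.g., "Possibly damaging") are hypothetical examples of multiclass labels; VarCards may store predictions as codes rather than words, in which case a per-tool translation step would be needed first. The function names are illustrative.

```python
from typing import Optional

# Labels the study treated as "no-effect"; any other label counts as "effect".
# Exact VarCards label strings are an assumption: map raw codes to words first.
NO_EFFECT_LABELS = {"tolerant", "benign", "neutral"}


def binarize(label: Optional[str]) -> Optional[str]:
    """Return 'effect', 'no-effect', or None for a missing (NA) prediction."""
    if label is None or label.strip().upper() == "NA":
        return None
    return "no-effect" if label.strip().lower() in NO_EFFECT_LABELS else "effect"


def is_correct(label: Optional[str], true_class: str) -> bool:
    """Score one prediction; missing predictions are counted as incorrect."""
    call = binarize(label)
    return call is not None and call == true_class


print(binarize("Possibly damaging"))   # effect (multiclass label -> effect)
print(binarize("Benign"))              # no-effect
print(is_correct("NA", "no-effect"))   # False
```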

(2)

2.5 Extracting protein features

Domain annotations (e.g., for coiled-coil regions) were extracted from the UniProt Knowledgebase (UniProtKB; UniProt Consortium, 2018). To better understand the reasons for the observed differences between computational predictions and experimental effect annotations in PCM1, we analyzed SNAP predictions in the context of per-position structural features. We used the PredictProtein pipeline (Yachdav et al., 2014) to predict, from sequence alignments, three per-residue protein features commonly used by variant effect predictors: solvent accessibility and secondary structure from PROFphd (Rost & Sander, 1994), and conservation from ConSurf (Ashkenazy et al., 2016).

The CAGI-5 PCM1 benchmark data set was based on the canonical sequence of PCM1 (isoform-1; NP_006188.3, UniProt Q15154-1) and contains 16 benign, 10 hypomorphic, and 12 loss of function variants. UniProt lists five PCM1 isoforms; isoforms −1, −2, −4, and −5 are approximately 2,000 residues long, while −3 is by far the shortest, with 530 residues. Isoform-1 aligns (Smith & Waterman, 1981) without gaps to positions 1–263 and 303–530 of isoform-3, an overlap of 491 residues (~93% of isoform-3). In this study, we evaluated the variants in the overlap region (OR) of isoforms −1 and −3 separately from those in the isoform-1 exclusive (I1) regions. As isoform-3 is also observed in other species, for example, chimpanzee, gibbon, and orangutan (≥95.8% sequence identity over the entire sequence), we assumed that it is fully functional as is, that is, it does not require the additional domains found in isoform-1. Fourteen of the 38 variants benchmarked in the PCM1 challenge were present in both isoforms; that is, one of the 16 benign, seven of the 10 hypomorphic, and six of the 12 loss of function variants were located in the OR.
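The overlap and variant bookkeeping in this paragraph is simple interval and count arithmetic; the sketch below recomputes it from the numbers stated here. The variable names are illustrative, and the per-class I1 counts are obtained by subtraction rather than taken from a table.

```python
# Isoform-3 segments (1-based, inclusive) that align to isoform-1, as stated above.
ALIGNED_SEGMENTS_ISO3 = [(1, 263), (303, 530)]
ISOFORM3_LENGTH = 530


def segment_length(segments):
    """Total number of residues covered by inclusive (start, end) segments."""
    return sum(end - start + 1 for start, end in segments)


overlap = segment_length(ALIGNED_SEGMENTS_ISO3)
print(overlap, round(100 * overlap / ISOFORM3_LENGTH))  # 491 93

# Benchmark variants per experimental class, overall and in the overlap region (OR).
TOTAL = {"benign": 16, "hypomorph": 10, "loss_of_function": 12}
IN_OR = {"benign": 1, "hypomorph": 7, "loss_of_function": 6}
# Everything else falls in the isoform-1 exclusive (I1) regions.
IN_I1 = {k: TOTAL[k] - IN_OR[k] for k in TOTAL}
print(sum(IN_OR.values()), IN_I1)
```

The subtraction gives 15 benign and nine effect variants (three hypomorphs, six loss of function) in I1, matching the split used in the region-specific analysis.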

3 RESULTS

3.1 Submission 1 outperforms both the CAGI-5 submissions and other predictors

Our SNAP-based submission (Submission 1) performed best (BACC = 0.66, MCC = 0.35; Equation 1 and Table S3) in the CAGI-5 PCM1 challenge. The performance of our fuNTRp-based Submission 2, however, was roughly average (BACC = 0.49, MCC = −0.04; Table S4): it predicted the majority of positions (36 of 38) as Neutral and, thus, the variants at these positions as no-effect. Note that, as fuNTRp is not a variant effect predictor, we expected its performance to be lower than that of other predictors.

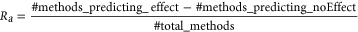

We further compared the BACC of all CAGI-5 submissions to that of other publicly available methods, whose predictions we extracted from the VarCards database (Methods; Figure 1 and Table S5). Submission 1 remained the best in this larger pool of methods. PROVEAN (Choi & Chan, 2015) and GERP++ (Davydov et al., 2010) were next (BACC ≈ 0.58, MCC = 0.22 for both). These top three methods remained in the lead when performance was ranked by MCC. Interestingly, the conservation-driven methods GERP++, phyloP (Pollard, Hubisz, Rosenbloom, & Siepel, 2010), and LRT (Chun & Fay, 2009) occupied positions 3–5 in the BACC ranking, ahead of many more advanced methods; phyloP and LRT ranked lower by MCC (positions 7 and 8; Table S5). Note that the thresholds used in Submission 1 shifted the default SNAP (Bromberg & Rost, 2007) cutoff so that fewer variants were predicted as no-effect. This shift improved the performance of Submission 1; regular SNAP placed 8th by BACC and 6th by MCC (BACC = 0.54, MCC = 0.12).

Comparing predictor performance for benchmark set variants. The balanced accuracy (BACC) of the CAGI-5 PCM1 challenge submissions (triangles) was compared with that of 24 commonly used variant effect predictors (squares and rhombi). Predictors in the legend are arranged according to their BACC: lowest for Group 5 and highest for Submission 1 (Group 3). CAGI, Critical Assessment of Genome Interpretation; PCM1, Pericentriolar material 1

3.2 Variant effect predictions correlate with region structural features

To better understand why all predictors performed worse than expected, we evaluated predictions separately for variants in the isoform-1 exclusive (I1) region and for those in the overlap of isoforms −1 and −3 (OR). These two regions differ drastically in structural composition: I1 is less structured (48% of its residues are predicted to be in loops), while OR is more ordered (98% of its residues are predicted to be helical). Note that I1 contained most of the no-effect variants (15 of 16) and nine of the 22 effect variants; thus, OR had only one no-effect variant and 13 effect variants.

As for the full set of 38 PCM1 variants, the top three methods (Submission 1, GERP++, and PROVEAN) remained the same for I1 variants, and their performance even improved slightly (Table S5). Of the other methods, some performed better on this subset (e.g., SIFT and SiPhy), while others did worse (e.g., phyloP and LRT; Table S5). For the OR variants, the predictor ranking changed, likely due to the class imbalance in this subset (only one no-effect variant). Here, a method predicting all variants as effect would identify every effect variant, but correctly calling the single no-effect variant (i.e., avoiding the only possible false positive) could drastically improve its balanced accuracy. The six top-scoring predictors (Table S5) all predicted that one benign variant correctly; MutationAssessor (Reva et al., 2011) also annotated six effect variants correctly, while the next five methods (DANN (Quang, Chen, & Xie, 2015), SiPhy (Garber et al., 2009), Submission 1, Group 1, and Group 2) identified five effect variants each. Of the top three overall predictors, only our Submission 1 remained at the top of the OR ranking; PROVEAN also got the benign variant right but identified only two of the 13 effect variants, while GERP++ identified 11 effect variants correctly but mispredicted the benign variant.
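The leverage of the single benign OR variant can be made concrete with the binary balanced accuracy (mean of sensitivity and specificity): with only one no-effect variant, specificity is either 0 or 1, so that one call shifts BACC by 0.5. The sketch below uses the per-method counts stated above; whether Table S5 ranks OR predictors with exactly this metric is an assumption, so the values are illustrative.

```python
def balanced_accuracy(tp, fn, tn, fp):
    """Mean of sensitivity and specificity for a binary effect/no-effect call."""
    return (tp / (tp + fn) + tn / (tn + fp)) / 2


N_EFFECT_OR = 13  # effect variants in the overlap region; plus one benign variant

# (effect variants called correctly, whether the single benign variant was correct)
OR_CALLS = {
    "MutationAssessor": (6, True),
    "Submission 1": (5, True),
    "PROVEAN": (2, True),
    "GERP++": (11, False),
    "all-effect baseline": (13, False),
}

or_bacc = {}
for name, (tp, benign_ok) in OR_CALLS.items():
    tn = 1 if benign_ok else 0  # specificity is all-or-nothing with one negative
    or_bacc[name] = round(balanced_accuracy(tp, N_EFFECT_OR - tp, tn, 1 - tn), 2)
    print(f"{name}: {or_bacc[name]}")
```

Under these assumptions, MutationAssessor (six effect calls plus the benign variant) scores well above GERP++ (11 effect calls but not the benign variant), mirroring the ranking shift described above.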

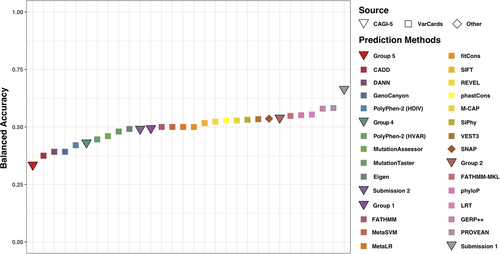

The above variation in performance suggested a qualitative difference in how predictors assess variants in the two regions. Predictor agreement (Ra ratio, Equation 2) showed clearly different trends for I1 and OR variants (Figure 2). Effect variants (loss of function and hypomorph) in OR were predicted as effect by more predictors (Ra ≥ 0) more often (38%) than those in I1 (33%). Notably, OR hypomorphs were more often misclassified as no-effect than OR loss of function variants. This is expected, as most predictors are trained to separate extreme loss of function from no-effect cases, and mild or moderate changes are harder to capture (Bromberg, Kahn, & Rost, 2013; Miller et al., 2017). It was impossible to compare no-effect predictions across regions, as there was only one benign variant in OR (correctly identified by 13 of 24 predictors, Ra = −0.08). However, I1 benign variants were correctly identified as no-effect by more predictors (Ra < 0) nearly half (47%) of the time, a clear improvement over the effect predictions in this region. This observation reaffirms that effect variants in a structure-rich region and benign variants in a less-structured region are easier for predictors to identify.
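The exact form of Ra (Equation 2) is an assumption in the sketch below. One form consistent with every Ra value reported in this study is Ra = (N_effect − N_no-effect) / N, with N = 24 predictors: it gives −0.08 for the OR benign variant (13 no-effect versus 11 effect calls), and 0.33 or 0.42 for a variant called effect by 16 or 17 of 24 predictors. Positive Ra then means more effect than no-effect calls. The function name and the treatment of missing calls are hypothetical.

```python
from collections import Counter


def agreement_ratio(calls):
    """Assumed form of Ra: (effect calls - no-effect calls) / number of predictors.

    `calls` holds one entry per predictor: 'effect', 'no-effect', or None (missing).
    Missing calls count toward the denominator only (an assumption).
    """
    counts = Counter(calls)
    return (counts["effect"] - counts["no-effect"]) / len(calls)


# The single OR benign variant: 13 of 24 predictors called it no-effect.
benign_or = ["no-effect"] * 13 + ["effect"] * 11
print(round(agreement_ratio(benign_or), 2))  # -0.08
```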

Distribution of predictor agreement ratios (Ra) across experimental effect classes in different PCM1 regions. The distribution of Ra ratios differs between effect classes and between PCM1 regions. Medians are highlighted in bold. Only a single experimentally benign variant is present in the OR. OR, overlap region; PCM1, Pericentriolar material 1 protein

3.3 Conservation as a driving factor for predictions

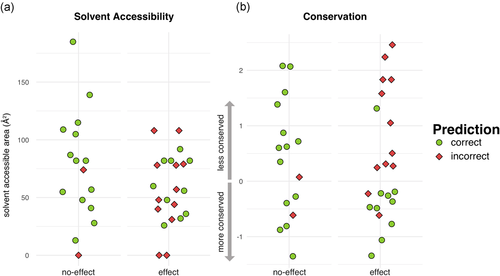

Most (if not all) methods that predict functional effects use conservation as an input. As Submission 1 was the best-performing method, we examined its mispredictions for conservation patterns. For comparison, we also evaluated their relationship to the predicted solvent accessibility of the affected residue. Solvent accessibility was similar for correctly and incorrectly predicted variants, both benign and loss of function/hypomorph (Figure 3a). Conservation, on the other hand, clearly differed for loss of function/hypomorph variants, although it was similar for benign variants (Figure 3b). In fact, the majority of mispredicted loss of function/hypomorph variants showed low conservation (high ConSurf scores; median = 0.78), while correctly predicted ones were more conserved (low ConSurf scores; median = −0.44).

Conservation and solvent accessibility distributions compared for correct and incorrect Submission 1 predictions. Submission 1 predictions are more accurate for effect variants at conserved positions but are uncorrelated with solvent accessibility. ConSurf (conservation) scores are standardized so that, for a given protein, their mean is 0 and their standard deviation is 1. Solvent accessibility is reported as the predicted residue solvent-accessible area (square angstroms, Å²)
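The standardization in this caption is a per-protein z-score. The sketch below applies it to hypothetical raw per-position scores; ConSurf performs its own normalization internally, and whether it uses the population or sample standard deviation is not stated here, so this is illustrative only. If the raw inputs are evolutionary rates (slower = more conserved), negative z-scores mark positions more conserved than the protein average, matching the convention that low ConSurf scores mean high conservation.

```python
import statistics


def standardize(scores):
    """Z-score per-position scores within one protein (mean 0, SD 1)."""
    mean = statistics.mean(scores)
    sd = statistics.pstdev(scores)  # population SD; the SD convention is an assumption
    return [(s - mean) / sd for s in scores]


raw = [0.2, 1.5, 0.9, 3.1, 0.4]  # hypothetical raw evolutionary rates for five positions
z = standardize(raw)
print([round(v, 2) for v in z])
```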

- (i) Easy benign: p.Ser804Arg, p.Asn1125Ser, p.Cys1361Tyr
- (ii) Difficult benign: p.Arg883Thr, p.Lys1275Glu, p.Glu1535Lys
- (iii) Easy loss of function/hypomorph: p.Glu311Gln, p.Glu369Gly, p.Gly892Trp
- (iv) Difficult loss of function/hypomorph: p.Glu23Asp, p.Leu472Val, p.Gly1556Asp

By definition, most methods get the easy cases right and mispredict the difficult ones. Submission 1 was no exception: it correctly classified all easy cases (i and iii) and mispredicted four of the five difficult loss of function/hypomorph variants (iv). However, it correctly predicted two of the three difficult benign variants (ii), whereas many other predictors were wrong (Ra = 0.33 for p.Lys1275Glu and 0.42 for p.Glu1535Lys). As expected, conservation played a role in this result: the correctly predicted difficult benign variants exhibited low levels of conservation, as did the mispredicted difficult loss of function/hypomorph variants.

3.4 fuNTRp predictions indicate that PCM1 is functionally robust

We further characterized PCM1 in terms of the position types of its residues, that is, their expected sensitivity to mutation (Miller et al., 2019). fuNTRp predicted 95% (36) of the 38 variant positions as Neutral, that is, positions where variants are expected to have weak or no functional effect. Only two positions (982 and 1,275) were predicted as Rheostat, allowing for a broader range of effects upon variation (Table S4). However, the prediction confidence for both was low, that is, close to a Neutral classification. Our Submission 2 made use of fuNTRp predictions but did not attain better performance. Here, we further analyzed PCM1 fuNTRp annotations to explore possible reasons for this failure.

We predicted position classes for all 2,024 PCM1 sequence positions and found that the vast majority were predicted Neutral (83.9%), some (15.6%) Rheostat, and only 11 (0.5%) Toggle, that is, positions where variants are expected to knock out functionality altogether. This distribution is unusual: on average, proteins have 53% Neutral, 33% Rheostat, and 14% Toggle positions (Miller et al., 2019).

A high fraction of fuNTRp Neutral residues suggests robust, variation-insensitive protein functionality and, thus, a possible contributor to poor variant effect predictor performance. This characteristic is likely beneficial for proteins involved in critical processes/pathways. However, it may also indicate that PCM1 has few canonical (e.g., binding or catalytic) active/functional sites, which would be identified by Toggle and Rheostat predictions. Outside of such sites, variation would rarely result in a severe functional impact.

We found that PCM1 is one of 668 Swiss-Prot (Boutet et al., 2016) proteins with a similarly high fraction of Neutral positions (>80%; Miller et al., 2019). Most proteins in this Robust set (>99%) are nonenzymes, and 74% contain at least one coiled-coil domain. Interestingly, most of these proteins appear to be structural components of the cell. Of the 414 proteins (62% of the Robust set) with a Gene Ontology biological process annotation, 240 (58%) are involved in cellular component organization. Of the 561 (84% of the Robust set) that have cellular component annotations, 35% map to the cytoskeleton and 27% to the nucleus. In line with these findings, five of the 131 Robust set proteins that could be mapped to a PDB structure were histones.
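The position-type composition and the Robust set criterion can be sketched as below. fuNTRp itself is not called here; the input is a list of per-position labels, and the PCM1 list is an approximate reconstruction from the percentages reported above (only the 11 Toggle positions are an exact count). The function names are hypothetical.

```python
from collections import Counter

POSITION_TYPES = ("Neutral", "Rheostat", "Toggle")


def type_fractions(labels):
    """Fraction of positions assigned to each fuNTRp position type."""
    counts = Counter(labels)
    return {t: counts[t] / len(labels) for t in POSITION_TYPES}


def is_robust(labels, neutral_cutoff=0.80):
    """Robust set criterion from the text: more than 80% Neutral positions."""
    return type_fractions(labels)["Neutral"] > neutral_cutoff


# Approximate PCM1 composition rebuilt from the reported percentages (2,024 positions).
pcm1 = ["Neutral"] * 1698 + ["Rheostat"] * 315 + ["Toggle"] * 11
print({t: round(f, 3) for t, f in type_fractions(pcm1).items()})
print(is_robust(pcm1))  # True

# A protein with the average composition (53/33/14) falls well short of the cutoff.
average = ["Neutral"] * 53 + ["Rheostat"] * 33 + ["Toggle"] * 14
print(is_robust(average))  # False
```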

We further queried ConsensusPathDB-human (Kamburov, Stelzl, Lehrach, & Herwig, 2013) for interaction networks involving the Robust set proteins; 300 (44.4%) were present in at least one pathway, many of which were cell cycle/mitotic pathways. PCM1 was found in all 16 of the highest-ranked pathways (Table 1).

| ID | q-value (a) | Pathway details | Size | Overlap |
|---|---|---|---|---|
| 1 | 5E-35 | M phase | 340 | 18.2% (62) |
| 2 | 8E-31 | Cell cycle, mitotic | 481 | 13.9% (67) |
| 3 | 1E-27 | Cell cycle | 564 | 12.1% (68) |
| 4 | 3E-27 | Mitotic prometaphase | 186 | 22.6% (42) |
| 5 | 2E-22 | AURKA activation by TPX2 | 72 | 36.1% (26) |
| 6 | 2E-22 | Anchoring of the basal body to the plasma membrane | 97 | 29.9% (29) |
| 7 | 1E-21 | Loss of Nlp from mitotic centrosomes | 69 | 36.2% (25) |
| 8 | 1E-21 | Loss of proteins required for interphase microtubule organization from the centrosome | 69 | 36.2% (25) |
| 9 | 2E-21 | Recruitment of NuMA to mitotic centrosomes | 79 | 32.9% (26) |
| 10 | 4E-20 | Regulation of PLK1 Activity at G2/M Transition | 87 | 29.9% (26) |
| 11 | 5E-20 | Recruitment of mitotic centrosome proteins and complexes | 80 | 31.3% (25) |
| 12 | 5E-20 | Centrosome maturation | 80 | 31.3% (25) |
| 13 | 4E-19 | Cilium assembly | 187 | 18.2% (34) |
| 14 | 2E-18 | Organelle biogenesis and maintenance | 240 | 15.4% (37) |
| 15 | 5E-17 | G2/M transition | 137 | 20.4% (28) |
| 16 | 6E-17 | Mitotic G2-G2/M phases | 139 | 20.1% (28) |

- Abbreviation: PCM1, Pericentriolar material 1 protein.

- (a) q-values are p values corrected for multiple testing. Source of all 16 pathways: Reactome.

4 DISCUSSION

4.1 We do not see better performance with more features or more exotic algorithms

Submission 1 was the best predictor in this challenge, but conservation-based predictors did as well as or better than more complicated methods. SNAP is a neural-network-based variant effect predictor that uses a variety of biochemical properties and sequence/structure-based features as input for model training. In comparison, the conservation-based GERP++ obtained the 3rd best performance, only marginally behind PROVEAN, which ranked 2nd. PROVEAN uses a new alignment-based metric that improves predictions for in-frame insertions and/or deletions. FATHMM-MKL (multiple kernel learning), which ranked 6th, applies an MKL-based algorithm and uses ten feature groups including, among others, GC content, histone modifications, transcription factor binding sites, and conservation. Interestingly, FATHMM-MKL was outperformed by the simpler, conservation-centered LRT, a likelihood ratio test that identifies deleterious mutations disrupting highly conserved residues. Thus, at least on this benchmark, increasing the number and complexity of features, or choosing a more sophisticated algorithm, does not necessarily improve overall prediction performance.

4.2 PCM1 is a hard protein to evaluate

Prediction performance is generally evaluated on a wide range of samples (i.e., genes or proteins from across the genome). Data sets such as the PCM1 set, by contrast, represent the variants of one specific protein, which may or may not be well represented in a particular tool's training set. Thus, the choice of benchmark data set strongly affects a tool's measured prediction accuracy. According to one study (Mahmood et al., 2017), for example, CADD had an area under the receiver operating characteristic curve (AUCROC) of 0.87 for TP53 but only 0.56 for BRCA1; some other methods did poorly for both proteins. Different tools attained different levels of accuracy in evaluating PCM1 (Figure 1), but performance was lower than expected for many of them (I. Adzhubei, Jordan, & Sunyaev, 2013; Carter, Douville, Stenson, Cooper, & Karchin, 2013; Lu et al., 2015; Quang et al., 2015; Rentzsch, Witten, Cooper, Shendure, & Kircher, 2019; Reva et al., 2011). Thus, PCM1 appears to be a hard protein for variant effect prediction.

One reason for the poor performance may be that the 3D structure of PCM1 is unknown, and secondary structure prediction suggests that large parts of the protein may lack structure altogether. For methods that use structural information as a feature, this information is therefore missing for PCM1.

Another reason may be that PCM1 (and other cell structure-relevant proteins) seem to be poorly represented (Kawabata, Ota, & Nishikawa, 1999) in experimental evaluations of functional effects; it is unclear whether this is because (a) the impact on these proteins is harder to quantify than, for example, enzymatic activity, (b) genes encoding enzymes (or their complexes) are more numerous, or simply because (c) enzymes and receptor proteins are currently of more pharmacological interest.

Further factors may also contribute to the discrepancies observed here:

- (1) The experiment may not directly measure what the predictors evaluate, that is, protein function change or pathogenicity. In this challenge, PCM1 function is represented by the change in zebrafish brain ventricle volume. However, the change in zebrafish ventricular volume after injection of mutant mRNA might reflect neither the functional deficiency of the protein nor the pathogenicity of the variant.
- (2) Experimental functional assays could fail to reflect pretranscriptional effects of variants. Injecting mRNA removes the mutation from the organism's genomic context, possibly ignoring effects of variation on transcript stability and introducing overexpression artifacts (Gibson, Seiler, & Veitia, 2013).
- (3) Prediction tools measure different types/aspects of variant impact, and thus their results hold different meanings. For example, SNAP was trained on variants from the Protein Mutant Database (PMD; Kawabata et al., 1999), specifically using the change in molecular functional activity as the label. CADD, on the other hand, was trained to distinguish observed from simulated variants, effectively learning the likelihood of variant “existence” as a proxy for deleteriousness. Although this deleteriousness may correlate with functional impact, it is not a direct prediction of function. All tools reflect their specific hypotheses, and their predictions should be interpreted accordingly.

4.3 What can we do better in variant effect prediction?

Participation in CAGI challenges provides valuable insights, which can be applied to develop improved variant effect prediction approaches. Some conclusions of this study may also be useful for future CAGI challenge planning and design. Because our findings suggest that computational methods should be evaluated on better-matched data sets, it may be worth asking experimentalists to design assays that test precisely what specific categories of methods predict. Recognizing that this is unlikely, it may instead be useful to encourage experimentalists to test strong predictions for variants in the genes of interest to them. Finally, it is of utmost importance to properly evaluate the so-called no-effect/neutral variants, that is, those that require the most work for the least “profit”.

For the CAGI-5 PCM1 benchmark data set, we observed large diversity in the agreement of computational variant effect predictors with the experimental results. As discussed above, there are many possible reasons for this, including method failure, incompatibility of effect assays, complexity of the affected interaction pathways, and specific protein features. Thus, computational predictions, while valuable in a research setting, still have limited use in actionable settings, for example, clinical ones.

ACKNOWLEDGMENTS

We would like to thank the CAGI organizers and data providers for giving us the opportunity to test and benchmark our methods and thus gain valuable new insights. We are also grateful to Dr. Chengsheng Zhu, Dr. Kenneth McGuinness, Yannick Mahlich and Zishuo Zeng (all Rutgers) for creative discussions and to Dr. Sonakshi Bhattacharjee (Columbia) for discussions and help with the manuscript. We would also like to express gratitude to all who deposit their data into publicly available databases and to those who maintain them. Y.B., Y.W., and M.M. were supported by the NIH U01 GM115486 grant. M.M. was also supported by the NIH R01 MH115958 01 grant. The CAGI experiment coordination is supported by NIH U41 HG007346 and the CAGI conference by NIH R13 HG006650.