Bayesian longitudinal tensor response regression for modeling neuroplasticity

Abstract

A major interest in longitudinal neuroimaging studies involves investigating voxel-level neuroplasticity due to treatment and other factors across visits. However, traditional voxel-wise methods are beset with several pitfalls, which can compromise the accuracy of these approaches. We propose a novel Bayesian tensor response regression approach for longitudinal imaging data, which pools information across spatially distributed voxels to infer significant changes while adjusting for covariates. The proposed method, which is implemented using Markov chain Monte Carlo (MCMC) sampling, utilizes low-rank decomposition to reduce dimensionality and preserve spatial configurations of voxels when estimating coefficients. It also enables feature selection via joint credible regions which respect the shape of the posterior distributions for more accurate inference. In addition to group level inferences, the method is able to infer individual-level neuroplasticity, allowing for examination of personalized disease or recovery trajectories. The advantages of the proposed approach in terms of prediction and feature selection over voxel-wise regression are highlighted via extensive simulation studies. Subsequently, we apply the approach to a longitudinal Aphasia dataset consisting of task functional MRI images from a group of subjects who were administered either a control intervention or intention treatment at baseline and were followed up over subsequent visits. Our analysis revealed that while the control therapy showed long-term increases in brain activity, the intention treatment produced predominantly short-term changes, both of which were concentrated in distinct localized regions. In contrast, the voxel-wise regression failed to detect any significant neuroplasticity after multiplicity adjustments, which is biologically implausible and implies lack of power.

1 INTRODUCTION

In the event of stroke, highly interconnected neural systems are disrupted due to cell death in the core lesioned brain areas, cell dysfunction in the perilesional brain areas, and compromised activity in remote brain areas due to hypometabolism, neurovascular uncoupling, and aberrant neurotransmission (Pekna et al., 2012). These neurobiological changes are expected to result in considerable neuroplasticity in the stroke brain, defined as the phenomenon of spontaneous or treatment-enhanced restoration and reorganization of brain functioning that supports relearning of lost functions (Crosson et al., 2019; Pekna et al., 2012; Reid et al., 2016). Aphasia is a stroke-related acquired language impairment disorder characterized by brain lesions, which has been widely studied in literature (Watila & Balarabe, 2015). One of the key aspects of aphasia is that it is possible to design behavioral interventions that can result in clinically meaningful language gains even during the chronic phase that are potentially governed by the principles of neural plasticity (Cappa, 2000; Wilson & Schneck, 2021). The disease severity and overall disease prognosis, as well as neural plasticity and associated recovery, may depend on the type of aphasia, which is partially characterized by the location and size of brain lesions (Crosson et al., 2019). Given that aphasia outcomes have heterogeneity across neural regions, time, and subjects, there is a growing need to better understand how to effectively apply targeted clinical interventions to improve outcomes. This type of approach may require one to go beyond the routinely used group-level analysis to predict personalized neural plasticity changes that account for heterogeneity.

Functional magnetic resonance imaging (fMRI) techniques for investigating neural plasticity changes in aphasia have been around for two decades and are particularly appealing in terms of allowing researchers to investigate functional changes in the brain after stroke. However, the findings from these studies have been highly variable. Some studies have supported a role for the right hemisphere (Blank et al., 2002; Crinion & Price, 2005; Turkeltaub et al., 2012), while others have reinforced the importance of residual left hemisphere language areas (Fridriksson et al., 2012; Griffis et al., 2017; Saur et al., 2006). Most recently, several studies have suggested that domain-general networks may play a role in supporting recovery from aphasia (Brownsett et al., 2014; Geranmayeh et al., 2014). Researchers generally agree that all of these types of mechanisms are likely to play some role in recovery from aphasia, and that the relative importance of different mechanisms probably depends on the location and extent of the lesions, the phase of recovery, and other clinical characteristics. However, there is often no consensus as to which specific regions are more likely to be activated in poststroke aphasia (PSA) compared to healthy individuals, and this scenario is further exacerbated by typically small sample sizes in stroke studies. Such variability in findings in aphasia literature is potentially due to inherent heterogeneity between samples, which is often overlooked by current approaches relying on group-level comparisons. Existing methods essentially tend to ignore the heterogeneity within each treatment group, which may arise either spontaneously or from clinical, demographic, or other characteristics. Hence, there is a critical unmet need of developing robust statistical approaches for mapping personalized neuroplasticity trajectories from heterogeneous samples in aphasia studies that go beyond simple group-level comparisons.

Standard analytical methods in aphasia literature routinely use a voxel-wise approach (Benjamin et al., 2014; Naylor et al., 2014) that performs the analysis independently for each voxel. Unfortunately, this approach has several pitfalls that are often overlooked. First, a voxel-wise analysis is only able to include those samples that do not have a lesion present at a given voxel, resulting in a loss of effective sample size and power coupled with unreliable estimation. This is particularly not desirable in the presence of moderate to small samples routinely encountered in aphasia and other brain lesion studies. Second, the total number of model parameters in voxel-wise regression models increases linearly with the number of voxels, which typically ranges from a few thousand to close to a hundred thousand in neuroimaging applications. The resulting lack of parsimony in voxel-wise methods results in overfitting issues. Finally, a voxel-wise analysis is unable to respect the spatial configurations of the voxels, nor is it able to pool information across neighboring voxels. Thus, it essentially treats voxels as independently distributed, and it ignores the fact that functional imaging data usually involve simultaneous signal changes in spatially distributed brain regions (Van Den Heuvel & Pol, 2010). Moreover, these voxel-wise methods require stringent multiplicity adjustments to account for a large number of hypothesis tests (Eklund et al., 2016). Classical multiplicity adjustments such as the Bonferroni method often result in overly conservative estimates and do not have any mechanism to account for the correlations between neighboring voxels. A more common solution is to impose spatial clustering in the voxel-wise significance maps via cluster-extent inference (CEI; Chumbley et al., 2010). Typically, this method first fits the model voxel-wise and subsequently performs multiplicity corrections at the level of clusters of voxels. Other methods such as the one proposed in Park and Fiecas (2021) first compute voxel-level test statistics that are combined within clusters and scaled by a spatial covariance matrix (obtained via a permutation approach) to derive a global test statistic to be used for inference. While such heuristic approaches are useful, they may still result in inadequate voxel-level coefficient estimation, and inferential results are not guaranteed to be optimal.

In this article, we develop a powerful alternative to voxel-wise methods by proposing a novel longitudinal Bayesian tensor response regression (l-BTRR) model for longitudinal brain imaging studies that overcomes the aforementioned challenges. The proposed approach treats the brain image as a tensor-valued outcome, which is regressed on covariates via low-rank coefficient matrices involving voxel- specific effects that respect the spatial configuration of voxels. The low-rank structure results in massive dimension reduction, resulting in model parsimony that is critically important for high-dimensional neuroimaging applications involving tens of thousands of voxels. It is also designed to borrow information across neighboring voxels to estimate effect sizes, which results in increased accuracy and precision for coefficient estimation. Another desirable feature is the ability of the Bayesian tensor model to impute outcome values for missing voxels where needed. These features are advantageous compared to a univariate voxel-wise analysis or an alternate multivariable regression approach that simply assigns a unique regression coefficient corresponding to each voxel. Moreover, the Bayesian framework naturally enables one to infer significant covariate effects as well as neuroplasticity changes over visits, and also report measures of uncertainty. This is achieved via Bayesian joint credible regions that respect the shape of the posterior distribution and provide improvements over the routinely used coordinate-wise credible interval approach. The proposed approach is implemented via an efficient posterior computation scheme involving Markov chain Monte Carlo (MCMC) developed in this article.

The proposed method differentiates between group-level effects that may be either time-invariant or time-varying and are learned by pooling information across samples, from individual-specific effects that define unique characteristics specific to a given subject. By accommodating subject-specific effects, the proposed approach allows us to infer personalized neuroplasticity trajectories over visits that are not feasible under the routinely used group-level comparisons. Incorporating heterogeneity is critical for our aphasia neuroimaging application comprising a group of poststroke subjects at varying stages of aphasia, who were randomly assigned to either the intention therapy (Crosson, 2008) or the control therapy group at baseline and were followed up over 3 months post-intervention. At the group-level, coefficient estimation and feature selection are also much improved in our aphasia data analysis, such that the proposed approach infers several clusters of brain regions with significant neuroplasticity changes. In contrast, the voxel-wise regression analysis for this dataset is unable to infer any group-level significant neuroplasticity changes after multiplicity adjustments. This seems biologically implausible and underlines the limitations of voxel-wise analysis. To validate the operating characteristics of the proposed model, we additionally conduct extensive simulation studies, where the proposed approach is compared to voxel-wise regression methods and cross-sectional tensor models.

Although motivated by stroke literature, the proposed approach can be applied to a wide range of longitudinal neuroimaging studies where it is of interest to regress the brain image on covariates to infer significantly associated voxels. To our knowledge, this work is one of the first Bayesian tensor-based methodology developed for high-dimensional longitudinal neuroimaging applications involving heterogeneous samples that go beyond the limited and fairly recent literature on cross-sectional tensor models that is summarized here. In the frequentist paradigm, Rabusseau and Kadri (2016) constructed a regression model with a tensor response exploiting a low-rank structure, but without inducing sparsity that is often required to identify important tensor nodes and cells. Li and Zhang (2017) proposed an envelope-based tensor response model relying on a generalized sparsity principle that is designed to identify linear combinations of the response irrelevant to the regression. More recently, Sun and Li (2017) developed a new class of models, referred to as STORE, that impose element-wise sparsity in tensor coefficients. While useful, frequentist approaches are unable to perform inference required for feature selection and cannot quantify uncertainty, both of which are naturally possible under Bayesian methods. In the Bayesian paradigm, Guhaniyogi and Spencer (2021) proposed a BTRR approach that is built upon a multi-way stick-breaking shrinkage prior on the tensor coefficients. Spencer et al. (2019) further generalized the approach by Guhaniyogi and Spencer (2021) to jointly identify activated brain regions due to a task and connectivity between different brain regions. In a recent paper, Guha and Guhaniyogi (2021) expand on previous work to develop a generalized Bayesian linear modeling framework with a symmetric tensor response and scalar predictors. Unfortunately, none of the above existing approaches cater to the scenario of longitudinal imaging studies, which presents challenges due to subject- and voxel-specific trajectories of neuroplasticity and is the focus of this article.

The rest of the article is organized as follows. Section 2.1 provides a primer on tensor-based modeling, Section 2.2 develops the l-BTRR modeling approach, Section 2.3 develops a novel feature selection strategy using Bayesian joint credible regions, and Section 2.4 outlines the method to infer neuroplasticity maps from the proposed model. Section 3 develops the MCMC steps for efficient posterior computation scheme. Section 4 describes extensive simulation studies that compare the performance of the proposed method with state-of-the-art competing approaches, and Section 5 reports the results from the aphasia data analysis.

2 METHODS

2.1 Overview of tensor models

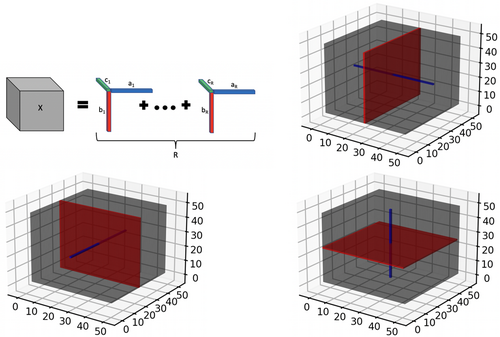

One of the earliest proposed techniques for tensor modeling is known as PARAFAC decomposition (Kolda & Bader, 2009), which is a special case of the more general Tucker decomposition (Kolda & Bader, 2009), and will be used for our purposes throughout the article. In mathematical terms, the mode tensor denoted as (and belonging to the space ) may be expressed as the sum of independent outer products of rank-1 tensor tensor margins. In particular, one may write , where denotes an outer product, the set of vectors are the so-called tensor margins having rank 1, for any given , represents the weight for the rth channel, and represents the chosen rank of the tensor. In practice, we often have for three-dimensional images in our applications. Given the above definition, the element of the rank-R tensor can be expressed as . We note that the tensor margins are only identifiable up to a permutation and multiplicative constant, unless some additional constraints are imposed. However, the lack of identifiability of tensor margins does not pose any issues for our setting, since the tensor product is fully identifiable, which is sufficient for our primary goal of coefficient estimation. Hence, we do not impose any additional identifiability conditions on the tensor margins, which is consistent with Bayesian tensor modeling literature (Guhaniyogi et al., 2017). The tensor decomposition is visually illustrated in Figure 1 for the three-dimensional case.

In addition to the tensor margins, other lower dimensional objects are naturally embedded within a tensor structure. These include tensor fibers that can be visualized as a thin thread of points generated when varying only one of the tensor modes, while keeping the other modes fixed. For example for a three-way tensor , mode-1 fibers correspond to the collection of d1-dimensional vectors defined as . Mode-2 and Mode-3 fibers can be defined similarly. On the other hand, tensor slices are defined as lower dimensional subspaces of a tensor that are generated by varying two tensor modes simultaneously, while keeping the third tensor mode fixed (for the case). For example, the tensor slice corresponding to the point on tensor mode-3 may be represented as a collection , where . The tensor fibers and slices are illustrated in Figure 1b–d, and these structures will be useful for understanding different aspects of the proposed model. More importantly, tensor slices will be directly instrumental for estimating the tensor margins even in the presence of missing voxels, as outlined in the following section.

2.2 Proposed model

2.2.1 Notations

Consider the observed three-dimensional brain image for the ith subject and the tth visit, with , , voxels along the three dimensions, and corresponding to the baseline and follow-up visits. Our method can accommodate varying numbers of visits across subjects in a relatively straightforward manner. The brain image can represent the chosen measure of brain activity or structure as determined by the specific application, and it is treated as a tensor object in our article. Let us denote the measurement corresponding to the vth voxel of as , where , with denoting the space of all voxels within the brain mask. Further denote the set of covariates for the ith sample as , where and induce time-varying and time-invariant effects of the outcome, respectively, and induces effects of time-varying covariates. We further denote as the time duration between baseline and visit for the ith sample ( assumed to be zero at baseline).

2.2.2 Longitudinal tensor response regression model

We note that the proposed model can be interpreted as a non-trivial adaptation of routinely used linear mixed models in longitudinal studies to tensor-valued outcomes. Simultaneously, it may be also be considered an extension of the Bayesian tensor method in Guhaniyogi and Spencer (2021) proposed in the context of cross-sectional single-subject fMRI time-series data, to longitudinal neuroimaging studies involving multiple subjects. In addition to the longitudinal set-up, there are additional differences in the prior specifications on the tensor margins as elaborated below.

2.2.3 Prior specification

| Parameter | Description | Range |

|---|---|---|

| Size of tensor dimensions for 3D tensor | ||

| AUC outcome tensor for visit and subject | ||

| Set of observed voxels corresponding to the ith subject | ||

| Observed time of follow-up for tth visit and ith subject | ||

| Covariates with time-varying effects for ith subject | ||

| Covariates with time-invariant effects for ith subject | ||

| Time-varying covariates with time-invariant effects for ith subject and ith visit | ||

| Population-level intercept | ||

| Subject-specific intercept | ||

| Population-level time slope | ||

| Subject-specific time slope | ||

| Time-varying effect of mth clinical covariate | ||

| Time-invariant effect of sth clinical covariate | ||

| Time-invariant effect of qth time-varying covariate | ||

| Random residual error | ||

| Variance of residual error (equal across voxels) | ||

| Tensor margin for dth dimension and rth channel for | ||

| Tensor margin for dth dimension and rth channel for | ||

| Tensor margin for dth dimension and rth channel for | ||

| Tensor margin for dth dimension and rth channel for | ||

| Tensor margin for dth dimension and rth channel for | ||

| Tensor margin for dth dimension and rth channel for | ||

| Tensor margin for dth dimension and rth channel for | ||

| Global tensor margin variance scaling term | ||

| Covariance matrix for dth tensor margin and rth channel | ||

| AR-1 covariance matrix for dth tensor margin and rth rank channel | ||

| Rate parameter corresponding to diagonal elements of | ||

| Lengthscale/smoothness parameter used to define |

The construction of the covariance matrices is expected to explicitly capture spatial correlations (in an unsupervised manner) corresponding to neighboring voxels, which is more flexible compared to specifying independence on the tensor margins as in Guhaniyogi et al. (2017). The extent and strength of such correlations will depend on posterior distributions (see Section 3) that combines the likelihood resulting from model (1) with the priors in (2). The posterior distribution will be used for estimation under a Bayesian framework, resulting in data-adaptive correlation estimates that are allowed to vary over brain regions. In our experience, the resulting set-up yields model flexibility while simultaneously ensuring model parsimony. Having specified the prior distributions, we now highlight the specific advantages under the tensor modeling framework below.

2.2.4 Dimension reduction via tensor structure

2.2.5 Preserving spatial configurations

Before fitting the tensor model, the voxels in the image are mapped to a regularly spaced grid that is more amenable to a tensor-based treatment. Such a mapping preserves the spatial configurations of the voxels that provides significant benefits over a univariate voxel-wise analysis or a multivariable analysis that vectorizes the voxels without regard for spatial configurations. Although the grid mapping may not preserve the exact spatial distances between voxels, this is of limited consequence in our experience, given the ability to capture correlations between neighboring elements in the tensor margins as elaborated previously. To better understand how spatial smoothing is induced between the regression coefficients for neighboring voxels, note that the tensor coefficients for corresponding to the neighboring voxels and for are given as and , respectively. These coefficients share many common elements from the tensor margins and that induces correlations that is given by . Under the proposed prior in (2), we note that , which implies . This covariance decreases with the distance between the voxel indices for the first margin, thereby inducing spatial smoothing. Clearly, this is a desirable feature when one expects voxel activations that form spatially distributed clusters in different regions of the brain, as supported in literature (Woo et al., 2014).

2.2.6 Pooling information across voxels

A desirable feature of the tensor construction is that it is able to estimate voxel-specific coefficients using the information from neighboring voxels by estimating the tensor margins under the inherent low-rank structure. This feature yields more robust and reproducible brain maps illustrating significant voxels that are more robust to missing voxels and noise in the images. As a natural byproduct, this feature can be handily used for robust imputation of imaging outcomes corresponding to missing voxels, as empirically validated via our extensive numerical studies in Section 4. In contrast, the ability to borrow information across neighboring voxels is completely missing in voxel-wise analysis, which treats the coefficients across voxels as independent without regard to their spatial configurations.

For example, consider a toy scenario involving the estimation of the element that is expressed as in model (1). The estimation of coefficients proceeds through the estimation of the tensor margins . We note that the elements are inherently contained in the tensor coefficients corresponding to all voxels in the tensor slice given by (refer to Figure 1). Similarly, the tensor margin elements are contained in the tensor coefficients corresponding to the tensor slice . A similar interpretation holds for the tensor margin elements that can be learned using the voxels lying on the tensor slice . Hence, by pooling information across voxels contained in suitable tensor slices, the tensor margin parameters can be learned in an effective manner that is more robust to missing voxels as elaborated below.

2.2.7 Accommodating missing/redundant voxels

Another desirable feature of the proposed model is that it is able to produce accurate results even when there is small to moderate proportion of missing voxels in the image. This is important for applications in stroke studies, where voxels lying in lesion areas show no activity and are considered missing. Consider the set of voxels that is observed for the ith subject . In the presence of subsets of missing or redundant voxels that may vary across individuals, the proposed model (3) may be modified by considering voxels , where denotes a subject-specific set of observed voxels. Clearly, the only difference is that this model assumes varying set of missing voxels across samples, instead of an identical set of missing voxels in (3). However, even with , the proposed model is still able to preserve its appealing features such as dimension reduction, accounting for spatial contiguity, and robust estimation in the presence of subsets of missing/redundant voxels. Since the tensor regression coefficients are expressed as a low-rank decomposition that involves outer products of tensor margins, each element in the tensor margin is learned by pooling information across corresponding subsets of tensor slices that comprise non-missing voxels. This facilitates the estimation of the voxel-specific coefficients corresponding to missing voxels in the image. Although there is some loss of information in not being able to use all the voxels on a tensor slice for estimating the tensor margins due to missingness, such loss is manageable when the proportion of missing voxels is not overly large (see simulation studies in Section 4). When multiple samples are present with varying locations of missing voxels (as inevitably occurs in stroke samples due to the varying size, shape, and location of lesions), the performance of the method is expected to improve provided there is lesser overlap in the locations of the missing voxels across samples. On the other hand, voxels that are missing across all samples are considered redundant and it is not of interest to estimate the corresponding coefficients. Such voxels, which may lie in common lesion areas or even outside the brain mask, or exhibit limited variability across samples, are excluded from further analysis.

The above features of the proposed tensor response regression model result in distinct advantages over a voxel-wise regression approach, in terms of handling missing voxels. Voxel-wise methods proceed by fitting the model separately corresponding to each voxel, after eliminating the subset of samples for which the corresponding voxel information is missing. Hence, the estimation accuracy for the voxel-level coefficients corresponding to missing voxels may deteriorate due to loss of the effective sample size. Further, different subsets of missing voxels across different individuals may potentially result in an imbalance in the effective sample size across voxels. The voxel-wise analysis is expected to be deeply affected by such a reduction in sample size, whereas the tensor-based method is more robust to such issues given the fact that it pools information across voxels to learn tensor margins.

2.3 Feature selection

The Bayesian approach provides a natural inferential framework that can be used to determine significant effects via credible intervals derived from MCMC samples. However, simply computing the credible intervals for the parameters of interest in order to determine significance may not provide adequate control for multiplicity and it does not account for the underlying correlations between voxels. Multiplicity adjustments are required when testing for significant effects on a large number of voxels. Typically used adjustments in the Bayesian setting such as the Bonferroni correction adjusts the significance level with respect to the number of tests (i.e., number of non-missing voxels), but have important limitations. First, the number of MCMC iterations needs to sufficiently exceed the number of voxels for one to construct suitable Bonferroni corrections. This is typically challenging in the presence of a large number of voxels. Second, the underlying spatial correlations across voxels are not accounted for, which is undesirable for neuroimaging studies. Although alternate approaches such as the CEI method (Chumbley et al., 2010) use post hoc adjustments to account for spatial correlations at the cluster level resulting in improvements, the performance may still be sensitive to the quality of the estimated coefficients that may be sub-optimal under a voxel-wise regression analysis.

To address these pitfalls, we use joint credible regions for inference and feature selection, which respects the correlations in the posterior distribution and incorporates a naturally in-built multiplicity adjustment mechanism. In particular, we use the “Mdev” method relying on simultaneous credible bands that were introduced by Crainiceanu et al. (2007) and later adopted by Hua et al. (2015). The joint credible regions are constructed using the posterior samples of the tensor coefficients and respect the shape of the joint posterior distribution and dependence across the coefficients. More concretely, given posterior samples across elements of a tensor-valued coefficient, denoted after burn-in, the Mdev method first computes the posterior sample average curve , and pointwise and quantiles, and , respectively, for all . In order to borrow information across voxels jointly and provide in-built multiplicity adjustments, the next step of Mdev involves computing maximal deviations and . Based on these, the credible bands for each voxel are computed as . These joint credible regions naturally provide an inferential framework. For example, the coefficient corresponding to voxel is considered significant if the credible interval does not contain zero; otherwise it is considered to be nonsignificant. Extensive numerical experiments show superior control over false positives (higher specificity) compared to analysis without multiplicity adjustments (Eklund et al., 2016), and superior power to detect important features compared to voxel-wise methods with Bonferroni corrections, under the proposed joint credible regions approach. As a byproduct, the credible regions can also be used for uncertainty quantification, which is another desirable quality in neuroimaging studies.

2.4 Inferring group and individual neuroplasticity brain maps

Using the BTRR approach, it is possible to infer both group-level as well as individual neuroplasticity maps indicating voxels with significant neuroplasticity. The latter clearly provides deeper insights compared to a standard group-level comparison that is typically reported in neuroplasticity studies, and it is of independent interest for studying personalized trajectories of response to treatment.

In order to obtain the group-level neuroplasticity maps, we average over the individual neuroplasticity maps within a given group. Consider two groups of interest. Then the neuroplasticity maps corresponding to is obtained using the MCMC samples , and similarly for the other group . For group , the MCMC samples can be used to determine significant neuroplasticity maps based on the joint credible regions method in Section 2.3. We note that the groups under consideration are predetermined based on the scientific questions of interest for a given application.

3 POSTERIOR COMPUTATION

The model parameters in (1)–(2) are unknown and estimated via posterior distributions that are obtained by combining the prior and the likelihood under the Bayesian paradigm. Since it is typically challenging to obtain closed-form joint posterior distributions for the model parameters, an MCMC sampling scheme is employed that is able to draw samples from the joint posterior in a computationally cohesive manner. Under the MCMC scheme, it is possible to estimate the full posterior distribution that is then used to derive point estimates and provide uncertainty quantification. The MCMC steps for most parameters in the model such as the tensor margins and the scale parameters involve fully Gibbs updates with closed-form posterior distributions, that result in good mixing. The only parameters that cannot be updated using fully Gibbs steps involve the lengthscale parameters in the covariance matrices for the tensor margins, which are updated using Metropolis-Hastings steps. Under the assumed prior structure, the number of such lengthscale parameters is reasonable, and a Metropolis random walk is used for updating these parameters. In particular, we use the proposal density , where indexes the MCMC iteration, and denotes the variance term that is fixed. We run the Gibbs sampling steps for a total of 5000 MCMC iterations with a burn-in of 2500. The full set of posterior computation steps is provided in the Supplementary Materials.

3.1 Choosing the tensor rank (R)

We use the deviance information criterion (DIC) to select the tensor rank R, which is a goodness of fit measure that strikes a balance between the quality of the model fit and the number of parameters involved in the model (Shriner & Yi, 2009). Such a criterion was also used in Guhaniyogi and Spencer (2021) and resulted in suitable performance in a wide variety of studies. The expression for DIC is provided in Supplementary Materials.

4 SIMULATION STUDIES

4.1 Data generation schemes

Model performance was conducted using several distinct data generation schemes (see Table 2). For each scheme, 50 replicates of simulated longitudinal data were generated according to model (1) but without a subject-specific time slope (i.e., ). The covariates and and noise terms were simulated from Gaussian distributions. For each scheme, tensor-valued outcomes of size were generated (total of 4096 voxels) across 14 subjects (indexed by ), and for 3 Longitudinal visits (indexed by ). We specified the mean signal-to-noise (SNR) ratio across all voxels as approximately 0.75, where a lower SNR signals greater noise in the images. Additional SNR levels were also investigated and produced similar results, but they are not presented here due to space constraints. After generation, the data were split into a training set that contained all voxels from the first two visits and a randomly selected subset of 75 and 50% voxels in the third visit, and a test set that contained the remaining holdout voxels (25 and 50%) in the third visit. The proposed model was fit on the training data and feature selection results were reported based on this fit, whereas the trained model was used to make out of sample prediction on the test set data. The reported results were averaged over replicates.

| Setup options | Scheme 1 | Scheme 2A | Scheme 2B | Scheme 3A | Scheme 3B |

|---|---|---|---|---|---|

| Coefficient type | Low-rank | Spherical | Cubic | Spherical | Cubic |

| Number of time-varying coefficients | 0 | 0 | 0 | 1 | 1 |

| Total number of covariates | 4 | 4 | 4 | 4 | 4 |

| Number of time-varying covariates | 2 | 2 | 2 | 2 | 2 |

| Percentage of true coefficient zeros | 75% | 75% | 75% | 50–93% | 50–93% |

| Mean SNR | 0.75 | 0.75 | 0.75 | 0.75 | 0.75 |

- Abbreviation: SNR, signal-to-noise.

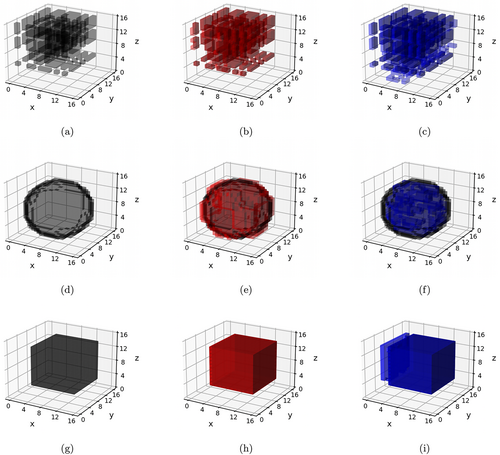

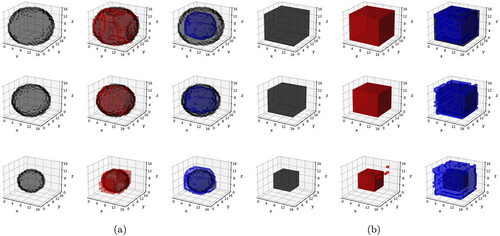

The data generation schemes differed in terms of the structure of the tensor-valued coefficients in model (1). In Scheme 1, no time-varying coefficients were assumed (i.e., ), and all other coefficients (including the intercept terms) were generated from rank 2 tensor decompositions with binomially-distributed tensor margins for four different covariates. For each tensor margin, the probability that a given element would be zero was fixed at 0.55 such that after constructing the low-rank tensor coefficients, approximately 75% of voxels in each coefficient consisted of true zeros across replicates. Scheme 2 was similar to Scheme 1 in terms of not having any time-varying effects, but differed with regard to how the remaining coefficients were generated. In particular, these coefficients were set to be nonzero for approximately spherical (Scheme 2a) and cubic (Scheme 2b) volumes of voxels with randomly chosen centers. The volumes of these shapes were fixed such that an average of 75% of voxels consisted of true zeros across coefficients.

In contrast to Schemes 1 and 2, data under the Scheme 3 were generated based on both time-varying as well as time-invariant effects. Three covariates were chosen to have time-invariant effects in Scheme 3, and the pattern of these effects was chosen similarly as in Schemes 2a and 2b, corresponding to Schemes 3a and 3b, respectively. Only one covariate (generated from a binomial distribution) was assigned to exhibit time-varying effects in Scheme 3, and these effects assumed spherical (Scheme 3a) and cubic (Scheme 3b) shapes. The volume of these time-varying shapes varied across the three visits, with the proportion of true zeros increasing from 50% at the first visit to 93% at the third visit. Examples of the different classes of signals in Schemes 1–3 are summarized in Table 2, and visually illustrated in Figures 2 and 3.

4.2 Competing methods and performance metrics

To compare the performance of the l-BTRR method, five competing methods were used that can be categorized into cross-sectional and longitudinal methods, as well as tensor-based modeling and voxel-wise approaches that are routinely used. The only competing approach that accounted for the spatial configuration of the voxels was the cross-sectional BTRR (cs-BTRR), which fits the BTRR model by ignoring the within-subject dependence across longitudinal visits, that is, treating the longitudinal visits as exchangeable. Hence, this approach does not pool information across visits to estimate subject-level effects. The cs-BTRR approach is obtained by modifying model (1) to exclude the effects corresponding to and , and further assuming is the same for all visits , which translates to a time-invariant effect for all covariates. Both the l-BTRR and cs-BTRR methods consisted of 5000 MCMC iterations per replicate, using a burn-in of 2500. For each replicate, tensor coefficient ranks between 1 and 5 were considered, where each coefficient rank was set as equal for simplicity. Optimal rank was determined by finding the minimal DIC score as in Figure S1 in the Supplementary Materials. To yield more comparable evaluation metrics between the l-BTRR and cs-BTRR methods, we performed the DIC rank selection for the l-BTRR method, and that chosen rank was manually specified for the cs-BTRR approach. For the Bayesian methods, significant features were inferred by computing the joint simultaneous credible bands as per Section 2.3, where Type I error rate was set to . Point-wise credible bands without multiplicity correction were also computed for the Bayesian methods, to illustrate the benefits of feature selection under the joint credible regions (see Tables S2–S4 in Supplementary Materials).

The remaining four competing methods are all voxel-wise approaches, meaning they perform the analysis separately for each voxel. The voxel-wise approaches were fit using either the ordinary least squares (OLS) technique, or the Lasso approach (Tibshirani, 1996). These methods include cross-sectional approaches such as voxel-wise cross-sectional OLS (vcs-OLS) and voxel-wise cross-sectional Lasso (vcs-Lasso), as well as longitudinal methods such as the voxel-wise linear mixed modeling (vl-OLS), and the voxel-wise longitudinal Lasso with random intercepts (vl-Lasso). Unlike l-BTRR, the longitudinal voxel-wise approaches (i.e., vl-OLS and vl-Lasso) fit the voxel-level model (3) separately for each voxel, while still incorporating subject and time dependence through a random intercept and time-varying effects as in (3). This construction is consistent with linear mixed modeling for longitudinal data literature (Curran & Bauer, 2011). On the other hand, the voxel-wise cross-sectional methods ignored the within-subject dependence across visits and assume time-invariant effects across all visits. The voxel-wise Lasso approaches were fit using the glmmLasso and glmnet R packages, and resulted in sparse estimates for the regression coefficients. For both vl-Lasso and vsc-Lasso, the tuning parameter was selected as the parameter which produced minimum cross-validation error in a 10-fold cross-validation scheme.

For each scheme and each chosen level of holdout voxels (25 and 50%), performance metrics were selected to assess the out-of-sample predictive performance and feature selection accuracy. For out-of-sample prediction, overall root mean squared error (p-RMSE) was calculated by averaging the squared error across all subjects, visits, and hold-out voxels. Additionally, out-of-sample correlation (p-Corr) was computed comparing the vectorized observed and predicted outcome for all subjects, visits, and holdout voxels. Probability of coverage was computed for each method by obtaining multiplicity-adjusted credible or confidence intervals on the fitted outcome and determining the proportion of holdout voxels whose interval contained the true value (without simulated noise) across replicates. The average width of these confidence and credible regions are presented along with coverage probability to get a sense of the precision of each method. Coefficient estimation accuracy was obtained by computing the RMSE between the true and estimated coefficients across voxels (c-RMSE). For feature selection, sensitivity, specificity, and F1 score were calculated by comparing significance estimates with true coefficient values. In particular, the F1 score is computed as the harmonic mean between recall (sensitivity) and precision, that is, 2 × recall × precision/(recall + precision). Here, sensitivity is the power to detect the true positives, computed as the proportion of truly nonzero coefficients that were inferred as significant, and precision is defined as the ratio of the number of true positives over the total number of significant signals detected. We also report specificity which is defined as the proportion of truly zero coefficients that were correctly identified as such. All feature selection metrics were averaged for the coefficients , and in model (1), which correspond to population-level effects of simulated covariates , and .

The significance coefficient estimates are obtained differently for each class of methods. For the BTRR methods, simultaneous credible bands are used to infer significant voxel-specific estimates from MCMC samples (see Section 2.3), and point-wise credible bands without multiplicity correction are computed for comparison. The simultaneous credible bands for the Bayesian tensor approach automatically result in multiplicity adjustments. For OLS methods, we implement a version of CEI that utilizes the Benjamini–Hochberg procedure to adaptively select a threshold that distinguishes between significant and nonsignificant clusters of voxels (Chumbley et al., 2010). The details for this implementation are provided in the Supplementary Materials. In order to compute coverage probabilities for the voxel-wise methods, we first fit the model for each holdout voxel using training data from the first and second visit and subsequently obtained the multiplicity-adjusted confidence intervals for prediction of the outcome using covariates from the third visit. For Lasso methods, all effects with a magnitude greater than 0.001 were deemed to be significant for a given voxel. Hence, we were not able to include multiplicity adjustments for the Lasso approaches, and therefore the corresponding probability of coverage was not computed.

4.3 Simulation results

The simulation results are presented in Tables 3–5. The proposed l-BTRR method has a significantly lower out-of-sample prediction RMSE, significantly higher correlation for the predicted outcome values, and consistently improved regression coefficient estimation (lower c-RMSE values) compared to all other competing methods and across all settings. These results point to the clear advantages in prediction and parameter estimation under the proposed longitudinal tensor approach. The higher c-RMSE values in the voxel-wise methods correspond to more isolated patterns in the estimated coefficients that fail to preserve spatial contiguity that is seen in the true signal. In contrast, the tensor-based methods are able to preserve the spatially distributed signals in the estimated coefficients, as seen in Figures 2 and 3. Additionally, these figures illustrate that the tensor-based methods are adaptive to the spatial discontinuity of the signals, given that the estimated signals preserve the sharp edges of the true signal, especially for the l-BTRR method. To explore this further, we examined the coefficient estimation and feature selection of all competing methods within a thin strip around the perimeter of the true signals in Scheme 2, where the discontinuity is most pronounced. These results are consistent with the overall results and are shown in Table S1 in the Supplementary Materials.

| Metric | Comparison | p-value |

|---|---|---|

| Holdout 25% | ||

| p-RMSE | l-BTRR vs. cs-BTRR | 1.494e−11 |

| p-Corr | l-BTRR vs. cs-BTRR | <2.2e−16 |

| c-RMSE | l-BTRR vs. cs-BTRR | <2.2e−16 |

| Sens | cs-BTRR vs. vl-Lasso | 0.001303 |

| Spec | vl-OLS vs. l-BTRR | 0.009652 |

| F1 | l-BTRR vs. cs-BTRR | 0.02463 |

| Coverage | vl-OLS vs. l-BTRR | 2.576e−05 |

| Coverage Width | cs-BTRR vs. l-BTRR | 2.507e−14 |

| Holdout 50% | ||

| p-RMSE | l-BTRR vs. cs-BTRR | 1.158e−13 |

| p-Corr | l-BTRR vs. cs-BTRR | <2.2e−16 |

| c-RMSE | l-BTRR vs. cs-BTRR | <2.2e−16 |

| Sens | cs-BTRR vs. vl-Lasso | 0.003701 |

| Spec | vl-OLS vs. l-BTRR | 8.576e−08 |

| F1 | l-BTRR vs. cs-BTRR | 0.003816 |

| Coverage | vl-OLS vs. l-BTRR | 7.646e−06 |

| Coverage Width | cs-BTRR vs. l-BTRR | 1.45e−15 |

| Metric | Comparison | p-value |

|---|---|---|

| Sphere: Holdout 25% | ||

| p-RMSE | l-BTRR vs. cs-BTRR | 0.0005473 |

| p-Corr | l-BTRR vs. cs-BTRR | 7.691e−08 |

| c-RMSE | l-BTRR vs. cs-BTRR | 0.5851 |

| Sens | cs-BTRR vs. vl-Lasso | 0.0348 |

| Spec | vl-OLS vs. l-BTRR | 2.886e−12 |

| F1 | l-BTRR vs. cs-BTRR | 0.004291 |

| Coverage | vl-OLS vs. l-BTRR | 1.761e−09 |

| Coverage Width | cs-BTRR vs. l-BTRR | 0.007892 |

| Sphere: Holdout 50% | ||

| p-RMSE | l-BTRR vs. cs-BTRR | 7.936e−07 |

| p-Corr | l-BTRR vs. cs-BTRR | 3.641e−09 |

| c-RMSE | l-BTRR vs. cs-BTRR | 0.6447 |

| Sens | cs-BTRR vs. vl-Lasso | 0.02026 |

| Spec | vl-OLS vs. l-BTRR | 3.708e−10 |

| F1 | l-BTRR vs. cs-BTRR | 0.00226 |

| Coverage | vl-OLS vs. l-BTRR | 6.952e-09 |

| Coverage Width | cs-BTRR vs. l-BTRR | 1.479e-06 |

| Cube: Holdout 25% | ||

| p-RMSE | l-BTRR vs. cs-BTRR | 1.424e−05 |

| p-Corr | l-BTRR vs. cs-BTRR | <2.2e−16 |

| c-RMSE | l-BTRR vs. cs-BTRR | 3.314e−07 |

| Sens | cs-BTRR vs. vl-Lasso | 7.475e−06 |

| Spec | vcs-OLS vs. l-BTRR | 6.452e−05 |

| F1 | l-BTRR vs. cs-BTRR | 0.3728 |

| Coverage | vl-OLS vs. l-BTRR | 0.006359 |

| Coverage Width | cs-BTRR vs. l-BTRR | 8.773e−09 |

| Cube: Holdout 50% | ||

| p-RMSE | l-BTRR vs. cs-BTRR | 1e−07 |

| p-Corr | l-BTRR vs. cs-BTRR | 2.763e−16 |

| c-RMSE | l-BTRR vs. cs-BTRR | 0.01841 |

| Sens | cs-BTRR vs. vl-Lasso | 0.0002161 |

| Spec | vl-OLS vs. l-BTRR | 7.659e−06 |

| F1 | l-BTRR vs. cs-BTRR | 0.002884 |

| Coverage | vl-OLS vs. l-BTRR | 0.02383 |

| Coverage Width | cs-BTRR vs. l-BTRR | 6.827e−12 |

| Metric | Comparison | p-value |

|---|---|---|

| Sphere: Holdout 25% | ||

| p-RMSE | l-BTRR vs. cs-BTRR | 0.0003372 |

| p-Corr | l-BTRR vs. cs-BTRR | 2.653e−09 |

| c-RMSE | l-BTRR vs. cs-BTRR | 3.082e−08 |

| Sens | vl-Lasso vs. cs-BTRR | 0.2477 |

| Spec | vcs-OLS vs. vl-OLS | 0.04815 |

| F1 | l-BTRR vs. cs-BTRR | 0.9536 |

| Coverage | vl-OLS vs. l-BTRR | 0.002442 |

| Coverage Width | cs-BTRR vs. l-BTRR | 6.22e−06 |

| Sphere: Holdout 50% | ||

| p-RMSE | l-BTRR vs. cs-BTRR | 6.215e−06 |

| p-Corr | l-BTRR vs. cs-BTRR | 3.597e−14 |

| c-RMSE | l-BTRR vs. cs-BTRR | 0.09499 |

| Sens | vl-Lasso vs. cs-BTRR | 0.283 |

| Spec | vcs-OLS vs. vl-OLS | 1.15e−05 |

| F1 | l-BTRR vs. cs-BTRR | 0.9731 |

| Coverage | vl-OLS vs. l-BTRR | 4.75e−05 |

| Coverage Width | cs-BTRR vs. l-BTRR | 1.85e−08 |

| Cube: Holdout 25% | ||

| p-RMSE | l-BTRR vs. cs-BTRR | 2.226e−11 |

| p-Corr | l-BTRR vs. cs-BTRR | 5.116e−13 |

| c-RMSE | l-BTRR vs. cs-BTRR | 4.856e−15 |

| Sens | cs-BTRR vs. vl-Lasso | 0.3456 |

| Spec | vcs-OLS vs. vl-OLS | 2.695e−15 |

| F1 | l-BTRR vs. cs-BTRR | 0.02376 |

| Coverage | vl-OLS vs. l-BTRR | 0.002032 |

| Coverage Width | cs-BTRR vs. l-BTRR | 5.18e−04 |

| Cube: Holdout 50% | ||

| p-RMSE | l-BTRR vs. cs-BTRR | 2.262e−09 |

| p-Corr | l-BTRR vs. cs-BTRR | 4.219e−13 |

| c-RMSE | l-BTRR vs. cs-BTRR | 1.207e−08 |

| Sens | cs-BTRR vs. vl-Lasso | 0.3648 |

| Spec | vcs-OLS vs. vl-OLS | <2.2e−16 |

| F1 | l-BTRR vs. cs-BTRR | 0.2494 |

| Coverage | vl-OLS vs. l-BTRR | 0.00063 |

| Coverage Width | cs-BTRR vs. l-BTRR | 4.129e−07 |

In terms of feature selection, the proposed method consistently has the highest F1 score, which validates the superior performance. The cs-BTRR approach and longitudinal Lasso method often report the highest sensitivity that exceeds the sensitivity under the multiplicity-adjusted l-BTRR method. While this is expected, the higher sensitivity reported under the Lasso methods (without multiplicity correction) comes at the cost of considerably lower specificity that also percolates to significantly lower F1 score under these approaches. In contrast, the F1 score under the proposed approach is always greater than 0.75. The proposed method reports the second highest specificity after voxel-wise OLS (vl-OLS) methods for all schemes. We note that the specificity values for the l-BTRR method correspond to false discovery rates which are relatively close to the nominal value of 0.05, which is expected given the built-in multiplicity adjustment under the simultaneous credible bands. The vl-OLS methods always have specificity close to 1 after applying CEI multiplicity correction, which is due to the fact that it detects very few significant features as evidenced by low sensitivity values. This is clearly not desirable and indicates the pitfalls of voxel-wise model fitting for feature selection in simulated longitudinal cases with small sample size and relatively large numbers of voxels. The considerably poor performance under the voxel-wise approaches also stems from their inability to pool information across voxels and to account for their spatial configurations. Overall, the proposed l-BTRR method illustrates robust and accurate feature selection in the presence of time-varying as well as time-invariant signals and covariates.

Moreover, the multiplicity-adjusted cs-BTRR performs the second best in general, consistently registering improvements over the voxel-wise methods. However, the cross-sectional approach as implemented cannot accommodate time-varying covariate effects, which results in poor performance compared to the proposed l-BTRR method. Further, the improvements under the joint credible regions for feature selection under the tensor-based methods is evident from higher F1 scores compared to the point-wise credible regions without multiplicity adjustments (see Tables S2–S4 in Supplementary Materials). This further points to the importance of using joint credible regions for feature selection, which is able to respect the shape of the posterior distribution.

In terms of probabilities of coverage, we observe that the proposed l-BTRR method had the second highest coverage behind the longitudinal vl-OLS method in all schemes after applying multiplicity corrections to obtain confidence intervals of prediction for the outcome. However, the width of the intervals used to obtain coverage probabilities was substantially lower for the l-BTRR method than the vl-OLS method. Coupled with the fact that the coverage probabilities for the l-BTRR method were always above 90%, this finding illustrates the improved balance of accuracy and precision of the l-BTRR method over the voxel-wise competing approaches.

While the MCMC sampling scheme tends to be more computationally expensive in practice compared to voxel-wise methods, our implementation is fairly efficient and runs within an hour for the example replicate we considered. Across all Schemes and replicates, average run-times were 445 and 393 s per thousand iterations for l-BTRR and cs-BTRR, respectively, whereas faster run-times of 142, 4, 187, and 129 s were observed for vl-OLS, vcs-OLS, vl-Lasso, and vcs-Lasso, respectively.

5 APHASIA ANALYSIS

5.1 Data description

Fourteen subjects with poststroke chronic aphasia (at least 6 months following stroke) were recruited for this study (Altmann et al., 2014; Benjamin et al., 2014; Krishnamurthy et al., 2021). After recording demographic information about subjects, including age (67 ± 11 years), gender (6 females, 8 males), and cerebrovascular accident type (11 ischemic and 3 hemorrhagic), each subject participated in both an MRI scanning session and task-fMRI language assessment session. For the MRI session, a 1 mm3 isotropic high-resolution T1-weighted anatomical image for registration to Montreal Neurological Institute template space was acquired using turbo field echo acquisition (echo time = 3.7 ms, repetition time = 8.1 ms, field of view = 240 × 240 mm2, flip angle = 8°, matrix size = 240 × 240). MRI scans were used to identify lesion volume and location for each subject. In tandem with MRI sessions, subjects underwent language assessment sessions. The language assessment included administration of the Western aphasia Battery-Revised (Kertesz, 2006), which generated an index of aphasia severity known as WAB-AQ. Language treatment sessions included word retrieval probes to monitor progress, which consisted of both category-member generation and picture-naming trials. Subjects were randomly assigned to one of two treatment groups—control or intention . Both treatment groups underwent standard language therapy involving picture naming and category exemplar generation. In the intention group, an additional treatment involving complex left-hand motion during picture naming was administered, as described in Benjamin et al. (2014).

Task fMRI was used to survey brain activity during category-member generation over a total of three visits per subject (i.e., baseline, 2-week follow-up, and 3-month follow-up), except for one subject who dropped out of the study prior to the final follow-up visit. The task-fMRI scans involved a set of word retrieval tasks, where fMRI was used to survey brain activity during these tasks. In particular, for a total of 60 trials of 6.8 s duration each (6 runs of 10 trials), subjects heard and were shown text for a category (e.g., “Tools”) and were instructed to speak a loud singular example of that category (e.g., “Wrench”). Intertrial intervals were of random duration between 13.6 and 17 s and consisted of subjects viewing a fixation cross while being instructed not to speak or move.

Task-based fMRI allows one to map treatment-induced brain reorganization and/or restoration when the person with aphasia is engaged in a language task. One of the primary focuses of our study is to evaluate how brain neuroplasticity may vary with respect to the two treatments. The first step is to choose a suitable metric for capturing brain activity and associated neuroplasticity changes over longitudinal visits. In this project, we use the voxel-wise area under the curve (AUC) to measure brain activity induced by the task experiment and compute the longitudinal AUC differences across visits to evaluate neuroplasticity, which is more robust to noise (Krishnamurthy et al., 2020). The AUC is an integration of percent change BOLD activity underneath the estimated hemodynamic response function for a given voxel. It is agnostic to treatment-specific and session-specific variability in peak amplitude changes, which is desirable given that estimates of peak amplitude can be heavily influenced by false-positive artifacts, fMRI properties (e.g., sampling rate), and modeling assumptions (e.g., not adequately accounting for temporal variation) (Lindquist et al., 2009; Miezin et al., 2000). From a physiological and biophysical viewpoint, AUC is a good marker for task-induced brain energetics and thereby is suitable for evaluating treatment-induced neuroplasticity changes (Krishnamurthy et al., 2020).

5.1.1 Screening out missing voxels

In practice, each subject is expected to have a subset of voxels in the brain image that are treated as “missing” or redundant, especially in stroke studies. These voxels are considered redundant due to the fact that (i) they lie outside of the brain mask; (ii) they belong to the lesion areas with disrupted brain activity and hence are not expected to show neural plasticity changes that are of primary interest; or (iii) they record zero or close to zero brain activity in terms of AUC values across all samples (i.e., they show no evidence of hemodynamic response activity in any subject at any time) and hence are not discriminatory for our analysis. The set of redundant voxels in (ii) is expected to vary across individuals depending on the lesion characteristics, while the set of voxels in (iii) is common across all samples. We implement a screening step to exclude these redundant voxels, which is a practical step that leads to a dimension reduction for the outcome image used without loss of accuracy. This screening step also makes the tensor-based methods more comparable to the univariate voxel-wise analysis, which requires these voxels to be excluded from analysis, since they either have zero AUC values across all or most samples, preventing a reasonable effort to fit a model corresponding to these voxels. After screening, the number of remaining voxels for analysis had a range of 26,469–30,200 across the 14 study individuals. Furthermore, all subjects had AUC scores for three clinical visits—baseline , posttreatment , and 3-month follow-up —except for one subject who dropped out after visit .

5.2 Model for aphasia study

5.3 Results

The results below report group-level as well as individual neuroplasticity changes under the proposed tensor model, which are inferred using the joint credible regions approach. We note that we also performed a voxel-wise regression for the aphasia dataset. We observed that using this approach, there was a large proportion of voxels for which the full model including all covariates and subject-specific terms did not converge. For these voxels, we therefore had to refit the voxel-wise model by excluding the subject-specific time-slope. However, this approach did not yield any significant neuroplasticity changes due to treatment after CEI-based multiplicity adjustments, and therefore no voxel-wise results are presented under this analysis. Such results are biologically implausible and point to the challenges under the voxel-wise analysis, as highlighted in the Introduction and Section 2.

5.3.1 Group-level neuroplasticity maps

Our focus is to evaluate the differences in neuroplasticity maps between different levels of covariates included in the model, based on the approach presented in Section 2.4. This stratification strategy is designed to investigate the impact of clinical factors on neuroplasticity. We present the neuroplasticity maps between baseline and the posttreatment visit, as well as between the posttreatment and 3-month follow-up visits, corresponding to (i) the two treatment groups; (ii) two age groups (<65 and ≥65 years); (iii) moderate and mild aphasia severity (WAB-AQ score between 50 and 75 and 75 and 93.8, respectively); and (iv) varying levels of lesion volumes (lower, middle, and upper tertile).

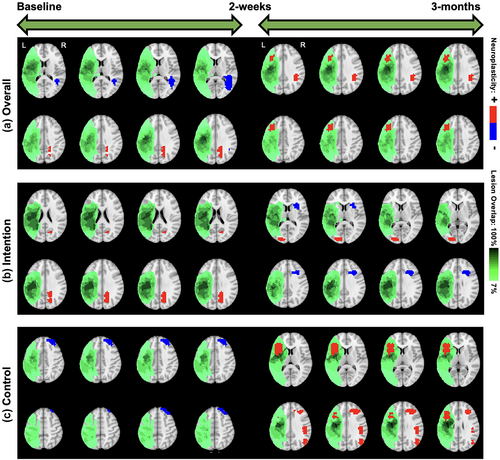

We start with overall neuroplasticity maps that represented the group-level changes without any stratification based on covariates (top panel of Figure 4). For the overall neuroplasticity involving all 14 subjects, decreased activity was observed within the right middle temporal gyrus (R-MTG), and increased activity was observed within the right precuneus between baseline to posttreatment. In contrast, only activity increases were observed within the right angular gyrus (R-AG) and left middle frontal gyrus (L-MFG) between posttreatment and 3-month follow-up (Figure 4).

5.3.2 Maps stratified by treatment

Within the intention treatment group, the right precuneus displayed increased activity between baseline to 2 weeks posttreatment while the right inferior frontal gyrus (R-IFG), specifically pars opercularis, displayed decreased activity while the left middle occipital gyrus (L-MOG) displayed increased activity between 2 weeks and 3 months posttreatment (middle and bottom panels of Figure 4). Within the control group, the right superior frontal gyrus displayed decreased activity between baseline to 2 weeks posttreatment while the L-IFG (pars opercularis), right MFG (R-MFG), right supramarginal gyrus, and right MOG (R-MOG) displayed increased activity between the 2 weeks and 3 months posttreatment (Figure 4). Both the overall neuroplasticity maps (3 months post baseline) and the control treatment maps show long-term activity increases in pericavitational and perilesional brain areas.

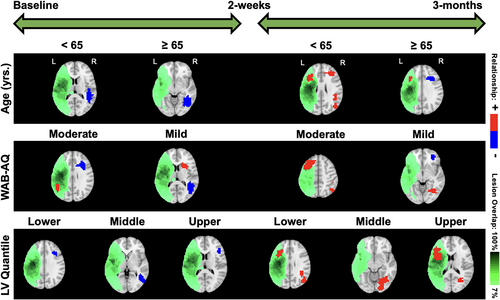

5.3.3 Maps stratified by age

In terms of age (Figure 5), for participants <65 years old, the right superior temporal gyrus (R-STG) decreased activity from baseline to posttreatment while bilateral MFG (L and R-MFG) and R-STG showed increased activity from posttreatment to 3-month follow-up. For participants ≥65 years old, the R-MTG showed decreased activity from baseline to posttreatment while the left IFG (L-IFG) showed increased activity from posttreatment to follow-up. In contrast, the contralateral R-IFG showed decreased activity from posttreatment to 3-month follow-up. Overall, according to Ellis and Urban (2016), participants younger than 65 have more rehabilitation potential to benefit from treatment-specific plastic changes, and those older than 65 may need more tailored and additional treatments to recover long-term increased brain activity.

5.3.4 Maps stratified by aphasia severity

For the moderate aphasia severity group, the R-IFG negatively influenced short-term neuroplasticity, while the contralateral L-IFG and R-MTG positively influenced long-term neuroplasticity. On the other hand, participants in the mild aphasia severity group displayed significant clusters in the R-STG that negatively influenced short-term neuroplasticity and some subcortical areas such as the right caudate and putamen that positively influenced short-term neuroplasticity. In terms of long-term neuroplasticity, the R-MOG was found to have a positive influence while the R-MFG was found to have a negative influence. The moderate severity group exhibited long-term increased neuroplasticity changes in pericavitational and perilesional brain areas. These results are visually illustrated in Figure 5.

5.3.5 Maps stratified by lesion volume

In terms of the lesion volume, participants within the lower one-third quantile displayed significant clusters in the R-MFG that decreased activity from baseline to posttreatment while both the L-IFG and R-MTG increased activity from posttreatment to 3-month follow-up. Participants within the middle one-third quantile displayed significant clusters in the R-MOG that decreased activity from baseline to posttreatment while the right lingual gyrus (R-LG) increased activity from posttreatment to 3-month follow-up. Participants within the upper one-third quantile displayed significant clusters in the right precentral gyrus that decreased activity from pre-treatment to follow-up while the left insula and R-LG increased activity from posttreatment to 3-month follow-up. The group with higher lesion volume showed long-term activity increases in brain regions lying close to the lesion areas. These results are visually illustrated in Figure 5.

5.3.6 Summary comments

It is interesting to observe that the model provided consistently increased brain activity estimates for long-term changes when all participants were pooled together irrespective of specific (i.e., standard or intention) therapy. Further, when the participants were separated based on the type of treatment, our novel modeling approach was able to identify unique potential biomarkers for treatment-specific neuroplasticity changes. While the control therapy showed a long-term activity increase, the intention treatment provoked both short- and long-term activity increases. On the other hand, activity decreases were in short-term measures for the control treatment and only in long-term measures for the intention treatment. Considering that intention treatment involved additional non-gestural circular hand movements on top of the standard therapy, we hypothesize that such cognitively (i.e., intention) driven nonsymbolic hand movements facilitate cognitive control and gating of information flow (Gratton, 2018).

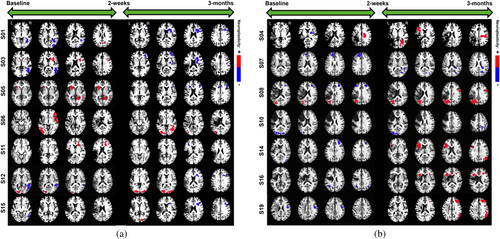

5.3.7 Individual neuroplasticity maps

In addition to the above group-level findings, our novel model also allows to extract neuroplasticity maps for each individual over visits. Common markers of short-term neuroplasticity within the intention group participants included the R-IFG (pars triangularis) for increased activity changes and right inferior temporal gyrus for decreased activity changes whereas long-term neuroplasticity estimates were more common within the R-MFG for decreased activity and the R/L-MOG for increased activity (Figure 6). Other, less common, significant clusters were found within right hemisphere subcortical regions corresponding to increased short-term activity as well as decreased long-term activity changes. Common markers of short-term neuroplasticity within the control group participants included the L-MOG and R/L-MFG for decreased activity changes whereas long-term neuroplasticity estimates were more common within the R-MFG and L-IFG (pars triangularis) for increased activity changes (Figure 6). Considering the heterogeneity of the lesion profile and associated poststroke recovery, generating personalized diagnostic and prognostic biomarkers are very pragmatic and attractive in stroke rehabilitation. Our results not only show that our novel approach has the potential to generate such individualized maps, but also the individualized results show consistent trends in neuroplasticity changes that are treatment and time point specific. These personalized treatment-specific spatial maps of neuroplasticity also allow for potential triaging of participants into individualized treatment plans that are tailored to their baseline clinical profiles.

5.3.8 Validation using longitudinal prediction

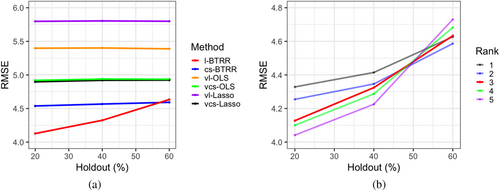

To assess the suitability of the tensor model for the aphasia data, we evaluated the out-of-sample prediction performance of the proposed model and other competing methods (cs-BTRR, vl-OLS, vcs-OLS, vl-Lasso). The training set comprised all voxels from the first two visits for all 14 subjects, whereas the test set comprised varying levels of holdout voxels from the third visit. In particular, we considered randomly chosen 20, 40, 50, and 60% of non-missing voxels pertaining to visit 2 across all subjects, to construct the test set. For each holdout level and method, the out-of-sample RMSE was computed to examine predictive performance. For the l-BTRR method, five different choices of ranks were used (i.e., ) to ascertain the effect of rank on predictive performance. As seen in Figure 7a, l-BTRR (using rank 3) had superior out-of-sample predictive accuracy compared to all competing methods for low to moderate holdout (i.e., roughly less than 60%), but the performance becomes comparable to the cs-BTRR for higher levels of holdout voxels. These findings validate the prowess of the l-BTRR approach over both cs-BTRR and voxel-wise methods when the number of missing voxels is a small to moderate. Moreover, the voxel-wise methods have consistently inferior predictive accuracy compared to tensor-based approaches that is not surprising given our simulation findings.

Figure 7b shows the out-of-sample RMSE for l-BTRR with a choice of rank between 1 and 5, demonstrating how higher choices of rank have better predictive performance for low holdouts but are more sensitive to the size of the training set and perform worse on high holdouts. This is expected, given that tensor models with higher ranks contain more parameters and require larger sample sizes for optimal training. To further assess the optimal choice of rank, the DIC score was computed for each level of holdout (20–60%) and rank (1–5). We found that the rank 3 model fits yielded a lower DIC than all other examined ranks across all levels of holdout. Given these results, rank 3 was selected for the full aphasia analysis, as it provided a balance of model parsimony and predictive performance.

5.3.9 Model diagnostics

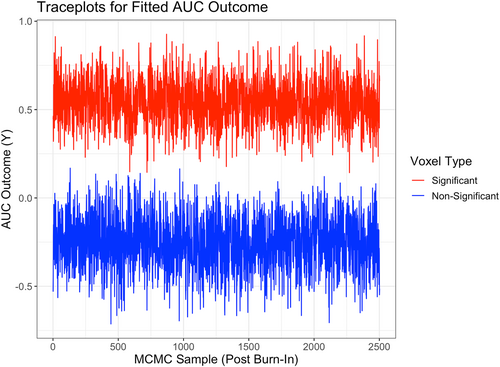

In order to further evaluate the performance and biological validity of the proposed l-BTRR when fitting to the aphasia dataset, we examined various other criteria. In particular, we looked at convergence diagnostics of the MCMC samples for the estimated model coefficients and fitted AUC outcome in Model 5 at each voxel. Figure 8 provides example traceplots of the fitted AUC outcome (using the l-BTRR method) across 2500 post-burn-in MCMC samples for two randomly selected voxels, one with significant (nonzero) fitted AUC and one with nonsignificant (zero) fitted AUC. These traceplots provide visual evidence for convergence in the MCMC chain under the l-BTRR approach. To test for convergence more rigorously, we also ran the Dickey–Fuller test (Dickey & Fuller, 1979) on each post-burn-in MCMC chain of the fitted AUC outcome, averaged across all subjects and time points. Of all the traceplots for the 30,200 non-missing voxels, 26,425 (87.5%) voxels had corresponding Dickey–Fuller test p-values of less than .05, providing evidence that these voxels were stationary. In comparison, we performed the same set of tests on the cs-BTRR model fit to the aphasia dataset and found that 27,572 voxels (91.3%) had evidence of stationarity under the cross-sectional tensor framework. The convergence rate could potentially be increased further by increasing the number of MCMC samples. Moreover, we note that simplifying the proposed l-BTRR approach by omitting model terms and fitting it as a cross-sectional model increases the efficiency of the MCMC sampler, leading to higher rates of convergence across voxels. However, the increased efficiency of the cs-BTRR MCMC algorithm comes at the cost of losing capability for individual-level inference and having worse estimation and out-of-sample prediction. Given the fact that the number of voxels with stationary traceplots did not differ drastically across methods, we conclude that the advantages of the l-BTRR method outweigh the costs in this application. The mixing of the MCMC chain also depends on additional tuning parameters, such as , which is involved in the Metropolis-Hastings step. More details regarding the choice of tuning parameters can be found in the Supplementary Materials, along with additional insights about spatial correlations in the model coefficients and fitted brain images (see Table S5, Figures S2 and S3).

6 DISCUSSION

Our analysis of data from an aphasia longitudinal neuroimaging study revealed distinct spatial patterns of neuroplasticity that may vary by treatment and/or with respect to clinical covariates. We conclude that neuroplasticity changes may consist of activity increases or decreases depending on treatment. These general findings, that brain functionality is heterogeneous across space, time, and subject, are consistent with the literature. It is also worthy of comment that long-term changes in brain activity occurred between posttreatment and 3-month follow-up fMRI. Such long-term changes occurred for both the intention and control treatments but were more pronounced in the control group. Long-term brain activity changes continuing 3 months beyond treatment might reflect the improvement in discourse output. In the short-term (posttreatment), the intention group resulted in brain activity changes whereas the control group led to decreases in brain activity. Thus, the pattern of short-term and long-term brain activity changes is fairly distinct between the treatment groups, which may potentially contribute to differences in discourse gains. Additional work is needed for a more systematic interpretation to determine the clinical significance of those brain regions that are most highly affected.

One must be careful when interpreting the meaning of the neuroimaging results from the aphasia study. Since the goal of rehabilitation is to change functional behaviors, the ultimate determination of whether changes in brain activity are adaptive, maladaptive, or neutral depends on whether they are associated with improvement in target rehabilitation language behaviors. This study focused on improving analysis techniques to determine more accurately the brain regions in which activity increased or decreased. Therefore, the implications of these changes in the light of modifications in language behaviors were beyond the scope of this article and will be considered in future work. Indeed, there is previous evidence of association between language behavior and changes in brain activity. In particular, in an analysis based on a voxel-wise approach, a rightward shift in posterior perisylvian activity from baseline to posttreatment was found to be associated with improved word retrieval only for the intention but not for the control group (Benjamin et al., 2014). Hence, the next step in our research is to incorporate language outcome data into the analysis models for neuroplasticity under the elegant framework of Bayesian tensor modeling. Such analysis will enable us to confirm/disconfirm previous findings and potentially shed light on new findings that were not discovered under a voxel-wise approach, likely due to the limitations outlined in this article.

While it is worthwhile to identify that lesion volume influences long-term plasticity, our results indicate that age is a critical factor in producing increases in brain activity. This is consistent with the observations in Ellis and Urban (2016) that participants younger than 65 have more rehabilitation potential to benefit from treatment-specific plastic changes, and those older than 65 may need more tailored and additional treatments to gain increased brain function in the long-term. Modeling clinical factors such as age, aphasia severity, and lesion volume brought out activity decreases from baseline to posttreatment. This outcome is not negative, per se. As pointed out above, its clinical significance depends on whether it is associated with desired changes in language behavior. Further, since these analyses were collapsed across the competing treatments, it is unclear how much influence each treatment had on these findings. Additionally, one emerging principle pertaining to short-term synaptic plasticity is that changes in the balance of parallel excitation and feedforward inhibition can be used for gating information flow in an activity-dependent manner (Anwar et al., 2017; Bao et al., 2010). Since excitation in a structure would tend to increase neural activity while the results of feedforward inhibition in the same structure would tend to decrease activity, this phenomenon represents a challenge for visualization and quantification using advanced neuroimaging tools. Yet, understanding these phenomena could be useful in treatment planning tailored to a participant's baseline clinical profile. This is particularly relevant given the ability to upregulate or downregulate focal brain activity with noninvasive brain stimulation techniques, which is emerging as a promising technique in aphasia treatment (see review in Crosson et al., 2019). The subject-specific neuroplasticity maps inferred under the proposed method can potentially serve as an important tool for determining such personalized treatment interventions. Finally, we note that the proposed tensor-based approach succeeds in providing accurate results for the aphasia study with a small sample size, which is encouraging and highlights the robustness of the method. We note that such small sample sizes are routinely encountered in stroke as well as brain tumor literature, which are rare disorders. The proposed approach can be generalized to other studies with image outcomes and larger sample sizes in a straightforward manner.

The proposed tensor-based approach involves a careful selection of the tensor rank using the DIC score, which is routinely used in literature. However, this strategy requires us to fit separate models for each choice of rank which can be potentially time-consuming. A possible alternative is to perform trans- dimensional MCMC where the tensor rank is learned in an adaptive manner, which would also provide uncertainty estimates about the tensor rank. This method could be potentially explored in future work. A limitation of most tensor-based approaches is that they are expected to deteriorate when the level of discontinuities becomes increasingly high or where there are sharp localized discontinuities (e.g., sparse cortical thickness images). Such applications may need higher choices of tensor rank or more specialized choices of basis functions such as wavelets.

7 CONCLUSION

In this article, we developed a novel and scalable tensor response regression approach that models voxel- level brain imaging features based on covariates of interest for longitudinal neuroimaging studies, which is designed to provide considerable advantages over routinely used voxel-wise approaches. The proposed approach is able to preserve the spatial configurations of the voxels, it accommodates heterogeneity between samples while also allowing for group-level inferences, and it is able to pool information across voxels, yielding model parsimony and improved feature selection accuracy. The importance of the tensor-based approach for the analysis of aphasia data becomes evident from superior out-of-sample predictive performance over voxel-wise methods and given the fact that the voxel-wise approach is unable to infer any significant neuroplasticity changes after multiplicity adjustments. The proposed approach should be applicable to a wide array of longitudinal imaging studies and can be used in lieu of voxel-wise analysis to produce more accurate results.

AUTHOR CONTRIBUTIONS