AI in Neurology: Everything, Everywhere, All at Once Part 1: Principles and Practice

Additional supporting information can be found in the online version of this article.

Abstract

Artificial intelligence (AI) is rapidly transforming healthcare, yet it often remains opaque to clinicians, scientists, and patients alike. This review, part 1 of a 3-part series, provides neurologists and neuroscientists with a foundational understanding of AI's key concepts, terminology, and applications. We begin by tracing AI's origins in mathematics, human logic, and brain-inspired neural networks to establish a context for its development. The review highlights AI's growing role in neurological diagnostics and treatment, emphasizing machine learning applications, such as computer vision, brain-machine interfaces, and precision care. By mapping the evolution of AI tools and linking them to neuroscience and human reasoning, we illustrate how AI is reshaping neurological practice and research. We end the review with an overview of model selection in AI and a case scenario illustrating how AI may drive precision neurological care. Part 1 sets the stage for part 2, which will focus on practical applications of AI in real-world scenarios where humans and AI collaborate as joint cognitive systems. Part 3 will examine AI's integration with extensive healthcare and neurology networks, innovative clinical trials, and massive datasets, expanding our vision of AI's global impact on neurology, healthcare systems, and society. ANN NEUROL 2025;98:211–230

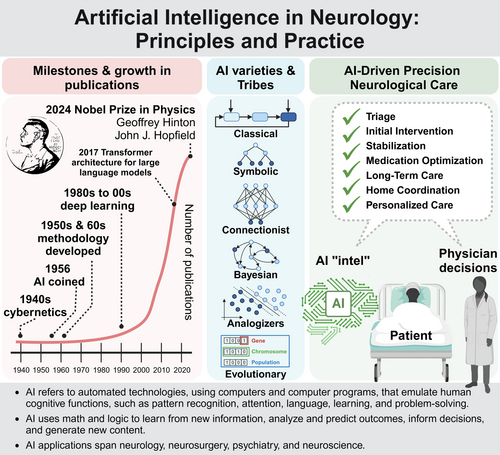

Graphical Abstract

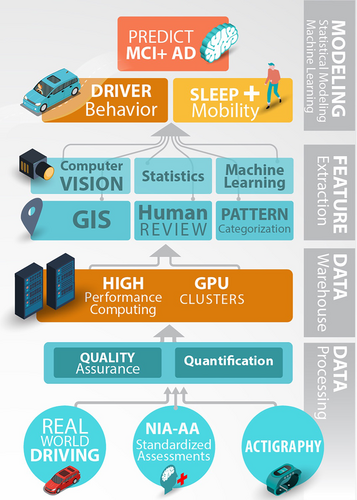

Data are the lifeblood of modern neurology practice, knowledge, and learning, and they are necessary to advance mind and brain health, care, and cures for all peoples and communities. These data are present everywhere within the “exposome,” the sum of all real-world chemical, physical, biological, behavioral, and social factors that influence human health over the lifespan.1, 2 The exposome, in turn, interacts with genetics and various “omics” biomarker data, shaping individual susceptibility, resilience, and phenotypes in neurological health and disease throughout life.

Sensors ubiquitously embedded in devices, vehicles, buildings, homes, appliances, clothing, people, and urban infrastructures—collectively part of the “internet of things”—continuously monitor performance, behavior, and physiology within varying social and environmental contexts. These real-world “digital biomarker” data can be combined with self-reports and ecological momentary assessments (experience sampling) on cognitive, affective, and conative status (related to volition and goal-directed behavior), providing unprecedented longitudinal observations of the “brain in the wild.”3

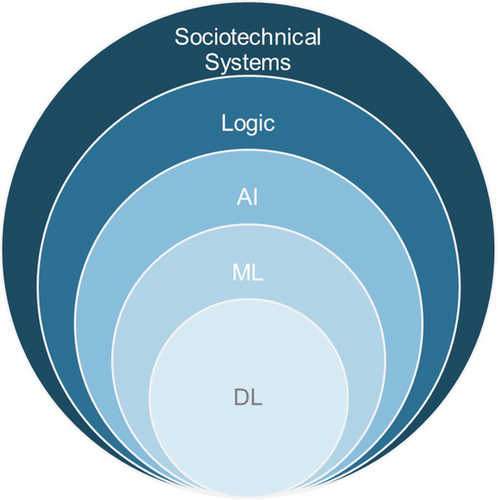

New artificial intelligence (AI) and machine learning (ML) strategies and techniques can classify, aggregate, reduce, and analyze these torrents of health informatics data to drive medical discovery.4 AI methods can help mitigate the “curse of dimensionality”5 in highly complex neurological datasets, a problem that conventional biostatistical and epidemiological methods often do not address. This review on AI in neurology outlines current applications as well as applications on the horizon, underscoring priority issues for further development. There is no universal consensus on the definitions of the terms used here, nor on how topics relate to one another conceptually and historically. No one can claim expertise across the “big bang” expanse of AI; instead, each of us must achieve proficiency (native, fluent, or conversational) in the facets most pertinent to our professional and daily lives, acting locally while thinking globally.
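One well-known symptom of the curse of dimensionality can be sketched in a few lines of Python. Assuming points drawn uniformly at random from a unit hypercube (synthetic data, not neurological measurements; the function name `distance_contrast` is hypothetical), the relative gap between a query point's nearest and farthest neighbors shrinks as the number of features grows, so the notions of “similar” and “dissimilar” cases become less informative.

```python
import math
import random


def distance_contrast(dim: int, n_points: int = 200, seed: int = 0) -> float:
    """Relative gap between the farthest and nearest neighbor of a random query point.

    Points are sampled uniformly from the unit hypercube (synthetic data).
    """
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dim)]
    distances = [
        math.dist(query, [rng.random() for _ in range(dim)]) for _ in range(n_points)
    ]
    return (max(distances) - min(distances)) / min(distances)


for dim in (2, 10, 100, 1000):
    # Values near 0 mean every point is roughly equally far from the query.
    print(dim, round(distance_contrast(dim), 3))
```

With these settings the contrast shrinks markedly as the dimension rises; dimensionality reduction and feature selection are common responses to this effect.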

Part 1 covers AI definitions, milestones, varieties, logic, biases, model selection, and various AI types, such as ML, deep learning, deep neural networks (DNNs), and generative AI (Fig 1). Part 1 also covers the proliferation of AI applications in neurology and neuroscience, including computer vision, human brain-machine interfaces, and disease prediction. Part 2 will examine real-world applications; the development of AI-enabled social and emotional robots and their looming sentience; and the social, legal, and ethical foundations of AI, including concerns about the privacy and protection of vast neurological datasets. The introduction of new tools raises deep questions about the large-scale integration of AI into many facets of society, paving the way for a discussion of sociotechnical systems in part 3 (Fig 1). A glossary spans all sections.

As we shall see, AI, combined with modern organizational principles and practices,6 ever-increasing computing power, analytic insights, and systems science, is key to neurological advances that seem to affect everything we do in neurology and healthcare, everywhere, all at once.

What Is AI?

AI refers to the ability of automated technologies, using computers and computer programs, to emulate human cognitive functions, such as learning and problem-solving. AI technologies use math and logic to learn from new information, analyze and predict outcomes from large datasets, make decisions from incomplete data using probabilistic models, and generate new content and synthetic data. AI spans a variety of application areas and tools, including ML, robotics, computer vision, natural language processing (NLP), knowledge representation, reasoning and problem solving, and expert systems that mimic human thinking in specific domains.

ML, an AI subset, develops algorithms that help computers learn from experience to make decisions. Algorithms (named after the 9th-century mathematician Al-Khwārizmī7) are sets of rules or instructions for processing data inputs to generate outputs. ML algorithms manifest as computer programs that create models by learning from data; the quality and integrity of these models depend largely on the quality of the training data.

A model is a mathematical representation that recognizes and interprets patterns in data. AI uses models to derive insights, often represented as probabilities. Probabilistic approaches include Bayesian networks (graphical models), hidden Markov models (statistical models), and Markov chain Monte Carlo methods (algorithms that sample from probability distributions).8 Mathematical models represent processes in many fields: Newton's laws describe physical phenomena, financial models predict market behaviors, and the susceptible, exposed, infected, recovered/removed (SEIR) model in epidemiology represents the spread of disease. In AI, the same algorithm can yield different models depending on the data it is trained on, demonstrating the versatility of these computational tools.

Therefore, algorithms are the procedures for processing data, whereas models are the resulting structures that encapsulate the learned knowledge from the data. Algorithms can code a Fibonacci sequence, control traffic lights, or alphabetize words in a dictionary. Models, fit by computer programs, represent a part of the world mathematically.
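The distinction between an algorithm and a model can be made concrete with a deliberately tiny example. In this sketch (plain Python; `fit_stump`, `predict`, and both datasets are hypothetical illustrations, not a method from this review), the learning algorithm is a fixed procedure that searches for a single cut point on one feature, whereas each model it returns is just the learned threshold and label. Running the same algorithm on two different datasets yields two different models.

```python
from typing import List, Tuple

# A fitted model: (threshold, label predicted for values above the threshold).
Model = Tuple[float, int]


def fit_stump(xs: List[float], ys: List[int]) -> Model:
    """Learning algorithm: try every observed value as a cut point (labels 0/1)
    and keep the threshold and orientation with the fewest training errors."""
    best: Model = (xs[0], 1)
    best_errors = len(xs) + 1
    for threshold in sorted(set(xs)):
        for above in (0, 1):
            errors = sum(
                (above if x > threshold else 1 - above) != y for x, y in zip(xs, ys)
            )
            if errors < best_errors:
                best, best_errors = (threshold, above), errors
    return best


def predict(model: Model, x: float) -> int:
    """Apply a fitted model to a new value."""
    threshold, above = model
    return above if x > threshold else 1 - above


# Same algorithm, two made-up datasets, two different models.
model_a = fit_stump([1, 2, 3, 4, 5, 6], [0, 0, 0, 1, 1, 1])  # -> (3, 1)
model_b = fit_stump([10, 20, 30, 40], [1, 1, 0, 0])          # -> (20, 0)
print(model_a, model_b, predict(model_a, 5), predict(model_b, 5))
```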

One real-world example of AI in neurology is the use of ML algorithms to analyze medical imaging data, such as magnetic resonance imaging (MRI), computed tomography (CT), and positron emission tomography (PET) scans, to identify patterns and anomalies. These algorithms train models by fitting them to large amounts of imaging data, optimizing their ability to generalize and detect clinically relevant features. Well-fitted models can enhance diagnostic accuracy, enabling AI systems to surface findings that human clinicians might miss. This can support earlier detection and more accurate diagnosis of neurological conditions, such as Alzheimer's disease, stroke, and brain tumors. Throughout this review, we explore how AI applications in neurology continue to refine patient care.

AI Milestones

John McCarthy coined the term AI at the 1956 Dartmouth Conference, seemingly marking the field's debut. However, AI is rooted in innovations dating back centuries, which arose from deeper understandings of logic, causal reasoning, mathematics, and statistics (Table 1). Nineteenth-century mathematical innovations, along with early-20th-century hypothesis testing and statistical methods, set the stage for more complex AI systems.

| Epoch/decade | Key contributions and developments |
|---|---|
| Early 19th century | Legendre9 and Gauss10 introduced regression analysis for astronomical data, a foundational method for understanding relationships between variables that later spread across scientific disciplines. |
| Mid-19th century | Cauchy11 introduced the method of steepest descent (gradient descent), foundational to optimizing ML algorithms. Boole12 published “An Investigation of the Laws of Thought,” introducing Boolean algebra, fundamental to the development of digital logic and computer science. Lovelace13 created the first algorithm intended for a machine (the Analytical Engine), contributing to early conceptualization of computer science and programming. |
| Early 20th century | Key advances in statistical methods, including hypothesis testing and “Student's” t test;14 Fisher15 expanded these with the introduction of ANOVA. Pearson24 and Hotelling25 developed principal component analysis. |
| 1940s | Emergence of cybernetics by Wiener16; McCulloch and Pitts17 advanced early models of neural networks; Hebb synapse18 of “neurons that fire together wire together.” |
| 1950s | McCarthy19 coined the term AI in 1956; Robbins and Monro20 advanced gradient descent (stochastic approximation); Rosenblatt (1958) developed the perceptron.21 |
| 1960s | Traditional computer programming methods and early AI systems like DENDRAL and MYCIN19, 22; support vector machines by Vapnik and Chervonenkis.26 |
| 1970s | Development of genetic algorithms by Holland27; popularization of clustering techniques by MacQueen28; further development of logic programming and constraint satisfaction problems. |
| 1980s | Backpropagation popularized by Rumelhart, Hinton, and Williams29; Bayesian networks by Judea Pearl23; structural causal models by Pearl.30 |
| 1990s | Ensemble learning methods like bagging and boosting; development of reinforcement learning frameworks like Q-learning and policy gradient methods31; prominence of hidden Markov models by Rabiner.32 |
| 2000s | Popularization of deep learning by LeCun, Bengio, and Hinton35; advances in differential privacy by Dwork.36 |
| 2010s | Development of variational autoencoders by Kingma and Welling37; introduction of generative adversarial networks by Goodfellow33; emergence of diffusion models34; revolution in natural language processing by attention mechanisms and transformers38, 39; introduction of frameworks like Google's AutoML40; advances in explainable AI driven by DARPA41; breakthroughs with Google AlphaGo42 and AlphaFold.43 |
| 2020s | Popularization of the term “stochastic parrots” by Bender et al44 highlighting limitations and ethical considerations of large language models; rise of AI-powered conversational agents like ChatGPT and Claude. |

- History of innovation cascades leading to AI applications, originating from deeper understandings of logic and causal reasoning, mathematics, statistics, hypothesis testing, and various ML “tribes” and other tools under the umbrella term of AI.

- Abbreviations: ANOVA = analysis of variance; AI = artificial intelligence; ML = machine learning.

In the 1940s, Norbert Wiener16 introduced cybernetics, a field crucial for understanding control systems and communication in animals and machines, significantly influencing early AI development. McCulloch and Pitts advanced early models of biologically inspired neural networks, laying foundational concepts for future AI.17 The 1950s and 1960s witnessed the development of essential AI methodologies, such as search algorithms, logic programming, and optimization techniques.

The recent AI revolution launched with the emergence of deep learning, which Hinton, LeCun, Bengio, and others had been exploring from the 1980s through the early 2000s (see “Neural Networks: History and Underpinnings” section).35, 45 A deep learning model significantly outperformed other types of models, primarily those based on support vector machines, at the 2012 ImageNet Large Scale Visual Recognition Challenge, compelling researchers to reconsider neural networks as serious contenders for building AI systems after a period of skepticism.46

Discriminative deep learning models, such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), excel at tasks such as image classification, object detection, and language translation, and have been applied with great success in, for instance, medical document and image analysis.47-50 Generative deep learning models, such as generative adversarial networks (GANs) and variational autoencoders (VAEs), learn the underlying distribution of the input data and rapidly gained popularity for their impressive outputs, such as realistic images, music, and text.51, 52

“Attention Is All You Need” by Vaswani et al38 introduced the transformer neural network architecture, which uses a mechanism called self-attention to process entire input sequences in parallel, unlike earlier models that processed data sequentially. This parallel processing capability has rapidly advanced the field of NLP and underpins large language models (LLMs) like ChatGPT, which are used in many applications, including neurology practice, research, and education. The “Stochastic Parrots” paper raised concerns about this evolution, emphasizing the ethical implications and limitations of LLMs and urging caution (see part 2).44
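The core computation in a transformer, scaled dot-product attention, is compact enough to show directly. The sketch below follows the formula softmax(QK^T / sqrt(d_k)) V from Vaswani et al, but it is a simplified single-head illustration in NumPy with random, untrained weight matrices; `self_attention` and the dimensions chosen are assumptions, and real models add multiple heads, learned parameters, positional information, and masking.

```python
import numpy as np


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over x of shape (n_tokens, d_model).

    Returns the attended outputs and the (n_tokens, n_tokens) attention weights.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v              # project all tokens at once
    scores = q @ k.T / np.sqrt(k.shape[-1])          # pairwise token affinities
    scores -= scores.max(axis=-1, keepdims=True)     # stabilize the softmax numerically
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)   # softmax: each row sums to 1
    return weights @ v, weights


rng = np.random.default_rng(0)
n_tokens, d_model, d_head = 4, 8, 8
x = rng.normal(size=(n_tokens, d_model))             # stand-in token embeddings
w_q, w_k, w_v = (rng.normal(size=(d_model, d_head)) for _ in range(3))  # untrained
out, attn = self_attention(x, w_q, w_k, w_v)
print(out.shape, attn.shape)  # (4, 8) (4, 4)
```

Every token's output is computed from all tokens at once through matrix products, which is what permits the parallel processing described above; each row of `attn` holds one token's attention weights.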

Spectacular AI innovations continue to spring from human imagination, reasoned exploration of hypothetical and counterfactual “what if” scenarios (see “AI and Causation” section), open-ended discussion, and determined experimentation. These advances are revolutionizing the neurological sciences and unlocking new insights into the complex workings of the brain and healthcare systems.

AI Varieties

AI varieties include ML and older established approaches rooted in traditional computer programming and computer science (Fig S1). ML is a prominent subset of modern AI. In “The Master Algorithm,” Domingos53 classifies ML into 5 “tribes” (symbolists, connectionists, evolutionaries, Bayesians, and analogizers), each offering unique methodologies with significant implications for neurological care, research, and education (Table 2A). Symbolists use logic and symbols to represent knowledge and make inferences, relying on inductive logic programming and other methods.65, 66 In neurology, symbolists develop diagnostic decision support systems that identify conditions based on patient symptoms and medical history (e.g., diagnosing multiple sclerosis or epilepsy).55 Connectionists, inspired by the brain's neural networks, adjust the weights of neural network connections based on input–output pairs.17, 60 This approach, which includes deep learning, effectively processes medical images, like MRI and CT scans, detecting anomalies and diagnosing tumors or strokes.
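The connectionist idea of adjusting connection weights from input–output pairs can be illustrated with a Rosenblatt-style perceptron. This is a minimal Python sketch under simple assumptions (binary labels, a toy logical-AND dataset, hypothetical function names); deep learning replaces this update rule with gradient-based training of many layers via backpropagation.

```python
from typing import List, Tuple

Sample = Tuple[List[float], int]  # (input features, target label 0 or 1)


def train_perceptron(
    samples: List[Sample], epochs: int = 20, lr: float = 1.0
) -> Tuple[List[float], float]:
    """Perceptron learning rule: nudge weights toward each misclassified example."""
    weights, bias = [0.0] * len(samples[0][0]), 0.0
    for _ in range(epochs):
        for features, target in samples:
            prediction = classify(weights, bias, features)
            error = target - prediction  # -1, 0, or +1
            weights = [w + lr * error * f for w, f in zip(weights, features)]
            bias += lr * error
    return weights, bias


def classify(weights: List[float], bias: float, features: List[float]) -> int:
    """Fire (output 1) only if the weighted input sum plus bias exceeds zero."""
    return 1 if sum(w * f for w, f in zip(weights, features)) + bias > 0 else 0


# Toy input-output pairs: logical AND, which is linearly separable.
and_data = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
w, b = train_perceptron(and_data)
print([classify(w, b, x) for x, _ in and_data])  # [0, 0, 0, 1]
```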

(A) Five tribes of AI

| Tribe | Key algorithmic methods | Neurology applications |
|---|---|---|
| Symbolic | Decision trees, rule-based systems, knowledge graphs, inverse deduction, random forest | Diagnostic decision support systems for diagnosing diseases like multiple sclerosis or epilepsy55 |
| Connectionist | Artificial neural networks, deep learning, convolutional neural networks, backpropagation | Processing medical images (MRI, CT scans), detecting anomalies, diagnosing tumors or strokes56 |
| Evolutionary | Genetic algorithms, evolutionary strategies, genetic programming | Customizing treatment plans, for example, optimizing spinal cord stimulation for treating chronic pain57 |
| Bayesian | Bayesian networks, Bayesian optimization, Gaussian processes, Bayesian inference | Probabilistic reasoning for patient diagnosis and treatment, predicting disease progression58 |
| Analogizers | Case-based reasoning, analogical reasoning, similarity-based learning, support vector machines | Classifying neurological conditions, early detection of rare diseases, Alzheimer's disease, seizure classification in epilepsy59 |

(B) Classical AI tools

| Technique | Algorithms | Description and/or neurology applications |
|---|---|---|
| Expert systems (1960s) | Rule-based algorithms, inference engines | Use rules and logic to mimic human decision-making,22 aiding in diagnosing diseases like multiple sclerosis or epilepsy |
| Search algorithms (1950s) | Breadth-first search, depth-first search, A* algorithm, Dijkstra's algorithm | Navigate problem spaces to find optimal solutions,60 such as surgical paths or neuroimaging data analysis |
| Logic programming (1970s) | Resolution, unification, backtracking | Infers conclusions from rules and facts,54 modeling neurological knowledge for diagnosis based on symptoms and medical history |
| Optimization algorithms (1950s) | Genetic algorithms, simulated annealing, particle swarm optimization, gradient descent | Identify the best solutions among many possibilities,27 like optimizing treatment plans for neurological disorders |
| Symbolic AI (1950s) | Semantic networks, knowledge representation frameworks, ontology-based algorithms | Manipulates symbols for tasks, such as natural language processing of neurological research literature,61 including semantic networks and knowledge graphs |
| Planning (1960s) | STRIPS, partial-order planning, hierarchical task network planning | Creates action sequences to achieve goals,62 such as automated systems planning patient care pathways or rehabilitation protocols |
| Rule-based systems (1970s) | Forward chaining, backward chaining | Derive conclusions or perform actions using “if-then” rules,63 as seen in clinical decision support systems guiding diagnoses and treatments |
| Constraint satisfaction problems (1970s) | Backtracking, constraint propagation, local search algorithms, arc consistency algorithms | Solve issues defined by constraints,64 such as scheduling patient appointments or resource allocation in neurology departments |

- This table highlights foundational AI methods and their applications in neurology. (A) Five tribes of machine learning with key algorithmic methods and neurology applications.53, 54 (B) Classical AI tools from traditional computer science, detailing their introduction dates, algorithms, descriptions, and neurology applications.

- Abbreviations: AI = artificial intelligence; CT = computed tomography; MRI = magnetic resonance imaging; STRIPS = Stanford Research Institute Problem Solver.

Evolutionaries use algorithms inspired by natural selection, such as genetic algorithms, to optimize solutions. In neurology, these methods customize treatment plans and personalize therapy based on genetic and clinical data27, 67 (e.g., optimizing treatment for chronic pain).57 Bayesians apply Bayes' theorem to update the probability of hypotheses and model parameter estimates as more evidence becomes available. Bayesian methods are useful in neurology for probabilistic reasoning in diagnosis and treatment, incorporating prior knowledge and new evidence to predict disease progression and treatment efficacy.30, 68 Analogizers learn by drawing analogies between similar instances, using techniques like support vector machines and nearest neighbor algorithms.26, 108 Analogizers classify neurological conditions based on similarities with previously diagnosed cases, aiding in the early detection of neurological diseases.
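The analogizer strategy of classifying a new case by its similarity to previously labeled cases can be sketched as a k-nearest-neighbor classifier. Everything here is illustrative: the two-feature vectors, the labels “A” and “B,” and the function name `knn_classify` are hypothetical, Euclidean distance is an assumed similarity metric, and the features are assumed to be on comparable scales.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

Case = Tuple[Sequence[float], str]  # (feature vector, known label)


def knn_classify(past_cases: List[Case], new_case: Sequence[float], k: int = 3) -> str:
    """Label a new case by majority vote among its k most similar (closest) past cases."""
    nearest = sorted(past_cases, key=lambda case: math.dist(case[0], new_case))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Entirely made-up, pre-scaled feature vectors (e.g., two normalized test scores).
library = [
    ((0.10, 0.20), "A"),
    ((0.20, 0.10), "A"),
    ((0.15, 0.25), "A"),
    ((0.80, 0.90), "B"),
    ((0.90, 0.80), "B"),
    ((0.85, 0.95), "B"),
]
print(knn_classify(library, (0.20, 0.20)))  # A
print(knn_classify(library, (0.75, 0.85)))  # B
```

In practice, the choice of features, their scaling, the distance metric, and k all strongly affect which past cases count as “similar.”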

Whereas Domingos classifies ML into schools of thought, LeCun et al35 focus on how ML algorithms learn from data or interactions, distinguishing supervised, unsupervised, and reinforcement learning; these paradigms are particularly suited to DNNs, whose multiple layers model complex patterns and representations in data (see “Artificial Neural Network Anatomy and Applications” section). Russell and Norvig60 and Poole and Mackworth67 provide broader taxonomies and additional foundational perspectives on ML/AI (Table S1).

Several key AI approaches predate ML, including expert systems, search algorithms, logic programming, optimization algorithms, symbolic AI, planning, rule-based systems, and constraint satisfaction problems (Table 2B). Classical approaches often complement newer AI paradigms, like ML and deep learning, enhancing robustness, interpretability, and effectiveness in solving contemporary problems in neurology and other fields.60, 61, 69 Researchers combine approaches to create hybrid or ensemble models, improving their ability to diagnose, treat, and manage neurological conditions. Applications span NLP, planning and scheduling, predictive analytics, computer vision, robotics, speech processing, and more.

Although these applications demonstrate AI's current capabilities, its foundations in computer science and mathematics are equally important for understanding its limitations and potential in neurology. For instance, the “polynomial time (P) vs nondeterministic polynomial time (NP)” problem70 is a fundamental open question in AI and computer science: it asks whether every problem whose solution can be verified efficiently can also be solved efficiently. The relevance of this problem becomes evident when tackling the computational challenges posed by large and complex datasets in neurology. These datasets or “data lakes” (discussed in part 3) are being aggregated by nationwide consortia focused on intensively studied neurologic conditions like stroke, Parkinson's disease, Alzheimer's disease, and related disorders. Currently, AI addresses such complex problems through approximations and heuristics, which are often effective but not guaranteed to find optimal solutions. Advances like quantum computing may one day accelerate some of these computations, unlocking new possibilities for neurology, such as personalized medicine and complex disease modeling, although faster hardware alone would not settle the “P vs NP” question, which is a mathematical one.
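The asymmetry at the heart of “P vs NP” can be seen in subset sum, a classic NP-complete problem: given a list of numbers, does some subset add up to a target? In this Python sketch (function names hypothetical), checking a proposed answer takes a single pass, whereas the naive search may inspect all 2^n subsets. Cleverer algorithms (for example, dynamic programming when the numbers are small integers) beat brute force, but no polynomial-time algorithm is known for the general problem.

```python
from itertools import combinations
from typing import List, Optional, Sequence


def verify(numbers: Sequence[int], indices: Sequence[int], target: int) -> bool:
    """Check a proposed certificate in one pass: distinct valid indices summing to target."""
    return (
        len(set(indices)) == len(indices)
        and all(0 <= i < len(numbers) for i in indices)
        and sum(numbers[i] for i in indices) == target
    )


def solve(numbers: Sequence[int], target: int) -> Optional[List[int]]:
    """Naive search: may examine all 2**n subsets before answering."""
    for size in range(len(numbers) + 1):
        for indices in combinations(range(len(numbers)), size):
            if sum(numbers[i] for i in indices) == target:
                return list(indices)
    return None


numbers = [3, 34, 4, 12, 5, 2]
answer = solve(numbers, 9)                 # -> [2, 4], i.e., 4 + 5
print(answer, verify(numbers, answer, 9))  # [2, 4] True
```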

Many of the scientific underpinnings of AI, such as linear algebra, calculus, probability, and statistics, likely align with neurologists' academic backgrounds. Ananthaswamy's “Why Machines Learn”71 offers accessible explanations of how these principles underpin ML and contrasts its data-driven learning approach with traditional rules-based computer programming.60

AI and Laws of Thought

AI, like neurology and neuroscience, is deeply rooted in philosophy, psychology, and logic, leveraging deductive, inductive, and abductive reasoning. These forms of reasoning, integral to human practitioners and AI systems dedicated to advancing neurological care, education, and research, deserve greater understanding and critical review to maximize their potential.

Deductive reasoning draws conclusions that are certain if the premises are true. The classic syllogism runs: “All men are mortal. Socrates is a man. Therefore, Socrates is mortal.” Descartes famously stated, “Cogito ergo sum” (I think, therefore I am). Although central to mathematical proofs, deduction is not the primary mode for subtle diagnostics, which often require a blend of reasoning methods.
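Because deduction guarantees its conclusion whenever the premises hold, it can be checked mechanically. As a minimal sketch, the Socrates syllogism can be written in the Lean 4 proof assistant; the type `Person` and the predicates `Man` and `Mortal` are hypothetical placeholders introduced only for this illustration.

```lean
-- Hypothetical domain of persons with two predicates.
variable {Person : Type} (Man Mortal : Person → Prop)

-- Premise 1: all men are mortal. Premise 2: Socrates is a man.
-- Conclusion: Socrates is mortal; the proof applies premise 1 to premise 2.
theorem socrates_is_mortal (socrates : Person)
    (all_men_mortal : ∀ x, Man x → Mortal x)
    (socrates_is_man : Man socrates) : Mortal socrates :=
  all_men_mortal socrates socrates_is_man
```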

Induction generalizes from specific observations to generate probabilistic conclusions. David Hume argued that inductive reasoning arises from custom and habit, but that we can never truly know if one event causes another.72 Karl Popper asserted that induction has no place in the logic of science.73 Nevertheless, neuroscientists apply induction to generate hypotheses they test by experimentation, which can be rejected but never fully confirmed. Induction's limitation, the empirical constraint, can lead to flawed conclusions from novel encounters. For example, a neurologist might misdiagnose rare disorders as common conditions based on past experiences—a “black swan” scenario.74 Similarly, an AI algorithm relying on typical cases might miss atypical situations, akin to Russell's inductivist turkey, which, being fed every day, failed to anticipate its eventual slaughter. Dealing with ambiguous or unusual neurological presentations benefits from an open mindset, intellectual integrity, avoiding inductive biases, and exploring alternative hypotheses through abductive reasoning.75, 76

Abduction, a term introduced by Charles Sanders Peirce, infers preconditions from consequences, making educated guesses about causes.77 Abduction is crucial for diagnosing complex neurological disorders by forming hypotheses to explain atypical symptoms that do not fit known patterns. This requires synthesizing information, considering multiple explanations, and using deep neurological knowledge. Abduction helps identify faults or factors that likely cause problems, refined through successive observations, following the principles of probabilistic inference of Reverend Thomas Bayes (b. 1701, P = 0.75, as the data show).78 Human experts' abductive reasoning currently surpasses that of AI, which excels at pattern recognition but struggles with nuanced reasoning and creative problem-solving in complex cases.
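The Bayesian refinement of a diagnostic hypothesis through successive observations can be sketched numerically. All probabilities below are invented for illustration, the hypothesis labels are hypothetical, and the two findings are assumed to be conditionally independent given each hypothesis; real diagnostic reasoning involves many more hypotheses and dependencies.

```python
from typing import Dict


def bayes_update(prior: Dict[str, float], likelihood: Dict[str, float]) -> Dict[str, float]:
    """Posterior P(H | E) = P(E | H) * P(H) / P(E), computed over all hypotheses H."""
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    evidence = sum(unnormalized.values())  # P(E), the normalizing constant
    return {h: p / evidence for h, p in unnormalized.items()}


# Invented prior: the presentation is usually explained by a common condition.
belief = {"rare_disorder": 0.01, "common_condition": 0.99}

# Finding 1: P(finding | hypothesis), much more likely under the rare disorder.
belief = bayes_update(belief, {"rare_disorder": 0.90, "common_condition": 0.05})
print({h: round(p, 3) for h, p in belief.items()})
# {'rare_disorder': 0.154, 'common_condition': 0.846}

# Finding 2, assumed conditionally independent of finding 1 given the hypothesis.
belief = bayes_update(belief, {"rare_disorder": 0.80, "common_condition": 0.10})
print({h: round(p, 3) for h, p in belief.items()})
# {'rare_disorder': 0.593, 'common_condition': 0.407}
```

Even with a 1% prior, two findings that are much more likely under the rare disorder raise its posterior probability to about 59%, a numerical counterpart to keeping rare explanations in view.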

AI's logical reasoning capacities are advancing through ML research and deeper explorations of causal inference (see “AI and Causation” section). For example, case-based reasoning systems could improve AI's abductive capabilities by storing libraries of past cases and using similarity metrics to retrieve the most relevant examples for new problems.79, 80 Approaches that combine neural networks with symbolic logic are being investigated to integrate pattern recognition with explicit reasoning.81 AI tools assist neurological diagnosis, for example, by analyzing brain scans for abnormalities, and integrating ML with symbolic approaches can enhance diagnostic reasoning.81, 82 However, these tools tend to focus on specific, narrow tasks rather than holistic clinical reasoning.

AI is increasingly valuable for augmenting neurologists' reasoning, especially in complex cases requiring extensive information synthesis. Human expertise is crucial for supervising AI and contextualizing its insights to enhance diagnosis and patient care. Key challenges include handling heterogeneous clinical data, modeling uncertainty, promoting generalizability, avoiding overfitting, incorporating background knowledge, improving efficiency, and ensuring transparency and explainability to foster clinician trust. Understanding and mitigating AI biases in reasoning are fundamental to all applications.83

AI and Bias in Logical Reasoning

Bias in logical reasoning challenges humans and AI. Logic helps human brains and AI explain complex, ambiguous data and situations. Explanatory models start from hunches and rely on assumptions that may never be proven.84

Beliefs affect human interpretations and conclusions based on facts and evidence. Confirmation bias (Fig S2) is the tendency to seek and prefer information that supports our beliefs while ignoring contradictory data.76 Understanding how emotions bias us is crucial for logical decision-making and civil argumentation.85 Recognizing our belief systems and mitigating biases, such as the notion that AI is dangerous, is essential.86, 87

AI can develop biases (Table S2), even without human emotion or investment in beliefs, because of poor training data or unsound assumptions. Obermeyer et al88 highlighted significant racial bias in a widely used healthcare algorithm that used healthcare costs as a proxy for health needs, an issue relevant to neurology. Understanding and addressing these biases is crucial for developing fair and equitable AI systems.
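One simple, widely used check for this kind of problem is to compare a model's error rates across subgroups. The sketch below computes sensitivity (the true-positive rate) per group from hypothetical records; the group names and data are invented, the function name is hypothetical, and this is not the analysis performed by Obermeyer et al. A gap like the one shown would prompt closer review of the training data, labels, and assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def sensitivity_by_group(records: Iterable[Tuple[str, int, int]]) -> Dict[str, float]:
    """records: (group, true_label, predicted_label), where 1 means the condition is present.

    Returns the true-positive rate (sensitivity) within each group.
    """
    detected: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, truth, prediction in records:
        if truth == 1:
            positives[group] += 1
            if prediction == 1:
                detected[group] += 1
    return {g: detected[g] / positives[g] for g in positives}


# Hypothetical audit data: same number of true cases, very different detection.
records = [
    ("group_1", 1, 1), ("group_1", 1, 1), ("group_1", 1, 1), ("group_1", 1, 0), ("group_1", 0, 0),
    ("group_2", 1, 1), ("group_2", 1, 0), ("group_2", 1, 0), ("group_2", 1, 0), ("group_2", 0, 0),
]
print(sensitivity_by_group(records))  # {'group_1': 0.75, 'group_2': 0.25}
```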

AI and Causation

Understanding principles of causal inference is central to neurology and AI, enabling researchers and clinicians to identify true cause-and-effect relationships in complex neurological systems, model brain function more accurately, deepen understanding of disease mechanisms, enhance diagnostic tools, and develop effective personalized treatments and public health strategies.

Pearl and Mackenzie89 envisioned a 3-rung ladder of causation, essentially “seeing, doing, imagining,” which frames our understanding of AI capabilities and neurological applications (Fig S3). The first rung involves learning by association, a capability of current AI and most animals. The second rung pertains to learning the effects of specific actions, like how babies acquire causal knowledge. The top rung, counterfactual learners, can imagine worlds that do not exist and infer reasons for observed phenomena, pondering “What if?” and “If only!” Capable AI should reason at all levels.

AI-emulated deductive, inductive, and abductive reasoning (see “AI and Laws of Thought” section) maps onto Pearl's ladder (Table S3). Deduction derives causal relationships from assumptions or causal models,90 fitting at the lower rungs. Induction falls in the middle, generating causal models to learn from data and make predictions. Abduction occupies the top rung, developing and refining causal models, including counterfactual propositions and hypotheses.

Classical statistics, which predate and contribute to AI (see “AI and Synergies with Biostatistics” section), can also be contextualized within Pearl's ladder of causation. At the bottom rung, classical statistical analyses estimate parameters of a distribution from samples to infer associations among variables91 (e.g., correlation, linear regression, and risk ratios); these generally do not address causal concepts except in settings like randomized clinical trials. Methods like instrumental variables, propensity score matching, and structural equation models92-95 advance the framework to the middle rung. The counterfactual top rung tackles complex causal questions, such as “Does traumatic brain injury cause Alzheimer's disease?” (see example below), which require sophisticated tools and methodologies that extend beyond simple associations. Causal analyses infer probabilities under changing conditions, going beyond methods that rely solely on the joint distribution of observed variables, such as correlation or regression. Causal concepts at the upper rungs of Pearl's ladder require an understanding of interventions and dynamic changes that basic statistical methods at the lowest rung do not capture (Data S1).91
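The gap between the bottom two rungs can be demonstrated with a small simulation. Under an assumed, purely hypothetical structural model, a common cause Z raises the chance of both an exposure X and an outcome Y, while X itself has no effect on Y. Passively observing the data (rung 1) shows a strong association between X and Y; intervening by assigning X at random (rung 2, Pearl's do-operation) reveals no effect. The function name and all probabilities are illustrative.

```python
import random


def risk_difference(n: int, intervene: bool, seed: int = 0) -> float:
    """Estimate P(Y=1 | X=1) - P(Y=1 | X=0) from simulated data.

    Hypothetical structural model: Z -> X and Z -> Y, with no arrow from X to Y.
    With intervene=True, X is assigned by a fair coin (do(X)), cutting the Z -> X link.
    """
    rng = random.Random(seed)
    outcomes = {0: [0, 0], 1: [0, 0]}  # x -> [count with Y=1, total count]
    for _ in range(n):
        z = rng.random() < 0.5                          # common cause
        p_x = 0.5 if intervene else (0.8 if z else 0.2)
        x = int(rng.random() < p_x)
        y = int(rng.random() < (0.7 if z else 0.1))     # Y depends on Z only
        outcomes[x][0] += y
        outcomes[x][1] += 1
    return outcomes[1][0] / outcomes[1][1] - outcomes[0][0] / outcomes[0][1]


print(round(risk_difference(100_000, intervene=False), 2))  # rung 1: seeing
print(round(risk_difference(100_000, intervene=True), 2))   # rung 2: doing
```

Under this model the expected observational difference is 0.58 - 0.22 = 0.36, produced entirely by Z, whereas the expected interventional difference is 0; the simulated estimates scatter slightly around these values.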

Although Pearl's framework helps explain AI capabilities, emerging AI tools are bridging gaps across Pearl's ladder, creating a cycle of progress. AI-driven causal algorithms are transforming neurological diagnosis, care, and treatment by clarifying causal relationships among key factors, such as genetics, environment, and lifestyle, across a patient's lifespan. These tools are helping to unravel the complexities of Alzheimer's96 and Parkinson's97 diseases. Bayesian causal models98 and probabilistic causal reasoning improve inferences despite measurement errors or incomplete patient histories,99 enhancing diagnostic accuracy. Causal transfer and generalization methods enhance model adaptability across diverse patients and populations. Causal models support better decision-making about treatment options and lifestyle interventions (such as critical care strategies83, 100) and inform public health policies to preserve cognitive function.

Modern neuroepidemiology often combines emerging AI tools and advanced causal reasoning, such as Pearl's approach, with traditional heuristics, like Hill's criteria, to rigorously assess potential causal relationships. Neurologists may recall Sir Austin Bradford Hill's principles of causality, which include strength, consistency, specificity, temporality, biological gradient, plausibility, coherence, experimental evidence, and analogy.101 These principles assess causality without formal modeling, as exemplified by studies linking tobacco to cancer.102 However, their flexibility can lead to subjective interpretations, particularly regarding specificity. Single causes can exert multiple effects, and single effects may arise from multiple causes, complicating the isolation of clear causal pathways. In neurology, for example, Hill's criteria can explore associations between traumatic brain injury and Alzheimer's disease. Although strength, consistency, temporality, and experimental evidence are relevant, specificity is less critical because of traumatic brain injury's multiple potential outcomes and complex causal pathways. In contrast, Pearl's approach offers deeper insights by modeling interventions and exploring hypothetical outcomes through mathematical and graphical tools, like Bayesian networks and do-calculus. This allows a more rigorous analysis of causality, surpassing the observational focus of Hill's criteria.
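One result that do-calculus yields is Pearl's back-door adjustment formula, which states when an interventional quantity can be computed from observational data. If a set of measured covariates Z blocks every back-door path from exposure X to outcome Y and contains no descendant of X, then the first expression below holds; the second is the purely observational quantity, for comparison:

$$
P\big(Y = y \mid \mathrm{do}(X = x)\big) = \sum_{z} P(Y = y \mid X = x, Z = z)\, P(Z = z)
$$

$$
P(Y = y \mid X = x) = \sum_{z} P(Y = y \mid X = x, Z = z)\, P(Z = z \mid X = x)
$$

The two differ only in weighting each stratum by P(Z = z) rather than P(Z = z | X = x). In the traumatic brain injury example, X would be injury, Y Alzheimer's disease, and Z a hypothetical set of confounders such as age; whether such a set is sufficient is an assumption that must be justified, not something the formula checks.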

AI techniques enhance both Pearl's and Hill's frameworks. For Hill's criteria, methods like supervised learning, regression, meta-analysis algorithms, clustering, temporal models, graphical models, simulation models, and knowledge graphs contribute to various aspects of causal assessment. Pearl's approach benefits from AI-driven pattern identification, reinforcement learning, and causal inference algorithms for interventions, and from counterfactual models for scenario simulation. Together, these AI integrations make causal analysis more precise and comprehensive, yielding a clearer understanding of complex neurological relationships. Causal inference about the effects of interventions from observational studies is a critical topic in healthcare.103 Bareinboim et al104 discuss how integrating causal reasoning into AI systems can enhance fairness and transparency.

AI and Synergies with Biostatistics

AI tools and classical statistics are increasingly intertwined, complementing each other to greatly benefit the neurological sciences (Table S4). Neurologists and neuroscientists have traditionally collaborated with biostatisticians to design studies and analyze data, including prespecified statistical plans, hypotheses, outcome and control variables, methods, and data presentation to avoid “fishing” and to mitigate false-positive findings.105

Classical statistical methods assume a probabilistic data model relating predictors to outcomes, an approach known as the “Data Modeling Culture.”106 Model validation is typically performed with goodness-of-fit tests, residual analysis, and hypothesis testing. These methods embody George Box's adage, “All models are wrong, but some are useful,” which applies equally to AI. For example, in a study of how age predicts cognitive levels in elderly individuals, a simple linear regression model might capture the essence of the data over certain age ranges (Pearl level 1). Interpreting the results can be straightforward, such as the average change in cognition per year of age. However, a linear relationship may be too simple for accurate prediction or extrapolation. Additionally, the model assumes normally distributed residuals, which strictly cannot hold unless the cognitive metric is unbounded.
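The age and cognition example can be sketched as a simple linear regression. The data below are simulated under assumed values (ages 65 to 90, a hypothetical cognitive score declining by 0.2 points per year, Gaussian noise), so the numbers carry no clinical meaning. The fitted slope is read directly as the average change in cognition per year of age.

```python
import numpy as np

rng = np.random.default_rng(1)
age = rng.uniform(65, 90, size=500)
# Hypothetical generating model: the score falls 0.2 points per year after age 65.
score = 29.0 - 0.2 * (age - 65) + rng.normal(0.0, 1.5, size=500)

# np.polyfit returns coefficients from the highest degree down: [slope, intercept].
slope, intercept = np.polyfit(age, score, deg=1)
print(f"estimated change per year of age: {slope:.3f}")

# Residuals should be inspected rather than assumed normal; a real bounded score
# (e.g., one capped at a ceiling) cannot have exactly normal residuals.
residuals = score - (slope * age + intercept)
print(f"residual SD: {residuals.std(ddof=2):.2f}")
```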

High-dimensional data, common in neuroscience, often overwhelm conventional statistical methods.107 Classical methods like regression analysis and survival analysis can struggle with the complexity and volume of modern neurological data sets. The K-nearest neighbors (KNN) algorithm,108 a simple yet powerful non-parametric method, bridges the domains of statistics, geometry, and ML. However, KNN faces challenges in high-dimensional spaces, where distance metrics such as Euclidean distance (the straight-line distance between points) or Manhattan distance (the sum of absolute coordinate differences, like walking along Manhattan's street grid) lose their discriminative power because of the aforementioned “curse of dimensionality.” In these spaces, data points tend to become nearly equidistant, making it difficult for KNN to distinguish near neighbors from far ones. This issue arises in brain imaging applications, such as functional MRI or diffusion tensor imaging analysis of Alzheimer's disease, where the complexity of the data requires advanced AI techniques to extract meaningful patterns and predict disease progression.
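The loss of discriminative power can be seen in a small experiment. The sketch below assumes points drawn uniformly from a unit hypercube (an illustrative choice, not neuroimaging data) and reports the relative contrast, (farthest − nearest) / nearest distance from a random query point. As the dimension grows, this ratio shrinks toward zero, which is the sense in which points become nearly equidistant. A minimal KNN classifier is included to show that the method depends entirely on these distances.

```python
import numpy as np


def relative_contrast(dim, metric="euclidean", n_points=500, seed=0):
    """(max distance - min distance) / min distance from a random query to
    random points in the unit hypercube; small values mean points look equidistant."""
    rng = np.random.default_rng(seed)
    points = rng.random((n_points, dim))
    query = rng.random(dim)
    diff = points - query
    if metric == "euclidean":
        dists = np.sqrt((diff ** 2).sum(axis=1))
    elif metric == "manhattan":
        dists = np.abs(diff).sum(axis=1)   # sum of absolute coordinate differences
    else:
        raise ValueError(f"unknown metric: {metric}")
    return (dists.max() - dists.min()) / dists.min()


def knn_predict(train_x, train_y, query, k=5):
    """Majority vote among the k nearest training points (Euclidean distance)."""
    dists = np.sqrt(((train_x - query) ** 2).sum(axis=1))
    nearest = np.argsort(dists)[:k]
    return np.bincount(train_y[nearest]).argmax()


for dim in (2, 10, 100, 1000):
    print(dim,
          round(relative_contrast(dim), 2),
          round(relative_contrast(dim, metric="manhattan"), 2))
```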

Statistical approaches are evolving alongside AI to tackle the “curse of dimensionality.” Techniques such as the least absolute shrinkage and selection operator (LASSO) have become routine for analyzing high-dimensional data without preliminary dimension reduction.109 Adaptive modeling and data assimilation techniques can enhance model accuracy in complex systems.110 Smoothed online learning tools111 can manage high-dimensional data in real time, augmenting traditional statistical methods.
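A minimal sketch of LASSO, fit here by cyclic coordinate descent, which is one standard solver (the text does not specify an algorithm). It minimizes (1/2n)‖y − Xβ‖² + λ‖β‖₁ on simulated data with more predictors than observations (200 versus 100), where only 3 hypothetical predictors truly matter. The L1 penalty drives most other coefficients exactly to zero, so variable selection happens inside the fit rather than in a separate dimension-reduction step. For real analyses, a tested library implementation would be preferable to this illustration.

```python
import numpy as np


def soft_threshold(value, threshold):
    """Shrink toward zero by `threshold`; values within +/-threshold become exactly 0."""
    return np.sign(value) * max(abs(value) - threshold, 0.0)


def lasso_coordinate_descent(X, y, lam, n_iter=200):
    """Minimize (1 / 2n) * ||y - X beta||^2 + lam * ||beta||_1 by cyclic coordinate descent."""
    n, p = X.shape
    beta = np.zeros(p)
    col_scale = (X ** 2).sum(axis=0) / n
    residual = y - X @ beta
    for _ in range(n_iter):
        for j in range(p):
            residual += X[:, j] * beta[j]       # remove feature j's current contribution
            rho = X[:, j] @ residual / n        # correlation of feature j with partial residual
            beta[j] = soft_threshold(rho, lam) / col_scale[j]
            residual -= X[:, j] * beta[j]       # add back the updated contribution
    return beta


rng = np.random.default_rng(0)
X = rng.standard_normal((100, 200))             # more predictors than observations
true_beta = np.zeros(200)
true_beta[:3] = [3.0, -2.0, 1.5]                # hypothetical: only 3 predictors matter
y = X @ true_beta + rng.normal(0.0, 0.5, size=100)

beta = lasso_coordinate_descent(X, y, lam=0.2)
print("nonzero coefficients:", np.flatnonzero(beta))
```

The penalty strength `lam` is itself a hyperparameter; in practice it would be chosen by cross-validation rather than fixed as here.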

The “Algorithmic Modeling Culture” uses AI tools that allow the relationship between predictors and outcomes to be complex and unknown.112 Whereas classical statistical tools generally handle relatively static datasets, AI can handle high-dimensional, dynamic, time-sensitive datasets that continuously evolve, discovering patterns that conventional methods might miss. Nevertheless, core AI practices, such as model training, testing, and validation, depend on classical statistics. In turn, AI tools enhance the entire data workflow (Fig 2), and their outputs can serve as inputs to these statistical methods (Fig S4). Models are validated by their predictive accuracy, preferably on test datasets not used to build and train the model. However, overfitting remains a key challenge in neuroscience AI applications.

Overfitting occurs when a model performs well on training data but poorly on new, unseen data because it has fit the noise and random fluctuations in the training set in addition to the genuine underlying patterns (Fig S5). This often results from overparameterization (i.e., the model has more parameters than necessary to fit the data), which gives it excessive flexibility to capture specific anomalies rather than general patterns (see “Artificial Neural Network Anatomy and Applications” section). Interestingly, the human brain learns efficiently from limited examples, suggesting it possesses inductive biases (built-in tendencies to favor certain solutions) that help avoid the pitfalls of overparameterization often seen in AI models.113 By studying these inductive biases, researchers may develop AI models that generalize better from small datasets, improving performance and robustness.
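Overfitting from overparameterization can be illustrated with polynomial regression. In the sketch below (a toy setup with an assumed sine-shaped signal and hypothetical noise level), a degree-14 polynomial has 15 coefficients, enough to pass through all 15 training points. Its training error is therefore essentially zero, but it oscillates between points and errs badly on held-out data, whereas a cubic fits the training set less tightly and generalizes better.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(2)


def true_signal(x):
    return np.sin(2 * np.pi * x)


# Hypothetical small training set (15 evenly spaced points) and a larger held-out test set.
x_train = np.linspace(0, 1, 15)
y_train = true_signal(x_train) + rng.normal(0, 0.2, size=x_train.size)
x_test = rng.uniform(0, 1, size=500)
y_test = true_signal(x_test) + rng.normal(0, 0.2, size=x_test.size)

results = {}
for degree in (3, 14):  # degree 14 has 15 coefficients: one per training point
    model = Polynomial.fit(x_train, y_train, deg=degree)
    train_mse = np.mean((model(x_train) - y_train) ** 2)
    test_mse = np.mean((model(x_test) - y_test) ** 2)
    results[degree] = (train_mse, test_mse)
    print(f"degree {degree:2d}: train MSE {train_mse:.4f}, test MSE {test_mse:.4f}")
```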

Useful models must generalize well to new data, such as data from different patients or brain scans. Techniques like cross-validation (e.g., k-fold or leave-one-out) robustly assess model performance and mitigate overfitting risks. Additional methods, such as early stopping, data augmentation, ensemble methods, dropout, and regularization, enhance model robustness and generalization.114 Transfer learning allows models to leverage knowledge from other tasks, using pre-trained networks fine-tuned for specific applications, reducing the risk of overfitting and improving generalization.113
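k-fold cross-validation can be sketched in a few lines. The data are shuffled and split into k folds; each fold is held out once while the model is fit on the rest, and the held-out errors are averaged. Here it is used, as an illustrative assumption, to choose a polynomial degree for simulated data; setting k equal to the number of samples gives leave-one-out cross-validation.

```python
import numpy as np
from numpy.polynomial import Polynomial


def k_fold_indices(n, k, seed=0):
    """Shuffle indices 0..n-1 and split them into k nearly equal folds."""
    rng = np.random.default_rng(seed)
    return np.array_split(rng.permutation(n), k)


def cross_validated_mse(x, y, degree, k=5):
    """Average held-out mean squared error over the k folds."""
    folds = k_fold_indices(len(x), k)
    fold_errors = []
    for i, held_out in enumerate(folds):
        train = np.concatenate([f for j, f in enumerate(folds) if j != i])
        model = Polynomial.fit(x[train], y[train], deg=degree)
        fold_errors.append(np.mean((model(x[held_out]) - y[held_out]) ** 2))
    return float(np.mean(fold_errors))


rng = np.random.default_rng(3)
x = rng.uniform(0, 1, size=60)
y = np.sin(2 * np.pi * x) + rng.normal(0, 0.2, size=60)   # hypothetical data

cv_scores = {d: cross_validated_mse(x, y, d) for d in (1, 3, 5, 9)}
best_degree = min(cv_scores, key=cv_scores.get)
print(cv_scores, "-> selected degree", best_degree)
```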

Underfitting occurs when the model is too simple to learn patterns in the data, resulting in poor performance on both training and new data. This can be mitigated by increasing model parameters and extending training.115
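The second remedy, extending training, can be seen in a model trained by gradient descent. In this sketch (hypothetical linear data, full-batch gradient descent on mean squared error), stopping after 2 epochs leaves the model underfit with a high training error. Continuing for 2,000 epochs brings the error down to roughly the noise level.

```python
import numpy as np


def train_linear_gd(x, y, epochs, lr=0.1):
    """Fit y ≈ w*x + b by full-batch gradient descent on mean squared error;
    return the final training MSE."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        error = (w * x + b) - y
        w -= lr * 2 * np.mean(error * x)   # gradient of MSE with respect to w
        b -= lr * 2 * np.mean(error)       # gradient of MSE with respect to b
    return float(np.mean(((w * x + b) - y) ** 2))


rng = np.random.default_rng(4)
x = np.linspace(0, 1, 50)
y = 3 * x + 1 + rng.normal(0, 0.1, size=50)   # hypothetical linear data

short_run = train_linear_gd(x, y, epochs=2)
long_run = train_linear_gd(x, y, epochs=2000)
print(f"2 epochs: {short_run:.3f}  2000 epochs: {long_run:.3f}")
```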

Overall, the neurological sciences and other fields benefit from integrating biostatistical techniques with AI-driven designs and mathematical approaches.116 As both fields continue to evolve,117 more statisticians are training in AI tools, fostering collaborations that facilitate robust analyses and enhance the understanding and care of neurological conditions.

Neural Networks: History and Underpinnings

AI draws heavily on analogies to the nervous system and key neurological concepts, making it a special focus and pride of the neurological sciences. Neural networks (Fig S6) underpin many modern AI algorithms, tracing their origins to McCulloch and Pitts' 1943 paper, “A Logical Calculus of the Ideas Immanent in Nervous Activity.”17 They modeled neurons as binary devices that do or do not fire based on their inputs, creating a neural activity model using logic gates, which laid the theoretical foundation for artificial neural networks. Subsequently, Donald Hebb, often regarded as a “father of neuropsychology,” proposed his influential theory in 1949 that neural connections strengthen when neurons fire together, underpinning neural plasticity and brain learning.18
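A McCulloch-Pitts unit can be written as a binary threshold function. The sketch below follows the commonly described form of the 1943 model: the unit fires if no inhibitory input is active and enough excitatory inputs are active. Choosing the threshold turns the same unit into different logic gates. The function and gate names are illustrative, not taken from the original paper.

```python
def mcculloch_pitts(inputs, threshold, inhibitory=()):
    """Binary threshold unit: fires (1) if no inhibitory input is active and the
    number of active excitatory inputs reaches the threshold; otherwise 0."""
    if any(inputs[i] for i in inhibitory):
        return 0                      # absolute inhibition vetoes firing
    excitatory = sum(v for i, v in enumerate(inputs) if i not in inhibitory)
    return int(excitatory >= threshold)


def logic_and(a, b):
    return mcculloch_pitts([a, b], threshold=2)   # both inputs needed


def logic_or(a, b):
    return mcculloch_pitts([a, b], threshold=1)   # either input suffices


def logic_not(a):
    # Fires spontaneously (threshold 0) unless its single inhibitory input is active.
    return mcculloch_pitts([a], threshold=0, inhibitory=(0,))


for a in (0, 1):
    for b in (0, 1):
        print(a, b, logic_and(a, b), logic_or(a, b))
```

Note that nothing in this unit changes with experience; the weights and threshold are fixed by hand, which is the gap that Hebb's learning principle and Rosenblatt's adjustable weights later addressed.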

Next, Frank Rosenblatt21 designed the perceptron, which had a single layer of adjustable weights: input units (not counted as a layer in the original model) connected directly to a single output layer, with no hidden layers. Although the perceptron also operated with binary inputs and outputs, it introduced a learning rule for adjusting its weights, whereas the McCulloch-Pitts model included no learning or weight adjustment. In 1969, Minsky and Papert118 published “Perceptrons: An Introduction to Computational Geometry,” a critical analysis of single-layer perceptrons. These networks could not solve some problems, like the exclusive OR (XOR) function, which outputs “true” only when its 2 inputs differ. This limitation stems from their reliance on linear separation, which makes them incapable of recognizing patterns that are not linearly separable. The analysis underscored the need for more advanced architectures, such as multi-layer perceptrons, which incorporate hidden layers and non-linear activation functions to process non-linear patterns and solve complex real-world problems.
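The XOR limitation can be reproduced directly. The sketch below applies the perceptron learning rule (nudge the weights by the error times the input) to AND, which is linearly separable and is learned perfectly, and to XOR, which no single linear boundary can solve. A 2-layer network with hand-set weights (an illustrative assumption; a multi-layer perceptron would learn such weights by backpropagation) computes XOR as “OR and not AND.”

```python
import numpy as np

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
AND_TARGETS = np.array([0, 0, 0, 1])
XOR_TARGETS = np.array([0, 1, 1, 0])


def step(z):
    return (z > 0).astype(int)


def train_perceptron(X, targets, epochs=20, lr=1.0):
    """Perceptron rule: w <- w + lr * (target - prediction) * input."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        for xi, t in zip(X, targets):
            error = t - int(xi @ w + b > 0)
            w += lr * error * xi
            b += lr * error
    return w, b


def accuracy(w, b, X, targets):
    return float(np.mean(step(X @ w + b) == targets))


def two_layer_xor(X):
    """Hand-set hidden layer: h1 = OR, h2 = AND; output = h1 AND NOT h2."""
    h1 = step(X @ np.array([1.0, 1.0]) - 0.5)
    h2 = step(X @ np.array([1.0, 1.0]) - 1.5)
    return step(h1 - h2 - 0.5)


print("AND accuracy:", accuracy(*train_perceptron(X, AND_TARGETS), X, AND_TARGETS))
print("XOR accuracy:", accuracy(*train_perceptron(X, XOR_TARGETS), X, XOR_TARGETS))
print("2-layer XOR:", two_layer_xor(X))
```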

Although Rosenblatt had already begun exploring multi-layer perceptrons, effective training methods were not yet available, reinforcing Minsky and Papert's skepticism and lowering interest in and funding for neural network research. Rosenblatt's pioneering work ultimately laid the foundation for modern deep learning, but his tragic death in a boating accident in 1971 left many of his ideas unrealized. This period of doubt contributed to the “AI winter” of the late 1970s and early 1980s, with stalled progress in neural networks until more advanced methods and computational power reignited interest.

AI rebounded powerfully in the 1980s when advances such as backpropagation and increased computational power enabled more effective training of complex neural networks. Interest surged with new architectures, such as Hopfield networks (Data S1),119 which used binary units inspired by McCulloch-Pitts neurons together with Hebbian learning to store and recognize patterns.
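A Hopfield network stores patterns with a Hebbian outer-product rule: units that are active together in a stored pattern get positive connection weights. Recall starts from a corrupted pattern and repeatedly sets each binary (+1/−1) unit to the sign of its weighted input until the network settles into a stored memory. In this sketch, the 2 stored patterns are taken from rows of a Hadamard matrix so that they are exactly orthogonal. That choice is an assumption made to keep the demonstration deterministic; random patterns also work at low memory load.

```python
import numpy as np


def sylvester_hadamard(order):
    """Rows are mutually orthogonal +/-1 vectors; order must be a power of 2."""
    H = np.array([[1]])
    while H.shape[0] < order:
        H = np.kron(H, np.array([[1, 1], [1, -1]]))
    return H


def hebbian_weights(patterns):
    """Hebbian outer-product rule, with no self-connections."""
    n_units = patterns.shape[1]
    W = patterns.T @ patterns / n_units
    np.fill_diagonal(W, 0.0)
    return W


def recall(W, state, n_sweeps=5):
    """Asynchronous updates: each unit takes the sign of its weighted input."""
    s = state.copy()
    for _ in range(n_sweeps):
        for i in range(s.size):
            s[i] = 1 if W[i] @ s >= 0 else -1
    return s


patterns = sylvester_hadamard(64)[1:3]   # two orthogonal 64-unit patterns (assumption)
W = hebbian_weights(patterns)
noisy = patterns[0].copy()
noisy[:8] *= -1                          # corrupt 8 of the 64 units
restored = recall(W, noisy)
print("recovered stored pattern:", np.array_equal(restored, patterns[0]))
```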

The remarkable scientific journey from McCulloch and Pitts, through Hebb, Rosenblatt, and early perceptrons to modern deep learning showcases the vibrancy and evolution of AI. Today, neural networks drive breakthroughs in computer vision, NLP, and reinforcement learning, while also advancing numerous impactful neurological applications (reviewed in parts 2 and 3). To crown these brilliant scientific achievements, John Hopfield and Geoffrey Hinton were awarded the 2024 Nobel Prize in Physics “for foundational discoveries and inventions enabling machine learning with artificial neural networks.”120

Artificial Neural Network Anatomy and Applications

A neural network consists of interconnected nodes, analogous to neurons, organized into layers: an input layer receives data, hidden layers perform computations, and an output layer produces results (Fig S6, Table S1). Simple neural networks have 1 or 2 hidden layers, whereas deep learning systems have many hidden layers, increasing complexity and power but diminishing the explainability of their operations.35 Neural networks learn through “backpropagation”29: errors between predicted and actual outcomes are propagated backward through the network to adjust the connection (synaptic) weights and improve performance. An activation function, applied to each neuron's output, introduces non-linearity, allowing the network to learn complex patterns in the data. Early neural networks used the sigmoid function, which can cause the vanishing gradient problem during backpropagation, making it difficult for the network to update weights effectively, especially in deep networks. Modern systems use the rectified linear unit (ReLU) function to mitigate this issue, allowing deep networks to be trained more effectively.
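Backpropagation can be written out by hand for a tiny network. The sketch below trains one hidden ReLU layer with a sigmoid output on the XOR problem using full-batch gradient descent. The forward pass computes predictions, the backward pass applies the chain rule layer by layer, and the weights are updated against the gradient. The layer size, learning rate, and epoch count are illustrative assumptions. A helper also shows why sigmoids cause vanishing gradients: the sigmoid's derivative never exceeds 0.25, so a chain of n of them (ignoring weights) can shrink the backpropagated signal by up to 0.25ⁿ, whereas the ReLU derivative is 1 for positive inputs.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_xor_mlp(hidden=8, lr=0.1, epochs=3000, seed=0):
    """One hidden ReLU layer, sigmoid output, binary cross-entropy loss,
    trained by full-batch gradient descent with hand-written backpropagation."""
    rng = np.random.default_rng(seed)
    X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
    y = np.array([[0], [1], [1], [0]], dtype=float)
    W1, b1 = rng.normal(0, 1, (2, hidden)), np.zeros(hidden)
    W2, b2 = rng.normal(0, 1, (hidden, 1)), np.zeros(1)
    losses = []
    for _ in range(epochs):
        # Forward pass
        z1 = X @ W1 + b1
        h = np.maximum(z1, 0.0)                   # ReLU activation
        p = sigmoid(h @ W2 + b2)
        bce = -np.mean(y * np.log(p + 1e-12) + (1 - y) * np.log(1 - p + 1e-12))
        losses.append(float(bce))
        # Backward pass: propagate the output error back through each layer
        d_out = (p - y) / len(X)                  # d(loss)/d(output pre-activation)
        dW2, db2 = h.T @ d_out, d_out.sum(axis=0)
        d_z1 = (d_out @ W2.T) * (z1 > 0)          # ReLU derivative: 1 if positive, else 0
        dW1, db1 = X.T @ d_z1, d_z1.sum(axis=0)
        # Gradient descent update
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2
    return losses, p


def sigmoid_gradient_bound(n_layers):
    """Upper bound on a product of n sigmoid derivatives, each at most 0.25."""
    return 0.25 ** n_layers


losses, predictions = train_xor_mlp()
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
print("sigmoid gradient bound after 10 layers:", sigmoid_gradient_bound(10))
```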

Different network structures enhance capabilities for various tasks. Deep learning models are built on deep neural networks (DNNs), which include CNNs, RNNs, and generative models such as GANs and LLMs, each with distinct structures and uses (Table 3). CNNs, designed for grid-like data, excel in image tasks. RNNs handle sequential data, making them ideal for language modeling and time series. GANs generate realistic data and are often used in image synthesis. LLMs, based on transformers, excel in NLP tasks such as translation and summarization. Complex deep learning models, such as DNNs, CNNs, GANs, and LLMs, are at risk of overparameterization because they typically have a large number of weights and biases. This can lead to overfitting, which can be mitigated through approaches such as transfer learning (see “AI and Synergies with Biostatistics” section).

| Model type (yr) | Structure | Algorithms used | Use cases | Key characteristics | Examples in neurology and neurosurgery |
|---|---|---|---|---|---|
| Feedforward neural networks (1940s–1970s) | Input layer, 1 or more hidden layers, output layer. Fully connected neurons | Backpropagation, gradient descent | General-purpose tasks, regression, classification | Started shallow; no cycles or loops; information flows forward121 | Predicting patient outcomes based on clinical data |
| Convolutional neural networks (1980s) | Convolutional layers, pooling layers, fully connected layers | Convolutional algorithms, pooling algorithms, backpropagation | Image and video recognition, object detection, segmentation | Captures spatial hierarchies and local patterns in data35 | MRI and CT scan analysis for tumor detection |
| RNNs (1980s) | Cyclic connections with hidden state | BPTT, truncated BPTT | Sequential data, time series forecasting, language modeling, speech recognition | Processes variable-length sequences, suffers from vanishing/exploding gradients122 | Analyzing sequential brainwave data for seizure prediction |
| Autoencoders (1980s) | Encoder compresses input, decoder reconstructs input | Backpropagation, gradient descent | Dimensionality reduction, denoising, anomaly detection | Learns compact data representation, captures essential features123 | Identifying abnormalities in neuroimaging data |
| RBMs (1986) | Two-layer neural network, consisting of a visible layer and a hidden layer | Contrastive divergence, Gibbs sampling | Feature learning, dimensionality reduction | Learns a probability distribution over its set of inputs121, 123 | Learning features from neuroimaging data |
| LSTMs (1997) | Special RNN with input, forget, and output gates | LSTM cell algorithms, BPTT | Sequential data with long-term dependencies, text, time series | Handles long-term dependencies, mitigates vanishing gradient problem124 | Predicting disease progression from longitudinal patient data |
| Graph neural networks (2005) | Operates on graph-structured data | Graph convolutional algorithms, message passing algorithms | Social network analysis, molecular structure prediction, recommendation systems | Captures dependencies and patterns in graph data125 | Modeling brain connectivity networks and their abnormalities |
| Deep belief networks (2006) | Stacked RBMs, where each layer's hidden units are treated as visible units for the next | Contrastive divergence, Gibbs sampling | Feature learning, generative modeling | Combines multiple RBMs, capable of learning hierarchical representations45 | Analyzing neuroimaging data for brain disorders |
| VAEs<sup>a</sup> (2013) | Autoencoder with probabilistic latent variable models | Stochastic gradient descent, variational inference | Generative tasks, data sample generation | Combines autoencoders with probabilistic modeling52 | Generating synthetic brain images for data augmentation |
| Deep reinforcement learning (2013) | Neural networks combined with reinforcement learning | Q-learning, policy gradients, deep Q-networks | Game playing, robotic control, autonomous vehicles | Learns optimal actions through trial and error | Optimizing surgical robot control in neurosurgery126 |
| GANs<sup>a</sup> (2014) | Generator and discriminator in competition | Adversarial training, backpropagation, gradient descent | Generating realistic data samples (images, videos, audio) | Capable of producing high-quality synthetic data, challenging to train51 | Creating realistic synthetic neuroimaging data for training models |
| GRUs (2014) | Simplified LSTM with fewer gates | GRU cell algorithms, BPTT | Sequential data tasks like LSTMs | Computationally more efficient than LSTMs with similar performance127 | Modeling patient recovery trajectories after neurosurgery |
| Transformer networks<sup>a</sup> (2017) | Self-attention mechanisms, processes data in parallel | Self-attention mechanisms, multi-head attention, position-wise feed-forward networks | Natural language processing, machine translation, text generation, sentiment analysis | Efficient at handling long-range dependencies, scalable to large models38 | Interpreting clinical notes and patient records for diagnosis support |
| Capsule networks (2017) | Layers of capsules that output vectors (instantiation parameters) rather than scalars | Dynamic routing algorithms | Image recognition, object detection | Encodes spatial hierarchies in data, robust to affine transformations128 | Brain tumor classification from MRI scans |
| Diffusion models<sup>a</sup> (2020) | Iteratively refine random noise to generate data by reversing a diffusion process | Denoising diffusion probabilistic models | Generative tasks, high-quality image synthesis, data augmentation | Capable of generating high-quality images, different approach to generation compared to GANs and VAEs129 | Generating realistic neuroimaging data for enhancing training datasets |

- Summary of deep learning models, including year introduced, structural components, commonly used algorithms, use cases, key characteristics, examples in neurology/neurosurgery, and references. The overview addresses each model type's function and relevance in medical applications.

- <sup>a</sup>Generative models include transformers, autoencoders, GANs, VAEs, and diffusion models, which have seen marked development or popularization in recent years, although their conceptual origins or predecessors date from earlier periods.

- Abbreviations: BPTT = backpropagation through time; CT = computed tomography; GAN = generative adversarial networks; GRU = gated recurrent units; LSTM = long short-term memory networks; MRI = magnetic resonance imaging; RBM = restricted Boltzmann machines; RNN = recurrent neural networks; VAE = variational autoencoders.

Deep learning models have many applications in neurology, neurological science, and healthcare systems (Table 3). For instance, NLP analyzes clinical notes and patient records, predictive analytics forecast disease progression and treatment outcomes, and speech recognition aids patients with communication disorders. These diverse applications underscore the broad potential of neural networks to enhance various aspects of neurological care and research.

Computer vision is a pivotal application that leverages complex neural networks to interpret and make decisions based on visual data (Table 4). The process typically involves image acquisition, preprocessing to enhance quality and remove noise, feature extraction, object detection and recognition, and interpretation based on spatial relationships. CNNs are central to this method, processing images through multiple layers that extract increasingly complex features. CNNs and DNNs trained on labeled visual data identify patterns, edges, shapes, and features, refining their parameters through backpropagation to enhance performance.130

| Application | Description |
|---|---|
| Automated diagnosis of neurological disorders | Using brain MRI or CT scans to diagnose Alzheimer's disease, Parkinson's disease, and multiple sclerosis130-132 |
| Quantitative analysis of brain lesions and atrophy | Analyzing brain lesions and atrophy in neurological conditions133, 134 |
| Segmentation and volume estimation of brain structures | Segmenting and estimating the volume of brain structures, such as the hippocampus and ventricles135 |
| Detection and monitoring of cerebral microbleeds and microvascular disease | Predicting the evolution of white matter hyperintensities on brain MRI134 |
| Analysis of retinal images | Early detection of neurological disorders using retinal images136, 137 |
| Intraoperative guidance during neurosurgery | Using computer vision techniques for guidance during neurosurgery and follow-up brain imaging138, 139 |
| Tracking eye movements and gaze patterns | Diagnosing and monitoring neurological conditions by tracking eye movements and gaze patterns140 |
| Automated analysis of neuronal morphology and connectivity | Analyzing neuronal morphology and connectivity in microscopy images156, 157, 179 |
| Quantification of gait and movement disorders | Using video analysis to quantify gait and movement disorders141, 142 |
| Facial expression recognition | Assessing neurological conditions affecting facial muscles through facial expression recognition143, 144 |

- Computer vision uses AI algorithms to automatically process and extract insights from images and videos, with powerful applications in neurology. For instance, AI has significantly advanced MRI technology by enabling image reconstruction from undersampled data, thereby reducing scan times. It also enhances image quality by minimizing noise and artifacts, supports automated lesion detection for identifying tumors, ischemia, and multiple sclerosis plaques, and optimizes sequence protocols for patient-specific clinical applications.

- Abbreviations: AI = artificial intelligence; CT = computed tomography; MRI = magnetic resonance imaging.

CNNs process input data through local receptive fields and apply learned filters at different resolutions, creating feature maps for efficient analysis. This architecture mimics the hierarchical structure of the human visual cortex and was inspired by how neurons respond to visual stimuli.35, 145 CNNs can have hundreds of layers, each learning to detect increasingly complex image features,46 which is essential for recognizing faces, gaits, or medical image findings such as gliomas, melanomas, or edematous retinas.
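The core CNN operations can be sketched without a deep learning library. Below, a 3 × 3 filter slides over a small synthetic image. Each output value depends only on a local patch (its receptive field), and the result is a feature map that responds where a vertical edge lies. Max pooling then keeps the strongest response in each 2 × 2 block. The filter values here are hand-set for illustration; in a real CNN they are learned by backpropagation.

```python
import numpy as np


def conv2d_valid(image, kernel):
    """Slide the kernel over every fully overlapping position (no padding, stride 1).
    Like most deep learning libraries, this computes cross-correlation (no kernel flip)."""
    kh, kw = kernel.shape
    out = np.zeros((image.shape[0] - kh + 1, image.shape[1] - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            # Each output unit sees only a local kh x kw patch: its receptive field.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def max_pool(feature_map, size=2):
    """Keep the strongest response in each non-overlapping size x size block."""
    h, w = feature_map.shape[0] // size, feature_map.shape[1] // size
    blocks = feature_map[:h * size, :w * size].reshape(h, size, w, size)
    return blocks.max(axis=(1, 3))


image = np.zeros((8, 8))
image[:, 4:] = 1.0                                 # dark left half, bright right half
vertical_edge = np.array([[-1.0, 0.0, 1.0]] * 3)   # hand-set filter (a CNN would learn it)
feature_map = conv2d_valid(image, vertical_edge)   # 6 x 6, strongest along the edge
pooled = max_pool(feature_map)                     # 3 x 3 summary
print(pooled)
```

Stacking such convolution and pooling stages, each with many learned filters, is what lets deeper layers respond to progressively more complex structures.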

AI models such as You Only Look Once (YOLO), region-based convolutional neural networks (R-CNN), and the Single Shot MultiBox Detector (SSD) detect and localize objects in images or videos, providing object categories and positions via bounding boxes. Semantic segmentation assigns a class label to every image pixel using fully convolutional networks and encoder-decoder architectures.127 U-Net is widely used in medical imaging for its ability to learn from small datasets and capture fine structures.147
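Detection and segmentation outputs are commonly scored by their overlap with reference annotations. The sketch below shows 2 widely used overlap measures: intersection over union (IoU) for bounding boxes and the Dice coefficient for binary segmentation masks, such as a predicted versus manually traced lesion. The box format (x_min, y_min, x_max, y_max) and the example masks are assumptions for illustration.

```python
import numpy as np


def box_iou(box_a, box_b):
    """Intersection over union for axis-aligned boxes (x_min, y_min, x_max, y_max)."""
    ix_min, iy_min = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix_max, iy_max = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    intersection = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    return intersection / (area_a + area_b - intersection)


def dice_coefficient(mask_a, mask_b):
    """Dice overlap 2|A ∩ B| / (|A| + |B|) for binary masks."""
    a, b = np.asarray(mask_a, dtype=bool), np.asarray(mask_b, dtype=bool)
    return 2.0 * np.logical_and(a, b).sum() / (a.sum() + b.sum())


reference = np.zeros((4, 4), dtype=int)
reference[0:2, 0:2] = 1            # hypothetical traced lesion: 4 pixels
predicted = np.zeros((4, 4), dtype=int)
predicted[0:2, 0:3] = 1            # hypothetical model output: 6 pixels

print("box IoU:", box_iou((0, 0, 2, 2), (1, 1, 3, 3)))
print("mask Dice:", dice_coefficient(reference, predicted))
```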

Versatile neural network applications extend to various domains, including autonomous vehicles, surveillance systems, and robotics. Neurologists and other healthcare professionals must select the appropriate tools for their specific tasks to maximize the benefits of these advanced AI technologies.

AI Model Selection and Process Flow in Neurology

Selecting AI models in neurology (Fig S4, Table S5) depends on data characteristics, such as structure, size, noise, missing values, and distribution.148 Models serve various purposes: regression for continuous variables, classification for categorical outcomes, clustering for discovering patterns, dimensionality reduction for compressing many features into fewer, time series models for temporal data, deep learning for complex patterns, and NLP for text analysis.149 AI can also aid in defining the research question; for instance, NLP can help review the literature to identify gaps and trends, making research more data-driven. AI can also gather diverse neurological data, such as MRI/CT scans, electroencephalography (EEG)/magnetoencephalography (MEG) signals, genetic information, and clinical records.

Researchers, with the help of AI, preprocess data for quality control.131 MRI/CT scans are normalized and skull-stripped; EEG/MEG signals are cleaned of artifacts and features are extracted from them;150 and informative features are selected from genetic data.146 Statistical methods and AI algorithms handle missing data and noise. Deep learning automates image processing, and AI-driven feature extraction identifies biomarkers.146 Integrating clinical data provides a comprehensive view of the patient.
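A common EEG feature is the signal power in standard frequency bands. The sketch below computes a simple periodogram with NumPy's FFT and sums power within each band for a synthetic signal dominated by a 10 Hz (alpha) rhythm. The sampling rate, amplitudes, and band edges are illustrative assumptions; band boundaries vary between laboratories, and real pipelines usually remove artifacts first and use smoother spectral estimates such as Welch's method.

```python
import numpy as np

# Conventional bands in Hz (edges differ slightly across studies).
BANDS = {"delta": (1, 4), "theta": (4, 8), "alpha": (8, 13), "beta": (13, 30)}


def band_powers(signal, fs):
    """Periodogram power summed within each frequency band."""
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    power = np.abs(np.fft.rfft(signal)) ** 2 / signal.size
    return {name: float(power[(freqs >= lo) & (freqs < hi)].sum())
            for name, (lo, hi) in BANDS.items()}


fs = 256                                   # hypothetical sampling rate (Hz)
t = np.arange(4 * fs) / fs                 # 4 s of data
rng = np.random.default_rng(6)
eeg = (20 * np.sin(2 * np.pi * 10 * t)     # strong 10 Hz alpha rhythm (hypothetical µV)
       + 5 * np.sin(2 * np.pi * 20 * t)    # weaker 20 Hz beta component
       + rng.normal(0, 2, size=t.size))    # background noise

powers = band_powers(eeg, fs)
print({name: round(p) for name, p in powers.items()})
```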

For model building, data are split into training, validation, and test sets (e.g., 60–80% for training, 10–20% for validation, and 10–20% for testing). Supervised learning predicts outcomes from labeled data and is well suited to classifying disorders133 and predicting prognosis.134 Unsupervised learning identifies patterns in unlabeled data, which is useful for identifying brain network clusters157, 158 and reducing the dimensionality of neuroimaging data. Reinforcement learning trains models through reward-based decision-making,161, 163 which is useful in robotics and adaptive treatments. Masked autoencoders use self-supervised learning to improve efficiency and accuracy, particularly in image and NLP tasks.151 Hybrid models combine these approaches to suit various use cases.
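A 3-way split can be sketched as follows, using 70/15/15 as one choice within the ranges above. One design choice here goes beyond the text and is labeled as an assumption: the split is made by patient rather than by sample, so that multiple scans or recordings from the same person cannot appear in both the training and test sets, which would otherwise inflate test performance.

```python
import numpy as np


def split_by_patient(patient_ids, fractions=(0.7, 0.15, 0.15), seed=0):
    """Return (train, validation, test) sample indices such that all samples
    from one patient land in the same set."""
    patient_ids = np.asarray(patient_ids)
    rng = np.random.default_rng(seed)
    patients = rng.permutation(np.unique(patient_ids))
    n_train = int(round(fractions[0] * patients.size))
    n_val = int(round(fractions[1] * patients.size))
    groups = (patients[:n_train],
              patients[n_train:n_train + n_val],
              patients[n_train + n_val:])
    return tuple(np.flatnonzero(np.isin(patient_ids, group)) for group in groups)


# Hypothetical cohort: 20 patients with 3 scans each.
ids = np.repeat(np.arange(20), 3)
train_idx, val_idx, test_idx = split_by_patient(ids)
print(len(train_idx), len(val_idx), len(test_idx))
```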

Models are refined through hyperparameter tuning,152 feature selection,136 cross-validation, and algorithm selection.115, 152, 153 Hyperparameters are settings chosen before training rather than learned from the data; they influence how the model learns patterns and affect its overall performance. Examples of hyperparameters in DNNs include the learning rate, batch size, number of epochs (the number of times the DNN processes the entire dataset), number of layers, number of neurons per layer, dropout rate, activation function, and optimizer.
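Hyperparameter tuning can be as simple as a grid search: train once per candidate setting and keep the one with the lowest validation error. The sketch below tunes the regularization strength of ridge regression, chosen as a compact stand-in for DNN hyperparameters such as the learning rate or dropout rate; the data and candidate grid are hypothetical. The test set stays untouched until a final, single evaluation of the selected model.

```python
import numpy as np


def ridge_fit(X, y, alpha):
    """Closed-form ridge regression (X^T X + alpha I)^-1 X^T y; no intercept, for brevity."""
    return np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ y)


def grid_search(X_train, y_train, X_val, y_val, alphas):
    """Fit once per candidate hyperparameter and score each fit on the validation set."""
    scores = {}
    for alpha in alphas:
        beta = ridge_fit(X_train, y_train, alpha)
        scores[alpha] = float(np.mean((X_val @ beta - y_val) ** 2))
    best = min(scores, key=scores.get)
    return best, scores


rng = np.random.default_rng(5)
X = rng.standard_normal((80, 30))
coefs = np.array([1.0, -1.0, 0.5, 0.5, -0.5])
y = X[:, :5] @ coefs + rng.normal(0, 1.0, size=80)    # hypothetical data

grid = [0.01, 0.1, 1.0, 10.0, 100.0]
best_alpha, val_scores = grid_search(X[:50], y[:50], X[50:], y[50:], alphas=grid)
print(val_scores, "-> selected alpha", best_alpha)
```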

The generalizability of the refined model is then tested; evaluation metrics include accuracy, sensitivity (also called recall), specificity, precision, and F1-score for classification, and mean squared error for continuous outcomes.115, 153 Other considerations include interpretability, computational resources, and available expertise.154 Continuous refinement and ensemble methods, such as random forests and gradient boosting, can enhance performance.155, 185, 187
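The classification metrics above all derive from the 4 cells of a confusion matrix. The sketch below computes them for a hypothetical set of 10 predictions (1 = disorder present) and includes mean squared error for continuous outcomes. It omits guards against division by zero, which a production implementation would need when a class is absent.

```python
import numpy as np


def binary_metrics(y_true, y_pred):
    """Confusion-matrix metrics for a binary classifier (1 = disorder present)."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    tp = np.sum((y_true == 1) & (y_pred == 1))
    tn = np.sum((y_true == 0) & (y_pred == 0))
    fp = np.sum((y_true == 0) & (y_pred == 1))
    fn = np.sum((y_true == 1) & (y_pred == 0))
    sensitivity = tp / (tp + fn)          # identical to recall
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)            # positive predictive value
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "accuracy": float((tp + tn) / y_true.size),
        "sensitivity": float(sensitivity),
        "specificity": float(specificity),
        "precision": float(precision),
        "f1": float(f1),
    }


def mean_squared_error(y_true, y_pred):
    diff = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.mean(diff ** 2))


# Hypothetical predictions for 10 patients: 3 true positives, 1 missed case, 1 false alarm.
metrics = binary_metrics([1, 1, 1, 1, 0, 0, 0, 0, 0, 0],
                         [1, 1, 1, 0, 1, 0, 0, 0, 0, 0])
print(metrics)
```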

Effective models identify biomarkers,157 understand disorder mechanisms,156 and provide personalized treatment recommendations.141 Translating insights into practice improves diagnosis, prognosis, and treatment.142, 143, 160 Collaboration between AI and neurology experts, leveraging advanced techniques and refining models, enhances patient outcomes.

A Case Scenario for Transformative AI-Driven Precision Neurological Care

This case highlights the transformative potential of AI in acute and long-term care—right now and in the near future. A 45-year-old woman is admitted to the neurocritical care unit after a severe brain and spinal cord injury from a crash while her vehicle was in autopilot mode. AI plays a key role in triaging her to the appropriate caregivers. On arrival, AI-powered systems track her vital signs, respiratory mechanics, and neurological status in real-time, detecting subtle patterns that might elude clinicians.

AI continuously monitors her EEG for seizure activity, predicting seizures and enabling timely interventions. As her condition stabilizes, AI shifts focus to preventing complications, like sepsis and brain herniation. When she shows readiness for extubation, AI advises on the best course of action, including identifying optimal drugs by analyzing her genetic data, medical history, and current condition.

The patient survives with quadriplegia and epilepsy. AI-powered assistive technologies become critical for her long-term care and rehabilitation, with neural implants connected to an exoskeleton providing support. AI organizes her schedules with healthcare professionals.

On discharge, AI continues to monitor her health, tracking vital signs, respiratory function, and other indicators, remaining vigilant for complications. It also gathers health-related data from devices like cell phones and fitness trackers. Personalized rehab programs, driven by ML, adapt to her progress, and virtual reality platforms provide engaging therapy exercises. AI-powered mental health tools offer psychological support.

LLMs assist her in interacting with digital devices, enabling control of her environment, communication, and access to information. By analyzing her medical history, genetic profile, and current health, an NLP system identifies suitable clinical trials. AI also monitors the flow of people and information in the hospital, ensuring critical information reaches the right personnel.

In her home, AI systems continue monitoring her health and social interactions. For recreation, she and her caregivers play games like chess and Go with AI opponents. Social and emotional robots offer companionship, cognitive stimulation, and assistance with daily activities. The AI systems learn and adapt through ongoing interaction. In a learning health system (see part 3), AI uses data from multiple patients to improve hospital and clinic operations.

By enabling personalized, proactive, and adaptive care, AI improves outcomes and quality of life for patients with severe neurological conditions. Standardizing and integrating vast healthcare data to fuel AI models is a monumental task. Ensuring the explainability, safety, and fairness of AI in medical settings is a challenge (see part 2). The costs and infrastructure requirements also pose barriers to adoption. As research progresses and real-world implementations expand, the partnership between humans and AI is poised to redefine neurological care (Tables 5, 6).

| Task | Emerging AI tools and descriptions |
|---|---|
| Acute triage | AI assesses severity, prioritizes needs, and matches the patient with specialists for timely care. |
| Initial monitoring and intervention | AI tracks vitals, detects subtle patterns, and uses CNNs and long short-term memory networks to identify critical changes. It monitors electroencephalograms for seizures and analyzes images with CNNs and generative adversarial networks for abnormalities.150, 158, 166 |
| Stabilization and complication prevention | AI integrates data using ML (random forests, gradient boosting machines, hidden Markov models) to predict life-threatening events, triggering early interventions against sepsis.32, 159, 185, 186 |
| Ventilation weaning | AI analyzes respiratory and neurological trends, using ML to determine optimal weaning time and suggesting a personalized protocol.187 |
| Medication optimization | AI analyzes genetics and history to recommend effective, low-side-effect drugs. It accelerates drug development by analyzing large datasets. |
| Long-term care and rehabilitation | AI-powered exoskeletons and neural implants adapt to needs using reinforcement learning, enabling natural movement control.188 |
| Healthcare and home coordination | AI optimizes team schedules, ensuring equitable distribution and minimizing conflicts. It manages data flow using ML, ensuring timely communication and care both in hospital and at home, with NLP and computer vision alerting caregivers to issues.82, 162 |
| Rehabilitation support | AI adapts rehab programs to progress. Virtual reality provides therapy, and large language models enhance interaction with devices.163, 189 |
| Research trials | NLP identifies suitable trials by analyzing history, genetics, and current health.187 |
| Recreational and emotional support | AI plays chess and Go, using algorithms to provide challenging interaction.190 AI robots offer companionship, cognitive support, and assistance, using NLP, RL, and computer vision to enhance well-being (see part 2, “Chatbots, Social and Emotional Robots” section). |
| Personalized care | AI continuously adapts care based on responses and feedback.112, 114, 115 |
| Learning health system | AI analyzes patient data to optimize hospital operations and care protocols (see part 3, "AI and LHS" section). |

- Summary of the tasks involved in managing a complex neurological care scenario and the specific AI tools and techniques applied to each task. From acute triage to long-term rehabilitation and personalized care, AI systems are used to enhance decision-making, optimize treatments, and improve patient outcomes. The tools include various ML algorithms, neural networks, and natural language processing, each tailored to specific aspects of patient care and coordination.

- Abbreviations: AI = artificial intelligence; CNN = convolutional neural networks; ML = machine learning; NLP = natural language processing; RL = reinforcement learning.

| Application Area | Example |
|---|---|
| Neurology | Neuroimaging for diagnosis (Alzheimer's disease, Parkinson's disease)97, 164 |
| | AI-based decision support165 |
| | Electroencephalography data for seizure prediction166 |
| | NLP for clinical notes167 |
| | Generating synthetic MRI scans for training168 |
| | Data augmentation169 |
| Neurosurgery | Deep learning for surgical planning170 |
| | AI-powered surgical navigation171 |
| | Cranial nerve segmentation, instrument tracking172 |
| | Enhancing tumor segmentation models with synthetic data129 |
| Psychiatry | NLP and ML for mental health173 |
| | ML for neuroimaging, genetic biomarkers174, 187 |
| | AI and VR for exposure therapy175 |
| | AI-based conversational agents176 |
| Basic neuroscience | ML, deep learning for neural data analysis177 |
| | AI for neural connectivity data178 |
| | GANs for synthetic neural data51, 52 |
| | AI for neural morphology analysis157 |
| | Modeling complex brain connectivity patterns52, 178, 179 |
| | Data imputation180 |
| | Simulating progression of neurodegenerative diseases132 |
| | Real-time monitoring of patient status from EEG data55, 122, 166 |

- Examples of how AI/ML tools, such as deep learning, GANs, VAEs, diffusion models, NLP, and VR, are being applied across different neuroscience fields. The table lists specific use cases with references to relevant studies, highlighting advances and potential benefits for diagnosis, treatment, and research.

- Abbreviations: AI = artificial intelligence; EEG = electroencephalography; GANs = generative adversarial networks; ML = machine learning; MRI = magnetic resonance imaging; NLP = natural language processing; VAEs = variational autoencoders; VR = virtual reality.

Explosion of AI in the Neurological Sciences

In his book “The Singularity Is Near,” Kurzweil foresaw a future in which humans merge with machines to become super-intelligent beings or, at least, more effective human-machine systems.181 This future is rapidly approaching, driven by advances in AI (Fig S7), materials science, and large-scale initiatives, such as the National Institutes of Health (NIH) Brain Research Through Advancing Innovative Neurotechnologies (BRAIN) Initiative,182 which are unraveling the brain's architecture to advance brain prostheses and other innovations.

Across NIH (National Institute of Neurological Disorders and Stroke, National Institute on Aging, National Institute of Mental Health, National Institute on Drug Abuse, and the BRAIN Initiative), researchers use AI to analyze neuroimaging data and identify new brain cell populations, circuits, connections, and functional patterns, offering insights into cognitive architecture, function, and consciousness. AI models also accelerate drug discovery by simulating compound interactions in the brain.

BRAIN Initiative-funded projects are refining brain-recording techniques, studying speech motor circuits, and developing AI to decode brain activity patterns into computer-generated speech, which holds promise for individuals with paralysis due to amyotrophic lateral sclerosis or spinal cord injury. AI algorithms also analyze imaging (MRI, CT, PET), EEG, demographic data, and biomarkers to detect disease signatures in disorders such as Alzheimer's disease, Parkinson's disease, multiple sclerosis, and epilepsy, potentially enabling earlier, more effective treatments with fewer side effects.
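To make “analyzing multimodal data for disease signatures” more concrete, the following is a minimal sketch of one of the simplest ML classifiers, logistic regression fitted by gradient descent. Everything in it is an illustrative assumption: the three features (a hippocampal volume z-score, an EEG slowing index, and an age z-score), their values, and the labels are invented and do not come from the cited studies. Real systems use far larger cohorts, validation on held-out patients, and often deep networks that learn features directly from images or signals.

```python
import math

# Hypothetical toy cohort (invented values, not real patient data).
# Each row: [hippocampal_volume_z, eeg_slowing_index, age_z]
X = [
    [-1.8, 0.9, 0.9],
    [-1.2, 0.7, 0.4],
    [-1.5, 0.8, 1.2],
    [0.3, 0.2, -0.3],
    [0.8, 0.1, -0.8],
    [0.5, 0.3, 0.2],
]
# 1 = disease signature present, 0 = absent (hypothetical labels)
y = [1, 1, 1, 0, 0, 0]


def sigmoid(z: float) -> float:
    """Map a real-valued score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(weights, bias, x):
    """Probability that feature vector x carries the disease signature."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_logistic_regression(X, y, lr=0.5, epochs=2000):
    """Fit weights and bias by batch gradient descent on the mean log-loss."""
    n, d = len(X), len(X[0])
    weights, bias = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            # Derivative of the log-loss with respect to the linear score.
            error = predict_proba(weights, bias, xi) - yi
            for j in range(d):
                grad_w[j] += error * xi[j]
            grad_b += error
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


weights, bias = train_logistic_regression(X, y)
new_patient = [-1.0, 0.6, 0.5]  # hypothetical unseen case
print(f"P(signature) = {predict_proba(weights, bias, new_patient):.2f}")
```

The learned weights are directly inspectable (here, a smaller hippocampal volume pushes the predicted probability up), which is one reason simple linear models remain useful baselines next to opaque deep networks.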

In neurosurgery, AI-powered robots enhance procedures, minimize tissue damage, and improve patient safety through real-time imaging analysis and simulations. In psychiatry, AI analyzes speech, facial expressions, and behavior to detect mental health disorders early, and AI chatbots offer 24/7 support and therapy tools. Computer vision, in which AI algorithms automatically process and extract insights from images and videos, has powerful applications in neurology, including automated diagnosis, analysis of brain lesions and atrophy, and segmentation of brain structures.
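Automated segmentations of brain structures or lesions are commonly scored against an expert's manual mask with the Dice similarity coefficient, 2|A ∩ B| / (|A| + |B|), where 1 means perfect overlap and 0 means none. The sketch below assumes binary 2D masks stored as nested lists; the 4 × 4 masks are invented for illustration, and returning 1.0 when both masks are empty is one common convention rather than a universal rule.

```python
def dice_coefficient(pred, truth):
    """Dice = 2|A ∩ B| / (|A| + |B|) for two binary masks with the same pixel count."""
    flat_pred = [v for row in pred for v in row]
    flat_truth = [v for row in truth for v in row]
    if len(flat_pred) != len(flat_truth):
        raise ValueError("masks must contain the same number of pixels")
    intersection = sum(1 for p, t in zip(flat_pred, flat_truth) if p and t)
    total = sum(1 for p in flat_pred if p) + sum(1 for t in flat_truth if t)
    if total == 0:
        return 1.0  # both masks empty: treated as perfect agreement (assumed convention)
    return 2.0 * intersection / total


# Hypothetical expert mask of a small lesion and a model's predicted mask.
truth = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
pred = [
    [0, 0, 0, 0],
    [0, 1, 1, 1],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]
print(dice_coefficient(pred, truth))  # 0.75
```

Here the model recovers 3 of the 4 lesion pixels and adds 1 false-positive pixel, giving 2 × 3 / (4 + 4) = 0.75.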

AI is also advancing in counterfactual and abductive reasoning, potentially enhancing patient-centered care through fine-grained analyses, challenging traditional diagnostic criteria, illuminating brain-behavior-environment interactions, and informing interventions responsive to patient values and contexts.183
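Abductive reasoning, or inference to the best explanation, can be illustrated with a toy Bayesian ranking: each candidate diagnosis is scored by how plausible it was beforehand (its prior) and how well it explains the observed findings (its likelihood). This is only one simple formalization, not the method of the cited work, and it does not cover counterfactual reasoning. The diagnoses, priors, and likelihoods are invented for illustration, and the sketch assumes findings are conditionally independent given the diagnosis (naive Bayes) and ignores findings that were not observed.

```python
import math

# Hypothetical numbers chosen for illustration only; not clinical estimates.
PRIORS = {"stroke": 0.05, "migraine_with_aura": 0.10, "focal_seizure": 0.05}
LIKELIHOODS = {  # P(finding | hypothesis), also hypothetical
    "stroke": {"sudden_onset": 0.9, "negative_symptoms": 0.9, "headache": 0.3},
    "migraine_with_aura": {"sudden_onset": 0.1, "negative_symptoms": 0.2, "headache": 0.9},
    "focal_seizure": {"sudden_onset": 0.8, "negative_symptoms": 0.3, "headache": 0.2},
}


def rank_explanations(findings):
    """Rank hypotheses by posterior P(H | findings).

    Assumes findings are conditionally independent given H (naive Bayes)
    and ignores findings that were not observed.
    """
    log_scores = {
        h: math.log(prior) + sum(math.log(LIKELIHOODS[h][f]) for f in findings)
        for h, prior in PRIORS.items()
    }
    # Normalize in log space (log-sum-exp) so the posteriors sum to 1.
    top = max(log_scores.values())
    total = sum(math.exp(s - top) for s in log_scores.values())
    posteriors = {h: math.exp(s - top) / total for h, s in log_scores.items()}
    return sorted(posteriors.items(), key=lambda item: item[1], reverse=True)


for hypothesis, p in rank_explanations(["sudden_onset", "negative_symptoms"]):
    print(f"{hypothesis}: {p:.2f}")
```

With these invented numbers, the findings “sudden onset” and “negative symptoms” make stroke the best explanation, whereas an isolated headache would rank migraine with aura first; the ranking shifts as evidence changes, which is the essence of abductive updating.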

AI is a vast field, and probing the technical details of specific AI algorithms can give neurologists a deeper understanding of how they work and of their mathematical foundations. Addressing the challenges of deploying AI systems in real-world clinical neurology settings, including data integration, interoperability, regulatory hurdles, and user acceptance, will be a long campaign.

Integrating AI tools into clinical practice awaits evidence of their effectiveness and lessons learned from their implementation. Exploring the long-term implications of AI for neurology, including changes to training curricula, clinical workflows, and the skills required for future neurologists to work effectively with AI systems, is crucial for the field's future. The AI landscape in neuroscience and healthcare is rapidly evolving, with new applications and research emerging continually. This is a transformative time for neurology and neuroscience.184

Acknowledgments

This work was supported by NIH R01AG017177, NIH U54GM115458, and the University of Nebraska Foundation. Special thanks to E. Vlock for her work on figure creation, manuscript formatting, and review. R. Taylor provided editing and formatting assistance, and K. Lynch helped manage references. We also thank Dr. M. G. Savelieff for her editorial assistance. We are grateful to Dr. R. Dai for contributions on advanced biostatistics and its relationship to AI and to Dr. J. Wang for expertise in convolutional and generative neural networks for image processing. Dr. S. Sarkar offered valuable insights into AI's recent history and multifaceted aspects, and Dr. Y. Zhang provided expertise on biostatistics and the “curse of dimensionality.” Dr. A. Graber contributed helpful discussions on philosophical distinctions in logical reasoning. B. Lynch provided key insights into models, algorithms, and the distinctions between computer programming and ML.

Author Contributions

M.R. contributed to the conception and design of the manuscript; M.R. and J.D. contributed to the interpretation of studies included in the manuscript; M.R. and J.D. contributed to drafting the text and preparing the figures.

Potential Conflicts of Interest

Nothing to report.