Dietary patterns and the neoplastic-prone tissue landscape of old age

Abstract

There is now sufficient evidence to indicate that aging is associated with the emergence of a clonogenic and neoplastic-prone tissue landscape, which fuels early stages of cancer development and helps explain the rise in cancer incidence and mortality in older individuals. Dietary interventions are among the most effective approaches to delay aging and age-related diseases, including cancer. Reduced caloric intake has been, historically, the most intensely investigated strategy. Recent findings point to a critical role of a long fasting interval in mediating some of the beneficial effects of caloric restriction. Time-restricted feeding, intermittent fasting, and fasting mimicking diets are being proposed for their potential to prolong healthy life span and to delay late-onset diseases such as neoplasia. Evidence will be discussed suggesting that the effects of these dietary regimens are mediated, at least in part, through retardation of age-related functional changes at cell and tissue level, including a delay in the emergence of the neoplastic-prone tissue microenvironment.

1 CANCER INCREASES WITH AGE

It is widely acknowledged that advancing age is among the strongest risk factors for cancer, as is well illustrated by the epidemiology of the most common types of neoplasia in humans. Lung cancer, by far the leading cause of cancer-related deaths in Western countries, is diagnosed in over two thirds of cases in people who are 65 or older, whereas fewer than 2% occur in individuals younger than 45, with an average age at diagnosis of about 70 (American Cancer Society: https://cancerstatisticscenter.cancer.org). Similar statistics apply to prostate cancer, the most common malignancy in men in the United States: about 60% of cases are diagnosed after 65 years, whereas this cancer is very rare before the age of 40 (American Cancer Society: https://cancerstatisticscenter.cancer.org). Likewise, the age-adjusted incidence of breast cancer in women in the United States doubled between the fifth and the sixth decade of life and it became four times as high during the eighth decade in the years 2012-2016 (US NCI, https://seer.cancer.gov/archive/csr/1975_2016/). Finally, colon cancer is diagnosed at a median age of 68 in men and of 72 in women, whereas the rectal localization is found at a median age of 63 for both men and women. A relatively low rate (11%) of all colorectal cancers occurs before the age of 50, although a disturbing increase of its incidence in the young has been reported in recent years (ASCO, https://www.cancer.net/cancer-types/colorectal-cancer/risk-factors-and-prevention). Overall, in the United States, close to 80% of all cancers were diagnosed in individuals older than 55 between 2007 and 2011 (NCI, https://www.cancer.gov/about-cancer/causes-prevention/risk/age).

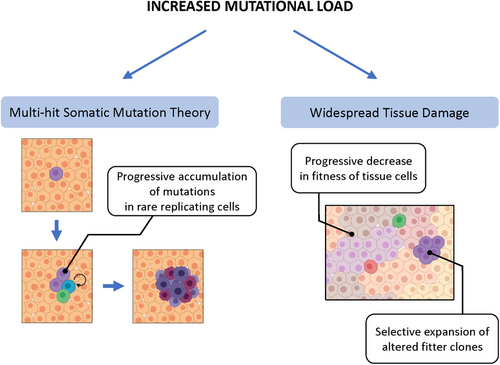

Given such a strong association, several hypotheses have attempted to identify possible mechanistic links between aging and cancer. Albeit not necessarily mutually exclusive, they can be conceptually included into two broad categories: those focused on age-related alterations occurring in rare cells, putative precursors of preneoplastic, and/or neoplastic cells, and those placing more emphasis on age-dependent changes taking place in the bulk of the tissue, organ, or organismal context in which cancer originates (Figure 1).

2 AGING AND THE EMERGENCE OF RARE MUTATED CELLS

According to one group of hypotheses, the association between aging and cancer is primarily attributable to an increased generation and/or progressive accumulation of mutated cells at risk of neoplastic transformation in aged tissues. This in turn can result from two basic mechanisms. (a) Aging may simply allow more time for the occurrence of endogenous (eg, bad luck) or exogenous mutagenic events; alternatively, or in combination, (b) it can decrease the ability to remove altered cells through quality control mechanisms operating at tissue level, including (but not limited to) those enacted by the immune system.

Mutations in somatic cells accumulate throughout life and they have long been thought to be a major determinant in the age-dependent increase in the incidence of neoplastic disease, under the heading of the multihit somatic mutation theory (SMT) for the origin of cancer.1-5 For example, recent studies in clonal organoid cultures derived from primary human multipotent cells indicate that approximately 40 novel mutations per year arise in each adult stem cell.6 However, it is intriguing to note that a similar rate of mutations was reported in tissues such as colon, small intestine, or liver6; given the enormous differences in the incidence of cancer among the above tissues, this finding indicates that mutation rate per se cannot solely account for the increase in the frequency of neoplastic disease as we age. The linear increase in mutation accumulation with age6 is also difficult to reconcile with the nearly exponential shape of the curve plotting cancer incidence against age at diagnosis, unless a threshold level of molecular damage is postulated as necessary before the biological effects become manifest.
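The mismatch between a linear mutation load and a steeply rising incidence curve can be made concrete with a small numerical sketch. The rate of about 40 mutations per year per stem cell is the figure quoted above; the incidence proxy (a power law in age with exponent `HITS - 1`, in the spirit of multihit models) and the value `HITS = 6` are illustrative assumptions, not results from the cited studies.

```python
# Illustrative sketch only: contrasts linear mutation accumulation with a
# multihit-style incidence proxy. HITS is a hypothetical parameter.

MUTATIONS_PER_YEAR = 40  # approximate rate per adult stem cell quoted in the text
HITS = 6                 # assumed number of rate-limiting events (illustrative)


def mutation_load(age_years: float) -> float:
    """Expected mutations per stem cell, assuming a constant yearly rate."""
    return MUTATIONS_PER_YEAR * age_years


def relative_incidence(age_years: float, reference_age: float = 40.0) -> float:
    """Incidence proxy relative to a reference age, scaling as age**(HITS - 1)."""
    return (age_years / reference_age) ** (HITS - 1)


if __name__ == "__main__":
    for age in (40, 60, 80):
        print(age, mutation_load(age), round(relative_incidence(age), 1))
```

Under these assumptions, doubling age from 40 to 80 doubles the mutation load but raises the incidence proxy 32-fold, which is the kind of gap that a threshold or multihit requirement is invoked to explain.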

On the other hand, aging can negatively interfere with mechanisms that are routinely involved in the identification and elimination of altered, putative preneoplastic cells. In the simplest theoretical scenario, a mutated gene that will translate into a change in protein sequence will make the affected cell a possible target for the adaptive immune response, with activation of effector T cells by the altered protein fragment presented on MHC molecules. Although the relative relevance of this mechanism in decreasing the overall risk of neoplastic disease in a lifetime is difficult to evaluate, it is reasonable to hypothesize that the waning of the adaptive immune response in the old may favor the accrual of altered cells, including subpopulations with preneoplastic/neoplastic potential. Thus, the decrease in both B-cell and T-cell receptor repertoires observed with age is bound to determine a reduced potential to identify newly appearing antigenic determinants.7

An alternative strategy to keep at bay and/or clear altered cells is through induction of cell senescence. This complex phenotype entails a persistent cell cycle arrest and it is considered to represent an effective means to suppress the expansion of DNA-damaged cells at risk for neoplastic transformation.8 Furthermore, senescent cells can in turn be targeted for clearance by immune-mediated mechanisms,9 thereby eliminating altogether their possible contribution toward the emergence of cancer. However, aging is associated with a progressive accumulation of senescent cells in several tissues, possibly reflecting an attenuation in the clearing capacity of the immune system.10 Such a defective clearance and/or increased generation of senescent cells has been proposed to fuel carcinogenesis.10, 11

Extrusion of altered, potentially preneoplastic cells can also be accomplished directly by normal surrounding counterparts through a process that has been referred to as epithelial defense against cancer (EDAC).12, 13 For example, altered cytoskeletal interactions between normal cells and RasG12V-transformed cells were able to trigger removal of the latter from mouse intestinal epithelium.11 Moreover, the efficiency of this protective strategy is susceptible to modulation by environmental influences. Interestingly, feeding a high-fat diet (HFD), a known risk factor for colon cancer, resulted in a decrease in the rate of removal of altered cells from mouse intestine, possibly as a consequence of HFD-induced inflammatory changes; in support of this conclusion, treatment with aspirin was able to mitigate the negative effects of HFD on transformed cell clearance.14 Whether the efficiency of EDAC is also affected by aging has not been investigated so far. Given the apparent role of inflammation in modulating the process and considering the pro-inflammatory phenotype associated with aging, this is a likely possibility. In a similar vein, mouse intestinal stem cells harboring genetic alterations, including Apc loss, Kras activation, or P53 mutations, were found to be replaced by wild-type stem cells; however, P53-mutated stem cells had a clonal advantage under conditions of chemically induced colitis.15

3 AGING AND THE NEOPLASTIC-PRONE TISSUE LANDSCAPE

A second group of hypotheses addressing possible mechanistic links between aging and cancer shifts the focus from rare mutated cells to age-associated changes occurring in the bulk of the tissue. Although there are certainly areas of overlap between the two analytical approaches, placing emphasis on the role of tissue, organ, or organismal context in the pathogenesis of neoplastic disease has represented a significant departure from the cell-centric (and mutation-centric) view that has dominated cancer research for several decades.

When considering the role of the environmental context in the origin of cancer, one has to set an important distinction between the tissue microenvironment in which cancer originates and what is more appropriately referred to as the tumor microenvironment,16 a peculiar milieu inside the neoplastic lesions that emerges as a consequence of its expansion.17 In the context of the present discussion, the effects of aging will only be considered with reference to changes in normal tissue microenvironment.18

The age-associated tissue alteration that has been most investigated as a possible driver of cancer development is the increased activation of the inflammatory response, referred to as inflammaging.19 Several cancers arise in tissues undergoing chronic inflammation, including lung, liver, pancreas, and intestine, among others.20-23 From the chronic irritation theory of Virchow24 to Dvorak's proposition of cancer as “a wound that does not heal,”25 the possible link between inflammation and cancer has long intrigued generations of investigators. However, one must acknowledge that no sound comprehensive biological hypothesis is available so far to explain how the inflammatory response might impact the neoplastic process, particularly at early phases.26 It is conceivable that mediators involved in this response, for example, cytokines and reactive oxygen species, or the associated metabolic alterations, such as increased glycolysis and acidosis,17 may induce genotoxic damage and/or stimulate the growth of putative preneoplastic cells, but direct evidence that this does in fact occur is still lacking. Therefore, this is an important area for future investigations.

A different, by no means alternative view postulates that aging fuels carcinogenesis by inducing changes in the tissue microenvironment that select for the emergence of fitter cell clones, including those with preneoplastic potential. The idea that “context” should be included in the equation that attempts to explain the origin of cancer was conceptually laid down several decades ago. Studies conducted by Haddow in the late 1930s were among the first to point out that the then newly discovered chemical carcinogens were invariably toxic and growth suppressive to their target organ.27 This suggested that their carcinogenic potential did not result from a direct effect of the chemical, but it was possibly related to selection of cell variants that were best fit and able to emerge in a background of diffuse tissue impairment. Four decades later, the work of Farber and collaborators provided a proof of principle that this was in fact the case: carcinogen-initiated hepatocytes expressing a “resistant” phenotype to mitoinhibitory agents were able to grow vis-à-vis the suppressed surrounding counterparts upon appropriate stimulation, forming numerous hepatic nodules, a subset of which progressed to cancer.28 Such focal lesions were interpreted as adaptive in nature, in that their growth was sustained, at least initially, by the regenerative drive of the tissue that could not be fulfilled by normal neighboring cells.28 Direct evidence that a growth-constrained tissue environment was pathogenically relevant to the expansion of preneoplastic cell populations was subsequently obtained through cell transplantation studies. It was shown that hepatocytes isolated from early nodules could only grow and progress to cancer upon orthotopic injection into a host liver whose resident parenchymal cells were mitotically blocked, whereas they were unable to proliferate when transferred in a normal, syngeneic tissue environment.29

Importantly, these observations were later extended to the aged tissue microenvironment. Normal isolated hepatocytes obtained from a young donor were able to form larger clusters following implantation in the liver of an old recipient compared to that of a young normal control, suggesting that the aged tissue landscape is clonogenic.30 Similar results were independently observed by other research groups using fetal hepatocytes as donor cells.31 In recent years, the pervasive presence of aberrant clonal expansions is increasingly being reported in normal aged human tissues. One of the first and most notable examples is age-related clonal hematopoiesis, consisting of the emergence of mutated clones of white blood cells in peripheral blood at increasing frequency as we age.32, 33 Although they were found to be rare before the age of 40, their incidence rose to almost 20% in individuals older than 90 years.33 Their presence is associated with an increased risk of hematologic neoplasms and they can be the site of origin of such malignant cellular phenotype.32 Similar clonal expansions of mutant cells were later reported in an increasing number of solid tissues.34

The basis for this intriguing phenomenon is still uncertain. Both healing and regenerative capacity progressively wane with advancing age,35-37 partly due to a cell autonomous decrease in proliferative potential.38-40 This may provide a selective advantage for cellular clones with a (relatively) preserved growth capacity, leading to a slow process of repopulation involving significant areas of the tissue. Although most clones show normal histology, others may manifest as dysplastic lesions and may then evolve and progress toward the acquisition of an overt malignant (invasive and metastatic) behavior. Studies using cell transplantation approaches in both liver and bone marrow have indeed indicated that the aged tissue microenvironment is more supportive for the clonal expansion of preneoplastic cells compared to that of young recipients.41, 42

On the other hand, epigenetic mechanisms could also contribute to the selective advantage of mutant clones emerging at older age. For example, three genes involved in epigenomic modifications, namely, DNA methyltransferase 3 alpha (DNMT3A), Tet methylcytosine dioxygenase 2 (TET2), and additional sex combs-like protein 1, were reported as the most frequently mutated in clonal hematopoiesis, leading to the suggestion they might account for the dominant phenotype of these clones. In line with this contention, loss of function of DNMT3A or TET2 in murine hematopoietic stem cells causes altered methylation patterns in pluripotency genes and confers a growth advantage over wild-type stem cells, although no growth autonomy is implied.33 Indeed, a generalized “shadowing” of epigenetic pathways regulating gene expression during development and throughout reproductive life has been proposed as a driver of aging phenotypes, leading to a functionally compromised tissue landscape that may help select for fitter epigenetic variants.43

4 IS THERE A ROLE FOR CELL COMPETITION?

The latter scenario could also be interpreted as fulfilling the criteria that have been proposed to define cell competition. Basically, cell competition refers to a process whereby healthy cells in a given tissue are cleared by functionally more proficient surrounding counterparts.44 It is therefore a context-dependent phenomenon, and the reciprocal fates of winner and loser cells are interrelated. As such, cell competition is an integral part of homeostatic mechanisms overseeing tissue size and pattern and is restricted within defined developmental compartments.45 An additional corollary of this definition is that competition can only occur among homotypic cells when a critical threshold of phenotypic heterogeneity is present; furthermore, there must exist a limit in the availability of specific resources these cells are competing for. Analysis conducted at single cell level has revealed that genetic and epigenetic heterogeneity is indeed far more pervasive than previously thought even in normal adult tissues, raising the possibility that cell competition may not be a rare phenomenon.45 Within this conceptual framework, the common finding of clonal proliferations in aged tissues may well represent the outcome of such a continuous, long-standing process. In a similar vein, the relative clonal expansion of normal young hepatocytes upon transplantation into old recipient livers referred to above30 could be interpreted as a manifestation of a winner phenotype of younger cells over old resident homotypic counterparts. In line with this view, cell competition was proposed as the basis for the selective growth of normal embryonic hepatic cells transplanted in the liver of syngeneic adult rats.31

Does cell competition have a role in carcinogenesis and/or, more specifically, does it contribute to the increased cancer incidence typical of older age? Although answers to these questions are largely incomplete, evidence begins to emerge that biological mechanisms underlying cell competition under normal conditions are directly relevant to the pathogenesis of cancer, particularly at early stages of the disease.46 A compelling case is the elimination of scrib mutant cells from Drosophila imaginal disk by wild-type counterparts, whereas, in the absence of normal cells, mutant clones do not die and progress to form tumors.47 The already mentioned extrusion of altered cells by normal surrounding epithelium through EDAC can also be viewed as resulting from cell competition, although the underlying biological and molecular mechanisms appear to differ from classical prototypes.12

On the other hand, aging entails a generalized decrease in the efficiency of mechanisms overseeing maintenance of cell fitness, possibly including cell competition, and this can increase persistence of altered cells.48, 49 Mutations in somatic cells increase with age, fueling a feedback loop that leads to the age-associated exponential functional decline.48 This in turn causes a decreased competitive fitness of normal cells, thus (a) increasing the risk for the unopposed emergence of clones with altered phenotype, including preneoplastic ones and/or (b) positively selecting for specific genetic alterations under new environmental conditions (eg, pro-inflammatory, growth-constrained, etc) that favor their phenotype.31, 39-41 Incidentally, it is worth noting how the role of somatic mutations in carcinogenesis is radically reinterpreted within this scenario compared to that implied in the classical SMT for the origin of cancer. As already mentioned, the SMT is rigidly cell centric, whereas the sequence of events outlined above, feeding on the somatic mutation catastrophe theory of aging,48 proposes to explain the link between aging and cancer by ascribing a pathogenic role to widespread mutational load in aged tissues (Figure 1).

5 DIETARY PATTERNS AND AGING

If the pace of aging has room for modulation, dietary habits, including quality, quantity, and patterns of food intake, occupy a major share of this space, as also epitomized in the saying “we are what we eat.” Decades of clinical and experimental data strongly support the notion that caloric restriction (CR) (a reduction of the amount of daily calories without causing malnutrition) results in the extension of life span and a simultaneous reduction in the incidence of chronic morbidities typically associated with advanced age.52 In more recent years, greater attention has been given to the possible role of our eating patterns in shaping our health condition. Over the past several decades, the amount and schedule of food consumption have substantially changed in the human population and recent evidence suggests that this may bear long-term consequences on our well-being.

5.1 Caloric restriction

Among the proposed strategies to delay the aging process and age-associated diseases, CR is certainly the most studied and reproducible nongenetic intervention that has been proven to effectively extend health and life span.53 CR commonly refers to a dietary intervention whereby caloric intake is reduced by 20-40% while avoiding malnutrition.

Initial scientific evidence of the beneficial effects of CR was reported during the first decades of the last century, as it was demonstrated that a simple reduction of the daily amount of calories significantly extended life span of rats.54, 55 The effect of CR on health and life span has since been confirmed in a vast number of animal species, from unicellular life forms to nonhuman primates, and in humans.53, 56-58

The first indication that a reduced caloric intake may affect health and life span in humans comes from decades of studies on one of the world's longest-lived populations, the Okinawans.59, 60 It was reported that older individuals, who have drastically lower risk of mortality from age-associated diseases compared to western populations, consumed approximately 17% fewer calories than the average adult in Japan and 40% less than the average adult in the United States. Moreover, their diet was lower in proteins and rich in fresh vegetables, fruits, sweet potatoes, soy, and fish, which may have a CR mimicking effect.61 Interestingly, observations on some Okinawan families who moved to Brazil revealed that the adaptation to a Western lifestyle impacted both their diet and physical activity, resulting in an increased body weight and a drop in life expectancy of 17 years.62

Recently, a 2-year randomized trial known by the acronym CALERIE, involving nonobese men and women between 21 and 50 years, was conducted to assess CR feasibility, safety, and improvements in terms of quality of life and risk of diseases. The degree of CR achieved in the study (theoretically fixed at 25%, it was effectively at 19.5% during the first 6 months and at 9.1% for the remainder of the study) was safe and well tolerated, with no adverse effects on the quality of life.63 CR participants lost weight significantly compared to ad libitum (AL)-fed controls and they experienced improvements in some potential modulators of longevity and in cardiometabolic risk factors, such as decrease in triglycerides and total cholesterol, increase in high-density lipoprotein, reduction in low-density lipoprotein, and decrease in both systolic and diastolic blood pressure.57, 64
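The gap between the prescribed and the achieved restriction can be summarized as a time-weighted average. The sketch below uses only the percentages quoted above and assumes that "the remainder of the study" means the remaining 18 of 24 months; the function and variable names are illustrative.

```python
# Time-weighted average caloric restriction (CR) over a 2-year trial,
# using the figures quoted in the text. The 18-month length of the second
# period is an assumption (24 months total minus the first 6).

PERIODS = [
    (6, 19.5),  # (duration in months, achieved CR in %)
    (18, 9.1),
]
TARGET_CR = 25.0  # prescribed CR in %


def weighted_mean_cr(periods):
    """Average CR weighted by the length of each period."""
    total_months = sum(months for months, _ in periods)
    return sum(months * cr for months, cr in periods) / total_months


mean_cr = weighted_mean_cr(PERIODS)
print(f"mean achieved CR: {mean_cr:.1f}% of a {TARGET_CR:.0f}% target")
```

On these figures the average achieved restriction is about 11.7%, a little under half of the prescribed 25%.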

Parenthetically, some investigators propose that life span extension exerted by CR might be an artifact, both in laboratory animals and in human studies. According to this interpretation, CR could be considered as a way to revert AL overfed animals to eating conditions in the wild. Thus, typical laboratory studies, instead of comparing normal controls with caloric restricted animals, are comparing overfed animals with adequately fed ones and, not surprisingly, the overfed ones develop a series of obesity-related pathologies and die earlier.65, 66 Similar considerations may also apply to human studies on the same topic.67

5.2 Time-restricted feeding, intermittent fasting, and fast mimicking diets

In spite of their undeniable beneficial effects on health and longevity, CR-based strategies have never met convinced acceptance in the scientific community and in the public opinion at large in terms of their translational potential. The reasons for such skeptical ostracism are primarily cultural (any “restriction” is hard to implement and swallow in a society of “consumers”). And yet, as it has been pointedly noted, a decrease in the average daily intake of calories is essential if we are to counter the epidemics of overweight and obesity presently afflicting many countries in the world.68, 69 Nevertheless, long-term adherence to a CR regimen does carry the risk of side effects that may become clinically relevant over time, including perpetual hunger, depression, and infertility, among others.70-72 This is one of the reasons why over the past several years numerous efforts have been devoted to finding alternative approaches, preserving the positive features of CR while avoiding its downsides, the bottom line being that they should be at least acceptable, if not appealing, to humans.

A common theme that unifies most of these proposed approaches is a period of fasting. In fact, a long fasting interval is also integral to the large majority of CR protocols, because the reduced food ration is usually consumed within a few hours (<8 in a typical rodent study), with no feeding for the rest of the day. Several approaches, likely more amenable to be implemented in humans, have been considered, mainly falling into three categories: (a) time-restricted feeding (TRF), with daily cycles of feeding and fasting, where the feeding/eating period is restricted to 8-12 h/day, during the active phase73; (b) intermittent fasting (IF), which refers to a range of dietary regimens in which fasting is generally >18 h and is repeated two to three times per week, or every other day74; (c) fast mimicking diets (FMD), which consist of a 4- to 5-day cycle of very low-calorie diet (500-1000 kcal), repeated periodically (every 2 weeks, monthly, or every 2 months).70
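The three categories differ mainly in how long and how often food is withheld, which can be sketched as a small data model. The class and field names below are hypothetical, the example values are picked from the ranges given above, and treating the 500-1000 kcal figure as a daily intake is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TimeRestrictedFeeding:
    feeding_window_h: float  # daily eating window, 8-12 h in the text

    def daily_fast_h(self) -> float:
        """Hours of fasting implied by the daily feeding window."""
        return 24 - self.feeding_window_h


@dataclass(frozen=True)
class IntermittentFasting:
    fast_h: float          # length of each fast, generally > 18 h
    fasts_per_week: float  # a few per week; 3.5 approximates every other day

    def weekly_fast_h(self) -> float:
        """Total fasting hours accumulated per week."""
        return self.fast_h * self.fasts_per_week


@dataclass(frozen=True)
class FastMimickingDiet:
    cycle_days: int           # 4-5 days of very low-calorie diet
    kcal_per_day: int         # 500-1000 kcal (assumed to be per day)
    cycle_interval_days: int  # e.g. 14, 30, or 60 days between cycle starts

    def fraction_of_days_on_diet(self) -> float:
        """Share of calendar days spent on the low-calorie cycle."""
        return self.cycle_days / self.cycle_interval_days


trf = TimeRestrictedFeeding(feeding_window_h=8)
alternate_day = IntermittentFasting(fast_h=24, fasts_per_week=3.5)
monthly_fmd = FastMimickingDiet(cycle_days=5, kcal_per_day=750, cycle_interval_days=30)
print(trf.daily_fast_h(), alternate_day.weekly_fast_h(), monthly_fmd.fraction_of_days_on_diet())
```

An 8-12 h feeding window corresponds to a 12-16 h daily fast, so TRF spreads fasting evenly across days, whereas IF and FMD concentrate it in longer, less frequent episodes.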

An increasing number of studies have now indicated that at least some effects of CR are reproduced by TRF, including stimulation of autophagy, increased mitochondrial respiratory efficiency, modulation of reactive oxygen species, and changes in the profile of inflammatory cytokines.75 In fact, this implies the possibility that the effects of CR may be partly attributable to fasting, rather than to reduced calories per se.76 The benefits of TRF appear to be proportional to the daily fasting duration, which ranges between 12 and 16 h/day.77 Importantly, under controlled experimental conditions animals exposed to TRF are able to eat ≥90% of the food ration consumed by AL-fed controls, after a short training period of less than 1 week.78-80 Among the best characterized effects of TRF is the prevention of obesity, which is a major risk factor for several diseases, including cardiovascular pathologies, cancer, chronic liver and kidney disease, and diabetes mellitus.68 The ability of TRF to prevent excess increase in body weight was first shown in Drosophila melanogaster72 and then confirmed in mice fed either regular or HFDs.79, 82-84 Interestingly, TRF could also reverse preexisting diet-induced obesity, after both long-77 and short-term85 exposure. Moreover, it was shown that TRF decreases plasma levels of triglycerides and low-density lipoproteins77, 78, 82, 86 and inhibits diet-induced liver steatosis and the accompanying increase in serum markers of liver disease.78, 79 Preliminary studies conducted in humans showed that TRF improved insulin sensitivity, blood pressure, oxidative stress, and quality of life in overweight or diabetic adults.87-89 Healthy middle-aged and older adults subjected to TRF showed high adherence, which resulted in improved cardiovascular function and other indicators of health span, without any significant loss of body weight.90

Likewise, IF protocols were reported to exert beneficial effects similar to CR in animal models, including reduced oxidative stress and inflammatory response91, 92 and enhanced autophagy and tissue repair capacity.93 The effects of alternate-day fasting on longevity in rodents are mostly inconsistent, depending upon species and age at initiation of feeding regimen, ranging from negative effects to a 30% life span increase.94, 95 Some studies in humans have shown that IF can improve metabolic health, physiological function, and lipid and glucose metabolism, while reducing inflammatory response, lowering blood pressure, and improving cardiovascular function.96-98 A minor concern associated with IF studies was the possible induction of micronutrient deficiencies, along with the observation of minor physical symptoms such as hunger, lack of energy and concentration, constipation, and feelings of cold.74 Moreover, interpretation of results is partly complicated by the vast heterogeneity in the design of IF, particularly in terms of duration and recurrence of fasting periods.74, 99

More recently, FMD strategies have been developed to limit the drastic reduction of body weight associated with animal studies on IF. Initial FMD studies administered a low-protein, low-sugar, HFD with an overall caloric reduction between 10% and 50% for a period of 4 days, twice a month.100 The reported effects were similar to those caused by a water-only fast of 2-3 days, particularly in terms of loss of visceral fat, and they were associated with no overall monthly reduction of calories as compared to AL control.100, 101 Inflammation was reduced by 50% and improvements in terms of motor coordination and long- and short-term memory were reported. FMD also caused an 18% increase in the 75% survival point and an 11% increase in the mean life span.100, 101

Very preliminary data were reported with the use of FMD in humans, including a significant reduction in body weight, body fat, serum IGF-1 levels, and systolic blood pressure compared to the control diet.102 Although many questions about the application of FMD in the clinic remain open,103 several clinical studies are currently ongoing and will hopefully shed some light on the feasibility of such interventions.

6 DIETARY PATTERNS AND THEIR EFFECTS ON CANCER

Early studies from the beginning of last century reported for the first time that the growth of transplanted tumors could be significantly reduced by exposing recipient mice to CR.104 Later studies showed that a reduction in caloric intake also caused a sizeable decrease in the incidence of spontaneous tumors in experimental animals.55, 105 Since then, the ability of CR to reduce tumor incidence has been demonstrated for mammary tumors induced by 7,12-dimethylbenz[a]anthracene,106-108 intestinal tumors induced by methylazoxymethanol,109 hepatocellular adenomas in mice treated with diethylnitrosamine,110 and spontaneous intestinal polyps in genetically susceptible ApcMin mice.111 Similar results have been obtained in studies carried out in nonhuman primates, where the incidence of neoplasia was substantially reduced in CR groups.112, 113 These effects were particularly significant in young-onset CR monkeys, whereas the incidence in the late-onset CR group was similar to AL-fed animals, indicating that an early intervention may have a more significant impact on cancer development.113

In humans, a retrospective cohort study revealed a 50% reduction of breast cancer incidence in women with history of anorexia nervosa and low BMI, suggesting that severe CR in humans may protect from invasive breast cancer.114 Conversely, numerous lines of evidence suggest that excess caloric intake is associated with an increase in the incidence of several types of cancer.115

Interventions such as TRF, IF, and FMD can also exert beneficial effects in terms of cancer incidence and progression, although evidence is more limited. In rodents, TRF was able to decrease the incidence of spontaneous hepatocellular carcinoma76 and to limit the growth of transplanted preneoplastic cells in the liver.80 Mice subjected to alternate-day fasting showed a major reduction in the incidence of lymphomas,116 whereas fasting for 1 day/week delayed the emergence of spontaneous tumors in p53-deficient mice.117 In aging mice subjected to FMD starting from 16 months of age, a delay in the emergence and progression of spontaneous lymphomas was observed.101

Limited evidence of the beneficial effects of TRF on cancer risk in humans comes from a retrospective epidemiological study, which reported a positive correlation between the duration of the overnight fasting period and protection from breast cancer.118

Despite the positive preliminary results obtained with IF, the refeeding phase is often characterized by a period of intense cellular proliferation due to the release of high levels of growth factors, a response that is also related to the duration of fasting. It was shown that the administration of carcinogens during refeeding could lead to an increased proliferation of precancerous lesions in the liver.119 Similarly, three cycles of fasting, lasting 3 days each and repeated every 2 weeks, caused a more rapid evolution of chemically induced preneoplastic lesions in rat liver, with progression to hepatocellular carcinoma.120

7 DIETARY PATTERNS AND TISSUE MICROENVIRONMENT

Evidence discussed in the preceding paragraphs suggests that aging increases the risk of cancer at least in part via the emergence of a neoplastic-prone tissue landscape, which is clonogenic to both homotypic normal and putative preneoplastic cell populations.30, 42 On the other hand, dietary interventions such as CR have consistently been recognized as one of the most effective strategies to retard aging and age-related diseases, including cancer. Based on these premises, it was reasonable to postulate that the beneficial impact of CR on carcinogenesis could be due, at least partly, to a delaying effect of the dietary regimen on emergence of the neoplastic-prone tissue microenvironment typical of advanced age. This hypothesis was tested using the syngeneic cell transplantation system referred to above. Rats were exposed to AL feeding or CR diet (70% of AL amount) for 18 months followed by orthotopic injection of freshly isolated nodular hepatocytes. Three months later, clusters of transplanted cells, including macroscopically visible hepatic nodules, were significantly smaller in the group exposed to CR compared to controls fed AL, indicating that the clonogenic potential of the aged tissue microenvironment was reduced by the CR regimen.121

As already discussed, more recent approaches propose fasting-centered regimens, with minimal or no reduction in total caloric intake, as a means to reproduce the effect of CR on health and longevity. When one such regimen, namely, TRF, was tested for its ability to impact the emergence of the age-associated, neoplastic-prone tissue landscape, results were very similar to those obtained with CR, that is, long-term exposure to TRF diet reduced the clonal expansion of orthotopically transplanted preneoplastic hepatocytes.80

Mechanistically, multiomics approaches have pointed to metabolic hubs associated with longevity and improved health conditions, including a decreased incidence of cancer, in experimental animals exposed to either CR or TRF.122 Specific metabolic pathways involving the amino acids glycine, serine, and threonine were implicated in life span extension, whereas the metabolism of short-chain fatty acids (SCFAs) and of essential polyunsaturated fatty acids was uniquely associated with health span.122 Furthermore, a 24-h period of fasting was sufficient to enhance intestinal stem cell function in young and aged mice, possibly via activation of the fatty acid oxidation (FAO) pathway.123 Accordingly, acute impairment of the activity of carnitine palmitoyltransferase Ia, the rate-limiting enzyme in FAO, abrogated the beneficial effects of fasting.123 Of note, an increase in FAO was also implicated in mediating CR-induced longevity in Caenorhabditis elegans, which occurred via maintenance of mitochondrial network homeostasis and functional coordination with peroxisomes.124

Conversely, chronic administration of a HFD stimulates the expansion and enhances the function of Lgr5-expressing intestinal stem cells in the mouse, and this is associated with induction of the peroxisome proliferator-activated receptor delta pathway.125 However, overstimulation of the latter pathway in Apc-defective intestinal organoids enables these cells to form tumors in vivo.125

Feeding a CR diet also affects the epigenetic landscape of various tissues, retarding the onset of age-associated changes in DNA methylation.126 Thus, patterns of DNA methylation at loci involved in lipid metabolism were profoundly modified by CR, and this translated into a longer persistence of a youth-associated lipid metabolic profile.126 Furthermore, DNA methylation at the ribosomal RNA locus was modulated by CR in an age-dependent manner in both rat and human liver,127 and the activity of this locus is a critical determinant of translation, which in turn can impact stem cell function and renewal.128

Last but not least, both CR129 and TRF130 are known to attenuate the age-associated upregulation of the inflammatory response referred to as inflammaging, thereby reducing its possible contribution to an increased risk of cancer in the aged tissue microenvironment,131 as discussed above.

8 CONCLUSIONS

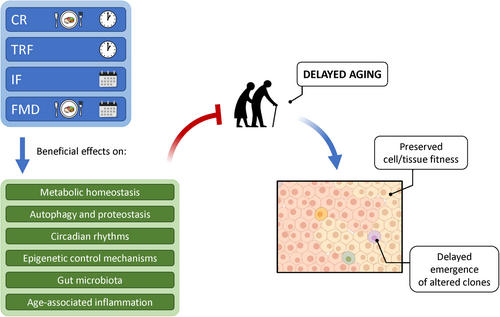

The evidence linking dietary habits, including dietary patterns, with aging and cancer is overwhelming, and yet any attempt to translate this knowledge into general conclusions and practical recommendations is still very challenging. This is largely due to important gaps that continue to exist in our understanding of the biological and biochemical/molecular mechanisms relating aging and cancer on one side and the impact of feeding habits on such complex processes on the other. Most recent findings appear to converge on the critical role of a sufficiently long fasting period, which needs to be included in the feeding pattern in order to observe a beneficial effect on the rate of aging and on the emergence of age-associated diseases, including cancer. Such a fasting interval, which is common to CR, TRF/time-restricted eating (TRE), IF, and FMDs, exerts profound effects on metabolism and also has far-reaching consequences on circadian oscillatory rhythms, microbiota composition, and food-rewarding circuits, among others (Figure 2).

On the other hand, the inextricable interdependence between bodily biochemistry and the metabolic activity of host microbiota is now well established, particularly with reference to SCFA.132 Importantly, both CR and TRF impact microbiota composition and metabolism, boosting propionogenesis and limiting butyrogenesis and acetogenesis.122, 133, 134

One of the most intriguing facets of fasting-based dietary approaches resides in their phasic trait, that is, the alternating periods of access/no access to food. Rhythmicity is fundamental in biology, as exemplified by the essential role of the wake-sleep cycle in preserving good health.135 In any function, life revolves around high and low tides, whereas it tends to avoid monotony. It has been shown that the amplitude of circadian metabolic oscillations decreases with age; however, CR and TRF reinforce diurnal oscillations in the activity of several metabolic functions, thereby delaying their progressive waning typical of the aging phenotype.136

In summary, the central tenet of the present discussion is that dietary interventions such as CR, TRF, and/or IF exert at least part of their protective effects on the risk of neoplastic disease via modulation of age-associated changes in the tissue landscape. The latter include, but are not limited to, alterations in metabolism, autophagy, proteostasis, circadian rhythms, DNA methylation, inflammatory markers, and microbiota composition (Figure 2), all of which can contribute to the emergence of conditions favoring survival and selection of altered cell clones, including those with neoplastic potential.

Thus, the way we eat, in addition to how much and what we eat, needs to be considered in the complex equation relating food, longevity, cancer risk avoidance, and wellbeing.

ACKNOWLEDGMENTS

We thank Anna Saba for her secretarial assistance. The authors acknowledge support from AIRC (Italian Association for Cancer Research, Grant No. IG 10604 to EL) and Fondazione di Sardegna (to FM).

AUTHOR CONTRIBUTIONS

Fabio Marongiu and Ezio Laconi conceptualized the study, acquired funding, wrote the original draft, and reviewed and edited the manuscript.

CONFLICT OF INTEREST

The authors declare no conflict of interest.