How do applicants fake? A response process model of faking on multidimensional forced-choice personality assessments

Abstract

Faking on personality assessments remains an unsolved issue, raising major concerns regarding their validity and fairness. Although there is a large body of quantitative research investigating the response process of faking on personality assessments, for both rating scales (RS) and multidimensional forced choice (MFC), only a few studies have yet qualitatively investigated the faking cognitions when responding to MFC in a high-stakes context (e.g., Sass et al., 2020). Yet, it could be argued that only when we have a process model that adequately describes the response decisions in high stakes, can we begin to extract valid and useful information from assessments. Thus, this qualitative study investigated the faking cognitions when responding to MFC personality assessment in a high-stakes context. Through cognitive interviews with N = 32 participants, we explored and identified factors influencing the test-takers' decisions regarding specific items and blocks, and factors influencing the willingness to engage in faking in general. Based on these findings, we propose a new response process model of faking forced-choice items, the Activate-Rank-Edit-Submit (A-R-E-S) model. We also make four recommendations for practice of high-stakes assessments using MFC.

Practitioner points

- What is currently known about the topic of your study:
  - Prevalence of motivated distortions increases as the stakes increase.
  - Scholars have developed numerous faking models focused primarily on either the antecedents of faking or its consequences.
  - Although this research provides insightful results, it reveals little about the actual faking behavior.
  - The question of what is going on in people's minds when completing a questionnaire in high stakes remains.
- What your paper adds to this:
  - We explored and identified factors influencing the test-takers' decisions to fake regarding specific items and blocks.
  - We identified factors influencing the willingness to engage in faking in general.
  - We propose a new response process model of faking forced-choice items, the Activate-Rank-Edit-Submit model.
- The implications of your study findings for practitioners:
  - We provide four recommendations that can be implemented in practice to potentially decrease faking of personality assessments in high-stakes situations.

1 INTRODUCTION

In the selection context, applicant faking can be defined as an intentional response to a self-reported personality measure that does not correspond to the person's true self-image (Kiefer & Benit, 2016), with the goal of creating a favorable impression. Faking on personality assessments remains an unsolved issue, raising major concerns regarding their validity and fairness (Kuncel et al., 2011). This concern is warranted as research has indicated that 30%–50% of job applicants alter their test responses to pursue hiring success (Griffith & Converse, 2011). In a study conducted by König et al. (2011), participants were asked about their past behaviors when completing job applications and whether they were honest in the process. Two European samples (Iceland and Switzerland) were compared to a sample from the US. Overall, results indicated that 32% of participants had overreported their positive traits on questionnaires at some point in their lives. Additionally, 15% of participants admitted to having given outright false responses. Yet, the prevalence of self-presentation was significantly greater among American participants than among the European samples, whereas Swiss and Icelandic participants reported similar levels of self-presentational behavior. The researchers explained that these cultural differences might be due to lower unemployment rates in the European countries at the time of participation and, consequently, lower perceived competition for jobs, which decreased the motivation to engage in faking behavior. Indeed, it has been shown that the prevalence of motivated distortions increases as the stakes increase (McFarland & Ryan, 2006). Consequently, investigating faking behavior is important for developing assessment methods that prevent it (Ziegler, 2011) or statistical methods that can be used for correction.
In this pursuit, scholars have developed numerous faking models focused primarily on either the antecedents of faking, such as motivations, opportunity, ability to fake; or its consequences, such as selection ratios or correlations with selection outcomes (Ziegler, MacCann, et al., 2011). Although this research has provided insightful results, it reveals little about the actual faking behavior. The question of what is going on in people's minds when completing a questionnaire in high stakes remains. Yet, it could be argued that only when we have a process model that adequately describes the response decisions in high stakes, can we begin to extract valid and useful information from assessments.

2 WHAT WE KNOW ABOUT THE FAKING PROCESS IN GENERAL

Scholars have argued that test takers have goals which might play an important part in how they respond to personality tests (e.g., Kuncel et al., 2011). For example, a qualitative study conducted by Ziegler (2011) provided evidence that individuals evaluate whether a given item is important in achieving a specific goal (e.g., getting hired) before deciding whether to fake. If test takers identified an item as instrumental in achieving the goal, they enhanced their score on that item. If they deemed it irrelevant to achieving the goal, they either answered truthfully or chose the middle (neutral) option. Interestingly, an alternation of true and neutral responses was found within the same test takers. Additionally, research has indicated that individuals do not always over-report specific attributes but also under-report them if they believe it will help them achieve their goal (Griffith & Converse, 2011). In support, Kuncel et al. (2011) suggested that individuals view the completion of each item on the questionnaire as a mini social interaction with their prospective employer. The researchers suggested that applicants are driven by three main goals when answering questionnaire items: being credible (i.e., appearing believable and trustworthy to the employer), being impressive (i.e., evoking admiration in the employer through the presentation of skills or qualities), and staying true to themselves (i.e., behaving in accordance with one's own beliefs and values).

These often-opposing goals can differ in their importance depending on the context and lead to different response behaviors on different items.

Moreover, a qualitative study conducted by König et al. (2012) highlighted several assumptions that applicants make about the interpretation of their responses, for example, the importance of being consistent. Specifically, they found that people who believed that the assessor would not be able to detect an inconsistency in their profile faked. Moreover, some participants thought it was bad to select middle answers rather than extreme answers, as middle answers were interpreted as a sign of mediocrity. In contrast, others avoided endorsing extreme responses, as these were viewed as a sign of obstinacy.

Taken together, these findings suggest that an individual's decision to fake is made on an item-by-item basis. Different motivational drivers and different assumptions held by test takers seem to influence the decision-making process of faking, leading individuals to fake in both directions and to different extents to reach their strategic goal.

3 RESPONSE FORMATS AND FAKING BEHAVIORS

The most common response format used in self-report personality questionnaires is the rating scale (RS) format. Here, each stimulus is presented as a single item (statement or question). Responses are collected on a rating scale, which can have a number of ordered categories, for example, strongly agree, agree, neutral, disagree, strongly disagree. This format is particularly susceptible to faking because applicants can easily endorse the category that they perceive to be most desirable (Wetzel et al., 2016). To reduce the ease with which all desirable options can be endorsed, the forced-choice (FC) format has been introduced (e.g., Dilchert & Ones, 2011). In the forced-choice format, test takers are presented with two or more items simultaneously. In the case of paired items, individuals are asked to indicate which statement describes them most. When three, four, or more items are presented in a block (making triplets, quads, etc.), test takers are asked either to rank all items according to how well they describe them or to indicate which item describes them most and which item describes them least. Blocks are sometimes constructed to include items measuring the same trait, called unidimensional FC, or different traits, called multidimensional FC (MFC). Blocks of three or more items are most often multidimensional. Research has indicated that the MFC format can reduce faking substantially compared to the traditional RS format (Salgado et al., 2015). Importantly, the MFC format seems to be most effective in reducing faking when items within a block are matched on their desirability in the selection context (Wetzel et al., 2021).
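To make the two block response modes concrete, the following minimal Python sketch unpacks a response to a four-item block into pairwise preferences. The item labels and both function names are hypothetical and serve illustration only; they are not taken from any cited instrument or scoring procedure. The sketch shows that a full ranking determines all six item pairs, whereas a most/least response leaves the pair formed by the two unselected items undetermined.

```python
from itertools import combinations

def pairs_from_ranking(ranking):
    """Return {(a, b): True if a is preferred to b} for every item pair.

    `ranking` lists item labels from 'most like me' to 'least like me'.
    """
    position = {item: rank for rank, item in enumerate(ranking)}
    return {
        (a, b): position[a] < position[b]
        for a, b in combinations(sorted(ranking), 2)
    }

def pairs_from_most_least(items, most, least):
    """Return the pairwise preferences implied by a most/least response.

    Pairs that involve neither the 'most' nor the 'least' item stay
    unknown and are omitted from the result.
    """
    known = {}
    for a, b in combinations(sorted(items), 2):
        if a == most or b == least:
            known[(a, b)] = True
        elif b == most or a == least:
            known[(a, b)] = False
    return known

block = ["A", "B", "C", "D"]  # hypothetical item labels
full = pairs_from_ranking(["C", "A", "D", "B"])
partial = pairs_from_most_least(block, most="C", least="B")
print(len(full), len(partial))  # prints: 6 5
```

In this example the most/least response agrees with the full ranking on every pair it determines, but it carries no information about the pair (A, D).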

4 RESPONSE PROCESS MODELS FOR FAKING BEHAVIOR

One of the first response process models concerned with answering attitude questions was proposed by Tourangeau and Rasinski (1988). The researchers introduced four stages of response: (1) Item representation, (2) Retrieval, (3) Judgment, and (4) Mapping. Thus, a respondent first activates relevant cognitive structures through the encounter with the target question. The activated information is thought to be representative of the respondent's standpoint on the latent trait measured. In the second stage, the respondent retrieves the information that appears relevant to the given question. Next, a judgment has to be made about how the retrieved information integrates into an answer to the presented question. Lastly, the respondent has to map the judgment onto one of the presented response options. Yet, in this stage the respondent might decide to edit the answer, either to check for consistency with previously given answers or to present a more socially desirable answer.

Based on more recent research advances, a variety of new modeling approaches have been proposed that build on the Tourangeau and Rasinski (1988) model. For example, based on evidence of the central role played by decisions to edit one's retrieved information whenever concerns for self-presentation are high (e.g., Holtgraves, 2004), Böckenholt (2014) proposed to consider three stages of item response decisions, dedicating a whole stage to Edit decisions, giving rise to the “Retrieve-Edit-Select” (R-E-S) model. This approach was used to describe either honest or falsified responses to sensitive surveys through decisions to either report one's retrieved response or select a less revealing response instead. Specifically, the framework suggests that when presented with a question, test takers first retrieve the requested information. The information is most likely retrieved when individuals activate attitudes and behaviors that are characteristic of them. Subsequently, the retrieved information should correspond to the attribute that the item is designed to measure. At this point the individual has the option to either report the retrieved answer or to edit it. The decision to edit is thought to be influenced by situational demands, such as choosing a more appropriate answer for the given context, and by the natural tendency of that individual to edit answers. After the decision to edit is made, the individual will select the appropriate response that would serve a self-presentation goal.
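The three stages can be sketched as a toy decision function. This is only an illustration under strong simplifying assumptions, not the statistical model specified by Böckenholt (2014): the function name, its arguments, and in particular the single `edit_probability` (standing in for situational demands plus the person's tendency to edit) are hypothetical.

```python
import random

def retrieve_edit_select(retrieved, desirable, edit_probability, rng):
    """Return (reported response, whether editing occurred).

    retrieved        -- the response the person retrieves as self-descriptive
    desirable        -- the response judged to serve self-presentation
    edit_probability -- hypothetical chance of editing, standing in for
                        situational demands plus the person's edit tendency
    rng              -- a random.Random instance, for reproducibility
    """
    # Edit stage: decide whether to depart from the retrieved response.
    edits = rng.random() < edit_probability
    # Select stage: report the desirable response only after an edit decision.
    return (desirable if edits else retrieved), edits

rng = random.Random(0)
never = retrieve_edit_select("disagree", "strongly agree", 0.0, rng)
always = retrieve_edit_select("disagree", "strongly agree", 1.0, rng)
print(never)   # ('disagree', False)
print(always)  # ('strongly agree', True)
```

With an edit probability of 0 the retrieved response is always reported, and with a probability of 1 the desirable response is always selected; intermediate values produce a mixture of honest and edited responses across items.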

A main benefit of this approach is that it incorporates the key features of deliberate misreporting confirmed in experimental research. Such features include for example delayed (slower) responses when editing takes place (Holtgraves, 2004; Tourangeau & Yan, 2007). Additionally, it can account for the heterogeneity of behavior between the test takers (Kuncel et al., 2011; Robie et al., 2007) and within the test taker's profile (Brown & Böckenholt, 2022). However, it has been argued that the R-E-S framework is not directly applicable in the context of high-stakes assessments. Unlike sensitive survey questions, where the less revealing answer is obvious, personality items require complex judgments about balancing the most favorable impression with other goals and assumptions. These judgments are thought to depend on candidates' ability to identify attributes that are important to demonstrate during selection, so-called Ability to Identify Criteria (ATIC; Klehe et al., 2012).

Recently, Brown and Böckenholt (2022) developed the “Faking as Grade of Membership” or F-GoM model, which considers every individual's assessment as a potential mixture of “real” (retrieved) and “ideal” (selected) responses, with each type underpinned by its own distribution and structure. The model adopts the R-E-S stages but proposes ATIC-related considerations as drivers of decisions to edit own retrieved responses, and a select-stage model accommodating not only for individual differences in the tendency to over-report desirable attributes, but also for differences between items' sensitivity to this tendency to over-report.

Yet, it could be argued that response processes will differ depending on the response format (RS vs. MFC). Frick (2021) proposed the Faking Mixture model, which considers the response process for MFC questionnaires in a high-stakes situation. She argues, similar to Brown and Böckenholt (2022), that the response process is a mixture of two processes, whereby the test taker can respond honestly to some items and fake others, but highlights the rank order selection on MFC blocks as the object and the ultimate outcome of these manipulations. Specifically, she suggests that test-takers in a high-stakes situation could rank items in the same way they would in a low-stakes situation. Reasons for such a ranking decision could include the following: (a) the test-takers perceive their ranking to already be desirable, so an alteration is unnecessary, and (b) when items within the MFC blocks are closely matched on desirability, test-takers may fail to identify the most desirable option and decide to respond honestly (Berkshire, 1958).

Alternatively, test-takers in a high-stakes situation could rank items purely based on their perception of item desirability, resulting in a ranking that is not reflective of test-takers' actual trait levels. In the case of closely matched blocks, given a certain degree of motivation, individuals could either select ranks randomly or activate additional information to inform their ranking decision. However, in the case of poorly matched blocks, test-takers make selections on the basis of item desirability and reach a greater level of agreement regarding the most desirable rank order.

One of the main benefits of the model is that it allows for the variation of faking by block and by person and quantifies the prevalence of a certain rank order (block fakability). Frick (2021) found that MFC-mixed blocks had a higher fakability than MFC-matched blocks. Yet carefully matching items on their desirability within blocks did not result in lower fakability parameters for all blocks. Frick concluded that test-takers engage in a far more complex and fine-grained evaluation of item desirability when items are combined in blocks compared to individual presentation.
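The intuition that a block is more fakable when test-takers converge on one rank order can be illustrated descriptively. The sketch below uses invented rankings and a hypothetical helper, `modal_order_share`, to compute the share of respondents who give the most common rank order for a block. It is a simple descriptive stand-in and should not be read as the fakability parameter estimated in the Faking Mixture model.

```python
from collections import Counter

def modal_order_share(rankings):
    """Return (most common rank order, share of respondents giving it)."""
    counts = Counter(tuple(r) for r in rankings)
    order, n = counts.most_common(1)[0]
    return order, n / len(rankings)

# Invented data for illustration: five respondents ranking a three-item block.
mixed_block = [("A", "B", "C")] * 4 + [("B", "A", "C")]
matched_block = [("A", "B", "C"), ("B", "A", "C"), ("C", "A", "B"),
                 ("A", "C", "B"), ("A", "B", "C")]

print(modal_order_share(mixed_block))    # (('A', 'B', 'C'), 0.8)
print(modal_order_share(matched_block))  # (('A', 'B', 'C'), 0.4)
```

In the invented data, the block with an obvious desirability ordering shows high agreement (0.8), whereas the block with closely matched items shows lower agreement (0.4).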

5 STUDY AIMS

Although there is a large body of quantitative research investigating the response process of faking on personality assessments, for both RS and MFC, only a few studies have yet qualitatively investigated the underlying processing mechanisms of faking when responding to MFC in a high-stakes context (e.g., Sass et al., 2020). Therefore, this qualitative study aims to explore faking behavior when completing an MFC personality assessment. Of particular interest is verifying the stages of the R-E-S process model (and of the F-GoM model as its high-stakes assessment counterpart) in forced-choice responding. Although some obvious modifications to the stages can be envisaged, for example ranking the items before a select decision is made (e.g., Frick, 2021), it remains to be seen how salient these considerations are to test takers at each stage, and what factors influence them. Moreover, the extent to which the edit and select decisions are influenced by how items within blocks are matched on desirability needs to be investigated.

6 METHODS

6.1 Participants

A total of 32 participants were recruited using the online social media platform LinkedIn. Of the 32 participants, 21 (65%) were women, and 11 (35%) were men. Ages ranged from 19 to 63 years (M = 29.5, SD = 11.74). Ten different nationalities were represented in the sample. For a specific breakdown, see Table 1, which also displays information about participants' employment status and occupations.

| Nationalities | % |
|---|---|
| UK | 37.5 |
| Germany | 21.9 |
| India | 12.5 |
| US | 6.3 |
| Ireland | 6.3 |
| South Africa | 3.1 |
| Cyprus | 3.1 |
| Slovakia | 3.1 |
| Ukraine | 3.1 |
| United Arab Emirates | 3.1 |

| Occupations | Number of participants |
|---|---|
| Student | 8 |
| HR/business consultant | 4 |
| Psychologist | 4 |
| Research analyst | 2 |
| Administrative role | 2 |
| Government affairs trainee | 1 |
| Controlling intern | 1 |
| Project coordinator | 2 |
| Deputy editor | 1 |
| Customer account manager | 1 |
| Business support officer | 1 |
| Specialist in business processes | 1 |
| Lecturer | 1 |
| Service specialist | 2 |
| Software engineer | 1 |

| Employment status | Number of participants |
|---|---|
| Employed | 25 |
| Self-employed | 3 |
| Unemployed | 4 |

6.2 Materials

6.2.1 Questionnaire

- A. I reach conclusions quickly
- B. I maintain my opinions when others disagree
- C. I tell people exactly what I think
- D. I have old-fashioned values

6.2.2 Answer sheet

An answer sheet was designed to record general demographic information such as age and gender as well as participants' responses on the personality questionnaire.

6.2.3 Interview guide

A semistructured interview guide was developed to help the interviewees to articulate their cognitions in a consistent manner. Thus, the guide covered six areas of interest: thoughts about the retrieval of information when first confronted with statement blocks, the decision-making process whether to edit the retrieved information, the decision-making process of selecting the final response and factors influencing the selection, general difficulty of answering items, and the use or nonuse of strategies and underlying reasoning. Each interview started with a question about the retrieval process (What was the first thought that came to your mind when presented with a statement block?). Depending on the given answers, the researcher flexibly adjusted, following the logic of the conversation whilst making sure that all six areas of interest were covered at the end of the interview. All interviews ended with the researcher asking the participants for general thoughts and comments and whether they wanted to add something to the conversation that was not yet covered.

6.3 Procedure

All participants received an information sheet and consent form via email. Participants who agreed to take part returned the signed consent form via email. In return they received an answer sheet as well as an invitation link to the virtual meeting room that was set up using an online video conference tool. Participants were informed that the researchers were designing a personality test for selection that aimed to minimize manipulation by job applicants seeking to present themselves in a better light. They were told that their help was needed to identify possible strategies that could be utilized by an applicant to manipulate the test in their favor.

At the beginning of each video conference session, participants were presented with the preselected blocks from the OPQ32i (on-screen) and were asked to complete the blocks individually in silence to familiarize themselves with the questions. Participants were instructed to complete the questionnaire as if they were applying for their dream job. Although many studies that employed fake-good instructions picked a specific job so that all participants faked toward the same profile (Robie et al., 2007; Wetzel et al., 2021), we decided to implement “faking to dream job” instructions (a different version of fake-good instructions) for several reasons.

Arguably, direct “fake good” instructions (e.g., to fake fulfilling certain job criteria or generally) create a “fake” faking situation. Thus, participants might not be thinking what they would think when they actually apply for a real position, but would most likely utilize heuristics about the target position.

Moreover, Guenole et al. (2022) raised concerns about utilizing “faking to job” instructions with a heterogeneous sample, suggesting that: (a) some people might be better able or better positioned to fake the predetermined job role than others, (b) people might have very different motivations and attitudes toward the nominated job profile, and (c) these differences might lead to a lack of motivation and/or interest to follow the faking instructions in a number of participants. Thus, in their study they presented “faking to dream job” instructions to overcome these limitations and found that the heterogeneity of job roles that participants imagined did not negatively impact the results of latent variable models. Consequently, we adopted the “faking to dream job” instructions as proposed by Guenole et al. (2022).

After 10 min of filling out the questionnaire, participants were interrupted, and the interview began. The purpose of letting the participants complete the questionnaire only partially was to give them an opportunity to experience the question format and the process of answering, yet not tire them out before talking about the response process. We hoped that when the interview began, participants would have salient memories and the energy to be able to produce detailed verbal descriptions.

At this point, the researcher drew participants' attention to the MFC blocks and asked them to share their thoughts following the semistructured interview guide. Importantly, the researcher asked probing questions such as “How exactly would an applicant reach that decision?”, “Do you think there are other ways an applicant could manipulate the answer?”, or “What would an applicant do if they do not know how to answer and why?”. We avoided questions directly addressing the participants' experiences, particularly those alluding to the possibility of them faking. We decided to use an indirect questioning approach to create a safe space between the interviewer and the interviewee. This is important when discussing sensitive topics such as lying or faking. Research has shown that, in such settings, an indirect questioning approach makes participants more willing to open up and share their own perspectives and experiences without the researchers having to ask them directly (Kvale & Brinkmann, 2009). After discussing all questions, participants were given the opportunity to add anything else and were thanked for their participation. They received a verbal debrief, and a written copy was supplied via email.

6.4 Data analysis

Considering the nature of our research question and the semistructured interview guide used during the focus groups, we decided to conduct a Reflexive Thematic Analysis (RTA). The benefit of this type of analysis over other approaches is that it is a theoretically flexible interpretative approach to qualitative data analysis (Braun & Clarke, 2006). To address a common criticism of Thematic Analysis (TA) in general, namely a lack of concise procedural guidelines, we followed the six systematic steps of RTA as outlined by Braun and Clarke (2006). Furthermore, as recommended by Braun and Clarke (2021), before the analysis we made choices between: (1) essentialist versus constructionist epistemologies, (2) experiential versus critical orientation, (3) inductive versus deductive analysis, and (4) semantic versus latent coding.

First, in our analysis we adopted a constructionist epistemology, meaning that we viewed the relationship between language and experience as bidirectional. Thus, we recognized the importance of language in the social production and reproduction of meaning and experience (Burr, 2015) and reflected this viewpoint in the interpretation and development of codes and themes by considering not only the recurrence of information perceived as important but also its meaningfulness. Thus, for a theme to be considered important it should appear within the data repeatedly. Yet the mere fact that something is repeated frequently does not make it meaningful or important to the analysis.

Second, we adopted an experiential orientation focusing on how a given phenomenon is experienced by our participants. Thus, we accepted the meaning and meaningfulness of the phenomena as described by the participants, knowing that the thoughts, feelings, and experiences are subjective. We appreciate that all three are reflections of internal states held by our participants.

Third, we adopted a mixture of inductive and deductive coding and analysis. Although we held certain theoretical assumptions before conducting the analysis, we first adopted an inductive approach to the data, to appreciate participants' viewpoints on their own terms and to avoid overlooking significant insights because of our prior assumptions and biases. Later in the coding process we adopted a deductive perspective. Further information about the inductive and deductive coding process is provided in the coding section.

Fourth, in this study we adopted a mixture of semantic and latent coding. Whereas semantic coding focuses on the explicit surface meaning of the data without further investigation of what a participant has said, latent coding goes beyond the descriptive level and attempts to understand participants' underlying assumptions, ideas or hidden meanings (Braun & Clarke, 2006). Please see the coding section for detail about how we utilized semantic and latent coding.

6.4.1 Familiarization stage

After having conducted all 8 focus groups, we started to transcribe the audio files. Each completed transcript was checked against the original audio recording for accuracy. As suggested by Braun and Clarke (2006), we viewed the transcription process as an important step in the familiarization stage. While reading and rereading the data set, we started to make initial notes on an individual transcript basis, but also about the overall data. This helped us to become immersed in the data and intimately familiar with it.

6.4.2 Coding

This phase involved the generation of concise labels (aka codes) to identify and capture important elements and features of the data. The entire data set was coded in three rounds. The first two rounds followed an inductive approach, where we derived codes from the data without considering any prior theory, allowing the data to create its own narrative. The third round of coding followed a deductive approach.

More specifically, the first round was descriptive (semantic) coding, whereby we identified specific issues the participants talked about and gave them descriptive labels. For example, we created the code “memories of past behavior” and, throughout the coding process, looked out for participants talking about memories of past behaviors and variations of this. The second round was interpretative (latent) coding, whereby we looked at the data again, now asking how this issue came about and in what context. For example, memories of past behavior might have been constructed “as first thought when reading a given statement.” Then, throughout the process we looked for interactive variety within the code. For example, we found the variation “memories of past behaviors absent when reading a given statement.”

The third round of coding was also interpretative (latent), yet we followed a deductive, theory-driven approach. Thus, we now drew upon external theory and concepts (top-down sense-making) to frame the codes and understand them relative to process models of faking. At this stage, themes began to be abstracted from the data. For example, “memories of past experiences” became part of the theme “activation mechanism” later in the analysis.

6.4.3 Generating initial themes

Next, we examined the codes to identify and develop broader categories of meaning that would potentially form our themes. Here, we call them “contestant” themes. Then, we gathered data that was relevant for a given contestant theme to assess its feasibility and viability.

6.4.4 Developing and reviewing themes

At this stage we started to review our contestant themes to identify a “…pattern of shared meaning underpinned by a central concept or idea” (Braun & Clarke, 2019, p. 10). Thus, we reviewed all contestant themes against the coded data to establish whether the themes represented the data well and whether they told a coherent story while addressing the research question. We further developed our themes by combining contestant themes or sometimes splitting one theme into separate themes. When we thought that a theme was not thoroughly supported by the data, we discarded it.

6.4.5 Refining, defining, and naming themes

In this stage we defined the focus of each theme and its scope, making sure that each theme presented a coherent narrative of the data. In the last step of this stage, we chose the final theme names.

6.4.6 Writing up

The final stage involved bringing all themes and data extracts together. In the discussion section we contextualized our analysis in light of the existing literature.

7 RESULTS

1. Activation mechanisms (past behaviors (work/home), self-concept (work/home), or ideal employee)
2. Ranking of statements
3. Factors influencing the block-specific decision to edit one's ranking
4. Making a selection decision and item desirability matching
5. Factors influencing the tendency to edit the questionnaire as a whole

In what follows, we discuss the first four themes pertaining to faking forced-choice blocks. The fifth theme, pertaining to general considerations involved in faking an assessment, does not contribute to the research questions posed in this study and is therefore not included in the main report. However, for reasons of completeness and reproducibility, we provide a full report of theme 5 in an online Supplement.

7.1 Activation mechanisms

Although it has been argued that, when responding to personality questionnaires, individuals activate information from memory of past experiences, it appears that this is not always the case. Some participants reported that they did not activate past experiences but rather recalled their general self-concept or information about the job requirements and/or stereotypical ideal employees. Thus, three distinct activation pathways could be identified.

7.1.1 Activation of memory of explicit past experiences

Some participants reported that when first confronted with a statement within a given block, they retrieved past experiences regarding their behavior in a given situation. They retrieved a multitude of examples to help them make a judgment at a later stage. Participants also engaged in explicit behavioral reflection to identify their attitudes regarding a specific item. For example, Participant #1 (from here referred to as P#1) described this process as follows: “… the first thing that comes to my mind is, I think back to actual memories in whether I've been in a situation where the way I have behaved has reflected this or not. So, for example ‘I think breaking the rules is wrong’ I kind of imagine various rules that you have at work or in general life and think, you know, have I really been a rule breaker or not, I suppose…”

Thus, some individuals activate and retrieve information from episodic memory, which has been defined as the memory of everyday events that can be consciously recalled and/or explicitly stated. These memories are very detailed and include contextual information such as time and place but also associated emotions (Madan, 2020). Yet, it appears that individuals access a more specific part of the episodic memory system, namely episodic self-knowledge, which entails memories of specific events involving the self, for example, personal episodes (autobiographical memories), to identify when their behaviors were congruent or incongruent with specific personality traits (Sakaki, 2007). This type of memory retrieval is cognitively demanding and requires a certain level of motivation from the individual to engage in such a process (Hasher & Zacks, 1979). Evidently, this retrieval process can only occur sequentially for every item within a block.

Furthermore, some participants described the process of evaluating their own personalities in different contexts. This was especially common when people found it difficult to make a decision. They started to reflect on their personality at work versus their personality at home (in private) and highlighted that they could clearly identify a difference in their own behavior. Thus, evaluating one's personality in a clearly defined context was seen as helpful. For example, P#2 explained that …when I found these ones tricky was to try and separate what would be me at home versus me being at work. So, … at home, I would probably have quite different responses to how I was at work. Similarly, P#3 stated: I had two competing things of work and not work. Now, I started looking at it and thinking, actually, you know, maintaining your opinions when others disagree, I think at work I am probably less likely to maintain my opinions. Because the nature of my role is I kind of need to almost agree with everyone to some extent. And whilst I'm, you know, at home, I might be more opinionated than I am at work… So, you start to sort of imagine your different kind of not different personalities, but you start to imagine different types of yourself …

7.1.2 Activation of general moral rules, values and attitudes

Other participants highlighted that they did not think back to past experiences when confronted with a given item. Instead, they accessed general information about their personality, including their moral standing, current values, and general attitudes. P#5 highlighted: My first reaction isn't thinking of my own behavior. It's more of a general moral rule… P#4 shared a similar experience: I wasn't really thinking about the past. I was rather thinking about, at the moment, how I would evaluate myself. I didn't really have certain experience in mind or certain things in the past, I was just thinking what feels most like me.

Thus, some participants appear to retrieve semantic self-knowledge. In contrast to episodic memory, semantic self-knowledge is information that has been isolated from personal episodes, such as a self-concept or abstract trait knowledge (i.e., the knowledge that one is reliable, compliant, or lazy) (Sakaki, 2007). Importantly, research has indicated that the retrieval of abstract trait knowledge is less effortful and requires less motivation compared to accessing behavioral exemplars (Budesheim & Bonnelle, 1998; Sakaki, 2007). In addition, as described above, context-specific information was also seen as helpful within this type of activation.

7.1.3 Activation of job requirements

Finally, some participants described that the first thought that came to mind was about employer expectations. Thus, they activated information about a stereotypical good worker, “ideal employee,” and/or job requirements, disregarding their own personality in its entirety. P#6 explained: First what I thought was what the employer would ideally want in … their ideal candidate, and P#7 highlighted: I think I'd probably not even think about how it relates to me. In this type of retrieval, participants do not activate ideas, concepts, or facts related to their own personality. Rather, they activate information that is relevant and directly helpful for achieving the goal of “passing” the personality assessment.

7.2 Ranking of statements

Next, no matter what retrieval process was engaged, all participants compared each statement to their activated information, evaluating to what extent a given statement is in line with their activated knowledge. Based on this comparison, a ranking is created. P#8 described: Yeah, first I make a decision for every statement. But then of course, I compare and order it because I should take just two answers [most and least like me] out of four. Specifically, participants activating past experiences judge each statement individually, evaluating, for example, the frequency of a given behavior or the context in which it occurred. This seems to be particularly important when a participant identified with all presented behaviors. P#1 explained: You could be probably all of these things at the same time. And because of that, I felt I had to sort of rank them in the amount of which I do them. The judgment stage for participants activating information based on semantic self-knowledge can elicit binary categorization such as “yes that is me” or “no that is not me,” which appears to be quick and effortless. For example, P#4 described the process as follows: I suppose I've just tried to… think of my personality in general. And some of the questions were really… obvious straightaway,… yeah, that's really me. And even though the other ones might describe me as well, there'll be one particular statement that… would jump out … and… you could not say that about me.

After preliminary ranks have been allocated, participants may activate job requirements next and make a ranking of statements based on what is desirable. P#9 explained: I went through it and kind of done this review of which one is the most accurate for me as a person. I did that and then went right, okay, ignore that throw that away. Now let's think about what they want to hear. So, I went through all of them again and I thought which one is the most positive, and which one is the most negative?

If a participant activated information based on job requirements and/or employer expectations, they interpret each statement to identify what attribute is being assessed. Next, a value judgment is made considering item relevance and desirability to create an “ideal” rank order. Unlike the ranking processes based on retrieved information, it appears that this ranking is not preliminary and represents participants' final responses. P#7 presented a detailed description of this process: … I tried to filter them out by seeing what each answer represents. For example, I think breaking the rules is wrong. I don't know, maybe that sort of represents something negative, something that the assessor person wouldn't really like, because it means that you're not really open to thinking outside the box. And I want to be first, I guess that's sort of being competitive. So, I kind of looked at it that way…. And then after that, I'd probably be like, okay, which one is the more positive trait? Which one's more negative? And then I'd select it that way. Which one I feel like, the assessor rates and ranks the highest.

7.3 Factors influencing the block-specific decisions to edit one's ranking

Participants who have ranked items based on information about job requirements do not engage in any form of editing. This is because their ranking already represents their perception of the most desirable response, ready to be reported. By contrast, participants who have activated past experiences or information about the general self and created preliminary rank orders may evaluate their answer as creating a disadvantage in getting the job. A disadvantage may be identified when participants find a discrepancy between their honest and “ideal” ranking. Then, they might decide to edit the honest rank order and report the ideal rank order. P#10 explained: I try to understand which one [statement] would be the most accurate for me. Now, if it's a conflict, for example, I come up with an answer that this statement, which might be identified as bad or morally wrong, then I have to make a choice. Do I go ahead and give the honest answer, or do I lie? Thus, participants' decision to edit their retrieved responses seems to depend on several factors. This study identified three factors, as discussed below.

7.3.1 Avoiding looking bad

Participants highlighted that one of their main goals was to avoid looking bad. This appeared more important than looking their best. Consequently, if they thought that their true ranking would be perceived as undesirable by the assessor, they would engage in the editing process. Yet, if they thought their honest ranking was positive and desirable, they would not engage in the editing stage even if a more desirable ranking was possible. P#4 explained: I wouldn't change it if …this seems like a good answer but maybe not the best, then I keep it. If … I think this makes me look bad, then I would adjust it. Similarly, P#10 stated: My strategy was to be as honest as possible without making myself look bad. You know, honesty was kind of the first thing …, I didn't care if I looked best. I just didn't want to look bad, so if it was going to look bad, I'd lie. But other than that, I'd rather be honest than being in the most positive possible light.

This supports the notion that total score maximization is not a common objective for applicants when completing a personality questionnaire, as suggested by Kuncel et al. (2011) and Ziegler (2011). Interestingly, it appears that test takers are capable of identifying the more desirable rankings but decide against them. In support, a study conducted by Landers et al. (2011) indicated that even if a simple strategy that would result in maximal scores is available to the applicant, only around 7% utilize it. The likely explanation for this is the relatively high utility people place on the “staying true to self” goal compared to the “appearing impressive” goal. Indeed, some of our participants explained that their choice not to edit their honest responses was driven by their need to represent themselves truthfully to at least some extent. Yet again, this was only the case if they believed that their true answer was at least somewhat desirable. For example, P#11 highlighted: Well, in the end, I put my cross to answer B. I did it because I thought yeah, answer A would probably be the more desirable trait in the end, but I just think my decision process was, as I said before, I want to stay true to myself.

7.3.2 Avoiding statements with ambiguous desirability

To make desirability judgments, participants also engaged in an elaborate process of identifying and interpreting the flipside of each statement. For example, participants evaluated whether a seemingly positive statement could be interpreted or misconstrued as something negative. Participants explained that they would avoid selecting such statements as “most” like them or select them as “least” like them. P#12 explained: I was least likely to select A because … you cannot twist it into something positive. And …<B and C> are things that you could … make …into positives, but you can't do anything for <A>.

7.3.3 Avoiding inconsistent responding

Another factor in deciding to edit one's ranking was the consideration of whether the assessor would be able to identify faking. The participants believed that identification would be difficult if they created a consistent profile. These findings are in line with results presented by König et al. (2012), highlighting that people engage in faking if they believe the assessor will not be able to detect inconsistencies. P#12 admitted: …I felt like I was doing more editing, trying to second guess the questions and trying to give an overall consistent profile. A similar process was reported by P#7, who explained: …I just scan through and make sure things are pretty consistent…and I have gone back and checked if they will be able to tell if I am cheating… Moreover, P#4's reasoning was as follows: …What did I pick on the last one? Would I be really inconsistent if I tick something different this time? And if anything influenced me to not answer honestly it would have been that…because I really want to avoid there being any discrepancies…

Nevertheless, several participants highlighted that faking a questionnaire consistently becomes increasingly difficult the longer the questionnaire gets. They explained that after a while they can identify items assessing the same trait but worry that they cannot remember previous (faked) answers and therefore cannot respond consistently. They suggested that the only option to create a consistent profile on a lengthy questionnaire is to answer truthfully. Thus, the length of the questionnaire seems to be an important factor influencing the decision to edit. P#7 explained: So, I think if you are in a lengthy questionnaire, and they're asking you the same question five times at different intervals, it is going to be very difficult to keep track of your answers unless you're being honest.

Consequently, people appear more likely to manage impressions when the questionnaire is short. This implies that answers should become more honest towards the end of a longer questionnaire, because it becomes more difficult to stay consistent. Participants also indicated that creating consistency becomes easier when they are allowed to review their answers, as highlighted by P#7, who pointed out: … I just kind of scan through and make sure things are pretty consistent…but yeh, I have gone back and checked if they will be able to tell if you are cheating…

7.4 Deciding what ranking to submit and item desirability matching

Participants reported different experiences when deciding what ranking to submit, depending on how items within blocks were matched on desirability.

7.4.1 Matched undesirable blocks

Participants explained that they had a hard time ranking items if they all represented a negative trait or behavior. P#10 explained: … I think the all-negative block is probably the hardest one. And one that you want to just make sure you pick the least negative… Additionally, some participants reported spending a long time making a decision, as highlighted by P#13, who stated: I spent a really long time thinking about that, as to whether that would be a bad thing or not…

Thus, when participants are presented with matched undesirable blocks, each decision seems incongruent with achieving one's goal of presenting oneself in a good light. Consequently, they think about how to minimize the potential damage that might be caused by their answer. As evident from the quotes above, this perceived incongruence appears to promote direct comparison of item desirability and subsequently faking, as was feared by some in the early days of forced-choice measurement (Feldman & Corah, 1960).

7.4.2 Matched desirable blocks

Some participants explained finding it somewhat difficult to make a decision when all options represented their personality. This difficulty was especially present when picking the “least like me” among options that were equally desirable and relevant to the job. Yet, participants explained that if this difficulty arose, they were more likely to answer truthfully. This is because they thought there should not be any adverse consequences for honesty and that being truthful was a safe choice. P#4 explained this process as follows: So, since I could instantly identify my true answer, I would select that one…and now the challenge was identifying the least <applicable> one. In that case, I, again went with an honest answer here, because there was nothing much to lose, it's all positive.

Some participants did not experience any difficulties deciding on matched desirable blocks. They highlighted that because everything was positive, there was no reason to fake or adjust one's answers. Therefore, in line with the above, they decided against editing their response and selected their true answer. For example, P#13 explained: …It was easy for me to answer because it's all good. So, I always choose the most truthful ones…

7.4.3 Mixed desirability blocks

Most participants reported that mixed blocks were easier to manipulate, as right or wrong answers were obvious. For example, P#14 argued: Well, the first one was easy. Because there is an obvious right answer; and P#10 explained: I have trouble keeping calm during a major event is definitely an undesirable characteristic, which is why in the first place I avoided it, even though it applies to me I do have trouble keeping calm. Thus, obviously desirable items were selected as “most like me” and obviously undesirable items were avoided or selected as “least like me” even if they were characteristic of that person. Moreover, to identify the obvious right or wrong answer, participants based their judgments solely on knowledge or preconceptions about job requirements, disregarding their own personality. P#6 stated: I agree that some questions they look like they have an obvious answer. Just by looking at the job requirement and company culture…So it makes it easier to manipulate the test. Participants also indicated that they were less honest on mixed blocks compared to matched desirable blocks, which was highlighted by P#4 explaining: …I think you can be more honest on, <matched blocks>, than …if you had a mixed block of answers.

7.4.4 Ambivalent blocks

Yet sometimes there is a degree of ambiguity as to whether the items are desirable or undesirable, making it difficult to decide on what basis to make choices. Participants described this process as overthinking, which increased uncertainty regarding their answers and particularly the impact of a given answer. Participants reported having selected more honest answers the more they engaged in overthinking. P#1 explained: I just found these statements the meaning is quite vague. So, I think I just tried to be as honest as I could… P#10 further highlighted: Like it can be good as much as it can be bad. So, it was more difficult with these questions, and I think the more I think about it, the more I tend to say the most truthful answer about myself. Taken together, it can be suggested that mixed blocks are the easiest to manipulate in comparison to matched desirable and undesirable blocks. Participants feel they cannot be as honest on mixed blocks compared to matched desirable blocks, because an obvious right choice can be identified in pursuing their goal of getting the job. Thus, honest reporting seems to become less salient the easier it is to identify item desirability in a given job context.

8 DISCUSSION

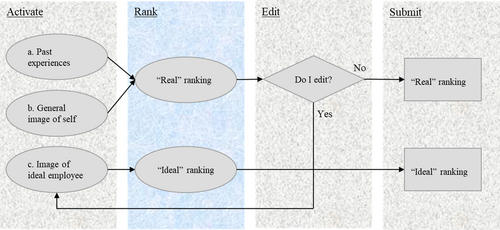

This qualitative study investigated the underlying process mechanisms of faking when responding to MFC personality assessment in a high-stakes context. Specifically, we explored and identified factors influencing the test-takers' decisions to fake specific items and blocks. Based on these findings, we propose a new response process model of faking forced-choice items, the A-R-E-S model, displayed in Figure 1. This model integrates the stages of the R-E-S model (Böckenholt, 2014) whilst proposing some noteworthy modifications. Importantly, the A-R-E-S model describes the response process on an item-by-item basis. Thus, the same individual might engage in one specific pathway on one item and engage in a different one on the next. Moreover, an individual may revisit previous stages or jump stages within the decision-making process on a given item.

8.1 The A-R-E-S model

8.1.1 Stage 1: Activate

Overall, participants described three distinct types of information that can be activated when presented with MFC blocks. Specifically, they either activate past experiences, information about their general self-image, or information regarding the image of an ideal employee. Similar differentiations in activation mechanisms have been identified by Hauenstein et al. (2017). As part of their mixed-method study, they identified differences in intrapsychic processes as a function of motivation to fake and item characteristics. The results indicated that participants instructed to fake good engaged in an elaborate response process based on semantic analyses and trait-oriented processing. This supports our findings that some test takers activate information based on semantic self-knowledge. Moreover, Robie et al. (2007) highlighted that people either activate traits, activate job requirements, or activate both. In their study, they categorized participants as either honest, slight fakers, or extreme fakers depending on the initial activation mechanism. Specifically, participants were classified as “honest” if they responded to all items on the basis of trait-based information triggered by trait-relevant situational cues. By contrast, participants were classified as “extreme fakers” if they answered all items from the perspective of an “ideal” applicant. Participants were classified as “slight fakers” if they activated trait-based information (self-referencing) but also considered how an ideal applicant should respond.

Yet, research has shown that individuals fake on an item-by-item basis as there might not always be the need to fake (e.g., Brown and Böckenholt, 2022). Thus, the classifications suggested by Robie et al. (2007) might be too crude. Instead, one person can be categorized as an extreme faker on one question whilst being honest on others. With this, “slight fakers” might simply be people with a mixture of “extreme” (faked) and “honest” answers. Thus, we find it more helpful to think of memberships in faking classes as not mutually exclusive, which supports the findings of Brown and Böckenholt (2022), who proposed to consider every individual's assessment as a potential mixture of “real” (retrieved) and “ideal” (faked) responses.

Nevertheless, it appears that the overall activation mechanisms apply but can vary on an item-by-item basis. Support for this can be taken from research conducted by Robinson and Clore (2002). They were interested in which factors might influence emotional self-reports and made a distinction between episodic memory, which holds information about everyday events that can be consciously recalled with specific details such as time and place but also emotions, and semantic memory, whereby individuals form general concepts about the self that become dissociated from the experiences and behaviors of everyday life. They found that when the delay between an experience and its retrieval is long, the information becomes increasingly inaccessible, leading the individual to shift from an episodic to a semantic retrieval strategy. Considering these results in light of the findings of the present study, it could be suggested that the recency with which participants experienced given situations might have influenced the activation of one memory system over the other. This would also suggest that the memory activation strategies are item dependent.

Thus, one block (e.g., matched desirable block) might trigger the activation of trait-based information, whereas the next block (e.g., mixed block) triggers the activation of information concerning an “ideal” applicant and so forth. This also questions the assumption made by the R-E-S model that information about the self on a given item is always retrieved, and only then possibly edited. Our evidence suggests that individuals do not always activate information about the self and, thus, do not engage in the retrieval of explicit self-knowledge. Instead, they retrieve their knowledge of job requirements and, subsequently, an image of an “ideal employee.” Therefore, we label the first stage “activate” to highlight that, unlike the “retrieve” stage of the R-E-S model, items can trigger the activation of either self-characteristics or “ideal employee” characteristics.

Moreover, some participants highlighted the importance of contextual information, as they were able to identify differences in their own behavior depending on whether they thought about themselves at work or at home. Thus, the activated information seems to be influenced by the context in which activation is triggered. This idea is supported by Kunda and Sanitioso (1989), who highlighted that test takers often present an inaccurate self-image when no contextualized instructions are provided. By contrast, the more specific the instructions, the more likely individuals are to activate the appropriate self-knowledge for the given context. Consequently, providing more context-specific instructions that activate test takers' workplace personality might improve the test's predictive validity if the test is to be used for selection in an occupational context.

8.1.2 Stage 2: Rank

Participants activating information about an ideal employee will subsequently rank items based purely on the direct comparison of item social desirability in the given context. Thus, they create an “ideal” ranking, reflecting the participants' beliefs regarding the characteristics and behaviors exhibited by an ideal employee, jump the editing stage, and go straight to reporting the “ideal” ranking. These findings are in line with the Faking Mixture model (Frick, 2021), which suggests that test-takers might rank items purely based on their perception of item social desirability, resulting in a ranking that is not reflective of test-takers' content trait levels.

By contrast, participants who activated either past experiences or information about their general self-image will rank the items reflecting their “real” response. We have adopted the term “real” from Brown and Böckenholt (2022), who suggested that the activated information only represents the test-takers' perceived reality and might be affected by subconscious biases. In support, Leng et al. (2020) suggested that individuals deceive themselves whilst forming a preliminary opinion and that this deception tendency is unintentional, deriving from the uncertainty of memory (Paulhus & John, 1998; Paulhus, 2002). Moreover, as highlighted above, contextual information might influence the type of information activated, which in turn impacts the accuracy of the self-image presented by the test-taker. Thus, participants might perceive their rank order to be a real reflection of themselves, but this does not necessarily mean that the ranking also reflects their “true” standing on the given latent traits.

8.1.3 Stage 3: Edit

Participants who have activated past experiences or information about their general self-image and have created an initial “real” ranking have to decide whether to edit their ranking. On the one hand, participants who decide against editing (skipping the edit stage) will subsequently report their “real” ranking. On the other hand, participants who decide to edit seem to revisit the activate stage, now seeking information about the image of an ideal employee. Next, they create an “ideal” ranking which will subsequently be reported. Largely, this stage is well captured by the edit stage of the R-E-S model, although in the case of MFC blocks, test takers make an editing decision regarding not individual items but item ranking, which is influenced by several factors.
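The flow through the activate, rank, and edit stages described so far can be summarized in a minimal illustrative sketch. This is not a formal or validated implementation of the A-R-E-S model: the function names, the numeric self-fit and desirability scores, and the single threshold-based editing rule (standing in for the "avoid looking bad" factor) are all hypothetical simplifications introduced purely for illustration.

```python
from typing import Dict, List, Tuple


def rank(items: List[str], scores: Dict[str, float]) -> List[str]:
    """Order items from 'most like me' to 'least like me' by a score."""
    return sorted(items, key=lambda item: scores[item], reverse=True)


def respond_to_block(
    items: List[str],
    activation: str,                 # "episodic", "semantic", or "job" (hypothetical labels)
    self_fit: Dict[str, float],      # assumed: how well each item describes the self
    desirability: Dict[str, float],  # assumed: perceived desirability for the job
    bad_threshold: float = 0.0,      # assumed cut-off for "looking bad"
) -> Tuple[List[str], str]:
    """Return the submitted ranking and whether it is 'real' or 'ideal'."""
    # Stage 1 (Activate) + Stage 2 (Rank): job-requirement activation
    # leads straight to an "ideal" ranking; the edit stage is skipped.
    if activation == "job":
        return rank(items, desirability), "ideal"

    # Self-related activation (episodic or semantic) yields a
    # preliminary "real" ranking.
    real = rank(items, self_fit)

    # Stage 3 (Edit): simplified "avoid looking bad" rule. Edit only if
    # the item ranked "most like me" would be seen as undesirable.
    if desirability[real[0]] < bad_threshold:
        # Revisit the activate stage with the ideal-employee image.
        return rank(items, desirability), "ideal"

    # Stage 4 (Submit): report the unedited "real" ranking.
    return real, "real"


# Hypothetical three-item block.
block = ["A", "B", "C"]
fit = {"A": 3.0, "B": 2.0, "C": 1.0}
desir = {"A": -1.0, "B": 2.0, "C": 1.0}
submitted, kind = respond_to_block(block, "episodic", fit, desir)
```

In this example the honest top choice ("A") is perceived as undesirable, so the sketch returns the "ideal" ranking. The other editing factors reported by participants (ambiguous desirability, fear of inconsistency, questionnaire length) are deliberately left out and would require additional assumptions.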

8.1.4 Factors influencing the block-specific decisions to edit one's ranking

Most participants explained that one of their main goals was trying to avoid looking bad but not necessarily to look their best, and that their choice not to edit their real responses was driven by their need to represent themselves accurately to at least some extent (i.e., stay true to themselves). Yet, this was only the case if they believed that their real ranking was at least somewhat desirable. Consequently, if participants thought that their real answer would make them look bad, they decided to edit their responses. Thus, it can be suggested that outright lying is uncommon and happens most frequently in blocks involving “key negative items” (items representing undesirable behaviors, the choice of which, in the test taker's opinion, would lead to termination of the selection process). Consequently, most rankings, particularly those emerging from matched desirable blocks, represent the candidates' personality to some extent, driven by internal goals such as the need to stay true to oneself, as supported by the theory of test taker goals introduced by Kuncel et al. (2012). For example, the objective of avoiding looking bad (“appearing impressive”) might override the goal of “staying true to oneself” on some blocks but not others.

For some participants, the decision to edit their real ranking was also influenced by whether they believed the assessor would be able to identify inconsistencies within their answers. If they thought a consistent profile could not be achieved by faking, participants preferred to respond honestly, driven by the fear of adverse consequences. The participants also anticipated that faking consistently becomes increasingly difficult the longer the questionnaire gets, but could be managed if they were allowed to revisit and change their answers afterwards.

8.2 Stage 4: Submit

8.2.1 Influence of items' desirability matching on the submit decision

Overall, it seems that matched desirable blocks encourage honest responding in test-takers, unlike the matched undesirable blocks. In the case of matched undesirable blocks, each decision seems incongruent with achieving one's goal of presenting oneself in a good light, triggering attempts to minimize the potential damage. This perceived incongruence appears to promote direct comparison of item desirability and subsequently faking (Feldman & Corah, 1960). By contrast, although participants seem to still engage in the direct comparison of item desirability when presented with matched desirable blocks, faking behavior does not seem to be directly facilitated. These findings support the argument presented by Gordon (1951), suggesting that when test-takers are faced with equally desirable options, they will report true preferences because any choice is congruent with the goal of presenting oneself in a good light. Test takers feel safe in such situations, and such safety encourages honest responding.

Moreover, ambivalent blocks have the potential to increase participants' cognitive load to a point at which they start to report their honest answers. In support, Stodel (2015) argued that it is highly relevant to consider cognitive load when conducting surveys. He suggested that when participants' attention is divided by additional information or tasks, they should take a route that is less cognitively demanding and provide honest responses. This theorizing was tested and supported by van't Veer et al. (2015), who indicated that individuals with limited cognitive capacity show a tendency to be honest in a situation where having more cognitive capacity would have enabled them to serve self-interest by lying. Taken together, it can be suggested that the MFC format has the potential to increase participants' cognitive load to a point at which they start to report their honest answers. Yet, this only seems to be the case if item blocks include ambivalent items.

Mixed blocks seem to elicit the least difficulty in determining the most desirable answer, making mixed blocks the least effective in faking prevention. In support, research has indicated that a greater level of agreement is found regarding optimal ranking when blocks are poorly matched compared to closely matched blocks (Hughes et al., 2021). This study enabled further distinction of matched blocks, arguing that matched desirable blocks facilitate honest responding more than matched undesirable or ambivalent blocks.

8.3 Limitations and future directions

A limitation of this study is that we did not sample from real applicants at the time of job application. Although we instructed participants to think about a high-stakes situation, it is possible that the process of faking differs in a real selection process. For instance, research has found that the response patterns of real applicants are more similar to those of participants in honest conditions than to those of participants in directed-faking conditions. The literature proposes several reasons, such as test-takers' need to be honest or the fear of getting caught (Kuncel et al., 2011), which is also supported by our findings. Yet, Kuncel et al. (2011) suggested that the false consensus effect (Ross et al., 1977) might best explain these patterns. Consequently, future research should investigate if and how previously held beliefs regarding the prevalence of faking influence a person's tendency to engage in such behavior in high-stakes situations.

Furthermore, our study did not provide context-specific instructions. Although participants were asked to think of their dream job, this instruction could have triggered very different imagined contexts in different participants. Our “imagine dream job” instruction could also have introduced another potential issue. On the one hand, participants who have a clear vision of their dream job might fake to a specific job profile (i.e., “fake to job”). On the other hand, some participants might not have a dream job or might already be established in their careers, which might have led them to fake good in general. This would mean that the instructions we used resulted in a somewhat diffuse faking induction. Yet, many studies have found that “fake good” and “fake to job” lead to different faking profiles (e.g., Bagby & Marshall, 2003; Birkeland et al., 2006). Consequently, we want to highlight that our study most likely conflates several types of faking, which might actually be desirable in a qualitative study exploring themes that emerge when people fake on MFC blocks. We would argue that the more variety in decision pathways we uncover in a relatively small sample, the more likely our findings are to generalize to different contexts. This diffuse faking instruction would be more concerning in quantitative research that aims to control and standardize all conditions, particularly when examining the actual scores obtained from participants. However, given the importance of context-specific instructions, future research should investigate the activation of relevant information in controlled contexts. This is particularly important for the activate and rank stages, as the desirability levels of items depend on the context in which the items are presented.

Another limitation is the use of an indirect questioning approach. Indirect questioning methods are still widely debated (Krause & Wahl, 2022), and although we firmly believe that this method was appropriate in the context of our study, some potential issues might have occurred. For example, some participants might have referred to their own experience with the personality test they completed in the study, others might have referred to previous personal experiences they had as real applicants (which might or might not have involved MFC formats), and others might have actually revealed what they predict a “typical other” might do or think. This objective judgment might not always be predictive of the participants' own intentions or behaviors. Nevertheless, as evidenced by our data extracts, many participants actually reported on the experience of completing the MFC questionnaire in the study, making the limitations above less concerning. Yet, we must be aware that participants reported on a limited amount of experience with the question format, given the length of the questionnaire and the time constraints for completing it in this study.

Additionally, given the importance of item desirability matching, more advanced methods need to be developed to assess item desirability in high-stakes contexts. This is because the MFC format prevents faking only if all response options within a given block are perceived as equally desirable by the test-taker. Yet, these perceptions can vary between test-takers and between contexts. It seems likely that items change in their perceived desirability depending on the context in which they are presented and on the items presented alongside them (Feldman & Corah, 1960; Hofstee, 1970). Future research should aim to achieve full control over item desirability.

8.4 Implications for practice of personality assessment

Considering the evidence gathered in this qualitative study, we formulated four recommendations that can be implemented in practice to potentially decrease faking on MFC personality assessments. First, all personality assessments should include context-specific instructions (e.g., to think of one's own behavior at work) to facilitate the activation of relevant self-knowledge and thus more accurate responding. Second, we suggest that the personality assessment needs to be of sufficient length (the ideal length remains to be established), as honest responding seems more likely towards the end of a longer questionnaire, because faking consistently becomes increasingly difficult. Third, as an extension of the previous point, test-takers should not be allowed to revisit answers, which removes the possibility of distorting responses to create a more consistent profile. Lastly, test developers should be aware that blocks of mixed desirability and matched undesirable blocks seem to be more susceptible to faking than matched desirable blocks. Thus, we recommend the use of matched desirable blocks over matched undesirable or mixed blocks, where possible. We appreciate that blocks combining items that are positively and negatively keyed to the traits they measure are sometimes needed to provide accurate person scores in forced-choice measurement (Bürkner, 2022). In this case, we propose writing items that do not immediately appear undesirable although they measure the “negative” end of a trait (e.g., “I speak up when people are wrong” is negatively keyed to Agreeableness but is not generally an undesirable behavior). Such “negatively keyed but desirable” items can then be combined with “positively keyed” items of similar desirability. Taken together, we believe that implementing these recommendations can increase the likelihood of honest responding on MFC personality assessments.

CONFLICT OF INTEREST

The authors declare no conflict of interest.

Open Research

DATA AVAILABILITY STATEMENT

The data that support the findings of this study are available on request from the corresponding author. The data are not publicly available due to privacy or ethical restrictions.