Perspectives on water quality analysis emphasizing indexing, modeling, and application of artificial intelligence for comparison and trend forecasting

Abstract

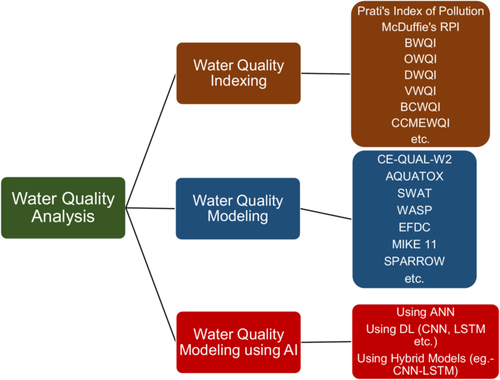

Freshwater, essential for civilization, is at risk from untreated effluents discharged by industries, agriculture, urban areas, and other sources. Increasing demand for, and abstraction of, freshwater further aggravate the pollution scenario. Hence, water quality analysis (WQA) is an important task for researchers and policymakers seeking to maintain sustainability and public health. This study gathers and discusses the methods researchers use for WQA, focusing on their advantages and limitations. It also compares different WQA methods and discusses their trends and future directions. Publications on WQA from the past decade are reviewed, and the insights are aggregated into categories. Three major approaches are recognized: water quality indexing, water quality modeling (WQM), and artificial intelligence-based WQM. The methodologies adopted to execute these three approaches are presented and used to formulate a comparative discussion. Researchers have used statistical operations and soft computing techniques to combat subjectivity error in indexing. To achieve better results, WQMs are being modified to incorporate the physical processes influencing water quality more robustly. The use of artificial intelligence was primarily restricted to conventional networks, but in the last 5 years applications of deep learning have increased rapidly and have shown good results when feature-extraction and time series models are hybridized. Overall, this study is a valuable resource for researchers dedicated to WQA.

1 INTRODUCTION

A river flows under gravity, carrying water from precipitation, snowmelt, and part of aquifer storage (as base flow) towards the ocean or, as a tributary, into a larger river. Rivers have been vital for civilizations, providing water and major transport routes while supporting diverse ecosystems (Giri, 2021). Globally, river water is used for drinking, domestic, industrial, and agricultural purposes. The Earth's water reserve lies mainly in the oceans, with ~96% being saltwater and only 1.1% freshwater. Aquifers hold 99% of the freshwater, while rivers comprise just 0.0001% of total water reserves. Despite this small share, rivers are crucial to human life and have been studied extensively in fields such as hydrology, hydraulics, ecology, and environmental science (Syeed et al., 2023).

The population boom has increased water demand, putting pressure on all freshwater sources, including rivers. Anthropogenic influence has accelerated river pollution in two ways: first, over-extraction of water raises pollutant concentrations, and second, human activities add pollutants to water (Schwarzenbach et al., 2010). Industries in river catchments discharge harmful pollutants, agricultural runoff carries contaminants that harm rivers, and domestic sources add further pollution. These combined effects have sparked global research interest in tackling river water quality (WQ) and pollution issues. Researchers worldwide have sought to identify pollutant sources, assess impacts, select key parameters, zone river pollution, predict and monitor river WQ, explore relationships between WQ and river conditions, and evaluate the ecological impact of river WQ (Schaffner et al., 2009). A substantial literature exists on assessing, monitoring, and modeling river WQ and pollution with diverse methods, and many researchers have summarized these efforts. Existing assessment techniques are improving with new modeling approaches, data collection methods, and increased computational power. New methods are emerging, performing satisfactorily, and often outperforming traditional ones. Researchers are reviewing these studies to explore the various methods used globally for WQA and modeling.

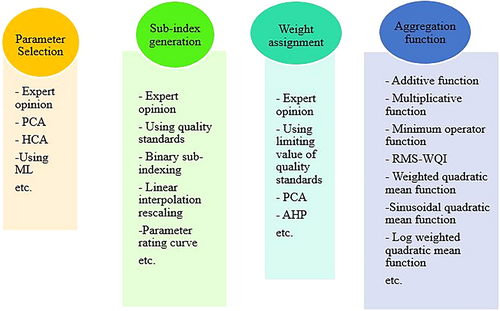

Gupta and Gupta (2021) reviewed papers published during 2010–2021 on water quality indices (WQIs) used to assess river water status. The number of papers in SCOPUS and SCIE journals increased from around 750 to 2500 during this period. A WQI is formed through four steps: selecting quality parameters, scaling them uniformly, assigning weights, and defining an aggregation function for the final result. Each of these steps can be executed in different ways (Sutadian et al., 2016). Parameter selection is subdivided into three groups based on the number of parameters used: fixed, open, and mixed systems. It involves reviewing the literature, data availability, parameter redundancy, and intended water use. In indexing systems, parameters are selected using expert judgment or statistical methods such as principal component analysis (PCA) or correlation studies. Sub-indexing methods can be divided into those based on expert opinion, standard WQ values, and statistical methods.

The Delphi method, analytic hierarchy process (AHP), budget allocation procedure, and revised Simos' procedure are used in WQI models to determine parameter weights. In most WQI models, the aggregation function is additive or multiplicative. Researchers use modified functions and combinations of additive and geometric methods to address eclipsing and uncertainty. Gupta and Gupta (2021) classified WQIs into four groups: general, specific, designing/planning, and statistical indices. The first ignores the purpose of water use, while the second specifies the quality level for a particular use. The third supports system design or planning, while the last relies solely on statistical methods.

The forms and formulas of WQIs have evolved since Horton (1965) developed the first index. Thirteen indices represent this evolution: the National Sanitation Foundation WQI (NSFWQI) in 1970; Prati's index of pollution (IP) in 1971; McDuffie's river pollution index (McDuffie's RPI) in 1973; Bhargava's WQI (BWQI) in 1983; the Oregon WQI (OWQI), first formulated in the late 1970s and updated in 1995; the Dinius WQI (DWQI) in 1987; the Ved Prakash WQI (VWQI) in 1990; the aquatic toxicity index (ATI) in 1992; the British Columbia WQI (BCWQI) in 1995; the Canadian Council of Ministers of the Environment WQI (CCMEWQI) in 1999; the overall index of pollution (OIP) in 2003; the universal WQI (UWQI) in 2007; and the weighted arithmetic WQI (WAWQI) in 2011. These indices are crucial in WQ research because they simplify WQ status for public understanding. However, the applicability of a rigidly formulated index across space and time remains controversial among researchers and is seen as a major limitation of the indexing method.

Uddin et al. (2021) outlined key issues in applying WQIs. The eclipsing problem arises in many cases from the nature of the aggregation function used to calculate the final index score. When the sub-index ratings for dissolved oxygen (DO) and faecal coliform are varied, the final index score changes linearly with a large intercept under additive aggregation and concavely under multiplicative aggregation. As a result, WQ may appear acceptable based on the final index score even when individual parameters exceed permissible limits. The minimum operator function has been used to address this issue. Uncertainty in WQI models arises from parameter selection, sub-indexing, and weighting. The selection of WQ parameters (WQPs) is a key consideration in developing WQIs: different WQI models use anywhere from fewer than five to around 30 parameters, and data availability is a concern for modelers adopting a specific WQI form. Parameters are commonly chosen based on expert opinion, so subjectivity error often arises in indexing. Statistical methods such as Spearman's rank correlation coefficient and PCA are used to exclude highly correlated parameters. In the mathematical part, issues arise in calculating sub-index values, weighting parameters, and evaluating the aggregation function; these include restricted representation of parameter importance, subjectivity errors, uncertainty, and eclipsing.

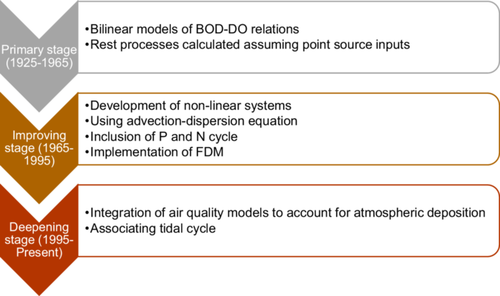

Beyond WQIs, water quality modeling (WQM) mathematically simulates WQPs under given pollutant loads and hydraulic and hydrological scenarios. A WQM simplifies physical reality by focusing on one or a few governing processes. Wang et al. (2013) outlined the historical evolution of WQM in three stages: primary (1925–1965), improving (1965–1995), and deepening (post-1995). At first, bilinear BOD-DO models treated industrial point loads as the primary factor influencing this relationship. External inputs such as hydrodynamic factors, sediment oxygen demand, and algal colonies were later considered. BOD was split into carbonaceous and nitrogenous components, with added complexity from the concept of BOD removal through sediment deposition. During the improving stage, models assessed six linear systems for predicting WQPs before moving on to the development of nonlinear systems. Model development in this stage incorporated the P and N cycles, nutrient–growth rate relationships, the finite difference method for solving nonlinear equations, hydrodynamic conditions, and sediment influences. During the deepening stage, air quality models were integrated into WQM to account for atmospheric deposition, and tidal effects were also considered.

Sharma and Kansal (2013) categorized WQMs into simulation and optimization models. Simulation models were further classified into physical and mathematical models, and optimization models into linear, nonlinear, and dynamic models. The mathematical models are subdivided based on the assumed process, data type, solution type, and geographic scale. The review presented six models with their equations, application domains, and limitations. Gao and Li (2014) reviewed eight WQMs and discussed artificial intelligence (AI), model integration, and the use of remote sensing/GIS for large-scale spatial data, highlighting benefits, drawbacks, and future perspectives. Burigato Costa et al. (2019) found that SWAT (Soil and Water Assessment Tool), followed by the QUAL models, is the most widely used worldwide. Non-standardized WQMs applied outside their original conditions can produce simulation errors. A key source of error identified in these reviews is the use of geography-specific rate constants, which restricts a model's geographic transferability. To address this, many authors suggested calibration and uncertainty analysis.

Tiyasha et al. (2020) provided an overview of AI models integrated into WQM. AI models are important because WQ data are complex, nonlinear, nonstationary, and connected to various factors. These models can be classified into five main groups: artificial neural network (ANN), fuzzy logic-based, kernel-based, hybrid, and complementary models. In WQM applications, various quality parameters serve as inputs to predict one or more output parameters, at a daily, weekly, or monthly temporal resolution depending on the study. Although each individual model has advantages and disadvantages, hybrid and complementary models generally outperform the others.

Rajaee et al. (2020) reviewed 51 studies published during 2000–2016 on the use of AI models in WQM. DO and suspended sediment load were the variables most frequently predicted with AI models globally. Daily and monthly predictions are common in the literature, which shows that hybrid models are superior to single-structure models. Ighalo et al. (2021) found that the most popular AI models during 2010–2020 were the adaptive neuro-fuzzy inference system (ANFIS), followed by ANN. Wavelet-ANN, wavelet-ANFIS, and ANFIS predicted WQPs successfully, with BOD being the parameter most frequently modeled in the literature.

Previous review studies reveal three major strategies used globally in WQ analysis (Figure 1): WQI utilization, mathematical modeling, and data-driven AI-based modeling. These reviews mostly focused on one strategy, leaving the others unexplored within a single study.

This study has three main objectives: (i) to present and summarize the major approaches the scientific community adopts in WQ analysis; (ii) to compare the three modeling strategies, identifying overall and strategy-specific gaps and outlining the application domain of each approach; and (iii) to profile future directions for each strategy so that the next steps are more robust and comprehensive. The novelty of this study lies in presenting the latest developments in WQ analysis approaches and facilitating a comparative discussion among them to benefit the research community.

2 WQA APPROACHES

2.1 WQI development

The WQI is a well-researched topic in WQA worldwide. Because of the complex relationships among parameters and the influence of the environment, conveying WQ accurately with numerical values is difficult. Horton (1965) pioneered the WQI concept to simplify this by providing a single score. Since then, the scientific community has continued to develop WQIs and address the various issues related to them.

The development of a WQI involves four key steps: parameter selection, sub-index generation, weight assignment, and aggregation function design (Figure 2). Together, these steps produce a value that indicates the WQ level. The following sections detail the methods researchers use to carry out each step, along with their advantages and limitations.
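The four steps can be illustrated with a minimal Python sketch. The parameter set, the "worst"/"best" values defining each linear rating curve, and the raw importance scores are all hypothetical, and the weighted arithmetic sum is only one of the aggregation options discussed below; the sketch does not reproduce any published index.

```python
"""Minimal, hypothetical sketch of the four WQI development steps."""

# Step 1: parameter selection. A fixed, hypothetical parameter set; each entry
# gives the (worst, best) values used by a linear rating curve.
RATING_BOUNDS = {
    "DO": (0.0, 9.0),    # mg/L, higher is better
    "BOD": (10.0, 0.0),  # mg/L, lower is better
    "NH3N": (2.0, 0.0),  # mg/L, lower is better
}


def sub_index(name: str, value: float) -> float:
    """Step 2: rescale a raw value to 0 (worst) to 100 (best), clipped."""
    worst, best = RATING_BOUNDS[name]
    score = 100.0 * (value - worst) / (best - worst)
    return min(max(score, 0.0), 100.0)


def normalise_weights(raw: dict) -> dict:
    """Step 3: convert raw importance scores into weights summing to 1."""
    total = sum(raw.values())
    return {name: score / total for name, score in raw.items()}


def aggregate(sub_indices: dict, weights: dict) -> float:
    """Step 4: weighted arithmetic (additive) aggregation."""
    return sum(weights[name] * q for name, q in sub_indices.items())


sample = {"DO": 8.0, "BOD": 2.0, "NH3N": 0.5}  # hypothetical measurements
q = {name: sub_index(name, value) for name, value in sample.items()}
w = normalise_weights({"DO": 4, "BOD": 3, "NH3N": 1})
print(f"WQI = {aggregate(q, w):.1f}")  # WQI = 83.8
```

Because each step is a separate function, replacing `aggregate` with another aggregation function changes only step 4, which is why the literature discusses the steps independently.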

2.1.1 Parameter selection

Parameter selection in WQI development is highly subjective and influenced by geography, which has led to a lack of standardized parameter sets. Brown et al. (1970) used the Delphi method to involve experts in creating a comprehensive parameter list. Subsequent studies also gathered expert opinions to compile parameter lists (Bordalo et al., 2006; Dojlido et al., 1994; Liou et al., 2004; Stambuk-Giljanovic, 1999; Swaroop Bhargava, 1983). In the past decade, PCA has been used successfully to generate multiple principal components (PCs) with different loadings for each parameter. The significant components explain most of the data set's variance and are therefore used to choose the WQI parameters (Dutta et al., 2018; Fathi et al., 2018; Tripathi & Singal, 2019; Ustaoğlu et al., 2020; Zeinalzadeh & Rezaei, 2017). Other statistical techniques also provide valuable insights into WQP selection. Correlation studies (Aydin et al., 2021; Shil et al., 2019) and hierarchical cluster analysis (Arora & Keshari, 2021; Njuguna et al., 2020) reveal important relationships among WQPs and support decisions on parameter selection. A comprehensive literature survey is another useful method, in which published studies explain the significance of the selected WQPs (Gani et al., 2023; Mishra et al., 2024). A multi-step technique was adopted to select the WQPs for the West-Java WQI (WJWQI): two screening procedures based on data availability were followed by correlation studies to identify and omit parameters carrying the same information, thereby reducing dimensionality (Sutadian et al., 2018).

Various studies select parameters for specific water use sectors, and the selections differ across the literature. Shil et al. (2019) assessed a WQI for irrigation water, with WQPs chosen according to FAO guidelines: sodium adsorption ratio, residual sodium carbonate, residual sodium bicarbonate, magnesium hazard, potential salinity, pH, chloride, electrical conductivity, and total dissolved solids (TDS). In another approach, multiple combinations of input parameters were tested for WQI development using machine learning (ML) models, and the combination producing the best performance indicators was selected (Sakaa et al., 2022). This method is feasible only when ML models are used for WQI development.
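The correlation-based screening used for the WJWQI can be sketched as a greedy filter: a parameter is kept only if its absolute correlation with every parameter already kept stays below a threshold. The 0.9 threshold, the greedy column ordering, the use of Pearson's coefficient (Spearman's could be obtained by ranking the data first), and the data below are assumptions for illustration, not the exact procedure of Sutadian et al. (2018).

```python
import numpy as np


def drop_redundant(data: np.ndarray, names: list, threshold: float = 0.9) -> list:
    """Greedy redundancy screening.

    data: samples in rows, water quality parameters in columns.
    A parameter is kept only if |r| with every already-kept parameter is
    below `threshold`; earlier columns therefore take priority.
    """
    corr = np.corrcoef(data, rowvar=False)  # Pearson correlation matrix
    kept = []
    for j in range(len(names)):
        if all(abs(corr[j, k]) < threshold for k in kept):
            kept.append(j)
    return [names[j] for j in kept]


# Hypothetical monitoring data: TDS is an exact linear function of EC here,
# so it carries the same information.
ec = np.array([200.0, 350.0, 500.0, 650.0, 800.0])
tds = 0.65 * ec
do = np.array([8.1, 6.9, 7.5, 5.2, 6.0])
print(drop_redundant(np.column_stack([ec, tds, do]), ["EC", "TDS", "DO"]))
# ['EC', 'DO']
```

The column order decides which member of a redundant pair survives, so in practice the order would reflect data availability or expert preference.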

2.1.2 Sub-index generation

WQPs are expressed in different units and cover different ranges, so their raw values cannot be used directly in WQI development. The parameter values are therefore transformed onto a common scale, called the sub-index, which simplifies the overall representation. Different methods of evaluating the sub-index have evolved with new WQI formulations. Expert opinion is the primary method for sub-index evaluation. Horton (1965) and Brown et al. (1970) scaled parameters on a 0–100 scale based on their impacts on WQ. Pesce (2000) provided a table of normalization factors for deriving sub-index values, which has been used in several studies (Koçer & Sevgili, 2014; Misaghi et al., 2017; Wu et al., 2021). House (1989) demonstrated the use of WQ standards to calculate sub-index values. Many studies used both standard and measured parameter values to calculate the sub-index (Ewaid & Abed, 2017; Goher et al., 2014; Oni & Fasakin, 2016; Sahoo et al., 2015; Tiwari et al., 2015).
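Two of the sub-indexing routes above can be sketched side by side. The standard-based function uses the form common in weighted arithmetic indices, q = 100 (V − V_ideal) / (S − V_ideal), where V is the measured value, S the standard, and V_ideal the ideal value (0 for most pollutants), so q = 100 at the standard and q > 100 flags an exceedance; the ideal DO of 14.6 mg/L is a value often used in such applications. The table-based function only mimics the structure of a Pesce-style normalization table: its class bounds and factors are hypothetical, not the values published by Pesce (2000).

```python
import bisect


def standard_sub_index(measured: float, standard: float, ideal: float = 0.0) -> float:
    """Standard-based rating: 0 at the ideal value, 100 at the standard."""
    return 100.0 * (measured - ideal) / (standard - ideal)


# Hypothetical Pesce-style table for BOD (mg/L): upper bound of each class and
# the normalization factor assigned to it; values above the last bound get 0.
BOD_BOUNDS = [0.5, 2.0, 3.0, 5.0, 8.0, 12.0, 15.0]
BOD_FACTORS = [100, 90, 80, 70, 60, 50, 40, 0]


def table_sub_index(value: float, bounds: list, factors: list) -> int:
    """Return the factor of the first class whose upper bound is >= value."""
    return factors[bisect.bisect_left(bounds, value)]


print(round(standard_sub_index(2.0, standard=3.0), 1))              # 66.7
print(round(standard_sub_index(8.0, standard=5.0, ideal=14.6), 2))  # 68.75
print(table_sub_index(4.0, BOD_BOUNDS, BOD_FACTORS))                # 70
```

The standard-based form is continuous and unbounded, whereas the table form is a step function on a fixed 0–100 scale, so the two can rank borderline samples differently.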

2.1.3 Calculation of weights

Several researchers use PCA for weight calculation and find it a rational method (Naik et al., 2022; Roy et al., 2024; Sabinaya et al., 2024). The weights are derived directly from the equations (loadings) of the retained principal components, so PCA yields both the crucial parameters and their weights in a single analysis. In contrast, Ding et al. (2023) blended subjective and objective weights using game theory to determine the final weight of each parameter.
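Both weighting ideas can be sketched with NumPy. `pca_weights` shows one of several PCA weighting schemes found in the literature: components are retained by the Kaiser criterion (eigenvalue > 1), and each parameter is weighted by its absolute loadings scaled by the variance share of the retained components; the retention rule and scheme are assumptions, not the exact procedure of the cited studies. `game_theory_combine` implements the combination-weighting form usually described as game-theoretic: it finds coefficients α that minimize the deviation of the combined vector from each input weight vector, which gives the linear system Σ_j α_j (w_i · w_j) = w_i · w_i, and then normalizes |α|. Whether Ding et al. (2023) used exactly this form is not stated in the text, and all data below are hypothetical.

```python
import numpy as np


def pca_weights(x: np.ndarray) -> np.ndarray:
    """Objective weights from PCA of standardized data (one possible scheme).

    x: samples in rows, water quality parameters in columns.
    """
    corr = np.corrcoef(x, rowvar=False)        # PCA on standardized data
    eigval, eigvec = np.linalg.eigh(corr)      # symmetric matrix, ascending order
    keep = eigval > 1.0                        # Kaiser criterion (assumption)
    if not keep.any():
        keep[np.argmax(eigval)] = True
    loadings = np.abs(eigvec[:, keep]) * np.sqrt(eigval[keep])
    share = eigval[keep] / eigval[keep].sum()  # variance share of retained PCs
    raw = loadings @ share                     # variance-weighted loading per parameter
    return raw / raw.sum()


def game_theory_combine(*weight_sets: np.ndarray) -> np.ndarray:
    """Combine several weight vectors (e.g. subjective and objective ones)."""
    w = np.vstack(weight_sets)                     # one weight vector per row
    gram = w @ w.T                                 # inner products w_i . w_j
    alpha = np.linalg.solve(gram, np.diag(gram))   # first-order optimality conditions
    alpha = np.abs(alpha) / np.abs(alpha).sum()    # normalized coefficients
    return alpha @ w


rng = np.random.default_rng(0)
data = rng.normal(size=(40, 4))                    # hypothetical standardized WQ data
data[:, 1] += 0.8 * data[:, 0]                     # induce some correlation
objective = pca_weights(data)
subjective = np.array([0.4, 0.3, 0.2, 0.1])        # hypothetical expert weights
print(game_theory_combine(subjective, objective).round(3))
```

Because every input vector and the normalized α each sum to 1, the combined weights also sum to 1; the linear solve fails only if the input vectors are identical or linearly dependent.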

2.1.4 Aggregation function

Ott (1978) used the term “eclipsing” for errors introduced by additive or multiplicative aggregation; such errors can also originate in the parameter weighting and sub-indexing processes. Eclipsing occurs when some parameters exceed their limits but are offset by better conditions in other parameters, resulting in a seemingly good overall WQ score. For example, consider a scale on which higher sub-index values indicate poorer quality, and let parameters A, B, and C be weighted equally at 0.33 with sub-index values of 20, 30, and 90. Additive aggregation yields a seemingly good final score of 46.2 (0.33 × 20 + 0.33 × 30 + 0.33 × 90). However, parameter C has exceeded its permissible limit, posing serious adverse effects on WQ that are suppressed in the aggregated result.
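The eclipsing example can be reproduced in a few lines. The sketch assumes, as the example implies, that higher sub-index values indicate poorer quality; the permissible sub-index of 60 used to flag parameter C is a hypothetical value added for illustration.

```python
weights = {"A": 0.33, "B": 0.33, "C": 0.33}
sub_index = {"A": 20, "B": 30, "C": 90}  # higher value = poorer quality here
PERMISSIBLE = 60                          # hypothetical limit on this scale

additive = sum(weights[p] * sub_index[p] for p in sub_index)
exceeding = [p for p, s in sub_index.items() if s > PERMISSIBLE]

print(f"additive index: {additive:.1f}")               # additive index: 46.2
print(f"worst parameter: {max(sub_index.values())}")   # worst parameter: 90
print(f"over the limit: {exceeding}")                  # over the limit: ['C']
```

The aggregated 46.2 sits well below the limit even though one parameter is far above it, which is exactly the information an additive index hides.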

Smith (1990) suggested using the minimum operator function to avoid the eclipsing problem. The minimum operator takes the sub-index value of the worst-conditioned parameter as the final score. However, it discards the information carried by the other parameters and is therefore less efficient. Other proposed aggregation functions include the square root of the harmonic mean (Gazzaz et al., 2015), the non-equal weighted geometric method (Sutadian et al., 2018), RMS-WQI (Gani et al., 2023), and the weighted, log-weighted, and sinusoidal quadratic mean functions (Ding et al., 2023).
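To see how such alternatives behave, the sketch below applies several aggregation functions to the same hypothetical sub-indices on a 0–100 scale where higher means better (the convention under which Smith's minimum operator picks the worst parameter). The weighted arithmetic and weighted geometric forms are standard; the harmonic square mean is written in its common unweighted form, sqrt(n / Σ 1/s_i²), which is an assumption about the variant used by Gazzaz et al. (2015). RMS-WQI and the quadratic mean variants are omitted because their exact forms are not given here.

```python
import math


def weighted_arithmetic(s, w):
    return sum(wi * si for si, wi in zip(s, w)) / sum(w)


def weighted_geometric(s, w):
    # exp of the weighted mean log; requires strictly positive sub-indices
    return math.exp(sum(wi * math.log(si) for si, wi in zip(s, w)) / sum(w))


def harmonic_square_mean(s):
    # unweighted form sqrt(n / sum(1 / s_i^2)); requires s_i > 0
    return math.sqrt(len(s) / sum(1.0 / si**2 for si in s))


def minimum_operator(s):
    return min(s)


scores = [90, 85, 10]  # hypothetical sub-indices; the third parameter is poor
weights = [1, 1, 1]
for name, value in [
    ("arithmetic", weighted_arithmetic(scores, weights)),
    ("geometric", weighted_geometric(scores, weights)),
    ("harmonic square", harmonic_square_mean(scores)),
    ("minimum", minimum_operator(scores)),
]:
    print(f"{name:>15}: {value:5.1f}")
```

The arithmetic mean (about 61.7) hides the poor parameter and the minimum operator reports only that parameter (10); the geometric and harmonic square means fall in between, with the harmonic form closer to the worst value, which is why such functions are proposed as compromises between eclipsing and information loss.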

These four steps form the core of many WQI models, but some models depart from this structure. Two such models are briefly explored here: the fuzzy inference system (FIS) and the CCMEWQI.

2.1.5 Fuzzy inference system

An FIS mainly comprises membership functions, fuzzy set operations, fuzzy logic, inference rules, and a defuzzification process that determines the final quality score (Zadeh, 1965). The most common membership functions are trapezoidal and triangular. These functions normalize the quality parameter values during set operations. The fuzzy logic consists mainly of if–then rules, which in many cases are derived from expert knowledge. The centroid method is commonly used for defuzzification to obtain the final WQI value. Researchers use FIS mostly in the inference part, which parallels the four steps of WQI development (Uddin et al., 2021). Tiwari et al. (2018) used adaptive neuro-fuzzy inference systems (ANFIS) based on subtractive clustering and fuzzy c-means clustering, with expert-opinion weights and sub-indices from transformation equations, to obtain the final outcome. Sahoo et al. (2015) used PCA to reduce the parameters and derive their weights before feeding the PCA-processed data into an ANFIS network. Mourhir et al. (2014) applied the minimum operator function to two WQIs to identify the worst-conditioned parameters and their weights; both WQI models then evaluated fuzzy membership function ranges and performed defuzzification using the centroid method.
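The Mamdani-style inference described above (membership functions, if–then rules, min/max set operations, and centroid defuzzification) can be sketched with NumPy. The two inputs (DO and BOD), all membership-function break points, and the four rules are hypothetical choices made for illustration and are not taken from any of the cited studies.

```python
import numpy as np


def trapmf(x, a, b, c, d):
    """Trapezoidal membership; a == b or c == d gives an open shoulder,
    and b == c gives a triangle."""
    x = np.asarray(x, dtype=float)
    rise = np.ones_like(x) if b == a else (x - a) / (b - a)
    fall = np.ones_like(x) if d == c else (d - x) / (d - c)
    return np.clip(np.minimum(rise, fall), 0.0, 1.0)


Y = np.linspace(0.0, 100.0, 1001)          # output universe: WQI score
OUTPUT_SETS = {
    "poor": trapmf(Y, 0, 0, 20, 50),
    "fair": trapmf(Y, 30, 50, 50, 70),     # triangle
    "good": trapmf(Y, 50, 80, 100, 100),
}


def fuzzy_wqi(do_mg_l, bod_mg_l):
    """Mamdani inference with hypothetical fuzzy sets and rules."""
    # Fuzzification of the two inputs
    do_low = float(trapmf(do_mg_l, 0, 0, 3, 6))
    do_high = float(trapmf(do_mg_l, 4, 7, 12, 12))
    bod_low = float(trapmf(bod_mg_l, 0, 0, 2, 4))
    bod_high = float(trapmf(bod_mg_l, 2, 5, 10, 10))
    # If-then rules: AND = min, OR = max
    rules = [
        (min(do_high, bod_low), "good"),
        (max(do_low, bod_high), "poor"),
        (min(do_high, bod_high), "fair"),
        (min(do_low, bod_low), "fair"),
    ]
    # Implication by clipping (min), aggregation of rule outputs by max
    aggregated = np.zeros_like(Y)
    for strength, label in rules:
        aggregated = np.maximum(aggregated, np.minimum(strength, OUTPUT_SETS[label]))
    if aggregated.sum() == 0.0:
        raise ValueError("no rule fired for these inputs")
    # Centroid defuzzification
    return float(np.sum(Y * aggregated) / np.sum(aggregated))


for do_val, bod_val in [(9.0, 1.0), (5.0, 3.0), (2.0, 7.0)]:
    print(f"DO={do_val}, BOD={bod_val} -> WQI {fuzzy_wqi(do_val, bod_val):.1f}")
```

Clean water (high DO, low BOD) lands in the upper part of the scale and polluted water in the lower part, while intermediate inputs fire several rules partially and are defuzzified towards the middle.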

2.1.6 CCMEWQI
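The CCMEWQI does not follow the four-step structure. In the CCME's published formulation it combines three factors: scope (F1, the percentage of variables that fail their objectives at least once), frequency (F2, the percentage of individual tests that fail), and amplitude (F3, derived from the normalized sum of excursions), as CCMEWQI = 100 − sqrt(F1² + F2² + F3²) / 1.732. A minimal Python sketch of this formulation follows; the objectives and measurements in the example are hypothetical.

```python
import math


def ccme_wqi(samples: dict, objectives: dict) -> float:
    """CCME WQI from measurements and objectives.

    samples: variable -> list of measured values
    objectives: variable -> (objective, kind), where kind is "max" (must not be
    exceeded) or "min" (must not fall below); measured values must be > 0 for
    "min" objectives.
    """
    n_vars = len(samples)
    n_tests = sum(len(values) for values in samples.values())
    failed_vars, failed_tests, excursion_sum = 0, 0, 0.0
    for var, values in samples.items():
        objective, kind = objectives[var]
        var_failed = False
        for v in values:
            if kind == "max" and v > objective:
                excursion = v / objective - 1.0
            elif kind == "min" and v < objective:
                excursion = objective / v - 1.0
            else:
                continue  # test passed
            var_failed = True
            failed_tests += 1
            excursion_sum += excursion
        failed_vars += int(var_failed)
    f1 = 100.0 * failed_vars / n_vars            # scope
    f2 = 100.0 * failed_tests / n_tests          # frequency
    norm_excursion = excursion_sum / n_tests     # normalized sum of excursions
    f3 = norm_excursion / (0.01 * norm_excursion + 0.01)  # amplitude
    return 100.0 - math.sqrt(f1**2 + f2**2 + f3**2) / 1.732


# Hypothetical monitoring record (mg/L) and objectives
samples = {"DO": [8.0, 4.0, 7.0, 6.0], "BOD": [2.0, 4.0, 1.0, 2.0]}
objectives = {"DO": (5.0, "min"), "BOD": (3.0, "max")}
print(f"CCME WQI = {ccme_wqi(samples, objectives):.1f}")
```

Because F1 counts a variable as failed after a single exceedance, one bad sample per variable is enough to pull the index down sharply in short records such as this one.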

2.1.7 Limitations

Despite its simple model structure and its ability to depict results spatially and numerically with minimal data, the WQI approach has significant limitations that require attention for future improvement. There is no universally validated framework for WQI development. Parameters, sub-indexing, weights, and aggregation methods vary across locations owing to environmental factors and prior local knowledge, which reduces generalizability and increases subjectivity errors. Uncertainty can also arise in all four development steps. During parameter selection, the impact of a contaminant on WQ status may vary with geography, and the WQI framework does not account for this variation. Weight assignment is subjective and a significant source of uncertainty. In sub-index generation, the mathematical formulation (Equations (1)–(4)) adds uncertainty. During aggregation, ambiguity and eclipsing may arise: ambiguity occurs when the aggregated WQ appears worse than the individual parameter sub-index values, while eclipsing, the opposite, occurs when individual values are poor but the overall index suggests otherwise. Both increase model uncertainty (Syeed et al., 2023). Uncertainty levels also vary with the standards used for WQ classification; Seifi et al. (2020) found that incorporating BIS standards led to the least uncertainty in parameter weight assignment when developing a WQI. Many studies have estimated uncertainty and created frameworks to reduce it (Pak et al., 2021; Uddin, Nash, et al., 2023). Sensitivity analysis helps select parameters accurately, reducing uncertainty in the parameter selection step. The basic way to assess sensitivity is to remove a parameter and observe the impact on the result. Talukdar et al. (2024) tested the WQI of a lake and found that removing TDS and EC from the analysis could improve accuracy by reducing uncertainty; the study recommended closer monitoring of pH and turbidity because of their significant impact on WQ. Sensitivity analysis thus identifies unimportant or uncertain parameters as well as those crucial for determining WQ status. Sensitivity analysis methods for WQI models are expanding with the application of statistical, probabilistic, and ML techniques. Conducting uncertainty and sensitivity analysis in WQI modeling is therefore crucial for drawing reliable conclusions.
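The basic removal-based sensitivity check can be sketched as follows: each parameter is dropped in turn, the remaining weights are renormalized, and the change in a weighted additive index is recorded. The parameter names echo the text, but the sub-index values and equal weights are hypothetical and do not reproduce the results of Talukdar et al. (2024).

```python
def removal_sensitivity(sub_indices: dict, weights: dict) -> tuple:
    """Drop one parameter at a time and report the change in the index."""

    def additive(names):
        # weighted mean over the given parameters (weights renormalized)
        total_w = sum(weights[n] for n in names)
        return sum(weights[n] * sub_indices[n] for n in names) / total_w

    base = additive(list(sub_indices))
    changes = {}
    for dropped in sub_indices:
        rest = [n for n in sub_indices if n != dropped]
        changes[dropped] = additive(rest) - base
    return base, changes


q = {"pH": 70.0, "turbidity": 40.0, "TDS": 80.0, "EC": 78.0}  # hypothetical
w = {name: 1.0 for name in q}                                  # equal weights
base, changes = removal_sensitivity(q, w)
print(f"base index: {base:.1f}")  # base index: 67.0
for name, delta in sorted(changes.items(), key=lambda kv: -abs(kv[1])):
    print(f"without {name:<9}: {delta:+.2f}")
```

Parameters whose removal shifts the index most are the ones that drive the result; parameters with a small effect are candidates for dropping, subject to expert judgment about their relevance.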

2.2 Water quality modeling

WQ reflects the overall condition of water, which results from interactions between biotic and abiotic constituents. Modeling these processes is complex because of the many interrelationships and physical phenomena involved. Each WQM is defined by its conceptualization, simulated processes, required input data, strengths, limitations, and application domain. Pollutant concentrations in rivers are linked to hydrodynamic, hydrologic, and ecological factors, which makes comprehensive WQM challenging and model performance sensitive to environmental conditions. Previous reviews, mentioned in the introduction, have described specific WQMs and their specifications. Here, various model components are highlighted to discuss their evolution and anticipate future trends.

2.2.1 Historical development of conceptualization of WQM

In the Streeter and Phelps (1925) model, the basic form of WQM, DO and BOD are linearly related. The study focused on point loads to a stream and predicted DO along the longitudinal profile using a sag curve. The oxygen balance depends on deoxygenation by microorganisms, which consume DO while digesting organic matter in the water (and so depends on microbial abundance), and on atmospheric reaeration. After this basic framework was developed, researchers added other factors and processes to represent reality more holistically. Thomas (1949) included sediment deposition and flocculation in studying DO and BOD dynamics. Dobbins (1964) added algal photosynthesis and respiration as factors affecting DO levels, emphasizing shifts in BOD due to sediment release. Only advection was considered in these developments, so the resulting models were one-dimensional. The next step in WQM was to model the dispersion process, enhancing the dimensionality and predictive capability of the models, with quality parameters predicted as functions of both time and space. Consequently, more advanced numerical techniques were used to solve the process equations of WQMs (Gough, 1969; Welander, 1968). Other processes were incorporated alongside these changes: N and P cycles, biotic responses, sediment hydraulics, and hydrological integration were added to WQMs to enhance performance (Riffat, 2012; Yih & Davidson, 1975). Recent, more complex additions to WQMs integrate air quality models to assess atmospheric deposition on waterbodies (Golomb et al., 1997; Morselli et al., 2003; Poor et al., 2001). Figure 3 illustrates the key features of each step in the historical development of WQM.
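The Streeter-Phelps sag curve has a closed-form solution. For an initial ultimate BOD L0, an initial DO deficit D0, a deoxygenation rate kd and a reaeration rate ka (ka ≠ kd), the deficit after travel time t is D(t) = kd L0 / (ka − kd) · (e^(−kd t) − e^(−ka t)) + D0 e^(−ka t), and the maximum deficit (lowest DO) occurs at t_c = ln[(ka/kd)(1 − D0 (ka − kd)/(kd L0))] / (ka − kd). The sketch below evaluates both; the numerical values are hypothetical, and the rates are assumed to be already temperature-corrected.

```python
import math


def do_deficit(t, l0, d0, kd, ka):
    """Streeter-Phelps oxygen deficit D(t) in mg/L after travel time t (days)."""
    return (kd * l0 / (ka - kd)) * (math.exp(-kd * t) - math.exp(-ka * t)) + d0 * math.exp(-ka * t)


def critical_time(l0, d0, kd, ka):
    """Travel time (days) at which the deficit peaks, i.e. DO is lowest."""
    return math.log((ka / kd) * (1.0 - d0 * (ka - kd) / (kd * l0))) / (ka - kd)


# Hypothetical reach: L0 = 20 mg/L, D0 = 1 mg/L, kd = 0.3/day, ka = 0.7/day
L0, D0, KD, KA = 20.0, 1.0, 0.3, 0.7
DO_SAT = 9.0  # hypothetical saturation DO (mg/L)
tc = critical_time(L0, D0, KD, KA)
print(f"critical time: {tc:.2f} d, "
      f"minimum DO: {DO_SAT - do_deficit(tc, L0, D0, KD, KA):.2f} mg/L")
```

Multiplying the critical time by the stream velocity gives the distance downstream of the outfall where DO is lowest; at that point deoxygenation (kd L) exactly balances reaeration (ka D).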

This study reviews five popular WQMs, summarizing their development and research applications over the last decade: CE-QUAL-W2, AQUATOX, SWAT, WASP, and the environmental fluid dynamics code (EFDC).

2.2.2 CE-QUAL-W2

CE-QUAL-W2 simulates 46 WQPs as state variables and more than 60 derived variables covering physical, chemical, and biological types. Oxygen profiles, constituent concentration profiles, eutrophication, and bacteriological quantities are simulated in every grid cell and time step (Bai et al., 2022). In the past decade, researchers have primarily used CE-QUAL-W2 in reservoirs and rivers to simulate WQ profiles, often modifying it or adding processes. For example, Zhang et al. (2015) added a sediment diagenesis module to improve sediment oxygen demand (SOD) evaluation. The module represented the carbon, nitrogen, and phosphorus cycles through 22 state variables and computed SOD as the sum of nitrogen, methane, and hydrogen sulfide SODs. Tested on the lower Minnesota River, the model performed satisfactorily in predicting ammonia, nitrate, phosphate, and DO concentrations. Afshar et al. (2017) replaced conservative mass transport with volatilization-based transport to predict the fate of volatile organic compounds; their model also considers degradation, settling, and biological processes, and the new module was calibrated by adjusting viscosity and diffusivity parameters. Shoaei et al. (2022) developed a modified heavy metal module for lead (Pb), focusing on its transport and transformation in water. Pb was represented in particulate and dissolved phases that exchange through sorption and desorption, with adsorption and desorption rates depending on total metal concentrations and reaction rates. The study evaluated the Pb reaction rate as a function of temperature, DO, TDS, and suspended solids, using a genetic algorithm (GA) to optimize it; the best results were obtained when all of these parameters were used.
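The dissolved-particulate exchange described for the Pb module can be illustrated with a first-order reversible sorption model, dCd/dt = −k_ads·Cd + k_des·Cp with Cp = total − Cd, which has a closed-form solution and conserves total metal. This is a generic textbook formulation, not the CE-QUAL-W2 module of Shoaei et al. (2022): the rate constants, the θ^(T−20) temperature correction, and θ = 1.047 are hypothetical assumptions, and the dependence on DO, TDS, and suspended solids (which that study calibrated with a GA) is left out.

```python
import math


def temperature_corrected(k20: float, temp_c: float, theta: float = 1.047) -> float:
    """Hypothetical Arrhenius-type correction k_T = k_20 * theta**(T - 20)."""
    return k20 * theta ** (temp_c - 20.0)


def pb_partition(cd0: float, cp0: float, k_ads: float, k_des: float, t: float) -> tuple:
    """Dissolved and particulate Pb after time t for first-order reversible sorption.

    dCd/dt = -k_ads*Cd + k_des*Cp and dCp/dt = -dCd/dt, so Cd + Cp is constant.
    """
    total = cd0 + cp0
    cd_eq = total * k_des / (k_ads + k_des)  # equilibrium dissolved concentration
    cd = cd_eq + (cd0 - cd_eq) * math.exp(-(k_ads + k_des) * t)
    return cd, total - cd


k_ads = temperature_corrected(0.8, temp_c=25.0)  # 1/day, hypothetical
k_des = temperature_corrected(0.2, temp_c=25.0)  # 1/day, hypothetical
for day in (0, 1, 5):
    cd, cp = pb_partition(cd0=50.0, cp0=0.0, k_ads=k_ads, k_des=k_des, t=day)
    print(f"day {day}: dissolved {cd:.1f} ug/L, particulate {cp:.1f} ug/L")
```

In a calibration setting such as the GA approach described above, the rate constants (or the functions that make them depend on temperature and other WQPs) would be the quantities being optimized.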

2.2.3 AQUATOX

Over the last decade, AQUATOX has been applied in WQM to assess how WQ affects ecological health, and case studies have explored hypothetical scenarios to devise improvement strategies. Akkoyunlu and Karaaslan (2015) applied AQUATOX to Mogan Lake to evaluate how a virtual wetland could improve its trophic state, projecting a transition from hypertrophic to eutrophic and then to mesotrophic conditions. AQUATOX integrated with WASP and SWAT predicts nutrient concentrations and WQ under adjusted point and non-point source loadings (Elçi et al., 2018). Several studies examined the impact of particular contaminants on waterbody health and ecological status. Lombardo et al. (2015) assessed the risk posed by triclosan and alkylbenzene sulfonate to the food web of a river ecosystem. Zhang et al. (2018) used AQUATOX to study the impact of polycyclic aromatic hydrocarbons on eutrophic lake systems in China, assessing both direct toxic effects and indirect ecological effects. Ma et al. (2024) evaluated the potential risk of per- and polyfluoroalkyl substances in aquatic ecosystems.

2.2.4 SWAT

Over the past decade, the scientific community has used SWAT extensively for multiple purposes: estimating nutrient levels, analyzing the influence of land use on WQ, combining SWAT with machine learning, enhancing SWAT predictions through source code modifications, and pinpointing critical zones. Abbaspour et al. (2015) estimated nitrogen concentrations in 14 European basins using catchment data, obtaining R2 values of 0.28–0.65 during calibration. Epelde et al. (2015) assessed the nitrogen budget at the Alegria River outlet and found surpluses of 114 and 65 kg N ha−1 year−1 for two decades between 1990 and 2011. Going beyond basic estimation, Gong et al. (2019) assessed nitrate concentration in groundwater and predicted future WQ under different land uses. They used the CA-Markov model to predict land use and analyzed how nitrogen and phosphorus correlate with various land uses. Nitrogen levels showed a significant positive correlation with sediment concentration, agricultural land area, river discharge, and urban area, while total phosphorus correlated with river flow, sediment yield, agricultural and urban area, and rice cultivation. Using the CA-Markov land use projections, they estimated future total nitrogen and phosphorus budgets. Lee et al. (2022) improved biomass modeling by replacing the SWAT module with the CE-QUAL-W2 module in the algal bloom source code. Fang et al. (2024) combined machine learning with SWAT to pinpoint critical non-point pollution sources, using self-organizing maps and stepwise regression to model relationships among variables, and receiver operating characteristic (ROC) analysis to set a forest cover threshold affecting WQ responses.
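One common way to turn an ROC analysis into a threshold is to choose the cut-off that maximizes Youden's J (sensitivity + specificity − 1). The text does not state which criterion Fang et al. (2024) used, so Youden's J is an assumption here, as are the hypothetical sub-basin data below, in which forest cover below the cut-off is treated as predicting degraded WQ.

```python
def youden_threshold(cover: list, degraded: list) -> tuple:
    """Return the forest-cover cut-off (%) maximizing J = TPR - FPR.

    A sub-basin is predicted 'degraded' when its cover is below the cut-off.
    Requires at least one degraded and one non-degraded sub-basin.
    """
    pos = sum(degraded)
    neg = len(degraded) - pos
    best_t, best_j = None, float("-inf")
    for t in sorted(set(cover)):
        tp = sum(1 for c, d in zip(cover, degraded) if c < t and d)
        fp = sum(1 for c, d in zip(cover, degraded) if c < t and not d)
        j = tp / pos - fp / neg
        if j > best_j:  # ties keep the lowest cut-off
            best_t, best_j = t, j
    return best_t, best_j


# Hypothetical sub-basins: forest cover (%) and whether WQ was degraded (1) or not (0)
cover = [10, 20, 30, 40, 50, 60, 70, 80]
degraded = [1, 1, 1, 0, 1, 0, 0, 0]
print(youden_threshold(cover, degraded))  # (40, 0.75)
```

Scanning every observed value as a candidate cut-off traces the ROC curve point by point; the chosen threshold is the point farthest above the diagonal.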

2.2.5 WQA simulation program (WASP)

In its latest version, WASP considers pollutant transport through advection-dispersion processes, point and diffuse sources, boundary exchange, and sediment diagenesis (Martin & Wool, 2017). WASP has been used to estimate total maximum daily loads for various pollutants. It was also used to assess concentration profiles in Akkulam-Veli Lake over 15 months, estimating daily variations in nitrate, phosphate, DO, BOD, and chlorophyll-a. WASP has several limitations, including isolating lake segments and overlooking their interactions. Bouchard et al. (2017) modified WASP by incorporating particle collision rate and particle attachment efficiency to simulate the transport of carbon nanotubes (CNTs) in surface water, evaluating CNT concentrations in both water and sediments. Mbuh et al. (2019) used WASP to conduct a sensitivity analysis, which revealed high sensitivity of phosphorus and DO concentrations during summer in terms of errors and model utility. Ramos-Ramírez et al. (2020) modeled chromium(III) dispersion in the Bogota River, highlighting processes affecting WQPs. In another application, the model identified phosphorus as the limiting nutrient for phytoplankton, CBOD decay as a cause of reduced DO, and SOD as a key driver of river hypoxia (Cashel et al., 2024).
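The advection-dispersion transport that WASP builds on can be illustrated for a single 1D reach with first-order decay, ∂C/∂t = −u ∂C/∂x + D ∂²C/∂x² − kC, solved with an explicit finite-difference scheme (upwind advection, central dispersion). This is a generic teaching scheme, not WASP's segment-based numerical method; the reach geometry, velocity, dispersion coefficient, and decay rate are hypothetical. The time-step check keeps every coefficient of the update non-negative, so the scheme stays positive and stable.

```python
import numpy as np


def simulate_adr(n_nodes, c_in, u, disp, k, dx, dt, n_steps):
    """Explicit 1D advection-dispersion-decay solver for one river reach.

    u: velocity (m/s, > 0), disp: dispersion coefficient (m^2/s),
    k: first-order decay rate (1/s), dx: node spacing (m), dt: time step (s).
    """
    courant = u * dt / dx
    diff_no = disp * dt / dx**2
    if courant + 2.0 * diff_no + k * dt > 1.0:
        raise ValueError("time step too large for this explicit scheme")
    c = np.zeros(n_nodes)  # initially clean reach
    for _ in range(n_steps):
        new = c.copy()
        new[1:-1] = (
            c[1:-1]
            - courant * (c[1:-1] - c[:-2])                # upwind advection
            + diff_no * (c[2:] - 2.0 * c[1:-1] + c[:-2])  # central dispersion
            - k * dt * c[1:-1]                            # first-order decay
        )
        new[0] = c_in      # upstream boundary: fixed inflow concentration
        new[-1] = new[-2]  # downstream boundary: zero concentration gradient
        c = new
    return c


# Hypothetical 5 km reach, 100 m nodes, 60 s steps, about 3.5 days simulated
profile = simulate_adr(51, c_in=10.0, u=0.5, disp=10.0, k=1e-5,
                       dx=100.0, dt=60.0, n_steps=5000)
print(profile[::10].round(2))
```

Upwind differencing adds numerical dispersion of roughly u·dx/2, so production models either use finer grids or higher-order schemes when dispersion must be resolved accurately.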

2.2.6 Environmental fluid dynamics code

EFDC is mainly used to predict the WQPs of waterbodies under specific scenarios. Gong et al. (2016) used it to assess how rainfall and sewage leaks affect DO and various nutrient levels. Rainfall notably increased total nitrogen and phosphorus concentrations, and sewage leaks led to increases in total nitrogen and phosphorus over time, whereas the impact on chlorophyll-a was shorter-lived than for the other parameters. Eutrophication modeling with sediment diagenesis has been a vital part of EFDC applications; Chen et al. (2016) studied it under different scenarios. The sediment diagenesis model consists of three main parts: deposition of particulate organic matter (POM), diagenesis of POM, and the resulting sediment fluxes returning inorganic nutrients to the water column. Because algal blooms in reservoirs and other waterbodies are a major concern, EFDC is used to assess the relationships among hydrodynamics, WQ, and algal blooms (Gao et al., 2018); that study showed water temperature and water age to be influential factors in algal blooms. Integrating AI with EFDC further extends its capabilities. Kim et al. (2021) used a multilayer perceptron (MLP) to generate daily time series from periodically and daily monitored data; feeding the generated data into EFDC improved model performance upstream. Shin et al. (2023) observed that WQ is primarily influenced by temperature, total phosphorus in the inflow, outlet discharge, and wind speed (through internal hydrodynamics). Across 19 stations, temperature was the key factor at 8, while total phosphorus, solar radiation, outflow, total nitrogen, and TSS were influential to varying degrees at the others.

2.2.7 Limitations

Before applying a WQM or modifying one, it is important to recognize its limitations. First, WQM requires a high level of skill and expertise in WQA. Physical system models typically include measurement errors and structural uncertainties arising from an incomplete understanding of the physical mechanisms. Performing uncertainty analysis makes model results more reliable and applicable. Uncertainty in WQMs arises from both data quality and model structure. For example, Zhang et al. (2014) demonstrated that the resolution of the digital elevation model (DEM) affects SWAT model uncertainty, with higher DEM resolution leading to increased uncertainty in DO estimation. Lee et al. (2024) used remotely sensed input data from various sources to reduce uncertainty in SWAT. Masoumi et al. (2021) used the SUFI-2 algorithm to calibrate the CE-QUAL-W2 model by adjusting parameters related to water temperature and water surface elevation. Xu et al. (2022) applied generalized likelihood uncertainty estimation (GLUE) and regional sensitivity analysis (RSA) to the EFDC model implemented in the Three Gorges Reservoir and identified the sensitive parameters corresponding to each model variable. These examples highlight the importance of uncertainty estimation and sensitivity analysis for improving model results.
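The GLUE procedure can be outlined in a few steps: sample parameter sets from prior ranges, score each run with a likelihood measure, keep the "behavioural" sets above a threshold, and summarize them. The sketch below assumes a hypothetical one-parameter first-order decay model in place of a full WQM, uses NSE as the likelihood measure, and reports the behavioural parameter range and a likelihood-weighted estimate rather than the full prediction quantiles usually reported in GLUE studies.

```python
import math
import random


def decay_model(k, c0, times):
    """Hypothetical stand-in for a WQM: first-order decay of a pollutant."""
    return [c0 * math.exp(-k * t) for t in times]


def nse(obs, sim):
    """Nash-Sutcliffe efficiency, used here as the GLUE likelihood measure."""
    mean_obs = sum(obs) / len(obs)
    sse = sum((o - s) ** 2 for o, s in zip(obs, sim))
    var = sum((o - mean_obs) ** 2 for o in obs)
    return 1.0 - sse / var


def glue(obs, times, c0, k_range, n_samples=2000, threshold=0.7, seed=1):
    """Return (k, likelihood) pairs for behavioural parameter sets."""
    rng = random.Random(seed)
    behavioural = []
    for _ in range(n_samples):
        k = rng.uniform(*k_range)  # uniform prior over the plausible range
        score = nse(obs, decay_model(k, c0, times))
        if score >= threshold:     # reject non-behavioural runs
            behavioural.append((k, score))
    return behavioural


times = list(range(11))
observed = decay_model(0.3, 10.0, times)  # synthetic "observations", true k = 0.3
sets = glue(observed, times, c0=10.0, k_range=(0.05, 1.0))
ks = [k for k, _ in sets]
weights = [s for _, s in sets]
k_weighted = sum(k * w for k, w in zip(ks, weights)) / sum(weights)
print(f"{len(sets)} behavioural sets, k in [{min(ks):.2f}, {max(ks):.2f}], "
      f"likelihood-weighted k = {k_weighted:.2f}")
```

The width of the behavioural range is itself the uncertainty statement: many parameter values reproduce the observations acceptably, which is why GLUE reports bounds rather than a single calibrated value.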

These studies show that uncertainty is an inherent limitation of WQMs. Uncertainty varies across environments and fluctuates with the input data. WQ model structures follow physical laws but rely on empirical equations, with calibrated parameters, to evaluate intermediate processes. This structure introduces uncertainty because an empirical equation may deviate from the true mathematical description of the physical process, which is why sensitive parameters are calibrated to make model results more robust and closer to reality. In WQM, many developing countries lack standardization policies, which makes consistency difficult to ensure. Standardization of WQ models means establishing consistent guidelines for model development, because different models can produce different results when applied to the same waterbody. Such differences can arise from different forms of the empirical equations, from including additional processes or excluding others on the assumption that they are insignificant, and from differences in the governing equations. Therefore, in the absence of a standardized framework, model selection relies on data availability, computational effort, model accessibility, waterbody characteristics, and the WQPs the model can simulate.

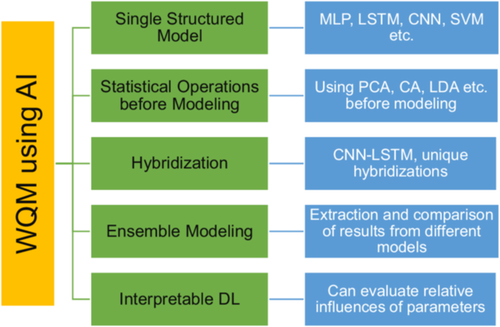

2.3 WQM using AI

This study places particular emphasis on deep learning (DL) applications in WQA, because over the last 5 years DL has been used more frequently than the traditional ML methods covered in past reviews. Applications of DL to the forecasting and estimation of WQ variables have grown sharply during this period. These models are built on feature extraction and the analysis of temporal dependencies in time-series data, and recent trends include hybridization and ensemble modeling. Figure 4 highlights key AI features in WQM applications.

Solanki et al. (2015) applied DL to WQ forecasting, using a deep belief network (DBN) and a stacked denoising autoencoder (SdA) to predict lake parameters such as DO, pH, and turbidity. In a DBN, units in adjacent layers are interconnected, while units within the same layer are not connected. In the SdA network, denoising is achieved by training the autoencoders to minimize reconstruction error. Compared with a multilayer perceptron (MLP) and linear regression, the DL models handled data variability efficiently and mitigated both underfitting and overfitting. The WQ research community now uses advanced models to predict WQPs because of improved modeling abilities and newly introduced architectures. Baek et al. (2020) used a long short-term memory network (LSTM) to predict total nitrogen, total phosphorus, and total organic carbon. The LSTM, an extension of the recurrent neural network (RNN), is advantageous because its gated, additively updated cell state mitigates the vanishing gradient problem inherent in the standard RNN structure. Hence, the LSTM is well suited to time-series data and is widely used for time-series modeling of WQPs.
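The gating that lets an LSTM retain information across long WQ time series can be seen in a single forward step. The NumPy sketch below is illustrative only: the weights are random rather than trained, the gate ordering (input, forget, candidate, output) is an assumption of this sketch, and no training is shown. The key line is the cell-state update `c_t = f * c_prev + i * g`, whose additive form is what eases the vanishing gradient problem relative to a plain RNN.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    x_t: (n_in,) inputs at time t, e.g. standardized WQP readings
    h_prev, c_prev: (n_hidden,) previous hidden and cell states
    W: (4*n_hidden, n_in), U: (4*n_hidden, n_hidden), b: (4*n_hidden,)
    """
    n = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    i = sigmoid(z[0:n])          # input gate
    f = sigmoid(z[n:2 * n])      # forget gate
    g = np.tanh(z[2 * n:3 * n])  # candidate cell values
    o = sigmoid(z[3 * n:4 * n])  # output gate
    c_t = f * c_prev + i * g     # additive cell-state update
    h_t = o * np.tanh(c_t)
    return h_t, c_t


def lstm_forward(sequence, W, U, b, n_hidden):
    """Run the cell over a (T, n_in) sequence and return the final hidden state."""
    h = np.zeros(n_hidden)
    c = np.zeros(n_hidden)
    for x_t in sequence:
        h, c = lstm_step(x_t, h, c, W, U, b)
    return h


rng = np.random.default_rng(0)
n_in, n_hidden = 3, 8  # e.g. 3 hypothetical inputs such as DO, pH, turbidity
W = rng.normal(scale=0.1, size=(4 * n_hidden, n_in))
U = rng.normal(scale=0.1, size=(4 * n_hidden, n_hidden))
b = np.zeros(4 * n_hidden)
window = rng.normal(size=(30, n_in))  # 30 synthetic daily records
h_final = lstm_forward(window, W, U, b, n_hidden)
print(h_final.shape)
```

In a trained forecaster, a dense output layer would map `h_final` to the predicted WQP, and the weights would be learned with a DL framework rather than drawn at random.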

Blue-green algae, Chl-a, fluorescent dissolved organic matter, DO, specific conductance, and turbidity have been estimated with a progressively decreasing deep neural network (DNN), and the results were compared with SVR, MLR, and extreme learning machine regression. The model inputs were 20 spectral features derived from image processing and spatial operations, and the DNN performed best, with a mean R2 of 0.89. Zhi et al. (2021) fed sparse DO data and daily hydro-meteorological data into an LSTM model; 74% of the study area showed a Nash–Sutcliffe efficiency (NSE) above 0.4. The model excelled in zones of low DO variability but struggled with peaks and troughs. Preprocessing data with statistical operations to extract key features or handle missing data has also been applied in multiple studies. Dilmi and Ladjal (2021) applied principal component, linear discriminant, and independent component analyses for feature extraction from WQ data, and then used LSTM and SVM classifiers for qualitative decision-making about WQ condition. Zhou (2020) used a multivariate Bayesian uncertainty processor to link ANN-simulated and observed WQ data; the output was a probabilistic forecast, generated by Monte Carlo simulation, that quantified uncertainty.
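Because NSE thresholds such as the 0.4 cited above recur throughout WQM evaluation, a minimal implementation may help in reading them. An NSE of 1 indicates a perfect match, 0 means the model is no better than the mean of the observations, and negative values mean it is worse. The DO values in the usage lines are hypothetical.

```python
def nash_sutcliffe(observed, simulated):
    """Nash-Sutcliffe efficiency: 1 - SSE / sum of squared deviations of observations."""
    if len(observed) != len(simulated) or not observed:
        raise ValueError("series must be non-empty and of equal length")
    mean_obs = sum(observed) / len(observed)
    sse = sum((o - s) ** 2 for o, s in zip(observed, simulated))
    spread = sum((o - mean_obs) ** 2 for o in observed)
    if spread == 0:
        raise ValueError("NSE is undefined for a constant observed series")
    return 1.0 - sse / spread


# Hypothetical daily DO (mg/L) at one station.
obs_do = [8.1, 7.6, 6.9, 7.2, 7.8]
sim_do = [8.0, 7.4, 7.1, 7.3, 7.6]
print(round(nash_sutcliffe(obs_do, sim_do), 3))
```

Because the denominator is the variability of the observations, the same absolute error yields a lower NSE where DO varies little, which is worth keeping in mind when comparing NSE across sites.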

In addition, LSTM and transfer-learning LSTM (TL-LSTM) models have been implemented to address missing data at a rate of 0.5 (i.e., half of the records missing), with the TL-LSTM transferring knowledge from a reference temporal sequence. Bi et al. (2024) used a Savitzky–Golay filter to remove noise from the WQ data set and then fed the filtered data into an LSTM-based encoder–decoder generative neural network to predict DO and COD. Mokarram et al. (2024) used geostatistical methods to prepare WQ maps and developed fuzzy membership functions for qualitative analysis of the spatial distribution; models such as LSTM, MLP, SVR, and RBF were then used to predict EC, turbidity, Chl-a, TSS, Mg, and Na. Hybrid models, introduced in the last half-decade, are now widely used. Barzegar et al. (2020) used a convolutional neural network (CNN), an LSTM, and a hybrid CNN-LSTM to predict DO and Chl-a from time series of EC, oxidation–reduction potential, pH, and temperature, using time-lagged inputs for the DL and hybrid models. The CNN extracts features from the data series, while the LSTM models temporal dependencies; by combining the two, the study reduced the prediction errors for both DO and Chl-a.
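Time-lagged inputs of the kind used by Barzegar et al. (2020) are typically built with a sliding window over the aligned series. The helper below is a hypothetical illustration: each sample stacks the last `n_lags` time steps of the predictors (for instance EC, oxidation–reduction potential, pH, and temperature) and pairs them with the target (for instance DO) `horizon` steps ahead. The resulting (samples, n_lags, n_features) layout is what CNN, LSTM, and CNN-LSTM layers usually expect.

```python
def make_lagged_windows(features, target, n_lags, horizon=1):
    """Turn aligned time series into supervised (X, y) samples.

    features : list of per-time-step feature lists, e.g. [EC, ORP, pH, T]
    target   : list of target values aligned with features, e.g. DO
    Sample t uses features[t - n_lags + 1 .. t] to predict target[t + horizon].
    """
    if len(features) != len(target):
        raise ValueError("features and target must have the same length")
    if n_lags < 1 or horizon < 1:
        raise ValueError("n_lags and horizon must be at least 1")
    X, y = [], []
    for t in range(n_lags - 1, len(target) - horizon):
        X.append([list(step) for step in features[t - n_lags + 1:t + 1]])
        y.append(target[t + horizon])
    return X, y


# Synthetic example: 10 time steps with 2 features each.
feats = [[i, 10 * i] for i in range(10)]
do_series = [100 + i for i in range(10)]
X, y = make_lagged_windows(feats, do_series, n_lags=3)
print(len(X), X[0], y[0])
```

Keeping the target strictly ahead of the last input step avoids leaking the value being forecast into the window.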

Prasad et al. (2022) found that the CNN-LSTM hybrid outperformed stand-alone CNN and LSTM models in their comparison study. Khullar and Singh (2022) used free ammonia, DO, coliform, pH, nitrogen, and temperature as inputs to a hybrid CNN and bidirectional LSTM (BiLSTM) model to predict BOD and COD. In a BiLSTM, a second LSTM layer processes the time series in the reverse direction, so each time step is informed by both past and future context. The CNN-BiLSTM hybrid outperformed ANN, SVM, CNN, and LSTM models in WQP prediction. Rasheed Abdul Haq and Harigovindan (2022) compared CNN-LSTM and CNN-GRU hybrid models for forecasting salinity, pH, DO, and temperature, tuning hyperparameters on two separate WQ datasets, and found that CNN-LSTM performed better. Wan et al. (2022) used a hybrid simulation-observation difference, visual geometry group (VGG), and LSTM approach to model WQPs by extracting the multidimensional spatial features of a watershed. The SOD module, using Equation (13), simulated nutrient concentrations, and the LSTM was fed hydro-meteorological and spatial time-series features to predict the errors. This error model, which incorporates spatial and environmental conditions, was more accurate than ARIMA, SVR, and RNN models. Yu et al. (2022) used another hybrid model that combined data decomposition, fuzzy c-means clustering, and a bidirectional gated recurrent unit (BiGRU): WQ data were decomposed into subseries with the empirical wavelet transform, recombined using fuzzy c-means clustering, and passed to a BiGRU to predict DO and NH3-N. Han et al. (2023) introduced CRn-BEATS, a hybrid model for predicting pH, turbidity, and temperature; the model comprised three blocks with fully connected LSTM layers and performed better than LSTM, LSTNet, GNN, ConvLSTM, and N-BEATS. Ensemble modeling merges the results of various models to enhance accuracy. Zamani et al. (2023) combined RNN, LSTM, GRU, and temporal convolutional network models in an ensemble to predict Chl-a, using genetic algorithms to determine the weights for weighted averaging. Predictors included pH, oxidation–reduction potential, temperature, EC, and DO. Although GRU performed best individually, the ensemble outperformed the individual models by leveraging their diversity. Notably, interpretable DL marks a significant advancement in WQM. Zheng et al. (2023) used the Shapley additive explanations (SHAP) method to interpret WQP predictions from a five-layer feed-forward network driven by meteorological, land-use, and socioeconomic parameters. NH3-N, DO, TN, air temperature, forest area, grain production, population density, and urban area were the influencing factors in predicting COD. SHAP attributes a prediction to each input by comparing model outputs with and without that input across combinations of the other inputs.
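The "with and without" comparison behind SHAP can be made concrete with exact Shapley values for a model that has only a few inputs. This sketch assumes that an input absent from a coalition is replaced by a baseline value (for example, its training mean), which is one common simplification; the `shap` package provides efficient approximations for realistic models. The three-input COD predictor is a hypothetical linear stand-in, for which each Shapley value should equal coefficient × (input − baseline).

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values by enumerating every coalition of the other inputs."""
    n = len(x)

    def value(coalition):
        # Inputs outside the coalition are set to their baseline values.
        z = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return predict(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical COD predictor from air temperature, forest area, population density.
def cod_model(z):
    return 2.0 * z[0] - 0.5 * z[1] + 3.0 * z[2]


x = [25.0, 40.0, 1.2]
baseline = [20.0, 50.0, 1.0]
phi = shapley_values(cod_model, x, baseline)
print([round(p, 3) for p in phi])
```

The attributions always sum to the difference between the prediction and the baseline prediction, which is what makes them usable for ranking influencing factors. Exact enumeration grows as 2^n, so it is only practical for a handful of inputs.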

2.3.1 Limitations

DL methods for modeling WQPs offer ample potential for uncovering hidden patterns in natural systems. DL models excel at capturing nonlinear relationships between variables, giving modelers greater flexibility in their modeling approach. The limitations of DL modeling lie mainly in the training process. Training requires large amounts of data to optimize the weights and biases across network layers effectively, and the more complex the model, the more trainable parameters it has. DL models also require hyperparameter tuning for optimal results. Moreover, DL models often generalize poorly outside the range of their training data, and the complexity of their internal operations makes the learned input-output relationship difficult to interpret. For these reasons, scientific communities in various fields are embedding physical laws in DL models, for example through physics-informed neural networks (PINNs), to reduce data and computational requirements and to improve generalization to data outside the training range. SHAP-like frameworks are also used to interpret the relative influence of different factors on WQPs. Finally, DL model results carry uncertainty arising from input data quality and model structure.

Rahat et al. (2023) used the Monte Carlo dropout method to estimate model uncertainty and found that it improved the generalization of an LSTM network in WQM. Sensitivity analysis is also key for DL models in WQM, because a flawed selection of parameters can mislead the model and distort the results. Yu et al. (2024) used Sobol and hierarchical sensitivity analyses to identify the key parameters and processes influencing redox zonation in riparian areas, highlighting the importance of sensitivity analysis for reliable WQ status predictions with DL models. Hence, limited generalization ability and the lack of a built-in strategy for identifying important parameters and estimating uncertainty are significant limitations of the DL approach.
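Monte Carlo dropout keeps dropout active at prediction time and treats the spread of repeated stochastic forward passes as an estimate of model uncertainty. The NumPy sketch below assumes a tiny two-layer network whose "trained" weights are random placeholders; it illustrates the procedure rather than the LSTM configuration used by Rahat et al. (2023).

```python
import numpy as np


def mc_dropout_predict(x, weights, n_passes=200, p_drop=0.2, seed=0):
    """Mean and standard deviation of predictions over stochastic forward passes.

    weights: (W1, b1, W2, b2) for a ReLU hidden layer and a linear output;
    here they are hypothetical placeholders, not a trained model.
    """
    W1, b1, W2, b2 = weights
    rng = np.random.default_rng(seed)
    preds = np.empty(n_passes)
    for k in range(n_passes):
        h = np.maximum(0.0, W1 @ x + b1)      # hidden activations
        keep = rng.random(h.shape) >= p_drop  # dropout stays on at inference
        h = h * keep / (1.0 - p_drop)         # inverted-dropout rescaling
        preds[k] = W2 @ h + b2
    return preds.mean(), preds.std()


rng = np.random.default_rng(42)
weights = (np.abs(rng.normal(size=(16, 4))), np.zeros(16), rng.normal(size=16), 0.0)
x = np.array([0.5, 1.0, 0.2, 0.8])  # hypothetical standardized inputs
mean, std = mc_dropout_predict(x, weights)
print(f"prediction {mean:.3f} +/- {std:.3f}")
```

Setting `p_drop=0` collapses every pass to the same output, so the spread comes entirely from the sampled dropout masks; in practice the dropout rate used during training is reused at prediction time.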

3 COMPARATIVE DISCUSSION

This study discusses three methods for expressing WQ conditions, namely WQ indexing, WQM, and WQM using AI (with a focus on DL), together with their applications. Each method involves specific inputs and a specific process for deriving outcomes, and each is evaluated differently.

In WQ indexing, WQPs serve as the primary inputs. As mentioned in earlier sections, the process of parameter selection has been refined to develop better index models. WQM requires hydrologic, hydrodynamic, and climatic parameters as input, along with the initial concentrations of several pollutants and parameters. In WQM with AI, the inputs are mainly direct parameter values, but other data types such as spectral features are also used, and further types could be incorporated. Thus, WQM requires the most diverse data set. It is probably the most expensive approach in practice, but its data requirements are clearly specified, which is not the case for the other two approaches, where subjectivity enters through the assumptions they rely on.

The processing methods also differ. WQ indexing involves a series of mathematical operations: sub-index generation, weight assignment, and aggregation yield the final index value. WQM uses numerical schemes to solve the equations governing the physical processes in waterbodies. In WQM using AI, the steps include choosing a model, feeding in the data, and evaluating performance with statistical indicators. The outcomes of the three methods are, respectively, an index value, a concentration map, and a direct parameter value, each a distinct expression of WQ status. Indexing cannot predict WQ under changed environmental conditions unless the index is linked to parameters other than WQPs. For WQM using AI to reproduce WQP values, its inputs must be of the same kind as those used to build the model. Both of these methods therefore depend on measured parameters, whereas a WQM, once calibrated and validated, can be run repeatedly under various scenarios. The three methods also differ in how they analyze susceptibility to water pollution. Indexing reveals the key WQPs that significantly affect the index value, AI-driven WQM continues to evolve with emerging techniques for interpreting DL results, as addressed in earlier sections, and WQM can accurately identify both the parameters and the dominant processes driving WQ changes.
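The three indexing steps (sub-index generation, weight assignment, and aggregation) can be illustrated with the weighted arithmetic WQI, one of the classic formulations. The sketch assumes quality ratings q_i = 100 (V_i − V_ideal)/(S_i − V_ideal), unit weights proportional to 1/S_i, and a weighted-mean aggregation; the permissible limits and measurements are hypothetical placeholders, not values from any particular standard.

```python
def weighted_arithmetic_wqi(measured, standards, ideal=None):
    """Weighted arithmetic WQI.

    measured, standards: dicts mapping parameter name -> value / permissible limit
    ideal: optional dict of ideal values (defaults to 0 for every parameter;
           formulations commonly use 7 for pH and 14.6 mg/L for DO)
    """
    ideal = ideal or {}
    # Step 1: sub-index (quality rating) for each parameter.
    q = {}
    for p, v in measured.items():
        v0 = ideal.get(p, 0.0)
        q[p] = 100.0 * (v - v0) / (standards[p] - v0)
    # Step 2: unit weights inversely proportional to the permissible limit.
    k = 1.0 / sum(1.0 / standards[p] for p in measured)
    w = {p: k / standards[p] for p in measured}
    # Step 3: aggregation as a weighted mean of the sub-indices.
    return sum(w[p] * q[p] for p in measured) / sum(w.values())


limits = {"pH": 8.5, "DO": 5.0, "BOD": 3.0, "nitrate": 45.0}  # hypothetical limits
ideals = {"pH": 7.0, "DO": 14.6}
sample = {"pH": 7.8, "DO": 6.5, "BOD": 2.1, "nitrate": 12.0}   # hypothetical sample
print(round(weighted_arithmetic_wqi(sample, limits, ideals), 1))
```

In this formulation lower values indicate better quality, and a sample sitting exactly at every permissible limit scores 100. A pH below the ideal of 7 yields a negative rating, which some studies avoid by using the absolute deviation.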

Performance evaluation of WQM and WQM with AI relies on statistical measures that compare their explicit parameter values with observations. Evaluating WQ indexing is more challenging because the index value is not a physical parameter, so its assessment requires expert opinion and domain knowledge. It is therefore important to choose the WQA method that best matches the objectives. WQ indexing is suited to assessing overall quality and pollution and to identifying key parameters. WQM is a useful strategy for understanding WQ changes and pollution, and it can also help predict conditions the waterbody has not yet experienced. WQM using AI is a developing field that is finding applications in new domains; it excels at predicting specific parameters from observed values or environmental variables. Table 1 summarizes the three WQA strategies in a comparative discussion.

| WQA technique | Input data requirement | Processing | Advantages | Limitations |
|---|---|---|---|---|
| WQI | | | | |
| WQM | | | | |
| WQM using AI | | | | |

3.1 Performance metrics

Performance assessment is crucial in all modeling. Model performances are mainly evaluated using statistical measures of modeled and observed variables. The three WQA approaches examined in this study have unique methods and performance assessments. In WQI modeling, it is crucial to note that the model's output is a numerical value, not a physical measurement. WQI values for waterbodies are often categorized into classes like “excellent,” “good,” “fair,” and “bad.” There is no universally accepted scheme for this classification because different WQI models use varying stratifications.

The literature also discusses the “metaphoring problem,” in which the same quality properties can be interpreted in different ways. The scientific community mainly uses two strategies to evaluate WQI values. The first compares new model results with established WQI models using metrics such as the coefficient of determination (R2), mean squared error (MSE), root MSE, and mean absolute error; these metrics apply only when WQI development is framed as a regression problem. The second classifies WQ based on the number of quality parameters that exceed the acceptable levels mentioned earlier.
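For the regression framing, the four metrics listed above can be computed as follows. This sketch computes R2 as the squared Pearson correlation between reference and predicted WQI values; some studies instead use 1 − SS_res/SS_tot, which can differ when predictions are biased. The paired WQI values are hypothetical.

```python
import math


def regression_metrics(reference, predicted):
    """R2 (squared Pearson r), MSE, RMSE, and MAE between two WQI series."""
    if len(reference) != len(predicted) or len(reference) < 2:
        raise ValueError("need two equal-length series with at least 2 values")
    n = len(reference)
    errors = [p - r for r, p in zip(reference, predicted)]
    mse = sum(e * e for e in errors) / n
    mae = sum(abs(e) for e in errors) / n
    mean_r = sum(reference) / n
    mean_p = sum(predicted) / n
    cov = sum((r - mean_r) * (p - mean_p) for r, p in zip(reference, predicted))
    var_r = sum((r - mean_r) ** 2 for r in reference)
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    r2 = cov * cov / (var_r * var_p)
    return {"R2": r2, "MSE": mse, "RMSE": math.sqrt(mse), "MAE": mae}


# Hypothetical WQI values from an established model and a new model.
reference_wqi = [60.0, 70.0, 80.0, 90.0]
new_wqi = [62.0, 68.0, 83.0, 89.0]
print(regression_metrics(reference_wqi, new_wqi))
```

All four metrics treat the established model as ground truth, so they measure agreement with that model rather than correctness, which is the subjectivity issue raised in the next paragraph.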

Uddin, Jackson, et al. (2023) proposed a universal WQA scheme that categorizes WQ as “excellent” when no parameters fail and “bad” when all parameters fail, with two intermediate classes in between. This approach turns WQI development into a classification task, evaluated with metrics such as the ROC curve, binary cross-entropy, the kappa index, and the accuracy, precision, and specificity derived from the confusion matrix. A modeler must understand the data framework of the study area to decide whether WQI development should be approached as a regression or a classification problem. The challenge in evaluating WQI model performance lies in determining accurate “true values,” because using a previous model's results as the reference can introduce subjectivity through that model's inherent errors. Uddin et al. (2022) and Uddin, Nash, et al. (2023) validated new ML-based WQI models against conventional models, focusing on an extreme gradient boosting (XGB) model; a root MSE of about 3 and an R2 of about 0.9 indicated good performance. Uddin, Rahman, et al. (2023) found that the weighted quadratic mean and unweighted root-mean-square (RMS) WQI models most closely resemble the newly developed XGB model.
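The failure-count idea turns each sample into a class label, which is what allows classification metrics to be applied. The sketch below assumes hypothetical acceptable ranges and a hypothetical rule for the two intermediate classes ("good" when fewer than half of the parameters fail, "fair" otherwise); these intermediate thresholds and labels are illustrative and may differ from the published scheme.

```python
def classify_by_failures(measured, limits):
    """Classify a sample by how many parameters fall outside their acceptable range.

    limits: dict mapping parameter -> (lower, upper); None means no bound on that side.
    """
    failures = 0
    for p, v in measured.items():
        lower, upper = limits[p]
        if (lower is not None and v < lower) or (upper is not None and v > upper):
            failures += 1
    n = len(measured)
    if failures == 0:
        return "excellent"
    if failures == n:
        return "bad"
    # Hypothetical split between the two intermediate classes.
    return "good" if failures < n / 2 else "fair"


acceptable = {"pH": (6.5, 8.5), "DO": (5.0, None), "BOD": (None, 3.0), "nitrate": (None, 45.0)}
print(classify_by_failures({"pH": 9.0, "DO": 6.0, "BOD": 2.0, "nitrate": 10.0}, acceptable))
```

Labels produced this way can be compared with a model's predicted classes in a confusion matrix, from which accuracy, precision, and specificity follow directly.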

Uncertainty analysis is also used to assess WQI performance. In WQM, assessment is more straightforward because regression metrics can be applied directly. Given the widespread global use of WQMs, we highlight performance in key studies across diverse locations to inform future modelers. For example, Abbaspour et al. (2015) applied SWAT across Europe but could simulate nitrate at only 14 stations in the Danube basin because of limited data availability. R2 for nitrate ranged from 0.2 to 0.65, influenced by unknowns such as fertilizer use, which was the main cause of nitrate leaching beyond the root zone. Because SWAT applies fertilizer according to crop requirements, it does not account for over-application and therefore underestimates nitrate concentrations in channels. Woo et al. (2021) applied SWAT in an interbasin water transfer scenario and achieved R2 values above 0.6 in all cases, demonstrating good simulation capability in this context.

Zhang et al. (2013) applied SWAT in several regulated basins in China and found that WQ simulation was more effective for reservoirs than for sluice gates, because SWAT lacks a mechanism for pollutant transport through sluice gates. Saravanan et al. (2023) modeled ammoniacal and nitrate nitrogen in India with good calibration results, but performance declined during validation, indicating that the model did not fully capture real conditions. This mirrors the European case, where SWAT underestimated channel nutrient levels because fertilizer applications were unknown. Similarly, in the USA, Zeiger and Hubbart (2016) revealed shortcomings of SWAT in estimating nutrient loading and emphasized that accurate predictions depend on knowing fertilizer quantities.

SWAT overlooks temporal changes in baseflow quality, which leads to errors in pollution loading estimates. To improve accuracy, efforts have been made to couple SWAT with groundwater and contaminant transport models. The CE-QUAL-W2 model, used more commonly in reservoirs than in rivers and other channels, is now being coupled with models such as SWAT and SWMM to supply the flow patterns needed for WQ modeling in rivers. Its performance in reservoirs depends on various factors. Benicio et al. (2024) found high accuracy (R2 > 0.9) for water temperature and water level simulations, whereas nutrient and DO predictions varied with organic matter, algal properties, and data quality. Studies also show that CE-QUAL-W2 forecasts phosphorus better in dry seasons than in summer, indicating that climatic factors significantly affect the modeling process.

Burigato Costa et al. (2019) reviewed six WQMs and noted the prevalence of CE-QUAL-W2 in the USA, Iran, and China; the model has been criticized in South Korea but performs well in India. Modelers should therefore consider the complexity of the processes in their waterbody and whether the model addresses them. AQUATOX assesses the impact of contaminants on ecosystems in rivers and lakes and requires biomass quantification for calibration and validation. In the River Thames, Lombardo et al. (2015) found biomass variations of 1.6% and 2.3% when using AQUATOX to evaluate the impacts of triclosan and linear alkylbenzene sulfonate. Zhang et al. (2018) and Zhang and Liu (2014) applied the model to two Chinese lakes with satisfactory results.

However, the database needed to develop and calibrate AQUATOX is specialized and often unavailable in developing countries, which limits its global applicability. For WASP, the literature suggests that a sufficiently long field-observed data set improves simulation accuracy. In the Shenandoah River, model performance fluctuated when predicting DO, Chl-a, N, and P because of data unavailability (Mbuh et al., 2019), whereas the WASP model for the Lushui River, calibrated with 12 months of observed data, showed a good fit (Obin et al., 2021). Properly calibrating model parameters against observed data is therefore important for the success of WASP.

The EFDC model is commonly used to predict Chl-a levels along with other quality parameters in waterbodies. Gong et al. (2016) reported a relative RMSE of 33.3% for predictions of Chl-a, DO, temperature, and total phosphorus, indicating acceptable simulation accuracy. Kim et al. (2017) found that EFDC predicts better in areas with low Chl-a and emphasized representing the growth characteristics of different algal groups to improve simulation accuracy in high-concentration zones.

The versatility of DL techniques for WQM stems from their diverse model structures, input-output frameworks, and hyperparameter settings, so discussing the performance of different networks in various scenarios is useful for the research community. Li et al. (2019) performed time-series WQM using an RNN with an improved Dempster–Shafer evidence theory to predict WQPs in two setups; both single- and multiple-time-step predictions lost accuracy at longer time lags, regardless of the complexity of the RNN structure. Prasad et al. (2022) used 12 WQPs with CNN, LSTM, and AutoDL models to predict WQ status in an Indian lake. They framed the input-output model as both binary and multiclass classification, and the CNN showed better feature extraction than LSTM and AutoDL; however, this advantage might not hold if the problem were framed as regression. DL has also predicted WQ effectively in chemically ungauged rivers. Zhi et al. (2023) used an LSTM to predict DO in 580 rivers in the USA from temperature, light, and flow inputs, treating 480 rivers as chemically gauged and leaving 100 rivers within specific regions ungauged. The LSTM achieved NSE values between 0.5 and 0.72 for ungauged rivers in the western and north-eastern regions, demonstrating useful prediction accuracy in data-scarce zones, although performance metrics and uncertainties varied.

3.2 WQA practices across different countries

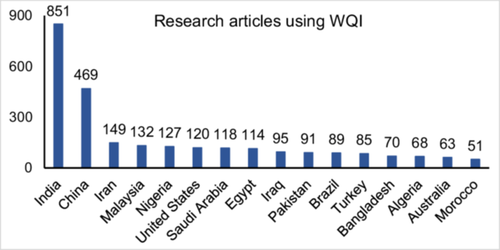

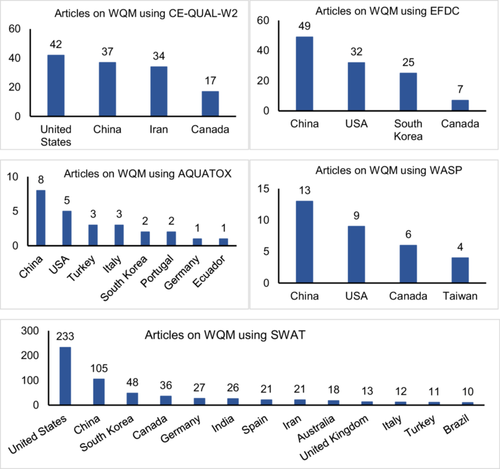

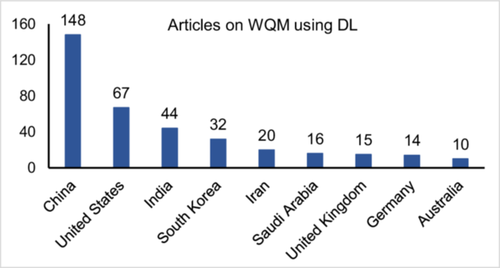

It is important to test how different WQA methods perform under various environmental and climatic conditions to understand their effectiveness. Factors such as government regulations, data availability, and public attitudes also influence the scientific community's choice of method. Here, we explored the SCOPUS database to count the research articles published by different countries on each WQA approach. Figures 5-7 show the detailed geographic variations.

The total number of documents was obtained by entering suitable keywords in the search engine. The keywords were “water quality index,” “CE QUAL W2,” “Aquatox,” “EFDC,” “WASP and water quality,” “SWAT and water quality,” and “deep learning and WQM,” which returned 1825, 115, 25, 135, 37, 521, and 374 articles, respectively. The figures show articles published from 2014 to 2024 by the countries with significant numbers of publications in this domain. WQI, the most widely applied WQA strategy, is used far more in developing countries than in developed countries. Chidiac et al. (2023) made a similar observation about the interest of developing nations in analyzing WQ with WQI, which shows how crucial the WQ status of rivers and lakes is to socioeconomic development. For example, Botle et al. (2023) found that 21 Indian rivers were significantly polluted by heavy metals that pose carcinogenic risks to children and adults, and that toxicity was increasing as heavy metal levels exceeded acceptable limits.

For WQM, China and the USA have the largest numbers of articles across the various models, and SWAT is applied more often than any other WQM. A possible reason for this disparity is data availability. Because WQMs require large and diverse data sets for development and calibration, they have not been widely adopted in the developing world (China being a notable exception), where data availability and data collection are major challenges owing to infrastructural and financial constraints. For example, the SCOPUS database shows that 835 studies in the USA have used SWAT, of which 233 addressed WQA and the other 602 addressed hydrological modeling (quantitative analysis of river flow); that is, almost 28% of SWAT studies in the USA concern WQ. In India, by contrast, only 26 of 344 SWAT applications (7.56%) addressed WQ.
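The percentages quoted above follow directly from the SCOPUS counts reported in this section; the short check below reproduces them.

```python
def wqa_share(wqa_studies, total_studies):
    """Percentage of SWAT studies whose objective is WQA."""
    return 100.0 * wqa_studies / total_studies


usa = wqa_share(233, 835)   # 233 of 835 SWAT studies in the USA (835 - 233 = 602 hydrological)
india = wqa_share(26, 344)  # 26 of 344 SWAT studies in India
print(f"USA: {usa:.1f}%, India: {india:.2f}%")  # USA: 27.9%, India: 7.56%
```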

Hence, even with adequate knowledge and technical expertise, developing countries are often unable to apply WQMs because of limited data availability and infrastructure. Meanwhile, WQM using AI is emerging as a viable methodology worldwide. However, as discussed earlier, WQI and even WQM using AI have certain limitations: they do not depict the physical processes and are prone to various errors, and the data requirements of DL models are high because of their complex training processes. This issue can limit their application in developing countries. For example, Nishat et al. (2025) implemented 14 ML models for four rivers in Bangladesh and obtained satisfactory results, but BOD and COD could not be used as model inputs because the data were unavailable, which substantially limits the models' applicability. Hence, countries seeking to develop WQA research should focus on expanding WQM applications.

4 FUTURE TRENDS

The future of WQA lies in exploring the distinct trends of its three approaches: WQI, WQM, and WQM using AI. WQI development has focused extensively on drinking water. However, water today is also used for irrigation, aquaculture, industry, and recreation, and a comprehensive WQI framework covering these diverse purposes would enhance its practicality. New parameters and permissible values should be adjusted according to each water use. One upcoming challenge is to assess how aquatic ecosystems relate to hydrological, hydrodynamic, and chemical characteristics in maintaining quality. Exploring this relationship could bring the role of microalgae into WQI development.

Another key research goal is a globally accepted classification system that categorizes WQ effectively. Developing a WQI requires labor-intensive raw data collection that consumes significant time and money. Most studies collect data at a daily resolution within a specific period, yet quality variables show notable diurnal changes that can affect the overall WQ. For example, DO varies with temperature, which affects nutrient and organic matter concentrations. A real-time data system for WQI assessment could therefore capture these temporal variations and improve the assessment. Future WQ indexes should also be more inclusive and robust with respect to waterbody health. For habitat suitability, river depth and velocity play a crucial role because specific species need certain conditions to grow, so these hydrodynamic factors should be considered in the WQI framework alongside WQPs.
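The temperature dependence of DO behind this diurnal argument can be quantified with the freshwater DO saturation equation of Benson and Krause as given in APHA Standard Methods (valid at 1 atm and zero salinity). The diurnal temperature cycle in the usage lines is hypothetical; it shows that saturation alone can shift by more than 1 mg/L within a day, a swing that a single daily sample would not resolve.

```python
import math


def do_saturation(temp_c):
    """DO saturation (mg/L) in fresh water at 1 atm, Benson-Krause form."""
    t = temp_c + 273.15  # absolute temperature (K)
    ln_do = (-139.34411
             + 1.575701e5 / t
             - 6.642308e7 / t ** 2
             + 1.243800e10 / t ** 3
             - 8.621949e11 / t ** 4)
    return math.exp(ln_do)


# Hypothetical diurnal water-temperature cycle: 24 +/- 4 degC, warmest at 15:00.
hourly = []
for hour in range(24):
    temp = 24.0 + 4.0 * math.sin(2 * math.pi * (hour - 9) / 24)
    hourly.append(do_saturation(temp))
print(f"DO saturation range over the day: {min(hourly):.2f}-{max(hourly):.2f} mg/L")
```

Actual DO also responds to photosynthesis and respiration, so observed diurnal swings can be larger than the saturation change shown here.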

For WQMs, the literature clearly lacks discussion of future development directions. WQMs are rooted in mathematical equations and advance as relationships among natural elements are discovered. For example, atmospheric deposition modules were incorporated into present WQMs only after the scientific community had explored the physics of this process. For this reason, a future need, rather than a future direction, can be stated for WQMs. Pollution stress on waterbodies is driven jointly by anthropogenic and climatic factors, and separating their respective contributions is one of the key insights that scientists and policymakers seek.

Even without any increase in pollutant load, a reduction in discharge can turn a clean river into a contaminated one, because less water is available to dilute the same load. The decline in discharge can result from climatic factors, anthropogenic factors, or both, so future WQ management needs to consider them together. While climate variables are commonly included in WQMs, anthropogenic factors such as water withdrawal from rivers and aquifers should also be incorporated in model structures. Including more ecological state variables in the WQM framework could show how WQ affects the habitat suitability of various species. Sediment diagenesis in WQ models is largely decoupled from waterbody hydrodynamics, because it has been treated as significant mainly in static environments such as lakes and reservoirs. However, recent studies show that diagenesis also occurs significantly in dynamic waterbodies, where hydrodynamics affect sediment transport and diagenesis. Therefore, hydrodynamic state variables should be included in the diagenesis equations in future work.
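The dilution argument can be made concrete with a completely mixed mass balance, C = W/Q. This is a simplified sketch assuming a constant pollutant load W, full mixing, no upstream background concentration, and no decay; the load and discharges are hypothetical.

```python
def concentration_mg_per_l(load_kg_per_day, discharge_m3_per_s):
    """Fully mixed concentration C = W / Q, converted to mg/L.

    1 kg = 1e6 mg and 1 m^3/s = 86 400 m^3/day = 8.64e7 L/day,
    so C [mg/L] = W [kg/day] / (86.4 * Q [m^3/s]).
    """
    return load_kg_per_day * 1e6 / (discharge_m3_per_s * 86_400 * 1_000)


load = 500.0  # hypothetical constant load, kg/day
for q in (10.0, 5.0, 2.0):
    print(f"Q = {q:4.1f} m^3/s -> C = {concentration_mg_per_l(load, q):.2f} mg/L")
```

Cutting discharge from 10 to 2 m³/s multiplies the concentration five-fold with no change in load, which can be enough to push it across a permissible limit.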

Future directions in WQM with AI should be distinguished from general progress in ML models. Many previous studies have proposed smart hyperparameter tuning, globally acceptable generic network architectures, and different optimization algorithms as future directions for WQM using AI, but these improvements benefit ML models in every domain where they perform well. For WQM specifically, AI can refine WQA by using ML models to link WQPs. Before applying an ML model, it should be established whether the parameters are related according to existing knowledge of chemistry. If they are not, the model must be tested with a wide range of data from different geographical locations to increase its dependability. Incorporating physical laws into ML models can also yield good results without requiring large amounts of data. PINNs can even be used for parameter estimation, because they include the residuals of the governing differential equations in the loss function of the ML model.
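The idea of putting governing equations into the loss can be shown with a toy first-order decay law, dC/dt = −kC. The sketch below is a simplification, not a full PINN: it evaluates the physics residual with forward differences on a discrete predicted series, whereas a PINN would differentiate the network output with automatic differentiation. The decay rate, the weighting factor `lam`, and the data are hypothetical.

```python
def physics_informed_loss(pred, obs_idx, obs_vals, k, dt, lam=1.0):
    """Data misfit at sparse observations plus a physics residual penalty.

    pred     : predicted concentrations at every time step
    obs_idx  : indices of time steps that have observations
    obs_vals : observed concentrations at those indices
    k, dt    : assumed decay rate (1/day) and time step (day)
    lam      : weight of the physics term
    """
    data_loss = sum((pred[i] - v) ** 2 for i, v in zip(obs_idx, obs_vals)) / len(obs_idx)
    # Residual of dC/dt + k*C = 0, with dC/dt from forward differences.
    residuals = [(pred[t + 1] - pred[t]) / dt + k * pred[t] for t in range(len(pred) - 1)]
    physics_loss = sum(r * r for r in residuals) / len(residuals)
    return data_loss + lam * physics_loss


k, dt = 0.2, 1.0
physical = [10.0 * (1 - k * dt) ** t for t in range(11)]  # obeys the discretized law
flat = [10.0] * 11                                         # ignores the decay law
obs_idx, obs_vals = [0, 5], [physical[0], physical[5]]     # only two observations
print(physics_informed_loss(physical, obs_idx, obs_vals, k, dt),
      physics_informed_loss(flat, obs_idx, obs_vals, k, dt))
```

With only two observations, the physics term does most of the work of separating the decaying series from the flat one; minimizing such a loss with respect to k as well as the predictions is the parameter-estimation use of PINNs.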

5 CONCLUSIONS

This study elaborates on three methodologies adopted by the scientific community for WQ analysis: WQ indexing, WQM, and WQM using AI. It describes each method's model development procedures, initial requirements, advantages and drawbacks, fields of application, and future trends. It also includes a comparative discussion of the three methods, which, to our knowledge, has not been attempted before.

This study highlights the problems one may face when adopting any of these three methods of WQ analysis. Parameter selection is one of the major problems in WQI development, and many researchers worldwide have addressed it with statistical schemes such as PCA. The use of a FIS has made it possible to obtain optimized weights and sub-indices in WQI development, and ML models are paving the way for nonlinear aggregation of WQPs to evaluate WQI. WQM, on the other hand, is becoming more sophisticated by integrating other environmental process models and incorporating more of the processes inside waterbodies that influence WQ. Many researchers have attempted to refine the concept of sediment diagenesis by exploring the roles of the water parameters that affect it. The hydrodynamic component and surface water-groundwater interaction deserve more attention for better WQ predictions. Lastly, WQM using AI is still an emerging field for predicting a single WQP or a set of WQPs. In most studies from the last 5 years, time-series data of WQPs have been used to predict the same or other WQPs. A few studies have also used socioeconomic factors and other environmental variables as inputs to reveal novel relationships and optimize management plans. The CNN-LSTM hybrid has emerged as a successful modeling scheme in applications around the world.

This study also draws an important conclusion to guide researchers and practitioners in choosing a WQ analysis methodology. As the comparative discussion suggests, WQI, WQM, and modeling using AI have distinct domains of application: they are suited, respectively, to assessing status, understanding processes, and predicting a single parameter or a set of parameters. The objective of a study should therefore guide the choice of methodology.

ACKNOWLEDGMENTS

The Science and Engineering Research Board, Government of India is acknowledged for funding this study under the State University Research Excellence (SURE) Project (SUR/2022/001557).

CONFLICT OF INTEREST STATEMENT

The authors declare no conflicts of interest.

ETHICS STATEMENT

None declared.

Open Research

DATA AVAILABILITY STATEMENT

The data used for the research are described in the article.