An intelligent algorithm to fast and accurately detect chaotic correlation dimension

Abstract

Detecting the complexity of natural systems, such as hydrological systems, can help improve our understanding of complex interactions and feedback between variables in these systems. The correlation dimension method, as one of the most useful methods, has been applied in many studies to investigate the chaos and detect the intrinsic dimensions of underlying dynamic systems. However, this method often relies on manual inspection due to uncertainties from identifying the scaling region, making the correlation dimension value calculation troublesome and subjective. Therefore, it is necessary to propose a fast and intelligent algorithm to solve the above problem. This study implies the distinct windows tracking technique and fuzzy C-means clustering algorithm to accurately identify the scaling range and estimate the correlation dimension values. The proposed method is verified using the classic Lorenz chaotic system and 10 streamflow series in the Daling River basin of Liaoning Province, China. The results reveal that the proposed method is an intelligent and robust method for rapidly and accurately calculating the correlation dimension values, and the average operation efficiency of the proposed algorithm is 30 times faster than that of the original Grassberger-Procaccia algorithm.

1 INTRODUCTION

Complexity has become one of the most important measurements in nonlinear time series analysis in that it not only offers another description for nonlinear characteristics (e.g., chaoticity, fractality, irregularity, and long-range memorability) but also determines the features of inner factors (e.g., central trend, and cyclical, seasonal and mutable patterns) and their interrelationship (Tang et al., 2015). Thus, during the past few decades, numerous attempts have been made to define, qualify, and quantify complexity and also to apply complexity-based theories for studying natural and physical systems (Benmebarek & Chettih, 2023; Huang et al., 2020; Ogunjo et al., 2024; Shen et al., 2020; Sivakumar, 2017; Wang et al., 2019). Amongst them, the correlation dimension is an important indicator reflecting the system's complexity. As correlation dimension (CD) method can represent the essential features of a system using time series of only a single variable of the entire multi-variable system through a “pseudo” phase-space (or state-space) reconstruction, it has attracted considerable interest of scientists in atmospheric and hydrologic sciences (Di et al., 2023; Shu et al., 2023; Sivakumar, 2004a). The obtained CD value is extremely sensitive to slight changes in the complexity of the underlying deterministic structure (Decoster & Mitchell, 1991). The higher the CD value, the more complex the underlying system dynamics appear to be.

Grassberger-Procaccia algorithm (hereinafter denoted as the “G-P algorithm”), proposed by Grassberger and Procaccia (1983a, b), is used for calculating the CD values. However, this algorithm provides few criteria for the identification of scaling region. The term “scaling region” refers to the straight line portion of the curves in the versus plot; however, due to data noise, the straight line corresponding to the scaling region is not a strict straight line, but a linear region of discrete points with gentle fluctuations, making the scaling region difficult to identify (Mcmahon et al., 2017). By now, “visual inspection” is a widely adopted method to determine the scaling region, yet it is quite difficult and troublesome for the users to identify the scaling region, thereby leading to inaccurate estimated CD values (Camastra et al., 2018). In light of this, many scholars have attempted to seek a systematic, objective, and fast method for identifying the scaling region. Based on different features, these studies can be divided into two main categories. First, some studies proposed the methods to fast identify the scaling region, but these methods lack not only theoretical basis but also certain objectivity. For example, Yokoya and Yamatomo (1989), Maragos & Fan-Kon Sun, 1993; Kim et al. (1999), Harikrishnan et al. (2006), and Jothiprakash and Fathima (2013) tried to obtain the upper limit of the scaling region using empirical equations; Wang et al. (1993) proposed a three-fold method, and Bolea et al. (2014) proposed a S-curve fitting method to identify the scaling region based on special curve models; Judd (1994) proposed Judd algorithm to calculate the CD values of special fractal systems; Dang and Huang (2004) proposed a method involving grouped recursive computer-recognition based on two so-called appraisal indices: confidence level and correlation. Second, other studies proposed methods to objectively identify the scaling region, but they are time-consuming and easy to fall into a certain value, or cause a bloated calculation, resulting in slow calculation for big data. For example, Wu (2002) proposed an adaptive method based on standard deviation to determine the scaling region; Jia et al. (2012), Du et al. (2013), and Wu et al. (2014) determined the scaling region based on piecewise fitting method; Yang et al. (2008) applied K-means clustering algorithm to identify the scaling region, and used classic chaotic attractors for verification; Ji et al. (2011) proposed a novel method based on the K-means algorithm and the point-slope-error algorithm to improve the calculational accuracy of the correlation dimension; Di et al. (2018) proposed an improved G-P algorithm, integrating the normal-based K-means clustering technique and random sample consensus algorithm, to calculate the correlation dimension. Chen et al. (2019) introduced a novel two-stage method using the K-means algorithm, which identifies the initial scaling region in the first stage, and refines this region to achieve greater accuracy in the second stage. Zhou et al. (2022) proposed a method using machine learning known as “density peak” based clustering algorithm to identify the scaling region. To sum up, these methods either lack certain objectivity or are time-consuming for big data, among other problems. In addition, these methods are not necessarily effective in many actual time series, such as streamflow, rainfall, groundwater, and temperature, even if they are useful in experimental data produced by ideal attractors. In this context, this paper tries to fill this gap and provides a method that can identify the scaling range rapidly and robustly.

The primary goals of this study are twofold: First, a new algorithm is proposed to improve the G-P algorithm, which integrates the distinct windows tracking technique and fuzzy C-means clustering algorithm. The classical Lorenz chaotic system is adopted to test the effectiveness of the proposed algorithm for estimating the CD values. Afterwards, the streamflow series in the Daling River basin is used for further verification. The rest of this paper is organized as follows: Section 2 describes the methods, including G-P algorithm and fuzzy C-means clustering algorithm, and data used in this paper. Section 3 presents the main findings of this paper. Finally, Sections 4 and 5 presents the discussion and conclusion.

2 METHODS AND DATA

- (1)

Reconstructing the phase space using the time delay embedding method to represent the underlying system dynamics.

- (2)

Identifying the scaling region based on the distinct windows tracking technique and fuzzy C-means clustering algorithm for calculating the correlation exponent.

- (3)

Plotting correlation exponent v versus embedding dimension m for different values of time lags, and then estimating the CD value as the mean value of the saturation range in the correlation exponent versus embedding dimension plot.

2.1 Correlation dimension method

For a stochastic system, v increases boundlessly with increase in m, whereas for a deterministic system, v increases with increasing m and reaches a plateau on which v is relatively constant for a range of large enough m. The saturation value of v is defined as the CD value of the attractor or a time series.

It is evident that the fast and exact delineation of the scaling region is quite important for the estimation of CD values. Some methods have been developed to identify the scaling region, and verified in classic chaotic systems instead of actual time series. As a result, “visual inspection” is still widely adopted to determine scaling region in actual time series. Moreover, time lag and embedding dimension m are two important parameters in G-P algorithm, so it is necessary to identify the proper and m. At present, different methods have been developed, such as the autocorrelation function method (Leung & Lo, 1993), the mutual information method (Haykin & Puthusserypady, 1997; Haykin & Xiao Bo Li, 1995), the false nearest neighbors (Hegger et al., 1999; Kennel et al., 1992; Rhodes & Morari, 1997), the Cao's method (Cao, 1997) and the correlation integral method (Ghorbani et al., 2018). However, none of these methods is effective. A large number of experiments we conducted generally showed that was an integer between 3 and 8, and m was an integer between 3 and 20. Therefore, in this study, different and m were tested for each time series.

The package “nonlinearTseries” in R project, which can be used to detect the CD value of a time series, was defined as a contrast in this study. In this package, and m can be determined by the embedding function “AMI” and “estimateEmbeddingDim,” respectively, but the determination of scaling region still requires visual observation. Further details can refer to the manual for the package “nonlinearTseries.”

2.2 Fuzzy C-means clustering method

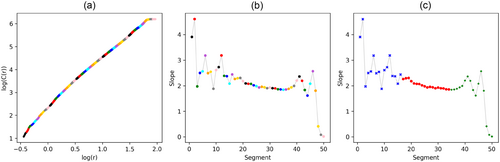

The curve of versus was divided into 50 segments by the distinct window tracking technique, and the slope of each segment was obtained by the least square method. We found that these slopes were parted in three different regularities. But which part was the scaling region we needed? Therefore, the fuzzy C-means clustering algorithm was introduced to determine the starting and ending points of the scaling region. In the past, “visual inspection” was often used to determine the starting and ending points of the scaling region, affecting the final results due to large ambiguity. The proposed method was automated, which exhibited a high degree of accuracy, facilitating its application across diverse scenarios and datasets. This has significantly enhanced overall efficiency.

Fuzzy C-means clustering algorithm (Bezdek et al., 1981, 1984; Dunn, 1973), is a soft-clustering algorithm that analyzes and models data using fuzzy theory. It establishes an uncertain description of data categories, which can objectively reflect the real world. This algorithm was introduced by Dunn in 1973 and improved by Bezdek in 1981 and in 1984.

The iteration ends when , where t is the iteration steps, and is a very small constant, which represents the error threshold and usually equals to 10−6. That is to say, and will be iteratively updated until the maximum change in membership does not exceed the error threshold. Eventually, this process converges to the local minimum or saddle point of .

2.3 Data

The data used in this study included Lorenz data and streamflow data. The Lorenz data was used for new method verification, while streamflow data was used to further test the effectiveness and stability of the proposed algorithm.

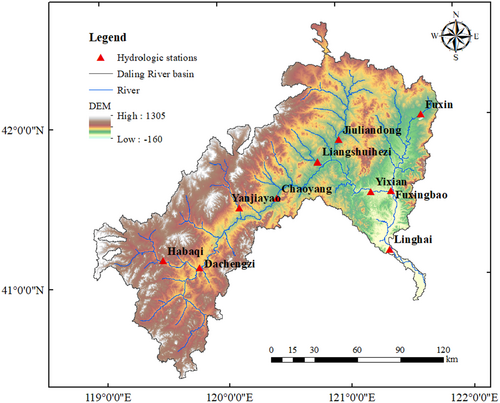

For streamflow data, daily streamflow series in 10 hydrologic stations in the Daling River basin of Liaoning Province, China, which is situated in a semi-arid area with an average annual precipitation of 400–600 mm (as shown in Figure 2), were selected in this study. The start and end dates of these streamflow series are presented in Table 1. It is important to note that, the streamflow data in missing months for Yanjiayao, Liangshuihezi, and Fuxingbao stations in 1997, were substituted with the previous year's data for the same day.

| Station number | Station ID | Station name | Start-end of the series (year/month/day) |

|---|---|---|---|

| 1 | 21200355 | Dachengzi | 1980/1/1–1999/12/31 |

| 2 | 21200450 | Chaoyang | 1980/1/1–1999/12/31 |

| 3 | 21200600 | Yixian | 1983/1/1–1999/12/31 |

| 4 | 21200650 | Linghai | 1980/1/1–1999/12/31 |

| 5 | 21210800 | Habaqi | 1980/1/1–1999/12/31 |

| 6 | 21211201 | Jiuliandong | 1980/1/1–1999/12/31 |

| 7 | 21211355 | Fuxing | 1980/1/1–1999/12/31 |

| 8 | 21210955 | Yanjiayao | 1980/1/1–1999/12/31 |

| 9 | 21211045 | Liangshuihezi | 1980/1/1–1999/12/31 |

| 10 | 21211450 | Fuxingbao | 1980/1/1–1999/12/31 |

3 RESULTS

3.1 Lorenz data analysis

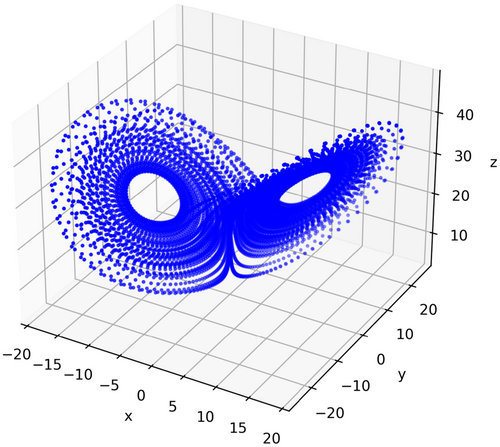

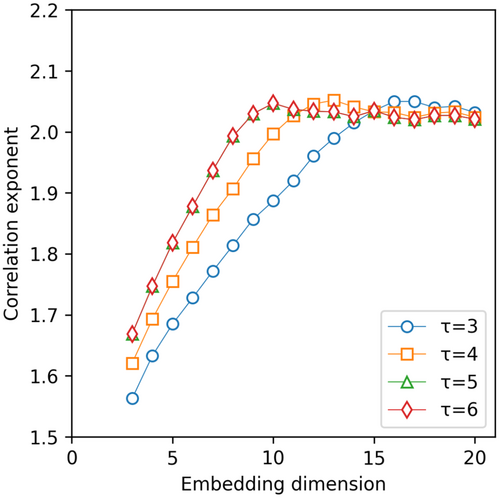

Figure 3 presents the process of calculating correlation exponent with and for the Lorenz attractor based on the proposed algorithm. Based on distinct windows tracking technique, versus curve was divided into 50 segments marked by different colors (Figure 3a). The slope of each segment was determined through the least square fitting of the points within that segment (Figure 3b). The results of fuzzy C-means clustering analysis of these slopes are shown in Figure 3c. It is obvious that the slope exhibited a certain degree of fluctuation, followed by a slightly smaller variation within a specific range, and ultimately infinitely approached 0 at a faster pace. Usually, the second part, represented by red dots, was regarded as the scaling range, and the mean value of the slopes within this range was the correlation exponent we needed. Figure 4 presents the relationship between the correlation exponent and embedding dimension values. The correlation exponent increased with increase in the embedding dimension for = 3, 4, 5, 6 up to a certain point and saturated beyond that. The mean saturation value of the correlation exponent (i.e. CD value) was 2.03 ± 0.01, sufficiently close to the theoretical value (i.e., 2.05 ± 0.01) (Grassberger & Procaccia, 1983a), indicating the reasonable effectiveness of the proposed algorithm.

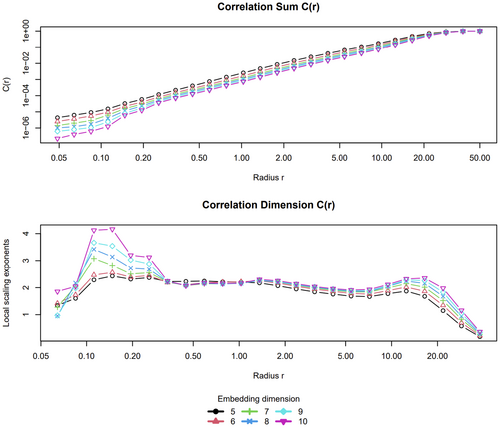

In addition, the package “nonlinearTseries” in R project was employed to detect the CD value of the Lorenz attractor. The time lag and embedding dimension of the Lorenz data were 9 and 5, respectively, and the selected interval of radius (i.e., the scaling region) was from 0.5 to 10. The CD value detected by this package was 2.05 (Figure 5), which was also close to the theoretical value. In summary, the CD values detected by the proposed algorithm and the package “nonlinearTseries” in R project are relatively similar. This indicates that the two methods are both useful to detect the intrinsic dimensionalities of the Lorenz system.

3.2 Actual streamflow data analysis

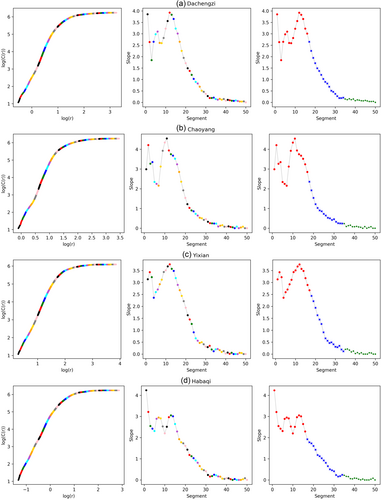

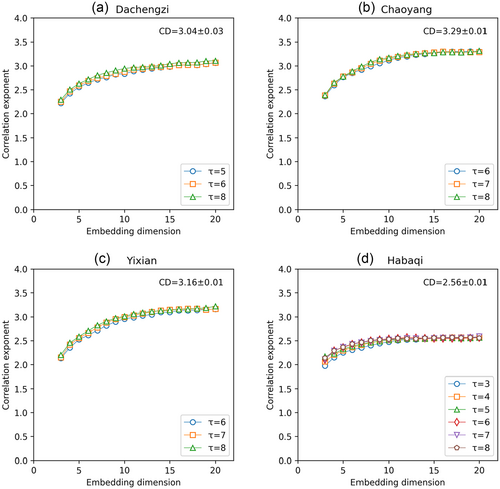

10 streamflow time series data in the Daling River basin were employed for analysis to further verify the effectiveness of the proposed algorithm. Here, taking the streamflow series of Dachengzi station, Chaoyang station, Yixian station, and Habaqi station, for example, the CD values of four streamflow series were calculated using the proposed algorithm. Figure 6a–d shows the process of calculating the correlation exponent, with specific and m for 4 streamflow series of the Daling River basin using the proposed algorithm. Based on the distinct windows tracking technique, versus curve was divided into 50 segments marked by different colors (left figure). The slope of each segment was determined through the least square fitting of the points within that segment (middle figure). The results of fuzzy C-means clustering analysis of these slopes are given in the right figure. As can be seen, the slope showed a certain fluctuation, followed by a rapid decrease, and ultimately infinitely approached 0. Hence, we chose the first part, represented by red dots, as the scaling range. As mentioned above, the mean value of the slopes within this range was the correlation exponent. Figure 7a–d shows the results of the correlation dimension analysis based on the proposed algorithm for 4 streamflow series. For each streamflow series, the correlation exponent, in general, increased with the embedding dimension up to a certain dimension, beyond which it was saturated, for different , indicating the presence of deterministic chaos. The CD values of four streamflow series were 3.04, 3.29, 3.16, and 2.56, respectively.

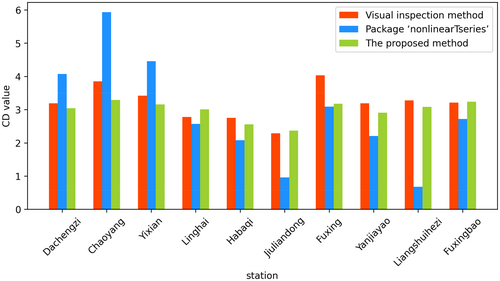

Table 2 provides a summary of the results of correlation dimension analysis performed on all the 10 streamflow time series of Daling River basin based on three methods, including visual inspection method, the proposed method, and the package “nonlinearTseries” in R project. It is worth noting that, in this study, the CD values detected by the visual inspection method, which was combined with the distinct windows tracking technique to improve the accuracy, were considered the true values. The finite non-integer CD values obtained using the proposed algorithm ranged from 2.37 to 3.29, indicating the presence of chaotic behaviors in 10 streamflow series. From Table 2, we can see that the plot of correlation exponent versus embedding dimension of the streamflow series of other hydrologic stations, except Linghai and Jiuliandong stations, saturated for at least three different time lags using the proposed algorithm. This makes the estimated CD values more reliable. In addition, it can be seen that, in most cases, as time lag increases, the embedding dimension may decrease or remain constant.

| Name | Visual inspection method | Package “nonlinearTseries” | The proposed method | ||||||

|---|---|---|---|---|---|---|---|---|---|

| m | CD | m | CD | m | CD | ||||

| Dachengzi | 5, 6, 8 | 16, 13, 11 | 3.19 ± 0.02 | 5 | 9 | 4.07 | 5, 6, 8 | 15, 15, 15 | 3.04 ± 0.03 |

| Chaoyang | 5, 6, 7 | 13, 16, 13 | 3.85 ± 0.07 | 3 | 11 | 5.93 | 6, 7, 8 | 15, 15, 14 | 3.29 ± 0.01 |

| Yixian | 4, 5, 6, 7 | 16, 15, 12, 11 | 3.42 ± 0.04 | 2 | 14 | 4.46 | 6, 7, 8 | 16, 15, 15 | 3.16 ± 0.01 |

| Linghai | 3, 4, 6 | 15, 17, 16 | 2.78 ± 0.23 | 3 | 8 | 2.57 | 6, 7 | 19, 17, 15 | 3.01 ± 0.02 |

| Habaqi | 3, 4, 5 | 11, 8, 8 | 2.75 ± 0.06 | 1 | 10 | 2.08 | 3, 4, 5, 6, 7, 8 | 15, 12, 12, 11, 11, 11 | 2.56 ± 0.01 |

| Jiuliandong | 6, 7, 8 | 17, 16, 14 | 2.29 ± 0.11 | 1 | 15 | 0.96 | 6, 7 | 18, 17 | 2.37 ± 0.05 |

| Fuxing | 5, 6, 7 | 15, 12, 12 | 4.03 ± 0.05 | 2 | 12 | 3.09 | 3, 4, 5 | 15, 15, 15 | 3.18 ± 0.06 |

| Yanjiayao | 5, 6, 7 | 17, 15, 15 | 3.19 ± 0.04 | 1 | 9 | 2.21 | 3, 4, 5, 6 | 15, 16, 17, 17 | 2.91 ± 0.07 |

| Liangshuihezi | 6, 7, 8 | 17, 17, 16 | 3.28 ± 0.02 | 1 | 13 | 0.68 | 4, 6, 7, 8 | 16, 17, 14, 16 | 3.08 ± 0.06 |

| Fuxingbao | 6, 7, 8 | 17, 15, 15 | 3.21 ± 0.02 | 2 | 11 | 2.72 | 6, 7, 8 | 17, 15, 15 | 3.24 ± 0.01 |

To better compare the results of the correlation dimension analysis based on three methods, the CD values of 10 streamflow series calculated by three methods are shown in Figure 8. It can be observed that, the CD values calculated by the proposed algorithm were closer to the actual CD values than those calculated by the package “nonlinearTseries” in R project. The deviations between the CD values detected by the proposed algorithm and the true values were approximately 0.2 for these streamflow series, except the streamflow series of Chaoyang and Fuxing stations. However, it is evident that there was a significant disparity between the estimated CD values for 10 streamflow series based on the package “nonlinearTseries” and the true values. This indicates it is useful to detect the CD values for the Lorenz data based on the proposed algorithm and the package “nonlinearTseries,” but the proposed algorithm was superior to the package “nonlinearTseries” for the actual streamflow series. Furthermore, to calculate the CD value of one streamflow series with from 3 to 8 and from 3 to 20, the operation time required by the visual inspection method was approximately 3 h, while the proposed method only took 6 min. The average operation efficiency of the proposed algorithm is 30 times faster than that of the visual inspection method (i.e., the original G-P algorithm). In summary, the proposed algorithm is not only intelligent and fast, reducing human interference, but also works better in real-world data.

4 DISCUSSION

As mentioned previously, the results of correlation dimension analysis for the Lorenz data and 10 actual streamflow series based on three methods, including visual inspection method, the proposed method, and the package “nonlinearTseries” in R project, indicate the effectiveness and efficiency of the proposed algorithm for calculating CD values. There was a significant discrepancy between the CD values calculated based on the package “nonlinearTseries” and the true values, primarily due to the following two reasons. First is the choice of time lag and embedding dimension. As we all know, there has always been controversy over the selection of time lag and embedding dimension, which is important for the phase space reconstruction and correlation dimension estimation. Various methods have been proposed for the selection of and , including the autocorrelation function method (e.g., Leung & Lo, 1993), the mutual information method (e.g., Haykin & Puthusserypady, 1997; Haykin & Xiao Bo Li, 1995), the false nearest neighbors (e.g., Hegger et al., 1999; Kennel et al., 1992; Rhodes & Morari, 1997), the correlation integral method (e.g. Ghorbani et al., 2018) and the Cao's method (e.g., Cao, 1997). Different values of and are obtained using different methods, leading to different estimated CD values. In this study, the parameters and m involved in correlation dimension analysis based on the package “nonlinearTseries” in R project were obtained using the mutual information method and the Cao's method, respectively, and the estimated CD values differed greatly from the true values. This indicates that it is inaccurate to calculate the CD values by only one and only one . Some studies (e.g., Benmebarek & Chettih, 2023; Di et al., 2018; Di et al., 2019; Labat et al., 2016; Rolim & de Souza Filho, 2023) have selected one but multiple when conducting the correlation dimension analysis. However, it is not reliable, and sometimes, the correlation exponent value increases with an increase in embedding dimension for obtained using aforementioned methods. Second is the selection of the scaling region. It is quite difficult and subjective to determine the scaling region of actual data (e.g., streamflow, groundwater, precipitation) by visual inspection method. While it is relatively accurate to detect the CD values based on the visual inspection method combined with the distinct windows tracking technique, it typically requires 3 h for a time series of over 7000 data points. The larger the amount of data, the more time it takes to compute.

In view of these issues, in the present study, different and m were tested for each time series, which was a better choice (e.g. Sivakumar, 2000; Tsonis et al., 1993). The CD values were calculated when the saturation range in the correlation exponent versus embedding dimension plot was visible for at least three different time lags. This makes the estimated CD values more stable and convincing. Also, the distinct windows tracking technique, and the fuzzy C-means clustering algorithm, were integrated into the original G-P algorithm. This makes it objective, fast, and accurate to detect the CD value of a chaotic time series, especially actual time series. Nevertheless, there still existed a certain disparity between the estimated CD values obtained using the proposed algorithm and the true values.

Previous studies show that the slope is often not constant within the scaling range for finite real-world data sets (e.g., Sivakumar & Singh, 2012; Sivakumar, 2017), which also can be seen in Figure 5a–d. Thus, it is difficult to identify the scaling region accurately. However, we know the second part, where the slope continuously descended, and the third part, where the slope reached saturation, was definitely not the scaling range, and the first part was considered the scaling range, or at least covered the scaling region. From Figure 6a–d (left), we can find some points did not belong to the linear part, but we were unable to rule them out, leading to the underestimation of the CD values. Further investigations are required to solve this problem in the future.

Additionally, as mentioned above, it is easy to detect the CD values of ideal data, such as the Lorenz data, but it is much more complex to detect the CD values of actual data, such as streamflow, groundwater, and precipitation. In the future, with the continuous extension of actual data length, more time will be required to calculate the CD values. Besides, due to the influence of climate change and anthropogenic activities, the intrinsic dynamic systems of the actual data may change dramatically, leading to large changes in the complexity of the actual data. The choice of study period of actual data also has a certain impact on the CD values. Therefore, it is of great significance to fast and accurately detect the CD values of actual time series. With the development of big data in the future, the proposed algorithm will have stronger generalizability. Before modeling, this algorithm can be used to mine the dimension information, which can greatly reduce the workload and be used to guide the selection of prediction models. This can improve both work efficiency and prediction accuracy to a certain extent. In the future, our research will combine this algorithm with hydrological model simulation and prediction.

5 CONCLUSION

In this study, the distinct windows tracking technique and fuzzy C-means clustering algorithm are introduced in the original G-P algorithm to calculate the correlation dimension value of a chaotic time series. The Lorenz data and streamflow time series data of 10 hydrologic stations in the Daling River basin are employed to verify the effectiveness of the proposed method. Also, the package “nonlinearTseries” in R project is used for comparison. The following conclusions are reached: First, the proposed method is an automatic method for accurately and rapidly calculating the CD values, especially for the actual time series data, and the average operation efficiency of the proposed algorithm is 30 times faster than that of the original G-P algorithm. Second, the CD values of a chaotic time series should be estimated through different time lags and embedding dimensions, which are more reliable than the CD values calculated by only one-time lag and one embedding dimension.

The improved G-P algorithm proposed in this study can fast and accurately detect the CD values of actual time series, such as streamflow, groundwater, and precipitation, thus providing a more reliable estimation of the number of dominant processes of governing the behavior of the time series. It may facilitate a better understanding of complex interactions and feedback behind these actual time series, and provide new insights for the model optimization of these actual time series. Further studies are underway to explore these aspects.

ACKNOWLEDGMENTS

The research was funded by the IWHR Basic Scientific Research Project (JZ110145B0072024), the IWHR Internationally-Oriented Talent for International Academic Leader Program (0203982012), and the National Natural Science Foundation of China (51609257).

ETHICS STATEMENT

None declared.

APPENDIX A: Package “nonlinearTseries”

Package “nonlinearTseries” was developed by Constantino A. Garcia and Gunther Sawitzk. It depends on R greater than or equal to version 3.3.0. It can be downloaded from https://github.com/constantino-garcia/nonlinearTseries. This package permits the computation of the most-used nonlinear statistics/algorithms, including generalized correlation dimension, information dimension, largest Lyapunov exponent, sample entropy, and Recurrence Quantification Analysis (RQA), among others.

Open Research

DATA AVAILABILITY STATEMENT

The data that support the findings of this study are available from the corresponding author upon reasonable request.