Artificial intelligence in rheumatoid arthritis

Edited by Lishao Guo.

Abstract

Rheumatoid arthritis (RA) is a chronic autoimmune condition that causes joint inflammation and damage and significantly affects patients' quality of life. Over the past 5 years, the application of artificial intelligence (AI), particularly deep learning, has resulted in notable advancements in the field of rheumatology. This review explores these developments, highlighting how AI has enhanced the precision and reliability of imaging techniques, such as radiography, ultrasound imaging, and magnetic resonance imaging, for managing RA. In addition, the integration of diverse data sources, including clinical records, genetic profiles, and imaging examinations, has facilitated more accurate predictions and formulation of personalized treatment strategies. However, challenges such as data variability, complexity of AI models, and ethical considerations remain. Addressing these issues is essential for further progress. Future research should focus on improving data integration, model interpretability, and ethical deployment of AI in clinical practice. These advancements have the potential to significantly improve the diagnosis and management of RA, moving closer to the goals of precision medicine in this field.

Key points

- This review explores the advancements in artificial intelligence (AI), focusing on machine learning, deep learning, and natural language processing in enhancing rheumatoid arthritis (RA) diagnosis, treatment, and prediction.

- It proposes the integration of multimodal data, such as clinical records, imaging, and genomics, for more accurate predictions and personalized treatment strategies in RA management.

- Challenges like data variability, model complexity, and ethical concerns are discussed, with a focus on future research to improve data integration, model interpretability, and ethical considerations for AI in RA clinical practice.

1 INTRODUCTION

Rheumatoid arthritis (RA) is a chronic autoimmune disease that primarily affects synovial joints, leading to inflammation and potential joint destruction. It affects approximately 1% of the global population and is more prevalent in women than in men. Furthermore, RA can affect other organs and systems, leading to comorbidities, such as myocardial infarction, stroke, and pulmonary fibrosis.1 Early diagnosis and treatment are critical for preventing irreversible joint damage and improving long-term outcomes.2

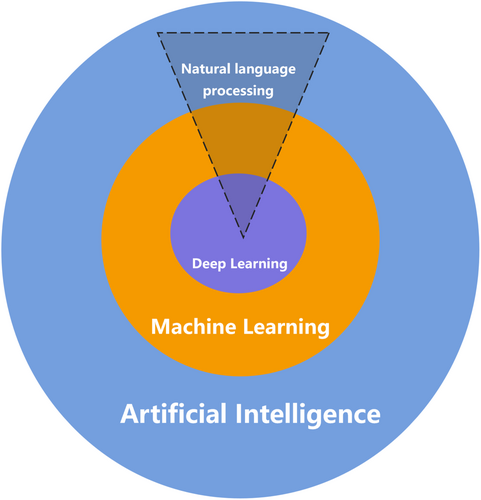

Artificial intelligence (AI) refers to the simulation of human intelligence in machines designed to perform tasks that typically require human cognition, such as visual perception, speech recognition, decision-making, and language translation.3 Machine learning (ML) is a critical subcategory of AI that focuses on developing algorithms that enable computers to learn from data and improve their performance over time.4 The process of ML through which computers learn to generate the desired output is referred to as “training.” ML methods are generally classified into three main categories based on their training approach: supervised, unsupervised, and reinforcement learning. In supervised learning, models are trained to predict future outcomes through the identification of patterns from labeled input–output data. Popular techniques in this category include random forests, support vector machines (SVMs), neural networks, and natural language processing (NLP) models. NLP models are designed to interpret and analyze text and speech, making them useful for tasks such as the analysis of electronic health records (EHRs). Unlike supervised learning, unsupervised learning focuses on uncovering hidden structures and relationships within the input data, such as identifying clusters or reducing data dimensionality.
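
The distinction between supervised and unsupervised learning can be illustrated with a minimal Python sketch. It assumes a single made-up feature per patient and uses two deliberately simple methods chosen for brevity (a nearest-centroid classifier and a one-dimensional two-cluster k-means); the data, labels, and function names are hypothetical and are not taken from any study cited in this review.

```python
from statistics import mean

# Hypothetical toy data: one numeric feature per patient with a known label.
labeled = [(1.0, "healthy"), (1.2, "healthy"), (0.8, "healthy"),
           (3.0, "RA"), (3.3, "RA"), (2.9, "RA")]


def fit_centroids(samples):
    """Supervised step: use the labels to compute one mean value per class."""
    by_label = {}
    for value, label in samples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}


def predict(centroids, value):
    """Assign the label whose class mean is closest to the new value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))


def two_means(values, iterations=10):
    """Unsupervised step: split unlabeled values into two clusters (1-D k-means, k=2)."""
    low, high = min(values), max(values)
    if low == high:
        raise ValueError("need at least two distinct values")
    for _ in range(iterations):
        near_low = [v for v in values if abs(v - low) <= abs(v - high)]
        near_high = [v for v in values if abs(v - low) > abs(v - high)]
        low, high = mean(near_low), mean(near_high)
    return sorted(near_low), sorted(near_high)


centroids = fit_centroids(labeled)                  # learns from labels
prediction = predict(centroids, 2.7)                # classifies a new case
clusters = two_means([v for v, _ in labeled])       # ignores labels entirely
```

In this sketch the supervised model needs the labels to learn, whereas the clustering step recovers the same two groups from the feature values alone.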

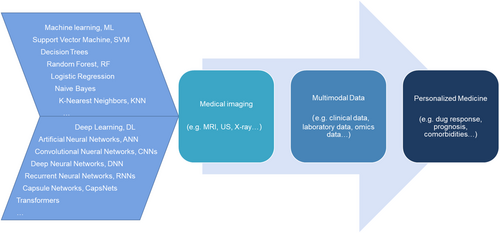

Another subset of ML is deep learning (DL), which uses artificial neural networks (ANNs) composed of multiple layers (hence “deep”) to extract complex patterns from large datasets and analyze various forms of data (Figure 1). Among the various types of ANNs, convolutional neural networks (CNNs) are particularly effective for image processing, making them invaluable for medical image analysis.5 In contrast, recurrent neural networks are designed to handle sequential data, making them suitable for tasks such as analyzing time-series medical data or predicting patient outcomes over time.6, 7 More recently, transformer models, which excel at processing sequential data through self-attention mechanisms, have gained prominence owing to their ability to provide context-aware predictions, thereby enhancing the integration and analysis of multimodal medical data8, 9 (Figure 2).
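
To make the core operation of a CNN more concrete, the following minimal sketch applies a single convolutional filter followed by a ReLU activation to a tiny image. It is an illustration under stated assumptions: the image and the kernel weights are hand-written placeholders (a trained CNN would learn its kernels from data), and, as in most DL frameworks, the "convolution" is implemented as a sliding-window cross-correlation without padding.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and sum the products."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kernel_h):
                for dj in range(kernel_w):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


def relu(feature_map):
    """Keep positive responses and set negative ones to zero."""
    return [[max(0, value) for value in row] for row in feature_map]


# Hypothetical 4x5 grayscale patch: dark on the left, bright on the right.
image = [
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
]

# Hand-written vertical-edge detector (an assumption for illustration only).
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = relu(conv2d_valid(image, kernel))
```

The resulting feature map is zero over the uniform region and responds strongly where the window covers the dark-to-bright transition, which is the kind of local pattern that stacked convolutional layers combine into higher-level image features.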

Recently, AI has emerged as a transformative tool in rheumatology.10 The capacity of AI to process and analyze vast heterogeneous datasets, including clinical records, imaging, and genomics, has established it as an indispensable tool for managing the complexity inherent in RA. By integrating diverse data modalities, AI facilitates the detection of previously unrecognized patterns, enabling early diagnosis, formulation of personalized treatment strategies, and identification of novel therapeutic targets. Moreover, AI-driven predictive models are increasingly being applied to forecast disease progression and evaluate the risk of comorbidities, such as cardiovascular complications, in patients with RA.

Despite the promise of AI in improving the early diagnosis and management of RA, it remains insufficiently mature for full integration into clinical practice. This review summarizes recent advances in the application of AI, particularly ML, DL, and NLP, in the field of RA, focusing on imaging techniques, multimodal data integration, and personalized medicine. Its objective is to highlight the progress made in the past 5 years and identify future research directions to enhance the management of RA.

2 RA IMAGING: RECENT ADVANCES

RA imaging primarily relies on radiography, ultrasound imaging, and magnetic resonance imaging (MRI), each of which has specific applications in the diagnosis and monitoring of the disease.11 AI methods, such as CNNs, have been used in medical imaging to automatically segment joint regions, detect erosions and synovitis, and quantify joint space narrowing. These methods improve consistency and efficiency, particularly by reducing observer variability, although they do not necessarily surpass human accuracy in all cases (Tables 1–3).12-30

| Year | First author | Study objective | Study methodology | Sample size (training/test) | Study results | Strengths | Weaknesses |
|---|---|---|---|---|---|---|---|
| 2024 | Peng14 | Develop an automatic diagnostic system using CNN for RA recognition and staging from hand radiographs. | CNN (AlexNet, VGG16, GoogLeNet, ResNet50, EfficientNetB2); 100 epochs, AdamW optimizer. | Training: 240, Testing: 104 | GoogLeNet: 97.80% AUC, 100% sensitivity for RA recognition; VGG16: 83.36% AUC, 92.67% sensitivity for staging. | High sensitivity and AUC, robust across architectures, especially useful in resource-limited settings. | Lower accuracy in staging, requires large annotated datasets for better performance. |
| 2024 | Izumi15 | Improve detection accuracy of ankylosis and subluxation in hand joints for mTSS estimation. | Ensemble DNNs (SSD). | Training: 210, Testing: 50 (5-fold CV) | 99.8% detection accuracy for MP/PIP joints; high precision in detecting ankylosis and subluxation. | High detection and classification accuracy, ensemble method improved overall performance. | Excluded carpal joints, limited data set size, potential issues with joint overlap and image resolution. |
| 2024 | Ma16 | Develop a DL model to distinguish RA from OA and evaluate the impact of different training parameters. | EfficientNet-B0 CNN, varied image resolution, pretraining datasets, and radiographic views. | Training: 9714 exams, Testing: 250 exams | AUC: 0.975 for arthritis, 0.955 for RA detection, kappa: 0.806 for three-way classification (RA, OA, no arthritis). | High accuracy in distinguishing RA from OA, useful across clinical settings. | Reduced performance in early RA and mild OA detection, high computational resources required. |
| 2023 | Okita17 | Develop a DL model for assessing AAS in RA patients using cervical spine X-ray images. | HRNet-based CNN for keypoint detection; ADI and SAC values compared to clinician assessments. | Training: 3480, Testing: 408 | 99.5% key coordinate identification; Sensitivity: 0.86 (ADI), 0.97 (SAC); specificity lower. | High accuracy in detecting AAS, quantitative output useful for tracking disease progression. | Lower specificity, limited to AAS, requires validation across different hospitals. |
| 2022 | Mate18 | Develop an efficient CNN model for automatic RA classification in hand X-rays. | Customized CNN, preprocessing included image resizing, noise removal, feature extraction, compared with SVM and ANN classifiers. | Training: 218 (120 RA, 98 normal), Testing: 72 (40 RA, 32 normal) | 94.64% accuracy, sensitivity: 0.96, specificity: 0.92. | High accuracy, efficient preprocessing, improved performance over other methods like SVM and ANN. | Limited data set size, potential overfitting, requires further validation with larger datasets. |
| 2022 | Miyama19 | Develop a DL system for automatic bone destruction evaluation in RA using hand X-rays utilizing contextual joint information. | DNN (DeepLabCut) for joint detection, classification models (SISO, MIMO) for binary classification. | Training: 180, Testing: 46 | 98.0% detection for erosion, 97.3% for JSN; MIMO model outperformed surgeons in erosion classification. | High accuracy in detecting and classifying bone destruction, better performance than surgeons for erosion detection. | Limited to hand joints, struggled with mild bone destruction, requires more data for improved performance. |
| 2020 | Üreten20 | Develop an automated diagnostic method using CNNs to assist in RA detection from hand radiographs. | CNN with six convolution layers, batch normalization, ReLU, max-pooling; data augmentation (rotation, translation). | Training: 135 (61 normal, 74 RA), Testing: 45 (20 normal, 25 RA) | 73.33% accuracy, sensitivity: 0.6818, specificity: 0.7826, precision: 0.7500. | Potential to assist in RA diagnosis for both specialists and non-specialists. | Moderate accuracy and sensitivity, requires larger datasets for clinical application. |

- Abbreviations: AAS, atlantoaxial subluxation; ADI, atlantodental interval; ANN, artificial neural network; AUC, area under the curve; CNN, convolutional neural network; CV, cross-validation; DL, deep learning; DNN, deep neural network; JSN, joint space narrowing; MIMO, multiple-input, multiple-output; MP, metacarpophalangeal; mTSS, modified total Sharp score; OA, osteoarthritis; PIP, proximal interphalangeal; RA, rheumatoid arthritis; SAC, space available for the spinal cord; SISO, single-input, single-output; SSD, single shot multibox detector; SVM, support vector machine; VGG, Visual Geometry Group.
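
The performance measures reported in the table above (sensitivity, specificity, precision, and accuracy) all derive from the four cells of a binary confusion matrix. The sketch below shows the arithmetic. The counts passed to the function are hypothetical: they were chosen only so that the outputs reproduce the values listed for Üreten et al. and are not the confusion matrix published by that study.

```python
def classification_metrics(tp, fp, tn, fn):
    """Compute common diagnostic metrics from confusion-matrix counts.

    tp: diseased cases correctly flagged    fn: diseased cases missed
    tn: healthy cases correctly cleared     fp: healthy cases wrongly flagged
    """
    return {
        "sensitivity": tp / (tp + fn),   # share of diseased cases detected
        "specificity": tn / (tn + fp),   # share of healthy cases cleared
        "precision": tp / (tp + fp),     # share of positive calls that are correct
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }


# Hypothetical counts for a 45-image test set (illustration only).
metrics = classification_metrics(tp=15, fp=5, tn=18, fn=7)
rounded = {name: round(value, 4) for name, value in metrics.items()}
```

Because accuracy depends on the mix of diseased and healthy cases in the test set, sensitivity and specificity are usually the more informative values when comparing studies with different class balances.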

| Year | First author | Study objective | Study methodology | Sample size (training/test) | Study results | Strengths | Weaknesses |
|---|---|---|---|---|---|---|---|
| 2024 | Chang21 | Develop a diagnostic system using U-Net with self-attention for detecting synovial hypertrophy and effusion. | SEAT-UNet (Self-Attention U-Net) | 756 (training), 170 (testing) for hypertrophy; 269 (training), 173 (testing) for effusion | 100% sensitivity, 84% Dice coefficient for hypertrophy; 86% sensitivity, 84% Dice coefficient for effusion. | High accuracy in segmentation, effective feature learning with self-attention, reduced computational requirements compared to other models. | Limited data set diversity, potential lack of interpretability, concerns about generalizability. |
| 2024 | He22 | Investigate DL models based on multimodal ultrasound images for quantifying RA activity. | ResNet-based CNN models with attention module, BiLSTM for dynamic images. | 1244 (training), 152 (test cohort 1), 354 (test cohort 2) | AUC up to 0.95 for SPD and DPD models, comparable to experienced radiologists. | High accuracy, potential to assist radiologists, especially with dynamic imaging, better performance than traditional methods. | Limited training data, potential variability across joints, challenges in model generalizability. |
| 2023 | Lo23 | Develop a CAD system using DL for knee septic arthritis diagnosis based on ultrasound images. | Vision Transformer (ViT) | 214 non-septic arthritis images, 64 septic arthritis images | 92% accuracy, AUC of 0.92 combining GS and PD features. | High accuracy, efficient computation, better performance than CNNs. | Small data set, potential overfitting, misclassification due to image quality issues like shadows. |
| 2020 | Christensen24 | Develop and evaluate a cascaded CNN model for automatic grading of disease activity in RA using ultrasound images. | Cascaded CNN | 1678 (training), 322 (testing) | 83.9% four-class classification accuracy, no significant difference in performance compared to an expert rheumatologist on a per-patient basis. | High accuracy, potentially useful as an assistive tool in clinical practice. | Misclassifications mostly occurred between adjacent classes (EOSS scores 0 to 1 and 2 to 3), limited by a small data set. |

- Abbreviations: AUC, area under the curve; BiLSTM, bidirectional long short-term memory; CAD, computer-aided diagnosis; CNN, convolutional neural network; DL, deep learning; DPD, dynamic power Doppler; EOSS, EULAR-OMERACT synovitis scoring; GS, gray-scale; PD, power Doppler; RA, rheumatoid arthritis; SPD, static power Doppler.

| Year | First author | Study objective | Study methodology | Sample size (training/test) | Study results | Strengths | Weaknesses |
|---|---|---|---|---|---|---|---|
| 2024 | Schlereth25 | Develop and validate a CNN-based approach for automated scoring of erosions, osteitis, and synovitis in hand MRI of inflammatory arthritis patients. | ResNet-3D CNN architecture trained with different MRI sequences (T1, T2 fs, T1 fs CE) for score prediction. | Training/Internal Validation: 211 MRIs from 112 patients, External Validation: 220 MRIs from 75 patients. | The CNN achieved macro AUC of 92% for erosions, 91% for osteitis, and 85% for synovitis; robust performance in external validation data set. | High diagnostic accuracy, efficient use of MRI sequences, potential to reduce the need for contrast-enhanced scans. | Limited training data size, potential underestimation of high erosion scores, performance variability with different MRI sequences. |
| 2024 | Izumi15 | Develop a DNN model to detect and classify ankylosis and subluxation in hand joints from radiographic images automating the estimation of mTSS. | SSD with VGG16 as the feature extractor; ensemble mechanism integrating multiple SSD detectors for enhanced performance. | 260 X-ray images of 130 patients (5200 PIP/IP and MP joints for ankylosis detection, 4680 PIP and MP joints for subluxation/dislocation detection). | High detection accuracy for ankylosis (99.88%) and subluxation/dislocation (99.93%); improved detection rate with ensemble methods. | High detection and classification accuracy, effective in overcoming false detections due to image artifacts, robust performance with ensemble learning. | Limited data set size, exclusion of carpal and foot joints from analysis, potential misclassification in severely deformed joints. |
| 2024 | Wang26 | Develop a DL model for the segmentation of the SC and IPFP and distinguish between three common types of knee synovitis (RA, GA, PVNS). | Semantic segmentation model trained on manually annotated sagittal proton density-weighted images; classification compared to radiologists. | Training: 233, Internal Test: 93, External Test: 50. | High segmentation accuracy (mIoU 0.555, mean accuracy 0.989) and better or comparable performance to senior radiologists in classification tasks (AUC 0.83 internal, 0.76 external). | High diagnostic performance, faster segmentation compared to manual processes, explainable AI via CAM. | Limited sample size, data from only two institutions, focused only on three types of synovitis. |
| 2024 | Fang27 | Develop a DL-based method for the automatic segmentation and quantification of enhanced synovium in RA patients using DCE-MRI. | 1D-CNN with dilated causal convolution and SELU activation for segmentation of synovitis based on TIC analysis. | Training and Testing: 28 RA patients with 407 joint bounding boxes (129 with positive synovitis). | Mean sensitivity: 85%, specificity: 98%, accuracy: 99%, precision: 84%, Dice score: 0.73 for synovitis segmentation; strong correlation with manual segmentation at pixel-based (correlation coefficient r = 0.87) and patient-based levels (correlation coefficient r = 0.84). | High accuracy, fast processing time (1 s per bounding box), potential to significantly reduce radiologists' workload. | Limited by the small data set size, potential overestimation of synovitis volume due to similarity with other tissues, no external validation data set. |
| 2024 | Schlereth25 | Train, test, and validate a CNN-based approach for automated scoring of bone erosions, osteitis, and synovitis in hand MRI of inflammatory arthritis patients. | ResNet-3D CNN architecture trained on different MRI sequences (T1, T2 fs, T1 fs CE) for score prediction; fivefold CV used for training and validation. | Training/Internal Validation: 211 MRIs from 112 patients, External Validation: 220 MRIs from 75 patients. | High macro AUC (92% for erosions, 91% for osteitis, 85% for synovitis); consistent performance across different pathologies. | High diagnostic accuracy using fewer MRI sequences, potential to reduce the need for contrast-enhanced scans. | Lower performance for synovitis compared to erosions and osteitis, struggled with high erosion scores, variability in performance based on training samples and MRI sequences used. |
| 2024 | Li28 | Develop a consistency-based DL framework for early RA prediction and classification using wrist, MCP, and MTP joint MRI scans. | Consistency-based framework using self-supervised pre-training with a MAE and contrastive loss function built on a U-Net encoder architecture for feature extraction and classification. | Training: 5945 MRI scans from 2151 subjects (1247 with early arthritis, 727 with clinically suspect arthralgia, 177 healthy controls); Testing: Separate test set not specified in size. | Mean AUC: 83.6%, 83.3%, 69.7% for three classification tasks, 67.8% for RA prediction; promising results for early RA detection via MRI using DL. | Promising results for early detection and classification, leveraging anatomical consistency for robust learning despite limited data. | Limited by data availability, potential overfitting, challenges in distinguishing RA from other arthritides, lack of external validation datasets. |
| 2022 | Folle29 | Evaluate whether neural networks can distinguish between seropositive RA, seronegative RA, and PsA based on inflammatory patterns from hand MRIs. | ResNet neural networks pre-trained on video understanding; separate networks used for each MRI sequence followed by ensemble learning. MRI sequences included T1 coronal, T2 coronal, T1 coronal CE, T1 axial CE, and T2 axial. | Training: 519 MRIs, Testing: 130 MRIs. | Highest AUC: 75% for seropositive RA versus PsA, 74% for seronegative RA versus PsA, 67% for seropositive versus seronegative RA; Psoriasis patients mostly classified as PsA indicating potential early PsA-like patterns in psoriasis. | Potential for distinguishing between different forms of arthritis using MRI, reduced need for contrast-enhanced sequences without significant loss of accuracy. | Moderate classification accuracy due to small data set size; model's performance plateaued, suggesting further improvements require larger datasets. |
| 2021 | More13 | Develop an automatic system for grading knee RA severity using MRI, focusing on JSN and KL grading. | ResNet50 architecture for classification with preprocessing by SANR-CNN for noise reduction and segmentation by MultiResUNet combined with DWT; Adam optimizer during training. | Training: 143 subjects with 23652 images, Testing: 50 subjects with 7928 images. | 96.85% accuracy, AUC: 0.98, precision: 98.31%, low mean absolute error of 0.015. | High accuracy in RA severity grading, robust preprocessing and segmentation, reduced computational time. | Limited training data, focus only on KL grading, lack of consideration for other relevant features like synovial fluid or osteophytes. |
| 2019 | Murakami30 | Develop a method for automatically detecting bone erosion in hand radiographs of RA patients to assist radiologists in diagnosis. | DCNN for classification combined with the MSGVF Snakes algorithm for segmentation. | Training: 129 cases (90 RA, 39 without RA), Testing: 30 RA cases. | TPR: 80.5%, FPR: 0.84%, average of 3.3 false-positive regions per case. | High sensitivity, low false-positive rate, potential to assist radiologists effectively in detecting bone erosions. | Over-segmentation and over-learning issues in DCNN, limited data size for training, no practical application level achieved yet. |

- Abbreviations: 1D-CNN, one-dimensional convolutional neural network; AI, artificial intelligence; AUC, area under the curve; CAM, class activation mapping; CE, contrast enhanced; CNN, convolutional neural network; CV, cross-validation; DCE-MRI, dynamic contrast-enhanced magnetic resonance imaging; DCNN, deep convolutional neural network; DL, deep learning; DNN, deep neural network; DWT, discrete wavelet transform; FPR, false-positive rate; fs, fat suppressed; GA, gouty arthritis; IP, interphalangeal joint; IPFP, infrapatellar fat pad; JSN, joint space narrowing; KL, Kellgren-Lawrence; MAE, masked autoencoder; MCP, metacarpophalangeal joint; mIoU, mean intersection over union; MP, metacarpophalangeal; MRI, magnetic resonance imaging; MSGVF, multiscale gradient vector flow; MTP, metatarsophalangeal joint; mTSS, modified total Sharp score; PIP, proximal interphalangeal; PsA, psoriatic arthritis; PVNS, pigmented villonodular synovitis; RA, rheumatoid arthritis; SANR-CNN, sparse aware noise reduction-convolutional neural network; SC, suprapatellar capsule; SELU, scaled exponential linear unit; SSD, single shot multibox detector; TIC, time–intensity curve; TPR, true-positive rate; VGG, Visual Geometry Group.

2.1 Radiography in RA

Traditionally, radiography has been the standard for detecting joint space narrowing and erosion, which are essential for the diagnosis of RA. However, traditional scoring systems, such as the Sharp/van der Heijde method, rely heavily on manual interpretation, leading to variability and potential inaccuracies.31 Much effort has been made to improve the consistency and accuracy of radiography and reduce observer variability.

Before 2019, the applications of AI in this domain focused on automating the detection and quantification of radiographic features. For example, early ML algorithms were trained to recognize patterns in radiographs corresponding to various stages of RA, offering an efficient tool that complements manual scoring systems by reducing time and observer variability.32

Since 2019, there has been a notable shift toward the use of DL, particularly CNNs, to enhance the precision of RA assessment. A landmark study in 2019 utilized a U-Net-based architecture to automatically detect synovitis in wrist radiographs, achieving a high Dice coefficient, which marked an improvement over the traditional method.33 Building on this foundation, Üreten et al.20 applied a CNN to detect RA from hand radiographs, demonstrating the potential of DL in aiding the diagnosis of RA, albeit with moderate accuracy. Miyama et al.19 introduced a more sophisticated deep neural network (DNN)-based system that leveraged contextual information from hand and wrist joints (16 joints for erosion and 15 joints for joint space narrowing) to enhance detection accuracy, particularly for bone erosion. Okita et al.17 expanded the application of DL to cervical spine radiographs, using a high-resolution network (HRNet) to assess atlantoaxial subluxation in RA with high sensitivity, an important step toward tracking the progression of RA.

A series of studies in 2024 further refined these methods. Peng et al.14 explored different CNN architectures, such as GoogLeNet and VGG16, to improve the accuracy of diagnosis and staging of RA from hand radiographs, achieving high area under the curve (AUC) and sensitivity. Ma et al.16 focused on distinguishing RA from osteoarthritis using EfficientNet-B0, showing impressive discriminative power but facing challenges in detecting early RA. Finally, Izumi et al.15 advanced the field by developing an ensemble of single-shot multibox detector models for detecting ankylosis and subluxation, pushing the automation of the modified total Sharp score assessment toward clinical viability.
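
Because AUC is the headline metric in most of these studies, it is worth recalling what it measures: the probability that a randomly chosen positive case receives a higher model score than a randomly chosen negative case. The following minimal sketch computes it directly from that definition by comparing every positive with every negative; the labels and scores are made-up values, and the function name is hypothetical.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of positive/negative pairs ranked correctly.

    A tie between a positive and a negative score counts as half a win.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for pos_score in positives:
        for neg_score in negatives:
            if pos_score > neg_score:
                wins += 1.0
            elif pos_score == neg_score:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical model outputs: 1 = RA, 0 = no RA.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2]
auc = roc_auc(labels, scores)  # 8 of 9 pairs are ranked correctly
```

An AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation, which helps put values such as 0.955 or 0.67 from the tables above into perspective.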

2.2 Ultrasound imaging in RA

Ultrasound imaging is widely used to evaluate synovitis and monitor disease progression in patients with RA. Although the Outcome Measures in Rheumatoid Arthritis Clinical Trials-European League Against Rheumatism Synovitis Scoring (OESS) system standardizes ultrasound assessments, it remains dependent on the operator's skill and experience, which can lead to inconsistent results.34 The introduction of AI in this field aims to reduce observer variability and enhance the consistency of ultrasound interpretation, particularly in routine applications.

Before 2019, AI applications in RA ultrasound imaging had begun to gain traction. Andersen et al.35 were among the pioneers in this area, utilizing well-known CNN architectures, such as VGG-16 and Inception v3. By pre-training these models on the ImageNet data set, they achieved promising results, particularly in differentiating between healthy joints (OESS score: 0–1) and those with pathology (OESS score: 2–3), with an AUC of 0.93. However, the ability of these models to precisely match human scoring on detailed ordinal scales was limited.

Since 2019, there have been significant advancements in the application of AI in ultrasound imaging. A notable study in 2020 by Christensen et al.24 refined the use of cascaded CNNs to automatically score RA disease activity based on ultrasound images, achieving an accuracy of 83.9%. The scoring performed by this method demonstrated strong agreement with scoring by expert rheumatologists, highlighting the potential of AI to assist in clinical assessments. Building on this foundation, a 2023 study by Lo et al.23 employed vision transformer models for knee septic arthritis detection, achieving 92% accuracy and further improving the precision of AI-driven diagnostics. In 2024, Chang et al.21 introduced a self-attention-enhanced U-Net model that achieved 100% sensitivity in detecting synovial hypertrophy and an 84% Dice coefficient in effusion detection, marking a leap forward in the accuracy of RA-related ultrasound analysis. Xu et al.22 developed multimodal DL models incorporating residual neural network (ResNet) and bidirectional long short-term memory architectures to analyze both static and dynamic ultrasound images for RA disease activity scoring.
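
The Dice coefficient used to evaluate segmentation models measures the overlap between a predicted mask and a reference mask, defined as twice the shared area divided by the sum of the two areas. The sketch below computes it for two small binary masks; the masks are invented for illustration, and returning 1.0 when both masks are empty is a convention assumed here rather than something specified by the cited studies.

```python
def dice_coefficient(predicted, reference):
    """Dice = 2 * |overlap| / (|predicted| + |reference|) for equal-shape binary masks."""
    overlap = predicted_area = reference_area = 0
    for predicted_row, reference_row in zip(predicted, reference):
        for p, r in zip(predicted_row, reference_row):
            overlap += p * r          # 1 only where both masks mark the pixel
            predicted_area += p
            reference_area += r
    if predicted_area + reference_area == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2 * overlap / (predicted_area + reference_area)


# Hypothetical 3x4 masks: 1 = pixel labeled as synovial hypertrophy.
reference = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
predicted = [
    [0, 1, 1, 1],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]

dice = dice_coefficient(predicted, reference)  # 3 shared pixels, 4 + 4 marked
```

Here the model finds three of the four reference pixels and adds one false-positive pixel, giving a Dice value of 0.75; a value of 1.0 would indicate identical masks.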

2.3 MRI in RA

MRI is considered the gold standard for visualizing soft-tissue changes in RA, including synovitis, bone marrow edema (BME), and cartilage loss. Despite its advantages, the traditional manual assessment of MRI, such as with the RA MRI scoring system (RAMRIS), is time-consuming and prone to interobserver variability.36 AI techniques have been developed to automate the segmentation and analysis of MRI data, thereby significantly improving the efficiency and reliability of RA assessments.37

Before 2019, AI applications in MRI were limited and focused primarily on automated segmentation tasks. Kubassova et al.38 developed an automated system called Dynamika-RA that effectively quantified synovial inflammation and demonstrated a significant correlation with manual scoring methods. Crowley et al.39 compared manual segmentation techniques with the RAMRIS for assessing bone erosion and edema and found high intraobserver reliability but noted limitations in interobserver agreement for BME. These early studies underscored the potential of AI and automated techniques to enhance the accuracy and efficiency of MRI analysis in RA, thereby laying a critical foundation for subsequent advancements.

Building on earlier work, Aizenberg et al.40, 41 conducted a series of studies in 2018 and 2019 that significantly advanced the application of AI in RA. In 2018,40 they demonstrated the feasibility of automatically quantifying BME in patients with early arthritis using wrist MRI and achieved a strong correlation with visual assessments (correlation coefficient r = 0.83). This was followed by a 2019 study in which they applied a similar automated approach to quantify tenosynovitis in the wrist, again showing a strong correlation with visual assessments (correlation coefficient r = 0.90) but highlighting challenges, such as false detections.41 More et al.13 further developed AI applications by creating a DL framework for the automatic grading of RA severity, demonstrating the potential of this system to objectively assess disease progression. In 2022, Folle et al.29 employed advanced neural networks to classify MRI patterns among patients with psoriatic arthritis, seronegative RA, and seropositive RA, achieving AUC values of 67%–75% and emphasizing distinct imaging characteristics. These studies underscore the rapid advancements in AI-based MRI analysis for RA, with notable progress made in the years following 2019.
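
Agreement between automated and visual scoring in these studies is summarized with the Pearson correlation coefficient r. As a reminder of how that value is obtained, the sketch below computes r for paired scores. The numbers are hypothetical and do not come from the cited studies.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation: covariance divided by the product of the spreads."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sum((a - mean_x) ** 2 for a in x)
    spread_y = sum((b - mean_y) ** 2 for b in y)
    return covariance / sqrt(spread_x * spread_y)


# Hypothetical paired measurements for five patients.
visual_scores = [0, 1, 2, 3, 4]               # reader-assigned scores
automated_scores = [0.1, 0.9, 2.3, 2.8, 4.2]  # algorithm output

r = pearson_r(visual_scores, automated_scores)
```

A high r indicates that the automated measure rises and falls with the visual score, but it does not by itself show that the two agree in absolute value, which is one reason studies also report false detections and segmentation overlap.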

In conclusion, the past 5 years have seen rapid advancements in the application of AI, particularly DL, in RA imaging. Each imaging modality (radiography, ultrasound imaging, and MRI) has benefited from these innovations, which have improved diagnostic accuracy, enhanced the ability to monitor disease progression, and streamlined the evaluation process. The introduction of CNNs has significantly shifted RA imaging research away from traditional ML methods. Unlike traditional ML approaches that require extensive feature engineering, CNNs automatically learn complex patterns from imaging data, enhancing precision and efficiency in detecting RA features, such as joint space narrowing, synovitis, and erosions. This has led to more advanced imaging analyses, including the use of DL models to predict disease progression and severity with greater accuracy.

However, this advancement introduces new challenges, notably the “black-box” nature of CNNs, which makes it difficult for clinicians to interpret their decision-making. This may hinder their adoption in clinical settings where transparency is crucial. Future research should focus on applying CNNs to areas where interpretability is less critical, such as predicting objective outcomes from imaging data. For example, using radiography to predict MRI results or distinguish between inflammatory and noninflammatory arthritis could be effective applications that support clinical decision-making while improving transparency.

3 INTEGRATION OF MULTIMODAL DATA IN RA

Multimodal data refer to the integration of diverse types of medical information, such as clinical notes, medical imaging, genomic data, and biosensor readings, all of which are used to provide a comprehensive understanding of patient health. In the context of RA, this could involve the fusion of text-based EHRs, medical images, such as radiographs and magnetic resonance images, and molecular profiles, such as genetic and biochemical data.

Over the past decade, substantial progress has been made in leveraging different AI methodologies for various types of medical data, each making distinct contributions to the diagnosis and treatment of complex conditions such as RA. DL models have demonstrated considerable efficacy in interpreting medical images and facilitating automated detection of joint erosion and inflammatory features.42 In 2023, Ahalya et al.43 introduced RANet, a custom CNN model that detects RA from hand thermal images with up to 97% accuracy by integrating quantum techniques. Ojha et al.44 utilized a CNN to predict RA from hand images and achieved an accuracy of 97.5%. Similarly, Phatak et al.45 demonstrated the effectiveness of a ResNet-101-based CNN for detecting inflammatory arthritis in hand joints using smartphone photographs, highlighting the robustness of the model across diverse skin tones without requiring feature engineering. Bai et al.46 further enhanced diagnostic accuracy by developing an ANN for RA diagnosis, achieving an AUC of 0.951.

Likewise, ML algorithms have been extensively applied to genomic data to uncover genetic markers associated with disease susceptibility.47-49 In PathME, Lemsara et al.50 employed a multimodal sparse autoencoder to cluster patients using multi-omics data, integrating gene expression, miRNA, and methylation profiles to identify disease subgroups. Recently, Li et al.51 employed advanced ML algorithms, such as Boruta, Least Absolute Shrinkage and Selection Operator regression, and random forests, to identify PANoptosis-associated genes in RA, focusing on key biomarkers, such as SPP1, which were validated to be crucial for RA diagnosis and treatment response. Liu et al.52 integrated transcriptomic data using clustering techniques to identify novel biomarkers and RA subtypes based on immune signatures, highlighting the potential of combining supervised and unsupervised methods for biomarker discovery and patient stratification.

NLP has also been used to extract valuable insights from clinical narratives, thereby enhancing the analysis of large-scale EHR data. Humbert Droz et al.53 developed an NLP pipeline to extract RA disease activity measures from clinical notes across multiple EHR systems, thereby demonstrating the feasibility of integrating clinical narratives into multimodal frameworks for large-scale RA research. Similarly, England et al.54 applied an NLP tool to extract forced vital capacity values from EHRs of patients with RA-associated interstitial lung disease, significantly enhancing the availability of these critical data for disease monitoring. These diverse applications illustrate the broad potential of AI across different data modalities, contributing to improved outcomes in patients with RA.
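To make this kind of extraction concrete, the sketch below shows a simple rule-based approach in Python. It is an illustration only and does not reproduce the pipelines of the cited studies: the note text, the regular expressions, and the function name `extract_measures` are all hypothetical, and real clinical notes vary far more than these patterns allow.

```python
import re

# Hypothetical patterns for illustration; real notes need many more variants.
DAS28_PATTERN = re.compile(
    r"\bDAS[- ]?28(?:[- ](?:CRP|ESR))?\s*(?:score)?\s*(?:is|was|of|[:=])?\s*"
    r"(\d+(?:\.\d+)?)",
    re.IGNORECASE,
)
FVC_PATTERN = re.compile(
    r"\bFVC\s*(?:is|was|of|[:=])?\s*(\d+(?:\.\d+)?)\s*(%|L\b)",
    re.IGNORECASE,
)


def extract_measures(note):
    """Return DAS28 scores and FVC values (with units) found in a note."""
    das28 = [float(m.group(1)) for m in DAS28_PATTERN.finditer(note)]
    fvc = [
        (float(m.group(1)), m.group(2).upper())
        for m in FVC_PATTERN.finditer(note)
    ]
    return {"das28": das28, "fvc": fvc}


# Made-up note text, not drawn from any real record.
note = (
    "Follow-up visit. DAS28-CRP was 4.2, down from 5.6 at baseline. "
    "PFTs today: FVC 2.8 L; FVC was 71% predicted."
)
print(extract_measures(note))
```

The example also shows a typical limitation of rule-based extraction: the baseline value (5.6) is missed because it is not directly preceded by the measure name, which is one reason why such pipelines usually require validation against manually annotated notes.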

Studies applying AI to multimodal data in RA are rare. Wei et al.55 employed large language models (LLMs), such as generative pre-trained transformer-3.5-Turbo, to investigate the molecular mechanisms and identify shared drugs for RA and arthrofibrosis. By analyzing over 370,000 publications and integrating bulk and single-cell sequencing data, they developed a multimodal framework highlighting the pathogenic similarities, key cell types, and therapeutic targets for both conditions. Although the integration of multimodal data using AI for RA remains in its nascent stages, preliminary trials have demonstrated promising results for other diseases. DeePaN, a graph convolutional network developed by Fang et al.,55 integrates clinicogenomic data to stratify patients with non-small cell lung cancer, outperforming other unsupervised methods and enhancing personalized treatment strategies. Similarly, the holistic AI in medicine framework56 combines tabular, image, time-series, and textual data, resulting in a 6%–33% performance increase in tasks such as chest pathology diagnosis and mortality prediction. Tang et al.57 introduced FusionM4Net, which integrates clinical and dermoscopic images with metadata for skin lesion classification, achieving 77.0% accuracy and surpassing single-modality models. However, some challenges remain, such as limitations in generalizing across datasets and the need for task-specific optimization. For RA, integration of diverse data types, such as imaging, clinical records, and genomics, offers substantial promise but requires further research. Future studies should focus on data harmonization and improving model generalizability to fully unlock the potential of AI in the diagnosis and management of RA.

Despite these advancements, several challenges still need to be overcome. High-dimensional data, such as medical imaging combined with genomics and clinical records, introduce what is known as the “curse of dimensionality,” a major obstacle to effective model training and generalization. The high complexity of multimodal data often results in overfitting, which limits the applicability of models to real-world settings. Techniques such as dimensionality reduction (e.g., using autoencoders) and feature selection are crucial for mitigating these effects.
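As an illustration of feature selection, the following Python sketch ranks features by their absolute Pearson correlation with the outcome and keeps the top k. This univariate filter is a deliberately simple stand-in chosen for brevity; it is not an autoencoder and is not the method of any cited study. The data and the names `pearson` and `select_top_k` are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation; returns 0.0 if either input is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        return 0.0  # a constant feature carries no signal
    return cov / math.sqrt(vx * vy)


def select_top_k(rows, labels, k):
    """Indices of the k features most correlated (in absolute value) with labels."""
    scores = []
    for j in range(len(rows[0])):
        column = [row[j] for row in rows]
        scores.append((abs(pearson(column, labels)), j))
    scores.sort(key=lambda t: (-t[0], t[1]))  # best first, ties by index
    return sorted(j for _, j in scores[:k])


# Made-up data: 6 patients x 4 features (feature 1 is constant, 2 is noise).
rows = [
    [5.1, 1.0, 0.3, 0.2],
    [4.8, 1.0, 0.9, 0.1],
    [5.5, 1.0, 0.5, 0.3],
    [2.0, 1.0, 0.4, 0.9],
    [1.7, 1.0, 0.8, 0.8],
    [2.2, 1.0, 0.6, 1.0],
]
labels = [1, 1, 1, 0, 0, 0]
print(select_top_k(rows, labels, k=2))
```

When the number of features greatly exceeds the number of patients, the selection step should be repeated inside each cross-validation fold; selecting features on the full dataset first leaks outcome information into the evaluation and inflates the apparent performance.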

4 PERSONALIZED MEDICINE IN RA

DL-based predictive models have been developed to predict patient responses to specific RA treatments. These models analyze EHRs, including past treatment outcomes, to identify patterns that suggest the most effective therapies for individual patients.58 Recent studies have demonstrated the potential of AI-driven personalized medicine to improve outcomes in RA by tailoring treatment to the unique characteristics of each patient while still requiring clinical judgment.59

Over the past 5 years, the use of ML to predict clinical outcomes from multi-omics and laboratory data has expanded significantly. In 2019, Kim et al.60 applied a nonnegative matrix factorization approach to identify synovial transcriptional signatures associated with RA and used supervised learning models to predict the infliximab response, achieving an AUC of 0.92. Tao et al.61 applied random forest algorithms to gene expression and/or DNA methylation profiling to predict responses to adalimumab and etanercept therapies in patients with RA and achieved accuracy rates of 85.9% and 79%, respectively. These studies demonstrate how supervised learning can enhance treatment personalization through high-dimensional biological data analysis. In 2021, Morid et al.62 explored the benefits of semi-supervised learning, specifically one-class SVM, over traditional supervised methods, such as extreme gradient boosting, for predicting step-up therapy in RA. In the same year, Jung et al.63 applied k-means clustering, an unsupervised learning method, to classify 1103 patients with RA into four clinical phenotypes. In 2023, Ferreira et al.64 used unsupervised k-means clustering to differentiate patients with RA based on their proteomic signatures. Anioke et al.65 used hierarchical clustering to predict the progression to inflammatory arthritis based on lymphocyte subsets. Together, these findings underscore the utility of unsupervised ML for integrating multi-omics data, thereby contributing to a deeper molecular understanding of the pathogenesis of RA.
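For readers less familiar with k-means clustering, the procedure can be sketched in a few lines of Python. This is a generic textbook version under simplifying assumptions (two made-up, already standardized features per patient, two clusters, and fixed starting centroids for reproducibility); it is not the implementation used in the cited studies, and the function names are illustrative.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, init_centroids, max_iter=100):
    """Alternate assignment and centroid updates until assignments stabilize."""
    centroids = [list(c) for c in init_centroids]
    assignments = None
    for _ in range(max_iter):
        # Assignment step: each patient goes to the nearest centroid.
        new_assignments = [
            min(range(len(centroids)),
                key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        if new_assignments == assignments:
            break  # converged
        assignments = new_assignments
        # Update step: each centroid moves to the mean of its members.
        for c in range(len(centroids)):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return assignments, centroids


# Hypothetical standardized features for six patients.
points = [(0.1, 0.2), (0.0, 0.4), (0.3, 0.1),
          (2.9, 3.1), (3.2, 2.8), (3.0, 3.3)]
assignments, centroids = kmeans(points, init_centroids=[points[0], points[3]])
print(assignments)
print(centroids)
```

In practice, the number of clusters must be chosen by the analyst, and the result depends on feature scaling and on the starting centroids, so clinical phenotypes derived in this way generally need to be checked for stability and clinical plausibility.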

DL has also seen considerable advancement over the past 5 years, particularly in the context of RA. Xing et al.66 integrated DNNs with ML models to predict the activity of inhibitors targeting key RA-related kinases, including spleen tyrosine kinase, Bruton's tyrosine kinase, and Janus kinase. This integration demonstrated the potential of DL in drug discovery for RA, in which targeting multiple pathways could lead to better therapeutic outcomes. Wang et al.67 reviewed the use of DL in combination with traditional and Western medicines to enhance RA diagnosis and treatment. They employed CNNs and cloud computing to analyze multiple data types, such as imaging and clinical records, leading to more precise diagnoses and optimized treatment plans. Kalweit et al.68 applied deep embedded clustering combined with an adaptive deep autoencoder to categorize patients with RA based on their response to biological disease-modifying antirheumatic drugs, identifying distinct patient clusters with different drug responses.

NLP has also become an important tool for improving personalized therapeutic strategies for RA, with LLMs being extensively utilized for complex data extraction and integrative analyses. Nashwan and AbuJaber69 discussed how LLMs can optimize EHRs by automating data extraction, streamlining patient communication, and enhancing decision-making, underscoring the potential of these models to improve RA management. Furthermore, Wei et al.70 utilized GPT-3.5-Turbo to explore the molecular mechanisms shared between RA and arthrofibrosis, demonstrating how LLMs can assist in drug repositioning by integrating transcriptomic and literature data, thereby supporting personalized therapeutic strategies. Advancements in LLMs have facilitated the extraction of critical clinical information including diagnoses, outcomes, and treatments, thereby enhancing the utilization of real-world clinical data for RA.

Furthermore, the integration of multimodal data may be key to advancing personalized medicine for RA.71 However, as model complexity increases, interpretability often diminishes, raising critical questions regarding balancing AI reliance with clinician expertise. The black-box nature of complex AI models necessitates their cautious deployment, particularly when weighing AI-generated recommendations against human clinical judgments. Nevertheless, the utility of AI in evaluating drug safety profiles and predicting optimal therapeutic pathways offers substantial promise for augmenting clinician decision-making and delivering individualized care. These findings underscore the transformative potential of AI for advancing personalized rheumatology.

5 CHALLENGES AND FUTURE DIRECTION

One of the major challenges in applying AI to RA research is the scarcity and quality of available data. Ensuring that the data used for training models are comprehensive and representative of diverse RA patient populations is critical.72 Researchers are exploring methods, such as data augmentation, transfer learning, and the use of synthetic data, to enhance model performance and generalizability.73 Recently, federated learning has been explored as a method to enhance data utilization without compromising privacy.74 This approach allows multiple sites to contribute to model training by sharing model coefficients instead of raw data. However, federated learning involves significant challenges, such as the high costs of the central server setup and intersite communication. Moreover, it does not completely solve the data privacy issue because models can still potentially “memorize” the training data. To address this, techniques such as differential privacy have been employed to prevent data memorization, with the aim of creating more secure and effective AI solutions in RA research.75, 76
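The idea of sharing model coefficients rather than raw data can be sketched as follows. This Python example shows a single round of weighted coefficient averaging, with optional norm clipping and Gaussian noise in the spirit of differential privacy. It is a simplified illustration under stated assumptions: the site coefficients, patient counts, and parameter names (`max_norm`, `noise_std`) are hypothetical, and the noise is not calibrated to any formal privacy budget, so the sketch by itself provides no privacy guarantee.

```python
import math
import random


def clip_by_norm(vector, max_norm):
    """Scale a coefficient vector so its L2 norm does not exceed max_norm."""
    norm = math.sqrt(sum(v * v for v in vector))
    if norm <= max_norm or norm == 0.0:
        return list(vector)
    return [v * max_norm / norm for v in vector]


def federated_average(site_updates, max_norm=None, noise_std=0.0, rng=None):
    """Average per-site coefficients, weighted by each site's patient count.

    site_updates: list of (coefficients, n_patients) tuples. Only these
    coefficients are exchanged; raw patient data stay at each site.
    """
    rng = rng or random.Random()
    total = sum(n for _, n in site_updates)
    averaged = [0.0] * len(site_updates[0][0])
    for coeffs, n in site_updates:
        if max_norm is not None:
            coeffs = clip_by_norm(coeffs, max_norm)  # bound each site's influence
        for i, value in enumerate(coeffs):
            averaged[i] += value * n / total
    if noise_std > 0.0:
        # Illustrative noise only; not calibrated to a privacy budget.
        averaged = [a + rng.gauss(0.0, noise_std) for a in averaged]
    return averaged


# Two hypothetical hospitals with locally fitted coefficients.
sites = [([0.2, -1.0], 100), ([0.4, -0.6], 300)]
print(federated_average(sites))
print(federated_average(sites, max_norm=1.0, noise_std=0.1,
                        rng=random.Random(0)))
```

A full federated workflow would repeat this round many times, sending the averaged coefficients back to the sites for further local training, which is where the communication costs mentioned above arise.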

In addition to technical challenges, ethical considerations are crucial when addressing errors made by AI systems applied in RA research. If AI models support clinical decisions, responsibility for errors arising from data inaccuracies or model misinterpretations raises significant ethical concerns.77-79 Particularly in high-stakes contexts, such as RA diagnosis and treatment planning, any such errors must be meticulously evaluated.77 They may result from biases inherent in the training datasets, which may not fully represent the diverse population of patients with RA, potentially leading to algorithmic bias.77 Furthermore, AI-generated errors can affect patient outcomes if not managed transparently, making it essential to establish ethical guidelines for interpreting and rectifying these errors in the clinical workflow.78, 79

To mitigate these risks, it is essential to incorporate robust auditing processes and error-handling mechanisms into the deployment of AI tools.80 This approach ensures that clinicians are fully aware of the limitations of AI and can make informed decisions, balancing the recommendations from AI with clinical judgment.80, 81 The complexity and black-box nature of DL models have raised concerns regarding their interpretability, which is crucial for clinical adoption. Efforts to develop explainable AI techniques, such as saliency maps and attention mechanisms, are critical to ensure that these models and their predictions can be trusted and adopted by healthcare professionals.42, 82
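One simple way to probe a black-box model is occlusion sensitivity, a perturbation-based relative of saliency maps: each input is replaced in turn by a neutral baseline, and the change in the model output is recorded. The Python sketch below applies this to a toy logistic model. The model, its weights, the patient values, and the all-zero baseline are hypothetical and serve only to show the mechanics.

```python
import math


def toy_model(features):
    """Hypothetical stand-in for a trained classifier (logistic score)."""
    weights = [1.5, 0.0, -2.0]
    bias = -0.5
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def occlusion_importance(model, features, baseline):
    """Change in model output when each feature is replaced by its baseline."""
    reference = model(features)
    importances = []
    for i in range(len(features)):
        occluded = list(features)
        occluded[i] = baseline[i]
        importances.append(reference - model(occluded))
    return importances


patient = [1.0, 3.0, 0.5]   # made-up standardized inputs
baseline = [0.0, 0.0, 0.0]  # assumed neutral reference values
print(occlusion_importance(toy_model, patient, baseline))
```

A positive value means that the feature pushed the prediction upward for this patient, a negative value that it pushed it downward, and a value near zero that the model ignored it. Such attributions describe the behavior of the model, not causal effects, and they depend on the choice of baseline.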

Ethical and regulatory challenges in deploying AI for RA include concerns regarding patient data privacy, the potential for algorithmic bias, and the need for regulatory frameworks that can keep pace with rapid technological advancements.4 These challenges are compounded by the complexity of integrating AI with existing clinical workflows and ensuring that AI-driven decisions are transparent and interpretable to both clinicians and patients.83, 84 Furthermore, ensuring equitable access to AI technologies across different healthcare settings is essential to prevent the exacerbation of existing disparities in RA care.85

Future research in AI, especially DL for RA, is likely to focus on improving model accuracy, expanding the use of multimodal data, and addressing the challenges of data scarcity and model interpretability.4, 5, 42 The recent literature emphasizes the integration of clinical, genetic, and imaging data to refine prediction models and personalize treatment strategies.4, 5, 32, 42, 86 For instance, combining DL with multi-omics and imaging modalities is expected to enhance predictive capabilities and provide real-time actionable insights for clinicians.32 Moreover, addressing data scarcity through transfer learning and synthetic data generation could overcome the current limitations, leading to more robust and generalizable models.

6 CONCLUSION

Over the past several years, significant advancements in AI, particularly in DL, have transformed the landscape of RA research. Traditional ML techniques have laid the groundwork for data-driven insights, and the application of DL has shown promise in various areas of RA management. These include enhancing diagnostic consistency through the analysis of complex imaging data, assisting in the identification of potential biomarkers for predicting treatment response, and supporting the integration of multimodal data sources to inform personalized treatment plans. Despite these advancements, challenges remain, particularly in model interpretability, the risk of overfitting, and the need for robust clinical validation across diverse patient populations before these methods can be broadly adopted and accepted. As AI techniques continue to evolve, their integration with clinical practice holds promise for the further refinement of RA diagnosis, prognosis, and treatment, thereby moving the field closer to the realization of precision medicine.

AUTHOR CONTRIBUTIONS

Yiduo Sun conceptualized the study, conducted the literature review, wrote the manuscript, and prepared the figures and tables. Jin Lin contributed to the literature review, assisted in the writing of sections related to AI methodologies and applications, and provided critical revisions. Weiqian Chen supervised the study, provided overall guidance, and finalized the manuscript. All authors read and approved the final manuscript.

ACKNOWLEDGMENTS

The authors would like to express their sincere gratitude to Professor Lanfen Lin from the College of Computer Science at Zhejiang University for her inspiring lectures, which have greatly contributed to their understanding and provided valuable insights for this work. This work was partly supported by the National Natural Science Foundation of China, Grant/Award Numbers: 82171768, 81701600.

CONFLICT OF INTEREST STATEMENT

The authors declare no conflict of interest.

ETHICS STATEMENT

This review article does not involve human participants, animal studies, or any other ethical concerns.

Open Research

DATA AVAILABILITY STATEMENT

Data sharing is not applicable to this article as no new data were created or analyzed in this review. All data used in the review are publicly available through the cited sources.