Neuroplastic changes in functional wiring in sensory cortices of the congenitally deaf: A network analysis

Michaela Ruttorf and Zohar Tal contributed equally.

Abstract

Congenital sensory deprivation induces significant changes in the structural and functional organisation of the brain. These changes are characterised by cross-modal plasticity, in which deprived cortical areas are recruited to process information from non-affected sensory modalities, as well as by other neuroplastic alterations within regions dedicated to the remaining senses. Here, we analysed visual and auditory networks of congenitally deaf and hearing individuals during different visual tasks to assess changes in network community structure and connectivity patterns due to congenital deafness. In the hearing group, the nodes are clearly divided into three communities (visual, auditory and subcortical), whereas in the deaf group a fourth community, consisting mainly of the bilateral superior temporal sulcus and temporo-insular regions, is present. Perhaps more importantly, the right lateral geniculate body, as well as the bilateral thalamus and pulvinar (the latter in the annuli condition), joined the auditory community in the deaf group. Moreover, connectivity between the bilateral thalamic and pulvinar nuclei and auditory areas is stronger in the deaf group than in the hearing group. No differences were found in the number of connections of these nodes to visual areas. Our findings reveal substantial neuroplastic changes within the auditory and visual networks caused by deafness, emphasising the dynamic nature of the sensory systems in response to congenital deafness. Specifically, these results indicate that in the deaf but not the hearing group, subcortical thalamic nuclei are highly connected to auditory areas during processing of visual information, suggesting that these relay areas may be responsible for rerouting visual information to the auditory cortex under congenital deafness.

1 INTRODUCTION

The brain has evolved to process and integrate inputs from several sensory modalities. Congenital sensory deprivation, such as blindness or deafness, induces significant changes in the structural and functional organisation of the brain that are accompanied by enhanced performance in some perceptual and attentional aspects of the preserved senses (for review see Alencar et al., 2019; Bell et al., 2019; Cardin et al., 2020; Hribar et al., 2020). Neuroplasticity alters not only brain areas of the deprived modality but also the intact sensory and higher-order cortical networks. One of the well-documented phenomena characterising congenital sensory loss is cross-modal plasticity, in which the deprived cortical areas are recruited to process information from the non-affected sensory modalities. For instance, a large body of literature has consistently shown that the auditory cortex of deaf individuals is activated during visual and somatosensory tasks (e.g. Almeida et al., 2015; Benetti et al., 2021; Bottari et al., 2014; Finney et al., 2003; Retter et al., 2019; Scurry et al., 2020; Vachon et al., 2013; Whitton et al., 2021). Furthermore, congenital deafness induces structural alterations in cortical and subcortical structures, particularly in the right hemisphere (Amaral et al., 2016; Hribar et al., 2020; Shiell & Zatorre, 2017; Simon et al., 2020). However, despite the growing number of studies elucidating different aspects of cross-modal neuroplasticity within the auditory cortex of deaf individuals, the routes that feed the deprived auditory cortex with non-auditory inputs remain largely unknown. Here, we examine the role of potential pathways in conveying visual information to the auditory cortex of congenitally deaf individuals using graph theory analysis.

Enhanced behavioural performance of deaf individuals in visual tasks has been found to correlate with the level of cross-modal activation in the auditory cortex and with cortical thickness and white matter structural changes in the right auditory cortex (Shiell & Zatorre, 2017). Lomber et al. (2010) further provided a causal link between compensatory visual performance and auditory cortex reorganisation: reversible deactivation of different areas of the auditory cortex of deaf cats eliminated their superior visual abilities, thus localising specific visual functions to discrete areas in the deprived auditory cortex. Importantly, in a systematic review of animal models of deafness, Meredith and Lomber (2017) found consistent evidence that cross-modal reorganisation does not rely on the emergence of novel projections to the auditory cortex but, more likely, on the unmasking of existing connections. Thus, visual information may reach the auditory cortex through corticocortical connections with visual areas (Bavelier & Neville, 2002; Rauschecker, 1995), and/or through (direct or indirect) connections with subcortical structures of the visual system (for animal studies see Sur et al., 1988; Barone et al., 2013; for human studies see Amaral et al., 2016; Lyness et al., 2014). Note, however, that although cross-modal effects have been widely documented across a variety of species, the extent and functional relevance of such cross-modal connections seem to be highly species-specific (Meredith & Lomber, 2017) and cannot easily be generalised from animal studies to humans.

In our previous work, we found that the right subcortical auditory and visual nuclei (including the thalamus, lateral geniculate body (LGB) and inferior colliculus (IC)) of deaf (but not hearing) individuals were larger than their left counterparts (Amaral et al., 2016). Furthermore, we have shown that connections from the right superior colliculus to the right IC are functionally relevant for rerouting visual information to the primary auditory cortex of deaf individuals (Giorjiani et al., 2022). These results point to a potentially central role of subcortical structures in cross-modal plasticity. However, the role of these subcortical structures in relaying visual information to the auditory cortex of congenitally deaf individuals still needs to be examined. In line with our previous results, we predict that these subcortical structures, namely the thalamic visual and auditory relays, constitute (at least one of) the pathway(s) through which visual information reaches the deprived auditory cortex.

To test this prediction, we analyse visual and auditory networks of deaf and hearing individuals during traditional visual stimulation paradigms using functional magnetic resonance imaging (fMRI), and use graph theory analysis to determine the community structure of the networks and to compare the connectivity patterns of individual nodes between the groups.

To date, very few studies have used graph theory to evaluate differences in cortical organisation between deaf and hearing individuals, and these have relied on resting-state fMRI (Li et al., 2016), electroencephalography (EEG) (Ma et al., 2023; Sinke et al., 2019) or structural voxel-based morphometry (Kim et al., 2014). Importantly, none of these studies examined topology changes in functional brain networks in detail. Because cognitive functions rely on processes within networks of functionally connected brain regions rather than on local, isolated areas, we examine neuronal organisation using a typical retinotopic mapping (i.e., task-based) fMRI experiment. These visual tasks are known to strongly engage visual processing and thus allow us to functionally probe how congenitally deaf and hearing individuals process visual information. Moreover, task performance enhances neuronal activity, which makes functional connectivity between relevant brain areas more reliable in terms of graph theory metrics (Wang et al., 2017) and allows us to map the spatio-temporal patterns of functional reorganisation at the systems level.

2 EXPERIMENTAL LAYOUT AND RESULTS

We asked a group of 31 adults (15 deaf and 16 hearing) to participate in a task-based fMRI experiment. The paradigm consisted of passively watching pictures of flickering wedges or annuli (Figure 1—see Section 4 for more details). This resulted in two experimental conditions per group: deaf watching annuli (DeafA), deaf watching wedges (DeafW), hearing watching annuli (HearA) and hearing watching wedges (HearW).

For the network analysis, we decided to concentrate on cortical and subcortical areas known to be involved in visual and auditory processing (Amaral et al., 2016) which resulted in 52 regions of interest (ROIs) listed in Table 1. These ROIs include nodes in the visual and auditory cortices as well as subcortical nuclei involved in visual and auditory processing. In Figure 2, the locations of the 52 ROIs are displayed via BrainNet Viewer software (Xia et al., 2013) (Version 1.7) by light blue spheres placed on the ICBM-152 template (Mazziotta et al., 2001). The location corresponds to the ROIs' centre coordinates as given in Table 1.

| Lobe | Hemisphere | Structure | Label | MNI x [mm] | MNI y [mm] | MNI z [mm] |
|---|---|---|---|---|---|---|
| Temporal | Left | Brodmann area 22 | BA22 | −68 | −12 | 0 |
| Temporal | Right | Brodmann area 22 | BA22 | 60 | −4 | 0 |
| Temporal | Left | Brodmann area 42 | BA42 | −68 | −22 | 10 |
| Temporal | Right | Brodmann area 42 | BA42 | 60 | −18 | 10 |
| Temporal | Left | Superior temporal gyrus | STG | −60 | −32 | 12 |
| Temporal | Right | Superior temporal gyrus | STG | 56 | −20 | 6 |
| Temporal | Left | Area TE 1.0 | Te1.0 | −46 | −20 | 9 |
| Temporal | Right | Area TE 1.0 | Te1.0 | 48 | −14 | 7 |
| Temporal | Left | Area TE 1.1 | Te1.1 | −37 | −32 | 14 |
| Temporal | Right | Area TE 1.1 | Te1.1 | 39 | −27 | 13 |
| Temporal | Left | Area TE 1.2 | Te1.2 | −52 | −8 | 3 |
| Temporal | Right | Area TE 1.2 | Te1.2 | 51 | −2 | −2 |
| Temporal | Left | Area TE 2.1 | Te2.1 | −55 | −18 | 4 |
| Temporal | Right | Area TE 2.1 | Te2.1 | 55 | −12 | 0 |
| Temporal | Left | Area TE 2.2 | Te2.2 | −60 | −24 | 10 |
| Temporal | Right | Area TE 2.2 | Te2.2 | 52 | −26 | 11 |
| Temporal | Left | Area TE 3 | Te3 | −62 | −1 | −3 |
| Temporal | Right | Area TE 3 | Te3 | 59 | 6 | −4 |
| Temporal | Left | Area TEI | TeI | −49 | −21 | 2 |
| Temporal | Right | Area TEI | TeI | 50 | −8 | −4 |
| Temporal | Left | Area TI | TI | −47 | −2 | −14 |
| Temporal | Right | Area TI | TI | 47 | 2 | −13 |
| Temporal | Left | Superior temporal sulcus | STS1 | −50 | −22 | −9 |
| Temporal | Right | Superior temporal sulcus | STS1 | 50 | −13 | −7 |
| Temporal | Left | Superior temporal sulcus | STS2 | −58 | −5 | −19 |
| Temporal | Right | Superior temporal sulcus | STS2 | 55 | −1 | −23 |
| Occipital | Left | V1 | hOc1 | −13 | −94 | 2 |
| Occipital | Right | V1 | hOc1 | 13 | −87 | 3 |
| Occipital | Left | V2 | hOc2 | −13 | −102 | 12 |
| Occipital | Right | V2 | hOc2 | 6 | −88 | 15 |
| Occipital | Left | V3d | hOc3d | −7 | −87 | 31 |
| Occipital | Right | V3d | hOc3d | 4 | −84 | 35 |
| Occipital | Left | V3v | hOc3v | −19 | −90 | −9 |
| Occipital | Right | V3v | hOc3v | 15 | −73 | −11 |
| Occipital | Left | V3A | hOc4d | −16 | −88 | 20 |
| Occipital | Right | V3A | hOc4d | 18 | −87 | 32 |
| Occipital | Left | V4 | hOc4v | −29 | −84 | −15 |
| Occipital | Right | V4 | hOc4v | 36 | −82 | −13 |
| Forebrain | Left | Pulvinar | Pul | −20 | −28 | 2 |
| Forebrain | Right | Pulvinar | Pul | 10 | −28 | 2 |
| Forebrain | Left | Lateral geniculate body | LGB | −21 | −25 | −4 |
| Forebrain | Right | Lateral geniculate body | LGB | 25 | −25 | −4 |
| Forebrain | Left | Medial geniculate body | MGB | −18 | −25 | −4 |
| Forebrain | Right | Medial geniculate body | MGB | 19 | −25 | −4 |
| Forebrain | Left | Thalamus | Thal | −18 | −18 | 2 |
| Forebrain | Right | Thalamus | Thal | 8 | −18 | 2 |
| Midbrain | Left | Inferior colliculus | IC | −6 | −38 | −13 |
| Midbrain | Right | Inferior colliculus | IC | 7 | −38 | −13 |
| Midbrain | Left | Superior colliculus | SC | −5 | −35 | −4 |
| Midbrain | Right | Superior colliculus | SC | 7 | −35 | −4 |
| Brainstem | Left | Superior olivary complex | SOC | −12 | −35 | −41 |
| Brainstem | Right | Superior olivary complex | SOC | 16 | −35 | −41 |

- Note: Here, we list the names of the brain areas, the labels used in the text and the centre coordinates (x, y, z) in Montreal Neurological Institute (MNI) space of the regions of interest in the analysed functional network.

Brain networks demonstrate hierarchical (or multi-scale) modularity; that is, each module contains a set of sub-modules, each of which contains a set of sub-sub-modules, and so on (Meunier et al., 2010). Visual processing is likewise organised in a hierarchical, modular way (Felleman & Van Essen, 1991). We therefore treat the auditory and visual network as a modular network with subsets of highly functionally connected nodes. On this basis, we can test whether the patterns of visual information processing in deaf individuals differ from those in hearing individuals.

2.1 Graph theory analysis

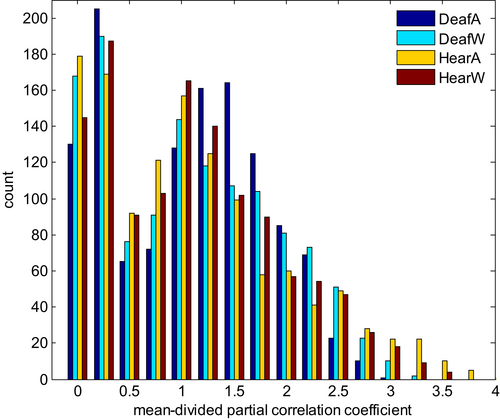

A graph is a mathematical description of a network consisting of nodes N (here: the selected ROIs) and edges k (here: functional “links” between pairs of ROIs). We analysed weighted undirected graphs averaged per group and experimental condition (see Section 4 for details of graph construction) using the Brain Connectivity Toolbox (Rubinov & Sporns, 2010) implemented in MATLAB R2013a (The MathWorks Inc., Natick, MA, USA). To ensure comparability between the averaged graphs, we divided each averaged correlation matrix by its mean, yielding mean-divided partial correlation coefficients (md-pcc); values larger than 1 thus denote edges stronger than the average edge of the respective graph. Because we were interested in changes in the underlying graph architecture between conditions and groups, we focused primarily on topological graph metrics such as community structure. After graph construction, we checked for N,k-dependence (see Section 4). The number of nodes is constant (N = 52) in all conditions, and the number of edges is almost equal between conditions (mean k = 1202, Δk = ±19). We kept the resulting graphs unchanged, treating the gain or loss of an edge as an effect of the paradigm, because we were only interested in changes between conditions and groups.
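The graph-construction steps named above (partial correlations between ROI time series, absolute values, division by the mean edge weight, counting edges k) can be illustrated with a minimal NumPy sketch. It is not the authors' MATLAB/Brain Connectivity Toolbox code: the function names are hypothetical, and estimating partial correlations from the inverse sample covariance and dividing by the mean of the off-diagonal entries are assumptions, since the exact construction is described in Section 4.

```python
import numpy as np

def partial_correlation(ts):
    """Partial correlation between all ROI pairs, from the inverse covariance.

    ts: array of shape (n_timepoints, n_rois).
    """
    precision = np.linalg.inv(np.cov(ts, rowvar=False))
    d = np.sqrt(np.diag(precision))
    pcc = -precision / np.outer(d, d)
    np.fill_diagonal(pcc, 0.0)  # no self-connections
    return pcc

def mean_divide(weights):
    """Divide a symmetric weight matrix by the mean of its off-diagonal entries."""
    iu = np.triu_indices_from(weights, k=1)
    return weights / weights[iu].mean()

def count_edges(weights):
    """Number of non-zero edges k of an undirected graph (upper triangle only)."""
    iu = np.triu_indices_from(weights, k=1)
    return int(np.count_nonzero(weights[iu]))

# Synthetic chain x1 -> x2 -> x3: x1 and x3 are conditionally independent given x2
rng = np.random.default_rng(0)
x1 = rng.standard_normal(5000)
x2 = x1 + rng.standard_normal(5000)
x3 = x2 + rng.standard_normal(5000)
pcc = partial_correlation(np.column_stack([x1, x2, x3]))
w = mean_divide(np.abs(pcc))  # absolute values, then md-pcc (assumed order)
print(np.round(pcc, 2))
print(count_edges(w))
```

After mean division the average edge weight is exactly 1, so an md-pcc above 1 marks an edge stronger than that graph's average, which is what makes graphs from different groups and conditions comparable.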

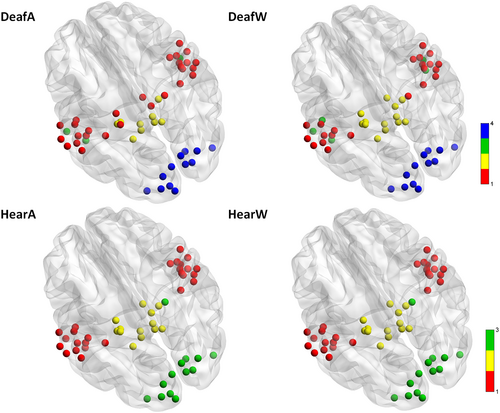

2.2 Community structure

Community structure has been identified as a sensitive marker of organisation in brain networks (He et al., 2009; Ruttorf et al., 2019). Community structure analysis detects groups of regions that are more densely interconnected with each other than expected by chance. The resulting group-level community structure was visualised by assigning a different colour to each community (see Figure 3) and displayed by overlaying spheres coloured by community affiliation on the ICBM-152 template, as in Figure 2.
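"More densely interconnected than expected by chance" is usually quantified by Newman's modularity Q, which compares within-community edge weight with that of a degree-preserving null model. The sketch below only evaluates Q for a given partition of a weighted undirected graph with non-negative weights; it does not search for the partition that maximises Q, which is what community-detection routines such as those in the Brain Connectivity Toolbox do. The resolution parameter `gamma` is included because the resolution limit (Fortunato & Barthélemy, 2007) is commonly probed by varying it; its use here is an illustrative assumption.

```python
import numpy as np

def modularity(weights, labels, gamma=1.0):
    """Newman modularity Q of a weighted undirected graph for a given partition.

    weights: symmetric (N, N) array of non-negative edge weights.
    labels:  length-N sequence of community labels.
    gamma:   resolution parameter (gamma = 1 gives the classic definition).
    """
    w = np.asarray(weights, dtype=float)
    labels = np.asarray(labels)
    strength = w.sum(axis=1)                      # node strengths k_i
    two_m = strength.sum()                        # 2m: total weight, each edge counted twice
    null = gamma * np.outer(strength, strength) / two_m
    same = labels[:, None] == labels[None, :]     # delta(c_i, c_j)
    return float(((w - null) * same).sum() / two_m)

# Two disconnected triangles
tri = np.ones((3, 3)) - np.eye(3)
graph = np.block([[tri, np.zeros((3, 3))], [np.zeros((3, 3)), tri]])
q_split = modularity(graph, [0, 0, 0, 1, 1, 1])   # approximately 0.5
q_single = modularity(graph, [0, 0, 0, 0, 0, 0])  # approximately 0
print(q_split, q_single)
```

Placing all nodes in one community always gives Q = 0, so positive Q values, as reported for all group graphs, indicate more within-community weight than the null model predicts.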

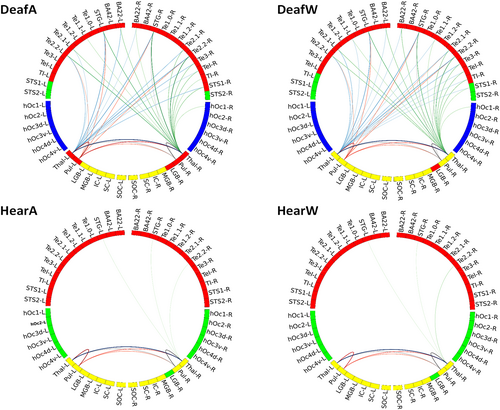

The values of modularity Q corresponding to the community structures shown in Figure 3 are all positive and almost identical (ΔQ = ±0.03). Thus, while overall modularity is similar between groups, the number and composition of communities differ between deaf and hearing individuals. There are three communities in the hearing group, independent of paradigm (annuli or wedges), which we labelled auditory (red), subcortical (yellow) and visual (green). In the deaf group, by contrast, four communities emerge while watching either wedges or annuli: auditory (red), subcortical (yellow), visual (blue) and an additional community (green). This additional community includes the bilateral superior temporal sulcus (STS1 and STS2) as well as the left temporo-insular region (TI) in the wedges condition, and bilateral STS2 and left STS1 in the annuli condition. Importantly, the groups differ in the community affiliation of several subcortical ROIs. The right LGB, part of the visual community in the hearing group, belongs to the auditory community in the deaf group, independent of paradigm. Furthermore, in the annuli condition, nodes of the subcortical community moved to the auditory community in the deaf group: the bilateral thalamic and pulvinar nuclei joined the auditory community in the deaf group, whereas they are part of the subcortical community in the hearing group.

We controlled for possible limitations (Sporns & Betzel, 2016; Wang et al., 2017) relevant to our experimental layout: the results shown in Figure 3 are neither subject to the resolution limit of the objective function (Fortunato & Barthélemy, 2007) nor dependent on the method used to average the correlation coefficients (see Section 4 for more details).

Furthermore, we overlaid the community structure for each experimental group on its averaged weighted temporal correlation matrix (before conversion to absolute values) to verify that negative edge weights are sparser within and denser between the detected communities (Traag & Bruggeman, 2009). Taken together, the number of communities depends on the group (deaf/hearing), and in the deaf group community affiliation additionally depends on the task (watching wedges/annuli): while there is no difference in community affiliation between tasks within the hearing group, there are clear task effects within the deaf group (see the different colours in Figure 3).

Next, we examined the connection patterns within and across communities and looked for differences across groups and experiments. Because of the large number of edges within the graphs, we focused first on changes in the strongest correlations. We therefore displayed all md-pccs as a histogram with bins of width 0.25 (see Figure 4) and set the cut-off threshold at 1.75 as a trade-off between keeping the plots legible and discarding as little information as possible.
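The two checks described above, the density of negative edges within versus between communities and the selection of the strongest md-pcc edges, can be sketched as follows. This is an illustration under assumptions, not the authors' code: the function names are hypothetical, the signed matrix is taken to be the averaged correlation matrix before conversion to absolute values, and the cut-off is assumed to be inclusive (md-pcc ≥ 1.75).

```python
import numpy as np

def negative_edge_fractions(signed_weights, labels):
    """Fraction of negative edges within and between communities (upper triangle)."""
    w = np.asarray(signed_weights, dtype=float)
    labels = np.asarray(labels)
    iu = np.triu_indices_from(w, k=1)
    same = (labels[:, None] == labels[None, :])[iu]   # pair lies within one community
    negative = w[iu] < 0
    within = negative[same].mean() if same.any() else float("nan")
    between = negative[~same].mean() if (~same).any() else float("nan")
    return float(within), float(between)

def strongest_edges(md_weights, threshold=1.75, bin_width=0.25):
    """Histogram of md-pcc values and the node pairs at or above the cut-off."""
    w = np.asarray(md_weights, dtype=float)
    iu = np.triu_indices_from(w, k=1)
    values = w[iu]
    bin_edges = np.arange(0.0, values.max() + bin_width, bin_width)
    counts, _ = np.histogram(values, bins=bin_edges)
    keep = values >= threshold
    pairs = [(int(i), int(j)) for i, j in zip(iu[0][keep], iu[1][keep])]
    return counts, bin_edges, pairs

# Toy signed matrix with two communities {0, 1} and {2, 3}
signed = np.array([
    [0.0, 0.5, -0.2, -0.1],
    [0.5, 0.0, -0.3, -0.2],
    [-0.2, -0.3, 0.0, 0.4],
    [-0.1, -0.2, 0.4, 0.0],
])
print(negative_edge_fractions(signed, [0, 0, 1, 1]))  # (0.0, 1.0)

md = np.abs(signed) / np.abs(signed)[np.triu_indices(4, k=1)].mean()
counts, bin_edges, pairs = strongest_edges(md)
print(pairs)  # [(0, 1)]
```

A good partition of a signed network should give a within-community fraction well below the between-community fraction, as in this toy case.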

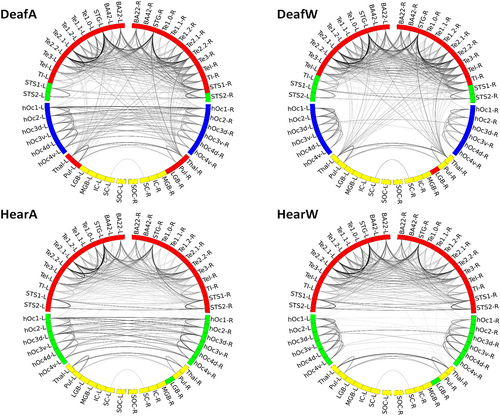

The resulting thresholded graphs are displayed as circular plots (Figures 5 and 7) using Circos software (Krzywinski et al., 2009; Version 0.69-8).

As expected, the interhemispheric connection patterns of visual areas differ depending on the paradigm (watching annuli/wedges) but not on the group (deaf/hearing). While participants watched annuli, which span both sides of the visual field, the right and left visual areas were strongly correlated with each other in both the deaf and the hearing group. In the wedges condition, the visual areas exhibited fewer bilateral connections, as the stimuli are not evenly distributed across the left and right visual hemifields. Importantly, the differences between the groups are mostly evident in the connections between auditory and subcortical areas. While the graph plots of the hearing group show a clear distinction between visual, auditory and subcortical areas, the plots of the deaf group show a denser connectivity pattern between the thalamic and pulvinar nuclei and auditory areas.

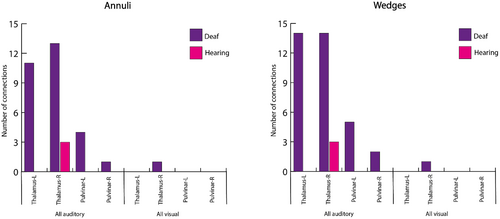

We therefore counted, for each ROI, the number of connections plotted in Figure 5 to all auditory and to all visual areas. This pointed to four ROIs that differ between the groups: the bilateral thalamic and pulvinar ROIs (see Figure 6). Both thalamus and pulvinar are highly connected to auditory areas in the deaf but not in the hearing group, independent of experimental condition. Importantly, there are no differences in the number of connections to visual areas; the effect is thus specific to connectivity with auditory areas.
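The per-ROI counts described above can be sketched as counting, for every ROI, its suprathreshold edges to a target set of ROIs (auditory or visual). The ROI naming scheme (`L_Thal`, `R_Te1.0`, ...), the toy matrix and the inclusive threshold are hypothetical; in practice the target sets would follow the auditory and visual ROIs of Table 1.

```python
import numpy as np

def connections_to(md_weights, roi_names, targets, threshold=1.75):
    """For each ROI, count suprathreshold edges to the ROIs listed in `targets`."""
    names = list(roi_names)
    target_idx = [names.index(name) for name in targets]
    supra = np.asarray(md_weights, dtype=float) >= threshold
    np.fill_diagonal(supra, False)  # ignore self-connections
    return {name: int(supra[i, target_idx].sum()) for i, name in enumerate(names)}

# Hypothetical ROI names and a toy md-pcc matrix (not data from this study)
names = ["L_Thal", "R_Thal", "L_Te1.0", "R_Te1.0", "L_hOc1", "R_hOc1"]
md = np.full((6, 6), 0.5)
for a, b, value in [
    ("L_Thal", "L_Te1.0", 2.0),
    ("L_Thal", "R_Te1.0", 1.9),
    ("R_Thal", "R_Te1.0", 1.8),
    ("L_hOc1", "R_hOc1", 2.5),
    ("L_Thal", "L_hOc1", 1.0),
]:
    i, j = names.index(a), names.index(b)
    md[i, j] = md[j, i] = value

auditory = connections_to(md, names, ["L_Te1.0", "R_Te1.0"])
visual = connections_to(md, names, ["L_hOc1", "R_hOc1"])
print(auditory["L_Thal"], auditory["R_Thal"], visual["L_Thal"])  # 2 1 0
```

Computing the auditory and visual counts separately is what allows an effect to be attributed specifically to auditory connectivity rather than to an overall change in a node's degree.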

Based on the results shown in Figure 6, we turned to explore the specific connectivity pattern in these ROIs. Figure 7 presents the circular graph plots of the four ROIs, highlighted in different colours. Group differences are clearly observable due to the dense connectivity of the thalamic and pulvinar nuclei with auditory areas in the deaf group. These connections spread both to ipsi- and contralateral hemispheres, including early and associative auditory areas.

3 DISCUSSION

Sensory deprivation provides an excellent opportunity to investigate neural plasticity. Several underlying mechanisms have been suggested to explain cross-modal reorganisation following sensory deprivation. These include unmasking of existing connections that are normally silent, stabilisation of transient connections that are usually pruned during normal development, and sprouting of new connections (Bavelier & Neville, 2002; Rauschecker, 1995). We analysed visual and auditory networks of deaf and hearing individuals during different visual tasks, assessing changes in network community structure and connectivity patterns. Both lines of analysis revealed differences between the deaf and hearing groups.

3.1 Differences in community structure

The community structure analysis of the networks revealed several differences in the subcortical connectivity patterns between the deaf and hearing groups: (1) the right LGB moved from the visual to the auditory community (in both experimental conditions) in the deaf group; and (2) the bilateral thalamus and pulvinar joined the auditory community in the deaf group in the annuli task (but not in the wedges task). These findings are in line with human studies and animal models of deafness. First, the community switch of the right LGB found in our study corroborates previous results showing that the auditory cortex of congenitally deaf individuals processes and represents visual information in a way that favours the right hemisphere (Almeida et al., 2015; Amaral et al., 2016; Finney et al., 2001, 2003). Second, plasticity of subcortical structures and connections has been found in deaf humans and in animal models, and supports the visual behavioural changes observed following deafness. For instance, a former study of our group (Amaral et al., 2016) showed that the right thalamus, right LGB and right IC are larger than their left counterparts in deaf but not in hearing individuals. Results from studies with deaf cats also imply that subcortical regions may play a role in the transmission of visual information to the auditory cortex in deafness (Butler et al., 2018; Kok & Lomber, 2017), for example through direct connections from the lateral posterior nucleus to different subareas of the auditory cortex of deaf animals (Barone et al., 2013). Overall, then, our data and these results suggest that in congenital deafness, visual information could be redirected to the auditory cortex by these subcortical structures.

Another finding emerging from the community structure analysis is the difference in the number of communities. While in the hearing group the nodes are divided into three communities (visual, auditory and subcortical), there are four communities in the deaf group. The fourth community includes bilateral STS1 and STS2 as well as left TI while watching wedges, and bilateral STS2 and left STS1 while watching annuli. The STS1 and STS2 regions, which correspond to the middle and anterior/ventral STS, are part of the extended auditory cortex and have been associated with multimodal processing, such as association learning of arbitrary audiovisual stimuli (Tanabe et al., 2005) or audiovisual motion integration (Scheef et al., 2009). Furthermore, these areas exhibit alterations in their cross-modal activity following sensory deprivation, with increased responses to bimodal (as well as unimodal) visual and somatosensory stimuli in deaf individuals (Karns et al., 2012). The emergence of the fourth community in the deaf group in our study indicates that during visual stimulation these nodes were highly interconnected with each other while showing reduced connectivity to other areas, including those of the auditory community. One potential explanation for these findings relates to the sources of the cross-modal inputs arriving at primary and associative auditory areas. While both early and associative cortices could be recruited to process cross-modal stimuli, the early auditory cortex might receive visual information mainly via subcortical connections, whereas the main source of visual information for the associative areas might be cortico-cortical connections. This difference could be reflected in the pattern of internal connections within the auditory cortex.
This hypothesis is supported by our analysis of the connections of these nodes (see Figure 7), which shows that the thalamic and pulvinar nuclei are highly connected mainly to nodes of the early auditory areas. Moreover, altered interregional connectivity within auditory networks in deafness has been found in human and animal resting-state studies (Li et al., 2013; Stolzberg et al., 2018). For example, Stolzberg et al. (2018) reported reduced connectivity between the dorsoposterior (including the primary auditory area) and the anterior auditory networks in deaf cats, which might reflect a reduced influence of the early auditory cortex following cross-modal plasticity. Future studies exploring the inter- and intra-regional functional connectivity of auditory areas during other non-auditory tasks (such as somatosensory and motor tasks) could further test and generalise this hypothesis.

3.2 Differences in number of connections

Subcortical structures showed higher connectivity with auditory areas in the deaf than in the hearing group. Further analysis of the overall number of connections revealed more connections of the bilateral thalamic and pulvinar ROIs to auditory areas in the deaf group. No differences were found in the number of connections of these nodes to visual areas; these results therefore do not reflect a general change in connectivity patterns but are specific to connectivity with the auditory domain. Studies with animal models of deafness collectively indicate that the distribution of cortical and thalamic afferents to deprived auditory cortical areas is similar between hearing and deaf animals (Kok & Lomber, 2017; Meredith et al., 2016). Thus, it is unlikely that the documented functional cross-modal reorganisation of these areas relies on the emergence of novel projections or the stabilisation of transient connections. Rather, accumulating evidence suggests a role for the strengthening or re-weighting of existing inputs at the synaptic level (Clemo et al., 2017). These processes might occur at the subcortical level, where intramodal inputs are (re)directed to subcortical relay nuclei (Allman et al., 2009). For example, Kok and Lomber (2017) studied thalamic projections to the dorsal zone (an auditory area that shows cross-modal reorganisation and mediates enhanced visual motion perception in deaf cats) and showed that while the general thalamo-cortical pattern was similar between hearing, early-deafened and late-deafened animals, the strength of some of these connections differed. Specifically, reduced connectivity strength was found for some auditory thalamic nuclei in deaf animals, and increased connectivity for other multisensory and visual thalamic nuclei (such as the suprageniculate nucleus, which is involved in visual motion processing).
The authors suggested that existing projections from these regions are likely to be involved in the improved visual motion detection observed in deaf animals. Indeed, a large body of anatomical studies indicates that some thalamic nuclei (including, for example, the medial pulvinar and the lateral posterior, medial dorsal and central lateral nuclei) receive converging information from different sensory modalities, which could be transmitted directly from other sensory relay nuclei or via cortico-thalamic connections (for review see Cappe et al., 2012). These thalamic nuclei, which have been associated with multisensory integration under normal sensory development, could provide the anatomical substrate for cross-modal plasticity in cases of sensory deprivation.

The pulvinar has traditionally been associated with visual processing, owing to its dense reciprocal connections with cortical and subcortical visual areas (Bridge et al., 2016) and its involvement in visual spatial attention (Snow et al., 2009). However, recent studies indicate that the pulvinar nuclei are widely connected to other sensory cortices as well as to higher-order cortical areas (Froesel et al., 2021). For example, both the inferior and the medial pulvinar subnuclei are interconnected with early and associative visual and auditory areas. It has recently been proposed that the pulvinar supports sensory processing, such as stimulus filtering and detection, in all sensory modalities, and is involved in global cognitive functions such as the modulation of behavioural flexibility (Froesel et al., 2021). Thus, although the functional role of the pulvinar is still largely unknown, accumulating anatomical and functional evidence points to a potential role of these nuclei in multisensory processing (Tyll et al., 2011; Vittek et al., 2023). For example, a recent study of the lateral posterior nucleus (the rodent homologue of the primate pulvinar) found that visual inputs modulated the activity of neurons in the primary auditory cortex and that silencing the lateral posterior nucleus diminished this modulation (Chou et al., 2020). The authors proposed that a multisensory pathway including the superior colliculi, the pulvinar nuclei and the primary auditory cortex contributes to cross-modal modulation of auditory processing. Furthermore, the pulvinar showed enhanced functional connectivity with both primary visual and auditory cortex during speech comprehension in blind participants, suggesting a recruitment of these subcortical pathways for auditory processing in blindness (Dietrich et al., 2015). Our results suggest that these pathways might also play an important role in directing visual information to the deprived auditory cortex.
Although the spatial resolution of our study was not sufficient to identify the specific pulvinar sub-nuclei that showed increased connectivity with auditory areas, we speculate that the inferior and medial subnuclei might play an important role in cross-modal plasticity.

Overall, and taken together, our results indicate that in the deaf group, subcortical thalamic nuclei are highly connected to auditory ROIs during processing of visual information, suggesting neuroplastic alterations in auditory thalamic relays that enable them to transmit visual information to the auditory cortex. Importantly, these changes were observed during tasks that require extensive visual processing. These findings reveal substantial neuroplastic changes within the auditory network caused by deafness, and the observed alterations in subcortical structures emphasise the dynamic nature of the auditory system in response to congenital deafness. These neuroplastic changes in the auditory network provide important insights for the design and optimisation of cochlear implants and other sensory substitution devices. Understanding the complex adaptive processes taking place within subcortical structures, in addition to the auditory cortex, is crucial for developing effective interventions for congenital sensory deprivation. By considering these neuroplastic changes, researchers and engineers can enhance the performance and success rate of auditory prosthetic devices, ultimately improving the quality of life of individuals with auditory impairments.

4 METHODS

4.1 Participants

We examined a group of 31 participants, 15 congenitally deaf (mean age = 19.4 years, 2 males) and 16 hearing individuals (mean age = 19.1 years, 3 males), who had no history of neurological disorder and had normal or corrected-to-normal vision. The study adhered to the Declaration of Helsinki and was approved by the institutional review board of the Beijing Normal University Imaging Center for Brain Research, China. All participants gave written informed consent after a detailed description of the complete study. All deaf participants used Chinese sign language as their primary language, and none could communicate orally beyond single words. The hearing loss of the deaf participants was above 90 dB binaurally (tested frequency range: 125–8000 Hz); five of them had used hearing aids in the past but did not benefit from them. Causes of deafness were genetic factors, pregnancy-related diseases, complications at childbirth, ototoxic medication, or unknown. All hearing participants had no knowledge of Chinese sign language and no hearing impairment.

4.2 fMRI paradigm

In the two experimental conditions, participants viewed two types of visual stimuli (rotating wedges and expanding annuli). These types of stimuli are typically used to study the processing and representation of low-level visual information, and specifically, the main two spatial dimensions that govern the organization of early visual areas, namely eccentricity and polar angle. Previous studies have shown that the enhanced performance of deaf in some visual tasks is modulated by the spatial location of the stimuli (see, e.g., Bavelier et al., 2000; Almeida et al., 2018) and that the early auditory cortex of deaf process low-level visual information (Almeida et al., 2015). Thus, in the current study we focused on these types of stimuli to study the network community structure and connectivity patterns in the hearing and control groups. Participants went through four runs in the MRI scanner and viewed two types of visual stimuli (rotating wedges and expanding annuli). Visual stimuli were presented in a blocked design with five blocks per run. Each run started with a resting period of 12 s that was followed by a 48-s block of stimuli. Each stimulation block was followed by a 12-s inter-bock-interval (IBI). In two runs (“Wedge condition”) the visual stimuli included a random sequence of four images showing of counterphase flickering (5 Hz) checkerboard wedges subtending 10.50° of the visual angle, that were located on the left and right parts of the screen along the azimuth plane, and upper and lower parts of the screen along the meridian plane (see Figure 1a). In the second condition (“Annuli condition”) the visual stimuli included a random sequence of four images showing counterphase flickering (5 Hz) rings subtending 9.23°, 6.61°, 3,97°, or 1.30° of the visual angle (see Figure 1b). Participants were asked to maintain fixation on a central point throughout the entire run. 
All participants completed two runs per condition, in which the four wedge stimuli or the four annuli alternated randomly; this resulted in 312 functional volumes being recorded per condition. The data used in the current study were previously published (Almeida et al., 2015) in a study investigating the processing of low-level visual information in the auditory cortex of deaf individuals.
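As a consistency check on the timing described above, the block design can be worked through in a few lines. The sketch uses only values stated in the text (12-s initial rest, five 48-s blocks each followed by a 12-s IBI, two runs per condition, and TR = 2 s from the acquisition parameters) and shows that each run lasts 312 s, i.e. 156 volumes, which gives the 312 volumes per condition reported above. The `boxcar` helper is hypothetical and purely illustrative: it encodes the block structure as an on/off regressor sampled at each volume, which is one simple way to represent the paradigm effects; it is not taken from the original analysis.

```python
# Block-design timing; all constants are taken from the text.
TR_S = 2.0              # repetition time in seconds
INITIAL_REST_S = 12     # rest period at the start of each run
BLOCK_S = 48            # stimulation block duration
IBI_S = 12              # inter-block interval following each block
N_BLOCKS = 5            # blocks per run
RUNS_PER_CONDITION = 2


def run_duration_s():
    # Initial rest plus five (block + IBI) cycles
    return INITIAL_REST_S + N_BLOCKS * (BLOCK_S + IBI_S)


def volumes_per_run():
    return int(run_duration_s() / TR_S)


def block_onsets_s():
    # Onset of each stimulation block relative to the start of the run
    return [INITIAL_REST_S + b * (BLOCK_S + IBI_S) for b in range(N_BLOCKS)]


def boxcar(n_vols=None):
    # Hypothetical helper: 1 while a block is on, 0 during rest/IBI,
    # evaluated at the start time of each volume (no HRF convolution)
    n_vols = volumes_per_run() if n_vols is None else n_vols
    onsets = block_onsets_s()
    return [int(any(on <= v * TR_S < on + BLOCK_S for on in onsets))
            for v in range(n_vols)]


print(run_duration_s(), volumes_per_run(), volumes_per_run() * RUNS_PER_CONDITION)
# → 312 156 312
```

Note that the two volumes discarded at the start of each run during acquisition are not subtracted here; the calculation reproduces the number of volumes recorded.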

4.3 Data acquisition and pre-processing

MRI data were acquired on a 3 T MAGNETOM Trio whole-body MR scanner (Siemens Healthineers, Erlangen, Germany) using a 32-channel head coil. Structural images were acquired with a T1-weighted magnetisation prepared rapid gradient echo (MPRAGE) sequence (repetition time (TR) = 2530 ms, echo time (TE) = 3.39 ms, flip angle (α) = 7°, field of view (FoV) = 256 × 256 mm², matrix size = 256 × 192, bandwidth (BW) = 190 Hz/px). The fMRI data were acquired using a T2*-weighted gradient-echo echo planar imaging (EPI) sequence (TR = 2000 ms, TE = 30 ms, FoV = 200 × 200 mm², matrix size = 64 × 64, slice thickness = 4 mm with a 0.6-mm gap, α = 90°, BW = 2520 Hz/px). No parallel imaging was used for either sequence. Each fMRI volume consisted of 33 axial slices acquired in interleaved order and covering the whole brain. Before pre-processing, the first two volumes of each run were discarded to allow the magnetisation to reach a steady state (T1 saturation effects).
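The EPI parameters above imply the following effective geometry. This is a worked calculation rather than a reported value; it assumes that coverage is measured from the outer edge of the first slice to the outer edge of the last, so that 33 slices contribute 32 gaps.

```python
# Effective EPI geometry derived from the acquisition parameters in the text.
FOV_MM = 200.0
MATRIX = 64
SLICE_THICKNESS_MM = 4.0
SLICE_GAP_MM = 0.6
N_SLICES = 33

in_plane_mm = FOV_MM / MATRIX                          # in-plane voxel size
slice_spacing_mm = SLICE_THICKNESS_MM + SLICE_GAP_MM   # centre-to-centre distance
# Assumption: 33 slices separated by 32 gaps, measured edge to edge
coverage_mm = N_SLICES * SLICE_THICKNESS_MM + (N_SLICES - 1) * SLICE_GAP_MM

print(f"voxel {in_plane_mm} x {in_plane_mm} x {SLICE_THICKNESS_MM} mm, "
      f"spacing {slice_spacing_mm:.1f} mm, coverage {coverage_mm:.1f} mm")
# → voxel 3.125 x 3.125 x 4.0 mm, spacing 4.6 mm, coverage 151.2 mm
```

The acquired voxels are therefore anisotropic (3.125 × 3.125 × 4 mm) and the slab spans roughly 15 cm in the slice direction.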

Pre-processing was conducted with Statistical Parametric Mapping software (SPM12 (v7771), Wellcome Trust Centre for Neuroimaging, Institute of Neurology, University College London, UK) running on MATLAB R2013a. Functional volumes were slice-time corrected to reference slice 2 (the middle slice in time) and realigned to the third volume by minimising the mean squared error (rigid-body transformation) to correct for head movement. Five deaf and six hearing participants were excluded because of excessive motion estimates (greater than 2 mm in translation and 2° in rotation). The resulting mean image was coregistered to each participant's MPRAGE image, which was normalised into standard stereotactic space (Montreal Neurological Institute (MNI), Quebec, Canada) using the tissue probability maps included in SPM12. The nonlinear transformation parameters were then applied to the functional images. For the analysis of functional brain networks, the mean time series of each of 52 brain regions involved in visual and auditory processing (see Table 1) was extracted using the EBRAINS multilevel Human Brain Atlas (Amunts et al., 2020) and the Human Atlas within the WFU PickAtlas Tool v2.4 (Maldjian et al., 2003), respectively. ROIs for the superior olivary complex and inferior colliculus were taken from Mühlau et al. (2006) and those for the superior colliculus from Limbrick-Oldfield et al. (2012), and were extracted as described therein. All time series were z-normalised and high-pass filtered (cut-off 0.008 Hz) to remove low-frequency scanner drift before the functional brain networks were constructed.
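The text does not specify how the high-pass filter was implemented. A minimal sketch, assuming a discrete cosine transform (DCT) drift model of the kind used by SPM's `spm_filter` (the rule for choosing the number of cosine regressors follows SPM), is shown below. A cut-off of 0.008 Hz corresponds to a period of 125 s. The order of operations (filter first, then z-normalise so that the output has exactly zero mean and unit variance) is also an assumption.

```python
import numpy as np


def dct_basis(n_scans, n_basis):
    """Orthonormal DCT-II basis (n_scans x n_basis); column 0 is the constant term."""
    t = np.arange(n_scans)
    basis = np.empty((n_scans, n_basis))
    basis[:, 0] = 1.0 / np.sqrt(n_scans)
    for k in range(1, n_basis):
        basis[:, k] = np.sqrt(2.0 / n_scans) * np.cos(
            np.pi * (2 * t + 1) * k / (2 * n_scans))
    return basis


def highpass_dct(ts, tr_s=2.0, cutoff_hz=0.008):
    """Remove cosine drifts slower than cutoff_hz.

    ts: array of shape (n_scans,) or (n_scans, n_rois).
    """
    n_scans = ts.shape[0]
    cutoff_period_s = 1.0 / cutoff_hz  # 125 s for 0.008 Hz
    # Number of DCT regressors, chosen with the same rule as SPM
    n_basis = int(np.floor(2 * n_scans * tr_s / cutoff_period_s + 1))
    drift = dct_basis(n_scans, n_basis)[:, 1:]  # drop the constant; remove drifts only
    return ts - drift @ (drift.T @ ts)


def zscore(ts):
    """Zero mean, unit variance along the time axis."""
    return (ts - ts.mean(axis=0)) / ts.std(axis=0)


def preprocess(ts, tr_s=2.0, cutoff_hz=0.008):
    # Assumed order: high-pass filter first, then z-normalise
    return zscore(highpass_dct(ts, tr_s, cutoff_hz))
```

Because the DCT columns are orthonormal, the filter is a projection: any slow cosine component within the drift set is removed exactly, while the series mean and faster fluctuations are left untouched until the final z-normalisation.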

4.4 Construction of functional brain networks

Each of the 52 ROIs selected above represents a single node in the resulting functional network. From the extracted mean time series, we obtained a temporal correlation matrix (size 52 × 52) for each participant by computing Pearson partial correlation coefficients between the time series of every pair of ROIs, controlling for noise-related covariates, as implemented in MATLAB R2013a. As covariates of non-interest, we used the mean white matter and cerebrospinal fluid time series extracted for each participant individually, together with each participant's motion parameters from the realignment step and the effects of the paradigm. For each correlation coefficient, a p-value was computed based on Student's t distribution. To minimise the number of false positives, we used a significance level of p < 0.0002 (Bonferroni correction) and removed from each participant's correlation matrix all correlations that did not reach this level. The remaining correlations can be interpreted as connections, or edges, between the nodes of the functional network, and the values of the correlation coefficients serve as edge weights indicating the strength of each connection. Binarising the edges would enhance contrast but could hide important information, because edge weights may vary substantially between conditions; weighted graph analysis preserves this information. To avoid negative edge weights, we converted all weights to absolute values, because we were interested in any difference between the two groups regardless of sign. For averaging correlation coefficients, we used the Olkin–Pratt estimator (Olkin & Pratt, 1958), which is considered the least biased estimator (for more details see Ruttorf et al., 2019). Finally, we divided each correlation matrix by its mean to ensure comparability, because graph properties are highly dependent on the average weight of a network.
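The construction steps above can be sketched in Python as follows. This is a minimal sketch, not the authors' MATLAB code. Partial correlations are computed as Pearson correlations of the residuals left after regressing the covariates (plus an intercept) out of each ROI time series, and p-values use Student's t distribution with n − 2 − c degrees of freedom for c covariates; this is the standard partial-correlation test and should correspond to what MATLAB's `partialcorr` reports for the Pearson type. Normalising by the mean of the off-diagonal entries (zeros included) is our assumption about what "its mean" refers to, and the Olkin–Pratt averaging across participants is not shown.

```python
import numpy as np
from scipy import stats


def partial_corr(ts, covariates):
    """Pearson partial correlations between all ROI pairs, controlling for covariates.

    ts: (n_scans, n_rois) ROI time series; covariates: (n_scans, n_cov), 2-D.
    Returns (r, p); the diagonal of r is set to 0 (self-connections are not edges).
    """
    n_scans = ts.shape[0]
    design = np.column_stack([np.ones(n_scans), covariates])  # intercept + nuisance
    beta, *_ = np.linalg.lstsq(design, ts, rcond=None)
    resid = ts - design @ beta
    r = np.corrcoef(resid, rowvar=False)
    np.fill_diagonal(r, 0.0)
    df = n_scans - 2 - covariates.shape[1]
    t = r * np.sqrt(df / (1.0 - r ** 2))
    p = 2.0 * stats.t.sf(np.abs(t), df)  # two-sided p-value
    return r, p


def weighted_graph(ts, covariates, alpha=0.0002):
    """Threshold at p < alpha, take absolute weights, divide by the mean weight."""
    r, p = partial_corr(ts, covariates)
    w = np.where(p < alpha, np.abs(r), 0.0)
    np.fill_diagonal(w, 0.0)
    off_diag = ~np.eye(w.shape[0], dtype=bool)
    mean_w = w[off_diag].mean()  # assumption: mean over all off-diagonal entries
    return w / mean_w if mean_w > 0 else w
```

With 52 ROIs, `ts` would have 52 columns and `covariates` would stack the white matter, cerebrospinal fluid, motion and paradigm regressors column-wise.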

4.5 Graph theory metrics

In general, networks are represented as sets of N nodes and k edges. Below, we use the term "graph" explicitly, because it makes no assumptions about the nature of the edges and emphasises the aspect of mathematical modelling, whereas "network" generally refers to real-world connected systems (De Vico Fallani et al., 2014). Graphs are said to be unweighted if edges are only either present or absent, and weighted if edges are assigned weights. Graphs are undirected if edges carry no directional information and directed if they do. Here, we analysed weighted undirected graphs by means of graph theory using the Brain Connectivity Toolbox (BCT, version 2017-01-15; Rubinov & Sporns, 2010). All analysed graphs are connected. Graph theory metrics depend on N and k (N,k-dependence; van Wijk et al., 2010) as well as on the choice of correlation matrix and edge weights (Phillips et al., 2015). N,k-dependence can affect graph theory metrics in two ways: (i) true effects can be masked by opposing effects and (ii) spurious significant effects can be introduced. Here, we primarily examined graph theory metrics that are less sensitive to changes in N and k, such as topological metrics. First, we compared the number of edges of the graphs across the two conditions and groups to address the N,k-dependence of graph metrics; the number of nodes (here: 52) is constant across conditions and groups. We then examined topological metrics such as modularity, community structure and correlation strength.
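Because the edge count k enters the N,k-dependence directly, it helps to state how it is counted. A minimal sketch, assuming a symmetric weight matrix with a zero diagonal such as the one described in Section 4.4: each undirected edge is counted once, density relates k to the 52 × 51 / 2 = 1326 possible edges, and a breadth-first search checks that the graph is connected. The function names are illustrative; BCT provides its own MATLAB routines for these quantities.

```python
from collections import deque

import numpy as np


def n_edges(w):
    """Number of edges in an undirected graph (each node pair counted once)."""
    return int(np.count_nonzero(np.triu(w, k=1)))


def density(w):
    """Fraction of the N(N-1)/2 possible edges that are present."""
    n = w.shape[0]
    return n_edges(w) / (n * (n - 1) / 2)


def is_connected(w):
    """Breadth-first search from node 0; True if every node is reachable."""
    n = w.shape[0]
    seen = {0}
    queue = deque([0])
    while queue:
        i = queue.popleft()
        for j in np.flatnonzero(w[i]):
            j = int(j)
            if j not in seen:
                seen.add(j)
                queue.append(j)
    return len(seen) == n
```

For example, the path graph 0–1–2 has two edges, a density of 2/3 and is connected, whereas two disjoint pairs of nodes are not.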

4.5.1 Degree

Node degree is the number of edges connected to a node. During calculation of node degree using BCT, weight information on edges is discarded (van Wijk et al., 2010).
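The distinction between degree and weighted measures can be made concrete with a short sketch, assuming a symmetric weight matrix with a zero diagonal. Degree counts the nonzero entries in each row, which is what BCT's `degrees_und` returns; node strength (the row sum of weights, as in BCT's `strengths_und`) is shown for contrast because it keeps the weight information that degree discards. The Python function names are illustrative and not part of BCT.

```python
import numpy as np


def node_degree(w):
    """Number of edges per node; edge weights are ignored (binarised)."""
    return np.count_nonzero(w, axis=1)


def node_strength(w):
    """Sum of edge weights per node; weight information is kept."""
    return w.sum(axis=1)


w = np.array([[0.0, 0.2, 1.5],
              [0.2, 0.0, 0.0],
              [1.5, 0.0, 0.0]])
print(node_degree(w).tolist())    # → [2, 1, 1]
print(node_strength(w))
```

Nodes 1 and 2 have the same degree, but their strengths (0.2 versus 1.5) differ by a factor of 7.5, which is exactly the information lost when weights are discarded.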

4.5.2 Modularity

4.5.3 Community structure

ACKNOWLEDGEMENTS

For the publication fee, we acknowledge financial support by Deutsche Forschungsgemeinschaft within the funding programme “Open Access Publikationskosten” as well as by Heidelberg University. Open Access funding enabled and organized by Projekt DEAL.

FUNDING INFORMATION

Jorge Almeida is supported by the European Research Council (ERC) under the European Union's Horizon 2020 research and innovation programme Starting Grant number 802553 “ContentMAP”.

CONFLICT OF INTEREST STATEMENT

The authors declare no conflicts of interest.

Open Research

DATA AVAILABILITY STATEMENT

The data that support the findings of this study are available upon reasonable request from one of the corresponding authors (Jorge Almeida).