Toward Bayesian Data Compression

Abstract

In order to handle large datasets omnipresent in modern science, efficient compression algorithms are necessary. Here, a Bayesian data compression (BDC) algorithm that adapts to the specific measurement situation is derived in the context of signal reconstruction. BDC compresses a dataset under conservation of its posterior structure with minimal information loss given the prior knowledge on the signal, the quantity of interest. Its basic form is valid for Gaussian priors and likelihoods. For constant noise standard deviation, basic BDC becomes equivalent to a Bayesian analog of principal component analysis. Using metric Gaussian variational inference, BDC generalizes to non-linear settings. In its current form, BDC requires the storage of effective instrument response functions for the compressed data and corresponding noise encoding the posterior covariance structure. Their memory demand counteract the compression gain. In order to improve this, sparsity of the compressed responses can be obtained by separating the data into patches and compressing them separately. The applicability of BDC is demonstrated by applying it to synthetic data and radio astronomical data. Still the algorithm needs further improvement as the computation time of the compression and subsequent inference exceeds the time of the inference with the original data.

1 Introduction

One of the challenges in contemporary signal processing is dealing with large datasets. Those datasets need to be stored, processed, and analysed. They often reach the limit of the available computational power and storage. Examples include urban technology,[1] internet searches,[2] bio-informatics[3] and radio astronomy.[4] In this paper we discuss, how such huge datasets can be handled efficiently by compression.

In general, there are two categories of data compression methods: Lossless compression and lossy compression. From lossless compressed data one can regain the full uncompressed data. This limits the amount of compression as only redundant information can be taken away by a lossless scheme. Lossy compression is more effective in terms of reducing the storage needed by the compressed data. This is possible at the cost of loss of information.

In this work, we focus on lossy compression methods. The considered scenario is: compressing data which carries information about some quantity of interest, which we call the signal. Only the relevant information for this signal needs to be conserved. Therefore, there is no need to regain the full original data in such applications.

Many lossy compression schemes have been developed: Rate distortion theory (ref. [5], pp. 301–307) gives a general approach stating the need of a loss function, which shall be minimized in order to find the best compressed representation of some original data d. As a consequence of the Karhunen–Loéve theorem,[6-8] principal component analysis (PCA)[9, 10] can also be used for data compression. Its aim is to compress some data, such that the compressed data carries the same statistic properties as the original. It was shown that PCA minimizes an upper bound of the mutual information of the original and the compressed data about some relevant signal.[11] Both methods aim to reproduce the original data from the compressed data, but are not specifically optimized to recover information about the actual quantity of interest.

Before compressing data, one should be clear about the signal on which one wants to keep as much information as possible. In a Bayesian setting, this means that the posterior probability of the signal conditional to the compressed data should be as close as possible to the original posterior that was conditioned on the original data.

The natural distance measure between the original and compressed posterior to be used as the action principle is the Kullback Leibler (KL) divergence.[12] From this, we derive Bayesian data compression (BDC). Using the KL divergence as the loss function reduces the problem of finding the compressed data representation to an eigenvalue problem equivalent to the generalized eigenvalue problem found by ref. [13]. In this work, we give a didactic derivation and show how this approach can be extended to nonlinear and non-Gaussian measurement situations as well as to large inference problems. This is verified using synthetic data with linear and nonlinear signal models and in a nonlinear astronomical measurement setup.

This publication is structured as follows: In Section 2, we assume the setting of the generalized Wiener filter: a linear measurement equation, for a Gaussian signal sensed under Gaussian noise.[14] There, the optimal compression for prior mean reduces to an eigenvalue problem. In Section 3, this is generalized to nonlinear and non-Gaussian measurements. Furthermore, we show how a sparse structure can be utilized on the compression algorithm making it possible to handle large datasets in a reasonable amount of time. In Section 4, BDC is applied to synthetic data resulting from a linear measurement in one dimension, a nonlinear measurement in two dimensions, and real data from the Giant Metrewave Radio Telescope (GMRT).

2 Linear Compression Algorithm

We approach the problem of compression from a probabilistic perspective. To this end, we juxtapose the posterior probability distribution of the full inference problem with a posterior coming from a virtual likelihood together with the same prior. The goal is to derive an algorithm which takes the original likelihood and the prior as input and returns a new, virtual likelihood that is computationally less expensive than the original likelihood. This shall happen such that the resulting posterior probability distribution differs as little as possible from the original posterior.

The natural measure to compare the information content of a probability distribution and an approximation to it in the absence of other clearly defined loss functions is the KL divergence, as shown in ref. [12]. Minimizing the KL divergence completely leads to the criteria for the most informative likelihood.

2.1 Assumptions and General Problem

- 1. The signal s, which is a priori Gaussian distributed with known covariance S, has been measured with a linear response function Ro. The resulting original data do is subject to additive Gaussian noise with known covariance No. In summary,

(1)where we denote definitions by “≔ ”, with “:” standing at the side of the new defined variable, and and are drawn from zero centered Gaussian distributions. The notation indicates that s is drawn from a Gaussian distribution with mean s0 and covariance S. For the signal prior, s0 is zero.

- 2. The compressed data dc, which is going to be lower dimensional compared to the original data do, is related to the signal s linearly through a measurement process with additive Gaussian noise with covariance Nc and response Rc, which need to be determined,

(2)

We call the measurement precision matrix. Our goal is to find the compressed measurement parameters such that the least amount of information on the signal s is lost as compared to .

Only the last term of this new KLo, c in Equation (14) depends on the original data. The more the compressed response Rc preserves the information of the original posterior mean mo, the smaller this term becomes which reduces KLo, c. Thus, a response Rc that is sensitive to mo is favored. The original posterior mean mo will typically exhibit large absolute values in signal space where the original response was of largest absolute values. This gives an incentive for the compressed response toward the original one.

In this section, we looked at the posterior distributions in the context of Gaussian prior and Gaussian likelihood in a linear measurement setup. By minimizing the Kullback–Leibler divergence with respect to the compressed data, we found an expression for the latter. Plugging in this expression, KLo, c only depends on the compressed response and noise covariance.

2.2 Information Gain from Compressed Data

We see that the relevant part of KLo, c splits up into a sum over independent contributions associated to the individual compressed measurement direction , each of which belongs to a specific compressed data point . Since KLo, c expressed this way is additive with respect to the inclusion of additional data points, the sum in (28) can easily be extended. To minimize KLo, c with respect to w (or r, respectively), the contributions can be minimized individually with respect to their respective compressed measurement direction .

2.3 Optimal Expected Information Gain

In order to find the compressed data point which adds most information to the compressed likelihood, (34) needs to be maximized with respect to the normalized vector . For zero posterior mean , this problem reduces to an eigenvalue problem as shown in Appendix A. We proceed by treating the general case of non-vanishing mo.

There is only one normalized vector (respectively ) left to be determined for each . However, in the current form, (34) cannot be maximized analytically. The main issue is the last term that contains and which in the following we treat stochastically using the prior signal and noise knowledge on the signal s and the noise no only. Thereby, we can calculate the expected information gain.

2.4 Algorithm

Now, the previously derived method shall be turned into the actual BDC. For that we need to solve eigenvalue problem (44). For compressing the data to kc data points, one needs to determine the kc largest eigenvalues and corresponding eigenvectors that belong to the most informative measurement directions. First we derive an estimate for the fraction of information stored in the compressed measurement parameters if we compute only a limited number of eigenpairs, that is, eigenvalues and corresponding eigenvectors. Then, we discuss some details of how to compute the input parameters for getting the compressed measurement parameters, that is, for the eigenvalue problem (44) and how to solve it.

Due to computational limits, in general we cannot determine all K eigenpairs of (44) carrying information. We need to set the number of most informative eigenpairs being determined numerically. For that limited number of eigenpairs, we derive a lower bound of the information stored in the corresponding compressed measurement parameters in the following. If we are only interested in a certain amount of information we can use this bound to find and neglect eigenpairs containing too little information, such that in the end, we have eigenpairs containing still enough information.

The eigenpairs carrying information are those with non-zero eigenvalue . The number K of non-zero eigenvectors is equal to the rank of . As Gaussian covariances, S and No are positive definite and therefore have full rank, the original response Ro has at most a rank equal to the smaller rank of both covariances. Thus, with (7), the rank of and therefore the number of informative eigenpairs K is equal to the rank of Ro. Altogether the compressed measurement parameters can maximally carry the total information . I is the difference in information stored in the posterior, when considering all informative eigendirections compared to having no compressed data, . For no compressed data, the compressed posterior distribution becomes the prior distribution with covariance S. Thus, I is the total information about the signal s encoded in the original data with respect to prior knowledge.

Now we can find the minimum number of eigenpairs containing at least information by finding the smallest number of eigenpairs kc such that (57) holds and then forget all eigenpairs with a larger index than kc.

In case , some eigenpairs contain no additional information to the prior knowledge and the information gain of the last eigenpair is zero. Then we have stored all information () in the compressed data with non-zero eigenvalue and Equation (57) is automatically fulfilled for given .

With the information fraction γ, we have found a quantification of how much of the available information is stored in the compressed measurement parameters. For limited number of computed eigenpairs, one can still estimate γ by its upper and lower bounds. Next, we discuss some details of the computation of the input parameters of BDC and its final implementation.

The eigenvalue problem (44) can then be solved by an Arnoldi iteration.[15]

This leaves us with basic BDC as summarized in Algorithm 1. We first compute the original posterior mean and prior covariance using the prior dispersion. Given the original data do, response Ro and inverse noise covariance , we can compute the fidelity matrix to solve eigenvalue problem (44) for the largest eigenvalues. If a minimal amount of information fraction that shall be encoded in the compressed data is specified, we can determine the largest index kc so that Equation (57) holds and only save those kc eigenpairs that carry that much information. Then we normalize the eigenvectors with respect to the norm induced by the prior covariance to finally determine the compressed measurement parameters according to Equations (51)–(53).

Algorithm 1. Basic Bayesian Data Compression

| 1: | procedure compress, Ro, , do, , |

| 2: | |

| 3: | |

| 4: | compute largest eigenpairs of |

| 5: | find smallest kc, such that (57) holds. |

| 6: | for every do |

| 7: | forget |

| 8: | for every do |

| 9: | |

| 10: | , with unit vectors |

| 11: | |

| 12: | |

| 13: | returndc, Rc, |

In the linear scenario the full Wiener filter needs to be solved. Thus, the computational resources required to compute and store the compressed measurement parameters exceed the resources saved by the compression. It would be of benefit in a real world application if the eigenfunctions could be re-used in repetitions of the same measurement and do not need to be computed again. BDC's main benefit lies in the nonlinear scenario with a nonlinear response inside the measurement equation. There, the inference appears to be more complicated, but BDC enables us to exploit information stored in the data further while calling the original data and response less often.

3 Generalizations

3.1 Generalization to Nonlinear Case

- 1. Compress the original measurement parameters with prior knowledge and original measurement parameters as input.

- 2. Infer the posterior mean given the compressed measurement parameters. This will only be an approximate solution.

- 3. Approximate the original posterior around the inferred mean and use it as the new prior to start again with the first step.

3.2 Utilization of Sparsity

High-dimensional data are difficult to handle simultaneously. For the eigenvalue problem of BDC, it is more efficient to solve a larger number of lower dimensional problems. For the signal inference, it is beneficial to ensure that the response Rc and noise Nc of the compressed system are sparse operators. This can be achieved by dividing the data into patches to be compressed separately. For that, we use the fact that not every data point carries information about all degrees of freedom of the signal at once. Data points that inform about the same degrees of freedom of the signal can then be compressed together exploiting sparsity of the compressed measurement directions. This also has the advantage of lower dimensional eigenvalue problems to be solved, saving computation time. The separately compressed data of the patches as well as corresponding responses and noise covariances are finally concatenated.

An example would be data and signal that are connected via a linear mask hiding parts of the signal from the data as discussed in Section 4.2. If then the signal is correlated in space, we can divide the data into patches which carry information about the same patch in signal space.

Alternatively, this method can be used to compress data online, that is, while data is measured one can collect and process it blockwise as suggested by ref. [19] such that the full data never has to be stored completely. After each compression, the reconstruction of the signal takes the concatenated measurement parameters, where the compressed response is now sparse, and solves the inference problem altogether.

The signal is not affected by the patching. Signal correlations are still represented via the signal prior covariance S, and therefore also present in the compressed signal posterior. Since the reconstruction is running over the full problem, its result is not biased due to patchwise compression. In principle, any kind of compression could be specified via introduction of arbitrary Rc and Nc into Equation (11). The resulting reconstruction would all be unbiased, but of course, less accurate.

To summarize, we separate the data into patches. The data of every patch is compressed separately leading to compressed measurement parameters for every patch. Prerequisite for treating the patches separately is that the noise of the individual patches is uncorrelated between the patches. By concatenating the compressed measurement parameters of all patches, we get all operators needed for the compressed signal posterior. This removes the need to store the compressed responses over the entire signal domains. Only their patch values have to be stored, saving memory and computation time.

4 Application

Now, the performance of BDC is discussed for applications of increasing complexity, first for a linear synthetic measurement setting and then for a nonlinear one. For the latter, we demonstrate the advantage of dividing the data into patches and compressing them separately. Finally, the compression of radio interferometric data from the GMRT is discussed.

4.1 Synthetic Data: Linear Case

First, the BDC is applied to synthetic data in the Wiener filter context. This means all probability distributions such as prior, likelihood, and posterior are Gaussian and the data are connected to the signal via a linear measurement equation . In this setup, we can test basic BDC in its actual, not approximated form, for changing noise and masked areas. Also, we compare it with the Bayesian analog of PCA (BaPCA), reducing the expected data covariance to its principal components.

The signal domain is a 1D regular grid with 256 pixels. The synthetic signal and corresponding synthetic data are drawn from a zero centered Gaussian prior. The data is masked, such that only pixels 35–45 and 60–90 are measured linearly, according to . Additionally, white Gaussian noise is added with zero mean and standard deviation of for measurements up to pixel 79, and for pixels 80–90. Those noisy data are then compressed to four data points, from which the signal is inferred in a last step.

We apply basic BDC as described in Algorithm 1. For the eigenvalue problem, we use the implementation of the Arnoldi method in scipy (scipy.sparse.linalg.eigs[20]).

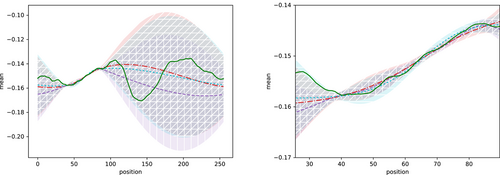

After having compressed the data, we evaluate the reconstruction performance using the compressed data. With Equation (4) the posterior can be calculated directly from the compressed measurement parameters, and signal covariance. The posterior mean and uncertainty for the original and the compressed data are compared to the ground truth in Figure 2. The original data has been compressed from 40 to 4 data points with a fraction γ of 83.7% of the total information encoded in the compressed data. Especially at the measured areas, both the original and the compressed reconstruction are close to the ground truth, while the reconstructed means deviate from the ground truth at masked areas far away from measured areas. However, this deviation is still captured in the uncertainties.

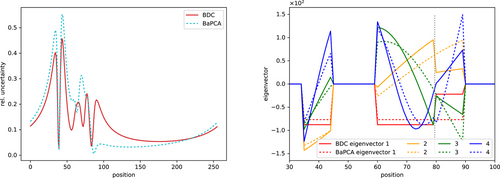

Figures 2 and 3 show that the compressed posterior has a higher variance than the original posterior. Figure 3 shows the relative uncertainty difference of the compressed and original posterior, that is, the compressed posterior uncertainty subtracted from the original posterior uncertainty divided by their mean at each pixel. It is strictly positive in the measured areas as well as in the unmeasured areas. In the measured area compared to the unmeasured area, the relative uncertainty excess of the compressed posterior is higher, since there the absolute uncertainty is low. Slight absolute increase of uncertainty there leads to a higher relative variation. This proves the increase of uncertainty due to the compression.

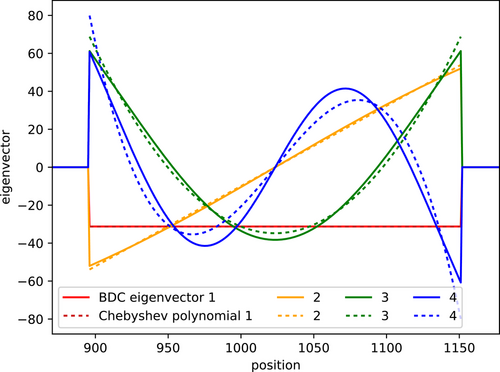

The eigenvectors are plotted in Figure 3. At the masked pixels, the eigenvectors stay zero. The changing noise covariance visibly impacts the shape of the eigenvectors. Between pixels 79 and 80, where the noise increases, is a clear break in all the eigenvectors. A higher noise standard deviation leads to abrupt drops in the eigenvectors. A more detailed discussion of the eigenvectors can be found in Appendix B. There we compare the shape of the eigenvectors in a simpler setup of a continuous mask and constant noise to those of Chebyshev polynomials of the first kind. An analytical derivation of their form in this simple setting is given in Appendix C.

We have plotted the corresponding reconstructions in Figure 2 with corresponding uncertainty as cyan dotted line with horizontally hatched shades. BaPCA reconstructs the mean and standard deviation similar to BDC. For comparison, we have also plotted the relative uncertainty in Figure 3 as we did for BDC as well as the eigenvectors building the compressing transformation V. The relative uncertainty from BaPCA clearly exceeds the one of BDC in areas of low noise. In the area of higher noise, the relative uncertainty excess from BaPCA is lower than the one from BDC compared to the original posterior uncertainty. The reason for this can be seen by comparing the eigenvectors of BaPCA and BDC: BaPCA is more sensitive in high noise areas, therefore having a lower posterior uncertainty there, but also letting thereby more noise enter the compressed data. Compared to BaPCA, BDC encodes more information in regions of lower noise, where the data is more informative, and it keeps less information from regions of higher noise.

This can be seen when looking at the eigenvectors of both methods. The amplitude of eigenvectors from BDC drop where the noise standard deviation becomes higher. For BaPCA, only the fourth eigenvector changes its amplitude in the region of higher noise standard deviation. The first three eigenvectors, however, do not vary their amplitude with noise change. This coincides with the observation that BaPCA and BDC become equivalent for constant noise standard deviation as discussed in Section 2.3.

We found that the compression method reduces the dimension of the data with minimal loss of information in the simple case of a linear 1D Wiener filter inference. Storing only four compressed data points still reconstructs the signal well, compared to the reconstruction with original data. Every compressed data point determines the amplitude of an eigenvector such that the signal is approximated appropriately. The lossy compression leads to a slightly higher uncertainty, as information is lost. In this application, BDC and BaPCA give similar results in terms of reconstruction. Compared to a BaPCA, BDC focusses more on regions of lower noise standard deviation, where the data are more informative.

4.2 Synthetic Data: Nonlinear Case

Testing BDC on data from a nonlinear generated signal in two dimensions allows us to verify the derivation of the nonlinear approximation in Section 3.1 and also test patchwise compression discussed in Section 3.2. Some nonlinear synthetic signal is generated and then inferred with the original data, with compressed data, and with data, which has been first divided into patches and then compressed. The results are discussed with respect to the quality of the inferred mean for the different methods, their standard deviation, their power spectrum, as well as the computation time.

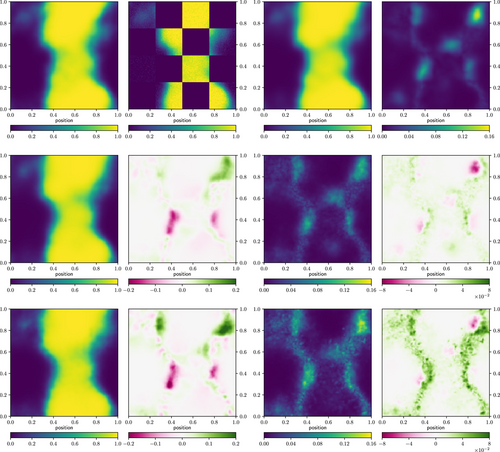

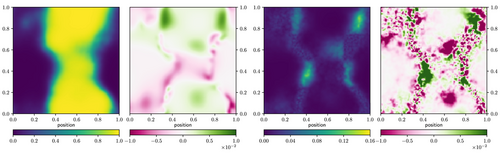

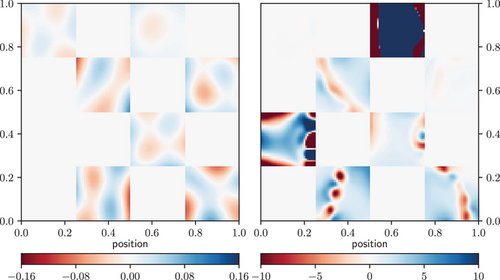

The synthetic signal has been generated with a power spectrum created by a nonlinear amplitude model as described in ref. [21] deformed by a sigmoid function to create a nonlinear relation between signal and data. The code of the implementation can be found here: https://gitlab.mpcdf.mpg.de/jharthki/bdc. The resulting ground truth lies on an 128 × 128 regular grid and is shown on the top left most panel of Figure 4. To test BDC on masked areas in a nonlinear setup as well, this signal is covered by a 4× 4 checkerboard mask with equally sized 32 × 32 squares, as displayed in the second top panel of Figure 4. Additionally uncorrelated noise with zero mean and 0.02 standard deviation has been added. From this incomplete and noisy data, the non-Gaussian signal as well as the power spectrum of the underlying Gaussian process need to be inferred simultaneously. The results of the original inference are plotted in the third top panel of Figure 4.

After setting up the input parameters for BDC, the data was compressed from 8 192 to data points altogether without sorting out less informative data points. Next metric Gaussian variational inference[17] performed inference steps based on the compressed data, each time finding a better approximation for the posterior mean and approximating the posterior distribution again. Then the original data was compressed another time using the current posterior mean as the reference point . This was done times in total. To determine the amount of information contained in the resulting compressed data another run has been started where 4096 eigenpairs have been computed. This way the estimation of γ is more exact using Equation (57) for a lower bound and (58) for an upper bound . With this we estimate that the compressed data of size 80 contains 31.4–32.2% of the information. The same way, one gets that 672 compressed data points contain 80% of the information. It turns out that already 80 compressed data points contain enough information to reconstruct the essential structures of the signal as one can see from reconstruction and difference maps shown in Figure 4.

The corresponding posterior mean is plotted in the center left of Figure 4 together with the difference to the originally inferred mean. Overall the compression yields similar results. Deviations appear at the edges of homogeneous structures, while deviations inside homogeneous structures are neglectable. The variance is plotted in the center right of Figure 4. Again it differs mainly at the edges. Since during the compression process information is lost, the results should have higher uncertainty in general. This is almost everywhere the case, however, there are some parts, which report a better significance than the reconstruction without compression. This either implies an inaccurateness of BDC or that BDC can partly compensate the approximation MGVI brings into the inference by providing it with measurement parameters that are better formatted for its operation.

Let us discuss in more detail how such a high loss in information still reproduces reasonable results. The information loss is equivalent to a widening of the posterior distribution. Its quantitative value in terms of does not take into account on which scales the information is mainly lost. As can be observed, a substantial fraction of the measurement information constrains small scales. Losing this part does not make such a large difference to the human eye, in particular as the small scale structures are of smaller amplitude, but the information loss is measured on relative changes. Thus a loss of 70% of mainly small scale information is possible without increasing the error budget significantly.

Now we investigate patchwise compression in the same setup. The data in each of the eight measured squares were compressed to data points separately. In total there are as many compressed data points for the patchwise compression as for the joint one. We can use that the response only masks the signal but does not transform it. Thus, we can compress the data with prior information of the corresponding patch only. This way we reduce the dimension of the eigenvalue problem (44) to the size of the patch. Before the reconstruction, the resulting compressed responses are expanded to the full signal space in a sparse form and concatenated as described in Section 3.2. For the reconstruction, the whole signal is inferred altogether. The resulting mean and variance of the inference for this method and their difference to the original ones are shown in the lower part of Figure 4. Both differ mainly at the edges of homogeneous structures from the original posterior mean and variance. Also for the case of patchwise compressed data, the variance at some points becomes smaller than for the original inference. Since patchwise BDC does not use knowledge about correlations between the patches for the compression, it compresses less optimal than joint BDC, and thus mostly has a higher deviation of the mean and higher uncertainty in the reconstruction. Like in the case of compressed data, we improve the estimation of γ by computing 4096 eigenpairs, that is, 512 eigenpairs per patch. It turns out that for every patch on average 34.8–35.4% of information is kept about the signal inside this patch when using the 10 most informative eigenpairs for the compressed data points, where all patches but two contain 15–25%. Counting from left to right and top to bottom, the data compressed from the second and fifth patch with very homogeneous signal contain more than 75% of the information. When comparing the γ values of each patch, one needs to consider that the patches are compressed individually. Therefore the information of one and another patch might be partly redundant and their individual γs can not just be added or averaged in order to get the joint information content.

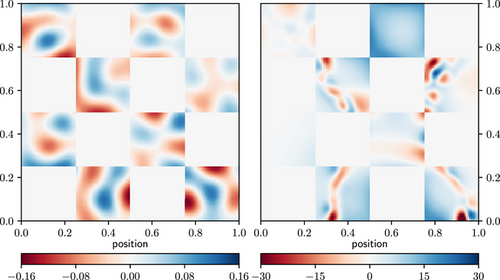

After having computed the compressed measurement parameters, we can also directly get the compressed posterior mean and covariance from Equations (5) and (6). The inference from the compressed data then reduces to a linear Wiener filter problem. The resulting mean and variance are plotted in Figure 5 together with their difference to the mean and variance using one more MGVI inference. Both deviate at the order of 10−2 which is one magnitude lower than the uncertainty. This illustrates that the compression helped to linearize the inference problem around the posterior mean.

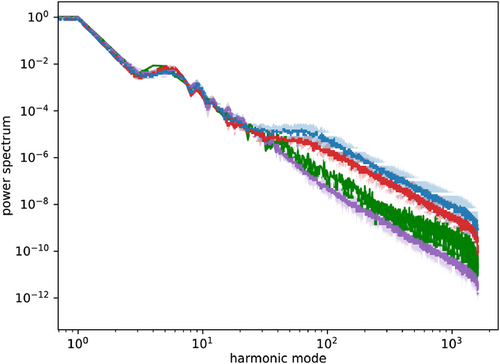

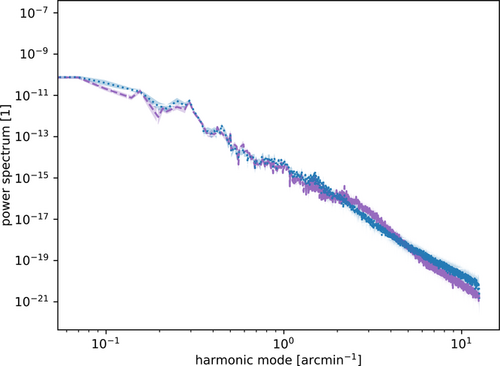

Finally the results of the methods can be compared by looking at the inferred power spectra of the underlying Gaussian process in Figure 6. All of the reconstructions recover the power spectrum well for high harmonic modes up to the order of 101. For higher modes, the samples of the originally inferred power spectrum tend to lie below the ground truth. In contrast, the reconstructions of the two compression methods overestimate the power spectrum for higher modes. It is not completely clear why this is the case. For higher modes, the signal to noise ratio is low. In those regimes it is more difficult to reconstruct the power spectra. This could be a reason for the deviation on high harmonic scales. In addition variational inference methods tend to underestimate uncertainties.[22] Since we use MGVI for the reconstruction for all methods, this could cause the inferred power spectra not to coinside within their uncertainties.

It is interesting to have a closer look on the back projection of the compressed data, that is, as well as on the projection of the eigenvectors building the compressed responses onto the space. The back projection of the jointly compressed data before the first inference, that is, having looked at the original data only once, is shown in Figure 7 on the left. In contrast to the back projection after the minimization process in the right plot, the data look quite uninformative, covering more or less uniformly the whole probed signal domain. After the inference, when the reference point around which the linearization is made has changed, the jointly compressed data addresses mainly regions of rapid changes in the signal. Especially the contours at the edges are saved in the jointly compressed data. This is even clearer visible in the projection of the eigenvectors building the compressed response according to (19) in Figure 8. The first two eigenvectors capture the frame of the large structure. The third one mainly looks at the upper left corner, where also some structure occurs, though it is less distinct than the large one. None of the eigenvectors covers any structure in the second and fifth patches, which was also the patches with largest γ, that is, least information loss due to the compression. Since the structure of the ground truth there is rather uniform, it does not contain much information but the amplitude of the field, which can easily be compressed to few data points.

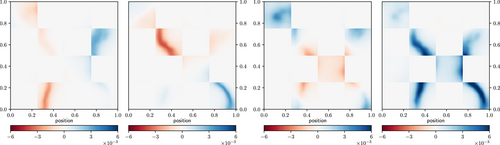

Figure 9 shows the back projection of the patchwise compressed data before and after the inference. Here, the change of the basis functions becomes apparent as well.

Table 1 shows the computation time of the different compression methods and reconstructions. As described above, it has been measured for , as well as . In case of the original inference, in total inference steps were performed such that in there is an identical number of inference steps for every method. The time has been measured for the inference only and for the total run of separation of the data into patches, ncomp compressions with nrep inferences after each compression. The average of all inferences is given in the first line of Table 1. The time for the total runs is given in the second line. It has been measured on a single node of the FREYA computation facility of the Max Planck Computing & Data Facility restricted to 42 GB RAM. In all categories, the inference with patchwise compressed data is the fastest. In contrast, joint compression takes the longest time. There, one can clearly see the advantage of patching as discussed in Section 3.2. This leads to sparse responses which are more affordable in terms of computation time and storage. This is in agreement with the synthetic example discussed in this section. It shows that such sparse representations are highly beneficial.

| Original | Comp | Patchcomp | |

|---|---|---|---|

| Inference time [s] | 317 | 984 | 289 |

| Total run time [s] | 633 | 1972 | 586 |

| No response calls | 686187 | 2913 | 6062 |

| Inference time [s] | 205 | 637 | 200 |

| Total run time [s] | 615 | 1917 | 613 |

| No response calls | 686187 | 4369 | 10776 |

- Also the number of original response calls is stated. The original data has been inferred times.

The response is called, that is, applied, several times during the minimization. In the application here, calling the response is inexpensive. However, there are applications in which the response is expensive. Then one aims to minimize the number of response calls, as this determines the computation time. The number of response calls were counted for . In the inference with the original data, Ro was called 686 187 times. In the process of compressing jointly it was called 4 369 times. During the patchwise compression, the patchwise original response has been called 10 776 times. In the case of patchwise compression, the response only maps between the single patches, that is, it is a factor 16 smaller than the full response. Thus, effectively the full original response has been called only about 674 times in the case of patchwise compression leading to a speed up factor of up to 1018 in case the response calculation is the dominant term. In this application, patchwise compression lead to computation times consistent with the computation time of inferring with original data. Future steps to make BDC more rewarding could be to find representations for the compressed response that are even more feasible.

4.3 Real Data: Radio Interferometry

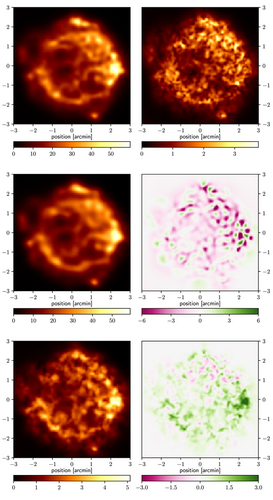

Finally, we apply BDC to radio astronomical data from the supernova remnant Cassiopeia A observed by the GMRT.[23, 24] 200 000 data points from the measurement were selected randomly and noise corrected according to [25]. Using those data, two images were constructed by the RESOLVE algorithm[25, 26] that relies on MGVI, where we make one image with compression and one without compression for comparison.

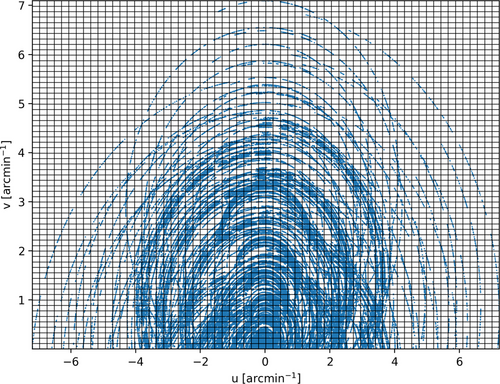

We divide the Fourier plane into 64 × 64 squared patches, as shown in Figure 10. The location of the measurements in the Fourier plane are marked as well. It is apparent that many patches are free of data, some contain some data, and the highest density of data points occurs around the origin of the Fourier plane.

Figure 11 shows the image obtained from the original dataset and from the patchwise compressed dataset. The mean is obtained after three MGVI iteration steps from the original data. Using BDC, the data in each patch was compressed ideally under prior information.

The resulting data, noise covariance, and responses were concatenated and used for inference in MGVI with three inference steps. The received posterior distribution was used to compress the separated original data once more with updated knowledge, inferred with three minimization steps. Doing this one more time resulted in the reconstruction shown here. The bottom right plot in Figure 11 shows that the uncertainty of the reconstruction from patchwise compressed data is mostly higher than the uncertainty from the original reconstruction. This is expected due to the information loss of the compression. The data points in every patch have been compressed to maximally data points per patch. The minimal fraction of information stored in the compressed measurement parameters has been set to 0.99. In total, this leads to 73 239 compressed data points, which is a reduction of the data size by a factor 2.73. Due to the large computation time, it is infeasible to determine more eigenpairs. Thus, estimates of the amount of information γ contained in the compressed data points are very vast. On average, with Equations (57) and (58), the estimated range of γ is 0.35–94.8% for every patch with a standard deviation of 0.39% and 17.3%, respectively. The dispersion of those values for different patches is high, caused by the varying distribution of data points per patch.

The corresponding power spectra of the underlying Gaussian processes are shown in Figure 12. Their slopes qualitatively agree, but partly deviate outside their uncertainties. As discussed for the synthetic application in Section 4.2, one needs to take into account that MGVI tends to underestimate the uncertainties. In general, the power spectrum inferred from the original data is more distinct in its slope than the one from compressed data in terms of deviations from a straightly falling power spectrum. The compressed spectrum is flatter especially in the higher harmonic regime. This could be caused by a lower signal to noise ratio in this regime. However, it is not completely clear, why BDC shows this behavior.

In addition to Euclidean gridded patches, we separated the data into equiradial and equiangular patches. This leads to a more even distribution of data points inside the patches, since this way patches become larger further outside, where there are less data points. However, for this patch pattern small structures in the reconstruction get lost. The reason for this is that data points in the Fourier plane far away from the origin store information about the small scale image structures. Compressing them together therefore can be expected to lead to a loss of information on small scale structures. Thus, two criteria need to be considered for the choice of the patch geometry: from a computational perspective, patches with few data points are favored. From information theoretical perspective, data points carrying similar information shall be compressed together.

This application shows that BDC is able to operate on real world datasets in the framework of radioastronomical image reconstruction. The run time still can be improved, though. Since the compression for different patches works independently, this can perfectly be parallelized. Another potential area for improvement would be the choice of the separation of the patches. One needs to aim for a patch geometry, where the original data points are evenly distributed among the patches, while also highly correlated data points are assigned to the same patch.

5 Conclusion

A generic Bayesian data compression algorithm has been derived, which compresses data in an optimal way in the sense that as much information as possible about a signal for which the correlation structure is assumed to be known a-priori.

Our derivation is based on the Kullback–Leiber divergence. It reproduces the results of ref. [13] that optimizing the information loss function leads to a generalized eigenvalue problem. We generalized the method to the nonlinear case with the help of metric Gaussian variational inference.[17] Also, we divided the dataset into patches to limit the computational resources needed for the compression. This leads to sparseness of the response, allowing to apply the method in high-dimensional settings as well.

The method has been successfully applied to synthetic and real data problems. In an illustrative 1D synthetic linear scenario, 40 data points could be compressed to four data points with less than 20% loss of information. In a more complex, 2D and nonlinear synthetic measurement scenario, 8192 measurements could be reduced to 80 data points with 70% loss of information that still capture the essential structures of the signal. Dividing the data into patches resulted in a huge reduction of the required computation time for the compression itself, confirming the expected advantage.

Finally, the method has been applied to real astrophysical data. The radio image of a supernova remnant has been reconstructed qualitatively with a data reduction by a factor of almost 3.

For such scientific applications of BDC, one needs to choose the variable of interest, the signal s, such that s represents the scientific interest best. Then BDC can optimally adjust which information need to be stored in the compressed data optimally. In the chosen example, all degrees of freedom of the field had to be stored. In principle, only certain (Fourier) scales or certain areas of a field could be defined to be the quantity of interest. This allows BDC to remove information on the other, irrelevant scales or areas.

BDC compresses optimally with respect to the knowledge about this quantity. It is a lossy compression method, that is, the compressed data contain less information about the quantity of interest—information that is relevant to answer the scientific question—than the original data. This information loss consistently leads to higher uncertainty in the linear case where the solution is exactly known and mostly higher uncertainty in the nonlinear applications where only approximate solutions can be found. To quantify the loss of information we have introduced the fraction γ of information about the quantity of interest s stored in the compressed data compared to the information in the full data. This fraction can be used to reduce the dimension of the compressed data such that they still contain the relevant amount of information. In case the compression is too lossy, one needs to adjust the number of computationally determined eigenpairs that build the compressed measurement parameters or increase the limit of information that should be contained in the compressed data.

Still, the current BDC algorithm requires too much resources in terms of storage needed for the responses and computation time. In order to improve this even further, the choice of the data patches can still be investigated and optimized such that data points storing similar information are assigned to the same patch. Up to now, data have been patched which are neighboring in real or Fourier space. However also data points could be informationally connected non-locally. One would need to look at the Kullback–Leibler divergence again to find those connections and group the data accordingly.

Another problem is the computational cost of the response. In the course of our derivation, we represented it in a vector decomposition. One could demand further restrictions to those vectors such as a certain parametrization or find other representations to find a computationally ideal basis for the responses. This could lead to a higher reduction factor in applications such as the astrophysical application in Section 4.3.

As a final step BDC needs to prove its advantage in real applications. A promising application could be online compression such as ref. [19] suggest. In a scenario, where data come in blockwise, those blocks can be treated as the patches and compressed separately. This is for example applicable in any experiment running over time. There, time periods imply the measurement blocks. This way the original data never needs to be stored at all, but compressed immediately and optimally under the current knowledge.

Acknowledgements

The authors thank their reviewers for extensive and constructive feedback that helped to improve the paper a lot. T.E. and J.H.-K. acknowledge financial support by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) under Germany's Excellence Strategy – EXC-2094 – 390783311. P.A. acknowledges financial support by the German Federal Ministry of Education and Research (BMBF) under grant 05A17PB1 (Verbundprojekt D-MeerKAT). The authors thank Philipp Frank for help with the coding.

Conflict of Interest

The authors declare no conflict of interest.

Appendix A: Optimality of BDC for Zero Posterior Mean

In this section of the appendix, we prove that for zero original posterior mean the compression is optimal, if is the eigenvector to the smallest eigenvalue of . Optimal means that the information gain in (34) is maximal with respect to .

Proof.For the proof, the dependence in (34) needs to be shown explicitly:

Let be the eigenpairs of . The eigenvectors form a complete orthonormal basis. Then can be written as with , such that is normalized, and

We will use that is a convex function. This can be easily verified by calculating the first derivative

Note, we used here that has its minimum at and that the eigenvalues of are between 0 and 1, that is, the smaller eigenvalues maximize . This way we got an upper bound reached for , where v0 is the eigenvector corresponding to the smallest eigenvalue .

Doing data compression by just considering the smallest eigenvalues of Do will be found to be the right choice when considering as a loss function in the section A. This gives the expected loss for the expected mean of under .

Appendix B: 1D Wiener Filter Data Compression

In Section 4.1, we applied our data compression method to synthetic data in the context of the generalized Wiener filter with a linear measurement equation. In this section, we are going to investigate the shape of the eigenfunctions corresponding to the eigenproblem of Equation (44) in this setting.

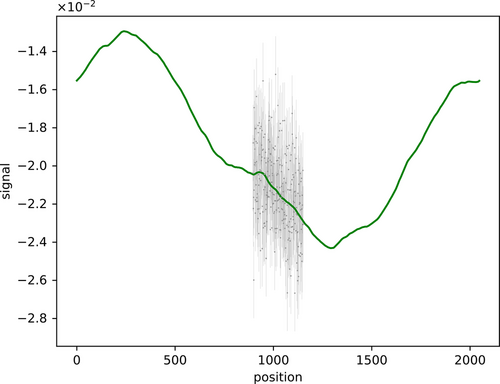

Therefore consider an easy set up without varying noise nor a complex mask. To ensure a certain definition, we choose the signal space to be a 1D regular grid with 2048 lattice points in one dimension. The synthetic signal and corresponding synthetic data are drawn from the prior specified in Section 4.1. The data is masked, such that only the central 256 pixels are measured. Those data are then compressed to four data points, from which the signal is inferred in a last step.

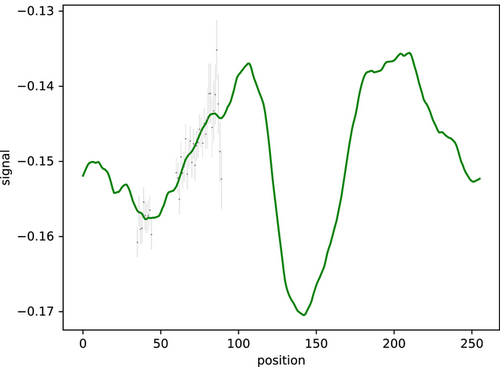

The synthetic signal and data are computed as before. However, the noise standard deviation now is constantly and the response is set to be a mask measuring pixels 896–1152 leading to a transparent window of 256 pixels in the center of the grid. The measurement setup with signal mean, synthetic signal and data can be seen in Figure B1.

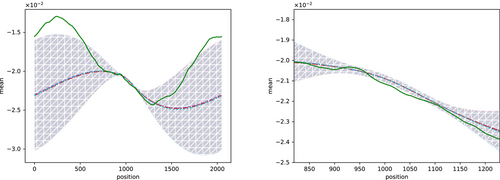

The consequential mean and uncertainty for the inference with the original data and the ones with the compressed data are plotted together with the ground truth in Figure B2. The original data has been compressed from 256 to 4 data points.

Now let us have a closer look at the eigenvectors plotted in Figure B3, which correspond to a back projection with Rc of the corresponding single data point being one and all the others being zero. These functions remind of Chebyshev polynomials of the first kind. The Chebyshev polynomials were fitted to the eigenvectors minimizing the mean squared error and are plotted in the same figure as the eigenvectors. One can clearly see their similarity. The lower order polynomials fit the best, while higher order polynomials deviate especially at the edges. This hints at BDC transferring the compression problem to a polynomial fit. Then the compressed data points are the amplitudes of the polynomials while the compressed response stores their individual shapes.

An analytical analysis is done in the next section.