Training for Failure: A Simulation Program for Emergency Medicine Residents to Improve Communication Skills in Service Recovery

Abstract

Objectives

Service failures such as long waits, testing delays, and medical errors are daily occurrences in every emergency department (ED). Service recovery refers to the immediate response of an organization or individual to resolve these failures. Effective service recovery can improve the experience of both the patient and the physician. This study investigated a simulation-based program to improve service recovery skills in postgraduate year 1 emergency medicine (PGY-1 EM) residents.

Methods

Eighteen PGY-1 EM residents participated in six cases that simulated common ED service failures. The patient instructors (PIs) participating in each case and two independent emergency medicine (EM) faculty observers used the modified Master Interview Rating Scale to assess the communication skills of each resident in three simulation cases before and three simulation cases after a service recovery debriefing. For each resident, the mean scores of the first three cases and those of the last three cases were termed pre- and postintervention scores, respectively. The means and standard deviations of the pre- and postintervention scores were calculated by the type of rater and compared using paired t-tests. Additionally, the mean scores of each case were summarized. In the framework of the linear mixed-effects model, the variance in scores from the PIs and faculty observers was decomposed into variance contributed by PIs/cases, the program effect on individual residents, and the unexplained variance. In reliability analyses, the intraclass correlation coefficient between rater types and the 95% confidence interval were reported before and after the intervention.

Results

When rated by the PIs, the pre- and postintervention scores showed no difference (p = 0.852). In contrast, when scored by the faculty observers, the postintervention score was significantly improved compared to the preintervention score (p < 0.001). In addition, for the faculty observers, the program effect was a significant contributor to the variation in scores. Low intraclass correlation was observed between rater groups.

Conclusions

This innovative simulation-based program was effective at teaching service recovery communication skills to residents as evaluated by EM faculty, but not PIs. This study supports further exploration into programs to teach and evaluate service recovery communication skills in EM residents.

Long waits, unexpected delays, breakdowns in communication, and medical errors are daily occurrences in any emergency department (ED).1 These potentially distressing events for patients and staff are service failures, in which the provided service falls short of meeting the patients’ expectations.2 The unpredictable nature of the ED makes it prone to these events. Service recovery is the immediate action taken in response to a service failure to bring the patient back to a state of satisfaction.1

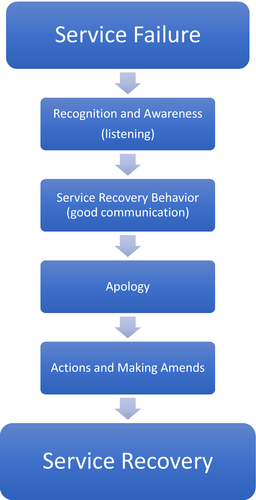

The concept of service recovery in the ED is derived from other service industries such as hospitality and food services. In these fields, there is often a standard approach to resolving service failures quickly to improve the experience of their customers and staff. The best practices in service recovery include active listening, a blameless apology, acknowledgment of the issue, and making amends (Figure 1). Frontline employees play a critical role in service recovery, as immediate resolution of problems is an effective approach for improving customer satisfaction.2

According to the Agency for Healthcare Research and Quality, resolving service failures for patients has a strong impact on their satisfaction. Satisfied patients are more cooperative, compliant, and likely to continue using the same health care services for future needs.3 Patients with resolved service failures are more likely to be satisfied with their visit and tell others. In addition, successful service recovery has positive impacts on the providers, including lower malpractice risks, less stress at work, and potentially increased wellness.1 Studies in other service industries have shown that frontline staff members who perform excellent service recovery experience higher rates of job satisfaction and lower turnover rates.4

Overall, the health care industry has been slow to formally adopt and teach service recovery practices. When the inevitable conflicts arise in health care, professionals and staff may respond variably, which may lead to more stressful and less productive interactions. A standard approach to service recovery can lead to less emotional investment and stress.4 When the unexpected event becomes the routine to resolve, both physicians and patients may have improved experiences.

Emergency medicine (EM) physicians are taught how to manage an enormous breadth of medical issues during residency. However, few residents receive formal education in specifically managing the numerous service lapses that occur in the ED on a daily basis. A 2016 survey revealed that the majority of EM residencies provide little to no organized curriculum regarding the patient experience. The authors suggested that further work is needed to improve patient experience education for EM residents.5

Case-based simulation, an effective approach to educating adult learners in the health care setting, is an interactive educational modality that avoids risk to patients and learners.6 Simulation is one of the modalities recommended to implement service recovery programs in health care.1 The ideal service recovery behaviors provide a framework for simulation case development, educational instruction, debriefing, and performance assessment. Herein, this study presents the design and evaluation of a simulation-based service recovery program implemented at an EM residency program and the data analyses of its effectiveness.

Hypothesis

A simulation-based education program will improve the communication and service recovery skills of postgraduate year 1 (PGY-1) EM residents over the course of the program, as assessed by EM faculty and patient instructors (PIs).

Methods

Study Design

This study used a prospective repeated-measures design. The institutional review board at the sponsoring institution reviewed the project and determined that it was not human subjects research.

Funding

This study received no external funding. Programmatic support was provided by the sponsoring institution's department of graduate medical education.

Study Setting and Population

The program was held at an Accreditation Council for Graduate Medical Education–accredited EM residency program in the Northeastern United States. It is a 3-year program with a total of 54 residents. Eighteen PGY-1 EM residents participated in the study. The program was held at an academic medical center clinical skills center. The PIs are employed by the academic medical center. Medical school and EM residency faculty developed and implemented the program.

Study Protocol

Program development was guided by the six-step approach to curriculum development described by Thomas et al.7 A literature search on simulation programming for service recovery education was conducted to identify preexisting curricular content. The search confirmed numerous resources on service recovery in health care1, 3, 8-12 and patient experience programs,13-18 but no studies describing service recovery curricular programming. A targeted needs assessment was then performed. A Likert scale survey around service recovery current practices, perceived proficiencies, attitudes, and self-efficacy was distributed to the PGY-2 and PGY-3 residents at the sponsoring EM program.

Cases were developed and edited by simulation and EM residency faculty to represent situations that required service recovery behavior to resolve a service failure. The five faculty members were selected by the study group as specific content experts in simulation, EM education, and administration alongside experts in clinical skills programming. The cases were based on real-life, common cases encountered in the ED (Table 1).

| Case | Summary |
|---|---|
| Case 1: Pediatric AMA | Pediatric patient was admitted with fever. There was a lapse in communication and the patient's mother was never updated. She is upset and wants to leave against medical advice (AMA). |
| Case 2: Forgotten CT scan | An elderly patient with dementia was in the ED for a fall workup. An error was made and the CT scan of the head was not ordered. The family member of the patient is upset about the delay of testing. |
| Case 3: Insulted patient | A patient overheard staff speaking about him negatively. He is upset and would like to leave against medical advice (AMA). |
| Case 4: Missed fracture | A patient is upset when called back to the ED for a fractured foot that was missed the night before. |
| Case 5: Rude consultant | A patient is treated rudely by a consultant in the ED and is upset. |
| Case 6: Inadequate pain management | A patient with a kidney stone waited many hours for pain treatment and is upset. |
| Case 7: Delay of care* | A patient has waited hours for an X-ray and is upset. |

- *Backup/alternative case, not used in session.

The cases were presented and reviewed by the PIs and case development experts to confirm feasibility and case performance standardization. A training and feedback session was held to practice the cases, review the objectives, and debrief the content of the session. The format of this session was adapted from a previous report describing the standardization of patient training for clinical performance.19 Suggestions from the PIs and faculty were taken into account, and the cases were improved to provide more realism. During case development, PIs were encouraged to edit minor details of the case and create patient characters for greater engagement during the session. Disagreements regarding changes were resolved through majority consensus. Each PI was assigned the same case to participate in and evaluate through the entire program.

Eighteen PGY-1 EM residents participated in the program during their first block of residency, which is dedicated to orientation. PGY-1 residents were selected for the program under the assumption that they would have very little training in service recovery at the start of residency. Prior to the session, residents received a prebriefing, which included a psychological safety discussion and a short description of the purpose of the session without instruction on how to handle the scenarios.

The residents participated in a total of six cases. After each 7-minute case, they received a 5-minute immediate standardized feedback session from the PIs that was focused specifically on the Master Interview Rating Scale (MIRS) assessment elements. After completing three simulated cases, the residents participated in a 45-minute debriefing session that was learner led with faculty guidance through an evidence-based approach to service recovery. Faculty ensured that all the debriefings emphasized this evidence-based approach (Table 2). The residents then participated in three additional cases. The residents were randomized to the cases they experienced before (preintervention) and after (postintervention) the debriefing session.

| Step | Focus |
|---|---|
| Step 1 | Recognition and awareness |
| Step 2 | Service recovery behavior |
| Step 3 | Apology |
| Step 4 | Actions and making amends |
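The split of the six cases into three preintervention and three postintervention cases per resident can be sketched as below. This is only an illustration: the study does not describe how the randomization was carried out, so the function name `assign_cases`, the resident IDs, and the use of an independent random permutation per resident are all assumptions. A real session would also have to respect station scheduling, since each PI stayed with one case.

```python
import random

CASES = [1, 2, 3, 4, 5, 6]  # the six cases used in the session (Table 1)

def assign_cases(resident_ids, seed=0):
    """Randomly split the six cases into 3 pre- and 3 postintervention cases per resident.

    Hypothetical helper: the study's actual randomization procedure is not described.
    """
    rng = random.Random(seed)  # fixed seed only so the sketch is reproducible
    schedule = {}
    for rid in resident_ids:
        order = rng.sample(CASES, k=len(CASES))  # random permutation of the cases
        schedule[rid] = {"pre": sorted(order[:3]), "post": sorted(order[3:])}
    return schedule

# 18 hypothetical resident IDs, matching the cohort size in the study
schedule = assign_cases(range(1, 19))
```

Each resident ends up with two disjoint sets of three cases that together cover all six cases.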

Measurements

The MIRS, a revised version of the well-established Arizona Clinical Interview Rating Scale (ACIR),20-22 was selected as an evaluation tool for resident performance. The ACIR underwent substantial development and evaluation showing good internal consistency and validity.20 The MIRS is widely used for evaluating training programs that teach communication skills to providing physicians.23 The MIRS addresses general communication aspects. Each item is rated on a scale of 1 (worst) to 5 (best) with descriptive anchors. There are clear and detailed descriptions of anchors 1, 3, and 5. This tool has been used successfully in several prior studies to assess communication skills.24, 25 The MIRS was selected as an assessment tool for several reasons. Primarily, with very little modification, it encompasses many of the communication techniques necessary in the service recovery framework. Additionally, it is used extensively by the sponsoring institution's school of medicine with historically high interobserver agreement data and internal consistency.26, 27

As the MIRS was developed to assess medical student communication in a more comprehensive manner, not all items of the MIRS were applicable to these service recovery cases. The applicable items were identified and included in the assessment tool as determined by the content experts, clinical skills assessment leaders, and PIs. Because the service recovery framework also includes elements not directly assessed in the MIRS, two additional similarly scaled items were added to specifically address the blameless apology and making amends. Anchors were developed for these two items (Table 3). No inter-rater analysis was done on these two items prior to this study.

| Category | 5 | 4 | 3 | 2 | 1 |
|---|---|---|---|---|---|
| Apology* | During the interview, the interviewer clearly apologizes using empathy in language. The interviewer need not take blame but conveys an apology. | | The interviewer conveys empathy and apologetic tone but does not say they are sorry. | | The interviewer offers no apology, empathy, or acknowledgment of the experience of the patient during the case. |
| Reassurance and making amends* | During the interview, the interviewer clearly and specifically reassures the patient that they will address the situation and make amends for the problem. | | The interviewer may offer some reassurance or amends, but does not do both. The interviewer is vague with a plan for making amends. | | The interviewer provides no reassurance to the patient about the resolution of the issue and does not offer to make amends. |
| Eliciting the narrative thread or the “patient's story” | The interviewer encourages and lets the patient talk about their problem. The interviewer does not stop the patient or introduce new information. | | The interviewer begins to let the patient talk about their problem but either interrupts with focused questions or introduces new information into the conversation. | | The interviewer fails to let the patient talk about their problem. or The interviewer sets the pace with Q&A style, not conversation. |
| Lack of jargon | The interviewer asks questions and provides information in language which is easily understood. Content is free of difficult medical terms and jargon. Words are immediately defined for the patient. Language is used that is appropriate to the patient's level of education. | | The interviewer occasionally uses medical jargon during the interview, failing to define the medical terms for the patient unless specifically requested to do so by the patient. | | The interviewer uses difficult medical terms and jargon throughout the interview. |
| Verbal facilitation skills | The interviewer uses facilitation skills through the interview. Verbal encouragement, use of short statements, and echoing are used regularly when appropriate. The interviewer provides the patient with intermittent verbal encouragement. | | The interviewer uses some facilitative skills but not consistently or at inappropriate times. Verbal encouragement could be used more effectively. | | The interviewer fails to use facilitative skills to encourage the patient to tell his story. |
| Nonverbal facilitation skills | The interviewer puts the patient at ease and facilitates communication by using: Good eye contact; Relaxed, open body language; Appropriate facial expression; Eliminating physical barriers; and Making appropriate physical contact with the patient. | | The interviewer makes some use of facilitative techniques but could be more consistent. One or two techniques are not used effectively. or Some physical barrier may be present. | | The interviewer makes no attempt to put the patient at ease. Body language is negative or closed. or Any annoying mannerism (foot or pencil tapping) intrudes on the interview. Eye contact is not attempted or is uncomfortable. |
| Empathy and acknowledging patient cues | The interviewer uses supportive comments regarding the patient's emotions. The interviewer uses NURS (name, understand, respect, support) or specific techniques for demonstrating empathy. | | The interviewer is neutral, neither overly positive nor negative in demonstrating empathy. | | No empathy is demonstrated. The interviewer uses a negative emphasis or openly criticizes the patient. |
| Closure | At the end of the interview the interviewer clearly specifies the future plans: What the interviewer will do (leave and consult, make referrals); What the patient will do (wait, make diet changes, go to physical therapy); When (the time of the next communication or appointment). | | At the end of the interview, the interviewer partially details the plans for the future. | | At the end of the interview, the interviewer fails to specify the plans for the future, and the patient leaves the interview without a sense of what to expect. There is no closure whatsoever. |

- MIRS = Master Interview Rating Scale.

- *Modification to MIRS.

Faculty observers were uniformly trained on the utilization of the modified MIRS in a session with experts in service recovery and clinical skills assessment. The training included an in-depth review of the MIRS as well as a video depiction of ideal and poor service recovery skills as determined by the experts. No data were collected in this training session. PIs are extensively trained at this program on the use of the MIRS, with regular validation and inter-rater exercises.25, 26 The added service recovery items were reviewed and depicted in the PI training and feedback session described.

Patient instructors evaluated the residents after each case performed using the modified MIRS. Out of a pool of four, two independent faculty observers were randomized to each individual resident case. They watched videotaped sessions and filled out the MIRS evaluation for each one. Both faculty observers and PIs were blinded to the order of the resident cases.

Data Analyses

The mean MIRS scores of the first three cases (preintervention) and the last three cases (postintervention) were recorded for each resident. The pre- and postintervention scores were summarized by mean and standard deviation (SD) for each rater type (PI, faculty observer 1, and faculty observer 2), and the pre-post differences were tested against 0 using paired t-tests. Additionally, the scores of each case were summarized by mean and SD. In the framework of a linear mixed-effects model, the variance in scores from PIs was decomposed into variances contributed by PIs/cases, the program effect on individual residents, and the unexplained variance. The effects of the PI/case and program were further tested by comparing the estimated variances to zero, where a variance of zero implies no effect. Similarly, the variance in scores from faculty observers was decomposed into variances contributed by faculty observers, the program, PIs/cases, and the unexplained variance. Each random effect was tested in the same manner. Finally, the intraclass correlation coefficient (ICC) between rater types and the 95% confidence interval (CI) were calculated before and after the intervention. A p-value < 0.05 was deemed to be statistically significant. All the statistical analyses were performed in R 3.4.3.28
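The paired comparison described above can be illustrated with a short sketch. The study itself ran its analyses in R; the Python below is only an assumed, minimal reconstruction of the paired t statistic, and the helper name `paired_t` and the four score pairs are hypothetical, not study data. The p-value is omitted because it requires the t distribution, which is not in the Python standard library.

```python
import math
import statistics

def paired_t(pre, post):
    """Return (mean difference, t statistic, degrees of freedom) for paired scores."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    diffs = [b - a for a, b in zip(pre, post)]   # post minus pre, per resident
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)            # sample SD of the differences
    if sd_diff == 0:
        raise ValueError("all differences are identical; t is undefined")
    t_stat = mean_diff / (sd_diff / math.sqrt(len(diffs)))
    return mean_diff, t_stat, len(diffs) - 1

# Hypothetical per-resident mean scores (illustration only, not study data)
pre = [30.0, 31.0, 29.0, 32.0]
post = [36.0, 36.0, 37.0, 37.0]
mean_diff, t_stat, df = paired_t(pre, post)
```

Testing the differences against 0, as the analysis does, means each resident serves as their own control, so between-resident variation does not inflate the standard error.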

Results

Needs Assessment Survey

The targeted needs assessment survey was distributed to 36 PGY-2 and -3 residents in the program and completed by 20, with a 55% response rate; 90% (18) of the respondents indicated that interacting with angry and upset patients causes them stress at work; 85% (17) of the respondents agreed that “learning a standard approach to handling an angry or upset patient or family member would be beneficial to their practice.”

Simulation Program

The mean pre- and postintervention scores as scored by PIs showed no significant difference (p = 0.852; Table 4). In contrast, the mean postintervention score was significantly improved compared to the preintervention score when assessed by faculty observers (p < 0.001; Table 4).

| Rater Type | Preintervention Score | Postintervention Score | p-value |
|---|---|---|---|
| PI | 36.48 ± 2.02 | 36.59 ± 1.79 | 0.852 |
| Faculty observer 1 | 30.94 ± 3.79 | 36.48 ± 2.00 | <0.001 |
| Faculty observer 2 | 29.83 ± 3.12 | 37.07 ± 1.70 | <0.001 |

- PI = patient instructor.

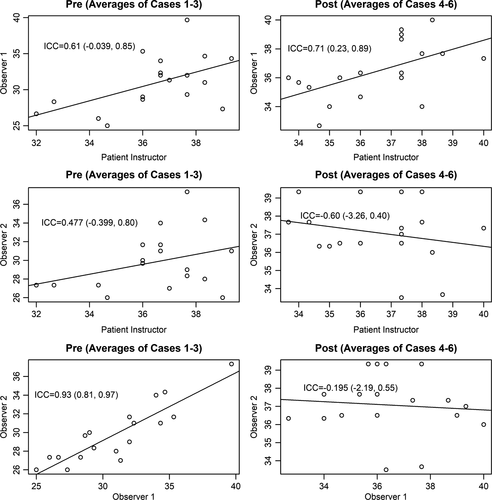

The ICC between rater types and the 95% CI are shown in Figure 2, which presents scatter plots of pre- or postintervention scores between rater types. The ICC was high between faculty observers 1 and 2 before the intervention (ICC = 0.93, 95% CI = 0.81 to 0.97) but was low postintervention (ICC = 0.19, 95% CI = –2.19 to 0.55; Figure 2).
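To make the behavior of the ICC concrete, the sketch below computes a one-way random-effects, single-rater ICC for two raters. The paper does not state which ICC form was used, so this choice, the function name `icc_oneway`, and all scores are assumptions for illustration. The two hypothetical data sets have identical rater disagreement (one point per resident) but different spreads of scores across residents.

```python
def icc_oneway(ratings):
    """One-way random-effects, single-rater ICC.

    `ratings` is a list of per-subject lists, one score per rater.
    This ICC form is an assumption; the study does not specify its variant.
    """
    n = len(ratings)
    k = len(ratings[0])
    grand = sum(sum(row) for row in ratings) / (n * k)
    means = [sum(row) / k for row in ratings]
    # Between-subject and within-subject mean squares
    msb = k * sum((m - grand) ** 2 for m in means) / (n - 1)
    msw = sum((x - m) ** 2 for row, m in zip(ratings, means) for x in row) / (n * (k - 1))
    return (msb - msw) / (msb + (k - 1) * msw)

# Hypothetical scores: (rater A, rater B) per resident, always 1 point apart
wide = [(25, 26), (30, 29), (35, 36), (40, 39)]    # residents differ a lot
narrow = [(36, 37), (37, 36), (37, 38), (38, 37)]  # residents cluster together

icc_wide = icc_oneway(wide)
icc_narrow = icc_oneway(narrow)
```

With these numbers the wide-spread set yields an ICC near 0.99 and the narrow set about 0.14, showing that a compressed score range can lower the ICC even when the raters disagree by the same amount.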

The variation of scores by PIs was small and significantly attributed to PIs/cases. The program effect showed no statistical significance. PIs/cases and the program together explained 43% of the variance (Table 5). In contrast, the variance of scores by faculty observers was not due to the PIs/cases and faculty observers, but was significantly attributed to the program, which explained 60% of the variance (p < 0.001). A total of 36% of the variance was unexplained due to factors that were not included in the model (Table 6). Subjective evaluations by the 18 residents were overwhelmingly positive, with 90% rating the program as very good or excellent; 100% indicated that it would change their practice.

| Source | Variance | SD | % Variance | p-value |
|---|---|---|---|---|
| Program effect for individual residents | 1.10 | 1.05 | 13 | 0.07 |
| PI/case | 2.58 | 1.61 | 30 | <0.001 |
| Residual (unexplained) | 4.84 | 2.20 | 57 | |

- PI = patient instructor.

| Source | Variance | SD | % Variance | p-value |
|---|---|---|---|---|
| Program effect for individual residents | 15.12 | 3.89 | 60 | <0.001 |
| PI/case | 0.53 | 0.73 | 2 | 0.11 |
| Faculty observer | 0.53 | 0.73 | 2 | 0.07 |
| Residual (unexplained) | 9.09 | 3.02 | 36 | |

- PI = patient instructor.
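The percentage columns in the two variance tables follow from dividing each variance component by the total variance. The short check below reproduces them from the reported variances; the function and dictionary names are illustrative, and the mixed-effects model that produced the components is not refit here.

```python
def percent_variance(components):
    """Express each variance component as a percentage of the total variance."""
    total = sum(components.values())
    return {name: 100.0 * value / total for name, value in components.items()}

# Variance components as reported for PI scores
pi_components = {"program": 1.10, "pi_case": 2.58, "residual": 4.84}

# Variance components as reported for faculty observer scores
faculty_components = {
    "program": 15.12,
    "pi_case": 0.53,
    "faculty_observer": 0.53,
    "residual": 9.09,
}

pi_pct = percent_variance(pi_components)
faculty_pct = percent_variance(faculty_components)
```

Rounded to whole numbers, the results match the reported 13%, 30%, and 57% for PI scores and 60%, 2%, 2%, and 36% for faculty observer scores, so the 43% attributed to PIs/cases and the program together is the sum of the first two PI components.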

Discussion

This exploratory study represents the first of its kind to teach and assess service recovery skills in EM physicians. The results of this study suggest that this program was effective at improving service recovery and communication skills over the course of the experience as evaluated by the faculty observers. The variation in scoring for the faculty observers was significantly attributable to the program, which is ideal. The ICC for faculty observers was high preintervention, but low postintervention when average scores were higher. The score range became much smaller postintervention, and therefore the same difference between raters had a greater impact on the ICC. Overall, these results are consistent with prior studies, which show the efficacy of simulation-based PI training in improving communication and professionalism performance in residents.24, 29-32

In contrast to the faculty observer scores, the service recovery skills scored by the PIs showed no improvement over the course. The PI scores were very high throughout the sessions and left little room for improvement. Consequently, the program effect was not statistically significant. While the PIs/cases showed statistical significance, the variation overall was small.

Several potential reasons could explain the overall high PI scoring. PIs are trained intensively on the MIRS scale in medical student patient interview education, especially in the preclinical years.26 Interns likely perform at a higher level in these skills after completing medical school, and it is possible that the entering interns exceeded the expectations of the PIs on the MIRS. Comparatively, the faculty observers routinely assess resident-level performance. As such, they may have performed as more stringent assessors due to their experience with this level of learner.

Overall, the variability of ratings in performance assessments is a common, well-described issue with unclear mechanisms.33, 34 Assessors may be influenced by social and environmental factors and context.35, 36 During the PI debriefing after the program, the PIs collectively discussed that they often “felt bad” for the residents since they were yelling loudly or upset with the resident in their role. This program required emotional reactions from the PI to simulate how a patient would behave under a stressful circumstance. It has been described that assessors may be biased by recent experiences.35, 36 The emotional investment may have impacted the objectivity of the PIs in grading immediately following these cases, leading to higher scores overall. There is also evidence to suggest that PIs score residents higher than physician examiners do in objective structured clinical examinations.37

Targeted needs assessment analysis revealed that many residents indicated a desire to learn more about service recovery. However, few formal training opportunities targeting the patient experience are provided in residency education.5 Several studies have suggested that enhanced communication training in residency improves the patient experience and outcomes.17, 38-41 Communication skills may not be innate to the medical trainee and should be taught and practiced.42 This study suggests that simulation-based communication training can be targeted to specific areas that are known to improve patient satisfaction when performed well.

Just as important to improving the patient experience, service recovery training may be integral to the physician experience. The residents in this program indicated that interacting with angry patients caused them stress at work. The health care industry must find ways to improve the EM physician experience, starting in residency training. Burnout is defined by emotional exhaustion, physical fatigue, and cognitive weariness and is associated with feelings of irritability and cynicism.43-45 Burnout is prevalent in EM physicians, with a reported level of above 60%.46 Although the reasons behind the high rate of burnout are complex, they include emotional exhaustion that the job places on physicians.47 Residents need to communicate in highly emotional contexts that contribute to burnout,48, 49 yet often report not being sufficiently trained in those communication skills.50 Prior studies have made associations between lack of self-efficacy in communication and burnout.51 Communication training may lower the stress attributed to communication and improve self-efficacy.52

Evidence from other service industries suggests that training in service recovery skills can specifically improve the experience of the frontline staff.4 It is far easier to deal with an emotionally charged patient interaction if one has the tools to resolve the issue promptly with little emotional exhaustion. Training EM physicians to handle the everyday, inevitable service lapses quickly and effectively may be one element to improving the overall experience of physicians in this high-stress environment.

Additionally, training in service recovery could potentially improve physician performance and reasoning. Difficult patients and emotionally charged interactions may interfere with appropriate reasoning, impair diagnostic accuracy, and increase cognitive errors.53-56 Moreover, practice in exerting control over feelings may provide less susceptibility to the depletion of mental resources that may lead to errors.53, 57-59 It would stand to reason that a training program in service recovery might be beneficial in preparing medical trainees to handle emotionally charged patient interactions.

One must use caution, however, when implementing these types of programs, as there has been a link between additional customer service training and burnout levels in health care workers. Workers may see it as an additional administrative burden.9 The positive subjective feedback seen in this program may indicate that physicians would be receptive to a training program that was directly applicable to their everyday work, especially when the benefits to their well-being are pointed out. More work must be done to explore the role of service and communication training in preparing our residents for the emotional interactions they will have on a daily basis.

Limitations

This study had several limitations. First, the small sample of residents from a single site and a single year of training may limit the generalizability of the results. Second, the time and method of observation differed between the faculty evaluators and the PIs, which may have inadvertently impacted the scores. Other studies have found real-time and video observations may not be interchangeable.60, 61 The PIs scored the residents in the 5 minutes after the case, whereas the faculty observers had as much time as they needed to view the videos and evaluate the performance.

Another limitation is that the MIRS may not be the appropriate scoring tool when teaching the specific skills of service recovery; however, at present no specific tool exists. As this program evolves, more evaluator training, inter-rater reliability analysis and standardization of the observation environment for evaluators will be required.

Conclusion

This innovative simulation-based program was effective at teaching service recovery communication skills to residents as evaluated by emergency medicine faculty but not patient instructors. The faculty evaluations noted improvement in service recovery skills with simulated practice and debriefing. Residents viewed the program very positively. This small pilot program supports further exploration into the use of patient instructor simulation to teach and assess these important communication skills in emergency medicine residents to improve the experience of our patients and our physicians.