Better explaining the benefits why AI? Analyzing the impact of explaining the benefits of AI-supported selection on applicant responses

Abstract

Despite the increasing popularity of AI-supported selection tools, knowledge about the actions that can be taken by organizations to increase AI acceptance is still in its infancy, even though multiple studies point out that applicants react negatively to the implementation of AI-supported selection tools. Therefore, this study investigates ways to alter applicant reactions to AI-supported selection. Using a scenario-based between-subject design with participants from the working population (N = 200), we varied the information provided by the organization about the reasons for using an AI-supported selection process (no additional information vs. written information vs. video information) in comparison to a human selection process. Results show that the use of AI without information and with written information decreased perceived fairness, personableness perception, and increased emotional creepiness. In turn, perceived fairness, personableness perceptions, and emotional creepiness mediated the association between an AI-supported selection process, organizational attractiveness, and the intention to further proceed with the selection process. Moreover, results did not differ for applicants who were provided video explanations of the benefits of AI-supported selection tools and those who participated in an actual human selection process. Important implications for research and practice are discussed.

Practitioner Points

- AI-supported selection tools are increasingly used in HR selection.
- Our findings show that the usage of AI-supported selection tools without explanations decreases the perception of fairness and personableness and increases emotional creepiness.
- However, research focusing on the actions that organizations can take to prevent negative applicant reactions is scarce.
- Video information, in comparison to written information, appears to be beneficial for explaining the advantages underlying an organization's decision to use AI in applicant selection.
- Transparency about the reasons why the organization uses AI in the selection process can reduce negative applicant reactions.

1 INTRODUCTION

To hire high-level applicants, organizations are increasingly applying AI-supported selection tools, such as AI-supported CV screening or AI-supported interviews. These AI-supported selection tools offer the benefits of increased standardization, faster and more efficient hiring, and greater flexibility for applicants and recruiters (Gonzalez et al., 2019; Hickman et al., 2022; Woods et al., 2020).

The selection process is often the first point of contact with the potential employer, and perceptions during the selection process determine the intention to pursue the application process and to finally accept a job offer, as well as the reputation of the organization itself (Harold et al., 2016; Hausknecht et al., 2004; Ryan & Ployhart, 2000). Therefore, maintaining a positive applicant experience is highly relevant during the selection process. Due to the intensified so-called “war for talent,” applicants increasingly select their employers and place considerably high demands and expectations on organizations (N. Anderson, 2003; Black & van Esch, 2020; van Esch et al., 2021). For this reason, organizations should focus their personnel selection processes not only on effectively selecting suitable applicants but also on avoiding negative applicant reactions, because negative reactions can lead to resentment and a premature withdrawal from the applicant pool (N. Anderson, 2003; Ryan & Huth, 2008).

Previous research showed that applicant reactions to AI-supported selection tools are predominantly negative in terms of justice perceptions (e.g., Acikgoz et al., 2020; Köchling et al., 2022; Langer & Landers, 2021; Langer et al., 2020; Newman et al., 2020; Wesche & Sonderegger, 2021). For organizations to take advantage of AI-supported selection tools without discouraging applicants, and to keep them in the selection process, it is paramount to examine the possible actions that organizations can take to improve applicant reactions in personnel selection. For example, Basch and Melchers (2019) showed that emphasizing the advantages of video interviews compared to face-to-face interviews in terms of standardization and flexibility increased fairness perceptions and perceived usability.

Meta-analytical evidence suggests that providing applicants with explanations and justifications about the selection and decision process is a cost-effective way of increasing applicants' fairness perceptions and—to a smaller extent—organizational attractiveness (Truxillo et al., 2009). Recent studies transferred this meta-analytical evidence from the pre-AI era to new AI-based automated systems and found that providing information about the used technology does not increase organizational attractiveness per se (Langer & König, 2018; Langer et al., 2021). Although the provision of information indirectly increases organizational attractiveness through fairness, providing applicants with information directly diminishes organizational attractiveness at the same time (Langer et al., 2018). Similarly, providing additional justifications about the usefulness of an automated process increases applicants' acceptance, although information about the upcoming process does not affect organizational attractiveness (Langer et al., 2021).

Notwithstanding these important insights, the question remains whether explaining the specific benefits of AI-supported selection tools in terms of higher objectivity, higher consistency, or equal opportunities can help to diminish or even prevent negative applicant reactions. In addition, it is unclear whether differences in media richness (i.e., written explanations vs. video explanations) might also influence applicants' acceptance of AI-supported selection tools. To fill this void, this study draws on media richness theory (Daft & Lengel, 1986) and examines whether an organization can alter applicant reactions to AI-supported selection tools by providing written or video explanations. Since current practice is far ahead of research, and scientific scrutiny is necessary (Cheng & Hackett, 2021; Gonzalez et al., 2019), this study provides empirically founded practical implications. From an organizational perspective, it is essential to avoid negative reactions because talented applicants might withdraw from the applicant pool. Therefore, this study helps organizations to apply AI-supported selection tools while simultaneously avoiding negative applicant reactions.

2 THEORY AND HYPOTHESES

2.1 AI in the selection process

Nowadays, organizations increasingly use AI-supported selection tools, such as AI-supported CV screening and/or AI-supported interviews for preselection to better decide whom to invite for an in-person job interview. Advantages of these selection processes are, for example, increased standardization, faster time to hire, reduced cost, and greater flexibility (Gonzalez et al., 2019; van Esch et al., 2019; Woods et al., 2020). However, AI-supported selection tools can lead to unfair treatment in recruitment and selection if the training data set for machine learning contains unbalanced distributions of certain groups or ethnic minorities and if the underlying statistical model is ill-designed (e.g., Köchling et al., 2021). One possible application of AI in selection is AI-supported CV screening, where words and specific criteria are analyzed algorithmically to predict the job-fit of an applicant without human intervention. In addition to the initial CV screening, web-based and noninteractive AI-supported interviews—also known as asynchronous video interviews (e.g., Brenner et al., 2016; Langer et al., 2019) or automated interviews (e.g., Langer et al., 2021)—represent another application that became widespread recently (Lukacik et al., 2022). The interviewees are shown questions, and they have to answer these questions within a given time period via microphone and webcam (Basch et al., 2020, 2021). In the case of an AI-supported interview, the interview is later analyzed with the help of an AI system, which analyzes the verbal and nonverbal behavior of the interviewee by applying various methods, for example, word counts, topic modeling, prosodic information (e.g., pitch intention and pauses), and also facial expressions (e.g., head gestures and smiles) (Naim et al., 2018; Raghavan et al., 2020). 
However, these AI-supported selection tools offer only a minimum of human contact or involvement, which means that the applicant is assessed by an AI technology and not by a human member of the organization after the asynchronous video interview.

2.2 Hypotheses development

Researchers, as well as organizations in the field of personnel selection, have an interest in applicant reactions to the selection process because these reactions can have important organizational consequences, such as attraction to the organization, the willingness to apply and complete the selection process, and finally the acceptance of a job offer (Harold et al., 2016; Hausknecht et al., 2004; Ryan & Ployhart, 2000). We formulate hypotheses regarding the differences between a human selection process and an AI-supported process. Further, we consider the differences between no additional information on why an AI-supported process is used, written information, and information in the form of a video.

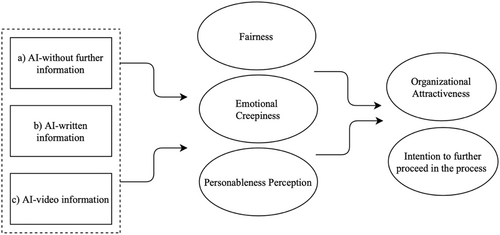

To investigate applicants' reactions when using AI-supported selection tools and to examine actions to prevent applicants' negative reactions, we draw upon organizational justice theory (e.g., Colquitt et al., 2001; Cropanzano et al., 2015; Gilliland, 1993). Organizational justice theory provides a theoretical basis for hypothesizing applicants' reactions to the usage of AI-supported selection tools. Accordingly, applicants examine the justice of an outcome (distributive justice), the adequacy and fairness of a process (procedural justice), and the adequacy of the treatment received by applicants from the deciding person (interactional justice, consisting of interpersonal justice and informational justice) (Colquitt et al., 2001). In this study, we focus on procedural and interactional justice perceptions as important mechanisms for explaining applicants' reactions toward the AI-supported selection tool. We theoretically transfer and empirically examine procedural and interactional justice perceptions of applicants toward the usage of AI in selection. Besides procedural justice (i.e., fairness), we particularly emphasize the importance of interactional justice (i.e., personableness perception and emotional creepiness) from an applicant's perspective to deepen our understanding of applicants' adverse reactions toward the use of AI in selection. We thereby follow suggestions by recent studies to consider personableness perception and creepiness when investigating reactions to technology (Hiemstra et al., 2019; Langer et al., 2019; Lukacik et al., 2022). We additionally applied media richness theory (Daft & Lengel, 1986) to determine whether further explanation by organizations of their implementation of AI-supported selection tools will prevent negative applicant reactions.
Applying these theories, we propose that AI use in selection influences the responses of applicants in terms of organizational attractiveness and intention to proceed further with the application process, mediated by their fairness, emotional creepiness, and personableness perceptions. We also propose that providing applicants with further explanations of why AI-supported selection tools are used will positively influence their reactions. Figure 1 depicts our proposed research model.

2.2.1 Fairness

Several different rules of procedural justice contribute to an individual's assessment of fairness (Cropanzano et al., 2015; Leventhal, 1980), especially during selection processes (Gilliland, 1993; Köchling et al., 2022; Langer, König & Fitili, 2018). Among others, the procedural rules include consistency, bias-suppression, ethicality, and accuracy (Cropanzano et al., 2015; Leventhal, 1980). Based on these rules, Gilliland (1993) summarized the consistency of administration, opportunity to perform, job relatedness, and reconsideration opportunity as formal characteristics of a selection process influencing the overall fairness assessment. Although AI-supported selection tools might be seen as being consistent among all applicants and more accurate by potentially reducing human bias (Gonzalez et al., 2019; Lukacik et al., 2022), it is important to understand how the selection process is subjectively assessed and evaluated by applicants in terms of fairness if a company uses these tools.

Recent studies reveal that AI-supported selection tools are viewed more skeptically than human-based selection processes (e.g., Acikgoz et al., 2020; Köchling et al., 2022; Langer & Landers, 2021; Langer et al., 2020; Newman et al., 2020; Wesche & Sonderegger, 2021). Hiemstra et al. (2019) demonstrated that applicants perceive video applications as less fair than traditional application methods. In the study by Gonzalez et al. (2019), applicants reacted less favorably to an AI than to a human decision maker. Likewise, Newman et al. (2020) showed that applicants perceive the use of algorithms in the selection process as less fair in comparison to a selection decision made by a human. Similarly, Köchling et al. (2022) demonstrated that AI support in later stages of the selection process (i.e., telephone or video interview) was negatively associated with the opportunity to perform, which is a part of applicants' fairness assessment. A possible explanation is that some factors (such as personality, leadership, and attitude) are difficult to quantify, which is why applicants may have difficulty understanding how they, as human beings, can be reduced to “numbers” and may perceive the AI-supported selection process as less fair than a human evaluation (Lee, 2018). Moreover, applicants do not know how the AI-supported selection tool prioritizes certain factors in their CV and during their video interview, which might lead to negative applicant reactions. In addition, applicants are mostly unfamiliar with these new tools, which can also increase perceived unfairness (Gonzalez et al., 2019). Additionally, the decision process is nontransparent for the applicants, making it difficult for them to understand it. Furthermore, applicants may be concerned that the AI-supported tool is not free of bias (Cheng & Hackett, 2021). Thus, we propose that applicants may perceive the AI-supported selection process as less fair than a human evaluation and hypothesize:

H1: Compared to a human evaluation, AI support in selection is negatively associated with fairness perception.

2.2.2 Emotional creepiness

Not only the perception of fairness but also feelings and emotions play an important role in applicants' assessment of AI-supported selection tools (Langer et al., 2018), which partially reflect the interactional justice dimension in terms of honesty, respectfulness, and privacy (Bies & Tyler, 1993; Cropanzano et al., 2015). Emotional creepiness can be defined as a rather unpleasant affective impression triggered by unpredictable people, situations, or technologies (Langer & König, 2018). The feeling of creepiness can occur when applicants feel insecure and uncomfortable in an unknown situation (Langer & König, 2018). Thus, the newness of the AI-supported selection tools can lead to a feeling of creepiness since they are often still unknown to the applicants (Langer & König, 2018; Tene & Polonetsky, 2013). For applicants, it is mostly unpredictable how the AI-supported decision tool assesses the written information for CV screening and the verbal and nonverbal behavior during the AI-supported interviews (Langer & König, 2017; Langer et al., 2019). Langer and König (2017) found that applicants are more skeptical toward asynchronous video interviews compared to video conference interviews in terms of creepiness. In the case of an AI-supported video interview, neither the interview nor the analysis is conducted by a member of the HR department, that is, by a human. This likely leads to a feeling of creepiness. Thus, we hypothesize:

H2: Compared to a human evaluation, AI support in selection is positively associated with emotional creepiness.

2.2.3 Personableness perception

For applicants, interpersonal factors are also important, such as communication or adequate treatment (Gonzalez et al., 2019), which resonate with the interactional justice rules of an appropriate interpersonal treatment (Bies & Tyler, 1993; Cropanzano et al., 2015; Gilliland, 1993). Personableness perception means the perception of empathy and interest in one's person by the other party. Applicants want to determine if they like the organization's culture and the people who work there (Black & van Esch, 2020) and, therefore, they need personal contact to gain an impression of the potential workplace. Since no representatives of the organization are present when AI-supported tools are implemented, the social nature of the interview is affected, and it is hard for applicants to form a picture of and carefully evaluate the organization (van Esch et al., 2019). Moreover, applicants mostly have expectations and presumptions; the lack of an HR member often violates their presumptions, and the impersonal treatment by using AI-supported tools may lead to a lower personableness perception. The absence of social presence and, with it, the impairments of personal contact and interaction during the selection could be interpreted by applicants as a lack of esteem and appreciation. Previous studies showed that applicants perceive algorithms as dehumanizing and demeaning (Lee, 2018). They may feel less valued because representatives of the HR department are neither present during the interview nor do they evaluate applicants' CVs or videotaped interviews. Thus, organizations treat applicants in a less personal manner, and applicants feel less respected (Noble et al., 2021). The fact that the interview is analyzed using AI may even increase the feeling of surveillance.

H3: Compared to a human evaluation, AI support in selection is negatively associated with personableness perception.

2.2.4 Additional explanation

When facing unfamiliar AI-supported selection tools, feelings of unfairness, emotional creepiness, and less personableness may be evoked in new applicants due to the lack of personal contact and the lack of transparency about how the AI tool analyzes applicants' performance. Applicants may perceive the AI-supported selection process as very impersonal.

Providing applicants with additional information about the selection process is related to high transparency (e.g., Gilliland, 1993). Thus, providing additional information can be a helpful way to decrease applicants' adverse reactions (Gilliland, 1993; McCarthy et al., 2017). Truxillo et al. (2009) found that providing explanations affects important applicant reactions (e.g., perceived fairness or organizational attractiveness) and behaviors. Moreover, a study by Basch and Melchers (2019) showed that emphasizing the higher standardization of the procedure when using asynchronous video interviews leads to higher perceived fairness. Consequently, providing information about the benefits of the AI-supported selection process may help to make the process more predictable and decrease uncertainty (Langer et al., 2021). Additionally, applicants may want to understand why the organization uses AI-supported selection tools (Langer et al., 2021). Hence, explaining why an organization uses an AI-supported selection tool and the associated benefits of using this tool could improve the applicants' interpersonal concerns because the organization is investing time to ensure that their applicants feel that they are being respectfully and fairly treated (Gonzalez et al., 2019).

When providing information about the selection process, different forms of media can be used to present the reasons why the organization uses an AI-supported selection process and demonstrate the advantages of this process, namely lean (e.g., written text) and rich (e.g., video) media. Media richness in the form of a written or video explanation might avoid adverse reactions. According to Daft and Lengel's (1986) media richness theory, types of media differ in terms of “information richness.” The aim is to reduce uncertainty. A medium has high information richness when it has a broad range of criteria. Four attributes contribute to information richness, namely, the capacity for immediate feedback, the number of cues and signals that can be transmitted (e.g., body language, body posture, gestures, tone of voice), the level of personalization, and language variety (Daft & Lengel, 1986). The type of communication media influences applicants' perception of and reactions to the media, which in turn affects their attitudes toward the organization (Allen et al., 2004). Media richness theory postulates that media with higher richness can communicate more personal information (Allen et al., 2004).

The text and the video provided to the applicants can be interpreted as an indication of wider organizational attributes. Richer media is more effective than leaner media in conveying ambiguous and personal information, and this can influence attitudes and decision making (Allen et al., 2004). Therefore, it is necessary for organizations to engage with potential new employees to receive positive applicant reactions. The video introduction explaining the reasons for using an AI-supported video interview can indirectly disclose information about the organization's culture, provide a “human touch,” and in some way create a connection between the organization and the applicant (Lukacik et al., 2022). The video can also ensure that there is higher interactivity and perception of presence (Lukacik et al., 2022). In Walker et al.'s (2009) study, applicants were more attracted to an organization when welcomed with a video message instead of a picture and text. Additionally, the video transmits multiple verbal and nonverbal cues (body language and vocal tone), uses natural language, and conveys emotions and feelings. Thus, we hypothesized that higher levels of media richness would improve perceived fairness and personableness perception and decrease emotional creepiness:

H4: Compared to no information about the advantages of the use of AI in selection, an explanation of these advantages is (a) positively related to fairness, (b) negatively related to emotional creepiness, and (c) positively related to personableness perception.

2.2.5 Organizational attractiveness and intention to further proceed in the selection process

The perceived attractiveness of the organization is one of the most important results of applicant reactions to a selection process and reflects the overall assessment (Bauer et al., 2001; Gilliland, 1993; Hausknecht et al., 2004; Smither et al., 1993). Organizational attractiveness can be defined as applicant responses, such as attitudes or insights, that an individual might have regarding the selection process and the potential employer (Ryan & Ployhart, 2000). Equally important is the intention to proceed further with the selection process. If the applicants have positive attitudes toward the organization, then the desire to continue the selection process and the intention to work for this organization increase accordingly (Bauer et al., 2001). The attractiveness of the organization depends, among other things, on how individual and personalized application processes are experienced by applicants (Chapman et al., 2005; Lievens & Highhouse, 2003; Wilhelmy et al., 2019). Thus, when applicants evaluate the selection process positively, consider the selection methods as fair, and have positive affective responses during the process, they also generate positive attitudes toward the organization and are more likely to be attracted to the organization and to continue in the selection process (Bauer et al., 2006; Chapman et al., 2005; Hausknecht et al., 2004). Conversely, applicants who are dissatisfied with the selection process may self-select out of the hiring process (Hausknecht et al., 2004). Organizational attractiveness appears to be driven by fairness perceptions of the use of AI-supported tools (Ötting & Maier, 2018), and these fairness perceptions are predominantly negative (Langer & Landers, 2021).

Thus, we hypothesize that perceived fairness, emotional creepiness, and personableness influence organizational attractiveness and applicant intention to proceed further with the selection process.

H5a: Fairness is positively related to organizational attractiveness and intention to proceed further in the process.

H5b: Emotional creepiness is negatively related to organizational attractiveness and intention to proceed further in the process.

H5c: Personableness perception is positively related to organizational attractiveness and intention to proceed further in the process.

3 METHODOLOGY

3.1 Sample and procedure

Using an online panel of an ISO 20252:2019 certified online sample provider, we recruited a quota-based sample of 200 participants (50 participants per condition), with gender and age approximating the respective distributions in the German general population. Accordingly, 50% (N = 100) of the participants were female and the mean age was 45.11 years (SD = 11.65). All participants were currently employed, with an average work experience of 24 years. On average, participants had previously participated in six selection processes, whereby only 1% (N = 2) of the participants had previous experience with AI in selection.

3.2 Scenarios

To test our hypotheses, we applied a between-subject design using four hypothetical scenarios for our treatment (Aguinis & Bradley, 2014). This methodology was chosen because the procedure provides a relatively high degree of experimental control through the manipulation of constructs and is therefore particularly well suited to empirically approaching an emerging research topic (Robinson & Clore, 2001). First, participants were informed on the landing page of the study about general information specific to the study (e.g., procedure and duration of the study, data protection, and data use). Participants were then randomly assigned to one of the four scenarios and were requested to read a description of the application process of the fictitious organization Marzeo on the organization's website. Entirely fictitious, the organization name and URL (www.marzeo.de) have been developed and used in previous research (Evertz et al., 2021); thus, participants were unable to find any additional information about the organization.

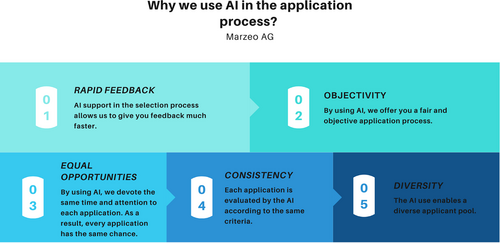

Participants were asked to imagine that they were currently looking for a job and that they had found an interesting job advertisement from an organization in their preferred industry that exactly matched their professional skills and experiences. They were further told that they were planning to apply for the job (without specifying which job it was) and that they were searching for further information about it on the organization's website. After this short introduction, the participants were told that they found information about the selection process on the organization's website. The information provided on the organization's website was manipulated to present different scenarios clarifying (1) who conducted which step of the selection process (human or AI) and (2) whether the organization provided further information about why an AI was used in the selection process (no additional information, written information, video information) (see Appendix for the full scenarios). We did not specify exactly which AI system (e.g., machine learning, deep learning) for CV screening or which system vendor of AI-based interviews was used by the organization. The participants were told that the final step of the selection process was the personal interview and that the decision remained with humans. In particular, in scenario 1, participants were told that members of the HR department evaluate the preselection process and conduct the final interview (preselection, video evaluation, and final interview). In scenario 2, they were informed that the preselection, as well as the video evaluation, was conducted by AI but the final interview remained with members of the HR department. Scenario 3 was the same as scenario 2, but participants were presented with additional written information on the organization's website about why the organization uses AI support for the selection process.
The participants were told that, with the help of an AI-supported selection process, they are given faster feedback, the process is more objective, each applicant has the same chance to proceed in the selection process, the process is more consistent, and the applicant pool is more diverse. Scenario 4 was the same as scenario 3, but participants received the information about why AI is used in the company's hiring process via a short video rather than in written form. In the video, an HR member explains the reasons why AI is used. The video was professionally filmed with an acting pedagogue in a professional context (see the Appendix for a screenshot of the video). The member of the HR department was filmed in an office setting to keep the scenario as realistic as possible.

3.3 Pretest and implementation of quality checks

Several procedures were implemented to check and ensure high-quality data. First, we pretested the wording of our scenarios and questionnaire in a sample of N = 40 participants to examine whether our treatments worked as intended. Minor changes were necessary. Second, we included two attention checks in our questionnaire to enhance data quality. All participants in our sample passed the attention checks (e.g., “For this item, please select ‘strongly agree’”) (Barber et al., 2013; Kung et al., 2018). Third, we included implementation quality checks for our treatments at the end of the questionnaire to ensure that the participants understood the scenarios as intended (Shadish et al., 2002). Specifically, participants were asked to think about the scenario again and indicate whether employees of the HR department or an AI had conducted a certain process during the selection process. All participants in the sample passed the implementation check. Moreover, we asked the participants who had been assigned to a scenario of an AI-supported process if they received enough information about the reasons for the use of AI in selection. Indeed, 84% of scenario 2 participants (who had not received any additional information) reported that they did not receive enough information about the motives of AI use. The vast majority of participants who received information about the reasons for the organization's use of an AI-supported selection process (74% of participants receiving written information in scenario 3 and 82% of participants receiving video information in scenario 4) reported feeling adequately informed about the AI implementation. Consequently, this manipulation also worked well, and the participants who received information felt informed about the motives for the use of AI.

As an additional implementation check, we measured whether our scenarios were realistic to the participants (Maute & Dubés, 1999). We asked respondents to rate on a 7-point Likert scale how realistic the description was to them (1 = very unrealistic to 7 = very realistic) and how well they were able to put themselves into the described situation (i.e., valence; 1 = very bad to 7 = very good). Overall, the results showed sufficient realism (M = 4.47) and that participants were able to put themselves into the situation (M = 4.81). We used one-way analyses of variance (ANOVAs) to compare our four scenarios on these two variables. First, the one-way ANOVA for differences in realism of the scenarios was nonsignificant, F(3, 196) = 2.57; p = 0.056. Second, a one-way ANOVA showed significant differences for participants' ability to put themselves into the described situation, F(3, 196) = 3.28; p = 0.022. A post-hoc Tukey's HSD test for multiple comparisons showed that the mean value for this ability differed significantly between AI with written information (scenario 3; mean = 4.30) and the human condition (scenario 1; mean = 5.34) (p = 0.011, 95% confidence interval [CI] = [−1.90, −0.18]), while all other comparisons were nonsignificant (p > 0.05).
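To make the reported test statistics easier to follow, the minimal Python sketch below shows how a one-way ANOVA F statistic and its degrees of freedom are obtained. With four scenarios and 200 participants, the degrees of freedom are 4 − 1 = 3 and 200 − 4 = 196, which matches the reported F(3, 196). The function name `one_way_anova` and the toy ratings are hypothetical illustrations, not the study's data or analysis code.

```python
from statistics import mean


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of groups of ratings."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    # Between-group sum of squares: group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: ratings around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical toy ratings for three groups (not the study's data).
toy_groups = [[1, 2, 3], [2, 3, 4], [3, 4, 5]]
f_stat, df_b, df_w = one_way_anova(toy_groups)
print(f_stat, df_b, df_w)
```

The p value would then be read from the F distribution with these degrees of freedom, a step omitted here to keep the sketch free of external libraries.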

Beforehand, we approximated the sample size required for our analysis with the power analysis program G*Power (Faul et al., 2009). The sample size was calculated a priori based on a significance level of α = 0.01 and a power level of 1 − β = 0.99, both of which provided a conservative estimate of the necessary sample size. Based on the recommendation by Cohen (1992), we chose a medium effect size index of 0.15. To analyze the groups and the variables, we drew on a global effect MANOVA, which indicated that 160 participants were required for sufficient statistical power. Based on this power analysis, we slightly oversampled and recruited 200 participants (aiming for 50 participants per condition).

3.4 Measures

Except for the treatment variables, all scales were measured with items whose response options ranged from 1 (strongly disagree) to 7 (strongly agree). We randomized the order of the items within each scale to rule out response patterns caused by the sequence of the items. All scales were adopted from previous research to ensure the reliability and validity of our measures.

3.4.1 Treatment variables

Since we used four scenarios, we built three dichotomous variables that reflect our treatments, with the human condition (scenario 1) as the reference category. The first dummy variable is AI-support without additional information (0 = human condition; 1 = AI-support without information; scenario 2). The second dummy variable is AI-support with written explanation of the advantages (0 = human condition; 1 = AI-support with written information; scenario 3). The third dummy variable is AI-support with video explanation of the advantages (0 = human condition; 1 = AI-support with video information; scenario 4).
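The dummy coding described above can be illustrated with a minimal sketch. It assumes exactly 50 participants per scenario (the targeted cell size); the variable and function names are hypothetical and are not taken from the original analysis.

```python
from math import sqrt
from statistics import mean, stdev

# Assumption: exactly 50 participants in each of the four scenarios.
scenarios = [s for s in (1, 2, 3, 4) for _ in range(50)]


def dummy(scenario_id):
    """Return 1 for participants in the given scenario and 0 for everyone else."""
    return [1 if s == scenario_id else 0 for s in scenarios]


ai_no_info = dummy(2)   # AI-support without information
ai_written = dummy(3)   # AI-support with written information
ai_video = dummy(4)     # AI-support with video information


def pearson(x, y):
    """Pearson correlation between two equally long lists of numbers."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


print(mean(ai_no_info), round(stdev(ai_no_info), 2), round(pearson(ai_no_info, ai_written), 2))
# prints: 0.25 0.43 -0.33
```

With equal cell sizes, each dummy has a mean of 0.25 and a standard deviation of about 0.43, and any two dummies correlate at about −0.33, which is in line with the descriptive values reported for the treatment variables in Table 1.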

3.4.2 Fairness

This variable was measured with two items from Warszta (2012) based on Bauer et al. (2001), and we adjusted the second item to our company context. The two items were “I think that the selection process itself is fair” and “All in all, the selection process used by Marzeo is fair.” Cronbach's alpha of the scale was 0.94.
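As an illustration of how Cronbach's alpha is obtained for a two-item scale such as this one, the following sketch applies the standard formula to a small set of hypothetical responses. The data and the function name `cronbach_alpha` are assumptions for demonstration only and do not reproduce the study data.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a list of items (one list of responses per item)."""
    k = len(items)
    # Sum score per participant across all items.
    totals = [sum(responses) for responses in zip(*items)]
    sum_item_variances = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - sum_item_variances / variance(totals))


# Hypothetical 7-point responses of six participants to two items.
item_1 = [5, 6, 3, 7, 4, 2]
item_2 = [5, 7, 3, 6, 4, 3]

alpha = cronbach_alpha([item_1, item_2])
print(round(alpha, 2))
```

For two items, alpha depends only on the two item variances and their covariance, so strongly correlated items yield values close to 1.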

3.4.3 Emotional creepiness

This variable was measured with two items from Langer and König (2018) which we adjusted to our context. The two items were “I feel uneasy during this selection process” and “During this selection process, I have a queasy feeling.” Cronbach's alpha of the scale was 0.87.

3.4.4 Personableness perceptions

This variable was measured with two items from Wilhelmy et al. (2019) who used and further developed the scale. We adjusted the items to our context. The two adjusted items were “The organization Marzeo is empathetic toward me” and “Marzeo addresses me as an individual.” Cronbach's alpha of the scale was 0.81.

3.4.5 Organizational attractiveness

This variable was measured with two items from Highhouse et al. (2003). We adapted the items to the company Marzeo used in the scenarios. The two adjusted items were “For me, this organization is a good place to work” and “Marzeo is attractive for me as a place for employment.” Cronbach's alpha of the scale was 0.94.

3.4.6 Intention to further proceed with the selection process

This variable was measured with two items from Feldman et al. (2006) that we adapted to our company context. The two items were “How likely would you be to contact Marzeo for more information about the job being offered?” and “How likely would you be to apply to Marzeo?” Cronbach's alpha of the scale was 0.88.

3.4.7 Controls

For our research model, it seems reasonable that participants who are interested in new technologies, like to experiment with technological innovations, and who have a positive attitude toward AI are more inclined to positively evaluate and perceive the use of AI during the selection process. First, as a control variable, we measured technological affinity with two items of the “personal innovativeness in the domain of information technology” scale from Agarwal and Prasad (1998). The two items were “I like to experiment with new information technologies” and “If I heard about a new information technology, I would look for ways to experiment with it.” Cronbach's alpha of the scale was 0.90. Second, positive attitude toward AI was measured with two items from Schepman and Rodway (2020).² The items were “Artificial intelligence is exciting” and “I am impressed by what Artificial intelligence can do.” Cronbach's alpha of the scale was 0.85. Third, we also controlled for demographic characteristics of our participants, such as age and gender (1 = female; 0 = male).

3.5 Analytical procedures

Before we employed structural equation modeling (SEM), we analyzed the mean differences between our scenarios by applying one-way ANOVAs. For the final SEM, we followed a two-step approach to analyze the data and test our hypotheses, using AMOS (J. C. Anderson & Gerbing, 1988; Arbuckle, 2014). First, we estimated the confirmatory factor analysis (CFA). Second, we evaluated the SEM to test our hypotheses. We used chi-square statistics and common fit indices to assess the model fit to our data (Bollen, 1989; Brown & Cudeck, 1993; Kline, 2015). A well-fitting model should have a nonsignificant chi-square test (χ²) (Bollen, 1989), a comparative fit index (CFI) value above 0.95 (Hu & Bentler, 1998), and a root mean square error of approximation (RMSEA) value below 0.06 (Brown & Cudeck, 1993).
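To make the RMSEA criterion concrete, the following sketch computes the RMSEA point estimate from a chi-square value, its degrees of freedom, and the sample size, using the chi-square statistics reported in this article for the CFA and the SEM. It assumes the common formula with N − 1 in the denominator (some programs use N instead); the function names are hypothetical.

```python
from math import sqrt


def rmsea(chi_square, df, n):
    """RMSEA point estimate; assumes the N - 1 variant of the formula."""
    return sqrt(max(chi_square - df, 0.0) / (df * (n - 1)))


def meets_cutoffs(cfi, rmsea_value, cfi_min=0.95, rmsea_max=0.06):
    """Check the CFI and RMSEA cutoffs named in the text."""
    return cfi > cfi_min and rmsea_value < rmsea_max


cfa_rmsea = rmsea(113.18, 91, 200)   # CFA: chi-square = 113.18, df = 91, N = 200
sem_rmsea = rmsea(119.22, 97, 200)   # SEM: chi-square = 119.22, df = 97, N = 200

print(round(cfa_rmsea, 3), round(sem_rmsea, 3))
# prints: 0.035 0.034
```

Both recomputed values match the reported RMSEA values and fall below the 0.06 cutoff.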

The results of the CFA including our focal variables and the control variables exhibited a satisfactory model fit (χ² = 113.18, df = 91, p = 0.058; CFI = 0.99; RMSEA = 0.035). First, all standardized factor loadings and Cronbach's alpha values of the latent constructs were above 0.70, which indicates a high reliability of our measurements (Fornell & Larcker, 1981). Second, we calculated the average variance extracted (AVE) and composite reliability (CR) for the latent constructs (Fornell & Larcker, 1981). The recommended thresholds are 0.50 for the AVE and 0.60 for the CR (Fornell & Larcker, 1981). All latent constructs exceeded these values (fairness: AVE = 0.88, CR = 0.94; emotional creepiness: AVE = 0.78, CR = 0.87; personableness perception: AVE = 0.70, CR = 0.82; organizational attractiveness: AVE = 0.89, CR = 0.94; intention to further proceed in the selection process: AVE = 0.81, CR = 0.89; technological affinity: AVE = 0.82, CR = 0.90; attitude toward AI: AVE = 0.77, CR = 0.86). In summary, the high factor loadings, reliabilities, and AVE and CR values support the validity of our measurements, including convergent and discriminant validity.
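The AVE and CR follow directly from the standardized factor loadings. The sketch below uses the standard formulas with two hypothetical loadings (0.95 and 0.93); the individual loadings are not reported here, so these values are assumptions chosen only to show how figures of the reported magnitude can arise.

```python
def ave(loadings):
    """Average variance extracted: mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """Composite reliability from standardized loadings."""
    squared_sum = sum(loadings) ** 2
    error_variance = sum(1 - l ** 2 for l in loadings)
    return squared_sum / (squared_sum + error_variance)


# Hypothetical standardized loadings of a two-item construct (assumption).
loadings = [0.95, 0.93]

print(round(ave(loadings), 2), round(composite_reliability(loadings), 2))
# prints: 0.88 0.94
```

Both values exceed the thresholds of 0.50 (AVE) and 0.60 (CR) named above.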

4 RESULTS

4.1 Descriptive statistics and differences between the scenarios

Table 1 reports the means, standard deviations, and correlations of our variables.

| | Variables | M | SD | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Organizational attractiveness | 3.79 | 1.59 | (0.94) | | | | | | | | | | |
| 2 | Intention to proceed with the process | 3.71 | 1.86 | 0.76** | (0.88) | | | | | | | | | |
| 3 | Fairness | 4.39 | 1.61 | 0.67** | 0.65** | (0.94) | | | | | | | | |
| 4 | Emotional creepiness | 4.24 | 1.84 | −0.63** | −0.63** | −0.71** | (0.87) | | | | | | | |
| 5 | Personableness | 3.39 | 1.63 | 0.70** | 0.62** | 0.66** | −0.62** | (0.81) | | | | | | |
| 6 | AI without informationᵃ | 0.25 | 0.43 | −0.19** | −0.22** | −0.22** | 0.17* | −0.25** | ~ | | | | | |
| 7 | AI with written informationᵃ | 0.25 | 0.43 | −0.02 | −0.01 | −0.07 | 0.09 | −0.12 | −0.33** | ~ | | | | |
| 8 | AI with video informationᵃ | 0.25 | 0.43 | 0.05 | 0.08 | 0.13 | −0.10 | 0.21** | −0.33** | −0.33** | ~ | | | |
| 9 | Technological affinity | 4.27 | 1.54 | 0.33** | 0.30** | 0.28** | −0.22** | 0.13 | 0.05 | 0.11 | −0.13 | (0.90) | | |
| 10 | Attitude toward AI | 4.86 | 1.51 | 0.34** | 0.38** | 0.39** | −0.25** | 0.28** | 0.05 | −0.15* | −0.00 | 0.48** | (0.85) | |
| 11 | Genderᵇ | 0.50 | 0.50 | −0.05 | −0.11 | −0.11 | 0.12 | −0.01 | 0.02 | 0.00 | −0.02 | −0.23** | −0.04 | ~ |
| 12 | Age | 45.12 | 11.65 | −0.02 | −0.02 | −0.12 | 0.04 | −0.10 | 0.07 | 0.07 | −0.00 | −0.02 | −0.17* | −0.13 |

- Note: Internal consistency reliability coefficient (Cronbach's alpha) in parentheses along the diagonal. Abbreviations: M, mean value; SD, standard deviation. *p < 0.05; **p < 0.01, N = 200.

- a The treatment variables are dichotomous with 1 = treatment and 0 = human condition and all other conditions.

- b Gender is dichotomous with 1 = females and 0 = males.

We used one-way ANOVAs to compare our four scenarios on the five dependent variables. Results are depicted in Table 2 and show significant differences in fairness, F(3, 196) = 4.90; p = 0.003, emotional creepiness, F(3, 196) = 4.53; p = 0.004, personableness, F(3, 196) = 8.28; p < 0.001, organizational attractiveness, F(3, 196) = 3.36; p = 0.02, and intention to proceed with the process, F(3, 196) = 4.03; p = 0.008.

| Measure | Human M | Human SD | AI (without information) M | AI (without information) SD | AI (written information) M | AI (written information) SD | AI (video information) M | AI (video information) SD | F(3, 196) | η² |
|---|---|---|---|---|---|---|---|---|---|---|
| Fairness | 4.82 | 1.37 | 3.79 | 1.69 | 4.20 | 1.68 | 4.76 | 1.49 | 4.90** | 0.07 |
| Emotional creepiness | 3.76 | 1.72 | 4.84 | 1.89 | 4.66 | 1.67 | 3.88 | 1.94 | 4.53** | 0.07 |
| Personableness | 3.80 | 1.51 | 2.64 | 1.57 | 3.00 | 1.56 | 3.95 | 1.56 | 8.28** | 0.11 |
| Organizational attractiveness | 4.27 | 1.59 | 3.22 | 1.78 | 3.72 | 1.73 | 3.94 | 1.70 | 3.36* | 0.05 |
| Intention to proceed with the process | 4.20 | 1.95 | 3.01 | 1.81 | 3.67 | 1.72 | 3.97 | 1.79 | 4.03** | 0.06 |

- Note: *p < 0.05; **p < 0.01.
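For a one-way ANOVA, η² can be recovered from the F value and its degrees of freedom. The following sketch uses the identity η² = (F · df₁) / (F · df₁ + df₂) with the F values reported in Table 2; the function name is hypothetical, and the calculation is a plausibility check rather than the original computation.

```python
def eta_squared(f_value, df_between, df_within):
    """Eta squared of a one-way ANOVA recovered from F and its degrees of freedom."""
    return (f_value * df_between) / (f_value * df_between + df_within)


reported_f = {
    "Fairness": 4.90,
    "Personableness": 8.28,
    "Organizational attractiveness": 3.36,
    "Intention to proceed with the process": 4.03,
}

for measure, f_value in reported_f.items():
    print(measure, round(eta_squared(f_value, 3, 196), 2))
```

Because the inputs are rounded F values, a recomputed η² can differ from the tabulated value in the last digit (for emotional creepiness, F = 4.53 gives about 0.065).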

Subsequently, we used Tukey's HSD test for multiple comparisons between the scenarios and we calculated Cohen's d for each pairwise comparison. Concerning our proposed mediators, first, we found that the mean value of fairness was significantly lower for AI without information (M = 3.79, SD = 1.69) in comparison to human (M = 4.82, SD = 1.37) (p = 0.006, d = 0.67, 95% CI = [−1.84, −0.22]) and to AI with video information (M = 4.76, SD = 1.49) (p = 0.012, d = 0.61, 95% CI = [−1.78, −0.16]). Second, the mean value of emotional creepiness was significantly higher for AI without information (M = 4.84, SD = 1.89) in comparison to human (M = 3.76, SD = 1.72) (p = 0.017, d = 0.61, 95% CI = [0.14, 2.02]) and to AI with video information (M = 3.88, SD = 1.94) (p = 0.042, d = 0.46, 95% CI = [0.02, 1.84]). Third, the mean value for personableness was significantly lower for AI without information (M = 2.64, SD = 1.57) in comparison to human (M = 3.80, SD = 1.51) (p = 0.001, d = 0.76, 95% CI = [−1.96, −0.36]) and to AI with video information (M = 3.95, SD = 1.56) (p < 0.01, d = 0.84, 95% CI = [−2.11, −0.51]). Additionally, the mean value for personableness was significantly lower for AI with written information (M = 3.00, SD = 1.56) in comparison to AI with video information (M = 3.95, SD = 1.56) (p = 0.013, d = 0.61, 95% CI = [−1.76, −0.15]).

Concerning our two outcome variables, organizational attractiveness was significantly lower for AI without information (M = 3.22, SD = 1.78) compared to human (M = 4.27, SD = 1.59) (p = 0.012, d = 0.62, 95% CI = [−1.93, −0.17]). Similarly, the intention to proceed with the process was significantly lower for AI without information (M = 3.01, SD = 1.81) compared to human (M = 4.20, SD = 1.95) (p = 0.007, d = 0.63, 95% CI = [−2.13, −0.25]) and to AI with video information (M = 3.97, SD = 1.79) (p = 0.044, d = 0.53, 95% CI = [−1.90, −0.02]).
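The reported effect sizes can be approximated from the group means and standard deviations. The sketch below computes Cohen's d with a pooled standard deviation; it assumes 50 participants per condition (the targeted cell size), and the function name is hypothetical.

```python
from math import sqrt


def cohens_d(m1, sd1, m2, sd2, n1=50, n2=50):
    """Cohen's d with pooled standard deviation; group sizes of 50 are an assumption."""
    pooled_sd = sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))
    return (m1 - m2) / pooled_sd


# Fairness: AI without information vs. human condition.
d_fairness = cohens_d(3.79, 1.69, 4.82, 1.37)
# Intention to proceed: AI without information vs. human condition.
d_intention = cohens_d(3.01, 1.81, 4.20, 1.95)

print(round(abs(d_fairness), 2), round(abs(d_intention), 2))
# prints: 0.67 0.63
```

The signs are negative because the AI condition without information has the lower mean; the text reports the absolute magnitudes.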

In summary, the ANOVA results support our basic assumptions that AI support without any additional information was associated with lower fairness and personableness perceptions and higher emotional creepiness compared to a traditional human evaluation in selection (H1–H3). Similarly, AI support without additional information was rated less favorably on all three mediators than AI support with video information, which also supports our hypotheses H4a–c. Interestingly, we found no significant differences in any dependent variable between the human condition (scenario 1) and the AI with video information condition (scenario 4).

4.2 Results of the SEM

Table 3 shows the estimated coefficients of the SEM. Again, the SEM including our control variables shows a satisfactory model fit to our data (χ² = 119.22, df = 97, p = 0.062; CFI = 0.99; RMSEA = 0.034). First, AI without information diminished the perceived fairness of the selection process (β = −0.28; p < 0.001), while both AI with additional written information (β = −0.11; p = 0.17) and AI with additional video information (β = 0.03; p = 0.70) did not influence the perceived fairness. Second, AI without information (β = 0.31; p < 0.001) and AI with written information (β = 0.22; p = 0.01) were both positively associated with emotional creepiness, while AI with additional video information showed no significant influence (β = 0.05; p = 0.52). Third, AI without information (β = −0.33; p < 0.001) and AI with written information (β = −0.19; p = 0.04) were negatively associated with personableness perceptions of the selection process, while AI with additional video information showed no significant influence (β = 0.03; p = 0.76). Consequently, the use of AI without information reduces perceived fairness and personableness and increases emotional creepiness (supporting our hypotheses H1, H2, and H3). AI with additional written information likewise reduces perceived personableness and increases emotional creepiness, although its effect on perceived fairness is not significant. AI with video information, in contrast, does not differ significantly from a human evaluation in terms of fairness, personableness perception, and emotional creepiness, supporting our hypothesis H4.

| Path | B | SE | β | p-Value |
|---|---|---|---|---|
| Treatment effects and controls on mediatorsᵃ | | | | |
| AI without information → Fairness | −0.96 | (0.27) | −0.28 | <0.001 |
| AI-written information → Fairness | −0.38 | (0.28) | −0.11 | 0.17 |
| AI-video information → Fairness | 0.11 | (0.27) | 0.03 | 0.70 |
| AI without information → Emotional creepiness | 1.35 | (0.37) | 0.31 | <0.001 |
| AI-written information → Emotional creepiness | 0.97 | (0.38) | 0.22 | 0.01 |
| AI-video information → Emotional creepiness | 0.24 | (0.37) | 0.05 | 0.52 |
| AI without information → Personableness | −0.97 | (0.25) | −0.33 | <0.001 |
| AI-written information → Personableness | −0.54 | (0.26) | −0.19 | 0.04 |
| AI-video information → Personableness | 0.08 | (0.25) | 0.03 | 0.76 |
| Technological affinity → Fairness | 0.12 | (0.10) | 0.12 | 0.20 |
| Attitude toward AI → Fairness | 0.33 | (0.09) | 0.33 | <0.001 |
| Technological affinity → Emotional creepiness | −0.21 | (0.13) | −0.16 | 0.10 |
| Attitude toward AI → Emotional creepiness | −0.22 | (0.12) | −0.18 | 0.07 |
| Technological affinity → Personableness | 0.03 | (0.09) | 0.03 | 0.76 |
| Attitude toward AI → Personableness | 0.24 | (0.09) | 0.29 | 0.01 |
| Effects of the mediators and controls on the outcomes | | | | |
| Fairness → Organizational attractiveness | 0.23 | (0.11) | 0.21 | 0.03 |
| Emotional creepiness → Organizational attractiveness | −0.06 | (0.09) | −0.06 | 0.53 |
| Personableness → Organizational attractiveness | 0.78 | (0.14) | 0.57 | <0.001 |
| Fairness → Intention to proceed | 0.21 | (0.10) | 0.21 | 0.03 |
| Emotional creepiness → Intention to proceed | −0.18 | (0.08) | −0.22 | 0.02 |
| Personableness → Intention to proceed | 0.46 | (0.12) | 0.38 | <0.001 |
| Technological affinity → Organizational attractiveness | 0.26 | (0.08) | 0.21 | <0.001 |
| Attitude toward AI → Organizational attractiveness | −0.01 | (0.07) | −0.01 | 0.93 |
| Technological affinity → Intention to proceed | 0.20 | (0.07) | 0.19 | 0.003 |
| Attitude toward AI → Intention to proceed | 0.02 | (0.07) | 0.02 | 0.78 |

- Note: B = unstandardized effect; SE = standard error; β = standardized effect; N = 200; Coefficients that are significant at p < 0.05 are bold.

- a Reference category is the human condition (scenario 1). The control variables age and gender showed nonsignificant associations with all variables and were therefore omitted from the table.

Moreover, we found that perceived fairness (β = 0.21; p = 0.03) and the personableness perception (β = 0.57; p < 0.001) were positively associated with organizational attractiveness, while emotional creepiness was not related to organizational attractiveness (β = −0.06; p = 0.53). Additionally, fairness (β = 0.21; p = 0.03) and personableness perception (β = 0.38; p < 0.001) were positively associated with the intention to further proceed with the selection process, while emotional creepiness (β = −0.22; p = 0.02) was negatively associated with the intention to further proceed with the process, supporting our hypotheses H5a, H5c, and partially supporting H5b.

Finally, we tested the total indirect effects of our treatments on the outcomes, and the results are depicted in Table 4. First, AI without information (B = −1.05; p = 0.001, 95% CI = [−1.66, −0.50]) and AI with written information (B = −0.56; p = 0.04, 95% CI = [−1.10, −0.01]) were both negatively associated with organizational attractiveness, while AI with additional video information showed no significant total indirect effect (B = 0.07; p = 0.68, 95% CI = [−0.39, 0.59]). Second, AI without information (B = −0.89; p = 0.001, 95% CI = [−1.39, −0.45]) and AI with written information (B = −0.50; p = 0.02, 95% CI = [−0.99, −0.08]) were both negatively associated with the intention to further proceed with the selection process, while AI with additional video information showed no significant total indirect effect (B = 0.01; p = 0.88, 95% CI = [−0.39, 0.45]).

| Path | B | SE | Lower bound | Upper bound | p-Value |
|---|---|---|---|---|---|
| AI without information → Organizational attractiveness | −1.05 | (0.29) | −1.66 | −0.50 | 0.001 |
| AI-written information → Organizational attractiveness | −0.56 | (0.28) | −1.10 | −0.01 | 0.044 |
| AI-video information → Organizational attractiveness | 0.07 | (0.25) | −0.39 | 0.59 | 0.681 |
| AI without information → Intention to proceed | −0.89 | (0.24) | −1.39 | −0.45 | 0.001 |
| AI-written information → Intention to proceed | −0.50 | (0.23) | −0.99 | −0.08 | 0.023 |
| AI-video information → Intention to proceed | 0.01 | (0.21) | −0.39 | 0.45 | 0.884 |

- Note: The total indirect effects are the sum of the specific indirect effects and indicate the extent to which our treatments affect organizational attractiveness and the intention to further proceed with the selection process through all mediators together.

- a Reference category is the human condition (scenario 1); Number of bootstrap samples = 2000; Bias-corrected standard errors are given; B = unstandardized effect; SE = standard error; lower/upper bounds are calculated as 95% bias-corrected confidence intervals; Coefficients that are significant at p < 0.05 are bold.
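The point estimates of the total indirect effects can be approximated from the unstandardized paths in Table 3 by summing, over the three mediators, the product of the treatment-to-mediator path and the mediator-to-outcome path. The following is a minimal sketch under that assumption; the dictionary and function names are hypothetical, and the bootstrap confidence intervals are not reproduced.

```python
# Unstandardized paths (B) taken from Table 3.
treatment_to_mediator = {
    "AI without information": {"fairness": -0.96, "creepiness": 1.35, "personableness": -0.97},
    "AI-written information": {"fairness": -0.38, "creepiness": 0.97, "personableness": -0.54},
    "AI-video information": {"fairness": 0.11, "creepiness": 0.24, "personableness": 0.08},
}
mediator_to_outcome = {
    "Organizational attractiveness": {"fairness": 0.23, "creepiness": -0.06, "personableness": 0.78},
    "Intention to proceed": {"fairness": 0.21, "creepiness": -0.18, "personableness": 0.46},
}


def total_indirect(treatment, outcome):
    """Sum of the three specific indirect effects (product of coefficients)."""
    a_paths = treatment_to_mediator[treatment]
    b_paths = mediator_to_outcome[outcome]
    return sum(a_paths[m] * b_paths[m] for m in a_paths)


for treatment in treatment_to_mediator:
    for outcome in mediator_to_outcome:
        print(f"{treatment} -> {outcome}: {total_indirect(treatment, outcome):.3f}")
```

Because the inputs are rounded to two decimals, the recomputed sums can deviate from the tabulated values by about 0.01. The sign of each effect is unaffected; in particular, the total indirect effect of AI with video information on organizational attractiveness is small and positive.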

5 DISCUSSION

5.1 Main findings and theoretical contributions

Our study provides valuable implications for the HR and broader management literature. Since research on actions that organizations can take to prevent negative applicant reactions is still scarce, our study contributes to the literature by investigating possible actions by organizations to diminish negative applicant reactions toward AI-supported selection tools (e.g., Basch & Melchers, 2019; Gonzalez et al., 2019; Langer & Landers, 2021; Langer et al., 2021). Furthermore, our study demonstrates that media richness, that is, the type of media used to convey information to the applicants, is important.

First, the findings of the SEM demonstrate that the use of AI without providing any further explanation of why AI is being used during the selection process, in comparison to a selection process conducted by humans, leads to negative applicant reactions in terms of fairness, personableness, and emotional creepiness, thus corroborating previous research findings (e.g., Acikgoz et al., 2020; Köchling et al., 2022; Langer & Landers, 2021; Langer et al., 2020; Newman et al., 2020; Wesche & Sonderegger, 2021). In turn, implementing AI without further explanation in comparison to the human condition diminished organizational attractiveness and the intention to further proceed with the selection process, mediated by perceived fairness, emotional creepiness, and personableness perceptions.

Second, providing written information about the potential benefits of AI during the selection process in comparison to a selection process conducted by humans increased emotional creepiness and decreased personableness perceptions, while we did not find significant differences in fairness perceptions. Nonetheless, AI with written explanations still diminished organizational attractiveness and the intention to further proceed with the selection process mediated by fairness, creepiness, and personableness.

Third, we found no significant differences between the video explanation of the potential benefits of AI and a selection process conducted by humans in terms of fairness, creepiness, and personableness. In addition, AI with video information was associated with neither organizational attractiveness nor the intention to further proceed with the selection process. However, ANOVA results showed significant differences between the AI condition without further explanations and AI with video explanations, indicating that AI with video explanations was perceived to be fairer, higher in personableness, and less creepy.

Overall, our results address the calls for further research in this area (e.g., Basch & Melchers, 2019; Langer & Landers, 2021; Langer et al., 2021; Gonzalez et al., 2019), implying that organizations are able to take actions to diminish negative applicant reactions to the use of AI during selection processes.

In comparison to no additional information, video explanations about the potential benefits of AI seem to be a fruitful way to avoid negative applicant reactions to the implementation and usage of AI-based selection tools. Videos can make the AI-supported selection process more personal because the higher media richness of a video with a real person transmits more personal information and is easier for applicants to grasp (Allen et al., 2004; Daft & Lengel, 1986). Additionally, applicants have the opportunity to form an idea of the organization with the help of the video because they view an actual member of the organization, thereby gaining a first impression of the organization, its culture, and its employees, which provides a “human touch.” This could be the reason why we did not find a difference between the human condition and the AI condition with video explanations, but did find a difference between the AI condition with video explanations and the AI condition without further explanations.

Furthermore, media richness seems to be a fruitful extension for understanding and altering applicant reactions toward the use of AI in personnel selection. Previous research showed that high-information recruitment practices (i.e., recruitment websites) have a stronger impact on employer knowledge than low-information recruitment practices (i.e., printed recruitment advertisements) because richer media are perceived as more accurate and more credible (Baum & Kabst, 2014; Cable & Yu, 2006; Cable et al., 2000). With the increased use of social media, such as Instagram, Facebook, TikTok, and Twitter, people have become used to watching short videos, which are a richer medium than written text. Thus, videos have become an effective way of communicating information, and they can convey explanations about AI support in personnel selection, which could be supplemented by written text. The simultaneous use of multiple recruitment activities has been shown to be beneficial in altering applicant reactions (Baum & Kabst, 2014). Thus, more research is necessary to further examine and exploit the benefits of media richness in providing information about AI and explaining its functionality as well as its advantages and potential disadvantages to stakeholders inside and outside of the organization.

5.2 Practical implications

Given the evidence of the large research–practice gap on this topic (Cheng & Hackett, 2021; Gonzalez et al., 2019; Noble et al., 2021), we hope that our study results help HR managers to implement and use AI-supported selection tools more effectively in a way that mitigates or even prevents adverse applicant reactions. Organizations that decide to conduct parts of their selection process with the help of AI should carefully evaluate the potential adverse effects of AI support on organizational attractiveness to prevent losing talented applicants.

However, given that AI-supported selection tools come with many advantages (McCarthy et al., 2017) if the technology is well-designed, organizations should consider ways to improve their applicants' perceptions and try to reconcile the advantages with the individual applicant's needs. Specifically, a cost-effective option would be to give applicants a suitable explanation of why an AI-supported selection tool is used so that applicants better understand the advantages of the AI-supported process (i.e., faster decision, higher objectivity, same chances for all applicants, consistency, and more diverse applicant pool).

The study results showed that the most effective way to explain the advantages is through a professional video. Since interpersonal contact is missing when AI-supported selection tools are used, it is difficult for applicants to gain an impression of the organization without actual contact with a member of the HR department. Therefore, it is important that organizations that use an AI-supported selection process try to create a personal environment. With the help of the video, a personal atmosphere can be created, allowing applicants to gain insights into the organization and feel more valued. Viewing an explanation of the benefits via video helps to make the AI-selection process more salient for applicants undergoing it; these benefits are clearly outlined before they proceed with the selection process.

However, when implementing AI-supported selection tools, recruiters should still keep in mind that these tools can lead to unfair treatment if the underlying training data set is unbalanced or contains discrimination, or if the system is poorly designed (e.g., Köchling & Wehner, 2020; Köchling et al., 2021). It is therefore important that the AI-supported systems are properly designed, trained, validated, and monitored (Tippins et al., 2021).

5.3 Potential limitations and future research implications

Although the present findings make valuable contributions to theory and management practice and much effort was invested to ensure that the design of the study allowed us to minimize the risk of potential biases (Podsakoff et al., 2012), we acknowledge that there are some potential limitations and remaining questions that should be addressed by future research. First, even though we kept the hypothetical scenarios as realistic as possible and written scenarios usually provide a suitable way to investigate feelings, attitudes, and behaviors of real-life situations (Maute & Dubés, 1999; Taylor, 2005), participants found themselves in a hypothetical environment and were not actually applying for a job in a legitimate organization. Consequently, the experimental design does not examine actual first-hand experience in applicant and selection contexts.

However, written scenarios provide an appropriate and internally valid method for testing theories when the hypothetical situations are sufficiently realistic (Maute & Dubés, 1999). Experiments have the advantage of examining whether a treatment causes a change in the outcome while holding all other factors constant in a controlled environment (Highhouse, 2009). Since the purpose was not to determine an effect size but rather to test theory and theoretical explanations, a laboratory (i.e., hypothetical) experiment is an appropriate research method (Highhouse, 2009). However, future studies could analyze whether the results of this study are transferable to a real selection situation, which could potentially be difficult due to ethical concerns that arise when applicants are provided with different information and different kinds of information.

Second, participants had little information about the organization, the job or industry, and the type of AI that was actually applied by the organization. During an actual application process, applicants usually have more information about the potential employer (e.g., employer reputation, image, industry) and the specific job position, which could distinctly affect the current results. For example, a high employer image may partially compensate for the negative applicant reactions toward using AI because applicants want to get a job with the employer of their choice. However, while this might reduce the negative effects of AI that we found, we do not assume that the effects will be reversed or even positive. In addition, we controlled for technological affinity and positive attitudes toward AI, which limits the potential influence of a high-tech employer image or high-tech industry. Nevertheless, to strengthen the empirical evidence on negative reactions, future studies could examine applicant reactions toward AI in a real-life setting, for example, in well-known organizations that already use AI in their selection process.

Third, another limitation is related to the AI condition with video information. The fact of showing a videotaped recording of a representative of the organization to applicants might already increase the feeling of being valued by the organization and, thus, could increase the perceptions of personableness and decrease emotional creepiness. Commercial vendors of AI-based selection tools offer official videos to explain the recording process of the video interviews to the applicant during the selection process (e.g., HireVue). Thus, presenting video information about the benefits of AI-based selection tools is externally valid, but might diminish internal validity, which is a common trade-off of laboratory experiments. Future studies could analyze whether explaining reasons for AI-based selection tools using only videos by a commercial vendor or by an organization's representative might lead to different reactions.

Fourth, we collected cross-sectional and self-reported data due to the chosen experimental between-subject design. While this does not affect the influence of our treatments on the proposed mediators, the relationships between the mediators and outcomes of our research model are correlational and could be overestimated. In addition, self-reported data are useful to examine the opinions, feelings, and attitudes of participants, but these data do not allow us to examine actual behavior. Thus, different research designs, such as naturalistic observations, are needed to collect longitudinal data and to examine the actual behavior of applicants during their participation in an AI-based selection process.

Our findings also open up new avenues for future research. First, our study focuses on the explanation of the advantages and the use of written or video information. Future studies could analyze whether other actions could also help to reduce negative applicant reactions. Examples include AI-supported personalized feedback after the selection process or education about how the evaluation is performed by the AI-supported selection tool. Second, our sample of respondents in Germany had only limited experience with AI-based selection tools. In this regard, the usage of online conference tools during and after the COVID-19 pandemic might also facilitate the diffusion of AI technology during the selection process in Germany, which simultaneously increases applicants' experience with AI-based selection tools. The question arises whether applicants who become well experienced with these tools over time perceive AI-based selection tools differently from inexperienced applicants. Another idea is that future research could evaluate how applicants respond when the questions, for example, in AI-supported interviews, are also shown via video. In sum, we hope that this study provides an impetus for future research, and we encourage future studies to further examine how adverse reactions can be avoided when using AI-supported selection tools.

6 CONCLUSION

The application of AI-supported tools for personnel selection is growing; at the same time, extant research shows that adverse applicant reactions to this technology can occur. Consequently, to attract, motivate, and retain talented applicants in the application process, there is a need to assess how negative applicant reactions can be avoided when using AI-supported selection tools. However, the literature on potential actions to minimize adverse applicant reactions to AI-supported selection tools is still in its infancy. This study therefore contributes to the field by employing an experimental scenario design and by deriving implications for practice and research. We demonstrated that negative applicant reactions can be prevented by using a professional video explaining the advantages of AI use in selection. In this respect, our study is among the first to investigate specific actions that can be taken to mitigate negative applicant reactions.

7 ACKNOWLEDGMENT

Open Access funding enabled and organized by Projekt DEAL.

ENDNOTES

- 1 We would like to thank Lena Evertz, Rouven Kolitz, and Stefan Süß for allowing us to use Marzeo as a fictive organization.

- 2 We measured the original scale by Schepman and Rodway (2020) consisting of 10 items. However, because the original scale also contained items on negative attitudes and fear toward AI (e.g., “I think artificially intelligent systems make many errors,” and “I shiver with discomfort when I think about future uses of Artificial Intelligence”), which partially overlap with emotional creepiness, we decided to reduce our measurement to two items because we only wanted to control for positive attitude.

APPENDIX

Scenarios

Introduction (same for all scenarios)

You are currently looking for a job. On the website of Marzeo AG, an organization that operates in your target industry, you come across an interesting job posting that exactly matches your skills and expectations. In addition to reading the job posting thoroughly, you go to the Marzeo AG website to find out more about the selection process.

There you will find the following information about the selection process:

Scenario 1

Homepage Marzeo AG

Scenario 2

Homepage Marzeo AG

Scenario 3

Scenario 4

(same introduction as scenarios 2 and 3).

Content of the video:

You have made it to the next round in the recruiting process of the Marzeo AG. My name is Antje Kramer, I work in the HR department of the Marzeo AG, and I will show you the advantages of the artificial intelligence-based selection process. We would like to get to know you in greater detail. Through the assistance of the AI, you will receive feedback on your application much earlier than usual. Additionally, the whole recruiting process gets fairer and more objective through the integration of AI, because every application receives the same attention and the same handling time. Furthermore, this process enables a higher consistency because every applicant receives the same questions and therefore, every applicant has the same chance. After that, your application will be evaluated by the AI based on the same criteria as other applications. In addition to that, this process allows us to have a diverse pool of applicants. Good luck with your application! We are looking forward to receiving your application!

Open Research

DATA AVAILABILITY STATEMENT

The data that support the findings of this study are available from the corresponding author upon reasonable request.