Water level recognition based on deep learning and character interpolation strategy for stained water gauge

Abstract

Due to the diversity of climate and environment in China, frequent extreme rainfall events pose great challenges to flood prevention. Water level measurement is an important research topic in flood prevention. Recently, image-based water level recognition has become an important part of water level measurement research because it is easy to install, low in cost, and requires no manual reading. However, existing image-based water level recognition methods have two main shortcomings: (1) they are severely affected by light intensity and (2) their recognition accuracy is low for stained water gauges. To solve these two problems, this paper proposes a water level recognition method designed for complex scenarios. The method first uses a semantic segmentation convolutional neural network to extract the water gauge mask, and then uses the YOLOv5 object detection network to detect the letter “E” on the water gauge. Based on a character sequence inspection strategy, the algorithm dynamically compensates for characters that are missed on stained water gauges. Extensive experiments show that the proposed water level measurement method is robust in complex scenarios and meets the needs of flash flood defense.

1 INTRODUCTION

In recent years, flash floods caused by global warming and frequent extreme rainfall events have seriously threatened the lives and property of people living along rivers. Water level measurement is one of the most important tasks in flash flood defense, because an effective monitoring system provides the data foundation for flash flood prediction and defense work. Traditionally, water level is measured by manual reading or by sensors. Manual reading is time-consuming and labor-intensive, and during the flood season the safety of measuring personnel cannot be guaranteed. Sensor-based measurement, on the other hand, suffers from inconvenient equipment installation and difficult maintenance. With the continuous development of computer vision, water level measurement based on image processing algorithms has attracted widespread attention from academia and engineering circles owing to advantages such as easy installation, low cost, and easy equipment maintenance, and it has become an important branch of water level measurement research. However, during high flood periods, the flow velocity of rivers increases, the water is deeper, and the water level rises and falls rapidly. Floods also carry large amounts of sediment and floating objects that attach to the surface of the water gauge, making image-based water level measurement more challenging.

Among the traditional image processing approaches to water level recognition, Sabbatini et al. (2021) argue that image quality has a significant impact on recognition accuracy. Mean color and mean square of saturation are used to identify and filter out poor-quality images. Sun et al. (2013) proposed identifying the water level through histograms, morphological transformations, and edge detection. Zhong (2017) proposed dividing water gauge scales by k-means cluster analysis, which generalizes poorly to complex backgrounds. Chen et al. (2021) proposed multitemplate matching and sequence verification to recognize characters. This method increases the accuracy of character recognition, but it is still limited by dirty spots on the surface of the water gauge. Liu et al. (2021) proposed an average and variance threshold method that calculates the water level from a binary image extracted from the Lab image and then converts the pixel distance to the actual water level through camera calibration. These methods based on traditional image processing can achieve reasonable accuracy in specific environments, especially when there is no large-scale dirt on the water gauge, but their results are not ideal in complex natural environments.

In recent years, as deep learning has matured and the technology has continued to advance, water level recognition based on deep learning has gradually emerged. Jafari et al. (2021) proposed using semantic segmentation to detect water body contours and obtain water level boundaries, which locates the water line boundary more precisely but needs an extra calibration process. Pan et al. (2018) proposed using a CNN for water gauge feature extraction. Qiao et al. (2022) proposed using YOLOv5s to detect water gauges and numerical characters, and then using a CNN classification network to classify the scale number characters and identify the water level. This method improves the accuracy of character detection against variable backgrounds, but its overall water level detection accuracy is still constrained by how distinguishable the characters are.

The methods mentioned above share two drawbacks to some extent. First, they require prior information, such as the position of the water gauge in the input image, the color distribution, or camera calibration, which limits their extensibility and makes their accuracy easy to disturb. Second, their robustness in complex environments is limited, as changes in light intensity, foreign object attachment, and natural corrosion of the water gauge can all reduce detection accuracy. Therefore, they cannot meet the requirements of water level measurement in flash flood scenarios, because the scene is more complex and the accuracy of water level estimation for dirty water gauges is limited.

In response to these limitations, this article proposes a new algorithm based on semantic segmentation and object detection to improve the accuracy of water level recognition in complex scenes. We use the semantic segmentation model short-term dense concatenate (STDC) (Fan et al., 2021) and the object detection model YOLOv5 to recognize the water gauge and its scale characters, and we propose a new character sequence interpolation method that dynamically filters falsely detected characters and fills in missing characters. Extensive experiments show that the proposed method achieves competitive recognition accuracy and robustness in complex environments without requiring any prior information. In addition, the proposed algorithm is more convenient to install and maintain, which makes it suitable for engineering deployment.

2 RELATED WORK

2.1 Semantic segmentation

Semantic segmentation is a deep learning method that classifies an image pixel by pixel, which distinguishes it from object detection in the usual sense. It can therefore segment targets in images or videos more precisely. Mainstream semantic segmentation models include the Deeplab series (Chen et al., 2018), U-Net (Ronneberger et al., 2015), PSPNet (Zhao et al., 2017), and so forth. Because the goal of semantic segmentation is pixel-wise classification, real-time performance is always a major challenge. There are two mainstream solutions: (1) choosing a lightweight backbone, for example, DFANet (Li et al., 2019) or BiSeNetV1; and (2) restricting the input image size. Both can reduce segmentation accuracy to some degree. In this paper, we use the STDC segmentation network to detect the water gauge. STDC proposes a new model structure based on BiSeNetV2 (Yu et al., 2021) that reduces the number of parameters and reworks the original BiSeNet decoder structure to improve segmentation accuracy, reaching a trade-off between accuracy and efficiency.

2.2 Object detection

Object detection is one of the basic tasks in computer vision. Object detection algorithms based on deep learning can be mainly divided into two categories: one-stage and two-stage. The most representative one-stage detectors include the YOLO series (Redmon et al., 2016), the single shot detector (SSD), RetinaNet (Lin et al., 2017), and so forth. The mainstream two-stage detectors are the R-CNN series, such as Faster R-CNN (Ren et al., 2017), R-FCN, Cascade R-CNN (Cai & Vasconcelos, 2018), and so forth. Early one-stage methods were more efficient than two-stage methods, but their accuracy was unsatisfactory. As network structures have continued to improve, YOLO has gradually shown strong performance, especially since YOLOv4 (Bochkovskiy et al., 2020), which surpassed most state-of-the-art two-stage models and other one-stage detectors of its time.

3 PROPOSED METHOD

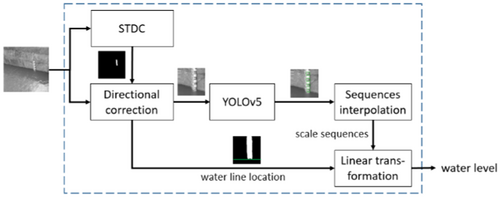

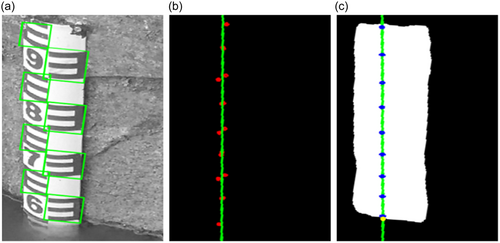

This paper proposes a water level detection method based on deep learning. The algorithm flow is shown in Figure 1. First, the semantic segmentation model STDC is used to extract the binary mask of the water gauge. The same segmentation mask also provides the water level boundary. Second, the segmentation mask is used to locate the water gauge and correct its orientation. Then we apply YOLOv5m to detect the scale characters in the water gauge region. Next, the character sequence interpolation algorithm proposed in this paper is used to eliminate false positive characters and complete missed characters. Finally, the water level is calculated through linear interpolation.

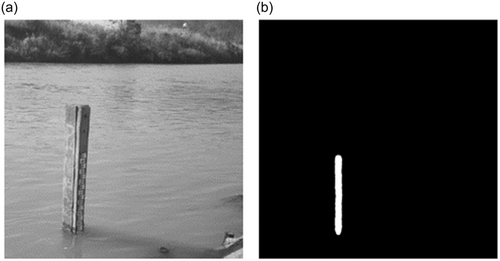

3.1 Water level segmentation in complex environments

To avoid a calibration process and achieve truly automatic water level recognition, the water gauge must first be located. The segmentation model generates a target mask, so while recognizing the water gauge it can also extract the water level boundary. Furthermore, some cameras do not have a shooting angle that is exactly perpendicular to the horizontal plane, as shown in Figure 2, and an inclined water gauge in the image can reduce the accuracy of subsequent character recognition. With the binary mask generated by the segmentation model, image processing operations can be used to correct the inclination of the water gauge.
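The source does not specify which image processing operations perform the tilt correction. As a minimal sketch under that caveat, one option is to estimate the inclination from the second-order moments of the mask pixels (the principal axis of the foreground) and then rotate the image by the opposite angle. The function name `mask_tilt_degrees` and the list-of-lists mask format are hypothetical choices for illustration, not the authors' implementation.

```python
import math


def mask_tilt_degrees(mask):
    """Estimate how far the gauge's long axis deviates from vertical.

    mask: list of rows, each a list of 0/1 values (1 = gauge pixel).
    Returns a signed angle in degrees; 0 means the gauge is upright.
    This is an illustrative moment-based estimate, not the paper's method.
    """
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if len(pts) < 2:
        raise ValueError("mask has too few foreground pixels")
    n = len(pts)
    mx = sum(x for x, _ in pts) / n
    my = sum(y for _, y in pts) / n
    # Central second-order moments of the foreground pixel coordinates.
    sxx = sum((x - mx) ** 2 for x, _ in pts) / n
    syy = sum((y - my) ** 2 for _, y in pts) / n
    sxy = sum((x - mx) * (y - my) for x, y in pts) / n
    # Angle of the principal axis measured from the image x axis.
    theta = math.degrees(0.5 * math.atan2(2.0 * sxy, sxx - syy))
    # Convert to a deviation from the vertical direction.
    return theta - 90.0 if theta > 0 else theta + 90.0


upright = [[0, 1, 1, 0] for _ in range(10)]
print(mask_tilt_degrees(upright))
```

Rotating the cropped gauge image by the negative of the returned angle would bring the gauge upright before character detection. A minimum-area bounding rectangle is another common way to obtain the same angle.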

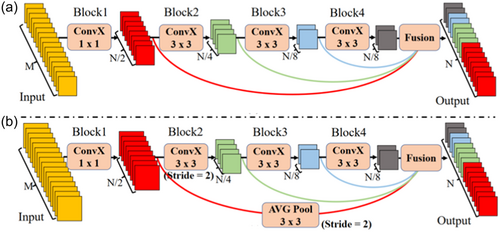

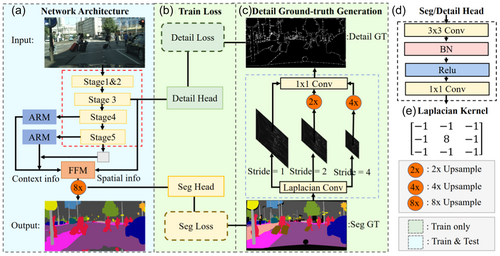

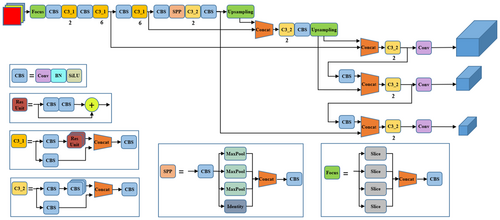

STDC is a lightweight and efficient real-time segmentation network. The Short-Term Dense Concatenate module (STDC module) focuses on a scalable receptive field and multiscale information by concatenating low-level fine-grained features with high-level semantic features. The detailed structure of the STDC module is shown in Figure 3. The number of output feature channels in the STDC module decreases continuously as the network deepens, which greatly reduces the computational complexity of the model. In addition, a detail guidance module is introduced to guide the low-level layers to learn spatial information in a single-stream manner. An overview of the STDC network structure is presented in Figure 4.

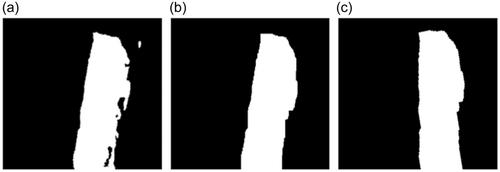

In practical applications, the segmentation results of the STDC model are influenced by the clarity of the water gauge and the shooting conditions. Therefore, it is necessary to integrate post processing operations to further improve the reliability of the segmentation mask. Our proposed process is shown in Figure 5.

Through the process described above, we achieved a segmentation accuracy of 0.86 on our water gauge segmentation data set, greatly improving the noise resistance of the water gauge segmentation process. Figure 7 shows the recognition results of the proposed water gauge segmentation algorithm.

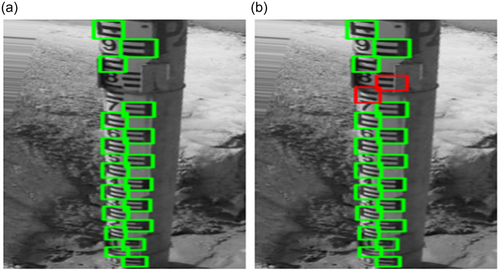

3.2 Character recognition and missing character completion algorithm

In this paper, we recognize the “E” and “∃” characters on the water gauge and estimate the water level through linear transformation. Yi et al. (2015) and Qiao et al. (2022) recognize the digit characters on the water gauge, but we observe that characters are deformed under certain shooting angles, which degrades the detection ability of a digit recognition model. Therefore, we choose to recognize the “E” and “∃” characters instead.

The model size of YOLOv5 is controlled by two parameters: depth and width. For YOLOv5m, the depth factor is 0.67 and the width factor is 0.75, which makes YOLOv5m a medium-sized model in the YOLOv5 series. Since YOLOv5m achieves the best trade-off between efficiency and performance among the YOLOv5 series, we use it to detect characters. Figure 8 shows the structure of YOLOv5. It is common practice to resize the input images to a uniform size during training. However, because our training data set covers a wide range of image resolutions, heavy downscaling increases the number of extremely small objects. Moreover, the complex background of the water gauge images also makes detection more difficult. Both factors limit model accuracy.
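To make the role of the two factors concrete, the sketch below applies a depth factor of 0.67 and a width factor of 0.75 to some base values. The rounding rules (repeat counts rounded with a minimum of 1, channel counts rounded up to a multiple of 8) follow the convention of the public YOLOv5 code as we understand it, and the base repeat counts and channel widths are illustrative assumptions rather than values taken from this paper.

```python
import math


def scale_depth(repeats, depth_multiple):
    """Scale a block's repeat count; single-repeat blocks are left unchanged."""
    return max(round(repeats * depth_multiple), 1) if repeats > 1 else repeats


def scale_width(channels, width_multiple, divisor=8):
    """Scale a channel count and round it up to a multiple of `divisor`."""
    return math.ceil(channels * width_multiple / divisor) * divisor


DEPTH_M, WIDTH_M = 0.67, 0.75  # YOLOv5m factors quoted in the text

# Base values below are assumed for illustration only.
for base_repeats in (3, 6, 9):
    print("repeats", base_repeats, "->", scale_depth(base_repeats, DEPTH_M))
for base_channels in (64, 128, 256, 512, 1024):
    print("channels", base_channels, "->", scale_width(base_channels, WIDTH_M))
```

Under these assumptions, a smaller depth factor removes repeated blocks and a smaller width factor thins every layer, which is how a single architecture description yields the different model sizes of the series.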

To solve this problem, character recognition is applied only in the water gauge region, which removes complex background interference and thereby improves both detection accuracy and detection efficiency. Table 1 shows the performance improvement of YOLOv5m from this data preprocessing operation. To ensure that all characters on the gauge are included in the cropped image, we expand the subimage at the top, bottom, left, and right. Specifically, we extend the subimage by 0.05 times its width on both the left and the right, and by 0.05 times its height at both the top and the bottom.
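The crop expansion can be sketched as follows. The 0.05 ratio comes from the text; clamping the result to the image bounds and rounding to integer pixels are assumptions added so that the crop stays valid, and `expand_box` is a hypothetical helper name.

```python
def expand_box(x1, y1, x2, y2, img_w, img_h, ratio=0.05):
    """Expand a gauge bounding box by `ratio` of its size on every side.

    (x1, y1) is the top-left corner and (x2, y2) the bottom-right corner.
    The result is clamped to the image (an assumption, not from the paper).
    """
    dx = (x2 - x1) * ratio  # margin added on the left and on the right
    dy = (y2 - y1) * ratio  # margin added at the top and at the bottom
    return (
        max(0, int(round(x1 - dx))),
        max(0, int(round(y1 - dy))),
        min(img_w, int(round(x2 + dx))),
        min(img_h, int(round(y2 + dy))),
    )


print(expand_box(100, 200, 200, 600, img_w=1000, img_h=1000))
```

For a gauge box 100 pixels wide and 400 pixels high, this adds 5 pixels on each side horizontally and 20 pixels on each side vertically.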

| Crop | AP50 | AP50:95 |
|---|---|---|
| | 0.962 | 0.628 |
| √ | **0.988** | **0.70** |

- Note: AP50 refers to the average precision evaluation indicator in object detection tasks, where the IoU threshold is set to 0.5. Similarly, AP50:95 represents the mean value of average precision when the IoU threshold ranges from 0.5 to 0.95. Bold values represent the best performance in ablation experiments.
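Both indicators rest on the intersection over union (IoU) between a predicted box and a ground-truth box. The sketch below computes IoU for axis-aligned boxes and lists the thresholds averaged by AP50:95, assuming the usual step of 0.05 between 0.5 and 0.95. The function name and the `(x1, y1, x2, y2)` box format are illustrative choices.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    # Width and height of the overlap region (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


# Thresholds averaged by AP50:95, assuming the common 0.05 step.
AP_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]

pred, truth = (0, 0, 10, 10), (5, 0, 15, 10)
iou = box_iou(pred, truth)
print(iou, [t for t in AP_THRESHOLDS if iou >= t])
```

A detection counts as a true positive at a given threshold only if its IoU with a ground-truth box reaches that threshold, so AP50:95 rewards tighter localization than AP50.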

In practical applications, even though the above preprocessing greatly improves character detection accuracy, it is difficult to guarantee that every character is detected. In natural environments, flood erosion, foreign objects, natural corrosion, the camera shooting angle, and other factors can cause characters to be missed, as shown in Figure 9. To address this issue, this paper proposes a character sequence interpolation postprocessing algorithm, which exploits the regular ordering of the character sequence to fill in missing characters.

3.3 Water level calculation

With the character interpolation algorithm, we use the characters adjacent to a missing character to reconstruct its coordinates and size. Finally, by counting the number of characters and applying a linear transformation in the bottom area of the water gauge, we calculate the final water level.
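The text does not give the exact formula for this last step, so the following is only a sketch under stated assumptions: each “E” or “∃” character spans a fixed physical height (5 cm is assumed here), the completed character list covers the gauge from its top edge down to the lowest visible character, and the pixel height of the lowest character provides the local scale for the remaining distance to the water line. The function name, parameters, and default gauge length are hypothetical.

```python
def water_level_cm(chars, waterline_y, gauge_length_cm=100.0, char_height_cm=5.0):
    """Estimate the water level reading from a completed character sequence.

    chars: list of (top_y, bottom_y) pixel pairs sorted from top to bottom,
           assumed to cover the gauge from its top edge downward.
    waterline_y: pixel row of the water line taken from the segmentation mask.
    gauge_length_cm and char_height_cm are assumed values for illustration.
    """
    if not chars:
        raise ValueError("at least one character is required")
    top_y, bottom_y = chars[-1]
    char_px = bottom_y - top_y
    if char_px <= 0:
        raise ValueError("character height in pixels must be positive")
    # Local scale near the water line, taken from the lowest character.
    cm_per_px = char_height_cm / char_px
    # Partially visible segment between the lowest character and the water.
    residual_px = max(0.0, waterline_y - bottom_y)
    visible_cm = len(chars) * char_height_cm + residual_px * cm_per_px
    return gauge_length_cm - visible_cm


chars = [(0, 50), (50, 100), (100, 150)]
print(water_level_cm(chars, waterline_y=175))
```

Using the scale of the lowest character rather than a global average limits the effect of perspective, since the pixel size of a character can vary along the gauge.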

| Algorithm 1. Character sequence interpolation method. | |
|---|---|
| Character sequence interpolation | |
| 1: | Require: is a set of sorted vertical coordinate of bottom right point of character bounding boxes |
| 2: | Require: |
| 3: | Require: α |
| 4: | while do |
| 5: | calculating |
| 6: | if then |
| 7: | calculating and insert into |
| 8: | |
| 9: | else |
| 10: | |
| 11: | end if |
| 12: | end while |
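Because the symbols of Algorithm 1 did not survive in this copy, the following sketch is a reconstruction of the general idea only, not the authors' exact procedure. It assumes that the input is the sorted list of bottom-edge vertical coordinates, that the expected spacing between adjacent characters can be approximated by the median gap, and that a gap larger than `alpha` times this spacing indicates a missing character. The default `alpha=1.5` and the function name are hypothetical, and filtering of false positives is not covered.

```python
from statistics import median


def interpolate_bottoms(bottoms, char_height=None, alpha=1.5):
    """Fill gaps in a sequence of character bottom-edge coordinates.

    bottoms: vertical pixel coordinates of detected character boxes.
    char_height: expected spacing; defaults to the median gap (assumption).
    alpha: a gap above alpha * char_height is treated as a missed character.
    """
    ys = sorted(bottoms)
    if len(ys) < 2:
        return ys
    if char_height is None:
        char_height = median(b - a for a, b in zip(ys, ys[1:]))
    if char_height <= 0:
        raise ValueError("character spacing must be positive")
    i = 0
    while i < len(ys) - 1:
        gap = ys[i + 1] - ys[i]
        if gap > alpha * char_height:
            # Insert one estimated character; the remaining gap is
            # re-examined on the next iteration.
            ys.insert(i + 1, ys[i] + char_height)
        i += 1
    return ys


print(interpolate_bottoms([100, 150, 200, 300, 350]))
```

Each insertion shrinks the remaining gap by one character spacing, so the loop terminates, and gaps spanning several missed characters are filled one character at a time.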

4 EXPERIMENTS

4.1 Data set

All of the images in our data set were collected from the internet and include water gauge images with varying shooting angles, changing light, different water gauge materials, and different degrees of dirt on the gauge surface. There are 385 images used for water gauge segmentation, containing 492 water gauge targets. Among them, the training set contains 321 images and the validation set contains 64 images. For character recognition, we build the data set by randomly cropping images from the water gauge data set. There are a total of 468 images used for character recognition, containing 8722 character objects, of which the training set contains 373 images and the validation set contains 92 images.

During the training phase, random perspective transformation, random scaling, and so forth are used to augment the RGB images. Changes in light are one of the main factors that affect recognition, so we apply random brightness variation to simulate them and further improve the generalization of the model in complex scenes. Figure 11 shows images generated with brightness variation.
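A random brightness variation of this kind can be sketched as multiplying every pixel by a random factor and clipping to the valid range. The sampling range of 0.6 to 1.4, the nested-list image format, and the function name are assumptions for illustration; the paper does not state the values it used.

```python
import random


def random_brightness(image, low=0.6, high=1.4, rng=None):
    """Scale all pixel values of an RGB image by one random factor.

    image: list of rows, each a list of (r, g, b) tuples with values 0-255.
    low, high: assumed sampling range of the brightness factor.
    Returns the new image and the factor that was applied.
    """
    rng = rng or random.Random()
    factor = rng.uniform(low, high)
    out = [
        [tuple(min(255, max(0, int(round(c * factor)))) for c in px) for px in row]
        for row in image
    ]
    return out, factor


img = [[(100, 200, 0), (10, 20, 30)]]
augmented, used_factor = random_brightness(img, rng=random.Random(0))
print(used_factor, augmented)
```

Factors below 1 darken the image and factors above 1 brighten it, so a symmetric range around 1 simulates both dim and strongly lit scenes.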

4.2 Model training

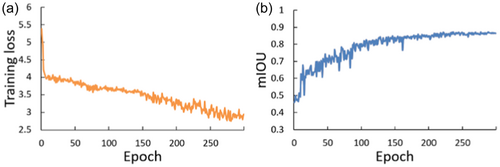

For the STDC segmentation model training, the initial learning rate is 0.001, the momentum is 0.9, the weight decay is 0.0005, the learning rate exponential decay is 0.9, and the batch size is 16. The SGD optimizer is used to update the network parameters, cross entropy loss is used as the segmentation loss, binary cross entropy loss is used as the detail guidance loss, the number of warm-up iterations is 3, and the number of epochs is 300. The training result is shown in Figure 12 and the best performance is shown in Table 2.

| Model | Input size | mIoU | Params(M) | FPS |
|---|---|---|---|---|
| STDC1 | 480 | 0.865 | 12 | 71 |
| STDC2 | 480 | 0.816 | 16 | 50 |
| STDC1 | 640 | 0.861 | 12 | 59 |
| STDC2 | 640 | 0.844 | 16 | 43 |
| STDC1 | 896 | 0.861 | 12 | 45 |
| STDC2 | 896 | 0.841 | 16 | 35 |
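For reference, the mIoU metric reported in the table can be sketched for a two-class mask as follows. Whether the reported figure averages the background and gauge classes or uses the gauge class alone is not stated, so the averaging over both classes here is an assumption, as are the function names and the nested-list mask format.

```python
def class_iou(pred, target, cls):
    """IoU of one class between a predicted and a ground-truth label mask."""
    inter = union = 0
    for p_row, t_row in zip(pred, target):
        for p, t in zip(p_row, t_row):
            inter += int(p == cls and t == cls)
            union += int(p == cls or t == cls)
    return inter / union if union else float("nan")


def mean_iou(pred, target, classes=(0, 1)):
    """Average the per-class IoU over the classes present in either mask."""
    ious = [class_iou(pred, target, c) for c in classes]
    valid = [v for v in ious if v == v]  # drop NaN for absent classes
    return sum(valid) / len(valid) if valid else float("nan")


pred = [[1, 1], [0, 0]]
target = [[1, 0], [0, 0]]
print(mean_iou(pred, target))
```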

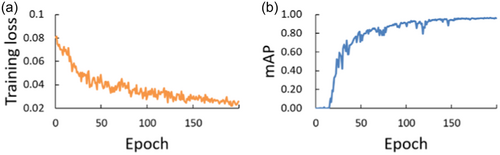

For the character detection training, the initial learning rate is 0.003, the momentum is 0.84, the weight decay is 0.0004, a cosine annealing strategy is adopted to update the learning rate, and the batch size is 32. The Adam optimizer is used to optimize the parameters, binary cross entropy is used for the classification and objectness losses, and IoU loss is used for location regression. The number of warm-up iterations is 2, and the total number of training iterations is 200. The training result is shown in Figure 13 and the model performance is shown in Table 3. mAP50 and mAP50:95 are used as evaluation criteria, where mAP50 is the mean average precision at an IoU threshold of 0.5 and mAP50:95 is the mean of the mAP values for IoU thresholds ranging from 0.5 to 0.95.
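The cosine annealing strategy mentioned above can be sketched with the standard half-cosine formula. The initial learning rate of 0.003 and the 200 training iterations come from the text; the final learning rate of 0 and the omission of the warm-up phase are simplifying assumptions, and the exact schedule in the authors' training code may differ.

```python
import math


def cosine_lr(step, total_steps=200, lr_init=0.003, lr_final=0.0):
    """Learning rate at `step` under plain cosine annealing (no warm-up).

    lr_final is an assumed value; the paper does not report it.
    """
    progress = min(max(step / total_steps, 0.0), 1.0)
    return lr_final + 0.5 * (lr_init - lr_final) * (1.0 + math.cos(math.pi * progress))


for step in (0, 50, 100, 150, 200):
    print(step, round(cosine_lr(step), 6))
```

The rate falls slowly at first, fastest around the midpoint, and slowly again near the end, which tends to give a gentler final phase than step decay.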

| Model | Brightness variation | AP50 | AP50:95 | Size | Params(M) | FPS |
|---|---|---|---|---|---|---|
| YOLOv5m | | 0.983 | 0.682 | 640 | 21 | 67 |
| YOLOv5m | √ | 0.988 | 0.70 | 640 | 21 | 67 |

4.3 Results and analysis

STDC provides two alternative models, STDC1 and STDC2. STDC2 has a deeper network structure and more parameters, so it would be expected to perform better. However, in our experiments with different input image sizes, STDC2 always performed worse than STDC1, as shown in Table 2. Although the performance of STDC2 improved as the input resolution increased from 480 to 640, it began to decline at an input resolution of (896, 896). We therefore chose STDC1 as the water gauge segmentation model.

As Table 3 shows, brightness variation increases the performance of YOLOv5m by 0.5 and 1.8 percentage points on mAP50 and mAP50:95, respectively.

We test our method on 48 images with different backgrounds. Table 4 shows that the proposed method greatly reduces the average error of water level recognition. More specifically, the average error falls by 0.93 cm, from 3.42 cm to 2.49 cm, after adding the crop operation when training the YOLOv5 model. It decreases further to 1.80 cm after applying our proposed character sequence interpolation, a gain of 0.69 cm. With tilt correction, we achieve a final average error of 1.58 cm.

| Model | Crop | Tilt correct | Seq interp | Err(cm) | Std |
|---|---|---|---|---|---|
| Base(yolo+stdc) | | | | 3.42 | 5.66 |
| Base | √ | | | 2.49 | 4.14 |
| Base | √ | | √ | 1.80 | **2.32** |
| Base | √ | √ | √ | **1.58** | 2.38 |

- Note: Bold values represent the best performance in ablation experiments.

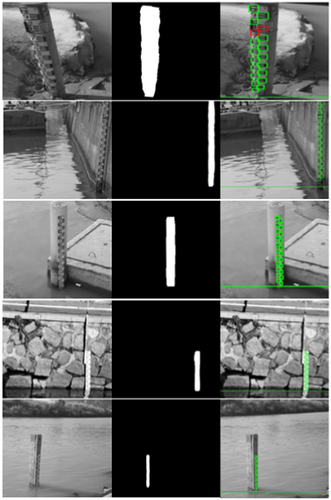

Figure 14 shows some of the recognition results of our proposed method. As the figure shows, the algorithm adapts to water level recognition in complex environments, such as water gauges that are dirty, tilted, or covered with foreign objects.

5 CONCLUSION

In this paper, we propose a new intelligent water level recognition method based on deep learning that is suitable for complex environments. We adopt STDC to finely extract the water gauge target and determine the position of the water level. YOLOv5m is used to detect characters within the water gauge region of the image, and a character sequence interpolation postprocess fills in missing characters, improving the overall generalization ability of the recognition process. Finally, we convert the pixel distance into the actual water level by linear transformation. The experiments show that this method is robust in various complex environments. Water level detection is completed automatically without any prior conditions or calibration operations, which gives the method good application and promotion value. Although our proposed method performs well in some complex scenes, it still has limitations that need further work. First, in very clean water, the segmentation model performs poorly, which increases the calculation error. Second, further study is needed to support water level monitoring both day and night.

ACKNOWLEDGMENTS

This study was supported by the National Key Research and Development Program of China (2023YFC3006700).

ETHICS STATEMENT

None declared.

Open Research

DATA AVAILABILITY STATEMENT

Research data are not shared.