Deep learning methods for protein structure prediction

Abstract

Protein structure prediction (PSP) has been a prominent topic in bioinformatics and computational biology, aiming to predict protein function and structure from sequence data. The three-dimensional conformation of proteins is pivotal for their intricate biological roles. With the advancement of computational capabilities and the adoption of deep learning (DL) technologies (especially Transformer network architectures), the PSP field has ushered in a brand-new era of “neuralization.” Here, we focus on reviewing the evolution of PSP from traditional to modern deep learning-based approaches and the characteristics of various structural prediction methods. This emphasizes the advantages of deep learning-based hybrid prediction methods over traditional approaches. This study also provides a summary analysis of widely used bioinformatics databases and the latest structure prediction models. It discusses deep learning networks and algorithmic optimization for model training, validation, and evaluation. In addition, a summary discussion of the major advances in deep learning-based protein structure prediction is presented. The update of AlphaFold 3 further extends the boundaries of prediction models, especially in protein-small molecule structure prediction. This marks a key shift toward a holistic approach in biomolecular structure elucidation, aiming at solving almost all sequence-to-structure puzzles in various biological phenomena.

1 INTRODUCTION

Proteins are the cornerstone of cellular function, coordinating the biological processes of all life forms. From enzyme catalysis to immune system responses, proteins play ubiquitous roles. The complexity of protein structures is reflected in their three-dimensional conformations, which determine their functional roles and mechanisms of action. Therefore, accurate prediction of protein structure helps us to gain insight into the function of proteins as well as the basic mechanisms of life and provides an essential foundation for drug design,1 disease treatment,2 antibody design,3 and synthetic biology.4

Anfinsen's research5 established that the native structure of a protein is determined solely by its amino acid sequence. Understanding this sequence-based paradigm has since become a key area of study. Advances in experimental structural biology techniques, such as X-ray crystallography,6 nuclear magnetic resonance (NMR),7 and cryo-electron microscopy (cryo-EM),8 have enabled the determination of high-resolution, high-quality protein structures. Despite these developments, a substantial “structural knowledge gap” remains because these techniques are costly and time-intensive. In addition, interpreting the resulting data requires extensive expertise,9 and the steadily growing divergence among protein sequences and structures further increases the complexity of prediction. Knowledge-based computational methods have therefore emerged to complement experimental methods, help bridge this gap, and advance the field of protein structure prediction.

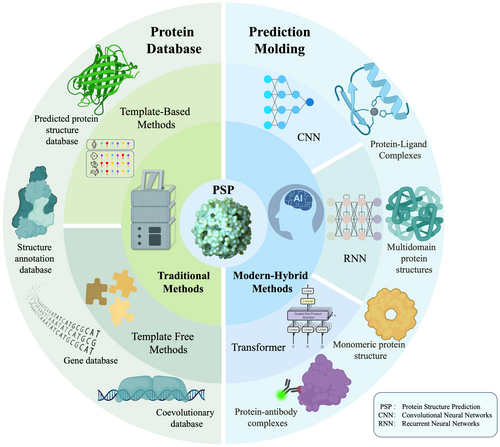

Artificial intelligence technologies, particularly machine learning, have developed rapidly in recent years. Against this backdrop, deep learning methods have been widely employed in PSP because of their high-precision predictions and ability to handle nonhomologous proteins (Figure 1).10 Using an innovative Transformer network architecture, DeepMind's AlphaFold 2 has redefined the accuracy standard of PSP. Moreover, AlphaFold 2 has been used to predict structures for 98.5% of human proteins,11 making precise predictions of protein functions and RNA structures possible.12 Recently, AlphaFold 3 pushed the boundaries of PSP further.13 It adopts a diffusion-based module that predicts raw atomic coordinates through a generative diffusion process, together with an optimized Pairformer module. This design enables structure prediction for biomolecular complexes that include proteins, nucleic acids, small molecules, ions, and modified residues.14 Deep learning, as a powerful computational tool, is now changing the research landscape of protein structure prediction. As deep learning technology continues to progress, the accuracy and application scope of protein structure prediction will keep expanding, bringing more possibilities for biological research and drug development.15

Here, we present the cutting-edge methodologies in protein structure prediction facilitated by deep learning technologies and their synergistic integration with other key techniques and resources. We focus on the evolution and distinctive features of both traditional methods and contemporary deep learning models in this field. Our review encompasses the architecture of several pivotal deep neural networks (DNNs), including convolutional neural networks (CNNs), recurrent neural networks (RNNs), generative adversarial networks (GANs), and transformer models. We discuss the successful application of deep learning to protein structure prediction, especially highlighting the diversity of model applications and their features following the introduction of AlphaFold2. Additionally, we address the challenges these advanced technologies face in complex biological scenarios and provide a balanced perspective that outlines how deep learning methods have improved the accuracy and efficiency of protein structure prediction, thus aiming to contribute to accelerated progress in the field.

2 BASIS OF PROTEIN STRUCTURE

Structure prediction involves extrapolating complex structural patterns from basic structural units through mathematical and computational methods. Its core goal is to predict higher-level structures by analyzing and modeling the basic components (e.g., atoms, molecules, or amino acid sequences) to understand their physical properties, chemical properties, or biological functions.16 In biology, protein structure prediction is an important branch of structure prediction, especially occupying a key position in computational biology. Protein structure prediction methods aim to bridge the sequence-structure gap by inferring the three-dimensional conformation of a protein from the amino acid sequence.17 These provide accurate models of protein folding without relying on expensive and time-consuming experimental techniques.18 Due to the inherent complexity of the multilevel structure of proteins, an in-depth understanding and systematic analysis of their basic structural units is essential to enable end-to-end structure prediction.

2.1 Hierarchy of protein structures

Proteins are biomolecules composed of linear sequences of amino acids whose functional diversity and biological activity are determined by their specific three-dimensional structures. These three-dimensional structures are formed by amino acid residues in their primary sequences through a complex folding process. From the amino acid sequence of the primary structure, to the local folding pattern of the secondary structure, to the overall spatial conformation of the tertiary structure, up to the multisubunit assembly of the quaternary structure, each level of structure has a profound impact on the function and properties of proteins.19 This hierarchy reflects conservation during evolution and provides a multilevel source of information for structure prediction.

The amino acid sequence determines the primary structure, which is the blueprint for protein structure. The genetic code determines the uniqueness of the amino acid sequence, and this sequence determines the basic chemical properties and potential folding ability of the protein. However, the relationship between sequence and structure is not a simple one-to-one correspondence.20 Performing multiple sequence alignment (MSA) at the primary structure level reveals conserved regions and coevolutionary information in protein sequences, which helps to understand the evolutionary relationships, functional properties, and structural stability of proteins.21 Secondary structure refers to the local folding of a protein's amino acid chain, primarily stabilized by hydrogen bonds. It mainly includes α-helices (H), β-strands (E), β-turns (T), and random coils (C). The secondary structure of proteins is closely related to protein evolution and function. The tertiary structure represents the overall three-dimensional conformation of the protein, which is stabilized by hydrophobic interactions, ionic bonding, hydrogen bonding, and van der Waals forces. It determines the functional regions of the protein, such as enzyme active sites and ligand binding sites, and affects the protein's function in the cell.22 Analysis by cryo-EM reveals that the core DNA-binding domain of the tumor suppressor protein p53 binds specifically, in a tetrameric form, to p53 binding site (p53BS) DNA containing 20 base pairs. This binding strips DNA from the surface of the histone proteins and significantly alters the path of the DNA through the nucleosome, thereby activating the p21 gene. Similarly, cGMP binding induces a conformational transition in PKG, activating its kinase center and phosphorylating the substrate.23 Quaternary structures are protein complexes consisting of multiple polypeptide chains (subunits). Quaternary structure prediction is key to understanding complex biological systems. One study24 predicted the structures of more than 500,000 protein complexes using AlphaFold-Multimer, with 70% of the predicted structures having a root-mean-square deviation (RMSD) of less than four angstroms from the experimental structures. This breakthrough opens new avenues for systems biology and cellular network research. The method was further applied to membrane protein complex prediction, achieving an average TM-score of 0.82 in GPCR family proteins and providing a new perspective on membrane protein drug design.25

2.2 Accurate prediction of structure

Accurate protein structure prediction is critical for decoding the functional mechanisms of biomolecules, which embodies the core “structure-function” paradigm of molecular biology and enhances our understanding of life processes.19 High-precision structure prediction not only elucidates the molecular basis of protein function but also provides key insights in areas such as drug design, disease mechanism studies, and protein engineering. One study26 shows that high-precision structure prediction can significantly accelerate the new drug development process. The full-length spike protein structure of the SARS-CoV-2 virus was predicted using the CI-TASSER model with an average template modeling score (TM-score) of 0.9 or more.27 The high-precision predicted structures were used for virtual screening to rapidly identify potential inhibitors and accelerate the development of antiviral drugs. The accuracy of protein structure prediction is affected by several factors, including the richness of sequence information, the availability of homologous protein structures, the performance of the prediction algorithm, and computational resources.28 A noteworthy limiting factor is the sequence variability that homologous proteins accumulate during evolution while maintaining their structure.29 With the rapid development of genome sequencing technology, the availability of many homologous sequences has also significantly improved structure prediction accuracy.
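The two accuracy measures used in this section, RMSD and TM-score, can be made concrete with a minimal sketch. It assumes the predicted and experimental structures are already superimposed and aligned residue by residue; real evaluation tools also search for the optimal superposition, which is omitted here. The function names are illustrative, and the 0.5 Å floor on the distance scale is an assumption of this sketch.

```python
import math

def rmsd(coords_a, coords_b):
    """RMSD between two equal-length lists of (x, y, z) points.

    Assumes the two structures are already superimposed.
    """
    if len(coords_a) != len(coords_b) or not coords_a:
        raise ValueError("coordinate lists must be non-empty and equal in length")
    squared = sum(math.dist(p, q) ** 2 for p, q in zip(coords_a, coords_b))
    return math.sqrt(squared / len(coords_a))

def tm_score(distances, target_length):
    """TM-score for one fixed superposition.

    distances: distances (in angstroms) between aligned residue pairs.
    target_length: number of residues in the target, used for normalization.
    """
    # Length-dependent distance scale d0 from the TM-score definition.
    if target_length > 15:
        d0 = 1.24 * (target_length - 15) ** (1.0 / 3.0) - 1.8
    else:
        d0 = 0.5
    d0 = max(d0, 0.5)  # assumed lower bound for very short chains
    return sum(1.0 / (1.0 + (d / d0) ** 2) for d in distances) / target_length

# Example: a perfect 100-residue model has TM-score 1, and the score
# decreases as the per-residue deviations grow.
perfect = tm_score([0.0] * 100, 100)
noisy = tm_score([5.0] * 100, 100)
```

Because the TM-score is normalized by target length and weights small deviations more heavily than large ones, it is less sensitive than RMSD to a few badly placed residues.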

2.3 Traditional methods of protein structure prediction

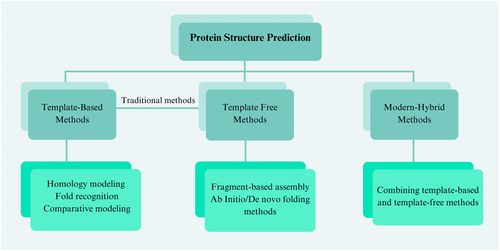

Traditional protein structure prediction methods combine known structural templates, sequence homology analyses, and biophysical principles to infer the 3D structure of a target protein using fine-grained computational models and algorithms (Figure 2). Depending on whether known protein structures are used as templates, PSP methods can be divided into two classes: template-based modeling (TBM)30 and template-free modeling (TFM).31 Both rely on computational modeling to predict the structure of proteins. TBM approaches, including homology modeling,32 fold recognition,33 and comparative modeling,31 use known homologous proteins to guide the structure prediction of target proteins. For example, one study34 successfully predicted the three-dimensional structures of fungal effector proteins using a template-based modeling approach.

Homology modeling, or homology-based modeling, builds a 3D structural model of the target protein by comparing the unknown sequence with the amino acid sequence of a template protein, using tools such as SWISS-MODEL35 and HHpred.36 The underlying assumption is that evolutionarily related proteins with similar sequences adopt similar structures. After template proteins with sequences similar to the target are found through sequence search tools such as BLAST,37 the target sequence is mapped onto the template structure through alignment adjustment and molecular modeling techniques, and the final 3D structural model is obtained through structural optimization and energy minimization. However, the effectiveness of this method is limited by the sequence identity to the known structural template: the target protein usually needs more than 30% sequence similarity to a template for the predicted structure to approach experimental resolution.38 In addition, its accuracy is limited by factors such as the size of the template protein database39 and the difficulty of aligning distantly related sequences.40 Fold recognition, or template matching, is suitable for structure prediction when sequence similarity between the target and the template is low.41 Unlike homology modeling, fold recognition compares at the 3D structure level, directly assessing the target sequence against known structures in the database to identify folds compatible with the target sequence. This approach uses physical and statistical energy functions to assess the fitness of the target sequence in all known template structures, considering factors such as the spatial position of the amino acid side chains and the conservation of the core structure.42 Although it has significant advantages over homology modeling in dealing with low sequence similarity, it is still limited by the size and quality of the template library and the applicability of the predicted structure. Comparative modeling, as a comprehensive prediction method, applies to a wider range of template selection criteria. It generates a 3D structural model of the target protein by comparing the target sequence with multiple templates and synthesizing the structural information from different templates.43 By integrating information from several templates, comparative modeling compensates for the shortcomings of single-template modeling and thus improves structure prediction accuracy.
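The roughly 30% threshold discussed above can be illustrated with a minimal sketch that computes percent identity from a pairwise alignment. It assumes the two sequences are already aligned to equal length with "-" marking gaps, and it counts identity over positions where neither sequence has a gap; other conventions for the denominator exist. The function names and the example sequences are hypothetical.

```python
def sequence_identity(aligned_target, aligned_template, gap="-"):
    """Percent identity over aligned (non-gap) positions of a pairwise alignment."""
    if len(aligned_target) != len(aligned_template):
        raise ValueError("aligned sequences must have equal length")
    aligned = 0
    identical = 0
    for a, b in zip(aligned_target, aligned_template):
        if a == gap or b == gap:
            continue  # skip positions where either sequence has a gap
        aligned += 1
        if a == b:
            identical += 1
    return 100.0 * identical / aligned if aligned else 0.0

def passes_identity_threshold(aligned_target, aligned_template, threshold=30.0):
    """True if the template exceeds the identity threshold (30% by default)."""
    return sequence_identity(aligned_target, aligned_template) > threshold

# Example with a toy alignment: 3 identical residues out of 4 aligned positions.
identity = sequence_identity("ACDE-", "ACDFG")
```

In practice the alignment itself would come from a search tool such as BLAST or HHpred; the sketch covers only the final identity calculation.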

TBM methods rely on known homologous proteins as templates, allowing relatively accurate prediction of target protein structures. However, their primary limitation is the inability to predict the structures of newly discovered proteins that lack homologous templates. TFM methods (ab initio modeling), on the other hand, can predict protein structures without any template structure.44 This makes it possible in principle to predict all protein structures, but usually with lower accuracy. The main implementation techniques are fragment-based assembly31,47 and ab initio or de novo folding.45,48 In CASP7, I-TASSER, based on a TFM approach, generated 7 (about 1/3) correct topologies with a TM-score > 0.5 out of 19 free modeling targets.46 However, these sequences were limited to 155 residues in length, and the average RMSD of the template in the aligned region was 13.5 Å, significantly greater than the average RMSD of 5.0 Å achieved by the template-based approach. Fragment-based assembly exploits the conservation of local protein structure: the target sequence is segmented into multiple short fragments, similar pieces are retrieved from a library of known structural fragments, and these pieces are assembled into a 3D structure. This method relies on a rich fragment library, and the structural model with the lowest energy is selected after multiple rounds of simulation and recombination of the short fragments. A representative algorithm, Rosetta, assembles and optimizes structures by conformational sampling and energy minimization via a Monte Carlo strategy.49 However, this approach still needs improvement in the efficiency and accuracy of conformational sampling.50 As protein size increases (beyond 100 amino acid residues), the conformational space grows exponentially. In addition, long-range interactions are difficult to capture accurately with short fragments for large proteins (typically over 100 amino acid residues).51 Ab initio folding represents an important branch of protein structure prediction. Its core idea is to predict the three-dimensional conformation of proteins based entirely on physicochemical principles, without relying on known structural templates.52 The method explores the energy landscape through molecular dynamics (MD) simulations or Monte Carlo simulations: MD integrates Newton's equations of motion to simulate the motion of atoms over time, whereas Monte Carlo uses random sampling to search for the lowest-energy state among all possible conformations of the target sequence, yielding the predicted 3D structure.53 This process involves complex energy function calculations, including van der Waals forces, hydrogen bonding, electrostatic interactions, and hydrophobic interactions.
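The Monte Carlo search described above can be sketched with the Metropolis acceptance rule on a toy problem. This is not Rosetta's algorithm or energy function: the conformation is reduced to a list of torsion angles, and `toy_energy` is a hypothetical energy in which every angle prefers -60 degrees. The step size, temperature, and seed are arbitrary illustrative choices.

```python
import math
import random

def toy_energy(angles):
    """Hypothetical energy: each torsion angle (radians) prefers -60 degrees."""
    preferred = math.radians(-60.0)
    return sum(1.0 - math.cos(a - preferred) for a in angles)

def metropolis_search(n_angles=10, steps=5000, temperature=0.2, seed=0):
    """Metropolis Monte Carlo over torsion angles; returns (start_e, best_e, best)."""
    rng = random.Random(seed)
    current = [rng.uniform(-math.pi, math.pi) for _ in range(n_angles)]
    current_e = toy_energy(current)
    start_e = current_e
    best, best_e = list(current), current_e
    for _ in range(steps):
        # Propose a random perturbation of one randomly chosen angle.
        trial = list(current)
        i = rng.randrange(n_angles)
        trial[i] += rng.gauss(0.0, 0.3)
        trial_e = toy_energy(trial)
        delta = trial_e - current_e
        # Always accept downhill moves; accept uphill moves with
        # probability exp(-delta / temperature).
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            current, current_e = trial, trial_e
            if current_e < best_e:
                best, best_e = list(current), current_e
    return start_e, best_e, best

start_e, best_e, best = metropolis_search()
```

Accepting some uphill moves lets the search escape local minima, which is why the lowest energy seen so far is tracked separately from the current state.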

Traditional protein structure prediction methods, developed over decades, have resulted in a mature technical system that integrates sequence analysis, biophysical modeling, and computational algorithms. Despite their success in several respects, they show limitations in predicting the structures of new nonhomologous proteins or of proteins with no obviously similar templates. With the significant increase in computational power and the continuous evolution of algorithms, especially the incorporation of deep learning techniques, the traditional methods are gradually being transformed into a more integrated, data-driven approach to structure prediction at the levels of functionality, dynamics, and interoperability, which has brought an unprecedented level of accuracy and range of applications to protein structure prediction.

3 MODERN DEEP-LEARNING TECHNIQUES

With the advancement of high-throughput sequencing technologies, the boundaries between traditional methods have narrowed, and a new paradigm for predicting protein structures is gradually emerging at the forefront of research: hybrid methods.54 This emerging mode of PSP transcends the rigid categorization of template-based and template-free predictions. Instead, it adopts a flexible strategy that uses the advantages of both for structure prediction.55 While traditional methods have limitations such as dependency on homology,49 sequence identity thresholds,38 and the lower accuracy of free-modeling methods,46 the latest hybrid methods alleviate these limitations to some extent by integrating a variety of deep learning network architectures.56 DeepMind's AlphaFold2 model, released in 2020, is a pioneering model for protein prediction and a successful case study of modern hybrid methods for PSP. Deep learning is an important branch of machine learning.57 In computational biology, deep learning architectures are forging innovative pathways for PSP based on the complex hierarchical structure of artificial neural networks (Table 1). The addition of various deep learning systems has also changed the traditional means of protein structure prediction. Evolving from feedforward neural networks, these systems emphasize feature extraction using multiple connected layers to achieve complex mappings from input to output. The diverse connection patterns yield customizable DNNs for different classes of data.57 Within the field of protein structure prediction, CNNs, RNNs, graph neural networks (GNNs), long short-term memory networks (LSTMs), Transformers, and GANs are among the most prominent and widely used DNNs. Applying deep learning to protein structure prediction has gone from a challenging research area to an active field, with accuracy and application scope improving through continuous optimization. This section introduces the core principles of deep learning, multiple network architectures, and their characteristics, and provides insights into strategies for model optimization.

| Model | Year of release | Open source (Y/N) | Applicability | Innovations/features | References |
|---|---|---|---|---|---|
| MetaPSICOV | 2015 | N | Contact relationships of residues within protein molecules | Contact map prediction using coevolution | [58] |
| DeepCov | 2018 | Y | Protein‒protein complex contact map and interactions | Improved prediction accuracy for nonhomologous protein complex contacts | [59] |
| DeepFragLib | 2019 | Y | Amino acid residues (fragments) | Efficiently build protein-specific fragment libraries | [60] |
| AlphaFold2 | 2021 | Y | Protein monomer and complex structures | Highly accurate protein structure prediction, scoring the accuracy of predicted structures | [11] |
| TrRosetta | 2021 | Y | Protein monomer structure | Rapid and accurate de novo structure prediction | [61] |
| CopulaNet | 2021 | N | Protein complex structure | Estimate residue coevolution directly from multiple sequence alignment (MSA) | [62] |
| RGN2 | 2022 | N | Single-sequence protein structure prediction | Protein language models, faster predictions | [63] |
| SPOT-Contact-LM | 2022 | Y | Protein contact map | Narrowed the gap between homologous and nonhomologous complex prediction | [64] |
| ColabFold | 2022 | Y | Large protein complexes | Fast and memory-efficient | [65] |
| Umol | 2023 | Y | Protein‒ligand complexes | Evoformer accepts protein sequences and the ligand small molecule SMILES as inputs | [66] |
| ESMFold | 2023 | Y | Atomic-level protein structure | Protein language modeling replaces MSA as input, which leads to faster predictions | [67] |
| IgFold | 2023 | Y | Antibody variable region 3D structure | Deep learning-based end-to-end antibody structure prediction | [68] |
| RoseTTAFoldNA | 2024 | Y | Protein‒nucleic acid complex | Based on the three-track architecture of RoseTTAFold, end-to-end protein–NA structure prediction network | [69] |
| AF-Cluster | 2024 | Y | Protein multi-conformation prediction | Clustering of MSA by sequence similarity | [70] |
| DeepFusion | 2024 | N | Protein‒RNA complexes | Dissect the patterns of RNA‒protein interactions | [71] |
| AlphaFold 3 | 2024 | N | Large protein complexes | Fast and memory-efficient | [72] |

3.1 Deep learning and neural networks

Neural networks, fundamental to deep learning, comprise artificial neurons that simulate biological neurons by receiving inputs, computing weighted sums, and generating outputs through activation functions.73 By layering these neurons, DNNs excel at learning complex nonlinear relationships between protein sequences and structures, which enhances the model's ability to process intricate data structures. One study74 used the ReLU activation function, which reduces the risk of overfitting by inducing sparsity in the network and reducing the interdependence between parameters. The loss function is pivotal because it quantifies discrepancies between model predictions and actual data, influencing both the learning trajectory and the network's generalization capabilities. The mean squared error (MSE) is commonly used to assess the accuracy of predicted protein structures against experimental data,75 whereas cross-entropy loss evaluates how well the model's output probability distribution matches the target distribution. One model76 enables masked prediction across four protein structure hierarchies by computing cross-entropy losses over residue types, distances, angles, and dihedrals.
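The building blocks named above (a weighted sum passed through ReLU, the MSE loss, and the cross-entropy loss) can be written out in a few lines. The following is a minimal sketch in plain Python rather than any particular framework's API; the function names and the small `eps` guard against log(0) are choices made for this illustration.

```python
import math

def relu(x):
    """Rectified linear unit: passes positive values, zeroes out the rest."""
    return max(0.0, x)

def neuron(inputs, weights, bias):
    """Artificial neuron: weighted sum of inputs plus bias, then ReLU."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return relu(z)

def mse(predicted, observed):
    """Mean squared error, e.g. between predicted and experimental distances."""
    return sum((p - o) ** 2 for p, o in zip(predicted, observed)) / len(predicted)

def cross_entropy(predicted_probs, target_probs, eps=1e-12):
    """Cross-entropy between a predicted and a target probability distribution."""
    return -sum(t * math.log(max(p, eps))
                for p, t in zip(predicted_probs, target_probs))

# Example: a neuron whose weighted sum is negative outputs exactly zero,
# which is the source of the sparsity attributed to ReLU.
silent = neuron([1.0, 2.0], [0.5, -1.0], 0.0)
active = neuron([1.0, 2.0], [0.5, 1.0], 0.5)
```

MSE suits continuous targets such as coordinates or distances, while cross-entropy suits categorical targets such as residue types or binned distances.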

In addition, deep learning techniques are often combined with supervised learning methods such as logistic regression, support vector machines, decision trees, and random forests for binary classification problems.77 One study78 proposed the IDEGBM prediction mechanism, based on the extremely randomized trees (ERT) model, to extract innovative hybrid features from evolutionary information, secondary structures, chemical properties, and global descriptors that reflect the diversity of different amino acid arrangements. For multiclass classification problems, such as protein functional domains and protein–protein interaction interface types, softmax regression or the one-versus-rest (OvR) strategy is often used to train the model on protein datasets with known structures and then predict the structural features and functional properties of new proteins. DeepMC-iNABP79 employs the “one-versus-all” multiclass classification technique, which applies one-hot encoding to reshape the categorical variables (labels) of the data instances, to identify the structure of nucleic acid binding proteins (NABPs). In contrast, unsupervised learning methods operate independently of labeled data, enabling the discovery of inherent patterns and structures directly from the data set.80 Techniques such as k-means and hierarchical clustering are frequently employed to elucidate intrinsic patterns and natural groupings within protein sequences or structures.81 These methods facilitate a deeper understanding of the similarities and differences among protein families. Additionally, self-supervised learning approaches, which leverage contrastive learning, are increasingly used to explore the latent structures within proteins, further enhancing our comprehension of molecular biology.82
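One-hot encoding and softmax, both mentioned above, can be illustrated with a minimal sketch. It is not the DeepMC-iNABP implementation: the 20-letter alphabet ordering and the choice to encode unknown residues as all-zero rows are assumptions of this example.

```python
import math

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"  # 20 standard residues, assumed ordering

def one_hot(sequence):
    """Encode a protein sequence as a list of 20-dimensional one-hot rows."""
    index = {aa: i for i, aa in enumerate(AMINO_ACIDS)}
    encoded = []
    for residue in sequence:
        row = [0] * len(AMINO_ACIDS)
        if residue in index:  # unknown residues (e.g. "X") stay all-zero
            row[index[residue]] = 1
        encoded.append(row)
    return encoded

def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    shift = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Example: encode a short peptide and turn three class scores into probabilities.
encoded = one_hot("ACX")
probabilities = softmax([2.0, 1.0, 0.1])
```

The class with the highest score also receives the highest probability, so the predicted label is the index of the largest softmax output.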

3.2 Deep learning architectures

3.2.1 CNNs

Deep learning methods excel at handling large and complex datasets, revealing intricate relationships between protein sequences and their structures. These techniques are particularly adept at capturing and exploiting nonlinear and high-dimensional features that are challenging for traditional template-based or template-free modeling approaches. CNNs use convolutional filters to extract local features from the input and then use pooling layers to reduce the data dimensionality.83 This makes them suitable for processing grid-like data, especially for protein secondary structure prediction.84 While standard 1D CNNs handle linear sequence data, 2D CNNs can be applied to protein contact maps, which are 2D matrices that encode the distance relationships between amino acid residues; 2D CNNs efficiently identify patterns in these image-like matrices to infer the tertiary structure of proteins. In addition, 3D CNNs can learn key information about the spatial conformation of proteins directly from the original 3D structure. CNNs also show great power when applied to predicted distance maps or to coevolutionary signals extracted from MSAs by direct coupling analysis (DCA) models. DeepCov uses fully convolutional neural networks (FCNNs) to achieve highly accurate structure prediction even when few homologous sequences are available.59 With cascading convolutional and pooling layers, CNNs can capture the complex spatial hierarchies of proteins and thus accurately predict the multilevel structure of proteins.85 Ju et al. proposed CopulaNet,62 which employs a CNN architecture. By using convolution and pooling layers to extract local features of protein sequences and integrating coevolutionary information between residues, CopulaNet achieves high-precision predictions of protein structures. PSSP-MVIRT86 constructs a hybrid network architecture of CNNs and bidirectional gated recurrent units to extract global and local features of peptides, and its SOV score is more than 15% higher than that of HMM and other methods.
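The convolution and pooling operations described above can be shown on a 1D per-residue signal. The sketch below is a plain-Python illustration, not code from any of the cited models; the hydrophobicity-like input values and the edge-detecting kernel are hypothetical.

```python
def conv1d(signal, kernel):
    """'Valid' 1D convolution as used in CNN layers (cross-correlation)."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]

def max_pool1d(values, size):
    """Non-overlapping max pooling; trailing values that do not fill a window are dropped."""
    return [
        max(values[i:i + size])
        for i in range(0, len(values) - size + 1, size)
    ]

# Hypothetical per-residue feature (e.g. a hydrophobicity-like scale).
per_residue = [0.2, 0.9, 0.8, 0.1, -0.5, -0.7, 0.3, 0.6]
# A small filter that responds to local changes along the sequence.
edge_filter = [1.0, 0.0, -1.0]

feature_map = conv1d(per_residue, edge_filter)  # local feature extraction
pooled = max_pool1d(feature_map, 2)             # dimensionality reduction
```

A 2D CNN applies the same idea with 2D filters sliding over a contact or distance map instead of a sequence.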

3.2.2 RNNs and LSTM

RNNs and LSTM networks have become central to sequence-to-sequence prediction models, especially in the fields of protein folding and dynamics.87 RNNs excel at processing protein sequences characterized by sequential dependencies. Compared with CNNs, the distinct advantage of RNNs lies in their ability to handle long-range dependencies within sequence data. This capability enables them to predict functional or structural domains of proteins and to process longer sequences. One study88 used an RNN-based seq2seq autoencoder to learn embedding vectors, then used an attention mechanism to learn binding-site information between compounds and proteins, while using a CNN to train a compound–protein interaction (CPI) prediction model. To alleviate the vanishing or exploding gradients that occur when RNNs process long sequences, LSTM was proposed.89 It builds on standard RNNs by combining memory cells with gating mechanisms that regulate information flow, allowing the network to capture interactions between distal amino acids in protein sequences. By capturing these interactions, LSTM-based RNNs recognize the overall structural features of a sequence, which improves the accuracy of protein prediction models. One study87 demonstrates that simple character-level language models based on LSTM neural networks can learn probabilistic models of time series generated from physical systems and shows the reliability of the models using force spectroscopy trajectories for different benchmark systems and multi-state riboswitches. In addition, Wang et al.90 obtained the best prediction performance in balanced F1 scores using an RNN with LSTM architecture to assess the effect of mutations on protein–ligand binding affinity.
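The memory cell and gating mechanism described above can be sketched as one time step of an LSTM. For readability the sketch uses a single hidden unit with a scalar input, whereas practical models use vectors and weight matrices. The `params` layout (gate name mapped to input weight, recurrent weight, and bias) and the example weights are assumptions of this illustration.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def lstm_step(x, h_prev, c_prev, params):
    """One step of a single-unit LSTM.

    params maps each gate name to a tuple (w_x, w_h, bias).
    Returns the new hidden state h and the new cell state c.
    """
    def pre_activation(gate):
        w_x, w_h, bias = params[gate]
        return w_x * x + w_h * h_prev + bias

    forget = sigmoid(pre_activation("forget"))       # how much old memory to keep
    write = sigmoid(pre_activation("input"))         # how much new content to store
    output = sigmoid(pre_activation("output"))       # how much memory to expose
    candidate = math.tanh(pre_activation("candidate"))

    c = forget * c_prev + write * candidate          # update the memory cell
    h = output * math.tanh(c)                        # gated hidden state
    return h, c

# Run the cell over a short hypothetical per-residue signal.
example_params = {
    "forget": (0.5, 0.1, 1.0),
    "input": (0.8, 0.2, 0.0),
    "output": (0.3, 0.4, 0.0),
    "candidate": (1.0, 0.5, 0.0),
}
h, c = 0.0, 0.0
for value in [0.2, -0.4, 0.9, 0.1]:
    h, c = lstm_step(value, h, c, example_params)
```

Because the cell state is updated additively and scaled by the forget gate, information can persist over many steps, which is what mitigates vanishing gradients relative to a plain RNN.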

3.2.3 GANs

GANs consist of two competing networks, a generator and a discriminator, which are trained through generator-discriminator adversarial training to improve the quality of generated data further. GAN has shown excellent performance in image processing and generative tasks, and recently, these advantages have gradually been introduced into bioinformatics and structural biology.91 In the field of PSP, GAN is mainly applied to data enhancement and structure generation. GANcon92 predicts accurate protein contact maps using GAN networks. To address the lack of homologous protein sequences and the low accuracy limitation of long-distance contact prediction, CGAN-Cmap uses GAN to capture and interpret distance distributions from 1D sequential and 2D paired feature maps, improving the accuracy of long-distance contacts in the model by more than 3.5%.93 In addition,94 used conditional Wasserstein GAN (CWGAN) for protein lysine modification site prediction. The resultant Euclidean distance was below 0.03 angstroms, which was much smaller than that of conditional GAN (CGAN) with Euclidean distance below 0.03 angstroms, which is much smaller than that of CGAN with distance above 0.1. To reveal potential sequence-structure relationships and design protein sequences for possibly novel structural folds, gcWGAN95 used Wasserstein distance in the loss function of the WGAN. The folding accuracy was comparable to that predicted by the cVAE, but sequence diversity and novelty are significantly higher than that of cVAE. In addition to GAN, generative models based on flow matching show tremendous potential.96 These models include diffusion models and rectified flows, among others. 
Diffusion models generate high-quality structural predictions by gradually adding noise and learning the denoising process.97 Rectified flows, on the other hand, generate accurate predictions by gradually transforming and correcting the data distribution.98 These methods have significant advantages in capturing the complex spatial configurations and dynamical behavior of proteins. Denoising autoencoders (DAEs) also help to learn dense, stable, and low-dimensional representations of proteins in an unsupervised manner.99 Graph neural networks (GNNs), which process non-Euclidean data structures consisting of vertices and edges, efficiently capture the complex topology of protein molecules and identify residue interactions. The GCN-based DeepFRI100 represents amino acid residues as graph vertices and residue interactions as graph edges, enabling molecular-level annotation of protein functions. This graph representation enhances the flexibility and computational efficiency of modeling the complex topology of proteins.
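To make the graph representation concrete, the following sketch builds a residue contact graph from Cα coordinates and applies one graph-convolution layer with symmetric normalization. It is a simplified illustration, not DeepFRI's actual pipeline: the 8 Å distance cutoff, the function names `contact_graph` and `gcn_layer`, and the ReLU activation are assumptions chosen for this example.

```python
import numpy as np


def contact_graph(ca_coords, cutoff=8.0):
    """Residues are vertices; an edge joins two residues whose
    C-alpha atoms lie closer than `cutoff` angstroms (assumed threshold)."""
    coords = np.asarray(ca_coords, dtype=float)
    diff = coords[:, None, :] - coords[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    adj = (dist < cutoff) & ~np.eye(len(coords), dtype=bool)  # no self-edges
    rows, cols = np.nonzero(np.triu(adj))                     # each edge once
    edges = [(int(i), int(j)) for i, j in zip(rows, cols)]
    return adj, edges


def gcn_layer(adj, features, weight):
    """One graph-convolution layer: add self-loops, normalize the
    adjacency symmetrically, mix neighbor features, apply ReLU."""
    a_hat = adj.astype(float) + np.eye(len(adj))
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    a_norm = a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    return np.maximum(a_norm @ features @ weight, 0.0)
```

Stacking several such layers lets each residue's representation depend on progressively larger spatial neighborhoods, including residues that are far apart in sequence but close in space.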

3.2.4 Transformer

The Transformer architecture proposed by Vaswani et al.101 has gained widespread attention because of its excellent performance in natural language processing (NLP). As shown by AlphaFold2, the Transformer has been successfully applied to protein structure prediction.10 The Transformer's core feature, the self-attention mechanism, enables it to capture global dependencies in sequence data efficiently.102 In a Transformer, each token in an input sentence can “attend” to all other tokens by exchanging activation patterns corresponding to the intermediate outputs of neurons in the neural network. In contrast to traditional CNN and RNN architectures, the global dependency modeling capability of the Transformer, combined with its parallel processing advantage, allows the model to consider the information between any two positions within a sequence, thus effectively identifying long-range dependencies. It introduced a paradigm shift in sequence processing by relying exclusively on a self-attention mechanism to weigh the importance of different parts of the input data.103 Unlike RNNs and LSTMs, which process data sequentially, the Transformer processes data in parallel, greatly speeding up training and enhancing the ability to capture complex dependencies in the data. Because self-attention lets the model attend to all parts of the sequence simultaneously, it can model different kinds of biological sequences for multitype structure prediction of proteins.104 It is particularly effective when contextual relationships span long sequences, such as the complex folding patterns of protein or RNA molecules. Since the release of AlphaFold2, many models have adopted the Transformer architecture. The RoseTTAFoldNA model69 significantly broadens the range of predictable structures by integrating various biomolecular data such as protein sequences, nucleic acid sequences, metal ions, small-molecule ligands, and covalent linkage modifications as inputs. 
The Umol model66 focuses on the structure prediction of protein–ligand complexes, accepting protein sequences and small-molecule SMILES strings as inputs and demonstrating the ability to resolve the interactions between proteins and small molecules, which is crucial for drug design and functional molecule research. The development of Transformers for protein structure prediction has not only led to revolutionary innovations but has also advanced the prediction of interactions between proteins and other biomolecules (e.g., nucleic acids, other proteins) and small-molecule ligands, expanding the variety and complexity of structures that models can predict.105
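The self-attention mechanism discussed above can be sketched as single-head scaled dot-product attention in NumPy. This is a minimal illustration under stated assumptions: it uses one head, no masking, and randomly chosen projection matrices, and the names `self_attention`, `Wq`, `Wk`, and `Wv` are introduced here for the example rather than taken from any cited model.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention.

    X: (L, d_model) token (e.g., residue) embeddings.
    Returns the attended representations and the (L, L) attention weights.
    """
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    scores = Q @ K.T / np.sqrt(K.shape[-1])  # every position scores every other
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ V, weights
```

Because the score matrix covers all pairs of positions at once, residue 1 can draw on residue 300 in a single layer, and all rows are computed in parallel rather than step by step as in an RNN. The cost is the quadratic (L, L) weight matrix, which is one source of the high computational requirements of Transformers.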

The Transformer provides a powerful framework for dealing with long-range dependencies across sequences, whereas CNNs apply convolutional filters to capture local structural motifs. RNNs and LSTMs excel at modeling the continuity of sequential data, which is crucial for temporal dynamics. GANs, on the other hand, innovate by synthesizing realistic biological data, which is invaluable for training predictive models where experimental data are scarce or incomplete. These distinct architectures provide researchers with a diverse toolkit, with each approach bringing unique advantages to different challenges in protein structure prediction. For example, CNNs may be better suited for static structure analysis, RNNs for dynamic processes, LSTMs for long-term evolutionary studies, GANs for generating new biomolecular structures, and Transformers for comprehensive sequence analysis that requires understanding both local and global context. However, these models also have certain limitations. CNNs may ignore global dependencies when capturing local features.106 RNNs and LSTMs are susceptible to the vanishing gradient problem and are particularly limited when dealing with long sequences.107 GANs, although advantageous for data generation, are unstable during training and prone to mode collapse.108 The Transformer, despite its outstanding performance in modeling cross-sequence dependencies, has high computational resource requirements, and its generalization ability on small-sample datasets must be improved. These limitations still need to be addressed in protein structure prediction.

In summary, the various integrations of network architectures based on deep learning provide powerful tools for advancing our understanding of protein structure. The unique features of each architecture enable it to handle specific aspects of the complex data involved in predicting protein folding and interactions. This complementary set of tools enhances our ability to model and understand biological systems. It paves the way for innovative approaches to drug design, genetic engineering, and other applications in biotechnology and medicine.

3.3 Training, validation, and evaluation of predictive models

Since the release of AlphaFold, optimizing the performance of protein structure prediction models has become one of the main focuses of computational biologists.109 The optimal design of algorithms in the training, validation, and evaluation phases is a key component in achieving this goal. Training is the initial phase in which the model learns to predict results from the data set, aiming to maximize prediction accuracy and generalization. This stage mainly involves data preparation and preprocessing, forward propagation and feature learning, loss calculation, backpropagation, parameter updating, and iterative optimization. Proteins have a multilevel structure and form a variety of protein-ligand complexes, which makes feature extraction from information such as sequences a key aspect of training.110 Feature extraction has traditionally relied on position-specific scoring matrices (PSSMs) and simple encoding schemes. PSSMs are derived from multiple sequence alignments and statistically represent the conservation of each amino acid at a specific position in the alignment. Conventional feature extraction often ignores the local context or long-range interactions of amino acids. To address this shortcoming, evolutionary scale modeling (ESM) uses the Transformer architecture to learn evolutionary information and sequence patterns from a large amount of protein sequence data and thereby capture local and long-range dependencies in sequence data.67 ESMFold enables end-to-end atomic-level prediction from a single sequence by combining language models with structural modules.111 The subsequent release of ESM-2 (ref. 67) introduces a multiscale feature fusion strategy based on the attention mechanism, which optimizes position embedding and employs rotary position embedding (RoPE) to handle sequences of arbitrary length without being limited by the sequence lengths seen during pretraining. 
Principal component analysis (PCA) is commonly used as a dimensionality reduction technique for high-dimensional protein structure data. By identifying the principal components, that is, the most significant axes of variation in the data, PCA allows the model to focus on the essential features, thus reducing the computational load and improving learning efficiency.112 For example, Ojeda-May et al.113 used PCA to describe the general folding characteristics of proteins, combining a visual representation of the first principal component with a density map to capture and detail the typical rearrangement patterns of adenylate kinase (AdK) in the ATPlid and AMPlid regions. In biological sequence analysis, feature extraction methods such as NMBroto, Z curve-12bit, SNC, and DNC can effectively capture local and global features of sequences by computing positional correlation and geometric mapping.114 These methods perform well in predicting RNA and DNA sequences, but their application to protein structure prediction is challenging. The functions and structures of proteins depend not only on sequence but also on complex 3D conformations and multilevel interactions, so it is difficult to fully reveal the complex properties of proteins with these linear feature extraction methods alone. To address this problem, deep learning-driven feature extraction methods, such as autoencoders and multiscale feature extraction techniques, have shown greater potential in recent years, further improving the accuracy and adaptability of protein structure prediction by combining local and global features.115
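As an illustration of the PSSM-based features mentioned above, the sketch below derives a simple log-odds profile from a toy gapless alignment. It is deliberately simplified and should not be read as the procedure used by PSI-BLAST or any cited work: the uniform background frequencies, the flat pseudocount, the absence of sequence weighting, and the function name `pssm_from_msa` are assumptions made for this example.

```python
import numpy as np

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"
AA_INDEX = {aa: k for k, aa in enumerate(AMINO_ACIDS)}


def pssm_from_msa(msa, pseudocount=1.0, background=None):
    """Return an (L, 20) log-odds matrix from aligned sequences.

    Characters outside the 20 standard residues (e.g., gaps) are skipped.
    The background defaults to uniform frequencies (an assumption).
    """
    length = len(msa[0])
    if any(len(seq) != length for seq in msa):
        raise ValueError("all aligned sequences must have the same length")
    counts = np.full((length, len(AMINO_ACIDS)), pseudocount, dtype=float)
    for seq in msa:
        for pos, aa in enumerate(seq):
            if aa in AA_INDEX:
                counts[pos, AA_INDEX[aa]] += 1.0
    freqs = counts / counts.sum(axis=1, keepdims=True)
    if background is None:
        background = np.full(len(AMINO_ACIDS), 1.0 / len(AMINO_ACIDS))
    return np.log2(freqs / background)  # positive = enriched at this column
```

Each row scores one alignment column independently of its neighbors, which is why such profiles capture conservation well but miss the local context and long-range couplings that learned embeddings aim to recover.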

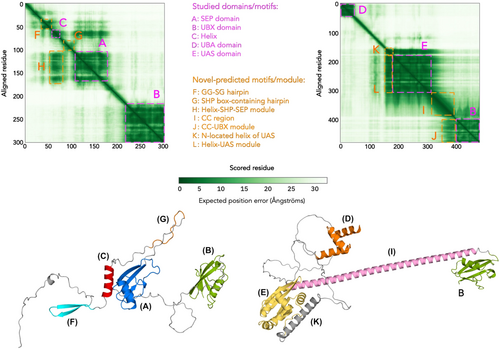

The validation and evaluation of protein structure prediction require objective metrics that measure the similarity between computational models and experimentally determined reference structures. Metrics such as the TM-score and the predicted aligned error (PAE) are essential for fine-tuning parameters and preventing overfitting. The TM-score was proposed by Zhang116 as a metric of protein structural topological similarity. It improves on traditional metrics such as RMSD by weighting small distance errors more strongly than large ones, thus increasing the sensitivity to the global fold rather than to local differences. In addition, the TM-score employs length normalization to ensure that its value is independent of protein size, allowing consistent structural comparisons of proteins of different lengths. Protein pairs with TM-scores >0.5 are mostly in the same fold, whereas protein pairs with TM-scores <0.5 are predominantly not in the same fold.117 AlphaFold-Multimer uses a weighted combination of pTM and ipTM as its model confidence, with ipTM scoring the predicted interactions between residues of different chains.24 On the other hand, the PAE provides the expected error in residue-residue prediction throughout the protein sequence, reflecting the dynamic nature of protein residues (Figure 3). One study119 concluded that the PAE plot of AF2 correlates with the distance variation (DV) matrix of MD simulations. Unlike traditional similarity metrics that rely on global superposition and are susceptible to structural domain shifts, the local distance difference test (lDDT) provides a robust assessment of local model quality and remains relevant even when domains shift. lDDT serves as a superposition-free score for evaluating the local distance differences of all atoms in the model, including validation of stereochemical plausibility. 
AlphaFold2 applies this concept in the form of pLDDT, a predicted per-residue lDDT-Cα score.10 The score ranges from 0 to 100 and indicates the confidence of a single model residue; the higher the score, the higher the confidence. Language model-based protein structure prediction methods such as ESMFold also use pLDDT-based metrics, and very low pLDDT scores correlate with a high propensity for intrinsic disorder in protein structure.11 Furthermore, for the evaluation of protein-protein complex structure prediction, DockQ combines several criteria, namely the interface root mean square deviation (iRMSD), the ligand root mean square deviation (LRMSD), and the fraction of native interface contacts (Fnat), to quantify the accuracy of the prediction model in protein interactions.120 Optimization of structural prediction models focuses on algorithmic improvement and strategy tuning in the training, validation, and evaluation phases. Together, these phases form the cornerstone of effective model development, ensuring that the models are highly accurate and robust for different protein structure prediction tasks.
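The length normalization and distance weighting of the TM-score can be made concrete with a short sketch. It evaluates the standard TM-score sum, with terms 1/(1 + (d_i/d0)^2) and d0 = 1.24 (L - 15)^(1/3) - 1.8, for two Cα traces that are assumed to be already superimposed and paired residue by residue. The full TM-score additionally maximizes this sum over superpositions, which is omitted here; the lower bound of 0.5 Å on d0 for short chains and the function names `tm_d0` and `tm_score` are assumptions of this example.

```python
import numpy as np


def tm_d0(length):
    """Length-dependent distance scale d0 in angstroms.
    The 0.5 floor guards short chains (an assumption of this sketch)."""
    return max(1.24 * np.cbrt(length - 15.0) - 1.8, 0.5)


def tm_score(model_ca, ref_ca):
    """TM-score-style sum for pre-superimposed, residue-paired C-alpha traces.
    No search over superpositions is performed here."""
    model = np.asarray(model_ca, dtype=float)
    ref = np.asarray(ref_ca, dtype=float)
    if model.shape != ref.shape:
        raise ValueError("model and reference must have the same shape")
    d = np.linalg.norm(model - ref, axis=1)  # per-residue deviation
    d0 = tm_d0(len(ref))
    return float(np.mean(1.0 / (1.0 + (d / d0) ** 2)))
```

A residue displaced by exactly d0 contributes 0.5 to the sum, while a residue displaced by many angstroms contributes almost nothing; this is why a few badly placed loops lower the TM-score far less than they inflate the RMSD.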

In model optimization for protein structure prediction, a systematic approach is essential to ensure the generality and robustness of the model. Chou's five-step rule provides a theoretical basis for model development, covering the complete process from benchmark data set construction, mathematical representation, and prediction algorithm development to cross-validation and software implementation.121 Based on Chou's five-step rule, multiscale feature extraction methods have achieved significant results in other biological sequence analysis tasks. For example, in DNA-protein binding (DPB) prediction, researchers systematically analyzed fused sequence features with a multiscale CNN to accurately predict the regulatory mechanism of gene expression.122 This approach combines local and global features, effectively handles complex dependencies, and provides a reference for applying deep learning in protein structure prediction. In deep learning model development, following Chou's five-step rule helps ensure the transparency and reproducibility of model development, with particular advantages in feature extraction and validation. Combining traditional rules with cutting-edge technologies enables protein structure prediction to achieve higher accuracy and adaptability in the training, validation, and evaluation processes.56

In the prediction of protein structures, regardless of the method chosen, the accuracy and efficiency of the results heavily rely on the availability of bioinformatics databases. Therefore, using these open-access databases is essential for protein structure prediction. The protein data bank (PDB),123 UniProt,124 and AlphaFold Protein Structure Database (AlphaFold DB)125 contain exhaustive information ranging from protein sequences to structures, functions, and protein–protein interactions (Table 2). These databases are pivotal for algorithm development, model training, and statistical analysis within deep learning-based technologies. They also provide a foundation for validating and assessing the accuracy of new algorithm predictions, which is indispensable for advancing research and applications in the field of biomedical sciences.

| Database | Data type | Features | Coverage | Official website |
|---|---|---|---|---|
| Protein data bank | 3D structures | X-ray, NMR, cryo-EM derived | Approximately 200,000 protein structures | rcsb.org |
| UniProt | Protein sequence database | Comprehensive biological information | More than 200 million sequences | uniprot.org |
| InterPro | Protein family and structural domain database | Aggregates multiple database info | More than 30,000 sequences | interpro |
| Gene ontology (GO) | Gene database | Biological processes, molecular functions | Approximately 1.5 million genes | geneontology.org |
| STRING | Protein interaction networks | Predicted and experimental interactions | More than 59 million proteins | string-db.org |
| ESM Atlas | Predicted protein structure | Computational prediction-based structural ensemble | More than 700 million metagenomic proteins | esmatlas.com |
| AlphaFold DB | Predicted protein structure | Computational prediction-based structural ensemble | Over 200 million protein structure predictions | alphafold.ebi.ac.uk |
| ESM Metagenomic Atlas | Predicted protein structure | Computational prediction-based structural ensemble | 772 million predicted metagenomic protein structures | esmatlas.com |
| BFD (Big Fantastic Database) | Protein sequence database | Large-scale sequence data for machine learning | Over 2.5 billion sequences | bfd.mmseqs.com |
| MGnify | Metagenomic sequences and annotations | Automated analysis of microbiome sequencing data | Over 700,000 metagenomic datasets from various environments | metagenomics |

4 MAJOR ADVANCES IN DEEP LEARNING-BASED PROTEIN STRUCTURE PREDICTION

Protein structure prediction is a fundamental and highly challenging problem in bioinformatics. Traditional methods are limited by the coverage of template libraries and the accuracy of force fields, while modern prediction methods have shown clear advantages in recent years.126 When templates are available, deep learning methods improve the accuracy of template selection and optimize the alignment between templates and target sequences. For proteins without a suitable template, deep learning methods can predict the structure from scratch using a strategy similar to free modeling. This approach typically uses deep learning models to process large amounts of data in order to learn and predict complex protein folding patterns across various types of protein structure prediction (Table 3). In the 14th Critical Assessment of protein Structure Prediction (CASP14) competition, the AlphaFold 2 model performed well owing to its innovative attention mechanism, advanced information encoding, and end-to-end framework.132 Following the release of AlphaFold 2, a series of models such as Umol, RGN2, and TrRosetta adopted deep learning architectures, including the Transformer, and further refined their algorithmic strategies to greatly enhance predictive power. The recent release of AlphaFold 3 further extends the boundaries of predictive modeling, particularly in protein-small molecule structure prediction. This extension marks a key shift toward a holistic approach in biomolecular structure elucidation, aiming to address virtually all sequence-to-structure challenges in various biological phenomena.

| Type of prediction | Main models | Solution | Features and application scenarios | Challenge | Reference |
|---|---|---|---|---|---|
| Single-chain protein | trRosettaX-Single | Transformer-based language modeling and multiscale networks | Predict natural protein and single-sequence structures | Single-sequence accuracy | [127] |
| Multichain protein/protein complexes | HelixFold-Multimer | Integration of domain expertise to optimize cross-chain interaction modeling | Predict antigen-antibody and peptide–protein interfaces | Capturing complex interactions; improving prediction accuracy | [128] |
| Protein-small molecule complex | RoseTTAFold All-Atom | Optimizing the three-track architecture | Atomic-level precision; custom pockets per ligand | Computational efficiency; detailed feature learning | [129] |
| Protein-nucleic acid complex | RoseTTAFold NA | Extend all tracks of the network to support nucleic acids | Protein–NA interface modeling; designing sequence-specific nucleic acid binding proteins | Improving single-mode performance and prediction accuracy | [69] |
| Protein–protein interaction | OpenFold | Retrain AlphaFold2 to improve generalization | Precise prediction; framework compatibility | New molecular modeling; different protein family | [130] |
| Covalent modification of structure | PPICT | Elastic network models (ENMs) and network embedding method | Predict posttranslational modification cross-talk | Lack of gold standard data sets; large protein pair space | [131] |
| Multimodal structure | AlphaFold 3 | Using the pairformer module and diffusion module | Unified deep learning for high-accuracy biomolecular modeling | Managing complex chemical entities; dynamic structure; optimizing computational demands | [13] |

4.1 AlphaFold's development and innovation

The AlphaFold2 model primarily consists of two components: (i) the Evoformer module for learning protein MSA sequence information (48 blocks) and (ii) the 3D equivariant structure module for interpreting the three-dimensional structure of protein sequences (eight blocks). The Evoformer module uses a self-attention mechanism to process and integrate MSA data, enhancing the ability of the model to capture interactions between amino acid residues, particularly those that coevolve during protein folding. This enables efficient exchange of information within the MSA and pair representations, which allows direct inference of spatial and evolutionary relationships and success in learning complex patterns in protein sequences that are not explicitly bound to any known structure. In the 3D equivariant structure module, the model uses invariant point attention (IPA) and a recycling iteration mechanism to extract evolutionary information from the MSA, achieving end-to-end structure prediction at the atomic level. Recently, deep learning-based methods have also been developed for new PSP tasks, such as protein–nucleic acid complex,69 protein–ligand complex,68 and protein multiconformation70,133 prediction. Despite the remarkable results achieved by AlphaFold 2 for single protein structure prediction, structure prediction for noncanonical amino acids, protein–ligand complexes, protein–nucleic acid complexes, protein complexes (e.g., antibodies), and posttranslational modifications of proteins has yet to succeed. 
In terms of algorithm optimization, AlphaFold-Multimer is trained specifically for multimeric inputs of known stoichiometry, significantly improving the accuracy of predicted multimeric interfaces while maintaining high intrachain accuracy.13 AlphaMissense uses an AlphaFold-derived system to integrate structural context, enabling accurate prediction of proteome-wide missense variant effects.134 AF-Cluster enables the prediction of multiple conformations of proteins through sequence clustering.70 In addition, AlphaFill135 enriches AlphaFold models with ligands and cofactors. However, the release of AlphaFold 3 is a major advancement in multitype protein structure prediction. The model reduces the reliance on MSA by replacing the Evoformer with the simpler Pairformer module. More importantly, AlphaFold 3 introduces a diffusion-based module. This module replaces the previous structure module, which operated on amino-acid-specific frames and torsion angles. It can predict the joint structure of biomolecular complexes containing proteins, nucleic acids, small molecules, ions, and modified residues. The diffusion model generates atomic coordinates directly and provides a less computationally resource-intensive approach to modeling docking and biomolecular interactions. Nevertheless, AlphaFold 3 still needs to improve on the problem of proceeding judgment, the problem of hallucinations (overlapping of two chains, etc.), and insufficient information about protein dynamics. To address these issues, machine learning researchers are actively exploring diffusion generative models, especially flow matching,136 Schrödinger bridges,137 and stochastic interpolants,136 to improve the accuracy and dynamic prediction ability of the models.
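The idea behind diffusion-based coordinate generation can be sketched with the forward (noising) half of a generic denoising diffusion model applied to atomic coordinates. This is not AlphaFold 3's actual formulation: the linear variance schedule, the number of steps, and the function names `make_schedule`, `add_noise`, and `recover_x0` are assumptions borrowed from standard denoising diffusion practice for illustration only.

```python
import numpy as np


def make_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal-retention factors alpha_bar_t for a linear
    variance schedule (an assumed, commonly used choice)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)


def add_noise(x0, t, alpha_bar, rng):
    """Forward process: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps."""
    eps = rng.standard_normal(x0.shape)
    xt = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    return xt, eps


def recover_x0(xt, eps_hat, t, alpha_bar):
    """Invert the forward step given a noise estimate. During training, a
    network learns to predict eps_hat from (xt, t) and conditioning features."""
    return (xt - np.sqrt(1.0 - alpha_bar[t]) * eps_hat) / np.sqrt(alpha_bar[t])
```

At sampling time the process runs in reverse: coordinates start as pure noise and are denoised step by step, so the network never needs residue-specific frames or torsion angles, only a cloud of atom positions.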

4.2 Advances in deep learning methods

With the optimization and integration of Transformer technology, models such as TrRosetta and RGN2 have significantly improved the accuracy of structural prediction of single-chain and multichain protein complexes by capturing long-range dependencies in protein sequences.138 Meanwhile, integration methods that incorporate data from multiple sources, together with self-supervised and semisupervised learning techniques, have provided innovative solutions to the scarcity of labeled data; these methods enhance the generalization ability and prediction accuracy of the models by exploiting large amounts of unlabeled biological sequence data.139 With the rapid growth of biological data and the increase in model complexity, traditional computing resources struggle to meet the demands of large-scale training.140 Parallel computing techniques provide strong support for handling complex tasks and play an important role in large-scale protein structure prediction. With distributed deep learning and multi-GPU architectures, parallel computing frameworks can efficiently distribute computational loads, significantly reduce model training time, and exhibit higher computational efficiency when dealing with ultra-large-scale datasets.141 Khan et al.142 significantly improved the efficiency and stability of DNNs in anti-inflammatory peptide prediction by using a parallel distributed computing approach. Specifically, the training time of the model was reduced by about 70% in a multi-GPU architecture. At the same time, gradient convergence was accelerated on large-scale datasets, and the incidence of training instability was reduced, with a 20% reduction in error fluctuations. 
Modern deep learning-based protein structure prediction methods have succeeded for single-chain proteins and have recently been applied to new prediction tasks such as protein–nucleic acid complexes, protein–ligand complexes, and protein multiconformational prediction. RoseTTAFoldNA extends the RoseTTAFold deep learning approach to accurately predict the structures of nucleic acids and protein–nucleic acid complexes, supporting the design of sequence-specific RNA- and DNA-binding proteins.69 Co-folded protein–ligand complexes have the potential to accelerate drug repositioning, and Umol combines a large language model and a multimodal language model independent of structural information to enable prediction of the complete all-atom structure of protein–ligand complexes.143 In addition, Schweke et al.144 described a scalable AlphaFold2-based strategy for predicting homo-oligomer assembly across different proteomes to reveal the quaternary structure of proteins. In addition to continuously optimized algorithmic strategies, methods combining large and multimodal language models are gradually showing advantages. Protein language modeling is based on NLP technology and processes biological information such as amino acid and protein sequences in a manner analogous to text data. Its core advantage lies in capturing long-range dependencies and complex patterns in protein sequences, and even kinetic information, to achieve end-to-end modeling of sequences and to predict the three-dimensional structure of proteins directly from the original amino acid sequences, including precise positioning at the atomic level. 
OmegaFold uses a protein language model combined with a Transformer architecture to predict high-resolution protein structures from only a single primary sequence.130 trRosettaX-Single embeds a supervised Transformer protein language model, which performs well on orphan proteins and in protein design, with an average template modeling score (TM-score) of 0.79, while using fewer computational resources.127 Recent studies also explore dynamic prediction methods to simulate the folding process and functional state changes of proteins in organisms, opening up new directions in protein structure prediction techniques.145 These technological advances greatly advance protein structure prediction and provide powerful new tools and methods for understanding complex disease mechanisms, new drug design, and personalized medicine.

5 CHALLENGES AND SOLUTIONS

Modern hybrid methods demonstrate the potential for solving complex protein structure prediction problems. However, these methods also pose new challenges that require further optimization. High-accuracy protein structure prediction places higher demands on the availability and quality of training data.146 At the same time, modern hybrid methods often rely on integrating data and components from different architectures, which not only increases the complexity of managing and coordinating different learning paradigms and data representations but also significantly increases the demand on computational resources, including memory and processing power, and the complexity of model training and tuning.147, 148 In addition, deep learning models are prone to overfitting; that is, when dealing with nonlinear data, models may fit specific features of the training data (including noise and bias) while ignoring more general patterns.149 Overfitted models perform well on the training set but much worse on new data.150 Further, these models depend heavily on large-scale, high-quality datasets, and their predictive power is significantly reduced when data are scarce or noisy.151 Current deep learning models mainly focus on static structure prediction and have limited ability to predict the dynamic changes of proteins under physiological conditions, especially in complex biological systems.152 Although these models are more effective in predicting the interactions of known molecules under static conditions, dynamic conformational changes of proteins in metabolic pathways or complete biological systems are still difficult to capture accurately.153 This is mainly because proteins continuously adjust their spatial structure to fit the optimal molecular interaction conformation in a dynamic environment. 
Therefore, the predictive accuracy of existing models is still deficient for large-scale, multicomponent protein systems, such as organelles or transmembrane protein complexes. In addition, the “black-box” nature of deep learning models leads to a lack of interpretability, and the high complexity of protein structures and data scarcity increase the uncertainty of predictions, especially for high-dimensional datasets.154 This is a critical issue in biological applications, where biologists often need to understand the biological mechanisms behind the predictions. One potential solution is a hybrid model that combines deep learning with traditional physical models such as MD simulations. Such a model leverages the strengths of deep learning in pattern recognition and big data processing and draws on the accuracy of physical models in capturing intermolecular mechanical interactions and temporal evolution, thereby improving the ability to model the dynamic behavior of proteins. Another approach is to develop multimodal learning frameworks capable of simultaneously processing sequence, structural, and time-series data to capture the dynamic properties of proteins. By integrating multi-level information more comprehensively, multimodal frameworks can provide more accurate solutions for predicting complex protein networks and large-scale multicomponent systems. Roll's study highlights the importance of capturing the conformational changes and dynamic properties of proteins for understanding their functions.155 Protein structure prediction is a multidisciplinary field involving several disciplines, including computational biology, structural biology, and artificial intelligence. Collaborative efforts and open-source initiatives are essential to drive the rapid development of this field. 
Although models such as AlphaFold 3 have made breakthroughs in protein structure prediction, they have yet to be open-sourced, which limits the participation of the broader community and further innovation.

6 APPLICATION AND SIGNIFICANCE OF PREDICTED PROTEIN STRUCTURES

The rapid development of protein structure prediction technology has revolutionized life science and medical research.156 Predicting protein structures is important for drug discovery and design, understanding disease mechanisms, protein engineering, and personalized medicine, among other applications. The core of drug design lies in constructing molecules with controllable interaction characteristics to precisely regulate multiple target and nontarget proteins in organisms.157 When screening drug candidates, potential binding molecules can be identified more rapidly by combining accurately predicted target protein structures with large-scale virtual screening.158 Zhang et al.159 showed that a virtual screening method based on AlphaFold-predicted structures increased the hit rate by 30% compared with the traditional method, which greatly accelerated the discovery of lead compounds. Structure prediction of membrane protein complexes is particularly critical for understanding the mechanism of drug-target interactions, as they are the main targets of numerous drugs.160 By analyzing predicted protein–ligand complex structures, deep learning models can reveal key interactions such as hydrogen bonding, hydrophobic interactions, and π–π stacking, information that is crucial for the structural optimization and property improvement of drugs. However, predicting the structure of membrane protein complexes faces unique challenges. Membrane proteins are embedded in cell membranes, and their transmembrane regions are highly hydrophobic and structurally unstable, which makes acquiring high-quality experimental data very difficult and thus affects the accuracy of prediction models. 
Even advanced deep learning models, such as AlphaFold and RoseTTAFold, still have limitations when dealing with transmembrane regions or multicomponent membrane protein complexes.161, 162 These challenges have important implications for drug discovery because membrane protein complexes usually involve complex protein–protein and protein–ligand interactions, directly determining the drugs' binding effect and biological activity. Liu et al. successfully designed a series of highly efficient inhibitors using the AlphaFold-predicted structure of the SARS-CoV-2 master protease, which provided a new idea for developing therapeutic drugs for neoconjugate pneumonia.163 In addition, protein aggregates play a role in many diseases. The effects of 59 mutations on domain stability in cystic fibrosis were predicted using 15 different algorithms, and the structures help predict the effects and mechanisms of multiple disease-causing mutations in other proteins.164 Meanwhile, by accurately predicting protein structures, researchers can purposefully design the amino acid sequences of proteins to create novel proteins with specific functions, which have a wide range of applications in fields such as industrial biocatalysis, environmental protection, and biomaterial development.17
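As an illustration of how interactions can be read off a predicted protein–ligand complex, the sketch below flags candidate hydrogen bonds with a purely geometric filter: pairs of nitrogen or oxygen atoms, one from the protein and one from the ligand, that lie within a distance cutoff. This is a simplification and not a deep learning method. The 3.5 Å cutoff is a commonly used heuristic taken here as an assumption, angle criteria and hydrogen positions are ignored, and the `Atom` type, atom names, and coordinates are hypothetical.

```python
import math
from typing import NamedTuple, Tuple


class Atom(NamedTuple):
    name: str                        # label such as "SER195:OG" (hypothetical)
    element: str                     # chemical element symbol
    xyz: Tuple[float, float, float]  # coordinates in angstroms


POLAR_ELEMENTS = {"N", "O"}  # heavy atoms treated as potential donors or acceptors


def candidate_hbonds(protein, ligand, cutoff=3.5):
    """Return (protein atom, ligand atom, distance) for polar pairs within the cutoff."""
    pairs = []
    for p in protein:
        if p.element not in POLAR_ELEMENTS:
            continue
        for l in ligand:
            if l.element not in POLAR_ELEMENTS:
                continue
            d = math.dist(p.xyz, l.xyz)
            if d <= cutoff:
                pairs.append((p.name, l.name, round(d, 2)))
    return pairs


# Hypothetical atoms from a predicted complex.
protein = [
    Atom("SER195:OG", "O", (0.0, 0.0, 0.0)),
    Atom("LEU99:CD1", "C", (1.0, 0.0, 0.0)),
]
ligand = [
    Atom("LIG:N1", "N", (2.9, 0.0, 0.0)),
    Atom("LIG:O2", "O", (6.0, 0.0, 0.0)),
]
print(candidate_hbonds(protein, ligand))  # [('SER195:OG', 'LIG:N1', 2.9)]
```

In practice, such a filter would be applied to the atoms parsed from a predicted structure file, and the resulting contact list would guide which ligand groups to modify during optimization.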

7 CONCLUSION AND OUTLOOK

As deep learning methods evolve in PSP, traditional methods are giving way to a revolutionary “neuralization,” driven by growing computational power and the combination of multiple deep learning techniques. This review outlines the paradigm shift from traditional PSP methods to hybrid prediction methods. Modern prediction methods based on deep learning networks have significantly improved prediction accuracy, especially for nonhomologous proteins, where traditional template-based and TFM approaches often falter. Both TBM and free modeling rely on computational algorithms to simulate the 3D structure of proteins and involve complex computational processes, including sequence alignment, structure modeling, and energy minimization. The establishment and improvement of various bioinformatics databases have laid a solid foundation for predicting protein sequences, structures, functional annotations, and protein–protein interactions.

The success of AlphaFold 2 lies in its innovative attention mechanism, evolutionary information encoding, and end-to-end framework. Subsequently, a series of models such as Umol, RGN2, and TrRosetta adopted deep learning architectures, including the Transformer, and further refined their algorithmic strategies to significantly enhance predictive power. The recent release of AlphaFold 3 introduces diffusion modeling techniques that further extend the boundaries of predictive models, particularly in protein–small molecule structure prediction. This extension marks a key shift toward a holistic approach to elucidating biomolecular structures. With the addition of more deep learning methods, such as generative adversarial networks (GANs), the applicability of structure prediction methods has been greatly broadened, and the prediction of RNA structure is now at the forefront. Meanwhile, in addition to improving the accuracy of the original models, methods that combine large language models and multimodal language models are gradually showing advantages. In the future, as the size and complexity of biological data grow, parallel computing will play a key role in enabling deep learning techniques to handle larger and more complex protein structure prediction tasks. Through multi-GPU architectures and distributed computing frameworks, parallel computing is expected to significantly improve the computational efficiency of models and further optimize the performance of deep learning models in the context of big biological data.
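For readers unfamiliar with the attention mechanism mentioned above, the following sketch implements generic scaled dot-product attention as used in Transformer architectures, softmax(QKᵀ/√d)V, in plain Python. It is an illustration of the general operation only and does not reproduce the specific attention modules of AlphaFold 2; the function names and the toy inputs are assumptions.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: each query returns a weighted average of the values."""
    d = len(keys[0])  # key dimension used for scaling
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted sum of value vectors, one output component at a time.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs


# Toy example: two identical keys receive equal weight, so the output is the mean value.
queries = [[1.0, 0.0]]
keys = [[1.0, 1.0], [1.0, 1.0]]
values = [[0.0, 2.0], [4.0, 6.0]]
print(attention(queries, keys, values))  # [[2.0, 4.0]]
```

In a sequence model, each row of the queries, keys, and values would correspond to a residue (or a pair of residues), so the weights express how strongly one position draws on information from the others.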

Although MSA is crucial in moving from sequence prediction to structure prediction, it may no longer be the only core challenge. Adding more deep learning techniques, such as diffusion models, GANs, and large language models, is gradually changing the focus of research in this field. The integration of protein language models with innovative neural network architectures will undoubtedly refine our predictive capabilities, heralding a new era in which accurate modeling of proteins will be transformed from a scientific challenge to a routine capability with far-reaching implications for drug discovery, therapeutic interventions, and understanding of the molecular structure of life itself.

AUTHOR CONTRIBUTIONS

Yiming Qin: Conceptualization (equal); data curation (equal); investigation (equal); visualization (equal); writing—original draft (equal); writing—review and editing (equal). Zihan Chen: Writing—review and editing (equal). Ye Peng: Writing—review and editing (equal). Ying Xiao: Supervision (equal); writing—review and editing (equal). Tian Zhong: Resources (equal); supervision (equal); writing—review and editing (equal). Xi Yu: Conceptualization (equal); funding acquisition (equal); project administration (equal); supervision (equal). All authors have read and approved the final manuscript.

ACKNOWLEDGMENTS

We gratefully thank the anonymous reviewers for their important and constructive comments and suggestions. This study was supported by the Fundo para o Desenvolvimento das Ciências e da Tecnologia (Grant Number: 0065/2023/ITP2).

CONFLICT OF INTEREST STATEMENT

The authors declare no conflict of interest.

ETHICS STATEMENT

Not applicable.

Open Research

DATA AVAILABILITY STATEMENT

Not applicable.