The Comparative Diagnostic Capability of Large Language Models in Otolaryngology

This manuscript was presented at the Triological Society Combined Sections Meeting, West Palm Beach, FL, January 24–27.

The authors have no funding, financial relationships, or conflicts of interest to disclose.

Abstract

Objectives

Evaluate and compare the ability of large language models (LLMs) to diagnose various ailments in otolaryngology.

Methods

We collected all 100 clinical vignettes from the second edition of Otolaryngology Cases: The University of Cincinnati Clinical Portfolio by Pensak et al. Each vignette was preceded by the prompt “Provide a diagnosis given the following history,” and ChatGPT-3.5, Google Bard, and Bing-GPT4 were asked to provide a diagnosis. These diagnoses were compared to the portfolio for accuracy and recorded. All queries were run in June 2023.

Results

ChatGPT-3.5 was the most accurate model (89% success rate), followed by Google Bard (82%) and Bing-GPT4 (74%). A chi-squared test revealed a significant difference between the three LLMs in providing correct diagnoses (p = 0.023). Of the 100 vignettes, seven required additional testing results (e.g., biopsy, non-contrast CT) for accurate clinical diagnosis. When omitting these vignettes, the revised success rates were 95.7% for ChatGPT-3.5, 88.17% for Google Bard, and 78.72% for Bing-GPT4 (p = 0.002).

Conclusions

ChatGPT-3.5 offers the most accurate diagnoses when given established clinical vignettes as compared to Google Bard and Bing-GPT4. LLMs may accurately offer assessments for common otolaryngology conditions but currently require detailed prompt information and critical supervision from clinicians. There is vast potential in the clinical applicability of LLMs; however, practitioners should be wary of possible “hallucinations” and misinformation in responses.

Level of Evidence

3

Laryngoscope, 134:3997–4002, 2024

INTRODUCTION

The emergence of accessible artificial intelligence (AI) has already produced a profound change in the medical field and offers a plethora of new opportunities with the potential to change the future of medicine.1 Despite its nascency, AI modalities such as interactive large language models (LLMs) have already been appraised and acclaimed for their integration into various health care fields to carry out a variety of tasks.1 The most popular LLMs thus far include ChatGPT, Google Bard, and Bing AI, which carry out natural language processing (NLP) tasks and offer person-like chatbots. Trained upon colossal datasets, these models are able to recognize, predict, generate, translate, and conceptualize information at a remarkable level.

Common LLM Models

OpenAI's ChatGPT (San Francisco, CA) was launched publicly on November 30, 2022, ushering in a new era of technological access. Within a few months, millions of users were granted access to deep-learning LLMs that could converse with them and answer all of their questions.2 Two versions of the LLM were released: generative pretrained transformer (GPT) 3.5 and GPT 4.0 (available to paying subscribers). While GPT-3.5 has been found to suffer from hallucinations and limitations in its training/knowledge base, GPT-4.0 boasts improved efficiency and accuracy with increased contextual understanding, image processing, language fluency, and a larger knowledge base.3

Bard, launched shortly after ChatGPT, was developed by Alphabet (Google) and is built on a separate LLM, Pathways Language Model 2 (PaLM 2), which excels at comprehending facts, logical reasoning, and mathematics.4 Because Bard is linked to Google's real-time network, initial studies indicate that it offers up-to-date information and increased factual accuracy over ChatGPT while lacking the latter's creative and diverse text generation. Finally, Bing also released its own platform in 2023, utilizing technology from GPT-4.0 to enhance its accuracy and performance. Studies indicate, however, that its main strength lies in searching the internet and providing relevant sources.5

Clinical Utility

Within a little more than a year of public use, LLMs have been piloted across medical specialties, gaining traction in clinical practice and research. Numerous studies have advocated for the models' use in practical clinic services: charting assistance, consent documentation, answering patient questions, translation assistance, and boosting readability and health care access. There is also support for their use in education, including patient and physician education, research writing and generation, test-writing, and test-taking. Importantly, the models have even begun to enter the realm of physician responsibilities: triaging patients, streamlining protocols, radiological image interpretation, surgical planning, reconstructive image synthesis, and even differential diagnosis generation.1, 6-14 These models have also proven useful in the surgical fields, with literature across subspecialties including plastic surgery, neurosurgery, orthopedic surgery, and otolaryngology corroborating their utility in training, research, communication, and diagnosis.15-17

The Use of ChatGPT for Diagnostic Purposes

As both patients and physicians may turn to LLMs to answer medical questions, the diagnostic capability of these models is of great interest to specialties with a complex symptomatology and interdisciplinary nature such as otolaryngology.18 Many studies, primarily outside of otolaryngology, have explored the capabilities of LLMs (generally restricted to ChatGPT) and have discovered their powerful utility and impressive accuracy for more common patient presentations.6, 19 In certain cases, the LLM even exceeded the performance of unassisted clinicians in the production of differential diagnoses based on a patient vignette.20 However, very few studies have explored this capability for other popular LLMs that patients and physicians may approach with their symptomatology and related questions.21 Furthermore, there is a dearth of information in the medical literature comparing the diagnostic accuracy of these various models; such comparisons offer insight into their mechanistic differences and valuable information to patients and physicians alike. To better understand how these models compare and how LLMs may be applied in clinical practice, this study examines the strengths and limitations of three of the most popular publicly available LLMs through verified clinical vignettes within otolaryngology. Creating a framework for this information and integrating it into clinical practice is crucial to assessing the accuracy and capabilities of these models while mitigating risks and exploring their use in the future.

MATERIALS AND METHODS

Study Design

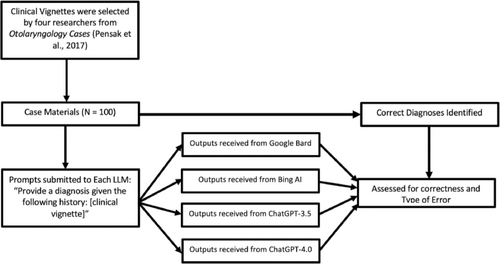

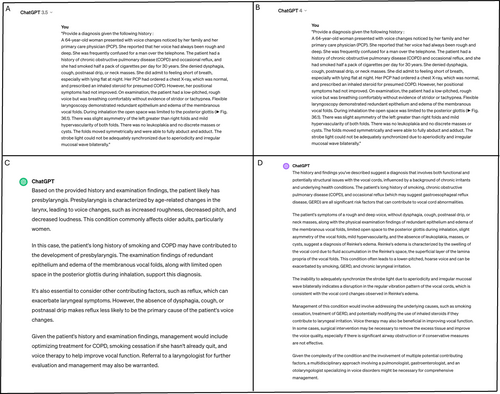

The accuracy of ChatGPT-3.5, ChatGPT-4.0, Google Bard, and Bing AI in diagnosing comprehensive clinical vignettes was assessed through their publicly accessible websites (Fig. 1). Detailed patient histories spanning 10 otolaryngology subcategories were selected as clinical vignettes from the second edition of Otolaryngology Cases: The University of Cincinnati Clinical Portfolio (Pensak et al, 2017).22 Each generative artificial intelligence model was prompted to provide a diagnosis given the patient's history. A new chat was created for each clinical vignette, and the following prompt preceded each inputted vignette to elicit a diagnosis: “Provide a diagnosis given the following history.” The LLM outputs were compared for correctness against textbook answers, and incorrect answers were assessed for type of error (Fig. 2). The queries for ChatGPT-3.5, Google Bard, and Bing AI were conducted in June 2023. The queries for ChatGPT-4.0 were conducted in January 2024.

Types of Error for Incorrect Outputs

- Logical Error: LLM output demonstrated an understanding of the prompt and step-wise reasoning; however, it was unable to reach the appropriate conclusion.

- Informational Error: LLM output demonstrated a partial understanding of the prompt; however, it utilized additional information separate from the patient history to arrive at an incorrect conclusion.

- Explicit Error: LLM output demonstrated minimal understanding of the prompt or was unable to provide an output.

Data Analysis

Data were analyzed using chi-squared tests of homogeneity with an alpha level of 0.05. All analyses were performed using IBM SPSS Statistics version 26 (Armonk, NY).
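As an illustration of the chi-squared test of homogeneity described above, the following Python sketch recomputes the test from the counts reported in Table I. It is not the authors' SPSS procedure: the function names (`chi2_homogeneity`, `chi2_sf`) are hypothetical, and the closed-form p-value is implemented only for 2 or 3 degrees of freedom (three or four models compared on a correct/not-correct outcome).

```python
import math

def chi2_homogeneity(correct, totals):
    """Pearson chi-squared statistic for a k x 2 table (correct vs. not correct).

    correct[i] is the number of correct diagnoses for model i;
    totals[i] is the number of vignettes counted for model i.
    Returns (statistic, degrees_of_freedom).
    """
    incorrect = [n - c for c, n in zip(correct, totals)]
    grand_total = sum(totals)
    pooled_correct = sum(correct) / grand_total      # pooled success rate
    pooled_incorrect = sum(incorrect) / grand_total  # pooled failure rate
    stat = 0.0
    for c, w, n in zip(correct, incorrect, totals):
        expected_c = n * pooled_correct
        expected_w = n * pooled_incorrect
        stat += (c - expected_c) ** 2 / expected_c
        stat += (w - expected_w) ** 2 / expected_w
    return stat, len(totals) - 1

def chi2_sf(stat, df):
    """Upper-tail p-value of the chi-squared distribution (df = 2 or 3 only)."""
    if df == 2:
        return math.exp(-stat / 2)
    if df == 3:
        tail = math.sqrt(2 * stat / math.pi) * math.exp(-stat / 2)
        return math.erfc(math.sqrt(stat / 2)) + tail
    raise ValueError("this sketch only supports df = 2 or df = 3")

# Counts from Table I: ChatGPT-3.5, Google Bard, Bing AI (all 100 vignettes).
stat, df = chi2_homogeneity([89, 82, 74], [100, 100, 100])
p_three_models = chi2_sf(stat, df)

# Same three models after excluding "additional testing needed" outputs.
stat_rev, df_rev = chi2_homogeneity([89, 82, 74], [93, 93, 94])
p_three_models_revised = chi2_sf(stat_rev, df_rev)

print(f"all vignettes: chi2 = {stat:.3f}, df = {df}, p = {p_three_models:.3f}")
print(f"revised:       chi2 = {stat_rev:.3f}, df = {df_rev}, p = {p_three_models_revised:.3f}")
```

Under these assumptions, the two p-values round to the 0.023 and 0.002 reported in the abstract for the three-model comparison. Passing four models (for example, adding ChatGPT-4.0 with 82 correct of 93) exercises the df = 3 branch.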

RESULTS

Accuracy of Models

Of the 100 clinical vignettes prompted, ChatGPT-3.5 provided 89 accurate diagnoses, Google Bard provided 82 accurate diagnoses, Bing AI provided 74 accurate diagnoses, and ChatGPT-4.0 provided 82 accurate diagnoses (Table I). Each LLM occasionally indicated a need for additional testing, occurring seven times for ChatGPT-3.5, seven times for Google Bard, six times for Bing AI, and seven times for ChatGPT-4.0. These instances were not in response to the same vignettes for the different models. Excluding these occurrences, the accuracy rates for the LLMs were 95.7% (ChatGPT-3.5), 88.17% (Google Bard, ChatGPT-4.0), and 78.72% (Bing AI). ChatGPT-3.5 was significantly more accurate in providing clinical diagnoses as compared to its counterparts (p = 0.006).

| Model | Total Correct | “Additional Testing Needed” | Total Incorrect | Total Accuracy (N = 100) | Revised Accuracy |
|---|---|---|---|---|---|
| ChatGPT-3.5 | 89 | 7 | 4 | 89% | 95.7% |
| Google Bard | 82 | 7 | 11 | 82% | 88.17% |
| Bing AI | 74 | 6 | 20 | 74% | 78.72% |
| ChatGPT-4.0 | 82 | 7 | 11 | 82% | 88.17% |
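
The revised accuracy values in Table I appear to be the number of correct diagnoses divided by the number of vignettes that did not produce an “additional testing needed” output. A minimal Python sketch of that arithmetic, assuming this definition (the function name `revised_accuracy` is hypothetical):

```python
def revised_accuracy(correct, needs_testing, total=100):
    """Percent correct among vignettes not flagged as needing additional testing."""
    return 100 * correct / (total - needs_testing)

# (total correct, "additional testing needed") per model, taken from Table I.
table_one = {
    "ChatGPT-3.5": (89, 7),
    "Google Bard": (82, 7),
    "Bing AI": (74, 6),
    "ChatGPT-4.0": (82, 7),
}

revised = {
    model: round(revised_accuracy(correct, flagged), 2)
    for model, (correct, flagged) in table_one.items()
}
for model, pct in revised.items():
    print(f"{model}: {pct}%")
```

With the Table I counts, this reproduces the reported 95.7%, 88.17%, 78.72%, and 88.17%.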

Distribution of Errors

Excluding outputs indicating a need for additional testing, ChatGPT-3.5 provided four incorrect diagnoses, Google Bard provided 11 incorrect diagnoses, Bing AI provided 20 incorrect diagnoses, and ChatGPT-4.0 provided 11 incorrect diagnoses. ChatGPT-3.5, Google Bard, and ChatGPT-4.0 predominantly experienced logical errors (67%, 55%, and 73%, respectively). Bing AI predominantly experienced informational errors (65%) (Table II).

| Model | Logical Error | Informational Error | Explicit Error |
|---|---|---|---|
| ChatGPT-3.5 | 2 (66.67%) | 1 (33.33%) | 1 (33.33%) |
| Google Bard | 6 (54.54%) | 4 (36.36%) | 1 (9.09%) |
| Bing AI | 6 (30.00%) | 13 (65.00%) | 1 (5.00%) |
| ChatGPT-4.0 | 8 (72.73%) | 3 (27.27%) | 0 |

DISCUSSION

This study is the first to assess the comparative efficacy of the most popular generative LLMs in diagnostic capabilities in otolaryngology. Analysis across the 100 clinical vignettes yielded several crucial findings. While most of the models did well, ChatGPT-3.5 was the most accurate generative LLM, with a significantly greater revised accuracy of nearly 96% (omitting outputs that indicated a need for additional testing). Additionally, the majority of errors committed by three of the four models were logical errors, whereas Bing AI committed a higher number of informational errors. The study reveals a significant discrepancy in diagnostic accuracy for otolaryngology vignettes among the most popularly used LLMs, of which both physicians and patients should be aware.

Diagnostic Accuracy

All LLMs diagnosed common otolaryngology vignettes with generally high accuracy (>70%) but varied broadly in their individual aptitude beyond this threshold. ChatGPT-3.5 provided correct diagnoses for 89% of vignettes; in a further 7%, its output indicated that additional testing was needed to confirm a diagnosis (95.7% accurate when these outputs are omitted). Google Bard and ChatGPT-4.0 were less accurate than ChatGPT-3.5, with equal accuracies of 82%; each also indicated a need for additional testing in 7% of responses (88.17% accurate when these outputs are omitted). Finally, Bing AI performed relatively poorly, with 74% accurate diagnoses and a revised accuracy of 78.72% after omitting the 6% of outputs that indicated a need for further testing.

OpenAI's ChatGPT-3.5 is perhaps the most famous and most widely used LLM among patients and physicians alike.23 Although its internet access and training dataset are inferior to those of ChatGPT-4.0, and it shares similar shortcomings relative to Bard and Bing, ChatGPT-3.5's ability to diagnose common otolaryngology clinical presentations is superior. ChatGPT-3.5's increased accuracy compared to both Bing and Bard may be due to its training dataset being more comprehensive or relevant to medical data, or to its inherent strength in spontaneous generative responses compared to its more informational/reference-based counterparts, especially for long clinical vignettes. Additionally, ChatGPT-3.5 may handle uncertainty/error better than the other models, and it may better understand user queries and integrate user language into its responses.24-26 Its superior diagnostic capability relative to ChatGPT-4.0 is particularly interesting considering the latter's purported vast capabilities due to an improved training dataset, more advanced model, and internet access.3 However, overfitting of ChatGPT-4.0 (its large, diverse training data leading it to capture more complex noise instead of a more obvious signal) may cause it to be less optimized for specific tasks like diagnosing clinical vignettes, leading to ChatGPT-3.5's better performance in this study.5 Or, more simply, the large amount of misinformation on the internet that ChatGPT-4.0 accesses could also lead to incorrect conclusions.

Error Categorization

ChatGPT-3.5, Google Bard, and ChatGPT-4.0 all showed higher rates of logical errors than of any other error type, which may indicate a few things about these LLMs of which all users should be cognizant. First, it may outline the difference between understanding and field-specific reasoning; that is, LLMs are adept at understanding language and performing step-wise reasoning but may lack the ability to make accurate judgments in specialized fields, especially when some information is not presented and forming conclusions requires leaps in logical reasoning.26 Alternatively, these logical errors may be due to a lack of specialized understanding of topics, which can lead to errors in judgment that then propagate to incorrect conclusions.4, 26 Additionally, while this may not be the cause in this case for common clinical vignettes, LLMs do rely on the data they were trained on, which may be outdated, biased, or incomplete, causing a final diagnosis to err despite sound step-wise reasoning, especially in a rapidly evolving field like medicine. In a different vein, Bing AI's notably higher informational error rate may be due to the LLM's strength in referential source generation and web browsing, which could introduce extraneous information and thereby generate more errors.5 This is particularly relevant to a field like medicine, with a colossal literature base and public knowledge forums that often report different findings and conclusions. It is important to recognize that the inherent qualities, training data, and training intent of each LLM lead to different strengths and weaknesses that manifest as different types of errors for users to navigate.

Comparison to the Literature and Clinical Utility

The considerable accuracy of LLMs in diagnosing otolaryngology clinical vignettes seen here is variably reflected across the medical literature in other specialties. Some fields, including ophthalmology and orthopedics, report poor diagnostic capabilities of ChatGPT (comparative testing has not been performed in the literature), whereas other more data-intensive fields, such as radiology, mirrored the findings in this study with remarkably high accuracy rates. Despite this dichotomy, the general research consensus echoes a reticence to advocate for the usage of these tools in a self-diagnostic capacity, emphasizing the preeminence of physician consultation and abilities.27-31 The immense heterogeneity of methodology and technique in the literature very much reflects the eclectic nature of the LLMs themselves. It also underscores the need for coherent and perhaps specialty-specific standardized paradigms regarding the usage of these models when appropriate.

This study's demonstration of LLMs' impressive generative and innovative language capacity, delivered with high accuracy, casts a positive light on the implications and clinical utility of these tools in other medical domains. Related research has utilized LLMs' semiotic capacity to demonstrate their significant aptitude in answering patient questions and providing management/treatment advice for diseases and symptomatologies in otolaryngology and across specialties.32-36 The potential of these models does not stop there; the language abilities that LLMs demonstrated here with simple medical information could be used to streamline documentation and triage in the ER or even assist in translation and readability services in the clinic. While this paper's findings are specific to otolaryngology, a broader perspective must be emphasized due to the vast capabilities of LLMs. Understanding the differences between the individual models, as done here, is an essential next step to begin standardizing instruments for clinical applicability.

Limitations

While this study is novel in its comparative analysis of LLMs, a few limitations should be noted in assessing it. The clinical vignettes used in this study were limited to otolaryngology; in turn, the findings may not represent the models' broader capabilities and comparative performance in other medical fields. Additionally, the models assessed are continually evolving, and there are far more public AI platforms that physicians and patients may access; thus, further research on the comparative efficacy of these platforms is crucial in the future. The error categorization may also oversimplify the complex nature of diagnostic reasoning in LLMs; thus, a more standardized approach to evaluating AI error for diagnostic utility may be important. Finally, there are a few limitations to the external validity of this study; although this study chose a standardized and well-accepted set of clinical vignettes, patients and physicians may approach these LLMs for diagnosis in significantly different ways with much more variable amounts of information. These limitations underscore the necessity for cautious interpretation of findings and outputs from LLMs in medical diagnostics.

CONCLUSION

This comparative analysis of popular generative LLMs in the diagnosis of common otolaryngology conditions has yielded key insights into the application of AI in medicine. LLMs can indeed offer substantial support and high levels of accuracy in medical diagnostics, but individual models vary considerably in their performance depending on the task; patients and physicians must be well-informed on these differences before they utilize the models for any sort of medical advice. The study has shown that OpenAI's ChatGPT-3.5 is the most diagnostically accurate in the context of common otolaryngology cases. The error analysis further suggests unique strengths and weaknesses inherent to each model and that ChatGPT is particularly effective for diagnosing common otolaryngology presentations. However, even small error rates in AI platforms can be very dangerous if not interpreted with care, as they can lead to official misdiagnoses, errors in care, and patients treating themselves incorrectly. All users of these platforms should critically evaluate the responses they receive and consult physicians for the ultimate judgments regarding their symptomatology and diagnoses. Additionally, several ethical and legal implications of deploying LLMs in medical diagnostics still need to be addressed. Therefore, while this study is a significant step forward in understanding the utility of LLMs in medicine, it highlights the need for ongoing research. As the health care field moves forward, it is crucial to continue evaluating and refining these tools, and to spread user awareness of their efficacy, utility, and trustworthiness, to ensure that they enhance, instead of hinder, quality medical care.