How Much Retrieval Ability Is in Originality?

We thank Amina Rajakumar, Jasmin Thelen, and Tim Trautwein for their support in data collection. The research project has been funded by Ulm University (Graduate and Professional Training Center Ulm, 029/126/P/IIP).

ABSTRACT

Creative fluency and originality are pivotal indicators of creative potential. Both have been embedded in hierarchical intelligence models as part of the ability to retrieve information from long-term memory, an ability that is often measured with indicators of retrieval fluency. Creative fluency and retrieval fluency, both expressed by the count of correct responses, are procedurally highly similar. This raises the question of how creative fluency and originality are related with retrieval fluency and how both are predicted by other cognitive abilities. In a multivariate study (N = 320), we found that retrieval fluency is very strongly related with creative fluency (r = .87) and substantially related with originality (r = .59). A combined fluency factor still fitted the data well. Cognitive abilities accounted for 63% of the variance in fluency and 47% of the variance in originality. After controlling for established cognitive abilities, latent variables for fluency and originality were unrelated with one another. This suggests that the procedural proximity of the ability to fluently generate either information from long-term memory or ad-hoc solutions to unusual tasks and the ability to come up with original ideas needs reconsideration. Locating originality below an overarching retrieval factor is contradicted by the present data.

Creative thinking is, above all, characterized by the ability to think divergently. To date, divergent thinking is the gold standard for measuring creativity or creative potential (see for example Weiss, Wilhelm, & Kyllonen, 2021b). Creative fluency and originality are key constituents of divergent thinking that have previously been related to the ability to retrieve information from long-term memory. Intelligence models have proposed that these constructs can be subsumed below an overarching factor of retrieval ability (e.g., Schneider & McGrew, 2018). This can be explained theoretically, as well as methodologically: The ability to produce a variety of creative answers (creative fluency) is procedurally highly similar to the ability to retrieve information from a given category (retrieval ability). Both require the retrieval of previously stored knowledge regarding a given topic and the monitoring of this process (Rosen & Engle, 1997) and are mostly assessed by the count of correct answers whereas assessment of originality focuses on answer quality.

Even though the relation between retrieval ability and divergent thinking (creative fluency and originality), as well as divergent thinking and other cognitive abilities, is well studied, the question of how retrieval ability, creative fluency, and originality are related with each other and with other cognitive abilities has so far not been studied in a multivariate way.

RETRIEVAL ABILITY AND DIVERGENT THINKING

Divergent thinking can be conceptualized as a cognitive ability that stresses creative fluency (Runco, 2020) as well as the originality of responses (Carroll, 1993). Other aspects have been proposed, such as the flexibility (Weiss & Wilhelm, 2022) and the elaboration of generated ideas (Forthmann, Oyebade, Ojo, Günther, & Holling, 2018), but are only rarely studied (Hornberg & Reiter-Palmon, 2017; Reiter-Palmon, Forthmann, & Barbot, 2019).

Creative fluency is theoretically intertwined with the ability to retrieve information. This ability has been defined as the “rate and fluency at which individuals can produce and selectively and strategically retrieve verbal and nonverbal information or ideas stored in long-term memory” (Schneider & McGrew, 2018, p. 102). The originality of ideas also relies on retrieval, since it is based upon further processing of both stored and retrieved information, but retrieving information or ideas is not its first and foremost aspect. Previously, the retrieval part has rarely been considered a crucial aspect of creative cognition, because participants are deemed equally able to rely on information available in long-term memory. However, novel methodological approaches to modeling semantic memory and, accordingly, retrieval processes (for example, by semantic networks) have revived the link between goal-directed retrieval and retrieval that is based more on free associations from memory, which is crucial in thinking divergently (Beaty & Kenett, 2023). Furthermore, research provides considerable evidence of how having a lot of ideas (creative fluency/retrieval ability) is related to finding the best idea. For instance, chance models of creativity, like the Equal Odds baseline, assume that creative hits are a positive linear function of the number of produced ideas (Forthmann, Szardenings, & Dumas, 2021).

Models of individual differences in human intelligence locate retrieval, creative fluency, and originality as aspects of idea production, which is in turn deemed part of the ability to retrieve information from long-term memory (e.g., Goecke, Weiss, & Wilhelm, 2024; Silvia, Beaty, & Nusbaum, 2013). More specifically, Schneider and McGrew (2018) propose five first-order factors in the area of idea production: ideational fluency (frequency of coming up with ideas, words, phrases), expressional fluency (expressing ideas in various ways), associational fluency (producing quality ideas to a given concept), alternative solution fluency (coming up with alternative solutions to a given problem), and originality/creativity (producing original ideas regarding a specific topic).

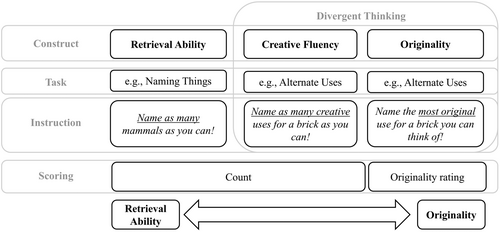

In sum, embedding divergent thinking in models of intelligence—most prominently below a factor of retrieval ability (Schneider & McGrew, 2018)—has a long tradition, and the significant role of retrieval of information from long-term memory in divergent thinking seems self-evident (Carroll, 1993; Guilford, 1956; Miroshnik, Forthmann, Karwowski, & Benedek, 2023). Figure 1 schematically displays the relationship between the three constructs and highlights methodological kinship in terms of performance appraisal.

As displayed in Figure 1, retrieval ability and creative fluency can both be evaluated by using count variables. This implies that retrieval ability and creative fluency are predominantly assessed by human counts of correct and appropriate responses. Furthermore, as depicted in Figure 1, tasks that instruct participants to provide the most original answer can be used to assess the construct of originality. However, in the literature these originality ratings are often also applied to creative fluency tasks that were initially instructed in terms of fluency (“Name as many (creative)”; see Scoring in Figure 1). This might even be the predominant application of tasks in the creativity literature (i.e., tasks that are instructed for creative fluency but scored with regard to both the count of correct answers and their originality), while tasks that only ask for the single most creative response are less frequently applied (e.g., Saretzki et al., 2024). Scoring a creative fluency task for both count data and an originality rating might be time-efficient, but it must be considered critically given the dependency between two scores that are derived from the same set of answers. The possibility of capturing originality (as indicated by uncommonness, remoteness, and cleverness of ideas; Wilson, Guilford, & Christensen, 1953), and not only idea production, makes divergent thinking tasks valuable for creativity assessment (Runco, 2020; Weiss et al., 2021b). Although operationalizing creativity by means of originality seems to meet the core definition of creativity best, the literature on creativity is saturated with simple fluency assessments (count of appropriate answers) in divergent thinking tasks, as they are more time-efficient and less costly to score than human codings of originality (Forthmann, Goecke, & Beaty, 2023; Hornberg & Reiter-Palmon, 2017). Therefore, there are two important research questions that cannot be answered conclusively so far. First, are creative fluency assessments based on count variables psychometrically distinguishable from retrieval ability, which is also based on count variables of appropriate responses? Depending on the answer to this question, a second important research question is whether retrieval and creative fluency show differential relations with established cognitive abilities.

Despite the relative importance of retrieval ability for divergent thinking and the overt overlaps in scoring procedures (cf. count of appropriate answers), only a few studies have explicitly addressed the relationship between divergent thinking and retrieval ability. In previous meta-analytic evidence, the simplex displayed in Figure 1 has mostly been neglected (Acar, Runco, & Park, 2020; Said-Metwaly, Fernández-Castilla, Kyndt, & Van Den Noortgate, 2020). Such meta-analytic studies have, for example, focused on the impact of instruction and time constraints on the ability to be creatively fluent and original, but they have not taken the ability to retrieve information into account.

In contrast, other studies reported the relationship between aspects of divergent thinking and retrieval ability. For example, one multivariate study showed that retrieval ability (including a higher-order model with six narrow ability factors) significantly predicted a factor capturing both creative fluency and originality (β originality = .44, β fluency = .40; cf. Table 4 in Silvia et al., 2013). A similar correlation between divergent thinking and retrieval (r = .54), also on the latent level, was reported by Forthmann et al. (2019). Further evidence for this relationship was reported in a recent meta-analysis (r = .48, 95% CI: [.40, .56]; Miroshnik et al., 2023). This meta-analysis included 41 studies that were, among others, also coded for the type of instruction and the type of divergent thinking indicator that was used. Both variables were examined as moderators: the type of instruction did not play a significant role in the relationship between divergent thinking and retrieval ability; only the type of divergent thinking indicator was a significant moderator of this relationship (Miroshnik et al., 2023). Another moderator, called Gr-ness (i.e., time restriction, instruction condition, and scoring condition of divergent thinking tasks), which should depict the conceptual overlap between Gr and DT measures, did not alter the relationship between divergent thinking and retrieval ability. Based on these results, the authors concluded that the commonalities between retrieval ability and divergent thinking as well as the differences between these constructs (such as retrieving existing information versus generating novel combinations) require further exploration.

Given the theoretical relationship between retrieval, creative fluency, and originality it seems reasonable to think that they might be similarly predicted by the same cognitive abilities. Next, we summarize results reported for the relation between established cognitive abilities and retrieval and aspects of divergent thinking.

COGNITIVE ABILITIES THAT EXPLAIN VARIANCE IN RETRIEVAL ABILITY AND DIVERGENT THINKING

People differ in their ability to think divergently as well as in their ability to retrieve information from long-term memory. These individual differences seem to be partially explained by several cognitive abilities. For example, recent meta-analytical evidence shows not only that a factor accounting for general cognitive abilities is substantially related with overall creativity, but also that various second-order abilities such as fluid and crystallized intelligence, memory and learning related abilities, visual perception, cognitive and processing speed, and retrieval ability are linked to creativity (Serban, Kepes, Wang, & Baldwin, 2023). In addition, numerous studies report a link between retrieval ability and divergent thinking with working memory capacity (WMC) (Benedek, Jauk, Sommer, Arendasy, & Neubauer, 2014b; Lee & Therriault, 2013; Rosen & Engle, 1997; Unsworth, Spillers, & Brewer, 2011), secondary memory (Hedden, Lautenschlager, & Park, 2005; Madore, Jing, & Schacter, 2016), crystallized intelligence (Gc) (Unsworth, 2019; Weiss et al., 2021a), and mental speed (Forthmann et al., 2019; Miroshnik et al., 2023; Vock, Preckel, & Holling, 2011). These cognitive abilities have been previously discussed as crucial predictors of retrieval performance, as they are essential to activate, monitor, and suppress information during the actual retrieval process. A recent multivariate study has shown that these predictors explain 62% of the variance in a higher-order factor of retrieval ability (Goecke et al., 2024). Given the theoretical proximity of retrieval ability and divergent thinking, it seems promising to investigate whether these constructs predict divergent thinking to a similar extent. Next, we present earlier findings regarding the relation between divergent thinking and WMC, secondary memory, Gc, and mental speed.

Working memory capacity

Working memory is considered a cognitive system that facilitates non-automatized cognitive processes by actively retaining information while simultaneously allowing the processing of new information (e.g., Conway, Jarrold, Kane, Miyake, & Towse, 2008). Competing theories of WM agree that it is a system that can only store and process a limited amount of information (Oberauer et al., 2018). Generating creative ideas that require the activation of stored knowledge and its processing into a novel solution seems to place greater demands on executive functions, including working memory, than simply retrieving existing information from long-term memory. This assumption is supported by previous findings that suggest that WMC plays a significant role in divergent thinking (Benedek et al., 2014b; Lee & Therriault, 2013; Weiss et al., 2021a). In line with this, a recent meta-analysis reported k = 176 effect sizes from n = 29 studies for the correlation between WMC and divergent thinking and found a significant but low correlation between both constructs (r = .09, 95% CI [.07, .10]; Gerver, Griffin, Dennis, & Beaty, 2023). This small meta-analytical correlation might be biased by differences in understanding and operationalizing WMC as well as by differences in operationalizing, instructing, and scoring divergent thinking tasks (Gerver et al., 2023). Further meta-analytical evidence reports a similar relationship between WMC (verbal, visual–spatial, and dual-task WMC) and creativity (r = .08, 95% CI [.05, .12]; Gong et al., 2023). This meta-analysis furthermore reports a moderating effect of cultural environments.

Secondary memory

Dual component models of working memory distinguish between primary and secondary memory (Unsworth & Engle, 2007). Primary memory is deemed responsible for active maintenance of a number of information units, whereas secondary memory contains units of information that can no longer be stored in primary memory but that are not yet subject to decay (Unsworth & Engle, 2007). Hence, secondary memory resembles the idea of activated long-term memory, and its importance rises as soon as performance on primary memory or working memory reaches capacity limits (e.g., Mogle, Lovett, Stawski, & Sliwinski, 2008). Previous research has shown that episodic (personal experiences) and semantic secondary memory are involved when it comes to producing divergent ideas. Basically, it can be assumed that creative ideas are based on controlled retrieval from semantic and episodic memory: a search process in stored information that also includes idea construction and evaluation steps (Benedek, Beaty, Schacter, & Kenett, 2023). A study including two experiments showed that response fluency and flexibility of responses are enhanced after an episodic memory induction; however, these experiments did not account for originality (Madore et al., 2016). Further evidence is again provided by a recent meta-analysis that shows that the relationship between semantic memory and divergent thinking (r = .20, 95% CI [.18, .24]) is comparable in magnitude to the relationship between episodic memory and divergent thinking (r = .12, 95% CI [.01, .09]; Gerver et al., 2023). Again, differences in operationalization, instruction, and scoring were not accounted for.

Crystallized intelligence

Evidence on the relationship between Gc and retrieval ability is substantial and based on several multivariate studies including broad construct coverage of Gc (e.g., Goecke et al., 2024; Unsworth et al., 2011). Likewise, there is strong meta-analytical evidence that supports a meaningful link between Gc and divergent thinking (r = .28, 95% CI [.22, .33]; Gerwig et al., 2021). Interestingly, this relationship seems to be even higher in magnitude than the meta-analytical relationship between fluid intelligence and divergent thinking. Specifically, cultural knowledge and vocabulary are related with generating creative ideas (Sligh, Conners, & Roskos-Ewoldsen, 2005). In a further multivariate study, a nested factor of Gc significantly predicted divergent thinking (a latent factor accounting for fluency; β = .38) and even mediated the relationship between the personality trait of openness to experience and divergent thinking (Weiss et al., 2021a). However, a factor for originality was not significantly predicted by Gc (Weiss et al., 2021a). Another study only reported a weak correlation (on the manifest level) between Gc and a figural divergent thinking task (r = .25) and no significant correlation with verbal divergent thinking (Avitia & Kaufman, 2014). A study investigating a sample of young children (3rd graders) found a correlation of r = .25 between crystallized intelligence and divergent thinking coded for fluency based on a count variable (Goecke et al., 2023). This suggests that (a) there is a relationship between retrieval-akin scorings of divergent thinking and Gc and (b) the relationship between originality and crystallized intelligence requires further investigation.

Mental speed

Forthmann et al. (2019) demonstrated a higher correlation of mental speed with retrieval ability (r = .67) compared to its correlation with divergent thinking (r = .31). Nevertheless, they also illustrated that the performance in divergent thinking, modeled as nested below mental speed and retrieval ability, relied on both mental speed and retrieval ability. A recent meta-analysis indicated that the strong correlation between mental speed and divergent thinking (r = .31, 95% CI [.20, .41]) was strongly influenced by the relationship between retrieval ability and mental speed (Miroshnik et al., 2023). Consequently, mental speed exhibited only a marginal R² increment of .03 over retrieval ability (Miroshnik et al., 2023). Contrary to these findings, mental speed (nested below a general factor of cognitive abilities) predicted neither fluency (indicated by count variables accounting for retrieval fluency and creative fluency) nor originality (indicated by originality ratings) in a multivariate study (Weiss et al., 2021a). Hence, the connection between divergent thinking and mental speed remains inconclusive. This discrepancy might stem from variations in operationalization, instruction, and scoring. Furthermore, differences in the paced nature of divergent thinking tasks could introduce bias in estimating the strength of this relationship (Preckel, Wermer, & Spinath, 2011).

THE PRESENT STUDY

For the present study, we pursued two research aims. First, we addressed the question of how creative fluency and originality are related with retrieval ability. Since creative fluency, expressed as a count variable, is conceptually close to retrieval, we assume creative fluency to be highly correlated with retrieval ability. Furthermore, we assume that, due to the positive manifold among ability measures, originality is less strongly, but still substantially, related with retrieval ability. Given prior results, we assume that model fit deteriorates substantially if creative fluency and retrieval fluency are assumed to correlate at unity.
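Whether fixing a latent correlation to unity worsens model fit can be illustrated with a nested model comparison. The following is a minimal sketch, assuming a likelihood-ratio (χ² difference) test in which the constrained model differs from the freely estimated model by exactly one degree of freedom. Neither the test nor the degrees of freedom are stated in the text above, the function name is hypothetical, and the fit values are placeholders rather than results of this study.

```python
import math


def chi2_diff_p_value_df1(chi2_constrained, chi2_free):
    """p-value of a chi-square difference test with one degree of freedom.

    For df = 1 the chi-square survival function has the closed form
    erfc(sqrt(x / 2)), so no statistics library is needed.
    """
    delta = chi2_constrained - chi2_free
    if delta < 0:
        raise ValueError("The constrained model cannot fit better than the free model.")
    return math.erfc(math.sqrt(delta / 2.0))


# Hypothetical fit values (placeholders, not taken from the study).
p = chi2_diff_p_value_df1(chi2_constrained=152.4, chi2_free=131.0)
print(f"p = {p:.6f}")
```

A correlation fixed at 1 lies on the boundary of the parameter space, so the naive p-value may be conservative, and merging two factors in a model with further latent variables usually constrains more than one parameter. The sketch therefore only conveys the logic of the comparison.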

Second, we examined the predictive power of cognitive abilities (working memory capacity, Gc, mental speed, and secondary memory) for the fluency factor(s) and originality. Based on previous findings, we expect that cognitive ability factors predict fluency and originality to a similar degree but might play a larger role in accounting for variance in fluency. Given that originality has been subsumed as a lower-order factor of retrieval ability in previous intelligence models, we further test whether the residuals of retrieval and originality are meaningfully related after controlling for established cognitive abilities. A correlation of these residuals would indicate specificity in the link between both constructs. The research objectives of the present study were not preregistered.
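The logic of the residual correlation can be sketched on the level of manifest scores. The example below is an illustration under stated assumptions, not the latent variable model used in this study: the data are simulated, all variable names and effect sizes are hypothetical, and ordinary least squares replaces the structural equation model. Two outcomes that share nothing but their predictors correlate in the raw scores, yet their residuals are approximately uncorrelated.

```python
import random


def center(xs):
    m = sum(xs) / len(xs)
    return [x - m for x in xs]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def pearson(a, b):
    a, b = center(a), center(b)
    return dot(a, b) / (dot(a, a) * dot(b, b)) ** 0.5


def residualize(y, predictors):
    """OLS residuals of y given the predictors (intercept included).

    Predictors are centered and orthogonalized (Gram-Schmidt); y is then
    projected off each orthogonal direction. Assumes that no predictor is
    a linear combination of the others.
    """
    basis = []
    for p in predictors:
        v = center(p)
        for b in basis:
            coef = dot(v, b) / dot(b, b)
            v = [vi - coef * bi for vi, bi in zip(v, b)]
        basis.append(v)
    r = center(y)
    for b in basis:
        coef = dot(r, b) / dot(b, b)
        r = [ri - coef * bi for ri, bi in zip(r, b)]
    return r


# Simulated, hypothetical data: both outcomes depend only on the predictors.
rng = random.Random(1)
n = 2000
wmc = [rng.gauss(0, 1) for _ in range(n)]
gc = [rng.gauss(0, 1) for _ in range(n)]
fluency = [0.6 * w + 0.4 * g + rng.gauss(0, 1) for w, g in zip(wmc, gc)]
originality = [0.5 * w + 0.3 * g + rng.gauss(0, 1) for w, g in zip(wmc, gc)]

raw_r = pearson(fluency, originality)
residual_r = pearson(residualize(fluency, [wmc, gc]),
                     residualize(originality, [wmc, gc]))
print(f"raw r = {raw_r:.2f}, residual r = {residual_r:.2f}")
```

A residual correlation that stays clearly above zero would, in contrast, point to a specific link between the two outcomes beyond the shared predictors.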

METHOD

PARTICIPANTS AND PROCEDURE

The study was promoted through multiple channels, including social media, mailing lists, flyers, and advertisements in newspapers and public transportation. Data collection occurred in two German cities. Participants needed to be fluent in German and aged between 18 and 45 years, considering that cognitive decline is observed more commonly in later stages of life (Hartshorne & Germine, 2015). Tasks and instructions were presented on 27″ computer screens using Inquisit 6 software (Inquisit 6, Version 6.5.2, 2022). The sequence of tasks was uniform across all participants. Each session had standardized on-screen instructions and was overseen by a trained proctor. The computerized test battery was conducted in group sessions, accommodating up to eight participants simultaneously. The entire test battery, with three breaks (two 5-min breaks and one 15-min break) including snacks and beverages, took about 5 h to complete. All participants provided written consent and received 55 Euros as compensation for their involvement. The study was conducted in adherence to the ethical guidelines outlined in the EU General Data Protection Regulation (GDPR), ensuring participant anonymity and receiving approval from a local ethics committee.

In total, N = 335 participants from the general population took part in our study¹. Of these, N = 331 were eligible for statistical analysis; n = 4 participants were excluded during data cleaning because they either encountered major technical errors or terminated the study early for personal reasons. Please note that the sample size for the current study was determined based on a power analysis conducted for a multivariate study of retrieval ability (see also Goecke et al., 2024). The power analysis was based on the target measurement models of retrieval ability (single-factor vs. correlated factors model with three latent factors) and found that a sample size of N = 300 yields sufficient power (>.80, α = .01) for the targeted parameter estimates of these models (i.e., factor loadings). Of the N = 331 participants, 65.4% were female, and ages ranged from 18 to 42 years (M age = 26.4 years, SD age = 5.4). In total, n = 263 participants indicated holding at least a high school degree, and n = 85 indicated holding a college degree.

MEASURES

The focus of this manuscript is on the measurement of retrieval ability and divergent thinking. All constructs were measured by various tasks in a multivariate fashion as described in the following sections. Furthermore, cognitive abilities were assessed by measuring WMC, Gc, secondary memory, and mental speed. For all constructs displayed in Table 1, measurement models based on aggregated items were estimated. The latent factors displayed acceptable to good reliability (ωRetrievalAbility = .84; ωCreativeFluency = .61; ωOriginality = .60).
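For readers less familiar with the ω coefficient, the following minimal sketch shows how it can be computed from the loadings of a single-factor model. It assumes standardized loadings, a unidimensional factor, and uncorrelated residuals; the function name and the loadings are hypothetical and are not the estimates of this study.

```python
def mcdonald_omega(loadings, residual_variances=None):
    """McDonald's omega for a single factor.

    omega = (sum of loadings)^2 / ((sum of loadings)^2 + sum of residual variances)
    If no residual variances are given, standardized loadings are assumed,
    so that each residual variance equals 1 - loading^2.
    """
    if residual_variances is None:
        residual_variances = [1.0 - l ** 2 for l in loadings]
    common = sum(loadings) ** 2
    return common / (common + sum(residual_variances))


# Hypothetical standardized loadings of three indicators.
omega = mcdonald_omega([0.7, 0.7, 0.7])
print(round(omega, 2))
```

With few indicators and moderate loadings, ω is necessarily limited, which is one way to read the lower values for the divergent thinking factors.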

| Construct | Task | Length (minutes) | Item | ICC3k | M (SD) |
|---|---|---|---|---|---|
| RA | Things | 8 | Animals | 1 | 69.97 (21.41) |
| RA | Things | 8 | Groceries | 1 | 71.58 (25.32) |
| RA | Things | 1.5 | Countries | .97 | 11.23 (4.10) |
| RA | Things | 1.5 | Furniture | .99 | 12.46 (5.55) |
| RA | Things | 1.5 | Plants | 1 | 22.01 (7.60) |
| RA | Words | 8 | First letter “S” | 1 | 75.25 (22.05) |
| RA | Words | 8 | Last letter “N” | 1 | 70.45 (28.43) |
| RA | Words | 1.5 | First letter “D” | 1 | 19.41 (5.54) |
| RA | Words | 1.5 | Last letter “R” | 1 | 13.69 (5.23) |
| RA | Words | 1.5 | Four letters | 1 | 16.70 (5.73) |
| RA | Synonyms | 4 | Lonely | .82 | 6.21 (3.39) |
| RA | Synonyms | 1.5 | To relax | .95 | 6.50 (2.71) |
| RA | Synonyms | 1.5 | Clean | .98 | 6.75 (3.22) |
| CF | Alternate Uses | 1 | Wooden lath | 1 | 5.73 (2.26) |
| CF | Alternate Uses | 3 | Towel | 1 | 14 (5.10) |
| CF | Alternate Uses | 1 | Book | 1 | 5.81 (2.00) |
| CF | Alternate Uses | 3 | Knife | 1 | 9.48 (4.51) |
| CF | Consequences | 3 | Epidemic erases all males | 1 | 6.88 (3.99) |
| CF | Consequences | 1 | Teleportation is possible | 1 | 3.34 (1.75) |
| CF | Consequences | 3 | Only online-shopping is possible | 1 | 7.01 (3.55) |
| CF | Consequences | 1 | Everyone can read and write perfectly | 1 | 2.71 (1.40) |
| ORG | Alternate Uses | 1 | Wooden lath | .75 | 1.99 (.41) |
| ORG | Alternate Uses | 3 | Towel | .88 | 2.14 (.33) |
| ORG | Alternate Uses | 1 | Book | .81 | 2.07 (.38) |
| ORG | Alternate Uses | 3 | Knife | .89 | 1.95 (.44) |
| ORG | Consequences | 3 | Epidemic erases all males | 1 | 2.01 (.56) |
| ORG | Consequences | 1 | Teleportation is possible | .88 | 1.90 (.66) |
| ORG | Consequences | 3 | Only online-shopping is possible | .83 | 2.20 (.55) |
| ORG | Consequences | 1 | Everyone can read and write perfectly | .90 | 1.67 (.65) |
| ORG | Combining Objects | no limit | Construct a scarecrow (place: house with garage, no trees) | .89 | 2.15 (.95) |
| ORG | Combining Objects | no limit | Construct a lightning rod (place: cabin close to a junkyard) | .79 | 2.68 (1.00) |
| ORG | Combining Objects | no limit | Construct a doorstopper (place: ordinary home) | .84 | 3.02 (.89) |
| ORG | Combining Objects | no limit | Construct a stretcher for a wounded person (place: cabin in the forest) | .83 | 2.49 (.92) |
| ORG | Plot Titles | 2 | See OSF | .70 | 2.21 (.83) |
| ORG | Plot Titles | 2 | See OSF | .71 | 2.33 (.86) |
| ORG | Plot Titles | 2 | See OSF | .65 | 2.41 (.77) |
| ORG | Plot Titles | 3 | See OSF | .60 | 2.45 (.77) |
| ORG | Plot Titles | 3 | See OSF | .71 | 2.38 (.79) |
| ORG | Plot Titles | 5 | See OSF | .66 | 2.43 (.80) |

Note. All tasks were administered in German. Please see the open science repository for the full item descriptions of the Plot Titles items. Retrieval ability and creative fluency were coded as count variables; originality was rated on a Likert scale from 1 to 5 (see Method). RA = Retrieval ability; CF = Creative fluency; ORG = Originality.

Retrieval ability

Retrieval ability was measured with both verbal and figural tasks. The verbal tasks tapped the narrow Gr abilities of ideational fluency, expressional fluency, and word fluency. An overview of these tasks and their respective items can be found in Table 1. All tasks were presented to participants in a similar manner: first, participants were instructed regarding the task-specific requirements. For example, instructions for ideational fluency stated that the task was to name as many representatives of a given category as possible (for a full overview, see the column Item in Table 1). Then, participants were presented with an example category and example responses. As soon as participants were ready and had understood the instructions, they were able to start the actual task themselves. Participants then had to type their responses into an open-ended textbox and press “Enter” to log each of their responses until the time limit of the task was reached. For each verbal fluency task, we administered several items (i.e., categories) in both a “long” and a “short” time condition, which refers to the total time for each task per item. That is, we distinguished between short periods of retrieval time (i.e., baseline short) and long periods of retrieval time (i.e., baseline long). Please note that this differentiation between time constraints has no psychometric consequences; that is, long and short time periods for retrieval cannot be distinguished (see Goecke et al., 2024).

The tasks for assessing figural fluency (e.g., “Draw as many objects as you can based on a circle and a rectangle”) were used from the Berliner Intelligenzstruktur-Test für Jugendliche: Begabungs- und Hochbegabungsdiagnostik (Berlin Structure-of-Intelligence test for Youth: Diagnosis of Talents and Giftedness; Jäger, 2006; Jäger, Süß, & Beauducel, 1997). We employed four figural fluency items that were assessed using paper and pencil, as they required participants to draw figures.

All retrieval tasks, verbal and figural, were rated by two to three independent human coders regarding their fluency. To estimate agreement among coders, ICCs were estimated (Koo & Li, 2016). The applied coding procedure for the figural fluency tasks followed the recommendations of the test manual. An ICC3k greater than .90 can be considered very good. The inter-rater reliabilities for each of the verbal retrieval tasks are shown in Table 1. The inter-rater reliabilities for the figural fluency tasks with n = 3 coders ranged from .98 to 1.

Divergent thinking tasks

We applied two tasks for assessing creative fluency and two tasks for assessing originality. Tasks, descriptive statistics, and reliability estimates (ICCs) for all single items are presented in Table 1. As displayed in Figure 1, the two tasks that assessed creative fluency were rated in terms of response quantity (count variable) as well as in terms of response originality. The ICCs were estimated as proposed by Shrout and Fleiss (1979). Based on our study design, we chose the ICC3k formula, which reflects the relative agreement of a fixed set of coders that rated all items. All human coders were semi-experts regarding creativity, and all went through a training procedure prior to coding. All coders were trained in a two-hour session in which the data, their structure, and the scoring guidelines along with a definition of creativity (including fluency and originality) were explained. During the coding procedure, coders evaluated the responses independently and were only provided with the responses of the task they were currently coding.
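The ICC3k can be derived from a two-way decomposition of the rating matrix as (BMS − EMS) / BMS, where BMS is the between-targets mean square and EMS the residual mean square (Shrout & Fleiss, 1979). The following is a minimal sketch of that computation; the function name and the small rating matrix are illustrative assumptions and do not stem from the present data.

```python
def icc3k(ratings):
    """ICC(3,k): consistency of the mean rating of k fixed coders.

    ratings: list of rows, one row per rated target (e.g., participant),
    one column per coder; every coder rates every target.
    """
    n = len(ratings)
    k = len(ratings[0])
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_targets = k * sum((m - grand) ** 2 for m in row_means)
    ss_coders = n * sum((m - grand) ** 2 for m in col_means)
    ss_error = ss_total - ss_targets - ss_coders

    bms = ss_targets / (n - 1)               # between-targets mean square
    ems = ss_error / ((n - 1) * (k - 1))     # residual mean square
    return (bms - ems) / bms


# Illustrative ratings: six targets rated by four coders.
example = [
    [9, 2, 5, 8],
    [6, 1, 3, 2],
    [8, 4, 6, 8],
    [7, 1, 2, 6],
    [10, 5, 6, 9],
    [6, 2, 4, 7],
]
print(round(icc3k(example), 2))  # about 0.91
```

Because mean differences between coders are removed as a separate source of variance, coders who differ only by a constant offset still reach an ICC3k of 1.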

Creative fluency

We used two tasks (four items each) to measure creative fluency. In the alternate uses task (AUT) and consequences (CON) fluency tasks, participants were instructed to produce as many creative and appropriate responses as possible within a given time. Both tests were first described in Wilson, Guilford, Christensen, and Lewis (1954) and have been popular indicators of fluency ever since (e.g., see Fink & Benedek, 2014).

The AUT (e.g., “Name as many creative alternate uses for the following object: ‘towel’”) included four items that were administered with different time constraints. For two of the items the generation period lasted 180 s per item; for the other two items it was 60 s per item. The CON task (e.g., “Name as many creative consequences of a hypothetical situation: e.g., no male humans are living on earth anymore”) also included four items, two with a 180 s time limit each and two with a 60 s time limit each.

The tasks had open response formats and hence, just like other divergent thinking tasks, required human coding. Three independent human coders applied a retrieval-akin fluency coding (number of correct responses) as well as an originality coding. The originality of each idea was rated according to the rareness and uniqueness of a response on a Likert scale from one (“not original”) to five (“very original”) (e.g., Benedek, Mühlmann, Jauk, & Neubauer, 2013; Silvia et al., 2008). Inter-rater reliability was acceptable to high for both tasks (count variable as well as originality scoring; see Table 1). We averaged the scores provided by the three independent coders, resulting in a single mean score per item. Subsequently, all items of a task were aggregated to derive a task score.

Originality

Originality was assessed based on four tasks (with four to six items per task). Two of these tasks overlapped with the creative fluency tasks. This implies that the multiple creative answers produced in these tasks were all coded for originality and averaged afterwards. Even though this practice has rightly been questioned because it undermines the instruction-scoring fit (Reiter-Palmon et al., 2019) and leads to computational dependencies between the scores, given that they are based on the same set of answers, it is the most frequently used scoring setting in the creativity literature. To address these problems, we additionally used two tasks, plot titles (TIT; Wilson et al., 1954) and combining objects (CO; Ekstrom, French, Harman, & Derman, 1976), in which participants were instructed to provide a single response that should be as unique and original as possible.

The items for CO (e.g., “Combine two objects in order to build a door stopper in your house”) were adapted from the Kit of Reference Tests for Cognitive Factors (Ekstrom et al., 1976) and translated from English to German. The task consisted of four items (with no time limit). All items used in the plot titles task were inspired by Wilson et al. (1954); participants were asked to come up with an original title for a short story. This task was piloted in an earlier study (Weiss, Olderbak, & Wilhelm, 2023) and included six items with a time limit of 2 min to deliver a maximally original title.

Three human coders rated all individual responses. Every response was rated by each coder on a five-point scale based on scoring guidelines recommended in the literature (Silvia et al., 2008; Silvia, Martin, & Nusbaum, 2009). More precisely, a response was rated as very original if it was unique/rare/novel (uncommon), remote, and unexpected (clever) within the current sample (e.g., Guilford, 1967; Silvia et al., 2008). Coders were instructed to rate originality in relation to the responses given by other participants; that is, they first scanned across all answers before coding each answer in relation to the sample. Inappropriate responses were coded as zero. ICCs for originality were all acceptable. We averaged the scores provided by the three independent coders, resulting in a single mean score per item. Subsequently, all items of a task were aggregated to derive a task score.

Working memory capacity

WMC was measured by three tasks tapping location-letter binding, numerical updating, and spatial-figural updating (Wilhelm, Hildebrandt, & Oberauer, 2013). In the spatial-figural updating paradigm colored rectangles are presented and updated in a 3 × 3 grid matrix. In this task, participants are asked to remember the last position of each colored rectangle in the matrix. Similarly, the numerical updating task used numbers as stimuli which are presented and continuously updated on the screen. For each trial, participants were asked to remember the last number for each possible position. In the location-letter binding task, a trial included the sequential presentation of a list of letter-position bindings, which participants were instructed to remember. For a more detailed description of the tasks see Goecke et al. (2024). All WMC tasks were scored according to partial credit scoring procedures. The scores per task were used as indicators in the latent variable modeling approach.

Secondary memory

Secondary memory (SM) was assessed using a word-word and a word-number task (cf. Wilhelm et al., 2013). In both tasks, participants were asked to learn and memorize 40 word or word-number pairs divided into two blocks each. Between learning and recall, participants completed other tasks for 3 minutes. After the retention interval, participants were asked to recall the learned stimulus pairs of each list. Secondary memory was also scored according to a partial credit scoring scheme (Conway et al., 2005). For more information see Goecke et al. (2024). Task performance per block (i.e., four mean scores) served as indicators for latent variable modeling.

Mental speed

Mental speed was also assessed using three tasks: A figural comparison task (two strings of three abstract figures simultaneously presented on screen; Goecke, Schmitz, & Wilhelm, 2021; Schmitz & Wilhelm, 2016), a numerical search task (digits ranging from 0 to 9; Schmitz & Wilhelm, 2016), and a lexical decision task (Lepore & Brown, 2002; Meyer & Schvaneveldt, 1971; Vurgun, 2014). For the respective tasks, participants had to decide as fast as possible if the strings of abstract figures were identical, if the presented digit was a “3” or not, and if the word was either an existing verb/noun or a non-word. All tasks used a binary response format. For more information regarding outliers and implausible values see Goecke et al. (2024). Task scores were mean response times of the participants; these scores were used for latent variable modeling.

Crystallized intelligence (Gc)

Crystallized intelligence was measured with vocabulary tests and tests of declarative knowledge. The vocabulary test included 50 items sampled from three different existing measures of vocabulary (Lehrl, 2005; Schmidt & Metzler, 1992; Schroeders, 2018). One parcel per test was built and subsequently used for latent variable modeling. For more information see Goecke et al. (2024). Declarative knowledge was measured with 96 items that cover four broad knowledge domains (natural sciences, social sciences, humanities, and life sciences; cf. Steger, Schroeders, & Wilhelm, 2019). Half of the items were presented in a multiple-choice response format with four response alternatives; the other half were presented in an open-ended response format (cf. Goecke, Staab, Schittenhelm, & Wilhelm, 2022). Parcels for latent variable modeling were built for each response format within each broad knowledge domain (i.e., 8 parcels). For more information see Goecke et al. (2024).

DATA TREATMENT

Prior to statistical analysis, the data of interest for the current study were checked for outliers and implausible values. We investigated multivariate outliers across task scores in our sample based on the Mahalanobis distance (see also Meade & Craig, 2012). The Mahalanobis distance is the standardized distance of one data point from the mean of the multivariate distribution of several task scores. For our study, we considered participants' fluency scores on three of the retrieval indicators and their scores on the three vocabulary tests to account for the verbal nature of most of the administered tasks. We excluded a total of n = 9 participants due to Mahalanobis distances >20. Additionally, n = 2 more participants were removed because they were extreme outliers (>3 SDs) in the alternate uses task, a frequently applied divergent thinking task. The final sample for statistical analysis thus consisted of N = 320 participants.
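The multivariate outlier screen described above can be sketched as follows. This is an illustrative stdlib-only implementation, not the study's code: the function names are hypothetical, the sample covariance matrix (denominator n − 1) is an assumption, and the text does not state whether the cutoff of 20 refers to the distance or its square, so the sketch applies a user-supplied cutoff to the squared distance and labels that choice in the code.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("covariance matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def mahalanobis_sq(rows):
    """Squared Mahalanobis distance of each row from the sample centroid."""
    n, p = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(p)]
    devs = [[r[j] - means[j] for j in range(p)] for r in rows]
    # Sample covariance matrix (assumption: denominator n - 1)
    cov = [[sum(d[a] * d[b] for d in devs) / (n - 1) for b in range(p)]
           for a in range(p)]
    # d' S^{-1} d, computed via a linear solve instead of an explicit inverse
    return [sum(x * y for x, y in zip(d, solve(cov, d))) for d in devs]


def flag_outliers(rows, cutoff):
    """Indices of rows whose squared distance exceeds `cutoff` (assumed scale)."""
    return [i for i, d2 in enumerate(mahalanobis_sq(rows)) if d2 > cutoff]
```

In the setting described above, each row would hold one participant's six task scores (three retrieval indicators and three vocabulary tests).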

STATISTICAL ANALYSIS

All analyses were conducted with R (R Core Team, 2022) and we provide all materials necessary to reproduce the analyses in an online repository: https://osf.io/bt5zu/.

Confirmatory Factor Analysis (CFA) was carried out with the R package lavaan (Rosseel, 2012). Where possible, we used full information maximum likelihood estimation under the assumption of missing completely at random to handle missing data and parameter estimation in a single step (Schafer & Graham, 2002; Enders, 2010). Models based on continuous indicators were estimated with the maximum likelihood estimator with robust standard errors (MLR). The following values were considered to indicate good model fit: CFI (Comparative Fit Index) ≥ .95, RMSEA (Root Mean Square Error of Approximation) ≤ .06, and SRMR (Standardized Root Mean Square Residual) ≤ .08 (Hu & Bentler, 1999). For acceptable model fit these boundaries were used: CFI ≥ .90, RMSEA ≤ .08, and SRMR ≤ .10 (Bentler, 1990; Browne & Cudeck, 1992). We also computed dynamic fit indices (McNeish & Wolf, 2023), since fixed cutoffs are not a panacea for evaluating model fit (e.g., Groskurth, Bluemke, & Lechner, 2023). Therefore, we additionally evaluated model fit (CFI and RMSEA) from the empirical data against suggested dynamic cutoffs for “mediocre”, “fair”, and “close” model fit. Fair fit on CFI and RMSEA is recommended as a lower threshold for accepting models (McNeish & Wolf, 2023). Close fit is meant to indicate excellent fit of a model. Throughout the results, we transparently report both traditional and dynamic fit indices, but do not reject models based on single fit indices. We used McDonald's ω as an indicator of factor saturation (McDonald, 1999; Raykov & Marcoulides, 2011). Factor saturation indicates how much variance in all underlying indicators is accounted for by a latent variable (Brunner, Nagy, & Wilhelm, 2012). Prior to modeling, indicator variables were standardized to account for differences in scales.
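The traditional fixed cutoffs listed above can be written down as a small classification rule. The sketch below is only a reading aid: the function name `classify_fit` is hypothetical, and requiring all three indices to meet a threshold at once is an assumption of this sketch (the text explicitly does not reject models on single indices). Dynamic fit cutoffs are model-specific and are not covered here.

```python
def classify_fit(cfi, rmsea, srmr):
    """Label a model by the fixed cutoffs quoted in the text.

    Assumption of this sketch: a label is assigned only if all three
    indices meet the respective threshold simultaneously.
    """
    if cfi >= 0.95 and rmsea <= 0.06 and srmr <= 0.08:
        return "good"            # Hu & Bentler (1999) thresholds
    if cfi >= 0.90 and rmsea <= 0.08 and srmr <= 0.10:
        return "acceptable"      # more lenient boundaries
    return "below acceptable"
```

Such a rule is deliberately coarse; it says nothing about how far a model lies from a boundary, which is one reason to report the indices themselves alongside any label.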

RESULTS

RETRIEVAL ABILITY, CREATIVE FLUENCY AND ORIGINALITY

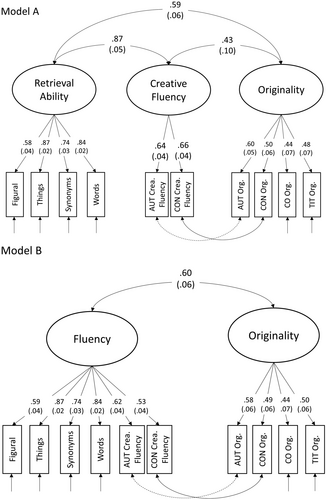

First, we examined the relationship between retrieval ability, creative fluency, and originality. Both retrieval ability and creative fluency are based on a count of correct answers and therefore express fluency of performance. The measurement models we examined are displayed in Figure 2. Traditional fit indices of Model A showed acceptable fit, whereas the dynamic fit indices indicated that the model fit was below acceptability (i.e., <mediocre). Model A shows that retrieval ability and creative fluency are highly correlated (r = .87; SE = .054; p < .001). In contrast, retrieval ability and originality are related more weakly but still substantially (r = .59; SE = .060; p < .001). Interestingly, creative fluency and originality, both aspects of divergent thinking, are even more weakly related with one another (r = .43; SE = .099; p = .001) than either construct is related with retrieval ability. Even though the correlation between retrieval ability and creative fluency is not at unity, fixing this correlation to unity leads to only a slight deterioration of fit (cf. Model B in Figure 2). Given these results, retrieval ability and creative fluency can be subsumed below one fluency factor that entails ideational, expressional, word, or creative fluency. This factor is substantially related with originality (r = .60; SE = .061; p < .001).

ADDING COGNITIVE ABILITIES

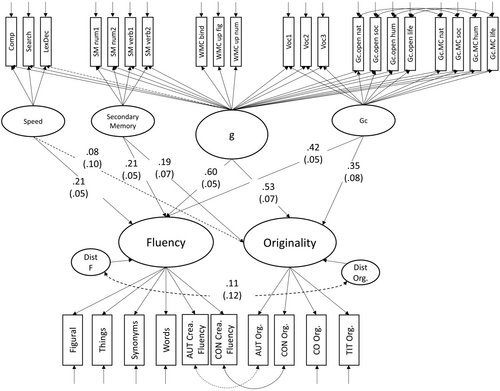

Second, we aimed to examine to what degree general cognitive abilities account for variance in originality and fluency. We were also interested in whether fluency and originality are related after controlling for cognitive abilities. Hence, we extended the model by a measurement model of cognitive abilities (for a detailed description of the measurement model see Goecke et al., 2024) that consists of a general factor (marked by the WMC tasks) and three nested factors capturing unique shares of variance of Gc, secondary memory, and mental speed, respectively. We chose a bifactorial measurement model that is described as bifactor-(S-1) in the literature (Eid, Geiser, Koch, & Heene, 2017; Eid, Krumm, Koch, & Schulze, 2018). This model contains only three specific factors (S; in Figure 3: Speed, Secondary Memory, and Gc) and omits the fourth specific factor (WMC) in favor of a reference factor. Given the importance of WMC/fluid abilities for general cognitive abilities (e.g., Carroll, 1993; Kyllonen & Christal, 1990; Wilhelm et al., 2013) and given the methodological problems associated with full bifactor models (e.g., vanishing factors, irregular loading patterns, negative variances; Eid et al., 2017), this approach is warranted. This model was then combined with Model B as displayed in Figure 2.

The structural equation model displayed in Figure 3 fitted the data well (dynamic fit: close fit). General cognitive abilities (g) predicted both fluency (β = .60) and originality (β = .53) to a similar degree. Gc (β = .42 and β = .35, respectively) and secondary memory (β = .21 and β = .19, respectively) also predicted both fluency and originality. The regression weights from these nested factors indicate approximately similar proportions of variance accounted for by established cognitive abilities. Mental speed significantly predicted only fluency (β = .21) and did not play a significant role for originality (β = .08); nevertheless, compared to the other predictors, both regression weights were substantially smaller. In total, 62.5% (R² = .625) of the variance in fluency and 47% (R² = .47) of the variance in originality were explained by the set of predictors. To investigate the unique relation between fluency and originality after controlling for the predictor space, we correlated their respective residuals (i.e., disturbance terms) with one another, which yielded a non-significant correlation (r = .11).

Please note that in Figure 3 all regression weights towards fluency and originality were freely estimated. To examine whether the amounts of explained variance in the factors of fluency and originality were statistically different from one another, we fitted an additional model in which the regression weights of each predictor on fluency and on originality were constrained to be equal. Although the constrained model still exhibited good fit (n = 320; χ²(405) = 795.69, CFI = .90, RMSEA = .06 with 90%-CI [.05; .06], SRMR = .07; dynamic fit: close fit), a formal model comparison revealed that the model displayed in Figure 3 (no constraints) fitted the data significantly better than the constrained model (∆χ²(4, N = 320) = 26.62, p < .001).
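The size of the p value behind the reported model comparison can be checked by hand. The sketch below uses the closed-form upper tail of the chi-square distribution, which exists for even degrees of freedom; the function name `chi2_sf_even_df` is hypothetical. It assumes that the reported difference statistic can be referred to a standard chi-square distribution; with robust (MLR) estimation a scaled difference test is often used instead, and the text does not specify which variant was applied.

```python
import math


def chi2_sf_even_df(x, df):
    """Upper-tail probability P(X > x) for a chi-square variable with even df.

    Uses the closed form exp(-x/2) * sum_{i < df/2} (x/2)^i / i!.
    """
    if df <= 0 or df % 2 != 0:
        raise ValueError("this closed form requires a positive, even df")
    half = x / 2.0
    term = 1.0    # (x/2)^0 / 0!
    total = 1.0
    for i in range(1, df // 2):
        term *= half / i      # builds (x/2)^i / i! incrementally
        total += term
    return math.exp(-half) * total


# Difference statistic and degrees of freedom as reported in the text
p_value = chi2_sf_even_df(26.62, 4)
```

Under the stated assumption, `p_value` is on the order of 10⁻⁵, which agrees with the reported p < .001.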

DISCUSSION

A long tradition of cognitive ability models, but also factor analytical approaches to human intelligence, subsumed key aspects of divergent thinking, such as originality, as part of a factor of the ability to retrieve information from long-term memory (e.g., Carroll, 1993; McGrew, 2009; Schneider & McGrew, 2018). Importantly, this classification has been neglected in creativity research and aspects of divergent thinking have mostly been studied disconnected from cognitive abilities (e.g., retrieval ability). In this study, we contribute to answering the question of how retrieval ability, creative fluency, and originality are related with each other and how they are related with each other once established intelligence factors are controlled for.

RETRIEVAL ABILITY AND CREATIVE FLUENCY

Retrieval ability was highly correlated with creative fluency. Both factors are based on tasks that express performance as count variables. The correlation between both abilities was close to unity, and subsuming both abilities below one fluency factor hardly deteriorated fit. The correlation in the present data seems to be higher than relations previously reported in either multivariate individual studies or meta-analyses (see for example Miroshnik et al., 2023; Silvia et al., 2013).

However, previous theoretical and statistical models have stressed the closeness of both constructs, which obviously lies in the method of performance appraisal if both are coded by a count variable. A general factor of fluency that subsumes retrieval ability and creative fluency is therefore in line with the factor structure proposed by Schneider and McGrew (2018) and Carroll (1993). In the creativity literature, such count variables are often used because of their time-efficiency and cost-effectiveness (e.g., Reiter-Palmon et al., 2019). Our study, however, shows that if indicators of creative fluency are analyzed based on simple count variables, they are highly related to the ability to retrieve information from long-term memory. Their extremely strong relationship, as well as the result that model fit hardly diminishes if both factors are collapsed into one factor, even allows for the conclusion that these two arguably distinct constructs (both displayed by count variables) are measuring the same underlying ability. Interestingly, creative fluency is more strongly related with retrieval ability than with originality. This implies that creative fluency based on count variables has much less to do with originality than previously expected. Furthermore, the correlation between originality and retrieval ability is similar to the correlations that have been found hitherto (e.g., Miroshnik et al., 2023). This correlation indicates that retrieval ability and originality are related; however, they are far from being the same. The present result and the strength of the relation reported by Silvia et al. (2013) seem to match this. In fact, the reported correlations challenge assumptions that can be drawn from monotrait-monomethod and heterotrait-monomethod considerations (Campbell & Fiske, 1959). That is, given that creative fluency and originality are deemed two indicators of the same underlying construct, namely divergent thinking, they should be more highly correlated with one another (monotrait-monomethod) than with another construct that is not deemed part of the construct rationale of divergent thinking per se (e.g., retrieval ability: heterotrait-monomethod). In contrast to that assumption, our results rather endorse an interpretation of creative fluency being a plausible indicator of retrieval ability, whereas originality should be seen as something quite different.

Based on our findings, it is prudent to conclude that creative fluency indicators that are based on count variables are more akin to retrieval from long-term memory than they are to originality. These findings also provide further evidence for the existence of originality as a latent factor, if measured broadly, that can be distinguished from fluency factors and is meaningfully related to other abilities. In previous work, the existence of originality has been questioned, for example, due to limited factor variance of a nested originality factor (Weiss et al., 2021a) or due to redundancies and confounds with (creative) fluency (e.g., Batey, Chamorro-Premuzic, & Furnham, 2009; Preckel, Holling, & Wiese, 2006). Other studies show that originality, as indicated by various tasks using multiple items (e.g., combining objects task, creative consequences tasks, etc.), does exist and is distinct from creative fluency (see, for example, Weiss et al., 2023). This inconclusiveness regarding originality can be traced back to uncertainties regarding the many ways in which this construct can be measured. Taken together, our present results rather stress the importance of originality as a distinct factor (if measured broadly based on indicators asking for one very original answer, but also including indicators that average the originality across multiple answers), and we suggest, despite their time and cost efficiency, refraining from using count variables and using originality indicators instead.

FLUENCY AND ORIGINALITY BEYOND COGNITIVE ABILITIES

Prior research endorses the view that relations between indicators of fluency (e.g., retrieval indicators) are not simply due to these indicators being a linear combination of various established cognitive abilities (Goecke et al., 2024). Similarly, divergent thinking has been related to cognitive abilities. However, most studies are restricted to measures of fluid and/or crystallized intelligence and might thus not exhaust the predictive power of established intelligence factors (e.g., Gerwig et al., 2021). Given the conceptual proximity of retrieval ability and originality, we expected that cognitive abilities that have been shown to explain substantial variance in retrieval ability (WMC, Gc, secondary memory, and mental speed) would explain variance in divergent thinking to a similar degree. Specifically, we found that a general factor of intelligence (referenced by WMC) explained the most variance in originality, while nested Gc was the second-strongest predictor, ahead of secondary memory and mental speed (which was unrelated with originality). Recent meta-analytical findings on the relationship between creativity and various Stratum II abilities (cf. Three-Stratum Theory of Cognitive Abilities; Carroll, 1993) support our findings and report correlations of a similar magnitude. In sum, we found that these constructs explained 47% of the variance in divergent thinking, compared to 63% of the variance in fluency (indicators of retrieval ability and creative fluency). Previous studies have related fluency (as an indicator of retrieval ability and creative fluency) as well as originality with these cognitive abilities and found similar predictive power.
Given the overlap between fluency and originality and previous results in which divergent thinking/originality assessments were often contaminated with fluency, inflating the correlations between the two (Forthmann, Szardenings, & Holling, 2020; Hocevar, 1979), the assumption that originality is subsumed under fluency inherently makes sense. However, most studies have not included the variety of cognitive abilities, fluency indicators, and originality indicators needed to go further and test whether fluency and originality really share commonalities beyond cognitive abilities. In our study, we found that residuals of fluency and originality are not meaningfully related once established cognitive abilities are controlled for. This implies that the two constructs have nothing in common beyond the variance that is explained by Gc, WMC, and secondary memory. Based on this finding we would argue that (a) originality and the fluent retrieval of information from long-term memory are much less related than previously suggested; and (b) the results question theoretical models that subsume originality as a part of retrieval ability (e.g., Schneider & McGrew, 2018). Therefore, we would argue that originality is a unique ability that is related with other cognitive abilities, but not subsumed under any one of them.

LIMITATIONS AND DESIDERATA FOR FUTURE RESEARCH

The current study included a broad variety of indicators and used a large community sample. Nevertheless, there are a few limitations that have to be kept in mind when interpreting the results. First, creative fluency was only assessed based on two tasks (each including four items). More tasks are desirable to allow better abstraction from peculiarities of specific indicators. Second, the tasks that were used to measure divergent thinking were all based on verbal indicators, whereas retrieval ability also included a figural indicator. Future studies should cover a broader range of task content to obtain better construct coverage. Third, the scoring procedures applied in the creative fluency as well as the originality tasks might challenge the current results: different scoring procedures might have led to (slightly) different results. For example, if only truly novel answers were scored as correct responses in creative fluency tasks and if all answers that seem to display a pure retrieval from long-term memory were dismissed (Benedek et al., 2014a, 2014b; Gilhooly, Fioratou, Anthony, & Wynn, 2007; Silvia, Nusbaum, & Beaty, 2017), this scoring could have affected the relationship between creative fluency and retrieval ability as well as originality. In such a case, the correlation between creative fluency and retrieval ability might be lower than reported in this study. We thus want to stress that future research might benefit from studying such moderating effects. This also applies to various scoring procedures that could be applied to score originality. In our current study originality was scored by rating each and every answer. However, a recent quantitative review of human scoring methods shows more than 100 different instructions and scoring procedures of divergent thinking tasks (Saretzki et al., 2024). 
Future studies should systematically investigate how different scoring approaches of creative fluency tasks and originality tasks and aggregation methods in scorings are related to one another and how they affect relations with other cognitive abilities. Such a study should also include variants of computerized scorings that are based on large language models (e.g., Organisciak, Acar, Dumas, & Berthiaume, 2023).

CONCLUSION

In sum, we found that retrieval ability and creative fluency were highly related to one another. Originality was related to both, but to a much smaller degree. We conclude that count variables as used in creativity research rather capture retrieval ability. Interestingly, originality and the ability to fluently retrieve information from long-term memory were not related to one another beyond working memory capacity, crystallized intelligence, and secondary memory. These empirical findings stress the unique position of originality and its distinctiveness from fluency, and they question several theoretical intelligence models that place originality among factors of the ability to retrieve information. We conclude that the placement of originality under retrieval ability should be rethought.

Acknowledgment

Open Access funding enabled and organized by Projekt DEAL.

Open Research

DATA AVAILABILITY STATEMENT

All files and data for analyses are available at Open Science Framework: https://osf.io/bt5zu/. The authors have no conflicts of interest to disclose. The study was conducted in accordance with the declaration of Helsinki.

REFERENCES

- 1 Please note that, given the multivariate design of the study, another manuscript has been published based on the same sample (Goecke et al., 2024).