Artificial intelligence and radiomics applied to prostate cancer bone metastasis imaging: A review

Abstract

The skeletal system is the most common site of metastatic prostate cancer and these lesions are associated with poor outcomes. Diagnosing these osseous metastatic lesions relies on radiologic imaging, making early detection, diagnosis, and monitoring crucial for clinical management. However, the literature lacks a detailed analysis of various approaches and future directions. To address this gap, we present a scoping review of quantitative methods from diverse domains, including radiomics, machine learning, and deep learning, applied to imaging analysis of prostate cancer with clinical insights. Our findings highlight the need for developing clinically significant methods to aid in the battle against prostate bone metastasis.

Abbreviations

- CT: computed tomography
- GLUE: global-local unified emphasis
- GRAD-CAM: gradient-weighted class activation mapping
- HIPAA: Health Insurance Portability and Accountability Act
- KNN: k-nearest neighbor
- MBD: metastatic bone disease
- ML: machine learning
- MRI: magnetic resonance imaging
- PET: positron emission tomography
- PSMA: prostate-specific membrane antigen
- R-CNN: region-based convolutional neural network
- ROI: region of interest
- SEER: Surveillance, Epidemiology, and End Results
- SREs: skeletal related events
- SVM: support vector machine
- WBS: whole bone scintigraphy
- XGB: eXtreme gradient boosting
- YOLO: you only look once

1 INTRODUCTION

The main physiological functions of bone are to provide structural support for the body, protect internal organs, store calcium and phosphate, and provide an environment for bone marrow (which contains hematopoietic and mesenchymal stem cells for the production of blood cells and connective tissue, respectively) [1]. Bone is the 3rd most common site of metastasis in the body overall [2]. These bone lesions can appear as osteoblastic/sclerotic (bone-forming), marked by increased intensity on imaging; osteolytic (bone-destroying), marked by decreased intensity on imaging; or mixed appearance. Prostate cancer is the most common nondermatologic cancer in men and has the second highest cancer mortality [3]. Bone is the most common site of metastases from prostate cancer, occurring in more than 80% of patients with advanced prostate cancer [4]. These metastatic lesions typically present with an osteoblastic appearance but can also form osteolytic lesions [4, 5]. Tumor cells preferentially affect the highly vascularized regions of bone, including the vertebrae, pelvis, ribs, and proximal ends of long bones [6] (Figure 1).

Bone metastasis physiology. Osteoblastic factors secreted by metastatic cancer cells include endothelin-1 (ET-1), bone morphogenetic protein (BMP), Wingless proteins (Wnt), and parathyroid hormone-related peptide (PTHrP), which stimulate new bone formation. PTHrP can also stimulate osteoblasts to secrete receptor activator of nuclear factor-kappa B ligand (RANKL), which binds to RANK receptors on osteoclasts to trigger bone resorption. Bone resorption then releases various growth factors, such as transforming growth factor-beta (TGF-β), the insulin-like growth factor (IGF)-1, and extracellular calcium, that drive cancer cell growth.

The seed and soil model, a well-validated model originally proposed by Stephen Paget in 1889, describes how tumor cells preferentially select specific metastatic environments, with bone being one such site for some cancers [7]. Bone metastases in prostate cancer carry a poor prognosis, with a median survival of approximately 40 months and a 5-year survival rate of 33% [8]. Seminal trials such as the Chemohormonal Therapy Versus Androgen Ablation Randomized Trial for Extensive Disease in Prostate Cancer stratify patients into low-volume and high-volume groups by the number and location of metastases, with high-volume patients showing improved response to chemo-hormonal treatment [9]. Patients with metastatic bone lesions are at particular risk for developing a range of complications known as skeletal related events (SREs), which include bone pain, pathological fractures, hypercalcemia, and spinal cord compression [10]. SREs occur in up to half of advanced prostate cancer patients and greatly impair quality of life, mobility, and functionality [11]. Thus, the early detection, treatment, and monitoring of metastatic bone lesions in prostate cancer cases remain paramount. Until recently, the nuclear medicine scan (bone scintigraphy) was the most commonly used modality for detecting malignant bone lesions. It provides several advantages, including (1) the ability to capture detailed functional information such as blood flow and metabolic activity, (2) the ability to scan the entire skeleton, unlike computed tomography (CT) or magnetic resonance imaging (MRI), which are fixed at a specific location, and (3) lower radiation exposure and cost. Although MRI provides distinct capabilities, including higher sensitivity to soft tissue structures, it is subject to complicated imaging protocols and higher cost. CT, on the other hand, offers higher spatial resolution and is highly sensitive to bone morphology; however, it adds to the patient's radiation dose. 
More recently, positron emission tomography-computed tomography (PET-CT) has become the imaging modality of choice for visualizing these bone lesions due to its combination of anatomic and functional imaging capabilities. However, it does not reduce radiation exposure or cost [12]. It is crucial to diagnose malignant bone lesions at an early stage to accurately stage and monitor the disease and assess treatment response. Early detection of these bone lesions can be approached through multiple paradigms, such as prediction, classification, detection, and segmentation, depending on the objective. These tools enable clinicians to make informed decisions using quantitative and qualitative assessments to improve disease detection, progression tracking, and management.

Artificial intelligence (AI) in healthcare has recently made significant strides, from enhanced diagnostic accuracy to personalized treatment recommendations. AI is generally defined as the ability of computers to replicate human intelligence and behavior. Machine learning (ML) is a subfield of AI that utilizes statistical methods to perform tasks often rooted in pattern recognition, typically by minimizing a cost function to reduce error. Deep learning is a subfield of ML that specifically uses deep neural networks as a model architecture. Fueled by data, ML models now excel in detecting complex medical conditions, aiding in early disease identification. ML-based methodologies often have a significant role in automation across the paradigms. Popular regression-based techniques such as Elastic Net, Lasso, Ridge, and support vector regressors have been used extensively in regression analysis. Furthermore, traditional techniques such as the support vector machine, decision trees, random forest, and K-nearest neighbor (KNN) were the dominant approaches in classification tasks. In segmentation, contour-based, region-growing, and threshold-based techniques are widely used. However, these classical ML methods require domain expertise followed by manual feature extraction, and their performance often remains subpar on complex problems. Neural network-driven architectures overtook classical ML techniques by automatically extracting pertinent features. In particular, convolutional neural networks (CNNs) have shown immense potential in addressing complex problems.

Additionally, radiomics has been applied to a variety of pathologies to improve lesion analysis [13-15]. Radiomics is a broad term but generally refers to the quantitative extraction of features from radiologic images. These features include both first-order features (e.g., pixel intensity) and higher-order features (e.g., the Gray-Level Co-occurrence Matrix and Gray-Level Run-Length Matrix) [16]. Radiomic features can be used as input for ML models, alongside manually annotated image qualities and direct image data, to enhance the performance of these models [17]. While these quantitative tools are promising for analyzing radiologic images, they are mostly restricted to the research domain and have not yet been translated into clinical work. This review discusses the quantitative approaches used to study osseous metastatic prostate cancer lesions on radiologic imaging, focusing on AI and radiomics methods. Shedding light on these methods reveals underdeveloped research topics and identifies the knowledge gaps and steps needed to translate these strategies and their application to clinical practice.
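To make the distinction between first-order and higher-order features concrete, the following is a minimal sketch of how a mean intensity and two Gray-Level Co-occurrence Matrix (GLCM) statistics could be computed. The toy region of interest, the horizontal one-pixel offset, and all function names are illustrative assumptions and are not taken from any of the reviewed studies; dedicated radiomics toolkits apply additional steps such as intensity discretization and averaging over several directions.

```python
from collections import Counter


def first_order_mean(image):
    """Mean pixel intensity, a simple first-order feature."""
    pixels = [p for row in image for p in row]
    return sum(pixels) / len(pixels)


def glcm(image, dx=1, dy=0):
    """Normalized co-occurrence probabilities for the offset (dx, dy).

    Returns a dict mapping (i, j) gray-level pairs to the fraction of
    pixel pairs in which level i has a neighbor with level j.
    """
    counts = Counter()
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[(image[r][c], image[r2][c2])] += 1
    total = sum(counts.values())
    return {pair: n / total for pair, n in counts.items()}


def glcm_contrast(p):
    """Contrast: large when neighboring gray levels differ strongly."""
    return sum(prob * (i - j) ** 2 for (i, j), prob in p.items())


def glcm_energy(p):
    """Energy (angular second moment): large for uniform textures."""
    return sum(prob ** 2 for prob in p.values())


# Hypothetical 4 x 4 region of interest with gray levels 0 to 3.
toy_roi = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]

mean_intensity = first_order_mean(toy_roi)
p = glcm(toy_roi)
contrast = glcm_contrast(p)
energy = glcm_energy(p)
print(mean_intensity, contrast, energy)
```

The first-order mean ignores where pixels sit, whereas the GLCM statistics depend on the spatial arrangement of gray levels, which is why the two families of features carry complementary information.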

Given the critical roles of bone in both normal physiology and as a common site of metastasis, particularly in prostate cancer, the necessity for precise detection and monitoring of bone lesions becomes evident. Traditional imaging modalities, while useful, have limitations in sensitivity, specificity, and practical application. This landscape sets the stage for exploring innovative approaches such as AI and radiomics. By leveraging the power of ML and deep learning, these methods can offer enhanced diagnostic accuracy and early detection capabilities. Thus, the research objective of this review is to evaluate the quantitative approaches, particularly AI and radiomics, for studying osseous metastatic prostate cancer lesions. This evaluation aims to highlight how these advanced techniques can address existing limitations in clinical practice, thereby improving disease detection, progression tracking, and patient management.

The inclusion criteria for this review include research papers that cover quantitative methods (radiomics, ML, and deep learning) applied to the study of bone metastatic prostate cancer lesions on radiologic and nuclear imaging (X-ray, CT, MRI, positron emission tomography [PET], PET-CT, and whole bone scintigraphy). The following keyword search was run on PubMed:

(“prostate cancer” OR “prostate metastases” or “prostate lesion” OR ((prostate OR prostatic) AND (cancer OR cancers OR metastases OR metastasis OR neoplasm OR neoplasms OR metastatic OR tumor OR tumors OR malignant OR tumor OR tumors))) AND (radiology OR radiomic OR radiomics OR radiologic OR CT OR MRI OR PET OR PET/CT OR x-ray OR scintigraphy OR imaging) AND (bone OR skeleton OR bones OR osseous OR blastic OR lytic) AND (“machine learning” OR “deep learning” OR “artificial intelligence” OR “neural network” OR “neural networks” OR “radiomic” OR “radiomics” OR “statistical”).

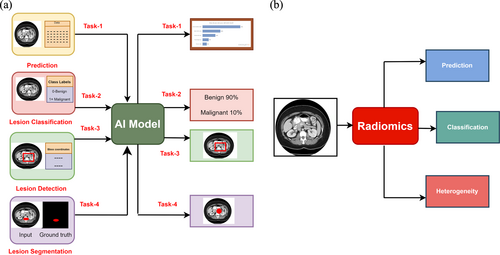

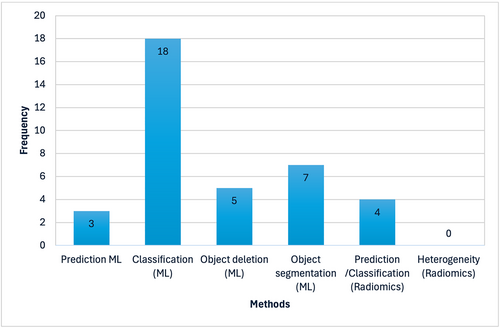

A representative sample of 37 papers covering multiple imaging modalities and quantitative methods was selected for this review. We divide the review by the primary task that the quantitative methods aimed to achieve. Some of the most common aims of ML include prediction, classification, object detection, and object segmentation (Figure 2a). Some of the most common aims of radiomics include prediction, classification, and heterogeneity analysis (Figure 2b). Figure 3 depicts the number of papers included for each method.

Inclusion criteria overview. (a) Machine learning and deep learning techniques and (b) Radiomics-based approaches for addressing prostate bone metastasis.

Graphical depiction of various methods proposed in the literature on prostate bone metastasis versus number of publications.

2 MACHINE LEARNING AND DEEP LEARNING TECHNIQUES

2.1 Prediction

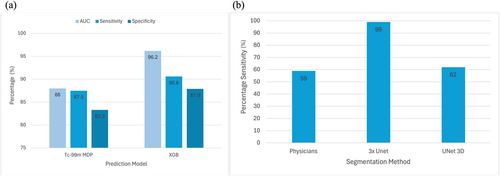

Prediction aims to use imaging data to predict future events, such as patient outcomes or treatment response. In early 2008, Chiu et al. [18] presented a perceptron-based neural network for classifying prostate cancer as skeletal or nonskeletal metastasis from technetium 99m-methylene diphosphonate (Tc-99m MDP) whole-body bone scintigraphy images. This study achieved an area under the receiver operating characteristic curve (AUC) of 0.88 ± 0.07 (p < 0.001), with a sensitivity of 87.5% and a specificity of 83.3%. However, the inherent limitations of artificial neural networks (ANNs), such as limited interpretability and susceptibility to biases, constrain their ability to generalize. In a study by Liu et al. [19], the authors experimented with various ML models to predict bone metastasis by analyzing the demographic and clinical data from the Surveillance, Epidemiology, and End Results (SEER) database. Of the methods tested, eXtreme gradient boosting (XGB) yielded the best predictive performance, with an AUC, accuracy, sensitivity, and specificity of 96.2%, 88.4%, 90.6%, and 87.9%, respectively. Figure 4a compares the discriminatory power of Tc-99m MDP whole-body bone scintigraphy images with that of XGB in predicting metastases in prostate cancer. Anderson et al. [20] investigated survival after treatment of SREs in prostate cancer patients and reported reasonable performance (AUC = 76%) with clinical utility. Constant improvements to these AI-generated, disease-specific prediction models show promise in informing better and timelier clinical decision-making. Namely, increased reliability in forecasting skeletal metastases may allow these prediction techniques to serve as a robust guide for clinicians to formulate personalized treatment strategies derived from predictive outcomes. 
Consequently, ongoing research endeavors are imperative to enhance the predictive accuracy of ML models, thereby facilitating their seamless integration into clinical practice.
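The metrics quoted throughout this section (AUC, sensitivity, and specificity) can be computed as in the minimal sketch below. The labels and scores are invented for illustration and do not come from any cited study; the AUC is computed through its rank interpretation, that is, the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case.

```python
def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels (1 = metastasis)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)


def roc_auc(y_true, scores):
    """AUC as the fraction of positive/negative pairs ranked correctly.

    Ties between a positive and a negative score count as half.
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical model outputs for eight patients.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
scores = [0.9, 0.8, 0.4, 0.3, 0.6, 0.1, 0.2, 0.7]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # threshold is an assumption

sens, spec = sensitivity_specificity(y_true, y_pred)
auc = roc_auc(y_true, scores)
print(sens, spec, auc)
```

Sensitivity and specificity depend on the chosen decision threshold, whereas the AUC summarizes ranking quality across all thresholds, which is one reason studies often report all three.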

Model performance metrics. (a) Graphical depiction comparing the power of technetium 99m-methylene diphosphonate (Tc-99m MDP) whole-body bone scintigraphy images versus eXtreme gradient boosting (XGB) in predicting metastases in prostate cancer. (b) Graphical comparison of the sensitivity of AI-based bone lesion segmentation models and nuclear medicine physicians. AI, artificial intelligence; Tc-99m MDP, technetium 99m-methylene diphosphonate.

2.2 Lesion classification

Classification aims at mapping the lesions in the images to discrete categories, most commonly by grade (i.e., grade 1–4) or stage (i.e., benign, malignant, or metastatic). We observe a trend of using classical deep neural network (DNN)-based classification architectures such as ResNet [21], ResNext [22], MobileNet [23], DenseNet [24], and GoogleNet [25] to achieve the objective. Residual networks introduced identity mapping through skip connections in CNNs, facilitating feature reuse, flexible gradient flow, and the optimization of complex functions. ResNext is an improved version of ResNet that adds the concept of ‘cardinality’ by facilitating parallel paths within each block, enabling richer feature formulation to improve overall performance. DenseNet is another popular variant; unlike ResNet, DenseNet connects each layer to all subsequent layers within a block, facilitating faster optimization, enabling feature reuse, and mitigating the risk of vanishing gradients. MobileNet is a popular ‘lightweight’ variant of a CNN, with a depthwise separable convolution component leading to lower computational complexity and model size without compromising performance. An Inception network, the basis of GoogleNet, fuses multiple convolutional filters of varying scales, allowing robust feature representation while reducing the number of trainable parameters.
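As a worked illustration of why a depthwise separable convolution is considered lightweight, the sketch below counts the weights of a single layer in both forms. The layer sizes (3 × 3 kernel, 32 input channels, 64 output channels) are hypothetical and bias terms are ignored.

```python
def standard_conv_params(kernel, c_in, c_out):
    """Weights in a standard kernel x kernel convolution (no bias)."""
    return kernel * kernel * c_in * c_out


def depthwise_separable_params(kernel, c_in, c_out):
    """Weights in a depthwise convolution followed by a 1 x 1 pointwise one."""
    depthwise = kernel * kernel * c_in  # one spatial filter per input channel
    pointwise = c_in * c_out            # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


kernel, c_in, c_out = 3, 32, 64  # hypothetical layer sizes
standard = standard_conv_params(kernel, c_in, c_out)
separable = depthwise_separable_params(kernel, c_in, c_out)
ratio = separable / standard
print(standard, separable, round(ratio, 4))
```

Under these assumptions the separable layer needs 2336 weights instead of 18432, and the ratio equals 1/c_out + 1/kernel², so the saving grows with the number of output channels and the kernel size.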

Masoudi et al. conducted an extensive study detecting metastatic bone lesions from prostate cancer [26]. The authors investigated a multitude of aspects, including size, location, texture, and voxel-level information, to devise an ensemble framework and achieved an accuracy of 92.2% in identifying benign versus malignant bone lesions. Han et al. [27] presented 2D CNN architectures driven by (1) spatial normalization (SN) and (2) spatial and count normalization (SCN) for classifying whole-body bone scans into progression and nonprogression classes based on clinical reports, achieving AUCs of 78%–85% with SN and 95%–97% with SCN. Furthermore, the authors incorporated GRAD-CAM as an explainable AI component to visualize the areas responsible for the model's decisions. Papandrianos et al. presented a CNN-based classification architecture for differentiating whole-body bone scans as metastatic and nonmetastatic [28] and achieved an overall accuracy of 91.61%, with patient-level accuracy of 91.3%, 94.7%, and 88.6% for normal, malignant, and degenerative changes, respectively. As an extension, the same group presented a fast CNN architecture for classifying whole-body bone scans into metastatic, degenerative, and nonmetastatic with superior performance [29, 30], reaching an accuracy and sensitivity of 97.3% and 95.8%, respectively. Ntakolia et al. adopted the Look-Behind fully convolutional neural network concept in lightweight mode to reduce the trainable parameters and the computation cost of lesion classification without compromising overall performance [31]. Belcher et al. conducted two tasks, first classifying bone scans into anterior and posterior poses and then classifying bone scan hotspots into metastatic and nonmetastatic using CNNs, to achieve superior performance [32]. Acar et al. 
conducted a texture analysis on PET-CT images with metastatic lesions exhibiting prostate-specific membrane antigen (PSMA) expression and sclerotic lesions (completely responded) without PSMA expression [33]. The authors concluded that the weighted KNN approach differentiated sclerotic lesions from metastasis or completely responded lesions with an AUC of 76%. Providência et al. presented an iterative semi-supervised learning framework (hotBSI) focusing on reducing false positive rates in detecting malignant and nonmalignant bone hotspots [34] and achieved a sensitivity and specificity of 63% and 58%, respectively.

Han et al. [35] proposed a customized 2D CNN architecture that accepts a pair of whole-body DICOM images (anterior and posterior), using an appropriate mask to define the region of interest. Further, the authors introduced a global–local unified emphasis (GLUE) model that combines the whole body (WB) with local patches to improve the overall performance. The GLUE and WB models achieved an overall accuracy of 89% and 88.9%, respectively. Liu et al. [36] presented the ResNet-50 architecture with a pretraining (ImageNet) and fine-tuning strategy for identifying bone lesions from whole-body bone scintigraphy images, achieving an accuracy of 81.23% and lesion-based sensitivity and specificity of 81.30% and 81.14%, respectively. Ibrahim et al. [37] presented a CNN-based classification pipeline for detecting metastatic bone disease in prostate cancer with a specificity and sensitivity of 80% and 82%, respectively. Furthermore, the authors provided visual evidence via GRAD-CAM activations, highlighting the learned patterns. Higashiyama et al. [38] analyzed the ability of CNNs to process anterior and posterior bone scintigraphy images to examine bone metastases from prostate cancer. In [39], the authors experimented with CNN (Inception, EfficientNet, DenseNet, and ResNext) and transformer (ViT and DeiT) architectures to determine which performs better in detecting prostate bone metastasis. The authors concluded that transformers (tiny ViT) outperform CNNs in terms of overall F1 score.
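Several of the studies above rely on GRAD-CAM to visualize which image regions drive a classification. The sketch below illustrates only the final step of that computation under simplifying assumptions: the feature maps of the last convolutional layer and the gradients of the class score with respect to them are hard-coded toy values, whereas in practice both would come from a deep learning framework's forward and backward passes. The function and variable names are hypothetical.

```python
def grad_cam(feature_maps, gradients):
    """Combine feature maps into a class activation map.

    feature_maps[k][i][j]: activation of channel k at position (i, j).
    gradients[k][i][j]: gradient of the class score w.r.t. that activation.
    """
    # Channel weights: spatial average of the gradients for each channel.
    weights = []
    for grad in gradients:
        values = [g for row in grad for g in row]
        weights.append(sum(values) / len(values))

    rows, cols = len(feature_maps[0]), len(feature_maps[0][0])
    cam = [[0.0] * cols for _ in range(rows)]
    for w, fmap in zip(weights, feature_maps):
        for i in range(rows):
            for j in range(cols):
                cam[i][j] += w * fmap[i][j]

    # ReLU keeps only regions that support the class of interest.
    return [[max(0.0, v) for v in row] for row in cam]


# Toy example: two channels on a 2 x 2 grid (values are assumptions).
feature_maps = [
    [[1, 0], [0, 2]],
    [[0, 3], [1, 0]],
]
gradients = [
    [[0.5, 0.5], [0.5, 0.5]],  # channel 0 supports the class
    [[-1, -1], [-1, -1]],      # channel 1 argues against it
]

heatmap = grad_cam(feature_maps, gradients)
print(heatmap)
```

In a real pipeline the resulting low-resolution map is upsampled to the input size and overlaid on the bone scan, so that a clinician can check whether the highlighted regions coincide with suspected lesions.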

Clinically, the development of robust lesion classification architectures will be useful in improving clinical monitoring and informing treatment approaches. With a wide breadth of strategies already available for categorizing tumors, most research is now focused on devising more efficient and accessible methods of lesion classification for use in clinical settings. Studies such as Ntakolia et al.'s [31] development of more lightweight, less computationally heavy CNNs have begun moving in this direction, while others such as Han et al. [35] and Liu et al. [36] have focused on advancing CNN classification models by improving accuracy in lesion identification. Ibrahim et al. developed an algorithm that can maintain high accuracy with reduced time, improving clinical viability [37]. Despite these advancements, many ML strategies for lesion classification have yet to achieve clinical feasibility, as further validation is needed for clinical use. Going forward, it is imperative that these strategies continue to maximize accuracy and processing speed while reducing computational burden and cost if clinical use is to be realized.

2.3 Lesion detection

The goal of object detection is to identify a lesion or a high-risk region of an image, often with a bounding box. Mask R-CNN is one of the pioneering architectures in object detection; its pipeline combines region proposal, feature extraction, and bounding box regression within a CNN [40]. You Only Look Once (YOLO) is another popular object detection model [41]. It follows a grid-based strategy (dividing images into patches) for detection and localization in a single pass, making it fast and accurate. Cheng et al. designed an object detection approach driven by YOLO v4 for detecting prostate cancer from bone scintigraphy images [42]. The authors adopted appropriate augmentation techniques (intensity, flipping), transfer learning, and negative mining to improve the overall performance. Cheng et al. [43] presented a two-stage CNN-based object detection module for early identification of prostate bone metastasis. First, a pelvis neural network is designed to identify metastatic pelvis malignancy, followed by identification of the metastatic hotspots from the rib and spinal cord. Notably, this method makes extensive use of hard negative and positive mining with data augmentation. This model achieved a sensitivity and precision of 87% and 81%, respectively, when detecting the pelvis. In a study conducted by Masoudi et al. [44], the authors employed a cascaded deep neural network architecture for staging prostate bone metastasis. In a multi-stage framework, the authors employed YOLOv3 and ResNet-50 for detection, achieving superior performance. This model achieved an overall accuracy and sensitivity of 81% and 89%, respectively, with substantial improvement from data augmentation (91%). These early lesion detection models, particularly when used in tandem with classification strategies to determine high-risk regions, show promise of clinical usefulness in identifying and treating prostate bone metastases. CNN-based object detection models, as well as the YOLO object detection models proposed by Cheng et al. [42, 43], have the potential to allow clinicians to easily identify early metastases, and these systems can also provide a pre-diagnostic report to help clinicians make more accurate and informed final decisions about treatment strategies.
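Two ideas from this subsection can be sketched in a few lines: the grid-based assignment that underlies YOLO, and the overlap measure commonly used to decide whether a predicted bounding box matches an annotated lesion. Intersection over union (IoU) is introduced here as an assumption for illustration; the reviewed studies do not all state their matching criterion, and the box coordinates, image size, and grid size below are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def grid_cell(box, image_size, grid_size):
    """Row and column of the grid cell that contains the box center.

    In a YOLO-style detector, this cell is the one responsible for
    predicting the object.
    """
    width, height = image_size
    cx = (box[0] + box[2]) / 2
    cy = (box[1] + box[3]) / 2
    col = min(int(cx / width * grid_size), grid_size - 1)
    row = min(int(cy / height * grid_size), grid_size - 1)
    return row, col


predicted = (10, 10, 50, 50)  # hypothetical detector output
annotated = (30, 30, 70, 70)  # hypothetical ground-truth hotspot

overlap = iou(predicted, annotated)
cell = grid_cell(annotated, image_size=(128, 128), grid_size=4)
print(round(overlap, 4), cell)
```

A detection is usually counted as a true positive only when its overlap with an annotated box exceeds a chosen threshold, so reported sensitivity and precision values depend on that threshold as well as on the model.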

2.4 Lesion segmentation

Image segmentation is the process of delineating fine contours around a lesion, which can be used for quantifying the true extent of the tumor, for tracking lesion changes over time, or as a radiomic feature for further analysis. Most segmentation methods are inspired by the well-established UNet [45] architecture, which consists of a feature extractor block and a reconstructor block. Of the four categories of ML tasks discussed in this review, segmentation is the one with the least available literature. Zhao et al. [46] reported success when applying a 3x UNet model to axial, coronal, and sagittal images to segment lesions on 3D images. This method achieved a 99% sensitivity, specificity, and F1 score in detecting bone lesions while achieving 94%, 89%, and 92% sensitivity, specificity, and F1 score, respectively, in detecting lymph nodes. Furthermore, this architecture extracts features from multiple views (sagittal, coronal, and axial) to form a richer feature space, improving the overall performance. In a study by Schott et al. [47], the authors evaluated the efficacy of nnUNet and DeepMedic in multiple aspects (detection, segmentation, prognosis, etc.). nnUNet, a UNet-based algorithm with built-in workflow and architectural optimization, displayed superior image segmentation ability compared to non-CNN architectures; however, it remained subpar regarding prognosis. Unet3D applied to PET-CT, in which both the PET and CT images were provided as input per sample, showed 62% sensitivity in segmenting these bone lesions, which is significantly lower than its ability to segment primary tumor and lymph node metastatic lesions (each with 79% sensitivity), but higher than the sensitivity of physicians measured against the ground truth (59%) [48]. In a study by Afnouch et al. [49], the authors presented an ensemble of segmentation methods, namely Hybrid-AttUnet++, for segmenting metastatic lesions from CT images. 
Notably, this dataset included metastatic lesions across the organs, including two subjects with prostate bone metastasis. Hybrid-AttUnet++ achieved a Dice score, precision, and recall of 77%, 82%, and 84%, respectively. These studies show that CNN architectures can detect and segment metastatic lesions in prostate cancer without a drop in accuracy, with some studies such as Schott et al. [47] proposing that they are in fact more accurate and consistent than previously used methods. However, because of the limited research done on lesion segmentation, this ML task is the farthest from being ready for clinical use. There is a need for the development of more optimal methods directed at specific clinical tasks, along with a need for larger benchmark datasets to train CNN architectures, as suggested by Afnouch et al. [49]. Continued research on automated, CNN-based lesion segmentation models has the potential to allow for improved tracking of metastatic lesions, providing clinicians with data to inform optimal decision making.
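The Dice score, precision, and recall reported for segmentation models are all derived from the pixel-wise overlap between a predicted mask and a reference mask. The following minimal sketch shows the computation on two invented 3 × 3 binary masks; the masks and function name are assumptions for illustration only.

```python
def segmentation_scores(pred_mask, true_mask):
    """Return (dice, precision, recall) for two binary masks of equal shape."""
    pred = [p for row in pred_mask for p in row]
    true = [t for row in true_mask for t in row]
    tp = sum(1 for p, t in zip(pred, true) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(pred, true) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, true) if p == 0 and t == 1)
    dice = 2 * tp / (2 * tp + fp + fn) if (tp + fp + fn) > 0 else 1.0
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0
    return dice, precision, recall


# Hypothetical reference annotation and model output (1 = lesion pixel).
true_mask = [
    [0, 1, 1],
    [0, 1, 1],
    [0, 0, 0],
]
pred_mask = [
    [0, 1, 1],
    [0, 0, 1],
    [0, 1, 0],
]

dice, precision, recall = segmentation_scores(pred_mask, true_mask)
print(dice, precision, recall)
```

Because lesions typically occupy a small fraction of the image, these overlap-based scores are more informative for segmentation than plain pixel accuracy, which is dominated by the background.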

3 RADIOMICS

Radiomics is an innovative medical imaging field involving the extraction and analysis of quantitative features from radiographic images. Unlike traditional image interpretation, radiomics delves into the intricate details of pixel intensities, textures, and spatial relationships to uncover hidden patterns indicative of underlying physiological or pathological conditions. Zhang et al. [50] proposed a “radiomics nomogram” for predicting bone metastasis from dynamic contrast-enhanced (DCE) T1-weighted MRI images. The approach, which combined LASSO regression with clinical risk factors, multivariate regression, and decision curve analysis, exhibited good performance (AUC 0.93, CI 0.86–0.89). In a study by Wang et al. [51], the authors analyzed multiparametric MRI (mp-MRI; T2-weighted) and DCE T1-weighted (DCE T1-w) images with various texture characteristics. The authors used multiple methods (step regression, LASSO regression, and ridge regression) for feature extraction. They concluded that combined features from mp-MRI (T2-weighted) and T1-weighted (DCE T1-w) images yielded better predictive performance, with AUCs of 0.875 and 0.870, respectively. Reischauer et al. [52] showed that whole-tumor textural features changed significantly at 1 month, 2 months, and 3 months of androgen deprivation therapy when compared to pre-treatment measurements and that these changes correlated with serum prostate-specific antigen levels. The authors concluded that volumetric texture analysis of the whole tumor could serve as a pertinent approach to tackling prostate bone metastasis. Hinzpeter et al. [53] combined radiomics features with ML algorithms to classify CT images as metastatic or nonmetastatic, using Ga-PSMA PET imaging as the reference standard. In their experiments, the gradient-boosted tree achieved the best performance, with accuracy, sensitivity, and specificity of 85%, 78%, and 93%, respectively.
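LASSO regression appears repeatedly in radiomics pipelines because its L1 penalty drives the coefficients of weakly informative features exactly to zero. The sketch below shows this behavior with a small coordinate descent solver. It is an illustrative implementation under stated assumptions, not the procedure of any cited study: the three "radiomic features", the outcome values, and the penalty `lam` are invented, and the objective minimized is (1/(2n))·||y − Xw||² + lam·||w||₁.

```python
def soft_threshold(rho, lam):
    """Shrink rho toward zero by lam; values within [-lam, lam] become 0."""
    if rho > lam:
        return rho - lam
    if rho < -lam:
        return rho + lam
    return 0.0


def lasso_coordinate_descent(X, y, lam, n_iter=100):
    """Minimize (1/(2n)) * ||y - Xw||^2 + lam * ||w||_1 coordinate by coordinate."""
    n, d = len(X), len(X[0])
    w = [0.0] * d
    for _ in range(n_iter):
        for j in range(d):
            rho = 0.0  # correlation of feature j with the partial residual
            z = 0.0    # mean squared value of feature j
            for xi, yi in zip(X, y):
                pred_without_j = sum(w[k] * xi[k] for k in range(d) if k != j)
                rho += xi[j] * (yi - pred_without_j)
                z += xi[j] ** 2
            rho /= n
            z /= n
            w[j] = soft_threshold(rho, lam) / z if z > 0 else 0.0
    return w


# Hypothetical standardized features for four lesions (columns are orthogonal).
X = [
    [1, 1, 1],
    [-1, 1, -1],
    [1, -1, -1],
    [-1, -1, 1],
]
# Outcome built as 2 * feature_0 + 0.05 * feature_2, so feature_1 is irrelevant.
y = [2.05, -2.05, 1.95, -1.95]

weights = lasso_coordinate_descent(X, y, lam=0.1)
print(weights)
```

With this penalty the strong feature keeps a slightly shrunken coefficient while the weak and irrelevant features are removed, which is the sense in which LASSO performs feature selection before a nomogram or classifier is built.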

One aspect of prostate bone metastasis radiologic lesion characteristics that has not been explored in depth is inter-patient and intra-patient lesion heterogeneity. A small number of studies have been devoted to prostate cancer bone metastatic lesion heterogeneity on a molecular or histological level, and these have shown promise in stratifying lesions by tumor phenotype [54, 55], patient prognosis [56], and treatment planning [57, 58]. Molecular heterogeneity involves multiple factors such as proteins related to androgen receptor sensitivity, DNA repair [54], prostate-specific antigen [55], inflammatory markers, and global DNA methylation. However, we could not identify any studies that focused on radiomic heterogeneity or that correlated molecular heterogeneity with its radiomic counterpart. Studies in this area could help to better characterize these lesions and to understand the connection between molecular events and appearance on imaging. This would facilitate a better understanding of prostate cancer etiology, inform treatment strategies, and perhaps lead to the development of more effective therapies.

4 DISCUSSION

In this discussion section, we critically evaluate the efficacy of ML methods and radiomics in tackling the intricate challenges of prostate bone metastasis detection and analysis. Additionally, we explore avenues such as dataset, heterogeneity, dimensionality, and future research and potential synergies between ML algorithms and radiomic methodologies, aiming to advance diagnostic precision and therapeutic strategies in prostate cancer management.

Machine learning shows immense promise in radiology, possessing the ability to conduct analysis that the human eye is incapable of perceiving. However, there are drawbacks that limit its utility as it currently stands. Machine learning models often struggle with explainability, making it difficult to fully utilize the output or verify its accuracy [50]. Machine learning models also tend to require large amounts of pertinent data for proper training, making generalizability challenging and often requiring each model to only perform a very narrow task. With that said, these limitations will likely be addressed in the coming years with more interpretable methods that can assist radiologists in improving the accuracy and speed of their tasks. Radiomic analysis in prostate bone metastasis offers a noninvasive and quantitative approach, extracting valuable information from imaging data (CT, MRI, etc.). It enables early detection of subtle changes, aids in risk stratification, and provides valuable insights into tumor heterogeneity, allowing personalized treatment strategies. Furthermore, it also aids in predicting treatment response and disease progression. The challenges in radiomic analysis include issues related to the standardization of image acquisition protocols, potential variability in feature extraction methods, and the need for extensive and diverse datasets for robust model training. The interpretability of radiomic features and ensuring clinical relevance remain areas of ongoing research.

With both ML and radiomics, the quality of the output is limited by the quality of the input. Two important characteristics of datasets for both ML and radiomics are the size and dimensionality of the dataset. The size of the dataset is crucial for ML to prevent overfitting and better promote generalizability of the model. For radiomics, large dataset size improves the accuracy and statistical power of extracted radiomic features. Dataset sizes in medical tasks tend to be limited due to Health Insurance Portability and Accountability Act (HIPAA) regulations and minimal public datasets. Data dimensionality plays an important role as well. 3D data contains more spatial information than 2D, but also necessitates higher computational intensity and increased workload if manual annotation is required. Additionally, the quantitative features or model architectures used to analyze 3D versus 2D data differ significantly, making dataset dimensionality an important factor in analysis.

Dataset sizes in the reviewed studies vary with the problem-solving approach (prediction, classification, detection, and segmentation), mostly ranging from very few cases to several hundred. At the upper extreme, the predictive analysis study by Liu et al. [19] used 207,137 cases, with demographic and clinicopathologic variables extracted from the SEER database, to identify metastatic cases. The number of patients ranged from 21 to 5342 in classification studies, from 194 to 205 in detection studies, and from 193 to 205 in segmentation studies. We did not find a standard public benchmark dataset for any of these approaches. Many studies ran their experiments on small in-house datasets, which limits generalizability. Most methods used cross-validation (leave-one-out, 5-fold, or 10-fold), which indicates the stability of the quantitative analysis within each experimental setup [20, 27, 32, 33, 35, 36, 42, 53]. However, true generalizability can be estimated only by external validation. Figure 4b compares the sensitivity of AI-based bone lesion segmentation models with that of nuclear medicine physicians. Studies with small cohorts made extensive use of data augmentation, tuning parameters such as rotation, shift, whitening, and flipping to improve performance. While augmentation may improve performance on the test set, it may not improve generalization. A few methods also used transfer learning from the ImageNet and COCO databases [29, 42], although the performance gain from transferring knowledge from nonmedical datasets remains open to question.

Regarding the imaging techniques employed for detecting prostate bone metastasis, bone scintigraphy is the most commonly used modality, while only a few studies employed MRI, CT, or PET-CT. There are several possible reasons for this, including (1) the ability to capture detailed functional information such as blood flow and metabolic activity, (2) the ability to scan the entire skeleton, unlike CT or MRI, which are typically limited to a specific anatomical region, and (3) lower radiation exposure and cost. However, analyzing 3D data is often advantageous because it accurately represents complex anatomical structures, allows volumetric analysis, and establishes spatial relationships.
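The cross-validation schemes mentioned in the dataset discussion above (leave-one-out, 5-fold, and 10-fold) can be illustrated with a minimal, standard-library sketch. It is not the procedure of any reviewed study. The function names are hypothetical, and each index is assumed to stand for one patient rather than one image, so that images from the same patient do not appear in both the training and the test split.

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_idx, test_idx) pairs for k-fold cross-validation."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # fixed seed for reproducible folds
    # Strided slicing gives k folds whose sizes differ by at most one.
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test_idx = sorted(folds[i])
        train_idx = sorted(
            idx for j, fold in enumerate(folds) if j != i for idx in fold
        )
        yield train_idx, test_idx


def leave_one_out_indices(n_samples):
    """Leave-one-out is k-fold with one sample per fold."""
    return k_fold_indices(n_samples, n_samples)


# Example: 5-fold split of a hypothetical cohort of 21 patients.
for fold, (train_idx, test_idx) in enumerate(k_fold_indices(21, 5), start=1):
    print(f"fold {fold}: {len(train_idx)} train, {len(test_idx)} test")
```

Every patient is used for testing exactly once, which is why cross-validation reflects stability within one cohort. It does not replace evaluation on an independent external cohort.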

5 CONCLUSION

This review presents multiple strategies for evaluating prostate bone metastasis, including classification/prediction, detection, segmentation, and radiomic methods, to give the community an overview of the literature. We found that most methods in the literature achieved reasonable performance (overall accuracy > 0.85), and that most architectures were benchmark classification and segmentation networks (VGG-16, ResNets, and U-Net) with slight modifications, supported by data augmentation and transfer learning. Our analysis of these diverse approaches provides insight into how frequently each discipline has been studied and the performance it has achieved. The superior performance reported by methods without cross-validation or external validation may reflect overfitting, which would limit their generalizability. We identified knowledge gaps that offer scope for future study in this field, including lesion heterogeneity analysis, the combination of radiomic and ML analysis for outcome prediction, and detailed lesion characterization for precision medicine.

AUTHOR CONTRIBUTIONS

S. J. Pawan: Conceptualization (equal); data curation (equal); methodology (equal); project administration (equal); writing—original draft (equal); writing—review and editing (equal). Joseph Rich: Conceptualization (equal); data curation (equal); formal analysis (equal); funding acquisition (equal); investigation (equal); methodology (equal); writing—original draft (equal); writing—review and editing (equal). Jonathan Le: Writing—original draft (equal); writing—review and editing (equal). Ethan Yi: Writing—original draft (equal); writing—review and editing (equal). Timothy Triche: Writing—review and editing (equal). Amir Goldkorn: Writing—review and editing (equal). Vinay Duddalwar: Supervision (equal); writing—review and editing (equal).

ACKNOWLEDGMENTS

None.

CONFLICT OF INTEREST STATEMENT

The authors declare that they have no conflicts of interest. V.D. reports consultant activity with Radmetrix, Westat, and DeepTek.

ETHICS STATEMENT

Not applicable.

INFORMED CONSENT

Not applicable.

Open Research

DATA AVAILABILITY STATEMENT

Data sharing is not applicable to this article as no data sets were generated or analyzed during the current study.