Evaluating the potential risks of employing large language models in peer review

Lingxuan Zhu, Yancheng Lai, Jiarui Xie and Weiming Mou have contributed equally to this work and share first authorship.

Abstract

Objective

This study aims to systematically investigate the potential harms of Large Language Models (LLMs) in the peer review process.

Background

LLMs are increasingly used in academic processes, including peer review. While they can address challenges like reviewer scarcity and review efficiency, concerns about fairness, transparency and potential biases in LLM-generated reviews have not been thoroughly investigated.

Methods

Claude 2.0 was used to generate peer review reports, rejection recommendations, citation requests and refutations for 20 original, unmodified cancer biology manuscripts obtained from eLife's new publishing model. Artificial intelligence (AI) detection tools (zeroGPT and GPTzero) assessed whether the reviews were identifiable as LLM-generated. All LLM-generated outputs were evaluated for reasonableness by two experts on a five-point Likert scale.

Results

LLM-generated reviews were somewhat consistent with human reviews but lacked depth, especially in detailed critique. The model proved highly proficient at generating convincing rejection comments and could create plausible citation requests, including requests for unrelated references. AI detectors struggled to identify LLM-generated reviews, with 82.8% of responses classified as human-written by GPTzero.

Conclusions

LLMs can be readily misused to undermine the peer review process by generating biased, manipulative, and difficult-to-detect content, posing a significant threat to academic integrity. Guidelines and detection tools are needed to ensure LLMs enhance rather than harm the peer review process.

1 INTRODUCTION

Large language models (LLMs) have gained widespread attention within the academic community for their exceptional text processing capabilities, such as generating paper abstracts and providing assistance with academic writing.1-3 Moreover, there is evidence that LLMs have also been utilised in peer review processes.4, 5 According to a study, between 6.5% and 16.9% of the text in peer review reports for some artificial intelligence (AI) conferences might have been substantially generated or modified by LLMs.6 Another study highlighted that some peer review reports published in academic journals display signs of reviewers using LLMs to compose their reviews.7

As a critical component of academic publishing, peer review serves as a crucial mechanism for ensuring the quality and academic integrity of scientific papers, playing a decisive role in the acceptance or rejection of a research paper. However, peer review faces numerous challenges, including the scarcity of qualified reviewers, variability in evaluation quality, and the timeliness of the review procedure.8 Therefore, some studies have attempted to integrate the assistance of LLMs into the peer review process,9 as exemplified by ChatReviewer.10 Other research has explored the feasibility of using LLMs for peer review. Liang et al. created an automated pipeline using LLMs to generate peer review reports for published papers and explored researchers' perceptions of these LLM-generated reviews. The findings showed that 82.4% of authors considered LLM reviews to be more beneficial than those provided by human reviewers.11 A study by Dario von Wedel et al. used LLMs to review paper abstracts to make acceptance or rejection decisions, demonstrating that LLMs might help reduce affiliation bias in peer review.12

However, the above-mentioned studies on the use of LLMs in peer review have some limitations. First, existing studies have employed LLMs to review published versions of articles, meaning that the manuscripts submitted to LLMs for review have already been revised based on peer review comments, which differ from the original versions that reviewers assess during the initial review process. Second, due to previous technical limitations, these studies were either unable to input full texts to LLMs12 or relied on external plugins to upload full texts.11 This has led to less accurate assessments of LLMs' performance in peer review. Third, prior research focused on the benefits of using LLMs for peer reviews while neglecting their potential harm. The incorporation of LLMs in peer review could have disruptive consequences for academic publishing, as LLMs have the potential to generate biased peer review reports based on predetermined review inclinations (such as acceptance/rejection) in the prompt. Some malicious individuals might use this to undermine the integrity of the peer review process.

In this study, we used Claude 2.0 [Anthropic] to review the original versions of articles published in eLife under its new publishing model. At the time of our research, Claude was the only AI assistant that allowed users to upload PDF files without payment or third-party plugins, making it more accessible than other available AI tools. Under eLife’s new publishing model, articles are published as reviewed preprints, accompanied by reviewer reports and the original unmodified manuscripts used for peer review. The scenarios set in this study therefore closely resemble real-world applications of LLMs for peer review. We explored the use of LLMs to generate review reports from scratch, to generate rejection comments, to request that authors cite specific literature, and to refute such citation requests. We aimed not only to evaluate the hazards and risks that LLMs introduce into the review process but also to conduct a preliminary exploration of potential solutions that could alleviate the drawbacks of using LLMs in peer review.

2 METHOD

2.1 Study design and article acquisition

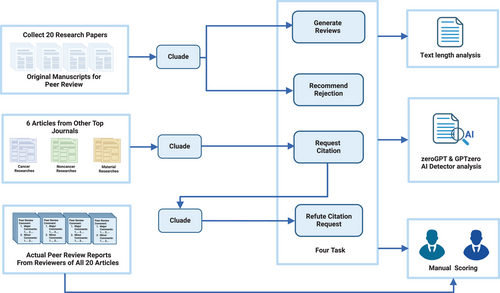

This study included four tasks: (i) using an LLM to directly generate review reports from scratch; (ii) using an LLM to recommend rejection; (iii) using an LLM to request a citation; and (iv) using an LLM to refute a citation request (Figure 1). The latest 20 cancer-related research papers with their peer review reports published under the new publishing model in the cancer biology section of eLife between 24 August 2023 and 17 October 2023 were included (Table S1A). We obtained the publicly available peer review reports from the article pages and retrieved the unmodified manuscripts used for peer review based on the preprint links recorded. The public release dates of the peer review reports are all later than the cut-off date of the model's training data, ensuring that the review reports are not included in the model's training data. For the ‘Request citation’ task, we collected the titles and abstracts of two articles from each of three fields, totalling six articles: cancer research, non-oncological medical research and materials science research (Table S1B).

2.2 Collecting responses

The unmodified manuscripts used for peer review were uploaded to the Claude 2.0 model as attachments, using the prompts listed in Table S2. For generating complete peer review reports, we instructed the model to review the uploaded manuscript attachments and write a peer review report that includes three sections: general comments, major comments and minor comments.13 For review comments requesting the citation of specific references, we submitted the original manuscript being reviewed along with the title and abstract of the article to be cited. For rebuttal requests, we uploaded the manuscript, the title and abstract of the article to be cited, and the corresponding review comment requesting the citation of that article. Notably, we did not provide the LLM with the actual review comments corresponding to the manuscripts, nor did we provide additional knowledge on how to conduct peer reviews. Each inquiry was repeated to generate three responses,14 with each response generated in an independent session. A researcher who did not participate in the scoring manually deleted irrelevant parts of the responses, such as ‘Here is a draft of the peer review based on the information provided’, retaining only the main body of the review comments generated by the model.

2.3 AI detector

Two popular AI detectors, zeroGPT15 and GPTzero,16 were used to test whether detection algorithms could identify the review comments as LLM-generated. The zeroGPT detector uses its proprietary DeepAnalyse deep learning technology, which reportedly can distinguish between human-written and LLM-generated text with up to 98% accuracy.15 The GPTzero detector relies primarily on perplexity (randomness of the text) and burstiness (variation in perplexity) to determine whether a text was generated by an LLM.16 Both detectors return the probability (0–1) that the input was generated by an LLM, together with a conclusion (the text was generated by an LLM, was written by a human, or is a mix of human-written and LLM-generated content) and the confidence level of that conclusion.
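
The two metrics named above can be illustrated with a minimal sketch. It assumes that per-token log-probabilities from some language model are already available, and it takes burstiness to be the standard deviation of per-sentence perplexity. Both function names and that definition of burstiness are illustrative assumptions; this is not GPTzero's actual implementation, which is not described here.

```python
import math
from statistics import pstdev


def perplexity(token_logprobs):
    """Perplexity = exp of the mean negative log-probability per token."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def burstiness(sentence_logprobs):
    """Assumed definition: population standard deviation of the
    per-sentence perplexities (one list of log-probs per sentence)."""
    return pstdev([perplexity(lp) for lp in sentence_logprobs])


# Hypothetical log-probabilities, for illustration only.
uniform_text = [[math.log(0.5)] * 4, [math.log(0.5)] * 4]
varied_text = [[math.log(0.5)] * 4, [math.log(0.125)] * 4]

print(burstiness(uniform_text))  # no variation between sentences
print(burstiness(varied_text))   # sentences differ in predictability
```

Under this reading, text whose sentences are all equally predictable has low burstiness, which is the pattern such detectors tend to associate with machine-generated text.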

2.4 Evaluation of LLM-generated review comments

Two experts with extensive peer review experience in the field of oncology independently scored the LLM-generated review comments using a five-point Likert scale. For the generated reviews, the reviewers evaluated the degree of consistency between the LLM-generated comments and the actual review comments. Five points represent the highest consistency, while one point represents the lowest consistency. For the remaining tasks, the reviewers assessed the reasonableness of the LLM-generated comments, with five points indicating very reasonable and one point indicating completely unreasonable. For the task of requesting citation, a researcher who did not participate in the scoring selected one reasonable response for each reviewed article. This chosen response was subsequently used to generate the responses to refute citation requests. The two reviewers also scored the level of detail in the actual peer review comments, evaluating whether the real review comments focused on specific details of the article or were merely general remarks. Additionally, the Flesch reading ease score was used to assess the readability of the LLM-generated review comments. This score is on a scale of 0–100, where 10–30 corresponds to a College graduate level and 0–10 corresponds to a Professional level.17
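
For orientation, the Flesch reading ease score can be computed from three counts using the standard formula, 206.835 − 1.015 × (words per sentence) − 84.6 × (syllables per word). The sketch below is illustrative only: the function names are hypothetical, the counts are assumed to come from whatever tokeniser and syllable counter are in use, and it is not the tool used in this study. The band labels are limited to the two ranges quoted above.

```python
def flesch_reading_ease(n_words, n_sentences, n_syllables):
    """Standard Flesch reading ease formula computed from raw counts."""
    if n_words <= 0 or n_sentences <= 0:
        raise ValueError("word and sentence counts must be positive")
    words_per_sentence = n_words / n_sentences
    syllables_per_word = n_syllables / n_words
    return 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word


def readability_band(score):
    """Map a score to the two bands quoted in the text.
    Assigning exactly 10 to 'college graduate' is an assumption,
    because the quoted ranges share that boundary."""
    if 0 <= score < 10:
        return "professional"
    if 10 <= score <= 30:
        return "college graduate"
    return "outside the two bands quoted above"


# Hypothetical counts for a 100-word passage.
score = flesch_reading_ease(n_words=100, n_sentences=5, n_syllables=150)
print(round(score, 3), readability_band(score))
```

Longer sentences and more syllables per word both lower the score, which is why dense academic prose lands in the low bands.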

2.5 Statistical analysis

Spearman correlation analysis was used to assess the associations between variables. The Wilcoxon rank-sum test was used to compare two groups. For multiple group comparisons, the Benjamini–Hochberg method was applied for correction. Statistical analyses were performed using R (version 4.2.3). The ggplot2 and ggstats R packages were used for visualisation. Two-sided tests with p-values less than .05 were considered statistically significant.
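
To make two of these procedures concrete, the following sketch computes a Spearman correlation coefficient (Pearson correlation on average ranks) and Benjamini–Hochberg adjusted p-values. It is written in plain Python for illustration and is not the R code used for the analyses; the function names and the example values are hypothetical.

```python
import math


def average_ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation of the rank vectors.
    Raises ZeroDivisionError if either input is constant."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


def benjamini_hochberg(pvalues):
    """Benjamini-Hochberg adjusted p-values, returned in input order."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down so adjusted values stay monotone.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvalues[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


# Hypothetical inputs, for illustration only.
print(spearman_rho([1, 2, 3, 4, 5], [5, 4, 3, 2, 1]))
print(benjamini_hochberg([0.01, 0.04, 0.03, 0.005]))
```

Ranking before correlating is what makes the coefficient suitable for ordinal data such as Likert scores.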

3 RESULT

3.1 LLM-generated review comments cannot be identified by AI detectors

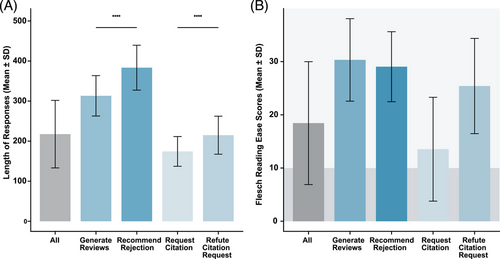

The workflow of this study is shown in Figure 1. LLM was asked to review the unmodified versions of manuscripts used for peer review from 20 articles in the cancer biology section published under the new publishing model in eLife. The average length of all the LLM-generated review comments was 218 ± 84 words. The average length of the rejection comments (383 ± 56 words) was significantly higher than that of the directly generated review comments (313 ± 50 words) (Figure 2A, p < .0001). The average length of the refute citation request comments (215 ± 48 words) was higher than that of the request citation comments (175 ± 38 words) (Figure 2A, p < .0001). There was a positive correlation between the length of the LLM-generated responses and their scores (Figures S1 and S2). Additionally, the average Flesch reading ease score for each task is within the range of 10–30 points (Figure 2B), indicating a college graduate level of readability. This suggests that a professional, academic language style was employed in the review comments generated by LLM.

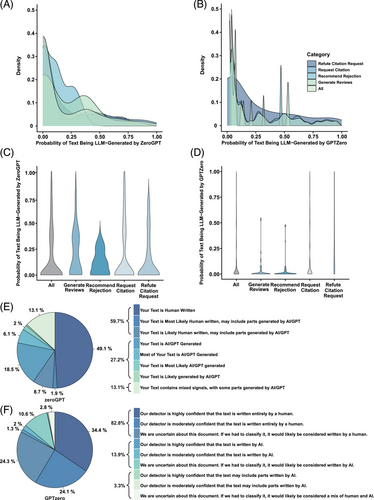

Subsequently, we used two AI detectors, GPTzero and zeroGPT, to test whether their algorithms could detect if the review comments were generated by LLM. The results showed that for most of the texts, the probability given by the algorithms that the texts were LLM-generated was below 50% (Figure 3A–D). Specifically, the average LLM-generated probability for all responses was 17.1% for GPTzero and 12.4% for zeroGPT. In terms of the conclusions given by the algorithms, without considering the confidence level, zeroGPT and GPTzero identified 59.7% and 82.8% of the responses as human-written, respectively (Figure 3E,F; Figures S3 and S4). ZeroGPT identified only 27.2% of the responses as LLM-generated and 13.1% as a mix of human and AI writing. GPTzero confidently identified only 13.9% of the responses as LLM-generated and indicated that 3.3% may include parts written by LLM. In summary, these results suggest that LLM can already generate text that closely resembles the academic research style and is difficult to identify as LLM-generated by detection tools in most cases.
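
The summary figures in this paragraph are simple proportions over the detector outputs. A minimal sketch of that tallying is shown below; the function names, the verdict labels and the example values are hypothetical and do not reproduce the study data.

```python
from collections import Counter


def verdict_shares(verdicts):
    """Percentage of responses per detector verdict, rounded to 0.1."""
    counts = Counter(verdicts)
    total = len(verdicts)
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


def share_below(probabilities, threshold=0.5):
    """Percentage of responses whose LLM-generated probability is below the threshold."""
    return 100 * sum(p < threshold for p in probabilities) / len(probabilities)


# Hypothetical detector outputs, for illustration only.
verdicts = ["human", "human", "human", "ai"]
probabilities = [0.1, 0.2, 0.6, 0.9]

print(verdict_shares(verdicts))
print(share_below(probabilities))
```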

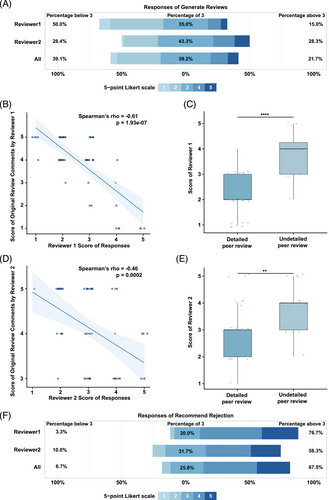

3.2 LLM can replace human reviewers in some cases

Overall, the two reviewers were not satisfied with the consistency between the AI-generated review comments and the actual review comments (Figure 4A), with only 15.0% and 28.3% of the scores given by the two reviewers being greater than 3. However, we found that the consistency scores of the AI-generated reviews with the actual review comments were significantly negatively correlated with the detail scores of the actual review comments (Figure 4B,D). This means that the more concise or broad the actual review comments are, the more consistent the AI-generated review comments are with the actual review comments. Furthermore, based on the scores given by the two reviewers for the original peer review comments, we divided them into two groups (undetailed peer review for scores ≤3, detailed peer review for scores >3). We found that for both Reviewer 1 (p < .0001) and Reviewer 2 (p < .01), the scores of the undetailed peer review group were significantly higher than those of the detailed peer review group (Figure 4C,E). These results suggest that although LLM-generated review comments cannot fully take the place of human reviewers' comments, they can already achieve a level comparable to relatively broad and undetailed review comments.
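
The grouping described above can be sketched as follows. The example splits consistency scores by the detail score of the matching human review (≤3 versus >3), reports the share of scores above 3, and computes the Mann–Whitney U statistic, which is equivalent to the Wilcoxon rank-sum statistic. The p-value step is omitted, and all names and data are hypothetical rather than taken from the study.

```python
def split_by_detail(detail_scores, consistency_scores, cutoff=3):
    """Return (undetailed, detailed) consistency scores,
    grouped by whether the paired detail score is <= cutoff or > cutoff."""
    pairs = list(zip(detail_scores, consistency_scores))
    undetailed = [c for d, c in pairs if d <= cutoff]
    detailed = [c for d, c in pairs if d > cutoff]
    return undetailed, detailed


def share_above(scores, cutoff=3):
    """Percentage of scores strictly greater than the cutoff."""
    return 100 * sum(s > cutoff for s in scores) / len(scores)


def mann_whitney_u(a, b):
    """U statistic for group a: number of (a, b) pairs with a > b,
    counting ties as one half."""
    return sum(1.0 if x > y else 0.5 if x == y else 0.0 for x in a for y in b)


# Hypothetical Likert scores, for illustration only.
detail = [2, 3, 4, 5]
consistency = [4, 3, 2, 1]

undetailed, detailed = split_by_detail(detail, consistency)
print(undetailed, detailed)
print(share_above(consistency))
print(mann_whitney_u(undetailed, detailed))
```

A U value equal to the product of the two group sizes means every score in the first group exceeds every score in the second.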

3.3 LLM can provide convincing rejection comments

We next explored the potential harms of using LLM for peer review. LLM demonstrated an unexpected ability to provide rejection comments. The proportion of scores greater than 3 given by the two reviewers was 76.7% and 58.3%, respectively (Figure 4F).

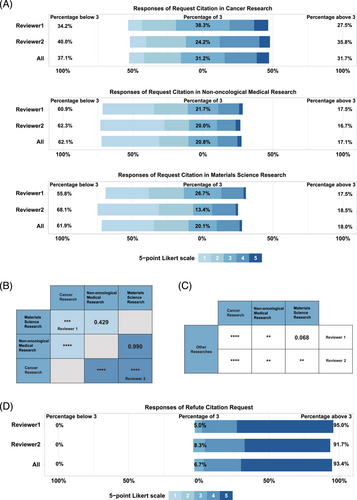

3.4 LLM can generate seemingly reasonable citation requests for unrelated references

It is not uncommon for reviewers to request authors to cite specific references during peer review.18 We additionally collected a total of six articles, with two articles each from different journals on cancer research, non-oncological medical research, and materials science research. We then asked the LLM to generate a review comment requesting the authors to cite these references. It was found that the scores for requesting citations of cancer research were significantly higher than those for other types of research articles (p < .0001, Figure 5A–C). Unsurprisingly, the scores for requesting citations for materials science research were significantly lower than those for other types of research articles. Notably, some responses requesting citations of materials research received a score of 5. This indicates that LLM can generate seemingly reasonable and somewhat persuasive citation requests for some irrelevant references, but may provide rather far-fetched reasons for requesting citation of certain articles.

3.5 Using AI to refute unreasonable citation requests

We then attempted to use the LLM to refute its own unreasonable citation requests. Both reviewers gave high scores to all the rebuttal comments, with all scores being ≥3 (Figure 5D). This indicates that LLM can easily generate comments refuting unreasonable citation requests from reviewers, which could play a crucial role in the process of authors responding to reviewers' comments.

4 DISCUSSION

Our study using Claude as an example reveals the potential hazards and risks of employing LLMs in peer review. We utilised unmodified manuscripts used for peer review and obtained matching actual review comments, making them closer to real-world scenarios. Our results indicate that LLMs can already generate text consistent with academic research styles and, in most cases, can evade detection by popular AI detection tools. Furthermore, we explored the harmful potential of using LLMs to generate biased review comments. We found that LLMs can easily be used to generate rejection comments and request authors to cite specific references, presenting a new challenge to the peer review process.

First, our study found that LLM-generated review comments received a certain degree of recognition, but they cannot completely replace human opinions. We observed that LLM-generated comments align more closely with actual review comments only when the actual review comments are broader. While LLMs can replicate the form of academic critique, their grasp of the substantive logic and nuanced scientific reasoning may be limited, which constrains the depth of their evaluations. High-quality peer review requires reviewers to have deep background knowledge of the discipline, an understanding of the development trends in the research field, and the experience and insight to identify innovation. These are areas where current LLMs fall short. Currently, LLM-generated review comments do not reach the level of high-quality review comments. Such lower-quality review reports cannot effectively improve manuscripts or screen out manuscripts unsuitable for publication, which defeats the primary purpose of peer review. Therefore, over-reliance on LLMs for peer review could compromise the effectiveness of quality control in academic publishing.

Second, LLMs seem to be quite adept at providing reasons for rejecting papers. Theoretically, no paper is perfect. While this capability may help researchers identify deficiencies in their manuscripts before submission and thereby improve their quality, it could also be misused. If a malicious reviewer uses LLMs to generate rejection comments intentionally during peer review, it could lead to the erroneous rejection of research that should have been published. As editors cannot be experts in all fields, a seemingly compelling rejection comment could prompt the editor to decide to reject the manuscript. Even though authors may appeal to reverse the decision, the lengthy appeal process could delay the publication, affecting the novelty and impact of the paper. This not only discourages researchers but also hinders the dissemination and development of scientific knowledge. Conversely, paper mills that seek to manipulate peer review might use this capability to write detailed reports recommending that the editor accept the manuscript directly or with minor revisions, thereby achieving their goal of manipulating the peer review process.19

Additionally, we also found that LLMs can generate seemingly reasonable and persuasive citation requests for completely unrelated references, highlighting the potential risks LLMs pose to academic integrity. Some reviewers might use LLMs to generate specific citation requests in an attempt to manipulate citation metrics, such as increasing their own H-index. Such behaviour not only violates academic ethics but also compromises the quality of the reviewed papers by introducing irrelevant citations.

Lastly, our study also explored possible countermeasures, such as using LLMs to refute unreasonable citation requests. This provides a new approach to addressing academic misconduct. Furthermore, by leveraging the powerful text capabilities of LLMs, we might be able to more efficiently identify and respond to inappropriate citation behaviours, thereby maintaining the health of the academic ecosystem.

In summary, our research highlights the potential detrimental effects that the application of LLMs may have on the integrity and reliability of the peer review process. We urge the academic community to recognise the impact of LLMs on peer review and to take swift action. For authors and reviewers, just as authors are required to declare the use of generative AI tools in writing their papers, journals should also require reviewers to disclose if they have used such tools in preparing their peer review reports; indeed, for data security reasons, it might even be necessary to prohibit reviewers from using AI for peer review to prevent the leakage of unpublished manuscript content. For publishers and editors, it is crucial to develop operational guidelines for handling suspected AI-generated reviews. This protocol could involve using detection tools not for automatic rejection, but as a flag for closer scrutiny, allowing editors to assess the review's substance before deciding whether to discount its influence, discard it or contact the reviewer for clarification. For LLM service providers, feasibility discussions around technical-level restrictions are vital. This could include exploring solutions like digital watermarking for traceability or developing specialised ‘academic modes’ to prevent misuse. At a minimum, clear terms of service prohibiting the input of confidential manuscript data should be established to create ethical and legal guardrails.

Our study has certain limitations. Due to the time-consuming nature of manual peer review, we included only 20 articles in our study. Additionally, we selected research papers from the field of oncology based on our expertise, which may not be representative of papers from all fields. The pros and cons of using LLMs in peer review require further and larger scale evaluation.

All in all, the potential disruptive impact of LLMs on peer review cannot be ignored. In the future, we need to establish robust norms and regulatory mechanisms to maximise the positive contributions of AI technology while effectively mitigating its potential risks. This will require the joint efforts of the academic community, publishers, technology companies and other stakeholders to explore the development path of academic publishing in the LLM era.

AUTHOR CONTRIBUTIONS

Lingxuan Zhu, Yancheng Lai, Jiarui Xie, Weiming Mou: conceptualisation; investigation; writing—original draft; methodology; literature review; writing—review and editing; visualisation. Haoyun Haungli, Chang Qi, Tao Yang, Aiming Jiang, Wenyi Gan, Dongqiang Zeng: conceptualisation; writing—review and editing; investigation. Bufu Tang, Mingjia Xiao, Guangdi Chu, Zaoqu Liu: writing—review and editing; visualisation. Quan Cheng, Anqi Lin, Peng Luo: conceptualisation; literature review; project administration; supervision; resources; writing—review and editing. All authors have read and approved the final manuscript.

CONFLICT OF INTEREST STATEMENT

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

FUNDING INFORMATION

Not applicable.

ETHICS STATEMENT

Not applicable.

Open Research

DATA AVAILABILITY STATEMENT

Data generated or analysed during this study are included in this published article and its supplementary information files. Any additional information required is available upon request.