Double Robust Bayesian Inference on Average Treatment Effects

Abstract

We propose a double robust Bayesian inference procedure on the average treatment effect (ATE) under unconfoundedness. For our new Bayesian approach, we first adjust the prior distributions of the conditional mean functions, and then correct the posterior distribution of the resulting ATE. Both adjustments make use of pilot estimators motivated by the semiparametric influence function for ATE estimation. We prove asymptotic equivalence of our Bayesian procedure and efficient frequentist ATE estimators by establishing a new semiparametric Bernstein–von Mises theorem under double robustness; that is, the lack of smoothness of conditional mean functions can be compensated by high regularity of the propensity score and vice versa. Consequently, the resulting Bayesian credible sets form confidence intervals with asymptotically exact coverage probability. In simulations, our method provides precise point estimates of the ATE through the posterior mean and delivers credible intervals that closely align with the nominal coverage probability. Furthermore, our approach achieves a shorter interval length in comparison to existing methods. We illustrate our method in an application to the National Supported Work Demonstration following LaLonde (1986) and Dehejia and Wahba (1999).

1 Introduction

This paper proposes a double robust Bayesian approach for estimating the average treatment effect (ATE) under unconfoundedness, given a set of pretreatment covariates. Our new Bayesian procedure involves both prior and posterior adjustments. First, following Ray and van der Vaart ( 2020 ), we adjust the prior distributions of the conditional mean function using an estimator of the propensity score. Second, we use this propensity score estimator together with a pilot estimator of the conditional mean to correct the posterior distribution of the ATE. The adjustments in both steps are closely related to the functional form of the semiparametric influence function for ATE estimation under unconfoundedness. They not only shift the center but also change the shape of the posterior distribution. For our robust Bayesian procedure, we derive a new Bernstein–von Mises (BvM) theorem, which means that this posterior distribution, when centered at any efficient estimator, is asymptotically normal with the efficient variance in the semiparametric sense. The key innovation of our paper is that this result holds under double robust smoothness assumptions within the Bayesian framework.

Despite the recent success of Bayesian methods, the literature on ATE estimation is predominantly frequentist. For the missing data problem specifically, it was shown that conventional Bayesian approaches (i.e., using uncorrected priors) can produce inconsistent estimates unless unnecessarily strong smoothness conditions are imposed on the underlying functions; see the results and discussion in Robins and Ritov ( 1997 ) or Ritov, Bickel, Gamst, and Kleijn ( 2014 ). With the prior distribution adjusted using a preestimated propensity score, Ray and van der Vaart ( 2020 ) recently established a novel semiparametric BvM theorem under a weaker smoothness requirement for the propensity score function. However, a minimum differentiability of order is still required for the conditional mean function in the outcome equation, where p denotes the dimensionality of covariates. In this paper, we are interested in Bayesian inference under double robustness that allows for a trade-off between the required levels of smoothness in the propensity score and the conditional mean functions.

Under double robust smoothness conditions, we show that Bayesian methods, which use propensity score adjusted priors as in Ray and van der Vaart ( 2020 ), satisfy the BvM theorem only up to a “bias term” depending on the unknown true conditional mean and propensity score functions. In this paper, our robust Bayesian approach accounts for this bias term in the BvM theorem by considering an explicit posterior correction. Both the prior adjustment and the posterior correction are based on functional forms that are closely related to the efficient influence function for the ATE in Hahn ( 1998 ). We show that the corrected posterior satisfies the BvM theorem under double robust smoothness assumptions. Our novel procedure combines the advantages of Bayesian methodology with the robustness features that are the strengths of frequentist procedures. Our credible intervals are Bayesianly justifiable in the sense of Rubin ( 1984 ), as the uncertainty quantification is conducted conditionally on the observed data and can also be interpreted as frequentist confidence intervals with asymptotically exact coverage probability. Our procedure is inspired by insights from the double machine learning (DML) literature, as well as the bias-corrected matching approach of Abadie and Imbens ( 2011 ), since our robustification of an initial procedure removes some nonnegligible bias and remains asymptotically valid under weaker regularity conditions. While the main part of our theoretical analysis focuses on the ATE of binary outcomes, also considered by Ray and van der Vaart ( 2020 ), we outline extensions of our methodology to continuous and multinomial cases, as well as to other causal parameters.

In both simulations and an empirical illustration using the National Supported Work Demonstration data, we provide evidence that our procedure performs well compared to existing Bayesian and frequentist approaches. In our Monte Carlo simulations, we find that our method results in improved empirical coverage probabilities, while maintaining very competitive lengths for confidence intervals. This finite sample advantage is also observed over Bayesian methods that rely solely on prior corrections. In particular, we note that our approach leads to more accurate uncertainty quantification and is less sensitive to estimated propensity scores being close to boundary values.

The BvM theorem for parametric Bayesian models is well established; see, for instance, van der Vaart ( 1998 ). Its semiparametric version is still being studied very actively when nonparametric priors are used ( Castillo ( 2012 ), Castillo and Rousseau ( 2015 ), Ray and van der Vaart ( 2020 )). To the best of our knowledge, our new semiparametric BvM theorem is the first one that possesses the double robustness property. Our paper is also connected to another active research area concerning Bayesian inference for parameters in econometric models, which is robust to partial or weak identification ( Chen, Christensen, and Tamer ( 2018 ), Giacomini and Kitagawa ( 2021 ), Andrews and Mikusheva ( 2022 )). The framework and the approach we take are different. Nonetheless, both lines of work share the aim of tailoring the Bayesian inference procedure to new challenges in contemporary econometrics.

2 Setup and Implementation

This section provides the main setup of the average treatment effect (ATE). We motivate the new Bayesian methodology and detail the practical implementation.

2.1 Setup

We consider a family of probability distributions for some parameter space , where the (possibly infinite dimensional) parameter η characterizes the probability model. Let be the true value of the parameter and denote , which corresponds to the frequentist distribution generating the observed data.

Assumption 1.(i) and (ii) there exists such that for all x in the support of .

2.2 Double Robust Bayesian Point Estimators and Credible Sets

We build upon the ATE expression in ( 2.3 ) to develop our doubly robust inference procedure. Our approach is based on nonparametric prior processes for and . For the latter, we consider the Dirichlet process, which is a default prior on spaces of probability measures. This choice is also convenient for posterior computation via the Bayesian bootstrap; see Remark 2.1 . For the former, we make use of Gaussian process priors, along with an adjustment that involves a preliminary estimator of . Gaussian process priors are also closely related to spline smoothing, as discussed in Wahba ( 1990 ). Their posterior contraction properties (see Ghosal and van der Vaart ( 2017 )), together with excellent finite sample behavior (see Rasmussen and Williams ( 2006 )), make Gaussian process priors popular in the related literature. Since does not depend on , the specification of a prior on the propensity score is not required.

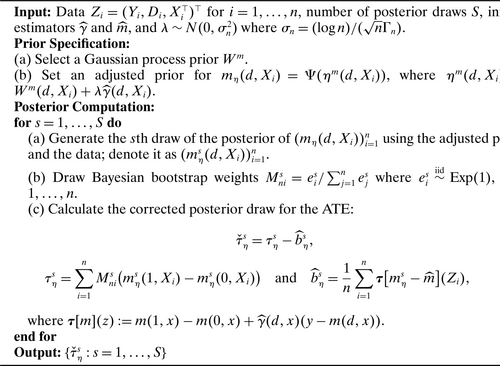

Algorithm 1. Double Robust Bayesian Procedure.

For the implementation of our pilot estimator given in ( 2.6 ), we recommend using propensity scores estimated by the logistic Lasso. For the implementation of the pilot estimator , we adopt the posterior mean of generated from a Gaussian process prior without adjustment, as in Ghosal and Roy ( 2006 ). Section 4.2 provides more implementation details. To approximate the posterior distribution, we make use of the Laplace approximation, but one can also resort to Markov chain Monte Carlo (MCMC) algorithms. The parameter controls the weight placed on the prior adjustment relative to the standard unadjusted prior on (e.g., a Gaussian prior with a squared exponential covariance function). Regarding the tuning parameter , we emphasize that our finite sample results are not sensitive to its choice, as shown in Supplemental Appendix H.

Remark 2.1. (Bayesian Bootstrap) Under unconfoundedness and the reparametrization in ( 2.2 ), the ATE can be written as . With independent priors on and , their posteriors also become independent. It is thus sufficient to consider the posterior for and separately. We place a Dirichlet process prior on with the base measure taken to be zero. Consequently, the posterior law of coincides with the Bayesian bootstrap introduced by Rubin ( 1981 ); see also Chamberlain and Imbens ( 2003 ). One key advantage of the Bayesian bootstrap is that it allows us to incorporate a broad class of data generating processes, whose posterior can be easily sampled. Replacing by the standard empirical cumulative distribution function does not provide sufficient randomization of , as it yields an underestimation of the asymptotic variance; see Ray and van der Vaart ( 2020 , p. 3008). In principle, one could consider other types of bootstrap weights; however, these generally do not correspond to the posterior of any prior distribution.
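The Bayesian bootstrap in Remark 2.1 can be illustrated with a minimal sketch. It uses the standard fact that Bayesian bootstrap weights follow a Dirichlet(1, ..., 1) distribution, which can be drawn by normalizing independent standard exponential variables. The function names and the toy values below are hypothetical and are not taken from the paper; the toy values merely stand in for differences of conditional means evaluated at the observed covariates.

```python
import random


def bayesian_bootstrap_weights(n, rng):
    """Draw one vector of Bayesian bootstrap weights, i.e. Dirichlet(1, ..., 1)."""
    # Normalized i.i.d. standard exponential draws are Dirichlet(1, ..., 1).
    e = [rng.expovariate(1.0) for _ in range(n)]
    total = sum(e)
    return [v / total for v in e]


def posterior_draws_of_mean(values, n_draws, seed=0):
    """Posterior draws of a weighted mean of `values` under the Bayesian bootstrap."""
    rng = random.Random(seed)
    draws = []
    for _ in range(n_draws):
        w = bayesian_bootstrap_weights(len(values), rng)
        draws.append(sum(wi * vi for wi, vi in zip(w, values)))
    return draws


# Hypothetical toy values standing in for m(1, X_i) - m(0, X_i).
toy_effects = [0.10, 0.25, 0.05, 0.30, 0.15]
draws = posterior_draws_of_mean(toy_effects, n_draws=2000)
```

Each draw reweights the observed covariate values at random, in contrast to the fixed weights 1/n of the empirical distribution function, which is what supplies the additional randomization discussed in the remark.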

3 Main Theoretical Results

3.1 Least Favorable Direction

Lemma 3.1. Consider the submodel ( 3.2 ). Let Assumption 1 hold for with any η under consideration, then the least favorable direction for estimating the ATE parameter in ( 2.3 ) is:

3.2 Assumptions for Inference

We first introduce high-level assumptions and discuss primitive conditions for those in the next section. Below, we consider some measurable sets of functions such that . We also denote when we index the conditional mean function by its subscript η. We introduce the notation for all , as well as the supremum norm . For two sequences and of positive numbers, we write if , and if and .

Assumption 2. (Rates of Convergence) The estimators and , which are based on an auxiliary sample independent of , satisfy and for :

Assumption 3. (Complexity) For it holds and

Assumption 4. (Prior Stability) For , is a continuous stochastic process independent of the normal random variable , where , and that satisfies: (i) , for some deterministic sequence and (ii) for any .

Discussion of Assumptions

Assumption 2 imposes sufficiently fast convergence rates for the pilot estimators for the conditional mean function and the propensity score . When considering frequentist pilot estimators, these rate conditions can be justified by adopting the recent proposals of Chernozhukov, Newey, and Singh ( 2022a , b ). One can also use Bayesian point estimators such as the posterior mean of the Gaussian process for and . The posterior convergence rate for the conditional mean can be derived in the same spirit as Ray and van der Vaart ( 2020 ). The rate conditions in Assumption 2 also resemble conditions (i) and (ii) of Theorem 1 of Farrell ( 2015 ) in the context of frequentist estimation. Remark 4.1 illustrates that under classical smoothness assumptions, this assumption is less restrictive than the method of Ray and van der Vaart ( 2020 ) or other approaches for semiparametric estimation of ATEs as found in Chen, Hong, and Tarozzi ( 2008 ) or Farrell, Liang, and Misra ( 2021 ). Assumption 4 incorporates Conditions (3.9) and (3.10) from Theorem 2 in Ray and van der Vaart ( 2020 ), and it is imposed to check the invariance property of the adjusted prior distribution. These restrictions are mild and extend beyond the Gaussian processes that are considered in Section 4 for concreteness.

Assumption 3 restricts the functional class to form a -Glivenko–Cantelli class; see Section 2.4 of van der Vaart and Wellner ( 1996 ). It also imposes a new stochastic equicontinuity condition, as ( 3.5 ) restricts a product structure involving and , which further relaxes the corresponding condition from Ray and van der Vaart ( 2020 ), namely, . In the next section, we demonstrate that our formulation allows for double robustness under Hölder classes (see Remark 4.1 ). Hence, the complexity of the functional class can be compensated by sufficient regularity of the corresponding Riesz representer and vice versa. A condition similar to our Assumption 3 is also used in the frequentist literature; see Section 2 of Benkeser, Carone, van der Laan, and Gilbert ( 2017 ). Nonetheless, the technical argument differs substantially from that in the frequentist literature, because we mainly need the condition ( 3.5 ) to control changes in the likelihood under perturbations along the estimated and true least favorable directions. This is unique to Bayesian analysis with nonparametric priors.

3.3 A Double Robust Bernstein–von Mises Theorem

Below is our main statement about the asymptotic behavior of the posterior distribution of . As in the modern Bayesian paradigm, the exact posterior is rarely of closed form, and one needs to rely on certain Monte Carlo simulations, such as the implementation procedure in Section 2.2 , to approximate this posterior distribution, as well as the resulting point estimator and credible set.

We emphasize that the above BvM theorem is not feasible for applications, because it depends on the “bias term” , which depends on the unknown conditional mean . Nonetheless, it provides an important theoretical benchmark. One can follow the existing literature on semiparametric BvM theorems to impose the so-called “no-bias” condition, but this generally leads to strong smoothness restrictions and may not be satisfied when the dimensionality of covariates is large relative to the smoothness properties of the underlying functions; see the discussion on page 395 of van der Vaart ( 1998 ). This “bias term” in our context consists of two key components, with the first involving unknown true functions and the second depending on the posterior of . We consider pilot estimators for the unknown functional parameters in . The correction term , as introduced in ( 2.8 ), results in a feasible Bayesian procedure that satisfies the BvM theorem under double robustness, as demonstrated below.

We now show how Theorem 3.2 can provide frequentist justification of Bayesian methods to construct the point estimator and the confidence sets. Recall that represents the posterior mean. Introduce a Bayesian credible set for , which satisfies for a given nominal level . The next result shows that also forms a confidence interval in the frequentist sense for the ATE parameter, whose coverage probability under converges to .
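In practice, the point estimator and credible set described above are computed from Monte Carlo draws of the posterior. The following sketch shows one common way to do this, assuming an equal-tailed interval built from empirical quantiles; the text only requires that the set has posterior probability equal to one minus the nominal level, so the equal-tailed choice, the function name, and the simulated draws are assumptions made for illustration.

```python
import random


def posterior_summary(draws, alpha=0.05):
    """Posterior mean and equal-tailed (1 - alpha) credible interval from draws."""
    s = sorted(draws)
    n = len(s)

    def quantile(q):
        # Linear interpolation between adjacent order statistics.
        pos = q * (n - 1)
        lo = int(pos)
        hi = min(lo + 1, n - 1)
        frac = pos - lo
        return s[lo] * (1 - frac) + s[hi] * frac

    mean = sum(s) / n
    return mean, (quantile(alpha / 2), quantile(1 - alpha / 2))


# Hypothetical posterior draws of the ATE, simulated only for illustration.
rng = random.Random(0)
toy_draws = [rng.gauss(0.1, 0.05) for _ in range(4000)]
post_mean, (ci_low, ci_high) = posterior_summary(toy_draws, alpha=0.05)
```

By construction, roughly 95% of the draws fall inside the resulting interval; the frequentist coverage statement in the text is a separate, asymptotic property.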

To the best of our knowledge, this is the first BvM theorem that establishes double robustness. We discuss the distinction from Theorem 2 in Ray and van der Vaart ( 2020 ). Their work laid the theoretical foundation for Bayesian inference based on the propensity score adjusted priors. Specifically, under this prior adjustment, they established a BvM result under weak regularity conditions on the propensity score function, referring to this property as single robustness. Our analysis differs from Ray and van der Vaart ( 2020 ) in two crucial ways. First, we improve on their Lemma 3 by showing that it is possible to verify the prior stability condition for propensity score-adjusted priors under the product structure in Assumption 3 , modulo the “bias term” . This separation is essential to identify the source of the restrictive condition, such as the Donsker property on , which is mainly used to eliminate . Second, our proposal introduces an explicit debiasing step, borrowing key insights from recent developments in the DML literature.

Remark 3.1. (Connection with frequentist robust estimation) In our BvM theorem, we do not restrict the centering estimator , as long as it admits the linear representation as in ( 3.1 ). A popular frequentist estimator for the ATE that achieves double robustness is

Remark 3.2. (Parametric Bayesian Methods) A couple of recent papers propose doubly robust Bayesian recipes for ATE inference, under parametric model restrictions. Saarela, Belzile, and Stephens ( 2016 ) considered a Bayesian procedure based on an analog of the double robust frequentist estimator given in Equation ( 3.8 ), replacing the empirical measure with the Bayesian bootstrap measure. However, there was no formal BvM theorem presented therein. Another recent paper by Yiu, Goudie, and Tom ( 2020 ) explored Bayesian exponentially tilted empirical likelihood with a set of moment constraints that are of a double-robust type. They proved a BvM theorem for the posterior constructed from the resulting exponentially tilted empirical likelihood under parametric specifications. Luo, Graham, and McCoy ( 2023 ) provided Bayesian results for ATE estimation in a partial linear model, which implies homogeneous treatment effects. They also assign parametric priors to the propensity score. Their BvM theorem allows for misspecification only in a parametric nonlinear component of the outcome equation. It is not clear how to extend their analysis to incorporate flexible nonparametric modeling strategies.
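To make the frequentist doubly robust estimator of Remarks 3.1 and 3.2 concrete, the sketch below implements the standard augmented inverse probability weighting (AIPW) form. Equation ( 3.8 ) is not reproduced in this text, so the code assumes that standard form rather than the paper's exact display. Passing non-uniform weights replaces the empirical measure by a reweighted one, in the spirit of the Bayesian bootstrap analog described in Remark 3.2. All names and the toy data are hypothetical.

```python
def riesz_representer(d, pi):
    """gamma(d, x) = d / pi(x) - (1 - d) / (1 - pi(x)) for a binary treatment d."""
    return d / pi - (1 - d) / (1 - pi)


def aipw_ate(y, d, m1, m0, pi, weights=None):
    """Weighted AIPW estimate of the ATE (assumed standard form).

    y, d   : outcomes and binary treatments
    m1, m0 : fitted conditional means under treatment and control
    pi     : fitted propensity scores
    weights: observation weights summing to one (default: 1/n each)
    """
    n = len(y)
    if weights is None:
        weights = [1.0 / n] * n
    total = 0.0
    for yi, di, m1i, m0i, pii, wi in zip(y, d, m1, m0, pi, weights):
        m_obs = m1i if di == 1 else m0i
        # Plug-in difference plus the residual correction weighted by gamma.
        total += wi * (m1i - m0i + riesz_representer(di, pii) * (yi - m_obs))
    return total


# Hypothetical toy data with constant fitted values, for illustration only.
y = [1, 0, 1, 1, 0, 0]
d = [1, 1, 0, 1, 0, 0]
m1, m0, pi = [0.7] * 6, [0.4] * 6, [0.5] * 6
ate_hat = aipw_ate(y, d, m1, m0, pi)
```

The correction term vanishes on average when either the conditional mean or the propensity score is estimated well, which is the intuition behind double robustness.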

4 Illustration Using Squared Exponential Process Priors

We illustrate the general methodology by placing a particular Gaussian process prior on in relation to the conditional mean functions for . Gaussian process regression has been used extensively in the machine learning community and has started to gain popularity among economists; see Kasy ( 2018 ). We provide primitive conditions for the assumptions used in our main results in the previous section. In addition, we provide details on the implementation using Gaussian process priors and discuss the data-driven choices of tuning parameters.

4.1 Asymptotic Results Under Primitive Conditions

Let be a generic centered and homogeneous Gaussian random field with covariance function of the following form , for a given continuous function . We consider as a Borel measurable map in the space of continuous functions on , equipped with the supremum norm . The Gaussian process is completely determined by the covariance function. For example, the covariance function of the squared exponential process is given by , as its name suggests. In this section, we focus on the squared exponential process prior, which is one of the most commonly used priors in applications; see Rasmussen and Williams ( 2006 ) and Murphy ( 2023 ). We also consider a rescaled Gaussian process for some . Intuitively speaking, can be thought of as a bandwidth parameter. For a large (or equivalently a small bandwidth), the prior sample path is obtained by shrinking the long sample path . Thus, it employs more randomness and becomes suitable as a prior model for less regular functions; see van der Vaart and van Zanten ( 2008 , 2009 ).
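The role of the rescaling parameter can be seen in a short numerical sketch. It assumes the unnormalized squared exponential covariance exp(-||s - t||^2) and a rescaled process obtained by evaluating the original process at a times the index, whose covariance is then exp(-a^2 ||s - t||^2). The displayed formulas are missing from this text, so this form, the function name, and the toy inputs are assumptions.

```python
import math


def sq_exp_cov(s, t, a=1.0):
    """Covariance of the rescaled squared exponential process at points s and t.

    Assumed form: exp(-a^2 * ||s - t||^2); a = 1 gives the unrescaled process.
    """
    sq_dist = sum((si - ti) ** 2 for si, ti in zip(s, t))
    return math.exp(-(a ** 2) * sq_dist)


# Hypothetical pair of points at a fixed distance.
s, t = (0.2, 0.4), (0.5, 0.8)
cov_smooth = sq_exp_cov(s, t, a=1.0)   # small a: values at s and t move together
cov_rough = sq_exp_cov(s, t, a=5.0)    # large a: values are nearly uncorrelated
```

A larger rescaling parameter makes the covariance decay faster in the distance between points, so sample paths vary more rapidly, which matches the description of the prior as suitable for less regular functions.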

Proposition 4.1. Let Assumption 1 hold. The estimator satisfies and for some . Suppose for and some with . Also, . Consider the propensity score-dependent prior on m given by , where is the rescaled squared exponential process for , with its rescaling parameter of the order in ( 4.1 ) and for some deterministic sequence , and . Then the corrected posterior distribution for the ATE satisfies Theorem 3.1 .

Remark 4.1. (Double Robust Hölder Smoothness) Proposition 4.1 requires , which represents a trade-off between the smoothness requirement for and . This encapsulates double robustness; that is, a lack of smoothness of the conditional mean function can be mitigated by exploiting the regularity of the propensity score and vice versa. Referring to the Hölder class , its complexity measured by the bracketing entropy of size ε is of order for . One can show that the key stochastic equicontinuity assumption in Ray and van der Vaart ( 2020 ), that is, their condition ( 3.5 ), is violated by exploring the Sudakov lower bound in Han ( 2021 ) when , or equivalently, when . In contrast, our framework accommodates this non-Donsker regime as long as , which enables us to exploit the product structure and a fast convergence rate for estimating the propensity score. Our methodology is not restricted to the case where the propensity score belongs to a Hölder class per se. For instance, under a parametric restriction (such as in logistic regression) or an additive model with unknown link function, the possible range of the posterior contraction rate for the conditional mean function can be substantially enlarged. In the case , the bias term becomes asymptotically negligible, that is, . This allows for smoothness robustness only with respect to the propensity score and is also known as single robustness. In this case, no posterior correction is required; see Ray and van der Vaart ( 2020 ).

4.2 Implementation Details

We provide details on the Gaussian process prior placed on and its posterior computation. Algorithm 1 sets the adjusted prior as . In our implementation, we choose the first component to be a zero-mean Gaussian process with the commonly used squared exponential covariance function; see Rasmussen and Williams ( 2006 , p. 83). That is, where the hyperparameter is the kernel variance and are rescaling parameters that reflect the relevance of treatment and each covariate in predicting . They are selected by maximizing the marginal likelihood. Conditional on the data used to obtain the propensity score estimator , the prior for has zero mean and the covariance kernel , which includes an additional term based on the estimated Riesz representer . It is given by , cf. related constructions from Ray and Szabó ( 2019 ) and Ray and van der Vaart ( 2020 ). The parameter , representing the standard deviation of λ, controls the weight of the prior adjustment relative to the standard Gaussian process. The choice , where , as specified in Algorithm 1 , satisfies the conditions and in Assumption 4 , with probability approaching one. It is similar to the choice suggested by Ray and Szabó ( 2019 , page 6), where is proportional to . The factor normalizes the second term (adjustment term) of to have the same scale as the unadjusted covariance K. Supplemental Appendix H shows that the finite sample performance of the double robust Bayesian approaches remains stable across different choices of .
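The structure of the adjusted covariance kernel can be sketched as follows. The sketch relies on one fact stated in the text: the adjusted prior adds to the Gaussian process an independent normal variable λ, with a given standard deviation, multiplied by the estimated Riesz representer. Under that construction the covariance is the base kernel plus a rank-one term. The exact kernel parametrization, the form of the Riesz representer for the ATE, and all names and numerical values below are assumptions, since the displayed formulas are missing from this text.

```python
import math


def se_kernel(w1, w2, nu2, a):
    """Squared exponential kernel with kernel variance nu2 and one rescaling
    parameter per coordinate of w = (d, x_1, ..., x_p) (assumed parametrization)."""
    return nu2 * math.exp(-sum((al * (u - v)) ** 2 for al, u, v in zip(a, w1, w2)))


def riesz_hat(w, pi_hat):
    """Estimated Riesz representer d / pi_hat(x) - (1 - d) / (1 - pi_hat(x))."""
    d, x = w[0], w[1:]
    p = pi_hat(x)
    return d / p - (1 - d) / (1 - p)


def adjusted_kernel(w1, w2, nu2, a, sigma_n, pi_hat):
    """Covariance of W + lambda * gamma_hat, with lambda ~ N(0, sigma_n^2)
    independent of W: base kernel plus a rank-one adjustment term."""
    return (se_kernel(w1, w2, nu2, a)
            + sigma_n ** 2 * riesz_hat(w1, pi_hat) * riesz_hat(w2, pi_hat))


def constant_pi_hat(x):
    """Hypothetical propensity score estimate, constant in x for illustration."""
    return 0.25


# Hypothetical evaluation points: (treatment, covariate).
w_treated, w_control = (1, 0.3), (0, 0.5)
k_adj = adjusted_kernel(w_treated, w_control, nu2=1.0, a=[1.0, 2.0],
                        sigma_n=0.1, pi_hat=constant_pi_hat)
```

Because the adjustment term scales with the estimated Riesz representer, it grows when estimated propensity scores approach 0 or 1, which is one reason a normalizing factor for the adjustment weight is useful.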

Utilizing Gaussian process priors with zero mean and covariance function , and incorporating the available data, we generate posterior draws of the vector for . This can be achieved through the Laplace approximation method detailed in Supplemental Appendix G.

For the implementation of the pilot estimator given in ( 2.6 ), we recommend logistic Lasso for the propensity scores, with the penalty parameter chosen by cross-validation, following Friedman, Hastie, and Tibshirani ( 2010 ). As a pilot estimator in Algorithm 1 for posterior correction, we use the uncorrected posterior mean , where is calculated following Step (a) of posterior computation in Algorithm 1 , but with a Gaussian process prior without adjustment, that is, . When the rescaling parameter is as stated in Proposition 4.1 , the convergence rate of is . This can be shown by combining Theorems 11.22, 11.55, and 8.8 from Ghosal and van der Vaart ( 2017 ).

5 Numerical Results

In this section, we apply our method to one version of the Lalonde–Dehejia–Wahba data that contains a treated sample of 185 men from the National Supported Work (NSW) experiment and a control sample of 2490 men from the Panel Study of Income Dynamics (PSID). The data have been used by LaLonde ( 1986 ), Dehejia and Wahba ( 1999 ), Abadie and Imbens ( 2011 ), and Armstrong and Kolesár ( 2021 ), among others. We refer readers to LaLonde ( 1986 ) and Dehejia and Wahba ( 1999 ) for reviews of the data.

5.1 Simulations

In this section, we consider a simulation study where the observations are randomly drawn from a large sample generated by applying the Wasserstein Generative Adversarial Networks (WGAN) method to the Lalonde–Dehejia–Wahba data; see Athey, Imbens, Metzger, and Munro ( 2024 ). We view their simulated data as the population and repeatedly draw our simulation samples (each consisting of 185 treated observations and 2490 control observations) for each of the 1000 Monte Carlo replications. We slightly depart from previous studies by focusing on a binary outcome Y: the employment indicator for the year 1978, which is defined as an indicator for positive earnings. The treatment D is the participation in the NSW program. We are interested in the average treatment effect of the NSW program on the employment status. For the set of covariates, we follow Abadie and Imbens ( 2011 ) and include nine variables: age, education, black, Hispanic, married, earnings in 1974, earnings in 1975, unemployed in 1974, and unemployed in 1975. We implement our double robust Bayesian method (DR Bayes) following Algorithm 1 , using posterior draws and the pilot estimator and , as detailed at the end of Section 4.2 . We compare DR Bayes to two other Bayesian procedures: First, we consider the prior adjusted Bayesian method (PA Bayes) proposed by Ray and van der Vaart ( 2020 ), which constructs the point estimate and credible interval based on in (2.8). Second, we examine an unadjusted Bayesian method (Bayes), which is also based on but generated using Gaussian process priors without adjustment.

We also compare our method to frequentist estimators. Match/Match BC corresponds to the nearest neighbor matching estimator and its bias-corrected version by Abadie and Imbens ( 2011 ), which adjusts for differences in covariate values through regression. DR TMLE corresponds to the doubly robust targeted maximum likelihood estimator by Benkeser et al. ( 2017 ). DML refers to the double/debiased machine learning estimator from Chernozhukov, Chetverikov, Demirer, Duflo, Hansen, and Newey ( 2017 ), where the nuisance functions and are estimated using random forests (which outperformed DML combined with other nuisance function estimators, such as Lasso, in our simulation setup). Since the job-training data contains a sizable proportion of units with propensity score estimates very close to 0 or 1, we follow Crump, Hotz, Imbens, and Mitnik ( 2009 ) and discard observations with the estimated propensity score outside the range , with the trimming threshold .
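The trimming step can be sketched in a few lines. The trimming range is missing from this text, so the code assumes the usual symmetric rule that keeps observations whose estimated propensity score lies between the threshold t and 1 - t. The function name, the toy data, and the threshold value used below are hypothetical.

```python
def trim_sample(y, d, x, pi_hat, t):
    """Keep observations with estimated propensity score in [t, 1 - t]."""
    keep = [i for i, p in enumerate(pi_hat) if t <= p <= 1 - t]
    return ([y[i] for i in keep], [d[i] for i in keep],
            [x[i] for i in keep], [pi_hat[i] for i in keep])


# Hypothetical toy sample: two units have extreme estimated propensity scores.
y = [1, 0, 1, 0, 1]
d = [1, 0, 0, 1, 0]
x = [[0.2], [1.5], [0.7], [0.9], [2.1]]
pi_hat = [0.98, 0.40, 0.55, 0.70, 0.004]
y_t, d_t, x_t, pi_t = trim_sample(y, d, x, pi_hat, t=0.05)
```

A smaller threshold retains more observations but leaves estimated propensity scores closer to the boundary, which is the regime in which the methods compared in this section differ most.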

Table I presents the finite sample performance of the Bayesian and frequentist methods mentioned above. We use the full data twice in computing the prior/posterior adjustments and the posterior distribution of the conditional mean function. Supplemental Appendix H reports the performance of DR Bayes using sample splitting, which results in similar coverage but a larger credible interval length due to the halved sample size.

| | () | | | () | | | () | | |
|---|---|---|---|---|---|---|---|---|---|
| Methods | Bias | CP | CIL | Bias | CP | CIL | Bias | CP | CIL |
| Bayes | −0.040 | 0.683 | 0.147 | −0.010 | 0.841 | 0.149 | −0.006 | 0.911 | 0.120 |
| PA Bayes | −0.008 | 0.981 | 0.260 | 0.033 | 0.949 | 0.254 | 0.047 | 0.897 | 0.308 |
| DR Bayes | −0.024 | 0.983 | 0.223 | 0.014 | 0.970 | 0.221 | 0.023 | 0.952 | 0.258 |
| Match | 0.027 | 0.933 | 0.334 | 0.048 | 0.908 | 0.323 | 0.033 | 0.965 | 0.323 |
| Match BC | 0.040 | 0.880 | 0.347 | 0.065 | 0.816 | 0.334 | 0.083 | 0.804 | 0.339 |
| DR TMLE | 0.015 | 0.832 | 0.300 | 0.039 | 0.746 | 0.282 | 0.039 | 0.668 | 0.242 |
| DML | 0.045 | 0.927 | 0.524 | 0.052 | 0.870 | 0.393 | 0.054 | 0.918 | 0.522 |

Concerning the Bayesian methods for estimating the ATE, Table I reveals that unadjusted Bayes yields highly inaccurate coverage except for the case with trimming constant . If the prior is corrected using the propensity score adjustment, the results improve significantly. Nevertheless, our DR Bayes method demonstrates two further improvements: First, DR Bayes leads to shorter average credible interval lengths in each case while simultaneously improving the coverage probability. This can be attributed to a reduction in bias and/or more accurate uncertainty quantification via our posterior correction. Second, when the trimming threshold is small (i.e., ), propensity score estimators can be less accurate, leading to reduced coverage probabilities of PA Bayes. Our double robust Bayesian method, on the other hand, is still able to provide accurate coverage probabilities. In other words, DR Bayes exhibits more stable performance than PA Bayes with respect to the trimming threshold.

Our DR Bayes also exhibits encouraging performance when compared to frequentist methods. It provides more accurate coverage than bias-corrected matching, DR TMLE, and DML. Compared to the matching estimator without bias correction, which achieves similarly good coverage, DR Bayes yields considerably shorter credible intervals.
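The summary statistics in Table I can be computed from Monte Carlo output along the following lines. The column abbreviations are not defined in this text, so the sketch assumes the usual reading: Bias is the average deviation of the point estimate from the true ATE, CP is the fraction of intervals that cover the true ATE, and CIL is the average interval length. The function name and the toy replications are hypothetical.

```python
def summarize_replications(estimates, intervals, truth):
    """Bias, coverage probability (CP), and average interval length (CIL)
    across Monte Carlo replications (assumed definitions)."""
    n = len(estimates)
    bias = sum(e - truth for e in estimates) / n
    cp = sum(1 for lo, hi in intervals if lo <= truth <= hi) / n
    cil = sum(hi - lo for lo, hi in intervals) / n
    return {"Bias": bias, "CP": cp, "CIL": cil}


# Hypothetical output of four replications with a known population ATE.
toy_estimates = [0.10, 0.14, 0.08, 0.12]
toy_intervals = [(0.00, 0.20), (0.05, 0.25), (-0.05, 0.10), (0.02, 0.22)]
summary = summarize_replications(toy_estimates, toy_intervals, truth=0.11)
```

In the simulation design described above, the truth would be the ATE in the large WGAN-generated population, and the lists would contain one entry per Monte Carlo replication.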

5.2 An Empirical Illustration

We apply the Bayesian and frequentist methods considered above to the Lalonde–Dehejia–Wahba data. Similar to the simulation exercise, we consider a varying choice of the trimming threshold . The ATE point estimates and confidence intervals are presented in Table II . As a benchmark, the experimental data that uses both treated and control groups in NSW () yields an ATE estimate (treated-control mean difference) of 0.111 with a 95% confidence interval .

| | () | | | () | | | () | | |
|---|---|---|---|---|---|---|---|---|---|
| Methods | ATE | 95% CI | CIL | ATE | 95% CI | CIL | ATE | 95% CI | CIL |
| Bayes | 0.213 | [0.120, 0.301] | 0.181 | 0.214 | [0.132, 0.292] | 0.161 | 0.198 | [0.140, 0.251] | 0.112 |
| PA Bayes | 0.158 | [0.019, 0.288] | 0.270 | 0.170 | [0.045, 0.281] | 0.236 | 0.090 | [−0.078, 0.233] | 0.311 |
| DR Bayes | 0.178 | [0.061, 0.293] | 0.231 | 0.184 | [0.064, 0.294] | 0.230 | 0.121 | [−0.031, 0.250] | 0.281 |
| Match | 0.188 | [0.022, 0.355] | 0.333 | 0.140 | [−0.029, 0.309] | 0.338 | 0.079 | [−0.111, 0.269] | 0.380 |
| Match BC | 0.157 | [−0.006, 0.321] | 0.327 | 0.145 | [−0.021, 0.310] | 0.331 | 0.180 | [−0.004, 0.365] | 0.369 |
| DR TMLE | −0.023 | [−0.171, 0.125] | 0.296 | 0.073 | [−0.074, 0.220] | 0.294 | 0.071 | [−0.146, 0.289] | 0.435 |
| DML | 0.172 | [0.018, 0.327] | 0.308 | 0.150 | [−0.010, 0.310] | 0.320 | 0.258 | [−0.183, 0.699] | 0.882 |

As we see from Table II , the unadjusted Bayesian method yields larger estimates. The adjusted Bayesian methods (PA and DR Bayes), on the other hand, produce estimates comparable to the experimental estimate. PA Bayes finds that the job training program increased employment by 9.0% to 17.0% across different trimming thresholds, and DR Bayes estimates the effect to be between 12.1% and 18.4%. Among frequentist estimators, the matching estimator and its bias-corrected version produce estimates similar to those of PA and DR Bayes, but with wider confidence intervals. DR TMLE produces negative estimates for when all other estimates are positive. For and 0.05, DML yields point estimates similar to those of PA and DR Bayes, but with less estimation precision. In the case , where the overlap condition is nearly violated, its point estimate and confidence interval length become considerably larger than those of other methods.

6 Extensions

This section extends the analysis from a binary outcome Y to general cases, including continuous, count, and multinomial outcomes. First, we examine the class of single-parameter exponential families, where the conditional density function is solely determined by the nonparametric conditional mean function. This covers continuous outcomes and count variables. Second, we consider the “vector” case of exponential families for multinomial outcomes. For both classes, we derive the novel correction to the Bayesian procedure and defer more technical discussions to Supplemental Appendices D and F. Additionally, we outline extensions to other causal parameters of interest.
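For orientation, two familiar members of the single-parameter class can be written with the conditional mean as the only unknown. The notation m(d,x) for the conditional mean and the textbook parametrization below are assumptions made for illustration and need not match the paper's own display.

```latex
% Poisson count outcome with conditional mean m(d,x) > 0
f(y \mid d, x) = \frac{1}{y!} \exp\{ y \log m(d,x) - m(d,x) \},
  \qquad y = 0, 1, 2, \dots

% Gaussian outcome with unit variance and conditional mean m(d,x)
f(y \mid d, x) = \frac{1}{\sqrt{2\pi}} \exp\{ -y^2/2 \}
  \exp\{ y\, m(d,x) - m(d,x)^2/2 \},
  \qquad y \in \mathbb{R}
```

In both cases the density depends on (d,x) only through m(d,x), which is the property the extension relies on.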

6.1 A Single-Parameter Exponential Family

Lemma 6.1. Let Assumption 1 hold for with any η under consideration. Then, for the joint distribution (6.2) and the submodel defined by the path with as defined in (3.2), the least favorable direction for estimating the ATE parameter in (2.3) is

6.2 Multinomial Outcomes

Lemma 6.2. Consider the submodel defined by the path , , with as defined in (3.2). Let Assumption 1 hold for with any η under consideration. Then the least favorable direction for estimating the ATE parameter is

6.3 Other Causal Parameters

We now extend our procedure to general linear functionals of the conditional mean function. We do so only for binary outcomes, as the modification to other types of outcomes follows as above. Recall that the observable data consists of i.i.d. observations of . The causal parameter of interest is , where the function ψ is linear with respect to the conditional mean function . We introduce the Riesz representer satisfying . Let and be pilot estimators for the conditional mean and Riesz representer, respectively, computed over an auxiliary sample. Our double robust Bayesian procedure can be extended by considering the corrected posterior distribution for as follows: , , where . The derivations of the least favorable directions in the following two examples are provided in Supplemental Appendix E.
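To illustrate how a Riesz representer enters a correction term, the following sketch computes the frequentist one-step analogue for the ATE: the plug-in functional plus the Riesz-weighted residual. It is not the paper's Bayesian posterior correction. The data-generating process, the function names, and the deliberately poor constant pilot for the conditional mean are all hypothetical, and the exact representer d/π(x) − (1 − d)/(1 − π(x)) is used in place of an estimate.

```python
import random


def riesz_ate(d, x, propensity):
    """Riesz representer of the ATE functional: d/pi(x) - (1-d)/(1-pi(x))."""
    p = propensity(x)
    return d / p - (1 - d) / (1 - p)


def one_step_estimate(data, m_hat, gamma_hat):
    """Average of psi(m_hat) plus the Riesz-weighted residual correction."""
    total = 0.0
    for y, d, x in data:
        plug_in = m_hat(1, x) - m_hat(0, x)
        correction = gamma_hat(d, x) * (y - m_hat(d, x))
        total += plug_in + correction
    return total / len(data)


# Hypothetical design: the true ATE equals 0.3 by construction.
def propensity(x):
    return 0.3 + 0.4 * x


def true_mean(d, x):
    return 0.2 + 0.3 * d + 0.3 * x


rng = random.Random(0)
data = []
for _ in range(20000):
    x = rng.random()
    d = 1 if rng.random() < propensity(x) else 0
    y = 1 if rng.random() < true_mean(d, x) else 0
    data.append((y, d, x))


def bad_m_hat(d, x):
    """Deliberately misspecified pilot: ignores both d and x."""
    return 0.5


def gamma(d, x):
    return riesz_ate(d, x, propensity)


plug_in_only = sum(bad_m_hat(1, x) - bad_m_hat(0, x) for _, _, x in data) / len(data)
estimate = one_step_estimate(data, bad_m_hat, gamma)
print(plug_in_only, round(estimate, 3))
```

The plug-in term alone is exactly zero under the constant pilot, while the corrected estimate should land near 0.3 because the representer is correct. This is the frequentist face of the double robustness that the corrected posterior is designed to inherit.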

Example 6.1. (Average Policy Effects) The policy effect from changing the distribution of X is , where the known distribution functions and have their supports contained in the support of the marginal covariate distribution . Following the general setup, with its Riesz representer , where and stand for the density functions of and , respectively.
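The representer property in this example can be checked in one line. The symbols below are assumptions introduced for illustration: G_1 and G_0 are the two known distributions with densities g_1 and g_0, f is the density of the marginal covariate distribution, m is the conditional mean, and the representer is taken to have the standard density-ratio form.

```latex
\begin{align*}
E[\gamma(X)\, m(X)]
  &= \int \frac{g_1(x) - g_0(x)}{f(x)}\, m(x)\, f(x)\, dx \\
  &= \int m(x)\, g_1(x)\, dx - \int m(x)\, g_0(x)\, dx
   = \int m(x)\, d(G_1 - G_0)(x).
\end{align*}
```

The support condition ensures that f(x) > 0 wherever g_1 or g_0 is positive, so the ratio is well defined.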

Example 6.2. (Average Derivative) For a continuous scalar (treatment) variable D, the average derivative is given by , where denotes the partial derivative of m with respect to the continuous treatment D. Thus, we have with its Riesz representer given by , where denotes the conditional density function of D given X.
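The form of this representer follows from integration by parts. The sketch below uses assumed notation, with f(d | x) for the conditional density of D given X = x and the standard score-type representer, and it assumes that the boundary term m(d,x) f(d | x) vanishes at the endpoints of the support of D.

```latex
\begin{align*}
E[\partial_d m(D, X) \mid X = x]
  &= \int \partial_d m(d, x)\, f(d \mid x)\, dd \\
  &= - \int m(d, x)\, \partial_d f(d \mid x)\, dd \\
  &= E\!\left[ -\frac{\partial_d f(D \mid x)}{f(D \mid x)}\, m(D, x) \,\Big|\, X = x \right].
\end{align*}
```

Averaging over X then yields the representer property with the negative score of the conditional density as the weight.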

Appendix A: Proofs of Main Results

Proof of Theorem 3.1. Since the estimated least favorable direction is based on observations that are independent of , we may apply Lemma 2 of Ray and van der Vaart (2020). It suffices to handle the posterior distribution with set equal to a deterministic function . By Lemma 1 of Castillo and Rousseau (2015), it is sufficient to show that the Laplace transform given in (A.1) satisfies

The Laplace transform can thus be written as

Next, we plug this into the exponential part in the definition of , which then gives

Further, observe that and by the definition of the efficient influence function given in ( 2.4 ). As we insert these in the previous expression for , we obtain for all t in a sufficiently small neighborhood around zero and uniformly for :

Proof of Theorem 3.2. It is sufficient to show that , where and . We make use of the decomposition

Proof of Corollary 3.1. The weak convergence of the Bayesian point estimator follows directly from our asymptotic characterization of the posterior and the argmax theorem; see the proof of Theorem 10.8 in van der Vaart (1998). The corrected Bayesian credible set satisfies for any . In particular, we have

Proof of Proposition 4.1. Note that is based on an auxiliary sample, and hence, we can treat below as a deterministic function denoted by satisfying the rate restrictions and . Regarding the conditional mean functions, we consider the set , where for and some constant :

We first verify Assumption 2 with . The posterior contraction rate is shown in our Lemma C.3. Regarding the product rate condition, that is, for , this is satisfied if , which can be rewritten as .

We now verify Assumption 3 . It is sufficient to deal with the resulting empirical process . Note that the Cauchy–Schwarz inequality implies

Finally, it remains to verify Assumption 4 . By the univariate Gaussian tail bound, the prior mass of the set satisfies . Also, the Kullback–Leibler neighborhood around has prior probability at least . We may thus apply Lemma 4 of Ray and van der Vaart ( 2020 ), which yields , as imposed in Assumption 4 (i).

Regarding Assumption 4 (ii), we need to show that the posterior probability of the shifted version of tends to one. Considering itself, the first set in the intersection of (A.6) that defines is seen to have posterior probability tending to one by the result in (II) of Lemma C.3, combined with the univariate Gaussian tail probability bound

Appendix B: Key Lemmas

Proof. We start with the following decomposition:

The next lemma verifies the prior stability condition under our double robust smoothness conditions.

Lemma B.2. Let Assumptions 1–4 hold. Then we have

Proof. Since is based on an auxiliary sample, it is sufficient to consider deterministic functions with the same rates of convergence as . Denote the corresponding propensity score by . By Assumption 4, we have and

Considering the log likelihood ratio of two normal densities together with the constraint , it is shown on page 3015 of Ray and van der Vaart ( 2020 ) that

We complete the proof by establishing the following result: