An Improved Fault Diagnosis Method and Its Application in Compound Fault Diagnosis for Paper Delivery Structure Coupling

Abstract

The coupling torque signal contains essential information about the operating condition of the motor-follower mechanical system. Artificial intelligence methods have been effective in diagnosing coupling faults. However, due to internal and external excitations caused by the operating environment and neighboring components, existing coupling fault diagnosis models often suffer from poor feature extraction, low recognition accuracy, and weak generalization performance. To overcome these limitations, an empirical mode decomposition (EMD)–convolutional neural network (CNN)–bidirectional long short-term memory (BiLSTM) model enhanced by a multihead self-attention mechanism is proposed. EMD is first applied to extract spatial features from the data. Next, the multihead self-attention mechanism captures essential internal characteristics. CNN is then used for further spatial feature extraction, and BiLSTM is employed to extract temporal features, enabling effective spatiotemporal feature fusion. Torque and vibration signals of three typical coupling faults (loosening, rough contact, and misalignment) are collected using a motor-coupling-paper handling mechanism testbed, and the proposed method is compared with traditional models, including long short-term memory (LSTM), CNN, and Random Forest. The proposed method improves the average F1-Score by 31.43, 40.07, and 31.71 percentage points, respectively, indicating superior noise robustness and better generalization. Furthermore, the dimensionality reduction results of each network layer are visualized using the t-distributed stochastic neighbor embedding (t-SNE) method, which reveals clear feature patterns and supports the model’s reliability and effectiveness.

1. Introduction

As a vital transmission component in mechanical systems, the coupling plays a key role in connecting and transmitting torque, enabling the active rotary shaft in different mechanisms to drive the passive rotary shaft for synchronized rotary motion. Among various types, the plum-blossom flexible coupling has been widely used in high-torque and high-speed operations due to its compact design, high transmission capacity, and other advantages. It has been applied in fields such as drive systems, aviation systems, and printing systems, providing flexibility and high efficiency for modern engineering [1, 2]. In a printing system, the paper feeder feeds paper sheets from the paper stack into the printing device one at a time. During this process, power is transmitted through a plum-blossom flexible coupling that connects the motor to the bearings of the delivery mechanism, ensuring the continuous operation of the system.

Plum-blossom flexible couplings in paper delivery mechanisms are subject to high-speed rotation and complex loads, making them vulnerable to failure modes such as coupling misalignment [3], loosening of expansion bolts [4], and rough contact [5]. These issues threaten not only the coupling’s performance but also the stability of the entire system. Fault diagnosis is crucial to ensure system reliability [6]. For example, in the study of misalignment faults, Xuan et al. [7] observed that vibration signals caused by misalignment in plum-blossom flexible couplings on ship hydraulic pumps did not show significant line spectrum frequencies at motor rotational frequencies. Similarly, Dos [8], Hujare and Karnik [9], Chandra and Sekhar [10], and Guo et al. [11] emphasized that prolonged misalignment can impair system performance and cause severe failures, with the vibration spectrum significantly changing over time. On the topic of bolt loosening, Jiang et al. [12] focused on the dynamic effects of bolt loosening and microsliding on threaded and curved surfaces. Zhang et al. [13] investigated loosening in coupling connections used in cranes, highlighting the critical importance of connection reliability for safe operation. In addition, Li [5] discovered that coupling vibration signals exhibit nonlinearity in the early stages of faults, making accurate fault modeling and identification challenging.

The long short-term memory (LSTM) neural network, an advanced variant of the recurrent neural network (RNN), can handle long sequences while mitigating issues such as gradient vanishing and gradient explosion [14–16]. Compared with other commonly used machine learning models, such as GRU [17] and XGBoost [18], LSTM offers several advantages in processing faulty time-series data. It can manage variable-length sequences and is well suited for fault diagnosis tasks across different devices and systems [19, 20]. However, as research on LSTM advanced, scholars identified several limitations, including insufficient forgetting capability and the need for larger training datasets [21]. To address these limitations, studies introduced the bidirectional long short-term memory (BiLSTM) neural network, which improves fault diagnosis performance by integrating forward and backward sequence information, thereby enhancing the model’s ability to capture long-term dependencies [22–24]. For instance, Song et al. [25] applied a convolutional neural network (CNN)–BiLSTM network as a pretrained model with limited training data for bearing fault diagnosis under varying operating conditions, achieving better detection accuracy. Compared with a single CNN model and a single LSTM model, the recognition accuracy and recall increased by 1.36% and 0.705%, while the F1-Score improved by 1.369% and 0.72%, respectively. In this study, to enhance the classification and recognition accuracy of coupling faults by mining data features, a feature extraction module was developed, with the BiLSTM neural network selected as the core training model.

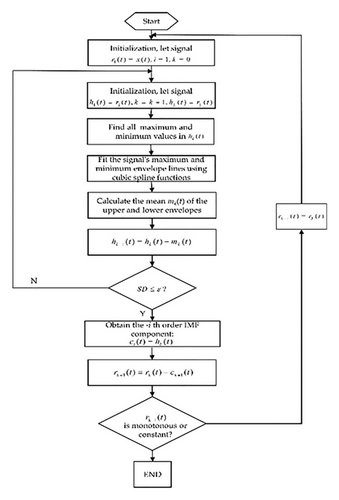

Effective decomposition of data signals can enhance model accuracy and improve feature representation. Liu [26] and Li et al. [6] used ensemble empirical mode decomposition (EEMD) to break down fault signals from reservoir gate opener couplings and wind turbine couplings, improving the model’s ability to diagnose weak fault signals. Jiao [27] combined the empirical mode decomposition (EMD) method with LSTM to enhance temporal feature extraction, resulting in higher accuracy than BP, RNN, and LSTM models without EMD processing. While variational mode decomposition (VMD) can provide more precise signal decomposition [28], it requires predefining the number of modes and adjusting penalty parameters [29], which adds complexity. In contrast, EMD is adaptive [30] and requires no preset modes, making it more suitable for complex and uncertain signal characteristics. EMD is also effective for handling the nonstationary and nonlinear signals commonly seen in mechanical systems, offering a reliable foundation for fault diagnosis in complex environments. Thus, EMD was chosen for signal decomposition in this study because it balances accuracy and practical deployment.

CNN is effective in capturing image features and patterns [31, 32] and also improves the accuracy of temporal models. By extracting spatial information through convolutional operations, CNN enhances the combined model, allowing it to perform both temporal and spatial feature extraction [33]. Fu et al. [34] used CNN to extract spatial features from time–frequency data obtained from BiLSTM. Using a parallel neural network, Fu’s approach outperformed traditional machine learning and deep learning methods in fault identification using datasets from Case Western Reserve University and Jiangnan University. Similarly, Kavianpour et al. [35] applied a CNN–BiLSTM model for earthquake prediction, effectively extracting spatial information with CNN.

Attention mechanisms are often integrated into deep learning models to enhance focus on relevant features during extraction and are widely applied in image processing [36] and other data-driven fields [37]. Xie et al. [38] used Attention–BidiRNN and Attention–BidiLSTM models, achieving accuracy improvements of 9.05% and 4.7%, respectively. Dong et al. [39] proposed a position encoding-based attention mechanism for predicting the future motion of ships in real sea environments.

This study first applies EMD to adaptively decompose various types of fault signals, extracting high-frequency, low-frequency, and trend information to maximize data feature representation of the target. Next, a multihead self-attention mechanism is introduced, enabling the model to automatically focus on targets with varying positional and angular features. Subsequently, CNN with a sliding convolution kernel is employed to extract spatial information from the data. Finally, the BiLSTM neural network is integrated to capture temporal information, ensuring precise fault identification.

2. Data Resource

2.1. Data Preprocessing

The quality of data collected during experiments directly affects the model’s performance and reliability. Several preprocessing measures were applied to minimize data errors and enhance the model’s robustness, ensuring effective feature extraction and strong generalization.

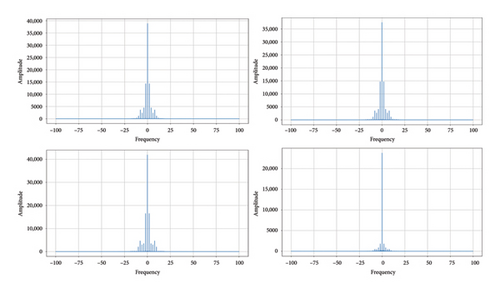

During the experiments, signal acquisition in industrial environments is often accompanied by noise and outliers, which can negatively affect feature extraction and model training. Therefore, noise inspection and processing of the collected torque signals are necessary to ensure effective model training and robustness. Figure 1 illustrates the frequency domain diagrams obtained through the Fourier transform for normal, loosening, rough contact, and misalignment conditions, with a time sampling interval of 0.005. From the figure, it can be observed that the main frequency components are concentrated in the low-frequency range, while the high-frequency range contains a certain level of noise. This high-frequency noise primarily originates from external environmental interference and sensor precision limitations.
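A minimal sketch of how a frequency-domain view like the one in Figure 1 can be computed from a sampled torque record. The sampling rate of 100 Hz is taken from Table 1; the synthetic signal, the helper name `amplitude_spectrum`, and its parameter names are illustrative assumptions, not the original processing code.

```python
import numpy as np

def amplitude_spectrum(signal, fs):
    """Return (frequencies in Hz, single-sided amplitude) of a real signal."""
    signal = np.asarray(signal, dtype=float)
    n = signal.size
    spectrum = np.fft.rfft(signal - signal.mean())   # remove the DC offset first
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    amplitude = 2.0 * np.abs(spectrum) / n           # approximate sinusoid amplitude
    return freqs, amplitude

# Synthetic stand-in for a torque record: a 5 Hz component plus weak noise.
fs = 100.0                       # Hz, assumed sampling rate
t = np.arange(1000) / fs         # 10 s of samples
rng = np.random.default_rng(0)
torque = 2.0 * np.sin(2 * np.pi * 5.0 * t) + 0.1 * rng.standard_normal(t.size)

freqs, amplitude = amplitude_spectrum(torque, fs)
dominant = freqs[np.argmax(amplitude)]
print(f"dominant frequency: {dominant:.1f} Hz")
```

In a plot of `amplitude` against `freqs`, the low-frequency peak would correspond to the main component and the small, broadly spread values at higher frequencies to the noise floor.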

An outlier filtering method was adopted to reduce the impact of noise on model training. Abnormal data were filtered out by setting reasonable upper and lower threshold limits, while samples with fluctuation characteristics were retained to verify the model’s robustness. Outliers, identified as samples with fluctuation amplitudes significantly exceeding the normal range and inconsistent with actual operating conditions, were removed. In contrast, samples with slight fluctuations were kept to improve the model’s adaptability to noise.
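The threshold rule described above can be sketched as follows. The window layout, the function name `filter_outliers`, and the numeric limits are hypothetical; the source does not state the actual threshold values.

```python
def filter_outliers(samples, lower, upper):
    """Keep only the samples whose values all lie within [lower, upper]."""
    return [s for s in samples if all(lower <= v <= upper for v in s)]

# Hypothetical torque windows (arbitrary units).
windows = [
    [1.0, 1.2, 0.9, 1.1],    # normal operation
    [1.0, 1.6, 0.5, 1.3],    # slight fluctuation, kept on purpose
    [1.0, 9.5, 1.1, 0.9],    # spike far outside the plausible range, dropped
]
kept = filter_outliers(windows, lower=0.0, upper=3.0)   # assumed limits
print(len(kept), "of", len(windows), "windows kept")
```

Windows with moderate fluctuation pass the filter unchanged, which matches the stated intent of keeping realistic variability in the training data while removing physically implausible readings.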

2.2. Data Details

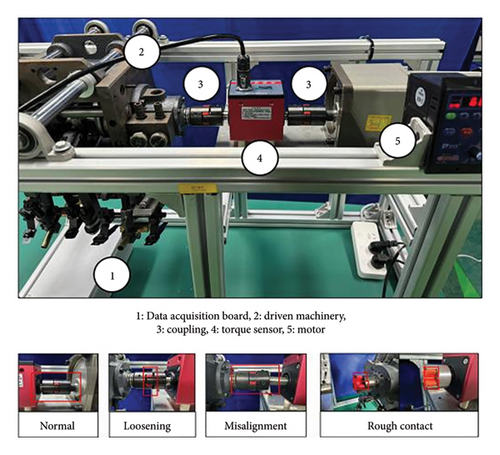

A motor-coupling-paper delivery mechanism test bed was constructed in this study to collect fault signal data. Torque was transmitted from the motor to the paper delivery mechanism via a flexible coupling, driving its operation. The motor had a power rating of 0.75 kW and a speed range of 0–1500 rpm. Data acquisition was carried out using a DYN-200 dynamic torque sensor with a precision of 0.1% and a rated capacity of ±10,000 Nm. The setup included two GFS-40X55 elastic couplings. A variable frequency drive adjusted the motor speed to simulate different operating conditions, and torque signals were collected under normal conditions and three fault conditions: loosening, misalignment, and rough contact. The loosening fault was simulated by reducing the clamping force of the coupling bolts, the misalignment fault by offsetting the axes of the drive and load shafts, and the rough contact fault by slightly wearing down the elastic elements inside the coupling to induce abnormal friction. Detailed descriptions of the faults and their parameters are provided in Table 1 to ensure the controllability and reproducibility of the experimental conditions. The experimental platform setup and specific fault details are illustrated in Figure 2.

| Fault type | Fault description | Fault parameters | Sampling frequency (Hz) | Motor speed (rpm) |
|---|---|---|---|---|
| Normal | Coupling installed properly without any faults | Coupling assembled following standard procedures with axial alignment deviation controlled within 0.05 mm | 100 | 595 |
| Loosening | Insufficient clamping force causing loose connection | Fastening bolts loosened by 1/2 turn, with a looseness gap of about 2 mm | 100 | 595 |
| Misalignment | Axial misalignment on both sides of the coupling | Axial offset of 2 mm between the drive and load shafts, with an offset angle of 1.5° | 100 | 595 |
| Rough contact | Wear on the elastic elements during coupling operation | Wear depth of elastic elements inside the coupling is 0.5 mm | 100 | 595 |

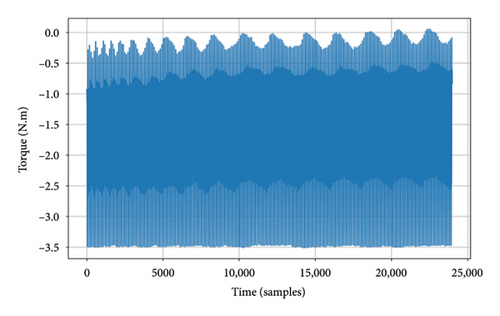

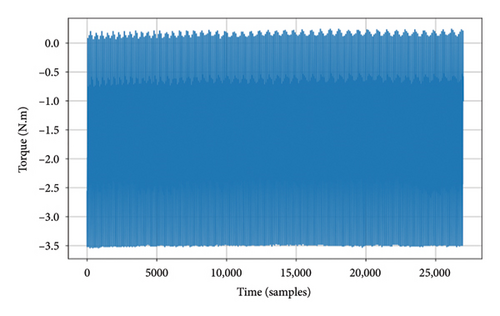

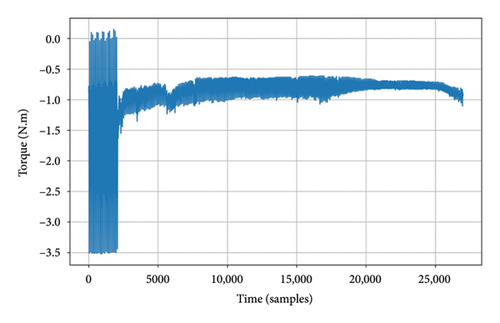

The sampling frequency of the measured data is 100 Hz, and the rotational speed is 595 revolutions per minute (rpm). The measured torque data for the normal and faulty coupling conditions are shown in Figure 3.

As shown in Figure 3, the torque signals exhibit distinct characteristics under normal and different fault conditions. Under normal conditions, the torque signals display approximately periodic fluctuations, with consistent intervals between peaks and valleys and no noticeable irregularities. In the case of loosening faults, the torque signal loses its periodicity, and the intervals between peaks and valleys vary significantly, indicating unstable torque transmission caused by the loosening of the coupling. For rough contact faults, the torque signal shows a significant reduction in the intervals between peaks and valleys, resulting in a shorter fluctuation period. This reflects an increase in friction resistance within the coupling. In misalignment faults, the torque signal reveals a sudden decrease in torque amplitude during the measurement process, along with abnormal fluctuations. This indicates an unbalanced load between the rotating components due to shaft misalignment, leading to additional friction and vibration during operation.

3. Methods

3.1. Data Standardization

3.2. EMD

3.3. Fault Diagnosis Classification Model

3.3.1. BiLSTM Neural Network Model

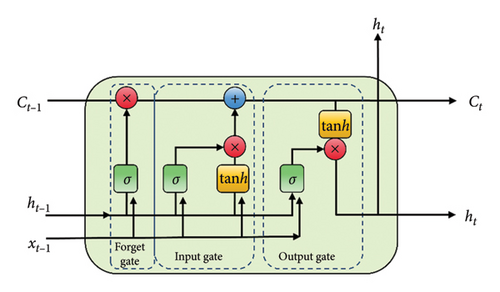

LSTM handles long-term dependencies in sequential data, maintains long-term memory, increases the stability of backpropagation, and reduces the problems of vanishing and exploding gradients during training [41]. Its structure is shown in Figure 5.

Its cell structure mainly consists of three gating mechanisms and one cell state. The gating mechanisms are the input gate $i_t$, the output gate $o_t$, and the forget gate $f_t$. At time $t-1$, the LSTM cell receives the inputs $C_{t-1}$, $h_{t-1}$, and $x_{t-1}$, while at time $t$ it outputs $C_t$, $h_t$, and $x_t$.
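For reference, the gates are usually written as follows. This is the standard LSTM formulation, given here as an assumption because the section does not reproduce the equations. The weight matrices $W$ and biases $b$ are generic symbols, $\sigma$ is the logistic sigmoid, $\odot$ is element-wise multiplication, and $[h_{t-1}, x_t]$ denotes concatenation of the previous hidden state with the current input.

$$
\begin{aligned}
f_t &= \sigma\left(W_f\,[h_{t-1}, x_t] + b_f\right) \\
i_t &= \sigma\left(W_i\,[h_{t-1}, x_t] + b_i\right) \\
\tilde{C}_t &= \tanh\left(W_C\,[h_{t-1}, x_t] + b_C\right) \\
C_t &= f_t \odot C_{t-1} + i_t \odot \tilde{C}_t \\
o_t &= \sigma\left(W_o\,[h_{t-1}, x_t] + b_o\right) \\
h_t &= o_t \odot \tanh\left(C_t\right)
\end{aligned}
$$

The forget gate $f_t$ scales the previous cell state, the input gate $i_t$ admits the candidate state $\tilde{C}_t$, and the output gate $o_t$ decides how much of the updated cell state is exposed as $h_t$.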

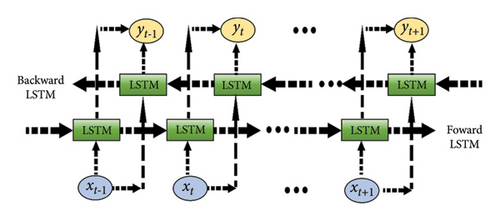

The BiLSTM neural network extends the capabilities of the LSTM by processing sequential data bidirectionally, integrating both forward and backward information. This allows for more effective capture of long-term dependencies and local features within the data, enhancing the accuracy of fault differentiation [42]. The structure of the BiLSTM neural network model is shown in Figure 6.

Here, the LSTM unit receives $x_{t-1}$ as input at time $t-1$ and likewise receives $x_t$ and $x_{t+1}$ as input at times $t$ and $t+1$, respectively. The final output at time $t-1$ is denoted by $y_{t-1}$; similarly, $y_t$ and $y_{t+1}$ are the final outputs at times $t$ and $t+1$. For each input, a forward and a backward LSTM unit are used for feature extraction and processing of the information.
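The bidirectional idea can be illustrated with a deliberately simplified sketch. The recurrence below is a toy exponential-smoothing cell, not an LSTM, and the names `run_direction` and `bidirectional` are hypothetical; the point is only how the output at each time step pairs a state built from the past with a state built from the future.

```python
def run_direction(xs, decay=0.5):
    """Toy recurrent pass: each state mixes the previous state with the input."""
    state, states = 0.0, []
    for x in xs:
        state = decay * state + (1.0 - decay) * x
        states.append(state)
    return states

def bidirectional(xs):
    """Pair each time step's forward state with its backward state."""
    forward = run_direction(xs)
    backward = run_direction(xs[::-1])[::-1]   # process reversed, then re-align
    return list(zip(forward, backward))

outputs = bidirectional([1.0, 0.0, 0.0, 4.0])
for t, (fwd, bwd) in enumerate(outputs):
    print(t, fwd, bwd)
```

At the first time step the forward state has seen only the first input, while the backward state already carries information about the large value at the end of the sequence. A BiLSTM exploits the same asymmetry with learned gates in place of the fixed decay.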

3.3.2. CNN

3.3.3. Multihead Self Attention Mechanism

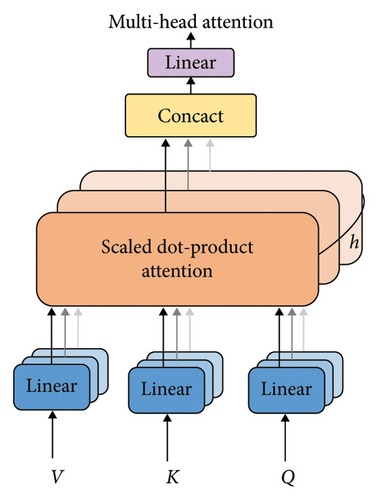

The attention mechanism dynamically adjusts the weights of elements in the input sequence [44], enabling the model to focus on the most critical information when processing sequential data. In the proposed EMD–CNN–BiLSTM model, the multihead self-attention mechanism is employed to extract fault features across different time scales and frequency ranges, thereby enhancing the model’s ability to understand complex signals. Compared with single-head attention, the multihead self-attention mechanism captures diverse features through multiple parallel “heads,” ensuring that the model can identify global trends while extracting local details [45]. For instance, in fault diagnosis, misalignment faults may only exhibit prominent characteristics within specific frequency ranges or time windows. The multihead self-attention mechanism can simultaneously focus on features at different scales, improving the detection capability for various types of faults. By incorporating the multihead self-attention mechanism into the BiLSTM network, the model can more accurately learn the dynamic characteristics of signals over time, reduce the risk of overfitting, and enhance generalization. Figure 7 illustrates the workflow of the multihead self-attention mechanism.
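A minimal NumPy sketch of multihead scaled dot-product self-attention, to make the parallel "heads" concrete. Only the use of four heads follows the paper; the sequence length, model width, random weights, and the function name `multihead_self_attention` are illustrative assumptions, and the per-head width is simply the model width divided by the number of heads.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)      # subtract max for stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def multihead_self_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """x: (seq_len, d_model); each weight matrix: (d_model, d_model)."""
    seq_len, d_model = x.shape
    d_head = d_model // num_heads

    def split(m):   # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return m.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    q, k, v = split(x @ w_q), split(x @ w_k), split(x @ w_v)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)   # (heads, seq, seq)
    weights = softmax(scores, axis=-1)                    # one map per head
    heads = weights @ v                                   # (heads, seq, d_head)
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ w_o, weights

rng = np.random.default_rng(0)
seq_len, d_model, num_heads = 6, 8, 4            # toy sizes, 4 heads as in the paper
x = rng.standard_normal((seq_len, d_model))
w_q, w_k, w_v, w_o = (rng.standard_normal((d_model, d_model)) * 0.1 for _ in range(4))
out, weights = multihead_self_attention(x, w_q, w_k, w_v, w_o, num_heads)
print(out.shape, weights.shape)
```

Each head produces its own attention map over the sequence, so different heads are free to weight different time steps, which is the property the paragraph above relies on for capturing features at several scales.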

3.3.4. Optimized EMD–CNN–BiLSTM Model With Multihead Self-Attention Mechanism

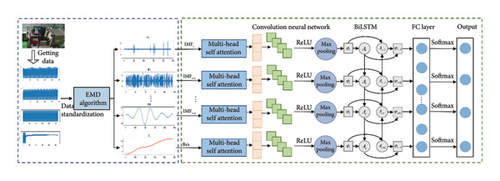

The EMD–CNN–BiLSTM fault diagnosis model optimized with a multihead self-attention mechanism consists of a data decomposition module (EMD), a complex mechanism extraction module (multihead self-attention mechanism), a fault feature extraction module (CNN), a temporal dependency extraction module (BiLSTM neural network), an overfitting prevention module (L2 regularization term and gradient clipping), and a multiclass output module (Softmax function), among others.

The overall process is as follows. First, the raw torque data are decomposed into multiple IMFs using EMD, isolating key features while reducing irrelevant noise to provide cleaner and more information-rich input for subsequent processing. Next, the data are fed into the multihead self-attention mechanism, where multiple attention heads capture patterns across different frequencies or time scales, enabling the extraction of both fine-grained local features and global trends. Afterward, the data pass through a series of 1D convolutional layers and max-pooling layers. The convolutional layers, by scanning with kernels, detect patterns such as peaks, trends, and abrupt changes, while the max-pooling layers reduce data dimensionality and eliminate redundant information, thereby improving the model’s generalization ability. Following local feature extraction, the data are input into the LSTM network. As a sequential model, LSTM is well-suited for learning dependencies in time-series data. By employing a bidirectional structure, it considers both past and future context, allowing for more accurate capture of dynamic changes. To prevent overfitting, the model incorporates L2 regularization and Dropout. L2 regularization enhances generalization by penalizing large weights, while Dropout randomly deactivates a fraction of neurons, increasing model robustness. Finally, the extracted features are passed to the Softmax layer, which converts them into a probability distribution over the fault categories. The category with the highest probability is selected as the prediction result. For example, if the input data correspond to a “misalignment” fault, the Softmax layer assigns a high probability to that category and the model outputs “misalignment” as the predicted fault type. Figure 8 shows the overall structure of the model.
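The final classification step can be sketched as below. The class order in `FAULT_CLASSES` and the example scores are hypothetical; only the softmax-then-argmax logic reflects the description above.

```python
import math

# Assumed class order; the actual label encoding is not given in the source.
FAULT_CLASSES = ["normal", "loosening", "rough contact", "misalignment"]

def softmax(logits):
    """Convert raw scores into a probability distribution."""
    m = max(logits)                              # subtract max for stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def predict(logits):
    """Return the most probable fault class and the full distribution."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return FAULT_CLASSES[best], probs

label, probs = predict([0.2, 0.1, 0.5, 3.0])     # hypothetical final-layer scores
print(label, [round(p, 3) for p in probs])
```

Because the last score is much larger than the others, most of the probability mass lands on the fourth class and the predicted label is "misalignment".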

3.4. Performance Index

4. Fault Detection Results Analysis

The multihead self-attention mechanism-optimized EMD–CNN–BiLSTM model is trained, validated, and tested on the coupling operation data to assess whether the model design is sound.

4.1. Enhanced Representation of Data Features

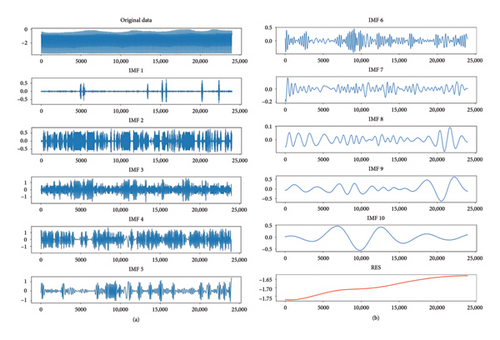

The original torque data is highly nonlinear, volatile, and unstable, with unclear features, making it difficult for model construction. To overcome this, EMD is used to decompose the data, separating simple fluctuations from complex signals and extracting features at different time scales. This enhances the data’s expressiveness, increases input diversity, and improves prediction accuracy.

Using normal data as an example, the data were decomposed into 10 IMFs and 1 residual (Res) component. IMF1 represents high-frequency noise; IMF2–IMF5 capture mid-frequency oscillations, reflecting the data’s dynamics at a medium time scale; and IMF6–IMF9 highlight low-frequency oscillations, showing slow trends and basic data features. IMF10 represents the overall trend, while Res captures nonperiodic residual features. As shown in Figure 9, the components become progressively smoother from the high-frequency IMFs to the residual, revealing consistent change patterns. This enables more effective feature extraction and improves the accuracy of downstream models such as LSTM neural networks.
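The decomposition into IMFs and a residual can be sketched with a simplified sifting loop. This is an illustration under stated assumptions, not the implementation used in the study: envelopes are built by linear interpolation instead of the cubic splines normally used in EMD, each IMF uses a fixed number of sifting passes instead of a convergence criterion, and the function names and the synthetic two-tone signal are hypothetical.

```python
import numpy as np

def _extrema(x):
    """Indices of strict local maxima and minima of a 1-D array."""
    d = np.diff(x)
    maxima = np.where((d[:-1] > 0) & (d[1:] < 0))[0] + 1
    minima = np.where((d[:-1] < 0) & (d[1:] > 0))[0] + 1
    return maxima, minima

def _sift(x, n_sift=10):
    """Extract one IMF candidate by repeatedly removing the envelope mean."""
    h = x.copy()
    t = np.arange(len(x))
    for _ in range(n_sift):
        maxima, minima = _extrema(h)
        if len(maxima) < 2 or len(minima) < 2:
            break
        upper = np.interp(t, maxima, h[maxima])   # linear envelope (simplification)
        lower = np.interp(t, minima, h[minima])
        h = h - (upper + lower) / 2.0
    return h

def emd(x, max_imfs=10):
    """Return (list of IMFs, residual) such that sum(IMFs) + residual == x."""
    residual = np.asarray(x, dtype=float).copy()
    imfs = []
    for _ in range(max_imfs):
        maxima, minima = _extrema(residual)
        if len(maxima) < 2 or len(minima) < 2:    # too few extrema left: stop
            break
        imf = _sift(residual)
        imfs.append(imf)
        residual = residual - imf
    return imfs, residual

# Synthetic stand-in for a torque record: a fast and a slow oscillation.
fs = 100
t = np.arange(200) / fs
signal = np.sin(2 * np.pi * 8 * t) + np.sin(2 * np.pi * 1 * t)

imfs, residual = emd(signal, max_imfs=10)
reconstructed = np.sum(imfs, axis=0) + residual
print(len(imfs), "IMFs, max reconstruction error:",
      float(np.max(np.abs(reconstructed - signal))))
```

The first IMF carries the fastest oscillation and later components are progressively slower, which mirrors the high-, mid-, and low-frequency grouping described above. Because each IMF is subtracted from the running residual, the components always sum back to the original signal.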

4.2. Validation of Fault Detection Model Effectiveness

4.2.1. Model Parameters

The experiments were run in a TensorFlow 2.10.0 deep learning environment, with the network built in Keras 2.10.0 using the Sequential model constructor. The network was trained iteratively to find the optimal hyperparameters, and the highest precision was obtained with the parameter settings listed in Table 2. Because the network structure is relatively complex, L2 regularization and Dropout were used to prevent overfitting.

| Network structure | Parameter settings |
|---|---|
| Multihead self-attention mechanism | Heads = 4, Key_dim = 256 |
| Convolutional layer | Filters = 128, Kernel_size = 3 |
| Maximum pooling layer | Pool_size = 1 |
| BiLSTM layer | Neurons = 128 |
| Dropout | 0.2 |
| Fully connected layer | |
| Final fully connected layer | Activation = Softmax |

As shown in Table 2, the model parameters are set to optimize performance. Four attention heads and 256-dimensional keys were used to balance feature extraction and computational efficiency. A lower number of heads limits feature diversity, while a higher key dimension increases complexity [46]. The convolutional layer has 128 kernels with a kernel size of 3, effective for capturing local patterns and maintaining generalization, as shown in recent studies [47]. The ReLU activation function introduces nonlinearity, speeds up convergence, and mitigates the vanishing gradient problem [48]. L2 regularization is applied with a factor of 0.1 to prevent overfitting and maintain generalization [49]. Max-pooling layers reduce dimensionality while preserving key features, with a pool size of 1 to minimize complexity for small datasets [50]. BiLSTM with 128 neurons captures temporal dependencies, and the bidirectional structure improves dynamic signal modeling. To prevent overfitting, a 0.2 dropout rate is used, which is effective for small to medium datasets without slowing convergence [51].

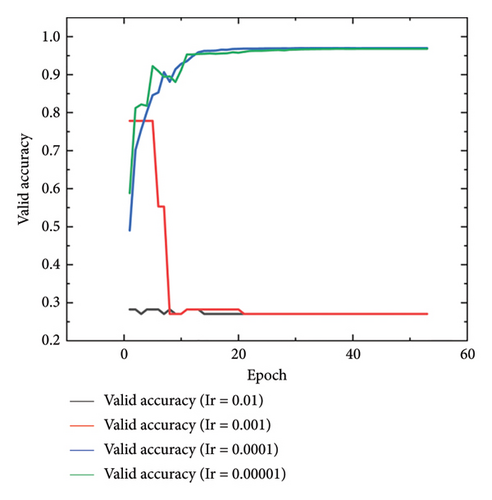

For network training, 70% of the data were used for training, 10% for validation, and 20% for testing. The Adam optimizer was employed for LSTM training, and the optimal batch size of 32 was selected based on experimental comparisons (Table 3), which achieved the best performance across all metrics with a 96.98% accuracy and an average training time of 40 s per epoch. Smaller batch sizes (16) increased training time and reduced performance, while larger batch sizes (64 and 128) led to poorer results, with an F1-Score of only 66.62% for 64, showing that larger batches hindered feature extraction and generalization. The batch size of 32 struck the best balance between performance and efficiency. A dynamic learning rate strategy, starting at 0.0001 and decreasing by a factor of 0.1 every 10 epochs, was used to improve convergence and avoid local minima. Experimental results (Figure 10) showed that a starting learning rate of 0.0001 yielded the best validation accuracy, while higher rates (0.01 and 0.001) caused instability, and a lower rate (0.00001) slowed convergence. To prevent overfitting, early stopping was applied based on validation loss.
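The data split and learning rate schedule described above can be sketched as follows. The helper names are hypothetical, the split is shown as contiguous index ranges because the source does not say whether samples were shuffled, and "decreasing by a factor of 0.1" is read here as multiplying the rate by 0.1 every 10 epochs.

```python
def split_indices(n_samples, train=0.7, val=0.1):
    """Contiguous train/validation/test split; the remainder is the test set."""
    n_train = round(n_samples * train)
    n_val = round(n_samples * val)
    idx = list(range(n_samples))
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]

def step_decay(epoch, initial_lr=1e-4, factor=0.1, step=10):
    """Learning rate multiplied by `factor` once every `step` epochs."""
    return initial_lr * factor ** (epoch // step)

train_idx, val_idx, test_idx = split_indices(1000)
print(len(train_idx), len(val_idx), len(test_idx))
for epoch in (0, 9, 10, 25):
    print(epoch, step_decay(epoch))
```

A function with the signature of `step_decay` is the kind of schedule that can be passed to Keras through its `LearningRateScheduler` callback, although the source does not state how the schedule was actually wired into training.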

| Batch size | Accuracy (%) | Recall (%) | F1-Score (%) | Training time (s/epoch) |
|---|---|---|---|---|
| 16 | 82.93 | 82.41 | 82.73 | 54 |
| **32** | **96.98** | **96.98** | **96.98** | **40** |
| 64 | 78.06 | 75.00 | 66.62 | 31 |
| 128 | 85.61 | 83.53 | 83.51 | 35 |

- Note: The bold values show that the model performs best with a Batch size of 32.

4.2.2. Model Test Results

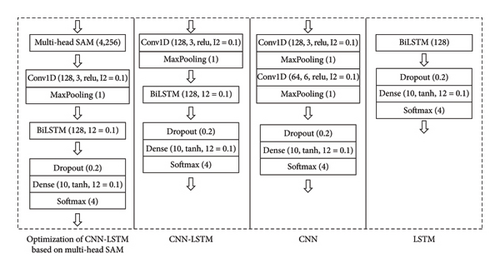

To confirm the validity of the proposed model, its test results are compared with those of a conventional CNN model, an LSTM model with the same number of neurons, and an EMD-optimized CNN–LSTM model. The parameters of each network are shown in Figure 11.

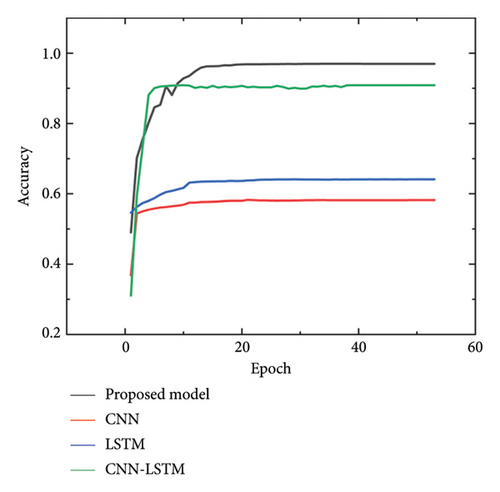

The results from multiple experiments show that the model begins to converge after 54 iterations, with early stopping observed. The iteration accuracy of CNN, LSTM, EMD–CNN–LSTM, and the EMD–CNN–LSTM model optimized with a multihead self-attention mechanism on the validation set is visualized to assess the training effectiveness, as shown in Figure 12.

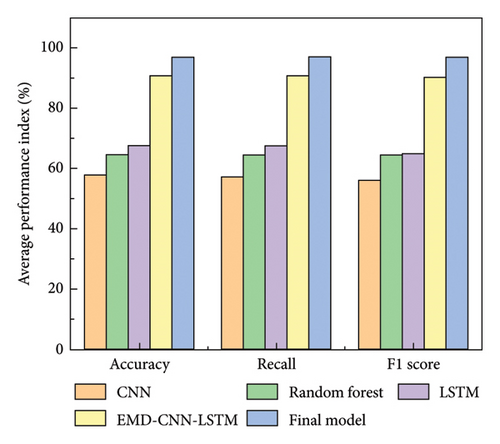

Figure 12 shows that the proposed multihead self-attention mechanism-optimized EMD–CNN–LSTM model achieves the highest accuracy on the validation set. It extracts feature information from torque and other data more effectively and provides a feasible, high-precision solution for fault diagnosis. To further validate its effectiveness, and to rule out the possibility that the high validation accuracy is a result of overfitting, the proposed model was tested three times on the test set. In addition, because models such as Random Forest [52] have demonstrated significant advantages in fault detection in current research, Random Forest was included for comparison. The results of the three tests on the test set, using the best weights obtained after early stopping for each model, are presented in Table 4 and Figure 13.

| Test | Metric | Final model | CNN | LSTM | EMD–CNN–LSTM | Random Forest |
|---|---|---|---|---|---|---|
| 1 | Accuracy | **0.9698** | 0.5894 | 0.6454 | 0.9088 | 0.6445 |
| 1 | Recall | **0.9698** | 0.5894 | 0.6454 | 0.9088 | 0.6445 |
| 1 | F1-Score | **0.9698** | 0.5536 | 0.6390 | 0.9084 | 0.6432 |
| 2 | Accuracy | **0.9667** | 0.5725 | 0.6978 | 0.9089 | 0.6463 |
| 2 | Recall | **0.9663** | 0.5574 | 0.6955 | 0.9007 | 0.6463 |
| 2 | F1-Score | **0.9637** | 0.5594 | 0.6195 | 0.9003 | 0.6465 |
| 3 | Accuracy | **0.9596** | 0.5715 | 0.6856 | 0.9044 | 0.6455 |
| 3 | Recall | **0.9536** | 0.5715 | 0.6856 | 0.9117 | 0.6455 |
| 3 | F1-Score | **0.9535** | 0.5718 | 0.6856 | 0.8993 | 0.6458 |
| Average | Accuracy | **0.9654** | 0.5778 | 0.6763 | 0.9074 | 0.6454 |
| Average | Recall | **0.9632** | 0.5728 | 0.6755 | 0.9071 | 0.6454 |
| Average | F1-Score | **0.9623** | 0.5616 | 0.6480 | 0.9027 | 0.6452 |

- Note: The bold values reflect the fact that the final model has the best classification results.

As shown in Table 4 and Figure 13, the proposed model outperforms the others across all key metrics. It achieves the highest average Accuracy of 0.9654, significantly surpassing EMD–CNN–LSTM (0.9074), LSTM (0.6763), Random Forest (0.6454), and CNN (0.5778). The model also improves average Recall, outperforming CNN by 0.3904, LSTM by 0.2877, EMD–CNN–LSTM by 0.0561, and Random Forest by 0.3178. For the F1-Score, it reaches 0.9623, well above CNN (0.5616), LSTM (0.6480), EMD–CNN–LSTM (0.9027), and Random Forest (0.6452). The bar chart shows that CNN performs the worst across all metrics, with average values below 60%. Random Forest is slightly better at around 64% but remains limited by its reliance on handcrafted features. LSTM improves to around 67% for Accuracy and Recall, but its F1-Score is lower. EMD–CNN–LSTM, combining EMD preprocessing and CNN–LSTM, boosts all metrics above 90%. The final model excels, achieving over 96% in Accuracy, Recall, and F1-Score, thanks to the global feature modeling of the multihead self-attention mechanism and the optimizations from the BiLSTM and multiscale feature fusion module. Overall, the model’s performance improves with increasing architectural complexity and enhanced feature extraction.
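The averages and gains quoted from Table 4 can be checked with a few lines of arithmetic. The F1-Score values below are copied from the three test runs in Table 4; the variable names are illustrative, and the gains are expressed in percentage points.

```python
from statistics import mean

# F1-Scores of tests 1-3, copied from Table 4.
f1_runs = {
    "Final model": [0.9698, 0.9637, 0.9535],
    "CNN": [0.5536, 0.5594, 0.5718],
    "LSTM": [0.6390, 0.6195, 0.6856],
    "EMD-CNN-LSTM": [0.9084, 0.9003, 0.8993],
    "Random Forest": [0.6432, 0.6465, 0.6458],
}

avg = {name: round(mean(vals), 4) for name, vals in f1_runs.items()}
gain = {
    name: round((avg["Final model"] - a) * 100, 2)   # percentage points
    for name, a in avg.items()
    if name != "Final model"
}
print(avg)
print(gain)
```

The resulting gains over LSTM, CNN, and Random Forest are 31.43, 40.07, and 31.71 percentage points, which agrees with the figures reported in the abstract.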

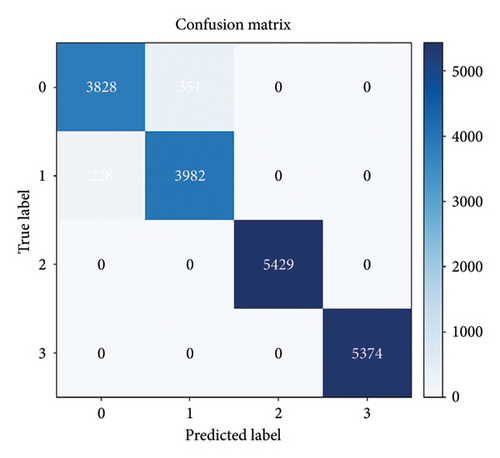

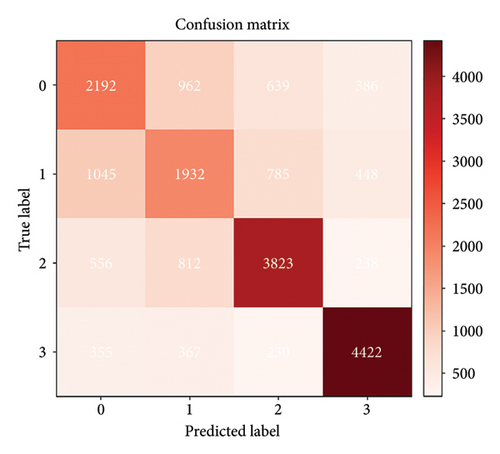

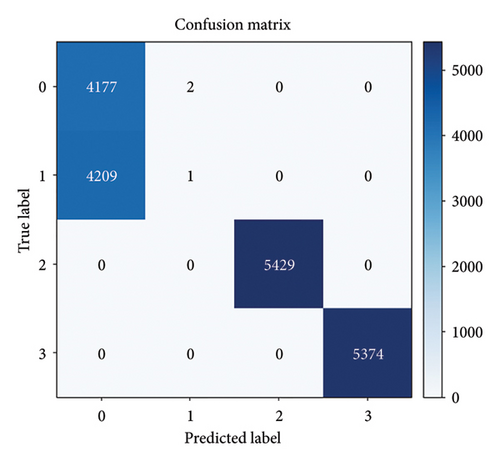

A confusion matrix was used to determine which faults were misclassified by the proposed model, providing a visualization of the fault detection results. The confusion matrices of the multihead self-attention mechanism-optimized EMD–CNN–LSTM neural network and of the Random Forest classifier are shown in Figure 14.

As shown in Figure 14, the proposed model outperforms the traditional Random Forest model by more effectively extracting data features and maximizing the identification of distinctive characteristics across different fault types. For the normal and loosening categories, where the feature differences are relatively small, both models exhibited some misclassification. The multihead self-attention mechanism-optimized EMD–CNN–LSTM neural network misclassified 228 normal samples as loosening and 351 loosening samples as normal. In contrast, the Random Forest model misclassified 1061 and 1774 samples, respectively. This indicates that the proposed model extracts features more comprehensively, resulting in significantly better performance than Random Forest.
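A short sketch of how Accuracy, Precision, Recall, and F1-Score follow from a confusion matrix. The matrix values here are invented for illustration and are not the counts in Figure 14; the function name is hypothetical, and the source does not state whether its reported scores are macro- or weighted-averaged across classes.

```python
def per_class_metrics(cm):
    """cm[i][j] = number of samples of true class i predicted as class j."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[i][i] for i in range(n)) / total
    metrics = []
    for i in range(n):
        tp = cm[i][i]
        fn = sum(cm[i]) - tp                          # missed samples of class i
        fp = sum(cm[r][i] for r in range(n)) - tp     # others predicted as class i
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        metrics.append((precision, recall, f1))
    return accuracy, metrics

# Hypothetical counts; rows and columns ordered as
# normal, loosening, rough contact, misalignment.
cm = [
    [90, 10, 0, 0],
    [15, 85, 0, 0],
    [0, 0, 100, 0],
    [0, 0, 0, 100],
]
accuracy, metrics = per_class_metrics(cm)
print(accuracy)
for name, m in zip(["normal", "loosening", "rough contact", "misalignment"], metrics):
    print(name, [round(v, 4) for v in m])
```

In this toy matrix all errors sit in the normal/loosening block, so only those two classes have precision and recall below 1, which is the same pattern of confusion the paragraph above describes.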

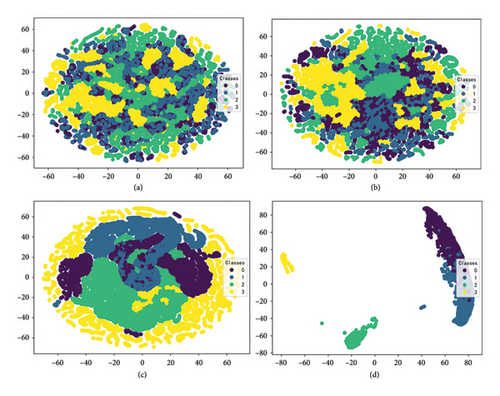

4.2.3. Layer-By-Layer Visualization of Model Classification

To better understand the model structure and training process, this study visualizes the feature extraction capability of each layer and analyzes the interlayer relationships in the deep network. The t-distributed stochastic neighbor embedding (t-SNE) algorithm is used for dimensionality reduction and visualization of the output features at each layer. As shown in Figure 15(a), the input data are initially cluttered with no clear classification. After passing through the attention mechanism layer (Figure 15(b)), some features are effectively separated and aggregated, but complex features with different time frequencies and scales remain mixed. These features are further refined in the CNN and LSTM layers. Figure 15(c) shows that local features are effectively extracted in the CNN and pooling layers, though some features are still not fully separated. Finally, after the LSTM layer (Figure 15(d)), fault features are clearly extracted and classified, with only a small overlap remaining. Overall, the model successfully learns fault features and performs effective diagnosis and classification.

5. Discussion

5.1. Analysis of Model Performance

5.1.1. Reasons for Performance Enhancement

From Table 4 and Figure 13, it is clear that our proposed method significantly outperforms other algorithms, achieving an F1-Score of 0.9623. This improvement is due to the multihead self-attention mechanism, which effectively captures both global dependencies and local features, integrating time and spatial information to enhance the model’s ability to recognize long-range dependencies. In comparison, CNN performs the worst because it relies solely on spatial feature extraction, making it less effective for complex time-series and multiclass fault data and sensitive to high-frequency noise. Random Forest performs better but is limited by fixed handcrafted features, hindering its ability to capture temporal dependencies. LSTM performs well for time modeling but struggles with spatial feature extraction and is affected by gradient issues. The EMD–CNN–LSTM model improves performance significantly, with EMD preprocessing extracting time–frequency features, and the combination of CNN and LSTM enhancing spatial-temporal feature modeling.

Figure 14 shows that the proposed model classifies the great majority of samples correctly in every fault category, most notably for rough contact faults, demonstrating its strong temporal and spatial feature extraction capabilities. It also outperforms Random Forest in diagnostic accuracy. For misalignment faults, the model achieves perfect classification, showcasing its powerful deep feature extraction ability.

5.1.2. Statistical Significance of Model Performance Improvement

An independent sample t-test was conducted to determine whether the performance differences between models were statistically significant, ensuring that the observed performance improvement of the model was not due to chance. The performance index of the final model was compared with those of other models, and the detailed results are presented in Table 5.

| Model | t-statistic | p value |
|---|---|---|
| CNN | 80.5611 | 8.9043 × 10⁻⁵ |
| LSTM | 31.7740 | 8.8625 × 10⁻⁴ |
| EMD–CNN–LSTM | 32.5942 | 2.9951 × 10⁻⁵ |
| Random Forest | 344.7944 | 7.5447 × 10⁻⁶ |

The t-test results in Table 5 show a significant difference between the final model and every other model: all p values are below 0.001, confirming that the performance improvement of the final model is statistically significant. For LSTM and EMD–CNN–LSTM, the p values are far below the conventional 0.05 significance level and the t-statistics exceed 30, indicating that the improvement brought by the final model is substantial. For Random Forest, the t-statistic and p value indicate an even more pronounced performance difference. Taken together with Table 4 and Figure 13, these results confirm the significant performance improvement of the final model.
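For reference, the independent-samples t-test can be sketched in a few lines of standard-library Python. This sketch assumes the pooled-variance (Student's) form with a two-sided p value; the paper does not state which variant was used, and the per-run F1 scores below are hypothetical placeholders, not the data behind Table 5.

```python
from math import exp, lgamma, log, sqrt
from statistics import mean, variance


def _betacf(a, b, x, max_iter=300, eps=1e-14):
    """Continued fraction for the incomplete beta function (Lentz's method)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even and odd steps of the continued-fraction recurrence.
        for aa in (m * (b - m) * x / ((qam + m2) * (a + m2)),
                   -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            delta = d * c
            h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def reg_inc_beta(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    bt = exp(lgamma(a + b) - lgamma(a) - lgamma(b)
             + a * log(x) + b * log(1.0 - x))
    if x < (a + 1.0) / (a + b + 2.0):
        return bt * _betacf(a, b, x) / a
    return 1.0 - bt * _betacf(b, a, 1.0 - x) / b


def two_sided_p(t, df):
    """Two-sided p value of Student's t distribution with df degrees of freedom."""
    return reg_inc_beta(df / 2.0, 0.5, df / (df + t * t))


def independent_t_test(sample_a, sample_b):
    """Pooled-variance t-test; returns (t, degrees of freedom, two-sided p)."""
    na, nb = len(sample_a), len(sample_b)
    df = na + nb - 2
    pooled = ((na - 1) * variance(sample_a)
              + (nb - 1) * variance(sample_b)) / df
    t = (mean(sample_a) - mean(sample_b)) / sqrt(pooled * (1.0 / na + 1.0 / nb))
    return t, df, two_sided_p(t, df)


# Hypothetical F1 scores from repeated training runs (illustration only).
proposed_f1 = [0.961, 0.964, 0.962]
baseline_f1 = [0.702, 0.695, 0.710]
t_stat, dof, p_value = independent_t_test(proposed_f1, baseline_f1)
```

With only a few runs per model the degrees of freedom are small, so a large t-statistic mainly reflects a large mean difference relative to a very small run-to-run variance. The sketch does not guard against samples with zero variance.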

5.2. Analysis of Different Data Decomposition Methods

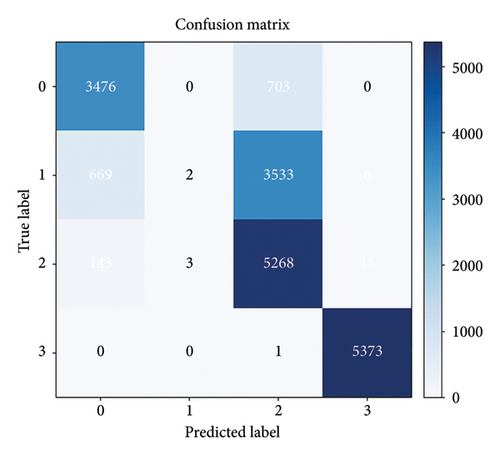

To further validate the impact of different signal decomposition methods on the proposed model, variational mode decomposition (VMD) was applied to the raw torque data in place of EMD and integrated into the model. Building on previous studies of VMD in fault diagnosis [53], the fault diagnosis performance was evaluated with the number of modes K set to 6 and 10, respectively. The confusion matrices for both cases are presented in Figure 16.

As shown in Figure 16, both VMD schemes with K = 6 and K = 10 failed to distinguish normal from loosening, resulting in significant misclassification. When K = 6, the test Accuracy was 0.7357, the Recall was 0.7006, and the F1-Score was 0.6314, with frequent confusion between fault types. Increasing the number of modes to K = 10 slightly improved Accuracy, Recall, and F1-Score to 0.7806, 0.7499, and 0.6663, but the issue of misclassification, especially between normal and loosening, persisted. Combined with Table 4, it can be seen that the use of the VMD method failed to effectively extract the fine-grained features crucial for distinguishing between different fault types. In contrast, EMD, through its adaptive decomposition approach, was able to better capture time–frequency features, thereby improving the overall performance of the model. Therefore, although VMD has certain advantages in some applications, its performance is clearly inferior to EMD in this task and failed to effectively improve fault diagnosis accuracy.
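To make the link between a confusion matrix and the reported metrics concrete, the sketch below derives Accuracy, Recall, and F1-Score from a matrix of counts. It assumes macro averaging over classes, which may differ from the averaging used in the paper, and the 4-class matrix is a hypothetical example of normal/loosening confusion, not the values in Figure 16.

```python
def classification_metrics(confusion):
    """Return (accuracy, macro recall, macro F1).

    confusion[i][j] is the number of samples whose true class is i and
    whose predicted class is j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    accuracy = sum(confusion[i][i] for i in range(n)) / total

    recalls, f1_scores = [], []
    for k in range(n):
        tp = confusion[k][k]
        fn = sum(confusion[k]) - tp                        # missed samples of class k
        fp = sum(confusion[i][k] for i in range(n)) - tp   # wrongly assigned to class k
        recall = tp / (tp + fn) if tp + fn else 0.0
        precision = tp / (tp + fp) if tp + fp else 0.0
        denom = precision + recall
        f1_scores.append(2 * precision * recall / denom if denom else 0.0)
        recalls.append(recall)

    return accuracy, sum(recalls) / n, sum(f1_scores) / n


# Hypothetical counts; class order: normal, loosening, rough contact, misalignment.
example = [
    [10, 10, 0, 0],   # normal often predicted as loosening
    [8, 12, 0, 0],    # loosening often predicted as normal
    [0, 0, 20, 0],
    [0, 0, 0, 20],
]
accuracy, macro_recall, macro_f1 = classification_metrics(example)
```

In a matrix like this, two well-separated classes keep overall accuracy moderate even though the normal and loosening rows are close to chance, which is why per-class figures are more informative than accuracy alone.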

5.3. Analysis of Model Robustness and Scenario Applications

In the field of fault diagnosis, avoiding noise interference and achieving robust fault detection is crucial for multiscenario applications. The proposed model was tested using the 12 kHz drive-end data from the CWRU dataset [54] under various operating conditions, including 0 HP, 1 HP, 2 HP, and 3 HP, to validate its robustness. The test results in Table 6 indicate that the model achieved accuracies of 97.50%, 95.56%, 94.67%, and 97.33% under these conditions. These findings demonstrate that the model is not only suitable for coupling monitoring of printing equipment but also widely applicable to fault monitoring of key components in other industrial devices, such as spindle drive systems and conveyor belt couplings in machine tools. In future applications, combining high-precision sensors, advanced filtering techniques, and the model’s real-time inference capabilities is expected to significantly enhance the intelligent diagnostic level of equipment in these scenarios.

| Operating condition (HP) | Accuracy (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|
| 0 | 97.50 | 97.14 | 97.14 |
| 1 | 95.56 | 94.81 | 94.93 |
| 2 | 94.67 | 94.50 | 94.23 |
| 3 | 97.33 | 97.40 | 97.28 |

5.4. Model Limitations and Improvement Directions

Despite achieving strong classification performance, the proposed EMD–CNN–BiLSTM model optimized with multihead self-attention has some limitations. It struggles in scenarios with minimal feature differences, such as distinguishing between the normal and loosening categories, where fine-grained feature extraction is insufficient and misclassification results. Moreover, the model has 439,078 parameters in total, most of which come from the multihead self-attention (68,864 parameters), the BiLSTM layers (263,168 parameters), and the convolutional layers (98,432 parameters). While these components improve feature extraction, they also increase computational cost and memory usage, which could limit deployment in resource-constrained environments, such as fault diagnosis on industrial production lines.
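The parameter budget quoted above can be broken down with simple arithmetic. The sketch below uses only the counts stated in the text; the 4-byte (float32) storage size is an assumption, and the estimate covers weight storage only, not activations or compute.

```python
# Parameter counts as stated in the text.
TOTAL_PARAMS = 439_078
COMPONENT_PARAMS = {
    "multihead self-attention": 68_864,
    "BiLSTM layers": 263_168,
    "convolutional layers": 98_432,
}

# Parameters not attributed to the three named components.
remaining = TOTAL_PARAMS - sum(COMPONENT_PARAMS.values())

# Fraction of the total contributed by each named component.
shares = {name: count / TOTAL_PARAMS for name, count in COMPONENT_PARAMS.items()}

# Assumption: each parameter is stored as a 32-bit float.
BYTES_PER_PARAM = 4
weights_mib = TOTAL_PARAMS * BYTES_PER_PARAM / 2**20

for name, share in shares.items():
    print(f"{name}: {share:.1%}")
print(f"other layers: {remaining} parameters")
print(f"weight storage: {weights_mib:.2f} MiB")
```

Under these assumptions the BiLSTM layers account for roughly 60% of all parameters, which suggests they are the first place to look when applying compression or pruning.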

Addressing these issues involves enhancing feature extraction through deeper CNNs or techniques such as pyramid pooling or dilated convolutions to capture finer features. High computational cost and memory usage can be mitigated by employing model compression, pruning, or lightweight architectures such as MobileNet or EfficientNet. These approaches improve feature extraction while reducing computational complexity, making the model more suitable for resource-constrained environments such as industrial production lines.

6. Conclusion

A coupling fault diagnosis model is established based on the optimized EMD–CNN–BiLSTM neural network. Collecting and analyzing torque signals under different coupling fault conditions reveals abnormal fluctuations and amplitude changes in the signals.

Compared with CNN, LSTM, and Random Forest, the proposed model captures the feature information in the fault signals more effectively and accurately diagnoses the different fault types, improving the F1-Score by 40.07%, 31.43%, and 31.71%, respectively. Multihead self-attention is introduced to further enhance the expressive ability of the model. After the input data pass through the attention mechanism layer, a portion of the features are effectively separated and aggregated, indicating that this layer is able to extract fault features at different scales. After the LSTM layer, the fault features are clearly extracted and separated by class. The model effectively learns the features of different faults and achieves the goal of diagnosis and classification.

Conflicts of Interest

The authors declare no conflicts of interest.

Funding

This work was supported by the R&D Program of Beijing Municipal Education Commission (KM202410015004), Doctoral Research Startup Fund of Beijing Institute of Graphic Communication (27170124032), and BIGC Project (Ec202301).

Open Research

Data Availability Statement

The data used to support the findings of this study are available on request from the corresponding author.