Optimized Change Management Process Through Semantic Requirements and Traceability Analysis Tool

Abstract

Change analysis and validation are challenging tasks in an agile-based environment. Agile development encourages changes in requirements, yet frequent changes remain problematic when multiple stakeholders, views, or aspects are involved in managing the requirements of the software. These challenges ultimately affect requirement prioritization and the change management process. Currently, changes are managed through a change control board, in which various experts make decisions. Our approach focuses on restructuring and reuse by mapping similar code and functions. To handle change impact analysis, traceability, and prioritization, we propose a model based on semantic analysis using NLP, consisting of function-based analysis, with GitLab used for trace-link generation. NLP automates the extraction, classification, and analysis of requirements written in natural language; analyzes unstructured data; and identifies dependencies, redundancies, and ambiguities in requirements. Automating the proposed model for impact analysis, trace-link generation, and prioritization helps to mitigate irrelevancy, redundancy, and ambiguity during a change and its impact on different artifacts. We selected two projects from a software house: a healthcare system and an e-commerce application. The experimental results show that automating the proposed model in an agile environment facilitates changes in requirements and significantly improves the mapping of changes and the analysis of their impact. In both projects, the requirement engineer, change control board, and end-user reported a satisfaction level of more than 80% with the proposed model, compared with less than 60% for the existing approaches. Therefore, automation may help practitioners in an agile environment to manage changes, their effects, and the prioritization process. It can also guide researchers to improve software productivity and quality in this domain.

1. Introduction

The continuously changing needs of clients, organizations, stakeholders, market environments, and policies result in changes to software requirements over time [1]. The thorough planning, comprehensive design, and documentation involved in traditional software development result in low change acceptance [1, 2]. Agile software development (ASD), on the other hand, consistently “welcomes changing requirements, even late in development,” because it gradually improves the product to satisfy the client [3]. Ultimately, it encourages ongoing stakeholder engagement and feedback. Requirements are frequently changed in ASD for a variety of reasons, including organizational, market, customer, or software engineer knowledge growth. Handling change requirements in an agile environment (CRAE) is a difficult undertaking; if ignored, it could result in project failure [3]. To the best of our knowledge, neither an exact description of the CRAE process nor a list of agile practices that complement the several components of the CRAE process exists. To fill this research gap, our work focuses on developing a change management model and identifying the agile practices that support the CRAE process [3, 4].

The literature provides a wide range of techniques and strategies, including categorization, identification of change reasons, and theoretical models of requirement change management (RCM). Other work covers the rate of insertions, removals, and updates of requirements in a given amount of time and change impact analysis [5–7], as well as particular ways to reduce the impact of requirement changes, strategies for managing changes, and the agile method, which emphasizes extensive collaboration and direct communication between customers and delivery teams [7, 8]. More advanced approaches for handling requirement changes include formal methods and tools to assess requirements automatically and measure requirement quality features, quantitative assessment of requirement quality, assessment of tools that predict requirement change impact, and, more recently, just-in-time methods to manage nonfunctional requirements in agile projects from a knowledge management perspective. On the other hand, little research has been done on change prevention and prediction. This is presumably a primary reason why requirement changes remain a significant factor influencing the software success rate, which has remained at roughly 29% over the previous 25 years [7].

Requirement prioritization (RP) plays a role in the analysis phase of software development to streamline the requirement engineering process [9]. The goal of RP is to ensure that the most important recorded requirements are handled first. One of the crucial components of the requirement phase is to determine the needs based on requirement priority. The inconsistencies and dependencies of requirements can be handled by prioritization [9]. The main issues of RP are irrelevancy and redundancy of requirements, and both need to be reduced. In this research, we improve RP based on requirement change analysis by automating the analysis of the impact of changes on requirements. Requirements can be gathered from requirement elicitation through the text mining (TM) technique. Text documents are analyzed using semantic analysis (SA) to extract relevant and meaningful information. To determine and comprehend the underlying meaning of words and sentences, SA uses natural language processing (NLP) techniques [10]. TM collects important insights and information from unstructured text data. Large amounts of textual data may contain hidden trends, patterns, and relationships that can be found using TM [11, 12]. We employ NLP, a branch of artificial intelligence (AI), to conduct SA in our research. Requirement engineer (RE) tasks can be automated with the help of NLP. The majority of suggested methods begin with different NLP stages that examine requirement statements, extract their linguistic content, and transform them into representations that are simple to handle, such as feature lists or vector representations based on embeddings. These NLP-based representations are typically used later as inputs for rule-based or machine learning (ML) algorithms, which are also types of AI techniques [13]. Thus, requirement representations are crucial in evaluating the precision of various methods [14].
In this research, we extended this traditional approach by implementing a function-based comparison methodology. The textual requirements extracted during the requirement specification phase were complemented with function data from source code files, using an algorithm that extracts and compares functions across multiple programming languages. The algorithm first preprocessed the function data through tokenization and stop-word removal in order to compute numerical representations of the code. Then, it applied cosine similarity measures to identify and analyze function similarities. Finally, it output the results, including a full mapping of function names, descriptions, and similar function details, which were exported to an Excel file. This hybrid approach, which combines textual and functional data, enhances accuracy and strengthens requirement representations, increasing the use of reusable components from existing systems and supporting an extensive analysis of similarities and patterns.
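
The similarity step can be stated compactly. As a sketch, assume each preprocessed function body $f$ is mapped to a vector $\mathbf{v}_f$ of TF-IDF weights over the $N$ extracted functions. The text does not specify the weighting variant, so the unsmoothed form below is only one common choice, and the symbols $\mathrm{tf}$, $\mathrm{df}$, and $\mathrm{sim}$ are introduced here for illustration.

```latex
\[
  w(t, f) = \mathrm{tf}(t, f)\,\log\frac{N}{\mathrm{df}(t)},
  \qquad
  \mathrm{sim}(f_i, f_j)
    = \frac{\mathbf{v}_{f_i} \cdot \mathbf{v}_{f_j}}
           {\lVert \mathbf{v}_{f_i} \rVert \, \lVert \mathbf{v}_{f_j} \rVert}
\]
```

Here $\mathrm{tf}(t, f)$ is the number of occurrences of token $t$ in function $f$, and $\mathrm{df}(t)$ is the number of functions that contain $t$. Because the weights are nonnegative, $\mathrm{sim}$ lies between 0 and 1, and two functions are reported as similar when it exceeds a chosen threshold.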

Numerous studies have been conducted regarding the automation of various RE tasks. The suggested methods typically begin by applying a series of NLP procedures that gather information and linguistic characteristics from requirement texts to create a variety of NLP-based representations [13]. Later phases of the task, such as requirement classification, trace link detection, and quality defect discovery, use these representations to solve the intended problem [15–17]. The requirements must satisfy the traceability property in order to assure qualitative management of the requirements in software engineering projects. The term traceability describes the capacity to track the spread of a change across software system artifacts. Each trace path has a source and a target artifact, and there can be one or more possible trace paths for a given trace. An artifact may serve as both a source and a target for different trace paths at the same time. A trace relation is any trace link that is created between two sets of artifacts. The total of all generated traces is called a trace set, and all relationships are represented visually on a traceability graph [18].
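
The traceability vocabulary above (trace link, source and target artifact, trace path, trace set, traceability graph) maps naturally onto a small directed-graph structure. The following is a minimal sketch, not the tool's implementation; the class names `TraceLink` and `TraceabilityGraph` and the artifact identifiers are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class TraceLink:
    """A directed link from a source artifact to a target artifact."""
    source: str
    target: str


class TraceabilityGraph:
    """Stores the trace set and enumerates trace paths between artifacts."""

    def __init__(self):
        self.trace_set = set()              # all generated trace links
        self._outgoing = defaultdict(set)   # source -> set of targets

    def add_link(self, source, target):
        self.trace_set.add(TraceLink(source, target))
        self._outgoing[source].add(target)

    def targets_of(self, artifact):
        return sorted(self._outgoing.get(artifact, ()))

    def trace_paths(self, source, target, _seen=()):
        """Return every cycle-free path from source to target."""
        seen = _seen + (source,)
        if source == target:
            return [list(seen)]
        paths = []
        for nxt in sorted(self._outgoing.get(source, ())):
            if nxt not in seen:
                paths.extend(self.trace_paths(nxt, target, seen))
        return paths


# Hypothetical artifacts: CODE-1 is a target of REQ-1 and a source for TEST-1.
graph = TraceabilityGraph()
graph.add_link("REQ-1", "DESIGN-1")
graph.add_link("DESIGN-1", "CODE-1")
graph.add_link("REQ-1", "CODE-1")
graph.add_link("CODE-1", "TEST-1")

paths = graph.trace_paths("REQ-1", "TEST-1")
for path in paths:
    print(" -> ".join(path))
```

The example shows that one trace can have more than one trace path, and that the same artifact can act as a target on one link and a source on another.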

The willingness to modify requirements on a regular basis is a key component of ASD. The current configuration of the software requirements is impacted by each change request, which makes the requirement traceability crucial in ASD. All of the information created, modified, and utilized during the requirement implementation process is referred to as a requirement artifact. Business objectives, requirement sources (such as legal regulations), user story acceptance criteria, behavior scenarios, test scenarios, requirements for the upcoming software release, domain model, and so on are some examples of requirement artifacts in ASD [19, 20]. The primary goals of requirement tracing are to learn how the requirements relate to one another and the development project’s artifacts, to comprehend the intricacy of the logical relationships between the requirements and the artifacts, and to be able to trace decisions that have already been made and make new ones regarding the revision of the requirements set in the event that a requirement changes. Stated differently, requirement tracing is a tool that enables us to respond to the following inquiries: who, what, where, when, why, and how changes to the requirements occur, or should they occur [19, 20].

Different researchers identified expert-based judgment (EBJ) as a method that is used in ML and AI [11, 21]. EBJ is a process of making decisions or assessments based on the experience, knowledge, and expertise of people who have specialized knowledge or insights in a particular domain [21]. The selection of experts depends on different criteria such as domain experience, impact analysis, and change management in our research.

The main contributions of this research are as follows:

- In this research, we present RCM and traceability analyses for ASD to improve the requirement change impact analyses, trace links, and prioritization of requirements after changes.
- The proposed model, named requirement change, prioritization, traceability, and impact analysis (RCPTIA), manages changes during the development of software requirements and improves change impact analysis and prioritization by reducing irrelevancy and ambiguity using SA based on NLP techniques.
- For impact analysis, we first identify the requirements semantically; then, based on reusability and restructuring, we prioritize and select the requirements to reduce ambiguities and irrelevancy during change implementation. After that, we create trace links to map the changes from source to implementation and analyze their impact on the other requirements through the EBJ and NLP techniques.
- This research work transforms the conceptual model into a working tool (WT) to automate the RCPTIA in a real environment and increase the efficiency of the RCPTIA model.
- The RCPTIA model is evaluated using different projects from a software house. The results observed in these projects indicate that the RCPTIA model accurately identifies the change impact, trace links, and priority of the changed requirements.
- Finally, the WT helps practitioners to automatically manage changes and their impact on requirements in an agile-based environment (ABE). It also provides guidelines for initiating new research dimensions in this domain to enhance the productivity and quality of the software.

The remainder of the paper is organized into four sections. Section 2 provides an overview of the related work. Section 3 describes the RCPTIA model and the prototype (working model). Section 4 presents the results and discussion of the RCPTIA model and working prototype. Finally, Section 5 concludes the paper and highlights essential directions for future research.

2. Related Work

ASD, in contrast to traditional software development, promotes requirement changes. Studies covering RCM in both traditional and ASD are becoming common due to their significance. In the literature, numerous approaches enhance the management of agile requirement change by looking at previous works [3]. A growing amount of research work has been reported on topics of requirement change process models, change taxonomies, and identifying change causes [22]. Research reveals the reasons behind requirement changes and divides them into two primary categories: (i) necessary and (ii) unintentional. In a similar manner, this study identified the numerous reasons why requirements change and grouped these reasons using taxonomy to facilitate comprehension and identification in the future. It highlights the primary reasons for the requirement changes and further classifies the requirement change elements according to the change’s origin and purpose [22].

Consequently, to comprehend how traditional requirement engineering problems are resolved utilizing agile techniques, we mapped the evidence regarding requirement engineering practices and difficulties encountered by agile teams. A comprehensive review of the literature on CRAE was presented in [23], wherein the causes of requirement changes and the methods and procedures that facilitate RCM were examined. The authors suggested future research areas and assessed how organizations manage the decision-making process when implementing requirement changes. A systematic mapping study [24] found that 5 of the 15 research areas it identified need further research: requirement elicitation, change management, measuring requirements, software requirement tools, and comparisons between traditional and agile requirements.

In the paper [25], requirements are ranked and chosen using a search-based software engineering approach. The objective is to prioritize and rank the software requirements. The authors provide an assessment and correlation that identifies, analyzes, classifies, and clusters the search-based software methods proposed to address issues with requirement selection and ranking. Hafeez et al. [26] claim that prioritization is one of the most important stages of RE in the SDLC. They propose a multicriteria decision-making model for RP. To find the best stakeholders while guaranteeing stakeholder satisfaction, they employ a case study approach that combines fuzzy AHP and a neural network-based model [27–31].

Certain quality criteria, such as efficacy, usability, and scalability, are not met by many of the prioritization strategies in the literature [27–31]. The method in [25] included end-user (EU) involvement in a situation-transition framework for role-playing. In the paper [32], the perspectives of multiple users were presented, and a commercial off-the-shelf (COTS) prioritization method was suggested. In [7], the authors offer a methodical literature mapping to classify contemporary methods for resolving issues such as RP and selection. Similarly, a fuzzy-based engine (FBE) is proposed as a prioritization framework in the paper. The fuzzy rules are used to assign a user prioritization value as input to the requirement analysis process. The paper [33] proposed a technique that uses ML to deal with the priority order of new and current requirements. To save money and time, RP is based on user feedback and requires less human labor [34]. To handle large amounts of data effectively using ranks, a combination of evolutionary-based and clustering procedures was proposed in the paper [33]. As a result, the literature lists a variety of issues with RP, including accuracy, time consumption, and scalability [35].

TM techniques include latent Dirichlet allocation and latent semantic indexing models to identify term mismatch and support SA [10]. This method is used to evaluate trace link assurance and examines different and similar requirements to reduce complexity. SA using TM combines both techniques to extract valuable insights and analyze large volumes of text data. The revival of research in AI techniques has been advantageous for the field of NLP. Deep neural networks trained on large text datasets have been used to perform NLP’s primary task of representing language for machine comprehension [36]. NLP is among the most difficult fields of AI. Its goal is to make natural language texts “understandable” and processable by computer programs so that they can accomplish a particular task. Text classification, conceptual diagram extraction, semantic labeling, and semantic relation detection have been developed using NLP across multiple areas [14]. Deep learning techniques have been used more recently to tackle a variety of NLP tasks, particularly semantic ones [17]. Word embedding was introduced as an effective technique for extracting high-quality vector representations of words from massive amounts of unstructured text data [37]. Word embedding can identify relationships between words, semantic and syntactic similarity, and other aspects of a word’s context within a document. NLP automates the extraction, classification, and analysis of requirements written in natural language and analyzes unstructured data (e.g., textual documents, emails, or user stories). It identifies dependencies, redundancies, and ambiguities in requirements [14]. A rule-based technique uses predefined rules or heuristics to analyze requirements and relies on explicit relationships between requirements. The main challenges for rule-based techniques are limited scalability for complex or dynamic systems and the time needed to define and maintain rules. Fuzzy logic handles uncertainty in requirements, especially for prioritization. It utilizes fuzzy sets and rules to evaluate subjective or ambiguous data. A limitation of fuzzy logic is that it requires expertise in defining fuzzy rules and membership functions and may become complex for large systems [26].

Requirement traceability is primarily utilized in software development to ensure that the final product fulfills user and customer expectations [20]. The goals of the requirement tracing stage, as stated in the paper [20], are to verify that the test cases match the requirements, to show the customer that requirement changes have been considered and that the software product complies with the requirements, and to ensure that no superfluous features were created for which no implementation requests have been made. In addition, ensuring completeness, looking for components that are subject to change, helping new project participants understand the requirements, and managing the data generated during software product development are the driving forces behind providing the traceability of requirements. According to the paper [14], the use of informal traceability techniques, ineffective coordination among those in charge of traceability, and the existence of barriers to acquiring essential data are some of the primary issues with supporting the traceability process. The study [38] examines the methods and strategies used today in DevOps environments to achieve software artifact traceability. It focuses on the tasks that allow for feasible traceability management in DevOps practice, such as change detection, change impact analysis, consistency management, and change propagation with continuous integration. Additionally, software artifact traceability management can be used as an aid in the DevOps process. Traceability links are created by taking into account the dependencies between artifacts. In practice, it is therefore necessary to have automated tool support for traceability management that takes into account the entire software development lifecycle in a DevOps environment.

The paper [19] covers the difficulties with requirement traceability. The first major obstacle is the cost in time and effort of entering traceability data. The second obstacle is how hard it is to keep traceability intact when things change. The third obstacle is the diversity of opinions among project stakeholders regarding the usefulness of traceability. Because project participants do not fully understand the benefits of traceability, they tend to maintain it for as little time as possible. The fourth difficulty is the set of organizational issues that result in negligent upkeep of requirement traceability. The root cause of this problem is that many organizations do not train their employees regarding the importance of traceability, and traceability is not emphasized in undergraduate education. The fifth difficulty is “inadequate tool assistance.” Manual traceability methods are unsuitable for the software engineering industry because of the exponential growth of traceability relations in this field. However, COTS tools are generally sold as full requirement management packages and are also hardly appropriate to the needs of the software development industry.

We concluded from the literature that changing requirements is challenging in an agile-based environment (ABE). The challenges are identifying the accurate change impact, trace links, and priority of the changed requirements and reducing ambiguities and irrelevancy during change implementation, as depicted in Table 1. These challenges affect RP after a change in requirements, which reduces the software’s quality and productivity.

| Parameters | [37] | [11] | [40] | [41] | [42] | [43] | [20] | [1] | [39] | [18] |
|---|---|---|---|---|---|---|---|---|---|---|
| Change analysis | □□ | □□ | ■□ | ■□ | □□ | ■■ | ■□ | ■■ | ■■ | ■■ |
| Trace links | □□ | □□ | ■■ | ■■ | ■■ | ■□ | ■■ | □□ | ■■ | ■■ |
| Impact analysis | □□ | □□ | ■□ | ■■ | ■■ | ■■ | □□ | □□ | ■■ | ■■ |
| Mapping | ■□ | □□ | ■□ | ■■ | ■■ | ■□ | ■□ | □□ | ■□ | ■□ |
| Communication and coordination | □□ | ■□ | ■□ | □□ | ■■ | □□ | □□ | ■□ | ■□ | ■□ |
| Automation | □□ | □□ | □□ | ■□ | □□ | ■■ | □□ | ■■ | ■□ | ■□ |
| Semantic analysis | □□ | ■■ | ■■ | ■■ | ■■ | ■□ | □□ | ■□ | □□ | □□ |
| ML techniques | ■■ | ■■ | ■■ | ■□ | ■■ | ■■ | □□ | □□ | ■□ | ■□ |
| Prioritization | □□ | ■■ | □□ | □□ | ■■ | □□ | □□ | □□ | □□ | □□ |
| Irrelevancy | ■■ | ■■ | ■■ | ■■ | ■■ | ■□ | □□ | ■□ | □□ | □□ |
| Completeness | ■■ | ■□ | ■□ | □□ | ■■ | ■■ | □□ | ■□ | □□ | □□ |
| Ambiguity | □□ | ■■ | □□ | ■■ | ■■ | □□ | □□ | ■□ | □□ | □□ |
| Prechange analysis | □□ | □□ | □□ | □□ | □□ | □□ | ■□ | □□ | □□ | □□ |
| Postchange analysis | □□ | □□ | ■□ | □□ | □□ | □□ | ■□ | □□ | □□ | □□ |

Note: Not mentioned = □□, partially mentioned = ■□, mentioned = ■■.

3. Proposed Model

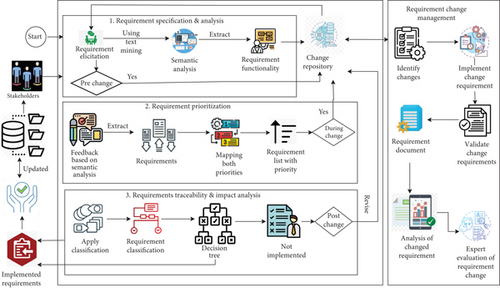

This section presents the RCPTIA model, which is intended to resolve the problems identified in the existing literature. The RCPTIA model is based on RCM, prioritization, traceability, and impact analysis for change. It aims to mitigate the problems of change requirement analysis, accurate prioritization, and trace link generation, along with the analysis of their impact factors, during software development from requirement specification to testing. The RCPTIA model is depicted in Figure 1. The model has four phases. The first phase extracts requirements through SA using NLP. The second phase is RP, in which a priority list is generated with the involvement of EBJ and the priorities are mapped. The third phase traces the links between changed requirements and analyzes their impact. The fourth phase, RCM, is executed in parallel with the other phases; it manages changes in requirements at three points (predevelopment, during development, and postdevelopment) through EBJ and validates all the changes and their impact. The RCPTIA model is explained in detail as follows.

3.1. Preprocessing of Input Data/Collection of Input Information

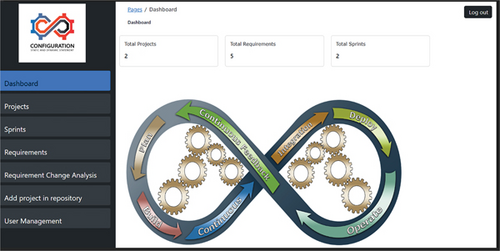

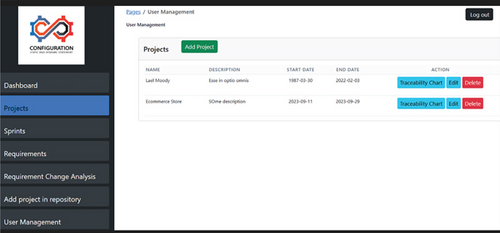

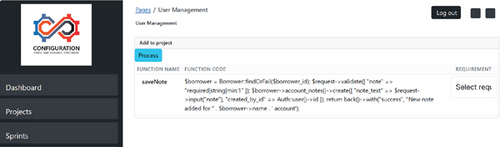

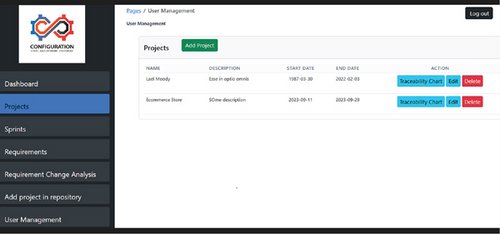

In the input collection phase, data is collected from the users and stakeholders who are involved in change initiation and requirement prioritization. The requirement list is saved in the repository. From the repository, we can extract functions from previously saved source code written in multiple languages and frameworks, such as Java, Laravel, and Python, to find similar functions related to new change requirements. This process is shown in Algorithm 1. We can start a project from scratch, check its requirements against the existing requirements, and restructure or reuse the existing requirements in the new project. We developed a prototype to automate the proposed model using Laravel, PHP, SQL, GitLab, NLP, and VS Code. The main interface of the prototype, shown in Figure 2, is the dashboard, which displays the total number of projects, requirements, and sprints. The list of projects is saved in the repository, as shown in Figure 3. Changes and prioritization are performed from the same dashboard.

Algorithm 1: Extract and compare functions from multiple programming languages.

- Input: Z (zip_file_path) // ZIP archive containing the source code files
- L (set of languages) // programming languages to process
- P (parsers for each language in L)
- PF (preprocessing functions) // tokenization, stop-word removal, etc.
- Output: FD (set containing function data and their similarities)
- Import libraries // zipfile for extracting files from the ZIP archive; os for working with file paths; pandas for creating a DataFrame to store function data; subprocess for running external processes (used for Java code parsing); ast for parsing Python code; re for regular expression pattern matching; nltk for natural language processing tasks; TfidfVectorizer and cosine_similarity from scikit-learn for text processing and similarity calculation
- P ← NLTK data for PF
- Z ← set path to zip_file_path // extract files from the ZIP archive
- TF ← set temporary folder (extracted_folder)
- TF ← Z // extract contents
- FD ← function_data // create a dictionary to collect function data
- File ← FD // store function data after preprocessing
- while not at end of function_data dictionary do
  - function_data ← File
- end while
- while extracting similarities from the function_data list do
  - TF-IDF ← compute vectors (CV) for function code as numerical features
  - CV ← TF-IDF
  - CS ← compute cosine similarity (CS) on CV // find similarities between functions
- end while
- for each DF mapping do // DF = DataFrame
  - first, add a description column and a similar-name column, where the description column contains the function code and the similar-name column lists similar function names based on a similarity threshold
  - then, create a final DF with information about function names, code, descriptions, and similar names for each function in different programming languages
  - ′function_data_with_description.xlsx′ ← DF // export the final DF to an Excel file
  - FD ← ′function_data_with_description.xlsx′
- end for
- return FD
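
The core of Algorithm 1 (extract functions, preprocess, vectorize with TF-IDF, compare with cosine similarity, apply a threshold) can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the prototype's code: it handles only Python source held in a string, skips the ZIP extraction and Excel export, and uses a small standard-library TF-IDF in place of scikit-learn's `TfidfVectorizer` and `cosine_similarity`. The stop-word list, the 0.5 threshold, and all function names are hypothetical.

```python
import ast
import math
import re
from collections import Counter

STOP_WORDS = {"def", "return"}  # illustrative stop-word list only


def extract_functions(source):
    """Map each function name in a Python source string to its code."""
    tree = ast.parse(source)
    return {
        node.name: ast.get_source_segment(source, node)
        for node in ast.walk(tree)
        if isinstance(node, ast.FunctionDef)
    }


def tokenize(code):
    """Lowercase word tokens with stop words removed."""
    tokens = re.findall(r"[A-Za-z_]+", code.lower())
    return [t for t in tokens if t not in STOP_WORDS]


def tfidf_vectors(docs):
    """Return one {term: weight} dict per document, using a smoothed IDF."""
    n = len(docs)
    df = Counter(term for doc in docs for term in set(doc))
    vectors = []
    for doc in docs:
        tf = Counter(doc)
        vectors.append(
            {t: c * (math.log((1 + n) / (1 + df[t])) + 1) for t, c in tf.items()}
        )
    return vectors


def cosine(u, v):
    """Cosine similarity of two sparse vectors stored as dicts."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def similar_functions(functions, threshold=0.5):
    """For each function, list the other functions at or above the threshold."""
    names = list(functions)
    vectors = tfidf_vectors([tokenize(functions[n]) for n in names])
    return {
        name: [
            other
            for j, other in enumerate(names)
            if j != i and cosine(vectors[i], vectors[j]) >= threshold
        ]
        for i, name in enumerate(names)
    }


SAMPLE = '''
def add_patient(name, age):
    record = {"name": name, "age": age}
    return record

def create_patient(name, age):
    record = {"name": name, "age": age}
    return record

def total_price(items):
    return sum(item.price for item in items)
'''

functions = extract_functions(SAMPLE)
similar = similar_functions(functions)
for name, matches in similar.items():
    print(name, "->", matches)
```

In this sample, the two patient functions share almost all of their tokens and are reported as similar to each other, while `total_price` shares none and has no matches. Such pairs are candidates for reuse or restructuring.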

3.2. Prioritization Preference

After requirements are extracted using SA based on NLP, stakeholders are rated using EBJ. The requirements are categorized based on various factors such as the product, expenses, services, and conditions. As a result, requirements are split among stakeholders based on aspects to reduce effort and ambiguity in rating requirements for implementation. The following actions have been taken to rate requirements so that the most relevant and significant features are implemented first, reducing redundancy and irrelevancy in requirement management. A few examples of the requirements are a user ID with name and designation, a sound system, a finger and thumb scanner, a face detector, and check-in and check-out procedures. Relevant stakeholders are asked to rate each of these factors on a scale of 1–5 in order of importance. The relevant stakeholders are change control management (CCM), the EU, the RE, the development team (DT), and the requirement analyst (RA). According to the results, some requirements were overlooked because of irrelevant or diversified reviews. The likelihood of system failures may rise as a result of the missing requirements. Missing values are therefore recovered from historical data to improve priority accuracy.
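
The rating step can be illustrated with a short sketch. It assumes, hypothetically, that a missing rating is replaced by the mean of the historical ratings for the same requirement and that the priority of a requirement is the mean of its stakeholder ratings; the text does not specify either rule. All ratings below are invented for illustration.

```python
from statistics import mean


def fill_missing(ratings, historical):
    """Replace missing (None) ratings with the historical mean, if one exists."""
    filled = {}
    for req, by_role in ratings.items():
        past = historical.get(req)
        fallback = mean(past) if past else None
        filled[req] = {
            role: (score if score is not None else fallback)
            for role, score in by_role.items()
        }
    return filled


def prioritize(ratings):
    """Rank requirements by mean rating, highest first (ties broken by name)."""
    scores = {}
    for req, by_role in ratings.items():
        known = [s for s in by_role.values() if s is not None]
        if known:
            scores[req] = mean(known)
    return sorted(scores, key=lambda r: (-scores[r], r))


# Ratings on the 1-5 scale from the five stakeholder roles; None = not rated.
ratings = {
    "user ID with name and designation":
        {"CCM": 5, "EU": 5, "RE": 4, "DT": 5, "RA": 4},
    "face detector":
        {"CCM": 3, "EU": None, "RE": 4, "DT": 3, "RA": None},
    "sound system":
        {"CCM": 2, "EU": 2, "RE": None, "DT": 1, "RA": 2},
}
historical = {"face detector": [4, 5], "sound system": [2]}

filled = fill_missing(ratings, historical)
ranking = prioritize(filled)
print(ranking)
```

Filling the gaps before averaging keeps a requirement from being ranked on the opinion of only the stakeholders who happened to respond.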

3.2.1. RCM Process

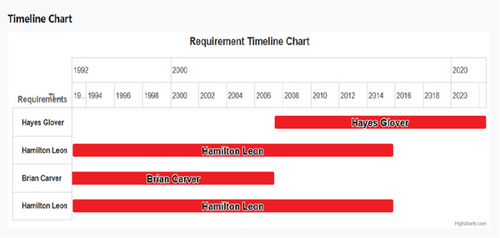

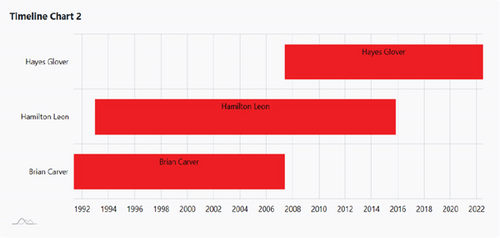

Changes in requirements must be managed effectively on a continuous and regular basis to enhance productivity and adaptability. Due to the complexity issues, we must properly combine multiple languages in the tool. Changes in requirements are of three types: predevelopment, during development, and postdevelopment. Figure 4 shows the output of the requirement change process for further implementation and previously performed traceability to map the changes.

A new request for a change in requirements was initiated by the client and communicated via the organization’s site. The DT of the CCM finds and examines changes from earlier cases that are pertinent to the most recent client requirement changes. The following tasks are carried out by the change control system for RCM.

Retrieve case: The new request is treated as a new case and compared with earlier, similar cases. The most relevant similar case is identified, retrieved, and adopted for further action.

Reuse case: The selected case, which is comparable to the new request, is reused to meet the changed requirements and increase client satisfaction.

The report that the CCM produces examines the further implications of the changed requirements. The experts go through the report and assess the impact of the requirement changes on the basis of their prior knowledge. They also analyze the cost to the software and the impact on other requirements. If a requirement change affects other requirements, the impact is contained where possible. If the changes can be made, they are accepted based on the EBJ review report and implemented; if not, they are rejected and the client is informed.
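
The retrieve and reuse steps can be sketched as a nearest-case lookup. The similarity measure used by the change control system is not stated, so the sketch below substitutes Jaccard similarity over word sets; the threshold, the case identifiers, and the `expert_review` fallback label are hypothetical.

```python
import re


def tokens(text):
    """Lowercase alphanumeric word set of a request."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a, b):
    """Jaccard similarity between the word sets of two requests."""
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def retrieve_case(request, case_base):
    """Retrieve: return the most similar stored case and its similarity."""
    best = max(case_base, key=lambda case: jaccard(request, case["request"]))
    return best, jaccard(request, best["request"])


def handle_change(request, case_base, threshold=0.5):
    """Reuse the retrieved case if it is similar enough, else defer to experts."""
    case, score = retrieve_case(request, case_base)
    if score >= threshold:
        return {"action": "reuse", "case_id": case["id"], "similarity": score}
    return {"action": "expert_review", "case_id": None, "similarity": score}


case_base = [
    {"id": "C1", "request": "add fingerprint scanner to check-in procedure"},
    {"id": "C2", "request": "export monthly sales report as PDF"},
]

decision = handle_change("add face detector to check-in procedure", case_base)
print(decision["action"], decision["case_id"])
```

A request with no close match falls through to expert review, which corresponds to the EBJ assessment of the CCM report described above.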

3.2.2. SA Using NLP

SA is performed using NLP based on the K-nearest neighbor ML algorithm. This algorithm is used to review and categorize textual data before extracting various user requirements and feedback. The K-nearest neighbor algorithm maps aspects and opinions based on their occurrence and similarity frequency. TM and SA help in extracting relevant details and their connections from the text. As a result, EBJ is used in the RCPTIA to rank the requirements and determine the best recommendation for considering a new user’s request.
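
As an illustration of this categorization step, the following sketch classifies a requirement statement by majority vote among its k most similar labelled statements. It assumes that "K-nearest" refers to k-nearest neighbors and uses plain word counts with cosine similarity; the labelled examples, the aspect labels, and k = 3 are hypothetical.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    """Word-count vector of a statement."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(u, v):
    """Cosine similarity between two word-count vectors."""
    dot = sum(c * v[t] for t, c in u.items())
    norm_u = math.sqrt(sum(c * c for c in u.values()))
    norm_v = math.sqrt(sum(c * c for c in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def knn_classify(text, labelled, k=3):
    """Label text by majority vote among its k most similar examples."""
    query = bag_of_words(text)
    ranked = sorted(
        labelled,
        key=lambda example: cosine(query, bag_of_words(example[0])),
        reverse=True,
    )
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


labelled = [
    ("the system shall scan the finger and thumb of the user", "product"),
    ("the system shall detect the face of the user at check in", "product"),
    ("the license cost must stay within the yearly budget", "expenses"),
    ("hardware cost and maintenance expenses must be reported", "expenses"),
    ("support staff shall respond to service requests within a day", "services"),
]

label = knn_classify("the system shall scan the face of the user", labelled)
print(label)
```

In practice the word counts would be replaced by the preprocessed, weighted representations described earlier, but the voting logic stays the same.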

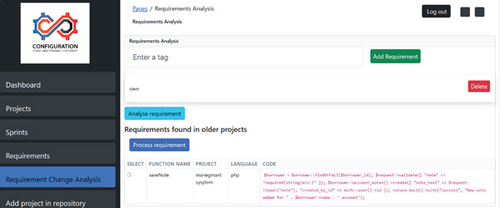

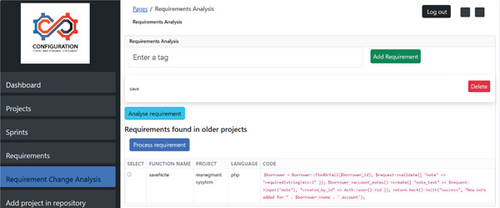

Figure 5 shows the change flow by function analysis to reuse requirements after changes. Both Figures 5 and 6 show the output of the function-based reusability using NLP.

3.2.3. Traceability and Impact Analysis

Requirement trace links are generated from requirement to requirement throughout the development process using NLP. These links are monitored and validated to identify and categorize requirements based on their dependencies on other requirements and to verify their functional responsibilities toward each other from different perspectives. This helps in putting new product requirements into practice. In the requirement traceability matrix (RTM), requirements are mapped to requirement relationships, which explain how they depend on one another. Reusing and retrieving requirements through the RTM enables the prediction of changes and their impact on the entire system, and it is used to validate and verify the change analysis.

The RTM is used to build a tree of requirements that starts at the root node and branches out into leaves and subleaves until every dependency or requirement relationship is found. The NLP algorithm keeps this process straightforward and helps reduce errors. The findings are graphical statistical results that can be used to detect errors, ambiguities, and incompleteness in the data. As a result, they help confirm the correctness of the aspects and their order of importance determined in earlier stages. Implementation can then proceed with a lower chance of failure, and system modifications can be applied more easily and with fewer errors. Figure 7 shows the traceability interface of the running project in which changes are implemented after the process.
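The RTM and the dependency tree described above can be sketched as follows. This is a minimal illustration under stated assumptions: the requirement identifiers and trace links are hypothetical, and the breadth-first traversal is one plausible way to enumerate impacted requirements, not necessarily the traversal used in the tool.

```python
from collections import deque

# Hypothetical requirement-to-requirement trace links:
# each key lists the requirements that depend on it.
TRACE_LINKS = {
    "R1": ["R2", "R3"],
    "R2": ["R4"],
    "R3": ["R4", "R5"],
    "R4": [],
    "R5": [],
}

def build_rtm(links):
    """Build a requirement traceability matrix as nested dicts of 0/1 flags."""
    ids = sorted(links)
    return {src: {dst: int(dst in links[src]) for dst in ids} for src in ids}

def impacted_by(changed, links):
    """Breadth-first walk from the changed requirement to every dependent."""
    seen, queue, order = {changed}, deque([changed]), []
    while queue:
        node = queue.popleft()
        for dep in links.get(node, []):
            if dep not in seen:
                seen.add(dep)
                order.append(dep)
                queue.append(dep)
    return order

rtm = build_rtm(TRACE_LINKS)
impact = impacted_by("R1", TRACE_LINKS)
print(impact)
```

A change to the root requirement reaches every dependent in the tree, whereas a change to a leaf requirement has an empty impact set, which is the kind of information a change control board could use to contain a change.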

Figure 8a,b depicts the requirement trace links over time, helping the team members to link the changes from start to end for further development and changes. This timeline also helps to identify the requirements that are changed frequently.

4. Results and Discussion

The experimental study was conducted with practitioners to evaluate the procedures and characteristics of the RCPTIA model in order to select appropriate methods. The evaluation process is based on the applicants’ experience, knowledge, and related projects. Applicants of the organization decided to implement the RCPTIA model for user satisfaction and product quality. Due to the limited number of participants permitted by the software house, only those employees authorized by the software house were allowed to participate. We selected 30 participants and evenly split them into the experiment group (EG) and control group (CG). The same participant roles were selected for both groups; although the number of participants differs across roles, the overall total is the same, which is 30. The EG worked with RCPTIA, and the CG used the existing method; both groups worked in ABE. The project participants are REs, CCM, EUs, and RAs. Table 2 shows the demographic information of participants and their experience in the organization.

| Role of participants | Experience | EG participants | CG participants |
|---|---|---|---|
| RE | </> 2 years | 2 | 2 |
| | </> 4 years | 3 | 3 |
| | </> 6 years | 2 | 2 |
| EUs | </> 2 years | 3 | 3 |
| | </> 4 years | 2 | 2 |
| | </> 6 years | 2 | 2 |
| CCM | </> 2 years | 4 | 4 |
| | </> 4 years | 3 | 3 |
| | </> 6 years | 3 | 3 |
| RT | </> 2 years | 2 | 2 |
| | </> 4 years | 2 | 2 |
| | </> 6 years | 2 | 2 |

A questionnaire was employed for data collection, and Figure 9 details the entire experimental study procedure. We applied the pretest after the participant division and the post-test after some essential training to familiarize participants with the RCPTIA model. Following the pre- and post-test, the questionnaire output was analyzed statistically using SPSS software. The success of the experiment is determined by its efficiency and by participant satisfaction with the suggested system. Therefore, we measured participant satisfaction using evaluation parameters drawn from existing studies (i.e., [11, 14, 20, 27, 33, 34, 43, 44]). Table 3 outlines these parameters; information about them is gathered from participant feedback and experience according to the questionnaire. Pre- and post-tests were designed to investigate the efficacy of the RCPTIA. After the participant division, we used a questionnaire to evaluate prior knowledge, skills, and technology familiarity along with reviews of the RCPTIA model and WT.

| Sr. # | Factors |
|---|---|
| i. | Easy to understand (EU) |
| ii. | Less complicated (LC) |
| iii. | Team learner performance (TP) |
| iv. | Reduce team efforts (RE) |
| v. | Change management ability (CA) |
| vi. | Function detection ability (FD) |
| vii. | Personalized selection (PS) |
| viii. | Semantically analyzed information (SA) |
| ix. | Reduce irrelevancy and ambiguity (RI) |
| x. | Requirement selection process (RS) |
| xi. | Improve prioritization (IP) |
| xii. | User friendly (UF) |
| xiii. | Improve traceability (IT) |
| xiv. | Team relation enhancements (TR) |
| xv. | Team coordination and communication enhancements (TC) |
| xvi. | Stakeholders’ satisfaction (SS) |
| xvii. | Virtual user management environment (VU) |
| xviii. | Preimpact analysis (PI) |
| xix. | Development impact analysis (DI) |
| xx. | Postimpact analysis (PA) |
| xxi. | Team motivation (TM) |
| xxii. | Accurate and correct similar function detection (AF) |

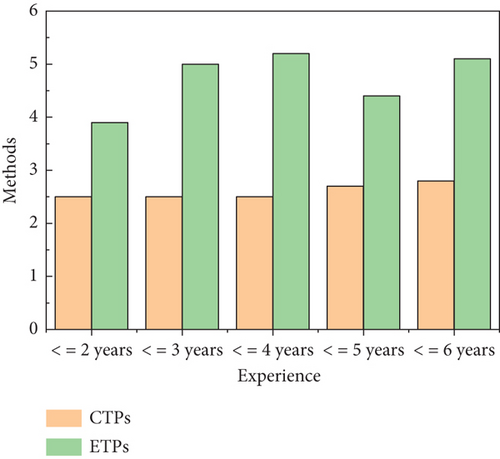

The pretest and participant training lasted for 5 days, followed by 15 days for content learning and 12 days for post-test data collection, comparison with the pretest results, and conclusion. This evaluation facilitates the comparison of treatment outcomes and attained improvement. The experiences of the control group (CT) and experimental group (ET) participants differ, and they are less satisfied with switching to the RCPTIA model. Most participants have trouble understanding the RCPTIA model since they are unfamiliar with the technology it uses. Because the majority of participants had little to no experience with the RCPTIA model, the outcomes for CT and ET participants were nearly identical. After applying treatment to ET participants, an evaluation was conducted, and participant responses were gathered through a post-test. To ensure a proper and accurate post-test assessment, ET participants should receive training on how to use the RCPTIA model before the treatment is applied. Additionally, participants’ general knowledge should be improved. Based on the variables listed in Table 3, the pre- and post-test results were compared to determine any differences after treatment for validation.

We analyzed the responses collected with and without treatment using SPSS software Version 23. Table 4 reports the data dispersion analysis, and Table 5 reports the reliability analysis results and a comparison of significant differences between the pre- and post-test results. The CT and ET pretest means are 27.88 and 36.66, with standard deviations (SDs) of 8.18 and 9.9, respectively. The reliability test is considered acceptable when the post-test alpha value exceeds the pretest alpha value. The pretest reliability values for CT and ET are 0.69 and 0.76, and the post-test values are 0.88 and 0.99, respectively. An independent or paired t-test was used to assess the hypotheses and the significance of the difference between the groups’ pre- and post-test results.
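The reliability check can be illustrated with a short calculation. The sketch below assumes that the reported alpha values are Cronbach's alpha, which the text does not state explicitly, and it uses a small set of made-up Likert responses rather than the study data, so it does not reproduce the reported values.

```python
from statistics import pvariance

def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(responses[0])                         # number of questionnaire items
    items = list(zip(*responses))                 # per-item score columns
    item_var = sum(pvariance(col) for col in items)
    total_var = pvariance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - item_var / total_var)

# Hypothetical answers: 5 respondents x 4 items on a 1-5 Likert scale.
SAMPLE = [
    [4, 5, 4, 5],
    [3, 3, 2, 3],
    [5, 5, 5, 4],
    [2, 2, 3, 2],
    [4, 4, 4, 4],
]

alpha = cronbach_alpha(SAMPLE)
print(round(alpha, 3))
```

Values closer to 1 indicate that the questionnaire items vary together, which is why a higher post-test alpha is read as improved reliability.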

| Groups | Participants | Pretest mean (U) | Pretest SD | Post-test mean (U) | Post-test SD |
|---|---|---|---|---|---|
| CT | 30 | 27.88 | 8.18 | 36.5 | 9.55 |
| ET | 30 | 36.66 | 9.9 | 60.1 | 12.05 |

| Groups | Participants (Ps) | Pretest t-value | Pretest sv | Post-test t-value | Post-test sv |
|---|---|---|---|---|---|
| CT | 30 | 2.75 | 0.001 | 2.989 | 0.000 |
| ET | 30 | 3.04 | 0.000 | 3.9877 | 0.0000 |

After the implementation of the RCPTIA model, participant satisfaction and performance increased, as indicated by the significance value (sv), which is less than 0.05. The null hypothesis is therefore rejected, showing that participants in ET and CT had varying degrees of skill, experience, and so on (t = 3.9877, sv = 0.0000). This also shows that semantic-based requirements in trace links greatly outperformed the current approach and enhanced the selection of relevant, personalized preferences. We applied a one-way ANOVA test to compare the dependent factors (satisfaction level, performance, and novelty) and the independent factor (semantically based personalized preferences) between ET and CT, as shown in Table 6. The findings show a significant difference (F = 3.860, sv = 0.001) between RCPTIA and the existing model (EM), with between-group and within-group mean square values of 142.448 and 135.554, respectively. Moreover, the ET mean score was higher than the CT mean score, indicating that multiple viewpoints can enhance the RCM selection procedure. The descriptive analysis shows that the ET achieved better scores.

| Groups | Mean sum of square | Degree of freedom | Mean square | F | Significance difference |
|---|---|---|---|---|---|
| Between CT and ET | 1878.823 | 15 | 142.448 | 3.860 | 0.001 |
| Within CT and ET | 2118.358 | 16 | 135.554 | | |
| Total value | 397.300 | 28 | | | |
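For readers unfamiliar with the one-way ANOVA test, the following sketch shows how the F statistic is formed as the ratio of the between-group mean square to the within-group mean square. The two score lists are invented for illustration and are not the study data, so the result is not comparable with the values reported in the table.

```python
from statistics import mean

def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of score lists."""
    all_scores = [x for g in groups for x in g]
    grand = mean(all_scores)
    # Variation of group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    # Variation of individual scores around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Hypothetical post-test scores for a control and an experimental group.
ct_scores = [30, 35, 40, 38, 37]
et_scores = [55, 60, 62, 58, 65]

f_stat, df_b, df_w = one_way_anova([ct_scores, et_scores])
print(round(f_stat, 2), df_b, df_w)
```

The significance value is then obtained by comparing F against the F distribution with the two degrees of freedom, a step that statistical packages such as SPSS perform automatically.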

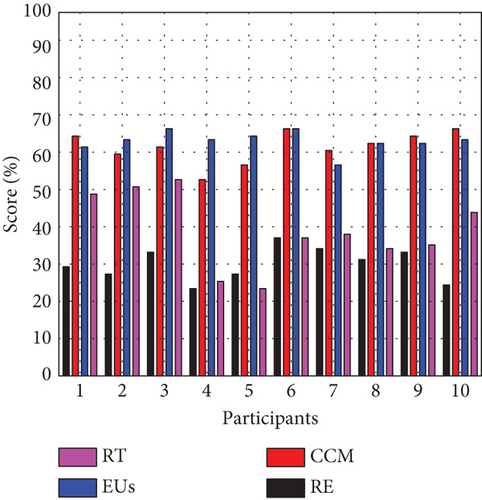

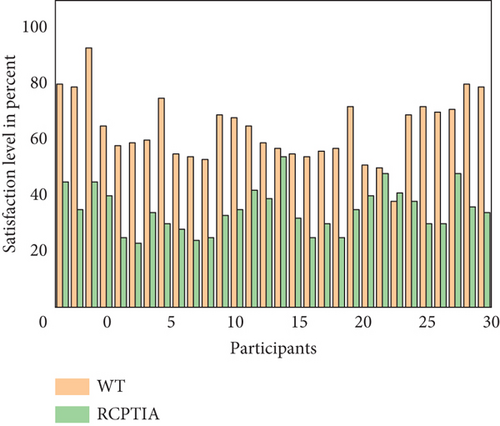

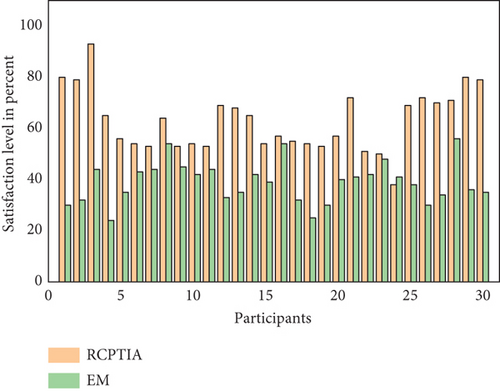

In addition, the questionnaire results demonstrate that RCPTIA performs better than EM, as evidenced by the participants’ satisfaction level (PSL) outcomes for CT and ET in Figures 10 and 11, respectively. The x-axis shows the participants’ degree of satisfaction, and the y-axis shows the participants’ scores. To compare the outcomes of EM and RCPTIA participants, the satisfaction level of the EM (CT) group was calculated using a questionnaire instrument. The scores of the EM participants are shown in Figure 10, which was produced by averaging the questionnaire data for PSL on a 5-point Likert scale (strongly agree, agree, neutral, disagree, and strongly disagree) after the experiment. In the EM group, the PSL of the RE, CCM, RA, and EUs is less than 65%, in contrast to RCPTIA participants, whose PSL is greater than 90% (see Figure 11).
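One simple way to turn averaged Likert answers into a percentage satisfaction level is sketched below. The numeric coding (1 to 5) and the linear rescaling to 0-100% are assumptions made for illustration; the exact scoring rule behind the reported PSL values is not specified in the text.

```python
# Assumed numeric coding of the 5-point Likert scale.
LIKERT = {
    "strongly disagree": 1,
    "disagree": 2,
    "neutral": 3,
    "agree": 4,
    "strongly agree": 5,
}

def satisfaction_percent(answers):
    """Average Likert score rescaled linearly so that 1 -> 0% and 5 -> 100%."""
    scores = [LIKERT[a.lower()] for a in answers]
    avg = sum(scores) / len(scores)
    return (avg - 1) / 4 * 100

# Hypothetical answers from one participant across four factors.
psl = satisfaction_percent(["agree", "strongly agree", "agree", "neutral"])
print(psl)
```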

The RCPTIA model is used first to identify the changes in the ongoing project and then to provide SA that matches similar requirements for the extraction of similar functions. This helps in identifying reuse, modification, and new requirement development to implement continuous and frequent changes. At the same time, the model prioritizes requirements based on EBJ and automatically generates accurate trace links to track these changes. This lowered the effort of managing change analysis semantically and automatically, with the real involvement of stakeholders, to reduce ambiguity, irrelevancy, and inaccuracy in change impact analysis and trace link generation. As a result, the RE, developers, and quality engineers are able to improve their performance efficiently and effectively.

During our experiments, we assessed the time attribute as part of our analysis. The main aim of this research is to introduce automation to streamline these processes. The goal is to enable automated change management and reusability within a single platform, eliminating the need to use multiple tools such as RStudio, Jira, and Rational Pro for project and change management tasks. We achieved this within limited time and a limited budget; however, the time attribute should be measured in detail in future work.

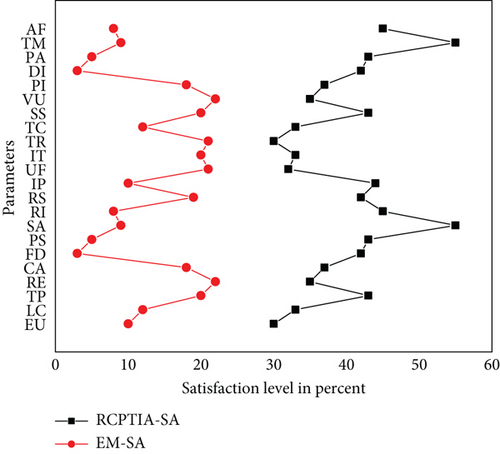

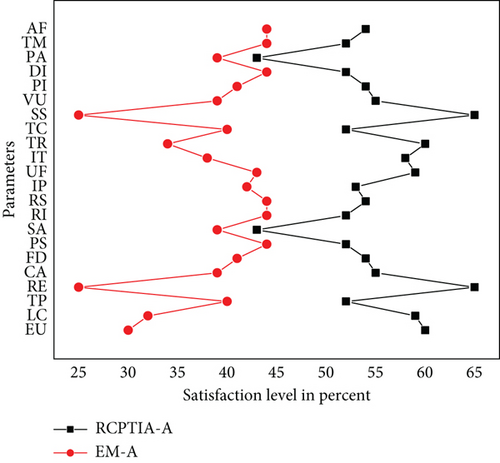

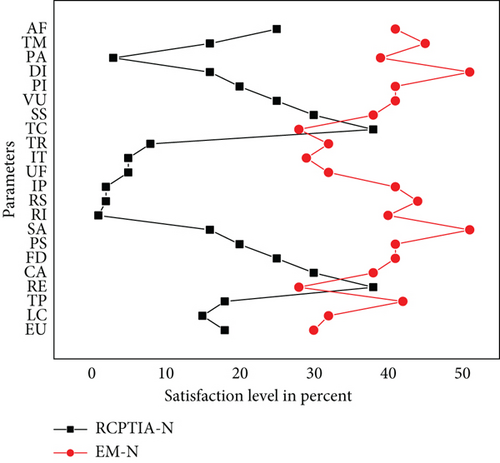

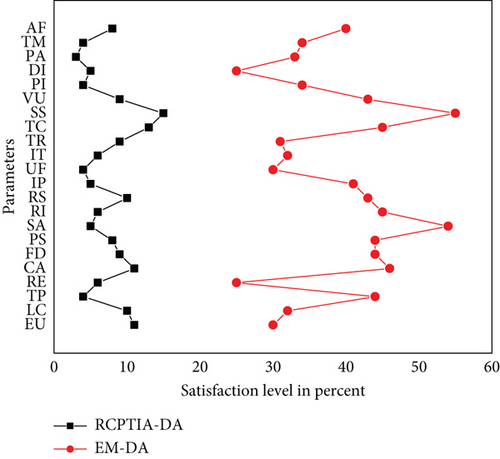

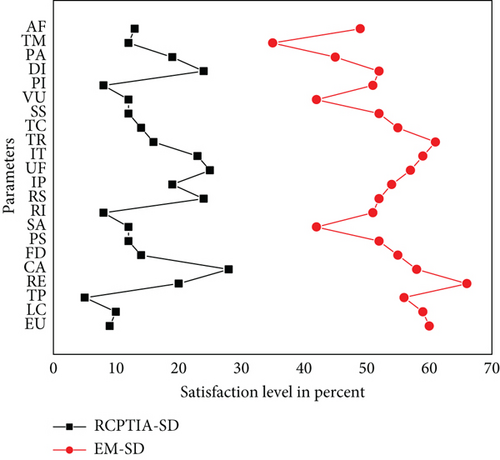

According to each Likert-style scale [45], the RCPTIA and EM findings are provided in Figures 12, 13, 14, 15, and 16, which show the PSL on the x-axis and different parameters on the y-axis. We compare parameters according to the Likert scale, and each figure describes which parameter has a greater impact on the PSL of the participants of both the RCPTIA model and the EM.

The average satisfaction levels of all participants shown in Figures 12, 13, 14, 15, and 16 are then compared for each parameter. This comparison revealed that all RCPTIA participants had higher satisfaction than those who used traditional change management methods for impact analysis and implementation. RCPTIA therefore outperforms EM. Specifically, the RE, RS, SS, VU, UF, and CA parameters play a significant role in increasing satisfaction levels.

The results in Figure 17 show that, when using the different methods, participants’ motivation, knowledge, learning ability, interest, and ratio of successful goal achievement were higher among ET participants than among CT participants. This indicates that the automation of change impact analysis and traceability plays an important role in improving practitioners’ performance.

F-measure and accuracy metrics are used [33, 42, 46, 47] to assess the effectiveness of RCPTIA in identifying relevant, appropriate predictions and selective fictitious data for change requirements and their implementations. SA is used to test prediction accuracy in individualized preferences for priorities. The F-measure, the harmonic mean of precision and recall, is a quantitative indicator of the agreement between relevant actual predictions and anticipated preferences. The results then demonstrate that RCPTIA accuracy and EM accuracy are, respectively, 0.66 and 0.97 and that RCPTIA accuracy and F-measure are, respectively, 0.59 and 0.97. Participants’ overall satisfaction levels increased, as Figures 18 and 19 illustrate. The PSL and participant details are shown on the y-axis and x-axis, respectively, and are used to evaluate each participant’s performance. Additionally, the data indicated that, compared to EM, the PSL of RCPTIA participants exceeded 70%. After we automated the proposed model and compared its results with a WT, the satisfaction level of participants increased to more than 80%, as depicted in Figure 19.
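The two metrics can be computed from prediction counts as in the sketch below. The counts are hypothetical and serve only to show the standard definitions; they are not derived from the experiments reported here.

```python
def accuracy(tp, fp, fn, tn):
    """Share of all predictions that are correct."""
    total = tp + fp + fn + tn
    return (tp + tn) / total if total else 0.0

def f_measure(tp, fp, fn):
    """Harmonic mean of precision and recall (F1)."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Hypothetical counts: relevant requirements correctly predicted (tp),
# irrelevant ones wrongly predicted (fp), relevant ones missed (fn),
# and irrelevant ones correctly ignored (tn).
acc = accuracy(tp=45, fp=5, fn=5, tn=45)
f1 = f_measure(tp=45, fp=5, fn=5)
print(round(acc, 2), round(f1, 2))
```

Accuracy rewards every correct decision, while the F-measure ignores true negatives and therefore focuses on how well the relevant requirements are recovered.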

In answer to the research question, “Does the RCPTIA model increase enhanced change management through semantic requirement and traceability analysis?”, the statistical test demonstrates a significant difference between the suggested system and the current methodology. The choice of semantically varied perspective preferences in requirements is crucial in the advanced technological era to boost motivation and capabilities. Existing information search systems often present similar information, along with redundant and irrelevant information, to potential users all at once and without assistance, impeding the development of their personalized preferences.

After the evaluation of RCPTIA and WT, we also compared results with the other most relevant studies in Table 7. DPPMCR is used for a developer-centric prediction and prevention model of requirement changes [1], TCM is used for technology change management [42], and OWL is used for the ontology web language [20].

4.1. Threats to Validity

We created an empirical review based on existing industrial research work in order to rigorously verify the relationship between theory and outcomes. We then proposed the RCPTIA as a way to streamline change management, its impact analysis, and the prioritization procedure. Some restrictions on the operation of the RCPTIA could affect the findings. This research focuses on four major threats: internal threat (IT), external threat (ET), construct threat (CT), and reliability threat (RT). IT is linked to the process of requirement specification. We implemented mitigation measures to address this concern, employing a comprehensive technique for requirement changes and prioritization. The outcomes of these actions demonstrated that using semantic and traceability analysis significantly improves change management, prioritization, and prediction processes within requirement specifications.

ET involves the generalization of findings from real-world projects. We increased the robustness of our conclusions by repeating the suggested model stages in various contexts, which increased the validity of our results. CT refers to the relationship of various perspectives and reflections. To address this, we developed a set of metrics that use semantic and traceability analysis to assess CTs in RCM. This included assessing different stages of the process, such as change initiation, analysis, prioritization, and traceability, along with comparing performance to other methodologies.

The term RT refers to the relationships between cause and effect. We used rigorous real-world calculations within the change verification to mitigate this threat. We included all authors in data collection and analysis, which further increased the reliability of our findings. Then, we ran an experiment to see how the model affected learning, in order to eliminate RT and reduce potential bias in our results.

5. Conclusion and Future Work

In this research, we improve change management based on SA and NLP techniques for managing and reducing the challenges in an agile environment. These challenges arise from the multiple viewpoints of the stakeholders, which affect the relevancy and correctness of prioritization and change implementation. For SA, we used NLP to enhance the requirement changes and carried out impact analysis, traceability, and prioritization with the involvement of multiview stakeholders. The results of the proposed model are based on experiments validating its effectiveness and efficiency in a real-world agile environment. The model also provides a practical solution by automatically managing changes, traceability, and prioritization to significantly improve the quality and responsiveness of ABE. Furthermore, this implementation of the proposed model opens several directions for researchers and practitioners. We conducted an experimental evaluation using a pretest and post-test treatment approach. Due to the limited number of participants permitted by the software house, only those employees authorized by the software house were allowed to participate. However, this work can be extended by conducting an industrial survey, which would allow us to involve multiple industries and gain broader insights. In the future, we will enhance our prototype by adding requirement-based SA, aspect-based analysis, and testing integration using deep learning techniques in agile-based and DevOps environments.

Ethics Statement

The authors have nothing to report.

Conflicts of Interest

The authors declare no conflicts of interest.

Author Contributions

Conceptualization: Irum Ilyas and Yaser Hafeez. Formal analysis: Irum Ilyas, Sadia Ali, and Shariq Hussain. Funding acquisition: Ibrahima Kalil Toure. Project administration: Shariq Hussain and Ibrahima Kalil Toure. Supervision: Yaser Hafeez and Muhammad Jammal. Validation: Irum Ilyas, Sadia Ali, and Muhammad Jammal. Writing—original draft: Irum Ilyas. Writing—review and editing: Irum Ilyas and Sadia Ali. All authors reviewed the results and approved the final version of the manuscript.

Funding

The authors received no specific funding for this work.

Acknowledgments

The authors have nothing to report.

Open Research

Data Availability Statement

Due to privacy considerations, the data supporting the study’s conclusions cannot be shared.