Enhancing Interpretability: A Hierarchical Belief Rule-Based (HBRB) Method for Assessing Multimodal Social Media Credibility

Abstract

User and artificial intelligence generated contents, coupled with the multimodal nature of information, have made the identification of false news an arduous task. While models can assist users in improving their cognitive abilities, commonly used black-box models lack transparency, posing a significant challenge for interpretability. This study proposes a novel credibility assessment method of social media content, leveraging multimodal features by optimizing the hierarchical belief rule-based (HBRB) inference method. Compared to other popular feature engineering and deep learning models, our method integrates, analyses, and filters relevant features, improving the HBRB structure to make the model layered, independent, and interconnected, enhancing interpretability and controllability, thereby addressing the rule combination explosion problem. The results highlight the potential of our method to improve the integrity of the online information ecosystem, offering a promising solution for more transparent and reliable credibility assessment in social media.

1. Introduction

The growth of social media platforms has fueled the production of user-generated content and, subsequently, of false information. The easy access and fast generation and diffusion of this undesirable information is a critical issue that challenges the stability of the social order. Assessing the credibility of the vast information available on social media is an arduous task as its mere existence is enough to consider it true [1]. Besides, language in social media has evolved very quickly transitioning from only verbal (unimodal) to verbal and nonverbal (multimodal) communication, resulting in new challenges for credibility assessment of online content. Previous research in this area has explored techniques, such as feature engineering and deep learning, to address this issue [1–5]. On the one hand, feature engineering methods focused on the analysis of characteristics associated with the user, content, and topic extracting key metrics in order to identify false information [1]. On the other hand, deep learning methods employed recurrent neural networks and attention mechanisms to detect rumors and false information [3, 6, 7]. These approaches leveraged various features, such as emotional tendency [8], user’s reputation [9], content complexity [10], dissemination patterns [11], and image-text fusion [3], among others, to effectively assess credibility.

However, they generally face different challenges when assessing credibility in multimodal social media content [1, 2, 5, 11–24]: (1) The complex input-output relationships of deep learning models and “black-box” models are usually difficult to explain, while the cross-modal correlation of feature engineering methods is low [11]; (2) Data limitation may result in low robustness for complex detection tasks; (3) Discrepancies between different information modalities generate unstructured data, for example, a text might be accurate but the content of the accompanying images could be misleading. The interpretability capacity of these models is subjective and thus, its efficiency remains an open question [17].

Model interpretability can be categorized into two groups: (1) post-hoc interpretability, which aims to explain models with weak interpretability through designed interpretation methods. For example, to explain the working mechanism of random forests and deep neural networks, a series of methods including rule extraction [25], activation maximization [26], feature inversion [27], and class-activation mapping [28] were suggested; (2) ante-hoc interpretability, which refers to the built-in interpretability of the model, allowing its working mechanism to be understood without external aids. Rule-based models, known for their ante-hoc interpretability, can have their mechanisms visualized through rules [29, 30]. Post-hoc interpretability is flexible and suitable for complex models. However, the generated explanations may be complex and not fully accurate, and they require additional computation. In contrast, ante-hoc interpretable models generally outperform post-hoc ones in terms of transparency and user acceptance, as they contain built-in explanations, provide transparent and immediate feedback, are more trustworthy to users, and are easy to debug. However, they also present limitations when dealing with complex nonlinear problems [31].

Given the challenges in readability of previous methods, the belief rule-base (BRB) inference method with ante-hoc interpretability has been widely used in the field of assessment and prediction because of its transparent inference pathway and high prediction accuracy [11, 28]. BRB is a generic rule-based model whose interpretability can be characterized by the readability and consistency of the rules. However, BRB encounters a significant challenge known as “rule combination explosion.” The rule combination explosion problem implies that the number of rules grows exponentially with the number of attributes, potentially diminishing the readability, the computational efficiency, and the scalability of the model [32]. The optimization process of BRB aims to improve the model accuracy, yet this may inadvertently introduce incorrect rules. Erroneous rules are usually incomprehensible to users. In order to cope with this problem, the hierarchical belief rule-based (HBRB) model has been recently proposed [30]. Compared to the BRB method, the HBRB approach significantly reduces the number of rules by constructing an assessment system that accounts for the independent effect of each modality (i.e., features of text, image, and users) and for their relationships, which allows credibility to be directly expressed by quantifying multimodal features and performing a correlation analysis.

Here, we introduce the hierarchical belief rule-base inference model for multimodal information credibility assessment (HBRB-MICA) with ante-hoc interpretability. Compared to the post-hoc interpretability model, the ante-hoc interpretability model allowed us to accurately identify the unreliability of each feature adjusting its weight accordingly to meet the needs of the credibility evaluation in different scenarios. We validated the model’s accuracy through training on an open-source annotated dataset of false information. Furthermore, we benchmarked the model’s performance against baseline models.

The main contribution of our work is the development of an innovative model (HBRB-MICA with ante-hoc interpretability) to assess the credibility of multimodal information published in social media by users. This model presents a hierarchic and independent structure based on sub-models providing a controllable and transparent assessment process. In the same line, it copes with the rule combination explosion problem subsequently reducing the computational cost and increasing the efficiency of the process. Therefore, the presented model is potentially scalable.

The rest of this paper is organized as follows: Section 2 provides a review of existing research on credibility assessment methods. Section 3 presents the proposed method (HBRB-MICA) and its components. Section 4 presents the experimental procedure and the results. Section 5 provides the conclusions and discussion of this research.

2. Relevant Research

Herewith, we present the relevant research performed around the selection and extraction of multimodal features for credibility assessment and around different credibility assessment models.

2.1. Feature Selection and Extraction for Credibility Assessment

False information is usually characterized by shorter content, more aggressive wording, and semantic inconsistencies [33, 34]. In order to assess the credibility of online information based on these semantic features, Machackova and Smahel focused on the clarity and understandability of the posted content, and on the users’ feedback using the principal component analysis (PCA) and the repeated-measures ANOVA for feature selection [35]. However, social media information is becoming more and more content-rich, thus focusing on a single textual modality is not effective. Nowadays, images are also included in the main content. Image inpainting algorithms such as DNNAM [36] and MICU [37] can improve the image quality, making the evaluation of their authenticity very difficult. Comprehensive reasoning based on multimodality can solve this problem.

Modality refers to a fine-grained concept when compared to multimedia data as each modality within multimodal data contributes to a unique value to the whole, offering insights not deducible from other modalities alone [38]. In addition to text and images, the modality of user information is also related to information credibility. Choi et al. conducted a study of the information on social Q&A platforms, considering the professional certification of the author and the relevance, richness, and accuracy of the content as criteria to assess credibility [39]. Multimodal research on assessing the credibility of information in the past 5 years has primarily focused on three modalities: text, image, and user characteristics (Table 1). Table 1 shows that, even when the dataset is imbalanced, the combination of rich modal features obtains better accuracy (F1 values) in the credibility assessment [22]. Meel and Vishwakarma [5] demonstrated that combining advanced text and image processing models (BERT, ALBERT, and Inception-ResNet-v2) achieved highly accurate multimodal assessment on different datasets. Qureshi and Malick [20] highlighted the effectiveness of traditional machine learning methods (e.g., logistic regression, decision trees (DTs), and light GBM) in processing text and user features, achieving an F1 value of 90.0%. Wang et al. [22] demonstrated the benefits of multimodal feature fusion by integrating text, image, and user features by using complex neural network models (ERNIE, VGG-19, and BP) obtaining a F1 value of 95.90% on the Weibo dataset. Qi et al. [24] focused on text, image quality, and publishing frequency, combining two neural network models to detect fake news achieving high accuracy values. Evans et al. performed a correlation analysis with a DT classifier to identify the most important features based on the root mean square (RMS) screening of the feature values [23]. 
This method presented the disadvantage of being less robust compared to the Spearman’s rank correlation in terms of sensitivity to detect and handle linear relationships and outliers.

| Reference | Year | Data source (# features) | Algorithm(s)² | Analyzed features | Results³ |
|---|---|---|---|---|---|
| Meel and Vishwakarma [5] | 2023 | | BERT + ALBERT + Inception-ResNet-v2 | Text, image | |
| Qureshi and Malick [20] | 2023 | Platform X (5246) | | 11, text, user | F1 = 90.0% |
| Wang, Wang, and Han [22] | 2022 | Weibo (5840 (T)¹, 3963 (F)) | ERNIE + VGG-19 + BP | 16, text, image, user | F1 = 95.90% |
| Evans et al. [23] | 2021 | Platform X (208,209) | | 34 + 21, content, context, user | F1 = 95.80% |
| Qi et al. [24] | 2019 | Weibo (4779 (T), 4749 (F))¹ | MVNN + CNN-RNN | Text, frequency, and pixels of images | F1 = 90.60% |

- ¹ T refers to true information, and F refers to false information.
- ² RF = random forest, kNN = k-nearest neighbors, LR = logistic regression, NB = Naïve Bayes, SVM = support vector machine, DT = decision tree.
- ³ Results shown are based on the top-performing classifiers.

Despite the high accuracy scores obtained, these methods focused on the extraction and fusion of features, but these features were easily distorted after fusion making the detection of which of them actually affected the authenticity of information very difficult.

2.2. Credibility Assessment Models

Most studies in credibility assessment on social media were performed by supervised learning [40]. In 2011, Castillo et al. [1] pioneered an automated method to assess the credibility of tweets, selecting 15 key metrics to identify false information based on a DT model. Later, credibility assessment methods based on different machine learning models such as support vector machines [11], Naïve Bayes, k-nearest neighbors, DTs, logistic regression, and random forest [23] were proposed achieving good results (Table 1) in assessing the credibility of social media information based on feature engineering methods and machine learning algorithms.

With the emergence of neural networks, deep learning models have been widely explored for rumor detection. Deep learning presents a robust learning capacity by using feature vectors, enabling the extraction of higher quality and more representative data features. However, its “black box” nature often obscures the inference process [41]. Unimodal approaches typically capture text features through deep learning models such as Bi-LSTM, graph attention networks (GAT), and pre-trained models (BERT, GPT, etc.). In contrast, multimodal approaches integrate multiple features through feature fusion and feature interaction. Previous research focused on two aspects: (1) the fusion of different types of features and the learning of associations between multimodal features [12, 13, 19] and (2) the construction of features through cross-attention [42], semantic alignment [3], similarity matching [43], and consistency learning [44] mechanisms to discover patterns between associated features, aligned entities, similar semantics, and coherent images and texts during feature interaction. However, the complex interactions between multiple modalities and the intricate neural network architectures made the readability of these methods, i.e., the interpretation of how the model arrives at its decision, very difficult. To address this problem, some studies have attempted to link textual content with comment features (or external knowledge) [45] or to investigate the semantic inconsistency between images and text as indicators of false information [46]. Nevertheless, the performance of these methods was compromised as they tended to focus on single correlations or inconsistencies and ignored more complex interactions and underlying semantic relationships in multimodal information. They also faced challenges in explaining the modeling decision-making process, especially when dealing with large-scale and diverse social media data.

Because of the inexplicability of deep learning methods, BRB inference methods have been widely used in the fields of assessment and prediction in recent years [29, 30]. BRB inference methods present clear inference paths as well as high prediction accuracy [47]. They are developed on the basis of the traditional IF-THEN rule-based expert system and on the evidential reasoning (ER) method being suitable for constructing complex nonlinear causal relationships between both premise and result attributes under uncertain conditions [47]. Most scholars have been using the rule-base inference methodology using the evidential reasoning (RIMER) [48], as it fits both deterministic and stochastic systems with sufficient accuracy. RIMER is particularly effective in handling complex uncertain problems that cannot be easily expressed through deterministic and stochastic mathematical models [49]. However, when too many features are present, RIMER leads to an excessively large rule-base modeling, facing the rule combination explosion problem [50]. To solve it, scholars proposed the HBRB inference method [51], which can significantly reduce the size of the BRB approach, and it is able to construct an assessment system according to the actual structure of the system. Based on this, Cao et al. proposed a new optimization method to ensure the consistency of the HBRB model, making it more suitable for assessment of reliable fault diagnosis in inertial platforms [30]. This method was able to optimize the model by calculating the deviation between the assessing results of sub-models and the real values adjusting the parameters and the weights of rules. To date, it has never been used in information credibility assessment.

3. Method

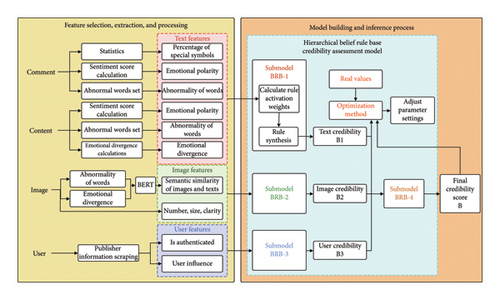

This section describes the proposed credibility assessment method with ante-hoc interpretability (Figure 1). The method was divided into two steps. First, three types of features, i.e., text (comments and content), image, and user information, were selected, extracted, and processed. Second, the HBRB information credibility inference model was constructed to analyze the multimodal features.

3.1. Multimodal Feature Selection, Extraction and Processing

3.1.1. Text Features

Relevant text features were selected to identify false information as shown in Table 2. These features were reported to be effective when spreading false information to a wider audience [1, 9, 53, 54, 56].

| Dimension | Feature | Description | Reference |
|---|---|---|---|
| Text | Text_Exc_count | Percentage of “!” | [1, 14] |
| | Text_Que_count | Percentage of “?” | [1, 33] |
| | Text_At_count | Number of “@” | [2, 52] |
| | Text_emotion | Emotional polarity in the original text | [9, 16, 53, 54] |
| | Text_abnormality | Text word abnormality in content | [10, 45] |
| | Comment_emotion | Emotional polarity in comments | [10, 45] |
| | Comment_abnormality | Text word abnormality in comments | [10, 42] |
| | Difference | Divergence in emotional comments | [55] |

- Note: Bold indicates the model scores that perform best on the Accuracy, Precision, Recall and F1 value.

Exclamation marks, question marks, and “@” mentions (Text_Exc_count, Text_Que_count, and Text_At_count, respectively) are commonly used to spread undesirable information [1, 2, 14, 52].

Text_emotion referred to the emotional polarity (positive or negative) of the original text, obtained by performing sentiment analysis on the text.

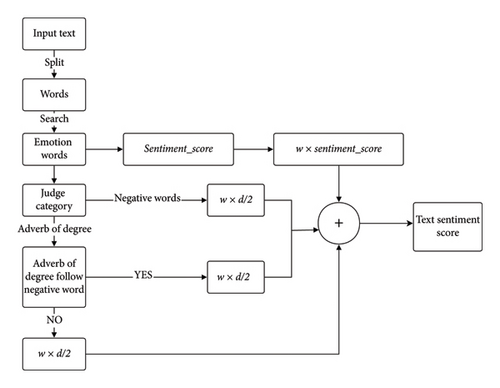

Comment_emotion referred to the overall emotion score per comment. For the emotion tendency analysis of the original text and the comments (Figure 2), the scores of the emotion dictionaries provided by BosonNLP and HowNet were used. First, the emotion scores of the emotion-associated words were obtained by word splitting and emotion lexicon retrieval; then, the associated scores were calculated. Finally, the text emotion scores were obtained by weighted summation.
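The lexicon-based scoring steps described above (word splitting, lexicon retrieval, weighted summation) can be illustrated with a minimal sketch. The toy lexicon, the whitespace splitter, the optional `weights` argument, and the handling of a zero score are all assumptions made for illustration; they are not the dictionaries or the weighting scheme used in this study.

```python
# Hypothetical toy lexicon (word -> emotion score); the emotion dictionaries
# named in the text would be loaded here instead.
TOY_LEXICON = {"great": 1.5, "reliable": 1.0, "fake": -2.0, "rumour": -1.5}


def split_words(text):
    """Stand-in word splitter: lowercase and split on whitespace.

    Chinese text would need a proper word segmenter (not shown here).
    """
    return text.lower().split()


def emotion_score(text, lexicon, weights=None):
    """Weighted sum of the lexicon scores of the emotion words found in text.

    `weights` (word -> weight) is a hypothetical hook; words without an
    explicit weight count with weight 1.0.
    """
    weights = weights or {}
    return sum(lexicon[w] * weights.get(w, 1.0)
               for w in split_words(text) if w in lexicon)


def polarity(score):
    """Map a score to a polarity label; treating 0 as negative is an assumption."""
    return "positive" if score > 0 else "negative"
```

For instance, `emotion_score("This is a FAKE rumour", TOY_LEXICON)` sums the scores of the two lexicon hits, and `polarity` turns the result into the binary label used by Text_emotion.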

3.1.2. Image Feature

False information often contains both text and image features whose content usually differs [60]. For example, when a piece of false information creates a fictional scenario or tells a fake story, it can be difficult to find matching images from real life [24, 44]. In addition, the resolution of images used in fake news is generally lower than that of real news as they have been manipulated and repeatedly shared. Repeated sharing also results in a lower number of images used in false information when compared to the number of images available for reliable information [24, 50].

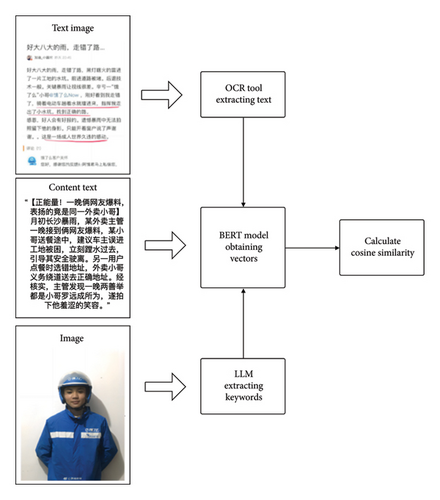

In this work, we selected semantic consistency features of images and text, and other related image features as measurement indicators, as shown in Table 3.

The complete process, supported by an example of the extraction of the semantic consistency features from images and texts, was included in Figure 3.

Img_definition referred to the image resolution. We used the OpenCV tool to obtain the average resolution of multiple images [61]. Then, we calculated the variance of the image grayscale data to measure the clarity of the image in order to verify its authenticity. Finally, Img_num referred to the number of images accompanying the text.
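As a rough illustration of the clarity measure, the sketch below computes the variance of grayscale pixel values and averages it over several images. It is a dependency-free stand-in: the study used OpenCV, whereas here an image is assumed to be a plain nested list of grayscale intensities, and the function names are hypothetical.

```python
def grayscale_variance(gray):
    """Population variance of the pixel intensities of one grayscale image.

    `gray` is assumed to be a non-empty list of rows of intensities (0-255).
    """
    pixels = [p for row in gray for p in row]
    mean = sum(pixels) / len(pixels)
    return sum((p - mean) ** 2 for p in pixels) / len(pixels)


def mean_clarity(images):
    """Average grayscale variance over all images attached to one post."""
    return sum(grayscale_variance(img) for img in images) / len(images)
```

A uniformly gray image yields a variance of 0, while an image with strong contrast yields a large value, so a higher score is read here as a sharper image.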

3.1.3. User Features

Two user features, the certification and the influence, were included to assess veracity of social media information (Table 4).

The Is_certification referred to whether the users had authenticated their accounts on the platform. Influence was calculated as the ratio of the number of fans to the sum of the number of followers of a user. Generally, influential users are more careful when posting information in social media [9, 62]. In contrast, robots are typically used to spread false information holding less influential accounts [9, 52].

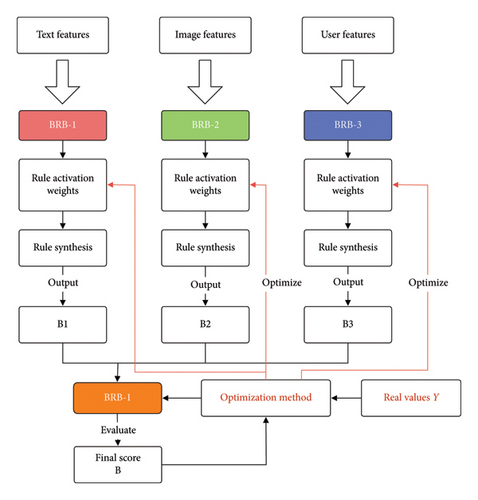

3.2. Credibility Assessment Model

We applied, for the first time, the HBRB model with ante-hoc interpretability to assess credibility of multimodal information in social media. The model presents a hierarchical inference structure for evaluating the credibility of multimodal information to solve the problems of illegibility and of excess in rule generation (rule combination explosion) (Figure 4).

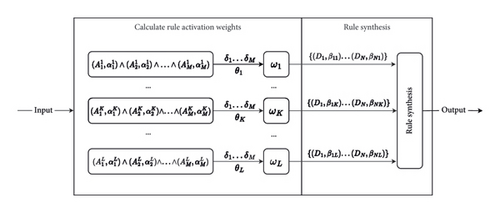

The model was divided into three parts: (1) The hierarchical rule activation for inference, (2) the parameter optimization algorithm, and (3) the comprehensive hierarchical inference to generate the final credibility scores. The algorithmic pseudocode of the model was described in Algorithm 1, and its notations were summarized in Table 5.

Algorithm 1: HBRB credibility assessment algorithm with parameter optimization.

- Input: Input features X = {x1, x2, …, xM}
- Output: Credibility score

1. Function HBRB_Credibility_Assessment(X):
2. Initialize sub-models BRB-1, BRB-2, BRB-3, BRB-4;
3. result1 ← Process_SubModel(BRB-1, input features);
4. result2 ← Process_SubModel(BRB-2, input features);
5. result3 ← Process_SubModel(BRB-3, input features);
6. result4 ← Process_SubModel(BRB-4, [result1, result2, result3]);
7. credibility score ← result4;
8. return credibility score;
9. Function Process_SubModel(BRB, X):
10. for each rule Rk in BRB do
11. Calculate activation weight ωk;
12. end
13. Calculate normalization factor μ;
14. for j ← 1 to N do
15. Calculate combined belief degree to Dj;
16. end
17. Calculate sub-model result;
18. return result;
19. Function Optimize_Parameter(training_data):
20. Define objective function ξ(P);
21. Constraints: 0 ≤ βj,k ≤ 1, 0 ≤ θk ≤ 1, 0 ≤ δi ≤ 1;
22. Use fmincon to minimize ξ(P) subject to constraints;
23. return optimized parameters P = {βj,k, θk, δi};

| Symbol | Description |
|---|---|
| xi | Input feature |
| | Set of reference values for xi |
| M | Number of input features |
| Rk | k-th rule in the belief rule base |
| Dj | j-th possible result in the belief distribution |
| βj,k | Belief degree to Dj for the k-th rule |
| δi | Weight of the i-th feature indicator |
| θk | Weight of the k-th rule in the belief rule base |
| ωk | Activation weight of the k-th rule |
| | Matching degree of the i-th attribute in the k-th rule |
| L | Total number of rules |
| N | Total number of possible results |
| μ | Normalization factor in the ER algorithm |
| | Combined belief degree to Dj |
| | Final credibility score |
| ξ(P) | Objective function for parameter optimization |
| ym | Actual credibility score for the m-th training sample |
| | Predicted credibility score for the m-th training sample |
| P | Set of parameters to be optimized (δi, θk, βj,k) |

3.2.1. Framework

The HBRB model (Figure 4) adopted a top-down hierarchical inference process to finally produce the results. The hierarchical structure allowed for a nuanced assessment of credibility. Lower-level sub-models (BRB-1, BRB-2, BRB-3) processed individual modality features, producing intermediate credibility scores for each modality. These scores then flowed into the higher-level sub-model (BRB-4), which integrated the modality-specific assessments to produce a final credibility score. This structure enabled the model to capture both modality-specific and cross-modal credibility indicators.

3.2.2. Inference Process

The rule activation process began with the input data being matched against the reference values in each rule. The degree of activation for each rule was then calculated based on these similarity measures (matching degrees) and the attribute weights. Rules with higher degrees of activation had a greater influence on the final credibility assessment. In addition, δi represented the weight of each feature indicator in this rule, and θk was the weight of this rule in the belief rule base. The results obtained by rule activation and fusion were sent to the next sub-model as input, forming the layers of inference, as shown in Figure 5.
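The inference of a single sub-model can be sketched as follows. Because the formulas are not shown in this section, the sketch assumes the standard formulation from the BRB literature: piecewise-linear matching degrees, activation weights normalized over all rules, and the analytic evidential reasoning (ER) aggregation. The rule weights and belief degrees are the BRB-3 values listed in the document's rule table for F8 and F9; the numeric reference values, the attribute weights, and the utilities assigned to the grades F, P, and U are hypothetical.

```python
from math import prod


def matching_degrees(x, refs):
    """Piecewise-linear matching of x against sorted numeric reference values."""
    x = min(max(x, refs[0]), refs[-1])  # clamp into the reference range
    alphas = [0.0] * len(refs)
    for j in range(len(refs) - 1):
        lo, hi = refs[j], refs[j + 1]
        if lo <= x <= hi:
            alphas[j + 1] = (x - lo) / (hi - lo)
            alphas[j] = 1.0 - alphas[j + 1]
            break
    return alphas


def activation_weights(alphas, antecedents, theta, delta):
    """Rule weight times weighted product of matching degrees, normalized."""
    d_max = max(delta)  # relative attribute weights, assumed positive
    raw = [theta[k] * prod(alphas[i][r] ** (delta[i] / d_max)
                           for i, r in enumerate(rule))
           for k, rule in enumerate(antecedents)]
    total = sum(raw)
    return [w / total for w in raw]


def er_combine(omega, beliefs):
    """Analytic ER aggregation of all rules into one belief distribution."""
    n = len(beliefs[0])
    rest = [1.0 - w * sum(b) for w, b in zip(omega, beliefs)]
    prod_rest = prod(rest)
    per_grade = [prod(w * b[j] + r for w, b, r in zip(omega, beliefs, rest))
                 for j in range(n)]
    mu = 1.0 / (sum(per_grade) - (n - 1) * prod_rest)  # normalization factor
    denom = 1.0 - mu * prod(1.0 - w for w in omega)
    return [mu * (p - prod_rest) / denom for p in per_grade]


def process_submodel(x, refs, antecedents, theta, delta, beliefs, utilities):
    """Return the combined belief distribution and its utility-weighted score."""
    alphas = [matching_degrees(xi, ri) for xi, ri in zip(x, refs)]
    omega = activation_weights(alphas, antecedents, theta, delta)
    beta = er_combine(omega, beliefs)
    return beta, sum(u * b for u, b in zip(utilities, beta))


# BRB-3 rules: (F8 level index, F9 level index), weights and belief degrees.
ANTECEDENTS = [(0, 0), (0, 1), (0, 2), (1, 0), (1, 1), (1, 2)]
THETA = [0.6883, 0.3635, 0.1702, 0.4795, 0.4652, 0.3646]
BELIEFS = [[0.0159, 0.0313, 0.9528], [0.1926, 0.0578, 0.7496],
           [0.3825, 0.3519, 0.2656], [0.0430, 0.0304, 0.9266],
           [0.4709, 0.5190, 0.0101], [0.9803, 0.0136, 0.0061]]
REFS = [[0.0, 1.0], [0.0, 0.5, 1.0]]  # assumed numeric reference values
DELTA = [1.0, 1.0]                    # assumed attribute weights
UTILITIES = [1.0, 0.5, 0.0]           # assumed utilities of F, P, U
```

Under these assumptions, `process_submodel([1.0, 1.0], REFS, ANTECEDENTS, THETA, DELTA, BELIEFS, UTILITIES)` activates only the rule for a certified, highly influential user, so the combined distribution reproduces that rule's belief degrees. An input between two reference values activates the neighbouring rules in proportion to their matching degrees and rule weights.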

3.2.3. Parameter Optimization

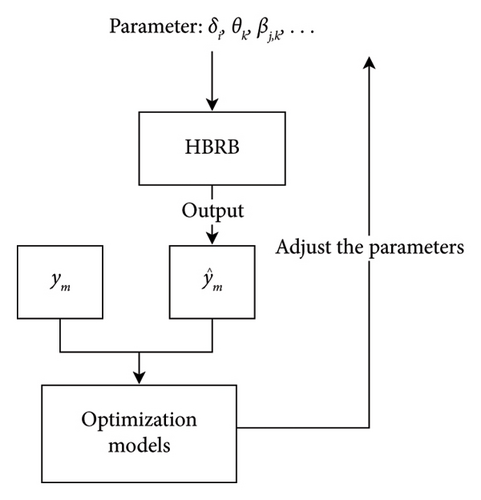

During the inference process, various parameters of the BRB model, such as the attribute weights δi, the rule weights θk, and the belief levels βj,k, played a crucial role in the accuracy of the assessment results. The attribute weight δi influenced the importance of each input feature. A higher δi value indicated that the corresponding feature had a greater impact on the rule activation and subsequent credibility assessment. The rule weight θk determined the significance of each rule in the BRB approach. Rules with higher θk values had a stronger influence on the final credibility assessment. The belief level βj,k distributed the credibility results over all possible outcomes. It represented the degree of belief that the k-th rule pointed to the j-th assessment grade, allowing for a nuanced representation of uncertainty in the assessment. Inaccuracies in the parameters directly affected the inference performance of the model. To improve the performance of the model, we built an optimization model (Figure 6) to find the optimal δi, θk, and βj,k.
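The optimization step can be sketched in a simplified form. The objective function is not shown in this section, so the sketch assumes a root mean square error between actual and predicted scores. It also replaces `fmincon`, a MATLAB solver, with a plain derivative-free coordinate search that keeps every parameter inside [0, 1]. The one-parameter toy model only stands in for the BRB inference, and all names are hypothetical.

```python
from math import sqrt


def rmse(y_true, y_pred):
    """Root mean square error between actual and predicted scores."""
    return sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def clip01(value):
    """Project a parameter onto the box constraint [0, 1]."""
    return min(1.0, max(0.0, value))


def coordinate_search(objective, params, step=0.1, rounds=60):
    """Minimize `objective` by trying +/- step on one parameter at a time."""
    best = [clip01(p) for p in params]
    best_val = objective(best)
    for _ in range(rounds):
        improved = False
        for i in range(len(best)):
            for delta in (step, -step):
                trial = list(best)
                trial[i] = clip01(trial[i] + delta)
                val = objective(trial)
                if val < best_val:
                    best, best_val, improved = trial, val, True
        if not improved:
            step /= 2.0  # refine the search once no move helps
    return best, best_val


# Toy stand-in for the BRB model: a single parameter scales the input.
XS, YS = [0.2, 0.4, 0.8], [0.1, 0.2, 0.4]


def toy_objective(params):
    """RMSE of the toy model for a given parameter vector."""
    return rmse(YS, [params[0] * x for x in XS])
```

Calling `coordinate_search(toy_objective, [0.0])` moves the parameter toward 0.5, where the toy error vanishes. In the actual model the parameter vector would hold δi, θk, and βj,k, and the objective would run the full hierarchical inference on the training samples.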

4. Experiments

4.1. Dataset

The experimental data used in this research came from the Chinese Weibo information dataset provided by the Beijing Municipal Bureau of Economy and Informatization. The dataset contained multimodal information collected by using the Weibo ID. The final filtered dataset contained 1102 true entries and 1327 false ones.

4.2. Selection of Features

To select the most suitable features for assessing the credibility of information on the Weibo platform and to reduce the number of rules to solve the rule combination explosion problem, we calculated the correlation between the 14 previously extracted features (described in Tables 2, 3, 4). Credibility was assessed based on the Spearman’s rank correlation coefficient. As a result, nine highly relevant features were selected: (1) as text features: F1: Comment_emotion, F2: Text_abnormality, F3: Text_Exc_count and F4: Comment_abnormality; (2) as image features: F5: Similarity_ocr, F6: Img_num, and F7: Size; and (3) as user features: F8: Is_certification and F9: Influence. The results of the analysis were shown in the heat map of Figure 7.
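A minimal sketch of this screening step is given below. It computes Spearman's rank correlation as the Pearson correlation of average ranks, which handles ties such as those in a binary credibility label. The selection threshold is a hypothetical parameter, since no cut-off value is stated here, and the helper names are illustrative only.

```python
from math import sqrt


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equally long, non-constant sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman's rank correlation coefficient."""
    return pearson(average_ranks(x), average_ranks(y))


def select_features(features, labels, threshold):
    """Keep features whose |rho| with the labels reaches the threshold.

    `features` maps a feature name to its column of values; `threshold`
    is a hypothetical cut-off.
    """
    selected = {}
    for name, column in features.items():
        rho = spearman(column, labels)
        if abs(rho) >= threshold:
            selected[name] = rho
    return selected
```

Because the coefficient depends only on ranks, a monotone but nonlinear relationship still yields a value of 1 or -1, which is one reason to prefer it over a linear correlation for heterogeneous features.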

Two user features, “Influence” and “Is_certification,” showed a strong correlation with credibility. Our results confirmed that authenticated users or users with a large number of followers tended to be more concerned about their reputation and, subsequently, were more careful when posting information.

The three image features “Img_num,” “Size”, and “Similarity_ocr” also presented a positive correlation with credibility. We found that most of the real information contained multiple images for a piece of news which reflected the authenticity of the content from multiple perspectives. Because these images had only been published once, they presented high resolution (high pixel value images) as they had not been compressed by copying and forwarding. In addition, users wrote the relevant text in the image to form an infographic and also repeated the relevant text in the content to make true information easier to share, resulting in similar OCR values from the extracted text and the actual content.

There was also a positive correlation between the “Comment_emotion” and credibility. We detected that truthful information tended to be positive while false information, on the other hand, was inflammatory causing negative emotions among netizens.

The features “Text_Exc_count,” “Text_abnormality,” and “Comment_abnormality” were each negatively correlated with credibility. False information was often accompanied by “!” to aggravate the tone, resulting in some keywords appearing very often. In addition, users also had a certain ability to identify dubious information, questioning it or using critical words, such as “rumour,” “fake,” and “disbelief.”

4.3. Baselines and Evaluation Metrics

The output of the HBRB-MICA model was a credibility score, a value ranging from 0 to 1. The information extracted from the public dataset used was labelled as true or false (1 or 0), so we could classify the news with scores larger than 0.5 as true information and samples with scores lower than 0.5 as false information.

- The support vector machine (SVM) [52] model is an effective classification model. Its core idea is to achieve segmentation of data by constructing a hyperplane for classification. We used an SVM with a Gaussian kernel (RBF) function.
- Back propagation neural network (BPNN) [65] achieves efficient mapping from input space to output space by building a series of hierarchical structures to extract and learn key features in the data layer by layer. The structure of the BPNN model in this experiment included an input layer, an output layer, and a hidden layer.
- DT modeling [23] is an intuitive and easy-to-explain form of machine learning, where the core idea is to recursively partition data by constructing a series of simple decision rules to make predictions about the target variable.
- Fake news detection by semantic correlations between text content and images (FND-SCTI) [66] learns image and text features through VGG-19 and hierarchical attention mechanisms, respectively, and uses variational self-encoders to learn shared latent representations of text and images.
- Cross-modal attention residual and multichannel convolutional neural networks (CARMN) [67] proposes and fuses cross-modal attention residual network (CARN) and multichannel convolutional neural network (MCN) to selectively extract the information related to a target modality from another source modality.
- CAFE [4] is an ambiguity-aware multimodal method for fake news detection, which uses Kullback–Leibler (KL) divergence to quantify the degree of ambiguity between different modalities. CAFE uses a strategy of adaptive aggregation of unimodal features and cross-modal correlation to improve the accuracy of detection.

Three of these models are machine learning models (SVM, BPNN, and DT) and the other three are deep learning models (FND-SCTI, CARMN, and CAFE). We used accuracy, precision, recall, and F1 score as evaluation metrics [20].
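The evaluation protocol of this subsection can be sketched as follows: credibility scores are thresholded at 0.5 and compared with the binary labels to obtain accuracy, precision, recall, and F1. Treating a score of exactly 0.5 as false and returning 0.0 for an undefined ratio are assumptions of this sketch; the function names are hypothetical.

```python
def classify(scores, threshold=0.5):
    """Label a score as true (1) if it exceeds the threshold, else false (0)."""
    return [1 if s > threshold else 0 for s in scores]


def evaluate(y_true, y_pred):
    """Accuracy, precision, recall and F1, with true (1) as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}
```

For example, `evaluate([1, 1, 0, 0], classify([0.9, 0.4, 0.6, 0.1]))` counts one true positive, one false negative, one false positive, and one true negative.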

4.4. Results of the HBRB-MICA Optimization Process and of the Comparison Experiments

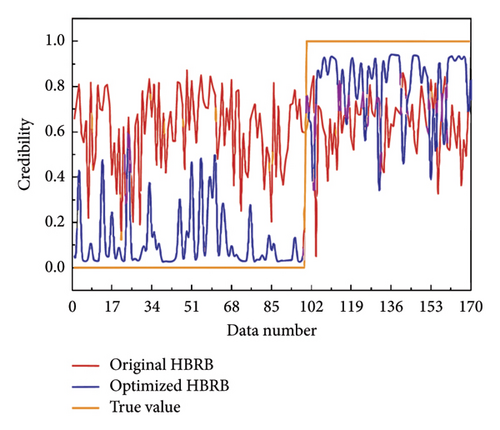

In this paper, 60% of the experimental data were sent to the sub-models BRB-1, BRB-2, and BRB-3 for training, and the remaining 40% were used to optimize and evaluate the parameters, with 80% of this portion used for training and 20% for testing. The optimized model produced more accurate credibility scores. The RMSE of the outputs of the initial and the optimized models was 0.5463 and 0.2523, respectively; that is, optimization reduced the RMSE by 53.8% (Figure 8).

Tables 6, 7, 8, and 9 presented some rules and weights for the optimized sub-models BRB-1, -2, -3, and -4, respectively. The complete tables were included in the appendix. In these tables, the levels of credibility were classified as fully credible (F), partly credible (P), and unbelievable (U).

| BRB-1 rule | Weight | F1 | F2 | F3 | F4 | Fully credible (F) | Partly credible (P) | Unbelievable (U) |
|---|---|---|---|---|---|---|---|---|
| 1 | 0.5243 | Pos | Low | Low | Low | 0.4740 | 0.3073 | 0.2187 |
| 2 | 0.5474 | Pos | Low | Low | Med | 0.2203 | 0.3028 | 0.4769 |
| 3 | 0.4219 | Pos | Low | Low | High | 0.3262 | 0.3311 | 0.3427 |
| 4 | 0.5017 | Pos | Low | Med | Low | 0.2790 | 0.3317 | 0.3893 |
| … | … | … | … | … | … | … | … | … |
| 41 | 0.8821 | Neg | High | Low | Med | 0.0266 | 0.0670 | 0.9063 |
| … | … | … | … | … | … | … | … | … |
| 54 | 0.4623 | Neg | High | High | High | 0.3296 | 0.3323 | 0.3381 |

| BRB-2 rule | Weight | F5 | F6 | F7 | Fully credible (F) | Partly credible (P) | Unbelievable (U) |
|---|---|---|---|---|---|---|---|
| 1 | 0.0227 | Low | Low | Low | 0.3551 | 0.3413 | 0.3036 |
| 2 | 0.0002 | Low | Low | Med | 0.3459 | 0.3500 | 0.3041 |
| 3 | 0.5729 | Low | Low | High | 0.4481 | 0.2942 | 0.2577 |
| 4 | 0.4112 | Low | Med | Low | 0.3277 | 0.3374 | 0.3349 |
| … | … | … | … | … | … | … | … |
| 27 | 0.5123 | High | High | High | 0.3384 | 0.3384 | 0.3384 |

| BRB-3 rule | Weight | F8 | F9 | Fully credible (F) | Partly credible (P) | Unbelievable (U) |
|---|---|---|---|---|---|---|
| 1 | 0.6883 | False | Low | 0.0159 | 0.0313 | 0.9528 |
| 2 | 0.3635 | False | Med | 0.1926 | 0.0578 | 0.7496 |
| 3 | 0.1702 | False | High | 0.3825 | 0.3519 | 0.2656 |
| 4 | 0.4795 | True | Low | 0.0430 | 0.0304 | 0.9266 |
| 5 | 0.4652 | True | Med | 0.4709 | 0.5190 | 0.0101 |
| 6 | 0.3646 | True | High | 0.9803 | 0.0136 | 0.0061 |

| BRB-4 rule | Weight | B1 | B2 | B3 | Fully credible (F) | Partly credible (P) | Unbelievable (U) |
|---|---|---|---|---|---|---|---|
| 1 | 0.3605 | F | F | F | 0.8555 | 0.1026 | 0.0419 |
| 2 | 0.7610 | F | F | P | 0.4526 | 0.3067 | 0.2407 |
| 3 | 0.4223 | F | F | U | 0.4272 | 0.3206 | 0.2522 |
| 4 | 0.3116 | F | P | F | 0.5541 | 0.2692 | 0.1767 |
| … | … | … | … | … | … | … | … |
| 26 | 0.0239 | U | U | F | 0.3323 | 0.3326 | 0.3351 |
| 27 | 0.0061 | U | U | U | 0.3319 | 0.3328 | 0.3352 |

Table 6 presented four features: F1 (Comment_emotion), F2 (Text_abnormality), F3 (Text_Exc_count), and F4 (Comment_abnormality). F1 took two sentiment values (positive and negative), and each of the others took three levels (low, medium, and high). Combining the states of the four features yielded 2 × 3 × 3 × 3 = 54 rules. These rules were learned and optimized through model training to obtain the weight of each rule and the probabilities of the three credibility levels (fully credible, partly credible, and unbelievable) when the rule was triggered (Supporting Table 1 in appendix).

Similarly, Table 7 showed that the BRB-2 sub-model used three image features, F5 (Similarity_ocr), F6 (Img_num), and F7 (Size), each with three levels (low, medium, and high). The sub-model therefore contained 3 × 3 × 3 = 27 rules (Supporting Table 2 in appendix).

Table 8 showed that the BRB-3 sub-model used two user features: F8 (Is_certification), with two values (true and false), and F9 (Influence), with three levels (low, medium, and high). Combining them gave 2 × 3 = 6 rules.

Finally, Table 9 showed the sub-model BRB-4, which took the evaluation results of the three sub-models (B1, B2, and B3) as inputs. Each input had three result types (fully credible, partly credible, and unbelievable), giving 27 rules (Supporting Table 3 in appendix).
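The rule counts of the four sub-models follow from the Cartesian product of their feature states. The sketch below reproduces those counts in Python and, for comparison, counts the rules that a single flat rule base over F1 to F9 would need. The dictionary layout and function names are illustrative assumptions, and the enumeration order is not claimed to match the rule numbering used in the tables.

```python
from itertools import product
from math import prod

LEVELS = ("Low", "Med", "High")
GRADES = ("F", "P", "U")

# Feature states per sub-model, as described for Tables 6 to 9.
SUB_MODELS = {
    "BRB-1": {"F1": ("Pos", "Neg"), "F2": LEVELS, "F3": LEVELS, "F4": LEVELS},
    "BRB-2": {"F5": LEVELS, "F6": LEVELS, "F7": LEVELS},
    "BRB-3": {"F8": ("False", "True"), "F9": LEVELS},
    "BRB-4": {"B1": GRADES, "B2": GRADES, "B3": GRADES},
}


def enumerate_rules(features):
    """List every combination of feature states as one rule antecedent."""
    names = list(features)
    return [dict(zip(names, combo)) for combo in product(*features.values())]


def count_rules(features):
    """Number of rules, without materializing them."""
    return prod(len(states) for states in features.values())


rule_counts = {name: len(enumerate_rules(f)) for name, f in SUB_MODELS.items()}
hierarchical_total = sum(rule_counts.values())

# A flat rule base would combine all nine raw features F1..F9 at once.
flat_features = {}
for name in ("BRB-1", "BRB-2", "BRB-3"):
    flat_features.update(SUB_MODELS[name])
flat_total = count_rules(flat_features)

print(rule_counts)
print(hierarchical_total, flat_total)
```

Under these assumptions the hierarchy needs 54 + 27 + 6 + 27 = 114 rules, whereas a flat rule base over the same nine features would need 54 × 27 × 6 = 8748, which illustrates why the layered structure limits the rule combination explosion.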

To validate our model, we selected one real and one fake piece of information from the test set (Table 10). Their feature values and the resulting credibility scores are shown in Table 11.

| Information | Publisher | Description | Original text |
|---|---|---|---|
| Real | @Tonight newspaper | The rumor monger was caught | “Shouguang public security captured two suspects spreading plague rumors in violation of the law at 11:05 on August 25…” |
| Fake | @Mnekane-wang | Rumor about missing persons | “Missing person notice. There are clues and a reward of 100,000 yuan to help spread…” |

| News | F1 | F2 | F3 | F4 | F5 | F6 | F7 | F8 | F9 | B |
|---|---|---|---|---|---|---|---|---|---|---|
| Real | Negative | 1.28 | 0 | 0.0534 | 0.90 | 4 | 276,683 | True | 3844 | 0.94 |
| Fake | Positive | 4.77 | 0 | 0.0348 | 0.16 | 1 | 25,600 | False | 9 | 0.03 |

When we input the feature parameters X = {Fi, i = 1, 2, 3, 4} of the fake information into the BRB-1 sub-model, rules 33, 34, 39, and 41 were activated with weights w33 = 0.0082, w34 = 0.0732, w39 = 0.0368, and w41 = 0.8818, obtained through Equation (7). The model then fused the activated rules using Equation (8) and calculated the result B1 = 0.2440 (U) using Equation (10). Notably, rule 41, which had the highest activation weight, suggested that the text features of the information exhibited negative sentiment in comments, a low frequency of exclamation marks (“!”), medium text anomalies, and a medium level of comment disagreement. This indicated that it was difficult to determine the authenticity of the information based on these text features alone.

When we input the feature parameters X = {Fi, i = 5, 6, 7} into the BRB-2 sub-model, rules 1, 2, 10, and 11 were activated with weights w1 = 0.0082, w2 = 0.00002, w10 = 0.0014, and w11 = 0.0005, finally giving B2 = 0.0628 (U). Here, rule 1, which had the highest weight, pointed to poor image-text semantic consistency, a low number of images, and low image quality, indicating potential false information in the image.

Inputting the feature parameters X = {Fi, i = 8, 9} into the sub-model BRB-3 activated rules 1 and 2 with weights w1 = 0.9848 and w2 = 0.0152, giving B3 = 0.0600 (U). Rule 1 had the highest weight, indicating that the publisher was unverified and had little influence, which suggested lower credibility of the published content. Feeding the assessment results of all modalities into the BRB-4 sub-model gave a final result of 0.0290, indicating extremely low credibility for this news (Table 11).
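To make the fusion step more concrete, the following Python sketch combines the two BRB-3 rules activated by the fake item. Equations (7), (8), and (10) are defined earlier in the paper and are not reproduced here, so the sketch rests on two assumptions: that rule fusion follows the analytical evidential reasoning (ER) combination commonly used in belief rule-based systems, and that the final score is an expected utility with placeholder utilities of 1, 0.5, and 0 for F, P, and U. The function names are hypothetical. Because of these assumptions, the score it produces need not equal the reported B3 = 0.0600.

```python
from math import prod


def er_fuse(activation_weights, belief_rows):
    """Analytical ER combination of activated rules (assumed form of the fusion step).

    activation_weights: one weight per activated rule.
    belief_rows: one list of belief degrees (F, P, U) per activated rule.
    Returns the fused belief degrees over the same grades.
    """
    n_grades = len(belief_rows[0])
    row_sums = [sum(row) for row in belief_rows]
    rules = list(zip(activation_weights, belief_rows, row_sums))
    # Per grade: product over rules of (w * beta_n + 1 - w * sum of beliefs).
    a = [prod(w * row[n] + 1 - w * s for w, row, s in rules) for n in range(n_grades)]
    # Product over rules of the mass left unassigned to any single grade.
    c = prod(1 - w * s for w, _, s in rules)
    d = prod(1 - w for w in activation_weights)
    mu = 1.0 / (sum(a) - (n_grades - 1) * c)
    return [mu * (a_n - c) / (1 - mu * d) for a_n in a]


def expected_utility(beliefs, utilities=(1.0, 0.5, 0.0)):
    """Collapse belief degrees into one score; the utilities are placeholders."""
    return sum(b * u for b, u in zip(beliefs, utilities))


# Activation weights of BRB-3 rules 1 and 2 for the fake item,
# with the belief degrees of those rules from the BRB-3 table.
weights = [0.9848, 0.0152]
beliefs = [
    [0.0159, 0.0313, 0.9528],  # rule 1: F8 = False, F9 = Low
    [0.1926, 0.0578, 0.7496],  # rule 2: F8 = False, F9 = Med
]

fused = er_fuse(weights, beliefs)
score = expected_utility(fused)
print([round(b, 4) for b in fused], round(score, 4))
```

Under these assumptions, the fused belief is dominated by the unbelievable grade, which agrees qualitatively with the (U) label reported for B3.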

When assessing the real information, the activated rules and weights obtained by BRB-1 through Equation (7) were w2 = 0.3432, w3 = 0.0010, w12 = 0.6542, and w13 = 0.0017 (Supporting Table 1 in appendix). Those obtained by BRB-2 were w15 = 0.0039, w16 = 0.0006, w17 = 0.0850, w18 = 0.0043, w24 = 0.5942, w25 = 0.0036, w26 = 0.3067, and w27 = 0.0018 (Supporting Table 2 in appendix). Those obtained by BRB-3 were w4 = 0.3805 and w5 = 0.6195 (Table 8). The activated rules of each sub-model were then fused using Equation (8). The results obtained by the sub-models BRB-1, BRB-2, and BRB-3 based on Equation (10) were 0.5724 (P), 0.8301 (F), and 0.7587 (F), respectively. The rules activated by each sub-model collectively indicated that the real information was characterized by positive sentiment in comments, a low degree of text anomaly, a low proportion of “!,” low comment disagreement, a large number of images, high image quality, and a verified and influential publisher. When these results were processed through the BRB-4 sub-model, a very high final credibility level of 0.9434 was obtained (Supporting Table 3 in appendix).

In the inference process, the model learnt the rules and weights activated by the features of the different modalities, so that information could be classified as fully credible, partly credible, or unbelievable. Through rule activation, HBRB explained hierarchically why certain information had low credibility, which provided detailed interpretability and increased the transparency of the assessment process. This may facilitate its integration into automated information assessment systems, allowing users to comprehend and trust the system’s judgments. Such a system could also benefit from timely human-machine feedback, enhancing overall performance.

1. The HBRB-MICA model constructed in this paper obtained the highest accuracy, precision, and F1 value among the compared models. Its recall (0.911) was slightly lower than that of the SVM model (0.920). Overall, these results demonstrated the effectiveness of the HBRB-MICA model in assessing information credibility.

2. Although the SVM and BPNN models obtained good accuracy, their inference processes were opaque, so their results were not interpretable. In contrast, the HBRB model provided a transparent inference engine that could reasonably explain its outputs and allowed the assessment results to be verified. This made the HBRB-MICA model more explanatory and reliable.

3. FND-SCTI, CARMN, and CAFE performed effectively on fake news detection (F1 = 0.757, 0.756, and 0.842, respectively), but their results were lower than those of HBRB-MICA. We attribute this to the distortion introduced when deep learning models acquire image features and fuse them with text features, which reduced their ability to combine the two modalities when determining the credibility of a message. In addition, all these models ignored the features of the user’s account. Our method addressed these problems by identifying the text semantic information of the image and calculating the ambiguity of the text. It assessed credibility by integrating multiple features, which effectively overcame the semantic feature distortion problem.

| Model | Accuracy | Precision | Recall | F1 |
|---|---|---|---|---|
| SVM | 0.903 | 0.808 | **0.920** | 0.861 |
| BPNN | 0.904 | 0.842 | 0.891 | 0.866 |
| Decision tree | 0.895 | 0.828 | 0.870 | 0.848 |
| FND-SCTI | 0.758 | 0.757 | 0.757 | 0.757 |
| CARMN | 0.741 | 0.762 | 0.750 | 0.756 |
| CAFE | 0.840 | 0.855 | 0.830 | 0.842 |
| HBRB-MICA | **0.917** | **0.885** | 0.911 | **0.898** |

Note: Bold values represent the best values.
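As a quick consistency check on the comparison table above, the F1 score can be recomputed from precision and recall as their harmonic mean. The Python sketch below does this for every model. The dictionary name `REPORTED` and the rounding tolerance are illustrative choices, not part of the original evaluation.

```python
# (precision, recall, reported F1) per model, copied from the comparison table.
REPORTED = {
    "SVM": (0.808, 0.920, 0.861),
    "BPNN": (0.842, 0.891, 0.866),
    "Decision tree": (0.828, 0.870, 0.848),
    "FND-SCTI": (0.757, 0.757, 0.757),
    "CARMN": (0.762, 0.750, 0.756),
    "CAFE": (0.855, 0.830, 0.842),
    "HBRB-MICA": (0.885, 0.911, 0.898),
}


def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# Absolute gap between the recomputed and the reported F1 of each model.
deviations = {
    model: abs(f1_score(p, r) - f1) for model, (p, r, f1) in REPORTED.items()
}

for model, gap in deviations.items():
    print(f"{model}: recomputed F1 differs from reported F1 by {gap:.4f}")
```

Small gaps are expected because the table reports precision and recall rounded to three decimals.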

5. Discussion and Conclusions

In this study, we presented a model for assessing the credibility of social networking content by leveraging multimodal features. Through a thorough review of the related literature, we identified and selected key feature indicators that influence the credibility of information from three distinct modalities: text, images, and user profiles. We employed the HBRB inference method for credibility assessment. This method effectively circumvented the “rule combination explosion” because the number of low-relevance features was reduced, which diminished the impact of irrelevant features on the results. To our knowledge, this is the first time that HBRB has been used for assessing multimodal social media credibility.

The hierarchical structure of the HBRB-MICA approach exposes the internal assessment process, providing a more transparent and controllable method. In online information governance, the method can also be integrated with expert knowledge or experience, since it can be refined by adjusting the knowledge rules. This adds significant practical value and enhances the interpretability of its results.

While this study presents a novel approach to assessing multimodal credibility in social media using the HBRB-MICA model, some limitations still need to be addressed. The current model relies heavily on the quality and comprehensiveness of the initial feature selection, which may not capture all nuances of credibility across different social media platforms or cultures. Future work should expand the feature set (including video images, speech, author tags, image similarity, and image modification features, among others) to enrich the validity and interpretive power of the model. Multimodal feature fusion using a multilevel feature fusion network (MFFN) should also be explored to optimize the hierarchical structure of HBRB [68]. Finally, integrating this method with LLM technology in real-time social media monitoring systems could be explored to improve the interpretation of the model.

Conflicts of Interest

The authors declare no conflicts of interest.

Funding

This paper was supported by the National Natural Science Foundation of China (project numbers 72274096, 71774084, 72301136, and 72174087) and the Foreign Cultural and Educational Expert Program of the Ministry of Science and Technology of China (G2022182009L).

Supporting Information

The supporting information file contains three tables with the rules and weights of three sub-models of the HBRB model after training. Supporting Table 1 lists the rules and weights of sub-model BRB-1 and supplements Table 6. Supporting Table 2 lists the rules and weights of sub-model BRB-2 and supplements Table 7. Supporting Table 3 lists the rules and weights of sub-model BRB-4 and supplements Table 9.

Open Research

Data Availability Statement

The data used to support the findings of this study are available on request from the corresponding author.