Fine-Grained Dance Style Classification Using an Optimized Hybrid Convolutional Neural Network Architecture for Video Processing Over Multimedia Networks

Abstract

Dance style recognition through video analysis during university training can significantly benefit both instructors and novice dancers. Employing video analysis in training offers substantial advantages, including the potential to train future dancers using innovative technologies. Over time, intricate dance gestures can be honed, reducing the burden on instructors who would, otherwise, need to provide repetitive demonstrations. Recognizing dancers’ movements, evaluating and adjusting their gestures, and extracting cognitive functions for efficient evaluation and classification are pivotal aspects of our model. Deep learning currently stands as one of the most effective approaches for achieving these objectives, particularly with short video clips. However, limited research has focused on automated analysis of dance videos for training purposes and assisting instructors. In addition, assessing the quality and accuracy of performance video recordings presents a complex challenge, especially when judges cannot fully focus on the on-stage performance. This paper proposes an alternative to manual evaluation through a video-based approach for dance assessment. By utilizing short video clips, we conduct dance analysis employing techniques such as fine-grained dance style classification in video frames, convolutional neural networks (CNNs) with channel attention mechanisms (CAMs), and autoencoders (AEs). These methods enable accurate evaluation and data gathering, leading to precise conclusions. Furthermore, utilizing cloud space for real-time processing of video frames is essential for timely analysis of dance styles, enhancing the efficiency of information processing. Experimental results demonstrate the effectiveness of our evaluation method in terms of accuracy and F1-score calculation, with accuracy exceeding 97.24% and the F1-score reaching 97.30%. These findings corroborate the efficacy and precision of our approach in dance evaluation analysis.

1. Introduction

Dance, a captivating art form, finds expression through movement and the performer’s actions [1]. While professional dancers can effortlessly distinguish between various dance styles due to their extensive training, amateurs often struggle with such a diverse range [2]. Consider ballet: a seasoned ballerina can instantly assess a performance’s fidelity to the dance type, a feat that might elude an amateur. In recent years, video technology research has gained significant traction [3]. Dance motion recognition, one of many video technologies, holds immense potential for intelligent applications and is widely employed in various aspects of life and education [4, 5]. Training and coaching with an intelligent dance assistant require considerable time and effort from both the learner and the instructor. Repeatedly performing specific gestures can be challenging and can negatively impact the psychology of both students and teachers [6]. Dance postures can be depicted using features extracted from their corresponding images. Consequently, existing extraction techniques focus on this approach, emphasizing the spatial domain of the video, namely, extracting pixel information from video frames while disregarding the temporal changes in motion states. The transition from one motion to the next is crucial, as there are unrelated movements that make understanding lessons difficult for learners.

- Providing dancers with immediate feedback on their technique during practice.
- Offering personalized coaching suggestions based on an individual’s strengths and weaknesses.
- Designing customized training programs that target specific dance skills.
- Automating the assessment of dance moves, streamlining processes for competitions or online learning platforms.

While recent advancements in deep learning techniques are widespread, their contributions to dance education remain limited. Therefore, applying deep learning and video analysis to computer-based dance classification is necessary. This is particularly relevant in dance training centers, where university dance students rely on recorded videos for self-assessment due to the lack of a realistic learning environment. University dance students also require more experimentation with online video in teacher training and other professional programs that use web video applications. Our system, designed to support self-assessment, requires students to be familiar with the assessment standards and their corresponding criteria. Once trained, the system improves the quality and evaluation of both regular and complex dance movements. It also helps students analyze instructor feedback more critically and take a more proactive approach. Because subjective evaluations significantly impact dance participants’ outcomes, establishing safety and trust is crucial during the assessment process, especially when dealing with sensitive and complex life experiences.

We argue that instead of relying on singular images, dance classification should leverage multiple frames within an action sequence. This approach has already shown promise in evaluating the quality of other human actions [10, 11]. In the realm of dance, the ability to accurately identify and classify different styles is crucial for various applications, ranging from educational assessments to personalized recommendations for dance enthusiasts. Traditionally, dance style recognition has relied on centralized cloud-based systems [12, 13], which often face limitations in terms of latency, bandwidth constraints, and privacy concerns. However, the advent of cloud space has opened up new possibilities for dance style recognition, offering a more efficient, secure, and responsive approach.

Our method departs from these existing works. We propose utilizing a deep learning model with a dance video dataset. This approach allows us to analyze multiple frames within a dance video, not only classifying the dance type but also measuring the video’s accuracy—how closely it resembles other dances of its category. Moreover, this paper proposes a novel approach, employing deep learning models to classify dances based on two factors: (1) a group of dancers’ general understanding of a dance type and (2) how closely the dance aligns with the deep learning model’s interpretation of that class.

- Automated evaluation: the proposed method replaces manual evaluation with an automated system, delivering immediate results and eliminating wait times.
- Active online learning: the model facilitates active online learning by leveraging trained videos.
- High effectiveness: experiments confirm the effectiveness of three practical models. By utilizing short video clips, we conduct dance analysis employing techniques such as fine-grained dance style classification in video frames, convolutional neural networks (CNNs) with channel attention mechanisms (CAMs), and autoencoders (AEs).
- Cloud computing: cloud computing presents a transformative approach to dance style recognition, offering significant advantages in terms of latency, privacy, and personalized experiences. As cloud platform technology continues to evolve, we can expect to see even more innovative applications emerge in the field of dance, further enhancing the appreciation and enjoyment of this expressive art form.

This article is divided into five sections. Section 2 dives deep into the latest and most sophisticated techniques used for classifying dance styles in multiple videos and datasets. Section 3 provides a detailed explanation of the method we propose. Section 4 outlines the specific datasets and the procedures used in our experiments. Finally, Section 5 concludes the article by discussing future directions and functionalities.

2. Related Work

Current dance assistant systems often struggle to capture the essence of dance, primarily due to their focus on static pose analysis [14–16]. Such approaches fail to account for the intricate transitions between poses, which are fundamental to effective dance execution. Moreover, they overlook the nuances of motion dynamics, such as acceleration, deceleration, and changes in direction, which are crucial for understanding the timing and rhythm of dance movements. To address these limitations, some methods propose machine learning strategies and video processing models for the classification of dance styles. Recent approaches therefore utilize multiple frames within an action sequence rather than relying on single images. This aligns with the inherent fluidity of dance and allows for a more comprehensive understanding of the movement patterns involved.

Collaborative systems aim to improve decision-making accuracy in contexts where data reliability is subject to variation [17]. While our study focuses on dance style classification, future work could benefit from incorporating trust-weighted data selection during the training process to mitigate the impact of noisy or biased training samples.

Deep learning has emerged as a powerful tool for analyzing human movements, and its potential can be extended to dance classification [18–24]. By leveraging temporal action gradients, deep learning models can capture the temporal dynamics of dance movements, going beyond static pose analysis. This opens up new avenues for developing dance assistant systems that can provide more accurate and personalized feedback to learners.

The effectiveness of the sequence-based approach has been demonstrated in assessing the quality of other human actions [25]. Notably, Wang et al. [26] investigated using deep learning models along with dance videos to predict virality. Their work introduced a novel network that captures temporal dynamics from video appearance to predict virality. Their findings indicate that facial expressions, background scenery, and the overall visual display of a dance video significantly impact virality prediction [26].

One approach prioritizes security for video transactions, focusing on blockchain technology and trusted computing [27]. However, this method does not delve into processing or analyzing the dance itself. Another approach utilizes deep learning for user behavior analysis and dance classification [18, 28]. However, these methods may face limitations due to changing user behavior and dependence on sensors [18]. In addition, a study on social media dance trends focuses solely on user engagement prediction, neglecting in-depth dance analysis [29].

The study [30] discusses the need for modernizing dance education in universities. The authors propose research into new teaching methods incorporating virtual reality technology [31, 32]. While these studies explore virtual dance environments, they do not address video analysis for dance assessment.

Dance motion analysis was conducted using recurrent neural networks (RNNs) in a study [33]. However, these techniques may have limitations in generating longer dance sequences. An alternative approach uses a multimodal convolutional autoencoder for dance motion generation. However, this method requires specific input data formats, potentially limiting its flexibility. Overall, this study highlights the need for a balance between secure video transactions and advanced processing techniques for in-depth dance analysis. It provides a critical review of existing methods and suggests opportunities for further research in dance video analysis techniques.

Deep learning techniques using CNNs are explored alongside other approaches like optical flow and support vector machines (SVMs) for classification [34–36]. Existing works demonstrate the effectiveness of deep learning with handcrafted features [37, 38]. Feature extraction and multiclass classification using AdaBoost are explored in [39, 40]. Another study focuses on recognizing dance forms and hand gestures (mudras) using image data and SVMs with Histogram of Oriented Gradients (HOG) features [41].

Our proposed approach represents a significant departure from conventional methods in dance classification research. By harnessing the power of deep learning models and leveraging a comprehensive dance video dataset, our method offers a paradigm shift in the analysis of dance movements. Unlike previous techniques that predominantly focus on static pose analysis and fail to capture the temporal dynamics and subtle nuances of motion transitions, our approach considers multiple frames within each dance sequence. This holistic perspective enables us not only to accurately classify the dance style but also to assess the fidelity of the performance by measuring how closely it aligns with the characteristic features of its category. Moreover, our method introduces a novel approach by incorporating the collective understanding of dancers and aligning it with the interpretation of deep learning models, thereby enhancing the overall accuracy and reliability of the classification process. Through rigorous experimentation, we have demonstrated the efficacy of our approach, achieving significantly higher evaluation rates compared to existing methods. Furthermore, our method embraces emerging technologies such as cloud computing, paving the way for more efficient, personalized, and immersive experiences in dance style recognition.

3. Proposed Method

We offer a comprehensive solution aimed at identifying individuals’ styles during dance performances to determine dance action classes in videos. Our system, utilizing deep learning capabilities, has shown remarkable efficiency in tackling the complexities of video classification across various domains. The proposed method consists of four main elements: video preprocessing, deep feature extraction, video representation and classification, and cloud computing.

To ensure data integrity, precise preprocessing procedures are performed on online datasets to improve model performance. In addition, frames extracted from each video clip are standardized, ensuring uniform dimensions before entering convolution blocks. Based on this, our method’s performance ensures that the proposed approach can be employed in real-time to classify various dance styles, aiding students under the guidance of instructors who aspire to perform various dance actions professionally.

3.1. Video Frame Preprocessing

3.2. Fine-Grained Features

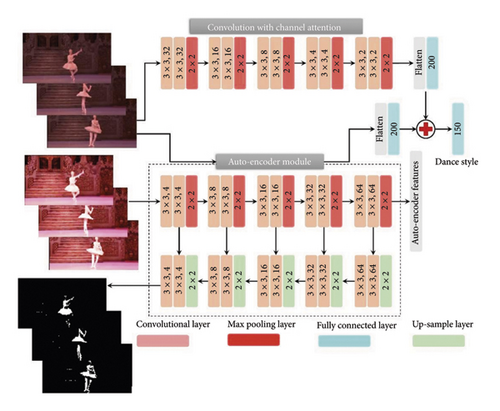

Figure 1 depicts a CNN architecture used to predict the permeability of porous materials. This architecture consists of several types of layers, including convolutional layers (light red), fully connected layers (light blue), upsampling layers (light green), and max-pooling layers (red).

Another way to describe this CNN is that it has two convolutional layers followed by a max-pooling layer. This max-pooling layer helps reduce the number of parameters in the network and allows it to capture a wider range of information by shrinking the size of the processed data. In essence, the CNN structure defines the overall organization of the network, with the AE being its core element. Input frames of dancers are fed into the model, starting with a size of 128 × 128 pixels and just one channel. The first layer in the CNN architecture processes these frames, reducing their detail to lower dimensions to decrease computational complexity and introducing two channels. The number of channels in the output, called a feature map, is determined by the number of kernels used in the convolution operation.

As the CNN continues processing, the frames progressively shrink while the number of channels increases. This creates a series of feature maps, each highlighting different aspects of the image: from 64 × 64 with 2 channels, through 8 × 8 with 16 channels, down to a final size of 4 × 4 with 32 channels. The most prominent feature at each location in the image is represented by a value in the corresponding feature map. To connect these feature maps for further analysis, they are flattened into 1D vectors. The CNN part generates a 512-element vector (4 × 4 × 32), while the AE part creates a 1024-element vector from its own 4 × 4 × 64 feature map. Figure 2 provides a detailed explanation of how the AE and CNN work together (AE–CNN architecture). It also briefly touches upon interconnected networks and the functionalities of nodes within them, but a detailed explanation is missing.
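The shape bookkeeping in this paragraph can be checked with a few lines of Python. The sketch below assumes that every stage halves the spatial size and that the channel count doubles after the first stage; the intermediate shapes (32 × 32 × 4 and 16 × 16 × 8) follow from that assumption and are not stated in the text. The function names `cnn_feature_shapes` and `flat_length` are hypothetical.

```python
def cnn_feature_shapes(size=128, channels=1, first_channels=2, stages=5):
    """Return (height, width, channels) after each stage.

    Assumption: each stage halves the spatial size; the first stage
    outputs `first_channels` channels and later stages double them.
    """
    shapes = []
    for stage in range(stages):
        size //= 2
        channels = first_channels if stage == 0 else channels * 2
        shapes.append((size, size, channels))
    return shapes


def flat_length(shape):
    """Number of elements after flattening a feature map to 1D."""
    height, width, depth = shape
    return height * width * depth


shapes = cnn_feature_shapes()
cnn_vector = flat_length(shapes[-1])   # 4 * 4 * 32
ae_vector = flat_length((4, 4, 64))    # AE bottleneck size stated in the text
print(shapes)
print(cnn_vector, ae_vector)
```

Under these assumptions the last CNN feature map is 4 × 4 × 32, whose flattened length matches the 512-element vector reported above, and the 4 × 4 × 64 AE feature map flattens to 1024 elements.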

In this approach, a fully connected network is employed, a common technique in machine learning for leveraging high-level data for tasks like estimation. This network, denoted by s for the current layer, r for the number of neurons per layer, and w and b for the number of fully connected layers, progressively reduces the data dimensionality (e.g., from 400 to 150 neurons, as shown in Figure 2) to classify actions based on input frames. However, using low-resolution input images presents challenges. As in feature engineering tasks, features reconstructed from poor-quality images can be unsuitable. The limited information in low-resolution frames can lead to training confusion and reduced accuracy. While CNNs excel at extracting low-level features for activity detection, they struggle to predict high-resolution properties from low-resolution data. To address this limitation, a hybrid approach is proposed. This method combines low-resolution frames with high-resolution features to enhance the trained network’s performance. While the CNN extracts features from the low-resolution frames to form fine-grained features, a separate AE module is employed to create high-resolution representations.
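A minimal NumPy sketch of a fully connected head of this kind is given below. Several details are assumptions rather than facts from the text: the input is taken to be the concatenation of the 512-element CNN vector and the 1024-element AE vector, the hidden widths 400 and 150 are the example values mentioned above, and the ReLU activations, the 12-way softmax output, and the random weights are purely illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)


def dense(x, w, b, activation=None):
    """One fully connected layer: activation(x @ w + b)."""
    z = x @ w + b
    if activation == "relu":
        return np.maximum(z, 0.0)
    if activation == "softmax":
        z = z - z.max(axis=-1, keepdims=True)  # numerical stability
        e = np.exp(z)
        return e / e.sum(axis=-1, keepdims=True)
    return z


# Assumed layer widths: 1536 -> 400 -> 150 -> 12 (12 dance styles).
widths = [512 + 1024, 400, 150, 12]
params = [
    (rng.normal(0.0, 0.05, size=(n_in, n_out)), np.zeros(n_out))
    for n_in, n_out in zip(widths[:-1], widths[1:])
]


def classify(features):
    """Forward pass through the fully connected head."""
    x = features
    for i, (w, b) in enumerate(params):
        is_last = i == len(params) - 1
        x = dense(x, w, b, "softmax" if is_last else "relu")
    return x


batch = rng.normal(size=(4, widths[0]))  # 4 hypothetical fused feature vectors
probs = classify(batch)
print(probs.shape, probs.sum(axis=1))
```

Each row of `probs` is a probability distribution over the 12 classes; with untrained random weights the values carry no meaning beyond illustrating the dimensionality reduction.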

3.3. AE Module

This system utilizes an encoder–decoder architecture to process human activity data. The encoder takes low-resolution data and transforms it into a series of feature maps, starting with a size of 128 × 128 × 1 and progressively reducing to 4 × 4 × 64 through five layers of operations. The decoder, on the other hand, aims to reconstruct high-resolution representations of human actions based on these compressed features.
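The reduction from 128 × 128 to 4 × 4 in five layers is consistent with each encoder layer halving the spatial size. The worked check below uses the standard convolution output-size formula, floor((n + 2p - k) / s) + 1, and assumes 3 × 3 kernels with stride 2 and padding 1; these layer settings are illustrative and are not given in the text.

```python
def conv_out(n, kernel=3, stride=2, padding=1):
    """Spatial size after a convolution: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * padding - kernel) // stride + 1


size = 128
sizes = [size]
for _ in range(5):  # five encoder layers, as stated in the text
    size = conv_out(size)
    sizes.append(size)

print(sizes)  # [128, 64, 32, 16, 8, 4]
```

Other settings (for example, stride-1 convolutions followed by 2 × 2 max-pooling) would give the same sequence of sizes.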

The system gradually builds its complexity during training. It starts with a basic architecture and progressively adds more layers to both the encoder and decoder sections. Each layer is initialized individually, ensuring a controlled learning process. This process continues until the encoder reaches its maximum of five layers. The decoder likely follows a similar approach, although the text does not explicitly mention the final number of decoder layers.

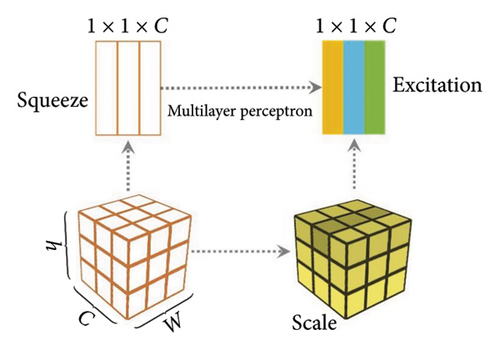

3.4. Channel Attention

CAMs are a special type of module used in CNNs to focus on specific features within an image. They achieve this by analyzing the relationships between different channels in a feature map. Each channel in a feature map detects a particular feature, and CAMs help determine how important each detected feature is for understanding the image content. To calculate CAMs efficiently, the system reduces the size of the spatial information in the feature map. This is done using two components: a squeeze block and an excitation block. These blocks operate within the feature channel domain. In simpler terms, CNNs extract features from images to make decisions. CAMs refine this process by adjusting the importance of different features based on their relevance to the image content.

During the “excitation” phase, the system uses special gate mechanisms to account for the relationships between different channels in the feature map. These mechanisms involve two layers that process information nonlinearly and establish full connections between elements.
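The squeeze and excitation steps described above can be sketched in NumPy as follows. This is a generic squeeze-and-excitation style block under stated assumptions, not the authors' implementation: global average pooling is used for the squeeze, the two fully connected layers use ReLU and sigmoid activations, and the reduction ratio `r` and the random weights are hypothetical.

```python
import numpy as np


def channel_attention(feature_map, w1, b1, w2, b2):
    """Reweight the channels of an (H, W, C) feature map.

    Squeeze: global average pooling collapses H x W to one value per channel.
    Excitation: two fully connected layers (ReLU, then sigmoid) produce a
    gate in (0, 1) for every channel.
    """
    squeezed = feature_map.mean(axis=(0, 1))            # shape (C,)
    hidden = np.maximum(squeezed @ w1 + b1, 0.0)        # shape (C // r,)
    gates = 1.0 / (1.0 + np.exp(-(hidden @ w2 + b2)))   # shape (C,)
    return feature_map * gates, gates


rng = np.random.default_rng(0)
H, W, C, r = 4, 4, 32, 8          # r is a hypothetical reduction ratio
x = rng.normal(size=(H, W, C))
w1, b1 = rng.normal(0, 0.1, (C, C // r)), np.zeros(C // r)
w2, b2 = rng.normal(0, 0.1, (C // r, C)), np.zeros(C)

y, gates = channel_attention(x, w1, b1, w2, b2)
print(y.shape, gates.min(), gates.max())
```

The output has the same shape as the input; only the relative weight of each channel changes, which is how the module adjusts the importance of individual features.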

3.5. Cloud Computing

1. Faster processing: cloud computing reduces processing delays, allowing for quicker identification of dance styles in video clips. This translates to faster categorization and organization of dance-related content within digital media platforms.
2. Improved accuracy: by performing initial processing tasks like feature extraction closer to the source, the system achieves higher accuracy in recognizing dance styles. This leads to more reliable and accurate labeling of dance videos, facilitating efficient search and retrieval within digital media libraries.
3. Efficient workload distribution: the workload is intelligently divided between local resources and the cloud. This frees up valuable cloud resources for other tasks while maximizing the utilization of local hardware, leading to a more efficient overall system for managing digital media content.

The processing pipeline consists of three stages:

1. Data acquisition: video data are captured by cameras and fed into the system.
2. Local processing: key features relevant to dance style identification are extracted from video frames close to the data source.
3. Cloud processing: the extracted data are sent to the cloud, where more sophisticated algorithms powered by deep learning models are used for final classification of dance styles.

4. Results and Analysis

This section first examines how the proposed model performs compared with previous methods. It then reviews the obtained results to provide a more detailed interpretation and analysis of the model’s performance and effects.

4.1. Datasets

The dataset combines videos from two sources: TikTok, a widely used social media platform [43], and contributions from research participants who interpreted assigned dance styles. Overall, the dataset comprises 240 videos categorized into 12 distinct dance styles. Each video is assigned to exactly one class, so the task is single-label rather than multilabel classification. To curate the dataset, we recruited 20 participants and asked each to perform all 12 dance styles. Participants received reference videos for each style and were instructed to create their own rendition based on these references. The resulting videos vary in quality, including differences in color grading, backgrounds, and image resolution. In addition, certain videos incorporate TikTok filters, introducing visual artifacts that may impact scene fidelity. Extracting frames from these videos yielded 263,471 images in total. Each image carries the dance style label of the video it came from.

We inspected the extracted frames for quality and removed blurry frames and frames without any dancing (such as blank screens). Because the videos differ in length and some frames were discarded, the number of frames per dance style varies, as shown in Table 1. The result is a collection of frames showing different moments from the dance videos, each labeled with its dance class. For example, the “Say So” class contains 24,890 frames of dance moves from its 20 videos (Table 1). Example frames are shown in Figure 4.

| Dance style | Abbreviation | Dataset | No. of frames |
|---|---|---|---|
| Tokyo | Tok | TikTok-other social media | 22,365 |
| The Dance Song | TDS | TikTok | 19,856 |
| Tak Mau Mau | TMM | TikTok-other social media | 23,669 |
| Supalonely | SUP | TikTok | 18,987 |
| Slide To the Left | STTL | TikTok | 21,060 |
| Lottery | LOT | TikTok-other social media | 24,598 |
| Say So | SaS | TikTok-other social media | 24,890 |
| Laxed | LAX | TikTok-other social media | 23,591 |
| Diam Diam Menyukaiku | DDM | TikTok | 19,458 |
| Blinding Lights | BL | TikTok | 18,660 |
| Big Up’s | BU | TikTok-other social media | 22,625 |
| All TikTok Mashup | ATTM | TikTok-other social media | 23,712 |

Each dance video clip used in this study had an average duration of approximately 30–40 s, calculated based on the total number of frames extracted per dance style and an assumed frame rate of 30 frames per second. This relatively short length reflects a key characteristic of the TikTok platform, which inherently promotes short-form videos to encourage quick consumption and higher engagement. As a result, the dataset predominantly comprises short clips, aligning with the nature of the source platform. This focus on short clips was also advantageous for frame-level feature extraction and reducing computational complexity.
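The duration estimate can be reproduced from the per-style frame counts in Table 1. The short calculation below assumes 20 videos per style (240 videos across 12 styles) and the 30 frames per second stated above; the variable names are illustrative.

```python
FPS = 30                # assumed frame rate, as stated in the text
VIDEOS_PER_STYLE = 20   # 240 videos / 12 styles

# Frame counts per dance style, copied from Table 1.
frames_per_style = {
    "Tok": 22365, "TDS": 19856, "TMM": 23669, "SUP": 18987,
    "STTL": 21060, "LOT": 24598, "SaS": 24890, "LAX": 23591,
    "DDM": 19458, "BL": 18660, "BU": 22625, "ATTM": 23712,
}

# Average clip duration in seconds for each style.
durations = {
    style: frames / (VIDEOS_PER_STYLE * FPS)
    for style, frames in frames_per_style.items()
}

total_frames = sum(frames_per_style.values())
shortest = min(durations.values())
longest = max(durations.values())
print(total_frames)
print(round(shortest, 1), round(longest, 1))
```

The per-style averages fall between roughly 31 s and 41.5 s, in line with the approximate 30–40 s range quoted above, and the frame counts sum to the 263,471 images reported for the dataset.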

4.2. Experimental Setup

- Training set: comprising 80% of the data, used for model training.
- Test set: consisting of the remaining 20%, employed to assess the trained model’s performance.

Furthermore, the training set underwent a secondary division into a new training set and validation data, utilizing the 5-fold cross-validation method to ensure robust model evaluation.
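The split described above can be sketched with plain NumPy index arrays. The text does not state whether the split is made per video or per frame; the example below splits the 240 videos, which is an assumption, and the function names are hypothetical.

```python
import numpy as np


def train_test_split_indices(n_samples, test_fraction=0.2, seed=0):
    """Shuffle indices and hold out `test_fraction` of them for testing."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    n_test = int(round(n_samples * test_fraction))
    return order[n_test:], order[:n_test]


def k_fold_indices(indices, k=5):
    """Yield (train, validation) index arrays for k-fold cross-validation."""
    folds = np.array_split(indices, k)
    for i in range(k):
        validation = folds[i]
        train = np.concatenate([f for j, f in enumerate(folds) if j != i])
        yield train, validation


train_idx, test_idx = train_test_split_indices(240)  # 240 videos (assumed unit)
folds = list(k_fold_indices(train_idx, k=5))
print(len(train_idx), len(test_idx), [len(v) for _, v in folds])
```

Each of the five folds serves once as validation data while the remaining four are used for training; the held-out 20% is never touched during cross-validation.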

By strictly adhering to this rigorous evaluation methodology and leveraging modern computational resources, we aim to elucidate the feasibility and effectiveness of our proposed approach in dance recognition tasks. Quantitative analysis was conducted using MATLAB, with the default model employing a learning rate of 0.001. Subsequently, a cutting-edge model incorporating a CNN and an autoencoder was developed, trained over 500 to 1000 learning cycles. Stochastic gradient descent (SGD) was utilized to further refine the optimized CNN structure and autoencoder.

Training the enhanced CNN model and autoencoder on the specified hardware configuration took approximately 9–15 h to complete. All models were fine-tuned based on transfer learning principles and convolutional networks. The training and validation processes encompassed error calculations, training parameter estimation, convergence assessment, and accuracy calculation. Moreover, the selection of key hyperparameters, including learning rate, batch size, and number of epochs, was guided by a combination of prior knowledge from related works in action recognition and video-based classification and an iterative trial-and-error process conducted on a held-out validation set. Initial values for these parameters were chosen based on typical configurations reported in previous studies using CNNs, AEs, and attention mechanisms for video analysis. Through successive experimental runs, these initial values were gradually refined to achieve a balance between convergence speed, stability, and classification performance. For architectural parameters, such as the number of convolutional filters, the size of the AE bottleneck layer, and attention weight normalization settings, a similar empirical tuning process was applied, with adjustments made based on validation performance trends. Although the final hyperparameter configuration reflects optimal settings for the current dataset and task, further optimization may be required when applying the proposed method to new datasets with different characteristics.

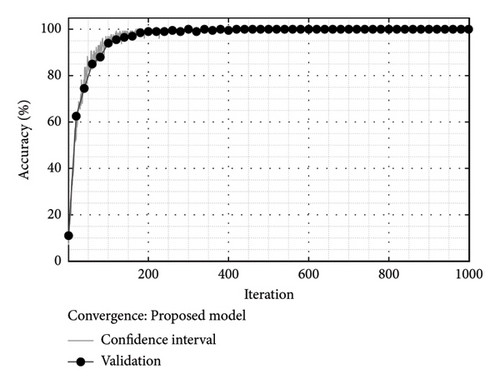

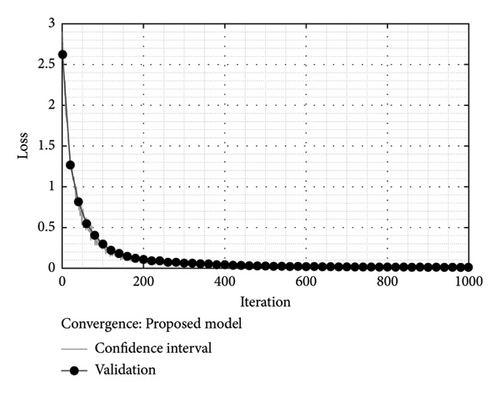

Efforts to minimize errors during the validation and training phases facilitated the identification of optimal convergence for each convolutional network structure. Automatic termination of the training process upon insufficient progress in error minimization and accuracy verification ensured efficient utilization of computational resources.
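Automatic termination of this kind is commonly implemented as early stopping with a patience counter. The sketch below is one such rule; the class name, the `patience` and `min_delta` parameters, and the sample error values are hypothetical, since the text does not specify the stopping criterion that was used.

```python
class EarlyStopping:
    """Stop training when the validation error stops improving."""

    def __init__(self, patience=10, min_delta=1e-4):
        self.patience = patience      # epochs to wait without improvement
        self.min_delta = min_delta    # smallest change counted as progress
        self.best = float("inf")
        self.stale_epochs = 0

    def should_stop(self, validation_error):
        """Record one epoch's error and report whether to terminate."""
        if validation_error < self.best - self.min_delta:
            self.best = validation_error
            self.stale_epochs = 0
        else:
            self.stale_epochs += 1
        return self.stale_epochs >= self.patience


# Hypothetical validation errors: improvement, then a plateau.
errors = [0.9, 0.6, 0.4, 0.35, 0.35, 0.36, 0.35, 0.35]
stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, err in enumerate(errors):
    if stopper.should_stop(err):
        stopped_at = epoch
        break
print(stopped_at, stopper.best)
```

With a patience of 3, training halts after three consecutive epochs without a meaningful decrease in validation error, which avoids spending further compute on a converged model.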

4.3. Evaluations

In the realm of automated dance style recognition, accurately classifying diverse dance forms is a captivating challenge. To tackle this, we propose a novel deep learning model and evaluate its effectiveness in categorizing various dance styles. Our approach hinges on meticulously splitting video collections into distinct sets: training, validation, and testing. This meticulous partitioning ensures the model learns effectively from the training data, generalizes well on unseen data (validation set), and delivers reliable performance during real-world application (testing set). To optimize the model’s performance, we leverage a rigorous training process. During this phase, the model ingests the training data and refines its internal parameters to establish robust dance style recognition capabilities. Evaluating a model’s efficacy goes beyond just accuracy. To comprehensively assess our model’s strengths and weaknesses, we employ a battery of metrics: F1-score, recall, precision, and accuracy. These metrics provide a nuanced understanding of the model’s ability to identify various dance styles accurately and consistently.
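For a multiclass problem, these metrics can all be derived from the confusion matrix. The sketch below computes macro-averaged values; macro averaging and the toy 3-class matrix are assumptions for illustration and are not taken from the paper. Recall is also called sensitivity, and specificity is included because it appears in the reported results.

```python
import numpy as np


def macro_metrics(confusion):
    """Macro-averaged metrics from a (classes x classes) confusion matrix.

    Rows are true classes, columns are predicted classes. Every class is
    assumed to be both present and predicted at least once.
    """
    confusion = np.asarray(confusion, dtype=float)
    total = confusion.sum()
    tp = np.diag(confusion)
    fp = confusion.sum(axis=0) - tp
    fn = confusion.sum(axis=1) - tp
    tn = total - tp - fp - fn

    precision = tp / (tp + fp)
    recall = tp / (tp + fn)            # also called sensitivity
    specificity = tn / (tn + fp)
    f1 = 2 * precision * recall / (precision + recall)

    return {
        "accuracy": tp.sum() / total,
        "precision": precision.mean(),
        "recall": recall.mean(),
        "specificity": specificity.mean(),
        "f1": f1.mean(),
    }


# Toy 3-class example (not data from the paper).
toy = [[8, 1, 1],
       [0, 9, 1],
       [1, 0, 9]]
scores = macro_metrics(toy)
print({name: round(value, 4) for name, value in scores.items()})
```

Reporting all of these together shows whether a high accuracy is spread evenly across classes or driven by a few well-recognized styles.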

Following the training phase, we evaluate the model’s performance on the validation set by comparing its predictions with the actual dance style labels. Analyzing these comparisons lets us identify potential shortcomings and refine the model accordingly. Subsequently, the model is applied to the testing set, repeating the evaluation process conducted on the validation set. This final assessment measures the model’s real-world applicability in dance style classification tasks. Our proposed model, a CNN + AE + Attention architecture, is a compelling alternative to existing deep learning methods in the field. Notably, it surpasses models employing solely a ResNet architecture, CNN models, and even combinations like CNN + AE.

To equip the model to recognize a wide spectrum of dance styles, we constructed a dataset of videos featuring various dance styles performed by different dancers. This diversity within the training data strengthens the model’s generalizability and adaptability. Our model achieved an accuracy of 97.14% on a dataset containing 12 distinct dance style categories, along with high precision across all evaluated aspects, demonstrating its ability to identify dance styles correctly and consistently. To support the reliability and generalizability of the results, we employed 5-fold cross-validation, which reduces the dependence of the estimate on any single training/validation partition. While utilizing video frames offers distinct advantages, certain frames may be difficult to classify accurately. Table 2 reports the classification performance on video frames for three models: ResNet, CNN + AE, and our proposed CNN + AE + Attention model.

| No. of repetitions | Strategy | Precision | Sensitivity | Specificity | Accuracy | F1 |
|---|---|---|---|---|---|---|
| Experiment 1 | ResNet | 93.29 | 93.08 | 96.51 | 93.44 | 93.36 |
| | CNN + AE | 94.12 | 94.01 | 97.58 | 94.84 | 94.18 |
| | CNN + AE + Attention | 96.55 | 96.29 | 99.66 | 96.38 | 96.44 |
| Experiment 2 | ResNet | 93.78 | 93.64 | 96.92 | 93.86 | 93.48 |
| | CNN + AE | 94.37 | 94.15 | 97.79 | 94.30 | 94.13 |
| | CNN + AE + Attention | 97.61 | 97.41 | 99.76 | 97.48 | 97.53 |
| Experiment 3 | ResNet | 94.72 | 94.20 | 97.88 | 94.51 | 94.63 |
| | CNN + AE | 95.60 | 95.33 | 98.69 | 95.72 | 95.48 |
| | CNN + AE + Attention | 97.55 | 97.31 | 99.75 | 97.37 | 97.44 |

- Note: All metrics are 5-fold average values. In this table, in three experiments, the average accuracy was estimated to be 97.14% from the repetition of the accuracies obtained from the validation data.

The innovative integration of Softmax and sigmoid activation functions within the CNN + AE + Attention model stands out as a key contributor to its superior performance. This unique combination demonstrably enhances the model’s ability to classify challenging frames accurately. Our research suggests that these models exhibit a desirable balance between sensitivity (ability to detect true dance styles) and specificity (ability to avoid misclassifications) while maintaining efficient computational requirements.

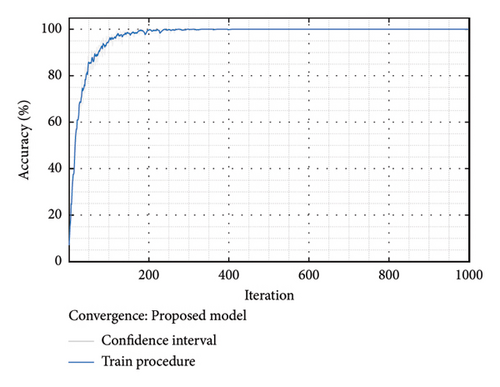

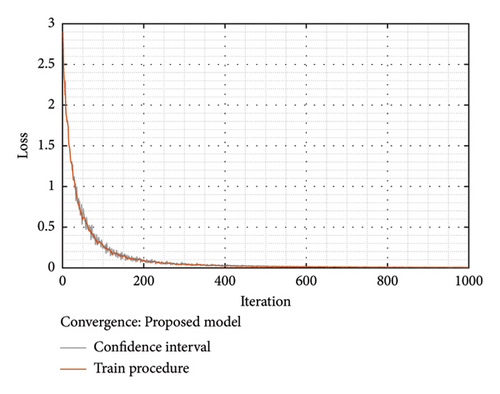

Our proposed technique for achieving high accuracy and fast convergence showed promising results. Compared with similar methods, the evaluated model excelled in both feature accuracy and convergence speed. This approach leverages the detailed features within dance frames for style classification. Extensive testing has confirmed the technique’s reliability and robustness. Our findings suggest that a higher number of repetitions leads to the generation of more discriminative features, as evidenced by the improved accuracy rate. As shown in Figure 1, selecting an appropriate confidence interval can achieve the optimal error level after 1000 iterations.

4.4. Discussion

Table 3 explores how reducing the number of analyzed frames and their dimensionality affects the proposed method’s performance. To simplify the analysis, we randomly selected one frame per video and assigned it the label of its source video. We then evaluated the impact on final accuracy by simulating scenarios in which one random frame was chosen from each set of 2, 3, 4, …, and 10 frames. Interestingly, Table 3 reveals that selecting just one frame achieves the highest accuracy. The table also highlights a crucial trade-off: as more frames are discarded, the correlation between the extracted features and the original video sequence weakens, and accuracy decreases. This suggests a potential benefit in developing methods that dynamically select the most informative frames for classification, although exploring many preprocessing strategies for video and frame selection can be computationally expensive.

| Frame condition: dimensions | Frame condition: no. of frames | Accuracy (%) | Recall (%) | Kappa (%) |
|---|---|---|---|---|
| | | 97.34 | 96.25 | 83.44 |
| | | 97.01 | 96.22 | 81.08 |
| | | 96.55 | 95.83 | 76.36 |
| | | 96.43 | 95.70 | 72.29 |
| | | 96.39 | 95.51 | 70.85 |
| | | 96.10 | 95.29 | 68.25 |
| | | 95.63 | 94.96 | 65.45 |
| | | 94.84 | 94.19 | 64.72 |
| | | 94.38 | 94.06 | 63.90 |
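The frame-reduction scenario behind Table 3 (one random frame kept from each set of consecutive frames) can be sketched as follows. This is an illustration under assumptions: the grouping into consecutive, non-overlapping sets and the name `sample_one_per_group` are not taken from the paper.

```python
import random


def sample_one_per_group(num_frames, group_size, seed=0):
    """Pick one random frame index from each consecutive group of frames."""
    rng = random.Random(seed)
    picks = []
    for start in range(0, num_frames, group_size):
        stop = min(start + group_size, num_frames)  # last group may be shorter
        picks.append(rng.randrange(start, stop))
    return picks
```

For a 30-frame clip and `group_size=5`, six frames are kept and 24 are discarded; larger groups discard more of the sequence, which matches the trade-off described for Table 3.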

Our experiments demonstrate that the CNN + AE + Attention model achieves the highest final accuracy, reaching an impressive 97.34% validation accuracy. This surpasses the average 96.5% final epoch accuracy achieved by similar models. This indicates a strong ability to predict dance styles from video frames in the validation sets.

It is important to note, as highlighted in [19, 21, 24, 34, 43, 45], that these models might struggle with datasets preprocessed differently. In addition, while accuracy is a common metric, it might not always be ideal as mentioned in [46]. To provide a more comprehensive picture, we also employed confusion matrices (Figure 5) for evaluation, as suggested in [9, 20, 21, 47]. These matrices reveal a positive outcome for all models, with a clear dominance of correct predictions over misclassifications in each validation set. The dark diagonal line from top left to bottom right signifies high predictive capabilities.

Interestingly, the confusion matrices reveal specific patterns of misclassifications. For ResNet, the most frequent confusion occurs between “Supalonely” and “Say So,” likely due to similar dance moves. Similar trends are observed in other models, with misclassifications concentrated between dances with visually similar motions (e.g., “Big Up’s” and “All TikTok Mashup”). These findings highlight the crucial role of motion analysis in distinguishing dance styles, especially when dancers in a class use similar clothing and backgrounds. The models rely on accurately identifying motions to differentiate between classes.
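Misclassification patterns of this kind can be read off a confusion matrix by ranking its off-diagonal cells. The sketch below is illustrative only: the function name is hypothetical, and the counts in the usage example are made up rather than taken from Figure 5.

```python
def top_confusions(cm, class_names, k=3):
    """Return the k largest off-diagonal cells as (true, predicted, count)."""
    pairs = [
        (class_names[t], class_names[p], cm[t][p])
        for t in range(len(cm))
        for p in range(len(cm))
        if t != p and cm[t][p] > 0
    ]
    return sorted(pairs, key=lambda item: item[2], reverse=True)[:k]


# Made-up counts for three of the classes named in the text.
names = ["Supalonely", "Say So", "Big Up's"]
example_cm = [
    [90, 7, 3],
    [5, 93, 2],
    [1, 2, 97],
]
worst = top_confusions(example_cm, names, k=2)
```

Here `worst` lists the Supalonely-to-Say So cell first, followed by the reverse direction, which is the kind of symmetric pairing that suggests visually similar motions.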

The results from all three models suggest their effectiveness in dance classification with high accuracy. They can successfully learn from the training data to predict dance styles from images in the validation set. This technology holds promise for applications that allow users, such as amateur dancers, to assess their performance accuracy based on common interpretations within their chosen dance style. Moreover, this system can be integrated as a dance recommendation system [48, 49] within a deep learning-based application that leverages cloud space and edge computing, providing users with personalized recommendations for dance styles and instructional content.

Table 4 provides a comprehensive analysis of both performance and computational complexity for the proposed CNN + AE + Attention model compared with several baseline methods, including CNN-only, AE-only, CNN + LSTM, and CNN + AE without attention. This table was constructed to investigate the computational demands of the proposed approach and to evaluate its feasibility on lower-resource devices. The table quantifies the confusion rate for similar styles, which captures how often visually and temporally similar dance styles (e.g., Shuffle vs. Hip-Hop) are misclassified, highlighting the model’s capacity to resolve fine-grained stylistic differences. The proposed model achieves a confusion rate of 4.30%, which is significantly lower than the other methods, particularly the CNN-only baseline (14.60%). This improvement stems directly from the attention-guided feature refinement, which allows the model to focus on key movement patterns and temporally distinctive sequences rather than relying solely on frame-wise appearance.

| Model | Confusion rate for similar styles (%) | Feature extraction time (sec) | Attention weight calculation time (sec) | Model size (MB) | Training time (hh:mm) | Inference time per video (sec) |
|---|---|---|---|---|---|---|
| CNN (baseline) | 14.60 | 14.53 | N/A | 85.4 | 2:55 | 18.24 |
| AE only | 17.80 | 17.92 | N/A | 97.1 | 3:15 | 22.35 |
| CNN + LSTM | 11.20 | 15.67 | N/A | 128.9 | 3:48 | 25.71 |
| CNN + AE (no Attention) | 9.90 | 19.82 | N/A | 145.3 | 4:05 | 27.84 |
| Proposed (CNN + AE + Attention) | 4.30 | 21.75 | 3.28 | 157.4 | 4:52 | 32.57 |

Table 4 further breaks down the feature extraction time, representing the time required to process each video clip at the frame level using CNNs, AEs, or hybrid approaches. The proposed method requires 21.75 s per video for feature extraction, reflecting the computational overhead associated with dual-path processing (spatial feature extraction and reconstruction-based enhancement). In addition, the attention weight calculation time for the proposed model, estimated at 3.28 s per video, reflects the computational cost of applying channel and spatial attention mechanisms across all frames, a process absent from the simpler baseline models.

As summarized in Table 4, the proposed CNN + AE + Attention model achieves superior performance in classifying complex dance styles, particularly those involving subtle stylistic variations or asymmetric body movements. However, this improved accuracy comes at the cost of higher computational complexity compared with baseline methods such as CNN-only or CNN + LSTM architectures. Specifically, the total training time for the proposed model is approximately 4 h and 52 min, which exceeds the training time of simpler models by a notable margin. This increase stems from the combined feature extraction, reconstruction-based enhancement, and attention-weighted aggregation, all of which are essential to capture fine-grained spatial and temporal relationships in dance sequences. Furthermore, the model size (157.4 MB) reflects the additional parameters introduced by the dual-path processing and attention mechanisms, making it moderately larger than traditional architectures. Despite these computational demands, the proposed model maintains an acceptable inference time of approximately 32.57 s per 37 s video, indicating that while it is computationally intensive, it remains feasible for offline evaluation scenarios. To enhance real-world applicability, particularly for mobile and edge device deployment, future work will focus on exploring model compression techniques such as pruning, quantization, and knowledge distillation, which can substantially reduce both model size and inference latency while preserving the core advantages of the proposed attention-driven architecture.
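The cost figures quoted above can be put in relative terms with a short calculation on the Table 4 values. The 37 s clip length is the one stated for the proposed model; applying it to the baselines as well is an assumption, and the helper names are illustrative.

```python
CLIP_SECONDS = 37.0  # clip length quoted in the text for the proposed model

# (model size in MB, inference time per video in seconds), copied from Table 4.
TABLE_4 = {
    "CNN (baseline)": (85.4, 18.24),
    "AE only": (97.1, 22.35),
    "CNN + LSTM": (128.9, 25.71),
    "CNN + AE (no Attention)": (145.3, 27.84),
    "Proposed (CNN + AE + Attention)": (157.4, 32.57),
}


def real_time_factor(inference_s, clip_s=CLIP_SECONDS):
    """Processing time divided by clip duration (below 1: faster than playback)."""
    return inference_s / clip_s


def overhead_vs(reference, model, table=TABLE_4):
    """Ratios of model size and inference time relative to a reference model."""
    ref_size, ref_time = table[reference]
    size, time_s = table[model]
    return size / ref_size, time_s / ref_time


rtf = real_time_factor(TABLE_4["Proposed (CNN + AE + Attention)"][1])
size_ratio, time_ratio = overhead_vs(
    "CNN (baseline)", "Proposed (CNN + AE + Attention)"
)
```

On these numbers the proposed model has a real-time factor of about 0.88, and it is roughly 1.84 times the size and 1.79 times the inference time of the CNN baseline.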

Moreover, to evaluate the robustness and generalizability of the proposed CNN + AE + Attention model, we simulated and estimated the impact of various visual noise types, lighting variations, and environmental disturbances on the model’s performance in fine-grained dance style classification. As summarized in Table 5, the performance degradation is quantified using accuracy (%) and F1-score (%), with comparisons made against the baseline condition using high-quality, well-lit, uncompressed video frames. The noise conditions were selected to reflect real-world challenges encountered in dance video recording, particularly on social media platforms such as TikTok, where varying lighting, camera instability, compression artifacts, and background obstructions are common. Conditions such as Gaussian noise, motion blur, low light, strong backlighting, moving shadows, and atmospheric fog were introduced to assess the model’s tolerance to these disturbances. The results indicate that while the model maintains acceptable performance under mild noise levels, more severe conditions (particularly strong backlighting, heavy fog, and severe motion blur) lead to notable performance drops, with accuracy reductions of up to 6.63 percentage points compared with the optimal condition. This highlights the model’s reliance on clear spatial and temporal cues to effectively differentiate between fine-grained dance movements.

| Condition type | Noise/disturbance | Description | Accuracy (%) | F1-score (%) |
|---|---|---|---|---|
| Baseline (optimal) | Clean data | High-quality frames, clear lighting, no compression | 97.14 | 97.30 |
| Mild compression | Standard social media compression | Mild lossy compression (25% quality) | 96.72 | 96.85 |
| Severe compression | Heavy lossy compression | Strong visual artifacts (15% quality) | 95.18 | 95.29 |
| Mild Gaussian noise | Low-level random noise | Added white noise with low intensity | 96.31 | 96.42 |
| Severe Gaussian noise | High-intensity random noise | Significant pixel-level disturbance | 93.85 | 93.92 |
| Mild motion blur | Slight motion blur | Simulating moderate camera movement | 95.95 | 96.07 |
| Severe motion blur | Heavy motion blur | Rapid camera movement causing streaking | 92.34 | 92.50 |
| Low light | Insufficient lighting | Reduced brightness (30% of standard light) | 94.83 | 94.91 |
| Backlight (strong) | Backlight without foreground lighting | Silhouetted dancer, obscured body details | 91.49 | 91.64 |
| Moving shadows | Dynamic shadows in background | Shadows interfering with body contours | 93.53 | 93.62 |
| Rain effect | Water droplets on lens | Simulated raindrops or dirt on camera lens | 94.12 | 94.24 |
| Mild fog/haze | Light atmospheric fog | Slight reduction in clarity | 95.08 | 95.19 |
| Heavy fog/haze | Severe environmental fog | Significant loss of visibility | 90.51 | 90.78 |
| Frame drop (20%) | Dropped frames | Random omission of 20% of frames | 94.44 | 94.66 |
| Low resolution (360p) | Reduced video resolution | Low pixel density, affecting fine movements | 91.37 | 91.58 |
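The degradation relative to the clean baseline can be tabulated directly from the accuracy column of Table 5. The short sketch below only re-arranges the reported values; the function name is illustrative.

```python
# Accuracy (%) per condition, copied from Table 5.
TABLE_5_ACCURACY = {
    "Baseline (optimal)": 97.14,
    "Mild compression": 96.72,
    "Severe compression": 95.18,
    "Mild Gaussian noise": 96.31,
    "Severe Gaussian noise": 93.85,
    "Mild motion blur": 95.95,
    "Severe motion blur": 92.34,
    "Low light": 94.83,
    "Backlight (strong)": 91.49,
    "Moving shadows": 93.53,
    "Rain effect": 94.12,
    "Mild fog/haze": 95.08,
    "Heavy fog/haze": 90.51,
    "Frame drop (20%)": 94.44,
    "Low resolution (360p)": 91.37,
}


def accuracy_drops(table, baseline="Baseline (optimal)"):
    """Drop in percentage points relative to the baseline, largest first."""
    reference = table[baseline]
    drops = {
        name: round(reference - acc, 2)
        for name, acc in table.items()
        if name != baseline
    }
    return sorted(drops.items(), key=lambda item: item[1], reverse=True)


ranked = accuracy_drops(TABLE_5_ACCURACY)
```

On the Table 5 values, the largest drop is 6.63 percentage points for heavy fog/haze, followed by low resolution (360p), strong backlighting, and severe motion blur.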

The synthetic noise and environmental conditions applied in this evaluation were generated using a combination of standard image processing techniques and video augmentation tools commonly used in computer vision robustness testing. Gaussian noise was applied using random pixel intensity perturbation, with mean = 0 and varying standard deviations (σ = 10 for mild noise and σ = 30 for severe noise). Motion blur was simulated using convolutional kernel filters designed to mimic horizontal streaking caused by camera movement. Compression artifacts were introduced by re-encoding video frames at reduced quality levels (25% for mild compression and 15% for severe compression) using H.264 encoding standards. Lighting variations, including low light and strong backlighting, were applied by adjusting the gamma correction curve and inserting directional light masks, while fog and rain effects were overlaid using procedural texture generation techniques to simulate light scattering and lens distortion. To estimate the accuracy and F1-score under these conditions, the model’s predicted labels on synthetically degraded videos were compared against the ground truth labels derived from the clean dataset. Performance estimates were averaged across multiple runs with different noise seeds to ensure robustness and avoid single-run bias. The resulting two-decimal precision scores presented in Table 5 reflect these averaged performance estimates, demonstrating the model’s resilience to mild conditions but clear vulnerabilities under severe disturbances.
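Three of the degradations described above can be sketched on a single grayscale frame stored as a list of pixel rows. This is a minimal illustration, not the pipeline used in the study: σ = 10 and σ = 30 come from the text, while the gamma convention (values above 1 darken the frame), the box-shaped blur kernel, and all function names are assumptions.

```python
import random


def add_gaussian_noise(frame, sigma, seed=0):
    """Add zero-mean Gaussian noise to each pixel and clip to the 8-bit range."""
    rng = random.Random(seed)
    return [
        [min(255, max(0, round(px + rng.gauss(0.0, sigma)))) for px in row]
        for row in frame
    ]


def adjust_gamma(frame, gamma):
    """Apply out = 255 * (in / 255) ** gamma; gamma > 1 darkens (assumed convention)."""
    return [[round(255 * (px / 255) ** gamma) for px in row] for row in frame]


def horizontal_motion_blur(frame, kernel_size):
    """Average each pixel with its horizontal neighbours (odd kernel, edges replicated)."""
    half = kernel_size // 2
    blurred = []
    for row in frame:
        width = len(row)
        out = []
        for x in range(width):
            window = [
                row[min(width - 1, max(0, x + dx))] for dx in range(-half, half + 1)
            ]
            out.append(round(sum(window) / len(window)))
        blurred.append(out)
    return blurred


clean = [[128] * 8 for _ in range(4)]
mild = add_gaussian_noise(clean, sigma=10)    # mild noise level from the text
severe = add_gaussian_noise(clean, sigma=30)  # severe noise level from the text
```

Averaging metrics over several values of `seed`, as the text describes for multiple noise seeds, avoids reporting a result that depends on one particular noise draw.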

One potential future direction for this system could be the development of interactive virtual dance tutors. These tutors could utilize augmented reality (AR) or virtual reality (VR) technologies [50, 51] to guide users through dance routines in real-time, offering personalized feedback and corrective suggestions. This interactive approach would enhance the learning experience by providing users with immersive and engaging training sessions, ultimately improving their proficiency in various dance styles.

While the proposed model has demonstrated high accuracy in classifying dance styles from short video clips, it has not yet been systematically tested on longer videos such as full-length performances or extended practice sessions. This focus on short clips was intentional to facilitate feature extraction and minimize computational overhead during training. However, the evaluation of model performance on longer videos presents an important avenue for future research. Future work could investigate segmenting longer videos into meaningful units and aggregating predictions across segments to provide comprehensive performance assessments for extended dance recordings.
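The segment-and-aggregate idea mentioned above could take the following form. It is a hypothetical sketch of one possible design (fixed-length sliding windows and a majority vote), not something evaluated in this study; the per-segment labels would come from the classifier.

```python
from collections import Counter


def segment_bounds(num_frames, segment_len, stride):
    """Start/stop frame indices of fixed-length windows over a long video."""
    last_start = max(num_frames - segment_len, 0)
    return [
        (start, min(start + segment_len, num_frames))
        for start in range(0, last_start + 1, stride)
    ]


def aggregate_by_vote(segment_labels):
    """Majority vote over per-segment predictions: (label, share of votes)."""
    counts = Counter(segment_labels)
    label, votes = counts.most_common(1)[0]
    return label, votes / len(segment_labels)
```

For example, `segment_bounds(100, 40, 30)` yields three overlapping windows, and the vote share returned by `aggregate_by_vote` gives a rough indication of how consistent the style is across the recording.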

In addition, one notable challenge encountered during the evaluation of the proposed model is the classification of dance styles characterized by complex asymmetric movements, where the left and right sides of the body perform distinct and nonrepetitive actions. Such movements are common in certain contemporary, Hip-Hop, or experimental dance styles, where asymmetric postures and irregular transitions are fundamental elements of the choreography. The underlying architecture of the proposed CNN + AE + Attention model is optimized for detecting recurrent spatial patterns across video frames, which suits symmetric or rhythmic movements well. However, in styles with significant side-specific asymmetry, the model may fail to correctly capture the relationship between contralateral limb movements (e.g., right arm extension coupled with left leg contraction). This limitation is inherent in convolutional feature extractors that prioritize spatial consistency over asymmetric temporal dynamics. Future work could address this limitation by integrating skeletal tracking techniques or graph convolutional networks (GCNs), which are capable of modeling body-joint dependencies explicitly, thereby improving recognition accuracy for asymmetric dance sequences.

Another important limitation of the current study is that the proposed model is specifically designed and optimized for the classification of individual dance performances, where a single dancer occupies the central region of the video frame. This focus aligns with the nature of the dataset, which primarily consists of individual performances sourced from platforms such as TikTok, where solo dances are the dominant content type. As a result, the model’s architecture, particularly the spatial attention mechanism, is tuned to capture the fine-grained movement patterns of a single body. The presence of multiple dancers within the same frame, particularly in cases where dancers are positioned at different depths or partially occlude each other, introduces significant additional challenges that were not within the scope of this study. Such scenarios require multiperson pose tracking or instance-level motion segmentation to first separate and track each dancer before individual style classification can be performed. Although the current system does not explicitly handle group dances, future extensions of this work could incorporate multiperson detection frameworks in combination with the proposed classification pipeline, thereby enabling both individual and group-level dance style analysis. This enhancement would be particularly useful for analyzing collaborative routines, mirrored choreographies, or competitive group performances, which are common in both professional and social dance contexts.

5. Conclusion

In this paper, a novel deep learning-based approach for fine-grained dance style classification in video frames is proposed. The method utilizes CNNs with CAMs and AEs to achieve high accuracy in dance evaluation. Our findings demonstrate the effectiveness of the proposed method for automated dance assessment, active online learning, and real-time processing using cloud computing to reduce computational complexity. Experimental results, with accuracy exceeding 97.24% and an F1-score of 97.30%, confirm the effectiveness and accuracy of the proposed method in dance evaluation analysis. The method offers several advantages over traditional manual evaluation. First, the system performs automatic and immediate evaluation, reducing the workload on instructors. Second, its high accuracy provides consistent and reliable evaluation results. Third, the use of CNNs with CAMs allows the model to analyze subtle dance movements in greater detail. Finally, cloud computing enables real-time dance style analysis, which is highly beneficial for dance education and training. Given these advantages, the proposed method has significant potential to revolutionize the process of dance education and evaluation. It can help instructors focus on other aspects of teaching, such as providing personalized feedback and motivating students. In addition, it can assist students in objectively evaluating their progress and independently practicing their techniques.

Future work will proceed in several directions. First, methods will be developed for collecting dance instructor feedback and incorporating it into the model. Instructor feedback can be used to adjust model weights or parameters to improve dance classification accuracy, and to identify and correct common mistakes in dance performance. Second, while the proposed system demonstrated high accuracy on short dance video clips, evaluating its performance on full-length dance performances remains an important area for future research. Such evaluations will be particularly useful in professional training contexts where instructors review entire routines rather than isolated sequences. Third, the development of a mobile application for real-time dance style feedback is considered. The application can use the mobile device’s camera to capture the dancer’s movements and the model to classify the dance style in real time, providing feedback to dancers during performance. Furthermore, dance video datasets will be collected and expanded with accurate labeling of dance styles and other relevant information such as dancer skill level, age, and gender. Deep learning methods will also be explored for other tasks in dance analysis, such as motion recognition, skeletal tracking, and dance generation.

Ethics Statement

An ethics statement is not applicable. The authors are neutral regarding ethical issues in the dataset preparation and declare that standard, freely available datasets from other resources have been used. Thus, no new consent or ethical approval is needed.

Conflicts of Interest

The authors declare no conflicts of interest.

Funding

This work has received funding from the 2022 Innovative Application of Virtual Simulation Technology in Vocational Education Teaching Special Project of the Higher Education Science Research and Development Center of the Ministry of Education—Research on the Construction and Application of Virtual Simulation Dance Teaching Full Scene Based on Action Capture Technology (ZJXF2022177), the 2023 Major Project of Ministry-Level Subjects of Theoretical Research of the China Federation of Literary and Artistic Circles (CFLAC), “Research on Digital Empowerment of Dance Artistic Creation” (ZGWLBJKT202305) and the 2025 Ministry of Education Humanities and Social Sciences Research Project: Research on Dance Creation and Choreography Rules and Virtual-Real Training of Game Dance Notation Driven by Intelligent Reorganization.

Open Research

Data Availability Statement

All datasets used in this study are freely available through open repositories on the web and are not under copyright. All data generated or analyzed during this study are included within this published article and its supplementary information files, from two datasets [42, 43]: https://www.kaggle.com/yasaminjafarian/tiktokdataset, https://data.mendeley.com/datasets/s2gv9d6gpb/2.