LDSGAN: Unsupervised Image-to-Image Translation With Long-Domain Search GAN for Generating High-Quality Anime Images

Abstract

Image-to-image (I2I) translation has emerged as a valuable tool for privacy protection in the digital age, offering effective ways to safeguard portrait rights in cyberspace. I2I translation is also applied in real-world tasks such as image synthesis, super-resolution, virtual fitting, and virtual live streaming. Traditional I2I translation models perform well when the source and target datasets are similar. However, when the domain distance between two datasets is large, translation quality may degrade significantly because of notable differences in image shapes and edges. To address this issue, we propose Long-Domain Search GAN (LDSGAN), an unsupervised I2I translation network with a GAN backbone that incorporates a novel Real-Time Routing Search (RTRS) module and a Sketch Loss. Specifically, RTRS expands the search space within the target domain, aligning the feature projection with the images closest to the optimization target, while Sketch Loss preserves human-perceived visual similarity during long-domain-distance translation. Experimental results indicate that LDSGAN surpasses existing I2I translation models in both image quality and semantic similarity between input and generated images, as reflected by its mean FID and LPIPS scores of 30.510 and 0.581, respectively.

1. Introduction

As activities in cyberspace become increasingly frequent, portraits shared online raise many security issues, so protecting the privacy of portraits is crucial [1, 2]. Image-to-image (I2I) translation, which aims to learn a mapping function from a source domain to a target domain, has become a popular research topic in recent years. By converting personal portraits into anime or other styles, I2I translation protects portrait privacy and prevents facial recognition technology in cyberspace from infringing on personal identity. With the development of Generative Adversarial Networks (GANs) [3] and diffusion models [4], the strong fitting ability of these models has further advanced real-world applications of I2I. Beyond portrait protection, I2I technology is also used in image colorization [5–7], makeup transfer [8], fashion editing [9], style transfer [10], and game character face generation [11].

Existing I2I translation methods are mainly categorized as supervised [7–9, 12–14] or unsupervised. Supervised I2I methods train models on paired data. However, paired data are difficult to obtain in realistic settings, so unsupervised I2I methods have received widespread attention. For example, CycleGAN [15] introduces two mirror-symmetric GANs that establish constraints between the source and target domains, and it preserves image information through skip connections for cross-domain image translation. Similarly, Drit++ [16] proposes a multidomain unsupervised I2I model that uses encoders to extract the content and feature information of an image and feeds them into a CycleGAN-style model.

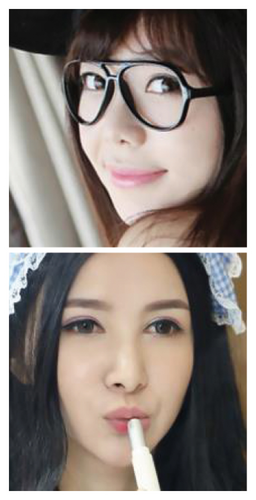

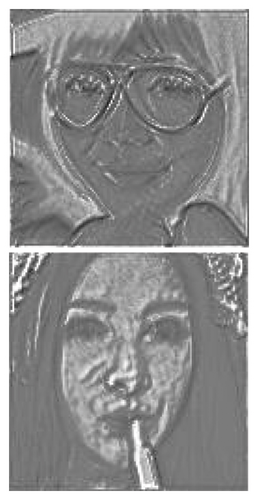

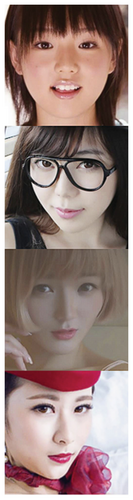

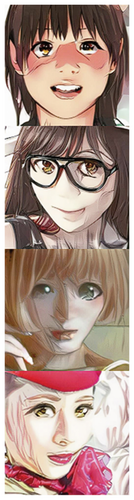

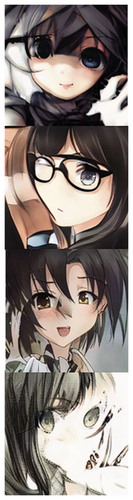

However, these models assume that images in the source and target domains are highly similar in shape or texture. In I2I tasks with short inter-domain distances, shapes and textures can be treated as domain-independent features and preserved by skip-connected structures. In tasks with large inter-domain distances, however, shape and texture are no longer domain-independent because of the notable differences between the source and target domains. With skip connections, the source domain image in long-distance domain translation (LDDT) cannot be translated to the target domain correctly, and source domain artifacts may remain in the generated image (as shown in Figure 1(b)). The image generated by the proposed method is shown in Figure 1(c). Figures 1(d), 1(e), and 1(f) show the feature maps passed through the skip connections, which contain too much source domain texture and therefore degrade the quality of the generated image. In addition, I2I models based on encoder–decoder architectures [16–18] often fail to match the source image accurately in the target domain because of severe information loss. Therefore, existing methods struggle to achieve satisfactory performance on LDDT.

In contrast to the above approaches, UI2I-via-StyleGAN2 [19] divides I2I tasks into short-distance domain translation (SDDT) and LDDT according to inter-domain distance. The target domain model is trained by fine-tuning the source domain model to minimize the effect of model distance. Meanwhile, its layer-swap approach directly swaps high-level convolutional layers from the source domain model into the target domain model, thereby preserving more source domain features. However, the color arrangement of the generated image still does not fully match the input image, and there is room to further improve image quality.

To address the problems in LDDT, we propose an unsupervised I2I translation method called Long-Domain Search GAN (LDSGAN). It introduces a novel Real-Time Routing Search (RTRS) module for generating high-quality images. The RTRS module searches for a representation of the color arrangement and texture in the source domain and projects it to a representation in the target domain, helping the model preserve domain-independent features while mapping domain-related features to their nearest projection points in the target domain. In addition, we propose Sketch Loss, which helps the model maintain the visual similarity between the generated image and the source domain image without forcing the generated image to stay close to the source domain image in terms of distance. The main contributions of this paper are as follows:

- We propose LDSGAN, which achieves higher generation quality in LDDT while maintaining the similarity between the input image and the generated image.
- We propose the RTRS module, which helps the model find the nearest-neighbor projection points of source domain images in the target domain, thereby raising the upper bound of the network's fitting capability.
- We propose Sketch Loss to improve the visual similarity between the generated image and the source domain image. This loss does not constrain the distance between the generated image and the source domain image.

The remaining sections of this paper are organized as follows. Section 2 outlines the application of GANs to I2I translation. Section 3 describes the proposed method in detail. Section 4 presents the experimental setup, evaluates the translation performance of the proposed method on different datasets, and discusses the results. Finally, Section 5 concludes the paper and outlines future work.

2. Related Work

In recent years, with the development of GANs [3, 20], I2I translation research has achieved impressive image synthesis results. On the one hand, among supervised I2I translation methods, Pix2pix [13] adopts the UNet [12] architecture, and experiments have shown that introducing skip connections in image translation tasks can significantly improve translation performance. In addition, supervised I2I methods can be customized to specific scenarios, such as grayscale image colorization, fashion editing, and makeup transfer. Supervised I2I methods mostly adopt a similarity-based strategy that retains most of the source domain information and edits only the target region.

On the other hand, among unsupervised methods, CycleGAN [15] learns a mapping from input images to output images without paired samples. Drit++ [16] proposes a multidomain I2I translation model that improves image quality by using multiple encoders to encode the content and features of an image.

To further enhance the quality of generated images, researchers have explored applying StyleGAN [21] to I2I translation because it can generate impressive, high-quality images. For example, UI2I-via-StyleGAN2 [19] encodes images end-to-end into the latent space of StyleGAN [21]. It defines model distances based on StyleGAN2 [22] and proposes a GAN embedding-based inversion approach to achieve higher-quality results in long-range inter-model I2I translation.

Although the supervised networks above have made significant progress in I2I translation, they require paired data, and with unpaired data, unsupervised networks may fail to generate high-quality color images in LDDT. In addition, many I2I studies assume that the image distributions of the source and target domains are highly consistent in the content space. These studies usually adopt texture-sensitive metrics such as LPIPS [23] as loss functions or similarity evaluation metrics, ignoring the differences in texture types between domains in LDDT.

To overcome these problems, we propose LDSGAN, which has a wider search space in the target domain and can therefore map source domain images to the target domain more effectively. It aggregates features by using RTRS modules to search for representations of the color arrangement and texture in the source domain. Sketch Loss improves the visual similarity between the generated image and the source domain image without constraining the distance between them, enabling LDSGAN to generate high-quality and more appealing color images during long-distance domain translation.

3. The Proposed Method

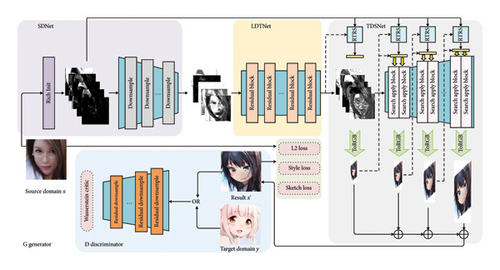

The proposed unsupervised LDSGAN searches the target domain space for images close to the source domain image, improving the performance of long-domain translation. As shown in Figure 2, LDSGAN consists of three main components: the source distill network (SDNet), the long-distance transform network (LDTNet), and the target domain search network (TDSNet).

First, SDNet reduces the dimension of the source domain image while preserving its details, projecting it onto a manifold closer to the target domain. Second, LDTNet separates features and weakens domain-related features of the source domain, discarding textures that are present in the source domain but absent from the target domain, and then translates the image to the target domain. Finally, TDSNet uses the RTRS module to search for the nearest-neighbor projection points of the source domain image in the target domain, which raises the upper fitting limit of the network (shown in Figure 3). With Sketch Loss, the network keeps the generated image visually consistent with the input image as perceived by humans, yielding a high-quality target domain image that closely resembles the original.

3.1. SDNet

Mapping a low-dimensional image to the target domain is easier than mapping a high-dimensional image directly. Lower-dimensional images contain less detail, so the source domain image is closer to the target domain in a lower-dimensional space. However, reducing the image from high to low dimensions causes a loss of source domain information. We therefore first apply Rich Init to preserve high-frequency features so that they can be transferred to the high-frequency features of the target domain. As a result, SDNet can effectively preserve source domain information while reducing the image dimension.

SDNet uses successive convolutional layers as an encoder and has no decoder. Inspired by the model in [24], we use a convolutional layer to enhance the input image. The feature maps are then downsampled N times to bring them closer to the low-dimensional target domain. The value of N is set from prior knowledge: usually, the larger the distance between domains, the larger N. As shown in Figure 3, the output feature maps of SDNet are fed into LDTNet for further source-to-target domain transformation.
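As a rough illustration of how N affects spatial resolution, the sketch below tracks feature-map side lengths through the three sub-networks. It assumes, beyond what the text states, that every SDNet downsampling halves the side length (stride 2) and that each of TDSNet's N RTRS/SABlock stages doubles it; `feature_map_sizes` and `pipeline_shapes` are hypothetical helper names.

```python
def feature_map_sizes(image_size: int, n_down: int) -> list[int]:
    """Side length after each of n_down downsamplings (assumed stride 2)."""
    sizes = [image_size]
    for _ in range(n_down):
        if sizes[-1] % 2:
            raise ValueError("side length must stay divisible by 2")
        sizes.append(sizes[-1] // 2)
    return sizes


def pipeline_shapes(image_size: int, n: int) -> dict[str, list[int]]:
    """Track side lengths through SDNet (down), LDTNet (unchanged), TDSNet (up)."""
    down = feature_map_sizes(image_size, n)            # SDNet: N downsamplings
    bottleneck = down[-1]                              # LDTNet: residual blocks keep the size
    up = [bottleneck * 2 ** i for i in range(n + 1)]   # TDSNet: N upsampling stages (assumed x2)
    return {"sdnet": down, "ldtnet": [bottleneck], "tdsnet": up}


print(pipeline_shapes(512, 4))
```

A larger N gives a smaller bottleneck, which matches the intuition in the text that more distant domains benefit from a lower-dimensional meeting point.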

3.2. LDTNet

In LDDT, the source and target domain images differ significantly in texture and detailed shape. For example, when a human face portrait is translated into an anime-style portrait, the detailed shapes of the facial features differ substantially. The low-dimensional source domain feature maps produced by SDNet therefore cannot be used directly to generate target domain images. For this purpose, we introduce LDTNet, which transforms the source domain feature maps into feature maps suitable for the target domain in the low-dimensional space.

LDTNet uses multiple residual blocks [25] to transform the low-dimensional feature maps from the source domain to the target domain. It keeps the number and size of the feature maps unchanged, so that the information content of the feature maps is preserved across the conversion. Let $x_s$ denote the source domain representation extracted by SDNet and $y_s$ the target domain image corresponding to the source domain. LDTNet learns a mapping function $f$ such that $f(x_s) \approx y_s$, where $y_s$ is the nearest-neighbor map of $f(x_s)$ in the target domain.
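The relation between LDTNet's mapping f and the nearest-neighbor target y_s can be sketched with toy vectors: a stack of residual blocks keeps the input size unchanged, and y_s is the target-domain vector closest to f(x_s). This is an illustration only. The vectors, random weights, and the explicit lookup over a "bank" of target features are assumptions for demonstration; the network itself is not described as performing an explicit nearest-neighbor search in this way.

```python
import math
import random


def residual_block(x, weight):
    """Toy residual block y = x + F(x); F is one square linear map plus Leaky ReLU (slope 0.2)."""
    fx = [sum(w * xi for w, xi in zip(row, x)) for row in weight]
    fx = [v if v > 0 else 0.2 * v for v in fx]
    return [xi + fi for xi, fi in zip(x, fx)]  # output has the same size as the input


def ldt_transform(x, weights):
    """Stack of residual blocks standing in for LDTNet's mapping f."""
    for weight in weights:
        x = residual_block(x, weight)
    return x


def nearest_neighbor(z, target_bank):
    """Index of the target-domain feature vector closest to z (Euclidean distance)."""
    return min(range(len(target_bank)), key=lambda i: math.dist(z, target_bank[i]))


rng = random.Random(0)
dim = 4
weights = [[[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(dim)] for _ in range(3)]
bank = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(100)]  # toy "target domain"
x_s = [1.0, 0.5, -0.5, 0.0]
z = ldt_transform(x_s, weights)            # f(x_s), same length as x_s
y_s = bank[nearest_neighbor(z, bank)]      # nearest neighbor of f(x_s) in the bank
print(len(z) == len(x_s), y_s)
```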

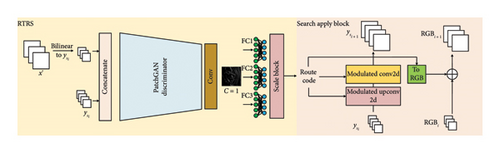

3.3. TDSNet

To restore the feature maps to the high-dimensional space and bring their projection close to the nearest-neighbor projection point in the target domain, TDSNet consists of N RTRS modules and N Search Application Blocks (SABlocks). Each RTRS and its corresponding SABlock project the low-dimensional feature maps into a higher-dimensional feature space along an appropriate route, and these routes are applied at every upsampling layer. The rich maps contain the shape and texture information of the source domain, whereas the low-dimensional feature maps are a low-dimensional representation of the target domain with less shape and texture information. Combining the two yields a Route Code, which controls the strength of the convolution kernel so that the convolutional layer can find the best path for projecting the low-dimensional feature maps to the nearest projection point. Thus, textures that were not decoupled and were discarded in SDNet can be recovered by RTRS.

SABlock customizes the convolution kernel with the routing code obtained by RTRS to generate the target image; its structure is shown in Figure 3. Inspired by the tunable (modulated) convolution kernel in StyleGAN2, SABlock customizes the image generation route. Noise is no longer added during generation, because it would make the optimization of the route search inaccurate. To focus the route search on the colors of the RGB channels, the RGB map produced by ToRGB is combined with the routing code extracted by RTRS. Because the translation path of the feature maps within a SABlock is short, sharing one search code does not cause the translation route to drift. We therefore use the same search code for all modulated convolution kernels to reduce computational overhead.
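The sketch below shows StyleGAN2-style weight modulation and demodulation, the mechanism SABlock is described as borrowing, with one per-input-channel code shared by every convolution in a block. How RTRS computes the route code from the rich maps and the ToRGB output is not specified here, so the code simply takes it as an input. Nested lists stand in for tensors, and `modulate` and `sablock_weights` are hypothetical names.

```python
import math


def modulate(weight, code, demodulate=True, eps=1e-8):
    """StyleGAN2-style modulation of one convolution weight.

    weight: nested list [out_ch][in_ch][taps] (taps = flattened kernel).
    code:   one scale per input channel (here: the route code).
    """
    out = []
    for w_out in weight:
        # Modulation: scale every tap of input channel i by code[i].
        scaled = [[tap * code[i] for tap in w_in] for i, w_in in enumerate(w_out)]
        if demodulate:
            # Demodulation: rescale so each output channel's weights have unit L2 norm.
            norm = math.sqrt(sum(t * t for row in scaled for t in row) + eps)
            scaled = [[t / norm for t in row] for row in scaled]
        out.append(scaled)
    return out


def sablock_weights(conv_weights, route_code):
    """Reuse one route code for every modulated convolution in a block."""
    return [modulate(w, route_code) for w in conv_weights]


w = [[[1.0, 0.0], [0.0, 1.0]]]  # 1 output channel, 2 input channels, 2 taps
print(sablock_weights([w, w], [2.0, 0.5]))
```

Sharing the code means the per-channel scales are computed once per block rather than once per convolution, which is the overhead saving the text refers to.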

After each convolutional layer of the generator, a Leaky ReLU [26] with a negative slope of 0.2 is used as the activation function. In addition, to avoid checkerboard artifacts, we use an upsampling convolution kernel similar to that of StyleGAN2.
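Why the upsampling kernel matters for checkerboard artifacts can be seen from a small counting exercise: in a 1-D transposed convolution, when the kernel size is not divisible by the stride, neighboring output positions receive different numbers of contributions. This is a generic illustration, not the exact upsampling layer of LDSGAN or StyleGAN2 (StyleGAN2 additionally applies a fixed low-pass filter after upsampling); `transposed_conv_overlap` is a hypothetical helper.

```python
def transposed_conv_overlap(n_in: int, stride: int, kernel: int) -> list[int]:
    """Count how many input positions write to each output position of a
    1-D transposed convolution without padding."""
    n_out = (n_in - 1) * stride + kernel
    counts = [0] * n_out
    for i in range(n_in):
        for j in range(kernel):
            counts[i * stride + j] += 1
    return counts


print(transposed_conv_overlap(4, 2, 3))  # [1, 1, 2, 1, 2, 1, 2, 1, 1]  uneven interior
print(transposed_conv_overlap(4, 2, 4))  # [1, 1, 2, 2, 2, 2, 2, 2, 1, 1]  uniform interior
```

In 2-D the uneven pattern occurs along both axes, which produces the characteristic checkerboard.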

3.4. Loss Function

Due to the differences in image textures and the diversity of colors and strokes in LDDT, network training becomes challenging. To stabilize the training process, the network employs a multiobjective weighting strategy.
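The individual loss terms and their weights are not listed in this section, so the sketch below only shows the general form of a weighted multiobjective loss. The term names (`adversarial`, `soft_sketch`, `hard_sketch`) and the weight values are hypothetical placeholders, not the settings used for LDSGAN.

```python
from typing import Mapping

# Hypothetical weights for illustration only.
LOSS_WEIGHTS = {"adversarial": 1.0, "soft_sketch": 10.0, "hard_sketch": 1.0}


def total_loss(terms: Mapping[str, float],
               weights: Mapping[str, float] = LOSS_WEIGHTS) -> float:
    """Weighted sum of named loss terms; every weighted term must be present."""
    missing = set(weights) - set(terms)
    if missing:
        raise KeyError(f"missing loss terms: {sorted(missing)}")
    return sum(weights[name] * terms[name] for name in weights)


print(total_loss({"adversarial": 0.7, "soft_sketch": 0.05, "hard_sketch": 0.2}))
```

Raising an error on a missing term is a design choice that avoids silently dropping an objective during ablations.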

4. Experiments

4.1. Baseline and Dataset

To evaluate the proposed LDSGAN model, we select several state-of-the-art methods as baselines. CycleGAN [15] introduces Cycle Consistency Loss (CCL) to keep the identity of the input and output images consistent when training with unpaired data. Drit++ [16] supports high-quality, multimodal I2I translation. UI2I-via-StyleGAN2 [19] proposes a GAN embedding-based approach that obtains higher image quality and similarity in I2I tasks by fine-tuning a pretrained StyleGAN2.

We evaluate the methods on two I2I translation tasks with large inter-domain differences. For the Yellow2Anime task, we use the Netflix face dataset and Danbooru2018 [29]; the Netflix face dataset contains 136,723 images of size 512 × 512. For the Sketch2Anime task, we use the DCS dataset collected in [7]. In the Sketch2Anime task, each image is cropped to a square whose side equals its shorter side and then resized to 512 × 512.
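The square crop can be computed as below. The text does not say where the square is taken from, so centering it on the image is an assumption; `square_crop_box` is a hypothetical helper that returns a (left, upper, right, lower) box.

```python
def square_crop_box(width: int, height: int) -> tuple[int, int, int, int]:
    """Centered square crop whose side equals the shorter image side.

    Returns (left, upper, right, lower)."""
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return left, top, left + side, top + side


print(square_crop_box(800, 600))  # (100, 0, 700, 600)
```

With Pillow, the box can be passed to `Image.crop`, followed by `.resize((512, 512))` to reach the stated resolution.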

4.2. Experimental Settings

The framework is implemented in PyTorch [30], and all experiments are run on an NVIDIA Tesla V100 GPU. We use the Adam optimizer with a learning rate of 1e-4 for a total of 400k iterations. The Adam momentum parameters of both the generator and the discriminator are set to β1 = 0 and β2 = 0.99.
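For reference, the Adam update with these hyperparameters is sketched below for a single scalar parameter. With β1 = 0, the first-moment estimate is simply the current gradient, so only the second moment carries history. This is a plain re-implementation of the standard Adam rule for illustration; ε = 1e-8 is an assumed value.

```python
import math


def adam_step(param, grad, state, lr=1e-4, beta1=0.0, beta2=0.99, eps=1e-8):
    """One Adam update for a scalar parameter; state holds m, v, and step count t."""
    state["t"] += 1
    state["m"] = beta1 * state["m"] + (1 - beta1) * grad          # equals grad when beta1 = 0
    state["v"] = beta2 * state["v"] + (1 - beta2) * grad * grad
    m_hat = state["m"] / (1 - beta1 ** state["t"])               # bias corrections
    v_hat = state["v"] / (1 - beta2 ** state["t"])
    return param - lr * m_hat / (math.sqrt(v_hat) + eps)


state = {"m": 0.0, "v": 0.0, "t": 0}
p = adam_step(1.0, 5.0, state)
print(p)  # first step moves by about lr in the direction opposite the gradient
```

In PyTorch, the same configuration would be expressed as `torch.optim.Adam(params, lr=1e-4, betas=(0.0, 0.99))`.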

The generator outputs the nearest-neighbor estimate of the input source domain image in the target domain. To accelerate convergence, we use WGAN-GP [27] to initialize the generator and the discriminator, and we impose no gradient penalty on the generator. The gradient penalty of the discriminator is computed only once every 16 iterations, with its strength multiplied by 16 to compensate, which reduces training time. In addition, we use Kaiming normal initialization [31] in all convolutional and fully connected layers to speed up convergence while avoiding gradient explosion during training. Similar to NAH's approach [32], we do not use any normalization layer in the network.
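The lazy gradient-penalty schedule and the Kaiming initialization scale can be written out as below. The penalty weight of 10 is an assumed default (the text does not give it), `compute_gp` is a hypothetical callable returning the WGAN-GP penalty, and the Leaky ReLU slope of 0.2 comes from Section 3.3. Passing the penalty as a callable means it is evaluated only on the steps where it is applied, which is where the time saving comes from.

```python
import math

GP_INTERVAL = 16  # penalty applied once every 16 iterations (from the text)


def discriminator_loss(step, adv_loss, compute_gp, gp_weight=10.0):
    """Lazy regularization: add the gradient penalty only on every GP_INTERVAL-th
    step, scaled by GP_INTERVAL so its average strength is unchanged."""
    loss = adv_loss
    if step % GP_INTERVAL == 0:
        loss += GP_INTERVAL * gp_weight * compute_gp()
    return loss


def kaiming_normal_std(fan_in, negative_slope=0.2):
    """Std of Kaiming (He) normal init in fan-in mode for a Leaky ReLU layer."""
    gain = math.sqrt(2.0 / (1.0 + negative_slope ** 2))
    return gain / math.sqrt(fan_in)


print(discriminator_loss(16, 0.5, lambda: 1.0), kaiming_normal_std(3 * 3 * 64))
```

PyTorch's `torch.nn.init.kaiming_normal_(w, a=0.2, nonlinearity='leaky_relu')` uses the same gain in its default fan-in mode.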

4.3. Comparison Experiment

To evaluate the quality of the generated images and their similarity to the input images, we use the following metrics. Fréchet Inception Distance (FID) [33] measures the distance between the feature distributions of real and generated images and thus reflects the quality of the output images. LPIPS [23] is a deep-feature-based metric for evaluating structural and textural similarity between images; we use it to measure the structural similarity between input and output images despite the textural differences between source and target domain images in LDDT.
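To make the FID definition concrete: FID fits a Gaussian to the Inception features of each image set and computes $\lVert \mu_r - \mu_g \rVert^2 + \operatorname{Tr}(\Sigma_r + \Sigma_g - 2(\Sigma_r \Sigma_g)^{1/2})$. The sketch below assumes diagonal covariances, in which case the trace term reduces to a per-dimension squared difference of standard deviations. It is a simplification for illustration; standard FID uses full covariance matrices of 2048-dimensional Inception-v3 features and a matrix square root.

```python
import math
from statistics import fmean, variance


def fid_diagonal(real, fake):
    """FID between Gaussians fitted to two feature sets, assuming diagonal covariance.

    real, fake: lists of equal-length feature vectors (at least two each)."""
    total = 0.0
    for d in range(len(real[0])):
        r = [v[d] for v in real]
        f = [v[d] for v in fake]
        mean_term = (fmean(r) - fmean(f)) ** 2                          # ||mu_r - mu_g||^2 part
        std_term = (math.sqrt(variance(r)) - math.sqrt(variance(f))) ** 2  # trace part
        total += mean_term + std_term
    return total


print(fid_diagonal([[0.0], [2.0]], [[1.0], [3.0]]))
```

Sample variance (`statistics.variance`) is used here to match the unbiased covariance estimate common in FID implementations.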

To evaluate the performance of LDSGAN, we compare it with several other methods on the Yellow2Anime and Sketch2Anime tasks, including CycleGAN [15], Drit++ [16], UI2I-via-StyleGAN2 (UI2I-StGAN2) [19], AttentionGAN [34], and E2 GAN [35]. We retrained these models on the same training dataset with the same settings. For CycleGAN [15] and Drit++ [16], the number of training epochs is set to 10 and the number of decay epochs to 5. The models are then evaluated with FID and LPIPS on 2000 randomly selected test images. The results are shown in Table 1, and Figure 4 shows some comparison examples.
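The epoch settings for CycleGAN and Drit++ describe a constant learning rate followed by a linear decay. The sketch below is one way to express such a schedule as a multiplier on the base learning rate; the exact decay formula in the baselines' code may differ slightly, and `lr_multiplier` is a hypothetical name.

```python
def lr_multiplier(epoch: int, n_epochs: int = 10, n_epochs_decay: int = 5) -> float:
    """Constant LR for n_epochs, then linear decay toward zero over n_epochs_decay.

    epoch is 0-based."""
    if epoch < n_epochs:
        return 1.0
    decayed = epoch - n_epochs + 1
    return max(0.0, 1.0 - decayed / (n_epochs_decay + 1))


print([round(lr_multiplier(e), 3) for e in range(15)])
```

In PyTorch, a function like this can be passed as `lr_lambda` to `torch.optim.lr_scheduler.LambdaLR`.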

| Method | Yellow2Anime FID↓ | Yellow2Anime LPIPS↓ | Sketch2Anime FID↓ | Sketch2Anime LPIPS↓ |
|---|---|---|---|---|
| CycleGAN [15] | 185.812 | *0.547* | 153.61 | *0.509* |
| Drit++ [16] | 60.156 | 0.605 | 59.995 | 0.583 |
| UI2I-StGAN2 LS = 1 [19] | **46.771** | 0.670 | **44.781** | 0.631 |
| UI2I-StGAN2 LS = 3 [19] | 71.361 | 0.644 | 63.056 | 0.627 |
| UI2I-StGAN2 LS = 5 [19] | 102.405 | 0.618 | 81.551 | 0.594 |
| AttentionGAN [34] | 62.983 | 0.586 | 65.276 | 0.598 |
| E2 GAN [35] | 73.03 | 0.603 | 72.615 | 0.618 |
| LDSGAN (ours) | *31.437* | **0.582** | *29.582* | **0.579** |

- Note: Best results are in italics; second-best results are in bold. Lower is better for both metrics.
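The cross-task averages of the Table 1 scores can be recomputed directly from the table. The snippet below performs only that arithmetic on the reported numbers and produces no new results; `TABLE1` and `task_means` are illustrative names.

```python
from statistics import fmean

# (Yellow2Anime FID, Yellow2Anime LPIPS, Sketch2Anime FID, Sketch2Anime LPIPS), copied from Table 1
TABLE1 = {
    "CycleGAN": (185.812, 0.547, 153.61, 0.509),
    "Drit++": (60.156, 0.605, 59.995, 0.583),
    "UI2I-StGAN2 LS = 1": (46.771, 0.670, 44.781, 0.631),
    "UI2I-StGAN2 LS = 3": (71.361, 0.644, 63.056, 0.627),
    "UI2I-StGAN2 LS = 5": (102.405, 0.618, 81.551, 0.594),
    "AttentionGAN": (62.983, 0.586, 65.276, 0.598),
    "E2 GAN": (73.03, 0.603, 72.615, 0.618),
    "LDSGAN (ours)": (31.437, 0.582, 29.582, 0.579),
}


def task_means(row):
    """Average FID and LPIPS over the two tasks."""
    y_fid, y_lpips, s_fid, s_lpips = row
    return fmean([y_fid, s_fid]), fmean([y_lpips, s_lpips])


for name, row in TABLE1.items():
    fid, lpips = task_means(row)
    print(f"{name:20s} mean FID {fid:8.3f}  mean LPIPS {lpips:.4f}")
```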

As shown in Table 1, LDSGAN achieves better FID scores than all other methods. Although some methods achieve better LPIPS scores, they rely mainly on directly transferring textures from the input images, which do not belong to the target domain. As Figure 4 shows, the images generated by CycleGAN and Drit++ retain too much texture from the input image; this is an advantage of skip connections in SDDT but a major drawback in LDDT. UI2I-via-StyleGAN2 performs poorly when the number of swapped layers is 3 or 5. The main reason is that the generated image is more prone to corruption when higher-level convolutional layers are swapped, because the swapped features must pass through more convolutional layers that do not belong to the original model. Therefore, FID is worse (higher) when the number of swapped layers is 3 or 5. With layer-swap = 1, although the generated image resembles the input image in facial orientation and expression, it fails to preserve the color layout of the input image accurately and loses the decorations in the input image. In summary, LDSGAN obtains the best image quality while effectively maintaining the similarity between the input and output images.

4.4. Ablation Study and Analysis

4.4.1. Ablation Study of RTRS

To analyze the role of RTRS in LDSGAN, we build two variants, w/o RTRS and one RTRS, and compare them with full RTRS. In w/o RTRS, the model uses neither RTRS nor SABlock, so it is equivalent to an autoencoder. One RTRS generates all search codes at the first call to RTRS, whereas full RTRS is the complete method described above. The results are shown in Table 2, and some examples from Sketch2Anime are illustrated in Figure 5.

| Variant | Yellow2Anime FID↓ | Yellow2Anime LPIPS↓ | Sketch2Anime FID↓ | Sketch2Anime LPIPS↓ |
|---|---|---|---|---|
| w/o RTRS | 47.662 | 0.664 | **34.571** | **0.609** |
| One RTRS | **34.621** | **0.604** | 46.156 | 0.628 |
| Full RTRS | *31.437* | *0.582* | *29.582* | *0.579* |

- Note: Italic values indicate the best results and bold values the second-best results.

As Table 2 shows, in the Yellow2Anime task, the w/o RTRS model performs worst on both FID and LPIPS, the one RTRS model is intermediate, and the full RTRS model performs best (lowest values on both metrics).

In the Sketch2Anime task, full RTRS also achieves the lowest LPIPS, although one RTRS scores slightly worse than w/o RTRS on this metric. In Yellow2Anime, one RTRS substantially improves FID over w/o RTRS by exploiting the StyleGAN2-style architecture of the network, whereas full RTRS further improves the similarity between the input and generated images by searching the color layout. For the Sketch2Anime task, whose input images do not have a complex color layout, the FID scores do not follow the Yellow2Anime trend: one RTRS scores worse than w/o RTRS, and full RTRS again scores best.

4.4.2. Ablation Study of Sketch Loss

We analyze the effectiveness of Sketch Loss in maintaining image consistency through ablation studies, comparing variants that exclude both the Soft-Sketch Loss and the Hard-Sketch Loss, use only one of them, or use both. The comparison results are shown in Table 3, and their visualization is shown in Figure 5.

| Variant | Yellow2Anime FID↓ | Yellow2Anime LPIPS↓ | Sketch2Anime FID↓ | Sketch2Anime LPIPS↓ |
|---|---|---|---|---|
| w/o Soft-Sketch + Hard-Sketch Loss | 36.931 | 0.631 | 36.028 | 0.594 |
|  | 32.566 | 0.668 | 28.043 | 0.592 |
|  | 33.676 | 0.649 | 34.752 | 0.609 |
| Soft-Sketch + Hard-Sketch Loss | 31.437 | 0.582 | 29.582 | 0.579 |

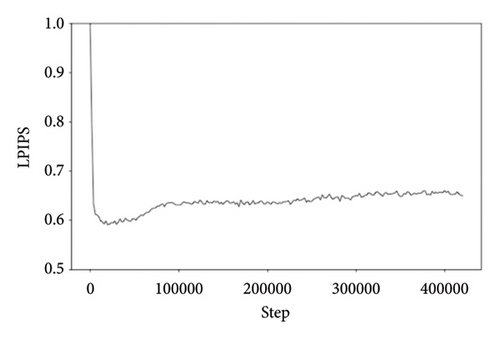

Table 3 shows that although the two single-loss variants (each using only one Sketch Loss term) achieve better (lower) FID than the variant without either term, they perform worse on LPIPS in the Yellow2Anime task. This is because the optimization drives the generated image toward the target domain, which lies further from the source domain. In our experiments, LPIPS is low at the beginning of optimization and increases in the later stages, as shown in Figure 6.

The main reason for this phenomenon is that using either Sketch Loss term alone causes the network to focus on only some of the features of the source domain, and the network may become overly focused on these features as training proceeds. To overcome this shortcoming, we constrain network training with the combination of Soft-Sketch Loss and Hard-Sketch Loss.

5. Conclusion

In this paper, we propose LDSGAN, a novel method that translates images across long domain distances and generates high-quality images while maintaining the similarity between input and generated images. LDSGAN uses SDNet to distill the information of input images while retaining their rich maps. LDTNet is then adopted to further translate the feature maps to the target domain. TDSNet restores the color layout of input images by searching with the rich maps and generating maps in the target domain. Finally, Sketch Loss maintains image identity between the input and generated images. Comprehensive comparisons demonstrate that LDSGAN generates more vivid images in LDDT while maintaining color and layout, showing clear advantages over state-of-the-art methods in LDDT. Because the proposed method suffers from blurred fine shape details, future work will aim to improve the consistency of detailed shapes between input and generated images.


Conflicts of Interest

The authors declare no conflicts of interest.

Author Contributions

Hao Wang: conceptualization, methodology, software, formal analysis, and writing – original draft.

Chenbin Wang: conceptualization, supervision, formal analysis, and writing – review and editing.

Xin Cheng: conceptualization, supervision, formal analysis, and writing – review and editing.

Hao Wu: conceptualization, supervision, formal analysis, and writing – review and editing.

Jiawei Zhang: methodology, validation, investigation, writing – review and editing, and supervision.

Jinwei Wang: project administration, methodology, validation, investigation, writing – review and editing, and supervision.

Xiangyang Luo: funding acquisition, resources, and methodology.

Bin Ma: data curation and visualization.

Funding

This work was supported by the National Key R&D Program of China (Grant No. 2021QY0700), National Natural Science Foundation of China (Grant Nos. 62472229, 62072250, U23A20305, U23B2022, 62371145, 62072480, 62172435, 62302249, 62272255, 62302248, and U20B2065), Zhongyuan Science and Technology Innovation Leading Talent Project of China (Grant No. 214200510019), Open Foundation of Henan Key Laboratory of Cyberspace Situation Awareness (Grant No. HNTS2022002), and Graduate Student Scientific Research Innovation Projects of Jiangsu Province (Grant No. KYCX24_1513).


Open Research

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.