Medical Image Fusion Using Unified Image Fusion Convolutional Neural Network

Abstract

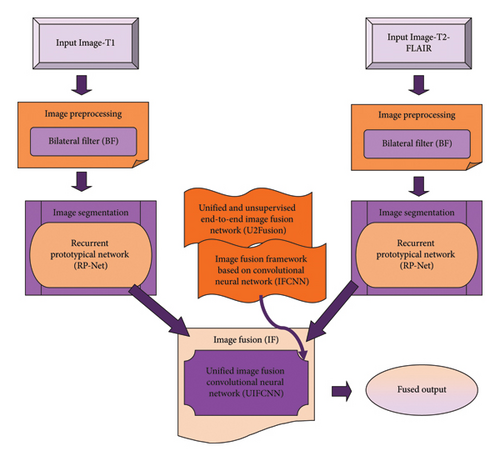

Medical image fusion (IF) is the process of registering and combining multiple images from one or more imaging modalities to enhance image quality and reduce randomness and redundancy, thereby increasing the clinical applicability of medical images for diagnosing and evaluating clinical problems. The additional information obtained from fused images can be used to localize abnormalities with high accuracy. Because different types of images carry different information, generating fused images is difficult for conventional methods. Here, a unified image fusion convolutional neural network (UIFCNN) is designed for IF of medical images. Two input images, native T1 and T2 fluid-attenuated inversion recovery (T2-FLAIR), are taken from the BraTS2020 dataset. The T1 input image is preprocessed with a bilateral filter (BF) and segmented by a recurrent prototypical network (RP-Net) to obtain segmented output-1. Likewise, the T2-FLAIR input image is preprocessed by BF and segmented by RP-Net to obtain segmented output-2. The two segmented outputs are fused by the UIFCNN, which integrates the unified and unsupervised end-to-end IF network (U2Fusion) with the CNN-based IF framework (IFCNN). The UIFCNN achieved a maximum Dice coefficient of 0.928, a maximum Jaccard coefficient of 0.920, and a minimum mean square error (MSE) of 0.221.

1. Introduction

With the growth of medical imaging technologies, clinical images have become increasingly important in medical diagnosis. Medical images of different modalities each have merits and demerits [1]. As an essential and effective supplementary tool, medical images play an increasingly crucial role in advanced diagnosis and treatment. Nevertheless, owing to the limitations of the imaging process, images from a single modality generally cannot provide enough information to meet the requirements of complicated diagnoses [2, 3]. Thus, a single type of medical image cannot reflect pathological tissue information accurately and completely [1]. Multimodal medical image fusion (MMIF) integrates complementary information from the source images and provides the necessary information in a single fused image. MMIF is now clinically important because it gives general practitioners more comprehensive information about disease-related changes, allowing them to provide patients with more accurate treatment and support. Multimodal medical images provide information from different views, helping doctors confirm a diagnosis and decide on future treatment [4]. IF currently offers an effective solution for many medical image processing problems: it automatically identifies the information in different images and combines it into a single image in which every key element is clearly visible [5].

IF is a widely used image processing technique for combining information from two or more images into a single frame [6], which can support the performance of subsequent computer vision tasks [6, 7]. Combining several images improves both the analytical and the visual quality of the image [8]. Effective IF preserves vital information by extracting all significant information from the source images while avoiding inconsistencies in the final image. After fusion, the fused image is better suited to both machine and human perception [8]. MMIF is a robust technique for medical diagnosis and treatment planning, as it combines images acquired from several modalities into a single image. In medical applications, fusion schemes are commonly used to map a functional image onto a structural image [9]. Deep learning (DL) has recently achieved breakthroughs in many computer vision and image processing problems, such as segmentation, super-resolution, and classification. In IF, DL-based research has also become one of the most active topics in recent years, and various DL-enabled IF techniques have been designed for digital photography [10]. These techniques can extract features from the input images and generate a fused image containing the required information [4].

The primary goal of this work is to introduce the UIFCNN for IF of medical images. IF is defined as the combination of salient information from different modalities, using mathematical techniques, to generate a single composite image. In this research, two input images, T1 and T2 fluid-attenuated inversion recovery (T2-FLAIR), are considered. First, the T1 input image is preprocessed using BF, and the filtered image is segmented by RP-Net to obtain segmented output-1. In parallel, the T2-FLAIR input image is preprocessed using BF and segmented by RP-Net to obtain segmented output-2. Both segmented outputs are then passed to the IF stage, where they are fused by the UIFCNN, which is designed by merging U2Fusion with the IFCNN.

The main contributions of this work are as follows:

- Proposed UIFCNN for IF using medical images: This study introduces the UIFCNN, a novel deep-learning architecture designed specifically for medical IF.
- The UIFCNN combines the strengths of U2Fusion and the IFCNN.
- The few-shot segmentation model RP-Net is used to segment the images.

The remainder of this paper is organized as follows: Section 2 reviews the literature and its shortcomings, Section 3 describes the UIFCNN methodology, Section 4 presents and discusses the results, and Section 5 concludes the paper.

2. Motivation

Because of the various challenges in IF, suitable, reliable, and accurate fusion methods are needed for diverse types of images across application areas to obtain the best results. This motivated the development of a new IF technique. This section reviews existing methods and their shortcomings.

2.1. Literature Survey

Duan et al. [3] designed a genetic algorithm (GA)-based method for fusing medical images. It achieved excellent objective evaluation scores and visual effects, its segmentation was highly precise, and comprehensive information was retained in the fusion results; however, the method was less practical because of its long running time. Zhou, Xu, and Zhang [4] presented a dilated residual attention network (DILRAN) for medical image fusion. It converged rapidly and could extract multiscale deep semantic features, although it performed poorly in a few corner cases. Shehanaz et al. [11] introduced optimum weighted average fusion based on particle swarm optimization (OWAF-PSO) to fuse medical images and improve multimodal mapping performance. This model reduced computational time, but it did not include registration approaches for multicenter applications. Rajalingam et al. [10] devised a method combining the non-subsampled contourlet transform and guided filtering (NSCT-GIF) for medical image fusion. It provided information that supports understanding and visualizing diseases; however, it did not exploit the potential of DL approaches for IF. Maseleno et al. [12] established a deep convolutional neural network (DCNN)-based ensemble model for IF, in which several DCNNs extracted high-level features that were then combined by a fusion model based on weighted averaging. It exhibited improved visual and feature-representation quality, but evaluation was difficult on large-scale datasets. Ghosh and Jayanthi [13] established a Dense-ResNet framework for medical IF, in which images of different modalities were preprocessed with a median filter and segmented by an edge-attention guidance network (ET-Net). Its performance was superior, but its complexity was high.

2.2. Challenges

- The GA presented in [3] obtained superior fusion results; however, it did not consider fast postprocessing techniques for improving multimodal clinical IF performance.
- The DILRAN introduced for IF in [4] provided a consistent reference for diagnosing disease in real-world clinical routine, but it did not focus on reducing noise while maintaining overall fusion quality.
- IF is currently an effective solution for automatically extracting information from images, but it suffers from the selection of the decomposition level and decomposition type in real-world applications, high space and time consumption, and loss of contrast.

3. Proposed UIFCNN for IF Using Medical Images

IF recovers crucial information from a group of images and produces a single image that is more informative and useful than any of the input images, improving the applicability and quality of the data. Here, the UIFCNN is devised for IF of medical images, namely T1 and T2-FLAIR. First, the T1 input image is preprocessed by BF and segmented using RP-Net to produce segmented output-1. Likewise, the T2-FLAIR input image is preprocessed using BF, and the filtered image is segmented by RP-Net to produce segmented output-2. The two outputs are then fused in the IF phase by the UIFCNN, which is constructed by integrating U2Fusion with the IFCNN. Figure 1 depicts the UIFCNN for IF using medical images.
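The data flow described above can be summarized in a short Python sketch. Only the wiring mirrors the text (Tf → Af → Rf for T1, Lf → Ef → Pf for T2-FLAIR, then fusion into Ff); the three stage functions at the bottom are hypothetical placeholders, not the paper's BF, RP-Net, or UIFCNN, and they are passed in as arguments so that real implementations could be substituted.

```python
"""Data flow of the two-branch pipeline (sketch; stage implementations are placeholders)."""
from typing import Callable

import numpy as np

Stage = Callable[[np.ndarray], np.ndarray]
Fuser = Callable[[np.ndarray, np.ndarray], np.ndarray]


def run_pipeline(t_f: np.ndarray, l_f: np.ndarray,
                 preprocess: Stage, segment: Stage, fuse: Fuser) -> np.ndarray:
    """T1 (Tf) and T2-FLAIR (Lf) -> filter -> segment -> fuse -> fused image (Ff)."""
    a_f = preprocess(t_f)   # filtered T1 (Af)
    e_f = preprocess(l_f)   # filtered T2-FLAIR (Ef)
    r_f = segment(a_f)      # segmented output-1 (Rf)
    p_f = segment(e_f)      # segmented output-2 (Pf)
    return fuse(r_f, p_f)   # fused image (Ff)


# Hypothetical placeholder stages, NOT the paper's BF, RP-Net, or UIFCNN.
def identity_filter(img: np.ndarray) -> np.ndarray:
    return img


def threshold_segment(img: np.ndarray) -> np.ndarray:
    # Keep pixels brighter than the image mean and zero the rest.
    return np.where(img > img.mean(), img, 0.0)


def average_fuse(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    return 0.5 * (a + b)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    t1, flair = rng.random((64, 64)), rng.random((64, 64))
    fused = run_pipeline(t1, flair, identity_filter, threshold_segment, average_fuse)
    print(fused.shape)  # (64, 64)
```

Keeping the branches symmetric (the same `preprocess` and `segment` applied to both modalities) matches the description, in which BF and RP-Net are used identically on T1 and T2-FLAIR.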

3.1. Acquisition of Input Images

3.2. Preprocessing of Input Images Utilizing BF

Preprocessing directly affects the quality of the resulting images. Here, BF is used to preprocess the input T1 and T2-FLAIR images, denoted Tf and Lf, respectively.
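As an illustration of what BF does, the following brute-force NumPy sketch implements a standard bilateral filter. The paper does not report its BF settings, so the window radius, the spatial spread `sigma_s`, the range spread `sigma_r`, and the assumption that intensities are normalized to [0, 1] are all illustrative choices.

```python
import numpy as np


def bilateral_filter(img: np.ndarray, radius: int = 2,
                     sigma_s: float = 2.0, sigma_r: float = 0.1) -> np.ndarray:
    """Brute-force bilateral filter for a 2-D grayscale image with values in [0, 1].

    Each output pixel is a normalized weighted mean of its (2*radius+1)^2
    neighbourhood. A neighbour's weight is the product of a spatial Gaussian on
    its offset (sigma_s) and a range Gaussian on its intensity difference from
    the centre pixel (sigma_r), so smoothing stops at strong edges.
    """
    img = img.astype(np.float64)
    height, width = img.shape
    padded = np.pad(img, radius, mode="reflect")
    num = np.zeros_like(img)
    den = np.zeros_like(img)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # Neighbour values at offset (dy, dx), aligned with `img`.
            shifted = padded[radius + dy:radius + dy + height,
                             radius + dx:radius + dx + width]
            spatial = np.exp(-(dy * dy + dx * dx) / (2.0 * sigma_s ** 2))
            range_w = np.exp(-((shifted - img) ** 2) / (2.0 * sigma_r ** 2))
            weight = spatial * range_w
            num += weight * shifted
            den += weight
    return num / den  # den >= 1 because the centre pixel always has weight 1
```

Small intensity fluctuations (noise) fall well inside `sigma_r` and are averaged away, while a jump much larger than `sigma_r` (a tissue boundary) receives almost no weight, which is why BF is a common edge-preserving preprocessing step. Where OpenCV is available, `cv2.bilateralFilter(src, d, sigmaColor, sigmaSpace)` provides an optimized equivalent.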

3.3. Image Segmentation Utilizing RP-Net

Image segmentation is a vital task in many computer vision and image processing applications. Its aim is to partition an image into distinct regions according to certain criteria for further processing. For segmentation, the filtered T1 and T2-FLAIR images, denoted Af and Ef, are given as input to RP-Net.

3.3.1. Structure of RP-Net

- (i) Feature encoder: This component extracts features from the query and support images. The input to the network is a set of M support images $z_n \in \mathbb{R}^{T \times E \times 1}$ and a query image $z_p \in \mathbb{R}^{T \times E \times 1}$, padded to the same height T and width E. The query and support images are first aligned using an affine transformation, a common step in many medical imaging tasks.

- (ii) Context relation encoder (CRE): The CRE uses correlation to enhance context association features and forces the network to focus on the shape and context of the region of interest (ROI). In this component, correlation is computed to obtain context association features between background and foreground feature vectors at each spatial position (u, v) of $C_\beta$ and position (u − ε, v − τ) of the corresponding feature map, with offsets ε and τ.

- (iii) Recurrent mask refinement module: This module iteratively applies the CRE and a prototypical network to recapture changes in local contextual features and refine the mask. The prototypical network computes the distance between the query feature vectors and the estimated prototypes $K = \{\kappa_\eta \mid \eta \in N\}$. At each iteration, the module takes the support features $C_n$, the query features $C_p$, and the previous mask $\mu_{b-1}$, uses the CRE to enhance the query features, and employs the prototypical network to produce the segmentation mask $\mu_b$. Here, $f_\varsigma$ denotes an augmented feature, and $\mu_{soft}$ denotes the softmax function applied to generate the output.

The segmented T1 and T2-FLAIR images are denoted Rf and Pf.
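To make the prototype-matching idea concrete, here is a heavily simplified NumPy sketch. It is not RP-Net: the learned feature encoder is replaced by hand-made feature arrays, the CRE is omitted, the cosine-similarity scale of 20 is an assumption, and the refinement loop (blending support prototypes with prototypes pooled under the previous query mask) is a hypothetical stand-in for RP-Net's learned recurrent module. It shows masked average pooling into foreground and background prototypes, a softmax over prototype similarities, and a mask that is fed back for several iterations.

```python
import numpy as np


def masked_average(feat: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Masked average pooling: feat (C, H, W) and mask (H, W) in [0, 1] -> prototype (C,)."""
    return (feat * mask).sum(axis=(1, 2)) / (mask.sum() + 1e-8)


def cosine_map(feat: np.ndarray, proto: np.ndarray) -> np.ndarray:
    """Cosine similarity between each spatial feature vector and a prototype -> (H, W)."""
    dot = np.einsum("chw,c->hw", feat, proto)
    norms = np.linalg.norm(feat, axis=0) * np.linalg.norm(proto)
    return dot / (norms + 1e-8)


def predict_mask(query: np.ndarray, fg: np.ndarray, bg: np.ndarray,
                 scale: float = 20.0) -> np.ndarray:
    """Softmax over scaled similarities to (background, foreground) -> foreground probability."""
    logits = scale * np.stack([cosine_map(query, bg), cosine_map(query, fg)])
    logits -= logits.max(axis=0, keepdims=True)  # numerical stability
    prob = np.exp(logits)
    prob /= prob.sum(axis=0, keepdims=True)
    return prob[1]


def segment_query(support: np.ndarray, support_mask: np.ndarray,
                  query: np.ndarray, steps: int = 3) -> np.ndarray:
    """Prototype segmentation whose mask is fed back and refined `steps` times."""
    fg = masked_average(support, support_mask)
    bg = masked_average(support, 1.0 - support_mask)
    mask = predict_mask(query, fg, bg)
    for _ in range(steps):
        # Hypothetical refinement: mix support prototypes with query prototypes
        # pooled under the previous mask, then predict a new mask.
        fg_ref = 0.5 * (fg + masked_average(query, mask))
        bg_ref = 0.5 * (bg + masked_average(query, 1.0 - mask))
        mask = predict_mask(query, fg_ref, bg_ref)
    return mask
```

Thresholding the returned probability map at 0.5 gives a binary mask. Feeding the mask back lets the prototypes adapt to the query image, which is the intuition behind recurrent refinement, even though RP-Net learns this step rather than using a fixed blend.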

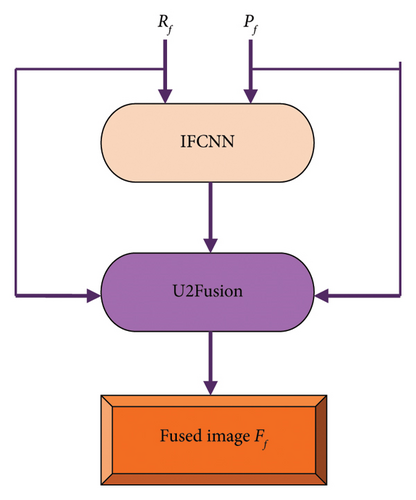

3.4. IF Using UIFCNN

In the IF process, the segmented images from different modalities are fused to obtain a better visual appearance and richer information. Here, IF is performed by the UIFCNN, which merges U2Fusion with the IFCNN; its general outline is shown in Figure 2. First, the segmented T1 and T2-FLAIR images Rf and Pf are fed to the IFCNN. Then, both segmented outputs, together with the IFCNN output, are passed to the U2Fusion network, which produces the fused image [17].

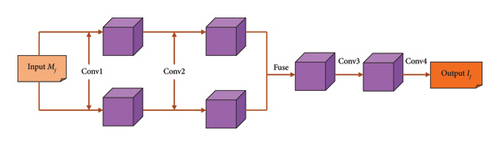

3.4.1. Structure of IFCNN

- (i) Feature extraction (FE) module: Two convolutional (conv) layers first extract low-level features from the input image. FE is a critical step in transform-domain IF algorithms, where it is performed by filtering the images with several filters. Because Conv1 already extracts effective image features, its parameters are fixed during model training. Conv2 is added to tune the conv features of Conv1 so that they are suitable for feature fusion (FF).

- (ii) Feature fusion (FF) module: The FF module can fuse different types of input images as well as an arbitrary number of input images. Element-wise fusion rules are used to fuse the conv features of the N inputs:

$$\hat{f}^{\Upsilon} = \mathrm{fuse}\left(f_{1}^{\Upsilon}, f_{2}^{\Upsilon}, \ldots, f_{N}^{\Upsilon}\right)$$

where $f_{X}^{\Upsilon}$ denotes the Υth feature map of the Xth input image extracted by Conv2, $\hat{f}^{\Upsilon}$ denotes the Υth channel of the fused feature map produced by the FF module, N is the number of input images, and fuse denotes the element-wise fusion rule.

- (iii) Image reconstruction (IR) module: Because the FE module contains only two conv layers, the extracted conv features are not highly abstract. Therefore, two further conv layers, Conv3 and Conv4, are used in the final stage of the network to reconstruct the fused image from the fused conv features. The architecture of the IFCNN is illustrated in Figure 3, and its output is denoted If.
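The element-wise FF step can be sketched in a few lines of NumPy. The paper does not state which element-wise rule the UIFCNN uses, so the three options below (element-wise maximum, sum, and mean) are illustrative choices, and the (C, H, W) feature shape is an assumption.

```python
from typing import Sequence

import numpy as np


def fuse_features(features: Sequence[np.ndarray], rule: str = "max") -> np.ndarray:
    """Element-wise fusion of conv feature maps from any number of inputs.

    Each array in `features` holds the Conv2 features of one input image with
    shape (C, H, W); channel Y of the result fuses channel Y of every input.
    """
    stack = np.stack(features, axis=0)  # (N, C, H, W)
    if rule == "max":
        return stack.max(axis=0)
    if rule == "sum":
        return stack.sum(axis=0)
    if rule == "mean":
        return stack.mean(axis=0)
    raise ValueError(f"unknown fusion rule: {rule!r}")
```

Because every rule reduces over the input axis, the same function fuses two, three, or more images without any change, which is what allows the FF module to accept an arbitrary number of inputs.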

3.4.2. Structure of U2Fusion

- (i) FE: The single-channel input Zf is duplicated into three channels and then fed to Visual Geometry Group-16 (VGG-16). The outputs of the conv layers preceding the max-pooling layers serve as the feature maps for the subsequent information measurement.

- (ii) Information measurement: The information contained in the extracted feature maps is measured using their gradients:

$$k_{Z_f} = \frac{1}{5}\sum_{r=1}^{5}\frac{1}{H_r W_r L_r}\sum_{h=1}^{L_r}\left\|\nabla \phi_r^{h}(Z_f)\right\|_Q^{2}$$

where $\phi_r^{h}(Z_f)$ denotes the feature map in the hth of the $L_r$ channels output by the conv layer prior to the rth max-pooling layer, $H_r$ and $W_r$ are the height and width of that feature map, $\|\cdot\|_Q$ denotes the Frobenius norm, and ∇ refers to the Laplacian operator.

- (iii) Information preservation degree: Two adaptive weights, ϖ1 and ϖ2, are assigned as information preservation degrees; they define the similarity weights between each source image and the fused image so that the information in the source images is preserved. To enhance the difference between the weights, the information measures are scaled by a predefined positive constant ξ, which allows the weights to be assigned more effectively:

$$[\varpi_1, \varpi_2] = \mathrm{softmax}\left(\left[\frac{k_{Z_f}^{(1)}}{\xi}, \frac{k_{Z_f}^{(2)}}{\xi}\right]\right)$$

where $k_{Z_f}^{(1)}$ and $k_{Z_f}^{(2)}$ are the information measures of the two source images. The softmax operation maps these scaled values to real numbers between 0 and 1. The fused image obtained from U2Fusion is denoted Ff.
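The information measurement and weighting steps can be sketched in NumPy as follows, modelled on the U2Fusion formulation: for each layer, the squared Frobenius norms of the Laplacian of every channel are summed and normalized by the feature-map size, the layers are averaged, and the two scaled measures are passed through a softmax. For illustration only, the VGG-16 feature maps are replaced by arbitrary arrays (for example, the three-channel copy of an image), the Laplacian is a 4-neighbour finite-difference stencil with replicated borders, and ξ is a free parameter because its value is not reported.

```python
from typing import Sequence, Tuple

import numpy as np


def to_three_channels(img: np.ndarray) -> np.ndarray:
    """Duplicate a single-channel (H, W) image into (3, H, W), as done before VGG-16."""
    return np.repeat(img[None, ...], 3, axis=0)


def laplacian(x: np.ndarray) -> np.ndarray:
    """4-neighbour discrete Laplacian of a 2-D map with replicated borders."""
    p = np.pad(x, 1, mode="edge")
    return p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4.0 * x


def information_measure(feature_maps: Sequence[np.ndarray]) -> float:
    """Average over layers of sum_h ||Laplacian(channel h)||_F^2 / (H_r * W_r * L_r)."""
    total = 0.0
    for fmap in feature_maps:  # each fmap has shape (L_r, H_r, W_r)
        l_r, h_r, w_r = fmap.shape
        energy = sum(float(np.sum(laplacian(ch) ** 2)) for ch in fmap)
        total += energy / (h_r * w_r * l_r)
    return total / len(feature_maps)


def preservation_weights(k1: float, k2: float, xi: float) -> Tuple[float, float]:
    """Softmax of [k1 / xi, k2 / xi]: two weights in (0, 1) that sum to 1."""
    z = np.array([k1, k2], dtype=np.float64) / xi
    z -= z.max()  # numerical stability; softmax is shift-invariant
    e = np.exp(z)
    w = e / e.sum()
    return float(w[0]), float(w[1])
```

A larger ξ flattens the weights toward 0.5 each, while a smaller ξ lets the source whose feature maps carry more gradient information dominate the fused result.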

4. Results and Discussion

This section presents the results achieved by the UIFCNN for IF.

4.1. Experimentation Setup

The UIFCNN for IF of medical images is implemented in Python 3.9.11 on Windows 10 with a 1.7 GHz CPU, 8 GB of RAM, and more than 100 GB of storage. The parameter settings of the UIFCNN are listed in Table 1.

| Parameter | Value |
|---|---|
| Learning rate | 0.01 |
| Batch size | 32 |
| Epochs | 100 |
| Loss function | MSE |

4.2. Dataset Description

The Brain Tumor Segmentation 2020 (BraTS2020) dataset [14] contains multimodal scans provided as NIfTI files (.nii.gz). It includes native T1, postcontrast T1-weighted (T1Gd), T2-weighted (T2), and T2-FLAIR volumes. The dataset is approximately 7 GB in size.

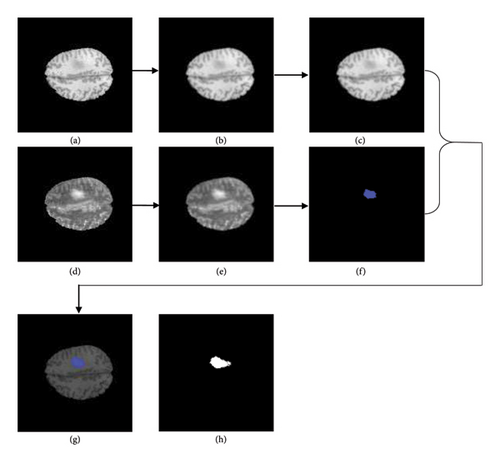

4.3. Experimental Outcomes

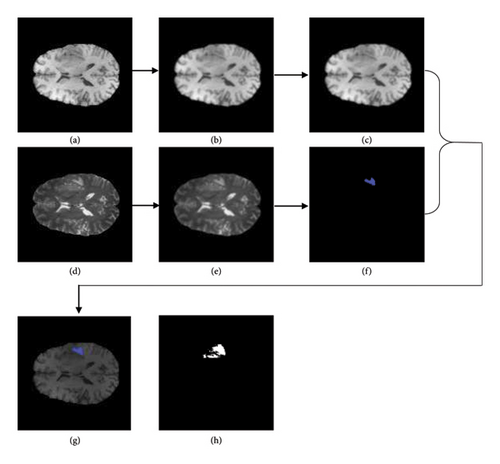

Figure 5 shows the experimental results for image-1. Figures 5(a), 5(b), and 5(c) show the input, filtered, and segmented T1 images, respectively. Figures 5(d), 5(e), and 5(f) show the input, filtered, and segmented T2-FLAIR images, respectively, while Figure 5(g) shows the fused image and Figure 5(h) the ground-truth image.

Figure 6 shows the experimental results for image-2. Figures 6(a), 6(b), and 6(c) show the input, filtered, and segmented T1 images, respectively. Figures 6(d), 6(e), and 6(f) show the input, filtered, and segmented T2-FLAIR images, respectively, while Figure 6(g) shows the fused image and Figure 6(h) the ground-truth image.

4.4. Performance Measures

The UIFCNN is evaluated using MSE, the Dice coefficient, and the Jaccard coefficient [20–22].

4.4.1. MSE
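For reference, the standard definition of MSE is given below. The paper's own equation is not shown here, so the notation ($F_f$ for the fused image and $G$ for the ground-truth image, both of size $H \times W$) is an assumption:

$$\mathrm{MSE} = \frac{1}{HW}\sum_{i=1}^{H}\sum_{j=1}^{W}\left(F_f(i,j) - G(i,j)\right)^{2}$$

Lower values indicate that the fused image is closer to the reference.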

4.4.2. Dice Coefficient
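The standard Dice coefficient for a predicted region $A$ and a ground-truth region $B$ (notation assumed here) is:

$$\mathrm{Dice} = \frac{2\,|A \cap B|}{|A| + |B|}$$

It ranges from 0 (no overlap) to 1 (perfect overlap).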

4.4.3. Jaccard Coefficient
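The standard Jaccard coefficient for the same regions $A$ and $B$ (notation assumed here) is:

$$\mathrm{Jaccard} = \frac{|A \cap B|}{|A \cup B|}$$

For a single pair of regions, the two overlap scores are related by $J = D/(2 - D)$, so the Jaccard coefficient of an image never exceeds its Dice coefficient. The three metrics can be computed with the short NumPy sketch below; binarizing masks with `astype(bool)` and scoring two empty masks as a perfect match (1.0) are assumptions.

```python
import numpy as np


def mse(fused: np.ndarray, reference: np.ndarray) -> float:
    """Mean squared error over all pixels."""
    diff = fused.astype(np.float64) - reference.astype(np.float64)
    return float(np.mean(diff ** 2))


def dice(pred: np.ndarray, truth: np.ndarray) -> float:
    """Dice = 2|A and B| / (|A| + |B|) for binary masks."""
    a, b = pred.astype(bool), truth.astype(bool)
    denom = a.sum() + b.sum()
    if denom == 0:
        return 1.0  # both masks empty: treated as perfect agreement
    return float(2.0 * np.logical_and(a, b).sum() / denom)


def jaccard(pred: np.ndarray, truth: np.ndarray) -> float:
    """Jaccard = |A and B| / |A or B| for binary masks."""
    a, b = pred.astype(bool), truth.astype(bool)
    union = np.logical_or(a, b).sum()
    if union == 0:
        return 1.0  # both masks empty: treated as perfect agreement
    return float(np.logical_and(a, b).sum() / union)
```

For example, a prediction covering two pixels of which one matches a one-pixel ground truth gives Dice = 2/3 and Jaccard = 1/2, consistent with $J = D/(2 - D)$.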

4.5. Comparative Techniques

GA [3], DILRAN [4], OWAF-PSO [11], and NSCT-GIF [10] are the existing methods used for comparison to demonstrate the efficacy of the UIFCNN.

4.6. Comparative Analysis

The UIFCNN is evaluated on these metrics while varying the training data and the k-value.

4.6.1. Analysis Regarding Training Data

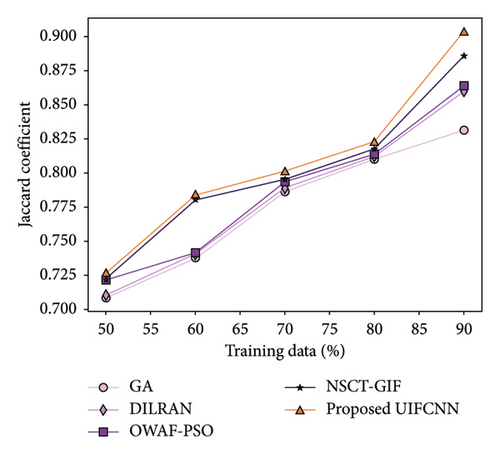

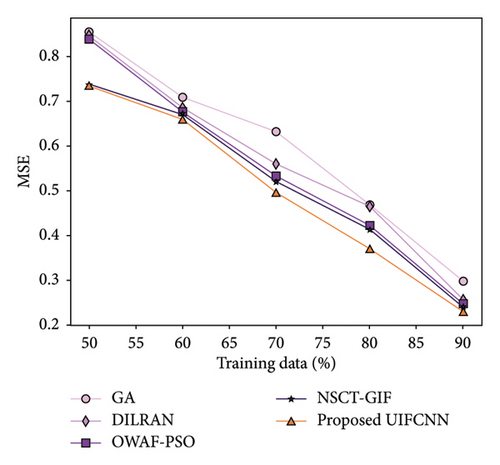

Figure 7 shows the evaluation of the UIFCNN on each metric as the amount of training data varies; the values reported here are the best values obtained by each method with 90% training data. Figure 7(a) compares the methods using the Dice coefficient: the UIFCNN attained 0.907, whereas GA, DILRAN, OWAF-PSO, and NSCT-GIF obtained 0.881, 0.886, 0.897, and 0.900, respectively. Figure 7(b) shows the evaluation with respect to the Jaccard coefficient: the UIFCNN achieved 0.904, compared with 0.832 for GA, 0.860 for DILRAN, 0.864 for OWAF-PSO, and 0.886 for NSCT-GIF. Figure 7(c) presents the analysis in terms of MSE: GA, DILRAN, OWAF-PSO, and NSCT-GIF achieved MSEs of 0.298, 0.256, 0.248, and 0.240, respectively, whereas the UIFCNN attained 0.231.

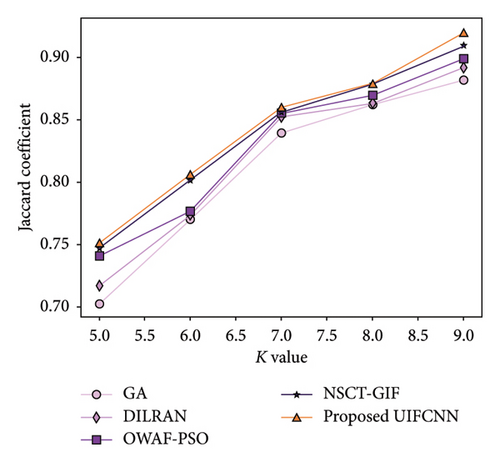

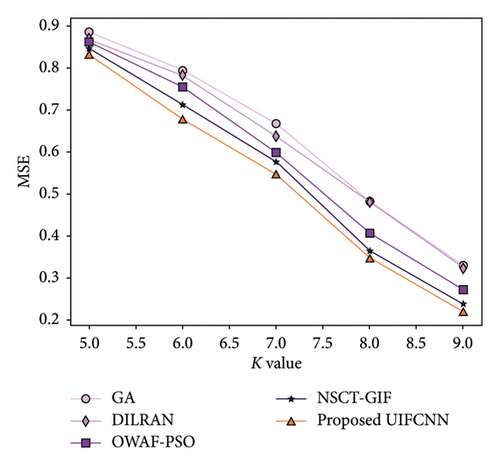

4.6.2. Analysis Regarding K Value

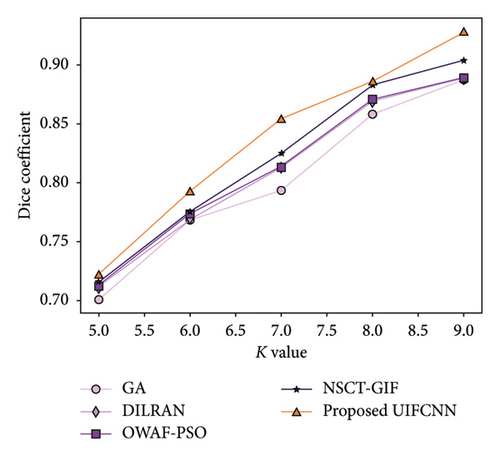

Figure 8 shows the evaluation of the UIFCNN on each metric as the k-value varies; the values reported here were obtained with k-value = 9. Figure 8(a) compares the methods using the Dice coefficient: the UIFCNN attained 0.928, whereas GA, DILRAN, OWAF-PSO, and NSCT-GIF achieved 0.887, 0.889, 0.889, and 0.904, respectively. Figure 8(b) shows the analysis based on the Jaccard coefficient: GA, DILRAN, OWAF-PSO, and NSCT-GIF obtained 0.882, 0.892, 0.899, and 0.909, respectively, whereas the UIFCNN obtained 0.920. Figure 8(c) displays the assessment with respect to MSE: the UIFCNN achieved 0.221, while GA, DILRAN, OWAF-PSO, and NSCT-GIF obtained 0.330, 0.324, 0.273, and 0.238, respectively.

4.7. Comparative Discussion

Table 2 summarizes the values obtained by the UIFCNN and the compared approaches (GA, DILRAN, OWAF-PSO, and NSCT-GIF) in both assessments. With k-value = 9, the UIFCNN achieved the highest Dice coefficient (0.928) and Jaccard coefficient (0.920) and the lowest MSE (0.221), indicating that it outperformed the compared methods for IF of medical images.

| Setups | Metrics/methods | GA | DILRAN | OWAF-PSO | NSCT-GIF | Proposed UIFCNN |
|---|---|---|---|---|---|---|
| Training data = 90% | Dice coefficient | 0.881 | 0.886 | 0.897 | 0.900 | **0.907** |
| | Jaccard coefficient | 0.832 | 0.860 | 0.864 | 0.886 | **0.904** |
| | MSE | 0.298 | 0.256 | 0.248 | 0.240 | **0.231** |
| K value = 9 | Dice coefficient | 0.887 | 0.889 | 0.889 | 0.904 | **0.928** |
| | Jaccard coefficient | 0.882 | 0.892 | 0.899 | 0.909 | **0.920** |
| | MSE | 0.330 | 0.324 | 0.273 | 0.238 | **0.221** |

Note: Bold values represent the proposed work results.

5. Conclusion

Medical IF has attracted increasing attention in advanced diagnosis and healthcare over several decades. Its primary objective is to fuse complementary information from multimodal clinical images to obtain higher-quality images, and it is widely used in biomedical research and clinical diagnosis. Fusing clinical images into one image without loss or distortion of information remains an important research problem. In this research, the UIFCNN is presented for IF of medical images, using T1 and T2-FLAIR images as inputs. First, the T1 input image is preprocessed by BF and segmented by RP-Net to obtain segmented output-1. Likewise, the T2-FLAIR input image is preprocessed using BF, and the filtered image is segmented by RP-Net to obtain segmented output-2. Both segmented outputs are fused in the IF phase by the UIFCNN, which integrates U2Fusion with the IFCNN. With the k-value set to 9, the UIFCNN achieved a Dice coefficient of 0.928, a Jaccard coefficient of 0.920, and an MSE of 0.221. This work uses only two types of input images, both magnetic resonance imaging (MRI) sequences (T1 and T2-FLAIR), which may limit the generalization of the model to other imaging modalities, such as computed tomography (CT) and positron emission tomography (PET), and to other MRI sequences. Testing and refining the model on a more diverse dataset covering various imaging techniques will be considered in future work.

Ethics Statement

The authors have nothing to report.

Conflicts of Interest

The authors declare no conflicts of interest.

Author Contributions

Balasubramaniam S.: conceptualization, investigation, data curation, formal analysis, and writing–original draft; Vanajaroselin Chirchi: revision support; Sivakumar T. A.: writing support; Gururama Senthilvel P.: review and editing; Duraimutharasan N.: revision support.

Funding

This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Open Research

Data Availability Statement

The data used to support the findings of this study are available from the corresponding authors upon reasonable request.