A Scalable and Generalised Deep Learning Framework for Anomaly Detection in Surveillance Videos

Abstract

Anomaly detection in videos is challenging due to the complexity, noise, and diverse nature of activities such as violence, shoplifting, and vandalism. While deep learning (DL) has shown excellent performance in this area, existing approaches have struggled to apply DL models across different anomaly tasks without extensive retraining. This repeated retraining is time-consuming, computationally intensive, and unfair. To address this limitation, this study introduces a new DL framework consisting of three key components: transfer learning to enhance feature generalization, model fusion to improve feature representation, and multitask classification to generalize the classifier across multiple tasks without training from scratch when a new task is introduced. The framework’s main advantage is its ability to generalize without requiring retraining from scratch for each new task. Empirical evaluations demonstrate the framework’s effectiveness, with an accuracy of 97.99% on the RLVS dataset (violence detection), 83.59% on the UCF-Crime dataset (shoplifting detection), and 88.37% across both datasets using a single classifier without retraining. When tested on unseen violence and shoplifting datasets, the framework achieved accuracies of 87.25% and 79.39%, respectively. The study also uses two explainability tools to identify potential biases, supporting the framework’s robustness and fairness. To the authors’ knowledge, this research represents the first successful resolution of the generalization issue in anomaly detection, marking a significant advancement in the field.

1. Introduction

- A novel framework has been introduced for incorporating a new anomaly class into an existing AD model without retraining from scratch.

- A deep feature fusion method is proposed to integrate diverse DL models for better feature representation.

- The deep feature fusion approach achieved an accuracy of 83.59% on the University of Central Florida (UCF)-Crime dataset and 97.99% on the RLVS dataset, outperforming the previous methods it was compared with.

- Based on our experiments with the UCF-Crime and RLVS datasets, the multitask classification approach accurately detected and categorised two abnormal behaviour classes (shoplifting and violence) as well as normal behaviour, achieving an accuracy of 88.37%. This approach significantly enhances the ability to identify and categorise abnormal behaviours across different scenarios. To the best of our knowledge, no existing approach in VAD has achieved a similar level of performance with a single model across multiple tasks.

- The proposed framework was tested on unseen datasets, achieving accuracies of 87.25% and 79.39% on the violence and shoplifting datasets, respectively.

2. Related Works

This section provides an overview of the evolving landscape of VAD research, highlighting key studies and methodologies that harness the potential of ML and DL to enhance the accuracy and efficiency of AD systems. A study [11] presented a new DL architecture for identifying violent behaviours in videos. The method leverages recurrent neural networks (RNNs) and a 2D CNN to capture spatial and temporal features, and integrates optical flow information to encode motion patterns. The proposed approach was tested successfully on multiple databases. In [12], the authors proposed an approach to help monitoring staff direct their attention to the screens where a crime is most likely. This approach involved identifying situations in video footage that may signal an impending crime. They employed a 3D CNN to examine surveillance videos and capture behavioural attributes for the identification of suspicious actions. The model was trained using carefully chosen videos from the UCF-Crime dataset. In [13], a Hybrid CNN Framework (HCF) was presented to identify distracted driver behaviours by leveraging DL and image processing techniques. The framework employed pretrained CNN models in collaboration to extract behavioural features through TL, thereby improving result accuracy. In [14], TL was employed to enhance the accuracy of abnormal behaviour detection by extracting human motion characteristics from RGB video frames. The authors utilised the VGGNet-19 architecture for feature extraction and subsequently applied a Support Vector Machine (SVM) classifier to identify complex motion scenarios. In [15], several DL models, such as CNN, Long Short-Term Memory (LSTM), CNN-LSTM, and Autoencoder-CNN-LSTM, were explored to identify unusual behaviours in older people. The models were trained using temporal and spatial data, which enabled them to make accurate predictions. 
To tackle the issue of data imbalance, the researchers oversampled minority classes, especially for the LSTM model. Overall, the study provides insights into how DL can be used to detect and prevent unusual behaviours in elderly individuals. In [16], the authors tackled the issue of shoplifting by focusing on detecting suspicious behaviours that may lead to criminal activities. Instead of identifying the crime itself, their approach aims to model and detect behaviours that precede criminal acts, providing opportunities for prevention. They used a 3D CNN to extract video features and classify segments with potential shoplifting behaviour. In [17], the authors introduced a shoplifting detection system based on a DNN. The system used the InceptionV3 model for feature extraction and LSTM networks to model temporal sequences, identifying individuals involved in shoplifting activities with an accuracy of up to 74.53%. The paper [18] presented a deep violence detection approach that leverages handcrafted features related to appearance, speed of movement, and representative images. These features are input into a CNN through spatial, temporal, and spatiotemporal streams. The spatial stream captured environment patterns, the temporal stream focused on motion patterns using modified optical flow, and the spatiotemporal stream introduced a novel feature to enhance interpretability. The CNN was trained on datasets containing both violent and normal behaviour frames. A study [19] used DL techniques to detect abnormal driver behaviours such as smoking, eating, drinking, and calling. A dataset comprising these behaviours and normal driving was created to train and test the models. The study evaluated DL models, including a proposed CNN-based model and pretrained models such as ResNet101, VGG-16, VGG-19, and Inception-v3. Keyframe extraction was used to optimise computation. In [20], a shoplifting detection system was introduced.
This approach used a hybrid neural network that combined convolutional and recurrent components to extract information from video frames and analyse their temporal sequence; specifically, it employed gated recurrent units for data processing. Data augmentation was conducted to mitigate class imbalance and enlarge the dataset. A pretrained MobileNetV3Large CNN was combined with a recurrent network incorporating gated nodes for classification. In [21], a method for detecting violent behaviour using keyframes was proposed. This approach treats video frames as discrete events and detects instances of violence by assessing whether the count of keyframes exceeds a predefined threshold, thereby reducing hardware demands. Furthermore, the paper introduced a novel training technique that leverages pairs of background-removed and original images to improve feature extraction for DL models without adding network complexity. In [22], a model for detecting crowd violence, named HD-Net, was developed. It utilised a human contour extractor to minimise background noise by focusing on the individuals in video frames, and a dynamic feature encoder to extract dynamic features from adjacent frames. The model is built on a 3D CNN framework for spatial feature extraction and an LSTM for temporal feature fusion. The authors of [23] proposed a convolutional autoencoder architecture that can detect anomalies in appearance and motion patterns. The architecture uses two components, a spatial and a temporal autoencoder, to separate spatial and temporal representations. The spatial autoencoder captures appearance features by reconstructing the initial frame, whereas the temporal autoencoder models motion through RGB differences across sequential frames. To further enhance the performance of the motion autoencoder, the paper incorporated a variance-based attention module that highlights critical movement areas.
A novel deep K-means clustering approach was also introduced to extract concise representations. In [24], the authors presented a CNN model to detect crowd anomalies in video sequences. The model comprises two convolutional layers followed by two fully connected layers that use Rectified Linear Unit (ReLU) and sigmoid activation functions. The intermediate layers generate features that are used for abnormality detection. The model’s performance was evaluated on three research datasets containing normal and abnormal activities, and the results showed that the model also performed effectively on random YouTube videos exhibiting abnormal behaviour. In [25], a new approach was introduced to detect abnormal behaviour of workers in manufacturing environments. The model identifies and describes unusual worker actions based on their interaction with objects, using a combination of Mask R-CNN, MediaPipe Holistic, LSTM, and a worker behaviour description algorithm. The approach involved object recognition, worker pose identification, and pattern analysis to differentiate between typical and unusual actions. Anomalous behaviours include worker falls, slips, tool breakage, and machine failures. The article [26] presented an anomaly recognition model employing a deep CNN architecture. This model extracts deep features from surveillance video frames and feeds them to a temporal convolutional network (TCN) with a multihead attention module. The TCN comprises multiple layers of temporal convolutional filters with varying dilation rates, enabling it to capture diverse temporal contexts and long-range dependencies. It is trained by minimising a cross-entropy loss to optimise its parameters for accurate classification of activities in sequential data. The research presented in [27] utilised TL with InceptionV3 (TL-InceptionV3) to improve AD in surveillance cameras.
Two TL methods, pretraining and fine-tuning, were employed with InceptionV3 to classify frames as normal or abnormal. The UCF-Crime dataset was utilised for training and evaluation, and the fine-tuning approach significantly outperformed the pretraining approach. In [28], a benchmark dataset of 900 samples, evenly divided into 450 shoplifting and 450 nonshoplifting instances, was annotated across different shoplifting scenarios. This dataset was used to assess shoplifting detection techniques, including a 2D CNN, a 3D CNN, and a novel hybrid method that combines InceptionV3 and a bidirectional LSTM; the hybrid approach outperformed the others. In [29], a DL method was introduced for identifying violence in animation videos. The research involved modifying a Faster R-CNN model to handle the intricate aspects of violence depicted in cartoon and animation content. The modifications included replacing the model’s backbone with a customised RegNet to capture frame features, using a modulated deformable convolutional (MDC) layer instead of the standard inner lateral connection for flexible feature map extraction, and introducing a novel distributed attention module (DAM) within the feature pyramid network to enhance feature extraction. The researchers also incorporated a modified multiscale Region of Interest (ROI) Align to improve violence detection across diverse scenarios, and integrated a classification component into the detection model to categorise different levels of violence within each frame. In [30], an innovative semisupervised hard attention mechanism was introduced. This mechanism identifies and separates crucial regions within videos from less informative data segments.
The model’s accuracy improved because it efficiently eliminated redundant data and highlighted valuable visual information at a higher resolution. This approach obviated the need for attention annotations in video violence datasets, making it more widely applicable. The proposed model utilised a pretrained I3D backbone to expedite and stabilise the training process. Ref. [31] proposed a novel approach to enhance the generalisation of violence detection across multiple scenarios. This approach employed three pretrained CNN models, Xception, InceptionV3, and InceptionResNetV2, to extract significant features from the RLVS and Hockey datasets. The features extracted from each dataset were fused into a separate feature pool, and the pools from the first and second violence scenarios were then combined into a unified feature space, facilitating the training of an ML classifier capable of generalising across multiple scenarios. However, this approach does not address generalisation across multitask AD. The article [32] explored advanced techniques to enhance aggression detection using multimodal fusion and DL in surveillance systems. The study addressed the limitations of traditional single-modality approaches by integrating audio, visual, and text-based features, along with additional meta-information such as Audio-Focus, Video-Focus, Context, History, and Semantics. Four distinct fusion methods were developed and compared: intermediate-level fusion, concatenation-based fusion, and two methods involving element-wise operations followed by concatenation. In [33], the authors proposed a method to enhance the detection of abnormal actions, particularly human aggression and car accidents, using wavelet-based channel augmentation.
The core of the proposed method was MultiWave-Net, a spatiotemporal network designed to integrate wavelet transformation with traditional DL architectures such as CNNs and ConvLSTMs. The wavelet-based channel augmentation technique was applied to improve the feature extraction capabilities of these networks, allowing them to better capture both the spatial and temporal aspects of the input data. The article [34] proposed an approach to recognising violent behaviours in videos by leveraging the MLP-Mixer architecture and a new dataset format called Sequential Image Collage (SIC). SIC aggregates video frames into collages that capture spatial and temporal dimensions, enhancing the model’s understanding of violent actions. These collages and the original frames were processed through the MLP-Mixer architecture, which relies solely on Multilayer Perceptrons (MLPs) for computational efficiency. The method involved patch embedding, token mixing, and channel mixing operations to capture the dataset’s local and global features. The paper [35] proposed a VAD approach, namely AnomalyCLIP, to detect and classify abnormal events at the frame level using only video-level supervision. The method manipulated the latent CLIP feature space to define a normal event subspace, allowing it to detect anomalies based on text-driven prompts. It incorporated a Transformer architecture to enhance this capability, capturing both short- and long-term temporal dependencies between frames. The authors of [36] proposed a lightweight, trainable 3D CNN to recognise violent actions in public places by processing spatial and temporal information. This model is designed to be computationally efficient for deployment on resource-constrained devices such as mobile systems. In [37], the authors introduced an ensemble approach comprising three CNN architectures integrated with a stacked LSTM to enhance model performance, using the RLVS and RWF-2000 datasets to evaluate the model’s generalisation ability across diverse datasets.

Despite the advanced AI methods introduced in the literature to detect and recognise anomalous behaviours in videos, a significant challenge remains concerning generalisation. These methods require retraining the entire model from scratch when a new anomaly class is introduced, which increases time and computational resource requirements, especially when the model is complex or requires extensive training. This challenge presents a practicality and efficiency issue for AD systems. Therefore, new methods are urgently needed to address the generalisation issue in multitask AD without requiring extensive retraining.

3. Materials and Methods

3.1. Datasets

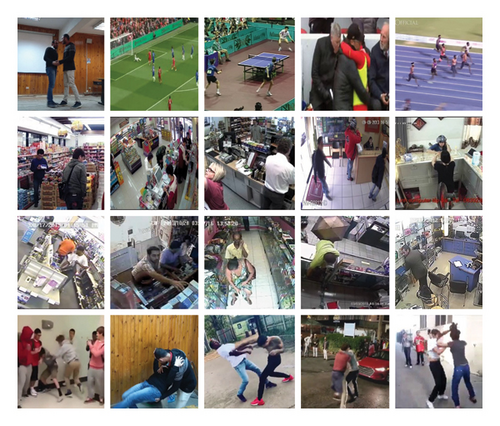

The UCF-Crime and RLVS datasets were employed in this article. The UCF-Crime dataset [38] is widely recognised in criminal activity recognition research and was compiled by the UCF. It is publicly accessible for research purposes and includes 1900 untrimmed surveillance videos gathered from platforms such as YouTube, TV news, and documentaries, varying in quality and resolution. This dataset encompasses 128 h of footage depicting 13 real-world criminal activities, including abuse, arrest, arson, assault, road accidents, shoplifting, and more. For this investigation, samples were extracted specifically from the “shoplifting” and “normal” classes, each comprising 50 video clips recorded in retail stores. The RLVS dataset [39], on the other hand, consists of 2000 video clips, equally divided between violent and everyday (nonviolent) activities. The violent videos depict physical altercations in various environments, such as streets, prisons, and schools. Videos within the RLVS dataset have high resolutions, ranging from 480p to 720p, with durations of 3–7 s. Frames were extracted at a 10-frame interval (keeping one frame out of every ten), resulting in six frames per second. Notably, some frames within violent and shoplifting videos were eliminated during the data cleaning phase because they did not depict the relevant actions and were more similar to frames from normal videos. Figure 1 shows some samples from both the UCF and RLVS datasets.
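The frame-sampling step can be illustrated with a short Python sketch. It is not the authors’ code: frames are represented by any iterable (in practice they might come from a video decoder such as OpenCV’s `cv2.VideoCapture`), and the source frame rates below are assumptions, because the text does not state them. The arithmetic shows that keeping one frame in ten would give six frames per second for 60 fps footage and three for 30 fps footage.

```python
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def sample_every_nth(frames: Iterable[T], interval: int = 10) -> Iterator[T]:
    """Yield frames 0, interval, 2*interval, ... from any iterable of frames."""
    if interval < 1:
        raise ValueError("interval must be a positive integer")
    for index, frame in enumerate(frames):
        if index % interval == 0:
            yield frame


def effective_fps(source_fps: float, interval: int = 10) -> float:
    """Frame rate of the sampled sequence when one frame per `interval` is kept."""
    return source_fps / interval


# Hypothetical clip: 120 integer indices stand in for decoded images.
clip: List[int] = list(range(120))
kept = list(sample_every_nth(clip, interval=10))
print(len(kept))                # 12
print(effective_fps(60.0, 10))  # 6.0 (assumed 60 fps source)
print(effective_fps(30.0, 10))  # 3.0 (assumed 30 fps source)
```

Working on a generic iterable keeps the sampling logic independent of how the video is decoded, so the same function could wrap any frame reader.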

3.2. CNN Architectures

This study utilised four deep CNN models, MobileNetV2, InceptionV3, InceptionResNetV2, and Xception, to tackle the challenge of detecting anomalous video behaviours. These models offer several advantages. They achieve strong results on the ImageNet dataset, which is widely acknowledged as the benchmark for CV tasks. Their architectures are well suited to feature extraction, capturing a broad spectrum of features through diverse filter sizes ranging from 1 × 1 to 7 × 7. ReLU-family activations improve the quality of the feature representation, and the residual connections in MobileNetV2, InceptionResNetV2, and Xception help address the vanishing gradient problem. Dropout layers and global average pooling (GAP) are also utilised in these models to mitigate the risk of overfitting, and batch normalisation layers expedite training. Together, these advantages make the models effective methods for VAD. The following subsections provide concise descriptions of the models used in this work.

3.2.1. MobileNetV2 Model

MobileNetV2 is an efficient and lightweight CNN designed explicitly for mobile and embedded devices. Its architecture begins with a full convolutional layer containing 32 filters, followed by 19 residual bottleneck layers. These bottlenecks come in two block types, each comprising three layers: a 1 × 1 expansion convolution, a 3 × 3 depthwise convolution, and a final 1 × 1 linear projection convolution. ReLU6 activation follows the expansion and depthwise layers, while the projection layer is left linear. The primary distinction between the two block types is their stride: the first uses a stride of 1 and adds a residual connection between its input and output, while the second uses a stride of 2 to downsample the feature maps [40]. MobileNetV2 thus strikes a careful balance between model size and performance, making it particularly suitable for resource-constrained applications such as mobile devices and embedded systems.
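To make the block structure concrete, here is a NumPy-only sketch of the two bottleneck types. It is an illustration under stated assumptions, not the Keras or PyTorch implementation: weights are random placeholders, batch normalisation is omitted, and the expansion factor of 6 is the default from the original MobileNetV2 paper. The function names are hypothetical.

```python
import numpy as np


def relu6(x: np.ndarray) -> np.ndarray:
    """ReLU capped at 6, the activation used inside MobileNetV2 bottlenecks."""
    return np.clip(x, 0.0, 6.0)


def depthwise_conv3x3(x: np.ndarray, kernel: np.ndarray, stride: int = 1) -> np.ndarray:
    """Apply one 3 x 3 filter per channel (no channel mixing) with zero padding of 1."""
    h, w, c = x.shape
    padded = np.pad(x, ((1, 1), (1, 1), (0, 0)))
    out_h, out_w = (h - 1) // stride + 1, (w - 1) // stride + 1
    out = np.zeros((out_h, out_w, c), dtype=x.dtype)
    for i in range(out_h):
        for j in range(out_w):
            patch = padded[i * stride:i * stride + 3, j * stride:j * stride + 3, :]
            out[i, j, :] = (patch * kernel).sum(axis=(0, 1))
    return out


def inverted_residual(x, w_expand, k_depthwise, w_project, stride=1):
    """One bottleneck on an (H, W, C) feature map; batch norm omitted."""
    hidden = relu6(x @ w_expand)                                    # 1 x 1 expansion + ReLU6
    hidden = relu6(depthwise_conv3x3(hidden, k_depthwise, stride))  # 3 x 3 depthwise + ReLU6
    out = hidden @ w_project                                        # 1 x 1 linear projection
    if stride == 1 and out.shape == x.shape:
        out = out + x                                               # residual only in stride-1 blocks
    return out


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 8, 16))
expansion = 6  # default expansion factor in the MobileNetV2 paper
w_e = rng.standard_normal((16, 16 * expansion)) * 0.1
k_d = rng.standard_normal((3, 3, 16 * expansion)) * 0.1
y_stride1 = inverted_residual(x, w_e, k_d, rng.standard_normal((96, 16)) * 0.1, stride=1)
y_stride2 = inverted_residual(x, w_e, k_d, rng.standard_normal((96, 24)) * 0.1, stride=2)
print(y_stride1.shape, y_stride2.shape)  # (8, 8, 16) (4, 4, 24)
```

The stride-1 block preserves the spatial size and channel count, so its output can be added to its input; the stride-2 block halves the spatial size and therefore has no shortcut.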

3.2.2. InceptionV3 Model

InceptionV3 [41] is a CNN architecture for image classification and object recognition tasks. It is known for its deep structure and its Inception modules, which apply parallel convolutional layers of different sizes together with pooling operations to capture features at multiple scales. It leverages factorised convolutions to reduce the number of network parameters, includes auxiliary classifiers to aid training, and uses GAP to mitigate overfitting, while extensive use of batch normalisation accelerates training. These attributes allow InceptionV3 to achieve high accuracy in image classification while keeping computational complexity manageable, making it suitable for a wide range of CV applications.
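The parameter savings from factorised convolutions can be checked with simple arithmetic. The sketch below assumes equal input, intermediate, and output channel widths (a hypothetical 64) and ignores biases; it compares a 5 × 5 convolution with two stacked 3 × 3 convolutions, and a 7 × 7 convolution with a 1 × 7 plus 7 × 1 pair, two factorisations used in InceptionV3.

```python
def conv_weights(kh: int, kw: int, c_in: int, c_out: int) -> int:
    """Weight count of a kh x kw convolution (bias terms ignored)."""
    return kh * kw * c_in * c_out


channels = 64  # hypothetical width, equal for input, intermediate and output

# A 5 x 5 convolution versus two stacked 3 x 3 convolutions (same 5 x 5 receptive field).
full_5x5 = conv_weights(5, 5, channels, channels)
stacked_3x3 = 2 * conv_weights(3, 3, channels, channels)

# A 7 x 7 convolution versus an asymmetric 1 x 7 followed by 7 x 1 pair.
full_7x7 = conv_weights(7, 7, channels, channels)
asymmetric_7 = conv_weights(1, 7, channels, channels) + conv_weights(7, 1, channels, channels)

for name, full, factored in [("5x5", full_5x5, stacked_3x3), ("7x7", full_7x7, asymmetric_7)]:
    saving = 1 - factored / full
    print(f"{name}: {full} -> {factored} weights ({saving:.1%} fewer)")
```

Under these assumptions the script prints `5x5: 102400 -> 73728 weights (28.0% fewer)` and `7x7: 200704 -> 57344 weights (71.4% fewer)`. The ratios do not depend on the chosen width, since it cancels out.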

3.2.3. InceptionResNetV2 Model

InceptionResNetV2 is a deep CNN architecture combining elements from the Inception and ResNet architectures [42]. It was designed to improve feature learning and representation in CV tasks. InceptionResNetV2 integrates residual connections, similar to ResNet, to facilitate the training of deep networks while also utilising Inception modules to capture features at multiple scales. This architecture typically includes a stem module to preprocess input data, multiple Inception-ResNet blocks that increase network depth, and final layers for classification or feature extraction. This combination of architectural elements aims to achieve superior performance in tasks such as image classification and object detection.
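The residual idea in InceptionResNetV2 can be sketched in NumPy as follows. This is a simplified, hypothetical block rather than the published one: every branch is a 1 × 1 convolution, weights are random, and batch normalisation is omitted. Scaling the residual before the addition mirrors the residual-scaling practice reported for Inception-ResNet (factors of roughly 0.1 to 0.3); the value 0.2 is an illustrative choice.

```python
import numpy as np


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def inception_resnet_block(x, branch_weights, w_project, scale=0.2):
    """Simplified Inception-ResNet block on an (H, W, C) feature map.

    Published blocks mix 1 x 1, 3 x 3 and asymmetric convolutions in their
    branches; here every branch is a 1 x 1 convolution to keep the sketch short.
    """
    branches = [relu(x @ w) for w in branch_weights]  # parallel Inception-style branches
    mixed = np.concatenate(branches, axis=-1)         # concatenate along channels
    residual = mixed @ w_project                      # 1 x 1 linear projection back to C channels
    return relu(x + scale * residual)                 # scaled residual added to the shortcut


rng = np.random.default_rng(0)
x = rng.random((6, 6, 32))                            # non-negative toy feature map
branch_weights = [rng.standard_normal((32, 16)) * 0.1 for _ in range(3)]
w_project = rng.standard_normal((48, 32)) * 0.1
y = inception_resnet_block(x, branch_weights, w_project)
print(y.shape)  # (6, 6, 32)
```

Because the shortcut carries the input through unchanged, the branches only need to learn a correction to it; with a zero projection the block reduces to passing its (non-negative) input straight through.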

3.2.4. Xception Model

The Xception network [43] evolves from Inception by replacing its modules with depthwise separable convolution layers. This design maps spatial correlations and cross-channel correlations separately rather than jointly. Xception comprises 36 convolutional layers structured into 14 modules, all of which have linear residual connections around them except the first and last modules. Each depthwise separable convolution first applies a spatial convolution independently to every input channel and then uses a 1 × 1 pointwise convolution to combine the resulting channels, replacing the single three-dimensional kernel of a standard convolution.
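The efficiency argument behind depthwise separable convolutions reduces to a weight count. The sketch below compares a standard 3 × 3 convolution with its separable counterpart at 728 channels, the width of Xception’s middle-flow modules; biases are ignored and the numbers are purely illustrative.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """One k x k x c_in kernel per output channel: spatial and channel mixing done jointly."""
    return k * k * c_in * c_out


def separable_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Depthwise k x k filter per input channel, then a 1 x 1 pointwise channel mix."""
    depthwise = k * k * c_in  # spatial correlations, one channel at a time
    pointwise = c_in * c_out  # cross-channel correlations
    return depthwise + pointwise


width = 728  # channel width of Xception's middle-flow modules, used for illustration
standard = standard_conv_params(3, width, width)
separable = separable_conv_params(3, width, width)
print(standard, separable, round(standard / separable, 2))
```

It prints `4769856 536536 8.89`, i.e. roughly a ninefold reduction in weights for this layer shape.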

The selected CNN models—MobileNetV2, InceptionV3, InceptionResNetV2, and Xception—were chosen due to their unique architectural strengths and their proven effectiveness on the ImageNet dataset, a benchmark for CV tasks. MobileNetV2 is a lightweight and efficient model optimized for resource-constrained environments, making it ideal for real-time applications. InceptionV3 and InceptionResNetV2 are renowned for their ability to capture multiscale features through parallel convolutional layers, with the latter incorporating residual connections for enhanced gradient flow in deep networks. Xception leverages depthwise separable convolutions to optimise spatial and cross-channel correlations, reducing computational complexity while maintaining high accuracy. These models provide complementary advantages in feature extraction, offering a diverse set of representations for the AD task.

These models utilise prior knowledge from large-scale datasets such as ImageNet, allowing them to transfer generalised features to new tasks. This significantly reduces the need for extensive labelled data, speeds up convergence, and improves performance with minimal computational resources. In contrast, non-pretrained networks must be trained from scratch, which demands substantial computational resources, larger datasets, and longer training times, making them less practical for real-world AD scenarios. In this context, self-supervised learning can be an alternative to transfer learning from ImageNet [4]. By leveraging pretrained networks, the framework efficiently captures generalised features and refines them for specific tasks, supporting robust and accurate AD.

3.3. Part 1: Proposed Framework, Deep Feature Fusion Approach

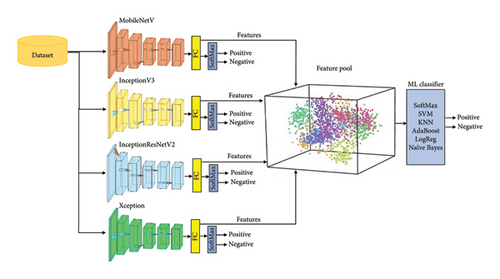

Each CNN model has its own architecture and filter sizes, so the features it extracts from the input data differ from those of the other models; combining these features yields a richer representation for detecting anomalies in surveillance videos, which in turn improves overall performance. The proposed deep feature fusion approach (Figure 2) uses four CNN models, MobileNetV2, InceptionV3, InceptionResNetV2, and Xception, as feature extractors to capture features from the input video frames. These features, extracted from the individual models, are then combined into a unified feature pool; the different colours in the feature pool correspond to features extracted by different CNN models. The feature pool is used to train ML classifiers, which assign class labels and classify human behaviours as normal or abnormal. Six classifiers were used to recognise anomalies in the captured videos: SVM, SoftMax, K-Nearest Neighbor (KNN), AdaBoost, Logistic Regression (LogReg), and Naive Bayes.
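The fusion step amounts to concatenating per-frame feature vectors from the four extractors and training a classifier on the result. The NumPy sketch below assumes the features are taken from the globally average-pooled outputs of the Keras ImageNet backbones (1280, 2048, 1536, and 2048 dimensions), uses random synthetic features in place of real CNN outputs, and implements KNN, one of the six listed classifiers, directly rather than through a library; none of these choices is confirmed by the text, and `fuse_features` and `knn_predict` are hypothetical names.

```python
import numpy as np

# Pooled output widths of the Keras ImageNet backbones (assumed extraction point:
# include_top=False with global average pooling).
FEATURE_DIMS = {"MobileNetV2": 1280, "InceptionV3": 2048, "InceptionResNetV2": 1536, "Xception": 2048}


def fuse_features(per_model: dict) -> np.ndarray:
    """Concatenate (n_frames, d_m) matrices from each extractor into one feature pool."""
    blocks = list(per_model.values())
    n_frames = blocks[0].shape[0]
    if any(b.shape[0] != n_frames for b in blocks):
        raise ValueError("all extractors must describe the same frames in the same order")
    return np.concatenate(blocks, axis=1)


def knn_predict(train_x, train_y, test_x, k=5):
    """Euclidean k-nearest-neighbour majority vote."""
    dists = np.linalg.norm(test_x[:, None, :] - train_x[None, :, :], axis=-1)
    nearest = np.argsort(dists, axis=1)[:, :k]
    return np.array([np.bincount(train_y[idx]).argmax() for idx in nearest])


# Synthetic stand-in for extracted features: 50 frames, label 0 = normal, 1 = abnormal.
rng = np.random.default_rng(0)
labels = rng.integers(0, 2, size=50)
per_model = {name: rng.standard_normal((50, d)) + 0.5 * labels[:, None]
             for name, d in FEATURE_DIMS.items()}
pool = fuse_features(per_model)
pred = knn_predict(pool[:40], labels[:40], pool[40:])
print(pool.shape)  # (50, 6912)
print("held-out accuracy:", (pred == labels[40:]).mean())
```

Because fusion here is plain concatenation, adding another extractor in this sketch only means adding one more entry to `per_model`; the existing feature blocks are reused unchanged.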

The deep fusion approach offers several benefits. It provides a flexible means of combining multiple CNN models without the need to train them from scratch. This approach allows for the incorporation of new models trained on specific datasets by extracting features from the final fully connected layer and then inserting them into the feature space. This incorporation method saves time and computational costs, eliminating the need to retrain the already-used individual models. Moreover, the fusion of features extracted from multiple models yields a broader and more inclusive array of information from which classifiers can acquire knowledge. This technique empowers ML classifiers to harness the distinct strengths and characteristics of the individual models, thus enhancing the holistic understanding of the target task. Furthermore, combining diverse models can mitigate the risk of overfitting and enhance generalisation capability.

3.4. Part 2: Proposed Framework, Multitask Classification

Traditional AD models struggle to handle new or unforeseen anomalies, making them susceptible to false negatives (FN) or misclassifications. In contrast to binary AD, which deals with only one class of anomalies and one class of normal data, multiclass AD addresses scenarios in which multiple types of anomalies are present.

The proposed multitask classification approach introduces a critical innovation: it eliminates the need to retrain the entire model from scratch when a new anomaly class is introduced. The approach unifies features extracted by various CNN models from multiple datasets with different classes into a single feature space, and these features are then used to train ML classifiers. Figure 3 provides a schematic diagram of the multitask classification model. In the scenario presented in this paper, two feature fusion pools were used: the UCF-features pool and the RLVS-features pool, which contain the fused features extracted from the UCF and RLVS datasets, respectively. The UCF-features pool comprises features related to the normal and shoplifting classes, while the RLVS-features pool includes features representing the normal and violent classes.

To categorise incoming frames as violent, shoplifting, or normal, the proposed approach combined the UCF-features pool and the RLVS-features pool, along with their corresponding class labels, into a unified feature space. This feature space was then used to train the ML classifiers to assign incoming frames to their respective classes.
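The construction of the unified feature space can be sketched as a relabelling followed by stacking. This is a minimal NumPy illustration, assuming that normal frames from both datasets share one label (as the three-way violent/shoplifting/normal categorisation implies) and that both pools were produced by the same fused extractors; `UNIFIED`, `to_unified`, and `build_unified_space` are hypothetical names.

```python
import numpy as np

# Unified label space for the multitask classifier (the id order is an assumption).
UNIFIED = {"normal": 0, "shoplifting": 1, "violence": 2}


def to_unified(local_labels, local_names):
    """Map dataset-local labels (0 = normal, 1 = anomaly) to unified class ids."""
    return np.array([UNIFIED[local_names[int(label)]] for label in local_labels])


def build_unified_space(ucf_x, ucf_y, rlvs_x, rlvs_y):
    """Stack both fused feature pools and relabel them into one three-class problem."""
    if ucf_x.shape[1] != rlvs_x.shape[1]:
        raise ValueError("both pools must come from the same fused extractors")
    x = np.vstack([ucf_x, rlvs_x])
    y = np.concatenate([
        to_unified(ucf_y, {0: "normal", 1: "shoplifting"}),
        to_unified(rlvs_y, {0: "normal", 1: "violence"}),
    ])
    return x, y


# Toy pools with 8-dimensional features standing in for the fused CNN features.
ucf_x, ucf_y = np.zeros((4, 8)), np.array([0, 1, 1, 0])
rlvs_x, rlvs_y = np.ones((3, 8)), np.array([1, 0, 1])
x, y = build_unified_space(ucf_x, ucf_y, rlvs_x, rlvs_y)
print(x.shape, y.tolist())  # (7, 8) [0, 1, 1, 0, 2, 0, 2]
```

Under the same assumptions, adding a further anomaly type would mean extending `UNIFIED` and stacking one more pool: the pretrained CNN extractors are reused as they are, and only the lightweight ML classifier is fitted again on the enlarged feature space.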

There are several advantages to incorporating new classes without going through full retraining. This approach can save time and computational resources, especially when dealing with complex models or models that require significant training time. It also enables the system to adapt to emerging anomaly types, which enhances its robustness in dynamic environments. This approach effectively addresses a considerable challenge in ML by allowing models to adapt to evolving anomaly patterns efficiently.

3.5. Training

- Training and testing each individual CNN model on the UCF dataset.

- Training and testing each individual CNN model on the RLVS dataset.

- Assessing the performance of the deep fusion model through distinct tests on the UCF and RLVS datasets.

- Finally, evaluating the proposed multitask classification approach, which combines the features captured from the UCF and RLVS datasets, each containing a different abnormal behaviour.

3.6. Explainable Tools

1. Grad-CAM

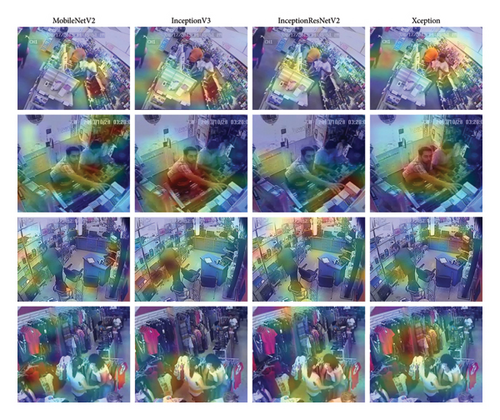

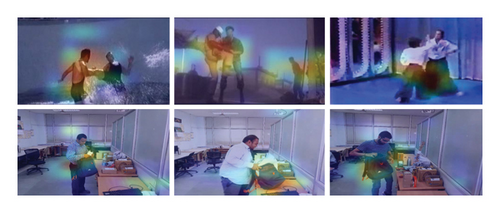

Grad-CAM is an interpretability technique designed to explain the predictions of CNN-based DL models in a coherent and comprehensible manner by visualising the image regions that matter most to a prediction. The approach uses gradients to highlight the areas of the image that have the greatest influence on the model’s decision. Grad-CAM starts with a forward pass, processing an image through a pretrained CNN. Backpropagation then calculates how changes in each feature map influence the final prediction score for a specific class. GAP computes an importance score for each feature map by averaging its gradients, and these importance scores are used as weights in a weighted sum of the feature maps. A ReLU activation keeps only the positive contributions, and the final output is a heatmap highlighting the image regions that influenced the CNN’s decision; brighter areas correspond to more influential regions [45, 46]. Researchers can analyse these regions to better understand the model’s reasoning and to verify whether the influential regions align with their expectations, which increases confidence in the model’s predictions. In our study, we applied Grad-CAM to the final convolutional layers of the CNN, because these layers capture the complex, higher-level features that are essential for making final predictions, unlike earlier layers that focus on basic edges and textures.
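The Grad-CAM computation described above can be written in a few lines once the activations and gradients of the chosen convolutional layer are available. The NumPy sketch below assumes those arrays have already been obtained from a DL framework’s automatic differentiation, and it omits the final upsampling of the map to the frame resolution; `grad_cam` and the toy inputs are hypothetical.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM map for one convolutional layer.

    activations: (H, W, K) feature maps A^k from the forward pass.
    gradients:   (H, W, K) gradients of the target-class score w.r.t. A^k.
    """
    weights = gradients.mean(axis=(0, 1))   # global average pooling of gradients: one weight per map
    cam = activations @ weights             # weighted sum of the K feature maps -> (H, W)
    cam = np.maximum(cam, 0.0)              # ReLU: keep only regions that raise the class score
    peak = cam.max()
    return cam / peak if peak > 0 else cam  # normalise to [0, 1] for display as a heatmap


# Toy layer with two feature maps: map 0 fires top-left, map 1 fires bottom-right.
acts = np.zeros((4, 4, 2))
acts[:2, :2, 0] = 1.0
acts[2:, 2:, 1] = 1.0
grads = np.zeros((4, 4, 2))
grads[..., 0] = 0.5   # map 0 pushes the class score up
grads[..., 1] = -0.5  # map 1 pushes it down
heatmap = grad_cam(acts, grads)
print(heatmap)
```

In the toy example only the top-left quadrant survives the ReLU, which is the behaviour the text describes: regions whose feature maps lower the class score do not appear in the heatmap.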

2. t-SNE

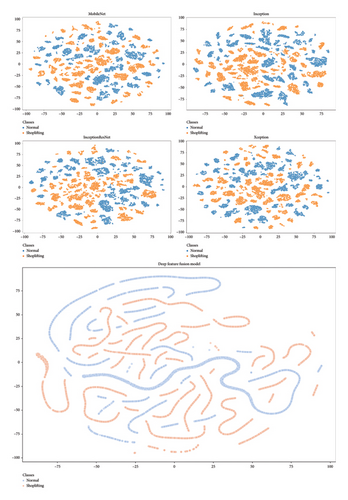

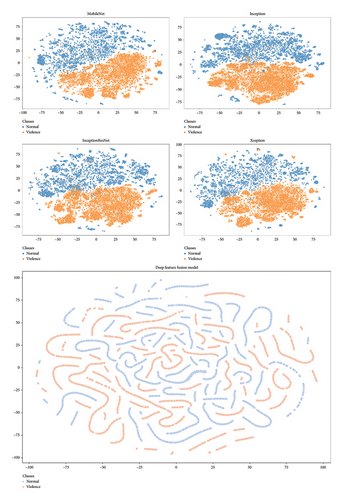

t-SNE (t-Distributed Stochastic Neighbor Embedding) is a nonlinear dimensionality reduction technique that preserves the structure of the data at various scales, with an emphasis on local neighbourhoods. It is particularly well suited to visualising high-dimensional datasets: the low-dimensional representation it produces can be plotted to reveal clusters, patterns, and relationships that are difficult to discern in the original high-dimensional space. This paper uses t-SNE to examine how the fusion techniques improve the feature space. Feature fusion techniques combine features from multiple layers or sources to create more comprehensive representations, which can improve model performance. By using t-SNE to visualise the extracted features, we aimed to observe how feature fusion enhances the separation between classes in a lower-dimensional space, revealing the improved discriminative power of fused features compared with nonfused ones. Additionally, t-SNE allowed us to identify potential biases in the feature space, as unintended clustering or overlap between certain categories could indicate problematic areas in the model’s learned representations. This insight was crucial in refining the feature fusion process so that it contributed to both the model’s robustness and fairness.

4. Experimental Results

This section presents the experimental results of the DL models used for AD, focusing on violence and shoplifting detection in video datasets. It reports the performance of the individual CNN models, the proposed deep feature fusion model, and the multitask classification approach, assessed using accuracy, recall, precision, and F1 score.

4.1. Evaluation Metrics
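The four metrics reported in this section follow their standard binary definitions in terms of true positives (TP), false positives (FP), true negatives (TN), and false negatives (FN). The minimal sketch below assumes the anomalous class (violence or shoplifting) is treated as the positive class; the function name and the counts in the example are hypothetical.

```python
def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Return accuracy, recall, precision and F1 score as percentages."""
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total
    # Recall: share of true anomalies that were detected.
    recall = tp / (tp + fn) if tp + fn else 0.0
    # Precision: share of raised alarms that were real anomalies.
    precision = tp / (tp + fp) if tp + fp else 0.0
    # F1: harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    scores = {"accuracy": accuracy, "recall": recall, "precision": precision, "f1": f1}
    return {name: 100 * value for name, value in scores.items()}


# Hypothetical counts: 90 anomalies detected, 10 missed, 5 false alarms, 95 correct normals.
m = binary_metrics(tp=90, fp=5, tn=95, fn=10)
```

For the three-class multitask results, per-class scores would additionally have to be averaged (for example, macro-averaging); the choice of average affects the reported recall, precision, and F1 values.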

4.2. Experimental Results of Individual CNN Models

The four deep CNN models used in this work were evaluated on VAD tasks using the UCF and RLVS datasets, as described in the following subsections.

4.2.1. Experiment Results on UCF Dataset

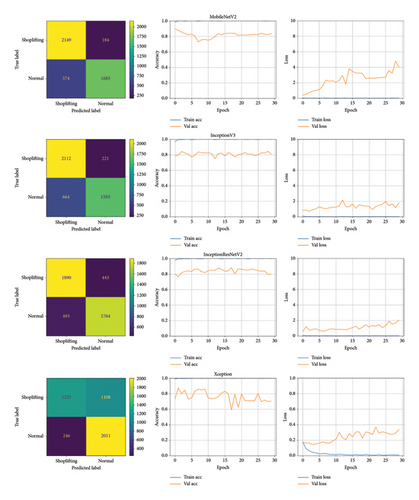

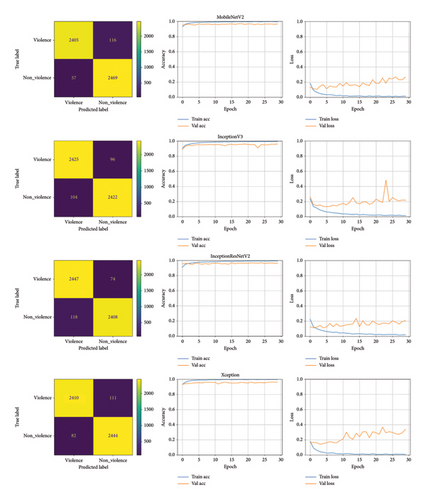

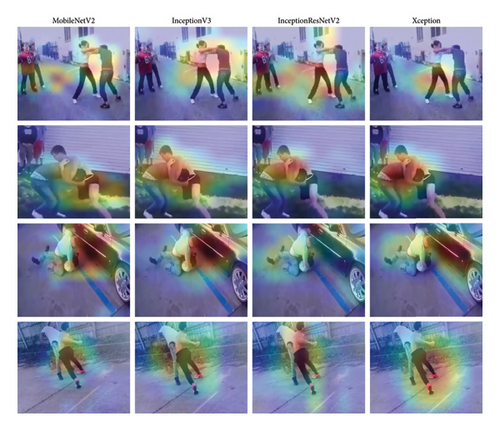

The pretrained CNN models were evaluated using their accuracy, their training and validation loss curves, and their confusion matrices, as shown in Figure 4. Table 1 presents the evaluation metrics obtained by testing these models on the UCF dataset. MobileNetV2 achieved the highest accuracy, precision, and F1 score, making it a suitable choice when minimizing false positives (FPs) is a priority. Xception, in contrast, had the highest recall but the lowest precision, making it a suitable option when capturing as many true positives (TPs) as possible is the priority. InceptionResNetV2 achieved the most balanced trade-off between recall and precision, whereas InceptionV3, like MobileNetV2, favored precision over recall. Additionally, Figure 5 displays the Grad-CAM-generated heatmaps for these individual models.

| Model | Accuracy (%) | Recall (%) | Precision (%) | F1 score (%) |
|---|---|---|---|---|
| MobileNet | 83.48 | 74.56 | 90.14 | 81.61 |
| Inception | 80.71 | 70.58 | 87.81 | 78.26 |
| InceptionResNet | 79.60 | 78.15 | 79.92 | 79.03 |
| Xception | 70.50 | 89.10 | 64.47 | 74.81 |
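Because F1 is the harmonic mean of precision and recall, the F1 column of Table 1 can be checked against the other two columns. The short check below, using values copied from Table 1, reproduces each reported F1 score to within rounding; the helper name is illustrative.

```python
# (recall %, precision %, reported F1 %) copied from Table 1 (UCF dataset).
table1 = {
    "MobileNet": (74.56, 90.14, 81.61),
    "Inception": (70.58, 87.81, 78.26),
    "InceptionResNet": (78.15, 79.92, 79.03),
    "Xception": (89.10, 64.47, 74.81),
}


def harmonic_f1(recall: float, precision: float) -> float:
    """F1 score as the harmonic mean of recall and precision."""
    return 2 * precision * recall / (precision + recall)


for model, (rec, prec, reported) in table1.items():
    print(f"{model}: recomputed F1 = {harmonic_f1(rec, prec):.2f}, reported = {reported:.2f}")
```

This check applies to binary scores. For the three-class results in Table 5 it does not apply directly: if per-class scores are macro-averaged, F1 is the mean of the per-class F1 values rather than the harmonic mean of the averaged recall and precision.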

4.2.2. Experiment Results on RLVS Dataset

In this scenario, the four models were trained and tested on the RLVS dataset. The models were trained and validated over multiple epochs, and their losses, accuracies, and confusion matrices were recorded to assess their performance; the results are presented in Figure 6. Table 2 summarizes the performance metrics of these models on the RLVS dataset. All four models achieved high accuracy (at least 96.0%), showing that they can reliably classify videos as violent or normal. The differences between the models in accuracy, recall, and F1 score are small, with MobileNet holding a slight edge on all three, including the highest recall (97.74%) for violent instances. InceptionResNet exhibited the highest precision (97.0%). Figure 7 displays the heatmaps generated by Grad-CAM.

| Model | Accuracy (%) | Recall (%) | Precision (%) | F1 score (%) |
|---|---|---|---|---|
| MobileNet | 96.57 | 97.74 | 95.51 | 96.61 |
| Inception | 96.0 | 95.88 | 96.18 | 96.0 |
| InceptionResNet | 96.19 | 95.32 | 97.0 | 96.16 |
| Xception | 96.17 | 96.75 | 95.65 | 96.20 |

4.3. Experimental Results of the Deep Feature Fusion Model

The following subsections present the experimental results of the deep feature fusion model on the UCF and RLVS datasets. Each CNN model focused on a distinct ROI, and fusing the features of the four models proved highly effective in providing informative features to the ML classifiers.

4.3.1. Experimental Results of the Deep Fusion Model on the UCF Dataset

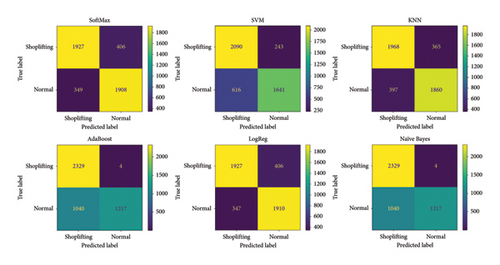

The proposed feature fusion model was evaluated on the UCF dataset to assess its effectiveness in recognizing shoplifting behavior in surveillance videos. As described earlier, the model combines the features extracted by the individual CNN models, each trained on the UCF dataset, into a unified feature pool. We then assessed its performance on the UCF test set using six classifiers. Table 3 and Figure 8 present the results and the corresponding confusion matrices. With the LogReg classifier, the proposed model outperforms the individual CNN models in accuracy and F1 score, achieving an accuracy of 83.59%, a recall of 84.62%, a precision of 82.46%, and an F1 score of 83.53%. This is an improvement of 0.11 percentage points in accuracy over MobileNetV2, the most accurate individual model (83.48%). Figure 9 shows the feature distributions visualized using t-SNE for the UCF dataset, for the individual CNN models and for the fused features.

| Classifier | Accuracy (%) | Recall (%) | Precision (%) | F1 score (%) |
|---|---|---|---|---|
| LogReg | 83.59 | 84.62 | 82.46 | 83.53 |
| SoftMax | 83.55 | 84.53 | 82.45 | 83.48 |
| KNN | 83.39 | 82.41 | 83.59 | 82.99 |
| SVM | 81.28 | 72.70 | 87.10 | 79.25 |
| AdaBoost | 81.28 | 72.70 | 87.10 | 79.25 |
| Naïve Bayes | 77.25 | 53.92 | 99.67 | 69.98 |
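The feature-pooling step can be sketched as follows. This is a minimal sketch, assuming that fusion means concatenating each sample's feature vectors from the four backbones and that scikit-learn's `LogisticRegression` stands in for the LogReg classifier. Random arrays replace the real CNN features, and the scaled-down feature dimensions are hypothetical.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(42)
n_samples = 400
y = rng.integers(0, 2, size=n_samples)  # 0 = normal, 1 = shoplifting (placeholder labels)

# Placeholder outputs of four pretrained backbones (dimensions scaled down for the sketch).
backbone_dims = {"mobilenet": 32, "inception": 48, "inception_resnet": 40, "xception": 48}
per_model = [rng.normal(size=(n_samples, d)) + 0.3 * y[:, None] for d in backbone_dims.values()]

# Assumed fusion rule: concatenate the four vectors of each sample into one feature pool.
fused = np.concatenate(per_model, axis=1)

X_tr, X_te, y_tr, y_te = train_test_split(fused, y, test_size=0.25, random_state=0, stratify=y)
clf = LogisticRegression(max_iter=1000).fit(X_tr, y_tr)
fused_acc = accuracy_score(y_te, clf.predict(X_te))
```

Concatenation keeps every backbone's features intact and lets the downstream classifier learn how to weight them; any of the other five classifiers can be swapped in at the `clf` line without changing the fused pool.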

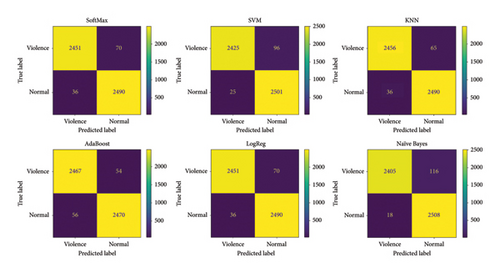

4.3.2. Experimental Results of the Deep Feature Fusion Model on the RLVS Dataset

The proposed deep feature fusion approach again outperformed the individual CNN models when tested on the RLVS dataset for detecting violent activities in videos, achieving an accuracy of 97.99% with the KNN classifier. This is an increase of 1.42 percentage points over MobileNetV2, the most accurate individual model (96.57%). Table 4 and Figure 10 present the experimental results, including the confusion matrices of the ML classifiers. Figure 11 shows the feature distributions visualized using t-SNE for the RLVS dataset, for the individual CNN models and for the fused features.

| Classifier | Accuracy (%) | Recall (%) | Precision (%) | F1 score (%) |
|---|---|---|---|---|
| KNN | 97.99 | 98.57 | 97.45 | 98.01 |
| LogReg | 97.89 | 98.57 | 97.26 | 97.91 |
| SoftMax | 97.89 | 98.57 | 97.26 | 97.91 |
| AdaBoost | 97.82 | 97.78 | 98.86 | 97.82 |
| SVM | 97.60 | 99.01 | 96.30 | 97.63 |
| Naïve Bayes | 97.34 | 99.28 | 95.57 | 97.39 |

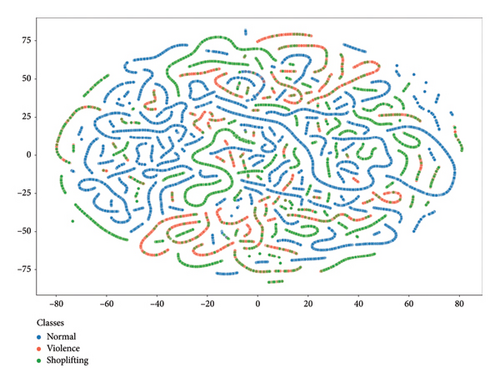

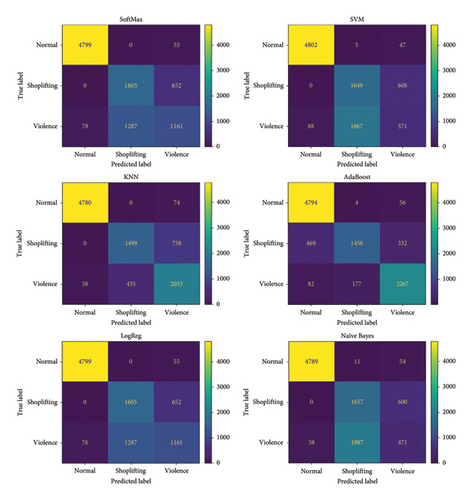

4.4. Experimental Results of the Multitask Classification Model

We assessed the effectiveness of our multitask classification model in identifying multiple anomaly classes using two video anomaly datasets: UCF, which contains normal and shoplifting classes, and RLVS, which contains normal and violent classes. The proposed model used four pretrained CNN models as feature extractors for both datasets. The features extracted by these models for each dataset were fused into a single feature set (Figure 12). Because normal behavior is similar across the two datasets, the normal-class features from both datasets were merged into one class. ML classifiers were then trained to classify incoming frames as shoplifting, violent, or normal behavior. Table 5 reports the accuracy, recall, precision, and F1 score of six classifiers (AdaBoost, KNN, LogReg, SoftMax, Naïve Bayes, and SVM), and Figure 13 shows their confusion matrices. AdaBoost achieved the best results, with an accuracy of 88.37%, a recall of 84.34%, a precision of 88.0%, and an F1 score of 85.43%. KNN also performed well, with an accuracy of 86.45%, a recall of 82.05%, a precision of 82.62%, and an F1 score of 82.08%. LogReg and SoftMax produced identical results, with moderate accuracy (78.49%) and F1 score (71.27%). SVM and Naïve Bayes had the lowest accuracy, recall, precision, and F1 scores of the six classifiers.

| Classifier | Accuracy (%) | Recall (%) | Precision (%) | F1 score (%) |
|---|---|---|---|---|
| AdaBoost | 88.37 | 84.34 | 88.00 | 85.43 |
| KNN | 86.45 | 82.05 | 82.62 | 82.08 |
| LogReg | 78.49 | 71.98 | 72.01 | 71.27 |
| SoftMax | 78.49 | 71.98 | 72.01 | 71.27 |
| SVM | 72.86 | 64.86 | 63.86 | 62.02 |
| Naïve Bayes | 71.87 | 63.70 | 62.17 | 60.35 |
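The multitask setup can be sketched under stated assumptions: features are already fused per sample (random placeholders here), normal samples from both datasets share one label, and scikit-learn's `AdaBoostClassifier` with default settings stands in for the AdaBoost classifier, whose hyperparameters are not reported in this section. The label mapping, sample counts, and macro-averaged F1 are all assumptions for illustration.

```python
import numpy as np
from sklearn.ensemble import AdaBoostClassifier
from sklearn.metrics import accuracy_score, f1_score
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(7)
dim = 64  # hypothetical fused-feature dimension


def fake_features(n: int, shift: float) -> np.ndarray:
    # Placeholder for the fused CNN features of n frames.
    return rng.normal(loc=shift, size=(n, dim))


# (dataset, source label) -> shared multitask label; both "normal" classes merge into 0.
LABEL_MAP = {("UCF", "normal"): 0, ("RLVS", "normal"): 0,
             ("UCF", "shoplifting"): 1, ("RLVS", "violence"): 2}

parts = [
    (fake_features(150, 0.0), ("UCF", "normal")),
    (fake_features(150, 0.0), ("RLVS", "normal")),
    (fake_features(150, 1.0), ("UCF", "shoplifting")),
    (fake_features(150, -1.0), ("RLVS", "violence")),
]
X = np.vstack([feats for feats, _ in parts])
y = np.concatenate([np.full(len(feats), LABEL_MAP[key]) for feats, key in parts])

X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.25, random_state=0, stratify=y)
model = AdaBoostClassifier(random_state=0).fit(X_tr, y_tr)
pred = model.predict(X_te)
acc = accuracy_score(y_te, pred)
macro_f1 = f1_score(y_te, pred, average="macro")
```

Adding a further anomaly type in this sketch means adding one entry to `LABEL_MAP` and one block of fused features, then refitting only the lightweight classifier on the pooled features rather than retraining the CNN backbones.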

We evaluated six ML classifiers (SVM, SoftMax, KNN, AdaBoost, LogReg, and Naïve Bayes) on the unified feature pool generated by the proposed deep feature fusion framework. Each classifier was trained on the same feature pool to ensure a fair comparison, and performance was assessed using accuracy, recall, precision, and F1 score. AdaBoost achieved the highest overall performance in multitask classification, which we attribute to its ability to balance precision and recall. AdaBoost was robust in identifying anomalies across datasets, likely benefiting from its adaptive boosting mechanism, which increases the weight of misclassified samples during training so that subsequent weak learners focus on them. Other classifiers, such as SVM and KNN, performed strongly on specific metrics (e.g., precision or recall) in some settings but did not achieve the same balance as AdaBoost in the multitask setting. This comparison identifies AdaBoost as the best-performing classifier for the multitask configuration of the proposed framework, although KNN and LogReg achieved the highest accuracies in the single-task RLVS and UCF experiments, respectively.
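To make "focusing on misclassified samples" concrete, the sketch below performs one reweighting round of textbook discrete (binary) AdaBoost in plain Python. The function name is hypothetical, and this is the standard binary update, not a description of how the authors configured their classifier; multiclass variants such as SAMME add a term that depends on the number of classes.

```python
import math


def adaboost_reweight(weights: list[float], correct: list[bool]) -> tuple[list[float], float]:
    """One round of discrete AdaBoost sample reweighting.

    weights: current sample weights (summing to 1).
    correct: whether the current weak learner classified each sample correctly.
    Returns the renormalised weights and the weak learner's vote weight alpha.
    """
    # Weighted error of the weak learner.
    error = sum(w for w, ok in zip(weights, correct) if not ok)
    if not 0.0 < error < 0.5:
        raise ValueError("weak learner error must lie strictly between 0 and 0.5")
    alpha = 0.5 * math.log((1.0 - error) / error)
    # Misclassified samples are scaled up by e^alpha, correct ones down by e^-alpha.
    updated = [w * math.exp(-alpha if ok else alpha) for w, ok in zip(weights, correct)]
    total = sum(updated)
    return [w / total for w in updated], alpha


# Four equally weighted samples; the weak learner misclassifies only the first one.
new_w, alpha = adaboost_reweight([0.25] * 4, [False, True, True, True])
# The misclassified sample now carries half of the total weight (0.5 vs 1/6 for each other).
```

After each round the misclassified samples hold exactly half of the total weight, which forces the next weak learner to concentrate on them.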

4.5. Comparative Studies With Recent Research

In this section, we compare the proposed deep feature fusion approach with recent research that uses DL models to detect anomalous behaviors in surveillance videos, particularly violence and shoplifting. Table 6 compares the accuracy of the proposed deep feature fusion model with that of existing methods for automatic shoplifting detection on the UCF dataset, and Table 7 compares our model with various DL methods for violence detection on the RLVS dataset. The proposed deep fusion approach achieved accuracies of 83.59% and 97.99% on the UCF and RLVS datasets, respectively, exceeding the best previously reported results in these tables (81.0% [28] and 96.74% [11]) by 2.59 and 1.25 percentage points. These results indicate that the approach is effective for VAD and advances the state of the art on both benchmarks, and they suggest that it can address real-world challenges in video surveillance and AD, with potential for practical deployment and further research.

| Ref., year | Model | Accuracy (%) |
|---|---|---|
| [12], 2020 | 3D CNN | 75.0 |
| [16], 2021 | 3D CNN | 75.7 |
| [17], 2021 | InceptionV3 and LSTM networks | 74.53 |
| [28], 2023 | InceptionV3 and bidirectional LSTM | 81.0 |
| [35], 2024 | AnomalyCLIP | 75.03 |
| Proposed | Deep feature fusion model | **83.59** |

- Note: The bold value indicates the result of the proposed method.

| Ref., year | Model | Accuracy (%) |
|---|---|---|
| [39], 2019 | VGG16 + LSTM | 88.20 |
| [11], 2020 | ValdNet2 + GRU | 96.74 |
| [47], 2021 | Flow gated RGB | 87.25 |
| [21], 2022 | Keyframe + ResNet18 network | 94.60 |
| [22], 2022 | 3DCNN + LSTM networks | 96.50 |
| [26], 2023 | BoTNet152 + TCN | 93.15 |
| [33], 2024 | MultiWave-Net | 96.0 |
| [34], 2024 | MLP-mixer architecture | 96.0 |
| [36], 2024 | Lightweight 3D CNN | 88.0 |
| [37], 2024 | Ensemble approach (CNN + LSTM) | 96.6 |
| Proposed | Deep feature fusion model | **97.99** |

- Note: The bold value indicates the result of the proposed method.

The multiclass model achieved an accuracy of 88.37% with the AdaBoost classifier. Its task was to recognize three classes of behavior: two distinct types of abnormal behavior (shoplifting and violence) and a normal class. Trained on the UCF and RLVS datasets, the model recognized anomalies of both types with a single classifier, providing a robust solution for multianomaly recognition across different scenarios. The approach addresses the challenge of integrating multiple tasks within a video anomaly detection system without retraining the whole model from scratch when a new anomaly class is added. Because we are not aware of prior studies that evaluate this setting, no direct comparison with previous work is available for this aspect.

4.6. Independent Test

An independent test evaluates a model on data it has not seen during training and is a crucial step in assessing its generalization capability. In this study, we conducted an independent test of the proposed multitask classification model to evaluate its performance on unseen data containing new scenarios and to gauge its ability to generalize learned patterns. The model was trained on the UCF dataset, which includes shoplifting actions, and the RLVS dataset, which includes violent actions. It was then tested on two independent datasets: a Movie dataset [48] containing normal and violent actions, and a shoplifting dataset [49] containing normal and shoplifting actions. Table 8 lists the results of the multitask classification model on the Movie test set. KNN achieved the highest accuracy (87.25%) among the classifiers, together with a recall of 80.25%, a precision of 97.00%, and an F1 score of 87.87%; its high precision indicates few false alarms, although roughly one in five violent samples was missed. AdaBoost also performed well, with an accuracy of 86.23%, a recall of 77.80%, a precision of 99.43%, and an F1 score of 87.30%. Table 9 shows how the classifiers performed on the Shoplifting dataset. Naïve Bayes performed best, achieving the highest accuracy (79.39%), recall (76.79%), precision (83.28%), and F1 score (79.91%). KNN followed, with a precision of 82.17% and a recall of 69.86%. SoftMax, AdaBoost, LogReg, and SVM all produced similar results, with accuracies of about 75%. These results suggest that the model generalizes to violence and shoplifting sequences from unseen scenarios, supporting its robustness and potential practical utility. Figure 14 displays heatmaps generated by the Grad-CAM method on samples from the independent datasets.

| Classifier | Accuracy (%) | Recall (%) | Precision (%) | F1 score (%) |
|---|---|---|---|---|
| KNN | 87.25 | 80.25 | 97.00 | 87.87 |
| AdaBoost | 86.23 | 77.80 | 99.43 | 87.30 |
| SoftMax | 82.90 | 74.60 | 99.50 | 85.25 |
| LogReg | 82.90 | 74.60 | 99.50 | 85.25 |
| Naïve Bayes | 81.79 | 75.90 | 97.30 | 85.36 |
| SVM | 78.46 | 71.58 | 98.50 | 82.97 |

| Classifier | Accuracy (%) | Recall (%) | Precision (%) | F1 score (%) |
|---|---|---|---|---|
| Naïve Bayes | 79.39 | 76.79 | 83.28 | 79.91 |
| KNN | 75.83 | 69.86 | 82.17 | 75.52 |
| SoftMax | 75.05 | 68.06 | 82.12 | 74.43 |
| AdaBoost | 74.96 | 67.80 | 82.15 | 74.29 |
| LogReg | 74.92 | 67.80 | 82.07 | 74.26 |
| SVM | 74.92 | 67.80 | 82.07 | 74.26 |
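How the three-class model's predictions are scored on the binary Movie and Shoplifting test sets is not specified here. One possible convention, assumed purely for illustration, treats the test set's anomaly class as positive and every other predicted label as negative. The label ids, helper names, and predictions below are hypothetical.

```python
# Multitask label ids (assumed): 0 = normal, 1 = shoplifting, 2 = violence.
NORMAL, SHOPLIFTING, VIOLENCE = 0, 1, 2


def collapse_to_binary(predictions: list[int], positive_class: int) -> list[int]:
    """Map three-class predictions to 1 (target anomaly) or 0 (anything else)."""
    return [1 if p == positive_class else 0 for p in predictions]


def accuracy(y_true: list[int], y_pred: list[int]) -> float:
    """Fraction of samples whose binary prediction matches the ground truth."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical predictions on a violence test set (1 = violent clip, 0 = normal clip).
movie_truth = [1, 1, 0, 0, 1]
movie_pred = [VIOLENCE, SHOPLIFTING, NORMAL, NORMAL, VIOLENCE]
movie_binary = collapse_to_binary(movie_pred, positive_class=VIOLENCE)
movie_acc = accuracy(movie_truth, movie_binary)
```

Under this convention a violent clip predicted as shoplifting counts as a miss; an alternative convention that scores any anomaly label as positive would count it as a hit, so the two conventions can yield different recall values.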

5. Conclusion

This paper addresses the challenging task of detecting anomalies in complex surveillance video data characterized by noise and diverse actions such as violence, shoplifting, and property destruction. While DL has shown promising results in this domain, previous studies have often struggled with the crucial problem of generalizing across different AD tasks without training from scratch for each new task. Retraining from scratch is not only time-consuming and computationally expensive but also unfair. To mitigate these issues, this paper introduces a novel DL framework with three key components. First, TL is used to enhance feature generalization. Second, model fusion is employed to improve feature representation, which further enhances generalization. Finally, multitask classification enables the classifier to generalize across tasks. Empirical results demonstrate the effectiveness of our approach, which surpassed the state-of-the-art methods it was compared with. We achieved an accuracy of 97.99% on the RLVS dataset for violence detection and 83.59% on the UCF dataset for shoplifting detection, and a single classifier achieved 88.37% on both datasets combined, without training from scratch for each task. To the best of our knowledge, this represents the first successful resolution of the generalization problem in AD and a significant advancement in this domain. In conclusion, our DL framework provides a more efficient and fair approach to AD in complex surveillance video data. A limitation of the framework is the potential increase in model complexity and computational overhead, particularly in multitask learning scenarios. Future work will address this limitation by introducing a lightweight DL approach that reduces complexity while maintaining performance. Our goal is to optimize the framework for practical applications so that it remains suitable for deployment in real-world environments.

Disclosure

A preprint has previously been published [50]: https://arxiv.org/abs/2408.00792.

Conflicts of Interest

The authors declare no conflicts of interest.

Funding

The authors would like to acknowledge the support received through the following funding schemes of the Australian Government: Australian Research Council (ARC) Industrial Transformation Training Centre (ITTC) for Joint Biomechanics under Grant IC190100020. Open access publishing facilitated by Queensland University of Technology, as part of the Wiley-Queensland University of Technology agreement via the Council of Australian University Librarians.

Open Research

Data Availability Statement

All datasets used in this study are cited in the article.