Locality Sensitive Hashing-Based Deepfake Image Recognition for Athletic Celebrities

Abstract

The rapid advancement of deepfake technology poses significant challenges to athletic celebrities, where altered or falsified media can impact athletes’ reputations, fan engagement, and the integrity of match broadcasting. This paper proposes a novel framework for deepfake image recognition for athletic celebrities using locality sensitive hashing (LSH). LSH, an efficient technique for high-dimensional nearest neighbor search, is employed to detect deepfake images and differentiate them from authentic media. High-dimensional features are first extracted from images and videos using convolutional neural networks (CNNs); LSH is then applied to hash similar content into the same buckets for quick and accurate deepfake detection. The proposed method is tested on a real-world dataset and shows promising results in terms of accuracy and computational efficiency. This research highlights the importance of integrating advanced hashing techniques such as LSH in safeguarding the authenticity of digital content and provides insights into future directions for deepfake detection mechanisms.

1. Introduction

The advent of digital technology has transformed the competitive industry in unprecedented ways, enhancing fan engagement and broadening the reach of athletic competitions [1, 2]. However, this transformation has also brought about new challenges, particularly in the realm of media authenticity. Among the most pressing issues is the rise of deepfake technology, which enables the creation of hyper-realistic yet entirely fabricated images and videos [3, 4]. As these technologies become increasingly sophisticated and accessible, they pose a significant threat to the integrity of media and the reputations of athletes.

The main contributions of this paper are as follows:

1. This study introduces the application of LSH to deepfake image recognition specifically tailored for the competitive industry. By leveraging LSH’s ability to efficiently map high-dimensional feature spaces, the proposed framework enables rapid and accurate identification of deepfake images, significantly improving detection speed compared with traditional methods.

2. The research employs convolutional neural networks (CNNs) to extract intricate and nuanced features from images and videos. This approach enhances the quality of the input data for the LSH algorithm, allowing for more precise comparisons and improved detection accuracy, thus addressing the limitations of existing detection techniques.

3. The paper presents a comprehensive experimental framework that evaluates the performance of the proposed LSH-based detection method against existing state-of-the-art techniques. By utilizing real-world datasets and conducting rigorous benchmarking, the study provides empirical evidence of the effectiveness and efficiency of the proposed approach.

The rest of this article is organized as follows. The literature review in Section 2 summarizes existing deepfake detection methods, their limitations, and the relevance of LSH and CNNs. In Section 3, we detail the proposed detection framework, including the feature extraction, hash index creation, and similarity evaluation steps. In Section 4, the effectiveness of the proposed method is evaluated and compared with state-of-the-art techniques. Finally, in Section 5, we summarize the key insights and suggest directions for further research.

2. Related Work

In this section, we summarize existing research related to this paper in two categories: deepfake technology and detection methods, and applications of LSH and CNNs.

2.1. Deepfake Technology and Detection Methods

The foundational paper [16] presents FaceForensics++, a large dataset of manipulated facial images and videos, which has become a benchmark for deepfake detection research. The dataset includes facial forgeries created using four popular manipulation techniques: FaceSwap, Face2Face, Neural-Textures, and DeepFakes. The paper [17] presents a new method for detecting deepfakes by examining convolutional traces; these are artifacts that deep generative models leave behind during the image generation process. The authors observe that deepfake images exhibit specific inconsistencies in their frequency domain representations, as generative models tend to manipulate images differently from natural images captured by cameras. In [18], the authors propose a method to detect deepfake videos by identifying face warping artifacts. Deepfake generation often involves resizing or warping a subject’s face to fit another body, leading to geometric inconsistencies between the face and the surrounding area. The authors develop a detection algorithm that analyzes these spatial inconsistencies, focusing on the discrepancies in the shape and appearance of facial features. In [19], MesoNet is introduced as a lightweight and compact neural network designed for real-time deepfake detection. The model is specifically built to capture mesoscopic-level features, which are intermediate features between pixel-level and global features in images.

The paper [20] takes a unique approach to deepfake detection by using biological signals such as subtle blood flow patterns in the human face, which are difficult for generative models to replicate accurately. The authors illustrate that deepfakes can be detected by analyzing the photoplethysmographic (PPG) signals derived from facial videos. They demonstrate that identifying disruptions or the absence of these natural biological rhythms is key to recognizing deepfake content. In [21], the authors explore the use of capsule networks for deepfake detection, proposing a method known as Capsule-Forensics. Capsule networks, unlike traditional CNNs, are designed to capture spatial relationships between different parts of an image. This makes them particularly effective at detecting subtle manipulations in deepfake videos, where the global structure of the face might remain intact, but local manipulations create inconsistencies. The survey paper [22] provides a comprehensive overview of deepfake detection techniques, covering a wide range of methods from image manipulation forensics to deep learning approaches. The authors categorize the detection methods into three main groups: traditional image forensics, machine learning-based detection, and physiological signal-based methods. The survey also highlights the strengths and weaknesses of each approach, offering insights into which methods are most effective under different conditions, such as varying image resolutions and compression levels. In [23], FakeCatcher is introduced as a tool to detect deepfake videos by examining biological signals, specifically the subtle color variations in human skin due to blood circulation. These natural fluctuations are typically present in authentic videos but are often missing or inaccurately represented in synthetic deepfake videos. The system extracts physiological cues from the video and uses these signals as a proxy for determining the authenticity of the video content.

However, the abovementioned literature seldom considers the high time cost of deepfake image recognition, which makes current research outcomes poorly suited to big data scenarios where massive numbers of images need to be handled.

2.2. Application of LSH and CNNs

The paper [24] presents an interpretable deepfake detection method by incorporating frequency spatial transformers into CNN filter kernels. The novel architecture enhances the ability to detect manipulated facial images by analyzing frequency and spatial domains concurrently, leading to improved accuracy in detecting deepfakes in compressed videos. In [25], the authors explore the use of a CNN-based discriminator in a GAN-based structure to enhance the detection and localization of small forgeries in satellite images. The approach achieves 86% accuracy in detecting subtle manipulations, making it applicable in media forensics, including deepfake detection in low-resolution videos. The authors of [26] address the challenges of detecting deepfakes by incorporating adversarial learning techniques and LSH for efficient hashing of media features. It focuses on applying CNNs for detecting synthetic faces in fake media, highlighting the use of LSH for fast retrieval of manipulated content. To improve processing speed, the authors in [27] introduce a sensitive content detection framework that employs perceptual hashing and CNNs to identify deepfake media. By integrating LSH with deep learning techniques, this approach enables adaptive detection. This combination enhances both the speed and robustness of real-time media monitoring.

The comprehensive survey in [28] highlights the use of CNN-based models for detecting copy-move forgeries and deepfakes. It emphasizes the potential of integrating LSH for rapid comparison of image regions, facilitating faster and more efficient detection in multimedia forensics. Similarly, literature [29] presents a CNN-based deepfake detection system aimed at identifying fake video clips. The system utilizes a combination of CNNs and LSH for hashing video features to accelerate the detection process, demonstrating superior performance in deepfake recognition tasks. Likewise, the authors in [30] provide a broad overview of current deepfake detection techniques, including LSH-based methods for quickly identifying manipulated media. The use of CNNs for feature extraction is discussed, and the paper highlights the importance of using LSH to speed up detection in large-scale datasets. The paper [31] systematically reviews various deepfake detection techniques and focuses on the role of CNNs in classification. It also examines the use of LSH for efficient deepfake detection by retrieving manipulated media from large datasets, making it ideal for social media monitoring. To improve the recognition performances, the work in [32] combines CNN and vision transformer architectures for video hashing and retrieval. LSH is employed for efficient media tracing, which is crucial for deepfake detection in large-scale video datasets. This hybrid approach enhances accuracy and reduces computational load.

However, the abovementioned literature does not consider the concrete characteristics of the deepfake image recognition problem in the competitive industry, which makes these methods hard to apply directly in current athletics-related application scenarios. In the next section, we introduce a new deepfake image recognition method tailored for the competitive industry.

3. Our Proposal: DIRLSH

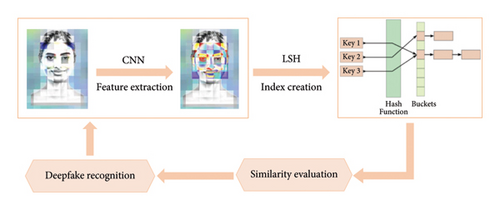

Our proposed deepfake detection method, DIRLSH, can be divided into the following five steps (see Figure 1). Next, we introduce the details of each step one by one.

3.1. Step 1: Feature Extraction Using CNNs

The first stage in LSH-based deepfake detection involves extracting relevant features from video frames or images using CNNs. The features extracted from CNNs can represent essential information about the content [33, 34], such as facial expressions, lighting patterns, and textures, that can distinguish real from fake media.
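The role of the feature extractor can be illustrated with a toy stand-in. The sketch below is not a trained CNN and is not the network used in this paper; it only mimics the shape of the computation (convolution, ReLU, global average pooling) with three fixed, hypothetical 3x3 filters, so that an image is mapped to a short feature vector. The names `FILTERS`, `conv2d_valid`, and `extract_features` are assumptions introduced for illustration.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel intensities

# Hypothetical fixed 3x3 filters standing in for learned CNN kernels.
FILTERS = [
    [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]],   # horizontal gradient (Sobel x)
    [[-1, -2, -1], [0, 0, 0], [1, 2, 1]],   # vertical gradient (Sobel y)
    [[0, 1, 0], [1, -4, 1], [0, 1, 0]],     # Laplacian (edge / texture response)
]


def conv2d_valid(image: Image, kernel: List[List[float]]) -> Image:
    """Valid (no padding) 2D cross-correlation, as computed by a CNN layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def extract_features(image: Image) -> List[float]:
    """Toy F(I): convolution -> ReLU -> global average pooling, one value per filter."""
    features = []
    for kernel in FILTERS:
        fmap = conv2d_valid(image, kernel)
        activated = [max(0.0, v) for row in fmap for v in row]  # ReLU
        features.append(sum(activated) / len(activated))        # average pooling
    return features
```

In practice, the feature vector would come from a trained CNN and have hundreds or thousands of dimensions; the later steps only require that each frame is represented by a fixed-length numeric vector.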

3.2. Step 2: Hashing Feature Vectors With LSH

1. Random projection: LSH uses random hyperplanes to partition the feature space, which allows nearest neighbor search in high-dimensional spaces to be approximated efficiently. Each hyperplane is defined by a random vector $r_i$ whose entries are sampled from a Gaussian distribution $N(0, 1)$. Gaussian random projections approximately preserve the geometric structure of the data: when a dataset is projected onto these random hyperplanes, the relative distances between points (both angular and Euclidean) are approximately maintained, which is vital for tasks such as nearest neighbor search.

2. Each feature vector $f_I$ is projected onto a random vector $r_i$ as follows:

$$h_i(f_I) = \operatorname{sign}(r_i \cdot f_I)$$

where $\operatorname{sign}(\cdot)$ outputs 1 if the result is positive, and 0 otherwise. This creates one binary hash bit for each feature vector.

3. The process is repeated for $k$ different random vectors, producing a $k$-dimensional binary hash code as follows:

$$H(f_I) = [h_1(f_I), h_2(f_I), \ldots, h_k(f_I)]$$

where $H(f_I) \in \{0, 1\}^k$.
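The hashing step above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `make_hyperplanes` and `hash_code`, the fixed seed, and the use of plain Python lists are all choices made here for the example.

```python
import random
from typing import List, Sequence, Tuple


def make_hyperplanes(k: int, dim: int, seed: int = 0) -> List[List[float]]:
    """Sample k random vectors r_1..r_k with i.i.d. N(0, 1) entries."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(k)]


def hash_code(feature: Sequence[float],
              hyperplanes: Sequence[Sequence[float]]) -> Tuple[int, ...]:
    """Return H(f) = (h_1(f), ..., h_k(f)), where h_i(f) = 1 if r_i . f > 0, else 0."""
    return tuple(
        1 if sum(r_j * f_j for r_j, f_j in zip(r, feature)) > 0 else 0
        for r in hyperplanes
    )
```

Because each bit depends only on the sign of a dot product, the code is unchanged when a feature vector is scaled by a positive constant; the hash therefore reflects the direction of the vector rather than its magnitude.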

3.3. Step 3: Constructing the Hash Table

1. Initialization: first, initialize a hash table. The structure of the table depends on the number of distinct hash codes expected, which is determined by the number of bits in each hash code and the hash functions applied. Each unique hash code corresponds to a bucket within the table.

2. Hash code generation: for each item in the dataset, compute its hash code. This involves projecting the item’s feature vector onto the predefined random hyperplanes and assigning a binary value based on the side of the hyperplane on which the item lands. The sequence of binary values forms the hash code.

3. Insertion into the hash table: use the generated hash code as a key to place the item into the corresponding bucket in the hash table. If a bucket for a particular hash code does not yet exist, create it. If it does exist, add the item to the bucket.

4. Handling collisions: since multiple items may generate the same hash code, these items share the same bucket. This is intentional in LSH, as it groups similar items together and reduces the search space for similarity queries.

1. For each frame $I$, its hash code $H(f_I)$ is used as a key in the hash table $\mathcal{T}$:

$$\mathcal{T}[H(f_I)] \leftarrow \mathcal{T}[H(f_I)] \cup \{I\}$$

2. During detection, when a new frame $I'$ is input into the system, its hash code $H(f_{I'})$ is computed, and the system looks for matching or similar hash codes in the table as follows:

$$\mathcal{T}[H(f_{I'})] = \{\, I \mid H(f_I) = H(f_{I'}) \,\}$$

Given a new query frame $I'$, the system retrieves all frames $I$ such that $H(f_I) = H(f_{I'})$.
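The construction and lookup steps above map naturally onto a dictionary keyed by hash code. The following sketch assumes hash codes have already been computed and are represented as tuples of bits; `build_hash_table` and `lookup` are hypothetical helper names, and only exact-match bucket lookup is shown.

```python
from collections import defaultdict
from typing import Dict, Hashable, List, Tuple

HashCode = Tuple[int, ...]


def build_hash_table(codes: Dict[Hashable, HashCode]) -> Dict[HashCode, List[Hashable]]:
    """Group frame identifiers into buckets keyed by their hash code."""
    table: Dict[HashCode, List[Hashable]] = defaultdict(list)
    for frame_id, code in codes.items():
        table[code].append(frame_id)  # frames with equal codes share one bucket
    return dict(table)


def lookup(table: Dict[HashCode, List[Hashable]], query_code: HashCode) -> List[Hashable]:
    """Return all stored frames whose hash code equals the query's code."""
    return table.get(query_code, [])
```

For example, with codes `{"a": (1, 0), "b": (1, 0), "c": (0, 1)}`, frames `"a"` and `"b"` land in the same bucket, and a query with code `(1, 1)` retrieves an empty candidate list.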

3.4. Step 4: Similarity Search and Approximate Nearest Neighbor Retrieval

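1. Given a query frame $I'$, compute its feature vector and hash code as follows:

$$f_{I'} = F(I'), \qquad H(f_{I'}) = [h_1(f_{I'}), h_2(f_{I'}), \ldots, h_k(f_{I'})]$$

where $F(\cdot)$ denotes the CNN feature extractor.

2. Search for similar hash codes in the hash table $\mathcal{T}$. If a match $I$ is found, compute the Euclidean distance between the feature vectors $f_{I'}$ and $f_I$ as follows:

$$d(f_{I'}, f_I) = \lVert f_{I'} - f_I \rVert_2$$

3. If the distance is below a predefined threshold $\tau$, the frame $I'$ is flagged as similar or potentially manipulated.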
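The refinement step above can be sketched as follows: the bucket returned by the hash table is treated as a candidate set, and only those candidates are compared with the query using the exact Euclidean distance. The helper names `euclidean` and `near_candidates`, and the choice to return the matches sorted by distance, are assumptions made for this illustration.

```python
import math
from typing import Dict, Hashable, List, Sequence, Tuple


def euclidean(u: Sequence[float], v: Sequence[float]) -> float:
    """Euclidean distance between two feature vectors of equal length."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def near_candidates(query: Sequence[float],
                    candidates: Dict[Hashable, Sequence[float]],
                    tau: float) -> List[Tuple[Hashable, float]]:
    """Return (frame_id, distance) pairs with distance < tau, nearest first."""
    scored = [(fid, euclidean(query, vec)) for fid, vec in candidates.items()]
    return sorted(((fid, d) for fid, d in scored if d < tau), key=lambda p: p[1])
```

Because the distance is computed only for frames in the retrieved bucket, the cost of this step scales with the bucket size rather than with the size of the whole dataset.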

3.5. Step 5: Deepfake Detection Decision

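1. If the distance is smaller than $\tau$, classify the frame as a deepfake as follows:

$$\text{label}(I') = \text{“deepfake”}, \quad \text{if } d(f_{I'}, f_I) < \tau$$

2. Otherwise, classify the frame as authentic as follows:

$$\text{label}(I') = \text{“authentic”}, \quad \text{if } d(f_{I'}, f_I) \geq \tau$$

The detection decision is formalized as a binary classification problem as follows:

$$\text{label}(I') = \begin{cases} \text{“deepfake”}, & \text{if } d(f_{I'}, f_I) < \tau \\ \text{“authentic”}, & \text{otherwise} \end{cases}$$

where $d(f_{I'}, f_I)$ is the Euclidean distance between the feature vectors of the query frame $I'$ and a retrieved frame $I$, as computed in Step 4.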

The LSH-based deepfake detection system provides both computational efficiency and accuracy for identifying manipulated media in large-scale datasets. This method is particularly useful in detecting deepfakes in real-time, high-volume applications such as social media.

Pseudocode of the above DIRLSH method is presented in Algorithm 1.

Algorithm 1: LSH-based Deepfake Detection Algorithm.

- Input: $I$: input frame; $\mathcal{T}$: hash table; $\tau$: similarity threshold
- Output: label of the frame: “deepfake” or “authentic”
- begin
  - Step 1: Feature Extraction
    - Extract feature vector $f_I$ from input frame $I$ using a CNN: $f_I \leftarrow F(I)$
  - Step 2: Hash Code Generation
    - Generate LSH-based hash code $H(f_I)$ from the feature vector: $H(f_I) \leftarrow [h_1(f_I), h_2(f_I), \ldots, h_k(f_I)]$
    - Store hash code in the hash table $\mathcal{T}$: $\mathcal{T}[H(f_I)] \leftarrow \mathcal{T}[H(f_I)] \cup \{I\}$
  - Step 3: Query Hash Table for Similar Frames
    - For a new query frame $I'$, extract feature vector: $f_{I'} \leftarrow F(I')$
    - Compute its LSH-based hash code: $H(f_{I'}) \leftarrow [h_1(f_{I'}), h_2(f_{I'}), \ldots, h_k(f_{I'})]$
    - Retrieve matching frames from the hash table: $\{\, I \mid H(f_I) = H(f_{I'}) \,\}$
  - Step 4: Compute Similarity Scores
    - For each retrieved frame $I$: $d(f_{I'}, f_I) \leftarrow \lVert f_{I'} - f_I \rVert_2$
  - Step 5: Deepfake Detection
    - Compare similarity distance with threshold $\tau$:
    - if $d(f_{I'}, f_I) < \tau$ then return “deepfake”
    - else return “authentic”
    - end
- end
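Algorithm 1 can be turned into a compact runnable sketch. The version below is an illustration under several assumptions that the pseudocode leaves open: feature vectors are supplied directly (the CNN $F$ is out of scope), a single hash table is used, only the exact-match bucket is searched, and a query is labeled “deepfake” as soon as any retrieved frame lies within distance $\tau$. The class name `LSHDetector` and its methods are hypothetical.

```python
import math
import random
from collections import defaultdict


class LSHDetector:
    """Sketch of Algorithm 1 with one hash table and k random-hyperplane bits."""

    def __init__(self, dim, k=8, tau=1.0, seed=0):
        rng = random.Random(seed)
        # k random vectors with N(0, 1) entries define the hash functions h_1..h_k
        self.planes = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(k)]
        self.tau = tau
        self.table = defaultdict(list)  # hash code -> stored feature vectors

    def _hash(self, f):
        return tuple(
            1 if sum(r * x for r, x in zip(plane, f)) > 0 else 0
            for plane in self.planes
        )

    def add(self, f):
        """Step 2: store a reference feature vector under its hash code."""
        self.table[self._hash(f)].append(list(f))

    def classify(self, f_query):
        """Steps 3-5: retrieve the bucket, score candidates, apply the threshold."""
        bucket = self.table.get(self._hash(f_query), [])
        for f in bucket:
            dist = math.sqrt(sum((a - b) ** 2 for a, b in zip(f, f_query)))
            if dist < self.tau:
                return "deepfake"
        return "authentic"
```

A short usage example: after `det = LSHDetector(dim=4, tau=0.5)` and `det.add([1.0, 2.0, 3.0, 4.0])`, calling `det.classify([1.0, 2.0, 3.0, 4.0])` finds the stored vector at distance zero, whereas a query that hashes to an empty bucket is labeled “authentic” without any distance computation.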

4. Experiments

4.1. Experimental Configuration

To validate the feasibility of the proposed DIRLSH method, a group of experiments is conducted in this section on the WS-DREAM dataset [35]. The dataset contains 1,974,675 values corresponding to 339 users and 5825 services. For the deepfake image detection scenario considered in this article, we regard the dataset as 339 images (parameter N), each of which consists of 5825 frames or dimensions (parameter d).

To observe the advantages of the DIRLSH method, we compare it with three state-of-the-art (SOTA) methods: UserCF [36], SS-ICF [37], and RP-UCF [38]. The evaluation metrics are the mean absolute error (MAE), which measures accuracy, and the time cost, which measures efficiency. Experiments are conducted on a Microsoft laptop with a 12th Gen Intel(R) Core(TM) i5-1235U processor (2.50 GHz) and 16.0 GB of RAM. The software environment is Windows 11 and Python 3.6.

4.2. Evaluation Results

Next, we examine the performance of the proposed DIRLSH method from several perspectives. Specifically, we conduct the following four groups of experiments.

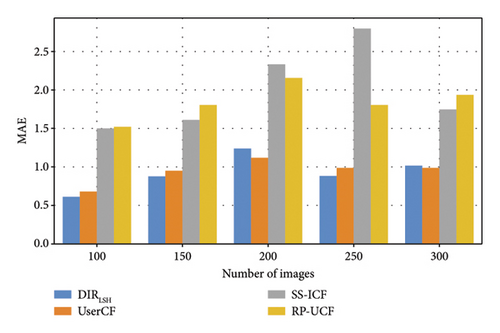

4.2.1. Profile 1: Accuracy Comparison

Here, we measure the accuracy of each method by calculating the average absolute difference between the real value and the predicted value of every frame in each image. In other words, we use the MAE metric to measure deepfake detection accuracy. In this profile, N is varied from 100 to 300 and d is varied from 1000 to 5000. DIRLSH has two additional parameters: the number of hash functions k is set to 8 and the number of hash tables K is set to 10.
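For reference, the MAE metric described above can be written out explicitly. The symbols below are introduced here for illustration and do not appear in the original text: $y_i$ is the real value of the $i$-th evaluated frame, $\hat{y}_i$ is the corresponding predicted value, and $n$ is the number of evaluated frames.

```latex
\mathrm{MAE} = \frac{1}{n} \sum_{i=1}^{n} \left| y_i - \hat{y}_i \right|
% Worked example with n = 3:
% real values (1, 2, 4), predicted values (1.5, 2, 3)
% MAE = (0.5 + 0 + 1) / 3 = 0.5
```

A lower MAE means that predicted values are closer to real values on average, so lower is better in the accuracy comparisons.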

Evaluation results are reported in Figure 2. Subgraph (a) shows the accuracy of the four methods as N varies (with d fixed at 1000), while subgraph (b) shows their accuracy as d varies (with N fixed at 100). Overall, the MAE values of the four methods show no obvious trend as N and d grow. In addition, the MAE values of DIRLSH are much lower than those of SS-ICF and RP-UCF, which indicates the higher accuracy of the proposed deepfake image detection solution. Moreover, DIRLSH and UserCF achieve close MAE values across N and d, which indicates good performance of DIRLSH since UserCF is a classic baseline method.

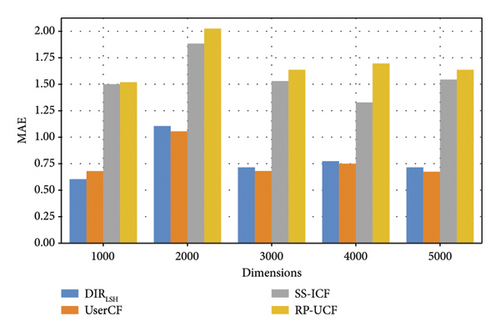

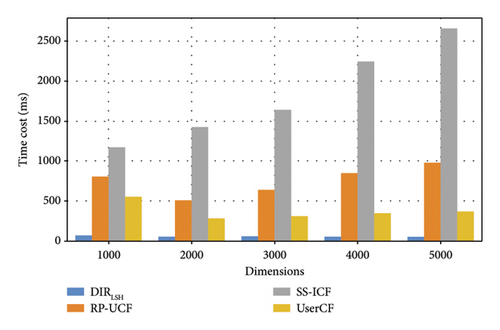

4.2.2. Profile 2: Efficiency Comparison

Time efficiency is a key metric for the overall performance of big data-driven decision-making solutions [39–42]. The number of candidate images in the deepfake image detection problem is often large; therefore, the time cost of detection is a significant metric for performance evaluation. Motivated by this observation, we evaluate the time costs of the different methods with respect to parameters N and d. As in Profile 1, N is varied from 100 to 300 and d is varied from 1000 to 5000. In DIRLSH, the number of hash functions k is set to 8 and the number of hash tables K is set to 10.

Evaluation results are shown in Figure 3. Subgraph (a) shows the time costs of the four methods as N varies (with d fixed at 1000), while subgraph (b) shows their time costs as d varies (with N fixed at 100). In both subgraphs, the time costs of the four methods increase approximately with N and d, because more images or more dimensions per image require additional processing time. Another observation is that DIRLSH requires less time than the other three methods. This is because LSH, on which DIRLSH is built, is a time-efficient neighbor search technique that is especially suitable for massive data processing scenarios.

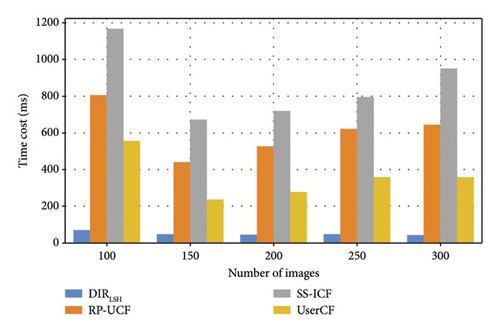

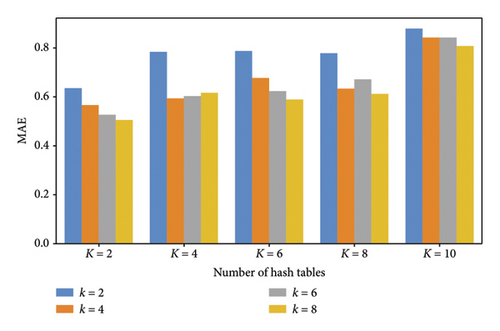

4.2.3. Profile 3: Accuracy of DIRLSH w.r.t. Parameters

DIRLSH has two further parameters, k and K, which denote the number of hash functions and the number of hash tables, respectively. In this profile, we evaluate the influence of k and K on the accuracy of DIRLSH. Here, N and d are set to 100 and 1000, respectively. Evaluation results are shown in Figure 4. The first observation is that the MAE of DIRLSH increases when K grows from 2 to 10. This is because more neighboring frames are judged to be similar to the target frame and, as a result, detection accuracy declines. The second observation is that the MAE of DIRLSH declines when k grows from 2 to 8. This is because, as k grows, the returned neighboring frames are more similar to the target frame, and the accuracy of DIRLSH improves accordingly.
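The opposite effects of k and K can be made concrete with the standard collision analysis of random-hyperplane LSH. The sketch below assumes, as is common for multi-table LSH but not stated explicitly in this paper, that two frames become candidates when their k-bit codes match in at least one of the K tables. For two feature vectors at angle θ, a single bit agrees with probability 1 − θ/π, so the candidate probability is 1 − (1 − p^k)^K. The function names are hypothetical.

```python
import math


def bit_agreement(theta: float) -> float:
    """P[h_i(u) = h_i(v)] for random-hyperplane hashing at angle theta (radians)."""
    return 1.0 - theta / math.pi


def candidate_probability(theta: float, k: int, K: int) -> float:
    """P[u and v share a bucket in at least one of K tables of k bits each]."""
    p = bit_agreement(theta)
    return 1.0 - (1.0 - p ** k) ** K
```

Under this model, increasing K raises the probability that a given pair is returned, so more (and less similar) neighbors are retrieved, while increasing k lowers it, so only closer neighbors survive. This matches the qualitative trends reported for K and k.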

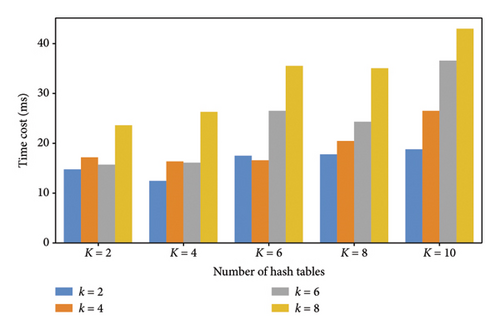

4.2.4. Profile 4: Efficiency of DIRLSH w.r.t. Parameters

In DIRLSH, k and K also affect the efficiency of the algorithm. In this profile, we evaluate the influence of k and K on the time cost of DIRLSH. As in Profile 3, N and d are set to 100 and 1000, respectively. Evaluation results are shown in Figure 5. The first observation is that the time cost of DIRLSH increases when K grows from 2 to 10. This is because more neighboring frames are judged to be similar to the target frame and, as a result, the detection time cost increases. Another observation is that the time cost of DIRLSH grows when k rises from 2 to 8. This can be explained as follows: as k grows, the condition for detecting similar frames becomes stricter; in this situation, DIRLSH may fail to return any qualified similar frames, which may result in repeated executions of the algorithm and thus a higher time cost.

4.3. Further Discussion

Although our proposal achieves good performance in terms of deepfake recognition efficiency and accuracy, it still has several limitations. First, although our LSH-based deepfake recognition method offers a certain degree of privacy protection, it would benefit from integrating more effective privacy-preserving techniques, such as unlearning [43], federated learning [44–46], and generalization techniques [47]. In addition, when the image data for deepfake detection are massive, the so-called big data challenge is inevitable; in this situation, more time-efficient data processing techniques, such as those in [48–50], are needed to achieve fast detection.

While our DIRLSH method may not consistently surpass all other methods in deepfake image recognition accuracy, it generally performs better than most in terms of accuracy, as illustrated in Figure 2. Our DIRLSH method exhibits significantly higher time efficiency compared with other methods, demonstrating swift deepfake image recognition, particularly in a big data context. Consequently, we contend that DIRLSH is the optimal choice when considering the overall performance metrics, which include both time efficiency and recognition accuracy.

5. Conclusion

In this paper, we proposed a novel approach to deepfake detection in the competitive industry using LSH combined with CNNs. The primary goal was to enhance the efficiency and accuracy of detecting manipulated media, particularly in scenarios where large datasets, such as videos, are analyzed in real time. Specifically, our method uses CNNs to extract high-dimensional feature vectors from images and videos of athletic celebrities, which are then processed by LSH to generate hash codes that allow for efficient nearest neighbor search in high-dimensional spaces. By mapping similar frames into the same hash buckets, our approach significantly speeds up the retrieval of matching frames, making it well suited to handling the large volumes of media generated in the competitive industry. Experimental results demonstrate that the proposed system achieves promising performance in terms of both accuracy and computational efficiency when compared with state-of-the-art methods.

In future work, we will further improve our proposal by introducing more context factors such as time and location. In addition, minimizing the false-positive and false-negative probabilities incurred by the inherent limitations of the LSH technique adopted in this paper is another research topic that calls for intensive study.

Conflicts of Interest

The authors declare no conflicts of interest.

Funding

The authors have nothing to report.

Open Research

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.