Systematic Analysis and Critical Appraisal of Predictive Models for Lung Infection Risk in ICU Patients

Abstract

Purpose: To systematically review and evaluate predictive models for assessing the risk of lung infection in intensive care unit (ICU) patients.

Methods: A comprehensive computerized search was conducted across multiple databases, including CNKI, Wanfang, VIP, SinoMed, PubMed, Web of Science, Embase, and the Cochrane Library, covering literature published up to March 2, 2024. The PRISMA guidelines were followed for data synthesis, and data extraction was performed according to the CHARMS checklist. The PROBAST tool was used to evaluate the risk of bias and the applicability of the included studies.

Results: Fourteen studies encompassing 20 predictive models were included. The area under the curve (AUC) values of these models ranged from 0.722 to 0.936. Although the models demonstrated good applicability, the risk of bias in the included studies was high. Common predictors across the models included age, length of hospital stay, mechanical ventilation, use of antimicrobial drugs or glucocorticoids, invasive procedures, and assisted ventilation.

Conclusion: Current predictive models for lung infection risk in ICU patients exhibit strong predictive performance. However, the high risk of bias highlights the need for further improvement. The main sources of bias are failure to handle missing data, use of univariate analysis to select candidate predictors, lack of assessment of model performance, and failure to address overfitting. Future studies should choose sample sizes appropriate to the data and the research question, conduct prospective studies, and combine traditional regression models with machine learning so that the strengths of each can be used to develop prediction models that perform better and are easier to use.

1. Introduction

The intensive care unit (ICU) is a centralized facility for the treatment of patients with critical and complex conditions. Due to the severity and complexity of patients’ conditions, the presence of more underlying diseases, and compromised immune function, ICU patients experience a hospital infection rate that is 5–8 times higher than that in other general wards [1]. Relevant studies have indicated that 54% of ICU patients have suspected or confirmed infections [2]. Hospital-acquired infections contribute to increased mortality and place a substantial economic burden on ICU patients, significantly diminishing their quality of life (QOL). Common hospital-acquired infections include lung, urinary tract, and surgical site infections. In the ICU, invasive procedures, such as sputum suction, tracheal intubation, tracheotomy, and mechanical ventilation, expose the patient’s airway to the external environment. Coupled with multiple risk factors, the respiratory tract is more susceptible to bacterial stagnation, ultimately leading to lung infections [3]. Research has shown that ventilator-associated pneumonia is the most prevalent healthcare-associated infection in ICUs, occurring in approximately 10% of mechanically ventilated patients [4], with a mortality rate of approximately 13%. Predictive modeling, which uses mathematical models to describe the intrinsic relationship between variables based on data, plays a crucial role in clinical research, particularly in disease prognosis [5]. Despite advances in infection prevention and control, hospital-acquired infections remain a major challenge. Risk predictions for hospital infections are increasingly moving towards quantitative and precise models, incorporating a variety of complex and relevant risk factors. 
Predictive models offer a valuable tool for predicting the probability of hospital-acquired infections, enabling early identification and warning of risk factors, screening high-risk groups, and guiding targeted interventions. These models also provide a theoretical basis for developing policies aimed at preventing hospital infections with substantial clinical and practical significance [6, 7]. Several researchers, both domestically and internationally, have developed risk predictive models for lung infections in ICU patients. However, discrepancies were observed in the identified predictors. At the same time, many current studies have some limitations, such as insufficient sample size when building models, inadequate validation methods, and the role of machine learning in improving prediction accuracy not being fully exploited [8, 9]. This study aimed to systematically review and summarize existing lung infection risk prediction models for ICU patients, compare their predictive performance, assess bias and applicability, and provide a reference for the prevention of lung infections in ICU settings.

2. Methods

2.1. Search Strategy

A comprehensive computerized search was conducted using the following databases: China National Knowledge Infrastructure (CNKI), Wanfang, VIP, SinoMed, PubMed, Web of Science, Embase, and the Cochrane Library. The search covered the literature on the development of risk prediction models for lung infections in ICU patients from database inception to March 2, 2024. Taking PubMed as an example, the English search strategy was (“intensive care units” [MeSH Terms] OR “ICU”) AND (“Lung infection” OR “Respiratory tract infection”) AND (“Risk Prediction Model” OR “Risk Prediction” OR “Prediction Model”).
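The structure of the PubMed search strategy can be made explicit with a short sketch: synonyms within a concept are joined with OR, and the three concepts are joined with AND. The helper names `or_group` and `build_query` are hypothetical and are used only for illustration; the terms are copied from the strategy above.

```python
def or_group(terms):
    """Join the synonyms of one concept with OR and wrap them in parentheses."""
    return "(" + " OR ".join(terms) + ")"


def build_query(groups):
    """Join the concept groups with AND."""
    return " AND ".join(or_group(group) for group in groups)


# Term groups copied from the PubMed example in the text
setting = ['"intensive care units"[MeSH Terms]', '"ICU"']
condition = ['"Lung infection"', '"Respiratory tract infection"']
model = ['"Risk Prediction Model"', '"Risk Prediction"', '"Prediction Model"']

query = build_query([setting, condition, model])
print(query)
```

Keeping each concept as a separate list makes it easier to adapt the same strategy to the syntax of the other databases.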

2.2. Inclusion and Exclusion Criteria

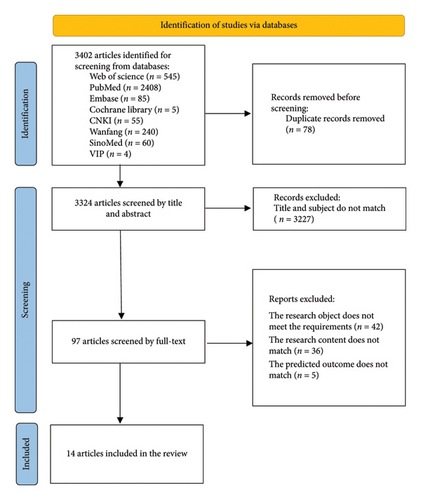

The inclusion criteria were as follows: (1) studies involving ICU patients; (2) studies focused on developing lung infection risk prediction models; (3) cross-sectional, case-control, or cohort studies; and (4) studies that described the modeling process and statistical methods. Exclusion criteria were as follows: (1) models based on meta-analysis or systematic reviews; (2) studies with fewer than two predictors in the final model; (3) studies in which the full text was unavailable, data were missing, or data could not be extracted; and (4) duplicated studies (Figure 1).

2.3. Study Selection

The initial screening involved reading titles and abstracts to exclude obviously irrelevant studies, followed by a full-text review of the remaining studies to determine final inclusion. Two researchers familiarized themselves with the content of the data extraction form and the extraction criteria, receiving training where necessary, and then extracted the data independently. They compared the extracted results and discussed any inconsistencies. If agreement could not be reached through discussion, a third researcher arbitrated and made the final decision.

2.4. Quality Appraisal

The risk of bias, applicability, and overall evaluation of the included studies were evaluated using the Prediction Model Risk of Bias Assessment Tool (PROBAST). Two researchers performed the evaluation, with a third researcher resolving disagreements [10]. The specific criteria for determining the “high risk of bias” include a mismatch between the study population and the target population, insufficient sample size, unclear definition or inconsistent measurement methods of predictor variables, a large amount of missing data in predictor variables without appropriate handling, unclear definition or inaccurate measurement methods of outcomes, presence of information bias in outcomes, failure to address overfitting during model development, lack of internal or external validation, and failure to report key performance metrics of the model.

2.5. Data Extraction

Data were extracted following the “Critical Appraisal and Data Extraction for Systematic Reviews of Prediction Modeling Studies: The CHARMS Checklist.” Extracted information included the first author, country, year of publication, study type, data source, model type, modeling method, sample size, predictor screening method, predictors, methods for handling missing data, model presentation format, model performance, and validation method [11].

2.6. Synthesis

The findings were summarized descriptively in this study because the data could not be evaluated for heterogeneity.

3. Results

3.1. Results of the Search

A total of 3324 Chinese and English articles remained after the initial search and removal of duplicates. After screening the titles and abstracts and excluding irrelevant studies or those with inconsistent subjects, 14 articles were ultimately included in the review [12–25].

3.2. Basic Characteristics of the Included Studies

The majority of the included studies were retrospective, with sample sizes ranging from 137 to 61,532 cases. Six studies involved both model development and validation, with most data sourced from medical record systems. Candidate predictors were typically screened using univariate and multivariate analyses, with two studies employing LASSO regression and multivariate logistic regression, one study using stepwise backward regression, and another utilizing stepwise forward regression. The number of candidate predictors ranged from 11 to 42, while the number of final predictors selected ranged from 4 to 8 (Tables 1 and 2).

| Author(s) | Study design | Participants | Data sources | Model type | Sample size | Predictor screening methods | Candidate predictors (n) | Final predictors (n) |
|---|---|---|---|---|---|---|---|---|
| Xu 2020 | Retrospective | ICU patients | Medical record system | Development + validation | 595 | Single-factor and multifactor analyses | 24 | 8 |
| Wu 2020 | Retrospective | ICU patients | Medical record system | Development + validation | 648 | Single-factor and multifactor analyses | 16 | 7 |
| Huang 2021 | Retrospective | ICU patients | Medical record system | Development | 203 | LASSO regression, multifactor logistic regression | 13 | 5 |
| Xue 2022 | Retrospective | ICU patients | Medical record system | Development + validation | 198 | LASSO regression, multifactor logistic regression | 16 | 8 |
| Tang 2022 | Retrospective | ICU patients | Medical record system | Development + validation | 213 | Single-factor and multifactor analyses | 18 | 7 |
| Mo 2022 | Retrospective | ICU patients | Medical record system | Development | 1149 | Single-factor and multifactor analyses | 19 | 5 |
| Zhang 2023 | Retrospective | ICU patients | Medical records + bedside surveys | Development | 570 | Single-factor and multifactor analyses | 11 | 4 |
| Luo 2023 | Retrospective | ICU patients | Medical record system | Development | 137 | Single-factor and multifactor analyses | 13 | 4 |
| Wang 2024 | Retrospective | RICU patients | Medical record system | Development | 796 | Single-factor and multifactor analyses | 12 | 6 |
| Ali 2023 | Retrospective | ICU patients | Philips eRI dataset | Development + validation | 57,944 | | 18 | 6 |
| Duraid 2020 | Retrospective | ICU trauma patients | Database | Development | 1403 | Single-factor and multifactor analyses | 30 | 6 |
| Ying 2022 | Retrospective | ICU patients | MIMIC-III dataset | Development + validation | 61,532 | Single-factor analysis, multifactor analysis, stepwise backward regression | 42 | 8 |
| Xu 2019 | Retrospective | > 80-year-old ICU patients | Medical record system | Development | 901 | Single-factor analysis, multifactor analysis, stepwise backward regression | 22 | 7 |
| Wu 2020 | Retrospective | ICU patients | ARDSNet database | Development | 1000 | Single-factor and multifactor analyses | 19 | 6 |

| Author(s) | Predictors |
|---|---|
| Xu 2020 | Age ≥ 65 years, fever ≥ 2 consecutive days, surgery, previous CRE, previous CRAB, previous CRPA, history of cerebrovascular accident, elevated blood procalcitonin |
| Wu 2020 | Age, gender, congestive heart failure, COPD, stroke, nasogastric tube placement, mechanical ventilation |
| Huang 2021 | Age ≥ 60 years, APACHE II score ≥ 20, number of invasive procedures ≥ 3, duration of mechanical ventilation ≥ 2 weeks, impaired consciousness |
| Xue 2022 | Age ≥ 60 years, coadministration of antimicrobials, serum albumin < 40 g/L, mechanical ventilation ≥ 7 days, hospitalization ≥ 14 days, use of acid-suppressing agents, tracheotomy, diabetes mellitus |
| Tang 2022 | Age ≥ 60 years, history of smoking, history of diabetes mellitus, GCS score ≤ 8, glucocorticoid use, duration of tracheal intubation > 7 days, emergency surgery |
| Mo 2022 | Age ≥ 60 years, ventilator use ≥ 5 days, use of antimicrobials, APACHE II score ≥ 20, ICU stay ≥ 14 days |
| Zhang 2023 | Malnutrition, invasive operations, ICU stay > 10 days, chronic lung disease |
| Luo 2023 | Age, length of hospitalization > 14 days, impaired consciousness, glucocorticoid use |
| Wang 2024 | Cerebral infarction, presence or absence of antimicrobials in combination, central venous catheter, urinary catheter, days on ventilator, days on antimicrobials |
| Ali 2023 | High temperature, high heart rate, low oxygen saturation, shortness of breath, low creatinine, anemia |
| Duraid 2020 | Massive blood transfusion, acute kidney injury, facial injury, sternal injury, spinal injury, ICU length of stay |
| Ying 2022 | CPIS score ≥ 6, admission diagnosis, sputum frequency, worst temperature value, PaO2/FiO2 ratio, WBC within the first 24 h of ventilation, worst APACHE III and SOFA score values within the first 24 h of ICU admission |
| Xu 2019 | Chronic obstructive pulmonary disease (COPD), intensive care unit (ICU), method of MV, antibiotic dosage, number of central venous catheters, duration of indwelling urinary catheter, use of corticosteroids before MV |
| Wu 2020 | Use of neuromuscular blocking agents for trauma, severe ARDS, admission to hospital for unplanned surgery |

3.3. Construction of the Risk Prediction Model and Prediction Performance

Among the 14 studies, 85.7% (12 studies) constructed lung infection risk prediction models using logistic regression, while the remaining two employed machine learning techniques. Logistic regression dominated and was widely used due to its simplicity and ease of interpretation. Machine learning methods (such as random forest and support vector machine) may be more suitable for handling high-dimensional data and nonlinear relationships. Two studies handled missing data, one with implicit imputation and one with multiple imputation. Six studies presented models as nomograms, and two established scoring systems. Three studies involved model development and internal validation, while another three conducted both internal and external validation. In addition, two studies used bootstrap and cross-validation for model validation. A total of 13 studies evaluated model discrimination using the area under the curve (AUC). The development AUC values ranged from 0.722 to 0.936, indicating good discriminative ability in the development sets. Validation AUC values were reported in 4 studies and ranged more widely, from 0.59 to 0.936, suggesting that the generalization ability of some models to external data is limited. One study employed decision curve analysis (DCA) to assess clinical utility. Seven studies reported model calibration methods, all of which included the Hosmer–Lemeshow test; one of these also employed the omnibus test of model coefficients, and one also used calibration curve plots (Table 3). The differences in model performance are mainly related to the predictors, the model construction methods, and the datasets.
Models with better performance usually incorporate predictors that are both clinically meaningful and statistically significant. For example, some studies included variables highly correlated with lung infection (such as duration of mechanical ventilation, white blood cell count, and body temperature), and these factors can significantly improve the discriminative ability of the model. Appropriate handling of predictors can also enhance model stability. For instance, a model that uses multiple imputation to deal with missing data may perform better than a model that simply deletes records with missing values. Although logistic regression performs well in most studies, its performance is limited by the assumption of linearity on the log-odds scale. For datasets with strong nonlinear relationships, logistic regression may not fully capture the complex patterns. Some of the better-performing models adopted machine learning methods, which can handle nonlinear relationships and interactions and may therefore perform better on complex datasets. LASSO regression selects variables automatically by shrinking some coefficients to zero, which reduces the risk of overfitting and can improve generalization. Studies with larger sample sizes usually provide more stable model performance, because a larger sample reduces random error. Studies with higher data quality usually yield more reliable models. For example, studies using structured data from electronic medical record systems may perform better than those relying on unstructured data. The broader the patient population covered by the dataset, the stronger the generalization ability of the model usually is. Single-center studies may perform poorly in external validation because their patient characteristics are limited.
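To make the discrimination measure concrete, the following minimal sketch computes the AUC as the proportion of infected/non-infected patient pairs in which the infected patient receives the higher predicted risk, with ties counted as one half. The data are invented for illustration and are not taken from any included study; the function name `auc` is likewise only illustrative.

```python
def auc(labels, scores):
    """AUC as the probability that a randomly chosen positive case is
    scored higher than a randomly chosen negative case (ties count 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Toy data (invented): 1 = lung infection, 0 = no infection
labels = [1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.7, 0.4, 0.6, 0.3, 0.2, 0.2, 0.1]

print(round(auc(labels, scores), 3))  # 14 of 15 pairs ranked correctly
```

An AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect ranking, which is why a drop from the development AUC to the validation AUC signals limited generalization.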

| Author(s) | Modeling methodology | Discrimination | Calibration | Missing data | Model presentation | Model validation type | Validation methods |
|---|---|---|---|---|---|---|---|
| Xu 2020 | Logistic regression | | | | Predictive scoring system | Internal validation | |
| Wu 2020 | Logistic regression | | Hosmer–Lemeshow test, calibration curves | | Nomogram | Internal and external validation | Bootstrap |
| Huang 2021 | Logistic regression | AUC: 0.817 | Omnibus test of model coefficients, Hosmer–Lemeshow goodness-of-fit test | | Nomogram | | |
| Xue 2022 | Logistic regression | AUC: 0.815 | | | Nomogram | Internal validation | Bootstrap |
| Tang 2022 | Logistic regression | AUC: 0.829 | | | Nomogram | Internal validation | Bootstrap |
| Mo 2022 | Logistic regression | | | | Scoring model | | |
| Zhang 2023 | Logistic regression | AUC: 0.895 | Hosmer–Lemeshow test | | | | |
| Luo 2023 | Logistic regression | AUC: 0.806 | Hosmer–Lemeshow test | | | | |
| Wang 2024 | Logistic regression | AUC: 0.925 | Hosmer–Lemeshow test | | Nomogram | | |
| Ali 2023 | Machine learning | AUC: 0.76 | | Implicit imputation | Decision tree | Internal and external validation | Cross-validation |
| Duraid 2020 | Logistic regression | | | | | | |
| Ying 2022 | Machine learning | | | | Random forest | Internal and external validation | Cross-validation |
| Xu 2019 | Logistic regression | AUC: 0.722 | Hosmer–Lemeshow test | | | | |
| Wu 2020 | Logistic regression | AUC: 0.744 | Hosmer–Lemeshow test | Multiple imputation | Nomogram | | |

3.4. Results of Risk of Bias and Applicability Evaluation

All included studies were assessed as having a high overall risk of bias. Specifically, 13 studies were rated as high risk of bias in the participants domain. In the predictors domain, one study had an unclear risk and the remaining studies were rated as low risk of bias. Regarding outcomes, 14 studies were classified as having a high risk of bias, and 12 studies had a high risk of bias in the analysis domain due to the lack of reporting on missing data handling and complex data treatments. Despite these issues, applicability across the three domains (participants, predictors, and outcomes) was deemed good (Table 4). Although most studies have a low risk of bias in the predictors domain, the risk of bias in the participants, outcomes, and analysis domains is relatively high, which may affect the reliability of the results. The high applicability ratings indicate that the findings have potential application value for the targeted clinical problem (prediction of the risk of lung infection in the ICU).

| Author(s) | Risk of bias: participants | Risk of bias: predictors | Risk of bias: outcome | Risk of bias: analysis | Applicability: participants | Applicability: predictors | Applicability: outcome | Overall risk of bias | Overall applicability |
|---|---|---|---|---|---|---|---|---|---|
| Xu 2020 | − | + | + | − | + | + | + | − | + |
| Wu 2020 | − | + | + | − | + | + | + | − | + |
| Huang 2021 | − | + | + | + | + | + | + | − | + |
| Xue 2022 | − | + | + | + | + | + | + | − | + |
| Tang 2022 | − | + | + | − | + | + | + | − | + |
| Mo 2022 | + | + | + | − | + | + | + | − | + |
| Zhang 2023 | − | + | + | − | + | + | + | − | + |
| Luo 2023 | − | + | + | − | + | + | + | − | + |
| Wang 2024 | − | + | + | − | + | + | + | − | + |
| Ali 2023 | − | ? | + | − | + | + | + | − | + |
| Duraid 2020 | − | + | + | − | + | + | + | − | + |
| Ying 2022 | − | + | + | − | + | + | + | − | + |
| Xu 2019 | − | + | + | − | + | + | + | − | + |
| Wu 2020 | − | + | + | − | + | + | + | − | + |

Note: +, low risk of bias/high applicability; −, high risk of bias/low applicability; ?, unclear risk of bias or applicability.
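The overall ratings in Table 4 are consistent with the aggregation rule commonly described for PROBAST: a study is rated high risk overall if any domain is high risk, unclear if no domain is high risk but at least one is unclear, and low risk only if all domains are low risk. The sketch below applies that rule to three rows transcribed from Table 4; it assumes, without confirmation from the text, that this is the rule the reviewers applied, and the function name is hypothetical. ASCII "-" stands in for the "−" symbol used in the table.

```python
def overall_risk_of_bias(domains):
    """Combine domain ratings ('+', '-', '?') into an overall rating.

    Assumed rule: any '-' gives '-'; otherwise any '?' gives '?';
    otherwise '+'.
    """
    if "-" in domains:
        return "-"
    if "?" in domains:
        return "?"
    return "+"


# Risk-of-bias ratings (participants, predictors, outcome, analysis)
# transcribed from three rows of Table 4
table4_rows = {
    "Huang 2021": ["-", "+", "+", "+"],
    "Mo 2022": ["+", "+", "+", "-"],
    "Ali 2023": ["-", "?", "+", "-"],
}

for study, ratings in table4_rows.items():
    print(study, overall_risk_of_bias(ratings))
```

Under this rule a single high-risk domain is enough to rate a study as high risk overall, which is why every study in Table 4 receives an overall "−" even though most domain-level ratings are "+".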

4. Discussion

4.1. Predictive Performance of Risk Prediction Models

This study systematically reviewed Chinese and English literature on risk prediction models for lung infection in ICU patients. Literature was screened according to the inclusion and exclusion criteria, and information was extracted using the CHARMS checklist. A total of 14 studies were included, encompassing 20 risk prediction models. The models were presented in various forms, including nomograms, scoring systems, decision trees, and random forests, with 42.9% of the studies presenting nomograms. Logistic regression was used in 85.7% of the studies, and 78.6% of the studies employed univariate and multivariate analysis for predictor screening. Of the models reporting AUC values, 94.1% had an AUC greater than 0.7 and 70.6% had an AUC greater than 0.8. These results suggest that models constructed using logistic regression generally exhibit good predictive ability and discrimination. Traditional regression methods and machine learning models each have their own advantages and disadvantages, and the choice of method should be based on research objectives and data characteristics. Traditional regression is simple and easy to interpret, suitable for small samples, and supported by mature statistical tools. However, it is prone to overfitting in complex data and is sensitive to missing data. Machine learning models are well suited to large, complex datasets, can capture nonlinear relationships and complex interactions, and generally offer higher predictive performance. However, these models are more complex and require additional tools to interpret their workings.

4.2. Risk of Bias and Applicability Evaluation of Risk Prediction Models

Assessing the risk of bias is a crucial component of systematically evaluating prediction models. All 14 included studies were evaluated for risk of bias and applicability using the PROBAST assessment tool [26]. The studies were found to have a high risk of bias due to several factors, including inadequate handling of missing data, reliance on univariate analysis for predictor selection, lack of performance evaluation, and failure to address overfitting. A high risk of bias can significantly reduce the reliability and clinical usability of predictive models. In the prediction of pulmonary infections in the ICU, infected patients usually constitute a minority, causing the model to tend to predict the majority class. As a result, the ability to identify infected patients is decreased, leading to a relatively low sensitivity of the model to positive patients. Many studies only conduct internal validation without external validation or temporal validation. This may lead to poor performance of the models in new data or future data. If the definitions of predictive variables are unclear or the measurement methods are inconsistent, the model may not be able to accurately capture the true risks of patients. If missing data are not properly handled (such as directly deleting samples with missing values), it may lead to model bias or loss of information.
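The effect of class imbalance on sensitivity can be illustrated with a small sketch. The data are invented: 10 infected patients among 100, and a degenerate model that always predicts "no infection". The function name is hypothetical.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, sensitivity, and specificity from binary labels (1 = infected)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(y_true),
        "sensitivity": tp / (tp + fn) if (tp + fn) else float("nan"),
        "specificity": tn / (tn + fp) if (tn + fp) else float("nan"),
    }


# Invented cohort: 10 infected and 90 non-infected patients
y_true = [1] * 10 + [0] * 90
# Degenerate model that always predicts the majority class
always_negative = [0] * 100

metrics = classification_metrics(y_true, always_negative)
print(metrics)
```

In this example the accuracy is 0.9 while the sensitivity is 0.0, showing why accuracy alone can hide a complete failure to identify infected patients when the outcome is rare.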

In the process of model development, validation, or reporting, technical errors such as failure to report key performance metrics, failure to address overfitting, or inappropriate selection of statistical methods may occur. These issues often arise from researchers’ lack of in-depth understanding of statistical modeling, leading to the omission of critical steps in data analysis, such as failing to perform model calibration or failing to evaluate clinical utility. Unaddressed overfitting may result in a model performing well on the training dataset but poorly on new datasets, while the failure to report key performance metrics may hinder the comprehensive assessment of the model’s clinical applicability [27]. These issues can be mitigated by employing techniques such as cross-validation and external validation to address overfitting and by comprehensively reporting model performance metrics, including discrimination, calibration, and clinical utility [28]. On the other hand, clinical limitations may arise due to restricted data sources, ethical constraints, or heterogeneity in clinical practices, leading to issues such as insufficient representation of the study population, inadequate control of confounding factors, or insufficient time span for data collection [29]. Insufficient representation of the study population may result in poor model performance on new datasets. These limitations can be addressed by expanding the representativeness of the study population through multicenter studies, thoroughly considering confounding factors during model development, and conducting sensitivity analyses.
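Clinical utility is usually assessed with decision curve analysis, which is built on the net benefit at a chosen risk threshold: the proportion of true positives minus the proportion of false positives weighted by the odds of the threshold. The sketch below computes this quantity for invented data and compares the model with a "treat all" strategy; the function name and the data are illustrative only.

```python
def net_benefit(y_true, risk, threshold):
    """Net benefit at a risk threshold: TP/n - FP/n * threshold / (1 - threshold).

    Patients with predicted risk >= threshold are treated as positive.
    """
    if not 0 < threshold < 1:
        raise ValueError("threshold must lie strictly between 0 and 1")
    n = len(y_true)
    tp = sum(1 for y, r in zip(y_true, risk) if r >= threshold and y == 1)
    fp = sum(1 for y, r in zip(y_true, risk) if r >= threshold and y == 0)
    return tp / n - fp / n * threshold / (1 - threshold)


# Invented example: 2 infected patients among 10
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
model_risk = [0.8, 0.6, 0.7, 0.3, 0.2, 0.2, 0.1, 0.1, 0.1, 0.1]
treat_all = [1.0] * len(y_true)  # everyone is classified as high risk

print(net_benefit(y_true, model_risk, 0.5))
print(net_benefit(y_true, treat_all, 0.5))
```

A decision curve repeats this calculation over a range of thresholds; a model is clinically useful at thresholds where its net benefit exceeds both the "treat all" and "treat none" (net benefit of zero) strategies.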

For future model development, addressing key challenges such as missing data, overfitting, and insufficient external validation requires a comprehensive approach. To handle missing data, strategies such as deletion methods, single imputation, multiple imputation, algorithms capable of managing missing data (e.g., XGBoost and random forests), or Bayesian framework–based models, combined with sensitivity analyses, can be employed. To mitigate overfitting, techniques such as cross-validation, external validation, and variable reduction methods such as LASSO regression are recommended. For enhancing external validation, multicenter data sharing, prospective validation, and simulated external validation (e.g., temporal or geographical splitting of datasets) should be utilized [30]. These measures collectively contribute to the development of more reliable, generalizable, and clinically useful predictive models, ultimately improving patient outcomes and clinical decision-making.
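As an illustration of simulated external validation by temporal splitting, the following sketch assigns patients admitted before a cutoff date to the development set and later admissions to the validation set. The records, field names, and cutoff are hypothetical and serve only to show the idea.

```python
from datetime import date


def temporal_split(records, cutoff):
    """Split records by admission date.

    Records admitted before `cutoff` form the development set;
    records admitted on or after `cutoff` form the validation set.
    """
    development = [r for r in records if r["admitted"] < cutoff]
    validation = [r for r in records if r["admitted"] >= cutoff]
    return development, validation


# Hypothetical ICU records (invented for illustration)
records = [
    {"id": 1, "admitted": date(2021, 3, 14), "infection": 0},
    {"id": 2, "admitted": date(2021, 11, 2), "infection": 1},
    {"id": 3, "admitted": date(2022, 6, 30), "infection": 0},
    {"id": 4, "admitted": date(2023, 1, 1), "infection": 1},
    {"id": 5, "admitted": date(2023, 8, 19), "infection": 0},
]

development, validation = temporal_split(records, date(2023, 1, 1))
print(len(development), len(validation))
```

Unlike a random split, a temporal split never lets the model see patients admitted after the cutoff during development, so the validation set mimics how the model would be applied to future patients.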

4.3. Clinical Significance of Analyzing Model Predictors

Common predictors identified across studies included age, length of hospital stay, mechanical ventilation, use of antimicrobial drugs or glucocorticoids, invasive procedures, and assisted ventilation. For instance, Zhang Xiangjun’s study revealed a significant correlation between prolonged hospitalization and the risk of hospital-acquired infections, especially in patients with preexisting lung diseases that compromise respiratory defenses, increasing their susceptibility to lung infections [18]. Invasive procedures during hospitalization can further weaken the patient’s immunity, heightening the risk of infection. Elderly patients are particularly vulnerable due to age-related physiological degradation of the respiratory system, reduced lung elasticity, and diminished local defense mechanisms, making them more prone to lung infections [31]. Procedures such as tracheotomy and mechanical ventilation disrupt the body’s natural barriers, facilitating bacterial entry into the lower respiratory tract and increasing the likelihood of ventilator-associated pneumonia [32]. Klompas’s study emphasized that avoiding intubation and minimizing the duration of mechanical ventilation can effectively reduce ventilator-related events [32]. Rudnick’s research highlighted that prolonged and extensive use of antimicrobial drugs can lead to bacterial resistance, resulting in infections [33]. In addition, Li et al.’s analysis of 716 pneumonia patients demonstrated that glucocorticoid use increased the risk of infections by opportunistic pathogens, with a mortality rate as high as 45% in patients on long-term glucocorticoid therapy [34]. These predictors provide important clinical insights for preventing pulmonary infections in ICU patients.

5. Conclusion

This systematic evaluation of lung infection risk prediction models for ICU patients revealed that, while the models showed good applicability, they were still in a developmental stage and carried a high risk of bias. To improve predictive performance and usability, future models should make greater use of machine learning techniques rather than relying solely on traditional regression methods. Machine learning has great potential in the prediction of lung infections in the ICU, but its current limitations need to be addressed. Algorithms should be selected according to the characteristics of the data; for high-dimensional data, ensemble learning or deep learning may be preferred. During model development, multiple algorithms should be compared and the best-performing model selected. Suitable multicenter datasets should be chosen according to the research plan, and the predictions of machine learning models should be combined with clinical experience when making judgments. In addition, more extensive and rigorous validation studies, including prospective studies with large sample sizes and diverse populations, are recommended to develop more robust and user-friendly predictive models.

Conflicts of Interest

The authors declare no conflicts of interest.

Author Contributions

X. W. and Z. D. contributed to the study conceptualization and design. X. W., J. K., Z. S., and G. Q. performed the literature search and contributed to data acquisition. X. W. performed statistical analyses. All authors contributed to the interpretation of data. X. W. contributed to manuscript drafting. All authors critically reviewed and revised the draft manuscript and approved the final version for submission.

Funding

This work was supported by the Postgraduate Research & Practice Innovation Program of Jiangsu Province (SJCX24_0845) and the Open Subject of Jiangsu Province Chinese Medicine Epidemic Disease Research Center in 2024 (JSYB2024KF19).

Acknowledgments

The authors would like to thank TopEdit (https://www.topeditsci.com) for its linguistic assistance during the preparation of this manuscript. This study was registered in PROSPERO (registration number CRD42024532520).

Supporting Information

The PRISMA 2020 checklist has been provided in this paper and is detailed in the Supporting Information section.

Open Research

Data Availability Statement

The datasets used and/or analyzed during this study are available from the corresponding author upon reasonable request.