Multiagent Energy Management System Design Using Reinforcement Learning: The New Energy Lab Training Set Case Study

Abstract

This paper proposes a multiagent reinforcement learning (MARL) approach to optimize energy management in a grid-connected microgrid (MG). Renewable energy resources (RESs) and customers are modeled as autonomous agents that use reinforcement learning (RL) to interact with their environment. Agents are unaware of the actions or presence of others, which preserves privacy, and each agent aims to maximize its own expected reward. A double auction (DA) algorithm determines the price of the internal market. After market clearing, any unmet load or excess energy is exchanged with the main grid. The New Energy Lab (NEL) at Staffordshire University, which includes wind turbines (WTs), photovoltaic (PV) panels, a fuel cell (FC), a battery, and various loads, is used as a case study. We introduce a model-free Q-learning (QL) algorithm for managing energy in the NEL. Agents explore the environment, evaluate state-action pairs, and operate in a decentralized manner during both training and implementation, and the algorithm selects actions that maximize long-term value. To compare the algorithms fairly for both customers and producers, a fairness factor criterion is used. QL achieves a fairness factor of 1.2643, compared with 1.2358 for the Monte Carlo (MC) method. It also requires a shorter training time (1483 versus 1879.74 for MC) and less memory, making it more efficient.

1. Introduction

The electric power industry is facing numerous challenges, including depleting fossil fuel resources, regulatory and public pressure to cut pollution, growing energy demand, and aging infrastructure. At the same time, the industry has access to abundant clean energy resources, such as solar and wind power, which offer promising solutions [1]. In future net-zero buildings, natural gas as an energy carrier will need to be replaced by renewable energy systems, including photovoltaic (PV) panels, small wind turbines (WTs), renewable hydrogen, H2 fuel cells (FCs), and energy storage systems (e.g., batteries and H2 generation and storage). Microgrids (MGs) are becoming increasingly important in today's power systems because they enhance reliability, integrate renewable energy sources (RESs), improve energy efficiency, reduce costs, and increase energy availability [2]. According to the International Council on Large Electric Systems (CIGRE), MGs are electricity distribution systems containing loads and distributed energy resources (DERs) that can be operated in a controlled and coordinated manner, either connected to the main grid or independently in islanded mode [3]. However, integrating DERs poses significant challenges for the stable and economical operation of an MG. The intermittent and stochastic output of DERs such as PV and WT introduces uncertainty in energy generation, and, combined with load variations, this uncertainty makes accurate generation schedules difficult to develop [4]. An energy management system (EMS) is a systematic approach to optimizing, planning, controlling, monitoring, and conserving energy, with the goal of enhancing operational efficiency and reducing energy consumption [5]. Numerous articles address energy management in MGs. The authors in [6] used mixed integer linear programming to minimize fuel costs, employing a piecewise linear function for diesel generators.
Notably, their work does not account for the uncertainties associated with PV generation and load demand. In [7], the authors present a modified particle swarm optimization algorithm for real-time energy management in grid-connected MGs, achieving a significant reduction in operational costs. The authors in [8] used the multiobjective nondominated sorting genetic algorithm II to optimize energy management in MGs, with a primary focus on reducing costs and environmental pollution. The study in [9] applies model predictive control (MPC) to energy management in grid-connected smart buildings with electric vehicles (EVs). Its MPC-based EMS uses forecasts of building demand and EV charging demand to optimize energy consumption and cost, and the study assesses how effectively MPC achieves efficient energy management in this setting. In [10], the authors implemented an advanced EMS in a community of buildings using nonlinear MPC based on successive linear programming, optimizing electricity flows with real-time information such as weather forecasts and market prices. All the methods mentioned above rely on an estimated model of the system. Developing a model-based energy management strategy for an MG requires expertise in modeling each system component. The choice of models and parameters can significantly affect the results or predictions, so these choices must be carefully considered and validated to ensure the robustness and generalizability of the models. Inaccurate probability distribution models, combined with low-accuracy parameters, hinder traditional model-based methods and lead to suboptimal solutions. In addition, adapting to changes in MG topology, scale, or capacity requires remodeling the system and redesigning the solver, a process that is both cumbersome and time-consuming [11].
Moreover, the complexity of the environment often makes the a priori design of effective agent behaviors challenging or even impossible, and in a dynamic environment, a preprogrammed behavior may become unsuitable over time [12]. As an alternative to model-based approaches, learning-based algorithms have emerged in recent years. They have the potential to reduce dependence on explicit system models, improve the scalability of EMSs, and decrease associated maintenance costs [13]. Academic and industrial researchers have shown growing interest in energy management methods based on machine learning and artificial intelligence [14]. Reinforcement learning (RL) is a branch of machine learning that has been applied extensively to complex tasks ranging from games [15] to robotics [16]. Many studies have applied RL to energy management in smart grids. For instance, in [17], a decentralized Q-learning (QL) algorithm was used to manage energy in an MG containing various energy resources, and the performance of the agents was compared under four scenarios: no learning, generator learning, customer learning, and whole learning. In [18], the authors presented a novel decentralized multiagent approach for energy management in a grid-connected MG that incorporates both electrical and thermal power. Their approach treated energy generators and consumers as autonomous decision-making agents, each seeking to maximize its own profit with a model-free QL algorithm, and compared three different action selection methods. This approach holds promise for more efficient and effective energy management in MG systems. In [19], a multiagent-based model is used to study energy management in an MG containing a WT, a PV panel, a diesel generator, and a battery, and QL is used to solve the energy management problem.
Loads were considered active agents that can curtail their demand to achieve more profit. The main focus of [20] is mitigating fluctuations and mismatches between power supply and demand. To tackle this issue, the authors introduced an enhanced RL-based method that uses the SARSA algorithm to optimize the use of energy from renewable sources such as solar panels, with the main objective of maximizing the utilization of renewable energy while minimizing the consumption of fossil fuel energy. In [21], a novel SARSA-based method was proposed to solve a complex scenario-based nonconvex optimization problem. In [22], a method for managing energy in a multi-MG environment is introduced in which the Monte Carlo (MC) method is employed to determine the retail price; it uses predictive techniques to improve decision-making, aiming to maximize profits from power sales while minimizing the peak-to-average ratio. The authors in [23] used the MC method to handle uncertainties in wind power, aiming to generate profits from wind energy investments. The distributed architecture of multiagent systems (MASs) allows such learning-based optimization to be applied as a decentralized control strategy in MG EMSs [24]. Although numerous studies address energy management in MGs, a significant gap in the literature is that none of the referenced articles incorporates a practical setting into its methodology. Consequently, there is a lack of comprehensive research validating theoretical concepts in real-world scenarios with real technical data. Hence, this article develops a smart and efficient scheduling mechanism that optimizes the performance of an MAS-based EMS in a practical setting to address real-world challenges. For this purpose, the New Energy Lab (NEL) training set [25], a facility equipped with different DERs and loads, is selected.
Notably, this lab can be seamlessly integrated with the main power grid. A fully decentralized QL algorithm is proposed for the NEL's energy management. To evaluate its performance, simulations are conducted using real weather data, and the results are compared with those of the SARSA and MC algorithms. The simulation results confirm the efficiency and superiority of the proposed method. The main contributions of this article are as follows:

- A comprehensive MG model is created to simulate the components of the NEL, including WTs, PV panels, an FC, a battery, and various loads. Each component is carefully modeled to ensure an accurate representation in the simulation.

- The control problem is formulated as a Markov game whose control actions include the energy price set by each agent, the load curtailment percentage of customers, and the operating currents of the FC and battery.

- The proposed model takes into account the technical constraints of the DERs and the consumption of customer agents.

- Market clearing is performed using a DA algorithm.

- The proposed decentralized approach is implemented using real-world data and compared with the SARSA and MC algorithms.

The remainder of this paper is organized as follows: In Section 2, we provide the model of the components of the NEL. Section 3 discusses the energy management design based on reinforcement learning, starting with the Markov game framework and its state, action, and reward components in the multiagent NEL environment. It then covers QL, SARSA, and Monte Carlo algorithms, followed by hyperparameter tuning and the double auction algorithm. Section 4 presents the simulation results and analysis. Finally, we conclude this paper in Section 5.

2. Modeling Agents and Environment in the NEL

The NEL comprises PV panels, WTs, an FC, a battery, and various loads. It serves as an energy management platform for integrating renewable wind and solar generation with battery storage, H2 generation through electrolysis, H2 storage, and power regeneration using an FC. Its flexible energy management unit allows quick modifications to the system’s setup, enabling the integration of multiple renewable inputs with various energy storage options.

In this study, the NEL is approached as a multiagent system (MAS), where each DER, battery, and load is modeled as an autonomous agent. An agent is an entity capable of perceiving its environment, processing information, and taking actions to achieve specific objectives. These agents operate in a fully decentralized manner, making independent decisions based on local observations while interacting through a shared communication network.

At the system level, the MAS aims to use resources efficiently, minimize reliance on the main grid, and ensure reliable energy balancing. The scalable decentralized framework allows additional agents to be integrated without disrupting the learning process, while remaining flexible enough to adapt to dynamic changes in system configuration and operational goals.

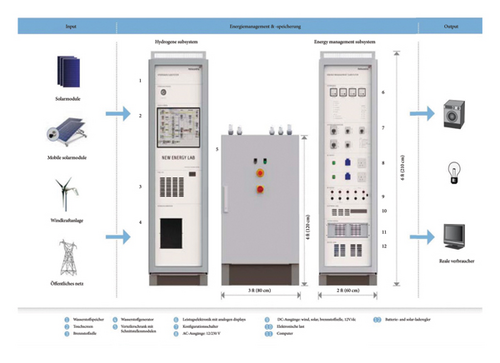

The architecture of the NEL system is depicted in Figure 1, providing a visual representation of its components and interactions.

The NEL is connected to the main grid via a bidirectional power flow and a communication channel.

In the following, mathematical models of different agents are given.

2.1. WT

2.2. PV Panels

In the PV model, T and TA denote the cell and ambient temperatures in °C, respectively. Ki and Kv are the current and voltage temperature coefficients, in A/°C and V/°C, respectively. NOT is the nominal operating temperature of the cell in °C, Voc and Isc are the open-circuit voltage and short-circuit current, and VM and IM are the voltage and current at the maximum power point, respectively.
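The PV equations themselves are not reproduced in this section. As a hedged illustration only, the sketch below implements one widely used PV output model whose symbols match the definitions above: the cell temperature is derived from NOT, the current and voltage are temperature-corrected with Ki and Kv, and a fill factor is formed from VM, IM, Voc, and Isc. The irradiance S (in kW/m²), the module count N, the function names, and all numerical values are assumptions, and the exact form used for the NEL may differ.

```python
def cell_temperature(s_kw_m2: float, t_ambient: float, noct: float) -> float:
    """Assumed cell temperature T (°C) from irradiance S (kW/m^2), TA and NOT (°C)."""
    return t_ambient + s_kw_m2 * (noct - 20.0) / 0.8


def pv_power(s_kw_m2: float, t_ambient: float, noct: float,
             isc: float, voc: float, ki: float, kv: float,
             v_mpp: float, i_mpp: float, n_modules: int = 1) -> float:
    """PV output power (W) under an assumed standard model, not taken from the paper."""
    t_cell = cell_temperature(s_kw_m2, t_ambient, noct)
    current = s_kw_m2 * (isc + ki * (t_cell - 25.0))   # temperature-corrected current (A)
    voltage = voc - kv * t_cell                         # temperature-corrected voltage (V)
    fill_factor = (v_mpp * i_mpp) / (voc * isc)         # FF = VM*IM / (Voc*Isc)
    return n_modules * fill_factor * voltage * current


if __name__ == "__main__":
    # Hypothetical module data for illustration, not the NEL panel datasheet.
    print(pv_power(1.0, 25.0, 43.0, 8.0, 37.0, 0.003, 0.12, 30.0, 7.5))
```

With zero irradiance the modeled current, and therefore the output power, is zero, which is a quick sanity check on any parameter set.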

2.3. FC

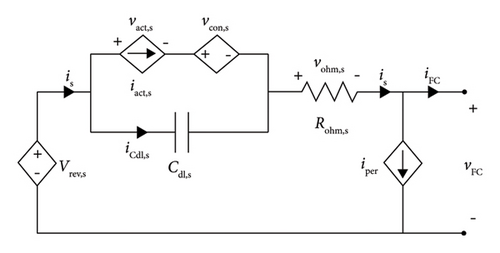

Figure 2 illustrates the model depicting the electrical performance of the FC in both steady-state and dynamic operational modes [30].

2.3.1. Thermodynamic Phenomena

The cell operating temperature is denoted by T (in °C), and the hydrogen and oxygen pressures are expressed in bar.

2.3.2. Activation Phenomena

The overvoltage caused by this phenomenon is known as the activation overvoltage.

2.3.3. Concentration Phenomena

2.3.4. Double Layer Phenomena

2.3.5. Ohmic Phenomena

2.3.6. Peripheral Energy Consumption

2.4. Battery

In [31], the Manegon battery model is presented; it distinguishes two modes: discharge mode and charge mode.

2.4.1. Discharge Mode

2.4.2. Charge Mode

2.5. Loads

The loads are divided into two categories:

- Critical appliances, such as refrigerators, are essential for daily life and must always receive their full power demand. Since these appliances cannot be turned off or reduced without causing inconvenience or disruption, they are excluded from the demand response (DR) program.

- Curtailable appliances, such as air conditioners and water heaters, offer more flexibility. Their power consumption can be adjusted based on system conditions, electricity prices, or incentives, making them valuable participants in the DR program.

Each load acts as an independent agent, deciding how much energy it can reduce when needed. This flexibility helps balance supply and demand, reduce peak loads, and lower electricity costs. By allowing real-time adjustments, curtailable loads also make it easier to integrate renewable energy sources into the microgrid, leading to a more stable and efficient energy system.

2.6. Environment Design

3. Energy Management Design Based on RL

In MGs, each agent's profit depends on its own actions and on the actions of other agents. Because MG environments are stochastic, agents do not follow predetermined, fixed policies; instead, they continuously adapt their strategies to maximize their profits. The Markov game framework is well suited to represent these dynamic and nonstationary interactions among agents. Markov games extend Markov decision processes (MDPs) to MASs: multiple actions are chosen and executed simultaneously, and the resulting new state is influenced by the action of each individual agent [32].

A Markov game is a tuple $(N, S, \{A_i\}_{i \in N}, P, \{R_i\}_{i \in N}, \gamma)$, in which N is the set of agents, S is a finite set of states, $A_i$ is the action set of agent $i \in N$ (the joint action set is $A = A_1 \times \cdots \times A_{|N|}$), $P: S \times A \times S \to [0, 1]$ is the transition probability, i.e., the probability of moving to a new state given the current state and joint action, $R_i$ is the reward function of agent i, and $\gamma \in (0, 1]$ is the discount factor [33].

3.1. Design of Agents’ Elements in Markov Game

In the Markov game setting, producer agents aim to maximize their profits, while customers seek to minimize their costs. The system-level objective is to reduce reliance on the main grid by establishing a self-sufficient MG. Table 1 summarizes the role, states, actions, immediate reward, and objective of each agent.

| Agent's name | Agent's role | States | Actions | Immediate rewards | Objectives |
|---|---|---|---|---|---|
| WT | Energy producer | (t, PrS, PrB) | Price of WT energy | profit_WT − cost_WT | Maximize profit from selling produced energy |
| PV | Energy producer | (t, PrS, PrB) | Price of PV energy | profit_PV − cost_PV | Maximize profit from selling produced energy |
| FC | Energy producer | (t, PrS, PrB) | Price of FC energy, FC current | profit_FC − cost_FC | Maximize profit from selling produced energy |
| Load | Energy consumer | (t, PrS, PrB) | Price of load demand, load curtailment percentage | −(pc·(ci(t) + ri(t)) + K·\|di(t) − ei(t)\|) | Minimize costs and enhance customer satisfaction |
| Battery | Energy storage | (t, PrS, PrB, SoC) | Price of battery energy, battery current | Mode-dependent (charging, discharging, or idle) | Optimize charge/discharge cycles |

Next, we describe the key elements of the Markov game, including states, actions, and rewards.

3.1.1. Description of State Elements in the NEL Multiagent Environment

- The time of day (t) serves as a valuable indicator for all agents, because demand, renewable energy availability, and grid congestion vary with time. Including it helps agents identify daily patterns more effectively.

- The main grid's selling price (PrS) and buying price (PrB) are included in the state of every agent, providing essential information on the current energy cost. This enables producers and consumers to make informed decisions about their energy bids. For example, producers can choose to sell energy to the grid when prices are high to maximize their revenue, while consumers can decide whether to purchase energy from the main grid based on its price. By relying on real-time price data, both types of agents can improve their operations and contribute to more efficient energy management.

- The state of charge (SoC) of the battery is included in the battery agent's state to represent the available energy storage capacity. This enables strategic management of battery usage, helping balance supply and demand, reduce reliance on the main grid, and address the intermittency of renewable energy sources.

3.1.2. Description of Action Elements in the NEL Multiagent Environment

- PV and WT are nondispatchable energy sources: their output depends on environmental factors such as solar irradiance, temperature, and wind speed, so it is inherently variable and cannot be controlled to match demand. Therefore, no action is defined for controlling their energy output. In contrast, the FC is dispatchable, since its output can be adjusted through its operating current, which is chosen as an action to regulate its output in response to demand.

- Batteries support energy management by storing energy, meeting demand requirements, and contributing to grid stability. This is achieved by precisely controlling the charge and discharge currents, so the battery current is chosen as the action that regulates its energy output.

- All producer agents, the battery, and loads set the prices for their energy production and consumption, enabling producers to set prices strategically to optimize revenue and consumers to minimize costs, while taking into account demand, supply, and market conditions.

- The customers' action is the percentage of their load they choose to curtail, representing their willingness to reduce electricity consumption. They select a curtailment level from the set {0, 0.05, …, 0.65, 0.70}. A selection of 0 means the customer does not curtail any part of its load and maintains full electricity usage, whereas a selection of 0.7 means the customer curtails 70% of its load, significantly lowering its consumption to improve system efficiency or save costs.

3.1.3. Description of Reward Elements in the NEL Multiagent Environment

- The rewards of producers such as PV, WT, and FC are defined by the profit they earn from selling their generated energy, either directly to local loads or to the main grid. These rewards are higher when energy is sold in the internal market, which is more profitable than selling excess energy to the grid; the main grid encourages the local use of green energy by paying a lower price for the energy it buys. Consistent with Table 1, the immediate reward of producer p is

  $$R_p(t) = \text{profit}_p(t) - \text{cost}_p(t), \quad p \in \{\text{WT}, \text{PV}, \text{FC}\}$$

- The reward of the customers balances cost and satisfaction. In each time slot t, every customer i has an accumulated load demand di(t), the total energy it wishes to consume for its appliances in that time slot. The desired energy consumption of customer i for its critical and curtailable loads in time slot t is denoted by ci(t) and ri(t), respectively, so di(t) = ci(t) + ri(t). After customer i consumes energy ei(t) during time slot t, it fulfills part of di(t), and the remainder di(t) − ei(t), referred to as the remaining load demand, stays unsatisfied and causes customer dissatisfaction in that time slot. In addition to the price of energy paid by the customer, this unsatisfied load enters the cost as a penalty term. The reward of customer i at each time step is therefore written as follows [19]:

  $$R_i(t) = -\Big(p_c\,\big(c_i(t) + r_i(t)\big) + K\,\big|d_i(t) - e_i(t)\big|\Big)$$

  The value of K can be selected based on customer preferences; for instance, customers who want all their appliances fully supplied should choose a higher K. In this way, the reward reflects each customer's own trade-off between cost and satisfaction. Here, pc denotes the mean energy price of the main grid.

- The battery operates in three distinct modes: charging, discharging, and idle. Each mode has its own reward mechanism designed to guide the battery's behavior and ensure efficient energy management.

  1. Charging mode (battery as a buyer): the battery stores energy for future use. The charging reward depends on a parameter a, which encourages or discourages charging based on the main grid's selling price PrS. Two scenarios are considered:
     - High selling price (PrS > P1): a negative value is assigned to a, discouraging charging when the selling price is high. This prevents the battery from storing energy when it is more profitable to sell.
     - Low selling price (PrS ≤ P1): a positive value is assigned to a, encouraging the battery to charge when energy prices are low and maximizing cost-effectiveness.

  2. Discharging mode (battery as a seller): the battery releases stored energy to the grid or consumers. The discharging reward depends on a parameter b, determined by the selling price:
     - High selling price (PrS ≥ P2): a positive value is assigned to b, encouraging the battery to discharge during periods of high profitability.
     - Low selling price (PrS < P2): a negative value is assigned to b, penalizing discharging at low selling prices to avoid financial loss.

  3. Idle mode (no energy exchange): the battery neither charges nor discharges, and its reward is fixed.

  The idle mode improves the overall efficiency and reliability of the system. By avoiding energy exchanges under unfavorable conditions, it ensures that the battery is used only when doing so is beneficial. This improves economic efficiency by preventing the losses associated with inefficient charging and discharging, and it can extend battery life by limiting excessive cycling, which reduces capacity degradation over time. The idle mode therefore supports both operational sustainability and long-term system stability and is a key element of the energy management strategy.
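The customer reward from Table 1 and the sign rules for the battery parameters a and b can be sketched in a few lines. This is a minimal illustration under stated assumptions: the function names are hypothetical, prices and energies are plain floats in consistent units, and only the signs of a and b are modeled, because the full charging and discharging reward expressions are not reproduced in this section.

```python
def customer_reward(critical: float, curtailable: float, consumed: float,
                    mean_grid_price: float, k: float) -> float:
    """Customer reward -(pc*(ci + ri) + K*|di - ei|), following Table 1."""
    demand = critical + curtailable          # d_i(t) = c_i(t) + r_i(t)
    unmet = demand - consumed                # remaining load demand d_i(t) - e_i(t)
    return -(mean_grid_price * demand + k * abs(unmet))


# Curtailment action set {0, 0.05, ..., 0.70}: 15 discrete levels.
CURTAILMENT_LEVELS = [round(0.05 * n, 2) for n in range(15)]


def battery_coefficients(pr_s: float, p1: float, p2: float,
                         a_mag: float, b_mag: float) -> tuple:
    """Hypothetical helper: signs of a (charging) and b (discharging) from PrS.

    a_mag and b_mag are assumed magnitudes; only their signs follow the text.
    """
    a = -abs(a_mag) if pr_s > p1 else abs(a_mag)   # discourage charging when PrS > P1
    b = abs(b_mag) if pr_s >= p2 else -abs(b_mag)  # encourage discharging when PrS >= P2
    return a, b
```

For example, customer_reward(1.0, 0.5, 1.2, 0.2, 0.5) evaluates to approximately −0.45: the full demand of 1.5 is priced at 0.2, and the 0.3 of unmet demand is penalized with K = 0.5.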


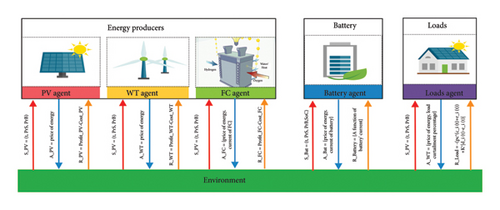

Figure 3 provides a visual representation of the NEL environment, highlighting the states, actions, and rewards associated with each agent in the multiagent setup. In this decentralized framework, agents operate independently, without awareness of each other’s existence, and interact solely with the environment to make decisions.

3.1.3.1. Cumulative Reward: System-Wide Performance

A higher cumulative reward indicates better overall coordination and efficiency among agents, reflecting an improved multiagent energy management strategy.

3.2. Multiagent Reinforcement Learning (MARL) Algorithms: An Overview of QL, SARSA, and Monte Carlo

RL is utilized for managing energy in MGs due to its capability to dynamically optimize energy distribution, storage, and consumption, adapt to changing conditions, handle complex decision-making tasks, provide flexibility in exploring strategies, and improve efficiency and reliability.

In RL, achieving an effective balance between exploration and exploitation is essential for agent success. Exploration involves trying new actions to discover potentially better strategies, whereas exploitation selects the best-known actions to maximize the expected return under the current value estimates. Too much exploration may delay convergence, while excessive exploitation can leave the agent stuck in suboptimal strategies.

In this article, the ε-greedy algorithm is used for action selection during learning because it effectively balances exploration and exploitation. Under the ε-greedy scheme, the agent selects the action with the highest Q-value with probability 1 − ε and a random action with probability ε. It is beneficial for ε to vary over time: it is initially set to a high value, promoting random action selection, and it gradually decreases as training progresses, allowing the agent to shift from exploration to exploitation and make better use of accumulated knowledge [34].

The ε-greedy algorithm was chosen for its simplicity, flexibility, and computational efficiency, which make it suitable for real-time energy management. Its gradual transition from exploration in early training to exploitation in later stages helps agents discover effective strategies while achieving stable performance.
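A minimal sketch of ε-greedy selection with a decaying ε follows. The exponential decay schedule and its parameters (eps_start, eps_min, decay) are assumptions for illustration; this section does not specify the schedule used in the study.

```python
import random


def epsilon_greedy(q_values, epsilon, rng):
    """Pick a random action index with probability epsilon, else the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))              # explore
    # Exploit: highest Q-value (ties go to the lowest index).
    return max(range(len(q_values)), key=lambda i: q_values[i])


def decayed_epsilon(episode, eps_start=1.0, eps_min=0.01, decay=0.995):
    """Assumed exponential decay: mostly random early, mostly greedy later."""
    return max(eps_min, eps_start * decay ** episode)


if __name__ == "__main__":
    rng = random.Random(42)
    q = [0.1, 0.7, 0.3]
    for episode in (0, 500, 2000):
        eps = decayed_epsilon(episode)
        print(episode, round(eps, 3), epsilon_greedy(q, eps, rng))
```

Passing an explicit random.Random instance keeps runs reproducible, which is convenient when comparing QL, SARSA, and MC under identical exploration noise.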

The following subsections describe each of the algorithms employed in this article in detail.

3.2.1. QL

QL is a model-free, off-policy algorithm: agents find the optimal policy without constructing a model of the environment, and the update target uses the best action in the next state regardless of the exploratory action the agent actually takes. It helps an agent learn the optimal action in each state by estimating the expected return of each state-action pair. In RL, the learner (decision-maker) is called the agent, and everything outside the agent is called the environment. The agent interacts with the environment over a sequence of discrete time steps (t = 0, 1, 2, 3, …). At each time step t, the agent receives a state St ∈ S from the environment, where S is the set of possible states. Based on that state, the agent selects an action At ∈ A(St), where A(St) is the set of actions available in state St; it then receives a reward Rt+1 ∈ ℝ and finds itself in a new state St+1. Algorithm 1 gives the pseudocode of the QL algorithm for each agent.

Algorithm 1: Pseudocode of the QL algorithm for multiagent reinforcement learning.

    Initialize Q_p(s_p, a_p) arbitrarily for all states s_p and actions a_p of each agent p
    For each episode:
        Initialize s_p for each agent p
        Repeat for each step of the episode (t = 0, 1, 2, …, T − 1):
            For each agent p:
                Choose action a_p from s_p using the ε-greedy policy derived from Q_p
            Execute the actions; observe reward r_p and next state s'_p for each agent p
            For each agent p:
                a*_p ← argmax_a Q_p(s'_p, a)    # best action in next state
                Update the Q-value using the following formula:
                Q_p(s_p, a_p) ← Q_p(s_p, a_p) + α [r_p + γ Q_p(s'_p, a*_p) − Q_p(s_p, a_p)]
                Set s_p ← s'_p
        Until terminal condition (end of episode)

In this formula, α is the learning rate, which determines the degree to which the agent blends new information into its prior estimates; a higher learning rate gives more weight to recent experience [33]. The discount factor γ determines the importance of future rewards [35]; agents that value expected future rewards more highly choose a higher discount factor.
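The update step of Algorithm 1 can be sketched for one tabular agent as follows. This is a minimal illustration, not the authors' MATLAB implementation: the Q-table is a Python dict keyed by (state, action), states can be any hashable value such as a (t, PrS, PrB) tuple, the action labels are hypothetical, and the defaults α = γ = 0.5 merely echo the baseline value used during hyperparameter tuning.

```python
from collections import defaultdict


def q_learning_update(q, state, action, reward, next_state, actions,
                      alpha=0.5, gamma=0.5):
    """Off-policy TD update: the target uses the best action in next_state."""
    best_next = max(q[(next_state, a)] for a in actions)   # max_a Q(s', a)
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


if __name__ == "__main__":
    q = defaultdict(float)                          # unseen pairs start at 0
    s, s_next = (8, 0.20, 0.05), (9, 0.22, 0.05)    # hypothetical (t, PrS, PrB) states
    print(q_learning_update(q, s, "low_price", 1.0, s_next,
                            ["low_price", "high_price"]))
```

Because each agent keeps its own table and sees only its own state and reward, running one such update per agent per step matches the fully decentralized setting described above.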

3.2.2. SARSA

SARSA [36] is a model-free, on-policy RL algorithm. A SARSA agent updates its Q-values using the action that its current (ε-greedy) policy actually selects in the next state, rather than the greedy action, which is why it is known as an on-policy learning algorithm. Algorithm 2 gives the pseudocode of the SARSA algorithm for each agent.

Algorithm 2: Pseudocode of the SARSA algorithm for multiagent reinforcement learning.

    Initialize Q_p(s_p, a_p) arbitrarily for all states s_p and actions a_p of each agent p
    For each episode:
        Initialize s_p for each agent p
        Choose action a_p from s_p using the ε-greedy policy derived from Q_p, for each agent p
        Repeat for each step of the episode (t = 0, 1, 2, …, T − 1):
            Take the actions; observe reward r_p and next state s'_p for each agent p
            Choose action a'_p from s'_p using the ε-greedy policy derived from Q_p, for each agent p
            # Update Q-value using the SARSA formula:
            Q_p(s_p, a_p) ← Q_p(s_p, a_p) + α [r_p + γ Q_p(s'_p, a'_p) − Q_p(s_p, a_p)]
            # Update state and action for the next iteration
            Set s_p ← s'_p and a_p ← a'_p
        Until terminal condition (end of episode)
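The SARSA update in Algorithm 2 differs from QL only in its target: it bootstraps from the action a′ that the ε-greedy policy actually selected in the next state. A minimal sketch, assuming a dict-based Q-table keyed by (state, action), hypothetical state and action labels, and illustrative defaults α = γ = 0.5:

```python
from collections import defaultdict


def sarsa_update(q, state, action, reward, next_state, next_action,
                 alpha=0.5, gamma=0.5):
    """On-policy TD update: the target uses the action actually chosen next."""
    td_target = reward + gamma * q[(next_state, next_action)]
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


if __name__ == "__main__":
    q = defaultdict(float)
    q[("s1", "lo")] = 2.0                  # the greedy action in s1 would be "lo"
    # The epsilon-greedy policy explored "hi" in s1, so SARSA bootstraps
    # from Q(s1, "hi") = 0 rather than from max_a Q(s1, a) = 2.
    print(sarsa_update(q, "s0", "lo", 1.0, "s1", "hi"))
```

With the same numbers, a QL update starting from Q(s0, "lo") = 0 would bootstrap from max Q(s1, ·) = 2 and return 1.0 instead of 0.5, which illustrates the on-policy versus off-policy distinction.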

3.2.3. Monte Carlo

The Monte Carlo method is also model-free, and it is an off-policy method. To acquire state and reward information, it follows a simple policy and estimates values by averaging the sample returns observed over many episodes. Its validity rests on the law of large numbers: with a sufficient number of simulations and an adequate sample of returns, the sample average approaches the true expected value [22]. Algorithm 3 gives the procedural form of the algorithm.

Algorithm 3: Pseudocode of the MC algorithm for multiagent reinforcement learning.

    Initialize:
        V_p(s) ∈ ℝ arbitrarily for all s ∈ S, for each agent p
        Returns_p(s) ← an empty list for all s ∈ S, for each agent p
    Loop forever (for each episode):
        Generate an episode following policy π_p: S_0, A_0, R_1, …, S_{T−1}, A_{T−1}, R_T
        G_p ← 0    # initialize return
        Loop for each step of the episode, t = T − 1, T − 2, …, 0:
            G_p ← γ G_p + R_{t+1}
            Append G_p to Returns_p(S_t)
            V_p(S_t) ← average(Returns_p(S_t))
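The backward return computation in Algorithm 3 can be sketched for a single agent as below. This is an every-visit variant written as an assumption, since the section does not state whether first-visit or every-visit averaging is used; an episode is a hypothetical list of (S_t, R_{t+1}) pairs, and γ = 0.5 is illustrative.

```python
from collections import defaultdict


def mc_update(episode, returns, values, gamma=0.5):
    """Every-visit Monte Carlo prediction for one episode.

    episode: list of (state, reward) pairs, where reward is R_{t+1},
    the reward received after leaving state S_t.
    """
    g = 0.0
    for state, reward in reversed(episode):          # t = T-1, ..., 0
        g = gamma * g + reward                       # G <- gamma*G + R_{t+1}
        returns[state].append(g)
        values[state] = sum(returns[state]) / len(returns[state])
    return values


if __name__ == "__main__":
    returns, values = defaultdict(list), {}
    mc_update([("s0", 1.0), ("s1", 2.0)], returns, values)
    mc_update([("s0", 0.0)], returns, values)
    print(values)   # {'s1': 2.0, 's0': 1.0}
```

Unlike the QL and SARSA updates, nothing is learned until an episode ends, and every visited state must keep its list of returns, which is consistent with MC's longer training time and larger memory footprint reported for this study.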

3.3. Hyperparameter Tuning

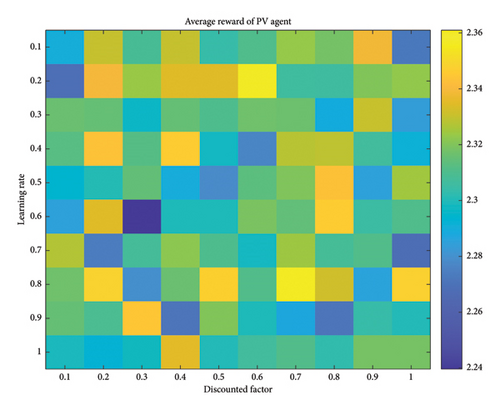

Hyperparameters such as the discount factor and learning rate need to be tuned in RL to optimize the agent's learning process and improve its performance. These hyperparameters influence the agent's convergence rate and overall stability, and thus its ability to learn an effective policy and achieve the desired outcomes in various environments. To find the best values for each agent in each algorithm, the learning rate and discount factor of all agents except the one under examination are fixed at 0.5, and the best values for the examined agent are then determined.
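The one-agent-at-a-time procedure described above can be sketched as a grid search. Assumptions: the candidate grid, the agent names used as keys, and the evaluate callback (which in practice would train all agents and return a performance score such as cumulative reward) are hypothetical, and α and γ are searched jointly here, which may differ from the authors' procedure.

```python
from itertools import product


def tune_agent(agents, target, evaluate, grid, default=0.5):
    """Search (alpha, gamma) for `target` while all other agents use `default`."""
    best_pair, best_score = None, float("-inf")
    for alpha, gamma in product(grid, grid):
        params = {name: (default, default) for name in agents}
        params[target] = (alpha, gamma)            # only the examined agent varies
        score = evaluate(params)                   # hypothetical training + scoring
        if score > best_score:
            best_pair, best_score = (alpha, gamma), score
    return best_pair, best_score


if __name__ == "__main__":
    agents = ["WT", "PV", "FC", "Load", "Battery"]
    grid = [0.1, 0.3, 0.5, 0.7, 0.9]

    def toy_evaluate(params):
        # Stand-in for a full training run; peaks at alpha=0.3, gamma=0.9 for the FC.
        alpha, gamma = params["FC"]
        return -((alpha - 0.3) ** 2 + (gamma - 0.9) ** 2)

    print(tune_agent(agents, "FC", toy_evaluate, grid))
```

Repeating the call once per agent and per algorithm reproduces the overall structure of the tuning procedure; each call costs len(grid)² training runs.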

The parameters a, b, and c and the price thresholds P1 and P2, which influence the battery’s reward mechanism, are determined through an iterative trial-and-error approach. This process ensures that the reward structure effectively supports the system’s operational objectives.

3.4. Double Auction Procedure for Energy Management of the NEL

In general, $n_b$ denotes the total number of buyers, and its round-specific counterpart refers to the number of buyers in round k.

The DA agent sorts $\text{Price}_k$ in ascending order and $\text{Bid}_k$ in descending order. In the $k$th round, it compares the minimum offered price with the maximum bid. If the maximum bid is greater than or equal to the minimum price, the corresponding suppliers and buyers exchange their matched energy; the participating parties are the suppliers offering the minimum price and the buyers submitting the maximum bid. If supplier $i$ exhausts its offered quantity or the demand of buyer $j$ is fully satisfied, the corresponding quote is removed from $\text{QSupplier}_{k+1}$ or $\text{QDemand}_{k+1}$. However, if some quantity remains for supplier $i$ or buyer $j$ at their specified prices, a new quote for the remaining quantity is formed and joins $\text{QSupplier}_{k+1}$ or $\text{QDemand}_{k+1}$, respectively.

Here, $L_t$ denotes the total number of auction rounds in time slot $t$.
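The round-based matching can be sketched in Python as follows. This is a simplified illustration, not the authors' implementation: the function name `double_auction`, the quote format `(agent_id, price, quantity)`, matching a single best offer with a single best bid per round, and the midpoint clearing price are all assumptions, because the clearing price rule is not given in this section. Quotes left in the returned lists correspond to the surplus energy and unmet load that would be exchanged with the main grid.

```python
def double_auction(offers, bids):
    """Round-based double auction (simplified sketch).

    offers: list of (supplier_id, price, quantity) quotes.
    bids:   list of (buyer_id, bid, quantity) quotes.
    Returns (trades, unsold, unmet, rounds): trades is a list of
    (supplier_id, buyer_id, quantity, clearing_price) tuples, and rounds
    counts the auction rounds that produced a trade.
    """
    offers, bids = list(offers), list(bids)
    trades, rounds = [], 0
    while offers and bids:
        offers.sort(key=lambda q: q[1])               # Price_k ascending
        bids.sort(key=lambda q: q[1], reverse=True)   # Bid_k descending
        (sid, price, q_s), (bid_id, bid, q_b) = offers[0], bids[0]
        if bid < price:          # highest bid below lowest price: stop matching
            break
        rounds += 1
        qty = min(q_s, q_b)
        # Assumed clearing rule: midpoint of the matched price and bid.
        trades.append((sid, bid_id, qty, (price + bid) / 2))
        offers.pop(0)
        bids.pop(0)
        # Any remaining quantity forms a new quote for round k + 1.
        if q_s > qty:
            offers.append((sid, price, q_s - qty))
        if q_b > qty:
            bids.append((bid_id, bid, q_b - qty))
    return trades, offers, bids, rounds


result = double_auction([("PV", 10, 1.0), ("WT", 20, 0.5)],
                        [("L1", 30, 1.5), ("L2", 15, 0.5)])
print(result)
```

In this example, load L2 bids below every remaining offer, so its 0.5 kWh would be bought from the main grid.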

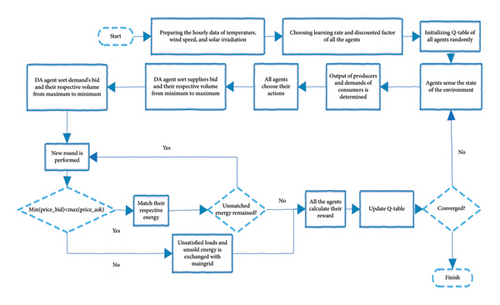

Based on the above description, Figure 4 depicts the proposed RL-based algorithm for energy management within the MG.

4. Simulation and Results

In this section, we present the implementation of the proposed EMS for the NEL. The simulations were run on a laptop with an Intel Core i7 processor and 8 GB of RAM. The QL, SARSA, and MC algorithms were implemented in MATLAB R2020b using a decentralized control approach: each agent independently updates its values based on local interactions with the environment, learning through exploration and exploitation within the MATLAB-defined environment.

4.1. Case Study

The simulation was carried out using the NEL as a case study. Table 2 lists the nominal power ratings and energy production costs of the suppliers and loads in the NEL.

| Agent name | Nominal capacity (kW) | Cost of energy production (cents/kWh) |
|---|---|---|
| PV | 1 | 7 |
| WT | 0.4 | 7.8 |
| Battery | 0.4 | — |
| Load | 5 × 0.8 = 4 | — |

The technical parameters of the WT, PV, and battery used in the NEL are summarized in Tables 3, 4, and 5, respectively. The technical data used to model the FC are derived from empirical results obtained for the Nexa 1200 FC used in the NEL.

| Parameter | Value |
|---|---|
| Rotor diameter | 1.17 m (46 in) |
| Number of blades | 3 |
| Rated power $P_r$ | 400 W at 12.5 m/s |
| Cut-in wind speed $v_{ci}$ | 3.6 m/s |
| Rated wind speed $v_r$ | 12.5 m/s |
| Cut-out wind speed $v_{co}$ | 49 m/s |

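| Parameter | Value |
|---|---|
| Short-circuit current $I_{sc}$ | 8.63 A |
| Temperature coefficient of $I_{sc}$, $K_i$ | $0.055 \times 10^{-2}$ |
| Open-circuit voltage $V_{oc}$ | 37.4 V |
| Temperature coefficient of $V_{oc}$, $K_v$ | $-0.33 \times 10^{-2}$ |
| Voltage at maximum power point $V_M$ | 30.7 V |
| Current at maximum power point $I_M$ | 8.15 A |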

| Description | Value |
|---|---|
| Type | Lead-acid |
| Rated battery voltage | 4 × 12 V = 48 V |
| Nominal capacity $C_{20}$ | 55 Ah |
| Nominal capacity $C_1$ | 34 Ah |

Considering the models described in Section 2 and incorporating the weather data and technical specifications from these tables, various agents’ behaviors were simulated.

4.2. Configuration of MG

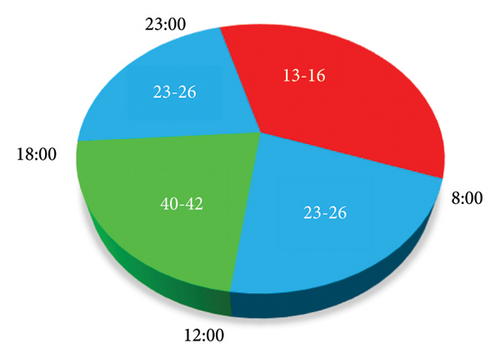

The main grid buys electrical power from the MG at prices drawn from a uniform distribution between 5 and 15 cents/kWh. It sells power to the MG at time-varying prices: for hours 23–8, 8–12, 12–18, and 18–23, the prices are drawn from uniform distributions over 13–16, 23–26, 40–42, and 23–26 cents/kWh, respectively, as shown in Figure 5. To discourage suppliers from selling energy to the main grid purely for profit, the price at which the main grid buys energy is set lower than the price at which it sells energy.
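A minimal sketch of this price model in Python follows, assuming hours are indexed 0 to 23 and each band is a half-open interval (for example, hour 8 belongs to the 8–12 band, and hours 23 through 7 use the night band). The names `grid_prices`, `SELL_BANDS`, `NIGHT_BAND`, and `BUY_BAND` are hypothetical.

```python
import random

# Selling-price bands of the main grid (cents/kWh) for half-open hour
# intervals [start, end); any hour not listed falls in the 23-8 night band.
SELL_BANDS = [
    ((8, 12), (23.0, 26.0)),
    ((12, 18), (40.0, 42.0)),
    ((18, 23), (23.0, 26.0)),
]
NIGHT_BAND = (13.0, 16.0)  # hours 23-8
BUY_BAND = (5.0, 15.0)     # price at which the main grid buys from the MG


def grid_prices(hour, rng):
    """Sample (buy_price, sell_price) of the main grid for an hour in 0..23."""
    low, high = NIGHT_BAND
    for (start, end), band in SELL_BANDS:
        if start <= hour < end:
            low, high = band
            break
    sell = rng.uniform(low, high)
    buy = rng.uniform(*BUY_BAND)
    return buy, sell


rng = random.Random(0)
for hour in (3, 9, 14, 20):
    buy, sell = grid_prices(hour, rng)
    print(f"hour {hour:2d}: buy {buy:5.2f}, sell {sell:5.2f} cents/kWh")
```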

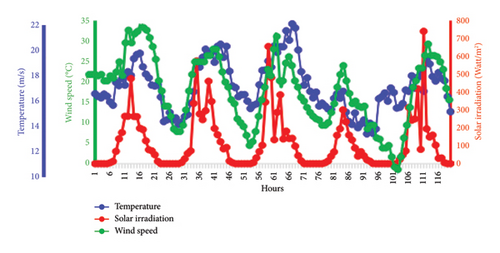

During the training and testing phases, distinct datasets are employed. The training dataset encompasses temperature, wind speed, and solar irradiation data spanning from 01/01/2018 to 01/01/2023. To augment the training dataset, additional data are generated based on the existing records. Subsequently, the testing phase utilizes data collected over 30 days, specifically from 01/07/2023 to 30/07/2023, to evaluate the effectiveness of the algorithms [40]. Figure 6 illustrates these data for five consecutive days.

As an example, Figure 7 illustrates the hourly energy outputs of the WT, PV, and FC, along with the buying and selling prices of the main grid, over 24 h. The hourly mean load profile of the customers is given in Table 6 [38].

| Hours of a day | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Load consumption (pu) | 0.4 | 0.4 | 0.4 | 0.4 | 0.4 | 0.4 | 0.4 | 0.4 | 0.4 | 0.6 | 0.8 | 1 |
| Hours of a day | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 | 21 | 22 | 23 | 24 |
| Load consumption (pu) | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0.8 | 0.6 | 0.5 |

- Note: The values are per unit based on the maximum value of the load.

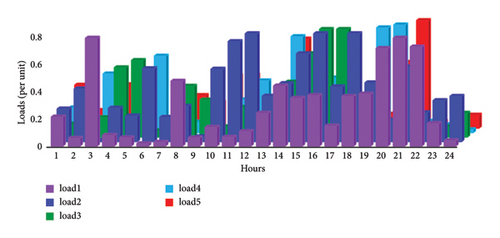

In this article, we consider five loads, each with an average demand of 0.8 kW. Figure 8 shows these loads over 24 h; they were generated using an exponential distribution. We assume that 30% of each customer's load is critical and must be supplied, while the remaining 70% can be curtailed; that is, customers can curtail at most 70% of their load.
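The following sketch shows one possible way to generate the customer loads and enforce the curtailment limit. Only the Table 6 profile, the 0.8 kW average, and the 70% curtailment bound come from the text; drawing each hourly load from an exponential distribution whose mean follows the scaled profile, and the helper names `sample_daily_load` and `served_load`, are assumptions for illustration.

```python
import random

# Table 6 hourly profile (pu of the maximum load), hours 1..24.
PROFILE_PU = [0.4] * 9 + [0.6, 0.8] + [1.0] * 10 + [0.8, 0.6, 0.5]
MEAN_LOAD_KW = 0.8     # average load per customer
MAX_CURTAILMENT = 0.7  # at most 70% of a load may be curtailed


def sample_daily_load(rng):
    """Sample one customer's 24-h load in kW (exponential draws are assumed)."""
    # Scale the pu profile so that its daily average equals MEAN_LOAD_KW.
    peak = MEAN_LOAD_KW * len(PROFILE_PU) / sum(PROFILE_PU)
    return [rng.expovariate(1.0 / (peak * pu)) for pu in PROFILE_PU]


def served_load(load_kw, curtailment):
    """Load actually supplied after curtailing a fraction of it."""
    if not 0.0 <= curtailment <= MAX_CURTAILMENT:
        raise ValueError("curtailment must lie in [0, 0.7]")
    return load_kw * (1.0 - curtailment)


rng = random.Random(1)
customers = [sample_daily_load(rng) for _ in range(5)]
print([round(x, 2) for x in customers[0][:6]])
print(served_load(2.0, 0.7))  # only the 30% critical share remains
```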

4.3. Sensitivity Analysis of Hyperparameters

In this article, three different algorithms are used to manage energy among the generators, loads, and main grid. For all agents, we experimented with different values of epsilon in the epsilon-greedy approach, and the best results were obtained with epsilon set to one. The optimal hyperparameters of the agents for the different algorithms are given in Table 7.

| Agent name | QL learning rate | QL discount factor | SARSA learning rate | SARSA discount factor | MC discount factor |
|---|---|---|---|---|---|
| WT | 0.3 | 0.8 | 0.6 | 0.2 | 0.1 |
| PV | 0.2 | 0.7 | 0.7 | 0.4 | 0.9 |
| FC | 0.2 | 0.8 | 0.4 | 0.2 | 0.7 |
| Battery | 0.1 | 0.1 | 0.3 | 0.4 | 0.8 |
| Load | 0.4 | 0.9 | 0.9 | 0.3 | 0.2 |

For instance, the performance of the PV agent was assessed using the QL algorithm, considering various combinations of learning rates and discount factors. Details of these evaluations are presented in Figure 9, where the profit of the PV agent is illustrated across different learning rates and discount factors. Notably, the PV agent achieves its maximum reward at a learning rate of 0.4 and a discount factor of 0.5.

4.4. Details of Markov Game Problem

The states, actions, and rewards designed for these agents are described below.

Every agent selects the price at which it intends to buy or sell energy from a discrete set of nine prices uniformly spaced between 7 and 45 cents/kWh.

PV and WT set the selling price of their energy from this set; consequently, their action space consists of nine values. The battery can both buy and sell electricity, and it expresses its intention through the sign of its current. The current takes one of five values: two positive, two negative, and zero. Consequently, the battery agent's action set comprises 9 × 5 = 45 values. Similar to the battery, the FC can select its current from the following ranges: 0, 0–10, 10–20, 20–30, 30–40, and 40–50. Its action space therefore consists of 9 × 6 = 54 values. In addition to choosing the price for their energy demand, customers can select their load curtailment fraction from the set {0, 0.05, …, 0.65, 0.70}, which contains 15 values. Therefore, their action space comprises 9 × 15 = 135 values.
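The action-space sizes above can be checked by enumerating each agent's discrete choices as Cartesian products. The evenly spaced price grid and the symbolic battery current levels are assumptions made for illustration; only the number of options per agent is taken from the text.

```python
from itertools import product

# Nine price levels between 7 and 45 cents/kWh (even spacing is an assumption).
PRICES = [7 + i * (45 - 7) / 8 for i in range(9)]

# Symbolic current levels: two negative, zero, and two positive
# (placeholders; only their count comes from the text).
BATTERY_CURRENTS = [-2, -1, 0, 1, 2]
# FC current ranges listed in the text.
FC_CURRENTS = ["0", "0-10", "10-20", "20-30", "30-40", "40-50"]
# Curtailment fractions 0, 0.05, ..., 0.70 (15 values).
CURTAILMENT = [round(0.05 * i, 2) for i in range(15)]

ACTION_SPACES = {
    "PV": PRICES,
    "WT": PRICES,
    "Battery": list(product(PRICES, BATTERY_CURRENTS)),
    "FC": list(product(PRICES, FC_CURRENTS)),
    "Load": list(product(PRICES, CURTAILMENT)),
}

for agent, actions in ACTION_SPACES.items():
    print(agent, len(actions))  # PV 9, WT 9, Battery 45, FC 54, Load 135
```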

4.5. Simulation Results and Analysis

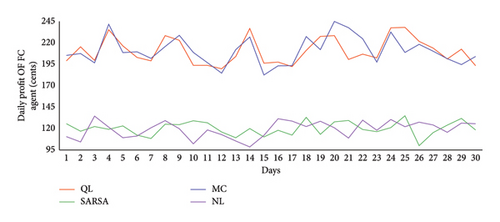

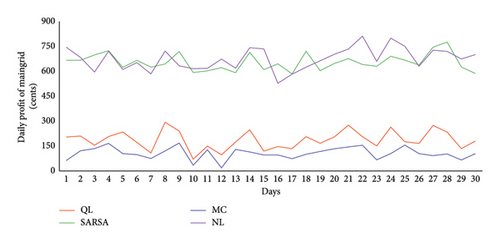

Figure 10 illustrates the daily profit of the FC agent under the different algorithms. MC yields the highest mean profit, 211.03 cents, closely followed by QL with 210.69 cents. SARSA yields the lowest profit among the learning algorithms, 120.06 cents, which is comparable to the NL scenario (119.61 cents). MC and QL perform similarly, and both substantially outperform the NL scenario.

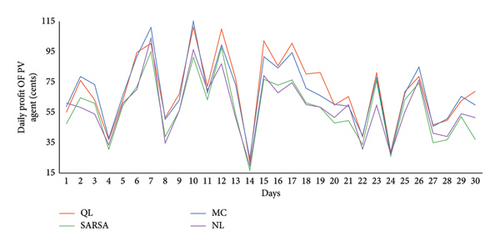

Figure 11 depicts the daily profits of the PV agent under the various algorithms. Identifying the most effective algorithm by visual inspection alone is difficult. In terms of mean profit, QL performs best for the PV agent, achieving 70.24 cents, followed by MC and SARSA with 68.84 and 57.74 cents, respectively. QL and MC perform very similarly. Surprisingly, in the scenario where agents lack learning capability (NL), the PV agent achieves a profit of 58.87 cents, even outperforming SARSA.

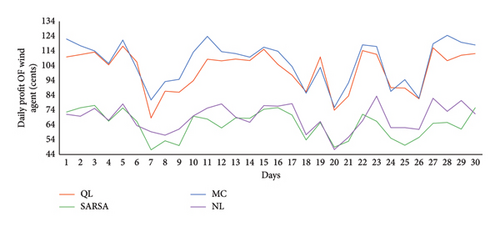

Figure 12 presents the daily profit of the WT agent over 30 days. The mean profits of the WT agent under MC, QL, and SARSA are 107.14, 101.78, and 64.55 cents, respectively, compared with 68.58 cents in the NL scenario. Both MC and QL performed well, with MC achieving the highest mean profit, followed closely by QL. SARSA, however, performed worst of all, even below the NL scenario.

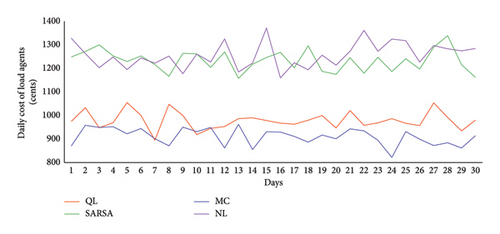

Figure 13 illustrates the daily accumulated costs of all five load agents. The cost is considerably higher when the agents lack learning capabilities: in the NL scenario, they cannot curtail their loads efficiently or submit appropriate bids, resulting in an average load cost of 1237.89 cents. Among the RL algorithms, MC performs best, with a mean cost of 910.97 cents over 30 days, followed by QL and SARSA with mean costs of 979.67 and 1234.25 cents, respectively. SARSA's performance closely mirrors the NL scenario, indicating that little learning has occurred with SARSA.

One of the primary objectives of each MG is to achieve self-sufficiency and reduce reliance on the main grid, thereby minimizing transactions between the MG and the main grid. In the NEL, this can be accomplished by utilizing local resources such as PV, WT, the FC, and the battery.

For a grid-connected MG, this means reducing the main grid's profit through fewer transactions. Figure 14 presents the daily profit of the main grid under the different algorithms; as discussed, an algorithm that reduces the main grid's profit is preferable. When all agents employ the MC algorithm, the main grid attains its lowest mean profit, 106.54 cents. QL and SARSA follow, with mean profits of 185.05 and 654.86 cents, respectively. When agents lack learning abilities, they interact most with the main grid, yielding the highest profit for the main grid (675.33 cents), which is an undesirable outcome. Notably, SARSA did not perform well, and its outcome closely resembles that of the NL scenario.

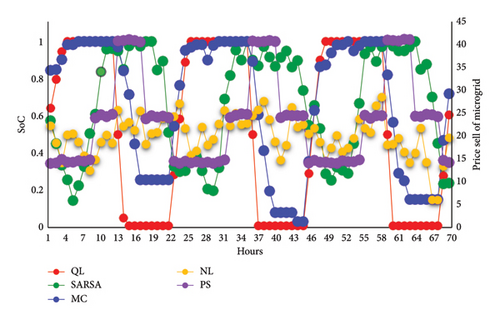

In Figure 15, the left axis shows the SoC of the battery agent under the various algorithms, while the right axis shows the selling price of the main grid. The battery agent using QL clearly learns and exhibits the desired behavior discussed previously. With MC, the battery agent also learns to charge during periods of low energy prices, as with QL; however, it performs worse when energy prices are high, as it struggles to discharge fully to maximize profit. SARSA does not perform as well and cannot consistently follow the charge and discharge pattern seen with QL, although it still outperforms the NL scenario, in which there is no specific pattern and all behavior is entirely random. The mean profit of the battery was −21.9 cents in the NL scenario and increased to −15.42, −6.06, and −6.24 cents with SARSA, QL, and MC, respectively. Even with learning, the battery's profit remains close to zero, and several factors may contribute to this outcome. First, owing to the technical constraints of the NEL, the battery's capacity is small compared with that of the other agents, which limits its ability to earn higher profits. Second, within MGs, the battery is primarily reserved for critical loads, such as medical facilities and emergency services, where the priority is a reliable energy supply. Third, to promote the use of RES in the market, the algorithm gives DER agents such as PV, WT, and FC precedence over the battery when all agents sell energy at the same price. Nevertheless, as depicted in Figure 15, QL manages the battery's SoC effectively, allowing the battery to schedule its charging and discharging according to the energy price and thereby supporting the MG.
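The precedence rule for equal prices can be expressed as a two-level sort key: price first, then agent priority. In this sketch, the rank values in the hypothetical `PRIORITY` table and the function name `sort_offers` are assumptions; only the rule that DER agents precede the battery at equal prices comes from the text.

```python
# Hypothetical priority ranks: a lower rank wins a tie at the same price.
PRIORITY = {"PV": 0, "WT": 0, "FC": 0, "Battery": 1}


def sort_offers(offers):
    """Sort (agent_id, price, quantity) offers by ascending price,
    placing DER agents ahead of the battery when prices are equal."""
    return sorted(offers, key=lambda q: (q[1], PRIORITY.get(q[0], 2)))


offers = [("Battery", 10, 0.4), ("PV", 10, 1.0), ("WT", 8, 0.4)]
print(sort_offers(offers))
# [('WT', 8, 0.4), ('PV', 10, 1.0), ('Battery', 10, 0.4)]
```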

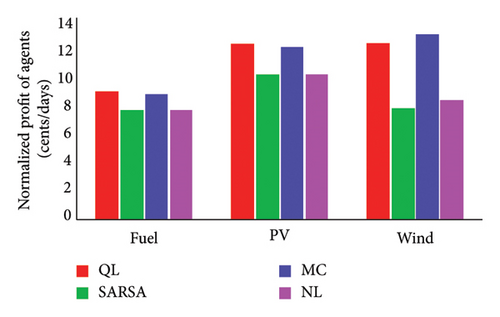

In Figure 16, the normalized profit of DER agents is depicted. We can observe that, for the FC and PV agents, the QL algorithm outperforms others, followed by the MC algorithm. In addition, the SARSA algorithm’s performance is slightly better than the NL scenario. In the case of the WT agent, the MC algorithm performs better than QL. However, for this agent, the SARSA algorithm performed worse than the NL scenario.

In summary, Table 8 presents the mean daily profit (or cost) of each component under the different RL algorithms, while Table 9 shows the corresponding daily energy volumes of the agents. Based on Table 8, MC achieves the highest profits for the FC and WT and the lowest cost for the loads, while QL performs best for the PV. MC also attains the highest collective reward (−523.96 cents/day), followed by QL (−596.96 cents/day), indicating better overall system efficiency. However, evaluating the algorithms solely on agent profits may not provide a comprehensive assessment, so a criterion that encompasses both profit and agent volume is needed. Such a criterion is introduced in Section 4.7 to compare the algorithms according to the preferences of all agents. As Table 9 shows, the net volume exchanged with the main grid falls from 14.59 kWh/day under NL to −3.75 and −7.47 kWh/day under QL and MC, respectively. The negative values indicate that the MG not only depends less on the main grid but also becomes a net seller of power to it.

| Quantity | NL (cents/day) | QL (cents/day) | SARSA (cents/day) | MC (cents/day) |
|---|---|---|---|---|
| FC profit | 119.61 | 210.69 | 120.06 | 211.03 |
| PV profit | 58.24 | 70.24 | 57.74 | 68.84 |
| WT profit | 68.58 | 101.78 | 64.55 | 107.14 |
| Load cost | 1237.89 | 979.67 | 1234.25 | 910.97 |
| Main grid profit | 675.33 | 108.05 | 654.86 | 108.05 |
| Collective reward | −991.46 | −596.96 | −991.90 | −523.96 |
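The collective reward row of Table 8 matches the sum of the FC, PV, and WT profits minus the load cost in every column, so the battery and main grid terms appear not to enter it. This decomposition is inferred from the table values rather than stated in the text; the short Python check below reproduces the row under that assumption.

```python
# Mean daily values from Table 8 (cents/day).
TABLE_8 = {
    "NL":    {"FC": 119.61, "PV": 58.24, "WT": 68.58, "load_cost": 1237.89},
    "QL":    {"FC": 210.69, "PV": 70.24, "WT": 101.78, "load_cost": 979.67},
    "SARSA": {"FC": 120.06, "PV": 57.74, "WT": 64.55, "load_cost": 1234.25},
    "MC":    {"FC": 211.03, "PV": 68.84, "WT": 107.14, "load_cost": 910.97},
}


def collective_reward(row):
    """DER profits minus the customers' cost, in cents/day."""
    return row["FC"] + row["PV"] + row["WT"] - row["load_cost"]


for name, row in TABLE_8.items():
    print(f"{name}: {collective_reward(row):.2f}")
# NL: -991.46, QL: -596.96, SARSA: -991.90, MC: -523.96
```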

| Quantity | NL (kWh/day) | QL (kWh/day) | SARSA (kWh/day) | MC (kWh/day) |
|---|---|---|---|---|
| FC volume | 15.66 | 23.71 | 15.89 | 24.2 |
| PV volume | 5.71 | 5.71 | 5.71 | 5.71 |
| WT volume | 8.26 | 8.26 | 8.26 | 8.26 |
| Load volume | 44.23 | 33.94 | 43.45 | 30.71 |
| Main grid volume | 14.59 | −3.75 | 13.57 | −7.47 |

4.6. Effect of the Dissatisfaction Factor on Customer Costs

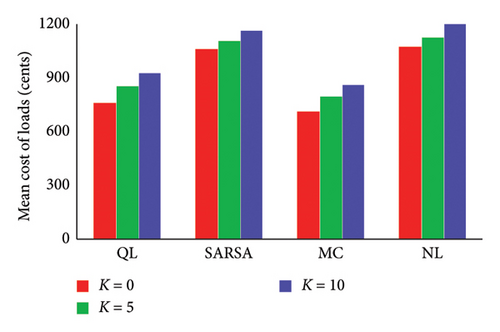

To illustrate the effect of the dissatisfaction coefficient K, three values, K = 0, 5, and 10, are considered for all five customers in the MG. Customers who prefer not to reduce their energy demand should choose a higher dissatisfaction coefficient. Figure 17 shows the customers' costs for the different algorithms and values of K. The cost of customers clearly increases as K increases. For example, when the QL algorithm is employed for all loads, the mean cost of the loads rose by 12.11% when K increased from 0 to 5, and by a further 8.42% when K increased to 10. The same trend holds for all algorithms: higher values of K are associated with higher mean costs.
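Assuming the 8.42% figure is measured relative to the cost at K = 5 (the reading under which the cost keeps rising with K), the two increases compound rather than add. The hypothetical helper `compound` below combines successive percentage increases into one overall change from K = 0 to K = 10.

```python
def compound(*increases_pct):
    """Combine successive percentage increases into one overall increase (%)."""
    factor = 1.0
    for pct in increases_pct:
        factor *= 1.0 + pct / 100.0
    return (factor - 1.0) * 100.0


# QL, all loads: K = 0 -> 5 (+12.11%), then K = 5 -> 10 (+8.42%).
print(f"{compound(12.11, 8.42):.2f}%")  # prints 21.55%
```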

4.7. Comparison of Learning Algorithms

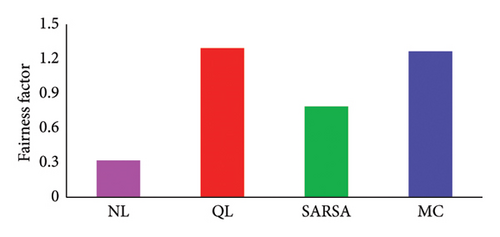

In this section, we use a fairness factor [19] to compare the results of the different learning algorithms. The factor increases when the average profit of the DERs rises, when the average cost of the customers falls, or both. Consequently, a system that benefits both customers and DERs achieves the highest fairness factor, ensuring fairness for all participating agents. The values computed for the various algorithms are depicted in Figure 18. QL performs best, with the highest value of 1.2643, followed by MC (1.2358) and SARSA (0.7640). In the absence of learning, the fairness factor is only 0.30, so all three learning algorithms substantially improve fairness.

Another crucial aspect to consider is the simulation time. Table 10 lists the simulation times of the learning algorithms. QL requires the shortest time, 1483 s, which is about 21% less than MC (1879.74 s) and about 68% less than SARSA (4637 s). This suggests that QL reaches an optimal or near-optimal policy with less computation than the other algorithms, which makes it a more economical solution for practical applications.

| Algorithm | QL (s) | SARSA (s) | MC (s) |
|---|---|---|---|
| Simulation time | 1483 | 4637 | 1879.74 |

Another essential factor is the memory required for the simulation, which depends on the dimensions of the matrices used. MC requires significantly more memory than QL because it stores entire episode trajectories and the returns observed for each state, whereas QL updates its estimates incrementally during learning. Hence, QL is preferable in terms of memory efficiency.
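To make the memory comparison concrete, the sketch below shows a single tabular Q-learning update in Python. The agent stores only one value per state-action pair and overwrites it in place, whereas the MC procedure of Algorithm 3 appends every observed return to $\text{Returns}_p(s)$, so its storage grows with the number of visits. The function name `q_learning_update`, the dictionary-based Q-table, and the toy states and actions are illustrative assumptions, not the MATLAB implementation used in this study.

```python
from collections import defaultdict


def q_learning_update(q, state, action, reward, next_state, actions, alpha, gamma):
    """One tabular Q-learning step; the Q-table is the only stored data."""
    best_next = max(q[(next_state, a)] for a in actions)  # max_a' Q(s', a')
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


q_table = defaultdict(float)  # Q(s, a) initialized to 0
value = q_learning_update(q_table, "s0", "sell", 10.0, "s1",
                          actions=["sell", "buy"], alpha=0.5, gamma=0.9)
print(value)  # 5.0
```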

5. Conclusion

In this research, a decentralized RL-based energy management approach was implemented to oversee energy operations within the NEL. In this framework, both energy producers and consumers were modeled as agents that make decisions to maximize their own profits. A DA agent determined the internal market price, and any remaining unmet load and surplus energy from producers were exchanged with the main grid. The QL algorithm was compared with SARSA and MC using real-world data. QL achieved the highest fairness factor, although its performance was close to that of MC. However, given QL's lower computational complexity, smaller memory requirements, and faster execution compared with MC, QL is recommended for practical applications. This decentralized energy management approach could be applied in future net zero buildings to help smart cities achieve net zero targets by 2050.

Conflicts of Interest

The authors declare no conflicts of interest.

Funding

This research received no specific funding.

Open Research

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.