Reinforcement Learning-Based Injection Schedules for CO2 Geological Storage Under Operation Constraints

Abstract

This study develops an advanced deep reinforcement learning framework utilizing the Advantage Actor–Critic (A2C) algorithm to optimize periodic CO2 injection scheduling with a focus on both containment and injectivity. The A2C algorithm identifies optimal injection strategies that maximize the CO2 injection volume while adhering to fault-pressure constraints, thereby reducing the risk of fault activation and leakage. Through interactions with a dynamic 3D geological model, the algorithm selects actions from a continuous space and evaluates them using a reward system that balances injection efficiency with operational safety. The proposed reinforcement learning approach outperforms constant-rate strategies, achieving 22.3% greater CO2 injection volumes over a 16-year period while maintaining fault stability at a given activation pressure, even without incorporating geomechanical modeling. The framework effectively accounts for subsurface uncertainties, demonstrating robustness and adaptability across various fault locations. The proposed method is expected to serve as a valuable tool for optimizing CO2 geological storage that can be applied in complex subsurface operations under uncertain conditions.

1. Introduction

CO2 geological storage in subsurface formations, such as depleted gas reservoirs and saline aquifers, requires a detailed analysis of its capacity, injectivity, and containment. The storage capacity indicates the quantity of CO2 that can be commercially stored in discovered and characterized geological deposits, including assessments of CO2 injectivity and containment throughout the lifetime of the project. The containment, which involves features such as seals and caprocks, ensures that the injected CO2 remains secure within the storage layers and does not migrate to unintended zones. Injectivity pertains to the ability to inject CO2 at an adequate rate using available wells.

Owing to geological heterogeneity and the uncertainty involved in the geological storage of CO2, the capacity, injectivity, and containment of this process are nonlinearly interrelated. Consequently, the quantitative analyses of these factors are challenging [1, 2]. Key uncertainties in the storage capacity of CO2 include the formation geometry and spatial heterogeneity of pores, which affect the gross rock volume. Uncertainties in containment arise from hydrodynamic and geochemical factors that affect the mobility of injected CO2. For instance, difficulties in evaluating leakage risks from legacy wells in depleted gas reservoirs make the assessment of containment reliability challenging. Moreover, injectivity issues, such as salt precipitation and CO2 hydrate formation near the wellbore, are predominant factors affecting the site operation phase [3–6].

Ensuring the security of CO2 injection strategies requires an in-depth analysis of the effective containment of stored CO2. Designing injection rates that ensure adequate containment enables an accurate estimation of the storage capacity and reduces the risk of potential leakage through various pathways. Jung et al. [7] investigated CO2 injection at the Frio site and found that CO2 dissolution in brine reduced the pressure build-up by 33%. Laboratory tests and simulations confirmed the safety of the proposed injection strategy, with fault reactivation and hydraulic fracturing requiring significantly larger volumes and rates than those used. Moreover, rock sample tests confirmed the minimal risk of increased fault permeability. Newell and Martinez [8] examined the caprock integrity in geological storage systems, focusing on how reactivated fractures and faults compromised seal reliability by exploring the effects of the wellbore orientation and injection rate on leakage pathways. Numerical analyses indicated how single and multiple faults influenced CO2 leakage, highlighting the importance of fault hydrological properties in the formation of these pathways.

Determining the optimal injection rate requires an integrative study of spatiotemporal data, such as information on the rock properties, well performance, and fluid data, which requires the use of time-consuming numerical simulations. Previous studies have confirmed the effectiveness of cyclic CO2 injection in enhancing the storage capacity of CO2 [9–11]. Sawada et al. [12] provided guidelines for periodic CO2 injections, including injection pressures, based on the Tomakomai CO2 Capture and Storage demonstration project in Japan. Several studies have provided guidelines for halting CO2 injection, particularly in response to fault activation [13–18]. However, simulating various periodic scenarios, particularly determining the optimal time to stop injection owing to fault activation, requires significant computational resources to explore all possible options and identify the optimal scenarios. Moreover, accurate predictions of fault activation conditions that ensure the safe and effective management of the injection process involve repetitive simulations.

The decision-making process can be mathematically modeled using a discrete-time stochastic process, such as the Markov decision process (MDP), to manage its complexity. The MDP, which enables a formal description of the agent/dynamic environment interactions by assuming that the future state depends only on the current state, aims to determine the action corresponding to each state that maximizes the expected cumulative rewards, which is known as the optimal policy. Although methods such as dynamic programming and tree search can be used for the MDP, reinforcement learning is particularly suitable for high-dimensional and non-stationary environments, such as CO2 geological storage, in which complex system dynamics can evolve over time.

Several studies have addressed decision-making processes for underground geosystem management, such as reservoir history matching, operation strategies, and scheduling, using reinforcement learning [19–27]. Guevara, Patel, and Trivedi [22] implemented a state–action–reward–state–action (SARSA) algorithm to optimize steam injection schedules, aiming to maximize the net present value in steam-assisted gravity drainage, a process that traditionally depends on empirical methods. Based on observations at each time step, their agent selected the steam injection rate from three options: increasing, decreasing, or maintaining the given rate.

Deep reinforcement learning combines traditional reinforcement learning with deep-learning architectures to address complex problems. A deep Q-network (DQN) uses deep neural networks to approximate the action-value function (or “Q-function”), which estimates the value of taking a particular action in a given state. A DQN enables an agent to handle complex environments with high-dimensional inputs and stabilizes training by reusing previous experience [28]. Sun [20] implemented a DQN for sequential decision making in CO2 injection strategies, maximizing a reward function that included tax credits and monitoring costs.

Each reinforcement learning algorithm operates under its own set of assumptions, which can introduce limitations such as sampling inefficiency and applicability to only a limited set of actions. Previous studies focusing on optimal operational strategies for geosystems [20, 22, 24–26] have been based on predefined discrete actions. This type of action space limits the ability of the agent to explore the optimal solution and complicates the learning process by treating actions with only minor differences as distinct.

The Advantage Actor–Critic (A2C) algorithm offers relatively high flexibility by separately approximating the value function and policy, enabling the handling of continuous actions and stochastic policies. A2C enables real-time updates to the policy, thereby facilitating the rapid exploration of optimal solutions. However, this algorithm requires precise problem settings and implementation details, such as the design of inputs and reward functions, because it can introduce additional complexity and increase the risk of learning instability.

This study aims to develop an A2C-based framework that efficiently formulates periodic schedules for CO2 injection into a 3D heterogeneous saline aquifer over an extensive, continuous action space. Periodic injection scenarios are designed to operate until fault activation, without violating the operation constraints. The proposed framework is validated by comparing the training trends across epochs and different reward systems. The effectiveness of the proposed periodic CO2 injection schedule is examined against constant-injection cases, and its applicability under fault uncertainty is discussed.

2. A2C Algorithm

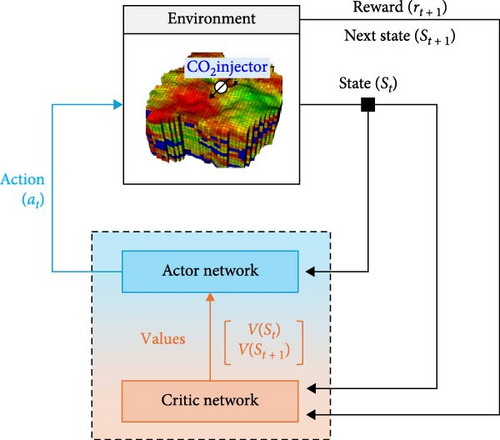

To obtain a policy that maximizes the cumulative rewards across all states, which is a typical goal of reinforcement learning, an agent begins by taking action $a_t$ at time $t$ based on the current state $S_t$ of the environment. Subsequently, the agent receives feedback in the form of reward $r_{t+1}$ and transitions to a new state $S_{t+1}$. Instead of relying on a precise model of the environment, the agent learns the desired behavioral patterns through repeated interactions. The actions of the agent are guided by a policy $\pi(a \mid S)$, which is the probability of selecting action $a$ in a given state $S$. Over time, the agent evaluates the effectiveness of its actions by calculating the value associated with each state–action pair, known as the action-value function $Q(S, a)$. Typically, an agent selects an action that maximizes the action value in a given state. The overall value of the state $V(S)$ is then calculated as the expected value of $Q(S, a)$ under the current policy.
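The relationship between the state value and the action value described here can be written compactly. The expression below is the standard textbook form for a discrete action set, given as a reading aid; the superscript $\pi$ and the sum over actions are notational assumptions, and for a continuous action space the sum becomes an integral over the policy density.

```latex
V^{\pi}(S) = \mathbb{E}_{a \sim \pi(\cdot \mid S)}\left[ Q^{\pi}(S, a) \right] = \sum_{a} \pi(a \mid S)\, Q^{\pi}(S, a)
```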

The A2C algorithm is a model-free reinforcement learning method that directly learns both policy and value functions through interactions with the environment, without requiring the construction or utilization of complex dynamic models. A2C overcomes the limitations of conventional policy-based algorithms, which typically struggle with long or indefinite episodes, by enabling policy updates based on temporal-difference predictions at each time step. Figure 1 shows the framework of the A2C algorithm comprising two neural networks: the actor network (policy network), which represents the policy, and the critic network (value network), which evaluates the value function. The interaction process between these two networks can be explained in five steps. First, the actor network selects an action based on the state information of the environment. Second, the agent executes the action in the environment, advances by one time step, and receives the reward along with the next state information. Third, the critic network estimates the values of both the current and next states. Fourth, the advantages and loss functions are calculated and used to update the networks. Finally, the entire process (steps 1–4) is repeated.
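Steps three and four can be illustrated with a minimal sketch for a single transition. It assumes the common one-step temporal-difference advantage, a Gaussian policy, and a squared-error critic loss; the function names and the value of `gamma` used in any call are hypothetical and are not taken from the paper's equations.

```python
import math


def td_advantage(reward, value_s, value_next, gamma, done=False):
    """One-step TD advantage: r_{t+1} + gamma * V(S_{t+1}) - V(S_t)."""
    bootstrap = 0.0 if done else gamma * value_next  # no bootstrap past a terminal state
    return reward + bootstrap - value_s


def gaussian_log_prob(action, mu, sigma):
    """Log-density of `action` under the normal distribution N(mu, sigma^2)."""
    z = (action - mu) / sigma
    return -0.5 * z * z - math.log(sigma) - 0.5 * math.log(2.0 * math.pi)


def a2c_losses(reward, value_s, value_next, action, mu, sigma, gamma, done=False):
    """Return (advantage, actor_loss, critic_loss) for a single transition."""
    advantage = td_advantage(reward, value_s, value_next, gamma, done)
    # Policy-gradient loss: the advantage acts as a constant weight on the log-probability.
    actor_loss = -gaussian_log_prob(action, mu, sigma) * advantage
    # Value loss: squared TD error.
    critic_loss = advantage ** 2
    return advantage, actor_loss, critic_loss
```

In a full implementation the two losses would be differentiated with respect to the actor and critic network weights by an automatic-differentiation library; the sketch only shows how the scalar quantities are formed.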

The hyperparameters in the training process of the A2C algorithm include the weights for both the actor and critic network loss functions, the discount rate γ, and the learning rate η.

3. Design of Injection Schedules With A2C

This section outlines the strategies proposed for deriving periodic injection schedules based on operational constraints for safe CO2 geological storage in saline aquifers considering the geological model and operational conditions, such as fault pressure constraints. Details regarding the settings and criteria for the A2C algorithm components, including the environment, state, action, reward system, and neural networks are provided in this section.

3.1. Geological CO2 Storage Modeling

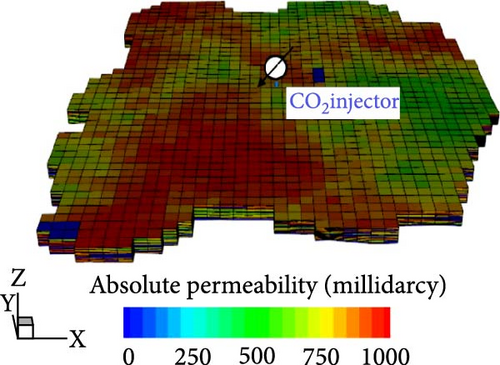

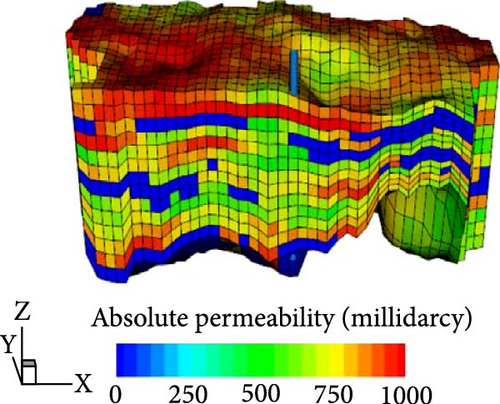

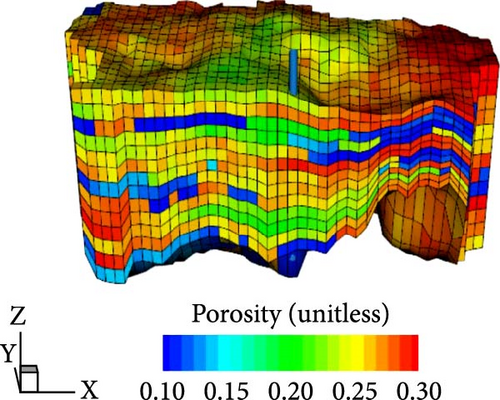

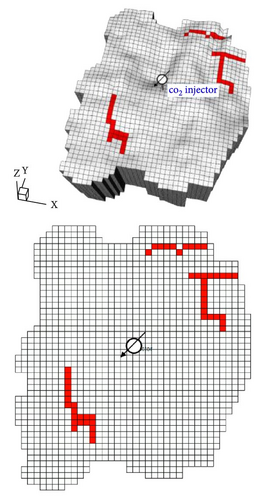

A 3D heterogeneous saline aquifer was generated using geostatistical property modeling (Figure 2); the porosity, absolute permeability, and shale volume of the system are listed in Table 1. The target aquifer for geological CO2 storage was slightly confined, with a thickness of ~250 m, located between the true vertical depths of 840 and 1090 m. The reference pressure at the top of the aquifer (i.e., the base of the overlying impermeable caprock) was 9000 kPa at 840 m. The aquifer, with dimensions (x, y, z) of 6310 m × 7076 m × 250 m, consisted of 22,230 unstructured grid blocks (38 × 45 × 13) and showed an average horizontal permeability of 630 millidarcies (md), an average porosity of 0.235, and a Dykstra–Parsons permeability-variation coefficient of 0.855, indicating high spatial heterogeneity. Moreover, the aquifer contained interbedded shale layers that facilitated trapping at various locations.

| Parameter | Value | Unit |
|---|---|---|
| Initial pressure (reference pressure) | 9000 (at 840 m) | kPa |
| Average porosity | 0.235 | - |
| Average horizontal permeability | 630 | md |
| Average vertical permeability | 315 | md |
| Shale volume ratio | 20 | % |
| Temperature | 49.875 | °C |
| Salinity | 10,000 | ppm |
| Dykstra–Parsons coefficient | 0.855 | - |
| Maximum injection rate | 400,000 | m3/day |
| Upper limit for BHP | 12,000 | kPa |
| Pressure to activate a fault | 11,000 | kPa |

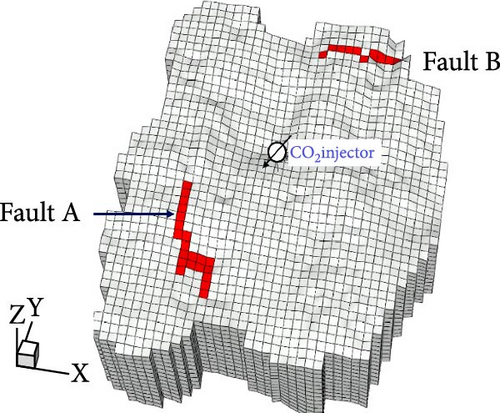

A single CO2 injection well was installed at the center of the aquifer with a maximum daily injection rate of 400,000 m3/day under surface conditions and a maximum allowable bottomhole pressure (BHP) of 12,000 kPa. For containment analysis, two faults (A and B) were positioned near the boundaries with an assumed activation pressure of 11,000 kPa (Figure 2d). In the proposed model, CO2 injection was halted when the pressure at either fault reached the activation pressure, while the BHP was maintained below the maximum allowable value of 12,000 kPa. This constraint was designed to support long-term operational strategies by mitigating leakage risks associated with fault activation. With the exception of the fault-activation constraint, the analysis neglected geomechanical features, such as fault deformation, changes in the pore structure, and stress variations.

Designing a periodic CO2 injection schedule involves formulating a strategy for CO2 injection at 4-month intervals to maximize the cumulative injection volume over a 16-year period without triggering fault activation. Accordingly, 48 injection rates (three 4-month control periods per year over 16 years) were assigned, one for each control period.

3.2. Design of A2C

The A2C algorithm uses numerical simulations of a saline aquifer geological model to represent the environmental dynamics of the system. Key observations, such as the BHP and fault pressure, are used to define the state space; the state is represented as a tensor of stacked past observations. Actions are determined within a continuous space guided by the parameters of a normal distribution generated by the actor network. Here, the reward functions were designed to maximize the CO2 injection amount while controlling the fault pressure. The training process used batch learning with specific configurations to optimize the network performance.

3.2.1. Environment

The dynamics of fluid flow in porous media are typically governed by partial differential equations (PDEs) that express the mass and energy balance in each grid block. This study used a model-free reinforcement learning approach that treats the PDEs as a black box: the agent interacts with them at each time step but does not attempt to learn them. A compositional reservoir simulator solved these PDEs at each time step [30]. Although a surrogate model could be trained to approximate the state transitions of the environment, enabling relatively rapid predictions and frequent interactions with the agent, this study did not adopt this approach. Because such surrogate models are trained on data derived from PDE solutions, they can introduce prediction errors that tend to accumulate, particularly in high-dimensional and heterogeneous geological models, thereby hindering optimal outcomes in long-term operations involving continuous actions. To ensure accuracy in such complex settings, this study relied on direct interactions with the PDE-based simulator rather than a surrogate model.

3.2.2. States

In Equation (6), $\mathrm{BHP}_t$ represents the BHP at time step $t$, while $\bar{P}_{A,t}$ and $\bar{P}_{B,t}$ denote the average pressure at Faults A and B, respectively, at time step $t$. Each variable was normalized between 0 and 1 based on a BHP upper limit of 12,000 kPa and an average fault pressure threshold of 11,000 kPa (Table 1). Elements representing changes were calculated by subtracting the previous observations from the current observations: $\Delta\bar{P}_{A,t}$ and $\Delta\bar{P}_{B,t}$ represent changes in the average pressure for Faults A and B, respectively, while $\Delta q_t$ refers to the change in the normalized daily injection rate. The term $t/t_{\mathrm{target}}$ indicates the temporal position of the current injection point with respect to the target control period (i.e., $t_{\mathrm{target}} = 48$).
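A state vector consistent with the seven quantities described in this paragraph could be written as follows. The ordering of the components and the overbar notation for the average fault pressures are assumptions and may differ from the original Equation (6).

```latex
S_t = \left[ \mathrm{BHP}_t,\; \bar{P}_{A,t},\; \bar{P}_{B,t},\; \Delta\bar{P}_{A,t},\; \Delta\bar{P}_{B,t},\; \Delta q_t,\; \frac{t}{t_{\mathrm{target}}} \right]
```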

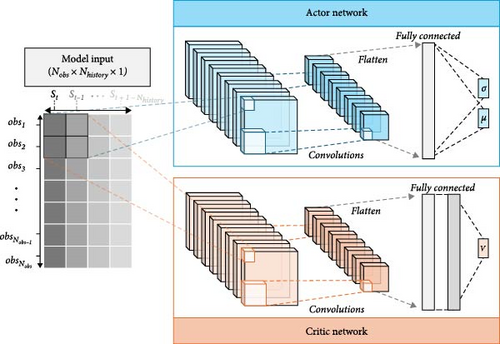

A sequence of past information leading to the current state can enable an agent to understand the dynamic changes in the environment [33]. Here, multiple states from different time steps were treated as history; a total of $N_{\mathrm{history}}$ states were stacked to form the input data for the neural network. The state vector $S_t$ defined in Equation (6) was combined with the three previous state vectors, $S_{t-1}$, $S_{t-2}$, and $S_{t-3}$, to form a matrix of size (7, 4) that was used as input data for the actor and critic neural networks. With time, each new state vector sequentially replaced the oldest state vector in the input data; $N_{\mathrm{history}}$ was set to four.
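The construction of the stacked input can be sketched as below. The sketch assumes normalization by simple division by the limits in Table 1 and by the maximum injection rate, zero changes at the first step, and padding of the initial history with copies of the first state; the names `observe` and `StateHistory` and the component ordering are hypothetical.

```python
from collections import deque

BHP_LIMIT_KPA = 12_000.0    # upper limit for BHP (Table 1)
FAULT_LIMIT_KPA = 11_000.0  # fault activation pressure (Table 1)
Q_MAX_M3_DAY = 400_000.0    # maximum injection rate (Table 1)
T_TARGET = 48               # number of 4-month control periods in 16 years
N_HISTORY = 4               # number of stacked state vectors


def observe(bhp_kpa, p_fault_a_kpa, p_fault_b_kpa, q_m3_day, t, prev_levels=None):
    """Return (7-element state vector, normalized levels to reuse at the next step)."""
    levels = (
        bhp_kpa / BHP_LIMIT_KPA,
        p_fault_a_kpa / FAULT_LIMIT_KPA,
        p_fault_b_kpa / FAULT_LIMIT_KPA,
        q_m3_day / Q_MAX_M3_DAY,
    )
    if prev_levels is None:
        prev_levels = levels  # assumption: all changes are zero at the first step
    state = [
        levels[0], levels[1], levels[2],
        levels[1] - prev_levels[1],  # change in average pressure, Fault A
        levels[2] - prev_levels[2],  # change in average pressure, Fault B
        levels[3] - prev_levels[3],  # change in normalized injection rate
        t / T_TARGET,
    ]
    return state, levels


class StateHistory:
    """Keeps the latest N_HISTORY state vectors; the oldest is dropped on push."""

    def __init__(self, first_state):
        # Assumption: the history is padded with copies of the first state.
        self._frames = deque([list(first_state)] * N_HISTORY, maxlen=N_HISTORY)

    def push(self, state):
        self._frames.append(list(state))

    def as_matrix(self):
        """Rows are the 7 observations; columns are time steps, oldest first."""
        n_obs = len(self._frames[0])
        return [[frame[i] for frame in self._frames] for i in range(n_obs)]
```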

3.2.3. Actions

This study defined a continuous action space that enabled the agent to select actions freely between the minimum and maximum CO2 injection rates. The output of the actor network in the A2C algorithm was taken to be the mean ($\mu$) and standard deviation ($\sigma$) of a normal distribution, $N(\mu, \sigma^2)$; the mean and standard deviation determined the magnitude of the action and the exploration range, respectively. Subsequently, the action of the agent in the next state was determined by sampling from this normal distribution.

In Equation (7), $a$ represents the value sampled from the normal distribution generated by the actor network output, $q_{\mathrm{real\_max}}$ represents the maximum daily injection rate (400,000 m3/day), $q_{\mathrm{real\_min}}$ is the minimum daily injection rate (10 m3/day), and $q_{\mathrm{real}}$ refers to the actual injection rate applied to the environment, which ranges from 10 to 400,000 m3/day.
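One way to map a sampled action to a physical rate is sketched below. It assumes that the sampled value is clipped to [0, 1] and then scaled linearly between the minimum and maximum rates; this mapping is an illustrative assumption and is not necessarily the form of Equation (7). The function names are hypothetical.

```python
import random

Q_MIN_M3_DAY = 10.0        # minimum daily injection rate
Q_MAX_M3_DAY = 400_000.0   # maximum daily injection rate


def to_injection_rate(a):
    """Map a sampled action to a rate in [Q_MIN, Q_MAX] (assumed clip + linear scaling)."""
    a_clipped = min(max(a, 0.0), 1.0)
    return Q_MIN_M3_DAY + a_clipped * (Q_MAX_M3_DAY - Q_MIN_M3_DAY)


def sample_injection_rate(mu, sigma, rng=random):
    """Sample a ~ N(mu, sigma^2) from the actor outputs and convert it to a rate."""
    return to_injection_rate(rng.gauss(mu, sigma))
```

A larger `sigma` widens the range of rates the agent tries around the rate implied by `mu`, which is the exploration role of the standard deviation described above.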

3.2.4. Reward Functions

In Equations (8)–(10), $C_t$ represents the volume of CO2 injected during an individual time step, i.e., within 4 months, and the constants $\alpha$, $\tau$, and $\beta$ scale the rewards to ensure the reliability of the training process. Based on the mean daily injection rate (200,000 m3/day, half the maximum rate listed in Table 1), ~$2.43 \times 10^{7}$ m3 of CO2 was injected over a 4-month period. Therefore, $\alpha$ was set to $2.00 \times 10^{-9}$ to adjust the reward value for $C_t$ to ~0.05. $\tau$ was set to 0.05, aligning its scale with $\alpha \times C_t$, and $\beta$ was set to 1.25 to ensure that the additional reward, which is proportional to the total injected amount after the targeted injection period, was comparable in magnitude to R(B)score. For R(C), if any fault was activated before the 16-year mark, a negative reward of −1 was applied.
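The scaling of the per-step reward can be checked with a short calculation, assuming that a 4-month control period lasts 365.25/3 ≈ 121.75 days (the exact period length used by the simulator is not stated).

```latex
\begin{aligned}
C_t &\approx 200{,}000\ \mathrm{m^3/day} \times \frac{365.25}{3}\ \mathrm{day} \approx 2.43 \times 10^{7}\ \mathrm{m^3} \\
\alpha\, C_t &\approx \left(2.00 \times 10^{-9}\right)\left(2.43 \times 10^{7}\right) \approx 0.049 \approx 0.05
\end{aligned}
```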

3.2.5. Training Process

The A2C architecture with tensor-stacking state vectors ($N_{\mathrm{obs}} = 7$ and $N_{\mathrm{history}} = 4$) is shown in Figure 3. At each time step, new observations replace the oldest column of data in the existing tensor. The weights of both the actor and critic networks were initialized using the He-normal initializer [34]. Each network applied two convolutional operations with 32 kernels (kernel size: 2 × 2; stride: 2 × 2; and activation function: LeakyReLU [35]). Subsequently, the output was processed through a fully connected layer with 24 nodes, followed by a sigmoid activation function for the actor and a linear function for the critic.

Value function estimation is more complex than action selection and requires precise feedback for policy updates; therefore, an additional fully connected layer with 24 nodes was used in the critic network to improve its value estimation accuracy. A total of 15,443 parameters was used in the two neural networks, rendering the structure relatively simple and computationally efficient.

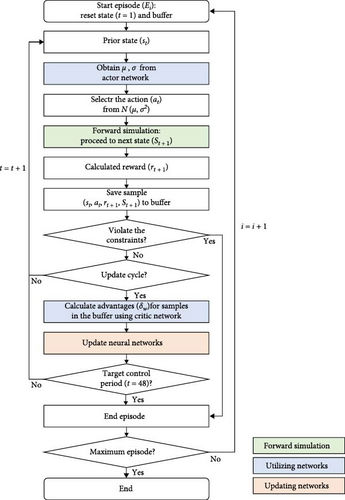

The training workflow of the A2C-based injection scheduling is shown in Figure 4. In this study, a tuple of the state $S_t$, action $q_t$, reward $r_{t+1}$, and next state $S_{t+1}$ at a specific time step $t$ within a given episode $E_i$ is considered to be a single sample. Samples from the same episode were stored in a buffer, and batch training was performed once a predetermined number of samples had been collected; specifically, the networks were updated after every 12 accumulated samples. This approach considers the long-term behavior of the environment, particularly delayed rewards, and addresses the limitations of the baseline A2C, which updates the actor and critic networks at each time step, relying only on the most recent information.
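The buffering described above can be sketched as a small container that releases a batch once 12 samples have accumulated. The class name `EpisodeBuffer` and the `flush` method for leftover samples at the end of an episode are hypothetical; how incomplete batches are handled is not stated and is an assumption here.

```python
BATCH_SIZE = 12  # number of samples accumulated before each network update


class EpisodeBuffer:
    """Collects (state, action, reward, next_state) samples from one episode."""

    def __init__(self, batch_size=BATCH_SIZE):
        self.batch_size = batch_size
        self.samples = []

    def add(self, state, action, reward, next_state):
        """Store one sample; return a full batch and clear the buffer, else None."""
        self.samples.append((state, action, reward, next_state))
        if len(self.samples) < self.batch_size:
            return None
        batch, self.samples = self.samples, []
        return batch

    def flush(self):
        """Return any remaining samples (assumed behavior at the end of an episode)."""
        batch, self.samples = self.samples, []
        return batch
```

With 48 control periods per episode and a batch size of 12, a complete episode would trigger four updates under this scheme, while an episode that ends early on fault activation would leave a partial batch.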

Among the hyperparameters of the training procedure, the loss function of the actor network (Equation (4)) was weighted by 0.1 and added to the loss function of the critic network (Equation (5)). The A2C algorithm was trained by minimizing the combined loss function using the Adam optimizer [36] with a learning rate η (Equation (3)) of 0.0005. The discount rate γ in Equation (2) was set to 0.985.
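With these settings, the combined objective and the effect of the discount rate over one episode can be written out. The sketch assumes the standard one-step advantage, a log-likelihood actor loss, a squared-error critic loss, and a critic weight of 1; the symbols $A_t$, $L_{\mathrm{actor}}$, $L_{\mathrm{critic}}$, and $L_{\mathrm{total}}$ are introduced for illustration and may differ from Equations (2)–(5).

```latex
\begin{aligned}
A_t &= r_{t+1} + \gamma V(S_{t+1}) - V(S_t), \qquad \gamma = 0.985 \\
L_{\mathrm{actor}} &= -\log \pi(a_t \mid S_t)\, A_t, \qquad L_{\mathrm{critic}} = A_t^{2} \\
L_{\mathrm{total}} &= 0.1\, L_{\mathrm{actor}} + L_{\mathrm{critic}} \\
\gamma^{48} &= 0.985^{48} \approx 0.48
\end{aligned}
```

The last line indicates that a reward received 48 control periods ahead is still weighted by roughly 0.48, so end-of-schedule rewards remain visible to the agent early in an episode.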

4. Results and Discussion

The performance and reliability of the proposed framework for developing a secure CO2 injection schedule under operational constraints were validated through designing a suitable reward system and conducting a thorough analysis of the selected injection schedule. Additionally, the applicability of the framework to environments with uncertainty was assessed, and potential directions for future research were explored.

4.1. Validation of the Proposed Framework Under Different Reward Systems

Three distinct cases based on the components of the reward system, as outlined in Section 3.2.4, were considered: Reward A, which is based on the amount of CO2 injected (Equation (8)); Reward B, which adds a scalar reward for extending the control (injection) period (Equation (9)); and Reward C, which incorporates an additional reward based on the attainment of the targeted injection period (Equation (10)). This section discusses the training trends and selected injection schedules to identify the optimal reward system.

4.1.1. Evaluation of the Training Trend of the A2C Algorithm

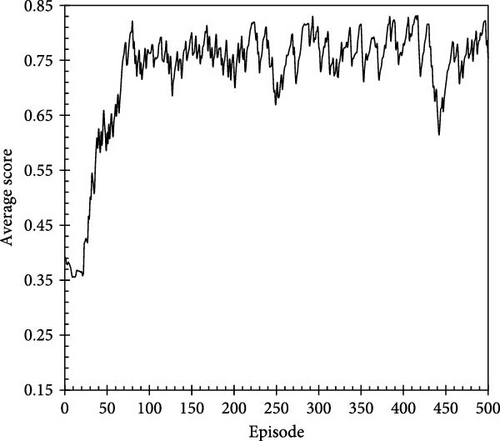

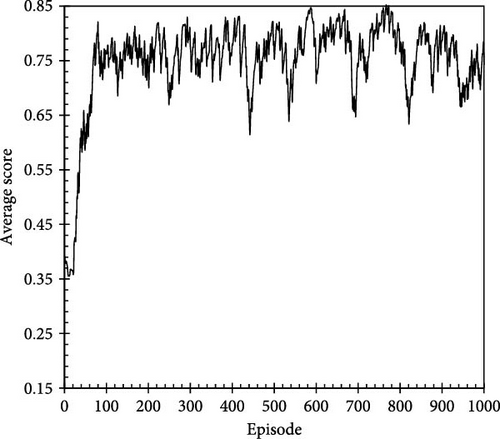

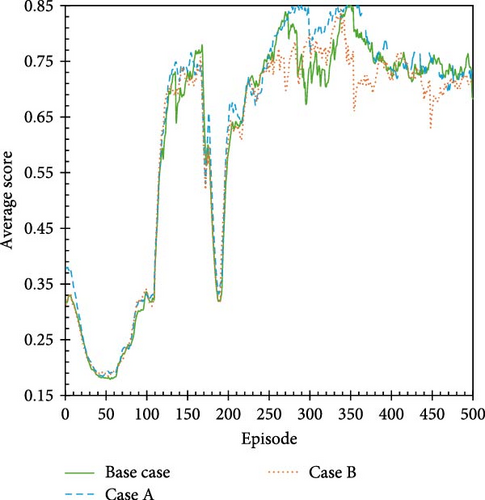

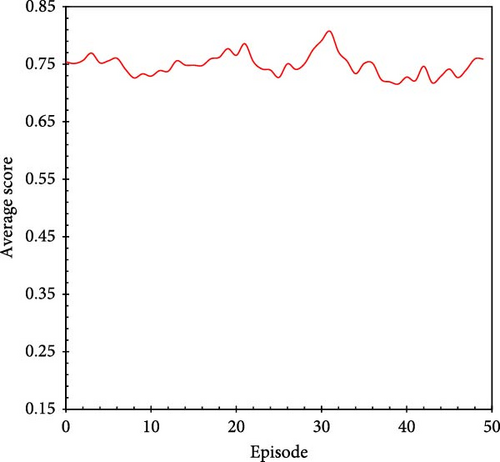

The average score was calculated using an exponentially weighted moving average (EWMA), which assigns a weight of 0.9 to the previous average and 0.1 to the new score. This method gradually reduces the influence of older scores so that the curve reflects current trends. Figure 5a shows the results of training the A2C algorithm with Reward C over 500 episodes, with each average score normalized by the maximum score. As training proceeds, the average score steadily increases, stabilizing within the range of 0.75–0.8 after ~100 episodes, indicating that the agent effectively learns to generate preferred action trajectories within a large continuous action space. Reinforcement learning trains the agent to explore various actions and progressively favor those that yield higher scores. The shape of the average score profile in Figure 5 indicates that the agent learned from repeated interactions with the environment to select higher-reward actions, resulting in a stable training performance [20, 28, 33]. This trend continued even when the training was extended to 1,000 episodes (Figure 5b). The slight oscillation in the average score can be attributed to the stochastic nature of sampling actions from a continuous action space and the variability in delayed rewards specific to each episode under Reward C.
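The smoothing can be sketched in a few lines. The sketch assumes that the average is initialized with the first episode score, which is not stated; the function name `ewma` is hypothetical.

```python
def ewma(scores, weight_prev=0.9):
    """Exponentially weighted moving average of a sequence of episode scores."""
    averaged = []
    avg = None
    for score in scores:
        if avg is None:
            avg = score  # assumption: start from the first score
        else:
            avg = weight_prev * avg + (1.0 - weight_prev) * score
        averaged.append(avg)
    return averaged
```

With a weight of 0.9, a score from 10 episodes earlier contributes with a factor of about 0.9^10 ≈ 0.35 relative to the newest score, which is why isolated high- or low-scoring episodes shift the curve only slightly.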

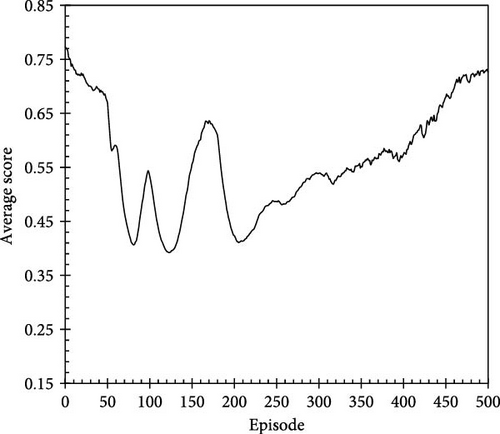

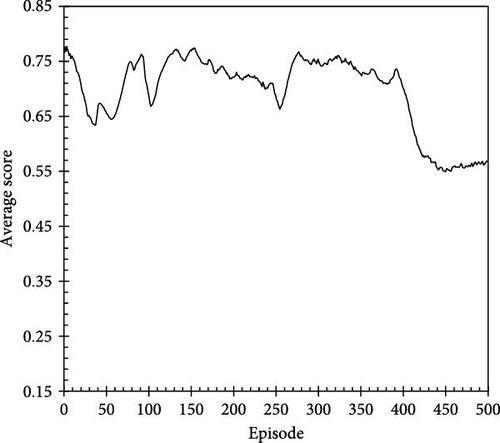

The trends of the average score over 500 training episodes for the other reward systems, i.e., Reward A (Figure 6a) and Reward B (Figure 6b), are shown in Figure 6. To compare the scores across the different reward systems quantitatively, Equations (8) and (9) were used to scale the results for Rewards A and B, using the maximum score attained in Reward C as a reference. Figure 6 indicates that the average score of the agent under Rewards A and B did not stabilize; moreover, the performance did not significantly improve compared with the initial episodes. These results highlight the importance of incorporating elements that reflect delayed responses of the environment into the reward system; reward systems lacking appropriate feedback for action trajectories make it challenging for an agent to explore a globally optimal solution that adheres to operational constraints. Furthermore, after ~100 episodes of training, the average scores for Reward C (Figure 5a) were generally higher than those observed for Rewards A and B, confirming the higher efficacy of the action trajectories or injection scenarios under Reward C in terms of both the injected volume and duration.

4.1.2. Comparative Analysis of Injection Schedules

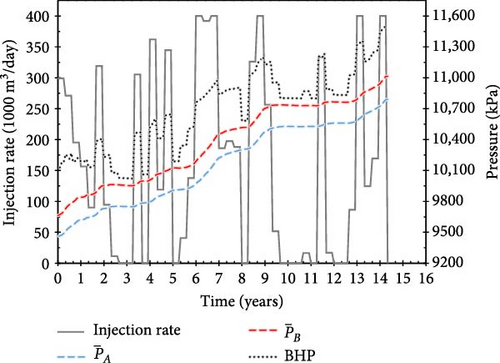

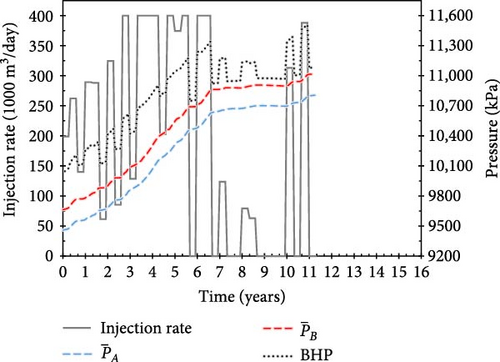

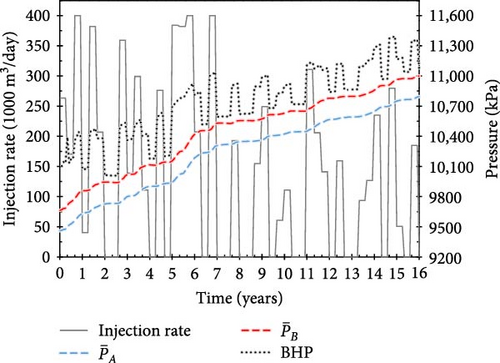

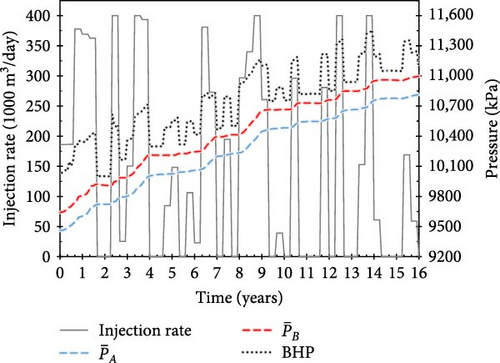

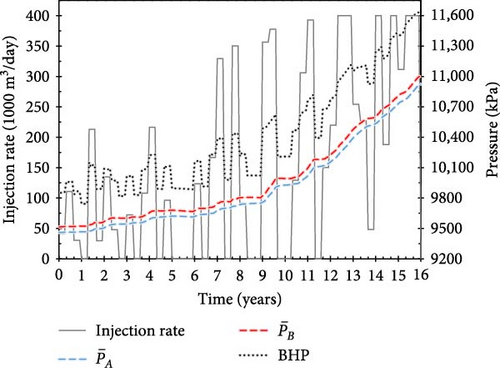

This section compares the results of each reward system by examining the action trajectories and several injection schedules, highlighting the superior performance of Reward C. Figure 7 shows the CO2 injection rate (black solid lines) and monitoring parameters over time, specifically BHP (black dotted lines) and the average pressure of Faults A (blue dashed lines) and B (red dashed lines), for episodes with the maximum score during training under Rewards A and B. The injection schedules under these two reward systems injected 854 million cubic meters (MM m3) (Reward A) and 887 MM m3 (Reward B) of CO2. However, in both cases, the pressure in Fault B exceeded the critical value of 11,000 kPa, after 14 years and 4 months for Reward A and 14 years and 5 months for Reward B (Figure 7a,b). These results indicate that Rewards A and B are inadequate for deriving a safe injection schedule over the targeted injection period, consistent with the analysis described in the previous section.

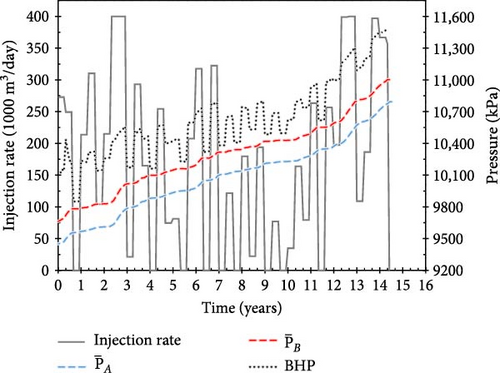

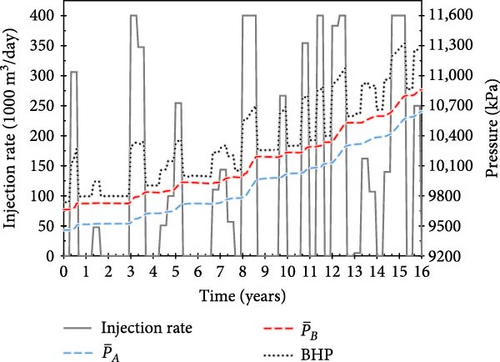

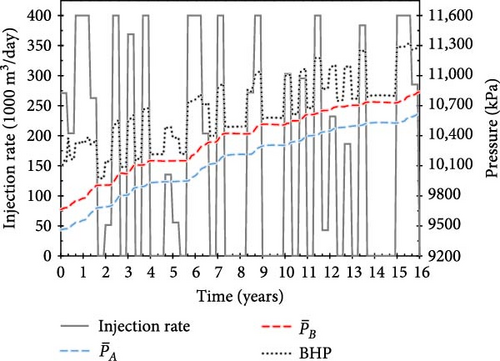

Figures 8 and 9 show the CO2 injection rates, BHPs, and average fault pressures over time for several injection scenarios developed during the training process based on Reward C (as shown in Figure 5a). The injection scenarios from the early stages of training failed to achieve the target injection period, resulting in penalties as delayed rewards. Specifically, in the 2nd episode, 742 MM m3 of CO2 was injected over 29 control periods (Figure 8a); in the 8th episode, 696 MM m3 was injected over 29 control periods (Figure 8b); and in the 49th episode, 788 MM m3 was injected over 34 control periods (Figure 8c). Because the pressure at Fault B exceeded the critical value during these control periods, the scores for these three scenarios were 1.84, 1.79, and 2.16, respectively.

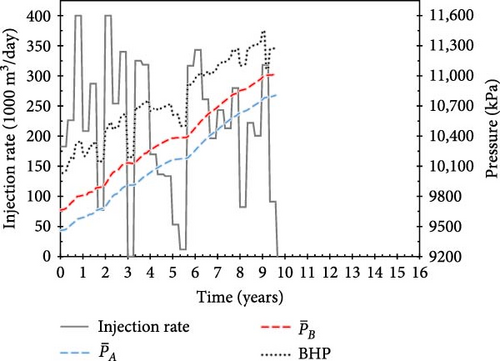

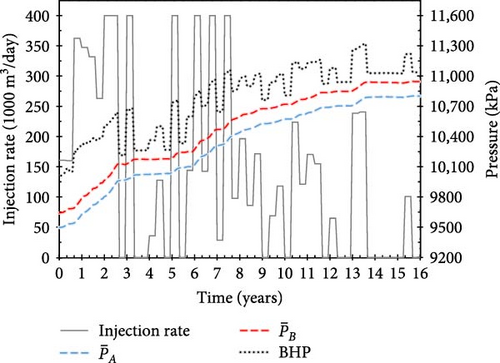

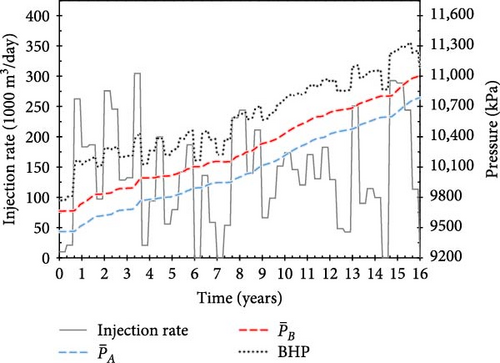

With training, the agent develops injection scenarios that maintain the average fault pressure below the critical value, thereby earning additional positive rewards proportional to the total amount of CO2 injected (Equation (10)). Figure 9a–c shows examples of operational scenarios that achieved the target injection period; these scenarios scored 5.46, 6.41, and 5.95 and injected 717, 959, and 830 MM m3 of CO2, respectively, over 16 years.

Notably, the injection schedule gradually evolved from a strategy with a tendency to continuously inject large amounts of CO2 (Figure 8) to one with minimal injection rate (10 m3/day) periods (Figure 9). Although the amount of CO2 injected during “buffer periods” (with minimal injection rates) is minimal, their strategic placement between injection schedules helps mitigate increasing pore-pressure trends and pressure propagation in the saline aquifer caused by previous actions. Therefore, the proposed approach enables the fault pressure to be maintained below the critical value. Figure 9b shows the scenario with the highest score across all reward systems, which injects the largest amount of CO2 (959 MM m3) over a 16-year injection period.

Table 2 shows that Reward C leads to the most reliable and meaningful results among all the tested reward systems. When a reward system includes both the reward associated with each control period and feedback on the trajectory of actions, the A2C algorithm shows stable learning trends and effectively derives an injection scenario that maximizes CO2 injection within operational constraints. The baseline A2C algorithm, which updates the neural network at each time step using only the BHP and average fault pressure as the state of the environment under Reward C, is detailed in the Appendix.

| Reward system | Injectable period | Cumulative injected volume (MM m3) | Fault B pressure at the last monitoring time (kPa) |
|---|---|---|---|
| Reward A | 14 years and 4 months | 854 | 11,014 |
| Reward B | 14 years and 5 months | 887 | 11,002 |
| Reward C | 16 years | 959 | 10,837 |

4.2. Effectiveness of the CO2 Injection Schedule Proposed by the A2C Algorithm

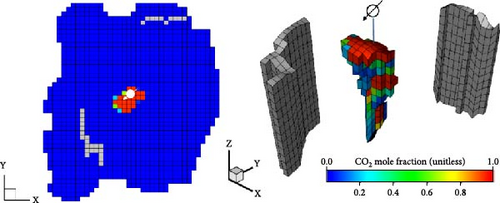

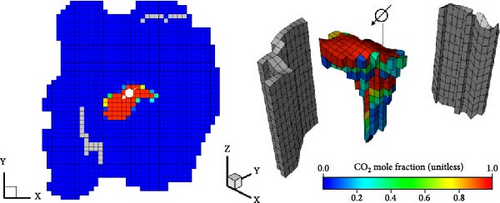

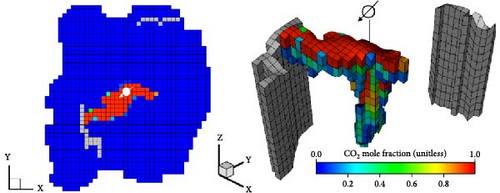

The optimal periodic CO2 injection strategy proposed by A2C (Figure 9b) maximizes the cumulative CO2 amount over 16 years without activating any fault. If the CO2 plume (mobile CO2 migrating through pores) reaches a fault after activation, the risk of leakage may increase. Figure 10 shows the gas mole fraction of the injected CO2, illustrating the CO2 plume 8, 16, and 50 years after the start of injection. Upon reaching the impermeable caprock, the injected CO2 spreads extensively beneath it, migrating from the injection well to Fault A with relatively high permeability. Figure 10b,c indicates that the injected CO2 does not reach Fault A by the end of the injection schedule (16 years); however, the mobile plume may reach Fault A at around the 50th year. As Fault A remains inactive, CO2 storage is maintained without leakage through the fault aperture. Despite uncertainties regarding fault sealing, the simulation results confirm geological containment under these conditions for a minimum period of 50 years.

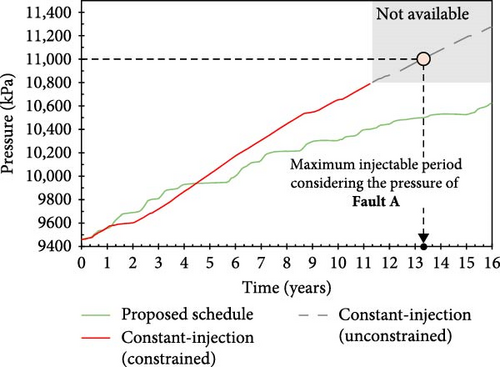

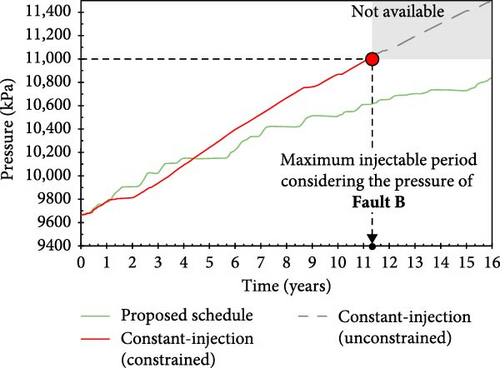

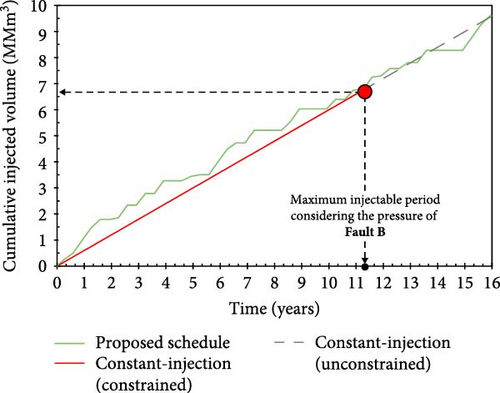

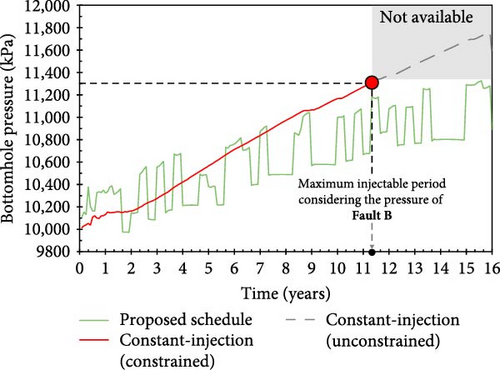

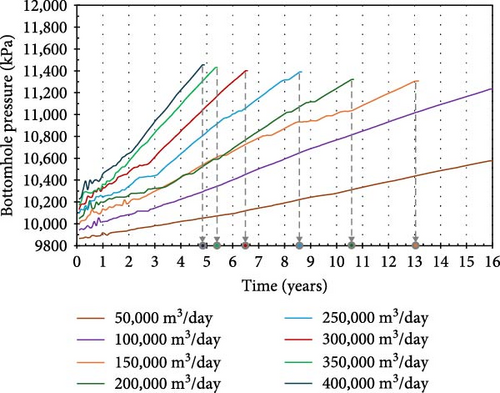

To assess the effectiveness of the proposed periodic schedule, this study compared the cumulative CO2 injection volume and operational period of the proposed schedule with those of a constant-injection-rate scenario. The constant injection rate was estimated to be 164,174 m3/day by dividing the total amount of CO2 injected by the duration of the injection period (16 years), i.e., the calculated value is the average daily rate for the optimal periodic strategy (Figure 9b).
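The link between a periodic schedule, its cumulative volume, and the equivalent constant rate can be sketched as follows. The sketch assumes equal control periods of 365.25/3 days (the exact period length used by the simulator is not stated), and the function names are hypothetical.

```python
DAYS_PER_PERIOD = 365.25 / 3  # assumed length of one 4-month control period
N_PERIODS = 48                # control periods in the 16-year schedule


def cumulative_volume(rates_m3_day, days_per_period=DAYS_PER_PERIOD):
    """Total injected volume (m3) for a list of per-period daily rates."""
    return sum(q * days_per_period for q in rates_m3_day)


def equivalent_constant_rate(rates_m3_day):
    """Constant daily rate injecting the same total volume over the same periods."""
    return sum(rates_m3_day) / len(rates_m3_day)


# A constant schedule at the reported average rate reproduces the reported total
# of about 959 MM m3 to within rounding.
constant_schedule = [164_174.0] * N_PERIODS
total_m3 = cumulative_volume(constant_schedule)
```

Because all control periods are assumed to have equal length, the equivalent constant rate is simply the arithmetic mean of the 48 scheduled rates.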

The pressure increases in the optimal periodic strategy and the constant-injection-rate scenario are compared in Figure 11. The pressure increases relatively rapidly in the constant-injection-rate scenario, activating Fault A within 13.3 years (13 years and 4 months from the start of injection) and Fault B within 11.3 years. Owing to the operational constraint of halting injection when the activation pressure is measured at either fault, the constant-injection case ends at 11.3 years. Within this period, the cumulative injected volume (674 MM m3) is 70.3% of the optimal volume (959 MM m3) (Figure 12). This result confirms the high efficacy of the proposed optimal schedule, which extends the injection period by 4.7 years and thereby adds a further ~30% of the optimal volume (285 MM m3) to the cumulative injection.

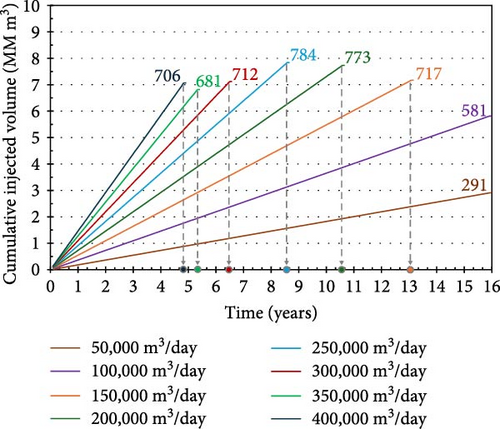

Figure 13 and Table 3 show the injection termination times and cumulative injection volumes of various constant-injection-rate schedules. As the daily injection rate increases, the pressure in the aquifer increases more rapidly, and the time required to reach the fault activation pressure is reduced. Up to 250,000 m3/day, the higher rate more than offsets the shorter injectable period; beyond that rate, the shortened period lowers the cumulative injection volume. For instance, at an injection rate of 400,000 m3/day, the cumulative volume over 4 years and 11 months is ~706 MM m3. In comparison, the largest cumulative injection volume achieved with a constant rate is 784 MM m3 (81.8% of the optimal injectable volume of 959 MM m3), obtained over 8.7 years at a rate of 250,000 m3/day. Therefore, the A2C-based optimal periodic schedule proposed in this study outperforms the best constant-rate strategy, yielding a 22.3% greater injectable CO2 volume while maintaining fault stability. Compared with constant-rate scenarios, the A2C-based periodic injection design is advantageous in terms of both cumulative injection volume and injection duration, while ensuring containment.

| Constant injection rate (m3/day) | Injectable period | Cumulative injectable volume until activating any fault (MM m3) |
|---|---|---|
| 50,000 | 16 years | 291 |
| 100,000 | 16 years | 581 |
| 150,000 | 13 years and 2 months | 717 |
| 200,000 | 10 years and 8 months | 773 |
| 250,000 | 8 years and 8 months | 784 |
| 300,000 | 6 years and 7 months | 712 |
| 350,000 | 5 years and 5 months | 681 |
| 400,000 | 4 years and 11 months | 706 |
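The comparison drawn from the tabulated constant-rate results can be reproduced with simple bookkeeping. The sketch below hard-codes the reported volumes, picks the best constant-rate case, and expresses the gap to the 959 MM m3 periodic schedule in two ways: as a share of the periodic optimum and as a relative gain of the periodic schedule. Variable names are illustrative.

```python
# Reported constant-rate results: rate (m3/day) -> cumulative volume (MM m3).
constant_rate_volumes = {
    50_000: 291,
    100_000: 581,
    150_000: 717,
    200_000: 773,
    250_000: 784,
    300_000: 712,
    350_000: 681,
    400_000: 706,
}
OPTIMAL_VOLUME = 959  # MM m3, A2C-based periodic schedule

# Best constant-rate case by cumulative volume.
best_rate = max(constant_rate_volumes, key=constant_rate_volumes.get)
best_volume = constant_rate_volumes[best_rate]

share_of_optimum = best_volume / OPTIMAL_VOLUME      # constant relative to periodic
relative_gain = OPTIMAL_VOLUME / best_volume - 1.0   # periodic relative to constant

print(f"Best constant rate: {best_rate:,} m3/day -> {best_volume} MM m3")
print(f"Share of periodic optimum: {share_of_optimum:.1%}")
print(f"Gain of periodic over best constant: {relative_gain:.1%}")
```

The two percentages are not complements of each other because they use different baselines, which is worth keeping in mind when quoting either figure.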

4.3. Applicability Under Geological Uncertainty

Despite the precise modeling of the environmental dynamics in CO2 injection projects through computational simulations, accounting for all uncertainties that arise in on-site field operations remains challenging. For maximum safety, incorporating uncertainties due to additional weak zones or faults that might be activated by injection activities into injection scheduling is critical. This section evaluates the practicability of the reinforcement learning-based framework developed in this study. Training the A2C algorithm under various environments enables the proposed framework to function accurately in uncertain cases that differ from those encountered in the training process.

This framework enables the probabilistic determination of the injection rate at each time step within a continuous action space, permitting the generation of additional operational scenarios using the trained algorithm. Figure 14 shows the average score trends for 100 testing episodes, which are additional scenarios derived from the A2C algorithm trained in Section 4.1. The scaled average score ranges within 0.75–0.80, consistent with the results shown in Figure 5. The operational scenarios during the testing episodes show an average injection period of 15 years and 3 months, with an average injected CO2 volume of 724 MM m3 and standard deviation of 109 MM m3. The P10, P50, and P90 scenarios—based on total injection volumes—attain the targeted injection period, allowing for the injection of 843, 733, and 583 MM m3 of CO2, respectively. These results demonstrate that the developed framework can reliably suggest additional candidate scenarios and exhibits high potential to facilitate the selection of an appropriate injection schedule based on the total amount of CO2 to be injected.
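The P10, P50, and P90 values quoted above decrease in that order, which suggests the exceedance convention common in reservoir engineering (P10 is the volume exceeded by 10% of scenarios). The sketch below shows how such values could be extracted under that assumed convention. The episode volumes are synthetic stand-ins drawn from a normal distribution with the reported mean and standard deviation; they are not the simulation results.

```python
import numpy as np

# Hypothetical stand-in for the 100 test-episode volumes (MM m3); the real
# values come from the reservoir simulator and are not reproduced here.
rng = np.random.default_rng(seed=0)
volumes = rng.normal(loc=724.0, scale=109.0, size=100)


def exceedance_percentiles(samples):
    """Return (P10, P50, P90) under the exceedance convention, where P10 is
    the value exceeded by 10% of the samples (i.e., the 90th percentile)."""
    p10, p50, p90 = np.percentile(samples, [90, 50, 10])
    return p10, p50, p90


p10, p50, p90 = exceedance_percentiles(volumes)
print(f"P10={p10:.0f}, P50={p50:.0f}, P90={p90:.0f} MM m3")
```

Under the opposite (cumulative) convention, the P10 and P90 labels would simply swap.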

Although the stochastic nature of sampling actions from a continuous space enables the generation of diverse operational scenarios, designing a system for monitoring optimization while considering environmental uncertainty remains a significant challenge. This study examined the robustness of the proposed A2C-based method by testing the training processes for different fault locations (i.e., under environmental uncertainty).
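The stochastic action selection mentioned above can be illustrated with a minimal sketch. It assumes that the actor outputs the mean μ and standard deviation σ of a normal distribution over the normalized rate q, that q is clipped to [0, 1], and that q maps linearly onto the actual rate between qreal_min and qreal_max. The clipping range and the lower bound of 0 m3/day are assumptions; 400,000 m3/day is the maximum daily rate quoted in this paper.

```python
import numpy as np

Q_REAL_MIN = 0.0        # m3/day, assumed lower bound
Q_REAL_MAX = 400_000.0  # m3/day, maximum daily rate quoted in the paper


def sample_injection_rate(mu, sigma, rng):
    """Draw a normalized rate q ~ N(mu, sigma), clip it to [0, 1], and
    rescale it linearly to an actual rate in m3/day."""
    q = float(np.clip(rng.normal(mu, sigma), 0.0, 1.0))
    q_real = Q_REAL_MIN + q * (Q_REAL_MAX - Q_REAL_MIN)
    return q, q_real


rng = np.random.default_rng(seed=42)
# Hypothetical actor outputs (mu, sigma) for a single time step.
for _ in range(3):
    q, q_real = sample_injection_rate(mu=0.4, sigma=0.1, rng=rng)
    print(f"q={q:.3f} -> q_real={q_real:,.0f} m3/day")
```

Repeating the draw with the same μ and σ yields different rates, which is why a single trained policy can generate many candidate schedules.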

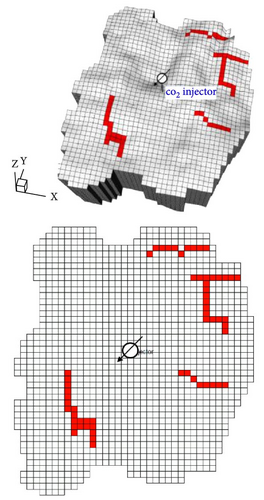

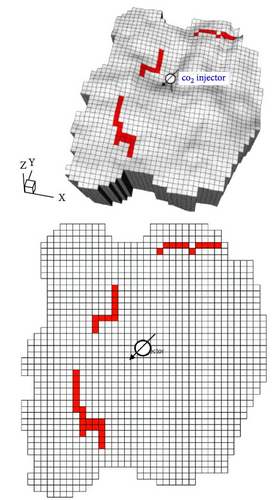

Figure 15 shows the fault locations in the environments used in this study. The geological model described in Section 4.1 (Figure 2d) was used as the Base Case; the new geological models incorporated additional fault grids into the Base Case (Figure 15a–c). Figure 15c shows the geological model used to test the trained agent; compared with the other geological models, this model contains additional fault grids on the opposite side. Owing to high variability in flow-related parameters, such as permeability and porosity, pressure changes in the aquifer are not simply proportional to the distance between the injection well and the fault. Therefore, the additional grids were considered as extensions of existing faults (rather than separate faults). Specifically, the fault grids south of the injection well were labeled as Fault A, whereas those to the north were labeled as Fault B.

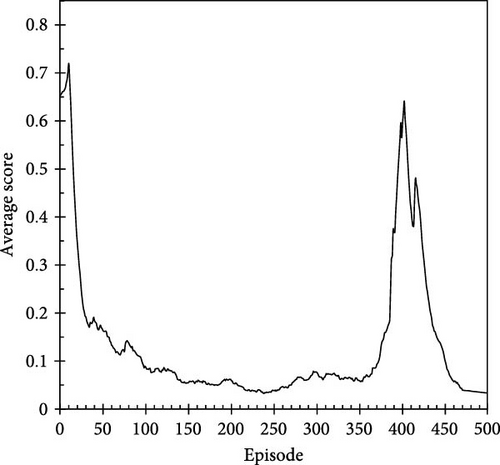

In this case study, the agent was trained by interactions with a different geological model in each episode. The settings for the state, reward system, and neural network architecture are consistent with the settings described in Section 4.1. Figure 16a shows the scaled trend of the average score over 1500 episodes, and Figure 16b shows the trends for each geological model during the training process. All the geological models show similar average score trends because the monitoring locations in Cases A and B are based on the positions of existing faults in the Base Case.
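The episode-wise switching between geological models can be sketched as a simple training loop. The version below rotates through the three training models in round-robin order, which is an assumption: the text states only that a different model is used in each episode. The function `run_episode` is a hypothetical placeholder for a simulator rollout and the accompanying A2C updates.

```python
from itertools import cycle

TRAINING_MODELS = ["Base Case", "Case A", "Case B"]  # Case C is held out for testing
N_EPISODES = 1500


def run_episode(model_name: str, episode: int) -> float:
    """Placeholder for one simulator rollout plus A2C updates.

    Returns a dummy score; a real implementation would run the reservoir
    simulator for `model_name` and update the actor and critic networks.
    """
    return 0.0


def train(n_episodes: int = N_EPISODES):
    """Rotate through the training models, one model per episode (assumed
    round-robin order), and collect the score history for each model."""
    scores = {name: [] for name in TRAINING_MODELS}
    for episode, model_name in zip(range(n_episodes), cycle(TRAINING_MODELS)):
        scores[model_name].append(run_episode(model_name, episode))
    return scores


scores = train()
episodes_per_model = {name: len(vals) for name, vals in scores.items()}
print(episodes_per_model)
```

Keeping a separate score history per model is what allows the per-model trends to be plotted alongside the overall average.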

The average score of the A2C algorithm stabilizes at ~0.75 after ~750 training episodes across different geological models (~250 episodes per geological model). Although the number of episodes required for stable learning is slightly higher than that indicated by the results described in Section 4.1, the algorithm is trained to establish schedules that enable adequate CO2 injection within the targeted injection period across various environments.

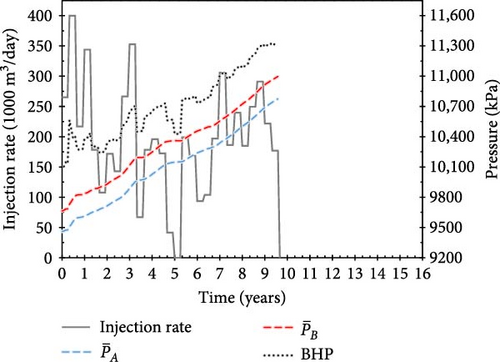

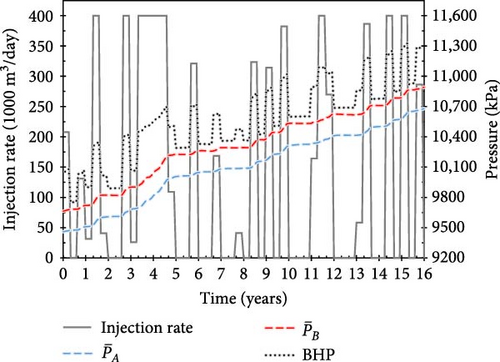

Figure 17 shows the injection scenarios with the highest scores for each geological model during the training process. The training process, which accounts for uncertainty, effectively derives cyclic injection schedules tailored for each geological model. The estimated cumulative CO2 injection volumes for the Base Case, Case A, and Case B are 841, 874, and 841 MM m3, respectively. Notably, in this case study, the injection volume for the Base Case is ~87.7% of the volume evaluated in Section 4.1; this reduction possibly occurs because the algorithm used in this case study is trained across geological models that include areas closer to the injection well, leading to a more conservative policy than that specific to a single geological model.

Figure 18 shows the trend of the scaled average score over 50 episodes while evaluating the trained algorithm in the test environment (Figure 15c). The average score stabilizes at ~0.75, consistent with the results shown in Figure 14, indicating that the developed framework can be effectively applied to geological models that are not used during training. In these test episodes, the average injection period, average injection volume, and standard deviation are ~15 years and 11 months, 638, and 194 MM m3, respectively. According to total injection volume calculations, the P10, P50, and P90 scenarios enable the injection of 882, 648, and 363 MM m3 of CO2, respectively, within a 16-year injection period. Figure 19 shows the scenario with the highest score among the test episodes, confirming the maximum safe CO2 injection volume for the Case C geological model to be 914 MM m3.

4.4. Challenges for Future Research

This study developed a framework to explore optimal operating scenarios for a single CO2 injection well under geological uncertainty. In addition to the work described in Section 4.1 and the Appendix, the main challenge was to define an appropriate criterion for the A2C model, including the selection of suitable hyperparameters. Specifically, it may be possible to enhance the analyses related to the definitions of the state (or observation), reward system, scale factor, and weight distribution of the neural networks. These challenges are inherent to reinforcement learning and are further amplified in complex geosystems, where evaluating agent interactions requires significant computational resources.

Future research should focus on extending this study to larger-scale problems, such as multi-well operations, and to other geosystems, such as depleted gas reservoirs. It should also aim to integrate transport, injection, and storage facilities within a comprehensive system. This would require detailed investigations of various operational constraints and monitoring parameters, including geomechanics, thermal parameters, caprock integrity, reservoir fracture pressure, well stability, and flow assurance. Because the A2C algorithm is model-free, real-time sensor data from distributed temperature sensing, permanent downhole pressure and temperature gauges, and other sensors could be incorporated into the reinforcement learning framework to improve its effectiveness. Integrating extensive raw sensor signals using supervised learning (to convert raw data into processed forms) or unsupervised learning (to extract low-dimensional latent features) may provide valuable insights.

To facilitate rapid environmental response predictions, future studies should implement advanced surrogate models. Recurrent neural networks or convolutional long short-term memory networks can estimate sequential transitions in the environment [27], although their reliability depends on the size of the available dataset. Alternatively, physics-informed neural networks or deep operator networks, which are mesh-free and rely on physics-based data to solve PDEs of the environment, could be viable options [37–39].

Exploring alternative reinforcement learning algorithms could address challenges related to sample efficiency and the dependency among training samples. For example, the soft actor–critic algorithm, an off-policy approach, could improve sample efficiency by reusing past experiences [40]. The asynchronous advantage actor–critic algorithm, which employs multiple agents simultaneously, can reduce dependency among training samples and help mitigate optimization challenges in high-dimensional geosystems [41].

In summary, a comprehensive analysis of geological settings tailored to target storage sites, along with a thorough consideration of monitoring and uncertainty parameters, is crucial. Developing solutions to improve the scalability and efficiency of reinforcement learning algorithms remains a priority. Advanced methodologies, such as integrating large language models (LLMs) [42] without human supervision—particularly for designing reward systems—could offer significant advancements.

5. Conclusions

This study developed a deep reinforcement learning-based framework utilizing the A2C algorithm to address the challenges of CO2 geological storage under operational constraints, such as fault activation. By appropriately configuring the reward system, state vector, and training process, the framework can effectively derive injection scenarios consisting of injection rates sampled from a continuous action space. The proposed framework shows stable learning trends with a reward system that considers the cumulative injectable CO2 amount, injection duration, and feedback from the trajectory of actions.

The injection scenario proposed in this study involves significant improvements in the cumulative injectable amount of CO2 over a 16-year period, injecting up to 959 MM m3 of CO2, which is 22.3% higher than the maximum CO2 volume injected in constant-injection scenarios. The applicability of the proposed framework under geological uncertainties was confirmed through reliable periodic injection scenarios tailored to different fault locations. The framework effectively adapted and maintained stable performance, even under unique environments not encountered during training. Therefore, the proposed framework for optimizing CO2 injection strategies shows robustness, balancing the CO2 storage efficiency with operational constraints and environmental uncertainties.

The proposed method is expected to expedite the development of efficient operational strategies for CO2 geological storage projects. Moreover, the method exhibits high potential to address additional monitoring parameters, such as caprock integrity, and to optimize complex scenarios involving surface facilities or multi-well operations.

Nomenclature

- A2C: Advantage actor–critic
- BHP: Bottomhole pressure
- CO2: Carbon dioxide
- DQN: Deep Q-network
- EWMA: Exponentially weighted moving average
- kPa: Kilopascal
- LLMs: Large language models
- md: Millidarcy
- MDP: Markov decision process
- MM: Million
- PDEs: Partial differential equations
- TD: Temporal-difference
- a: Action
- Ct: Volume of CO2 injected during a time step
- E: Episode
- Nobs: Number of observations
- Nhistory: Number of historical state data
- : Average pressure at Fault A
- : Average pressure at Fault B
- q: Normalized injection rate of CO2
- Q: Action-value function
- qreal: Actual injection rate of CO2
- qreal_max, qreal_min: Maximum and minimum injection rates of CO2
- r: Reward
- R: Reward system
- Rscore: Total rewards accumulated over an episode
- S: State
- t: Time step
- tmax: Time at the stopping criterion in an episode
- ttarget: Target control period
- V: Value function
- α, β: Scale factor
- γ: Discount rate
- δω: Advantage function in terms of temporal-difference error
- η: Learning rate
- θ: Weight parameter of actor network
- μ: Mean of normal distribution
- π: Policy
- σ: Standard deviation of normal distribution
- τ: Scalar reward for duration of injection
- ω: Weight parameter of critic network

Abbreviations

- A2C: Advantage actor–critic
- MDP: Markov decision process
- DQN: Deep Q-network
- TD: Temporal-difference
- BHP: Bottomhole pressure
- PDEs: Partial differential equations
- EWMA: Exponentially weighted moving average

Conflicts of Interest

The authors declare that this study was conducted in the absence of any commercial or financial relationships that could be construed as potential conflicts of interest.

Author Contributions

Suryeom Jo: Conceptualization, methodology, software, validation, investigation, formal analysis, visualization, writing – original draft preparation, and writing – review and editing. Tea-Woo Kim: Validation, investigation, visualization, writing – original draft preparation, and writing – review and editing. Changhyup Park: Conceptualization, validation, investigation, formal analysis, visualization, writing – original draft preparation, and writing – review and editing. Byungin Choi: Methodology and software.

Funding

This research was supported by the Ministry of Trade, Industry, and Energy (MOTIE) (No. 20212010200020) and Korea Institute of Geoscience and Mineral Resources (KIGAM) (GP2025-017; GP2025-021), Korea.

Appendix A: Training Results for the Baseline A2C Algorithm

This appendix includes the results of training a baseline or “vanilla” A2C algorithm, which updates the neural network at each time step using only basic observational data. The environmental settings and reward system are consistent with those described in Section 4.1.

Figure A1 shows the trend of scores during the training process over 500 episodes under the conditions described above. Unlike previous cases, in which interactions with the environment gradually led to stable rewards (Figures 5 and 16), the baseline model did not maintain high scores. This indicates that the baseline model struggled to discover an optimal policy and adapt to the environment. Figure A2 shows the injection scenario that showed the highest score during the training of the baseline model. Notably, the maximum daily injection rate of 400,000 m3/day was not used in any period, and the cumulative CO2 volume was calculated to be 810 MM m3, which is ~84.5% of the maximum injection volume evaluated in Section 4.1.

Open Research

Data Availability Statement

Data supporting the findings of this study are available upon reasonable request.