Research on University Teachers’ Perceptions of Gen-AI From the Perspective of Actor-Network Theory

Abstract

Employing the lens of Actor-Network Theory (ANT), this paper explores university educators’ perceptions of Gen-AI and its potential impact on education through a qualitative research approach. It focuses on how educators perceive generative AI technologies and how these technologies influence their teaching practices and role identification. The study reveals that multiple factors shape teachers’ acceptance and utilization of AI, encompassing personal attitudes, student responses, teaching environments, and institutional policies. The interaction between teachers and AI is conceptualized as part of a human-machine interaction network, where AI transcends being merely a teaching tool and is attributed human-like roles such as wise mentor and learning companion. This research underscores that the realization of human-AI integration necessitates a synergistic development between educators and technology, coupled with the concerted efforts of other actors within the educational ecosystem. The paper concludes by discussing the implications and challenges of human-AI integration for educational reform.

1. Introduction

In November 2022, the release of ChatGPT by OpenAI marked the dawn of a new era, heralding the advent of a powerful and readily accessible generative artificial intelligence (AI). AI has become an indispensable part of contemporary life through its extensive application across various domains, including personal assistants (such as Siri and Alexa), weather forecasting, facial recognition, medical diagnostics, and legal support [1]. Against this backdrop, it has been observed that AI has the potential to benefit education in multiple ways, such as through personalized learning platforms, adaptive assessment systems, intelligent predictive analytics, and the provision of conversational agents [2].

AI in education (AIED) systems take various forms, which can be categorized into learner-facing AIED systems such as Intelligent Tutoring Systems (ITS), educator-facing AIED systems like teacher dashboards and automated grading support, and institution-supporting AIED systems capable of identifying students at risk of dropping out [3]. Recently, the advent of Large Language Model (LLM)-based generative AI systems has revolutionized the landscape. These systems exhibit enhanced capabilities in answering a broader range of questions and engaging in more intelligent conversational or dialectical interactions with users compared to previous AI platforms [4].

The application of AI in education has long been a subject of profound inquiries within the realms of epistemology and didactics. Holmes et al. posed a challenging question: “If you can search for or have an intelligent agent find anything, then why learn anything at all? What truly merits learning?” [1]. Touretzky et al. argued that mere proficiency in using AI tools is insufficient; AI must be integrated as a compulsory component throughout the curriculum to ensure that all students gain essential understanding of its workings [5]. The debate over whether and how students should utilize AI in the classroom has been likened to the question of whether students should be allowed to use spell-checkers in writing or calculators in math [6]. Nevertheless, sophisticated emerging generative AI technologies like ChatGPT have achieved a significant leap in cognitive and learning tasks that can potentially be automated, raising concerns that students might simply submit copied and pasted ChatGPT outputs to fulfill even complex assessment requirements.

Despite the relatively recent surge in popularity of generative AI, its application in education has already unfolded in various other forms. Research on AI usage in classrooms has primarily focused on how it can be leveraged for student feedback, reasoning support, adaptive learning, interactive role-playing facilitation, and gamified learning enhancement [7]. A recent review of AI applications in education identified four primary roles for AI in classrooms: (1) assigning tasks based on individual capabilities, (2) enabling human-machine dialogue, (3) analyzing student work to provide feedback, and (4) enhancing adaptability and interactivity within digital environments. ITS represent a particularly prevalent application of AI, supporting learning by teaching course content, diagnosing students’ strengths and weaknesses in knowledge, providing automated feedback, tailoring learning materials to individual student needs, and even fostering collaboration among learners [8].

In supporting teachers’ work, AI can be employed to assist teachers in making evidence-based educational decisions, offering adaptive teaching strategies, and fostering their professional development and learning [9]. The role of teachers in the classroom and how they position the use of AI are emerging as crucial factors influencing learning outcomes. A study investigating the use of AI chatbots by 10th-grade students found that teacher support significantly impacted students’ motivation and ability to learn through AI platforms [10]. Conversely, other research has revealed that not all students benefit equally from AI in education, and passive/mechanical AI usage may even lead to diminished learning effects [11]. Hence, it is imperative to understand teachers’ perceptions of AI and their roles in facilitating learning methods when helping students engage with AI for learning purposes.

While teachers generally acknowledge that AI presents a range of opportunities for education, many possess limited understanding of AI and how it can be effectively integrated into teaching practices [12]. Some teachers lack the interest or motivation to incorporate AI into their classrooms [13]. Nevertheless, it is crucial that teachers possess the necessary AI readiness, meaning they at least comprehend the underlying principles and functionalities of AI in nontechnical terms, enabling them to make informed decisions about integrating it into their classrooms effectively [3].

While existing studies have extensively explored the impact of AI on educational outcomes and student experiences [7, 8], three critical gaps remain. First, most research adopts a human-centric perspective, focusing on teachers’ unilateral acceptance of technology (e.g., studies based on the Technology Acceptance Model (TAM) [13]), while neglecting the dynamic interplay between human and nonhuman actors (e.g., policies, institutional cultures, and AI systems themselves) in shaping pedagogical practices. Second, empirical evidence on how university teachers reconfigure their professional identities through human-AI interactions remains scarce, particularly in non-Western contexts [12]. Third, prior works often treat AI as a static tool, overlooking its role as an active agent that co-evolves with educators within socio-technical networks.

To address these gaps, this study asks three research questions:

1. How does generative AI bring about changes in university teachers’ teaching practices?
2. How do actors interact within the generative AI network?
3. How do university teachers identify the actors within these interactions and perceive their own roles?

2. Theoretical Framework

ANT [14] was first developed within Science and Technology Studies (STS) by Bruno Latour, Michel Callon, John Law, and their colleagues. ANT posits that the world is replete with hybrid entities encompassing both human and nonhuman elements, and it is used to analyze situations where these elements are intricately intertwined and difficult to disentangle. According to ANT, the term “actor” is not confined to social entities, as nonhuman actors are also recognized as agents [15]. ANT portrays nonhuman actors as enablers of the persistence and coherence of society and as providers of possibilities. Any element that alters an established situation by introducing a difference is considered an actor [16]. Furthermore, ANT does not view technology as static or perpetual but rather as something that must be continually reproduced. Consequently, technology emerges at the moment when actors coalesce to uphold societal structures. Society and technology are not ontologically distinct entities but rather different facets of the same action [17]. “Thus, technological objects must be seen as the outcome of the concerted efforts of numerous interrelated and heterogeneous elements. Hence, we cannot merely describe technological objects without also depicting the actor-world that shapes them, along with all its diversity and scope” [15]. One notable advantage of ANT over other approaches to understanding technology acceptance lies in its symmetrical treatment of humans and technological artifacts, thereby revealing relationships and contexts that might otherwise remain undetected by alternative methods.

ANT’s unique value in educational research lies in its rejection of technological determinism and human exceptionalism. Unlike models such as the Technology Acceptance Model (TAM), which positions technology as a passive object to be adopted or rejected by users [18], ANT reconceptualizes technology as an actant that exerts agency through its material properties, algorithmic operations, and institutional embeddings [16]. For instance, iFlytekSpark’s capacity to generate personalized content not only responds to teachers’ prompts but also actively reshapes their workflow, prompting them to renegotiate their roles as curators rather than sole knowledge authorities.

This symmetry between human and nonhuman actors allows us to trace how generative AI becomes an obligatory passage point (OPP) [15] in education—a node through which teachers, students, and policies must align to sustain network coherence. By analyzing the ‘translation’ processes (e.g., how teachers reinterpret institutional mandates to legitimize AI use), we uncover the emergent power dynamics that traditional pedagogical theories often obscure. Such an approach is particularly apt for China’s educational landscape, where centralized policies [19] interact with localized resistance and innovation, creating a fertile ground for ANT-driven inquiry.

ANT de-emphasizes the social constructs of traditional sociology, such as class and gender, and instead conceives society as a flat network of relationships, emphasizing the interactions and connections among “actors” within society. Adhering to the “Generalized Symmetry Principle,” which treats all entities equally, “actors” encompass not only humans but also extend broadly to include biological beings, objects, rules, and other tangible and intangible entities. Humans and nonhumans are equally regarded as potential contributors, participants, and transformers of the network. When actors act upon and are influenced by others, they are transformed into part of an action network, exhibiting specific roles, intentions, and subjectivity. This process is known as “translation.” The “network” emerges and continually evolves through such interactive relationships. In some cases, core actors can reshape the network’s form by establishing an OPP, such as a particular idea, a problem to be solved, or an interest to be pursued, to select and filter other actors into the network [15].

Since its inception and continuous development, ANT has been widely applied across various social science disciplines, including sociology, communication studies, and management. ANT has also found its place in multiple educational contexts. Fenwick and Nerland employed it to underscore the significance of nonhuman aspects in professional learning [20]. In educational research, ANT has brought back into focus crucial yet long-neglected elements such as technology, tools, and the physical environment, drawing attention to the social attributes and meanings of nonhuman entities, particularly technological artifacts. This approach facilitates the revelation of intricate power relations and nonlinear, long-term changes within educational fields [21].

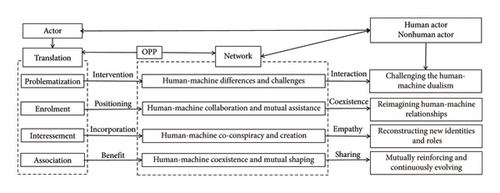

This study, leveraging the OPP of “technology use,” recruited and mobilized 15 university teachers into a human-machine interaction network. By employing the core concepts of ANT, including “actors,” “translation,” and “enrolment,” it showcases teachers’ perceptions of technology, their new understanding of their roles during technology interactions, and their envisioned future network configurations of AI technology integration in education, as illustrated in Figure 1.

3. Materials and Methods

3.1. Sample

This study employs the technique of maximum variation sampling within purposeful sampling, aiming to achieve the broadest possible coverage of diverse scenarios within the research phenomenon. It endeavors to encompass participants with varying teaching experience, disciplines, and geographical distributions, thereby exploring the technology usage experiences among participants with distinct characteristics. The inclusion and exclusion criteria for participants were as follows.

3.1.1. Inclusion Criteria

1. Full-time university faculty members.
2. At least 2 years of teaching experience.
3. Voluntary participation in the 15-day iFlytekSpark trial.

3.1.2. Exclusion Criteria

1. Part-time or short-term adjunct faculty.
2. Prior systematic involvement in AI-driven educational projects (to avoid experience bias).

Through online community recruitment, we successfully contacted 15 university faculty members from 11 distinct regions as our survey respondents. Their basic information is presented in Table 1. To balance heterogeneity with contextual commonality, the participants spanned diverse disciplines (e.g., humanities for E03, engineering for E05), age groups (26–52 years), and locations (11 cities). Despite disciplinary differences, all teachers operated under similar institutional pressures driven by China’s national smart education policies (e.g., China Education Modernization 2035). This shared context allowed us to identify common patterns in teachers’ technology adoption while preserving the richness of individual variations.

| Participant | Gender | Age (years) | University | Location |
|---|---|---|---|---|
| E01 | Male | 26 | Huainan Normal University | Hefei |
| E02 | Male | 31 | Hefei University | Hefei |
| E03 | Female | 36 | Anhui University | Hefei |
| E04 | Male | 35 | Zhoukou Normal University | Zhoukou |
| E05 | Female | 40 | Jianghan University | Wuhan |
| E06 | Female | 32 | Hefei University | Hefei |
| E07 | Female | 38 | Anqing Normal University | Anqing |
| E08 | Male | 34 | Anqing Normal University | Anqing |
| E09 | Male | 43 | Henan University | Kaifeng |
| E10 | Male | 40 | Northwest University | Xi’an |
| E11 | Female | 50 | Shenzhen University | Shenzhen |
| E12 | Female | 48 | Anhui Normal University | Wuhu |
| E13 | Male | 46 | Hubei Normal University | Huangshi |
| E14 | Female | 33 | Anhui University of Technology | Huainan |
| E15 | Male | 52 | Beijing Technology and Business University | Beijing |

3.2. Data Collection and Qualitative Analysis

The empirical data for this study were primarily sourced from in-depth interviews and questionnaires administered to the study participants. The interview protocol was self-developed, grounded in the key conceptual elements of the theoretical framework, and informed by relevant research on the impact of technology on teachers and their roles. The interviews encompassed teachers’ experiences with AI technology, their perspectives on AI technology, their own roles, and the envisioned landscape of education and teaching post-AI integration. The interview protocol served as a structural reference, with follow-up questions or adjustments to the question order made during actual interviews based on respondents’ answers. Each formal interview lasted between 40 and 60 min, was fully recorded with the participants’ consent, and was supplemented by multiple informal interviews conducted via WeChat. The interview data were analyzed primarily using categorical analysis, which encompasses three stages: open coding, axial coding, and selective coding. To ensure the validity of the study, the “relevance test” and “feedback method” were employed.

We invited participants to engage in a 15-day trial of iFlytekSpark, a generative AI large model, and provided them with recommendations encompassing search techniques, commonly used prompts, and awareness of technical risks. Throughout the usage period, teachers were informed of the research objectives, and participation was entirely voluntary. To monitor progress, surveys were administered both before and after the completion of the trial. In our study, we adhered to ethical procedures for research involving human participants. Confidentiality and anonymity were upheld for all research subjects.

1. When using iFlytekSpark, what metaphor would you use to describe it?
2. Explain the reasons why you identify with those metaphors.
3. What metaphor do you use to link AI with teaching work?
4. Explain why you associate AI with these metaphors.

This study integrates a theoretical framework to analyze the collected data, fostering a dialogue between diverse sources of information and theories, with the aim of elucidating the interactive processes within the educational sector influenced by AI technologies, as well as the implications and transformations of the professorial role within this context. The research findings are constructed as a consensus between ourselves and the study participants.

4. Results

4.1. Changes in Teaching Practices

“The tool generates problem sets with varying difficulty levels and even provides step-by-step solutions. This allows me to focus on guiding students through complex applications rather than repetitive content creation.”

“While AI drafts comparative analyses of political theories efficiently, its outputs often lack contextual depth. For example, it misattributed a quote from Marx’s Capital to Engels. Such errors demand meticulous verification, offsetting time savings.”

| Task type | Pre-AI (hours/week) | Post-AI (hours/week) | Reduction rate (%) |
|---|---|---|---|
| Material creation | 12.4 ± 3.2 | 5.1 ± 1.8 | 58.9 |
| Student assessment | 8.7 ± 2.1 | 3.5 ± 1.2 | 59.8 |
| Administrative work | 6.3 ± 1.5 | 2.9 ± 0.9 | 54.0 |
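The reduction rates in the table follow from the reported mean hours as (pre − post) / pre × 100. The short Python sketch below reproduces that arithmetic as a consistency check. It uses only the mean values from the table and ignores the reported standard deviations; the names `weekly_hours` and `reduction_rate` are illustrative and are not taken from the study.

```python
# Mean weekly hours per task type, copied from the table (pre-AI, post-AI).
# The ± standard deviations are deliberately left out of this check.
weekly_hours = {
    "Material creation": (12.4, 5.1),
    "Student assessment": (8.7, 3.5),
    "Administrative work": (6.3, 2.9),
}


def reduction_rate(pre: float, post: float) -> float:
    """Return the percentage reduction from pre to post, rounded to one decimal."""
    return round((pre - post) / pre * 100, 1)


# Hypothetical helper mapping: task type -> reduction rate in percent.
rates = {task: reduction_rate(pre, post) for task, (pre, post) in weekly_hours.items()}

for task, rate in rates.items():
    print(f"{task}: {rate}%")
```

The computed values agree with the table's final column, which suggests the reported rates were derived from the group means rather than from per-participant reductions.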

“Our department now mandates manual review of all AI-generated content for ‘Ideological and Political Education’ courses. While this ensures compliance with national guidelines, it adds layers of bureaucratic oversight.”

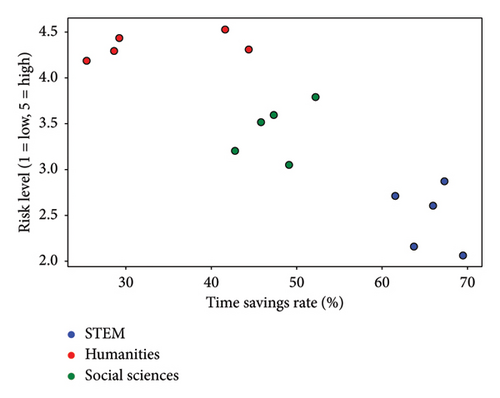

These dual forces—efficiency gains and emergent complexities—are synthesized in Figure 2, which maps the interplay between time savings (horizontal axis) and risk mitigation efforts (vertical axis). The data reveal a disciplinary divide: STEM fields cluster toward high efficiency and moderate risk, while humanities disciplines scatter toward lower efficiency and higher risk due to content sensitivity.

4.2. Actor Interactions in the AI Network

The integration of generative AI into higher education has catalyzed a complex network of interactions among human and nonhuman actors, as posited by ANT. This network operates through continuous processes of translation [15], wherein actors negotiate roles, interests, and power dynamics to stabilize or transform the socio-technical system. Our analysis reveals that teachers’ adoption of AI is not merely a unilateral decision but a collective outcome shaped by institutional mandates, student behaviors, infrastructural constraints, and cultural norms.

4.2.1. Institutional Mandates as OPPs

“Our annual teaching evaluations now include ‘AI utilization scores.’ Even reluctant colleagues feel pressured to tokenize AI tools in lesson plans, though some merely paste ChatGPT outputs into PowerPoint slides.”

“While Tsinghua has AI-powered smart classrooms, we struggle with intermittent Wi-Fi. During evaluations, we mimic AI use through scripted demos—a façade that strains both ethics and pedagogy.”

4.2.2. Students: Catalysts and Constraints

“Students submit essays polished by Grammarly and ChatGPT. If I don’t leverage similar tools, my feedback risks irrelevance. It’s an arms race of technological literacy.”

“Some students blindly accept AI-generated code without debugging. This undermines problem-solving skills—the core of engineering education.”

4.2.3. Technological and Cultural Mediations

“Teaching is an art of dialogue, not algorithms. Over-reliance on AI risks reducing educators to button-pushers.”

“When iFlytekSpark generates controversial interpretations, I task students with critiquing them. It sparks deeper engagement than static textbooks ever could.”

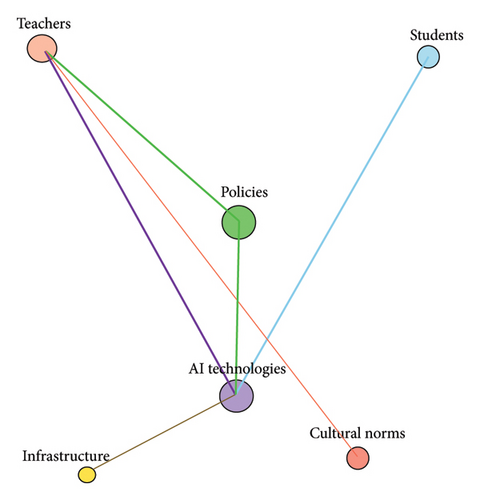

These interactions coalesce into the actor-network depicted in Figure 3, where solid lines represent alliances (e.g., policy-technology integration) and dashed lines signify tensions (e.g., cultural resistance). The network’s stability hinges on balancing coercive forces (e.g., policy OPPs) with localized adaptations (e.g., disciplinary critiques).

4.3. Role Perception and Identity Reshaping

“I now act as a ‘debate moderator’—students critique AI-generated interpretations of Kafka, challenging both the technology and their own assumptions. This shifts my focus from lecturing to fostering critical dialogue.”

“AI drafts lesson plans on Marxist theory in minutes, but its outputs lack ideological nuance. My role now includes ‘content policing’—a task that paradoxically amplifies my responsibility as a moral guide.”

“Like gardeners, we cultivate critical thinking through personalized mentorship, while AI acts as an irrigation system, delivering data-driven resources. This synergy doesn’t diminish our role—it redefines it.”

This paradigm, reinforced by policy mandates such as China Education Modernization 2035, illustrates how teachers reconcile external pressures with pedagogical values through ANT’s translation processes, forging hybrid identities that balance human agency with technological augmentation.

5. Discussion

This study unveils the complex socio-technical negotiations underlying university teachers’ adoption of generative AI, offering three key advancements to the literature on educational technology: (1) a critique of individualistic models of technology acceptance, (2) empirical evidence of nonhuman actors’ coercive agency in shaping pedagogical practices, and (3) actionable strategies for equitable AI integration. Below, we contextualize these contributions, address their implications, and acknowledge limitations.

5.1. Beyond Individual Choice: How ANT Reframes Technology Acceptance

Prior studies, such as Celik’s [13] categorization of “rational” versus “emotional” adopters, predominantly attribute AI adoption to teachers’ personal attitudes or skills. While this individual-centric lens captures psychological dynamics, it neglects the systemic forces that constrain or enable agency. Our ANT analysis reveals that even “emotionally conservative” teachers (e.g., E12, who resisted AI philosophically) engaged in tokenistic adoption to comply with institutional mandates—a behavior inexplicable through TAM’s perceived usefulness/ease-of-use framework [18].

This divergence highlights ANT’s unique value: by framing policies (e.g., China Education Modernization 2035) as OPPs [15], we demonstrate how nonhuman actors reconfigure teacher agency. For instance, E07’s department tied promotion criteria to AI-integrated lesson plans, coercing participation regardless of personal inclinations. Such findings align with Latour’s [16] notion of technology as “frozen organizational discourse,” where policies and tools crystallize institutional power into material practices.

5.2. Nonhuman Actors as Coercive Forces: Policy, Infrastructure, and Cultural Artifacts

Through the ANT framework, this study shows that teachers’ adoption of AI is essentially the outcome of a strategic game among multiple actors. Policies act as the core coercive force, restructuring the actor network by functioning as OPPs. The China Education Modernization 2035 policy exerts institutional coercion by tying resource allocation to AI adoption (e.g., 30% of the budget at E10’s university is closely tied to AI construction), which turns teachers’ use of technology from a personal choice into a survival strategy. Material infrastructure constitutes a second layer of constraint. In under-resourced institutions (such as E15’s university), unstable Wi-Fi gives rise to “performative compliance” strategies, in which teachers are forced to create false traces of adoption within flawed systems. Cultural legacies continue to function as implicit counter-programs: the “chalk-lecture” tradition among senior teachers (such as E12) resists technological intrusion through daily practice and forms friction points in the network. This three-dimensional coercion mechanism breaks with the linear assumption of technology adoption, exposes the dynamic struggle between human actors and nonhuman elements at the micro level, and provides a new perspective for understanding the reconfiguration of power in the digital transformation of education.

5.3. Toward Equitable Implementation: A Three-Tiered Strategy

To address the tension between technological coercion and individual agency, this study proposes a three-tier intervention framework to promote the fair implementation of educational AI. At the capability level, it advocates developing “critical AI literacy”: moving beyond training in tool operation to establish algorithmic auditing modules (such as simulated scenarios in which AI generates politically sensitive content) that enable teachers to identify and correct technological biases (see the case of E13). At the policy level, design should introduce a discipline-based risk grading mechanism that draws on the logic of the EU AI Act, implementing a “human-machine dual review” system in high-risk fields such as history while encouraging AI-assisted innovation in low-risk fields such as engineering (as in E05’s simulation experiments). At the system level, a dual-track mechanism of readiness assessment and subsidy support should be established, combining an access system based on quantitative indicators, such as minimum network standards (≥ 50 Mbps) and per capita training hours, with targeted resource allocation (as in the case of E15’s university) to close the infrastructure gap. Through the combined effects of technological empowerment, policy adaptation, and systemic compensation, this strategy offers an actionable governance path for the inclusive development of educational AI.

6. Conclusion

This study demonstrates that university teachers’ perceptions of generative AI are shaped by a dynamic network of human and nonhuman actors, including policy mandates, student expectations, technological infrastructures, and cultural norms. Under the coercive force of China’s national educational policies, teachers are transitioning from “knowledge authorities” to “intelligent collaborators,” leveraging AI to enhance pedagogical efficiency while grappling with ethical and disciplinary tensions. However, this transition is unevenly realized, hindered by digital divides (e.g., E15’s resource constraints) and generational resistance (e.g., E12’s adherence to traditional pedagogies).

Two key limitations warrant attention. First, the study’s focus on Chinese universities limits cross-cultural generalizability. Future research could compare how Western liberal education models mediate AI adoption differently. Second, the 15-day trial may inflate teachers’ short-term enthusiasm; longitudinal studies are needed to assess sustained integration. Despite these constraints, our ANT framework provides a robust foundation for reimagining teacher-AI collaboration as a negotiated, context-sensitive praxis rather than a deterministic outcome. Policymakers and institutions must prioritize equitable resource distribution and teacher agency to foster responsible human-AI symbiosis in education.

Consent

Informed consent was obtained from all subjects involved in the study.

Conflicts of Interest

The authors declare no conflicts of interest.

Author Contributions

Conceptualization, Yunlai Liu; methodology, Yunlai Liu; formal analysis, Yunlai Liu and Yuwei Zhang; investigation, Yuwei Zhang; data curation, Yuwei Zhang; writing–original draft preparation, Yunlai Liu; writing–review and editing, Yunlai Liu and Yuwei Zhang; supervision, Yunlai Liu; project administration, Yunlai Liu; funding acquisition, Yunlai Liu. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Anqing Normal University (grant number: 2023aqnujyxm32).

Open Research

Data Availability Statement

This dataset is available upon request from the authors.