Research on Machine Vision–Based Intelligent Tracking System for Maintenance Personnel

Abstract

Upon returning to the depot, rail transit vehicles require necessary maintenance. The working condition of train maintenance personnel directly impacts the safety of both staff and equipment. Therefore, effective monitoring and control of activities within train roof access platforms are essential. Traditional manual monitoring demands substantial manpower and is prone to human error, whereas machine vision–based intelligent monitoring offers a promising alternative, reducing the dispatch control center (DCC) workload while enhancing safety management. Our intelligent monitoring approach involves three key steps: train maintenance personnel identification, tracking of maintenance activities to generate movement trajectories, and analysis of movement patterns to detect anomalous behavior. This study primarily addresses the challenges of personnel identification and process tracking. In train maintenance scenarios, facial recognition is limited by posture variations, making direct video tracking impractical. Pedestrian reidentification (Re-ID) also struggles with posture and attire changes. To address these issues, we propose a hybrid approach: facial recognition confirms personnel identity upon entry, followed by pedestrian feature extraction for Re-ID-based tracking throughout the maintenance process. To handle occlusion, we designed a Re-ID method based on body part recognition, segmenting features into head–shoulder, body, arm, and leg components, with higher weights assigned to visible parts. This method achieved a mean average precision (mAP) of 87.6% and a Rank-1 value of 95.7% on the Market1501 dataset. A tracking and monitoring system was developed that effectively identifies and tracks maintenance activities, demonstrating strong practical value. Furthermore, this work lays the groundwork for future research into trajectory-based abnormal behavior detection.

1. Introduction

Upon the rail transit vehicle’s return to the depot, it must undergo repairs in accordance with regulations. Accessing the train roof access platform is imperative for tasks such as climbing, debugging, and maintaining equipment such as pantographs and air conditioning systems. Due to its proximity to the overhead contact line, entry onto the roof platform without power shutdown poses grave risks to operators’ safety. To address this, interlock access control and video monitoring systems have been implemented [1]. Despite these safety measures, instances of personnel error or noncompliance with management regulations, such as obstructing operation doors or unauthorized entry and exit, may occur. Illegal entry to the roof platform presents serious security hazards, potentially jeopardizing the safety of both operators and equipment [2, 3].

Advancements in intelligent monitoring technology invite an exploration of its potential to assist the dispatch control center (DCC) in overseeing operational processes, verifying operator identities, assessing personnel authorization, tracking operators, and identifying anomalous behavior [4].

Presently, many vehicle roof platforms are outfitted with surveillance cameras to facilitate monitoring by the DCC [5, 6]. However, the resolution of these surveillance cameras is often limited, resulting in obscured views of the back of the head and side of the face, rendering direct application of face identification technology for personal identification and trajectory tracking unfeasible. Conversely, human appearance cues (pedestrian characteristics) on the monitoring screen are more readily discernible, suggesting the potential utility of pedestrian reidentification (Re-ID) technology for identity verification. Pedestrian Re-ID entails leveraging pedestrian attributes such as clothing, body shape, and hairstyle across multiple camera views to identify specific individuals. Nevertheless, the current pedestrian Re-ID technology encounters challenges, including variations in appearance due to changes in lighting and perspective across different cameras, fluctuations in pedestrian posture and attire over time, and potential obstruction of personnel by guardrails, columns, and maintenance tools on the roof platform.

Among these challenges, illumination variations can be handled effectively through data augmentation [7]. To tackle the issue of variable pedestrian poses induced by changes in perspective, techniques such as spatial transformer networks (STN) [8] and similar approaches can be leveraged to achieve feature alignment. Crucially, attention must be paid to attire variation and partial occlusion. Considerable research has explored local occlusion, demonstrating the efficacy of combining global and local features to enhance identification networks. In this approach, pedestrian images are segmented into several regions, with local features extracted from each region and subsequently combined to form the final feature representation. Sun et al. [9] partition pedestrian images into multiple horizontal segments, extracting and classifying features independently for each segment before combining them to obtain global features, known as the part-based convolutional baseline (PCB) method. Zheng et al. [10] extract horizontal segmentation features across multiple scales to yield more nuanced image features, resulting in significant performance improvements over PCB. However, these methods adopt a uniform block approach, failing to utilize pedestrian component features or eliminate background information, thereby limiting pedestrian identification efficacy. Zhang et al. [11] propose the relation-aware global attention (RGA) module, which calculates correlations between different blocks in pedestrian images to adaptively generate weights for feature representation across different parts. Nevertheless, RGA fails to fundamentally address the limitations of uniform block convolution and exhibits relatively low precision. Tian et al. [12] employ conditional random fields to segment pedestrian images into foreground and background, extracting subregions comprising pedestrian foregrounds for feature extraction. Gao et al. [13] introduce the Pose-guided Visible Part Matching (PVPM) model, which identifies the visible portions of specific parts through posture-guided attention and then exploits the correlation between the visible parts of two images. However, both methods require additional models for estimating semantic component information and consume substantial computational resources. To effectively leverage feature dependencies on a global scale, He et al.’s TransRe-ID [14] pioneers the application of the vision transformer (ViT) architecture to pedestrian Re-ID tasks, demonstrating ViT’s efficacy in achieving superior performance in such tasks. However, owing to its limited capacity to capture local features, the transformer is susceptible to misjudgment in pedestrian Re-ID tasks. Building upon this foundation, Wang and Liang [15] propose a local enhancement module to obtain more robust feature representations and enhance performance. Nevertheless, for pedestrian identification tasks, valuable pedestrian appearance features are overlooked, resulting in the underutilization of effective features.

In addressing clothing changes, the prevailing consensus emphasizes the extraction of clothing-independent features, such as facial characteristics, hairstyle, body morphology, and gait. Gu et al. [16] explore this by extracting clothing-irrelevant features from original RGB images, penalizing the model’s ability to predict clothing. Wang et al. [17] alter RGB image channels to produce pedestrian images with diverse color appearances for training, thereby reducing the model’s reliance on clothing color features. Shu et al. [18] introduce a pixel sampling approach guided by semantics, which involves sampling pixels from other pedestrians and randomly swapping pixels of pedestrian attire to compel the model to learn clues unrelated to clothing. Liu et al. [19] replace conventional scalar neurons with vector–neuron (VN) capsules, wherein a dimension in the VN can detect clothing changes in the same individual, enabling the network to categorize individuals with the same identity. Although these methodologies yield improvements in certain metrics, the overall performance of pedestrian Re-ID remains suboptimal, posing challenges for practical applications. Furthermore, these methodologies frequently neglect significant appearance features, such as clothing, leading to the underutilization of valuable features.

Hence, considering the operational dynamics of the roof platform, we assess the feasibility of these methodologies and introduce an engineering-oriented approach for identifying personnel entering the roof platform or similar maintenance sites and tracking their movement trajectories. This constitutes a crucial step in our intelligent safety interlocking system. Our work focuses on this objective and the associated research. At the outset, we deploy an array of cameras at the platform entrance. These cameras are capable of concurrently capturing both facial and pedestrian attributes, utilizing facial data for identity verification while also storing pedestrian attributes, including clothing features, in the corresponding identity database. Subsequently, as individuals enter the platform, the pedestrian information captured by the surveillance cameras is cross-referenced with the features in the pedestrian signature database to confirm their identity and track their movements. Furthermore, in response to potential occlusion challenges, we propose a fusion identification method focusing on human body parts. By leveraging the effective features of the human body alongside the strengths of the aforementioned techniques, our aim is to enhance the identification capability of partially obscured pedestrians.

The main contributions of this work are as follows:

- (1) We developed a camera setup capable of simultaneous face identification and pedestrian attribute collection. Utilizing this setup, we effectively resolved the issue where facial recognition alone could not achieve pedestrian tracking, and pedestrian Re-ID could not confirm pedestrian identity. This approach enables the correspondence of pedestrian features with their identities within a defined spatial and temporal range.

- (2) In scenarios such as the train roof access platform and other controlled areas, pedestrian features are frequently occluded. To address this issue, we propose a model for human body part recognition. This model identifies different body parts and assigns varying weights based on the degree of occlusion, thereby enhancing the local features of unobstructed body parts. By combining these local part features with holistic ones, we significantly improve the identification accuracy of occluded pedestrians.

- (3) Building on the foundation of train maintenance personnel identification, we developed an intelligent tracking system tailored for maintenance operators. This system autonomously identifies personnel and tracks their work processes, generating a comprehensive job trajectory. This serves as the basis for our next step in developing abnormal behavior detection.

In the process of implementing the intelligent tracking system, we introduced several distinctive features compared to the existing methods. Firstly, the intelligent monitoring design based on personnel identification presented in this paper is pioneering, as current methods rely entirely on manual inspection. Secondly, for personnel identification, we proposed a novel approach based on human body part recognition, which is superior to the widely used method of enhancing identification through local partitioning. Finally, to address the challenge of matching facial and pedestrian identities, we employed an innovative setup that binds personnel identity with pedestrian features at mandatory passage points. This method circumvents the limitations of the existing facial recognition and pedestrian Re-ID techniques, combining and leveraging their advantages.

2. Camera Array Design

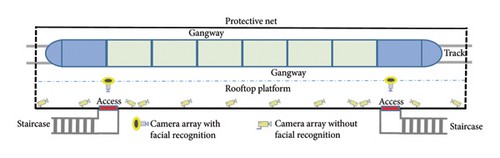

The deployment of the surveillance camera array necessitates considerations of lighting conditions, personnel movement patterns, and other factors. For optimal performance, cameras capturing facial data should maintain a deflection angle of less than 30° horizontally and 15° vertically. Most existing access control systems for roof platforms do not utilize facial recognition technology [20]. To effectively monitor access points in these controlled areas, it is advisable to install surveillance camera units at the entrances of these channels, as illustrated in Figure 1. Each passage is equipped with three surveillance camera units: one positioned directly in front of the entrance and two at the rear. The front-facing camera unit enables facial identification and comprises three cameras mounted on the same stand. In contrast, the rear-mounted unit does not incorporate facial identification technology and consists of two cameras. Additional surveillance camera units without facial identification are strategically positioned along the side walls of pedestrian passages according to the required monitoring distance within the platform.

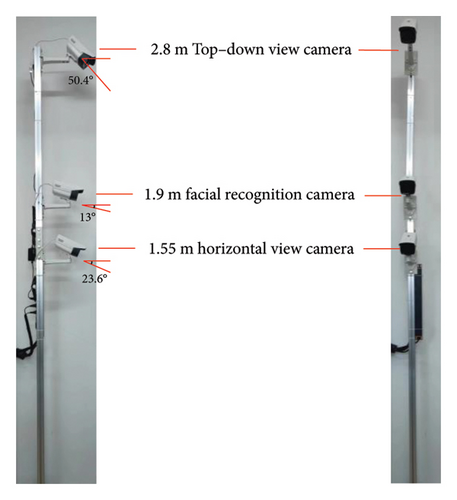

The A-type camera unit, as indicated in Figure 2, consists of three cameras, all featuring the widely employed 2-megapixel 1/2.7″ CMOS infrared array tube network camera. The image sensor’s imaging size is reported as w × h = 5.27 mm × 3.96 mm [21]. Given the confined space at the entrance of the access control channel, measuring 1.5 m in width, the cameras are strategically positioned to effectively capture pedestrians situated 1.5 m away. To achieve this, a 4-mm focal length lens is selected, affording a horizontal field of view of 83.6° and a vertical field of view of 44.6°.

The support stand is 2.8 m high, with a camera mounted at the top to capture comprehensive pedestrian characteristics. To cover the full height of pedestrians at the passage entrance (taken as 2 m), this camera’s lens is tilted downward by 50.4°, giving a minimum viewing distance of 0.87 m for complete target visibility. However, when capturing pedestrian features from above, the area below the shoulder may be obstructed. To mitigate this, a horizontal viewing angle camera is positioned at a height of 1.55 m on the bracket, with its vertical field of view extending to ground level at the entrance. To this end, its lens is tilted downward by 23.6°, covering the area within 1.52 m above the ground and thus capturing pedestrian features below the shoulder.
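The mounting figures above can be cross-checked with simple pinhole geometry. The following is a minimal sketch, assuming the optical axis is tilted below the horizontal by the stated angle and the 44.6° vertical field of view is split symmetrically about it; the function names are illustrative and not part of the system.

```python
import math

def ground_hit_distance(height_m, tilt_deg, vfov_deg):
    """Horizontal distance at which the lower edge of the view reaches the floor."""
    lower_edge = math.radians(tilt_deg + vfov_deg / 2)
    return height_m / math.tan(lower_edge)

def upper_edge_height(height_m, tilt_deg, vfov_deg, distance_m):
    """Height of the upper edge of the view at a given horizontal distance."""
    upper_edge = math.radians(tilt_deg - vfov_deg / 2)
    return height_m - distance_m * math.tan(upper_edge)

VFOV_DEG = 44.6  # vertical field of view of the 4-mm lens, as stated in the text

# Top camera: 2.8 m high, tilted down by 50.4 degrees.
top_min_distance = ground_hit_distance(2.8, 50.4, VFOV_DEG)
top_height_at_entrance = upper_edge_height(2.8, 50.4, VFOV_DEG, 1.5)

# Horizontal viewing angle camera: 1.55 m high, tilted down by 23.6 degrees.
mid_ground_distance = ground_hit_distance(1.55, 23.6, VFOV_DEG)
mid_height_at_entrance = upper_edge_height(1.55, 23.6, VFOV_DEG, 1.5)

print(round(top_min_distance, 2), round(top_height_at_entrance, 2))
print(round(mid_ground_distance, 2), round(mid_height_at_entrance, 2))
```

Under these assumptions, the top camera sees the floor from roughly 0.87 m outward and reaches a height of about 2 m at the 1.5 m entrance distance, while the lower camera sees the floor at about 1.5 m and covers up to roughly 1.52 m in height there, which agrees with the values quoted in the text.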

The rear camera unit, lacking facial identification capabilities, is designated as the A-minus–type camera unit. It comprises only the top-overlooking camera and the horizontal viewing angle camera. The camera parameters are identical to those of the A-type camera unit, enabling the collection of comprehensive pedestrian data from side and rear perspectives.

The roof maintenance platform typically spans a width of approximately 6.38 m. Taking into account the field of view angle and the required coverage, the camera setup on the platform, designated as the B-type set, is equipped with 8-mm focal length lenses. These lenses offer a horizontal field of view angle of 38.8° and a vertical field of view angle of 21.1°. The camera lenses are tilted toward the vehicle roof at an angle of 19.4°, necessitating a minimum horizontal viewing distance of 7.9 m to effectively cover the entire control area. The maximum clear visual distance of the camera is 24 m. To ensure visibility of a complete 2-m pedestrian target, the top camera lens is tilted downward by 12.5°, requiring a minimum distance of 3.9 m to guarantee clear visibility of the entire target in the vertical direction. Similarly, the horizontal camera lens is also tilted downward, with a tilt angle of 10.6°, enabling clear visibility of the complete target at a minimum distance of 4 m in the vertical direction. Any areas not covered by this camera setup are addressed by adjacent camera setups, therefore requiring an approximate distance of 16 m between camera setups.
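The 7.9 m and 16 m figures for the B-type set can be reproduced with a short calculation. This is a sketch under one reading of the layout, which is an assumption: the camera sits at one edge of the platform and is panned by half its horizontal field of view (19.4°), so that one edge of the view runs along the platform and the other crosses its full width. The function name is illustrative.

```python
import math

def full_width_distance(platform_width_m, hfov_deg):
    """Distance along the platform at which the far view edge spans the full width."""
    return platform_width_m / math.tan(math.radians(hfov_deg))

PLATFORM_WIDTH_M = 6.38     # platform width stated in the text
HFOV_DEG = 38.8             # horizontal field of view of the 8-mm lens
MAX_CLEAR_DISTANCE_M = 24.0 # maximum clear visual distance stated in the text

min_distance = full_width_distance(PLATFORM_WIDTH_M, HFOV_DEG)
# Assumed reading: each unit clearly covers the stretch between its minimum
# and maximum distances, so adjacent units are spaced by that usable length.
unit_spacing = MAX_CLEAR_DISTANCE_M - min_distance

print(round(min_distance, 1), round(unit_spacing, 1))
```

With these inputs the minimum full-width distance comes out near 7.9 m and the usable stretch near 16 m, matching the spacing given above.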

3. Facial and Pedestrian Feature Identification

When accessing the roof work platform, train maintenance personnel are required to pass through the access control channel [22], where the camera captures facial images for identification purposes. Concurrently, a pedestrian feature acquisition camera captures pedestrian attributes and stores them in the picture database, linked to the respective identity. Subsequently, once personnel have entered the roof platform, the images collected by the monitoring cameras are processed by pedestrian Re-ID and cross-referenced with the feature database to confirm identity and track movement trajectories.

If personnel do not use the designated access channel to enter the controlled area, pedestrian Re-ID may be unable to determine their identity. In such cases, the system will track the individual as an unidentified person and trigger an alert at the DCC to notify the on-duty personnel for appropriate action.

3.1. Face Identification

With advancements in machine learning and deep convolutional neural networks, certain face identification models have achieved test set accuracies nearing 100%. However, in practical applications, accuracy is primarily influenced by variables such as lighting conditions and posture [23].

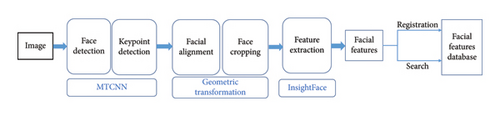

The identification method implemented in this study is tailored to the specific traffic conditions observed on the roof platform. In this context, individuals entering the control area must traverse through the access control channel, which ensures optimal lighting and positioning conditions ideal for face identification. Consequently, a well-established face identification framework is employed, as depicted in Figure 3. Initially, MTCNN is utilized for face detection, accurately predicting the five key facial landmarks. Subsequently, affine transformation is applied to rectify the facial orientation, yielding a standardized facial image. The open-source InsightFace model [24, 25] is then employed to extract distinct facial features, which are subsequently compared with entries in the face feature database to identify one or more faces with the closest resemblance.
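The final comparison step of this pipeline can be illustrated independently of the detection and embedding models. The sketch below assumes that face embeddings have already been extracted as plain lists of floats and that similarity is measured by cosine similarity; the `identify` function, the 0.5 threshold, and the example names are hypothetical and are not taken from MTCNN or InsightFace.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def identify(query, database, threshold=0.5, top_k=1):
    """Return up to top_k (name, score) pairs whose similarity meets the threshold.

    database maps a person's name to a stored face embedding. The threshold
    value is an assumed placeholder and would need tuning on real data.
    """
    scored = sorted(
        ((cosine_similarity(query, emb), name) for name, emb in database.items()),
        reverse=True,
    )
    return [(name, score) for score, name in scored[:top_k] if score >= threshold]

# Toy three-dimensional embeddings, for illustration only.
face_db = {"worker_a": [1.0, 0.0, 0.0], "worker_b": [0.0, 1.0, 0.0]}
known = identify([0.9, 0.1, 0.0], face_db)
unknown = identify([0.0, 0.0, 1.0], face_db)
print(known, unknown)
```

An empty result corresponds to the case in which no database entry is close enough, so the person would be treated as unidentified.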

3.2. Pedestrian Feature Registration

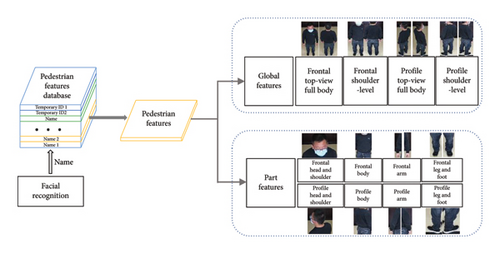

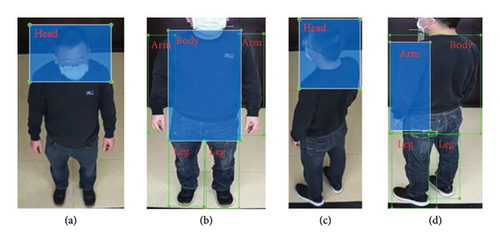

When individuals pass through the access control channel, the facial identification camera captures their facial image and cross-references it with entries in the signature database to ascertain their identity information. If a person cannot be identified, they are assigned a temporary number. Simultaneously, the six additional cameras at the channel capture various views: frontal top-view full body, frontal shoulder-level view, left rear top-view full body, left rear shoulder-level view, right rear top-view full body, and right rear shoulder-level view. As illustrated in Figure 4, the system establishes a pedestrian feature database linked to each person’s identity or temporary number, storing top-view full body features and shoulder-level view features. In real-world scenarios, capturing pedestrian features can sometimes result in blurred or partially obscured images. Furthermore, upon entry onto the top platform, pedestrian occlusion caused by device obstruction can impede Re-ID processes. To address this challenge, pedestrian features are categorized into four subsets: head and shoulder, body, arm, and leg. These subset features are stored in the database as body part features, which are subsequently integrated with the overall pedestrian features to enhance the accuracy of pedestrian Re-ID.
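One possible shape for such a database is sketched below. It is an illustrative data structure only, assuming features are stored per camera view and per body part and that unidentified persons receive sequential temporary numbers; the class names, the `TEMP-0001` format, and the view and part keys are hypothetical.

```python
from dataclasses import dataclass, field
from itertools import count

@dataclass
class PedestrianRecord:
    person_id: str      # confirmed name or temporary number
    identified: bool    # False when face identification failed
    features: dict = field(default_factory=dict)  # (view, part) -> feature vector

class PedestrianRegistry:
    """Links pedestrian features to an identity or to a temporary number."""

    def __init__(self):
        self._records = {}
        self._temp_numbers = count(1)

    def register(self, name, view_features):
        # A person who cannot be identified is assigned a temporary number.
        if name is None:
            person_id = f"TEMP-{next(self._temp_numbers):04d}"
        else:
            person_id = name
        record = self._records.setdefault(
            person_id, PedestrianRecord(person_id, name is not None)
        )
        record.features.update(view_features)
        return record

registry = PedestrianRegistry()
known = registry.register(
    "worker_a",
    {("front_top", "whole"): [0.2, 0.8], ("front_shoulder", "leg"): [0.5, 0.1]},
)
stranger = registry.register(None, {("left_rear_top", "whole"): [0.7, 0.3]})
print(known.person_id, stranger.person_id)
```

Keying the features by view and part keeps the whole-body and body part features of one person together, so that either can be retrieved during Re-ID.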

We developed a human body part recognition model by creating our own parts-of-human-body (PHB) dataset and training on it. The model includes four labeled categories: head, body, arm, and leg. From the top-view image, the model primarily extracts frontal head and shoulder features. From the shoulder-level view image, it mainly captures body, arm, and leg features, as illustrated in Figure 5.

3.3. Pedestrian Re-ID

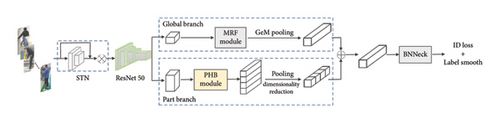

Train maintenance personnel entering the roof platform via the access control channel have their identity information, such as name and number, confirmed through face identification and associated with their clothing, hairstyle, and other appearance details. Under these conditions, pedestrian Re-ID is relatively straightforward. However, because personnel work with tools and close to equipment, their bodies can be partially obstructed and their postures may change frequently, which can lead to the loss of crucial features and reduce identification accuracy. To tackle this challenge, we developed a pedestrian Re-ID model integrating whole-body and body part features. The model was designed on the basis of the open-source code of the bag of tricks and a strong Re-ID baseline (RSB) [26, 27]. The network structure is illustrated in Figure 6.

The workflow is outlined as follows: Initially, the pedestrian image undergoes spatial transformation via the STN for feature alignment. Subsequently, it enters the ResNet50_IBN backbone network, pretrained on the ImageNet dataset, for feature extraction. Within the backbone network, the last pooling layer and the fully connected layer are removed. To generate larger and more comprehensive feature maps, the convolution stride in the fourth stage of the network is set to 1 [28].

The holistic branch then channels the processed feature map into the multireceptive field (MRF) module, followed by generalized-mean (GeM) pooling [29]. Meanwhile, the parts branch utilizes the PHB recognition module to discern key body part features in the image. Upon obtaining the component feature map, fusion with the overall feature map occurs via the attention network, resulting in the final image feature.

Subsequently, the image features traverse the batch normalization neck (BNNeck) module, and the network undergoes supervised training employing the classification loss with label smoothing.
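For reference, the classification loss with label smoothing can be written out in a few lines. The sketch below follows the common formulation in which the true class receives probability 1 − ε + ε/N and every other class receives ε/N; the value ε = 0.1 is an assumption, since the smoothing factor is not stated here, and the function name is illustrative.

```python
import math

def label_smoothing_ce(logits, target, epsilon=0.1):
    """Cross-entropy between softmax(logits) and a smoothed one-hot target."""
    n = len(logits)
    # Numerically stable log-softmax.
    peak = max(logits)
    log_norm = peak + math.log(sum(math.exp(z - peak) for z in logits))
    log_probs = [z - log_norm for z in logits]
    loss = 0.0
    for i, log_p in enumerate(log_probs):
        # Smoothed target: epsilon / n everywhere, plus 1 - epsilon on the true class.
        q = epsilon / n + (1.0 - epsilon if i == target else 0.0)
        loss -= q * log_p
    return loss

plain = label_smoothing_ce([5.0, 0.0, 0.0], target=0, epsilon=0.0)
smoothed = label_smoothing_ce([5.0, 0.0, 0.0], target=0, epsilon=0.1)
print(plain, smoothed)
```

With ε = 0 the function reduces to ordinary cross-entropy. With ε > 0 a very confident prediction is penalized slightly, which discourages overfitting to the training identities.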

3.3.1. Holistic Branch

The holistic branch extracts the overall features of pedestrian images, using multireceptive field (MRF) fusion to capture global feature information of pedestrians. Fine-grained features are subsequently obtained through GeM pooling.
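GeM pooling generalizes average and max pooling through a single exponent p. A minimal sketch for one channel is given below, assuming the activations of that channel have been flattened into a list; p = 3 is a commonly used default and is an assumption here, as is the function name.

```python
def gem_pool(values, p=3.0, eps=1e-6):
    """Generalized-mean pooling of one channel's activations."""
    # Clamp to a small positive value so the power is well defined.
    clamped = [max(v, eps) for v in values]
    return (sum(v ** p for v in clamped) / len(clamped)) ** (1.0 / p)

activations = [1.0, 2.0, 3.0]
as_mean = gem_pool(activations, p=1.0)       # equals average pooling
default = gem_pool(activations, p=3.0)       # between the mean and the max
near_max = gem_pool(activations, p=50.0)     # approaches max pooling
print(as_mean, default, near_max)
```

Larger values of p give more weight to the strongest responses, which is why GeM tends to retain finer detail than plain average pooling.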

The MRF fusion module comprises three branches, each applying a 3 × 3 dilated (atrous) convolution to the input feature map. The dilation rates are set to 1, 2, and 3, respectively, enlarging the receptive field without adding to the parameter count. Furthermore, a channel attention module calculates the attention weight coefficient for each branch and applies it to the corresponding feature map. Subsequently, the channel-weighted features are combined to yield the final comprehensive output features.
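The effect of the three rates can be made concrete with the standard relation between kernel size, rate, and effective extent. The short sketch below uses illustrative function names and an assumed channel count of 256 purely for the parameter comparison.

```python
def effective_kernel(kernel, rate):
    """Spatial extent covered by a kernel whose taps are spaced `rate` apart."""
    return kernel + (kernel - 1) * (rate - 1)

def conv_weight_count(kernel, in_channels, out_channels):
    """Number of weights in a 2D convolution (bias omitted); independent of the rate."""
    return kernel * kernel * in_channels * out_channels

extents = [effective_kernel(3, rate) for rate in (1, 2, 3)]
weights = conv_weight_count(3, 256, 256)  # 256 channels is an assumed example value
print(extents, weights)
```

The three branches therefore cover 3 × 3, 5 × 5, and 7 × 7 neighborhoods while each keeps the weight count of an ordinary 3 × 3 convolution.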

3.3.2. Parts Branch

The parts branches are employed to discern the characteristics of pedestrian body parts within images. Currently, most local feature segmentation methods utilize uniform segmentation, which retains background information. Moreover, pedestrian features may lack distinctiveness due to attire such as overalls, increasing the likelihood of misidentification. Therefore, enhancing the recognition of local distinguishing information is crucial. This study adopts a component recognition approach to extract local data from pedestrian images. The methodology involves using a trained human body parts recognition network to identify body parts in pedestrian pictures and extract head and shoulder, body, arm, and leg details of pedestrian features. This ensures that each sub-block contains comprehensive component information, effectively minimizing background information and enabling the network to focus on pedestrian-specific local variations. This strategy not only mitigates identification challenges posed by pedestrian or equipment obstruction but also enhances feature learning effectiveness.
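The idea of favoring visible parts can be illustrated with a simple weighted distance. This is a simplified stand-in, not the attention-based fusion used in the model: it assumes each part comes with a feature vector and a visibility score in [0, 1], and it weights each part's distance by the lower of the two visibility scores. All names and values are hypothetical.

```python
import math

PARTS = ("head_shoulder", "body", "arm", "leg")

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def part_weighted_distance(query, gallery):
    """Visibility-weighted mean of per-part feature distances.

    query and gallery map a part name to (feature_vector, visibility).
    """
    total, weight_sum = 0.0, 0.0
    for part in PARTS:
        if part not in query or part not in gallery:
            continue
        q_feat, q_vis = query[part]
        g_feat, g_vis = gallery[part]
        weight = min(q_vis, g_vis)  # a part counts only if seen in both images
        total += weight * euclidean(q_feat, g_feat)
        weight_sum += weight
    return total / weight_sum if weight_sum > 0 else float("inf")

registered = {"head_shoulder": ([0.1, 0.9], 1.0), "leg": ([0.4, 0.4], 1.0)}
# Legs hidden behind a guardrail: visibility 0, features unreliable.
observed = {"head_shoulder": ([0.1, 0.8], 1.0), "leg": ([9.0, 9.0], 0.0)}
distance = part_weighted_distance(observed, registered)
print(distance)
```

Because the occluded legs carry zero weight, the unreliable leg features do not affect the result, and the distance is determined by the visible head and shoulder region alone.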

3.3.3. Feature Fusion

The feature maps from the holistic branch and parts branch offer distinct sets of information, and their integration can enhance the overall performance of network models. Commonly employed fusion techniques include sum fusion, max fusion, and concatenation fusion.

In the concatenation fusion equation, Fcat denotes the outcome of concatenation fusion, while d represents the number of channels. The two inputs to the fusion are the values of the holistic feature map and of the component (parts) feature map at the position (i, j), respectively.
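The three fusion operations named above differ only in how the two channel vectors at each position are combined. The sketch below works on the channel vectors at a single position (i, j), assuming both feature maps have the same number of channels d; the function names are illustrative.

```python
def sum_fusion(holistic, parts):
    """Element-wise sum; output keeps d channels."""
    return [h + p for h, p in zip(holistic, parts)]

def max_fusion(holistic, parts):
    """Element-wise maximum; output keeps d channels."""
    return [max(h, p) for h, p in zip(holistic, parts)]

def concat_fusion(holistic, parts):
    """Channel-wise stacking; output has 2d channels."""
    return list(holistic) + list(parts)

holistic_ij = [0.2, 0.7, 0.1]   # d = 3 channels at position (i, j)
parts_ij = [0.5, 0.3, 0.4]
fused_sum = sum_fusion(holistic_ij, parts_ij)
fused_max = max_fusion(holistic_ij, parts_ij)
fused_cat = concat_fusion(holistic_ij, parts_ij)
print(len(fused_sum), len(fused_max), len(fused_cat))
```

Concatenation preserves both inputs unchanged and leaves it to later layers to learn how to weigh them, at the cost of doubling the channel count.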

3.3.4. Loss Function

In the overall loss function, LID represents the classification loss after label smoothing, while LTriplet signifies the triplet loss and LC denotes the center loss. β denotes the weight parameter.
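The symbols defined above match the combined objective used in the RSB baseline on which the model builds. Assuming the same form is used here, which is an assumption because only the symbol definitions are given, with β weighting the center loss, the total loss would read:

$$
L = L_{ID} + L_{Triplet} + \beta L_{C}
$$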

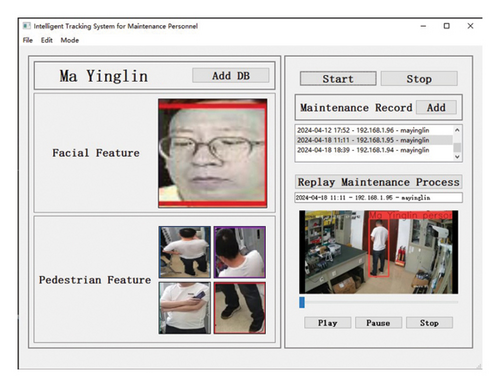

3.4. Personnel Tracking and Process Reproduction

The roof platform is outfitted with several surveillance cameras. Upon detecting a pedestrian, these cameras capture the pedestrian’s image, which is then compared with the stored pedestrian feature data for identification purposes. Subsequently, the pedestrian’s movement track is recorded and stored in the database. This enables the DCC to reproduce the operational process as needed, forming the foundation for our subsequent efforts in identifying abnormal behavior based on movement trajectories. The monitoring interface, designed using PyQt5, is illustrated in Figure 7.

4. Experimental Environment and Results

The experiment in this study comprises three distinct phases. Firstly, we conduct a series of experiments to evaluate the effectiveness of the pedestrian Re-ID model, which integrates both holistic and parts features. This includes ablation experiments and comparative analyses against the existing methodologies.

In the second phase, we conducted a comprehensive evaluation of the entire system. We curated a dataset for facial-enhanced pedestrian Re-ID, leveraging the Market1501 and DukeMTMC-reID datasets. This enabled us to conduct identification experiments and assess the effectiveness of our technology in this aspect.

Subsequently, we proceeded with field experiments where the system’s hardware and software were deployed within the control area. Through this approach, we conducted exhaustive end-to-end testing to validate the overall functionality and performance of the system.

4.1. Pedestrian Re-ID Model Performance Experiment

4.1.1. Experimental Environment

The experiments in this study use the Market1501 and DukeMTMC-ReID datasets. The Market1501 dataset encompasses 32,668 images, featuring 1501 pedestrians, captured across six cameras. The DukeMTMC-ReID dataset includes a total of 36,411 images, featuring 1812 pedestrians, obtained from eight cameras [32, 33].

The evaluation metrics employed in this study are mean average precision (mAP) and Rank-n. mAP is computed by averaging the average precision (AP) over all query images, providing an overview of the accuracy of the retrieval outcomes. Rank-n is the percentage of query images for which a correct match appears within the top n results retrieved from the pedestrian image database.
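Both metrics can be computed from the ranked retrieval results alone. The following is a minimal sketch, assuming each query has already been reduced to a list of booleans marking which ranked gallery images share its identity; the function names and the toy data are illustrative.

```python
def average_precision(ranked_matches):
    """AP for one query: mean of the precision values at each correct match."""
    hits, precision_sum = 0, 0.0
    for rank, is_match in enumerate(ranked_matches, start=1):
        if is_match:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / hits if hits else 0.0

def evaluate(all_ranked_matches, n=1):
    """Return (mAP, Rank-n) over a set of queries."""
    aps = [average_precision(matches) for matches in all_ranked_matches]
    rank_n_hits = sum(any(matches[:n]) for matches in all_ranked_matches)
    return sum(aps) / len(aps), rank_n_hits / len(all_ranked_matches)

# Two toy queries; True marks a gallery image with the same identity.
queries = [
    [True, False, True],   # AP = (1/1 + 2/3) / 2
    [False, True, False],  # AP = 1/2
]
mean_ap, rank1 = evaluate(queries, n=1)
print(mean_ap, rank1)
```

Rank-1 only rewards the top result, whereas mAP also reflects how highly the remaining correct images are ranked, so the two metrics are reported together.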

4.1.2. Experimental Results

We adopt an approach that integrates both the features of body parts and overall pedestrian features to enhance pedestrian Re-ID effectiveness. The experiment validates the necessity of incorporating these two types of feature recognition. Throughout the experiment, we separately conducted training and testing on the holistic pedestrian branch and the parts branch, as well as on the integrated network. The outcomes are presented in Table 1. The data clearly illustrate that the performance of the holistic branch and the parts branch is inferior to that of the fusion network. This observation validates that the holistic branch and the parts branch offer distinct feature expressions, and after fusion, they provide a richer set of information.

| Network architecture | Market1501 mAP (%) | Market1501 Rank-1 (%) | DukeMTMC-ReID mAP (%) | DukeMTMC-ReID Rank-1 (%) |
|---|---|---|---|---|
| Holistic branch | 85.9 | 94.5 | 76.4 | 86.4 |
| Parts branch | 86.1 | 94.9 | 77.5 | 87.8 |
| Holistic + parts | 87.6 | 95.7 | 79.3 | 89.4 |

In evaluating the model’s performance, we undertook a thorough comparative analysis with several state-of-the-art network models commonly utilized in contemporary research. Specifically, our evaluation encompassed the following models: the PCB network, which utilizes pedestrian segmentation for local feature extraction; the SVDNet, integrating singular value decomposition within the fully connected layers; the mixed high-order attention network (MHN); the RSB model, serving as a robust benchmark for person Re-ID tasks; and a range of transformer-based models, including ViT, Swin Transformer, and various hybrid CNN-transformer approaches. A detailed summary of the comparative results is presented in Table 2.

| Network models | Market1501 mAP (%) | Market1501 Rank-1 (%) | DukeMTMC-ReID mAP (%) | DukeMTMC-ReID Rank-1 (%) |
|---|---|---|---|---|
| PCB [9] | 81.6 | 93.8 | 69.2 | 83.3 |
| SVDNet [34] | 62.1 | 82.3 | 56.8 | 76.7 |
| RSB [7] | 85.9 | 94.5 | 76.4 | 86.4 |
| MHN [35] | 85.0 | 95.1 | 77.2 | 89.1 |
| ViT-transformer [36] | 83.3 | 93.5 | 73.3 | 85.7 |
| Swin-Transformer [37] | 83.7 | 93.7 | 75.9 | 85.5 |
| CNN-transformer | 85.3 | 94.7 | 76.5 | 86.5 |
| CNN-transformer (RK) [38] | 93.5 | 95.6 | 88.8 | 90.4 |
| Ours | 87.6 | 95.7 | 79.3 | 89.4 |

The results presented in Table 2 show that our network model achieves an mAP of 87.6% and a Rank-1 of 95.7% on the Market1501 dataset. Compared with the RSB, our model gains 1.7 percentage points in mAP; compared with the MHN, it gains 0.6 percentage points in Rank-1. Similarly, on the DukeMTMC-reID dataset, our model achieves an mAP of 79.3% and a Rank-1 of 89.4%, which is 2.1 percentage points higher in mAP and 0.3 percentage points higher in Rank-1 than the MHN. It is noteworthy that Table 2 also reports the performance of the CNN-transformer model combined with a reranking (RK) strategy, which surpasses that of our proposed method in mAP on both datasets and in Rank-1 on DukeMTMC-reID. However, it should be emphasized that this improvement is primarily attributable to the reranking process, rather than to the CNN-transformer architecture itself [39]. These findings, therefore, substantiate the effectiveness of the proposed approach in enhancing person Re-ID accuracy.

Simultaneously, it can be observed that the metrics of our proposed method are very close to those of MHN. This similarity may be attributed to the fact that MHN is essentially an enhanced self-attention structure, which, by considering higher-order relational patterns, improves the model’s comprehension of both local and global features, akin to our proposed method. However, the implementation of MHN’s higher-order attention mechanism is relatively complex, requiring more computational resources and time, making it challenging to directly apply to real-time tracking systems.

4.2. Pedestrian Identification Performance Experiment

Given the considerable influence of variables such as pedestrian attire and hairstyle on identification accuracy, pedestrian Re-ID technology often falls short of the precision achieved by facial identification. Meanwhile, face images may not always be accessible in various scenarios. To address this challenge, our approach takes an indirect route: we impose constraints on spatial and temporal factors to minimize variations in pedestrian appearance. On this basis, we leverage face identification as an intermediary to link pedestrian appearance information with identity.

4.2.1. Experimental Environment and Methodology

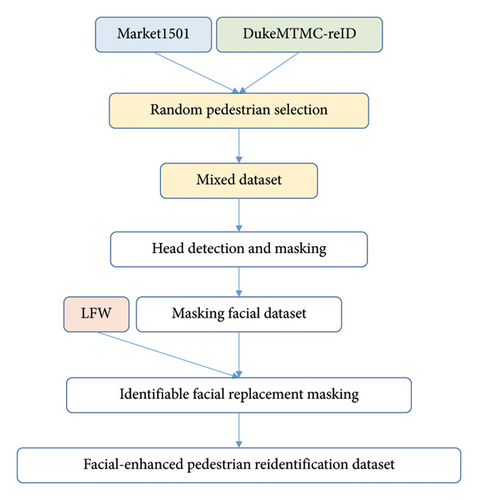

The accuracy of this conversion hinges on the effectiveness of the face detection, identification, and pedestrian Re-ID models employed in our study. Notably, direct facial recognition proves challenging due to the presence of blurred images within datasets such as Market1501 and DukeMTMC-reID. Conversely, datasets, such as LFW, tailored for face identification, lack essential pedestrian appearance features. To bridge this gap, we adopted a hybrid approach, as illustrated in Figure 8. We initially selected 1400 individuals from the Market1501 dataset and 1600 from DukeMTMC-reID, totaling 28,452 images. Following the screening process, which identified 27,368 recognizable pedestrian images, we applied a head detection algorithm to pinpoint pedestrian heads in the images and simulate mask effects. Subsequently, we randomly sampled facial images of 3000 individuals from the LFW dataset, adjusting their sizes to match those of the obstructed pedestrian heads, and integrated them into the encoded facial dataset. This process culminated in the creation of the facial-enhanced pedestrian Re-ID dataset, leveraging the facial features and corresponding names of 5749 pedestrians from the LFW dataset as the cornerstone of our facial feature database.

The simulation verification proceeds as follows. Individuals are drawn one at a time from the verification dataset; the software detects each person's face and looks it up in the facial signature database. Once the identity (name) is confirmed, the software extracts n images at regular intervals from that individual's image sequence and stores them in the pedestrian feature database. After the pedestrian features of all 3000 individuals have been stored, the remaining unsampled pedestrian images are compared against the feature database to determine their identities, and metrics such as accuracy are calculated.
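The sampling and comparison steps of this protocol can be sketched as follows. This is a minimal illustration rather than the software used in the study: the function names, the toy two-dimensional features, and the choice of cosine similarity as the comparison measure are all assumptions made for the example.

```python
import math

def sample_gallery_indices(num_images, n):
    """Return up to n indices spread at regular intervals over range(num_images)."""
    n = min(n, num_images)
    return [(k * num_images) // n for k in range(n)]

def cosine(a, b):
    """Cosine similarity between two feature vectors (assumed comparison measure)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def build_gallery(tracks, n):
    """Split each person's features into sampled gallery entries and unsampled probes.

    tracks maps a confirmed name to that person's ordered list of feature vectors.
    """
    gallery, probes = [], []
    for name, feats in tracks.items():
        chosen = set(sample_gallery_indices(len(feats), n))
        for i, feat in enumerate(feats):
            (gallery if i in chosen else probes).append((name, feat))
    return gallery, probes

def identify(feature, gallery):
    """Return (name, score) of the gallery entry most similar to the feature."""
    return max(((name, cosine(feature, g)) for name, g in gallery), key=lambda t: t[1])

# Toy data: hypothetical 2-D features for two people, four images each.
tracks = {
    "alice": [[1.0, 0.1], [0.9, 0.2], [1.0, 0.0], [0.8, 0.1]],
    "bob": [[0.1, 1.0], [0.2, 0.9], [0.0, 1.0], [0.1, 0.8]],
}
gallery, probes = build_gallery(tracks, n=2)
correct = sum(identify(feat, gallery)[0] == name for name, feat in probes)
print(f"{correct}/{len(probes)} unsampled images identified correctly")
```

Each probe is compared against every stored feature, so the number of comparisons grows with both n and the number of enrolled individuals.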

4.2.2. Experimental Results

In light of the dearth of similar research, our primary objective centers on comparing the identification performance across varying numbers of feature images, covering metrics such as TAR, FRR, FAR, and speed, as outlined in Table 3.

| n | TAR (%) | FRR (%) | FAR (%) | Speed (s) |
|---|---|---|---|---|
| 1 | 78.1 | 21.9 | 32.4 | 3.2 |
| 2 | 82.6 | 17.4 | 21.3 | 5.8 |
| 4 | 84.4 | 15.6 | 20.6 | 11.1 |

Table 3 shows that the TAR peaks at 84.4% when four images are extracted per person, at the cost of longer processing time: each query must be compared against more stored image features, so the duration grows with the number of images. Because the simulation compares the original face images from the face feature database directly, it effectively assumes a face identification accuracy of 100%, and the system's performance therefore depends mainly on the pedestrian Re-ID model. Notably, the InsightFace open-source model achieved an accuracy of 99.18% on the LFW dataset [40], substantially surpassing the accuracy of the pedestrian Re-ID model. The assumption made in the simulation experiment therefore aligns closely with real-world conditions.
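For readers who want to reproduce the bookkeeping behind Table 3, the sketch below assumes the usual biometric definitions: TAR is the share of genuine queries accepted, FRR is its complement, and FAR is the share of impostor queries accepted. Whether the study computed FAR in exactly this way is an assumption, and the counts passed to `rates` are hypothetical. The table values themselves are copied from Table 3.

```python
# Rows of Table 3: n -> (TAR %, FRR %, FAR %, processing time in seconds)
TABLE_3 = {
    1: (78.1, 21.9, 32.4, 3.2),
    2: (82.6, 17.4, 21.3, 5.8),
    4: (84.4, 15.6, 20.6, 11.1),
}

def rates(genuine_accepted, genuine_total, impostor_accepted, impostor_total):
    """Return (TAR, FRR, FAR) in percent under the assumed definitions."""
    tar = 100.0 * genuine_accepted / genuine_total
    far = 100.0 * impostor_accepted / impostor_total
    return round(tar, 1), round(100.0 - tar, 1), round(far, 1)

def seconds_per_image(table):
    """Processing time divided by the number of feature images per person."""
    return {n: row[3] / n for n, row in table.items()}

# In every row of Table 3, TAR and FRR sum to 100%.
for n, (tar, frr, _far, _time) in TABLE_3.items():
    assert abs(tar + frr - 100.0) < 1e-9

# Hypothetical counts, chosen only to illustrate the formulas.
print(rates(844, 1000, 206, 1000))

for n, t in seconds_per_image(TABLE_3).items():
    print(f"n={n}: {t:.2f} s per feature image")
```

The per-image time stays close to 3 s for all three rows, which is consistent with the explanation that processing time grows roughly in proportion to the number of stored images per person.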

4.3. Field Experiment

We conducted field experiments on a simulated roof platform with a single access control channel. At the channel entrance, we deployed a group of A-type cameras and a set of A-minus–type camera units. The platform is approximately 30 m long and about 6 m across at its widest point. To cover the equipment layout on the platform, we arranged three groups of B-type camera units. In addition, a dedicated server and the intelligent tracking and monitoring software were set up at the console. For the face signature database, we used facial information from the access control system of the experimental hall.

Our field experiment spanned 30 days, during which a total of 109 individuals accessed the experimental platform. Among them, 72 people were successfully captured and identified by the A-type camera, while the remaining 37 individuals also had their faces captured. However, five of them could not be identified due to blurred faces, and the other 32 were temporary construction workers whose facial information was not available in the signature database. To address this, we devised a temporary personnel database, associating their facial information with pedestrian characteristics and assigning temporary numbers in place of real names.
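One way to picture the temporary personnel database is as a fallback lookup: a face that matches no entry in the signature database is checked against previously issued temporary numbers and, failing that, receives a new one. The sketch below is an illustration under stated assumptions, not the system's implementation: the class name, the `TEMP-001` label format, the toy embeddings, the cosine comparison, and the 0.8 threshold are all hypothetical.

```python
import math
from itertools import count

def cosine(a, b):
    """Cosine similarity between two face embeddings (assumed comparison measure)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

class IdentityResolver:
    """Resolve a face embedding to a real name or a temporary number."""

    def __init__(self, signature_db, threshold=0.8):
        self.signature_db = dict(signature_db)  # name -> face embedding
        self.temp_db = {}                       # temporary number -> face embedding
        self.threshold = threshold              # hypothetical match threshold
        self._counter = count(1)

    def _best_match(self, face, db):
        """Return the best-matching label in db, or None if below the threshold."""
        if not db:
            return None
        label, score = max(((k, cosine(face, v)) for k, v in db.items()),
                           key=lambda t: t[1])
        return label if score >= self.threshold else None

    def resolve(self, face):
        """Try registered staff first, then known temporary workers, else enroll."""
        label = self._best_match(face, self.signature_db)
        if label is None:
            label = self._best_match(face, self.temp_db)
        if label is None:
            label = f"TEMP-{next(self._counter):03d}"
            self.temp_db[label] = face
        return label

resolver = IdentityResolver({"alice": [1.0, 0.0]})
print(resolver.resolve([0.99, 0.05]))  # registered staff member
print(resolver.resolve([0.0, 1.0]))    # unknown face, new temporary number
print(resolver.resolve([0.05, 0.99]))  # same unknown person seen again
```

The returned label, whether a real name or a temporary number, is what the pedestrian features would then be stored under. Faces too blurred to produce a usable embedding, such as the five cases reported above, are outside the scope of this sketch.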

Meanwhile, the B-type camera units recorded 208 video segments of pedestrian activity. Treating the temporary numbers as names, 187 of these segments were correctly identified, an accuracy of 89.9%. A further 14 segments were misidentified (6.7%), and seven could not be recognized (3.4%). The unrecognized segments exhibited significant feature occlusion, whereas most of the misidentified images showed no obvious occlusion but were dominated by indistinct body parts with similar characteristics.
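The reported shares follow directly from the segment counts, and the entry figures from the previous paragraph can be checked the same way. The short script below only reproduces that arithmetic; the function and variable names are chosen for the example.

```python
def shares(counts):
    """Convert outcome counts into percentages of the total, rounded to 0.1."""
    total = sum(counts.values())
    return {outcome: round(100.0 * c / total, 1) for outcome, c in counts.items()}

# Video segments recorded by the B-type cameras (208 in total).
segments = {"correct": 187, "misidentified": 14, "unrecognized": 7}
assert sum(segments.values()) == 208

# People entering the platform (109 in total).
entries = {"identified": 72, "blurred_face": 5, "temporary_worker": 32}
assert sum(entries.values()) == 109

for outcome, pct in shares(segments).items():
    print(f"{outcome}: {segments[outcome]}/208 = {pct}%")
```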

5. Conclusion

To strengthen train maintenance personnel safety management, we devised an intelligent tracking system based on machine vision technology. This system enables continuous intelligent monitoring and control throughout operations, assisting DCC in overseeing maintenance activities while significantly reducing their workload and elevating safety management standards.

To identify the train maintenance personnel accessing the platform, we integrated face identification and pedestrian Re-ID technologies. By deploying specialized surveillance cameras and employing a feature fusion approach combining body part and overall features, the identification performance for obscured pedestrians was enhanced. Our experimental findings validate the effectiveness and practical utility of this technological solution.

However, our experiments also revealed certain limitations. First, the pedestrian feature comparison process proved time-consuming: within a database of 3000 individuals, identification took approximately 11.1 s per instance when four feature images were stored per person. Consequently, its applicability may be limited to settings with fewer registered personnel. Second, the accuracy fell short of expectations, with a peak TAR of 84.4% in simulation and 89.9% of video segments correctly identified in the field. Third, the current methodology requires a substantial number of surveillance cameras, which presents considerable challenges for cost management. We are therefore exploring the use of fewer cameras as an optimization.

Conflicts of Interest

The authors declare no conflicts of interest.

Funding

This work was supported by the National Key R&D Program of China under grant number: 2022YFB2603200.

Acknowledgments

The authors would like to thank the research team for their invaluable assistance and advice.

Open Research

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.