Pump Scheduling Optimization in Urban Water Supply Stations: A Physics-Informed Multiagent Deep Reinforcement Learning Approach

Abstract

In urban water supply systems, a significant proportion of energy consumption is attributed to the water supply pumping station (WSPS). The conventional manual scheduling method employed by water supply enterprises imposes a considerable economic burden. In this paper, we aim to minimize the energy cost of the WSPS by dynamically adjusting the combination of pumps and their operational states while considering the pressure difference of the main pipe and the switching times of the pump group. Achieving this goal is challenging due to the lack of accurate mechanistic models of pumps, uncertainty in environmental parameters, and temporal coupling constraints in the database. Consequently, a WSPS pump scheduling algorithm based on a physics-informed long short-term memory (PI-LSTM) surrogate model and multiagent deep deterministic policy gradient (MADDPG) is proposed. The proposed algorithm operates without prior knowledge of an accurate mechanistic model of the pump units. Combining data-driven learning with the physical laws of fluid mechanics improves the prediction accuracy of the model compared to traditional data-based deep learning models, especially when the amount of data is small. Simulation results based on real-world trajectories show that the proposed algorithm can reduce energy consumption by 13.38% compared with the original scheduling scheme. This study highlights the potential of integrating physics-informed deep learning and reinforcement learning to optimize energy consumption in urban water supply systems.

1. Introduction

Water purification plants are an important part of the city’s infrastructure system. The normal operation of the pumping station is crucial for maintaining the proper functioning of the water treatment facilities in the water purification plant and ensuring a reliable water supply to the town. Water purification plants have a large energy consumption, of which water supply pumping stations (WSPSs) account for a large part [1]. The manual scheduling method lacks scientific theoretical guidance as well as long-term planning, and the irrational method brings unnecessary economic burden to the water plant. Therefore, it is very necessary to improve energy efficiency and reduce energy consumption [2]. While ensuring that the pressure difference of the main pipe and switching times of the pump group are within a safe range, minimizing energy consumption can improve the working efficiency and save the operating cost of the waterwork, which is of great significance to saving urban energy consumption and reducing carbon dioxide emissions [3].

Surrogate models are pivotal for alleviating the computational burden associated with multiquery tasks and are crucial for ensuring the efficient and reliable operation of water supply systems. Surrogate modeling for the pumping stations can be categorized into two principal methodologies: physical modeling and data-driven approaches. Physical modeling involves developing detailed simulations of pump groups or piping networks in water treatment facilities, refined via calibration and parameter identification from operational data to predict operational parameters [4, 5]. However, crafting accurate and controllable physical models for waterwork is particularly challenging. It requires the integration of various complex factors, including the dynamic characteristics of pump units, intricate pipe network layouts, nonlinear fluid mechanics, pump performance curves, mechanical properties, and energy losses. Additionally, the state of maintenance, aging, and consequent changes in the performance of pumps and pipes present significant challenges to the long-term accuracy of these models. The efficacy of this approach is heavily dependent on the specific waterwork environment, which limits its generalizability when applied to diverse waterwork contexts [6]. In contrast, data-driven surrogate models, such as support vector machines [7], radial basis functions [8], and long short-term memory (LSTM) [9], operate akin to black boxes. These models do not require a priori knowledge or insight into the underlying system during the learning process, which facilitates ease of use, particularly for complex systems that are not fully understood. LSTM networks can retain a memory of periodic features in time series data by incorporating gate nodes, leading to more accurate predictions [10]. Their function approximation capabilities exceed those of traditional neural network architectures, which has contributed to their growing popularity in recent years [11]. 
However, they largely depend on the assumption that sufficient data are available to train deep learning models [12]. Black box models can encounter difficulties when the available dataset lacks coverage for certain process variables, especially those that operate at infrequent points. Collecting extensive datasets is often time-consuming, costly, and challenging, and purely data-driven models may not always conform to physical constraints. In comparison to purely data-based deep learning models, physics-informed deep learning integrates data-driven approaches with physical laws, significantly enhancing the predictive accuracy, generalizability, and physical interpretability of the models. By incorporating system dynamics into its loss function, it can accurately and a priori identify nonlinear systems even with smaller datasets and limited computational power. Recently, physics-informed deep learning models have been applied to chaotic systems [13], nonlinear structures [14, 15], structural responses [16], and wind turbine responses [17]. The successful implementation in these areas has inspired application in fluid mechanics where the flow phenomena can be described by the Navier–Stokes equations [18]. However, their application to waterwork pump scheduling remains unexplored.

In recent years, several approaches have been used to solve the problem of scheduling and operational optimization of pump groups in waterworks. These methods include both rule-based algorithms and optimization techniques, such as genetic optimization algorithms [19], dynamic programming [20, 21], particle swarm optimization [22], metaheuristics [23], and more advanced methods such as reinforcement learning [24, 25] and deep reinforcement learning (DRL) [26, 27]. Rule-based algorithms are straightforward and easy to interpret but often lack the flexibility to adapt to new or changing environments. Optimization techniques such as dynamic programming require an exact mathematical formulation of the problem and are usually unsuitable for dealing with the stochastic nature of real-world systems. Genetic algorithms and particle swarm optimization introduce stochastic search methods that can explore a wider solution space but may converge to suboptimal solutions and require extensive tuning of hyperparameters. DRL has made significant progress in solving complex scheduling and optimization problems. Deep deterministic policy gradient (DDPG) integrates policy gradient and Q-learning techniques to effectively optimize policies in high-dimensional continuous action spaces [28]. Additionally, soft actor-critic (SAC) incorporates an entropy regularization term to balance exploration and exploitation during policy optimization, thereby enhancing policy robustness and convergence speed [29]. In [30], a dueling deep Q-network is proposed to train an agent that controls the pump speed based on instantaneous nodal pressures. In [31], knowledge-assisted proximal policy optimization is performed using historical nodal pressure data from waterworks to generate optimal parameter trajectories, accommodating pumping station topology and adapting to time-varying water demand. 
The above methods use a single agent model to control the pumps, and energy-saving studies for WSPSs have not been carried out. Considering the energy-saving scheduling of fixed-frequency pumps and variable-frequency pumps together, directly using a single agent to control the pump group will lead to a sharp increase in the action space of the agent, resulting in low learning efficiency. While ensuring the safety of water pumps and meeting water supply requirements, it is difficult to effectively save energy consumption. In fact, the water supply pump group of the waterwork contains multiple pumps with different parameters, and the waterwork scheduling requires coordinated control between multiple pumps.

Based on existing research, this paper investigates a new method to minimize the energy consumption of pump group in WSPS. The method considers minimizing the switching times of pump group in a day and reducing the pressure difference of the main pipe in adjacent time periods. The objective is to optimize the scheduling of pump group in WSPSs, controlling the combination of pumps and frequency of variable-frequency pump to minimize energy costs while ensuring that the pressure difference of the main pipe and switching times of pump group remain within safe limits. However, there are several challenges to achieving these goals. Firstly, the dynamic modeling of the system is not sufficiently well defined. Secondly, there are spatiotemporal coupling constraints in the database. Finally, uncertain parameters such as the dynamic environment further complicate the problem. Given these challenges, existing model-based or model-free approaches are not suitable for solving our specific problem. To overcome these obstacles, a Markov game formulation is employed to address the challenge of optimal multipump scheduling in WSPS, utilizing a multiagent system for cooperative management of several pumps to enhance energy efficiency, all the while adhering to constraints regarding the pressure difference of the main pipe and switching times of pump group. Then, a PI-LSTM-based surrogate model is developed to incorporate the laws of fluid mechanics into a LSTM network, which accurately predicts the pressure of the main pipe and energy consumption of the pump group. It is used to provide a simulation environment for agent training, eliminating the need to know the pumps’ precise mechanistic model and specific water demand. Furthermore, a water supply pump group control algorithm based on the surrogate model and multiagent deep reinforcement learning (MADRL) [32] is proposed. 
Each pump agent learns from the dynamic environment and its own experience while taking into account the presence and potential actions of other pump agents, which facilitates flexible and computable coordination among different agents, thus achieving efficient management of the water supply system. Finally, extensive simulations based on real-world trajectories are performed to evaluate the performance of the proposed algorithm. The simulation results demonstrate the effectiveness and robustness of our approach.

2. Methods

2.1. System Model and Problem Formulation

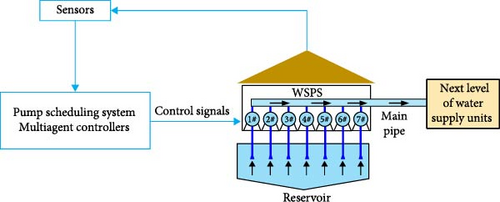

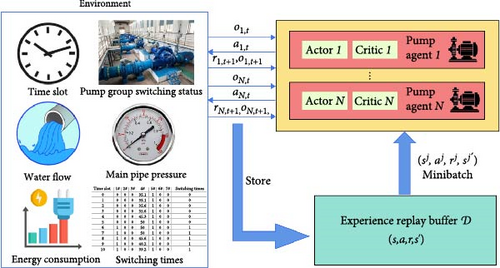

The system model of a waterwork is shown in Figure 1. The WSPS within the waterworks is controlled by a pump scheduling system with multiple fixed-frequency pumps and variable-frequency pumps. We assume that there are N pumps, including M fixed-frequency pumps and N − M variable-frequency pumps. The objective of this paper is to minimize the energy consumption of the WSPS by dynamically adjusting the combination of pumps and their operational states. The scheduling period is assumed to be 1 hr in this paper. To describe the scheduling time more clearly, a day is divided into 24 time slots, i.e., 0 ≤ t ≤ T − 1, where T = 24.

2.1.1. Target Constraint

To ensure the production safety and efficient operation of the waterwork, this paper introduces three key constraints: an upper limit on the pressure difference of the main pipe, an upper limit on the switching times of pump group per day, and a range of operating frequencies for the variable-frequency pumps.
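The three constraints above can be sketched as a simple feasibility check. This is a minimal illustration, not the authors' implementation: the names `SafetyLimits` and `check_constraints` are hypothetical, the numeric limits are the simulation values reported in Section 3.2, and the assumption that the frequency range is checked only for the frequencies passed in is ours.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SafetyLimits:
    # Values taken from the simulation settings in Section 3.2.
    p_max: float = 0.08   # MPa, max main-pipe pressure difference between adjacent slots
    v_max: int = 4        # max switching times of the pump group per day
    f_min: float = 35.0   # Hz, lower bound for variable-frequency pumps
    f_max: float = 50.0   # Hz, upper bound for variable-frequency pumps


def check_constraints(p_prev, p_curr, switches_today, vf_freqs, lim=SafetyLimits()):
    """Return which of the three safety constraints hold (hypothetical helper)."""
    return {
        "pressure_ok": abs(p_curr - p_prev) <= lim.p_max,
        "switching_ok": switches_today <= lim.v_max,
        "frequency_ok": all(lim.f_min <= f <= lim.f_max for f in vf_freqs),
    }
```

For example, `check_constraints(0.30, 0.35, 3, [40.0, 50.0])` reports all three constraints as satisfied.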

2.1.2. Energy Minimization Problem

However, solving the problem directly has significant challenges. Firstly, high dimensionality and complexity are a major obstacle. Since the optimization problem considers multiple pumps, each of which may have different operating states at different time slots, the state space and decision space are unusually large. This not only increases the computational burden but also makes it more difficult to find the global optimal solution. Then, the uncertainty of the dynamic environment further increases the solution difficulty. Key environmental parameters may change over time, and these changes are often difficult to predict accurately, which means that the solution needs to be able to adapt to possible changes to maintain its effectiveness and robustness.

Given these challenges, transforming the problem into a Markov game provides an efficient solution path. In the Markov game, the pump system is considered as a multiagent system, where each pump is an agent that interacts with each other in a given environment with the common goal of minimizing the overall energy consumption.

2.1.3. Problem Reformulation

A Markov game is a multiagent extension of the Markov decision process. Specifically, a Markov game is defined by a set of states, actions, state transition functions, and reward functions. In the Markov game, each agent maximizes its expected return (i.e., the expected value of the cumulative discounted reward) based on its current state and its choice of action. In this study, each agent i is designated as a pump controller, with the environment encompassing everything that interacts with the agents. The objective of each agent is to maximize the cumulative discounted reward received in the future from state $s_t$ and action $a_t$. The state, action, and reward function of the Markov game are designed as follows.

(1) State. The energy consumption of the WSPS is related to $p_t$, $q_t$, $v_t$, $k_{i,t}$, and $f_{i,t}$, all of which depend on the time slot t. The local observed state of agent i is denoted by $o_{i,t}$. Because each agent has access only to local observations of the state, the state of each fixed-frequency pump agent is designed as $s_{i,t} = o_{i,t} = (p_t, q_t, v_t, k_{i,t})$, $1 \le i \le M$, while the state of each variable-frequency pump agent is designed as $s_{i,t} = o_{i,t} = (p_t, q_t, v_t, k_{i,t}, f_{i,t})$, $M < i \le N$, where $p_t$ is the pressure of the main pipe at time slot t, $q_t$ is the water supply flow at time slot t, $v_t$ is the number of switching times at time slot t, $k_{i,t}$ ($1 \le i \le N$) is the on/off status of pump i at time slot t, and $f_{i,t}$ ($M < i \le N$) is the operating frequency of the ith variable-frequency pump at time slot t.

(2) Action. The joint action at time slot t is denoted by $a_t = (a_{1,t}, \ldots, a_{M,t}, a_{M+1,t}, \ldots, a_{N,t})$. For fixed-frequency pump agents, $a_{i,t} = m_{i,t}$, $1 \le i \le M$, and for variable-frequency pump agents, $a_{i,t} = \{m_{i,t}, \Delta f_{i,t}\}$, $M < i \le N$, where $m_{i,t} \in \{0, 1\}$, $1 \le i \le N$, denotes the switching action of each agent at time slot t: $m_{i,t} = 0$ denotes that the ith pump is switched off at time slot t, $m_{i,t} = 1$ denotes that the ith pump is switched on at time slot t, and $\Delta f_{i,t}$ denotes the frequency change of the ith variable-frequency pump at time slot t.
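The state and action layouts above can be illustrated with a short sketch. The helper names `build_observation` and `joint_action` are hypothetical, and the ordering of the vector entries simply follows the tuples in the text; the paper does not specify any normalization, so none is applied here.

```python
import numpy as np


def build_observation(p_t, q_t, v_t, k_it, f_it=None):
    """Local observation o_{i,t}: 4 entries for a fixed-frequency pump,
    5 entries (with operating frequency f_{i,t}) for a variable-frequency pump."""
    obs = [p_t, q_t, v_t, float(k_it)]
    if f_it is not None:
        obs.append(f_it)
    return np.asarray(obs, dtype=np.float32)


def joint_action(switches, delta_fs, n_fixed):
    """Assemble a_t from per-pump switching actions m_{i,t} in {0, 1} and the
    frequency changes of the variable-frequency pumps (pumps n_fixed..N-1)."""
    actions = []
    for i, m in enumerate(switches):
        if i < n_fixed:
            actions.append((m,))                          # fixed-frequency: on/off only
        else:
            actions.append((m, delta_fs[i - n_fixed]))    # variable: on/off + delta f
    return actions
```

With two fixed-frequency pumps and one variable-frequency pump, `joint_action([1, 0, 1], [-0.5], n_fixed=2)` yields `[(1,), (0,), (1, -0.5)]`.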

(3) Reward. The reward function is built from four penalty components:

(1) The penalty associated with WSPS energy consumption $\Phi_t$, denoted $C_{1,t}$.

(2) The penalty for violation of the pressure difference of the main pipe, denoted $C_{2,t}$.

(3) The penalty for switching pump i at time slot t, denoted $C_{3,t}$.

(4) The penalty for violating the switching times of pump group per day, denoted $C_{4,t}$.

Here, $\alpha_1$ is the importance factor of the penalty for violating the safety limit of the pressure difference relative to the energy consumption penalty, $\alpha_2$ is the importance factor of the penalty for switching pumps relative to the energy consumption penalty, and $\alpha_3$ is the importance factor of the penalty for violating the safety range of the switching times relative to the energy consumption penalty.

To promote the efficient training of agents, the specific values of these weight coefficients were fine-tuned through experiments and expert opinions to find a suitable balance. In practice, their values should be chosen so that $\alpha_1 C_{2,t}$, $\alpha_2 C_{3,t}$, and $\alpha_3 C_{4,t}$ are comparable to $C_{1,t}$. Therefore, in this study, $\alpha_1 = 0.03$, $\alpha_2 = 0.94$, and $\alpha_3 = 3.76$.
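As a rough illustration of how the weights enter the reward, the sketch below combines the four penalty terms into a single scalar. The exact definitions and scaling of the penalties are not reproduced in this text, so the function takes them as inputs; the negative weighted sum and the name `step_reward` are assumptions rather than the authors' formula.

```python
# Weights reported in the text.
ALPHA_1, ALPHA_2, ALPHA_3 = 0.03, 0.94, 3.76


def step_reward(c1, c2, c3, c4):
    """Hypothetical reward: negative weighted sum of the four penalties.

    c1: energy consumption penalty C_{1,t}
    c2: pressure-difference violation penalty C_{2,t}
    c3: pump switching penalty C_{3,t}
    c4: daily switching-times violation penalty C_{4,t}
    """
    return -(c1 + ALPHA_1 * c2 + ALPHA_2 * c3 + ALPHA_3 * c4)
```

Because the energy term carries unit weight, the three coefficients directly express how strongly each safety-related penalty counts relative to energy consumption.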

2.2. Water Supply Pump Group Control Algorithm

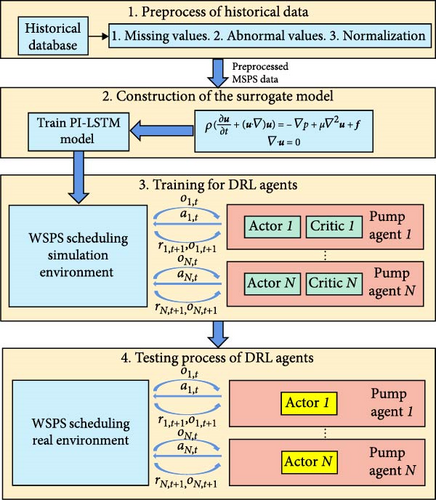

To solve the Markov game in Section 2.1.3, a pump scheduling algorithm for WSPSs based on surrogate model and MADDPG is designed. Employing PI-LSTM as a surrogate model provides a virtual training environment for DRL agents [33]. It obviates the need for direct interaction between multiagent systems and the actual WSPS environment, thereby reducing exploration costs and mitigating associated safety concerns [34]. The framework of the proposed pump scheduling algorithm is shown in Figure 2.

2.2.1. Surrogate Model

Empirical physical models typically cover only common pump combinations [3], whereas multiagent reinforcement learning requires the exploration of novel pump combinations to derive optimization strategies independent of prior experience. To address this need, deep learning-based surrogate models can efficiently simulate the operating state under different pump group configurations and thus quickly provide an accurate simulation environment for training reinforcement learning agents. Accurate prediction of energy consumption and main pipe pressure is essential for reliable and effective WSPS scheduling, so we established a PI-LSTM model, which is well suited to time series data. The model takes the state and action data of the pump group at the beginning of time slot t as input and outputs the energy consumption $\Phi_{t+1}$ and the main pipe pressure $p_{t+1}$ at the end of time slot t.

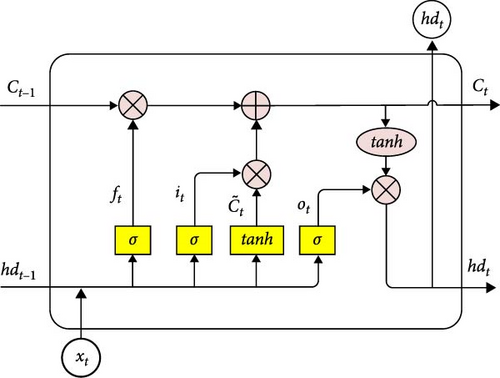

(1) The LSTM cell

An LSTM neural network comprises a memory unit and gating units, and it achieves information preservation and control primarily through three gates: the input gate, the forget gate, and the output gate. The working principle of the network at time t is shown in Figure 3.
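The gate mechanism described above can be written out as a single forward step of a standard LSTM cell. This is a minimal NumPy sketch of the textbook formulation; the stacked weight layout (`W`, `U`, `b` ordered as input gate, forget gate, candidate, output gate) and the function name `lstm_step` are illustrative choices, not details taken from the paper.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One step of a standard LSTM cell.

    W: (4H, D) input weights, U: (4H, H) recurrent weights, b: (4H,) biases,
    stacked in the order [input gate, forget gate, candidate, output gate].
    """
    H = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    i = sigmoid(z[0:H])            # input gate: how much new information to write
    f = sigmoid(z[H:2 * H])        # forget gate: how much old memory to keep
    g = np.tanh(z[2 * H:3 * H])    # candidate memory content
    o = sigmoid(z[3 * H:4 * H])    # output gate: how much memory to expose
    c_t = f * c_prev + i * g       # updated cell (memory) state
    h_t = o * np.tanh(c_t)         # updated hidden state
    return h_t, c_t
```

The cell state `c_t` is the memory that lets the network retain periodic features of the time series, while the gates decide what is written, kept, and exposed at each step.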

(2) Fluid mechanics equations

(i) Navier–Stokes equation

(ii) Continuity equation

The Navier–Stokes and continuity equations are central to modeling fluid flow and pressure in pipes, and integrating them into the LSTM model can enhance its predictive accuracy.
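For reference, the standard incompressible forms of these two equations are given below. Which simplification the model actually uses (for example, a one-dimensional pipe-flow form) cannot be determined from this text, so the general vector form and the symbols $\mathbf{u}$, $\rho$, $\eta$, and $\mathbf{g}$ are assumptions made for illustration.

```latex
\begin{align}
\rho\left(\frac{\partial \mathbf{u}}{\partial t}
  + (\mathbf{u}\cdot\nabla)\,\mathbf{u}\right)
  &= -\nabla p + \eta\,\nabla^{2}\mathbf{u} + \rho\,\mathbf{g}, \\
\nabla\cdot\mathbf{u} &= 0.
\end{align}
```

Here $\mathbf{u}$ is the flow velocity, $p$ is the pressure, $\rho$ is the fluid density, $\eta$ is the dynamic viscosity, and $\mathbf{g}$ is the gravitational acceleration. The first equation expresses momentum conservation and the second expresses mass conservation for an incompressible fluid.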

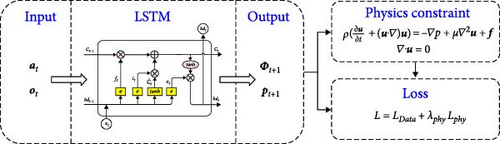

(3) The PI-LSTM Model

The structure of the proposed PI-LSTM model is shown in Figure 4. This model is designed to integrate the core principles of fluid mechanics with the predictive capabilities of machine learning. The construction of the model adheres to empirical data as well as established physical laws, thereby enhancing the reliability and accuracy of its predictions.

The loss function is critical for training neural networks. It measures the deviation between the predicted and actual data and guides the optimization algorithm in adjusting the network weights and biases to minimize the loss [35]. Here it is composed of two main parts: the data loss $L_{data}$, which is the mean square error term, and the physical constraint loss $L_{phy}$, which encodes the system dynamics.

Most neural network models use only the mean square error as their loss function. The additional system dynamics term is the basic difference between physics-informed neural networks and other neural networks [36].
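A two-part loss of this kind can be sketched as follows. The weighting factor `lam`, the function name, and the way the physics residual is supplied are assumptions: the paper's exact residual (derived from the fluid mechanics equations) is not reproduced here, so the sketch simply accepts a precomputed residual array that should be zero when the physical law is satisfied.

```python
import numpy as np


def physics_informed_loss(y_pred, y_true, physics_residual, lam=1.0):
    """Hypothetical PI loss: L = L_data + lam * L_phy.

    y_pred, y_true   : predicted and measured outputs (e.g. energy, pressure)
    physics_residual : residual of the governing equations evaluated on the
                       predictions; zero means the physics is satisfied
    lam              : assumed weight balancing the two terms
    """
    y_pred = np.asarray(y_pred, dtype=float)
    y_true = np.asarray(y_true, dtype=float)
    res = np.asarray(physics_residual, dtype=float)
    l_data = np.mean((y_pred - y_true) ** 2)   # mean square error term
    l_phy = np.mean(res ** 2)                  # penalty for violating the dynamics
    return l_data + lam * l_phy, l_data, l_phy
```

The physics term acts as a regularizer: even where training data are sparse, predictions that violate the governing equations are penalized.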

2.2.2. Training Process of the Proposed Algorithm

To train DRL agents effectively, the MADDPG algorithm is adopted [37]. The algorithm aims to enhance the actor-critic framework and the DDPG algorithm by incorporating a centralized training and decentralized execution paradigm. This approach enables the algorithm to tackle intricate multiagent environments, where traditional single-agent reinforcement learning techniques fall short [38, 39].

The framework of MADDPG algorithm is shown in Figure 5, where agents can be identified and each agent consists of one actor network, one critic network, one target actor network, and one target critic network. The actor network and target actor network have the same network architecture, while the critic network and target critic network have the same network architecture.

In the MADDPG algorithm environment, there are N agents. For the ith agent, the current state is $s_i$, the next state is $s_i'$, and the reward is $r_i$. Each agent has its own action strategy $\mu_i$, policy parameter $\theta_i$, and action $a_i$, with $a_i = \mu_i(s_i)$. For all the agents, we define the state set as $s = (s_1, \ldots, s_N)$, the reward set as $r = (r_1, \ldots, r_N)$, the action set as $a = (a_1, \ldots, a_N)$, the action strategy set as $\mu = (\mu_1, \ldots, \mu_N)$, and the policy parameter set as $\theta = (\theta_1, \ldots, \theta_N)$. The centralized action-value function of each agent is denoted as $Q_i^{\mu}(s, a)$, which combines the states and actions of all agents.

In the decision-making process, all the agents interact with the environment and generate and store data in their own experience replay buffer $\mathcal{D}$. For the ith agent, the tuple $(s_i, a_i, r_i, s_i')$ is stored in the experience replay buffer. When updating the network, groups of data are randomly taken from each agent's experience replay buffer and then spliced to obtain a new experience $(s, a, r, s')$, which is used for training the network.
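The store-and-splice procedure can be sketched with per-agent buffers. This is an illustrative sketch only: the class and function names are hypothetical, and drawing the same time indices from every agent's buffer (so that the spliced experience refers to one joint transition) is our assumption about how the splicing is aligned.

```python
import random
from collections import deque


class ReplayBuffer:
    """Per-agent experience replay buffer holding (s_i, a_i, r_i, s_i') tuples."""

    def __init__(self, capacity):
        self.data = deque(maxlen=capacity)  # oldest experience is evicted first

    def store(self, s, a, r, s_next):
        self.data.append((s, a, r, s_next))

    def __len__(self):
        return len(self.data)


def sample_joint(buffers, batch_size, rng=random):
    """Sample aligned entries from every agent's buffer and splice them into
    joint experiences (s, a, r, s') with one component per agent."""
    n = min(len(b) for b in buffers)
    idx = rng.sample(range(n), batch_size)
    batch = []
    for j in idx:
        rows = [b.data[j] for b in buffers]            # one tuple per agent
        s, a, r, s_next = (tuple(col) for col in zip(*rows))
        batch.append((s, a, r, s_next))
    return batch
```

In the reported setup, the buffer capacity would be 192,000 and the minibatch size 120 (Table 2).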

Algorithm 1 is the pseudocode of MADDPG algorithm for WSPS scheduling.

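Algorithm 1: Multiagent deep deterministic policy gradient algorithm for N agents.

Initialize: the actor and critic evaluation networks and the corresponding target networks for each pump agent

01: for episode = 1 to M do

02: Initialize a random process $\mathcal{N}$ for action exploration

03: Receive initial state $s$

04: for t = 1 to max-episode-length do

05: For each pump agent $i$, select action $a_i = \mu_i(s_i) + \mathcal{N}_t$ w.r.t. the current policy and exploration

06: Execute actions $a(t) = (a_1(t), \ldots, a_N(t))$ and observe reward $r$ and new state $s'$

07: Store $(s, a, r, s')$ in replay buffer $\mathcal{D}$

08: $s \leftarrow s'$

09: for agent i = 1 to N do

10: Sample a random minibatch of $S$ samples $(s^j, a^j, r^j, s'^j)$ from $\mathcal{D}$

11: Set $y^j = r_i^j + \gamma\, Q_i^{\mu'}(s'^j, a_1', \ldots, a_N')\big|_{a_k' = \mu_k'(s_k'^{\,j})}$

12: Update critic by minimizing the loss $L(\theta_i) = \frac{1}{S}\sum_j \left(y^j - Q_i^{\mu}(s^j, a_1^j, \ldots, a_N^j)\right)^2$

13: Update actor using the sampled policy gradient: $\nabla_{\theta_i} J \approx \frac{1}{S}\sum_j \nabla_{\theta_i}\mu_i(s_i^j)\, \nabla_{a_i} Q_i^{\mu}(s^j, a_1^j, \ldots, a_i, \ldots, a_N^j)\big|_{a_i = \mu_i(s_i^j)}$

14: end for

15: Update target network parameters for each agent i: $\theta_i' \leftarrow \tau\theta_i + (1 - \tau)\theta_i'$

16: end for

17: end for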
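The numerical core of steps 11, 12, and 15 can be sketched in a few lines, following the standard MADDPG update of [37]. The function names and the soft-update rate `tau` are assumptions (the paper does not report `tau` here); the discount factor 0.95 is the value listed in Table 2. Network forward passes and gradients are omitted, so the critic values are passed in as plain numbers.

```python
import numpy as np


def td_target(r_i, q_next, gamma=0.95):
    """Step 11: y = r_i + gamma * Q'_i(s', a'_1, ..., a'_N), where q_next is the
    target critic's value for the next joint state and target-actor actions."""
    return r_i + gamma * q_next


def critic_loss(y, q):
    """Step 12: mean squared TD error over the minibatch."""
    y = np.asarray(y, dtype=float)
    q = np.asarray(q, dtype=float)
    return float(np.mean((y - q) ** 2))


def soft_update(target_params, online_params, tau=0.01):
    """Step 15: theta' <- tau * theta + (1 - tau) * theta' for each parameter array.
    tau = 0.01 is an assumed value."""
    return [tau * w + (1.0 - tau) * w_t
            for w, w_t in zip(online_params, target_params)]
```

The slowly moving target networks keep the regression target in step 11 from shifting as quickly as the networks being trained, which stabilizes critic learning.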

2.2.3. Testing Process of the Proposed Algorithm

After the training process is finished, the obtained actor networks can be adopted for practical pump scheduling. Specifically, in each time slot t, each pump controller observes the state $o_{i,t}$ and takes action $a_{i,t} \sim \pi_\theta(\cdot \mid o_{i,t})$ in parallel. Then, all actions are executed. At the end of time slot t, the new state $o_{i,t+1}$ is observed by pump controller i. The above decision process repeats until the end of slot $H_{test}$. It follows that the proposed algorithm can support real-time decisions based on the current system state. Since only the forward propagation of deep neural networks is involved, the proposed algorithm has low computational complexity. Algorithm 2 gives the proposed testing procedure.

Algorithm 2: The proposed MADDPG-based WSPS scheduling algorithm.

Input: The weights of the actor network, i.e., $\theta$

Output: Action $a_t$

1 All agents receive initial local observation $o_1 = (o_{1,1}, \ldots, o_{N,1})$

2 for t = 1, 2, …, $H_{test}$ do

3 Each agent i selects its action $a_{i,t}$ in parallel according to the learned policy $\pi_\theta(\cdot \mid o_{i,t})$ at the beginning of slot t

4 Each agent i takes action $a_{i,t}$ in parallel, which affects the operation of the control system

5 Each agent i receives new observation $o_{i,t+1}$ at the end of slot t

6 end
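The testing procedure amounts to a simple decentralized execution loop, sketched below. The `actors` (one callable per pump mapping a local observation to an action) and `env_step` (a callable applying the joint action and returning the next local observations) are hypothetical stand-ins for the trained actor networks and the pump control system.

```python
def run_schedule(actors, env_step, initial_obs, horizon):
    """Decentralized execution: each agent acts on its own observation only.

    actors      : list of callables, actors[i](o_i) -> a_i   (hypothetical)
    env_step    : callable, env_step(actions) -> next observations (hypothetical)
    initial_obs : list of initial local observations, one per agent
    horizon     : number of time slots to schedule (H_test)
    """
    obs = list(initial_obs)
    history = []
    for _ in range(horizon):
        # Each agent selects its action from its local observation.
        actions = [actor(o) for actor, o in zip(actors, obs)]
        # All actions are applied; new local observations arrive at slot end.
        obs = env_step(actions)
        history.append(actions)
    return history
```

No critic and no gradient computation appear in the loop, which is why execution only requires one forward pass per agent per time slot.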

3. Results and Discussion

In simulations, real-world operation data from the Baiyangwan Waterwork in Suzhou City, China, from November 1, 2020, to April 30, 2021, are used. The surrogate model uses 80% of the data as a training set and 20% as a test set. All DRL agents are trained using the data from November 1, 2020, to February 28, 2021, while the remaining data are used for performance testing.

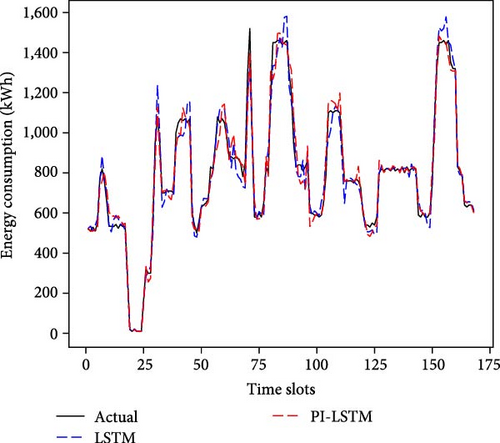

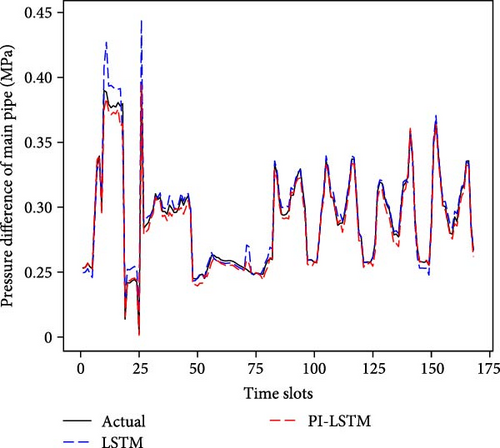

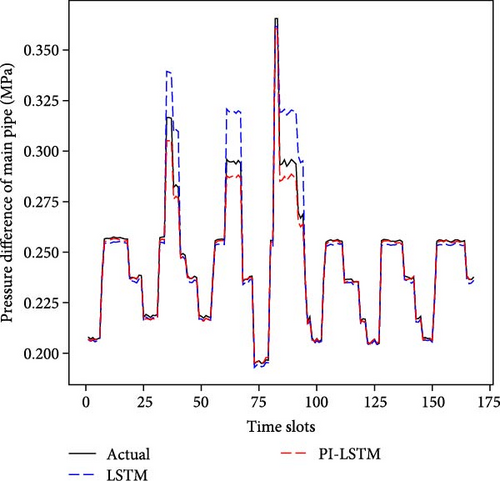

3.1. Performance of Surrogate Model

Figure 6(a) shows the comparison of energy consumption prediction for a week, and Figure 6(b) shows the comparison of pressure prediction for a week. To assess the generalizability of the PI-LSTM model, especially in the case of limited data availability, we halved the data for the experiment, and Figure 6(c) shows the comparison of pressure prediction for a week in that case. As can be seen in Figure 6(a), both models capture the general trend and major fluctuations in actual energy consumption well. Although the predicted values of the PI-LSTM model are closer to the actual values than those of the LSTM at some points, especially during the peak energy consumption period, in general, the predictive performances of the two models are very similar. The result suggests that, although the PI-LSTM model incorporates fluid mechanics laws, the contribution of this information to the enhancement of energy consumption prediction accuracy is marginal. In this case, the standard LSTM model already captures the key features of the energy consumption of the WSPS well, and the additional physical information does not significantly improve the prediction. As can be seen in Figure 6(b), both models effectively track the general trend of actual pressure. The PI-LSTM model generally exhibits a tighter fit, while the LSTM predictions show larger deviations at certain points, particularly during instances of pressure fluctuation. This shows that the PI-LSTM model, which introduces the laws of fluid mechanics as constraints in the framework and takes into account the movement of fluid and pressure distribution in the pipe, is able to capture the intrinsic laws of pressure changes more accurately than the LSTM. These physical laws provide additional prior knowledge that helps the model better predict complex pressure dynamics. 
The standard LSTM model, on the other hand, mainly relies on the laws learned from historical data and lacks the consideration of the underlying physical processes, and the prediction is not accurate enough when facing complex pressure dynamics. As can be seen in Figure 6(c), the PI-LSTM model is still able to generate pressure predictions that are very close to the actual values, despite the fact that the training data is reduced by half. In contrast, the performance of the LSTM model is significantly degraded, with large deviations of the predictions from the actual pressure at more time points. The PI-LSTM model maintains a high predictive accuracy even with less data due to the fluid mechanics equations offering a priori constraints on pressure dynamics, acting as a physics-driven regularization term that allows the model to follow physical laws in making predictions, instead of solely relying on data-driven learning. The standard LSTM model, which is heavily data-dependent, struggles to sufficiently learn key features of pressure dynamics with limited data, resulting in a significant drop in predictive performance. This underscores that pure data-driven learning is not robust when data are scarce, whereas the PI-LSTM model, which integrates physical laws, exhibits stronger generalization capabilities.

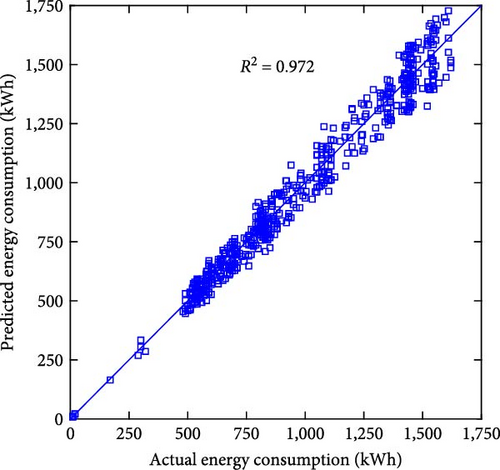

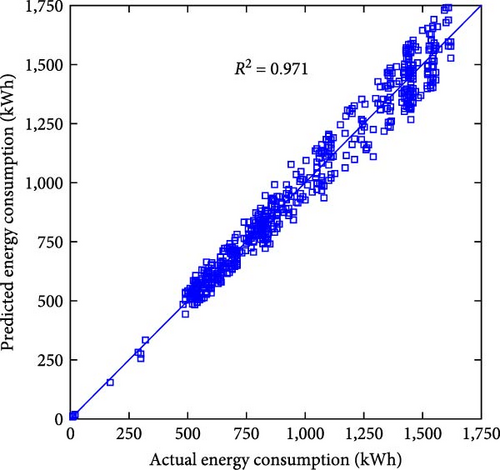

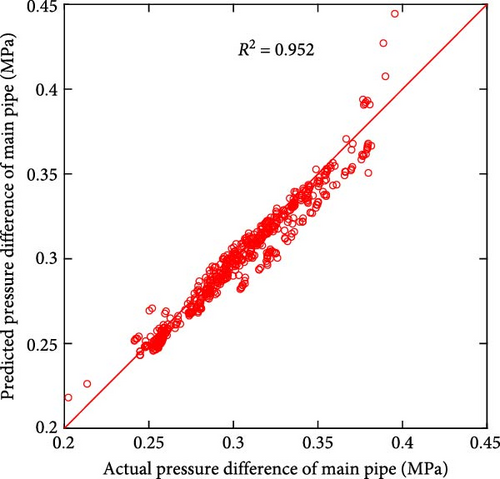

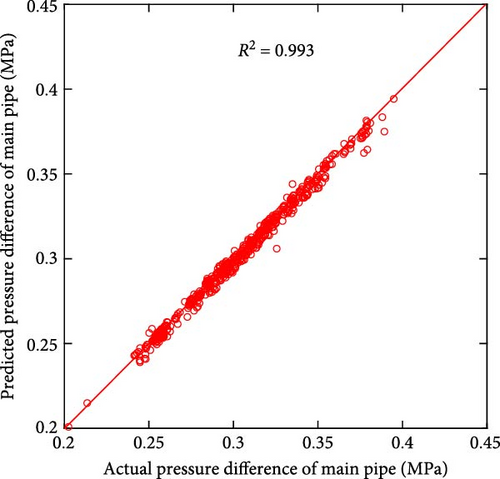

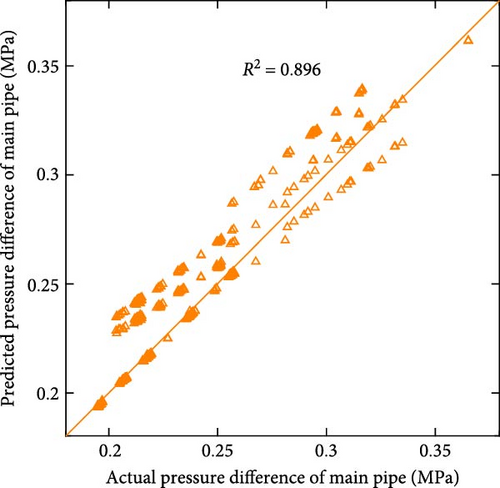

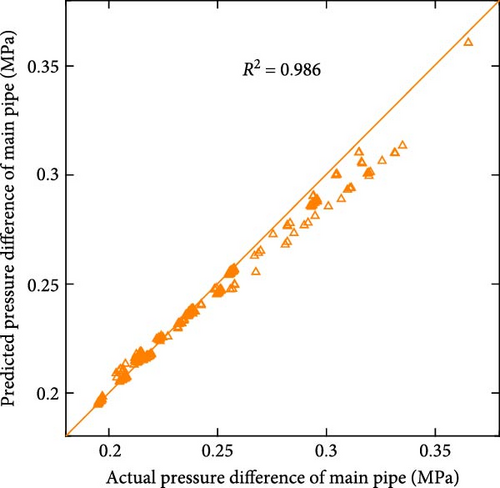

Figure 7 shows the scatter plot of the actual and predicted values of the two models, and Table 1 shows the comparison of the performance error metrics of the two models. Figures 7(a) and 7(b) demonstrate the relationship between the actual and predicted energy consumption for the entire test set for both models. The R2 values of the two models are 0.972 and 0.971, respectively, indicating that there is a strong correlation between the predicted and actual values, and both models can effectively capture potential energy consumption patterns. When examining the error metrics, the MAE and MAPE of LSTM are 50.101 kWh and 5.180%, respectively, while those of PI-LSTM are 49.733 kWh and 5.097%. This similarity in performance suggests that PI-LSTM does not have a substantial advantage over LSTM in predicting energy consumption due to the predominance of factors other than fluid mechanics affecting energy consumption patterns. Figures 7(c) and 7(d) demonstrate the relationship between the actual pressure and the predicted pressure for the entire test set of the two models. The R2 value of PI-LSTM is 0.993, which is better than 0.952 of LSTM. In terms of error metrics, the MAE of PI-LSTM is 0.003 MPa, which is lower than the 0.006 MPa of LSTM. The MAPE of PI-LSTM is 0.832%, which is significantly lower than 2.069% of LSTM. These show that the PI-LSTM model is significantly more accurate, and the improved accuracy can be attributed to its integration of fluid mechanics constraints. Figures 7(e) and 7(f) demonstrate the relationship between the actual pressure and the predicted pressure for the entire test set of the two models when the data are halved. The PI-LSTM still maintains a high R2 of 0.986, while the LSTM decreases to 0.896. The MAE of PI-LSTM is 0.003 MPa, which is lower than the 0.009 MPa of LSTM. 
The MAPE of PI-LSTM is 1.184%, which is a less significant increase compared to when there is sufficient data, while the MAPE of LSTM is 3.536%, which is a significant increase. These indicate that even with limited data, the PI-LSTM model maintains predictive reliability and accuracy, highlighting its robustness and generalization ability. In contrast, the predictive ability of the LSTM model is greatly reduced, highlighting the limitations of purely data-driven approaches in the presence of insufficient data.

| Metric | Energy consumption, LSTM | Energy consumption, PI-LSTM | Pressure, LSTM | Pressure, PI-LSTM | Pressure (data halved), LSTM | Pressure (data halved), PI-LSTM |
|---|---|---|---|---|---|---|
| MAE | 50.101 kWh | 49.733 kWh | 0.006 MPa | 0.003 MPa | 0.009 MPa | 0.003 MPa |
| MAPE (%) | 5.180 | 5.097 | 2.069 | 0.832 | 3.536 | 1.184 |

A comparative analysis of the performance of the LSTM model and the PI-LSTM model reveals significant advantages of incorporating the laws of physics into a machine learning framework. The higher prediction accuracy of PI-LSTM is attributed to its ability to account for the principles of fluid mechanics that are critical to the operation of WSPS. The LSTM model, though effective in capturing the general patterns, is not sufficiently accurate, which may lead to suboptimal scheduling decisions that can increase operational costs or system instability.
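The error metrics used in this comparison can be computed as in the sketch below. These are the standard definitions of MAE, MAPE, and the coefficient of determination; whether the reported R2 values use this definition or the squared correlation coefficient is not stated, so that choice is an assumption.

```python
import numpy as np


def mae(y_true, y_pred):
    """Mean absolute error, in the units of the target (kWh or MPa)."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.mean(np.abs(y_true - y_pred)))


def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent (y_true must be nonzero)."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(100.0 * np.mean(np.abs((y_true - y_pred) / y_true)))


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - np.mean(y_true)) ** 2)
    return float(1.0 - ss_res / ss_tot)
```

MAPE is scale-free, which is why it allows the energy and pressure predictions to be compared on the same footing even though their MAE values differ by several orders of magnitude.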

3.2. Performance of the Proposed Algorithm

The specific network parameter design is shown in Table 2. Other main simulation parameters are given as follows: $N = 7$, $M = 5$, $p_{\max} = 0.08$ MPa, $v_{\max} = 4$/day, $f_{\min} = 35$ Hz, $f_{\max} = 50$ Hz, and $H_{test} = 1440$ h. Pumps 1#, 2#, 3#, 5#, and 6# are fixed-frequency pumps, and pumps 4# and 7# are variable-frequency pumps.

| Parameter | Value |
|---|---|
| Neurons of hidden layers for critic networks | 128 |
| Learning rate of critic network | 0.002 |
| Neurons of hidden layers for actor networks | 128 |
| Learning rate of actor network | 0.002 |
| Minibatch | 120 |
| Buffer size | 192,000 |
| Discount factor | 0.95 |
| Episodes | 40,000 |

3.2.1. Algorithm Convergence Process

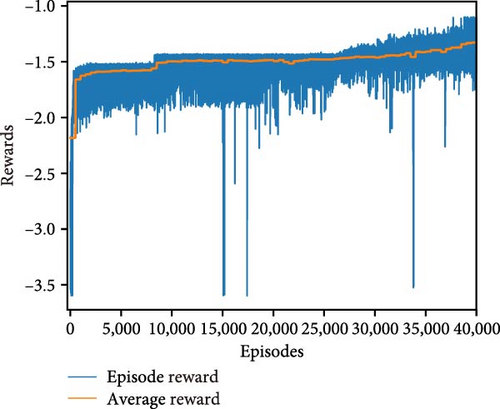

Figure 8 shows the convergence process of the proposed reinforcement learning algorithm over a series of episodes. The episode reward curve gradually increases and then stabilizes. However, due to the exploration process and uncertain parameters, the episode reward still fluctuates within a narrow range. This suggests that the algorithm is successfully optimizing its policy toward maximizing rewards. To provide a clearer visualization of the algorithm’s convergence, we present an average reward curve computed over the past 400 episodes. The average reward curve displays an initial increase followed by a stable pattern, indicating that the algorithm’s learning is stabilizing and converging towards a steady and optimized policy.
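An average reward curve of this kind can be computed with a trailing window, as sketched below. The function name is hypothetical, and averaging over fewer episodes at the start of training (before 400 episodes are available) is an assumption about how the early part of the curve is handled.

```python
import numpy as np


def trailing_mean(rewards, window=400):
    """Average of the most recent `window` episode rewards at each episode.
    Early episodes are averaged over however many episodes exist so far."""
    r = np.asarray(rewards, dtype=float)
    csum = np.concatenate(([0.0], np.cumsum(r)))   # csum[k] = sum of first k rewards
    end = np.arange(1, len(r) + 1)
    start = np.maximum(end - window, 0)
    return (csum[end] - csum[start]) / (end - start)
```

Smoothing over a long window suppresses the exploration-induced fluctuations of individual episodes, so the underlying trend of the policy's performance becomes visible.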

3.2.2. Algorithm Effectiveness

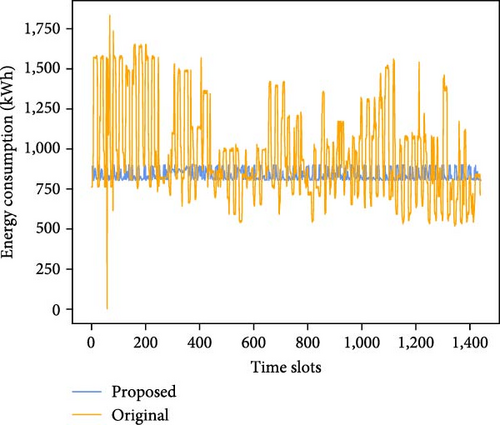

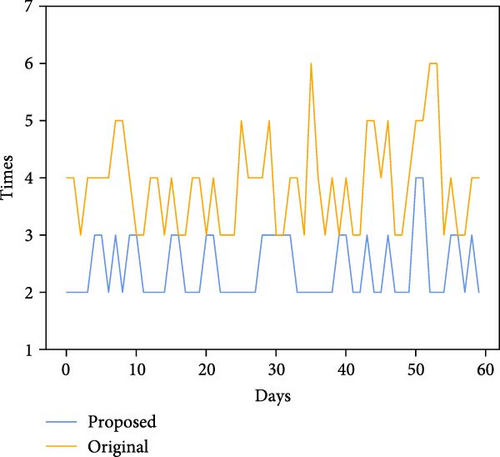

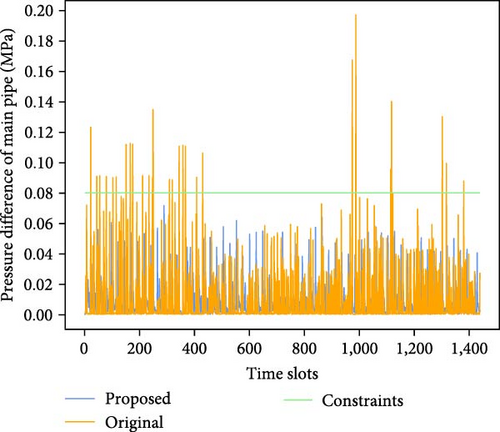

Figure 9 compares the energy consumption, the pressure difference of the main pipe, and the switching times of the pump group for the original scheme and the proposed scheme, and Table 3 summarizes the performance of the two schemes. Figure 9(a) shows that the energy consumption of the proposed algorithm is much more stable and significantly lower than that of the original scheme, which exhibits substantial fluctuations. Figure 9(b) shows that the switching times of the proposed scheme are lower overall than those of the original scheme. Figure 9(c) shows that the proposed scheme never violates the pressure difference limit, whereas the original scheme violates it multiple times. Manual scheduling relies on experience and heuristic rules, which makes it difficult to account for various complex factors, even though it occasionally exhibits lower energy consumption. To ensure sufficient water supply, waterworks often increase the number of running pumps and raise the operating frequency of the variable-frequency pumps. This results in frequent switching of pumps on and off, leading to frequent large fluctuations in energy consumption and pressure. Although this irrational scheduling scheme can guarantee water supply, it causes substantial energy waste and increases the risk of accidents. The method proposed in this paper focuses on long-term energy optimization and better ensures the long-term safe and stable operation of the pump system in water plants.

| Schemes | AEC (kWh) | AST (per day) | APV (MPa) |
|---|---|---|---|
| Original | 967.5 | 3.883 | 0.0021 |
| Proposed | 838.1 | 2.417 | 0 |

The average energy consumption per time slot is 967.5 kWh under the original scheme and 838.1 kWh under the optimized scheme, a saving of 13.38% of the electrical energy. Under dynamic operating conditions, each pump’s energy efficiency varies. The proposed algorithm can flexibly select the most energy-efficient pump combination for the current operating conditions, thereby achieving lower energy consumption. By reasonably planning the pump combination and the operating frequency of the variable-frequency pumps, it minimizes unnecessary pump starts and stops, so that the average switching times fall from 3.883 per day to 2.417 per day, a reduction of 37.77%. Fewer switching times of the pump group and smaller frequency adjustments of the variable-frequency pumps also reduce the fluctuations in energy consumption, which remains in the 800–900 kWh range overall. In contrast, manual scheduling schemes struggle with long-term planning and perform excessive unnecessary switching operations, increasing energy consumption.

Specifically, in the MADRL algorithm, each pump is considered an agent. Through continuous interaction and game-playing among agents, the globally optimal scheduling strategy is sought. In this process, each pump agent learns and adjusts its behavior strategy based on its own state and environmental feedback to maximize the long-term reward of the entire water supply system. Through distributed learning and collaboration of multiple agents, the algorithm can effectively cope with the complexity, uncertainty, and dynamic changes of the water supply system, which traditional manual scheduling methods cannot effectively address. The learning framework of the algorithm ensures that, over time, the agents become more skilled at maintaining the optimal operating state consistent with the energy-saving objective.

In addition, the original scheme had 28 pressure difference transgressions, with an average of 0.0021 MPa per time slot, while the proposed algorithm did not have a single transgression. This indicates that the proposed algorithm successfully keeps the pressure variations within safe limits and reduces the potential risks associated with pressure difference overruns.
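The relative savings and the pressure violation statistics discussed above can be checked with a few lines of arithmetic. This is a hedged sketch rather than the evaluation code used in the study: the function names are hypothetical, and the APV definition (mean excess of the absolute pressure difference over the 0.08 MPa limit, averaged over all time slots) is an assumption. Because Table 3 reports rounded values, the computed percentages can differ from the quoted ones in the last digit.

```python
def relative_reduction(original, proposed):
    """Percentage by which `proposed` is lower than `original`."""
    return (original - proposed) / original * 100.0


def pressure_violation_stats(pressure_diffs_mpa, p_max_mpa=0.08):
    """Return (number of violating slots, average excess per slot in MPa).

    Assumed definition: a slot violates the limit when |dp| > p_max, and the
    average is taken over all slots, counting non-violating slots as zero.
    """
    excess = [max(0.0, abs(dp) - p_max_mpa) for dp in pressure_diffs_mpa]
    violations = sum(1 for e in excess if e > 0.0)
    return violations, sum(excess) / len(excess)


if __name__ == "__main__":
    # Values taken from Table 3.
    print(f"Energy saving:       {relative_reduction(967.5, 838.1):.2f}%")
    print(f"Switching reduction: {relative_reduction(3.883, 2.417):.2f}%")
    # Illustrative pressure differences only, not data from the study.
    print(pressure_violation_stats([0.05, 0.10, -0.09, 0.08]))
```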

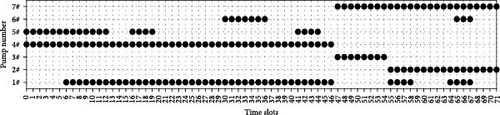

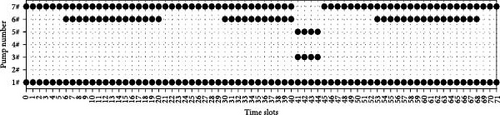

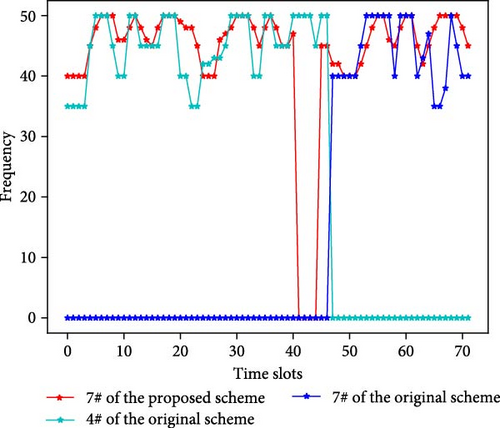

To present the specific scheduling plan of the proposed algorithm in more detail, Figure 10 shows the scheduling comparison charts of the two schemes for 3 consecutive days, and Figure 11 shows the operation of the variable-frequency pumps.

Figure 10 shows that the pump group switches 14 times under the original scheme but only 7 times under the proposed scheme. During this period, the AEC of the original scheme is 973.5 kWh, while that of the proposed scheme is only 835.8 kWh. As can be seen in Figure 11, the frequency of the variable-frequency pump changes little between adjacent time slots under the proposed scheme, whereas it changes substantially under the original scheme, which is not conducive to the protection of the pumps. The proposed scheme is mainly operated by two or three pumps. Specifically, during the daytime and early evening, it mainly runs two fixed-frequency pumps and one variable-frequency pump, with the variable-frequency pump running at the highest frequency during the morning, midmorning, and evening peaks and at a lower frequency at other times. During late night and early morning, it mainly runs one fixed-frequency pump and one variable-frequency pump, and the variable-frequency pump maintains a lower operating frequency. Over a longer period, the set of working pumps remains largely fixed. By contrast, the original scheme switches unreasonably often, at times runs four pumps simultaneously, and does not keep a fixed set of working pumps.

The frequent switching in the original scheme is due to the lack of comprehensive consideration of various factors and the absence of long-term planning, which resulted in only passive switching to cope with fluctuating demand, leading to energy inefficiency and wear and tear on the pump units. In contrast, the proposed scheme maintains a relatively stable number of pumps throughout the time period, with only minor adjustments when necessary. This shows that the proposed scheme minimizes unnecessary pump switching and improves the reliability and efficiency of the water supply system.
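Switching counts such as those read from Figure 10 can be derived from an on/off schedule. The sketch below assumes that every change in the on/off state of any single pump between adjacent time slots counts as one switching; the paper's exact counting rule is not restated in this section, and the function name and the sample schedule are hypothetical.

```python
def count_switches(schedule):
    """Count pump on/off state changes between adjacent time slots.

    `schedule` is a sequence of per-slot tuples, one 0/1 entry per pump.
    """
    switches = 0
    for previous, current in zip(schedule, schedule[1:]):
        # Each pump whose status differs from the previous slot adds one switch.
        switches += sum(1 for a, b in zip(previous, current) if a != b)
    return switches


if __name__ == "__main__":
    # Illustrative three-pump schedule over four slots, not data from the study.
    schedule = [(1, 1, 0), (1, 1, 0), (1, 0, 1), (1, 0, 1)]
    print(count_switches(schedule))
```

Dividing the count by the number of days covered gives a per-day figure comparable to the AST column of Table 3, under the same counting assumption.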

4. Conclusion

This study addresses the problem of energy-saving scheduling in a WSPS with multiple pumps while ensuring compliance with constraints on the pressure difference of the main pipe and the switching times of the pump group. The optimization problem is challenging due to parameter uncertainty, temporal coupling constraints, and the lack of an explicit mechanistic model for the pumps. Therefore, the problem is reformulated as a cooperative Markov game. To solve this Markov game, a PI-LSTM surrogate model is developed for training agents, and a WSPS scheduling algorithm based on the surrogate model and MADDPG is proposed. PI-LSTM improves on the LSTM architecture by incorporating knowledge of fluid mechanics, which enables it to predict the pressure variations in the main pipe more accurately, especially when less data is available. Moreover, the algorithm does not require prior knowledge of the pumps, making it easier to implement in different water plants. Compared with the original scheduling scheme, the proposed algorithm provides a more flexible combination of pumps and reduces energy consumption by 13.38%. This study demonstrates the great potential of multiagent reinforcement learning in the optimal scheduling of water supply systems, which provides important insights for further improving urban water supply management. Future research could consider integrating more physical laws that are directly related to energy consumption, such as the laws of thermodynamics and electricity, to further improve the performance of the model in energy consumption prediction. In addition, exploring the impact of combining these physical laws with different deep learning models on MADRL could be a valuable direction.

Abbreviations

- WSPS: Water supply pumping station
- LSTM: Long short-term memory
- PI-LSTM: Physics-informed long short-term memory
- MADDPG: Multiagent deep deterministic policy gradient
- DRL: Deep reinforcement learning
- MADRL: Multiagent deep reinforcement learning
- DDPG: Deep deterministic policy gradient
- SAC: Soft actor-critic
- MAPE: Mean absolute percentage error
- MAE: Mean absolute error
- R2: Coefficient of determination
- AEC: Average energy consumption
- AST: Average switching times
- APV: Average pressure violation

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Authors’ Contributions

Haixiang Ma contributed to the methodology and wrote, reviewed, and edited the manuscript. Xuechun Wang revised and edited the manuscript. Dongsheng Wang contributed to the conceptualization and methodology.

Acknowledgments

This work was supported by the National Natural Science Foundation of China (grant no. 52170001).

Open Research

Data Availability

The data used to support the findings of this study are available from the corresponding author upon request.