Virtual Reconstruction of Visually Conveyed Images under Multimedia Intelligent Sensor Network Node Layout

Abstract

In this paper, multimedia intelligent sensing technology is applied to the virtual reconstruction of images, so that images can be constructed or restored as media for visual communication. Building on visual communication research, the paper proposes a theory of image virtual reconstruction that treats the reconstructed content as linked open data and a customized domain ontology. It establishes an interdisciplinary interactive research framework through the technical means of visual communication, addresses the data heterogeneity introduced by image virtual reconstruction, and finally establishes a three-dimensional visualization research method and principles for visual communication. The research first examines existing conservation principles from the perspective of visual communication and argues for the necessity of image virtual reconstruction from that perspective. Second, the mode of thinking of digital technology differs from that of humans, and computation ignores emotional and spiritual values, yet the realization of value rationality must be premised on instrumental rationality. This requires content judgment and self-examination of the technical dimensions of the image virtual reconstruction model, grounded in comprehensive literature and empirical evidence. To address research difficulties such as the constructed nature of visual communication, a solution for image virtual reconstruction in visual communication is proposed based on the data collection method and the characteristics of the literature. 
Because visual content is subjective and difficult to control, introducing the tools of computer science into humanities research must take place within a continuous critical theoretical framework. Finally, based on the construction of information models for image virtual reconstruction, the ontology and semantics of information modeling are investigated in depth, and related problems such as interpretation, wholeness, and interactivity are analyzed and addressed one by one. The transparency of image virtual reconstruction is enhanced by introducing interactive metadata, and this theoretical system of virtual restoration is put into practice in the Dunhuang digital display design project.

1. Introduction

With the rapid progress of society and the continuous development of technology, the world has fully entered the information age. The information age, also known as the digital age, is a period in which the generation and transmission of digital information is the main mode of social operation [1]. Driven by this trend, electronic data has gradually replaced some previously indispensable material elements: production has shifted from manual labor to computer control, culture from materialization to informatization, and the world economic system from physical exchange to digital exchange [2]. The widespread use of information technology has fundamentally changed how every part of society functions and, integrated into different fields, has significantly changed the way people work and live. With the application of image virtual reconstruction, new exhibit forms such as digital exhibits and digital media art continue to emerge and enrich today's display methods, and display activities have gradually begun to shift toward two-way information interaction such as interactive experiences. Each change of era provides a more efficient way to disseminate information and drives the evolution and renewal of media forms: from oral transmission in ancient times, to written communication, to radio and television in the electric age, each communication era has had its own distinctive media form. Because digital media technology is applied in a wide range of fields, this article limits itself to its research scope and collates and summarizes the research results on digital media technology used in the display field. 
In the information age, people access information and express and exchange ideas through different digital technology devices. The widespread popularity of digital technology has greatly enhanced the mobility and integration of information, and with the maturity of computer technology and network technology, image virtual reconstruction technology has emerged [3].

In an era of more open technological development, product competition no longer depends solely on quality and price but is gradually expanding to brand and corporate image, and marketing methods have become one aspect of competition [4]. With the continuous development and innovation of computer technology, information networks, and intelligent devices, conventional display methods alone cannot satisfy contemporary audiences accustomed to a wide variety of design concepts, so designers need to stay at the forefront of technology and seek more innovative and creative ways to attract attention [5]. Window adjustment of two-dimensional images is a necessary operation in medical image processing, mainly because of the limits of human visual discrimination: the human eye can distinguish only about 16 gray levels, whereas CT can resolve about 2000 gray levels, a large gap between the two. Display activities under the intervention of image virtual reconstruction transmit information through two-way interaction between exhibits and audiences, with a multidimensional mode of expression. Image virtual reconstruction technology has spread into various fields and achieved significant results [6].
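Window adjustment bridges this gap by mapping a chosen range of CT values onto the limited gray levels a display can show. The sketch below is a minimal illustration, assuming input in Hounsfield units and an 8-bit output; the function name and the window center/width values in the usage lines (center 40, width 400, a soft-tissue-like window) are illustrative assumptions, not parameters from this paper.

```python
import numpy as np

def apply_window(hu: np.ndarray, center: float, width: float) -> np.ndarray:
    """Map CT values (assumed Hounsfield units) to 8-bit display gray levels.

    Values below center - width/2 become 0, values above center + width/2
    become 255, and values in between are scaled linearly.
    """
    low = center - width / 2.0
    high = center + width / 2.0
    clipped = np.clip(hu.astype(np.float64), low, high)
    scaled = (clipped - low) / (high - low) * 255.0
    return np.round(scaled).astype(np.uint8)

# Illustrative CT values spanning air, water, soft tissue, and bone.
hu = np.array([-1000, -160, 0, 40, 240, 1000])
print(apply_window(hu, center=40, width=400))
```

Narrowing the width spreads a smaller range of CT values over all 256 output levels, which is what makes subtle differences within that range visible.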

Rapidly evolving digital media technology uses new technologies such as multimedia, virtual reality, and interactive technology to transform information dissemination from text to image, from delayed to instant, and from one-way to two-way transmission, fundamentally changing the way information is disseminated as well as communication efficiency and effect. With each innovation in media form, it becomes clearer that media technology is not only a tool for carrying information but also a language for expressing it. Overall, the field of image virtual reconstruction has made considerable progress, and further breakthroughs in this field can be expected.

2. Related Works

With the rapid development of computer information technology, augmented reality has gradually entered people's lives. Augmented reality (AR), first proposed in 1992, is a technology developed further on the basis of virtual reality. It extends human visual perception of the real environment by accurately superimposing computer-generated virtual objects or other auxiliary information onto the real scene (three-dimensional registration) and allows users to interact in real time with this virtual information fused into the real world [7], thus "augmenting" the real world. By establishing a relationship between real scenes and computer-generated virtual environments, AR has played a major role in medicine, industry, the military, education, and the protection of historical sites. At the same time, thanks to the rapid improvement in the performance of smartphones, tablets, and wearable mobile devices, the increasing maturity of computer vision and mobile cloud computing on mobile devices, a variety of advanced sensors, and ubiquitous, stable network connections, AR continues to move toward the more convenient direction of mobile augmented reality (MAR) [8].

Over more than a decade of technological development, the scientific (deterministic and integrity-level) process of 3D visualization documentation has made it possible to create interactive and immersive information models, which let users visually isolate themselves from the real world through external devices, manipulate the digital virtual environment, and develop a sense of belonging [9]. These immersive information models turn the "perception" of the digital environment into a measure of the real environment. Web-based solutions and applications enable efficient 3D digitization methods as well as postprocessing tools for rich semantic modeling. This is a complex form of "reverse engineering" in which data must be processed without losing important information such as metadata and interaction metadata [10]. When interactive solutions are used on mobile devices such as AR glasses, the main concerns are the limited performance of mobile devices, the visual interference caused by displaying too many tissue models during surgery, and the limited modes of interaction with auxiliary information. In medicine, a handheld augmented reality neuronavigation system (AR-IGNS) with three navigation modes was proposed and built in 2013; it first precisely segments the tumor target area in the patient's original 2D images, then combines the segmented tumor information with the actual surgical scene to generate the corresponding navigation image, which is displayed on an iPad to assist the surgeon. Professor Xiaorong Xu's team proposed applying mobile AR to breast cancer surgery and developed a dual-modality ultrasound and fluorescence image navigation system based on Google Project Glass and a HoloLens-based breast reconstruction navigation system; the former assists doctors in locating and removing sentinel lymph nodes, and the latter guides physicians in breast reconstruction [11]. 
On June 26, 2017, a complex hip fracture operation guided by augmented reality was completed. A mobile AR surgical planning and navigation system based on the visor ST60 headset was developed, and a series of calibration algorithms was proposed to address insufficient positioning accuracy, poor intuitiveness, and poor real-time interactivity in the clinical application of AR surgical navigation systems.

From the perspective of digital media technology, which is applied in a wide range of fields, this paper collates and summarizes, within its research scope, the research results on digital media technology used in the display field [12]. One study distinguishes immersion in the digital media age from earlier forms of illusionistic art; drawing on works by contemporary artists and groups, it summarizes how technological tools such as 3D, IMAX, and virtual reality create immersive illusions, outlines the impact of virtual reality on the conception of contemporary art, and discusses augmented reality and its functions, introducing AR from various perspectives [13]. Functional design is the core of mobile AR application development, and good interaction design helps improve the overall display effect of the application: 3D virtual models and multimedia resources are integrated into the application, virtual-real fusion is achieved through buttons and human-computer interaction, and the user experience is improved. A 2013 study introduced the types of haptic sensors and described how to build holistic and localized haptic display systems; it brought together the research of leading practitioners in the VR field, described the main hardware and software technologies that make up virtual reality systems, elaborated the main developments and issues in the field, and explored how digital media tools can incorporate olfactory, tactile, and thermal sensations into media objects, thereby enriching traditional multimedia content and enhancing immersion [14].

3. Multimedia Intelligent Sensor Image Virtual Reconstruction Model Design

3.1. Smart Sensor Model Construction

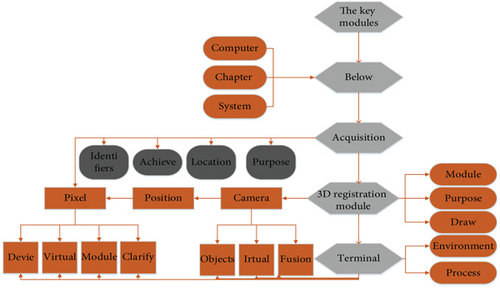

- (1) Image acquisition module: the image acquisition module is the first step of the whole system and acts as its "eyes". It uses the camera to capture images of the scene, relying on the camera's internal optical components to map real-world positions onto the image, stores the result as pixels, and displays it on the terminal display device.

- (2) Recognition and tracking module: this module is necessary in augmented reality systems. It perceives the real world through the camera, tracks the real scene in real time, uses features in the environment for recognition, and determines the position and orientation of objects, so that information about the real world can be augmented.

- (3) Virtual-real registration module: this module is the core of the whole system. Its purpose is to draw the virtual model in the real environment, and tracking registration is the key step in achieving this. Registration is usually based on two-dimensional markers or natural features in the scene, and real-time registration is achieved by computing the corresponding transformation matrix.

- (4) Virtual-real fusion module: this module seamlessly integrates computer-generated virtual objects with the real world, which includes optimizing the generated virtual model, processing lighting, and placing the virtual objects accurately. The relationship between the key modules of the system is shown in Figure 1.
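For marker-based registration, the matrix computed in module (3) is commonly a homography relating the planar marker to its image. The sketch below shows the standard direct linear transform (DLT) from four or more point correspondences, assuming NumPy; the function names and marker coordinates are illustrative, not taken from this paper, and a production system would normally normalize coordinates and use a robust estimator such as RANSAC.

```python
import numpy as np

def estimate_homography(src: np.ndarray, dst: np.ndarray) -> np.ndarray:
    """Estimate H (3x3) with dst ~ H @ src from N >= 4 point pairs via DLT."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence contributes two linear equations in the 9 entries of H.
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    A = np.asarray(rows, dtype=np.float64)
    # The solution is the right singular vector of A with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]  # fix the projective scale so that H[2, 2] == 1

def project(H: np.ndarray, pts: np.ndarray) -> np.ndarray:
    """Apply H to 2D points and convert back from homogeneous coordinates."""
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]

marker = np.array([[0, 0], [1, 0], [1, 1], [0, 1]], dtype=float)  # unit marker corners
image = np.array([[100, 100], [300, 120], [280, 310], [90, 290]], dtype=float)  # hypothetical detections
H = estimate_homography(marker, image)
print(np.round(project(H, marker), 3))
```

With exactly four non-degenerate correspondences the homography is determined exactly; extra points turn the SVD step into a least-squares fit.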

In the camera-based system, the key problem is to place the computer-drawn virtual objects in the correct position and align them so that they fuse accurately with the scene; clarifying the conversion relationships between the various coordinate systems is therefore the key to solving this problem. The significance of spatial technology lies not simply in mechanically assembling installations and space but in informatizing and mediatizing space at a deeper level, using the advantages of technology to create an intelligent spatial environment that fundamentally enables communication between people and space. The augmented reality system mainly involves four coordinate systems: the image plane coordinate system (image coordinate system), the camera coordinate system, the physical coordinate system (world coordinate system), and the virtual object coordinate system. The required conversions are between the world and camera coordinate systems, between the camera and image plane coordinate systems, and between the virtual and real coordinate systems. The conversion relationships among the four coordinate systems are shown in Figure 2.
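The chain of conversions described above (virtual object to world, world to camera, camera to image plane) can be sketched with the standard pinhole camera model. This is a minimal illustration, assuming NumPy, an ideal pinhole camera without lens distortion, and made-up values for the intrinsic matrix K and the poses; none of these values come from this paper's calibration.

```python
import numpy as np

def rigid(R: np.ndarray, t: np.ndarray) -> np.ndarray:
    """Build a 4x4 homogeneous transform from rotation R (3x3) and translation t (3,)."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T

def project_points(K, T_cam_world, T_world_obj, pts_obj):
    """Project virtual-object points into pixel coordinates.

    pts_obj: (N, 3) points in the virtual object coordinate system.
    T_world_obj: object -> world transform (where the virtual object is placed).
    T_cam_world: world -> camera transform (extrinsics from tracking).
    K: 3x3 intrinsic matrix (camera -> image plane).
    """
    homog = np.hstack([pts_obj, np.ones((len(pts_obj), 1))])
    pts_cam = (T_cam_world @ T_world_obj @ homog.T)[:3]  # 3 x N, camera frame
    uvw = K @ pts_cam                                    # perspective projection
    return (uvw[:2] / uvw[2]).T                          # divide by depth -> pixels

K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])                             # hypothetical intrinsics
T_cam_world = rigid(np.eye(3), np.array([0.0, 0.0, 2.0]))  # world origin 2 m in front of camera
T_world_obj = rigid(np.eye(3), np.array([0.1, 0.0, 0.0]))  # object placed 0.1 m along x
print(project_points(K, T_cam_world, T_world_obj, np.zeros((1, 3))))
```

In a running AR system, tracking updates T_cam_world every frame while K stays fixed after calibration, which is why the virtual object appears anchored to the real scene.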

Current tension between doctors and patients stems largely from poor communication. First, there is a large gap between doctors and patients in medical knowledge and information, which sometimes makes it difficult for patients and their families to understand the patient's specific condition. Second, in traditional doctor-patient communication, patients play a passive role and lack effective interaction with doctors. Mobile augmented reality offers good solutions to both problems. Using the patient's computed tomography, magnetic resonance imaging, and other image data, the lesion model is segmented and reconstructed in three dimensions with medical image processing software, and the patient-specific 3D model is then presented directly to the patient or family through a MAR system running on a mobile device (phone or tablet) [16]. Through visual demonstration and manipulation of the model (pan, rotate, and zoom) together with the doctor's explanation, the patient gains a deeper understanding of the condition, and this new mode of medical interaction makes traditional doctor-patient communication simpler, clearer, and more direct. Most existing mobile medical AR systems rely on 3D registration with artificial markers and are applied to intraoperative navigation. Marker-based registration, however, works only in scenes containing markers, which limits the tracking area, restricts the scope of use of medical MAR systems, and makes them less convenient and stable. 
Therefore, in this paper, we use Apple's SLAM-based ARKit framework to develop a convenient mobile augmented reality display system that medical staff can use to show lesion models to patients and their families anytime and anywhere, without the limitation of markers. The system can also be applied to medical education, where presenting in three dimensions the complex structures shown in two-dimensional medical images on paper helps learners understand and remember them.
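The pan, rotate, and zoom operations on the lesion model, together with the constraints described in Section 4.2 (rotation about a single axis, translation within the placement plane), can be sketched independently of any AR framework. The class name, the choice of the vertical y-axis as the rotation axis, and the sensitivity and scale-limit constants below are hypothetical illustrations, not ARKit APIs or values from this paper.

```python
import math
from dataclasses import dataclass

@dataclass
class ModelPose:
    """Pose of a virtual model placed on a horizontal plane (hypothetical type)."""
    x: float = 0.0      # position on the placement plane (metres)
    z: float = 0.0
    yaw: float = 0.0    # rotation about the vertical y-axis only (radians)
    scale: float = 1.0

    def pan(self, dx: float, dz: float) -> None:
        # Translation stays in the placement plane: the height (y) is never changed.
        self.x += dx
        self.z += dz

    def rotate(self, drag_pixels: float, radians_per_pixel: float = 0.01) -> None:
        # A horizontal finger drag is mapped to rotation about a single axis.
        self.yaw = (self.yaw + drag_pixels * radians_per_pixel) % (2 * math.pi)

    def zoom(self, pinch_factor: float, lo: float = 0.2, hi: float = 5.0) -> None:
        # Pinch gestures scale the model multiplicatively, clamped to a fixed range.
        self.scale = min(hi, max(lo, self.scale * pinch_factor))

pose = ModelPose()
pose.pan(0.05, -0.02)
pose.rotate(157.0)   # roughly a quarter turn with the default sensitivity
pose.zoom(1.5)
print(pose)
```

Restricting each 2D gesture to one degree of freedom is what keeps the mapping from screen input to 3D motion unambiguous.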

3.2. Image Virtual Reconstruction Model Design

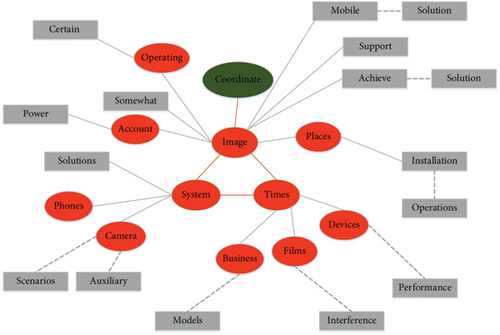

The multimedia resources consist of three parts: audio, video, and 3D models. Audio is an important component: this project uses a synthesized female voice to read text aloud, and the voice is played when the user scans the text, reinforcing the user's memory of it. Video elements are integrated so that scanning a graphic of the property plays the property's promotional video, which has more visual impact than a still image. Realistic virtual 3D models are also produced, so that users can view house models and roam indoors without leaving home [17]. Through these multimedia resources, users can interact with the computer in real time, a new mode of human-computer interaction. The mobile augmented reality application consists of three main interfaces: the main interface, the AR scanning interface, and the indoor roaming interface. The keys to interface design are icon design and interaction design: icons should guide users to content and have visual impact while keeping color and style consistent, and interaction design should follow the logical relationships between interfaces and buttons. The logical relationships between interfaces are shown in Figure 3.

Image segmentation is the process of dividing an image into disjoint regions based on features such as grayscale, color, spatial texture, and geometry (the selected features are consistent within each region) and extracting the region of interest; it is the foundation of image processing and computer vision. In the medical field, differences in the imaging principles of medical devices, the complexity of human anatomy, and the diversity of tissue and organ shapes mean that images are often affected by noise, tissue motion, bias field effects, and partial volume effects, and therefore exhibit blurring and inhomogeneity, which makes medical image segmentation very difficult. To date, there is still no universal medical image segmentation technique for clinical applications, but researchers worldwide have reached a consensus on the general rules of image segmentation, and a considerable number of results and methods have been produced. According to their segmentation characteristics, the medical image segmentation methods widely used today can be classified mainly into edge-based methods, region-based methods, and methods combined with specific theories. Typical examples are threshold segmentation, region growing, wavelet transforms, statistics-based methods, and artificial neural network (ANN) based methods. 
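Threshold segmentation, the first of the typical methods listed above, can be illustrated with Otsu's method, which selects the gray level that maximizes the between-class variance of the histogram. This is a minimal NumPy sketch for 8-bit images; Otsu's method is one common automatic threshold and is not necessarily the variant used in this work.

```python
import numpy as np

def otsu_threshold(img: np.ndarray) -> int:
    """Return the threshold t (0-255) maximizing between-class variance.

    img must contain non-negative integers <= 255 (e.g. dtype uint8).
    Pixels with value <= t form one class, pixels > t the other.
    """
    hist = np.bincount(img.ravel(), minlength=256).astype(np.float64)
    prob = hist / hist.sum()
    omega = np.cumsum(prob)                  # class-0 probability for each t
    mu = np.cumsum(prob * np.arange(256))    # class-0 cumulative mean for each t
    mu_total = mu[-1]
    denom = omega * (1.0 - omega)
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = (mu_total * omega - mu) ** 2 / denom
    sigma_b[denom == 0] = 0.0                # thresholds that leave one class empty
    return int(np.argmax(sigma_b))

def threshold_segment(img: np.ndarray) -> np.ndarray:
    """Binary mask of the brighter class (candidate region of interest)."""
    return img > otsu_threshold(img)

img = np.array([[50, 50, 200],
                [50, 200, 200]], dtype=np.uint8)
print(otsu_threshold(img), threshold_segment(img).astype(int).tolist())
```

On real medical images the noise and inhomogeneity described above often blur the histogram's modes, which is one reason a single global threshold is rarely sufficient on its own.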
Based on these methods, two types of segmentation have emerged: automatic and manual. Automatic (intelligent) segmentation relies on high-performance computers to interpret medical images and perform the computations needed for fully automatic segmentation; manual segmentation requires a person to delineate and label regions in advance, after which the computer completes the segmentation.
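Region growing, another of the listed methods, also shows how manual and automatic steps combine: a user supplies a seed inside the region of interest, and the computer grows the region automatically. This is a minimal sketch using 4-connectivity and a fixed intensity tolerance relative to the seed value; the tolerance criterion and the function name are illustrative assumptions, one simple choice among many.

```python
from collections import deque
import numpy as np

def region_grow(img: np.ndarray, seed: tuple[int, int], tol: float) -> np.ndarray:
    """Grow a region from `seed`, adding 4-connected pixels whose value is
    within `tol` of the seed value. Returns a boolean mask."""
    h, w = img.shape
    seed_val = float(img[seed])
    mask = np.zeros((h, w), dtype=bool)
    mask[seed] = True
    queue = deque([seed])
    while queue:                      # breadth-first flood from the seed
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < h and 0 <= nc < w and not mask[nr, nc]:
                if abs(float(img[nr, nc]) - seed_val) <= tol:
                    mask[nr, nc] = True
                    queue.append((nr, nc))
    return mask

img = np.array([[10, 12, 80, 82],
                [11, 13, 81, 79],
                [90, 12, 85, 84]])
print(region_grow(img, seed=(0, 0), tol=5).astype(int))
```

The bright pixel at the lower left is excluded even though it touches the region, because it fails the intensity criterion.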

4. Analysis of Results

4.1. Smart Sensor Model Performance Analysis

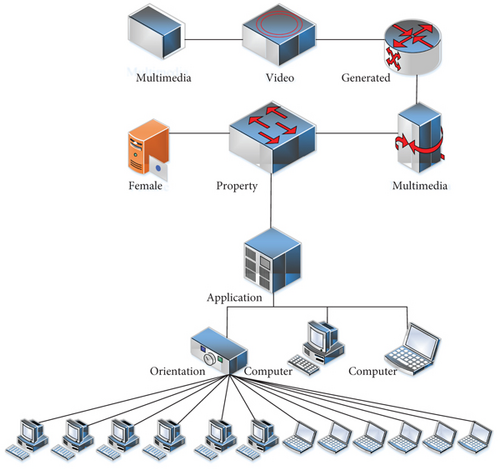

One way to create a vertical, three-dimensional display space is to insert mezzanines or hollow out parts of each floor, forming a display space with multiple "viewing platforms" stacked vertically. The multilayered space increases the area available to the audience and divides the display space into several clear vertical levels where visitors can stop. Each level is relatively independent yet visually connected to the others, so people gathered on each platform view the exhibition from different perspectives while interacting across levels and exchanging the information acquired from their respective viewpoints, which in turn guides the flow of visitors between levels. For example, in the design of the Kerkrade Museum in Limburg, the architects created a half-underground, half-above-ground spherical space for displaying digital images and used the lower hemisphere to form an inverted spherical gallery. To make full use of the space and accommodate more visitors, a circular glass platform was inserted as a mezzanine, and a staircase links the levels inside the hall, so that people can look down on the dome from different heights, an experience like looking back at the Earth from space, as shown in Figure 4.

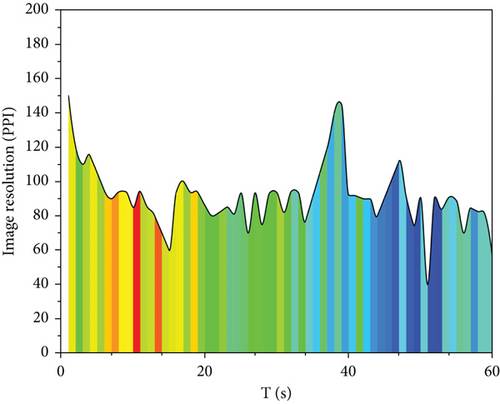

Shaping spatial differentiation is the main way landscape installations shape landscape space and stimulate public participation. Using a variety of defamiliarization methods to break habitual thinking produces a personalized visual effect while expressing the design concept, making the landscape installation unique and the whole landscape space more attractive. Virtual image technology provides more means of shaping spatial differentiation. In landscape space, strong color contrast sends visual signals to the public, and in human perception color also carries symbolic, cultural, and warning meanings. Depending on the designer's concept and the atmosphere required by the landscape space, different combinations of image color saturation, contrast, and brightness evoke different color impressions and connotations in the public. In the 2013 Sydney Christmas light show, St. Mary's Cathedral was the centerpiece: the designers used wall projection on the cathedral facade, and by changing the color of the building they changed both the audience's visual experience and their inner feelings. Green projection made the cathedral feel warm and romantic, whereas dark red projection created a majestic visual and emotional impression. The meaning of color is complex and differs between regions, as shown in Figure 5.

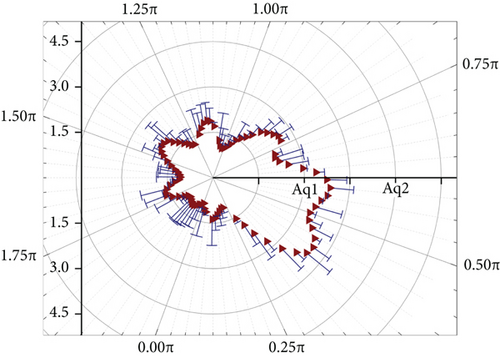

The coordinate systems commonly used in visual-inertial navigation are the world coordinate system, the camera coordinate system, and the IMU coordinate system. The world coordinate system is a fixed reference frame; the camera coordinate system is bound to the camera, with the shooting viewpoint as its origin; and the IMU coordinate system is bound to the IMU device and moves with it. In pure visual SLAM, the camera coordinate system of the first frame is generally used as the world coordinate system. Since the reconstruction method in this paper is single-view reconstruction, the world coordinate system is not considered separately: the camera coordinate system of the first panoramic image serves as the reference coordinate system, only the camera and IMU coordinate systems are fused to solve for the camera's trajectory and pose in the indoor scene, and the 3D models reconstructed from subsequent panoramic images are converted into the reference coordinate system to stitch the model together. The layout model reconstructed from each single panoramic image is expressed in that image's own camera coordinate system, so models reconstructed from different panoramas are initially independent of one another. To stitch multiple 3D models, the relative positions of the models, that is, the relative poses of the panoramic shooting viewpoints, must be known. When the IMU is rigidly fixed to the camera, the relative pose between two viewpoints, i.e., the relationship between the two camera coordinate systems, can be calculated from the IMU data recorded while switching viewpoints. 
The relationship between the two coordinate systems can be represented by a rotation matrix and a translation vector; once it is known, the model reconstructed in one camera coordinate system can be converted into the other, so that the models share the same coordinate system and can be stitched together. This is shown in Figure 6.
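The stitching step amounts to applying the relative rotation R and translation t between two shooting viewpoints to every vertex of the second model. This is a minimal sketch, assuming NumPy, that each (R, t) maps points from a panorama's camera frame into the reference (first) camera frame, and that it has already been estimated from the IMU data; the helper names and the pose in the usage lines are hypothetical.

```python
import numpy as np

def rot_y(theta: float) -> np.ndarray:
    """Rotation about the vertical y-axis by theta radians."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, 0.0, s],
                     [0.0, 1.0, 0.0],
                     [-s, 0.0, c]])

def to_reference(vertices: np.ndarray, R: np.ndarray, t: np.ndarray) -> np.ndarray:
    """Map (N, 3) vertices from camera frame k into the reference frame: p_ref = R p_k + t."""
    return vertices @ R.T + t

def stitch(models, poses):
    """Concatenate layout models after moving each into the reference frame.

    models: list of (N_i, 3) vertex arrays, one per panorama.
    poses: list of (R_i, t_i); the first panorama is the reference, so its
    pose is the identity.
    """
    return np.vstack([to_reference(v, R, t) for v, (R, t) in zip(models, poses)])

room_a = np.array([[1.0, 0.0, 0.0]])
room_b = np.array([[1.0, 0.0, 0.0]])
poses = [(np.eye(3), np.zeros(3)),
         (rot_y(np.pi / 2), np.array([2.0, 0.0, 0.0]))]  # hypothetical IMU-derived pose
print(np.round(stitch([room_a, room_b], poses), 6))
```

The same vertex coordinates land at different reference-frame positions because each model was expressed in a different camera frame, which is exactly why the relative pose is needed.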

4.2. Image Virtual Reconstruction Implementation

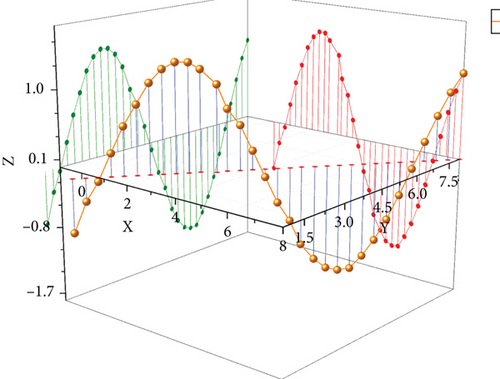

Let Ch denote the camera height, Rh the room height, and α_f and α_g the vertical offset angles of corresponding points of the ceiling area and the floor area, respectively, when projected onto the sphere. The camera height and the room height determine the relationship between the projections of the ceiling area and the wall area on the panoramic image. Camera calibration ensures that the horizontal vanishing line of the scene coincides with the x-axis of the image coordinate system, so that a ceiling-wall point and the floor-wall point directly below it in the real scene share the same x-coordinate when projected onto the panoramic image, while the relationship between their y-coordinates is determined by the projection scale. Interaction with the virtual model in the MAR system mainly consists of three operations: translation, rotation, and zoom. In practice, user gestures are essentially two-dimensional (the movement of the user's finger on the mobile device screen), whereas the MAR experience involves three real-world dimensions. Therefore, the system restricts rotation of the virtual medical model to a single axis and limits translation to the plane on which the virtual object was originally placed, simplifying interaction between the user and the virtual object. The projection-scale relationship between ceiling and floor points is used to calculate the floor contour lines corresponding to the contour line segments of the ceiling area in the image. Once the contour line segments of the ceiling and floor are determined, the ceiling area and floor area can be located in the image: the closed region enclosed by the ceiling contour line segments is the ceiling area, and in a panoramic image these segments often span the entire image width. 
When projected onto the sphere, the ceiling appears as a spherical region made up of several spherical triangles, each enclosed by a contour line segment and the lines connecting its endpoints to the upper vertex P of the sphere. In the image coordinate system, these spherical triangles become the image regions above the contour line segments; using this property, the ceiling area can be found in the image, as shown in Figure 7.
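The ceiling-to-floor relationship can be made concrete with simple geometry. For a wall at horizontal distance d from the camera, the ceiling-wall boundary lies Rh - Ch above the camera and the floor-wall boundary lies Ch below it, so tan(α_f) = (Rh - Ch)/d and tan(α_g) = Ch/d, giving tan(α_f)/tan(α_g) = (Rh - Ch)/Ch independently of d. The sketch below applies this to one image column, assuming an upright equirectangular panorama whose rows map linearly to latitude with the horizon at half the image height; this projection model and the function name are assumptions for illustration, not details stated in this paper.

```python
import math

def ceiling_to_floor_row(y_ceil: float, img_h: int, cam_h: float, room_h: float) -> float:
    """Given the row of a ceiling-wall boundary pixel in an equirectangular panorama,
    return the row of the floor-wall boundary in the same column.

    Assumes a gravity-aligned panorama with the horizon at img_h / 2, rows
    increasing downward, and a camera at height cam_h in a room of height room_h.
    """
    elev = math.pi * (0.5 - y_ceil / img_h)      # elevation angle of the ceiling point
    if elev <= 0:
        raise ValueError("ceiling boundary must lie above the horizon")
    # Same wall point, same horizontal distance d:
    # tan(elev) = (room_h - cam_h) / d and tan(depr) = cam_h / d.
    depr = math.atan(math.tan(elev) * cam_h / (room_h - cam_h))
    return img_h * (0.5 + depr / math.pi)       # depression angle back to a row

# Camera at mid-height: the floor boundary mirrors the ceiling boundary about the horizon.
print(ceiling_to_floor_row(156, img_h=512, cam_h=1.6, room_h=3.2))
```

Because the ratio does not depend on d, a single known pair (Ch, Rh) is enough to transfer the whole ceiling contour to the floor, column by column.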

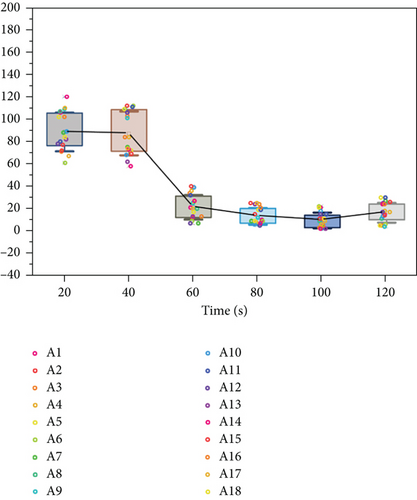

This section comprehensively verifies the entire experimental system using a real scene built on an experimental platform, and an error analysis is given for several of the experiments. In the camera calibration step, the camera's intrinsic parameters are calculated using two calibration plates. In the image correction step, the symmetry-axis errors obtained for the six images are all within 0.3 pixels. In addition, measurements of the long and short axes of the images before and after correction differ by 0.0934 pixels and 0.1229 pixels, respectively. The experimental object is a ceramic vase shaped as a body of revolution, with a maximum circumference of 43 cm and a height of 23 cm; its maximum (belly) diameter is therefore 13.6873 cm, and the ratio of maximum diameter to height is approximately 0.5951. Comparing the ratio of the two measured image axes with this ratio of the real artifact (data in the table above) gives a maximum error of 0.0018 and an average error of 0.0012. This accuracy is high enough for the generatrix (profile-curve) data obtained by the proposed algorithm to be used for 3D modeling. Finally, the resulting model is imported into the real scene to achieve virtual-real registration.
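The reference ratio for the vase follows directly from its stated dimensions: the maximum diameter is the maximum circumference divided by π, and the ratio is that diameter over the height. The sketch below reproduces this arithmetic and the maximum/average absolute error statistics; the function names are illustrative, and no measured ratios are included because the per-image values are not given in the text.

```python
import math

def reference_ratio(circumference_cm: float, height_cm: float) -> float:
    """Ratio of maximum diameter to height for a body of revolution."""
    diameter = circumference_cm / math.pi
    return diameter / height_cm

def error_stats(measured, reference: float) -> tuple[float, float]:
    """Maximum and mean absolute error of measured ratios against a reference ratio."""
    errors = [abs(m - reference) for m in measured]
    return max(errors), sum(errors) / len(errors)

print(round(43 / math.pi, 4))             # maximum diameter in cm: 13.6873
print(round(reference_ratio(43, 23), 4))  # diameter-to-height ratio: 0.5951
```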

5. Conclusion

The progress of digital technology has brought about an information revolution: the media of information dissemination have been digitally transformed, and people can access the information resources they need through various means anytime and anywhere. In the intelligent era, development and innovation must integrate technology and art: technology leads the transformation of art and design concepts and realizes art and design goals. The public's diversified functional and experiential needs are the internal force driving continuous innovation and development. Guided by policies for building public digital culture, virtual image technology has been widely adopted. It effectively overcomes the technical, formal, application, and site constraints of visual communication and, with the characteristics of digital technology in the intelligent era, supports diversified functions such as dynamic display, game entertainment, and daily assistance, bringing a multisensory immersive experience of vision, hearing, and touch and thereby enhancing cultural and commercial value. Visual products can thus better serve the construction of interactive cultural experience zones, enhance the interactivity and enjoyment of public cultural services, meet the public's diversified functional and experiential needs, and realize innovation in multisensory immersive experience, emotional experience, service experience, and commercial value.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgments

The study was supported by the School of Journalism and Media, Chongqing Normal University.

Open Research

Data Availability

The data used to support the findings of this study are available from the corresponding author upon request.