[Retracted] Scene Classification Using Deep Networks Combined with Visual Attention

Abstract

Scenes are complex and diverse, which makes scene classification difficult. This paper uses the contextual semantic relationships between objects to describe the visual attention regions of a scene and combines them with deep convolutional neural networks to construct a scene classification model based on visual attention and deep networks. First, the visual attention regions in the scene image are marked with a context-based saliency detection algorithm. Then, the original image and the visual attention region detection image are superimposed to obtain a visual attention region enhancement image. Next, the deep convolutional features of the original image, the visual attention region detection image, and the visual attention region enhancement image are extracted with deep convolutional neural networks pretrained on the large-scale scene image dataset Places. Finally, deep visual attention features are built from the multilayer deep convolutional features of the networks, and a classification model is constructed. To verify the effectiveness of the proposed model, experiments are carried out on four standard scene datasets: LabelMe, UIUC-Sports, Scene-15, and MIT67. The results show that the proposed model improves classification performance and adapts well across datasets.

1. Introduction

As a basic problem in computer vision and image understanding, scene image classification has received extensive attention and research [1–7]. The most important problem in scene classification is to represent the content of the scene properly. To improve classification accuracy, researchers have explored several directions. One approach combines global, local, and mid-level features into a visual bag-of-words, so that a scene image is represented as a combination of visual words [8]. Another uses iteration and cross-validation to find discriminative image patches, which serve as a mid-level image representation [9]. The mean-shift algorithm has been used to find discriminative modes in the distribution space of image patches, so as to build a mid-level scene representation [10]. By establishing metric learning formulations and learning the best metric parameters, online metric learning and parallel optimization of large-scale, high-dimensional data can be addressed [2]. Although these methods achieve reasonable classification results, their performance degrades when the scene image contains many objects or complex content.

In recent years, deep convolutional networks have made it possible to obtain richer high-level semantics from images [11–13]. Donahue et al. directly used convolutional neural networks (CNNs) pretrained on the ImageNet dataset for scene classification [14]. Zhou et al. constructed a large-scale scene-centered dataset and trained a convolutional neural network on it [15], which significantly improved scene classification performance. Bai proposed using CNN transfer learning so that deep features express specific scene targets for classification [16]. Zou et al. built a fusion method based on nonnegative matrix factorization, which preserves the nonnegativity of the features and improves their representation performance. They further developed an adaptive feature fusion and boosting algorithm to improve the efficiency of image features. The proposed feature fusion method has two versions, one for nonnegative single-feature fusion and one for multifeature fusion [1].

Zhang et al. proposed a spatially aware aggregation network for scene classification, which detects a set of visually and semantically salient regions in each scene through a semisupervised, structure-preserving nonnegative matrix factorization (NMF). A gaze shift path (GSP) was used to characterize how a human perceives each scene image, and a spatially aware CNN called SA-NET was developed to describe each GSP in depth. Finally, the deep GSP features learned from the whole scene image are integrated into an image kernel, which is fed to a kernel SVM to classify the scene [3]. Yee et al. proposed the DeepScene model, which uses a convolutional neural network as the base architecture, converts grayscale scene images to RGB images, and incorporates Spatial Pyramid Pooling into the network [17]. These methods have greatly improved scene classification results.

However, most current algorithms regard the scene as a combination of multiple objects [18–20] and do not describe the contextual semantic relations between objects, which restricts the accuracy of scene classification [21]. To address this, this paper uses a context-based saliency detection algorithm [22] to mark the visual attention regions of the scene. These regions contain the main targets of the scene together with the part of the background that expresses their context. Combined with deep convolutional neural networks, this yields a scene classification model that fuses deep features with visual attention. The model overcomes the limitation of classifying with object and structure features alone and obtains good scene classification performance.

2. The Construction of Scene Classification Model

To adapt to the diversity of images, this paper treats the context-based saliency of an image as its visual attention feature, superimposes it on the original image, and feeds the result into a deep convolutional network to build the scene classification model. The model therefore expresses the deep intrinsic characteristics of the image and, at the same time, the contextual semantic features between the targets in the scene.

2.1. Detection of Areas of Visual Attention

The area that has a major influence on visual judgment is called the area of visual attention. Here, the context-based saliency detection algorithm proposed by Goferman et al. is used to extract the visual attention areas of the image [22]. The extracted saliency areas take full account of global and local features at different scales and mark salient targets together with their adjacent areas to varying degrees. The result reflects the contextual relationship between the objects in the scene and their surroundings and filters out some repetitive texture information.
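
To make the idea of context-based saliency concrete, the following is a minimal single-scale sketch and not the full algorithm of [22]. It assumes the core rule of that method, where a patch is salient when even its most similar patches differ from it in color, with nearby patches counting more than distant ones. The function name `patch_saliency`, the use of per-patch mean colors, and the parameter defaults are illustrative assumptions; the multiscale analysis and the context-expansion step of the original algorithm are omitted.

```python
import numpy as np

def patch_saliency(image, patch=8, k=16, c=3.0):
    """Single-scale, patch-level saliency in [0, 1) for an HxWx3 image.

    Simplified sketch: each patch is described only by its mean colour.
    Memory grows quadratically with the number of patches.
    """
    h, w = image.shape[0] // patch, image.shape[1] // patch
    # Split the image into non-overlapping patches and average each one.
    blocks = image[:h * patch, :w * patch].reshape(h, patch, w, patch, -1)
    color = blocks.mean(axis=(1, 3)).reshape(h * w, -1)
    # Patch grid positions, normalised by the larger grid dimension.
    ys, xs = np.mgrid[0:h, 0:w]
    pos = np.stack([ys.ravel(), xs.ravel()], axis=1) / max(h, w)
    # Pairwise colour and position distances between all patches.
    d_color = np.linalg.norm(color[:, None] - color[None], axis=2)
    d_color /= d_color.max() + 1e-12
    d_pos = np.linalg.norm(pos[:, None] - pos[None], axis=2)
    # Dissimilarity is large when colours differ and the patches are close.
    d = d_color / (1.0 + c * d_pos)
    # Average over the k most similar other patches (index 0 is the patch itself).
    k = min(k, h * w - 1)
    nearest = np.sort(d, axis=1)[:, 1:k + 1]
    saliency = 1.0 - np.exp(-nearest.mean(axis=1))
    return saliency.reshape(h, w)
```

A patch that repeats elsewhere in the image (for example, a regular texture) finds close matches and receives a low score, which matches the suppression of repetitive texture described above.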

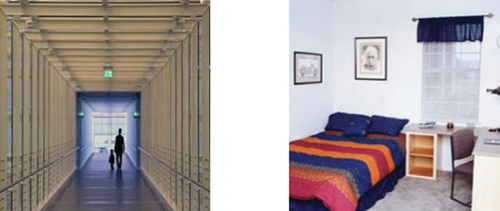

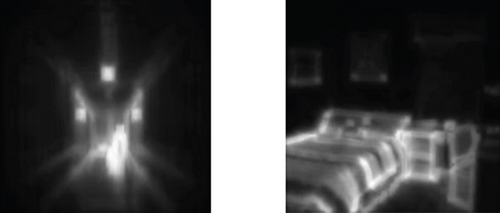

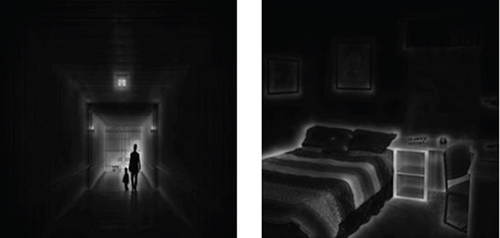

Figure 1 shows an example of detecting the area of visual attention. The brightness at each position in Figure 1(b) indicates the visual attention at that position. It can be seen that the attention given to the background varies with its proximity to the target.

2.2. Construction of Enhanced Images of Visual Areas of Concern

Although the original image contains comprehensive scene information, it does not distinguish informative regions from uninformative ones. To emphasize scene regions according to how much information they carry, the visual attention region detection map is superimposed on the original image to obtain the visual attention region enhancement image.

Figure 2 shows the enhancement of the visual attention area. Different areas in the scene receive different visual attention, and some repeated textures and less prominent regions are effectively suppressed, such as the passage in the airport scene and the decorative paintings in the bedroom. While preserving visual attention, the superimposed images supplement the information of some gray areas (transitional areas between the attended and unattended areas).
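
The text does not state the exact superposition rule, so the following sketch shows one plausible choice under stated assumptions: the saliency map scales the original image, and a `weight` parameter keeps part of the original intensity everywhere so that low-attention regions are dimmed and not erased. The function name `enhance_attention` and the default `weight=0.5` are hypothetical.

```python
import numpy as np

def enhance_attention(image, saliency, weight=0.5):
    """Blend an HxWx3 image in [0, 1] with its HxW saliency map in [0, 1].

    Assumed rule (not taken from the paper):
        enhanced = image * ((1 - weight) + weight * saliency)
    """
    image = np.asarray(image, dtype=np.float64)
    saliency = np.asarray(saliency, dtype=np.float64)
    if image.shape[:2] != saliency.shape:
        raise ValueError("saliency map must match the image height and width")
    # Add a trailing axis so the map broadcasts over the colour channels.
    gain = (1.0 - weight) + weight * saliency[..., None]
    return np.clip(image * gain, 0.0, 1.0)
```

With this rule, a fully attended pixel keeps its original value and a fully unattended pixel is scaled by `1 - weight`, so transitional areas receive intermediate emphasis.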

2.3. Deep Feature Fusion

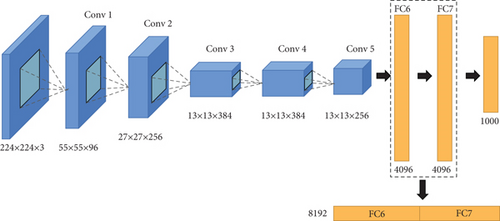

To describe the content attributes of scene images effectively, the AlexNet model pretrained on the large-scale scene dataset Places is used to extract the deep convolutional features of the original image, the visual attention region detection image, and the visual attention region enhancement image. In addition, since different layers of a deep convolutional network express the original image data at different levels of abstraction, the outputs of multiple fully connected layers of the network are used in this paper to form the deep fusion feature, which is the final representation of the scene image.
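
The fusion step can be sketched as a concatenation of layer outputs across the three images. The sketch assumes that the activations have already been extracted by some network; the layer names `fc6` and `fc7`, the per-vector L2 normalization, and the helper names are illustrative assumptions, since the text only says that multiple fully connected layers are used.

```python
import numpy as np

# Hypothetical keys for the three inputs described in the text.
IMAGE_KINDS = ("original", "detection", "enhanced")

def l2_normalize(vector, eps=1e-12):
    """Scale a 1-D feature vector to unit Euclidean length."""
    vector = np.asarray(vector, dtype=np.float64)
    return vector / (np.linalg.norm(vector) + eps)

def fuse_deep_features(features):
    """Concatenate per-image, per-layer activations in a fixed order.

    `features` maps an image kind to a dict of layer name -> 1-D activation.
    Normalising each vector first is an assumption, made so that no single
    layer dominates the fused descriptor.
    """
    parts = []
    for kind in IMAGE_KINDS:
        for layer in sorted(features[kind]):
            parts.append(l2_normalize(features[kind][layer]))
    return np.concatenate(parts)
```

For example, with two 4096-dimensional fully connected outputs per image, the fused descriptor would have 3 × 2 × 4096 = 24576 dimensions.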

Finally, the deep visual attention features of the training images in the target dataset are fed into a random forest to train the classifier, and the trained classifier is used for scene classification. Because the extracted features capture both the contextual semantic relationships between objects in the image and the intrinsic deep characteristics of the scene, the effectiveness of scene classification is greatly improved.
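
The classification stage can be sketched with scikit-learn's `RandomForestClassifier`. This is an illustration under stated assumptions: the text does not name a library or give hyperparameters, so the number of trees, the random seed, and the synthetic features that stand in for the fused descriptors are all hypothetical.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

def train_scene_classifier(train_x, train_y, n_trees=100, seed=0):
    """Fit a random forest on fused feature vectors (one row per image)."""
    forest = RandomForestClassifier(n_estimators=n_trees, random_state=seed)
    forest.fit(train_x, train_y)
    return forest

# Synthetic stand-in for fused features: three well-separated classes.
rng = np.random.default_rng(0)
centers = rng.normal(size=(3, 32)) * 5.0
train_y = np.repeat(np.arange(3), 40)
test_y = np.repeat(np.arange(3), 10)
train_x = centers[train_y] + rng.normal(size=(120, 32))
test_x = centers[test_y] + rng.normal(size=(30, 32))

classifier = train_scene_classifier(train_x, train_y)
# Fraction of held-out samples whose predicted class matches the true class.
accuracy = float((classifier.predict(test_x) == test_y).mean())
```

In practice `train_x` would hold one fused descriptor per training image and `train_y` the scene labels of the target dataset.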

3. Experimental Results and Analysis

3.1. Datasets and Experimental Settings

- (i) The LabelMe (OT) dataset contains 2688 color images in 8 categories, all 256 × 256 in size. In each category, 200 images were randomly selected for training, and the remaining images were used for testing.
- (ii) The UIUC-Sports (SE) dataset contains 1579 color sports scene images of different sizes in 8 categories. In each category, 70 images were randomly selected for training and 60 images for testing.
- (iii) The Scene-15 (LS) dataset contains a total of 4485 indoor and outdoor scene images in 15 categories, of which 8 categories are the same as in the LabelMe dataset. In each category, 100 images were randomly selected for training, and the remaining images were used for testing.
- (iv) MIT67 (IS) is a challenging indoor scene image dataset containing a total of 15,620 images in 67 categories. In each category, 80 images were randomly selected for training and 20 images for testing.
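
The per-category random splits listed above can be sketched as follows. The function name `split_per_class` and the seed handling are assumptions for illustration; the text does not say how the random selection was implemented.

```python
import random
from collections import defaultdict

def split_per_class(labels, n_train, n_test=None, seed=0):
    """Return (train_idx, test_idx) with n_train random samples per class.

    If n_test is None, all remaining samples of a class go to the test set;
    otherwise exactly n_test of the remaining samples are used.
    """
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    rng = random.Random(seed)
    train_idx, test_idx = [], []
    for label in sorted(by_class):
        indices = by_class[label]
        if len(indices) < n_train + (n_test or 0):
            raise ValueError(f"class {label!r} has too few samples")
        rng.shuffle(indices)
        train_idx.extend(indices[:n_train])
        rest = indices[n_train:]
        test_idx.extend(rest if n_test is None else rest[:n_test])
    return train_idx, test_idx
```

Under this sketch, the OT protocol would correspond to `split_per_class(labels, 200)` and the SE protocol to `split_per_class(labels, 70, 60)`.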

3.2. Classification Performance Evaluation

Figure 5 shows the comparison test results of classification on four datasets between deep learning features without visual attention region detection and features proposed in this paper by using the same classification method.

It can be seen that the features proposed in this paper have an effect on all test datasets, and the improvement in classification accuracy is largest on the LS dataset. Because this dataset contains both indoor and outdoor scenes, the result indicates that the algorithm generalizes across scene types. The classification of simple outdoor scenes is also significantly improved. However, the improvement on the SE and IS datasets is limited, mainly because the scenes contain many objects and the contextual relationships of the prominent objects are complex. In many scenes, people are not the main objects that distinguish the scene, but they are sometimes enhanced as prominent objects, which interferes with the discrimination of scene content. In particular, scene discrimination in the SE dataset is mainly determined by the relationship between people's actions and the scene, while people's actions are sometimes very similar across scenes. Therefore, the benefit of the visual attention region detection algorithm on these datasets is limited.

Precision and recall were used to evaluate and analyze the results on the OT, SE, and LS datasets.
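
As a worked example of these two measures, the sketch below recomputes them from the OT counts reported in Table 1. It assumes that rows are true classes and columns are predicted classes, which is consistent with the recall column and precision row of that table, and it reads the dash entries as zero. The function name `precision_recall` is illustrative.

```python
import numpy as np

# Rows: true class, columns: predicted class (counts copied from Table 1).
confusion = np.array([
    [154,   2,  0,   0,   0,   4,  0,   0],  # MITcoast
    [  0, 127,  0,   0,   1,   0,  0,   0],  # MITforest
    [  0,   0, 59,   0,   0,   1,  0,   0],  # MIThighway
    [  0,   0,  0, 108,   0,   0,  0,   0],  # MITinsidecity
    [  0,   1,  0,   0, 171,   2,  0,   0],  # MITmountain
    [  7,   5,  0,   0,   1, 197,  0,   0],  # MITopencountry
    [  0,   0,  0,   0,   0,   0, 91,   1],  # MITstreet
    [  0,   0,  0,   0,   0,   0,  0, 156],  # MITtallbuilding
])

def precision_recall(cm):
    """Per-class precision and recall plus overall accuracy."""
    cm = np.asarray(cm, dtype=np.float64)
    correct = np.diag(cm)
    recall = correct / cm.sum(axis=1)     # correct / all images of the true class
    precision = correct / cm.sum(axis=0)  # correct / all images predicted as the class
    accuracy = correct.sum() / cm.sum()
    return precision, recall, accuracy

precision, recall, accuracy = precision_recall(confusion)
```

For "MITcoast", for instance, recall is 154 of the 160 test images in the row and precision is 154 of the 161 images in the column.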

Table 1 shows the confusion matrix obtained in one test of the proposed method on the OT dataset. The method achieves 100% precision and recall for the “MITinsidecity” class and also classifies the other categories well. The “MITopencountry” class is the most easily confused with the “MITcoast” class. Figure 6 shows some misclassified images from the OT dataset. These two “MITopencountry” images are misclassified as “MITcoast” because the contextual relationship between sky and land in “MITopencountry” is similar to that between sky and coast in “MITcoast,” and the lawns and deserts on the slopes have a texture similar to that of the sea surface.

| Category | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | Recall (%) |
|---|---|---|---|---|---|---|---|---|---|
| MITcoast (1) | 154 | 2 | — | — | — | 4 | — | — | 96.3 |
| MITforest (2) | — | 127 | — | — | 1 | — | — | — | 99.2 |
| MIThighway (3) | — | — | 59 | — | — | 1 | — | — | 98.3 |
| MITinsidecity (4) | — | — | — | 108 | — | — | — | — | 100 |
| MITmountain (5) | — | 1 | — | — | 171 | 2 | — | — | 98.3 |
| MITopencountry (6) | 7 | 5 | — | — | 1 | 197 | — | — | 93.8 |
| MITstreet (7) | — | — | — | — | — | — | 91 | 1 | 98.9 |
| MITtallbuilding (8) | — | — | — | — | — | — | — | 156 | 100 |
| Precision (%) | 95.7 | 94.1 | 100 | 100 | 98.8 | 96.6 | 100 | 99.4 | |

Table 2 shows the confusion matrix obtained in one test of the proposed method on the LS dataset. The most easily confused category pairs are “Bedroom” and “Livingroom,” and “MITtallbuilding” and “Industrial.” Figure 7 shows some misclassified images from the LS dataset. In (a), the “Industrial” scene images are misclassified as “MITtallbuilding” because the high-rise structures in the image look very similar to tall buildings and their contextual relationship with the surrounding environment is similar to that of “MITtallbuilding.” On the right, the “PARoffice” scene image is misclassified as “Kitchen.” The reason is that the cabinet in the upper part of the image has the same position and appearance as a kitchen cabinet, and its contextual relationship with the desktop is similar to that in “Kitchen,” which causes the misclassification.

| Category | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | Recall (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Bedroom (1) | 107 | — | — | — | 9 | — | — | — | — | — | — | — | — | — | — | 92.2 |
| CALsuburb (2) | — | 141 | — | — | — | — | — | — | — | — | — | — | — | — | — | 100 |
| Industrial (3) | — | — | 192 | 1 | 1 | — | — | — | 3 | — | — | 1 | 5 | — | 8 | 91.0 |
| Kitchen (4) | — | — | — | 103 | 2 | — | — | — | — | — | — | — | — | 4 | 1 | 93.6 |
| Livingroom (5) | 4 | — | — | 2 | 179 | — | — | — | 1 | — | — | — | — | 2 | 1 | 94.7 |
| MITcoast (6) | — | — | — | — | — | 252 | 2 | — | — | 1 | 5 | — | — | — | — | 96.9 |
| MITforest (7) | — | — | — | — | — | — | 221 | — | — | 6 | 1 | — | — | — | — | 96.9 |
| MIThighway (8) | — | — | 1 | — | — | — | — | 158 | — | — | 1 | — | — | — | — | 98.8 |
| MITinsidecity (9) | — | 2 | 5 | 3 | — | — | — | — | 194 | — | — | 3 | 1 | — | — | 93.3 |
| MITmountain (10) | — | — | — | — | — | — | — | — | — | 273 | 1 | — | — | — | — | 99.6 |
| MITopencountry (11) | — | — | — | — | — | 6 | 1 | — | — | 6 | 297 | — | — | — | — | 95.8 |
| MITstreet (12) | — | — | 3 | — | — | — | — | — | 2 | — | — | 186 | — | — | 1 | 96.9 |
| MITtallbuilding (13) | — | — | 9 | — | — | — | 2 | — | 1 | — | — | — | 243 | — | 1 | 94.9 |
| PARoffice (14) | 1 | — | — | 3 | 1 | — | — | — | 1 | — | — | — | — | 109 | — | 94.8 |
| Store (15) | — | — | 1 | 1 | 1 | — | — | — | — | — | — | — | 1 | — | 211 | 98.1 |
| Precision (%) | 95.5 | 98.6 | 91.0 | 91.2 | 92.7 | 97.7 | 97.8 | 100 | 96.0 | 95.5 | 97.4 | 97.9 | 97.2 | 94.8 | 94.6 | |

Table 3 shows the confusion matrix obtained in one test of the proposed method on the SE dataset. The method achieves 100% precision and recall for the “Sailing” class. However, “Bocce” has the lowest precision and recall and is most easily confused with “Croquet.” These two scenes are identified mainly by the players' movements and the relationship between the players and the scene, and both the movements and the surrounding environments of the two sports are very similar, so they are easy to misjudge.

| Category | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | Recall (%) |
|---|---|---|---|---|---|---|---|---|---|
| Badminton (1) | 57 | 3 | — | — | — | — | — | — | 95.0 |
| Bocce (2) | 3 | 45 | 9 | — | 2 | — | — | 1 | 75.0 |
| Croquet (3) | — | 5 | 54 | — | — | 1 | — | — | 90.0 |
| Polo (4) | 1 | 3 | — | 56 | — | — | — | — | 93.3 |
| RockClimbing (5) | — | — | — | — | 59 | — | — | 1 | 98.3 |
| Rowing (6) | — | — | — | — | — | 60 | — | — | 100 |
| Sailing (7) | — | — | — | — | — | — | 60 | — | 100 |
| Snowboarding (8) | 1 | — | — | — | — | — | — | 59 | 98.3 |
| Precision (%) | 91.9 | 80.4 | 85.7 | 100 | 96.7 | 98.4 | 100 | 96.7 | |

3.3. Comparison of Experimental Results

The experimental results of the proposed method on the four standard scene datasets were compared with those of reference methods.

The comparison results on the OT dataset are shown in Table 4. Algorithms that use deep convolutional networks have a clear advantage over traditional feature extraction algorithms. GECMCT method [28] adds the far neighborhood information to the nonparametric transformation calculation and spatial information. The Gist feature and the spatial correction census transform are combined to form a new image descriptor, but this method lacks a deep description of scene images. The HGD algorithm [29] uses pLSA to train a multichannel classifier on the topic distribution vector of each image; its modeling is complex and its classification performance is limited. Compared with other algorithms that use deep convolutional networks, the deep convolutional classification model constructed in this paper, which is based on the visual attention regions of images, classifies noticeably better.

The comparison results on the SE dataset are shown in Table 5. The classification performance of the proposed model is significantly better than that of the other classification algorithms. Among them, the SKDES+Grad+color+shape method [8] embeds image and label information into patch-level kernel descriptors to form supervised kernel descriptors and uses a visual bag-of-words to learn low-level patch representations. The implementation of this method is relatively complex, and the representational ability of the visual bag-of-words is limited. The LGF method classifies with global and local image features but does not analyze the image content, whereas the method in this paper applies visual attention region detection to weight the different regions of the image and combines it with a convolutional network pretrained on the Places dataset, so that it captures the spatial structure of the image more effectively [30]. AdaNSFF-Color boost [1] proposes a novel fusion framework of adaptive nonnegative feature fusion (AdaNFF) for scene classification. AdaNFF integrates nonnegative matrix factorization, adaptive feature fusion, and feature fusion boosting into an end-to-end process. However, although this method fuses and enhances features, its training data is not scene-specific, which affects its generalization ability.

The comparison results on the LS dataset are shown in Table 6. The algorithm in this paper still classifies well: it is better than traditional classification methods and also better than many classification methods that use deep learning. The SDO+fc features algorithm [4] uses the co-occurrence patterns of all objects across scenes and the correlation of different objects within scene configurations to select representative and discriminative objects, which enhances the discriminability between classes. It represents the image descriptor by the occurrence probabilities of the discriminative objects in image patches, which eliminates the influence of objects that are common to many scenes. Although the algorithm considers the correlation between objects in the scene, it is still limited to the objects themselves and does not consider the background area adjacent to each object, so its classification performance is limited. DeepScene [17] integrates Spatial Pyramid Pooling into the convolutional neural network to perform multilevel pooling on the scene image and uses a weighted average ensemble of convolutional neural networks to fuse the class scores, thus improving overall scene classification performance. However, this method still treats the scene as a whole and does not enhance the information that represents the scene, so its classification performance is limited.

| Methods | Accuracy (%) |
|---|---|
| Bow [25] | 74.80 |
| CMN [36] | 77.20 |
| SPMSM [37] | 82.50 |
| GECMCT [28] | 82.96 |
| EMFS [38] | 85.70 |
| DLGB (saliency) [5] | 87.40 |
| O2C kernels [18] | 88.80 |
| ISPR+IFV [39] | 91.06 |
| Hybrid-CNN [15] | 91.59 |
| DGSK [3] | 92.30 |
| MKL [30] | 92.50 |
| DDSFL+Caffe [11] | 92.81 |
| DeepScene [17] | 95.60 |
| SDO+fc features [4] | 95.88 |
| Ours | 96.01 |

The comparison results on the IS dataset are shown in Table 7. As on the other datasets, convolutional neural networks are in general significantly better than traditional features, and the classification results in this paper still have a clear advantage. Among the compared methods, the Places-CNN algorithm [15], which uses a network pretrained on the Places dataset, outperforms the ImageNet-CNNS algorithm [11], which uses a network pretrained on the ImageNet dataset, by nearly 12%, mainly because a network trained on scenes is more effective at judging the scene category. The hybrid-CNN algorithm uses a network pretrained on both the ImageNet and Places datasets to extract deep convolutional features of images [15], so its classification performance improves on the first two algorithms. The algorithm in this paper uses only a convolutional neural network pretrained on the Places dataset, combined with the context information of the visual attention area of the image, and still classifies better than the hybrid-CNN algorithm.

4. Conclusions

This paper proposes a scene classification model based on deep features of visual attention regions. Context-based saliency detection is used to detect the visual attention regions of the scene image, and the detection result is superimposed on the original image to obtain the visual attention region enhancement image. The three images are then fed into AlexNet separately to extract deep visual attention features, and finally these features are fed into a random forest classifier for training and classification.

Because the deep visual attention feature extraction model in this paper describes both the target information in the image and the contextual semantic information between the target and the surrounding scene, different regions receive different degrees of visual attention, and the ability to express scene characteristics is improved. Combined with multilayer deep convolutional features, a deep visual representation of the scene image is constructed. The test results on four standard scene image datasets verify the effectiveness of the proposed method, which outperforms several well-performing methods.

The method performs well on the test datasets as a whole. However, when the content of an individual scene image is itself ambiguous, or when the visual attention region does not fully express the scene, misclassification still occurs. Future research will therefore mine the contextual information of visual attention more deeply in order to obtain better classification results.

Conflicts of Interest

The authors declare that there is no conflict of interest regarding the publication of this paper.

Acknowledgments

This research was funded by the Natural Science Basic Research Program of Shaanxi Province, Grant Number 2021JQ-487.

Open Research

Data Availability

The data used to support the findings of this study are available from the corresponding author upon request.