[Retracted] Research of Vertical Domain Entity Linking Method Fusing Bert-Binary

Abstract

To address unclear entity boundaries and low recognition accuracy in Chinese text, we construct a crop dataset and propose a Bert-binary-based entity linking method. Candidate entity sets are generated by matching entities against multiple data sources. The Bert-binary model then computes the probability that each candidate entity is correct, and the highest-scoring entity is selected for linking. In comparative experiments with three models on the crop dataset, the F1 value is 2.5% higher than that of the best competing method and 8.8% higher on average. The experimental results demonstrate the effectiveness of the proposed Bert-binary method.

1. Introduction

As a key technology of natural language processing, entity linking is widely used in knowledge representation, knowledge retrieval, and other fields [1]. Entity linking methods mainly include dictionary-based methods [2–4], search engine methods [5, 6], context augmentation methods, and deep learning methods. In recent years, with the development of deep learning and its wide application in various fields, research on deep learning-based entity linking has attracted increasing attention [5, 7–29].

Ganea and Hofmann [7] performed joint disambiguation in entity linking by combining local and global methods. Le and Titov [8] proposed a neural entity linking model that introduces entity relations and optimizes entity linking end to end, treating the relations as latent variables. Hosseini et al. [9] proposed a neural embedding-based feature function that enhances implicit entity linking through prior term dependencies and entity-based feature function interpolation. Shengchen et al. [10] proposed a domain-integrated entity linking method based on relation index and representation learning to address the problem that existing entity linking methods cannot combine text information and knowledge base information well. Xie et al. [11] proposed the GRCCEL (graph-ranking collective Chinese entity linking) algorithm to address the problems of ignoring semantic associations between entities and being limited by the size of the knowledge graph; it uses the structural relationships between entities in the knowledge graph, together with additional background information from external knowledge sources, to obtain more semantic and structural information and thus a stronger ability to distinguish similar entities. Xia et al. [12] proposed an integrated entity linking algorithm that uses topic distribution to represent knowledge and generate candidate entities. Rosales-Méndez et al. [13] designed a questionnaire, proposed a fine-grained entity linking classification scheme based on the survey results to distinguish different types of entities and relationships, relabeled three general entity linking datasets according to the scheme, and created a fine-grained entity linking system. Wang et al. [14] explored methods for resolving entity ambiguity in heterogeneous information networks (HINs). Huang et al. [15] constructed an entity linking model combining a deep neural network and an association graph, which enhances entity reference and entity representations by adding character features, context, and deep semantic information and then performs similarity matching. Li et al. [16] constructed an entity linking model and proposed a candidate entity generation method that combines knowledge base matching with word vector similarity calculation. Zhou et al. [17] proposed a graph-based joint feature method that preprocesses the knowledge base and text, combines the semantic similarity of multiple features, and uses random walk with restart and joint disambiguation to select the linked entities for entity references in a graph model. Zhan et al. [18] introduced the BERT pretrained language model into the entity linking task and used TextRank keyword extraction to enhance the topic information in the comprehensive description of the target entity.

The main tasks of entity linking are candidate entity generation and entity disambiguation [30]. Common methods of candidate entity generation include dictionary-based construction [19–22], context-based augmentation [23–25], and search engine-based construction [5, 26]. Entity disambiguation resolves the generated candidate entity set to determine the target entity in the current context; the mainstream approach is based on deep learning [7, 27–29].

We make the following contributions in this paper: (1) we propose a Bert-binary entity linking method that combines Bert with binary classification; (2) we give an algorithm for candidate entity set generation; (3) we propose a method for candidate entity disambiguation.

2. Model Framework

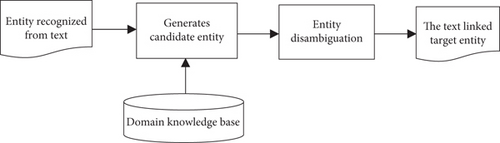

The Bert-binary entity linking method takes text that has undergone named entity recognition, identifies one or more entity references to be linked, generates a candidate entity set, performs joint disambiguation over all entity references, and returns the target entity in the knowledge base that corresponds to each entity reference. The process is shown in Figure 1.

The Bert-binary entity linking method comprises two main tasks: candidate entity generation and candidate entity disambiguation.

3. Candidate Entity Set Generation

The quality of the candidate entities determines the effect of entity linking [31]. Entity references identified by named entity recognition may be ambiguous: the same semantic entity may be expressed in different ways, and different semantic entities may share the same expression. Therefore, this paper constructs, for a specific domain, an entity mapping table that should contain all candidate entities corresponding to each entity reference. Taking the agricultural domain as an example, some of the constructed entity mappings are shown in Table 1.

| Entity reference | Candidate entities corresponding to the entity reference |
|---|---|
| Rice | Paddy, millet |
| Clouds of rice disease | Brown leaf blight, leaf burn |
| Peony leaf tip blight | Tip-white blight |
| Potato | yáng yù, mǎ líng shǔ, dì dàn |
| Rice paddies aphids | Macrosiphum avenae, Sitobion avenae |
| Downy mildew | Yellow stunt |
| Rice blast | Fire blast, knock blast |
| Sheath blight | Sharp eyespot, Moire disease |
| … | … |

Based on the constructed domain entity reference mapping table, the type of entity reference is determined according to the entity reference and its context information. The knowledge base and semantic dictionary are used to determine the candidate entity corresponding to the entity reference through retrieval, and the candidate entity set is generated. The specific processing is shown in Algorithm 1.

Algorithm 1: Candidate entity generation.

Input: dictionary D; set of entity mentions M (m ∈ M); set of named entities key = (k: value)

Output: candidate entity set E_m

1: for each m ∈ M do

2: if (k == m ‖ entity name ∈ entity mention) then

3: E_m = E_m.add(key)

4: else if (k exactly matches the first letters of all words in m) then

5: E_m = E_m.add(key)

6: end if

7: end for
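The matching rules of Algorithm 1 can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: the function names `first_letters` and `generate_candidates`, the representation of the named entity set as a plain `dict`, and the reading of "entity name ∈ entity mention" as a substring test are all assumptions, and the sample entries are hypothetical.

```python
def first_letters(text: str) -> str:
    """Return the lowercased first letter of every whitespace-separated word."""
    return "".join(word[0].lower() for word in text.split())


def generate_candidates(mentions, named_entities):
    """Collect candidate entities for each mention.

    mentions       -- iterable of mention strings (the set M)
    named_entities -- dict mapping an entity name k to its value
                      (assumed stand-in for the `key = (k: value)` input)
    """
    candidates = {}
    for m in mentions:
        found = []
        for k, value in named_entities.items():
            # Rule 1: the entity name equals the mention or occurs inside it
            # (substring test is an assumed reading of the pseudocode).
            exact_or_contained = k == m or k in m
            # Rule 2: the entity name equals the initials of the mention's words.
            is_abbreviation = k.lower() == first_letters(m)
            if exact_or_contained or is_abbreviation:
                found.append((k, value))
        candidates[m] = found
    return candidates


# Hypothetical entries, for illustration only.
entities = {
    "rice blast": "disease",
    "rb": "disease abbreviation",
    "Ningxia": "place name",
}
result = generate_candidates(["rice blast", "Ningxia rice"], entities)
```

Here the mention "rice blast" collects both the exact match and the abbreviation "rb", while "Ningxia rice" collects "Ningxia" through the containment rule.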

The candidate entity set is generated by fuzzy matching against a synonym dictionary and CNKI. Taking Ningxia rice as an example, the text to be processed is as follows: “In recent years, varieties widely promoted in Ningxia production include: Gongyuan 4, Ningjing 23, Ningjing 24….” The entities contained in the text are “Ningxia,” “Gongyuan 4,” “Ningjing 24,” and “Ningjing 23.” Algorithm 1 can be used to map “Ningxia” to the entity type “place name,” while “Gongyuan 4,” “Ningjing 24,” and “Ningjing 23” are mapped to the entity type “rice variety.”

4. Candidate Entity Disambiguation

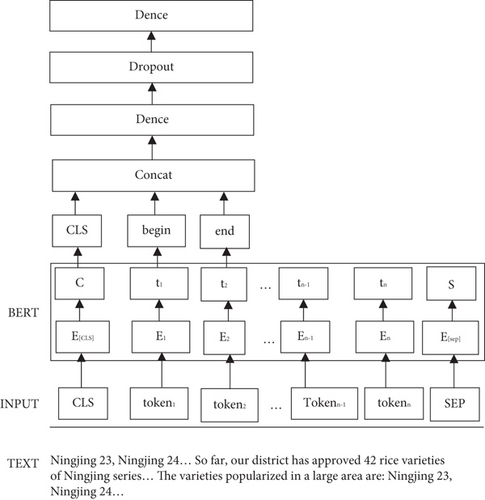

To resolve possible ambiguity in the generated candidate entity set, this paper adopts a binary classification approach: it invokes the Bert model to calculate a probability score for each candidate entity and takes the entity with the highest score as the correct entity to link. The process is shown in Figure 2.
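The selection step described above amounts to scoring every candidate and keeping the maximum. The sketch below illustrates this under stated assumptions: `link_entity` and `score_fn` are hypothetical names, and the toy scoring function merely stands in for the Bert-based binary classifier, whose actual interface is not given in the text.

```python
def link_entity(mention, context, candidates, score_fn):
    """Return (best_candidate, score), or None if there are no candidates.

    score_fn(mention, context, candidate) is assumed to return the
    probability that `candidate` is the correct entity for `mention`.
    """
    if not candidates:
        return None
    scored = [(c, score_fn(mention, context, c)) for c in candidates]
    # max() keeps the first candidate in case of a tie.
    return max(scored, key=lambda pair: pair[1])


# Toy stand-in for the classifier; the probabilities are made up.
toy_scores = {"Fire blast": 0.91, "knock blast": 0.34}


def toy_score_fn(mention, context, candidate):
    return toy_scores[candidate]


best = link_entity("Rice blast", "example sentence", list(toy_scores), toy_score_fn)
```

Returning `None` for an empty candidate set leaves the decision about unlinkable mentions to the caller, which is one possible design choice among several.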

Here, $w_{jn}$ is the weight of the feature under the $jn$ class.

Here, $S_{\mathrm{CLS}}$ represents the output vector at the CLS position, $S_{\mathrm{begin}}$ represents the feature vector corresponding to the beginning position of the candidate entity, and $S_{\mathrm{end}}$ represents the feature vector corresponding to the end position of the candidate entity.

To mitigate overfitting during model training, a dropout layer is added between the two fully connected layers; it reduces overfitting by reducing the number of active feature detectors. In the entity disambiguation experiment, because the model uses only two fully connected layers and a sigmoid layer and is therefore a shallow neural network, the dropout rate is set to 0.15.
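The classification head described here (two fully connected layers with dropout between them, followed by a sigmoid) can be sketched in plain Python. Several details are assumptions rather than facts from the paper: the three feature vectors are fused by concatenation, a ReLU follows the first layer, dropout is implemented in its "inverted" form, and the parameter names `w1`, `b1`, `w2`, `b2` are hypothetical. Only the dropout rate of 0.15 comes from the text.

```python
import math
import random


def linear(x, weights, bias):
    """Fully connected layer: one output per row of `weights`."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def dropout(x, rate, training, rng):
    """Inverted dropout: zero each unit with probability `rate` while training."""
    if not training or rate == 0.0:
        return list(x)
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in x]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def score_candidate(s_cls, s_begin, s_end, params, training=False, rng=None):
    """Map the three feature vectors to a probability in (0, 1)."""
    rng = rng or random.Random(0)
    features = s_cls + s_begin + s_end  # list concatenation (assumed fusion)
    hidden = linear(features, params["w1"], params["b1"])
    hidden = [max(0.0, h) for h in hidden]  # ReLU (assumed activation)
    hidden = dropout(hidden, 0.15, training, rng)  # rate taken from the text
    logit = linear(hidden, params["w2"], params["b2"])[0]
    return sigmoid(logit)


# Tiny hand-made parameters, for illustration only.
params = {
    "w1": [[1.0, 1.0, 1.0], [-1.0, 0.0, 0.0]],
    "b1": [0.0, 0.0],
    "w2": [[0.5, 1.0]],
    "b2": [-1.0],
}
p = score_candidate([1.0], [0.0], [1.0], params)
```

At inference time (`training=False`) dropout is a no-op, so the score is deterministic; the inverted form rescales the surviving units during training so that no rescaling is needed at inference.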

Here, the model output is the predicted probability that the sample is positive, and $y_j$ is the sample label: the value is 1 if the sample is a positive example and 0 otherwise.
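The loss formula itself is not reproduced in the text. A natural candidate, given a sigmoid output and 0/1 labels, is the mean binary cross-entropy; the sketch below assumes that choice. The function name `binary_cross_entropy` and the clipping constant `eps` are illustrative and not taken from the paper.

```python
import math


def binary_cross_entropy(probs, labels, eps=1e-12):
    """Mean binary cross-entropy between predicted probabilities and 0/1 labels."""
    if not probs or len(probs) != len(labels):
        raise ValueError("probs and labels must be non-empty and of equal length")
    total = 0.0
    for p, y in zip(probs, labels):
        # Clip to avoid log(0) for saturated predictions.
        p = min(max(p, eps), 1.0 - eps)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(probs)


# An uninformative prediction of 0.5 costs log(2) per sample.
loss = binary_cross_entropy([0.5, 0.5], [1, 0])
```

Confident correct predictions drive the loss toward zero, while confident wrong predictions are penalized heavily, which is why the clipping guard is needed.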

5. Method Validation

The experiments are conducted in a Python environment. On the constructed crop dataset, our method is compared with BiLSTM-Attention [32], LSTM-CNN-CRF [33], and BiLSTM-CNN [34]. The experimental results are shown in Table 2.

Experiments with BiLSTM-Attention [32] on crowdsourced annotated datasets in the field of information security show that it significantly outperforms BiLSTM-Attention-CR, CRF-MA, Dawid & Skene-LSTM, BiLSTM-Attention-CRF-VT, and various other models, with an F1 value 3.6% higher than the best of them. LSTM-CNN-CRF [33] uses hand-crafted features, part-of-speech tagging information, and prebuilt lexicon information to augment the sentence representation, which improves named entity recognition performance. BiLSTM-CNN [34] incorporates the ability of CNNs to extract local and long-distance dependent features. Compared with these three CNN- and LSTM-based methods, our method achieves average increases of 5.4% in accuracy, 11.9% in recall, and 8.8% in F1 value. The experimental results thus show that our method improves accuracy, recall, and F1 value.
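For reference, the F1 value is the harmonic mean of precision and recall. The sketch below computes the three quantities from counts of true positives, false positives, and false negatives. The counts in the example are hypothetical and are not taken from Table 2, and treating the "accuracy" reported above as precision is an assumption.

```python
def precision_recall_f1(tp, fp, fn):
    """Return (precision, recall, F1) from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0.0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical counts: 8 correct links, 2 wrong links, 8 missed links.
precision, recall, f1 = precision_recall_f1(tp=8, fp=2, fn=8)
```

With these counts, precision is 0.8 and recall is 0.5, so the F1 value (about 0.615) sits closer to the lower of the two, as expected of a harmonic mean.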

6. Conclusions

This paper proposes a Bert-binary method that constructs an entity mapping table for a specific domain, uses a semantic dictionary to generate the candidate entity set, applies the Bert-binary algorithm to disambiguate the generated candidate entities, and selects the referent of each entity name according to the probability scores of its candidates. The agricultural domain is selected, a crop dataset is constructed, and a Python experimental environment is set up to conduct comparative experiments with three models on the crop dataset. The experimental results show the effectiveness of the proposed Bert-binary method.

In the future, the combination of the fully connected network and sigmoid as classifiers can be improved by optimizing the decision variables of neural networks [35, 36] to obtain better accuracy, feasibility, and reliability.

Conflicts of Interest

The authors declare that there is no conflict of interest regarding the publication of this paper.

Acknowledgments

This work is supported by the North Minzu University Major Education and Teaching Reform Projects (2021) and the Key Laboratory of Images & Graphics Intelligent Processing of State Ethnic Affairs Commission.

Open Research

Data Availability

The crop dataset used to support the findings of this study has been deposited in Baidu’s AI Studio repository (https://aistudio.baidu.com/aistudio/datasetdetail/153737).