Yosida Approximation Iterative Methods for Split Monotone Variational Inclusion Problems

Abstract

In this paper, we present two iterative algorithms involving Yosida approximation operators for split monotone variational inclusion problems (SpMVIP). We prove the weak and strong convergence of the proposed iterative algorithms to the solution of SpMVIP in real Hilbert spaces. Our algorithms are based on Yosida approximation operators of monotone mappings such that the step size does not require the precalculation of the operator norm. To show the reliability and accuracy of the proposed algorithms, a numerical example is also constructed.

1. Introduction

where u ∈ H1 is a given point and Wn is a W-mapping generated by an infinite family of nonexpansive mappings. Similar results related to SpVIP can be found in [11–17].

The iterative methods explained above share two features: first, they use the resolvents of the associated monotone mappings; second, the step size depends on the operator norm ‖A∗A‖. To avoid this obstacle, iterative algorithms with self-adaptive step sizes have been introduced (see, for example, [18–24]). Lopez et al. [20] introduced a relaxed method with a self-adaptive step size for solving the split feasibility problem. Recently, Dilshad et al. [25] proposed two iterative algorithms to solve SpVIP in which the precalculation of the operator norm ‖A∗A‖ is not required. They studied the weak and strong convergence of the proposed methods to a solution of SpVIP with a step size that does not depend on the precalculated operator norm.

The resolvent of a maximal monotone operator G is defined as $J_\lambda^G = (I + \lambda G)^{-1}$, where λ is a positive real number. The resolvent of a maximal monotone operator is single valued and firmly nonexpansive. Since the zeros of a maximal monotone operator are exactly the fixed points of its resolvent, the resolvent associated with a set-valued maximal monotone operator plays an important role in finding the zeros of monotone operators. Following Byrne’s iterative method (5), which is mainly based on the resolvents of monotone mappings, many researchers introduced and studied various iterative methods for SpVIP (see, for example, [7–9, 18, 25, 26] and references therein).
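The properties of the resolvent stated above can be checked on a small example. The following Python sketch is only an illustration under assumed data: it takes G to be the subdifferential of |·| on the real line (a choice made here, not taken from the paper), whose resolvent is the soft-thresholding map, and it tests firm nonexpansiveness and the fixed-point characterization of the zero of G on a handful of sample points.

```python
def resolvent_abs(x: float, lam: float) -> float:
    """Resolvent (I + lam*G)^(-1) of the assumed operator G = subdifferential of |.|.

    G is set valued at 0, yet the resolvent (soft thresholding) is single valued.
    """
    if x > lam:
        return x - lam
    if x < -lam:
        return x + lam
    return 0.0


def is_firmly_nonexpansive_on(points, lam, tol=1e-12):
    """Check |Jx - Jy|^2 <= (x - y)(Jx - Jy) for every pair of sample points."""
    for x in points:
        for y in points:
            jx, jy = resolvent_abs(x, lam), resolvent_abs(y, lam)
            if (jx - jy) ** 2 > (x - y) * (jx - jy) + tol:
                return False
    return True


samples = [-3.0, -1.0, -0.25, 0.0, 0.5, 2.0, 4.0]
firm = is_firmly_nonexpansive_on(samples, lam=1.0)

# The only zero of G is x = 0, so 0 should be the only fixed point among the samples.
fixed_points = [x for x in samples if resolvent_abs(x, 1.0) == x]
```

A finite sample cannot prove firm nonexpansiveness; the check only shows that the inequality is not violated on the chosen points.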

The Yosida approximation operator of a monotone mapping G with parameter λ > 0 is defined as $Y_\lambda^G = \frac{1}{\lambda}(I - J_\lambda^G)$, where $J_\lambda^G = (I + \lambda G)^{-1}$ is the resolvent of G. It is well known that a set-valued monotone operator can be regularized into a single-valued monotone operator by the process known as the Yosida approximation. The Yosida approximation is a tool for solving a variational inclusion problem by means of the nonexpansive resolvent operator, and it has been used to solve various variational inclusions and systems of variational inclusions in linear and nonlinear spaces (see, for example, [18, 25–30]).
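To make the regularization concrete, the sketch below evaluates the Yosida approximation (x − J(x))/λ for an assumed operator, the subdifferential of |·| on the real line, which is set valued at 0. The operator, the parameter λ = 2, and the function names are illustrative choices, not taken from the paper.

```python
def resolvent_abs(x: float, lam: float) -> float:
    """Resolvent of the assumed operator G = subdifferential of |.| (soft thresholding)."""
    if x > lam:
        return x - lam
    if x < -lam:
        return x + lam
    return 0.0


def yosida_abs(x: float, lam: float) -> float:
    """Yosida approximation (x - J(x)) / lam: a single-valued map built from the resolvent."""
    return (x - resolvent_abs(x, lam)) / lam


lam = 2.0
values = {x: yosida_abs(x, lam) for x in (-6.0, 0.0, 1.0, 5.0)}
# For |x| <= lam the value is x / lam; outside that interval it saturates at -1 or 1.
```

In this example the Yosida approximation vanishes only at x = 0, which is also the only point where 0 belongs to G(x).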

Since every zero of the Yosida approximation operator associated with a monotone operator G is a zero of the inclusion problem 0 ∈ G(x), and inspired by the work of Moudafi, Byrne, Kazmi, and Dilshad et al., our aim is to propose two iterative methods to solve SpMVIP. The rest of the paper is organized as follows.

The next section contains some fundamental results and preliminaries. In Section 3, we describe two iterative algorithms using the Yosida approximations of the monotone mappings V1 + G1 and V2 + G2. Section 4 is devoted to the study of weak and strong convergence of the proposed iterative methods to a solution of SpMVIP. In the last section, we give a numerical example in support of our main results and show the convergence of the sequences obtained from the proposed algorithms to the solution of SpMVIP.

2. Preliminaries

Let H be a real Hilbert space endowed with norm ‖·‖ and inner product 〈·, ·〉. The strong and weak convergence of a sequence {xn} to x is denoted by xn⟶x and xn⇀x, respectively. An operator T : H⟶H is said to be a contraction if there exists κ ∈ (0, 1) such that ‖T(x) − T(y)‖ ≤ κ‖x − y‖ for all x, y ∈ H; if the inequality holds with κ = 1, then T is called nonexpansive. T is called firmly nonexpansive if ‖T(x) − T(y)‖² ≤ 〈x − y, T(x) − T(y)〉 for all x, y ∈ H, and T is called τ-inverse strongly monotone if there exists τ > 0 such that 〈T(x) − T(y), x − y〉 ≥ τ‖T(x) − T(y)‖² for all x, y ∈ H.

Let G : H⟶2^H be a set-valued operator. The graph of G is defined by {(x, y): y ∈ G(x)}, and the inverse of G is denoted by G⁻¹ = {(y, x): y ∈ G(x)}. A set-valued mapping G is said to be monotone if 〈u − v, x − y〉 ≥ 0 for all x, y ∈ H, u ∈ G(x), v ∈ G(y). A monotone operator G is called maximal monotone if there exists no other monotone operator whose graph properly contains the graph of G.

Lemma 1 (see [31]). If {an} is a sequence of nonnegative real numbers such that

- (i)

- (ii)

or

then

Lemma 2 (see [32]). Let H be a Hilbert space. A mapping F : H⟶H is τ-inverse strongly monotone if and only if I − τF is firmly nonexpansive, for τ > 0.
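The equivalence in Lemma 2 can be observed numerically on a one-dimensional example. The sketch below assumes the linear map F(x) = 2x on the real line (a hypothetical choice, for which F is τ-inverse strongly monotone exactly when τ ≤ 1/2) and tests both properties on a few sample points for three values of τ.

```python
def F(x: float) -> float:
    """Assumed test operator F(x) = 2x; it is tau-inverse strongly monotone iff tau <= 1/2."""
    return 2.0 * x


def is_inverse_strongly_monotone(tau, points, tol=1e-12):
    """Check <F(x) - F(y), x - y> >= tau * |F(x) - F(y)|^2 on all sample pairs."""
    return all(
        (F(x) - F(y)) * (x - y) + tol >= tau * (F(x) - F(y)) ** 2
        for x in points for y in points
    )


def is_residual_firmly_nonexpansive(tau, points, tol=1e-12):
    """Check that T = I - tau*F satisfies |Tx - Ty|^2 <= <x - y, Tx - Ty> on all pairs."""
    def T(x):
        return x - tau * F(x)
    return all(
        (T(x) - T(y)) ** 2 <= (x - y) * (T(x) - T(y)) + tol
        for x in points for y in points
    )


points = [-2.0, -0.5, 0.0, 1.0, 3.0]
results = {
    tau: (is_inverse_strongly_monotone(tau, points),
          is_residual_firmly_nonexpansive(tau, points))
    for tau in (0.25, 0.5, 0.75)
}
```

For each tested τ the two checks agree: both hold for τ = 0.25 and τ = 0.5, and both fail for τ = 0.75.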

Lemma 3 (see [33]). Let H be a Hilbert space and {xn} be a bounded sequence in H. Assume there exists a nonempty subset C ⊂ H satisfying the properties

- (i) limn⟶∞‖xn − z‖ exists for every z ∈ C

- (ii) ωw(xn) ⊂ C

Then, there exists x∗ ∈ C such that {xn} converges weakly to x∗.

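Lemma 4 (see [34]). Let {Γn} be a sequence of real numbers that does not decrease at infinity in the sense that there exists a subsequence $\{\Gamma_{n_k}\}$ of {Γn} such that $\Gamma_{n_k} < \Gamma_{n_k+1}$ for all k ≥ 0. Also, consider the sequence of integers $\{\sigma(n)\}_{n \ge n_0}$ defined by

$$\sigma(n) = \max\{k \le n : \Gamma_k < \Gamma_{k+1}\}.$$

Then, $\{\sigma(n)\}_{n \ge n_0}$ is a nondecreasing sequence verifying limn⟶∞σ(n) = ∞ and for all n ≥ n0,

$$\Gamma_{\sigma(n)} \le \Gamma_{\sigma(n)+1} \quad \text{and} \quad \Gamma_n \le \Gamma_{\sigma(n)+1}.$$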
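The index sequence in Lemma 4 is easy to compute for a finite list of numbers. The sketch below assumes the standard form of the construction from [34], namely σ(n) = max{k ≤ n : Γk < Γk+1}, and uses a made-up list of values for Γ; it then checks the monotonicity of σ and the two inequalities Γσ(n) ≤ Γσ(n)+1 and Γn ≤ Γσ(n)+1 on that list.

```python
def sigma_map(gamma):
    """Return {n: sigma(n)} with sigma(n) = max{k <= n : gamma[k] < gamma[k+1]}.

    Indices n before the first strict increase are skipped (they lie below n0).
    """
    sigma = {}
    last_increase = None
    for n in range(len(gamma) - 1):
        if gamma[n] < gamma[n + 1]:
            last_increase = n
        if last_increase is not None:
            sigma[n] = last_increase
    return sigma


# Hypothetical data: neither monotone nor eventually nonincreasing on this window.
gamma = [5.0, 3.0, 4.0, 2.0, 1.0, 6.0, 0.0, 0.5, 0.2]
sigma = sigma_map(gamma)

indices = sorted(sigma)
nondecreasing = all(sigma[a] <= sigma[b] for a, b in zip(indices, indices[1:]))
bounds_hold = all(
    gamma[sigma[n]] <= gamma[sigma[n] + 1] and gamma[n] <= gamma[sigma[n] + 1]
    for n in indices
)
```

On a finite list the property σ(n)⟶∞ cannot be observed; the check only covers the monotonicity of σ and the two inequalities.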

3. Yosida Approximation Iterative Methods

Let V1 : H1⟶H1, V2 : H2⟶H2 be single-valued monotone mappings and G1 : H1⟶2^H1, G2 : H2⟶2^H2 be set-valued mappings such that V1 + G1 and V2 + G2 are set-valued maximal monotone mappings. For λ1, λ2 > 0, we consider the resolvents and Yosida approximation operators of V1 + G1 and V2 + G2, respectively. We propose the following iterative methods to approximate the solution of SpMVIP.

Algorithm 1. For an arbitrary x0, compute the (n + 1)th iterate as follows:

Algorithm 2. For an arbitrary x0, compute the (n + 1)th iterate as follows:
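The role of a step size bounded in terms of λ alone can be illustrated in a single space. The following Python sketch is not Algorithm 1 or Algorithm 2: it is a simplified forward iteration x ← x − τ·Y(x) on the Yosida approximation Y of a hypothetical affine monotone operator G(x) = 4x − 2 on the real line, with assumed parameters λ = 1 and τ = 1 ∈ (0, 2λ). It ignores the split structure and the bounded linear operator A entirely, and the function names are illustrative.

```python
def resolvent(x: float, lam: float) -> float:
    """Resolvent of the assumed operator G(y) = 4*y - 2: solves y + lam*(4*y - 2) = x."""
    return (x + 2.0 * lam) / (1.0 + 4.0 * lam)


def yosida(x: float, lam: float) -> float:
    """Yosida approximation (x - J(x)) / lam of the assumed operator G."""
    return (x - resolvent(x, lam)) / lam


def forward_yosida(x0: float, lam: float = 1.0, tau: float = 1.0, iters: int = 60) -> float:
    """Iterate x <- x - tau * Y(x) with a step size tau in (0, 2*lam)."""
    if not 0.0 < tau < 2.0 * lam:
        raise ValueError("tau must lie in (0, 2*lam)")
    x = x0
    for _ in range(iters):
        x = x - tau * yosida(x, lam)
    return x


# Two starting points; the unique zero of the assumed G is x = 0.5.
limits = [forward_yosida(x0) for x0 in (-2.0, 0.0)]
```

Because the Yosida approximation is λ-inverse strongly monotone, the admissible range for τ in this sketch is determined by λ only; no norm of an operator has to be estimated beforehand.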

4. Main Results

We assume that the problem SpMVIP is consistent and the solution set is denoted by Δ.

First, we prove the following lemma, which is used in the proofs of our main results.

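Lemma 5. Let V1 : H1⟶H1 be a single-valued monotone mapping and G1 : H1⟶2^H1 be a set-valued mapping such that V1 + G1 is a set-valued maximal monotone mapping. If $J_{\lambda_1}^{V_1+G_1} = (I + \lambda_1(V_1 + G_1))^{-1}$ and $Y_{\lambda_1}^{V_1+G_1} = \frac{1}{\lambda_1}(I - J_{\lambda_1}^{V_1+G_1})$ are the resolvent and Yosida approximation operators of V1 + G1, respectively, then for λ1 > 0, the following are equivalent:

- (i) x∗ ∈ H1 is a solution of $0 \in V_1(x^*) + G_1(x^*)$

- (ii) $x^* = J_{\lambda_1}^{V_1+G_1}(x^*)$

- (iii) $Y_{\lambda_1}^{V_1+G_1}(x^*) = 0$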

Proof. The proof is an immediate consequence of the definitions of the resolvent and Yosida approximation operators of the maximal monotone mapping V1 + G1.☐

Theorem 6. Let H1, H2 be real Hilbert spaces; V1 : H1⟶H1, V2 : H2⟶H2 be single-valued monotone mappings; G1 : H1⟶2^H1, G2 : H2⟶2^H2 be set-valued maximal monotone mappings such that V1 + G1 and V2 + G2 are maximal monotone; and A : H1⟶H2 be a bounded linear operator. Let θ = min{2λ1, 2λ2} and assume that infn τn(θ − τn) > 0. Then, the sequence {xn} generated by Algorithm 1 converges weakly to a point z ∈ Δ.

Proof. Let z ∈ Δ. Since the Yosida approximation operator is λ1-inverse strongly monotone, for λ1 > 0, then by Algorithm 1 and (12), we have

Now, using (17), we estimate that

Since the Yosida approximation operator of V2 + G2 is λ2-inverse strongly monotone and using (12), we estimate

By (18), it turns out that

It follows from (24) and (25) that

Combining (23) and (26), we get

Due to the assumption that infτn(θ − τn) > 0 and the properties of convergent series, we conclude that

Hence, there exist constants K1 and K2 such that

By Algorithm 1 and (30), we get

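Let x⋆ ∈ ωw(xn) and let $\{x_{n_k}\}$ be a subsequence of {xn} that converges weakly to x⋆, which implies that the corresponding subsequences $\{u_{n_k}\}$ and $\{v_{n_k}\}$ also converge weakly to x⋆. Recall that the Yosida approximation operator of V1 + G1 is λ1-inverse strongly monotone; since the subsequence converges weakly to x⋆, using (30), we get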

Taking limit k⟶∞, we obtain

Replacing by , by with the same arguments, we get This completes the proof.☐

Theorem 7. Let H1, H2 be real Hilbert spaces; V1 : H1⟶H1, V2 : H2⟶H2 be single-valued monotone mappings; G1 : H1⟶2^H1, G2 : H2⟶2^H2 be set-valued maximal monotone mappings such that V1 + G1 and V2 + G2 are maximal monotone; and A : H1⟶H2 be a bounded linear operator. If {αn}, {βn} are real sequences in (0, 1) and θ = min{2λ1, 2λ2} such that τn ∈ (0, θ) and

Proof. Let z = PΔ(0); then, from (23) and (26) of the proof of Theorem 6, we have

Since τn ≤ min{2λ1, 2λ2}, we get ‖vn − z‖ ≤ ‖un − z‖ ≤ ‖xn − z‖. From Algorithm 2, we have

Combining (35), (36), and (38), we obtain

We discuss the two possible cases.

Case 1. Suppose that the sequence {‖xn − z‖} is eventually nonincreasing, that is, there exists a number k ≥ 0 such that ‖xn+1 − z‖ ≤ ‖xn − z‖ for each n ≥ k. Then, limn⟶∞‖xn − z‖ exists and hence, limn⟶∞(‖xn+1 − z‖ − ‖xn − z‖) = 0. Thus, it follows from (39) that

From (40), we conclude that and We observe from Algorithm 2 that xn+1 − un = αn(vn − un) + γnun⟶0; thus,

This shows that the sequence {xn} is asymptotically regular. By Theorem 6, we have that ωw(xn) ⊂ Δ. Setting zn = (1 − αn)un + αnvn and rewriting xn+1 = (1 − βn)zn + αnβn(vn − un), we have

From (42) and Algorithm 2, we get

Since ωw(xn) ⊂ Δ and z = PΔ(0), then using (40), we get

Thus, by Lemma 1, we obtain xn⟶z.

Case 2. If the sequence {‖xn − z‖} is not eventually nonincreasing, we can select a subsequence $\{\|x_{n_k} - z\|\}$ of {‖xn − z‖} such that $\|x_{n_k} - z\| < \|x_{n_k+1} - z\|$ for all k ∈ ℕ. In this case, by Lemma 4, we define a nondecreasing sequence of positive integers σ(n)⟶∞ with the properties

If ‖xn+1 − z‖ > ‖xn − z‖ for some n ≥ 0, then it follows from (39) that

Replacing n by σ(n) and taking limit n⟶∞, we get the following relation for the subsequences {xσ(n)}, {uσ(n)}, and {vσ(n)}:

Thus, we have ‖xσ(n)+1 − xσ(n)‖⟶0 as n⟶∞ and ωw(xσ(n)) ⊂ Δ. It remains to show that xn⟶z.

Replacing n by σ(n) in (47), using ‖xσ(n) − z‖ < ‖xσ(n)+1 − z‖ and boundedness of ‖xn − z‖, we have

Since z = PΔ(0) and ωw(xσ(n)) ⊂ Δ, and using ‖vσ(n) − uσ(n)‖⟶0 and ‖xσ(n)+1 − xσ(n)‖⟶0, we have

From (49) and (52), we conclude that xσ(n)⟶z and

For τn = 1, we have the following result for the convergence of Algorithm 2.

Corollary 8. Let H1, H2, V1, V2, G1, G2, and A, A∗ be the same as defined in Theorem 7. If {αn}, {βn} are sequences in (0, 1) and assuming that λ1 > 1/2 and λ2 > 1/2 satisfying

For βn = 0, we have the following corollary for the convergence of Algorithm 2.

Corollary 9. Let H1, H2, V1, V2, G1, G2, and A, A∗ be the same as defined in Theorem 7. If {αn} is a sequence in (0, 1) and assuming that θ = min{2λ1, 2λ2} such that τn ∈ (0, θ) and

For τn = 1 and βn = 0, we have the following corollary for the convergence of Algorithm 2.

5. Numerical Example

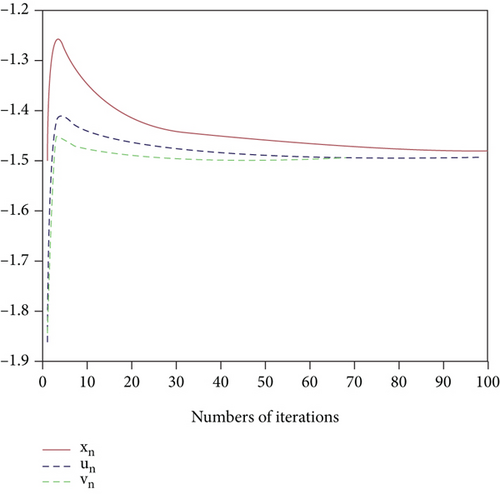

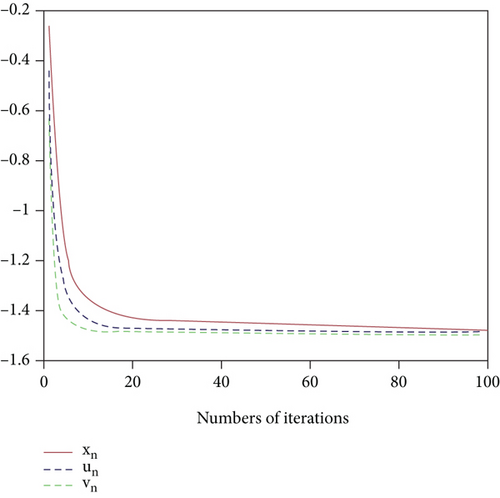

Figures 1 and 2 show that the obtained sequences {un}, {vn}, and {xn} converge to z = −(3/2) for the two arbitrarily chosen initial points x0 = −2 and x0 = 0.

6. Conclusions

We have proposed two iterative algorithms for SpMVIP which are mainly based on the Yosida approximation operators. Since every zero of the Yosida approximation of the monotone mapping V1 + G1 is a solution of $0 \in V_1(x) + G_1(x)$, we used the Yosida approximations of the monotone mappings V1 + G1 and V2 + G2 to solve SpMVIP. We proved the weak and strong convergence of the proposed iterative algorithms to a solution of SpMVIP under suitable assumptions, with a step size whose estimation does not require any prior calculation of the operator norm ‖A∗A‖. To show the accuracy and efficiency of our algorithms, we have presented a numerical example and shown the convergence using different parameters.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Open Research

Data Availability

This work is a theoretical result, and there is no available data source.